#Server for HPC workloads


Boost Enterprise Computing with the HexaData HD-H252-3C0 VER GEN001 Server

The HexaData HD-H252-3C0 VER GEN001 is a powerful 2U high-density server designed to meet the demands of enterprise-level computing. Featuring a 4-node architecture with support for 3rd Gen Intel® Xeon® Scalable processors, it delivers exceptional performance, scalability, and energy efficiency. Ideal for virtualization, data centers, and high-performance computing, this server offers advanced memory, storage, and network capabilities — making it a smart solution for modern IT infrastructure. Learn more: HexaData HD-H252-3C0 VER GEN001.

#High-density server#2U rack server#Intel Xeon Scalable server#Enterprise server solutions#Data center hardware#HexaData servers#Virtualization-ready server#Multi-node server#Server for HPC workloads#Scalable infrastructure


A3 Ultra VMs With NVIDIA H200 GPUs Pre-launch This Month

Powerful infrastructure advancements for an AI-first future

To improve performance, usability, and cost-effectiveness for customers, Google Cloud made improvements throughout the AI Hypercomputer stack this year. At the App Dev & Infrastructure Summit, Google Cloud announced:

Trillium, Google’s sixth-generation TPU, is currently available for preview.

Next month, A3 Ultra VMs with NVIDIA H200 Tensor Core GPUs will be available for preview.

Google’s new, highly scalable clustering system, Hypercompute Cluster, will be accessible beginning with A3 Ultra VMs.

C4A virtual machines (VMs), based on Axion, Google’s custom Arm-based processors, are now generally available.

AI workload-focused additions to Titanium, Google Cloud’s host offload capability, and Jupiter, its data center network.

Google Cloud’s AI/ML-focused block storage service, Hyperdisk ML, is generally available.

Trillium: A new era of TPU performance

TPUs power Google’s most sophisticated models like Gemini, well-known Google services like Maps, Photos, and Search, and scientific breakthroughs like AlphaFold 2, which just led to a Nobel Prize. Google Cloud customers can now preview Trillium, Google’s sixth-generation TPU.

Taking advantage of NVIDIA Accelerated Computing to broaden perspectives

Google Cloud also keeps investing in its partnership and capabilities with NVIDIA, combining the best of Google Cloud’s data center, infrastructure, and software expertise with the NVIDIA AI platform, as exemplified by A3 and A3 Mega VMs powered by NVIDIA H100 Tensor Core GPUs.

Google Cloud announced that the new A3 Ultra VMs featuring NVIDIA H200 Tensor Core GPUs will be available on Google Cloud starting next month.

Compared to earlier versions, A3 Ultra VMs offer a notable performance improvement. They are built on servers with NVIDIA ConnectX-7 network interface cards (NICs) and the new Titanium ML network adapter, which is tailored to deliver a secure, high-performance cloud experience for AI workloads. Paired with Google’s datacenter-wide 4-way rail-aligned network, A3 Ultra VMs deliver non-blocking 3.2 Tbps of GPU-to-GPU traffic using RDMA over Converged Ethernet (RoCE).

In contrast to A3 Mega, A3 Ultra provides:

With the support of Google’s Jupiter data center network and Google Cloud’s Titanium ML network adapter, double the GPU-to-GPU networking bandwidth

With almost twice the memory capacity and 1.4 times the memory bandwidth, LLM inferencing performance can increase by up to 2 times.

Capacity to expand to tens of thousands of GPUs in a dense cluster with performance optimization for heavy workloads in HPC and AI.

Google Kubernetes Engine (GKE), which offers an open, portable, extensible, and highly scalable platform for large-scale training and AI workloads, will also offer A3 Ultra VMs.
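The A3 Ultra versus A3 Mega comparison above can be sanity-checked with a little arithmetic. The sketch below is illustrative only: the per-GPU memory figures are assumptions taken from NVIDIA's public H100 SXM and H200 datasheets, not from this post, and the A3 Mega bandwidth is simply implied by the "double" claim.

```python
# Assumed per-GPU figures from NVIDIA's public datasheets (not stated in the post).
H100_MEMORY_GB = 80         # H100 SXM, HBM3 capacity
H200_MEMORY_GB = 141        # H200, HBM3e capacity
H100_BANDWIDTH_TBS = 3.35   # H100 SXM memory bandwidth in TB/s
H200_BANDWIDTH_TBS = 4.8    # H200 memory bandwidth in TB/s

# Stated in the post: A3 Ultra offers 3.2 Tbps, double the A3 Mega figure.
A3_ULTRA_NETWORK_TBPS = 3.2


def ratio(new: float, old: float) -> float:
    """Return how many times larger `new` is than `old`."""
    return new / old


memory_ratio = ratio(H200_MEMORY_GB, H100_MEMORY_GB)
bandwidth_ratio = ratio(H200_BANDWIDTH_TBS, H100_BANDWIDTH_TBS)
implied_a3_mega_tbps = A3_ULTRA_NETWORK_TBPS / 2

print(f"Memory capacity ratio:  {memory_ratio:.2f}x")
print(f"Memory bandwidth ratio: {bandwidth_ratio:.2f}x")
print(f"Implied A3 Mega GPU-to-GPU bandwidth: {implied_a3_mega_tbps:.1f} Tbps")
```

Under these assumed datasheet values, the ratios are consistent with "almost twice the memory capacity and 1.4 times the memory bandwidth."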

Hypercompute Cluster: Simplify and expand clusters of AI accelerators

It’s not just about individual accelerators or virtual machines, though; when dealing with AI and HPC workloads, you have to deploy, maintain, and optimize a huge number of AI accelerators along with the networking and storage that go along with them. This may be difficult and time-consuming. For this reason, Google Cloud is introducing Hypercompute Cluster, which simplifies the provisioning of workloads and infrastructure as well as the continuous operations of AI supercomputers with tens of thousands of accelerators.

Fundamentally, Hypercompute Cluster integrates the most advanced AI infrastructure technologies from Google Cloud, enabling you to install and operate several accelerators as a single, seamless unit. You can run your most demanding AI and HPC workloads with confidence thanks to Hypercompute Cluster’s exceptional performance and resilience, which includes features like targeted workload placement, dense resource co-location with ultra-low latency networking, and sophisticated maintenance controls to reduce workload disruptions.

For dependable and repeatable deployments, you can use pre-configured and validated templates to set up a Hypercompute Cluster with a single API call. These include containerized software with orchestration (e.g., GKE, Slurm), frameworks and reference implementations (e.g., JAX, PyTorch, MaxText), and well-known open models like Gemma2 and Llama3. Each pre-configured template is available as part of the AI Hypercomputer architecture and has been validated for efficiency and performance, allowing you to concentrate on business innovation.

Hypercompute Cluster will first be available with A3 Ultra VMs next month.

An early look at the NVIDIA GB200 NVL72

Google Cloud is also looking forward to the advances enabled by NVIDIA GB200 NVL72 GPUs and will share more about this development soon. In the meantime, here is a preview of the racks Google is building to bring the NVIDIA Blackwell platform’s performance benefits to Google Cloud’s advanced, sustainable data centers early next year.

Redefining CPU efficiency and performance with Google Axion Processors

Although TPUs and GPUs excel at specialized tasks, CPUs are a cost-effective solution for a wide range of general-purpose workloads and are frequently used alongside AI workloads to build complex applications. Google announced Google Axion Processors, its first custom Arm-based CPUs for the data center, at Google Cloud Next ’24. Google Cloud customers can now use C4A virtual machines, the first Axion-based VM series, which offer up to 10% better price-performance than the newest Arm-based instances from other leading cloud providers.

Additionally, compared to comparable current-generation x86-based instances, C4A offers up to 60% more energy efficiency and up to 65% better price performance for general-purpose workloads such as media processing, AI inferencing applications, web and app servers, containerized microservices, open-source databases, in-memory caches, and data analytics engines.

Titanium and Jupiter Network: Making AI possible at the speed of light

Titanium, the offload technology system that supports Google’s infrastructure, has been improved to accommodate workloads related to artificial intelligence. Titanium provides greater compute and memory resources for your applications by lowering the host’s processing overhead through a combination of on-host and off-host offloads. Furthermore, although Titanium’s fundamental features can be applied to AI infrastructure, the accelerator-to-accelerator performance needs of AI workloads are distinct.

Google has released a new Titanium ML network adapter to address these demands, which incorporates and expands upon NVIDIA ConnectX-7 NICs to provide further support for virtualization, traffic encryption, and VPCs. The system offers best-in-class security and infrastructure management along with non-blocking 3.2 Tbps of GPU-to-GPU traffic across RoCE when combined with its data center’s 4-way rail-aligned network.

Google’s Jupiter optical circuit switching network fabric and its updated data center network significantly expand Titanium’s capabilities. With native 400 Gb/s link rates and a total bisection bandwidth of 13.1 Pb/s (a practical bandwidth metric that reflects how one half of the network can connect to the other), Jupiter could handle a video conversation for every person on Earth at the same time. In order to meet the increasing demands of AI computation, this enormous scale is essential.
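To put the "video conversation for every person on Earth" comparison in numbers, here is a rough back-of-the-envelope sketch. The bisection bandwidth and link rate come from the text; the world population of about 8 billion is an assumption added for illustration.

```python
BISECTION_BANDWIDTH_BPS = 13.1e15  # 13.1 Pb/s, as stated in the post
LINK_RATE_BPS = 400e9              # 400 Gb/s native link rate, as stated
WORLD_POPULATION = 8.0e9           # assumption: roughly 8 billion people

# Bandwidth available per person if the bisection bandwidth were split evenly.
per_person_mbps = BISECTION_BANDWIDTH_BPS / WORLD_POPULATION / 1e6

# Number of 400 Gb/s links whose combined rate equals the bisection bandwidth.
equivalent_links = BISECTION_BANDWIDTH_BPS / LINK_RATE_BPS

print(f"Per-person share: {per_person_mbps:.2f} Mb/s")
print(f"Equivalent 400 Gb/s links: {equivalent_links:,.0f}")
```

Under that population assumption, each person's share works out to roughly 1.6 Mb/s, which is in the range commonly needed for a standard-definition video call.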

Hyperdisk ML is generally available

High-performance storage is essential for keeping compute resources effectively utilized, maximizing system-level performance, and staying cost-efficient. Google launched its AI-focused block storage solution, Hyperdisk ML, in April 2024. Now generally available, it adds dedicated storage for AI and HPC workloads to the networking and computing advancements.

Hyperdisk ML efficiently speeds up data load times. It drives up to 11.9x faster model load time for inference workloads and up to 4.3x quicker training time for training workloads.

You can attach up to 2,500 instances to the same volume and get 1.2 TB/s of aggregate throughput per volume, more than 100 times what major block storage competitors offer.

Reduced accelerator idle time and increased cost efficiency are the results of shorter data load times.

Multi-zone volumes are now automatically created for your data by GKE. In addition to quicker model loading with Hyperdisk ML, this enables you to run across zones for more computing flexibility (such as lowering Spot preemption).
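As a rough illustration of the Hyperdisk ML throughput figure above, the sketch below divides the stated aggregate throughput across the stated maximum number of attached instances. It assumes an even split with every instance reading at once, and decimal units (1 TB = 1,000,000 MB); real per-instance limits may differ.

```python
AGGREGATE_THROUGHPUT_TBS = 1.2   # TB/s per volume, as stated in the post
MAX_ATTACHED_INSTANCES = 2500    # instances per volume, as stated in the post


def per_instance_mbs(aggregate_tbs: float, instances: int) -> float:
    """Even share of aggregate throughput per instance, in MB/s (decimal units)."""
    if instances <= 0:
        raise ValueError("instances must be positive")
    return aggregate_tbs * 1_000_000 / instances


share_mbs = per_instance_mbs(AGGREGATE_THROUGHPUT_TBS, MAX_ATTACHED_INSTANCES)
print(f"Even share per instance: {share_mbs:.0f} MB/s")
```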

Developing AI’s future

Google Cloud enables companies and researchers to push the limits of AI innovation with these developments in AI infrastructure. It anticipates that this strong foundation will give rise to revolutionary new AI applications.

Read more on Govindhtech.com

#A3UltraVMs#NVIDIAH200#AI#Trillium#HypercomputeCluster#GoogleAxionProcessors#Titanium#News#Technews#Technology#Technologynews#Technologytrends#Govindhtech


In the fast-paced digital world of today, businesses and industries are relying more than ever on efficient and scalable solutions for managing their infrastructure. One of the most promising innovations is the combination of cloud computing infrastructure and artificial intelligence (AI). Together, they are transforming how we handle infrastructure asset management and optimizing industries such as energy. This blog explains how these technologies work together and the impact they are having across a wide array of sectors, including US energy markets.

What is Cloud Computing Infrastructure?

Cloud computing infrastructure refers to the systems that serve as the basis for delivering cloud services. This may include virtual servers, storage systems, networking capabilities, and databases. They are offered to businesses and consumers through the internet. Instead of having to hold expensive physical infrastructure, a company can use cloud infrastructure solutions to scale its operations very efficiently.

Businesses do not have to worry about the capital expenses of maintaining and upgrading on-premise infrastructure. Cloud service providers give organizations tools for managing cloud infrastructure and monitoring their digital resources seamlessly. With cloud computing in the energy industry, companies can run simulations and manage the output without having to buy large, expensive hardware.

Changing the Face of Computing Infrastructure

The Role of AI Technology

Artificial intelligence (AI) refers to computer systems designed to perform tasks that typically require human intelligence, such as learning, reasoning, and problem-solving. AI is revolutionizing how infrastructure is managed by enabling automated systems to make decisions based on data and real-time analysis.

In the energy industry, for instance, AI technology can be used to analyze large volumes of data to optimize operations, predict failures, and recommend improvements. This is vital in sectors like US energy markets, where AI solutions can predict market fluctuations, optimize energy distribution, and increase overall efficiency.

Artificial Intelligence in Cloud Computing

When artificial intelligence in cloud computing is introduced, the possibilities expand exponentially. AI-based cloud solutions allow businesses to benefit from AI capabilities without requiring investment in dedicated hardware or a specialized team. For example, companies can utilize AI cloud computing benefits to analyze large data sets stored in the cloud, forecast energy demands, or predict equipment failures in real time.

AI and Cloud Computing for Asset Management

Among the benefits of integrating AI with cloud computing infrastructure is better infrastructure asset management. Managing equipment, machines, or even digital services is complex. AI algorithms help optimize this by identifying patterns and predicting when assets will require maintenance or replacement.
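As a deliberately simple stand-in for the kind of pattern-based prediction described above, the sketch below fits a least-squares line to sensor readings and extrapolates when the trend would cross a maintenance threshold. The function names, readings, and threshold are all hypothetical; a production system would use far richer models and data.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def hours_until_threshold(hours, readings, threshold):
    """Estimate hours from the last reading until the trend hits `threshold`.

    Returns None when the readings are flat or falling (no crossing predicted).
    """
    slope, intercept = fit_line(hours, readings)
    if slope <= 0:
        return None
    crossing_hour = (threshold - intercept) / slope
    return max(0.0, crossing_hour - hours[-1])


# Hypothetical vibration readings (mm/s) sampled once a day from one pump.
sample_hours = [0, 24, 48, 72]
sample_vibration = [2.0, 2.3, 2.6, 2.9]
remaining = hours_until_threshold(sample_hours, sample_vibration, threshold=4.1)
print(f"Estimated hours until maintenance threshold: {remaining:.0f}")
```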

#ai infrastructure#cloudstorage#cloud computing#artificial intelligence tools#artificial intelligence


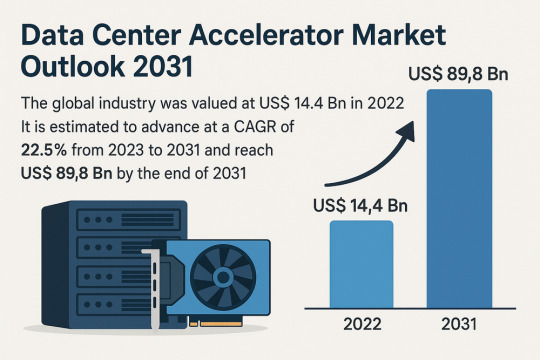

Data Center Accelerator Market Set to Transform AI Infrastructure Landscape by 2031

The global data center accelerator market is poised for exponential growth, projected to rise from USD 14.4 Bn in 2022 to a staggering USD 89.8 Bn by 2031, advancing at a CAGR of 22.5% during the forecast period from 2023 to 2031. Rapid adoption of Artificial Intelligence (AI), Machine Learning (ML), and High-Performance Computing (HPC) is the primary catalyst driving this expansion.
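The growth rate quoted above can be checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The sketch below assumes nine compounding periods, with 2022 as the base year and 2031 as the final year.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10%)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures from the post: USD 14.4 Bn (2022) growing to USD 89.8 Bn (2031).
implied_cagr = cagr(14.4, 89.8, 2031 - 2022)
print(f"Implied CAGR: {implied_cagr:.1%}")
```

The implied rate comes out close to the stated 22.5%, so the three figures are mutually consistent.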

Market Overview: Data center accelerators are specialized hardware components that improve computing performance by efficiently handling intensive workloads. These include Graphics Processing Units (GPUs), Tensor Processing Units (TPUs), Field Programmable Gate Arrays (FPGAs), and Application-Specific Integrated Circuits (ASICs), which complement CPUs by expediting data processing.

Accelerators enable data centers to process massive datasets more efficiently, reduce reliance on servers, and optimize costs, a significant advantage in a data-driven world.

Market Drivers & Trends

Rising Demand for High-performance Computing (HPC): The proliferation of data-intensive applications across industries such as healthcare, autonomous driving, financial modeling, and weather forecasting is fueling demand for robust computing resources.

Boom in AI and ML Technologies: The computational requirements of AI and ML are driving the need for accelerators that can handle parallel operations and manage extensive datasets efficiently.

Cloud Computing Expansion: Major players like AWS, Azure, and Google Cloud are investing in infrastructure that leverages accelerators to deliver faster AI-as-a-service platforms.

Latest Market Trends

GPU Dominance: GPUs continue to dominate the market, especially in AI training and inference workloads, due to their capability to handle parallel computations.

Custom Chip Development: Tech giants are increasingly developing custom chips (e.g., Meta’s MTIA and Google's TPUs) tailored to their specific AI processing needs.

Energy Efficiency Focus: Companies are prioritizing the design of accelerators that deliver high computational power with reduced energy consumption, aligning with green data center initiatives.

Key Players and Industry Leaders

Prominent companies shaping the data center accelerator landscape include:

NVIDIA Corporation – A global leader in GPUs powering AI, gaming, and cloud computing.

Intel Corporation – Investing heavily in FPGA and ASIC-based accelerators.

Advanced Micro Devices (AMD) – Recently expanded its EPYC CPU lineup for data centers.

Meta Inc. – Introduced Meta Training and Inference Accelerator (MTIA) chips for internal AI applications.

Google (Alphabet Inc.) – Continues deploying TPUs across its cloud platforms.

Other notable players include Huawei Technologies, Cisco Systems, Dell Inc., Fujitsu, Enflame Technology, Graphcore, and SambaNova Systems.

Recent Developments

March 2023 – NVIDIA introduced a comprehensive Data Center Platform strategy at GTC 2023 to address diverse computational requirements.

June 2023 – AMD launched new EPYC CPUs designed to complement GPU-powered accelerator frameworks.

2023 – Meta Inc. revealed the MTIA chip to improve performance for internal AI workloads.

2023 – Intel announced a four-year roadmap for data center innovation focused on Infrastructure Processing Units (IPUs).

Gain an understanding of key findings from our Report in this sample - https://www.transparencymarketresearch.com/sample/sample.php?flag=S&rep_id=82760

Market Opportunities

Edge Data Center Integration: As computing shifts closer to the edge, opportunities arise for compact and energy-efficient accelerators in edge data centers for real-time analytics and decision-making.

AI in Healthcare and Automotive: As AI adoption grows in precision medicine and autonomous vehicles, demand for accelerators tuned for domain-specific processing will soar.

Emerging Markets: Rising digitization in emerging economies presents substantial opportunities for data center expansion and accelerator deployment.

Future Outlook

With AI, ML, and analytics forming the foundation of next-generation applications, the demand for enhanced computational capabilities will continue to climb. By 2031, the data center accelerator market will likely transform into a foundational element of global IT infrastructure.

Analysts anticipate increasing collaboration between hardware manufacturers and AI software developers to optimize performance across the board. As digital transformation accelerates, companies investing in custom accelerator architectures will gain significant competitive advantages.

Market Segmentation

By Type:

Central Processing Unit (CPU)

Graphics Processing Unit (GPU)

Application-Specific Integrated Circuit (ASIC)

Field-Programmable Gate Array (FPGA)

Others

By Application:

Advanced Data Analytics

AI/ML Training and Inference

Computing

Security and Encryption

Network Functions

Others

Regional Insights

Asia Pacific dominates the global market due to explosive digital content consumption and rapid infrastructure development in countries such as China, India, Japan, and South Korea.

North America holds a significant share due to the presence of major cloud providers, AI startups, and heavy investment in advanced infrastructure. The U.S. remains a critical hub for data center deployment and innovation.

Europe is steadily adopting AI and cloud computing technologies, contributing to increased demand for accelerators in enterprise data centers.

Why Buy This Report?

Comprehensive insights into market drivers, restraints, trends, and opportunities

In-depth analysis of the competitive landscape

Region-wise segmentation with revenue forecasts

Includes strategic developments and key product innovations

Covers historical data from 2017 and forecast till 2031

Delivered in convenient PDF and Excel formats

Frequently Asked Questions (FAQs)

1. What was the size of the global data center accelerator market in 2022? The market was valued at US$ 14.4 Bn in 2022.

2. What is the projected market value by 2031? It is projected to reach US$ 89.8 Bn by the end of 2031.

3. What is the key factor driving market growth? The surge in demand for AI/ML processing and high-performance computing is the major driver.

4. Which region holds the largest market share? Asia Pacific is expected to dominate the global data center accelerator market from 2023 to 2031.

5. Who are the leading companies in the market? Top players include NVIDIA, Intel, AMD, Meta, Google, Huawei, Dell, and Cisco.

6. What type of accelerator dominates the market? GPUs currently dominate the market due to their parallel processing efficiency and widespread adoption in AI/ML applications.

7. What applications are fueling growth? Applications like AI/ML training, advanced analytics, and network security are major contributors to the market's growth.

Explore Latest Research Reports by Transparency Market Research:

Tactile Switches Market: https://www.transparencymarketresearch.com/tactile-switches-market.html

GaN Epitaxial Wafers Market: https://www.transparencymarketresearch.com/gan-epitaxial-wafers-market.html

Silicon Carbide MOSFETs Market: https://www.transparencymarketresearch.com/silicon-carbide-mosfets-market.html

Chip Metal Oxide Varistor (MOV) Market: https://www.transparencymarketresearch.com/chip-metal-oxide-varistor-mov-market.html

About Transparency Market Research

Transparency Market Research, a global market research company registered in Wilmington, Delaware, United States, provides custom research and consulting services. Our exclusive blend of quantitative forecasting and trends analysis provides forward-looking insights for thousands of decision makers. Our experienced team of Analysts, Researchers, and Consultants uses proprietary data sources and various tools and techniques to gather and analyze information. Our data repository is continuously updated and revised by a team of research experts, so that it always reflects the latest trends and information. With a broad research and analysis capability, Transparency Market Research employs rigorous primary and secondary research techniques in developing distinctive data sets and research material for business reports.

Contact: Transparency Market Research Inc., Corporate Headquarters, Downtown, 1000 N. West Street, Suite 1200, Wilmington, Delaware 19801, USA

Tel: +1-518-618-1030

USA - Canada Toll Free: 866-552-3453

Website: https://www.transparencymarketresearch.com

Email: [email protected]


HPE Servers' Performance in Data Centers

HPE servers are widely regarded as high-performing, reliable, and well-suited for enterprise data center environments, consistently ranking among the top vendors globally. Here’s a breakdown of their performance across key dimensions:

1. Reliability & Stability (RAS Features)

Mission-Critical Uptime: HPE ProLiant (Gen10/Gen11), Synergy, and Integrity servers incorporate robust RAS (Reliability, Availability, Serviceability) features:

iLO (Integrated Lights-Out): Advanced remote management for monitoring, diagnostics, and repairs.

Smart Array Controllers: Hardware RAID with cache protection against power loss.

Silicon Root of Trust: Hardware-enforced security against firmware tampering.

Predictive analytics via HPE InfoSight for preemptive failure detection.

Result: High MTBF (Mean Time Between Failures) and minimal unplanned downtime.

2. Performance & Scalability

Latest Hardware: Support for newest Intel Xeon Scalable & AMD EPYC CPUs, DDR5 memory, PCIe 5.0, and high-speed NVMe storage.

Workload-Optimized:

ProLiant DL/ML: Versatile for virtualization, databases, and HCI.

Synergy: Composable infrastructure for dynamic resource pooling.

Apollo: High-density compute for HPC/AI.

Scalability: Modular designs (e.g., Synergy frames) allow scaling compute/storage independently.

3. Management & Automation

HPE OneView: Unified infrastructure management for servers, storage, and networking (automates provisioning, updates, and compliance).

Cloud Integration: Native tools for hybrid cloud (e.g., HPE GreenLake) and APIs for Terraform/Ansible.

HPE InfoSight: AI-driven analytics for optimizing performance and predicting issues.

4. Energy Efficiency & Cooling

Silent Smart Cooling: Dynamic fan control tuned for variable workloads.

Thermal Design: Optimized airflow (e.g., HPE Apollo 4000 supports direct liquid cooling).

Energy Star Certifications: ProLiant servers often exceed efficiency standards, reducing power/cooling costs.

5. Security

Firmware Integrity: Silicon Root of Trust ensures secure boot.

Cyber Resilience: Runtime intrusion detection, encrypted memory (AMD SEV-SNP, Intel SGX), and secure erase.

Zero Trust Architecture: Integrated with HPE Aruba networking for end-to-end security.

6. Hybrid Cloud & Edge Integration

HPE GreenLake: Consumption-based "as-a-service" model for on-premises data centers.

Edge Solutions: Compact servers (e.g., Edgeline EL8000) for rugged/remote deployments.

7. Support & Services

HPE Pointnext: Proactive 24/7 support, certified spare parts, and global service coverage.

Firmware/Driver Ecosystem: Regular updates with long-term lifecycle support.

Ideal Use Cases

Enterprise Virtualization: VMware/Hyper-V clusters on ProLiant.

Hybrid Cloud: GreenLake-managed private/hybrid environments.

AI/HPC: Apollo systems for GPU-heavy workloads.

SAP/Oracle: Mission-critical applications on Superdome Flex.

Considerations & Challenges

Cost: Premium pricing vs. white-box/OEM alternatives.

Complexity: Advanced features (e.g., Synergy/OneView) require training.

Ecosystem Lock-in: Best with HPE storage/networking for full integration.

Competitive Positioning

vs Dell PowerEdge: Comparable performance; HPE leads in composable infrastructure (Synergy) and AI-driven ops (InfoSight).

vs Cisco UCS: UCS excels in unified networking; HPE offers broader edge-to-cloud portfolio.

vs Lenovo ThinkSystem: Similar RAS; HPE has stronger hybrid cloud services (GreenLake).

Summary: HPE Server Strengths in Data Centers

Reliability: Industry-leading RAS + iLO management.

Automation: AI-driven ops (InfoSight) + composability (Synergy).

Efficiency: Energy-optimized designs + liquid cooling support.

Security: End-to-end Zero Trust + firmware hardening.

Hybrid Cloud: GreenLake consumption model + consistent API-driven management.

Bottom Line: HPE servers excel in demanding, large-scale data centers prioritizing stability, automation, and hybrid cloud flexibility. While priced at a premium, their RAS capabilities, management ecosystem, and global support justify the investment for enterprises with critical workloads. For SMBs or hyperscale web-tier deployments, cost may drive consideration of alternatives.


Data Center Liquid Cooling Market Size, Forecast & Growth Opportunities

In 2025 and beyond, the data center liquid cooling market size is poised for significant growth, reshaping the cooling landscape of hyperscale and enterprise data centers. As data volumes surge due to cloud computing, AI workloads, and edge deployments, traditional air-cooling systems are struggling to keep up. Enter liquid cooling—a next-gen solution gaining traction among CTOs, infrastructure heads, and facility engineers globally.

Market Size Overview: A Surge in Demand

The global data center liquid cooling market is projected to reach USD 21.14 billion by 2030, growing at a CAGR of over 33.2% between 2025 and 2030. This growth is fueled by escalating energy costs, rising server rack density, and the drive for energy-efficient and sustainable operations.

This growth is also spurred by tech giants like Google, Microsoft, and Meta aggressively investing in high-density AI data centers, where air cooling simply cannot meet the thermal requirements.

What’s Driving the Market Growth?

AI & HPC Workloads: The rise of artificial intelligence (AI), deep learning, and high-performance computing (HPC) applications demands massive processing power, generating heat loads that exceed air cooling thresholds.

Edge Computing Expansion: With 5G and IoT adoption, edge data centers are becoming mainstream. These compact centers often lack space for elaborate air-cooling systems, making liquid cooling ideal.

Sustainability Mandates: Governments and corporations are pushing toward net-zero carbon goals. Liquid cooling can reduce power usage effectiveness (PUE) and water usage, aligning with green data center goals.

Space and Energy Efficiency: Liquid cooling systems allow for greater rack density, reducing the physical footprint and optimizing cooling efficiency, which directly translates to lower operational costs.
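Power usage effectiveness (PUE), mentioned in the drivers above, is the ratio of total facility power to the power drawn by IT equipment alone, so a value closer to 1.0 means less overhead. The sketch below shows the calculation; the kilowatt figures are hypothetical and chosen only to illustrate how lower cooling overhead lowers PUE.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power usage effectiveness: total facility power divided by IT power."""
    if it_equipment_kw <= 0:
        raise ValueError("it_equipment_kw must be positive")
    return total_facility_kw / it_equipment_kw


# Hypothetical facility with 1,000 kW of IT load under two cooling designs.
IT_LOAD_KW = 1000.0
air_cooled_pue = pue(total_facility_kw=1500.0, it_equipment_kw=IT_LOAD_KW)
liquid_cooled_pue = pue(total_facility_kw=1150.0, it_equipment_kw=IT_LOAD_KW)

print(f"Air-cooled PUE (hypothetical):    {air_cooled_pue:.2f}")
print(f"Liquid-cooled PUE (hypothetical): {liquid_cooled_pue:.2f}")
```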

Key Technology Trends Reshaping the Market

Direct-to-Chip (D2C) Cooling: Coolant circulates directly to the heat source, offering precise thermal management.

Immersion Cooling: Servers are submerged in thermally conductive dielectric fluid, offering superior heat dissipation.

Rear Door Heat Exchangers: These allow retrofitting of existing setups with minimal disruption.

Modular Cooling Systems: Plug-and-play liquid cooling solutions that reduce deployment complexity in edge and micro-data centers.

Regional Insights: Where the Growth Is Concentrated

North America leads the market, driven by early technology adoption and hyperscale investments.

Asia-Pacific is witnessing exponential growth, especially in India, China, and Singapore, where government-backed digitalization and smart city projects are expanding rapidly.

Europe is catching up fast, with sustainability regulations pushing enterprises to adopt liquid cooling for energy-efficient operations.


Key Players in the Liquid Cooling Space

Some of the major players influencing the data center liquid cooling market size include:

Vertiv Holdings

Schneider Electric

LiquidStack

Submer

Iceotope Technologies

Asetek

Midas Green Technologies

These innovators are offering scalable and energy-optimized solutions tailored for the evolving data center architecture.

Forecast Outlook: What CTOs Need to Know

CTOs must now factor in thermal design power (TDP) thresholds, AI-driven workloads, and sustainability mandates in their IT roadmap. Liquid cooling is no longer experimental—it is a strategic infrastructure choice.

By 2027, more than 40% of new data center builds are expected to integrate liquid cooling systems, according to recent industry forecasts. This shift will dramatically influence procurement strategies, energy models, and facility designs.


Conclusion:

The data center liquid cooling market is set to witness a paradigm shift in the coming years. With its ability to handle intense compute loads, reduce energy consumption, and offer environmental benefits, liquid cooling is becoming a must-have for forward-thinking organizations. Organizations should evaluate and invest in liquid cooling infrastructure now, not just to stay competitive but to future-proof their data center operations for the AI era.

#data center cooling#liquid cooling market#data center liquid cooling#market forecast#cooling technology trends#data center infrastructure#thermal management#liquid cooling solutions#data center growth#edge computing#HPC cooling#cooling systems market#future of data centers#liquid immersion cooling#server cooling technologies


Discover how InnoChill’s single-phase immersion cooling delivers ultra-efficient, low-PUE solutions for AI-driven data centers and HPC workloads. Cut energy costs, extend server life, and meet sustainability goals today.


Liquid Cooling for Data Center Market Growth Analysis, Market 2025

The global Liquid Cooling for Data Center market was valued at approximately USD 1,982 million in 2023, and it is projected to reach USD 11,101.99 million by 2032, reflecting a robust CAGR of 21.10% during the forecast period. This rapid growth trajectory is attributed to the increasing need for efficient thermal management in data centers, especially as organizations adopt AI, IoT, and other data-intensive technologies.
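The forecast above can be reproduced by compounding the 2023 base value at the stated rate. This sketch assumes 2023 is the base year and that growth compounds once per year through 2032 (nine periods); the function name is illustrative.

```python
def project(start_value: float, annual_rate: float, start_year: int, end_year: int):
    """Return a list of (year, value) pairs compounding annually from start_year."""
    values = []
    value = start_value
    for year in range(start_year, end_year + 1):
        values.append((year, value))
        value *= 1 + annual_rate
    return values


# Figures from the post: USD 1,982 million in 2023, CAGR of 21.10% through 2032.
trajectory = project(1982.0, 0.2110, 2023, 2032)
for year, value in trajectory:
    print(f"{year}: USD {value:,.2f} million")

final_year, final_value = trajectory[-1]
```

The final value lands within rounding distance of the USD 11,101.99 million quoted for 2032.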

Get free sample of this report at : https://www.intelmarketresearch.com/download-free-sample/913/Liquid-Cooling-for-Data-Center-Market+

Liquid cooling for data centers refers to the use of liquid-based technologies, typically water or specialized coolants, to absorb and dissipate heat generated by high-performance computing (HPC) systems, servers, storage devices, and networking hardware. The global liquid cooling for data center market is experiencing rapid growth as hyperscale and enterprise data centers face increasing demands for high performance and energy efficiency. Traditional air cooling methods are struggling to manage the heat generated by high-density computing workloads, especially with the rise of AI, machine learning, and HPC. As a result, liquid cooling technologies such as direct-to-chip and immersion cooling are gaining traction due to their ability to reduce power usage effectiveness (PUE) and support sustainable operations.

For instance, Meta announced plans to deploy immersion cooling technologies across select data centers to reduce energy consumption and carbon footprint, highlighting a shift toward environmentally conscious infrastructure.

Market Size

Global Liquid Cooling for Data Center Market Size and Forecast

In North America, the market was estimated at USD 720.15 million in 2023 and is anticipated to expand at a CAGR of 18.09% from 2025 through 2032. The United States leads the regional market due to the presence of numerous hyperscale data centers and cloud service providers.

The market expansion is also supported by growing investments in green data center infrastructure, along with regulatory mandates aimed at improving energy efficiency and reducing greenhouse gas emissions. The long-term outlook for the liquid cooling market is promising, with continued innovation and adoption of advanced technologies across the globe.

Market Dynamics (Drivers, Restraints, Opportunities, and Challenges)

Drivers

Rising Data Processing Demands Are Driving the Shift to Liquid Cooling

The rise in data processing demands, especially from AI, big data analytics, and high-performance computing (HPC), is one of the main factors propelling the expansion of liquid cooling in data centers. Compared to conventional applications, these workloads produce a lot more heat, which makes air cooling ineffective and expensive. Liquid cooling technologies such as direct-to-chip and immersion cooling offer up to 1,000 times greater heat dissipation efficiency than air-based systems.

For instance, Intel and Submer collaborated to implement next-generation immersion cooling in Intel's data centers, claiming better thermal performance for AI workloads and lower energy consumption. This is in line with a larger trend in the industry, where liquid cooling is being used more and more by hyperscale data centers to preserve operational stability and satisfy ESG objectives.

Restraints

High Initial Investment and Infrastructure Complexity Limit Adoption

Despite its benefits, liquid cooling faces serious barriers to widespread use: a high initial cost and the difficulty of integrating these systems into existing data center infrastructure. Retrofitting legacy buildings frequently requires large-scale redesigns of server racks, plumbing, and safety systems, which can interfere with ongoing operations and raise the risk of downtime. For instance, many small to medium-sized data center operators hesitate to adopt liquid cooling because the costs and operational challenges outweigh the immediate benefits, which slows market penetration.

Opportunities

Growing Demand from Hyperscale Data Centres

A major growth opportunity for the liquid cooling market is the rapid development of hyperscale data centers, fueled by cloud computing giants such as Microsoft Azure, Google Cloud, and Amazon Web Services. To handle the high heat loads produced by dense server configurations, these facilities need cooling solutions that are both scalable and highly efficient. Liquid cooling is one effective way to raise energy efficiency and lower operating expenses at scale. Moreover, the growing demand for high-performance computing and AI workloads has led to significant investments in liquid cooling technologies. Companies are forming strategic partnerships to enhance cooling efficiency and reduce environmental impact.

For instance, in May 2025, Microsoft and NVIDIA announced a collaboration to integrate NVIDIA's next-generation GPUs with Microsoft's liquid cooling systems. This partnership aims to optimize AI workloads by providing efficient thermal management solutions. The integration is expected to enhance computational performance while maintaining energy efficiency.

Challenges

Lack of Industry Standards and Interoperability Slows Adoption

The absence of unified industry standards and interoperability frameworks for liquid cooling systems presents a significant challenge. Data center operators often deal with proprietary solutions that lack compatibility with diverse server hardware, which complicates integration and raises vendor lock-in concerns. For example, while companies like Submer, LiquidStack, and Vertiv offer cutting-edge immersion and direct-to-chip solutions, their systems can differ widely in design, connector types, and thermal interface materials. This fragmentation makes it difficult for operators to scale or switch providers without major redesigns.

According to a survey by Castrol, experts predict that immersion cooling must be implemented within the next three years for the industry to continue seeing performance gains. Operators that do not adopt it risk lagging behind competitors at a time when AI surges are putting data centers under heavy strain.

Regional Analysis

Market Trends by Region

North America remains at the forefront of adopting liquid cooling technologies, primarily driven by the escalating demands of AI and high-performance computing workloads. Meta has initiated a transition to direct-to-chip liquid cooling for its AI infrastructure, aiming to enhance energy efficiency and support higher-density computing.

Europe is witnessing significant advancements, propelled by stringent sustainability goals and innovative collaborations. In Germany, Equinix has partnered with local entities to channel excess heat from its Frankfurt data centres into a district heating system, set to supply approximately 1,000 households starting in 2025. Similarly, in the Netherlands, Equinix signed a letter of intent with the Municipality of Diemen to explore utilizing residual heat from its AM4 data centre to support local heating needs.

Asia-Pacific is emerging as a dynamic market for liquid cooling solutions. In Japan, NTT Communications, in collaboration with Mitsubishi Heavy Industries and NEC, commenced a demonstration of two-phase direct-to-chip cooling in an operational Tokyo data centre. This initiative aims to enhance cooling capacity without significant modifications to existing facilities, aligning with energy-saving and CO₂ reduction goals.

South America's data centre market is experiencing significant growth, driven by the increasing adoption of cloud services, digital transformation initiatives, and a focus on sustainable infrastructure. Amazon Web Services (AWS) announced a $4 billion investment to establish its first data centres in Chile, marking its third cloud region in Latin America after Brazil and Mexico.

The MEA region is witnessing a surge in data centre developments, fueled by digital transformation, increased internet penetration, and government initiatives promoting technological advancement.

Competitor Analysis

Major Players and Market Landscape

The Liquid Cooling for Data Center market is moderately consolidated with several global and regional players competing based on product innovation, energy efficiency, scalability, and reliability.

Key players include:

Vertiv: Offers integrated liquid cooling systems with scalable modularity.

Stulz: Specializes in precision cooling and modular cooling technologies.

CoolIT Systems: Known for direct-to-chip liquid cooling.

Schneider Electric: Provides EcoStruxure cooling systems for high-density environments.

Submer and Green Revolution Cooling: Leaders in immersion cooling solutions.

Most companies are focusing on partnerships, R&D investments, and strategic acquisitions to strengthen their market position and expand their product portfolios.

2025, Intel advanced its Superfluid cooling technology, utilizing microbubble injection and dielectric fluids to improve heat transfer. This innovation supports Nvidia's megawatt-class rack servers, addressing the thermal demands of high-performance AI infrastructures.

October 2024, Schneider Electric agreed to buy a majority share in Motivair Corp., a leader in liquid cooling for high-performance computing, for about USD 850 million. With this acquisition, Schneider Electric hopes to strengthen its standing in the data center cooling industry.

December 2024, Vertiv acquired BiXin Energy (China), specializing in centrifugal chiller technology, enhancing Vertiv's capabilities in high-performance computing and AI cooling solutions.

December 2023, Equinix, Inc., a global digital infrastructure company, announced plans to extend support for cutting-edge liquid cooling technologies, such as direct-to-chip, to over 100 of its International Business Exchange® (IBX®) data centers located in over 45 metropolitan areas worldwide.

December 2023, Vertiv expanded its portfolio of cutting-edge cooling technologies with the acquisition of CoolTera Ltd (UK), a business specializing in liquid cooling infrastructure solutions.

Global Liquid Cooling for Data Center Market: Market Segmentation Analysis

This report provides a deep insight into the global Liquid Cooling for Data Center Market, covering all its essential aspects. This ranges from a macro overview of the market to micro details of the market size, competitive landscape, development trend, niche market, key market drivers and challenges, SWOT analysis, value chain analysis, etc.

The analysis helps readers understand the competition within the industry and shape strategies that improve potential profit. It also provides a simple framework for evaluating and assessing the position of a business. The report structure focuses on the competitive landscape of the Global Liquid Cooling for Data Center Market: it covers in detail the market share, market performance, product situation, and operating situation of the main players, which helps readers identify the main competitors and understand the competitive pattern of the market.

In short, this report is a must-read for industry players, investors, researchers, consultants, business strategists, and anyone who has a stake in, or plans to enter, the Liquid Cooling for Data Center Market.

Market Segmentation (by Cooling Type)

Direct-to-Chip (Cold Plate) Cooling

Immersion Cooling

Other Liquid Cooling Solutions

Market Segmentation (by Data Center Type)

Hyperscale Data Centers

Enterprise Data Centers

Colocation Providers

Modular/Edge Data Centers

Cloud Providers

Market Segmentation (by End Use Industry)

IT & Telecom

BFSI (Banking, Financial Services, and Insurance)

Healthcare

Government & Defense

Energy & Utilities

Manufacturing

Cloud & Hyperscale Providers

Others

Key Companies

Vertiv

Stulz

Midas Immersion Cooling

Rittal

Envicool

CoolIT

Schneider Electric

Sugon

Submer

Huawei

Green Revolution Cooling

Eco-atlas

Geographic Segmentation

North America (USA, Canada, Mexico)

Europe (Germany, UK, France, Russia, Italy, Rest of Europe)

Asia-Pacific (China, Japan, South Korea, India, Southeast Asia, Rest of Asia-Pacific)

South America (Brazil, Argentina, Colombia, Rest of South America)

The Middle East and Africa (Saudi Arabia, UAE, Egypt, Nigeria, South Africa, Rest of MEA)

FAQs:

▶ What is the current market size of the Liquid Cooling for Data Center Market?

As of 2023, the global Liquid Cooling for Data Center market was valued at approximately USD 1,982 million.

▶ Which are the key companies operating in the Liquid Cooling for Data Center Market?

Major players include Vertiv, Stulz, CoolIT Systems, Schneider Electric, Submer, Huawei, and Green Revolution Cooling, among others.

▶ What are the key growth drivers in the Liquid Cooling for Data Center Market?

Key growth drivers include rising power densities in data centers, the demand for energy-efficient solutions, and the growing deployment of AI and HPC applications.

▶ Which regions dominate the Liquid Cooling for Data Center Market?

North America currently leads the market, followed by Europe and Asia-Pacific. Asia-Pacific is expected to witness the fastest growth in the forecast period.

▶ What are the emerging trends in the Liquid Cooling for Data Center Market?

Emerging trends include the growing adoption of immersion cooling, development of sustainable coolant technologies, and integration of AI-based monitoring systems for thermal management.


0 notes

Text

Amazon DCV 2024.0 Supports Ubuntu 24.04 LTS With Security

NICE DCV has a new name. With the 2024.0 release, which also brings improvements and bug fixes, NICE DCV is now known as Amazon DCV.

The DCV protocol that powers Amazon Web Services (AWS) managed services like Amazon AppStream 2.0 and Amazon WorkSpaces is now consistently referred to by the new name.

What’s new with version 2024.0?

Amazon DCV 2024.0 includes a number of improvements for usability, security, and performance. The release adds support for Ubuntu 24.04 LTS, whose extended long-term support eases system maintenance and provides the most recent security patches. The DCV client on Ubuntu 24.04 includes Wayland support, which improves application isolation and graphical rendering efficiency. DCV 2024.0 also enables the QUIC UDP protocol by default, giving clients optimal streaming performance. Finally, the update adds the option to blank the Linux host screen when a remote user connects, blocking local access to and interaction with the remote session.

What is Amazon DCV?

With Amazon DCV, a high-performance remote display protocol, customers can securely deliver remote desktops and application streaming from any cloud or data center to any device, over varying network conditions. Combined with Amazon EC2, Amazon DCV lets customers run graphics-intensive applications remotely on EC2 instances and stream the user interface to simpler client machines, removing the need for expensive dedicated workstations. Customers use Amazon DCV for remote visualization across a wide range of HPC workloads. Services such as Amazon AppStream 2.0, AWS Nimble Studio, and AWS RoboMaker also use the Amazon DCV streaming protocol.

Advantages

Elevated Efficiency

You don’t have to pick between responsiveness and visual quality when using Amazon DCV. With no loss of image accuracy, it can respond to your apps almost instantly thanks to the bandwidth-adaptive streaming protocol.

Reduced Costs

Customers may run graphics-intensive apps remotely and avoid spending a lot of money on dedicated workstations or moving big volumes of data from the cloud to client PCs thanks to a very responsive streaming experience. It also allows several sessions to share a single GPU on Linux servers, which further reduces server infrastructure expenses for clients.

Adaptable Implementations

Service providers have access to a reliable and adaptable protocol for streaming apps that supports both on-premises and cloud usage thanks to browser-based access and cross-OS interoperability.

Entire Security

To protect customer data privacy, it sends pixels rather than geometry. To further guarantee the security of client data, it uses TLS protocol to secure end-user inputs as well as pixels.

Features

In addition to native clients for Windows, Linux, and macOS and an HTML5 client for web browser access, it supports remote environments running both Windows and Linux. Multiple displays, 4K resolution, USB devices, multi-channel audio, smart cards, stylus/touch capabilities, and file redirection are all supported by native clients.

With DCV Session Manager, the lifecycle of Amazon DCV sessions can be created and managed programmatically across a fleet of servers. Developers can build custom Amazon DCV web browser client applications with the Amazon DCV Web Client SDK.

How to Install DCV on Amazon EC2?

Implement:

Sign up for an AWS account and activate it.

Open the AWS Management Console and log in.

Either download and install the relevant Amazon DCV server on your EC2 instance, or choose the proper Amazon DCV AMI from the Amazon Web Services Marketplace, then create an AMI using your application stack.

After confirming that traffic on port 8443 is permitted by your security group’s inbound rules, deploy EC2 instances with the Amazon DCV server installed.

Link:

On your device, download and install the relevant Amazon DCV native client.

Use the web client or native Amazon DCV client to connect to your distant computer at https://:8443.

Stream:

Use Amazon DCV to stream your graphics apps across several devices.
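Before connecting, it can help to confirm that port 8443 is actually reachable through the security group. The sketch below uses only the Python standard library. The function names are hypothetical helpers written for this example, not part of any Amazon DCV tooling, and the check only proves that a TCP connection succeeds, not that a DCV server is answering.

```python
import socket


def dcv_url(host: str, port: int = 8443) -> str:
    """Build the HTTPS URL for a DCV server on the given host and port."""
    return f"https://{host}:{port}"


def port_is_reachable(host: str, port: int = 8443, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout`.

    This is a plain TCP probe: it does not speak TLS or the DCV protocol,
    so a True result only shows that the port is open from this machine.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS resolution failures.
        return False
```

To use it, call `port_is_reachable` with the public DNS name or IP address of your own EC2 instance. If it returns False, the security group's inbound rules are a reasonable first place to look.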

Use cases

Visualization of 3D Graphics

HPC workloads are becoming more complicated and consuming enormous volumes of data in a variety of industrial verticals, including Oil & Gas, Life Sciences, and Design & Engineering. The streaming protocol offered by Amazon DCV makes it unnecessary to send output files to client devices and offers a seamless, bandwidth-efficient remote streaming experience for HPC 3D graphics.

Application Access via a Browser

The Web Client for Amazon DCV is compatible with all HTML5 browsers and offers a mobile device-portable streaming experience. By removing the need to manage native clients without sacrificing streaming speed, the Web Client significantly lessens the operational pressure on IT departments. With the Amazon DCV Web Client SDK, you can create your own DCV Web Client.

Personalized Remote Apps

Custom remote applications and managed services can benefit from how easily the Amazon DCV streaming protocol can be integrated. With native clients that support up to 4 monitors at 4K resolution each, Amazon DCV uses end-to-end AES-256 encryption to safeguard both pixels and end-user inputs.

Amazon DCV Pricing

On AWS:

Using Amazon DCV on AWS does not incur any additional fees. Customers pay only for the EC2 resources they actually use.

On-premises and third-party clouds:

Please get in touch with DCV distributors or resellers in your area for more information about licensing and pricing for Amazon DCV.

Read more on Govindhtech.com

#AmazonDCV#Ubuntu24.04LTS#Ubuntu#DCV#AmazonWebServices#AmazonAppStream#EC2instances#AmazonEC2#News#TechNews#TechnologyNews#Technologytrends#technology#govindhtech

2 notes


Text

High-Performance 2U 4-Node Server – Hexadata HD-H252-Z10

The Hexadata HD-H252-Z10 Ver: Gen001 is a cutting-edge 2U high-density server featuring 4 rear-access nodes, each powered by AMD EPYC™ 7003 series processors. Designed for HPC, AI, and data analytics workloads, it offers 8-channel DDR4 memory across 32 DIMMs, 24 hot-swappable NVMe/SATA SSD bays, and 8 M.2 PCIe Gen3 x4 slots. With advanced features like OCP 3.0 readiness, Aspeed® AST2500 remote management, and a 2000W 80 PLUS Platinum redundant PSU, this server delivers performance and scalability for modern data centers. For more details, visit the Hexadata Server Page.

#High-density server#AMD EPYC Server#2U 4-Node Server#Data Center Solutions#HPC Server#AI Infrastructure#NVMe Storage Server#OCP 3.0 Ready#Remote Server Management#HexaData servers

0 notes

Text

Shell’s immersive cooling liquids the first to receive official certification from Intel

Air-cooling methods for processors and servers handling intense high-performance computing (HPC) and AI workloads have proven to be woefully inadequate, driving up energy use and subsequent costs. Alternative methods have emerged, such as liquid immersion cooling, which uses fluid instead of air to keep electronics from overheating. However, as of yet, there hasn’t been a standard to prove the…

0 notes

Text

Empowering AI Innovation with SharonAI and the NVIDIA L40 GPU

As artificial intelligence (AI), machine learning, and high-performance computing (HPC) continue to evolve, the demand for powerful, scalable infrastructure becomes increasingly urgent. One company rising to meet this demand is SharonAI—a next-generation computing platform designed to provide seamless access to cutting-edge GPU resources. At the core of this offering is the NVIDIA L40 GPU, a powerful graphics processing unit that is engineered to supercharge AI workloads, simulations, and large-scale data processing.

The Growing Need for AI Infrastructure

In recent years, businesses and research institutions have faced mounting challenges in training complex AI models. These models require not just intelligent algorithms but also vast amounts of computing power. Traditional CPUs often fall short in handling the enormous data sets and mathematical computations that underpin today’s AI systems.

This is where SharonAI enters the scene. By offering high-performance GPU resources, SharonAI makes it possible for startups, research teams, and enterprise-level organizations to access the same level of infrastructure once reserved for tech giants.

Why SharonAI Stands Out

SharonAI distinguishes itself by combining three essential components: performance, scalability, and sustainability. Its infrastructure is designed with flexibility in mind, offering both on-demand and term-based GPU server options. Whether you need a single GPU for a brief task or a multi-GPU setup for a month-long project, SharonAI provides the flexibility to choose.

Another compelling aspect of SharonAI is its commitment to eco-conscious innovation. With data centers powered by green energy and plans for net-zero operations, SharonAI is setting a benchmark for sustainability in the tech sector.

The Game-Changer: NVIDIA L40 GPU

The NVIDIA L40 GPU is the technological foundation that powers SharonAI’s infrastructure. Built on the Ada Lovelace architecture, it’s specifically optimized for AI training, rendering, and scientific simulations.

Key Features of the NVIDIA L40 GPU:

18,176 CUDA Cores for ultra-fast parallel processing

568 Tensor Cores for AI acceleration

142 RT Cores for advanced ray tracing

48 GB GDDR6 ECC memory for handling massive data sets

864 GB/s bandwidth to eliminate data bottlenecks

300W power envelope for energy-efficient performance
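A couple of derived figures make the specifications above easier to compare. The sketch below is a back-of-the-envelope calculation, not a benchmark: the class and function names are invented for this example, and the memory sweep time is a theoretical lower bound that ignores real-world overheads.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuSpec:
    """Headline figures for one GPU, as listed in the post."""
    name: str
    cuda_cores: int
    memory_gb: float
    bandwidth_gb_s: float
    power_w: float

    def full_memory_sweep_ms(self) -> float:
        """Lower bound on the time to read all of memory once, in ms."""
        return self.memory_gb / self.bandwidth_gb_s * 1000

    def cuda_cores_per_watt(self) -> float:
        """CUDA cores divided by the rated power envelope."""
        return self.cuda_cores / self.power_w


def aggregate_memory_gb(spec: GpuSpec, gpu_count: int) -> float:
    """Total GPU memory in a server with `gpu_count` identical GPUs."""
    return spec.memory_gb * gpu_count


L40 = GpuSpec("NVIDIA L40", cuda_cores=18_176, memory_gb=48,
              bandwidth_gb_s=864, power_w=300)

print(f"{L40.name}: one full memory sweep takes at least "
      f"{L40.full_memory_sweep_ms():.1f} ms")
print(f"{L40.name}: {L40.cuda_cores_per_watt():.1f} CUDA cores per watt")
print(f"8-GPU server: {aggregate_memory_gb(L40, 8):.0f} GB of GPU memory")
```

The per-GPU figures scale linearly in this sketch, which assumes identical GPUs and says nothing about how memory is shared or interconnected in a multi-GPU server.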

This GPU is a powerhouse that bridges the gap between data center performance and real-time AI application needs. It enables faster training cycles, more accurate simulations, and fluid high-resolution rendering.

Flexible Deployment Options

SharonAI offers tailored solutions based on client needs. For dynamic workloads, on-demand servers with 1 to 8 NVIDIA L40 GPUs are available at hourly rates. For long-term, stable operations, monthly term-based GPU servers offer dedicated resources at competitive pricing. These options make SharonAI accessible for a wide range of users—from solo developers to enterprise AI teams.

Real-World Applications

The integration of the NVIDIA L40 GPU into SharonAI’s infrastructure opens new frontiers across multiple industries:

Healthcare: Accelerates drug discovery and medical imaging through deep learning.

Finance: Powers real-time fraud detection and predictive analytics.

Entertainment: Enhances CGI rendering and visual effects with ultra-fast processing.

Academia and Research: Supports complex simulations in physics, climate science, and bioinformatics.

These use cases showcase the versatility and power of combining SharonAI with the NVIDIA L40 GPU.

Looking Ahead

The future of computing lies in the convergence of AI, big data, and scalable infrastructure. SharonAI, backed by the incredible performance of the NVIDIA L40 GPU, is playing a pivotal role in this transformation. By democratizing access to high-end GPUs, SharonAI is empowering developers, scientists, and businesses to push the boundaries of what’s possible in AI.

Whether you're running a deep learning pipeline, developing real-time applications, or conducting breakthrough research, SharonAI provides the tools and horsepower to get the job done.

0 notes

Text

Server CPU Model Code Analysis

Decoding Server CPU Model Numbers: A Comprehensive Guide

Server CPU model numbers are not arbitrary strings of letters and digits; they encode critical technical specifications, performance tiers, and use-case optimizations. This article breaks down the naming conventions for server processors, using Intel Xeon as a primary example. While other vendors (e.g., AMD EPYC) may follow different rules, the core principles of hierarchical classification and feature encoding remain similar.

1. Brand and Tier Identification

Brand: The prefix identifies the product family. For instance, Intel Xeon denotes a server/workstation-focused processor line.

Tier: Reflects performance and market positioning:

Pre-2017: Tiers were marked by prefixes like E3 (entry-level), E5 (mid-range), and E7 (high-end).

Post-2017: Intel introduced a metal-based tiering system:

Platinum: Models start with 8 or 9 (e.g., 8480H). Designed for mission-critical workloads, these CPUs support maximum core counts, advanced UPI interconnects, and enterprise-grade features.

Gold: Begins with 5 or 6 (e.g., 6448Y). Targets general-purpose servers and balanced performance.

Silver: Starts with 4 (e.g., 4410T). Optimized for lightweight workloads and edge computing.

2. Generation Identifier

The first digit after the tier indicates the processor generation. Higher numbers represent newer architectures:

1: 1st Gen Scalable Processors (2017, Skylake-SP, 14nm).

2: 2nd Gen Scalable Processors (2019, Cascade Lake, 14nm).

3: 3rd Gen Scalable Processors (2020–2021, Ice Lake/Cooper Lake, 10nm/14nm).

4: 4th Gen Scalable Processors (2023, Sapphire Rapids, Intel 7 process, Golden Cove architecture).

Example: In Platinum 8462V, the “4” signifies a 4th Gen (Sapphire Rapids) CPU.

3. SKU Number

The last two digits differentiate SKUs within the same generation and tier. Higher SKU numbers generally imply better performance (e.g., more cores, larger cache):

Example: Gold 6448Y vs. Gold 6468Y: The latter (SKU 68) has more cores and higher clock speeds than the former (SKU 48).

4. Suffix Letters

Suffixes denote specialized features or optimizations:

C: Single-socket only (no multi-CPU support).

N: Enhanced for networking/NFV (Network Functions Virtualization).

T: Long-lifecycle support (10+ years).

Q: Liquid-cooling compatibility.

P/V: Optimized for cloud workloads (P for IaaS, V for SaaS).

Example: 4410T includes the “T” suffix for extended reliability in industrial applications.
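The conventions in sections 1 to 4 can be sketched as a small parser. This is a minimal illustration, not an official decoder: the function and class names are hypothetical, the lookup tables contain only the tiers, generations, and suffixes listed in this article, and model numbers with other formats (for example a trailing "+") are rejected rather than guessed at.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Lookup tables taken from this article only; they are not exhaustive.
TIERS = {"8": "Platinum", "9": "Platinum", "5": "Gold", "6": "Gold", "4": "Silver"}
GENERATIONS = {
    "1": "1st Gen (Skylake-SP)",
    "2": "2nd Gen (Cascade Lake)",
    "3": "3rd Gen (Ice Lake/Cooper Lake)",
    "4": "4th Gen (Sapphire Rapids)",
}
SUFFIXES = {
    "C": "Single-socket only",
    "N": "Networking/NFV",
    "T": "Long-lifecycle support",
    "Q": "Liquid-cooling compatibility",
    "P": "Cloud-optimized (IaaS)",
    "V": "Cloud-optimized (SaaS)",
}

# Tier digit, generation digit, two SKU digits, optional suffix letters.
MODEL_PATTERN = re.compile(r"^(\d)(\d)(\d{2})([A-Z]*)$")


@dataclass(frozen=True)
class XeonModel:
    tier: str
    generation: str
    sku: str
    suffix: str
    suffix_meaning: Optional[str]  # None when the suffix is not in SUFFIXES


def parse_xeon_model(model: str) -> XeonModel:
    """Split a model number such as '8462V' into the fields described above."""
    match = MODEL_PATTERN.match(model.strip().upper())
    if match is None:
        raise ValueError(f"unsupported model number format: {model!r}")
    tier_digit, gen_digit, sku, suffix = match.groups()
    return XeonModel(
        tier=TIERS.get(tier_digit, "Unknown"),
        generation=GENERATIONS.get(gen_digit, "Unknown"),
        sku=sku,
        suffix=suffix,
        suffix_meaning=SUFFIXES.get(suffix),
    )
```

For example, `parse_xeon_model("8462V")` yields tier Platinum, generation "4th Gen (Sapphire Rapids)", SKU "62", and suffix "V". Suffixes the article does not list, such as "Y" or "H", parse successfully but come back with `suffix_meaning` set to `None`.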

5. Architecture and Interconnect Technologies

On-Die Architecture:

Ring Bus (pre-2017): Limited scalability due to latency spikes as core counts increased.

Mesh Architecture (post-2017): Grid-based core layout improves scalability (e.g., up to 40 cores in Ice Lake).

Interconnects:

UPI (Ultra Path Interconnect): Facilitates communication between multiple CPUs. Platinum-tier CPUs often support 3–4 UPI links (10.4–20.8 GT/s).

PCIe Support: Newer generations integrate updated PCIe standards (e.g., Sapphire Rapids supports PCIe 5.0).

Application-Based Selection Guide

High-Performance Computing (HPC): Prioritize Platinum CPUs (e.g., 8480H) with high core counts and UPI bandwidth.

Cloud Infrastructure: Choose P (IaaS) or V (SaaS) variants (e.g., 8462V).

Edge/Telecom: Opt for N-suffix models (e.g., 6338N) with network acceleration.

Industrial/Embedded Systems: Select T-suffix CPUs (e.g., 4410T) for extended lifecycle support.

Server CPU model numbers act as a shorthand for technical capabilities, enabling IT teams to quickly assess a processor’s performance tier, generation, and specialized features. By understanding these codes, organizations can align hardware choices with workload demands—whether deploying AI clusters, cloud-native apps, or ruggedized edge systems. For precise specifications, always cross-reference vendor resources like Intel’s ARK database or AMD’s technical briefs.

0 notes

Text

GPU Dedicated Servers for AI, ML & HPC Workloads

Discover the power of GPU dedicated servers for AI, machine learning, and high-performance computing. Achieve faster model training, advanced data processing, and superior performance with scalable, enterprise-grade GPU solutions.

Click Now :- https://www.infinitivehost.com/gpu-dedicated-server

1 note
