#Trusted-ai-audio-annotation


https://pixelannotation.com/audio-annotation-services/

#Trusted-ai-audio-annotation-services-in-india#Trusted-ai-audio-annotation-company#Trusted-ai-audio-annotation


From Talks to Timeless Records: The Hidden Strength of Precision Transcription

Accurate transcription can change how knowledge travels through time. A clear record of spoken words becomes a reliable reference, a teaching tool, and even a historical artifact. This article uncovers why precision transcription matters far beyond simple typing, and how it transforms lectures and interviews into lasting legacies.

When Every Word Counts

Imagine a landmark lecture slipping through memory’s cracks. Key insights vanish. Precise transcription captures each nuance. It picks up on tone shifts, pauses, and emphasis—the subtle markers that give speech its full meaning. Capturing these details creates a document that reads with the speaker’s original intent intact. And that level of fidelity can influence research, policy decisions, and cultural archives for decades.

Behind the Scenes of Quality Transcripts

Transcription isn’t just fast typing. It demands subject‑matter knowledge and sharp listening skills. Transcribers learn to navigate accents, jargon, and technical terms. They distinguish overlapping voices and mark speaker changes. This craft often combines human expertise with AI tools. The human ear corrects machine misreads. Together they form a workflow that balances speed with nuance.

Integrating Tech Without Losing Human Touch

Modern platforms offer automated transcription. They promise instant outputs at a click. But raw AI text can misinterpret specialized vocabulary or context. That’s where human editors step in. They refine timestamps, validate terminology, and ensure logical flow. This blend of automation and human review defines the best transcription services available today.
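Here is a minimal sketch of that human review step. It assumes the speech recognition engine reports a confidence score for each word. The `Word` type, the 0.85 threshold, and the `[[...]]` marking are illustrative choices, not any particular platform's format. Words below the threshold are wrapped so a human editor can check them first.

```python
from dataclasses import dataclass


@dataclass
class Word:
    text: str
    start: float       # seconds from the start of the recording
    end: float
    confidence: float  # 0.0 to 1.0, as reported by the (hypothetical) ASR engine


def flag_for_review(words, threshold=0.85):
    """Return indices of words whose confidence falls below the threshold."""
    return [i for i, w in enumerate(words) if w.confidence < threshold]


def render_with_flags(words, threshold=0.85):
    """Render the transcript, wrapping low-confidence words in [[...]] for the editor."""
    flagged = set(flag_for_review(words, threshold))
    return " ".join(f"[[{w.text}]]" if i in flagged else w.text
                    for i, w in enumerate(words))


words = [
    Word("the", 0.0, 0.2, 0.99),
    Word("mitochondrial", 0.2, 0.9, 0.62),  # specialized term the engine is unsure of
    Word("genome", 0.9, 1.3, 0.95),
]
print(render_with_flags(words))  # the [[mitochondrial]] genome
```

In practice the threshold would be tuned per project. A lower value sends fewer words to editors but lets more machine errors through.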

Why Scholars Rely on Precision

Academic research hinges on credible sources. A misquoted phrase can derail an argument or misrepresent findings. Researchers often turn to academic transcription services for dissertation interviews, focus groups, and oral histories. These providers follow strict confidentiality protocols and deliver documents formatted to meet publication standards. The result is a trustworthy foundation for rigorous scholarship.

Beyond Academia: Business and Media Uses

Transcription drives content creation in marketing, journalism, and legal fields. Video producers extract captions for SEO and accessibility. Lawyers use verbatim court transcripts for appeals. Podcasters publish show notes that improve discoverability and user engagement. In each case, accuracy shapes credibility and audience trust.

Extra Layers: Timestamping and Metadata

Good transcripts include more than plain text. They feature timestamps that link text to audio or video. They log speaker identities and annotate non‑verbal cues like laughter or applause. Metadata tags highlight keywords and themes, making documents searchable. This structure turns raw transcripts into dynamic research tools.
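The sketch below shows one rough way to represent that structure in Python. The `Segment` fields, helper names, and sample data are hypothetical. Real projects often export to subtitle formats such as SRT or WebVTT instead. Each segment ties text to a time range and a speaker, carries non-verbal cues inline, and holds keyword tags that make the transcript searchable.

```python
from dataclasses import dataclass, field


@dataclass
class Segment:
    start: float   # seconds into the recording
    end: float
    speaker: str
    text: str
    tags: list = field(default_factory=list)  # keyword/theme metadata


def fmt_ts(seconds):
    """Format seconds as HH:MM:SS for display next to the text."""
    s = int(seconds)
    return f"{s // 3600:02d}:{s % 3600 // 60:02d}:{s % 60:02d}"


def segment_at(segments, t):
    """Return the segment playing at time t (seconds), or None."""
    for seg in segments:
        if seg.start <= t < seg.end:
            return seg
    return None


def find_by_tag(segments, tag):
    """Return start timestamps of segments carrying the given metadata tag."""
    return [fmt_ts(seg.start) for seg in segments if tag in seg.tags]


transcript = [
    Segment(0.0, 4.5, "Interviewer", "Tell me about the 1968 harvest."),
    Segment(4.5, 12.0, "Participant", "It was a hard year. [laughter]", ["farming", "1968"]),
    Segment(3725.0, 3731.0, "Participant", "We sold the land in 1975.", ["farming"]),
]
print(find_by_tag(transcript, "farming"))   # ['00:00:04', '01:02:05']
print(segment_at(transcript, 5.0).speaker)  # Participant
```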

Training Transcribers: The Human Element

Apprenticeship remains vital. New transcribers learn through hands‑on practice under mentors. They refine ear training by working on diverse audio samples—from noisy conference halls to intimate interviews. Peer reviews and feedback loops help maintain high standards. This communal learning fosters a culture of continual improvement.

Ethics and Confidentiality

Handling sensitive material requires discretion. Professional transcribers abide by non‑disclosure agreements and secure data protocols. They respect privacy laws and data‑protection regulations. Transparent workflows and encrypted file storage ensure client trust. In fields like medicine or law, this rigor is non‑negotiable.

Future Horizons: AI and Beyond

AI models continue to evolve, offering better speech recognition and language understanding. As they improve, turnaround times shrink and costs drop. Yet human oversight remains essential. Transcription will likely shift toward real‑time editing and multilingual support. Regardless of tools, the core mission stays the same: to preserve every spoken insight faithfully.

Conclusion

Precision transcription bridges the gap between spoken word and enduring record. It safeguards knowledge, supports scholarship, and empowers diverse industries. By combining human expertise with smart technology, today’s transcription services ensure that lectures, interviews, and meetings leave an indelible mark. Quality transcripts transform fleeting talks into timeless legacies.


Accelerate AI Development

Artificial Intelligence (AI) is no longer a futuristic concept — it’s a present-day driver of innovation, efficiency, and automation. From self-driving cars to intelligent customer service chatbots, AI is reshaping the way industries operate. But behind every smart algorithm lies an essential component that often doesn’t get the spotlight it deserves: data.

No matter how advanced an AI model may be, its potential is directly tied to the quality, volume, and relevance of the data it’s trained on. That’s why companies looking to move fast in AI development are turning their attention to something beyond algorithms: high-quality, ready-to-use datasets.

The Speed Factor in AI

Time-to-market is critical. Whether you’re a startup prototyping a new feature or a large enterprise deploying AI at scale, delays in sourcing, cleaning, and labeling data can slow down innovation. Traditional data collection methods — manual scraping, internal sourcing, or custom annotation — can take weeks or even months. This timeline doesn’t align with the rapid iteration cycles that AI teams are expected to maintain.

The solution? Pre-collected, curated datasets that are immediately usable for training machine learning models.

Why Pre-Collected Datasets Matter

Pre-collected datasets offer a shortcut without compromising on quality. These datasets are:

Professionally Curated: Built with consistency, structure, and clear labeling standards.

Domain-Specific: Tailored to key AI areas like computer vision, natural language processing (NLP), and audio recognition.

Scalable: Ready to support models at different stages of development — from testing hypotheses to deploying production systems.

Instead of spending months building your own data pipeline, you can start training and refining your models from day one.

Use Cases That Benefit

The applications of AI are vast, but certain use cases especially benefit from rapid access to quality data:

Computer Vision: For tasks like facial recognition, object detection, autonomous driving, and medical imaging, visual datasets are vital. High-resolution, diverse, and well-annotated images can shave weeks off development time.

Natural Language Processing (NLP): Chatbots, sentiment analysis tools, and machine translation systems need text datasets that reflect linguistic diversity and nuance.

Audio AI: Whether it’s voice assistants, transcription tools, or sound classification systems, audio datasets provide the foundation for robust auditory understanding.

With pre-curated datasets available, teams can start experimenting, fine-tuning, and validating their models immediately — accelerating everything from R&D to deployment.

Data Quality = Model Performance

It’s a simple equation: garbage in, garbage out. The best algorithms can’t overcome poor data. And while it’s tempting to rely on publicly available datasets, they’re often outdated, inconsistent, or not representative of real-world complexity.

Using high-quality, professionally sourced datasets ensures that your model is trained on the type of data it will encounter in the real world. This improves performance metrics, reduces bias, and increases trust in your AI outputs — especially critical in sensitive fields like healthcare, finance, and security.

Save Time, Save Budget

Data acquisition can be one of the most expensive parts of an AI project. It requires technical infrastructure, human resources for annotation, and ongoing quality control. By purchasing pre-collected data, companies reduce:

Operational Overhead: No need to build an internal data pipeline from scratch.

Hiring Costs: Avoid the expense of large annotation or data engineering teams.

Project Delays: Eliminate waiting periods for data readiness.

It’s not just about moving fast — it’s about being cost-effective and agile.

Build Better, Faster

When you eliminate the friction of data collection, you unlock your team’s potential to focus on what truly matters: experimentation, innovation, and performance tuning. You free up data scientists to iterate more often. You allow product teams to move from ideation to MVP more quickly. And you increase your competitive edge in a fast-moving market.

Where to Start

If you’re looking to power up your AI development with reliable data, explore BuyData.Pro. We provide a wide range of high-quality, pre-labeled datasets in computer vision, NLP, and audio. Whether you’re building your first model or optimizing one for production, our datasets are built to accelerate your journey.

Website: https://buydata.pro Contact: [email protected]


Powering the AI Revolution Starts with Wisepl: Where Intelligence Meets Precision

Wisepl specializes in high-quality data labeling services that serve as the backbone of every successful AI model. From autonomous vehicles to agriculture and from healthcare to NLP, we annotate with accuracy, speed, and integrity.

🔹 Manual & Semi-Automated Labeling
🔹 Bounding Boxes | Polygons | Keypoints | Segmentation
🔹 Image, Video, Text, and Audio Annotation
🔹 Multilingual & Domain-Specific Expertise
🔹 Industry-Specific Use Cases: Medical, Legal, Automotive, Drones, Retail

India-based. Globally Trusted. AI-Focused.

Let your AI see the world clearly, through Wisepl’s eyes.

Ready to scale your AI training? Contact us now at www.wisepl.com or [email protected]. Because every smart machine needs smart data.

#Wisepl#DataAnnotation#AITrainingData#MachineLearning#ArtificialIntelligence#DataLabeling#ComputerVision#NLP#DeepLearning#IndianAI#TechKerala#StartupIndia#AIDevelopment#VisionAI#SmartDataSmartAI#PrecisionLabeling#WiseplAI#AIProjects#GlobalAnnotationPartner


Streamline Your AI Development Process with EnFuse Solutions' Data Labeling Services

EnFuse Solutions offers expert data labeling services to streamline your AI development. Whether your project involves images, text, audio, or video, they provide reliable annotations that boost model accuracy. Trust EnFuse to handle complex labeling tasks while you focus on building smarter, faster, and more effective AI applications. Visit here to see how EnFuse’s expert data labeling elevates your AI model performance: https://www.enfuse-solutions.com/services/ai-ml-enablement/labeling-curation/

#DataLabeling#DataLabelingServices#DataCurationServices#ImageLabeling#AudioLabeling#VideoLabeling#TextLabeling#DataLabelingCompaniesIndia#DataLabelingAndAnnotation#AnnotationServices#EnFuseSolutions#EnFuseSolutionsIndia


Essential Meeting Room Technologies for Modern Offices in 2025

The modern office is evolving, and meeting room technology sits at the core of this transformation. In 2025, companies of all sizes are investing in smart, connected solutions that improve collaboration, reduce downtime, and support hybrid teams. Whether you're a startup or a large corporation, upgrading your meeting and conference room technology is no longer optional.

Below are the must-have technologies reshaping the modern meeting in 2025.

1. Smart Video Conferencing Solutions

With remote and hybrid work being the order of the day, video conferencing solutions have established themselves at the center of workplace communication. Modern-day solutions now offer high-definition video, noise cancellation, auto-framing cameras, and seamless integration with platforms like Zoom, Microsoft Teams, and Google Meet.

In Australia and beyond, organizations are prioritizing video conferencing setups that support real-time collaboration across locations. Whether you are hosting client meetings or team brainstorming sessions, a well-designed system keeps every call clear and professional from start to finish.

2. AI-Powered Meeting Assistants

Artificial intelligence helps meetings run efficiently. AI assistants can transcribe meetings in real time, identify key points, and assign tasks from the discussion. They free participants from taking manual minutes and keep the conversation between partners going after the meeting has ended.

When integrated into your meeting room hardware, AI assistants can also automate room scheduling, adjust lighting, and manage AV settings based on preferences or time of day.

3. Interactive Displays and Digital Whiteboards

Forget traditional whiteboards. In 2025, meeting room technology embraces interactive displays that support live annotation, touchscreen input, and wireless content sharing. These smart boards let teams brainstorm, draw, and present ideas in real time, whether participants are in the room or dialing in from the other side of the world.

Many meeting and conference room technologies now feature multi-user collaboration, cloud integration, and easy export options, making idea sharing fast and frictionless.

4. Advanced AV (Audio-Visual) Solutions

Clear communication starts with quality AV. Modern meeting room AV solutions combine omnidirectional microphones, ceiling-mounted speakers, and smart audio processing to minimize echo and background noise, so every participant comes through clearly.

For visual clarity, 4K displays, laser projectors, and wide-angle cameras work together to ensure every participant is both seen and heard. Because trust-building and active engagement go hand in hand, solid AV technology is a worthwhile investment, especially for high-stakes client or boardroom meetings.

5. Seamless Room Booking and Scheduling Systems

Time is money. Smart scheduling systems help employees find and book available rooms with ease. They integrate with existing calendars and display real-time availability outside each meeting room.

Some systems also support contactless check-in via smartphone or RFID access card. Combined with occupancy sensors and analytics, these room booking systems provide insight into how spaces are actually used, helping companies optimize resources and cut overhead costs.
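Under the hood, any booking system must answer a core question: does a requested slot clash with existing reservations? The sketch below is a minimal version of that check. It assumes bookings are stored as half-open `[start, end)` intervals, so back-to-back meetings do not conflict. The function names are hypothetical and are not taken from any specific product.

```python
from datetime import datetime


def overlaps(a_start, a_end, b_start, b_end):
    """Half-open intervals [start, end) overlap iff each starts before the other ends."""
    return a_start < b_end and b_start < a_end


def can_book(existing, start, end):
    """Return True if [start, end) does not clash with any existing booking."""
    if start >= end:
        raise ValueError("booking must end after it starts")
    return not any(overlaps(start, end, s, e) for s, e in existing)


day = datetime(2025, 3, 10)
bookings = [
    (day.replace(hour=9), day.replace(hour=10)),
    (day.replace(hour=13), day.replace(hour=14, minute=30)),
]
print(can_book(bookings, day.replace(hour=10), day.replace(hour=11)))  # True (back-to-back is fine)
print(can_book(bookings, day.replace(hour=14), day.replace(hour=15)))  # False
```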

6. Secure Cloud Integration

As data privacy becomes a top priority, meeting room hardware now comes with built-in security features. Cloud-based systems with end-to-end encryption help keep confidential meetings private.

In industries where compliance matters, such as finance, law, and healthcare, these cloud platforms also typically provide remote updates, user access controls, and audit trails.

Final Thoughts:

Meeting room technology in 2025 is smarter, faster, and more integrated than ever before. AI assistants, video conferencing tools, intelligent AV systems, and cloud security come together to make meetings productive and seamless.

Whether you are retrofitting your current setup or building a new workspace, now is a good time to consider these essential technologies to future-proof your operations. As demand for agile, tech-enabled collaboration grows, investing in the right meeting and conference room technology will set your business apart.

Ready to Upgrade Your Meeting Room Technology? At Engagis, we help modern offices across Australia transform their meeting spaces with cutting-edge AV solutions, video conferencing tools, and smart collaboration systems. Whether you're a small business or a growing enterprise, we’ll design and install the perfect setup for your needs.

Contact Engagis today for a free consultation and discover how our tailored meeting room solutions can boost your team's productivity.

#Meeting room technology#meeting room av solutions#video conferencing australia#meeting room hardware#hybrid meetings#smart office tools


Revolutionizing AI Development: Why GTS is the Data Labeling Company You Can Trust

A machine learning model’s performance depends primarily on the quality of the data it is trained on. In computer vision, accurate data labeling allows a trained model to interpret, process, and analyze information correctly in applications ranging from autonomous vehicles to healthcare. As industries increase their dependence on AI solutions, demand for a reliable data labeling company is high. Enter GTS, one of the most trusted partners for data labeling solutions that cater to a wide range of industry needs.

What Is Data Labeling and Why Is It Important?

Data labeling is the process of tagging or annotating data, such as images, videos, text, or audio, to train machine learning models. Labeled data enables an AI system to identify patterns, make predictions, and carry out tasks reliably. For instance, labeled images of road signs, pedestrians, and vehicles allow a self-driving system to make accurate decisions on the road. Without proper data labeling, even the best AI algorithms can fail. Mislabeled data can bias a model, leading to wrong predictions and, in turn, costly mistakes in real-world deployments.
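The following sketch makes this concrete. It shows one labeled image record and a sanity check that catches two common labeling errors: a misspelled class name and a box that spills outside the image. The field names, label set, and `[x_min, y_min, x_max, y_max]` box convention are assumptions for illustration, not a GTS schema.

```python
ALLOWED_LABELS = {"road_sign", "pedestrian", "vehicle"}  # hypothetical label set


def validate_annotation(ann, width, height):
    """Return a list of problems found in one bounding-box annotation.

    Boxes are assumed to be [x_min, y_min, x_max, y_max] in pixel coordinates.
    """
    problems = []
    if ann["label"] not in ALLOWED_LABELS:
        problems.append(f"unknown label {ann['label']!r}")
    x0, y0, x1, y1 = ann["bbox"]
    if not (x0 < x1 and y0 < y1):
        problems.append("degenerate box")
    if x0 < 0 or y0 < 0 or x1 > width or y1 > height:
        problems.append("box outside image")
    return problems


record = {
    "image": "frame_000123.jpg",
    "width": 1920,
    "height": 1080,
    "annotations": [
        {"label": "pedestrian", "bbox": [410, 300, 470, 520]},
        {"label": "pedestrain", "bbox": [900, 200, 1950, 400]},  # typo and overflow
    ],
}
for ann in record["annotations"]:
    print(ann["label"], validate_annotation(ann, record["width"], record["height"]))
# pedestrian []
# pedestrain ["unknown label 'pedestrain'", 'box outside image']
```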

Why Choose GTS for Data Labeling?

At GTS (gts.ai), we know no two AI projects are alike; each one requires a tailor-made solution to yield good results. Here’s what sets us apart from the rest:

1. A Full Range of Labeling Services: At GTS, we provide a complete lineup of data labeling services:

Image and Video Annotation: Bounding boxes, polygon annotations, semantic segmentation, and more for the autonomous vehicle, retail, and agriculture domains.

Text Annotation: Named entity recognition, sentiment analysis, and part-of-speech tagging to improve natural language processing models.

Audio Annotation: Annotation for speech recognition, speaker identification, and sound classification to improve voice-activated systems.

2. Cutting-Edge Tools and Technology: With the help of advanced technologies, GTS delivers top-notch annotations at speed. Its platform uses AI-assisted tools that improve accuracy while reducing manual workload.

3. Experienced Workforce: Behind every good AI model is a strong team. A worldwide pool of highly trained annotators lets GTS scale projects while maintaining top-notch standards throughout.

4. Data Protection and Compliance: GTS upholds the highest standards of data privacy and abides by international data protection standards. Clients can rest assured that their data is handled with care under stringent security measures.

5. Customer-Oriented: GTS believes in fostering long-term client relationships. Its team works closely with each client to understand their requirements thoroughly and offer flexible solutions that fit project goals and budgets.
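Bounding-box annotation quality is commonly measured with intersection over union (IoU). IoU is the overlap between an annotator's box and a reviewer's box, divided by the total area the two boxes cover together. The sketch below assumes `[x_min, y_min, x_max, y_max]` boxes. The 0.5 acceptance threshold is a widely used convention, not a documented GTS rule.

```python
def iou(a, b):
    """Intersection over union of two [x_min, y_min, x_max, y_max] boxes."""
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix1 - ix0) * max(0, iy1 - iy0)  # zero if the boxes do not touch
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def qa_pass(annotator_box, reviewer_box, threshold=0.5):
    """Accept the annotation if it overlaps the reviewer's box enough."""
    return iou(annotator_box, reviewer_box) >= threshold


print(iou([0, 0, 10, 10], [5, 0, 15, 10]))      # 0.3333333333333333
print(qa_pass([0, 0, 10, 10], [1, 1, 10, 10]))  # True
```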

How GTS Is Transforming Industries

Autonomous Vehicles: The rise of self-driving technology demands accurate image and video annotation. GTS provides high-quality labeled datasets that improve object detection, lane tracking, and pedestrian detection.

Healthcare: In medicine, accurate data annotation can help save lives. GTS has annotated images for medical applications and for devices that assist doctors in providing care.

Retail and E-commerce: GTS works with the retail sector to improve AI models that enhance customer experience through product tagging and sentiment analysis.

Agriculture: By annotating field data and satellite imagery, GTS supports precision agriculture, helping farmers monitor crop health and maximize yields.

The GTS Advantage: Real-World Instances

One of our clients in the autonomous driving sector struggled to acquire accurately labeled datasets. GTS provided annotation services to improve its model’s performance, and within months the client reported better object detection and faster model deployment. In another case, a healthcare startup approached GTS to label thousands of medical images. We used a streamlined workflow with built-in QA processes to deliver on schedule, enabling the client to advance its AI-based diagnostic tool.

Conclusion

Choosing the right data labeling partner can decide the success of an AI project. With a broad range of services, cutting-edge technology, and a customer-centric approach, GTS.AI stands at the forefront of the data labeling industry. Whether in automotive, healthcare, retail, or agriculture, GTS has the expertise and resources to address your data labeling needs. Looking to gain an edge in developing your AI models with the best-labeled data? Visit us at gts.ai to see how we can help turn your AI dreams into reality.


Unlocking the Power of AI: The Role of High-Quality Datasets

Artificial Intelligence (AI) has transformed industries worldwide, empowering businesses with smart solutions that drive efficiency and innovation. However, the foundation of any successful AI project lies in its data. High-quality AI datasets are the lifeblood of machine learning algorithms, enabling them to learn, predict, and make decisions with accuracy. In this blog, we will explore the significance of AI datasets, what makes a dataset valuable, and how to access top-notch artificial intelligence datasets for your projects.

Why AI Datasets Are Essential

AI and machine learning models rely on data to function effectively. These datasets act as the training ground where models learn patterns, relationships, and behaviors. Here’s why they are so important:

Model Accuracy: The quality and quantity of the dataset directly impact the accuracy of the AI model. Clean, well-labeled datasets lead to more reliable predictions.

Versatility Across Applications: From facial recognition to autonomous vehicles, diverse datasets enable AI systems to adapt to various real-world scenarios.

Accelerated Development: A robust dataset minimizes the time spent on data preprocessing, allowing developers to focus on refining algorithms.

Characteristics of a High-Quality AI Dataset

Not all datasets are created equal. For an AI dataset to be effective, it must possess certain qualities:

Relevance: The data should align with the specific problem the AI model aims to solve.

Diversity: A dataset must cover a broad range of scenarios and variables to avoid bias.

Accuracy: Well-labeled and verified data ensure that the AI model learns correctly.

Volume: Larger datasets provide more information, enabling the model to generalize better.

Accessibility: The dataset should be easy to integrate and compatible with existing tools and frameworks.
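Some of these qualities can be checked automatically before training. The sketch below assumes samples are Python dicts with a `label` field; the structure and sample data are hypothetical. It reports dataset size, how many samples lack a label, and each class's share. That share gives a first, rough signal of imbalance, though it does not measure diversity on its own.

```python
from collections import Counter


def dataset_report(samples, label_key="label"):
    """Summarize simple quality signals for a list of labeled samples (dicts)."""
    labels = [s.get(label_key) for s in samples]
    missing = sum(1 for lab in labels if lab in (None, ""))
    counts = Counter(lab for lab in labels if lab not in (None, ""))
    total = sum(counts.values())
    shares = {lab: n / total for lab, n in counts.items()} if total else {}
    return {"size": len(samples), "missing_labels": missing, "class_share": shares}


samples = [
    {"text": "great service", "label": "positive"},
    {"text": "never again", "label": "negative"},
    {"text": "fine, I guess", "label": "positive"},
    {"text": "ok", "label": ""},  # unlabeled sample
]
report = dataset_report(samples)
print(report["missing_labels"])  # 1
print(report["class_share"])     # {'positive': 0.6666666666666666, 'negative': 0.3333333333333333}
```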

Popular Types of AI Datasets

AI datasets come in various forms, tailored to different applications:

Image Datasets: Used for computer vision tasks like object detection, facial recognition, and image classification.

Text Datasets: Ideal for natural language processing (NLP) applications such as chatbots, translation, and sentiment analysis.

Audio Datasets: Crucial for speech recognition, sound classification, and voice assistants.

Time-Series Datasets: Suitable for forecasting and anomaly detection in fields like finance and IoT.

Tabular Datasets: Commonly used in structured data analysis, including customer segmentation and fraud detection.

Where to Find High-Quality AI Datasets

Finding reliable AI datasets can be challenging, especially when dealing with niche applications. Thankfully, platforms like GTS AI provide a one-stop solution for all your data needs. GTS AI offers:

Curated AI Datasets: Handpicked datasets that are ready to use and cater to various industries.

Custom Dataset Creation: Tailored datasets designed to meet specific project requirements.

Data Annotation Services: Expert labeling for image, text, and audio data to ensure quality.

With GTS AI, you can streamline your AI projects by accessing accurate, diverse datasets compatible with modern AI frameworks.

Benefits of Partnering with GTS AI

Collaborating with a trusted data provider like GTS AI comes with numerous advantages:

Expertise: Leverage years of experience in creating and managing AI datasets.

Scalability: Access datasets that scale with the growing needs of your AI projects.

Compliance: Ensure ethical and legal compliance with industry standards for data collection and labeling.

Cost-Effectiveness: Save time and resources with pre-labeled and ready-to-use datasets.

Conclusion

High-quality AI datasets are the backbone of successful artificial intelligence systems. They not only enhance model performance but also ensure adaptability across diverse applications. Whether you’re working on image recognition, natural language processing, or predictive analytics, investing in the right datasets is crucial.

Explore the world of possibilities with GTS AI, your trusted partner for premium artificial intelligence datasets. Empower your projects with the data they need to drive innovation and stay ahead in the competitive AI landscape. Ready to elevate your AI? Visit GTS AI today and discover a data-driven future!


How to Improve Your Virtual Meeting Experience with Advanced Video Conferencing Tools

Video conferencing tools have revolutionized the way we conduct virtual meetings, making it possible to connect with team members and clients from anywhere in the world. However, we've all experienced the frustration of poor video quality, dropped calls, and lack of engagement in virtual meetings. These common challenges can lead to decreased productivity, miscommunication, and a lack of trust among team members.

Upgrading your video conferencing tools can be a game-changer for your business. With advanced features and technology, you can enhance collaboration, increase engagement, and streamline your virtual meetings. By investing in the right tools, you can take your virtual meetings to the next level and achieve better results.

Advanced video conferencing tools offer a range of features that can improve meeting efficiency and engagement. For instance, high-definition video and crystal-clear audio ensure that you can see and hear each other clearly, reducing misunderstandings and miscommunications. Screen sharing and annotation tools enable you to present information in a more engaging and interactive way, while virtual whiteboards and breakout rooms facilitate collaboration and brainstorming. Additionally, features like automated transcription and recording enable you to review and reference meetings later, saving you time and increasing productivity.

AON Meetings takes virtual meeting experiences to the next level with its advanced technology. Our platform offers a range of innovative features, including AI-powered meeting insights, personalized meeting recommendations, and seamless integration with your calendar and collaboration tools. With AON Meetings, you can enjoy a more immersive and interactive virtual meeting experience that feels like you're in the same room.

To boost virtual meeting productivity, try the following practical tips. First, establish a clear agenda and set of goals for each meeting to keep everyone focused and on track. Second, encourage active participation and engagement by using interactive tools and features. Third, minimize distractions by choosing a quiet and private space for your virtual meetings. Finally, follow up with action items and next steps to ensure that everyone is on the same page.

To sum up, investing in advanced video conferencing tools is crucial for better virtual meeting experiences and results. By upgrading your tools and adopting best practices, you can enhance collaboration, increase engagement, and achieve more in less time. Don't settle for mediocre virtual meetings – try video conferencing with AON Meetings today and experience the difference for yourself. Book an appointment on our website https://aonmeetings.com/ to get started.


The Importance of Audio Data Collection in AI and Machine Learning

Audio data collection is becoming increasingly significant in the fields of artificial intelligence (AI) and machine learning. As technology evolves, the ability to process and understand audio data opens up a plethora of applications, from virtual assistants to advanced security systems. This article delves into the importance, methods, and challenges associated with audio data collection.

Importance of Audio Data Collection

Enhancing Speech Recognition Systems:

Virtual Assistants: Devices like Amazon's Alexa, Apple's Siri, and Google Assistant rely heavily on high-quality audio data to understand and respond to user commands accurately.

Transcription Services: Automated transcription services, which are used in various industries, need extensive audio data to improve their accuracy and efficiency.

Developing Natural Language Processing (NLP):

Language Translation: Audio data helps in creating more accurate and nuanced language translation systems.

Sentiment Analysis: By analyzing the tone and emotion in voice recordings, systems can better understand user sentiments, which is valuable for customer service applications.

Improving Security Systems:

Voice Biometrics: Audio data collection is essential for developing voice recognition technologies used in security and authentication processes.

Surveillance: Advanced audio processing can enhance security systems by detecting unusual sounds or voices in sensitive areas.
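Voice biometric systems typically compare two fixed-length speaker embeddings, or "voiceprints": one computed from new audio and one stored at enrollment. The sketch below shows a minimal version of that comparison and assumes the embeddings already exist. The toy 3-dimensional vectors and the 0.8 threshold are made up for illustration. Real systems calibrate their thresholds on held-out data.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    if na == 0 or nb == 0:
        return 0.0
    return dot / (na * nb)


def verify_speaker(enrolled, probe, threshold=0.8):
    """Accept the probe if its embedding is close enough to the enrolled voiceprint."""
    return cosine(enrolled, probe) >= threshold


enrolled = [0.9, 0.1, 0.4]        # toy voiceprint; real embeddings are much larger
same_person = [0.85, 0.15, 0.42]
other_person = [0.1, 0.9, 0.2]
print(verify_speaker(enrolled, same_person))   # True
print(verify_speaker(enrolled, other_person))  # False
```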

Methods of Audio Data Collection

Microphone Arrays:

These devices consist of multiple microphones working together to capture high-fidelity audio data. They are used in various environments, from quiet office settings to noisy outdoor locations.

Mobile Devices:

Smartphones and tablets equipped with high-quality microphones are increasingly used for audio data collection. These devices are portable and ubiquitous, making them ideal for gathering large volumes of data.

Wearable Technology:

Devices like smartwatches and fitness trackers with built-in microphones are also becoming valuable tools for audio data collection, especially in health monitoring applications.

Challenges in Audio Data Collection

Noise and Interference:

Environmental Noise: Collecting clean audio data in noisy environments can be challenging. Advanced filtering and noise reduction techniques are necessary to improve data quality.

Interference: Other electronic devices and signals can interfere with audio data collection, requiring sophisticated methods to mitigate these issues.
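One simple way to quantify how clean a recording is: the signal-to-noise ratio (SNR). SNR compares the RMS level of a speech segment with the RMS level of a noise-only segment, such as room tone captured before anyone speaks. The sketch below assumes both segments are lists of float samples from the same recording. Any minimum-SNR acceptance rule would be project-specific.

```python
import math


def rms(samples):
    """Root-mean-square amplitude of a non-empty sequence of samples."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def snr_db(signal, noise):
    """Signal-to-noise ratio in decibels: 20 * log10(RMS_signal / RMS_noise)."""
    signal_level, noise_level = rms(signal), rms(noise)
    if noise_level == 0:
        return math.inf   # perfectly silent noise segment
    if signal_level == 0:
        return -math.inf  # silent "speech" segment
    return 20 * math.log10(signal_level / noise_level)


# Toy data: a speech segment and a quieter room-tone segment from the same session.
speech = [0.5, -0.5, 0.5, -0.5]
room_tone = [0.05, -0.05, 0.05, -0.05]
print(round(snr_db(speech, room_tone), 1))  # 20.0
```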

Privacy Concerns:

Ethical Considerations: Collecting audio data raises privacy concerns, as individuals may not always be aware that their voices are being recorded. It's crucial to obtain proper consent and ensure data is used ethically.

Data Security: Protecting collected audio data from unauthorized access and breaches is paramount. Implementing robust security measures is essential to maintaining trust and compliance with regulations.

Data Annotation and Labeling:

Manual Effort: Annotating and labeling audio data accurately is a labor-intensive process. It requires significant human effort to ensure that the data is correctly categorized for machine learning purposes.

Automated Solutions: Developing automated solutions for audio data annotation can help streamline this process, though it remains a complex challenge.
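One part of annotation that automated tooling can already take off annotators' hands is mechanical consistency checking. The sketch below uses a hypothetical time-segment schema (start, end, label) and an illustrative label set. Rather than labeling anything itself, it flags out-of-range boundaries, unknown labels, and overlapping segments so that a human can fix them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    start: float  # seconds from the beginning of the clip
    end: float    # seconds from the beginning of the clip
    label: str


def validate_segments(segments: list[Segment], duration: float,
                      allowed: set[str]) -> list[str]:
    """Return readable problems in one clip's annotations (empty list = clean)."""
    problems = []
    ordered = sorted(segments, key=lambda s: s.start)
    for seg in ordered:
        if not 0 <= seg.start < seg.end <= duration:
            problems.append(f"bad bounds: {seg.start}-{seg.end}s")
        if seg.label not in allowed:
            problems.append(f"unknown label: {seg.label!r}")
    # This hypothetical schema forbids overlaps; schemes that mark
    # overlapping speakers would drop this check.
    for prev, cur in zip(ordered, ordered[1:]):
        if cur.start < prev.end:
            problems.append(f"overlap: {prev.label!r} and {cur.label!r}")
    return problems


clip = [Segment(0.0, 1.5, "speech"), Segment(1.2, 2.0, "music"),
        Segment(2.0, 3.5, "dog")]
for problem in validate_segments(clip, duration=3.0,
                                 allowed={"speech", "music", "noise"}):
    print(problem)
```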

Conclusion

Audio data collection is a critical component in the advancement of AI and machine learning technologies. By improving speech recognition, enhancing NLP applications, and bolstering security systems, high-quality audio data can lead to significant technological breakthroughs. However, addressing the challenges of noise, privacy, and data annotation is essential to fully harness the potential of audio data in these fields. As technology progresses, the methods and techniques for collecting and utilizing audio data will continue to evolve, paving the way for more sophisticated and capable AI systems.


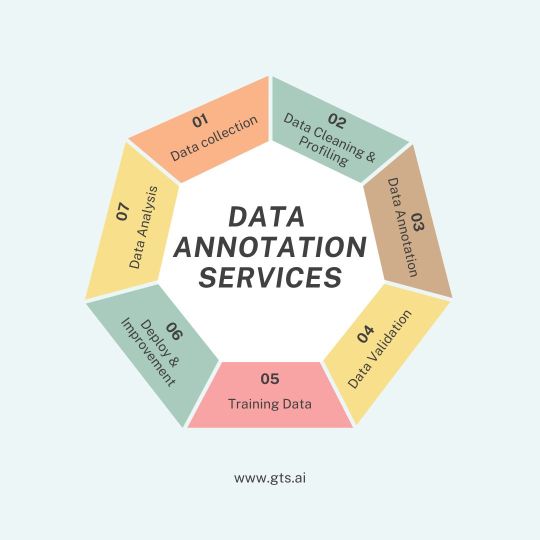

Unlock the full potential of your AI and machine learning models with GTS's expert data annotation services. Our team specializes in precise and accurate labeling, ensuring your data is meticulously prepared for high-performance algorithms. From image and video annotation to text and audio tagging, we offer a comprehensive range of services tailored to meet your specific needs. Trust us to deliver the quality and reliability that your AI projects demand.


Human In the Loop for Machine Learning

The majority of machine learning models rely on human-created data. But the interaction between humans and machines does not end there; the most powerful systems are designed to allow both sides to interact continuously via a mechanism known as “Human in the loop” (HITL).

Human-in-the-loop (HITL) machine learning requires humans to inspect, validate, or change some aspect of the AI development process. This philosophy extends to the people who collect, label, and perform quality control (QC) on data for machine learning.

We are confident that AI will not replace trusted human workers anytime soon. In reality, AI systems supplement and augment human capabilities rather than replace them. The nature of our work may change in the coming years as a result of AI, but the fundamental principle is to eliminate mundane tasks and increase efficiency on the tasks that require human input.

Recent advancements in artificial intelligence (AI) have given rise to techniques such as active learning and cooperative learning. Data is the foundation of any machine learning algorithm, and raw datasets (e.g., images) are typically unlabeled. During the training stage, a human must manually label this dataset with the desired output, such as cat or dog.

This labeled data is then used to train the machine learning model, an approach known as supervised learning. Algorithms trained this way learn from labeled examples to predict previously unseen cases. Building on what we already know, we can develop more sophisticated techniques that uncover additional insights and features in the training dataset, resulting in more accurate and automated results.

During the testing and evaluation phase, human and machine expertise are combined by letting the human correct any incorrect results. Specifically, the human corrects the labels that the machine could not predict with high confidence (e.g., it classified a dog as a cat). The human takes the same approach when the machine is overly confident about a wrong prediction.

The algorithm’s performance will improve with each iteration, paving the way for automated lifelong learning by reducing the need for future human intervention. When such work is completed, the results are forwarded to a domain expert who makes decisions that have a greater impact.
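A common way to put the correction step described above into practice is confidence-based routing. This minimal sketch assumes the model reports a probability for its predicted label. The 0.9 threshold and the 5% audit rate are hypothetical values, not figures from the post. Random audits are included because routing on confidence alone never catches an overconfident mistake.

```python
import random
from dataclasses import dataclass


@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float  # model's probability for `label`, between 0 and 1


def route(predictions: list[Prediction], threshold: float = 0.9,
          audit_rate: float = 0.05,
          seed: int = 0) -> tuple[list[Prediction], list[Prediction]]:
    """Split predictions into auto-accepted items and a human review queue.

    Low-confidence predictions always go to a human. A random fraction of the
    confident ones is also audited, because a confident model can still be
    wrong (e.g. calling a dog a cat with 0.97 confidence).
    """
    rng = random.Random(seed)
    accepted, review = [], []
    for p in predictions:
        if p.confidence < threshold or rng.random() < audit_rate:
            review.append(p)
        else:
            accepted.append(p)
    return accepted, review


batch = [Prediction("img1", "cat", 0.97), Prediction("img2", "dog", 0.62),
         Prediction("img3", "dog", 0.99)]
accepted, review = route(batch, audit_rate=0.0)
print([p.item_id for p in accepted], [p.item_id for p in review])
# ['img1', 'img3'] ['img2']
```

In a full loop, the labels that humans correct in the review queue would be added back to the training set before the next training iteration.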

Machine learning with a human-in-the-loop

When you have a large enough dataset, an algorithm can make accurate decisions based on it. However, the machine must first learn how to properly identify relevant criteria and thus arrive at the correct conclusion. Here is where human intelligence comes into play: Machine learning with human-in-the-loop (HITL) combines human and machine intelligence to form a continuous circle in which the algorithm is trained, tested, and tuned. With each loop, the machine becomes smarter, more confident, and more accurate.

Machine learning can’t function without human input. The algorithm cannot learn everything necessary to reach the correct conclusion on its own. For example, without human explanation, a model does not understand what is shown in an image. This means that, especially in the case of unstructured data, data labeling must be the first step toward developing a reliable algorithm.

The algorithm cannot make sense of unstructured data, such as images, audio, video, and social media posts, unless that data has been properly labeled. This is why the human-in-the-loop approach is required along the way, and labelers must follow specific instructions when labeling the datasets.

What benefit does HITL offer to Machine Learning applications?

1. Data is often incomplete and ambiguous. Humans annotate/label raw data to provide meaningful context so that machine learning models can learn to produce desired results, identify patterns, and make correct decisions.

2. Humans check the models for overfitting and teach them about edge cases or unexpected scenarios.

3. Humans evaluate whether the algorithm is overconfident or underconfident in its decisions. If accuracy is low, the machine goes through an active learning cycle in which humans give feedback so that the machine reaches the correct result and increases its predictability.

4. It significantly improves transparency: with humans involved at every step of the process, the application no longer appears to be a black box.

5. It incorporates human judgment in the most effective ways and shifts the pressure away from building “100% machine-perfect” algorithms toward optimal models that offer maximum business benefit. This, in turn, yields more powerful and useful applications.

At the end of the day, AI systems are built to help humans. The value of such systems lies not solely in efficiency or correctness, but also in human preference and agency. A human-in-the-loop system puts humans in the decision loop.

Three Stages of Human-in-the-Loop Machine Learning

Training – Data is frequently incomplete or jumbled. Labels are added to raw data by humans to provide meaningful context for machine learning models to learn to produce desired results, identify patterns, and make correct decisions. Data labeling is an important step in the development of AI models because properly labeled datasets provide a foundation for further application and development.

Tuning – At this stage, humans inspect the model for overfitting. While data labeling lays the groundwork for accurate output, overfitting occurs when the model fits the training data too well. When the model memorizes the training dataset, it may fail to generalize, leaving it unable to perform well on new data. Tuning allows for a margin of error to accommodate the unpredictability of real-world scenarios.

It is also during the tuning stage that humans teach the model about edge cases or unexpected scenarios. For example, facial recognition provides convenience but is vulnerable to gender and ethnicity bias when those groups are misrepresented in the datasets.
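A reviewer checking for overfitting usually compares training and validation performance epoch by epoch. The sketch below flags epochs where training accuracy exceeds validation accuracy by more than a chosen gap. It also reports the epoch with the best validation accuracy, which is a common early-stopping point. The 5-point gap is an arbitrary assumption, and the accuracy history is made-up illustrative data.

```python
def review_training_curve(train_acc: list[float], val_acc: list[float],
                          max_gap: float = 0.05) -> tuple[int, list[int]]:
    """Return (best_epoch, flagged_epochs) for a human reviewer.

    best_epoch is the epoch with the highest validation accuracy.
    flagged_epochs are epochs where train accuracy minus validation
    accuracy exceeds max_gap, a typical symptom of memorization.
    """
    if not train_acc or len(train_acc) != len(val_acc):
        raise ValueError("need matching, non-empty accuracy histories")
    best_epoch = max(range(len(val_acc)), key=lambda i: val_acc[i])
    flagged = [i for i, (t, v) in enumerate(zip(train_acc, val_acc))
               if t - v > max_gap]
    return best_epoch, flagged


train = [0.70, 0.82, 0.91, 0.97, 0.99]
val = [0.68, 0.79, 0.84, 0.83, 0.80]
print(review_training_curve(train, val))  # (2, [2, 3, 4])
```

Training accuracy keeps climbing after epoch 2, but validation accuracy falls. That divergence is exactly the memorization pattern the tuning stage is meant to catch.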

Testing – Finally, humans assess whether the algorithm is overconfident or underconfident in its decisions. If the accuracy rate is low, the machine enters an active learning cycle in which humans provide feedback so that the machine reaches the correct result or increases its predictability.
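An active learning cycle like the one described above needs a rule for deciding which items humans should look at next. Least-confidence sampling is one standard choice: it sends the items whose top predicted probability is lowest. This sketch assumes the model outputs a probability distribution for each item, and the item IDs and numbers are illustrative. It only surfaces underconfident predictions, so overconfident errors still need separate checks such as random audits.

```python
def least_confident(probabilities: dict[str, list[float]], k: int) -> list[str]:
    """Return the IDs of the k items whose highest class probability is lowest.

    probabilities maps an item ID to the model's class probabilities for it.
    These are the items a human annotator should label first.
    """
    ranked = sorted(probabilities, key=lambda item: max(probabilities[item]))
    return ranked[:k]


scores = {
    "clip_a": [0.90, 0.10],  # model is fairly sure
    "clip_b": [0.55, 0.45],  # close call
    "clip_c": [0.70, 0.30],
}
print(least_confident(scores, k=2))  # ['clip_b', 'clip_c']
```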

Final Thoughts

As people’s interest in artificial intelligence and machine learning grows, it’s important to remember that people still play an important role in the process of creating algorithms. The human-in-the-loop concept is one of the most valuable today. While this implies that you will need to hire people to do some of the work (which may appear to be the polar opposite of process automation), it is otherwise still impossible to obtain a high-performing, sophisticated, and accurate ML model.

TagX stands out in the fast-paced, tech-dominated industry with its people-first culture. We offer data collection, annotation, and evaluation services to power the most cutting-edge AI solutions. We can handle complex, large-scale data labeling projects whether you’re developing computer vision or natural language processing (NLP) applications.

Visit us: https://www.tagxdata.com/human-in-the-loop-for-machine-learning

Original source: https://tagxdata1.blogspot.com/2024/04/human-in-loop-for-machine-learning.html


Enhance Your Data with Expert Annotation Services from EnFuse

Step into the future with EnFuse’s robust annotation services, tailored to take AI projects to the next level. EnFuse is trusted by companies globally for delivering the meticulous data labeling and annotation that are essential for better machine learning outputs. Its professional team works on text, image, audio, and video data, ensuring in-depth analysis and precise annotation.

Follow this link to get the best annotation services from EnFuse Solutions: https://www.enfuse-solutions.com/services/ai-ml-enablement/annotation/

#DataAnnotationServices#ImageAnnotationServices#VideoAnnotationServices#AudioAnnotationServices#AIAnnotationServices#DataAnnotationsCompanies#DataAnnotationCompanyinIndia#EnFuseSolutions#EnFuseSolutionsIndia


Transforming Audio into Text: Reliable Transcription & Annotation Services | Haivo AI

Haivo AI specializes in transforming audio into text with precision. Our transcription and audio annotation services ensure accurate and reliable conversion of audio files. Trust our advanced technology and experienced professionals for your transcription needs.


Crowd Workers Required To Build AI Models

Let's discuss the role and benefits of crowd workers in developing AI and ML algorithms.

Our efforts to develop robust and unbiased AI solutions must be based on a wide range of data. We should ensure that the data used to train models is representative of the full population the model is meant to serve. Credible AI solutions can only be developed with well-collected data, and crowd work has become a critical part of our data collection strategy.

Crowd workers can source data for you.

By engaging a diverse crowd of workers, designers of AI-based solutions can distribute microtasks and gather wide-ranging observations quickly and at a relatively affordable cost.

Crowd workers in AI projects offer several benefits.

Faster Time to Market: According to Cognilytica research, nearly 80% of AI project time is spent on data cleaning, labeling, and aggregation, leaving only 20% for development and training. Crowdsourcing makes it possible to recruit large numbers of data contributors quickly, removing the traditional barriers to data generation.

Cost-Effective Solution: Crowdsourced data collection saves the time and effort of recruiting, training, and onboarding new staff members. Because the workforce is paid per task, both the time and the cost of data collection go down.

Improves Dataset Diversity: Data variety is essential throughout AI training. For a model to give objective results, it must be trained on a diverse dataset. Crowdsourcing makes it easy to generate datasets that are diverse in geography, language, and dialect, quickly and inexpensively.

Enhances Scalability: With reliable crowd workers, you can ensure high-quality AI training datasets that scale easily with your project needs.
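Diversity claims are easier to act on when they are measured. As an illustration, the sketch below counts how a dataset's recordings are spread across metadata groups (here, hypothetical language-locale tags). It then reports every expected group whose share falls below a minimum, including groups that are missing entirely. The tags and the 15% floor are assumptions chosen for the example.

```python
from collections import Counter


def underrepresented(groups: list[str], expected: list[str],
                     min_share: float) -> dict[str, float]:
    """Map each expected group whose share of the dataset is below min_share
    to its actual share. groups holds one tag per recording."""
    if not groups:
        raise ValueError("dataset is empty")
    counts = Counter(groups)  # missing groups count as 0
    total = len(groups)
    return {g: counts[g] / total for g in expected if counts[g] / total < min_share}


tags = ["en-US"] * 6 + ["en-IN"] * 3 + ["en-NG"] * 1
print(underrepresented(tags, ["en-US", "en-IN", "en-NG", "en-GB"], 0.15))
# {'en-NG': 0.1, 'en-GB': 0.0}
```

A report like this tells a project which groups to target in the next round of crowd recruitment.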

Bridging the Gap Between Crowd Workers and Requestors

There is a need to bridge the gap between crowd workers and requestors, who often do not work in the same industry.

Workers are often not given the information they need. While they may be assigned microtasks such as recording dialogues, they rarely get context: they do not know why they are doing the task or how to do it better. This lack of information is detrimental to the quality of crowdsourced data.

Being able to see all the context gives a person clarity and purpose in their work.

Non-disclosure agreements (NDAs) further limit the amount of information crowd workers can access. Crowd workers may see this withholding of information as a sign of mistrust and of their diminished importance.

The information gap also runs the other way: the requestor often knows little about the worker hired to do the job. Certain projects might need a particular type of worker, but for most projects the requirements are ambiguous. This lack of ground truth makes it difficult to provide feedback and evaluate the work.

Crowd workers are required to create AI models.

Humans generate lots of data, yet only a tiny fraction of it is of any value. Because there are no data benchmarking standards, most of the data collected is biased, contaminated by quality issues, or not representative of the environment. As more machine learning and deep learning models are developed that can take advantage of large amounts of data, demand is growing for better and more diverse datasets.

It's where crowd workers step in.

Crowdsourcing is the collaborative creation of datasets, such as audio datasets, by large numbers of people. Crowd workers use human intelligence to create artificial intelligence.

Crowdsourcing platforms distribute data collection and annotation microtasks among a broad range of people, giving companies access to a large, dynamic, cost-effective, and scalable workforce.
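Each crowd worker sees only a small piece of the task, so platforms typically assign the same microtask to several workers and aggregate their answers. Majority voting is the simplest aggregation rule. This sketch adds an agreement threshold (an assumed 60%) below which an item is left unresolved for expert review. The clip IDs and labels are illustrative.

```python
from collections import Counter
from typing import Optional


def aggregate(votes: dict[str, list[str]],
              min_agreement: float = 0.6) -> dict[str, Optional[str]]:
    """Majority-vote each item's crowd labels.

    An item gets None (meaning: escalate to an expert) when it has no votes
    or when its most common label has less than min_agreement of the votes.
    """
    result: dict[str, Optional[str]] = {}
    for item_id, labels in votes.items():
        if not labels:
            result[item_id] = None
            continue
        label, count = Counter(labels).most_common(1)[0]
        result[item_id] = label if count / len(labels) >= min_agreement else None
    return result


crowd = {
    "clip1": ["speech", "speech", "music"],
    "clip2": ["speech", "music", "noise"],
}
print(aggregate(crowd))  # {'clip1': 'speech', 'clip2': None}
```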

Amazon Mechanical Turk, which is the most popular crowdsourcing platform, was used to source 11,000 human-to-human dialogues in less than 15 hours. Workers were paid $0.35 for each successful dialogue. Such a small payment highlights the importance of establishing ethical data-sourcing standards.
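To put these figures in perspective, assume that all 11,000 dialogues counted as successful. The total payout and the minimum throughput then work out as shown below. The payout is the total across all workers, not an hourly wage, because the post does not say how long each dialogue took.

```latex
\text{Total payout} = 11{,}000 \times \$0.35 = \$3{,}850
\qquad
\text{Throughput} > \frac{11{,}000 \text{ dialogues}}{15 \text{ h}} \approx 733 \text{ dialogues/h}
```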

Although crowdsourcing sounds smart, it is hard to execute. The anonymity of crowd workers has led to issues such as low pay, disregard for worker rights, and low-quality work that can hurt AI model performance.


The Power of Precision: How GTS.AI is Revolutionizing Data Labeling

In today's AI-powered world, building strong machine learning models relies heavily on high-quality labeled data. With so much at stake in AI systems, a model's accuracy depends largely on how precisely and reliably its training data was prepared. Data labeling companies therefore play a major role in transforming raw data into structured, meaningful information for AI applications. Among the key players in the field, GTS.AI serves as an innovative and trusted partner, delivering cutting-edge data labeling solutions custom-made for the ever-changing needs of businesses around the world.

The Vital Role of Data Labeling in AI

Data labeling is the process of attaching labels to data such as images, audio, text, or video. Without proper labeling, AI models cannot learn to detect objects, understand human language, or make accurate predictions. Comprehensive labeled datasets improve AI decision-making, which in turn benefits domains such as autonomous driving, healthcare, and finance. Yet it is not that simple. Data labeling requires human intellect, automation, and solid quality control to get everything just right. Wrong labels produce biased models and, therefore, unreliable AI systems. This is the niche in which GTS.AI excels, providing a smooth, high-quality data labeling service backed by state-of-the-art AI-assisted tools and expert human annotators.

Why Choose GTS.AI for Data Labeling?

Advanced AI-Powered Annotation Tools: GTS.AI uses state-of-the-art technology to enhance the data labeling process. Our AI-assisted annotation tools streamline labeling, reducing errors and improving efficiency. Whether the task is bounding boxes, semantic segmentation, key-point annotation, or NLP data tagging, our platform ensures high accuracy and consistency.

Scaling for Every Industry Need: AI applications are skyrocketing across industries, and so are their data requirements. At GTS.AI, we provide scalable data labeling solutions that meet the data demands of industries such as autonomous vehicles, medical imaging, e-commerce, and security. We can deliver high-quality labeled datasets at any level of complexity or scale.

The Human-in-the-Loop Process: While automation generally improves efficiency, human intelligence plays a crucial role in maintaining quality. Our human-in-the-loop methodology combines AI-assisted automation with expert human validation to keep data labeling accuracy high. This hybrid approach minimizes errors and improves model performance.

Rigorous Quality Control Procedures: At GTS.AI, quality is our priority. We apply strict quality control at multiple stages of the labeling process. This multilayered validation process uses expert reviewers and automated validation tools to ensure that datasets comply with industry standards.

Flexible and Economical Solutions: We realize that different businesses have different requirements, so we offer custom-tailored solutions that fit your budget and the scope and size of your project. Whether you need one-time dataset annotation or ongoing data labeling support, GTS.AI provides a cost-effective solution that does not compromise on quality.
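The post does not say which agreement metrics its reviewers use. One widely used measure in multilayered quality control is Cohen's kappa. Kappa corrects the raw agreement between two annotators, p_o, for the agreement p_e expected by chance: kappa = (p_o - p_e) / (1 - p_e). Below is a minimal sketch with illustrative labels. It is not a description of GTS.AI's actual process.

```python
from collections import Counter


def cohens_kappa(a: list[str], b: list[str]) -> float:
    """Chance-corrected agreement between two annotators on the same items.

    1.0 means perfect agreement; 0.0 means no better than chance.
    """
    if not a or len(a) != len(b):
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    counts_a, counts_b = Counter(a), Counter(b)
    # Probability both annotators pick the same label if each labeled at
    # random with their own observed label frequencies.
    expected = sum(counts_a[k] * counts_b[k] for k in counts_a) / (n * n)
    if expected == 1.0:
        # Kappa is undefined when both annotators used one identical label
        # throughout; treat that degenerate case as perfect agreement.
        return 1.0
    return (observed - expected) / (1 - expected)


annotator_1 = ["car", "car", "person", "person"]
annotator_2 = ["car", "person", "person", "person"]
print(cohens_kappa(annotator_1, annotator_2))  # 0.5
```

Here the two annotators agree on 75% of items, but half of that agreement would be expected by chance, so kappa is only 0.5. Items with disagreements are natural candidates for an expert reviewer.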

How GTS.AI is Shaping the Future of AI

As the demands of AI change rapidly, the need for high-quality labeled data is soaring. GTS.AI is leading this charge by helping organizations reach their full AI potential through quality data labeling services. Our expertise in data handling, unyielding insistence on quality, and spirit of inventiveness make us a reliable partner to AI organizations in every corner of the globe. From self-driving cars to smart chatbots and improved medical diagnosis, our labeled datasets contribute to breakthroughs in AI. Organizations rely on GTS.AI to fast-track their AI development with confidence in the quality of its data.

Conclusion

In short, GTS.AI focuses on providing fully labeled data to ensure maximum output from your AI applications. By combining some of the best automation, skilled human work, and thorough quality checks, GTS.AI ensures that your AI models are trained on the best data. If you want a reliable data labeling partner, GTS.AI will take your AI project to the next level. Visit GTS.AI at https://gts.ai/ to learn more about how we can make your AI initiatives a success.
