#data annotation

The Rise of Data Annotation Services in Machine Learning Projects

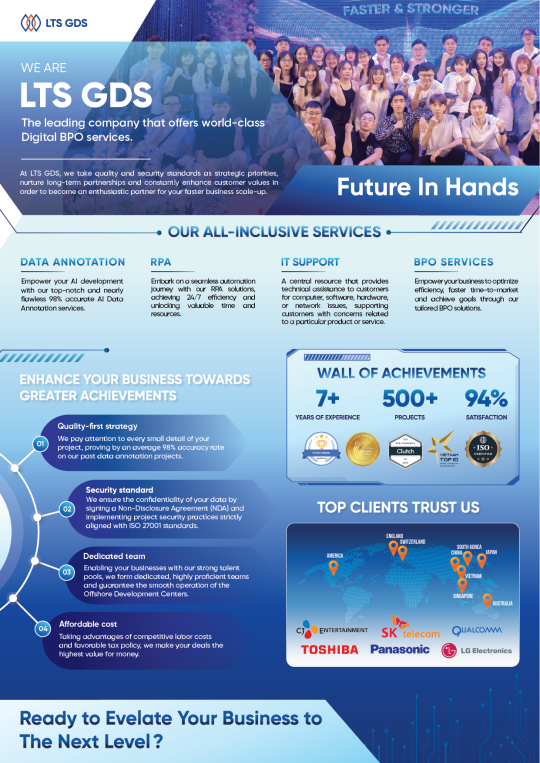

Our IT Services

With more than 7 years of experience in the data annotation industry, LTS Global Digital Services has been honored with major domestic awards and has earned the trust of significant customers in the US, Germany, Korea, and Japan. Having completed hundreds of projects in fields such as Automobile, Retail, Manufacturing, Construction, and Sports, the company delivers projects with confidence and ensures accuracy of up to 99.9%, a level of quality confirmed by 97% of the customers using the service.

If you are looking for an outsourcing company that meets the above criteria, contact LTS Global Digital Services for advice and a trial!

Now Hiring!!! This Ireland-based tech company is hiring international workers for the position of Map Evaluator. Work purely from home, part-time or full-time. No experience is necessary, and there is no phone or online interview. Click or copy the link to apply.

https://bit.ly/3IzrLeD

The Power of AI and Human Collaboration in Media Content Analysis

In today's world, binge-watching has become a way of life not just for Gen Z but also for many baby boomers. Viewers are watching more content than ever. In particular, Over-The-Top (OTT) and Video-On-Demand (VOD) platforms provide a rich selection of content anytime, anywhere, and on any screen. As content volumes proliferate, media companies face challenges in preparing and managing their content. Doing this well is crucial for providing a high-quality viewing experience and for monetizing content more effectively.

Typical use cases include:

Finding the opening credits, intro start and end, recap start and end, and other video segments

Choosing the right spots to insert advertisements so that breaks fall at logical pauses for viewers

Creating automated personalized trailers by extracting interesting themes from videos

Identifying audio and video synchronization issues

These tasks were traditionally handled by large teams of trained human workers. Since then, AI-based approaches such as Amazon Rekognition's video segmentation API have emerged. AI models are getting better at addressing the use cases above. However, they are typically pre-trained on a different type of content and may not be accurate for your content library. So, what if we used an AI-enabled human-in-the-loop approach to reduce cost and improve the accuracy of video segmentation tasks?
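As a rough illustration of how an AI service's output can serve as weak labels for human review, the sketch below converts a segment-detection result into candidate segments. It flags low-confidence segments for a reviewer. It assumes a dict shaped like the response of Amazon Rekognition's `GetSegmentDetection` operation: a `Segments` list whose entries carry `Type`, `StartTimestampMillis`, `EndTimestampMillis`, and either a `TechnicalCueSegment` or a `ShotSegment` block with a `Confidence`. The `WeakLabel` type, the function name, and the review threshold of 90 are hypothetical choices, not part of any AWS API.

```python
from dataclasses import dataclass
from typing import Any


@dataclass
class WeakLabel:
    """A machine-generated segment, flagged if a human should review it."""
    label: str
    start_ms: int
    end_ms: int
    confidence: float
    needs_review: bool


def to_weak_labels(response: dict[str, Any], review_threshold: float = 90.0) -> list[WeakLabel]:
    """Turn a GetSegmentDetection-style response dict into weak labels.

    Segments whose confidence is below `review_threshold` (a hypothetical
    default) are marked for review by a trained human analyst.
    """
    labels = []
    for seg in response.get("Segments", []):
        if seg["Type"] == "TECHNICAL_CUE":
            cue = seg["TechnicalCueSegment"]
            name, conf = cue["Type"], cue["Confidence"]
        else:  # "SHOT" segments carry a ShotSegment block instead
            shot = seg["ShotSegment"]
            name, conf = f"Shot{shot['Index']}", shot["Confidence"]
        labels.append(WeakLabel(
            label=name,
            start_ms=seg["StartTimestampMillis"],
            end_ms=seg["EndTimestampMillis"],
            confidence=conf,
            needs_review=conf < review_threshold,
        ))
    return labels


# Hand-written sample in the response shape described above (values are made up).
sample = {
    "Segments": [
        {"Type": "TECHNICAL_CUE", "StartTimestampMillis": 0, "EndTimestampMillis": 4000,
         "TechnicalCueSegment": {"Type": "BlackFrames", "Confidence": 99.1}},
        {"Type": "TECHNICAL_CUE", "StartTimestampMillis": 1250000, "EndTimestampMillis": 1310000,
         "TechnicalCueSegment": {"Type": "EndCredits", "Confidence": 72.4}},
    ]
}

for wl in to_weak_labels(sample):
    print(wl.label, wl.start_ms, wl.end_ms, "review" if wl.needs_review else "auto")
```

In a real pipeline, the dict would come from boto3's `get_segment_detection` call after a `start_segment_detection` job finishes. That wiring is left out here so the sketch runs offline.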

In our approach, the AI-based APIs provide weak labels for video segments. These labels are then sent to trained human reviewers, who refine them into picture-perfect segments. The approach greatly improves your understanding of your media content and helps generate ground truth for fine-tuning AI models. The end-to-end workflow is as follows:

Raw media content is uploaded to Amazon S3 cloud storage. The content may need to be preprocessed or transcoded to make it suitable for the streaming platform (e.g., converted to .mp4, upsampled, or downsampled).

AWS Elemental MediaConvert transcodes file-based content quickly and reliably and can convert content libraries of any size for broadcast and streaming. Here, media files are transcoded to .mp4 format.

Amazon Rekognition Video provides an API that identifies useful segments of video, such as black frames and end credits.

Objectways has developed a custom video segmentation annotation workflow on the Amazon SageMaker Ground Truth labeling service that can ingest labels from Amazon Rekognition. Optionally, you can skip the Amazon Rekognition step if you want to create your own labels for training a custom ML model or for applying directly to your content.

The content may be subject to privacy and digital rights management (DRM) requirements. The Objectways Video Segmentation tool also supports integration with DRM providers to ensure that only authorized analysts can view the content. Moreover, the content analysts work out of SOC 2 Type 2 compliant facilities where no downloads or screen captures are allowed.

The media analysts at Objectways are experts in content understanding and video segmentation labeling for a variety of use cases. Depending on your accuracy requirements, each video can be reviewed or annotated by two independent analysts. A threshold on the difference between their segment time codes is used to weed out individual human bias (e.g., a segment is out of consensus if the two time codes differ by more than 5 milliseconds). Out-of-consensus labels can be adjudicated by a senior quality analyst to provide higher quality guarantees.

The Objectways media analyst team provides throughput and quality guarantees and delivers daily throughput matched to your business needs. The segmented content labels are then saved to Amazon S3 in JSON manifest format and can be ingested directly into your media streaming platform.
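The two-analyst consensus rule described in this workflow can be sketched as follows. The sketch makes several assumptions:

- Each analyst's output is a mapping from a segment name to a `(start_ms, end_ms)` pair.
- The tolerance is the 5-millisecond example from the text.
- Agreed segments are averaged (a hypothetical choice).
- Results are written as JSON Lines, one JSON object per line.

The record field names and function names are illustrative, not the Objectways or SageMaker Ground Truth schema.

```python
import json

# Tolerance from the example in the text: time codes differing by more
# than this are treated as out of consensus.
THRESHOLD_MS = 5


def check_consensus(a: dict[str, tuple[int, int]], b: dict[str, tuple[int, int]],
                    threshold_ms: int = THRESHOLD_MS):
    """Compare two analysts' segments; return (agreed_records, disputed_names)."""
    agreed, disputed = [], []
    for name in sorted(a.keys() | b.keys()):
        if name not in a or name not in b:
            disputed.append(name)  # only one analyst marked this segment
            continue
        (s1, e1), (s2, e2) = a[name], b[name]
        if abs(s1 - s2) <= threshold_ms and abs(e1 - e2) <= threshold_ms:
            # Within tolerance: accept, averaging the two time codes.
            agreed.append({"segment": name,
                           "start_ms": (s1 + s2) // 2,
                           "end_ms": (e1 + e2) // 2})
        else:
            disputed.append(name)  # route to a senior quality analyst
    return agreed, disputed


def to_manifest(records: list[dict]) -> str:
    """Serialize records as JSON Lines (one JSON object per line)."""
    return "\n".join(json.dumps(r) for r in records)


analyst_1 = {"intro": (0, 30000), "recap": (30000, 95000)}
analyst_2 = {"intro": (3, 30002), "recap": (30020, 95000)}
agreed, disputed = check_consensus(analyst_1, analyst_2)
print(to_manifest(agreed))
print("needs adjudication:", disputed)
```

Here the intro boundaries differ by at most 3 ms and are accepted. The recap start differs by 20 ms, so that segment goes to adjudication.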

Conclusion

Artificial intelligence (AI) has become ubiquitous in media and entertainment, where it improves content understanding to increase user engagement and drive ad revenue. The AI-enabled human-in-the-loop approach outlined here is a best-of-breed solution for reducing human cost while providing the highest quality. The approach can also be extended to other use cases such as content moderation, ad placement, and personalized trailer generation.

Contact [email protected] for more information.

AI is rewriting the insurance playbook—and at the core of this transformation is Data Annotation.

From automating claims to detecting fraud and assessing risk, annotated data is helping insurers drive efficiency, accuracy, and customer trust. Explore how data labeling is enabling smarter, faster, and more personalized insurance services. Check out the full infographic to see how it's reshaping the industry!

Data Annotation: Fueling the Intelligence Behind AI Systems

Data annotation is the backbone of machine learning, enabling AI to interpret images, text, audio, and video accurately. Specialized services offer scalable, human-powered annotation solutions that ensure data quality and precision. These efforts are critical for training reliable AI models across sectors like healthcare, automotive, retail, and finance.

What Are the Key Elements of Gen AI Prompts?

Discover the transformative power of Gen AI Prompts in annotation services, designed to enhance accuracy and efficiency in data labeling. These prompts leverage advanced algorithms to streamline the annotation process and produce high-quality outputs that meet your specific needs. With customizable options, users can tailor prompts to different projects, significantly reducing turnaround times while maintaining consistency. Elevate your data management strategy with Gen AI Prompts, where innovation meets precision.

Supported Agritech Harvesting by Powering Robots with Precise Training Datasets

Cogito Tech provided precise training datasets featuring fruits and vegetables at multiple ripening stages under different lighting and environmental conditions. Advanced data labeling, including pixel-level segmentation and bounding boxes, ensured accurate fruit detection (ripe, unripe, or rotten).

Read more: https://www.cogitotech.com/case-studies/supported-agritech-harvesting-by-powering-robots-with-precise-training-datasets/

Image Annotation Services: Powering AI with Precision

Image annotation services play a critical role in training computer vision models by adding metadata to images. This process involves labeling objects, boundaries, and other visual elements within images, enabling machines to recognize and interpret visual data accurately. From bounding boxes and semantic segmentation to landmark and polygon annotations, these services lay the groundwork for developing AI systems used in self-driving cars, facial recognition, retail automation, and more.
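To make the difference between annotation types concrete, the sketch below derives a bounding box and an area from a polygon annotation. It assumes the COCO convention: a flat `[x1, y1, x2, y2, ...]` list of polygon vertices and an `[x, y, width, height]` box. The function names are hypothetical.

```python
def polygon_to_bbox(flat: list[float]) -> list[float]:
    """Return the COCO-style [x, y, width, height] box enclosing a flat polygon."""
    xs, ys = flat[0::2], flat[1::2]
    x_min, y_min = min(xs), min(ys)
    return [x_min, y_min, max(xs) - x_min, max(ys) - y_min]


def polygon_area(flat: list[float]) -> float:
    """Polygon area via the shoelace formula (vertices in order, not repeated at the end)."""
    xs, ys = flat[0::2], flat[1::2]
    n = len(xs)
    twice_area = sum(xs[i] * ys[(i + 1) % n] - xs[(i + 1) % n] * ys[i] for i in range(n))
    return abs(twice_area) / 2.0


# A right triangle outlining an object: vertices (10, 10), (50, 10), (10, 40).
triangle = [10, 10, 50, 10, 10, 40]
print(polygon_to_bbox(triangle))  # [10, 10, 40, 30]
print(polygon_area(triangle))     # 600.0
```

The box is a lossy summary of the polygon: here 600 square pixels of object sit inside a 1,200-square-pixel box. This is why segmentation-grade tasks ask annotators for polygons or masks rather than boxes.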

High-quality image annotation requires a blend of skilled human annotators and advanced tools to ensure accuracy, consistency, and scalability. Industries such as healthcare, agriculture, and e-commerce increasingly rely on annotated image datasets to power applications like disease detection, crop monitoring, and product categorization.
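One common way to check annotation consistency is to have two annotators box the same object and measure their intersection-over-union (IoU). The sketch below uses `[x, y, width, height]` boxes. The 0.8 acceptance threshold is a hypothetical project setting, not an industry standard.

```python
def iou(a: list[float], b: list[float]) -> float:
    """Intersection-over-union of two [x, y, width, height] boxes."""
    ax2, ay2 = a[0] + a[2], a[1] + a[3]
    bx2, by2 = b[0] + b[2], b[1] + b[3]
    # Width and height of the overlap rectangle (zero if the boxes do not overlap).
    inter_w = max(0.0, min(ax2, bx2) - max(a[0], b[0]))
    inter_h = max(0.0, min(ay2, by2) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


AGREEMENT_THRESHOLD = 0.8  # hypothetical QA setting

annotator_a = [100, 100, 50, 50]
annotator_b = [105, 100, 50, 50]
score = iou(annotator_a, annotator_b)
print(round(score, 3), "accept" if score >= AGREEMENT_THRESHOLD else "send to review")
```

Boxes that fall below the threshold can be routed to a reviewer, much like out-of-consensus labels in other annotation workflows.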

At Macgence, our image annotation services combine precision, scalability, and customization. We support a wide range of annotation types tailored to specific use cases, ensuring that your AI models are trained on high-quality, well-structured data. With a commitment to quality assurance and data security, we help businesses accelerate their AI initiatives with confidence.

Whether you're building object detection algorithms or fine-tuning machine learning models, image annotation is the foundation that drives performance and accuracy—making it a vital step in any AI development pipeline.

Data labeling and annotation

Boost your AI and machine learning models with professional data labeling and annotation services. Accurate and high-quality annotations enhance model performance by providing reliable training data. Whether for image, text, or video, our data labeling ensures precise categorization and tagging, accelerating AI development. Outsource your annotation tasks to save time, reduce costs, and scale efficiently. Choose expert data labeling and annotation solutions to drive smarter automation and better decision-making. Ideal for startups, enterprises, and research institutions alike.

#artificial intelligence#ai prompts#data analytics#datascience#data annotation#ai agency#ai & machine learning#aws

Data Annotation and Labeling Services

We specialize in data labeling and annotation to prepare raw data for AI systems. Our expert team ensures each dataset is carefully labeled, following strict accuracy standards. Whether you need image, text, audio, or video annotation, we provide high-quality training data for machine learning models. More information: https://www.lapizdigital.com/data-annotation-services/

Exploring the Indian Signboard Image Dataset: A Visual Journey

Introduction

Signboards constitute a vital element of the lively streetscape in India, showcasing a blend of languages, scripts, and artistic expressions. Whether in bustling urban centers or secluded rural areas, these signboards play an essential role in communication. The Indian Signboard Image Dataset documents this diversity, offering a significant resource for researchers and developers engaged in areas such as computer vision, optical character recognition (OCR), and AI-based language processing.

Understanding the Indian Signboard Image Dataset

The Indian Signboard Image Dataset comprises a variety of images showcasing signboards from different regions of India. These signboards feature:

Multilingual text, including Hindi, English, Tamil, Bengali, and Telugu, among others

A range of font styles and sizes

Various backgrounds and lighting situations

Both handwritten and printed signboards

This dataset plays a vital role in training artificial intelligence models to recognize and interpret multilingual text in real-world environments. Given the linguistic diversity of India, such datasets are indispensable for enhancing optical character recognition (OCR) systems, enabling them to accurately extract text from images, even under challenging conditions such as blurriness, distortion, or low light.
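OCR output from a multilingual signboard may need to be routed to language-specific post-processing. A simple first step is to identify which script each character belongs to. The sketch below counts characters per Unicode block for a few of the scripts named above: Latin (English), Devanagari (Hindi), Bengali, Tamil, and Telugu. The block ranges come from the Unicode standard. The `script_of` and `dominant_script` helpers are hypothetical and ignore digits, punctuation, and spaces.

```python
from collections import Counter
from typing import Optional

# Unicode block ranges (inclusive) for some scripts found on Indian signboards.
SCRIPT_RANGES = {
    "Devanagari": (0x0900, 0x097F),  # used for Hindi, Marathi, and others
    "Bengali": (0x0980, 0x09FF),
    "Tamil": (0x0B80, 0x0BFF),
    "Telugu": (0x0C00, 0x0C7F),
}


def script_of(ch: str) -> Optional[str]:
    """Return the script name for one character, or None if it is not tracked."""
    if ("A" <= ch <= "Z") or ("a" <= ch <= "z"):
        return "Latin"
    code = ord(ch)
    for name, (lo, hi) in SCRIPT_RANGES.items():
        if lo <= code <= hi:
            return name
    return None


def dominant_script(text: str) -> Optional[str]:
    """Most frequent tracked script in `text`, or None if no tracked characters occur."""
    counts = Counter(s for s in map(script_of, text) if s is not None)
    return counts.most_common(1)[0][0] if counts else None


for sign in ["नमस्ते", "வணக்கம்", "Welcome"]:
    print(sign, "->", dominant_script(sign))
```

Real signboards often mix scripts on one board. In practice, the per-script counts themselves may be more useful than the single dominant label.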

Applications of the Dataset

The Indian Signboard Image Dataset plays a crucial role in various aspects of artificial intelligence research and development:

Enhancing Optical Character Recognition (OCR)

Training OCR systems on a wide range of datasets enables improved identification and processing of multilingual signboards. This capability is particularly beneficial for navigation applications, document digitization, and AI-driven translation services.

Advancing AI-Driven Translation Solutions

As the demand for instantaneous translation increases, AI models must be adept at recognizing various scripts and fonts. This dataset is instrumental in training models to effectively translate signboards into multiple languages, catering to the needs of travelers and businesses alike.

Improving Smart Navigation and Accessibility Features

AI-powered signboard readers can offer audio descriptions for visually impaired users. Utilizing this dataset allows developers to create assistive technologies that enhance accessibility for all individuals.

Supporting Autonomous Vehicles and Smart City Initiatives

AI models are essential for interpreting street signs in autonomous vehicles and smart city applications. This dataset contributes to the improved recognition of road signs, directions, and warnings, thereby enhancing navigation safety and efficiency.

Challenges in Processing Indian Signboard Images

Working with Indian signboards, while beneficial, poses several challenges:

Diversity in scripts and fonts – India's Constitution lists 22 scheduled languages, which are written in a variety of distinct scripts.

Environmental influences – Factors such as lighting conditions, weather variations, and the physical deterioration of signboards can hinder recognition.

Handwritten inscriptions – Numerous small enterprises utilize handwritten signage, which presents greater difficulties for AI interpretation.

To overcome these obstacles, it is essential to develop advanced deep learning models that are trained on varied datasets, such as the Indian Signboard Image Dataset.

Get Access to the Dataset

For researchers, developers, and AI enthusiasts, this dataset offers valuable resources for making AI systems more intelligent and inclusive. You can explore and download the Indian Signboard Image Dataset from Globose Technology Solutions.

Conclusion

The Indian Signboard Image Dataset represents more than a mere assortment of images; it serves as a portal for developing artificial intelligence solutions capable of traversing India's intricate linguistic environment. This dataset offers significant opportunities for advancements in areas such as enhancing optical character recognition accuracy, facilitating real-time translations, and improving smart navigation systems, thereby fostering AI-driven innovation.

Ready to explore? Download the dataset today and start building the next generation of intelligent applications.

A Guide to Choosing a Data Annotation Outsourcing Company

Clarify the Requirements: Before evaluating outsourcing partners, it's crucial to clearly define your data annotation requirements. Consider aspects such as the type and volume of data needing annotation, the complexity of annotations required, and any industry-specific or regulatory standards to adhere to.

Expertise and Experience: Seek out outsourcing companies with a proven track record in data annotation. Assess their expertise within your industry vertical and their experience handling similar projects. Evaluate factors such as the quality of annotations, adherence to deadlines, and client testimonials.

Data Security and Compliance: Data security is paramount when outsourcing sensitive information. Ensure that the outsourcing company has robust security measures in place to safeguard your data and comply with relevant data privacy regulations such as GDPR or HIPAA.

Scalability and Flexibility: Opt for an outsourcing partner capable of scaling with your evolving needs. Whether it's a small pilot project or a large-scale deployment, ensure the company has the resources and flexibility to meet your requirements without compromising quality or turnaround time.

Cost and Pricing Structure: While cost is important, it shouldn't be the sole determining factor. Evaluate the pricing structure of potential partners, considering factors like hourly rates, project-based pricing, or subscription models. Strike a balance between cost and quality of service.

Quality Assurance Processes: Inquire about the quality assurance processes employed by the outsourcing company to ensure the accuracy and reliability of annotated data. This may include quality checks, error detection mechanisms, and ongoing training of annotation teams.

Prototype: Consider requesting a trial run or pilot project before finalizing an agreement. This allows you to evaluate the quality of annotated data, project timelines, and the proficiency of annotators. For complex projects, negotiate a Proof of Concept (PoC) to gain a clear understanding of requirements.
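For a pilot run, one straightforward way to quantify the quality of annotated data is to have your own team label a small gold-standard sample and compare the vendor's labels against it. This is a minimal sketch, assuming simple per-item class labels keyed by item ID. The IDs, labels, and function name are hypothetical.

```python
from collections import Counter


def pilot_report(gold: dict[str, str], vendor: dict[str, str]) -> dict:
    """Compare vendor labels with a gold-standard sample.

    Returns accuracy on the items both sides labeled, the number of gold
    items the vendor did not label, and counts of each (gold, vendor) mix-up.
    """
    shared = [k for k in gold if k in vendor]
    correct = sum(gold[k] == vendor[k] for k in shared)
    errors = Counter((gold[k], vendor[k]) for k in shared if gold[k] != vendor[k])
    return {
        "accuracy": correct / len(shared) if shared else 0.0,
        "missing": len(gold) - len(shared),
        "confusions": dict(errors),
    }


gold = {"img1": "car", "img2": "truck", "img3": "car", "img4": "bus"}
vendor = {"img1": "car", "img2": "car", "img3": "car"}
print(pilot_report(gold, vendor))
```

The confusion counts are often more informative than the headline accuracy, because they show which classes the annotation guidelines need to clarify.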

For detailed information, see the full article here!

Guide to Partner with Data Annotation Service Provider

Demand for data annotation has grown rapidly with the rise of AI and ML projects. Partnering with a third party is a comprehensive way to obtain accurate, efficiently annotated data. Check out some of the factors to consider when hiring a data annotation outsourcing company.

#data annotation#data annotation service#image annotation services#video annotation services#audio annotation services#image labeling services#data annotation solution#data annotation outsourcing

The Ultimate Guide to Finding the Best Datasets for Machine Learning Projects

Introduction

In machine learning projects, high-quality datasets are crucial for the development, training, and evaluation of models. Whether one is a novice or a seasoned data scientist, access to well-organized datasets is vital for creating precise and dependable machine learning models. This detailed guide examines a variety of datasets across multiple fields, highlighting their sources, applications, and the preparation they need for machine learning projects.

Significance of Quality Datasets in Machine Learning

The performance of a machine learning model can be greatly influenced by the dataset utilized. Factors such as the quality, size, and diversity of the dataset play a critical role in determining how effectively a model can generalize to new, unseen data. The following are essential criteria that contribute to dataset quality:

Relevance: The dataset must correspond to the specific problem being addressed.

Completeness: The presence of missing values should be minimal, and all critical features should be included.

Diversity: A dataset should encompass a range of examples to enhance the model's ability to generalize.

Accuracy: Properly labeled data is essential for effective training and assessment.

Size: Generally, larger datasets facilitate improved generalization, although they also demand greater computational resources.
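Several of these criteria can be checked mechanically before training. The sketch below uses only the Python standard library. It assumes the dataset is a list of row dictionaries with a `label` column; both are assumptions for illustration. It reports three measures:

- the share of missing values per column (completeness)
- the number of exact duplicate rows
- the class distribution, a rough proxy for diversity and balance

```python
from collections import Counter


def quality_report(rows: list[dict], label_key: str = "label") -> dict:
    """Summarize completeness, duplication, and label balance for tabular rows."""
    n = len(rows) or 1  # avoid dividing by zero on an empty dataset
    columns = sorted({key for row in rows for key in row})
    # Treat None and empty strings as missing values.
    missing_rate = {
        col: sum(1 for row in rows if row.get(col) in (None, "")) / n
        for col in columns
    }
    # Dicts are unhashable, so compare rows as sorted (key, value) tuples.
    seen = Counter(tuple(sorted(row.items())) for row in rows)
    duplicates = sum(count - 1 for count in seen.values())
    label_counts = Counter(row.get(label_key) for row in rows)
    return {"missing_rate": missing_rate, "duplicates": duplicates,
            "label_counts": dict(label_counts)}


rows = [
    {"age": 34, "income": 52000, "label": "approve"},
    {"age": None, "income": 48000, "label": "reject"},
    {"age": 34, "income": 52000, "label": "approve"},
    {"age": 29, "income": "", "label": "approve"},
]
print(quality_report(rows))
```

Relevance and label accuracy still need human judgment. A report like this only covers the properties that can be counted.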

Categories of Datasets for Machine Learning

Machine learning datasets can be classified based on their structure and intended use. The most prevalent categories include:

Structured vs. Unstructured Datasets

Structured Data: This type is organized in formats such as tables, spreadsheets, or databases, featuring clearly defined relationships (e.g., numerical, categorical, or time-series data).

Unstructured Data: This encompasses formats such as images, videos, audio, and free-text data.

Supervised vs. Unsupervised Datasets

Supervised Learning Datasets: These datasets consist of labeled examples where the target variable is known (e.g., tasks involving classification and regression).

Unsupervised Learning Datasets: These do not contain labeled target variables and are often employed for purposes such as clustering, anomaly detection, and dimensionality reduction.

Domain-Specific Datasets

Healthcare: Medical imaging, patient records, and diagnostic data.

Finance: Stock prices, credit risk assessment, and fraud detection.

Natural Language Processing (NLP): Text data for sentiment analysis, translation, and chatbot training.

Computer Vision: Image recognition, object detection, and facial recognition datasets.

Autonomous Vehicles: Sensor data, LiDAR, and road traffic information.

Numerous online repositories offer open-access datasets suitable for machine learning applications. Below are some well-known sources:

UCI Machine Learning Repository

The UCI Machine Learning Repository hosts a wide array of datasets frequently utilized in academic research and practical implementations.

Noteworthy datasets comprise:

Iris Dataset (Multiclass Classification)

Wine Quality Dataset

Banknote Authentication Dataset

Google Dataset Search

Google Dataset Search facilitates the discovery of datasets available on the internet, consolidating information from public sources, governmental bodies, and research institutions.

AWS Open Data Registry

Amazon offers a registry of open datasets available on AWS, encompassing areas such as geospatial data, climate studies, and healthcare.

Image and Video Datasets

COCO (Common Objects in Context)

ImageNet

Labeled Faces in the Wild (LFW)

Natural Language Processing Datasets

Sentiment140 (Twitter Sentiment Analysis)

SQuAD (Stanford Question Answering Dataset)

20 Newsgroups Text Classification

Preparing Datasets for Machine Learning Projects

Prior to the training of a machine learning model, it is essential to conduct data preprocessing. The following are the primary steps involved:

Data Cleaning

Managing missing values (through imputation, removal, or interpolation)

Eliminating duplicate entries

Resolving inconsistencies within the data
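The first two cleaning steps can be illustrated on a numeric column and a list of records. This is a minimal standard-library sketch. Mean imputation is only one of the options listed above; median imputation, row removal, or interpolation may suit your data better. The function names are hypothetical.

```python
from statistics import mean
from typing import Optional


def impute_mean(values: list[Optional[float]]) -> list[float]:
    """Replace missing entries (None) with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    fill = mean(observed)  # raises statistics.StatisticsError if every value is missing
    return [fill if v is None else v for v in values]


def drop_duplicates(records: list[tuple]) -> list[tuple]:
    """Remove exact duplicate records, keeping the first occurrence in order."""
    # dict keys are unique and preserve insertion order (Python 3.7+).
    return list(dict.fromkeys(records))


print(impute_mean([2.0, None, 4.0, None]))
print(drop_duplicates([("a", 1), ("b", 2), ("a", 1)]))
```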

Data Transformation

Normalization and standardization processes

Feature scaling techniques

Encoding of categorical variables
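These transformations are easy to state precisely in code. The following is a minimal standard-library sketch with hypothetical function names. In practice, scikit-learn's `MinMaxScaler`, `StandardScaler`, and `OneHotEncoder` cover the same jobs.

```python
from statistics import mean, pstdev


def min_max(values: list[float]) -> list[float]:
    """Normalization: rescale values linearly to the [0, 1] range."""
    lo, hi = min(values), max(values)
    span = hi - lo
    return [0.0 if span == 0 else (v - lo) / span for v in values]


def standardize(values: list[float]) -> list[float]:
    """Standardization (z-score): subtract the mean, divide by the population std dev."""
    mu, sigma = mean(values), pstdev(values)
    return [0.0 if sigma == 0 else (v - mu) / sigma for v in values]


def one_hot(categories: list[str]) -> tuple[list[str], list[list[int]]]:
    """Encode each category as a 0/1 vector over the sorted vocabulary."""
    vocab = sorted(set(categories))
    index = {c: i for i, c in enumerate(vocab)}
    vectors = [[1 if i == index[c] else 0 for i in range(len(vocab))] for c in categories]
    return vocab, vectors


print(min_max([10, 20, 30]))
print(standardize([2, 4, 4, 4, 5, 5, 7, 9]))
print(one_hot(["red", "green", "red"]))
```

In a real project, compute the minimum, maximum, mean, and standard deviation on the training split only. Then reuse them on validation and test data, so that no information leaks from the evaluation data into training.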

Data Augmentation (Applicable to Image and Text Data)

Techniques such as image flipping, rotation, and color adjustments

Utilizing synonym replacement and text paraphrasing for natural language processing tasks.
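Each augmentation creates a new training example from an existing one. The sketch below shows the listed image operations (flipping, rotation, and a brightness adjustment) on a tiny grayscale image stored as nested lists. It also shows synonym replacement for text. The image representation, the function names, and the toy synonym table are assumptions for illustration. Real projects typically use an imaging library and a thesaurus such as WordNet.

```python
import random

Image = list[list[int]]  # grayscale image as rows of 0-255 pixel values


def flip_horizontal(img: Image) -> Image:
    """Mirror the image left-to-right."""
    return [row[::-1] for row in img]


def rotate_90(img: Image) -> Image:
    """Rotate the image 90 degrees clockwise (reverse the rows, then transpose)."""
    return [list(row) for row in zip(*img[::-1])]


def adjust_brightness(img: Image, delta: int, max_value: int = 255) -> Image:
    """Shift every pixel by `delta`, clamping to the valid [0, max_value] range."""
    return [[min(max_value, max(0, px + delta)) for px in row] for row in img]


def synonym_replace(tokens: list[str], synonyms: dict[str, list[str]],
                    p: float, rng: random.Random) -> list[str]:
    """Swap each token that has synonyms for a random synonym with probability p."""
    return [rng.choice(synonyms[t]) if t in synonyms and rng.random() < p else t
            for t in tokens]


img = [[1, 2], [3, 4]]
print(flip_horizontal(img), rotate_90(img), adjust_brightness([[250, 10]], 10))
print(synonym_replace(["a", "good", "movie"], {"good": ["great", "fine"]}, 0.5, random.Random(7)))
```

Check that a transformation preserves the label before using it. A horizontal flip is harmless for most object photos but not for images of text, such as signboards.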

Notable Machine Learning Initiatives and Their Associated Datasets

Image Classification (Utilizing ImageNet)

Objective: Train a deep learning model to categorize images into distinct classes.

Sentiment Analysis (Employing Sentiment140)

Objective: Evaluate the sentiment of tweets and classify them as either positive or negative.

Fraud Detection (Leveraging Credit Card Fraud Dataset)

Objective: Construct a model to identify fraudulent transactions.

Predicting Real Estate Prices (Using Boston Housing Dataset)

Objective: Create a regression model to estimate property prices based on various attributes.

Chatbot Creation (Utilizing SQuAD Dataset)

Objective: Train a natural language processing model for question-answering tasks.
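Whichever project you pick, hold out a test set before training. Some tasks are heavily skewed; in fraud detection, for example, fraudulent transactions are a small minority. For such tasks, a stratified split keeps the class proportions similar in the training and test sets. This is a minimal standard-library sketch. The function name and the 20% test fraction are illustrative choices; scikit-learn's `train_test_split` offers the same behavior through its `stratify` argument.

```python
import random
from collections import defaultdict


def stratified_split(labels: list[str], test_fraction: float = 0.2, seed: int = 42):
    """Return (train_indices, test_indices) with per-class proportions preserved."""
    by_class = defaultdict(list)
    for i, label in enumerate(labels):
        by_class[label].append(i)
    rng = random.Random(seed)  # fixed seed makes the split reproducible
    train, test = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_test = round(len(indices) * test_fraction)
        test.extend(indices[:n_test])
        train.extend(indices[n_test:])
    return sorted(train), sorted(test)


labels = ["legit"] * 95 + ["fraud"] * 5
train_idx, test_idx = stratified_split(labels)
print(len(train_idx), len(test_idx))
print(sum(labels[i] == "fraud" for i in test_idx), "fraud case(s) in the test set")
```

With only five fraud examples, a plain random split could easily leave none in the test set. Stratifying guarantees that the rare class is represented in both sets.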

Conclusion

Selecting the appropriate dataset is essential for the success of any machine learning endeavor. Whether addressing challenges in computer vision, natural language processing, or structured data analysis, the careful selection and preparation of datasets are vital. By utilizing publicly available datasets and implementing effective preprocessing methods, one can develop precise and efficient machine learning models applicable to real-world scenarios.

For those seeking high-quality datasets specifically designed for various AI applications, consider exploring platforms such as Globose Technology Solutions for advanced datasets and AI solutions.
