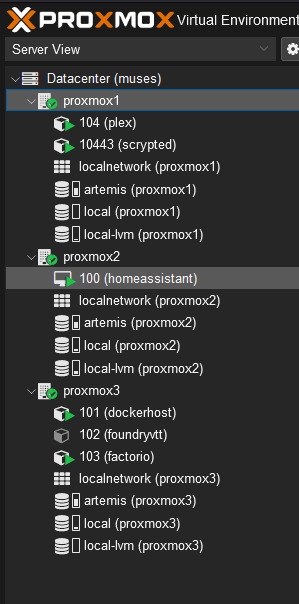

#and build a couple compute heavy nodes

Watch me doing things for free that most people get paid to do :negative:

#next I gotta set up ceph#and build a couple compute heavy nodes#and get more n100 based mini-PCs so my plex and scrypted containers have HA failover#seriously the intel n100 is an amazing little box of fun#and at some point I guess I should also p2v the one remaining windows server in my home lab

FFXIV Write 2021 Prompt #13

Oneirophrenia -a hallucinatory, dream-like state caused by several conditions such as prolonged sleep deprivation, sensory deprivation, or drugs

Not many people outside of the staff of Skysteel Manufactory have a chance to visit the rooms on its upper floors. They contain a series of workshops, offices for senior staff, and a couple of rooms dedicated to sleeping for when a shift has to go extra long.

One of the workshops was permanently leased out to the Warriors of Light, and Old Man Franks tended to be the sole user of said space. Under normal circumstances it held spares of roughly a third of the tools he kept in his workspace in the Rising Stones; today it was full to near-bursting with a large supply of Allagan computational nodes and the cables connecting them all.

Franks stood in front of the sole connected display, watching the assembled cluster churn through the calculations he'd fed it via his Ironworks-created magitek grimoire a mere bell ago. If this worked, if all of the mathematics went to plan? Well, maybe, just maybe, he would finally be able to program an arcanima array that would open a portal to another world on his own.

If Stephanivien had any concept of what he was working on in here, he'd likely chastise him for misuse of Manufactory resources. He...hadn't been entirely truthful when he told the head of the guild what he was doing with their rented space, but he couldn't do this work within the Rising Stones.

Mostly because he'd worked through nearly two suns without sleeping between building the cluster and writing the code. Tataru would have had his hide for taking such poor care of himself.

He sat and waited, forcing himself to take his eyes off the display. His eyes were beginning to hurt from staring at a lighted surface. Or maybe that was the lack of sleep. Actually, probably both. He shut them just for a few seconds.

The console sounded to signal that it had finished running, but it was not the upbeat ping that signaled successful completion. Instead, Franks' eyes shot open at the rapid triple-beeps that signaled the computations had failed...again.

He ran to the display console. "No no no....DAMMIT!"

"Power requirements insufficient to initialize and/or sustain generation of cross-dimensional gateway aperture" was displayed on the screen. As it had been for every other attempt he'd made at solving this problem. The theory was sound, the problem, as always, was of a practical nature. No force on Hydaelyn, it seemed, was capable of generating the necessary energy to power the creation of a portal. He'd tried modeling everything from ceruleum engines up to a truly ludicrous amount of corrupted crystals. Everything had failed.

He slammed his fists on the nearby table. The heavy nodes didn't budge.

This was POSSIBLE, he knew it! He'd ended up on this star by falling through one! The Exarch's own magicks had brought heroes through to their version of the First from other worlds that had their own versions of the Source and Shards, but that had been temporary, and even that was only accomplished with a great deal of the power of the Crystal Tower. Creating a stable rift, it seemed, was an order of magnitude more complicated. So what in all of creation had generated the one that brought him to Hydaelyn?

He'd gone back to take readings with the various aetherological instruments the Scions had at their disposal. Not much energy was consumed in sustaining the rift that had brought him here, so he hypothesized that the amount needed to create one was similarly not overwhelming, but all of his calculations suggested the contrary. Something had generated that rift between worlds, and while he lacked both the equipment and the desire to return to the other side of that rift and examine it from that end, he suspected that he would find no answers there either. Portal magic within the world itself was common enough, but the energy required to open gateways between that world and its various demiplanes varied greatly. Whatever metaphysical distance or barrier separated entire worlds of existence seemed to be much greater in scope than anything he'd seen before.

Franks slumped onto the table in defeat. For what seemed like only a few minutes, he lay there, contemplating what to even consider using as a possible energy model next, when he heard a voice.

"Once again, my love, you are working far too hard."

He sat up and smiled in the direction of the doorway. It was a gentle, loving chastisement that he'd heard many a time from a voice he would never grow tired of hearing.

At the door stood a tall woman with a heart-shaped face. Long graying hair fell from her head to her shoulders (no, past her shoulders, it was almost down to her chest, now). She wore simple robes of red and gray, and had just finished propping a green gem-topped staff against the nearest workbench.

She strode over to him, a happy smile on her face as she wrapped her arms around him, holding him gently behind the neck.

Franks wrapped his own arms around her waist and took a moment to admire her beautiful features, taking an extra second or two to lose himself in those sea-green eyes, one slightly darker than the other, before finally bringing their lips together. Another thing he would never tire of.

They broke apart a few seconds later. Franks chuckled. "I know, my dearest. But you know what an amazing discovery this would be if I could somehow pull it off! Think of all the good that could be done if universes of like-minded people banded together to solve problems! And....well, the world's not currently in peril, so it feels like I should take the time while it's there, y'know?"

She placed a single finger on his lips. "I know, I know, you never could stand being idle. But we have that meeting with Dahkar in the morning, so we both need to get some sleep, yes?"

He cocked his head to the side. "Wait, what meeting? Dahkar is....isn't he off in Doma at the moment? What would we have to meet with him about?"

She smiled (her smile was wider than he remembered, almost too big for her face). "We're coordinating the orders for the assault on the Broken Shore, you silly man. Did you forget?"

A throbbing pain suddenly shot into his temple, and he released her to grab the sides of his head. The pain was excruciating, some of the worst he could remember, and he had to brace himself on the nearby table for a moment, looking down. He did remember the meeting she had spoken about, but....that meeting, that assault....it had been years ago. She was talking about another Dahkar....but his mind had been broken, hadn't it? He looked at his own hands. These...these weren't his hands. They didn't look like this. Wait...she didn't look like that, not anymore....

He lifted his head and turned to her. A pale and sallow form stood before him, robes torn into rags. Her gray hair was short and decaying, a green nest above a face that seemed to be melting away. The green pools of her eyes had been replaced by black voids, a single burning yellow speck within each.

She opened her mouth. A shriek emerged. He yelled in despair.

With a jolt, Franks sat up. He was alone in the room once more. The display on the node had entered an idle state, which displayed only the time. Franks leaned over to read it.

Six bells had passed since he'd last looked at the error messages.

With a sigh, he flipped the switch that shut down the entire cluster, only to realize that his linkpearl was pinging.

He tapped it, sending the small jolt of his own aether needed to activate it. "This..." He coughed, his voice still rough from too little sleep. "This is Franks."

"Franks, it's Tataru. Where are you?"

"Ishgard. Worked a little too late and decided to just sleep here." He felt a little bad about lying to Tataru, but he still felt extremely rattled by that....dream, he supposed, and was definitely not in a state where he could accept with any grace the chastisement she would doubtless heap upon him.

"You need to get back to the Rising Stones, ASAP. Y'shtola wants all of us there. She says it's an emergency but she won't say what is going on. All I know is that she has a guest with her."

"Very well, I'm on my way."

He disconnected the linkpearl and, with a final sad look at the nodes, began channeling the necessary magicks to travel along the aetheryte network back to Mor Dhona.

#Final Fantasy XIV#FFXIV 2021 Writing Challenge#oldmanfranks#Old lady franks?#hmmmmmm#wonder what the emergency might be

Tips and best practices for optimizing your smart home

You’ve figured out the basics of setting up your smart home, now it’s time to raise your game. I’ve spent years installing, configuring, and tweaking dozens of smart home products in virtually every product category. Along the way I’ve figured out a lot of the secrets they don’t tell you in the manual or the FAQs, ranging from modest suggestions that can make your smart home configuration less complex, to essential decisions that can save you from having to start over from scratch a few years later.

Here are my top tips and best practices for optimizing your smart home.

1. Choose a master platform at the start

These days, an Amazon or Google/Nest smart speaker or smart display can fill the role of a smart home hub (and some Amazon Echo devices are equipped with Zigbee radios).

There are three major smart home platforms on the market, and your smart home will probably have at least one of them installed: Amazon Alexa, Google Assistant, or Apple HomeKit. The industry now revolves around these three systems, and virtually every significant smart home device that hits the market will support at least one of them, if not all three.

These platforms are different, of course. Alexa and Google Assistant are voice assistants/smart speakers first, but the addition of features that can control your smart devices has become a key selling point for each. HomeKit is a different animal, designed as more of a hub that streamlines setup and management. But since HomeKit integrates with Siri, it too offers voice assistant features, provided you have your iPhone in hand or have an Apple HomePod.

All three of these platforms will peacefully coexist, but you definitely don’t need both Alexa and Google Assistant in the same home, and managing both will become an ordeal as your smart home grows larger. It’s also completely fine to use HomeKit for setting up products and then use Alexa or Google Assistant for control. If you have a HomeKit hub device (either an Apple TV or a HomePod), you’ll want to use it, as it really does simplify setup.

2. You don’t necessarily need a smart home hub

In the early days of the smart home, two wireless standards, Zigbee and Z-Wave, were going to be the future. These low-power radios offer mesh networking features that are designed to make it easy to cover your whole home with smart devices without needing to worry about coverage gaps or congestion issues.

The main problem with Zigbee and Z-Wave devices is that they require a special hub that acts as a bridge to your Wi-Fi network, so you can interact with them using a smartphone, tablet, or computer (both while you’re home and, via the internet, when you’re away). Samsung SmartThings is the only worthwhile DIY product in this category at present; its only credible competitor used to be Wink, a company that is now on its third owner and has a questionable future at best. The Ring Alarm system has Z-Wave radios onboard, but it’s much more focused on home security than home control.

As simple as SmartThings and Ring Alarm are, you’ll still face a learning curve to master them, and if your home-control aspirations are basic, you might find it easier to use devices (and the apps that control them) that connect directly to your Wi-Fi network and rely on one of the three platforms mentioned above for integration. It’s worth noting here that the 800-pound gorilla in the smart lighting world, Signify, now offers families of Philips Hue smart bulbs that rely on Bluetooth instead, so they don’t require the $50 Hue Bridge.

That said, however, you’re limited to controlling 10 Hue bulbs over Bluetooth. The Hue Bridge is required beyond that, and it’s also required if you set up Hue lighting fixtures, including its outdoor lighting line.

The bottom line on this point: Unless you want to build out a highly sophisticated smart home system, I recommend sticking with products that connect directly to your network via Wi-Fi, rendering a central hub unnecessary.

3. Range issues can create big problems

The downside of installing Wi-Fi-only gear is that everything in the house will need to connect directly to your router. If your router isn’t centrally located and your house is spread out, this can create range issues, particularly in areas where interference is heavy: the kitchen, bathrooms, and anything outside.

Your best bet is to check your Wi-Fi coverage both inside and outside the house before you start installing gear. Make a map of dead zones and decide whether you can live with them. If not, you’ll want to consider relocating your router or moving up to a mesh Wi-Fi network with two or more nodes.

Interference can also be a troubling problem that changes over time. If your next-door neighbor upgrades or moves his router, you may find that an area of the house with a once-solid signal has suddenly become erratic. You can tinker with the Wi-Fi channel settings in your router’s administration tool, but deploying a mesh network is a more sure-fire solution. Netgear even has an Orbi mesh node that can be installed outdoors to cover your backyard.

4. You don’t need smart gear everywhere

Many a smart home enthusiast has dreamed of wiring his entire home from top to bottom with smart products. A smart switch in every room and a smart outlet on every wall sounds like a high-tech dream; in reality, it can spiral into a nightmare.

The biggest problem is that while smart gear can be amazingly convenient, it also adds complexity to your environment, because all of it must be carefully managed. Does installing 50 firmware updates sound like a great way to spend the weekend? Or troubleshooting that one switch that suddenly won’t connect properly? Deploying smart speakers all over the house, so you don’t need to yell for one to hear you, sounds like a great idea, too, until the speakers have difficulty deciding exactly which one you’re talking to.

Devices such as Leviton’s Decora Smart Voice Dimmer with Amazon Alexa make it easy to put Amazon’s digital assistant in every room, which sounds like a great idea until they start fighting each other to answer your commands.

In choosing where to install smart gear, think first about necessity. The hard-to-reach socket where you always plug in your Christmas tree is a perfect place for a smart outlet that can be set on a recurring schedule. The kitchen is a great option for voice control, so you don’t need to touch anything with dirty hands. My living room is lit by three lamps that would normally have to be turned on and off individually; with smart bulbs and Alexa, it’s easy to power them on with a couple of spoken words. But does the overhead light in the master closet really need any of these features?

And finally, there’s the obvious issue: Smart gear isn’t cheap, and outfitting a large home with it can quickly become exorbitantly expensive. And what happens when your gear becomes outdated (and out of warranty)?

The bottom line: While it’s tempting to install everything you think you might use at the start of your project, don’t overdo it. You can always add on to your system down the road. Install smart gear only where you know you will actually use it.

5. Consolidate vendors

It might sound like common knowledge to suggest you try to stick with a single vendor when it comes to all your switches or light bulbs, but it’s easy to be wooed by a product that promises new features or better performance. Avoid taking the bait: Over time, bouncing from one vendor to the next will leave you managing multiple apps, and you’ll likely get confused about which one goes with which device.

Many smart outlets and switches don’t carry a visible brand logo, so it isn’t always as easy as checking the hardware itself to see which app you should go to. (Making matters worse, many smart products use a management app whose name has no relation to the hardware’s name.) And while most HomeKit-capable apps can control other vendors’ HomeKit devices, you’ll still usually need the official app to get things set up initially and to perform regular maintenance.

The good news is that TechHive has plenty of buying guides in almost every smart home category to help take the guesswork out of figuring out which brands to build your home around, so you needn’t experiment to find the best products on the market.

6. Give your gear short, logical names

By default, many smart products will give themselves a name during setup that consists of generic terms and random digits, none of which will be helpful to you in identifying them later. It’s best to give your gear a short but logical and easy-to-remember name when you first set it up.

Start by giving all the rooms in your house a name in the management app, even if they don’t have any gear in them. (You might install equipment there later.) “Bedroom” is not a good name unless you have only one. You’ll want to use the most logical yet unique names possible here: “Master bedroom,” “Zoe’s bedroom,” “Guest bedroom,” and so on.

Now, when you install a product, standardize its name using both the room name and a description of the item, or of what the item controls. For example: “Master bedroom overhead lights” for a wall switch or “Office desk lamp” for a smart plug connected to said lamp. In rooms where you have multiple products, you can use a longer descriptor, a numerical ID (1, 2, 3…), or something similar. In my living room, the three lamp smart bulbs are named “Living room lamp left,” “Living room lamp center,” and “Living room lamp right,” so if one isn’t working in the app, it’s easy for me to figure out which is which.

Doing this work up front will save you time if and when you connect your gear to a voice assistant. Not only does a standardized, logical naming system make it easy to remember what to say, but changing a product’s name in its app later generally means having to re-discover the product within your voice assistant app, which is a hassle.
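To make the room-plus-description convention concrete, here is a minimal Python sketch, assuming you keep a list of planned device names (in a notes file or script) before typing them into your management app. The helpers `device_name` and `find_duplicates` are hypothetical illustrations, not part of any vendor's app or API. The duplicate check flags names that differ only in capitalization, since a spoken command can't distinguish them.

```python
from typing import List, Optional


def device_name(room: str, description: str, position: Optional[str] = None) -> str:
    """Hypothetical helper: join room, item description, and an optional position or number.

    Example: ("Living room", "lamp", "left") -> "Living room lamp left".
    """
    parts = [room.strip(), description.strip()]
    if position:
        parts.append(position.strip())
    name = " ".join(p for p in parts if p)
    # Uppercase only the first character so names like "Zoe's bedroom" stay intact.
    return name[:1].upper() + name[1:]


def find_duplicates(names: List[str]) -> List[str]:
    """Return names that collide with an earlier name when capitalization is ignored."""
    seen = set()
    duplicates = set()
    for name in names:
        key = name.casefold()
        if key in seen:
            duplicates.add(name)
        seen.add(key)
    return sorted(duplicates)


if __name__ == "__main__":
    planned = [
        device_name("Master bedroom", "overhead lights"),
        device_name("office", "desk lamp"),
        *(device_name("Living room", "lamp", pos) for pos in ("left", "center", "right")),
    ]
    for name in planned:
        print(name)
    print("Duplicates:", find_duplicates(planned))
```

The point is the convention, not the script: the same rule (room first, then what the device controls, then a position or number only when needed) works just as well on paper.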

7. Wiring never looks like it does in the pictures

Manuals and online guides always make in-wall wiring look like a standard, well-organized affair, but I can assure you that many an electrician has taken some significant liberties with the way that switches and outlets are wired in the average home. Don’t be surprised to find multiple black line/load wires when you expected to find just two, strange in-wall hardware that doesn’t look like the picture, and wiring that simply doesn’t make sense.

The neutral wire required by the vast majority of smart switches and outlets is typically white, but what if you find two white wires in the box? Which one is the neutral? Of course, you can always experiment as long as you’re patient; there’s little risk of damaging the product if you miswire it the first time. Just make sure you turn the power off at the circuit breaker before you touch anything.

As a last tip on wiring, note that a neutral (typically white) wire is essential for most of the smart switches on the market. If there is no neutral wire in the electrical box where you want to install a smart switch, you’ll need to seek out the handful of smart switches and dimmers that don’t require one, such as certain C by GE models.

8. Expect problems to emerge without warning

You know how your computer suddenly starts crashing every day, or your printer abruptly vanishes from the network? The same kinds of things happen to smart home gear, which, after all, consists of miniature computers prone to the same types of issues. Expect the occasional product to abruptly disconnect from your network, vanish from the management app, or stop working altogether, even after months or years of otherwise trouble-free operation and without any discernible reason. In many cases, you’ll need to manually reset the product to get it to reconnect to the app. Sometimes the app will guide you through this process; otherwise, a quick Google search can get you squared away.

9. Pay attention to battery life

Devices not attached directly to the grid rely on battery power to operate. Door/window and motion sensors, smart locks, smart doorbells, many cameras, smoke alarms, and more are all likely to require regular battery replacements or recharging, and while many devices claim to last for multiple months or even years, the reality is often shorter than that.

Take stock of the batteries each of these devices use—some are truly oddball cells that you won’t have in the junk drawer—and keep spares on hand for when they die. Devices that use a rechargeable battery like the Ring Doorbell are supposed to alert you via the app when the battery is running low, so you can recharge it before it goes totally dead, but my experience is that these alerts are rarely actually delivered (or end up being ignored).

If your Ring Video Doorbell’s battery is dead, you’ll never know if someone’s ringing the bell (which, in my case, usually means a “missed delivery” slip from FedEx). I check my Ring’s battery life in the app once a week (it’s under Device Health), and when it hits about 35 percent, I remove the cell and charge it back up (you can also buy spare Ring batteries and just swap a dying battery for a freshly charged one).

10. Dimmers can be particularly problematic

Electrical dimmers like the old-school wall-mounted dial type work by lowering the amount of electrical current sent to the load device, which will, say, lower the brightness of an incandescent bulb or slow down a fan. Unfortunately, dimmers cause problems for many devices, and smart home devices are especially vulnerable because they contain electronics and radios that simply won’t work if the power isn’t coming through at full strength. As such, it’s a bad idea to connect devices like smart light bulbs to circuits controlled by a dimmer.

On a similar front, you’ll need to be especially observant if you replace an old toggle switch with a smart dimmer. As a shortcut, switches are sometimes wired with pass-through circuitry that passes current along to other devices (such as a nearby power outlet). If you swap this switch for a dimmer, you might inadvertently put those outlets on the dimmer, causing them to lose all or some of their power and making for a complicated troubleshooting session.

Nodes

Summary: Beca finds out about Chloe’s vocal nodule surgery over spring break, after the a cappella performance where the brunette changed the setlist. She decides to visit.

Entry for Day 5 - Why Are You Here?

AO3

-

It was the beginning of spring break and Beca couldn't feel more unhappy.

She could practically see the steam blowing out of Aubrey’s head, and Beca felt tears rise to her own eyes when the blonde chose to remove the brunette from the Bellas.

And no one stood up for her.

Not Fat Amy, not Stacie, not even Jessica, the most optimistic and smiley person in the group, no. Not even Chloe Beale, the co-captain. And the one person who did defend the brunette, Beca had screamed at in frustration. She had turned on her heel and stormed out of the auditorium.

Because that's what she did best.

If all else fails, she runs.

-

Beca misses strolling over to rehearsal every day at 4:00, even if she wasn't particularly fond of the captain or the cardio. She misses the times Stacie couldn't stop groping herself and the group would end up in a laughing fit. Beca misses how Fat Amy would occasionally order pizza during cardio and eat it in front of the girls with absolutely zero fucks given. She misses Lilly’s ominous comments and how her face would spontaneously pucker up.

Most of all, she misses the person she sang “Titanium” with in the shower.

Beca misses Chloe Beale with her bright blue eyes full of hope.

As cliché as it may sound, the redhead made practice more enjoyable and worthwhile. The little winks Chloe would throw Beca during stretching, the compliments on how well Beca executed a dance move even though the brunette knew she'd been doing those moves half-heartedly for the past couple of months. She misses how she and Chloe would usually be the last ones to leave rehearsal because the redhead insisted on walking Beca back to her dormitory.

Beca took those times for granted, and now she may not even see the girls on a regular basis. Her first group of female friends disappeared right before her very eyes, just like that.

Everyone has each other's phone numbers. Aubrey had created a Bellas group chat with everyone in it, and the last message there was a text from Chloe.

Bree and I are proud of everyone's hard work put into this season… hopefully, you guys can carry on and get into the Championship next year! xxx

It was left on read by everyone, even Aubrey… it looks like everyone was bitter after that performance. No one has texted the group chat since, which isn't surprising. Hell, no one has even texted each other separately, even though Beca was on good terms with the other Bellas; it must've felt awkward.

At this point, Beca didn't have any friends around except her roommate, Kimmy Jin. Well, more like Kimmy Jin was the only person the brunette was able to communicate with… and her roommate still wasn't fond of Beca. Even so, Beca preferred to keep all of her frustrations pent up.

She didn't know what else to do.

-

Beca's huddled up in the corner of her small bed, watching a movie. She's sniffling and crying when she notices her phone vibrate - it's been on vibrate ever since the group's fallout. The brunette wipes her tears away and picks up the phone and notices her father’s name.

Dad 1 text message

Beca quirks an eyebrow as she removes her headphones. It's odd that her father would message her out of the blue; the two haven't talked or seen each other since Beca got arrested, even though they’re on the same campus. Before the brunette can answer, her phone pings again.

Dad 2 text messages

Beca decides to open the texting app.

Is your friend Chloe okay? I heard she got surgery and that’s why she hasn’t been attending study groups lately.

Surgery? What could Chloe be getting surgery for? Beca begins to text until her father sends another message.

Do you not know?

Beca swiftly types across her keyboard, head tilted.

havent talked to her since the performance

Oh. How’s the Bellas?

Beca looks up to the ceiling to prevent more tears from falling. havent talked to them since the performance.

I’m sorry.

Beca hovers her thumbs over the keyboard, circling around letters. She tugs at her bottom lip; she knows what she's about to ask might turn into a mess, but Beca is tired of running. do u know the address of the hospital?

Oh! Let me ask one of the students here… Chloe’s really close with the study group people.

The brunette nods and pushes off the blanket, setting the bulky black headphones aside. She shuts down her laptop and slips her boots on while she waits for her father to respond. Her phone pings, and Beca immediately unlocks it.

423 Carnegie Way. You planning to visit?

It was too obvious at this point to lie. yes. can i take ur car?

Go ahead. Parked by your dormitory. You have the spare key right?

yeah

Okay, drive safely.

Beca shuts her phone off and just as she’s about to run out the door, her roommate stops her.

“Your makeup, idiot,” Kimmy Jin deadpans. The brunette turns around with a slightly amused expression and walks over to her mirror. She notices her eyeliner is smudged from crying; she'd somehow forgotten. Beca grabs a packet of makeup wipes from her bedside drawer and heads for the door again. “Beca?”

The brunette turns around. “Yeah what’s up?”

“Good job,” Kimmy Jin answers; Beca can tell she's fighting back a smile.

“Cya Kimmy Jin.”

The brunette exits the dormitory building and heads to her father’s car in the parking lot. Beca unlocks the vehicle, sits in the driver’s seat, and wipes the heavy eyeliner off her face, starting the car once her makeup is completely removed. She pulls out of the parking lot as she starts the GPS for the hospital where Chloe is staying. This is either going to be a big mistake or the greatest thing Beca has ever done.

The brunette parks her father’s car, still intact; Beca once scratched his car against a tree in high school, and he won’t let her forget it. Beca turns off the engine, exits the vehicle, and enters the quiet building. She walks toward the receptionist and notices the “Visiting Hours” sign is lit; luck is on Beca’s side today. The receptionist looks up and smiles gently at Beca; she looks like she hasn’t gotten much sleep.

“How may I help you?”

Beca clears her throat and speaks in a lower octave. “Is there anyone by the name of Chloe Beale here?”

The receptionist quirks up an eyebrow. “Who may you be? Visitors can only be friends and family.”

“Oh, I’m her friend. I’m in the same a cappella group as her, the Barden Bellas.” Beca groans at herself internally; she has a tendency to overshare when she's nervous around strangers.

“Alright… yes, she’s here. Would you like to visit her?” Beca nods. The receptionist logs information into the computer and grabs the untearable visitor bands from underneath her desk. Beca holds out her wrist as the receptionist wraps the band around her wrist and cuts off the excess part. “She’s on level 3, room 303. Enjoy your visit.”

Beca waves goodbye to the friendly receptionist and walks to the elevators, pressing the button for the third floor. She feels her heart rate pick up and her hands go clammy, not sure whether she’s nervous about Chloe’s reaction or about seeing the redhead at all. The brunette’s mouth goes dry as the elevator doors open, and Beca immediately spots the room on the right-hand side of the hall. She slowly approaches the door and takes a shaky breath. The brunette opens the door.

Chloe is dressed in a hospital gown, and somehow she manages to make those unflattering gowns look good. She’s staring out the window, earbuds in, nodding slowly along with a beat. Beca walks closer to the bed and the redhead slowly turns her head toward the brunette, her mouth falling open as she removes her earbuds.

“Hi Chlo…” Beca waves awkwardly, confused when Chloe turns away. She’s relieved when the redhead turns back around with a pen and notepad.

Why are you here?

Beca takes a seat at the edge of her bed. “Just wanted to see how you were… did your surgery go well? What was it for?” The brunette asks, nervously fidgeting with her hands. Chloe smiles and writes her response down once again, Beca notices she switched hands for writing this time… ambidextrous.

It went well, I’m on vocal rest. And it’s cute how you worry. Remember my nodes? I removed them…

The brunette’s jaw drops as she inches closer to Chloe. “Oh wow, that’s… shit. Can you still sing?” Chloe nods and writes a note down.

Can’t sing above a G# maybe ever. Probably have to take voice therapy for like four to six weeks.

Beca brushes a stray hair behind her ear out of nervousness. “I’m sorry about that. At least you can still sing after, right?” The redhead nods and writes a reply down.

You’re the first person to visit me, you know? I expected maybe Aubrey or something, but no… it’s you. How come?

“I don’t know… felt like I was required to. You’re my friend.” A smile washes over Chloe’s face; it’s the first time she’s smiled since Beca walked in. “Also… I’m really sorry for what I said to you after the performance. It was so fucked up and I wish I could take it back.” The redhead grabs Beca’s hand as she writes down another note.

No, it’s fine. I’m sorry too, I should’ve stood up to Bree. And that’s the first time I’ve heard you mention that I’m your friend :)

Beca laughs at the smiley face drawn at the end. “Yeah… don’t tell anyone. I have this whole ‘badassery’ vibe going on here.” The brunette gestures to her body with the hand Chloe isn’t holding. The redhead rolls her eyes and her smile grows wider. There’s silence between the two as Beca stares into Chloe’s bright blue eyes, blushing at the sight of her smile. Beca breathes in and lets out a shaky breath. “I really missed you, Chlo.” The redhead’s eyes widen a bit as she writes once again.

I missed you too. Have you talked to any of the other girls yet?

Beca shakes her head no.

Wow, I’m the first? I’m special, aren’t I ;)

“Don’t get too cocky there, Beale,” the brunette teases, smirking. “You just… I miss seeing your smile and going to practices with you and shit…” Chloe tilts her head. “I’ve never really felt close to someone until you? Maybe that’s because you saw me naked within like a week of meeting each other… that’s at least two bases you skipped there,” Beca jokes, causing Chloe to bite down on her lip to keep from laughing too much. “I just… really, really like you and I was worried about you and… yeah.” Beca is confused about why Chloe’s eyes are so huge until she realizes what she just said. She stands up from the bed, covering her mouth. “Shit, I- fuck.” Chloe quickly scribbles something down on her notepad.

Wait, Beca no it’s okay! Sit back down.

The brunette clenches her hands into fists and slowly sits down. “I’m sorry, I just… I tend to ramble and just, ugh, fuck! I’m just so bad with this type of stuff…” Chloe gestures for Beca to come closer, and so Beca does. The redhead plants a soft kiss on the corner of Beca’s mouth and smiles when Beca looks dumbfounded. Chloe immediately scribbles something on her notepad.

I really like you too, idiot.

Beca rolls her eyes as she slowly grazes over the corner of her mouth with her fingertips, the feeling of Chloe’s lips still lingering. The brunette blushes as Chloe slips her hand into Beca’s. The redhead notices the time and frowns. She writes in her notepad with her free hand.

Hospital people don’t like it when you stay for too long… you should probably get going.

Beca frowns as she slowly stands up, still holding hands with Chloe. “Yeah… probably.” Chloe scribbles something down.

I hope the Bellas will regroup sometime soon.

The brunette nods. “Yeah me too…” Beca plants a kiss on Chloe’s forehead and waves goodbye to her possible girlfriend. The brunette leaves the hospital with a smile on her face and the feeling of Chloe’s lips still tingling the corner of her mouth. When she enters her father’s car, she immediately gets a text from Chloe.

FOOTNOTES LEADER WAS IN HIGH SCHOOL AND GROUP WAS DISQUALIFIED. WE’RE BACK IN BEC!

She smiles as she starts the car… luck was really on her side today.


Pivx Wallet

PIVX, a cryptocurrency with a strong focus on privacy, is an offshoot of DASH. It came into existence when the crypto community couldn't agree on the future of DASH. According to one crypto insider, the DASH community was torn between building the ultimate privacy coin and scaling to reach a large audience. When they couldn't agree, a few members of the community decided to pursue mass adoption and launched PIVX.

What Is PIVX?

PIVX takes the best features of DASH and adds a few unique features of its own. For privacy it relies on zero-knowledge proofs, a family of techniques also used by Zcash. It is similar to ZCoin in that it also uses a custom version of the Zerocoin protocol. It has an additional feature for sending private coins: users can send fractional amounts and send coins instantly to a receiving wallet. In this sense it is an amalgam of DASH and ZCoin, combining privacy with Proof of Stake (PoS). PIVX is said to be the only PoS cryptocurrency that has implemented the full list of requirements set out in the Zerocoin whitepaper.

Zerocoin is a cryptocurrency protocol that was proposed in a paper by Professor Matthew D. Green and a couple of graduate students. Its goal is to extend the Bitcoin protocol with truly anonymous transactions. In this sense, PIVX has stronger privacy features than Bitcoin.

What Are the Advantages of PIVX?

PIVX is more energy efficient than Proof of Work (PoW) cryptocurrencies because of its PoS consensus mechanism. There is also a network of masternodes that maintain the PIVX blockchain. Masternodes govern the network and can vote on decisions about the coin's future development. There is also a self-funding treasury that releases funds for new development of the PIVX blockchain.

What Features Make PIVX Different From DASH?

Instead of being built on a PoW algorithm like DASH, where miners spend computing resources to verify the blockchain, PIVX relies on PoS. PIVX also differs from other privacy coins such as Monero (XMR) and Zcash (ZEC) in that it doesn't require mining to generate new coins; instead, holders earn a stake, or reward, simply for holding PIVX in a wallet. Proof of Stake is a method of distributed consensus in which PIVX holders are rewarded for keeping their coins in their wallets. Other notable features of PIVX are the see-saw algorithm and instant transactions using SwiftTX.

What Is a PIVX Masternode?

A PIVX masternode requires 15,000 PIVX to be locked in a wallet on the server as collateral. Masternodes earn rewards for providing services to the PIVX network. The principle behind masternodes is that they must be fully decentralized and trustless. Returns to masternode holders are slightly larger than returns to wallet holders who stake PIVX for rewards. These rewards are variable and are determined by a see-saw algorithm.

What Is the See-saw Algorithm?

This custom algorithm is an ingenious response to networks that become too masternode-heavy, which leads to governance issues. With DASH, masternode owners have voting rights and greater control over the network: the more masternodes someone owns, the more influence they have over decisions. This centralizes influence, and owning a masternode is accessible to only a few. PIVX's see-saw algorithm compares the number of masternodes with the amount of PIVX being staked at the same time. If there are too many masternodes, the algorithm adjusts the rewards released to masternode owners so that they receive smaller rewards. The aim is to incentivize masternode owners to stake their coins instead and give up their voting rights. In this way, voting rights become more decentralized and PIVX holdings more widely distributed. Unlike most cryptocurrencies, PIVX has an unlimited supply.

What Is SwiftTX?

PIVX's SwiftTX provides near-instant transactions that are confirmed within seconds. This is achieved through the network of masternodes, and transactions do not need multiple confirmations, as on Bitcoin, before they can be spent.
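The see-saw idea can be made concrete with a toy model. This is a hypothetical sketch, not PIVX's actual reward schedule: the names `masternodeRewardShare` and `splitBlockReward`, the 50/50 baseline, the 40% target share, and the linear adjustment rule are all invented for illustration. It only shows the mechanism described above: when masternodes hold more than their target share of participating coins, their slice of each block reward shrinks, nudging owners back toward ordinary staking.

```javascript
// Toy model of a "see-saw" reward split. Every parameter here is
// hypothetical and chosen for illustration; none are PIVX consensus values.

/**
 * Fraction of each block reward paid to masternodes.
 * @param {number} masternodeCoins coins locked as masternode collateral
 * @param {number} stakedCoins     coins staked by ordinary wallets
 * @param {object} opts            hypothetical tuning knobs
 */
function masternodeRewardShare(masternodeCoins, stakedCoins, opts = {}) {
  const {
    baseShare = 0.5,   // split when the network is "balanced"
    targetShare = 0.4, // desired fraction of coins locked in masternodes
    sensitivity = 1.0, // how strongly the see-saw reacts
    min = 0.1,         // floor so masternodes are never starved
    max = 0.9,         // ceiling so stakers are never starved
  } = opts;
  const total = masternodeCoins + stakedCoins;
  if (total <= 0) return baseShare;

  // Fraction of participating coins currently locked in masternodes.
  const actualShare = masternodeCoins / total;
  // Too many masternodes (actual > target) lowers their slice; too few raises it.
  const adjusted = baseShare - sensitivity * (actualShare - targetShare);
  return Math.min(max, Math.max(min, adjusted));
}

// Split one block reward between masternode owners and stakers.
function splitBlockReward(blockReward, masternodeCoins, stakedCoins, opts) {
  const mnShare = masternodeRewardShare(masternodeCoins, stakedCoins, opts);
  return { masternode: blockReward * mnShare, staker: blockReward * (1 - mnShare) };
}

// A balanced network versus a masternode-heavy one.
console.log("balanced:", splitBlockReward(5, 400, 600));
console.log("masternode-heavy:", splitBlockReward(5, 800, 200));
```

Clamping the share between a floor and a ceiling keeps either side from losing its rewards entirely; the real protocol's formula and parameters may differ.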
Why Does PIVX Have an Unlimited Coin Supply?

Unlike Bitcoin, which has a maximum supply of 21 million coins, PIVX has no such limit. The reasoning is that while Bitcoin is more of a digital asset, PIVX acts as a digital currency, and the supply of any currency should grow and be subject to inflation. In central banking this is called quantitative easing. The PIVX supply will increase by five percent per year. The idea is that people will be incentivized to use PIVX rather than hold it: it is not expected to increase in value but to be inflationary, like fiat money. Instead of a central bank benefiting from the currency's decline in value, the newly created coins are returned to masternode and wallet holders as PoS rewards. This rewards the community directly. The cost of running a masternode will not rise in the future and will in fact remain affordable, which is an added bonus.

How Do You Buy PIVX?

You cannot buy PIVX with fiat currency, so you will first need to buy another cryptocurrency. The easiest to buy are Bitcoin or Ethereum, which you can do on Coinbase by bank transfer or debit/credit card purchase, and then trade for PIVX on an exchange that lists it. You can buy PIVX on the following exchanges: Bittrex, UpBit, Binance, Cryptopia, CoinRoom, YoBit, LiveCoin.

What Is the Roadmap for PIVX?

The development team is busy implementing the Zerocoin protocol in deterministic wallets for PIVX. This protocol is called zPIV. The great and unique feature of zPIV is that your wallet balance can be masked. This is good for security and for deterring potential hackers from stealing your funds. It is also a feature unique to PIVX.

Is zPIV a Different Coin From PIVX?

No, zPIV and PIVX are the same cryptocurrency. The zPIV protocol makes private transactions on PIVX possible using zero-knowledge proofs. Because of these proofs, there is no link between the sender and the recipient, so sending PIVX via zPIV is 100% anonymous and untraceable.

What Is a Deterministic PIVX Wallet?

A deterministic wallet is a wallet that can be restored or backed up using a backup phrase, usually a long series of words. You do not need to know the private key to restore the wallet. This is good news, as users will not have to make a new backup every time they mint new private PIVX coins: a single backup covers future and past mints of any private PIVX.

Besides the wallet features, the team also wants to introduce staking of zPIV, called zProof-of-Stake (zPoS).

What Is zPoS?

Much like staking normal PIVX coins, holders can also stake zPIV coins. The advantage is that they may be able to earn higher rewards: a new block reward system has been proposed in which zPIV holders would receive 50% greater rewards than holders of normal PIVX coins. To earn rewards, users must keep their wallets open 24/7. This creates a larger network of nodes that are instantly accessible.

What Does the Team Look Like?

Since PIVX is a privacy cryptocurrency, many of its contributors prefer to keep their identities hidden. Very few of the actual team members are listed on the website. Anyone who is a trusted member of the PIVX community and an active contributor to the project can ask to be listed on the website by reaching out on the public Discord channel.

Summary

PIVX is a next-generation cryptocurrency that focuses on decentralization, privacy, and real-world adoption as a payment system. To this end, it supports fast transactions, is secure, and allows funds to be sent privately.


The Good Thing That Came Out of the COVID-19 Pandemic

Dear Readers,

As you know, it has been a really tough 2020 so far, worldwide.

Here in the U.S. we’re still battling COVID-19 and dealing with hurricanes, social unrest from racial conflict, a very divisive political situation, and, here in California where I live, forest fires (about 400 burning at the same time at one point) big enough to cause air quality warnings far away from the fires.

I know some of you are in Europe, Asia, Australia and the Middle East. I hope things aren’t so bad over there.

But enough of that. We must focus on living and make necessary adjustments to carry on with our lives.

There is an old Chinese saying that goes something like this: From crisis, there is opportunity (forgive me if I butchered it; no insult intended).

For the COVID pandemic, this turned out to be true: millions, if not billions, of people all over the world learned that they could do online a lot of things they normally did in person. And those who were already doing this well before COVID learned how to do it even better.

Shopping, buying groceries and sundries, attending school, working, holding meetings, attending church services, getting music lessons, and socializing are just some of the activities people learned how to effectively do online, thanks to being quarantined.

And, in my opinion, the most significant thing people are doing more of online, thanks to COVID: healthcare. Telemedicine, also called telehealth, involves using a telephone and/or webcam to communicate with a health professional, instead of in person, face-to-face, for the purpose of improving one’s health. It also encompasses “consuming” health care content in digital format via the internet, such as pre-recorded videos, slides, images, flow charts, white papers, audio files, and podcasts. I wrote about this over five years ago when I decided to transition my practice to a telehealth model.

Telehealth was just starting to gain traction right before COVID, but the pandemic accelerated its acceptance. The need to quarantine and social distance forced doctors and their patients to interact online, and things will never be the same (in a good way). We were hesitating at the edge of the swimming pool and COVID pushed us into that cold water, figuratively speaking.

Webcams, Internet, Wireless Connectivity and Mobile Devices Finally Transform Healthcare

The “planets aligned” for telemedicine, and very soon it’s going to be as common as buying groceries. To me, it’s overdue. I hope that telehealth not only enables healthcare for millions more people on the planet but also drives healthcare costs down. The cost savings to hospitals are obvious, and those savings should be passed on to insured and paying patients. We’ll see if that happens. While I know people are used to tradition, dating back to the days of the old country doctor with a good bedside manner, I think that in 2020 and beyond, people are going to be just fine seeing their doctor online for simple and routine visits.

And the implications go beyond the actual care. Telemedicine will save time and money on a macroeconomic scale, and it will actually be good for the environment in more ways than one: fewer cars on the road (no need to drive to see your doctor), less electricity and other overhead needed to keep a large building running, less printed paper, etc.

Telehealth Is Ideal for your Average Doctor Visit

The vast majority of things that cause people to see a doctor are non-emergency and lifestyle-related. Non-emergency means not life-threatening and not posing a risk of serious injury. Lifestyle-related means conditions that are largely born of lifestyle choices (high-calorie/junk food diets, alcohol use, smoking, inadequate exercise, occupational/work-related factors, etc.) and are usually chronic, i.e. long-standing: diabetes, high blood pressure, indigestion, arthritis, joint pain, etc. These conditions can be self-managed with proper medical guidance provided remotely via webcam. I believe that if lifestyle choices can cause illness, different lifestyle choices can reverse or minimize those same illnesses, and those choices can be taught via telehealth.

Then there are the cases that are non-emergency, single incident: fevers, rashes, stomach aches, allergies, minor cuts and scrapes, and things of that nature. Sure, some cases of stomach aches and headaches can actually be something dire like cancer. But doctors know that such “red flag” scenarios are comparatively rare, as in less than one percent of all cases; therefore, the vast majority of them can be handled via telehealth. Besides, the doctor can decide at the initial telehealth session if the patient should come in the office, if he/she suspects a red flag.

A Typical In-Office Doctor Visit

Typically, when you go to a doctor or primary care physician, you are given a list of symptoms and told to check off any that have applied to you recently: stomach pain, headaches, vomiting, fever, etc.

Then, you are asked a bunch of questions related to your complaint. This is called taking your history (of your condition). The nurse practitioner or doctor may do this.

The doctor may or may not examine you, such as checking your eyes, ears, nose, and mouth; temperature, blood pressure, heart rate, lungs and so on depending on your history and complaint.

The doctor then takes this information and comes up with a diagnosis or two. You may be referred for diagnostic testing, again depending on what you came in for, such as an X-ray, MRI, ultrasound or blood test.

You may get a prescription for medication or a medical device, and a printout of home care instructions, and then you’re done with your office visit.

With the exception of a hands-on physical examination and diagnostic tests, everything I just described can be done via a telehealth visit on your computer. And as technology advances, more and more medical procedures will be performed remotely via a secure internet connection.

I believe that in the very near future, there will be apps and computer peripherals capable of doing diagnostic tests which will allow your doctor to get real-time diagnostic data during your telehealth visit. It’s already possible for blood sugar, body temperature, heart and lung auscultation and blood pressure.

Imagine wearing gloves with special sensors embedded in the fingertips that transfer sensory information via the internet to “receiver” gloves that your doctor wears, 20 miles away. During a telehealth visit, you could palpate (feel) your glands, abdomen, lymph nodes, etc., and this sensory information would be immediately felt by your doctor, as though they were right there palpating and examining you.

Or, imagine an ultrasound device that plugs into your HD port that transfers images of your thyroid to your doctor via the internet.

The possibilities are endless, and it bodes well for global health. Imagine all the people who can be helped, all over the world, via telehealth. It’s truly an exciting time in healthcare.

Telemedicine for Muscle and Joint Pain and Injuries

Every day, millions of people worldwide sustain or develop some sort of musculoskeletal (affecting muscles, joints, tendons, ligaments, bone) pain, whether it’s their low back, neck, shoulder, hip, knee, hand or other body part. If not treated right, it can become permanent or chronic.

Chronic pain, and even acute (recent onset) musculoskeletal pain can effectively be addressed via telehealth (this is the domain of my platform, Pain and Injury Doctor, and it’s my goal to help a million people worldwide eliminate their pain).

Available medical procedures for musculoskeletal conditions requiring an in-office visit such as surgery and cortisone injection are usually not the first intervention choice for such pain. Conservative care is the standard of care for the vast majority of non-emergency musculoskeletal pain and injury–an ideal application for telehealth.

For example, if you were to go to your doctor for sudden onset low back pain, you would most likely be given a prescription for anti-inflammatory medications, if not advised to just take over-the-counter NSAIDs such as Motrin, and rest. You would also be given a printout of home care instructions, such as applying ice every two hours; avoiding heavy lifting and certain body positions; and doing certain stretches and exercises. As you can imagine, such an office visit could easily be accomplished via a telehealth session. No need to drive yourself to the doctor’s office for this.

But what about chiropractic or physical therapy? You can’t get these physical treatments through your webcam. Yes, chiropractic has been shown to be effective for acute and chronic low back pain, but available studies typically don’t conclude that chiropractic for low back pain is superior or more economical than exercise instruction or traditional medical care. Same with physical therapy. However, as a “biased” chiropractor myself, I believe the benefit of spinal adjustments is not just pain relief, but improved soft tissue healing and structural alignment; two things that I believe can help reduce the chance of flare ups/ chronicity.

So get a couple of chiropractic adjustments if you can, but know that you can overcome typical back pain through self-rehabilitation as well (see my video on how to treat low back pain).

Many Types of Pain Can Be Self-Cured

Take a second to look at my logo. It looks like a red cross, but it’s actually four converging red arrows that form a figure of a person showing vitality, with arms and legs apart. The four arrows represent four pillars of self-care that my platform, The Pain and Injury Doctor, centers on:

Lifestyle modification (nutrition, mindset, healthy habits)

Using select home therapy equipment

Rehabilitative exercises

Manual therapy

These are four things that people suffering from pain are capable of doing by themselves, and sometimes with the help of a partner (manual therapy). All of the Self Treatment Videos on Pain and Injury Doctor incorporate these four elements of self-care (some are still being produced as of this writing). Isn’t this more interesting than a bottle of Motrin?

Conclusion

I will close with this: research shows that when patients are actively engaged in their healthcare, they tend to experience better health outcomes and it’s not hard to figure out why. By participating in your own health, you have “skin in the game;” i.e. you are invested in your health rather than being passive and wanting health to be “given” to you by a doctor through medicine or treatments. Mindset is what drives behavior, and those who are passive about their health are the ones who pay no attention until it’s too late—they don’t eat healthy; they don’t exercise enough; they voluntarily ingest toxins (junk food, alcohol, and smoking) and engage in health-risky behaviors. For many health conditions, by the time the primary symptom is noticeable, the disease has already set in; for example, onset of bone pain from metastasized cancer; or the first sign of pain and stiffness from knee osteoarthritis.

Being actively engaged and invested in one’s health will pay huge dividends in one’s quality of life, and longevity. So, in order for telemedicine/ telehealth to work for you, you need to have this mindset. You have to “do the work.” I can show you clinically proven self-treatment techniques to treat common neck pain, but they obviously won’t work if you don’t do them, and do them diligently.

Self-care for managing musculoskeletal pain is a natural fit for the telemedicine model of health care, which truly came into its own this year. I’m excited to produce content that can help you defeat pain without visiting a doctor’s office. I’m especially excited if you are one of the millions of people who don’t have health insurance or access to a health professional, and I am able to help improve your quality of life by showing you how to self-manage your pain.

If there is anyone you know who can benefit from this site, please share. Take care.

Dr. P


JAMstack: The What, The Why and The How

JAMstack stands for JavaScript, APIs, and Markup.

JavaScript, often abbreviated as JS, is a programming language that conforms to the ECMAScript specification.

API: An application programming interface (API) is a computing interface exposed by a particular software program, library, operating system or internet service, to allow third parties to use the functionality of that software application.

Markup: A markup language is not a programming language. It's a series of special markings, interspersed with plain text, which if removed or ignored, leave the plain text as a complete whole.

The idea behind it is that you can build highly reactive, SEO-tuned, content-aware web applications with these three technologies (plus HTML and CSS, of course).
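A minimal sketch of how the three pieces can fit together, assuming a hypothetical comments endpoint: the page markup is pre-built and served as-is, and client-side JavaScript calls an API at runtime to fill in the dynamic parts. `loadComments`, `renderComments`, `escapeHtml`, and the example URL are illustrative names, not part of any framework. The fetch function is passed in as a parameter so the logic can also run outside a browser.

```javascript
// Escape untrusted text before inserting it into HTML.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Markup: turn API data into an HTML fragment.
function renderComments(comments) {
  if (comments.length === 0) return "<p>No comments yet.</p>";
  const items = comments
    .map((c) => `<li><strong>${escapeHtml(c.author)}</strong>: ${escapeHtml(c.body)}</li>`)
    .join("");
  return `<ul>${items}</ul>`;
}

// JavaScript + API: fetch dynamic data at runtime. `fetchImpl` defaults to the
// global fetch (browsers, Node 18+) but can be replaced with a stub in tests.
async function loadComments(url, fetchImpl = globalThis.fetch) {
  const response = await fetchImpl(url);
  if (!response.ok) throw new Error(`API request failed: ${response.status}`);
  return renderComments(await response.json());
}

// In the browser, a pre-built page containing <div id="comments"></div> might run:
//   loadComments("https://api.example.com/comments")
//     .then((html) => { document.getElementById("comments").innerHTML = html; });
```

Only the comments are dynamic; everything else on the page can still be served from a CDN as a static file.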

To be fair, a fourth part is also important: a static site generator such as Gatsby.js or Jekyll. It is what unleashes the full power of the JAMstack.

What the heck is JAMstack?

The term JAMstack refers to a modern web development architecture built around JavaScript. Rather than a single technology, it is an ecosystem: a set of tools in its own right.

It's a new way of creating websites and applications that delivers better performance, higher security, lower cost of scaling, and a better developer experience, and it is not about any specific technology. JAMstack sites are not built on the fly, which sets them apart from sites built on legacy stacks such as WordPress, Drupal, and similar LAMP-stack setups, which by definition have to run server-side code every time someone visits.

JAMstack is an attempt to describe two megatrends currently happening in web development as a single category: the revolution in frontend development and the API economy, coming together as a new and better way of creating web projects.

Advantages of JAMstack

It’s FAST

Speed comes first among its advantages. When it comes to minimizing loading time, nothing beats pre-built files served over a CDN. JAMstack websites are fast because their HTML is generated at deploy time and simply served via a CDN, without any backend processing or delays.

It’s EFFICIENT

Since there is no backend server rendering each page, there are no server-side bottlenecks (e.g. no database queries on every request).

It’s CHEAPER

Serving resources through a CDN is far less costly than serving them from a backend server.

It’s more SECURE

The backend is exposed only through an API.

Why use it for your business?

In plain words, it is a better way to develop for the web. For concrete, business-oriented reasons:

You can serve static content (has multiple benefits)

Applications become up to 5x faster

Websites and apps can be made much more secure

JAMstack apps are far less expensive to maintain and develop

Future-proof technologies mean a long life span for your websites and applications (lower costs in the long run)

No tight coupling with heavy back-end frameworks (less technical debt)

Better SEO: faster sites with good PWA optimisation rank better in Google

Developer friendliness (developers are human too!)

Why the JAMstack?

Better Performance

Cheaper, Easier Scaling

Higher Security

Better Developer Experience

A JAMstack application has minimal work to do at runtime because it requires no application servers, which improves performance, reliability, and scalability. Static content is highly cacheable and can easily be distributed through any content delivery network (CDN). This means minimal lock-in to specific vendors.
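Because pre-built files only change when the site is redeployed, they can be cached aggressively. A minimal sketch of one common caching policy, assuming the build step emits content-hashed file names for scripts and stylesheets (e.g. `app-3f2a9c1b.js`): hashed assets are cached for a year and marked immutable, while HTML is always revalidated so a new deploy shows up right away. The `cacheControlFor` helper and the hash pattern are hypothetical; your CDN and generator may use different conventions.

```javascript
// Assumed naming scheme for fingerprinted assets, e.g. "app-3f2a9c1b.js".
const HASHED_ASSET = /[.-][0-9a-f]{8,}\.(js|css)$/i;

// Hypothetical helper: pick a Cache-Control header for a static file path.
function cacheControlFor(path) {
  if (path.endsWith(".html") || path.endsWith("/")) {
    // HTML changes on every deploy: the CDN may store it but must revalidate.
    return "public, max-age=0, must-revalidate";
  }
  if (HASHED_ASSET.test(path)) {
    // The name changes whenever the content changes, so cache for a year.
    return "public, max-age=31536000, immutable";
  }
  // Everything else (unhashed images, robots.txt, ...): a modest default.
  return "public, max-age=3600";
}

for (const p of ["/index.html", "/blog/", "/app-3f2a9c1b.js", "/styles.css", "/favicon.ico"]) {
  console.log(`${p} -> ${cacheControlFor(p)}`);
}
```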

Enterprise solutions can be built using JAMstack with quicker speed-to-market and lower costs, because fewer resources are needed to manage and support the application in production as well as in development. It requires only a small team of developers, and everything can be done with JavaScript and markup. Using only JavaScript and markup is not a prerequisite of JAMstack, but it does make sense as a way to reduce the skill sets needed when delivering for the web.

Pre-rendering with static site generators

We need a tool that is capable of pre-rendering markup. Static site generators are designed for this purpose. There are a few static site generators out there today, most of which are based on popular JavaScript frontend frameworks such as React (Gatsby, Next.js) and Vue (Nuxt.js, Gridsome). There are also a few that are non-JavaScript based, such as Jekyll (Ruby) and Hugo (Go aka Golang).
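Under the hood, pre-rendering simply means running the templating code once at build time instead of on every request. A minimal sketch of that idea in plain Node.js, with no real generator involved: `posts`, `renderPost`, `renderIndex`, `buildSite`, and `writeSite` are hypothetical names, and tools like Gatsby or Hugo layer data sourcing, routing, and asset bundling on top of this basic loop.

```javascript
const fs = require("fs");
const path = require("path");

// Hypothetical content; a real site might read Markdown files or a headless CMS.
// The content is trusted here, so the templates skip HTML escaping.
const posts = [
  { slug: "hello-world", title: "Hello World", body: "First post." },
  { slug: "why-jamstack", title: "Why JAMstack?", body: "Pre-built pages are fast." },
];

// Template: data in, complete HTML document out.
function renderPost(post) {
  return `<!DOCTYPE html>
<html><head><title>${post.title}</title></head>
<body><h1>${post.title}</h1><p>${post.body}</p></body></html>`;
}

function renderIndex(allPosts) {
  const links = allPosts.map((p) => `<li><a href="/${p.slug}/">${p.title}</a></li>`).join("");
  return `<!DOCTYPE html><html><head><title>Blog</title></head><body><ul>${links}</ul></body></html>`;
}

// Build step: render every page once, returning output path -> HTML.
function buildSite(allPosts) {
  const pages = new Map();
  pages.set("index.html", renderIndex(allPosts));
  for (const post of allPosts) {
    pages.set(path.join(post.slug, "index.html"), renderPost(post));
  }
  return pages;
}

// Write the pages into an output folder (e.g. "public") for upload to a CDN.
function writeSite(outDir, pages) {
  for (const [file, html] of pages) {
    const target = path.join(outDir, file);
    fs.mkdirSync(path.dirname(target), { recursive: true });
    fs.writeFileSync(target, html);
  }
}

// Usage: writeSite("public", buildSite(posts));
```

The output folder can then be uploaded to any static host or CDN; nothing in it needs a server to execute.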

Hello World – Using Gatsby

Install the Gatsby CLI. The Gatsby CLI helps you create new sites using Gatsby starters (such as the default starter used below).

# install the Gatsby CLI globally
npm install -g gatsby-cli

Create a Gatsby site. Use the Gatsby CLI to create a new site, specifying the default starter.

# create a new Gatsby site using the default starter
gatsby new hello-world

Start developing. Navigate into your new site’s directory and start it up.

cd hello-world/
gatsby develop

Open the source code and start editing! Your site is now running at http://localhost:8000. Open the hello-world directory in your code editor of choice and edit src/pages/index.js. Save your changes and the browser will update in real time!

Installed App Explained –

/node_modules

/src

.gitignore

.prettierrc

gatsby-browser.js

gatsby-config.js

gatsby-node.js

gatsby-ssr.js

LICENSE

package-lock.json

package.json

README.md

yarn.lock

/node_modules: The directory where all of the modules of code that your project depends on (npm packages) are automatically installed.

/src: This directory will contain all of the code related to what you will see on the front-end of your site (what you see in the browser), like your site header, or a page template. “Src” is a convention for “source code”.

.gitignore: This file tells git which files it should not track / not maintain a version history for.

.prettierrc: This is a configuration file for a tool called Prettier, which is a tool to help keep the formatting of your code consistent.

gatsby-browser.js: This file is where Gatsby expects to find any usage of the Gatsby browser APIs (if any). These allow customization/extension of default Gatsby settings affecting the browser.

gatsby-config.js: This is the main configuration file for a Gatsby site. This is where you can specify information about your site (metadata) like the site title and description, which Gatsby plugins you’d like to include, etc. (Check out the config docs for more detail).

gatsby-node.js: This file is where Gatsby expects to find any usage of the Gatsby node APIs (if any). These allow customization/extension of default Gatsby settings affecting pieces of the site build process.

gatsby-ssr.js: This file is where Gatsby expects to find any usage of the Gatsby server-side rendering APIs (if any). These allow customization of default Gatsby settings affecting server-side rendering.

LICENSE: Gatsby is licensed under the MIT license.

package-lock.json: (See package.json below first.) This is an automatically generated file based on the exact versions of your npm dependencies that were installed for your project. (You won’t change this file directly.)

package.json: A manifest file for Node.js projects, which includes things like metadata (the project’s name, author, etc.). This manifest is how npm knows which packages to install for your project.

README.md: A text file containing useful reference information about your project.

yarn.lock: Yarn is a package manager alternative to npm. You can use either yarn or npm, though all of the Gatsby docs reference npm. This file serves essentially the same purpose as package-lock.json, just for a different package management system.

Final Thoughts

JAMstack, like most great technological trends, is a pretty awesome solution with a crummy name. It’s not an impeccable solution by any stretch, but it empowers front-end developers to build all kinds of sites and applications using their existing skills.

So what are you waiting for? Get out there and build something!


Amazon Managed Blockchain Now Supports AWS CloudFormation

You can also read Get Started Creating a Hyperledger Fabric Blockchain Network Using Amazon Managed Blockchain. Amazon Managed Blockchain now supports AWS CloudFormation for creating and configuring networks, members, and peer nodes. Amazon Web Services, the cloud computing platform from Amazon, today announced the general availability of Amazon Managed Blockchain, a fully managed service that makes it easy to create and manage scalable blockchain networks. Once created, you can easily manage and maintain your blockchain network. Each member is a distinct identity within the network and is visible within the network. With CloudFormation support for Managed Blockchain, you can create new blockchain networks and define network configurations, create a member and join an existing network, and describe member and network details such as voting policies. In addition to making it easy to set up and manage blockchain networks, Amazon Managed Blockchain provides simple APIs that allow customers to vote on memberships in their networks and to scale up or down more easily. Amazon Managed Blockchain offers a range of instances with different combinations of compute and memory capacity, giving customers the ability to choose the right mix of resources for their blockchain applications. Amazon Managed Blockchain gives businesses the opportunity to remove the heavy lifting usually required in infrastructure setup. “MOBI hopes to build a global network of cities, infrastructure providers, consumers, and producers of mobility services in order to realize the many potential benefits of blockchain technology.” You can manage certificates, invite new members, and scale out peer node capacity in order to process transactions more quickly.

The Starter Edition is designed for test networks and small production networks, with a maximum of 5 members per network and 2 peer nodes per member. The Standard Edition is designed for scalable production use, with up to 14 members per network and 3 peer nodes per member (check out the Amazon Managed Blockchain Pricing to learn more about both editions). I'm happy to announce that the preview is complete and that Amazon Managed Blockchain is now available for production use in the US East (N. To learn how to do that, read Build and deploy an application for Hyperledger Fabric on Amazon Managed Blockchain. Amazon Managed Blockchain takes care of the rest, creating a blockchain network that can span multiple AWS accounts and configuring the software, security, and network settings. My network enters the Creating status, and I take a quick break to walk my dog! I can create my own scalable blockchain network from the AWS Management Console, the AWS Command Line Interface (CLI) (aws managedblockchain create-network), or the API (CreateNetwork). “At Accenture, blockchain is driving business transformation in virtually every industry, from aerospace to not-for-profits,” said Prasad Sankaran, Senior Managing Director of Accenture’s Intelligent Cloud & Infrastructure business group. “Ever since overcoming the physical limitations of open-outcry trading pits, technology has been fundamental to the transformation of critical financial-market infrastructures,” said Andrew Koay, Head of Blockchain Technology, SGX. We announced Amazon Managed Blockchain at AWS re:Invent 2018 and invited you to join a preview. Amazon Managed Blockchain takes care of provisioning nodes, setting up the network, managing certificates and security, and scaling the network.


SEO & Progressive Web Apps: Looking to the Future - Moz

Practitioners of SEO have always been mistrustful of JavaScript.

This is partly based on experience; the ability of search engines to discover, crawl, and accurately index content which is heavily reliant on JavaScript has historically been poor. But it’s also habitual, born of a general wariness towards JavaScript in all its forms that isn’t based on understanding or experience. This manifests itself as dependence on traditional SEO techniques that have not been relevant for years, and a conviction that being good at technical SEO does not require an understanding of modern web development.

As Mike King wrote in his post The Technical SEO Renaissance, these attitudes are contributing to “an ever-growing technical knowledge gap within SEO as a marketing field, making it difficult for many SEOs to solve our new problems”. They also put SEO practitioners at risk of being left behind, since too many of us refuse to explore – let alone embrace – technologies such as Progressive Web Apps (PWAs), modern JavaScript frameworks, and other such advancements which are increasingly being seen as the future of the web.

In this article, I’ll be taking a fresh look at PWAs. As well as exploring implications for both SEO and usability, I’ll be showcasing some modern frameworks and build tools which you may not have heard of, and suggesting ways in which we need to adapt if we’re to put ourselves at the technological forefront of the web.

1. Recap: PWAs, SPAs, and service workers

Progressive Web Apps are essentially websites which provide a user experience akin to that of a native app. Features like push notifications enable easy re-engagement with your audience, while users can add their favorite sites to their home screen without the complication of app stores. PWAs can continue to function offline or on low-quality networks, and they allow a top-level, full-screen experience on mobile devices which is closer to that offered by native iOS and Android apps.

Best of all, PWAs do this while retaining, and even enhancing, the fundamentally open and accessible nature of the web. As the name suggests, they are progressive and responsive, designed to function for every user regardless of their choice of browser or device. They can also be kept up-to-date automatically and, as we shall see, are discoverable and linkable like traditional websites. Finally, it’s not all or nothing: existing websites can deploy a limited subset of these technologies (using a simple service worker) and start reaping the benefits immediately.
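A minimal sketch of that first step: the page feature-detects service worker support and registers a worker script. The '/sw.js' path is a hypothetical name for your worker file. Where service workers aren't supported, the function returns null and the page carries on as an ordinary website, which is the "progressive" part.

```javascript
// Register a service worker from the page's own script.
// '/sw.js' is a hypothetical path to the worker file.
function registerServiceWorker(scriptUrl = '/sw.js') {
  // Feature detection: unsupported browsers (or runtimes) are unaffected.
  if (typeof navigator === 'undefined' || !('serviceWorker' in navigator)) {
    return null;
  }
  return navigator.serviceWorker
    .register(scriptUrl)
    .then((registration) => {
      console.log('Service worker registered with scope:', registration.scope);
      return registration;
    })
    .catch((error) => {
      console.error('Service worker registration failed:', error);
      return null;
    });
}

registerServiceWorker();
```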

The spec is still fairly young, and naturally, there are areas which need work, but that doesn’t stop them from being one of the biggest advancements in the capabilities of the web in a decade. Adoption of PWAs is growing rapidly, and organizations are discovering the myriad of real-world business goals they can impact.

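You can read more about the features and requirements of PWAs over on Google Developers, but two of the key technologies which make PWAs possible are the app shell architecture, typically built as a single page app (SPA) in which logic and content are separated, and service workers: scripts that the browser runs in the background, separately from the page, which can intercept network requests and enable features such as offline support and push notifications.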

Note that these technologies are not mutually exclusive; the single page app model (brought to maturity with AngularJS in 2010) obviously predates service workers and PWAs by some time. As we shall see, it’s also entirely possible to create a PWA which isn’t built as a single page app. For the purposes of this article, however, we’re going to be focusing on the ‘typical’ approach to developing modern PWAs, exploring the SEO implications (and opportunities) faced by teams that choose to join the rapidly-growing number of organizations that make use of the two technologies described above.

We’ll start with the app shell architecture and the rendering implications of the single page app model.

2. The app shell architecture

In a nutshell, the app shell architecture involves aggressively caching static assets (the bare minimum of UI and functionality) and then loading the actual content dynamically, using JavaScript. Most modern JavaScript SPA frameworks encourage something resembling this approach, and the separation of logic and content in this way benefits both speed and usability. Interactions feel instantaneous, much like those on a native app, and data usage can be highly economical.
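To make the app shell idea concrete, here is a minimal service worker sketch that precaches a few shell files at install time and serves them cache-first, while everything else (such as content requests) goes to the network as usual. CACHE_NAME and SHELL_ASSETS are hypothetical, and the cache-first function takes the cache and fetch function as parameters purely so it can be exercised outside a browser.

```javascript
// sw.js: a minimal app shell service worker sketch.
// CACHE_NAME and SHELL_ASSETS are hypothetical; list your own shell files.
const CACHE_NAME = 'app-shell-v1';
const SHELL_ASSETS = ['/', '/app.js', '/styles.css'];

// Cache-first: return the cached copy if present, otherwise fetch it from
// the network and store a copy for next time.
async function cacheFirst(request, cache, fetchFn) {
  const cached = await cache.match(request);
  if (cached) {
    return cached;
  }
  const response = await fetchFn(request);
  // A response body can only be read once, so cache a clone.
  await cache.put(request, response.clone());
  return response;
}

// Only wire up the events when running inside a service worker.
if (typeof self !== 'undefined' && typeof self.skipWaiting === 'function') {
  self.addEventListener('install', (event) => {
    // Precache the shell before the worker counts as installed.
    event.waitUntil(
      caches.open(CACHE_NAME).then((cache) => cache.addAll(SHELL_ASSETS))
    );
  });

  self.addEventListener('fetch', (event) => {
    const url = new URL(event.request.url);
    const isShell =
      event.request.method === 'GET' &&
      url.origin === self.location.origin &&
      SHELL_ASSETS.includes(url.pathname);
    // Non-shell requests are not intercepted, so they hit the network normally.
    if (isShell) {
      event.respondWith(
        caches.open(CACHE_NAME).then((cache) => cacheFirst(event.request, cache, fetch))
      );
    }
  });
}
```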

Credit to https://developers.google.com/web/fundamentals/architecture/app-shell

As I alluded to in the introduction, a heavy reliance on client-side JavaScript is a problem for SEO. Historically, many of these issues centered around the fact that while search crawlers require unique URLs to discover and index content, single page apps don’t need to change the URL for each state of the application or website (hence the phrase ‘single page’). The reliance on fragment identifiers (which aren’t sent as part of an HTTP request) to dynamically manipulate content without reloading the page was a major headache for SEO. Legacy solutions involved replacing the hash with a so-called hashbang (#!) and the _escaped_fragment_ parameter, a hack which has long since been deprecated and which we won’t be exploring today.
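The fragment problem is easy to see with the WHATWG URL class built into browsers and Node. In this sketch (the example.com URLs are hypothetical), requestedPath returns the part of a URL that actually appears in the HTTP request line: for a hashbang URL every "page" collapses to the same request for /.

```javascript
// What a server or crawler actually requests for a given URL:
// the path plus query string. The fragment (#...) never leaves the browser.
function requestedPath(address) {
  const url = new URL(address);
  return url.pathname + url.search;
}

const hashUrl = 'https://example.com/#!/products/42';
const historyUrl = 'https://example.com/products/42';

console.log(requestedPath(hashUrl));    // "/"
console.log(new URL(hashUrl).hash);     // "#!/products/42"
console.log(requestedPath(historyUrl)); // "/products/42"
```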

Thanks to the HTML5 history API and pushState method, we now have a better solution. The browser’s URL bar can be changed using JavaScript without reloading the page, thereby keeping it in sync with the state of your application or site and allowing the user to make effective use of the browser’s ‘back’ button. While this solution isn’t a magic bullet — your server must be configured to respond to requests for these deep URLs by loading the app in its correct initial state — it does provide us with the tools to solve the problem of URLs in SPAs.
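A minimal sketch of both halves of that solution, under stated assumptions: the client router uses history.pushState and the popstate event, with a hypothetical render function that draws the view for a path; the server half is reduced to a hypothetical fileForRequest helper that returns the app itself for any deep URL that isn't a static asset.

```javascript
// Client side: a tiny router built on the History API.
// `render` is hypothetical; historyApi and locationApi would be
// window.history and window.location in a browser.
function createRouter(historyApi, locationApi, render) {
  // Change the URL bar without reloading the page, then draw the new state.
  function navigate(path) {
    historyApi.pushState({ path }, '', path);
    render(path);
  }

  // Back/forward fire 'popstate' after the browser has already updated
  // location, so re-render whatever URL is now current.
  function onPopState() {
    render(locationApi.pathname);
  }

  return { navigate, onPopState };
}

// In a browser, roughly:
//   const router = createRouter(window.history, window.location, render);
//   window.addEventListener('popstate', router.onPopState);

// Server side: a deep URL such as /products/42 must return the app, which
// then reads location.pathname on startup to pick its initial view.
// The asset list is hypothetical.
const STATIC_ASSETS = new Set(['/app.js', '/styles.css']);

function fileForRequest(pathname) {
  return STATIC_ASSETS.has(pathname) ? pathname : '/index.html';
}
```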

The bigger problem facing SEO today is actually much easier to understand: rendering content, namely when and how it gets done.

Rendering content

Note that when I refer to rendering here, I’m referring to the process of constructing the HTML. We’re focusing on how the actual content gets to the browser, not the process of drawing pixels to the screen.

In the early days of the web, things were simpler on this front. The server would typically return all the HTML that was necessary to render a page. Nowadays, however, many sites which utilize a single page app framework deliver only minimal HTML from the server and delegate the heavy lifting to the client (be that a user or a bot). Given the scale of the web, this requires a lot of time and computational resources, and as Google made clear at its I/O conference in 2018, this poses a major problem for search engines:
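The difference is easiest to see by comparing the initial HTML each approach sends. In this sketch, both responses and the product data are hypothetical, and the interpolated values are not HTML-escaped, which real code must do. A crawler that doesn't execute JavaScript only ever sees the first response's empty div.

```javascript
// Hypothetical data for one product page.
// (Real code must HTML-escape any data it interpolates.)
const product = { name: 'Blue Widget', description: 'A very blue widget.' };

// Client-side rendering: the server sends an empty shell, and the content
// only appears once a browser (or a rendering crawler) runs /app.js.
function clientRenderedResponse() {
  return '<!doctype html><html><head><title>Shop</title></head>' +
    '<body><div id="root"></div><script src="/app.js"></script></body></html>';
}

// Server-side rendering: the content is already in the returned HTML.
function serverRenderedResponse(item) {
  return `<!doctype html><html><head><title>${item.name}</title></head>` +
    `<body><div id="root"><h1>${item.name}</h1><p>${item.description}</p></div>` +
    '<script src="/app.js"></script></body></html>';
}

console.log(clientRenderedResponse().includes(product.name));        // false
console.log(serverRenderedResponse(product).includes(product.name)); // true
```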

“The rendering of JavaScript-powered websites in Google Search is deferred until Googlebot has resources available to process that content.”

On larger sites, this second wave of indexation can sometimes be delayed for several days. On top of this, you are likely to encounter a myriad of problems with crucial information like canonical tags and metadata being missed completely. I would highly recommend watching the video of Google’s excellent talk on this subject for a rundown of some of the challenges faced by modern search crawlers.

Google is one of the very few search engines that renders JavaScript at all. What’s more, it does so using a web rendering service that until very recently was based on Chrome 41 (released in 2015). Obviously, this has implications outside of just single page apps, and the wider subject of JavaScript SEO is a fascinating area right now. Rachel Costello’s recent white paper on JavaScript SEO is the best resource I’ve read on the subject, and it includes contributions from other experts like Bartosz Góralewicz, Alexis Sanders, Addy Osmani, and a great many more.

For the purposes of this article, the key takeaway here is that in 2019 you cannot rely on search engines to accurately crawl and render your JavaScript-dependent web app. If your content is rendered client-side, it will be resource-intensive for Google to crawl, and your site will underperform in search. No matter what you’ve heard to the contrary, if organic search is a valuable channel for your website, you need to make provisions for server-side rendering.

But server-side rendering is a concept which is frequently misunderstood…

“Implement server-side rendering”

This is a common SEO audit recommendation which I often hear thrown around as if it were a self-contained, easily-actioned solution. At best it’s an oversimplification of an enormous technical undertaking, and at worst it’s a misunderstanding of what’s possible/necessary/beneficial for the website in question. Server-side rendering is an outcome of many possible setups and can be achieved in many different ways; ultimately, though, we’re concerned with getting our server to return static HTML.
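As one small illustration of that outcome, and not a recommendation of any particular setup, here is a sketch using Node's built-in http module that returns complete HTML per URL, including the title, meta description, and canonical tag, so nothing crawl-critical depends on client-side JavaScript. The route table, domain, and page data are hypothetical; frameworks with built-in server rendering or prerendering reach the same result in other ways.

```javascript
const http = require('http');

// Hypothetical site origin and route table.
const SITE = 'https://www.example.com';
const PAGES = {
  '/': { title: 'Home', description: 'Welcome to the shop.', body: '<h1>Home</h1>' },
  '/products/42': { title: 'Blue Widget', description: 'A very blue widget.', body: '<h1>Blue Widget</h1>' },
};

// Build the full document on the server, metadata included.
function renderPage(pathname, page) {
  return [
    '<!doctype html>',
    '<html><head>',
    `<title>${page.title}</title>`,
    `<meta name="description" content="${page.description}">`,
    // Canonical uses the bare path, so query strings like ?ref= are dropped.
    `<link rel="canonical" href="${SITE}${pathname}">`,
    '</head><body>',
    `<div id="root">${page.body}</div>`,
    '<script src="/app.js"></script>', // the client app can take over afterwards
    '</body></html>',
  ].join('\n');
}

function handleRequest(req, res) {
  const { pathname } = new URL(req.url, SITE);
  const page = PAGES[pathname];
  if (!page) {
    res.writeHead(404, { 'Content-Type': 'text/plain; charset=utf-8' });
    res.end('Not found');
    return;
  }
  res.writeHead(200, { 'Content-Type': 'text/html; charset=utf-8' });
  res.end(renderPage(pathname, page));
}

// Call startServer() to listen locally; the port is arbitrary.
function startServer(port = 3000) {
  return http.createServer(handleRequest).listen(port);
}
```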

So, what are our options? Let’s break down the concept of server-side rendered content a little. These are the high-level approaches which Google outlined at the aforementioned I/O conference: