#aws fargate introduction

Explore tagged Tumblr posts

Text

Deploying ColdFusion Microservices Using AWS Fargate and ECS

#Deploying ColdFusion Microservices Using AWS Fargate and ECS#Deploying ColdFusion Microservices Using AWS Fargate#Deploying ColdFusion Microservices Using AWS ECS

0 notes


Deploying Containers on AWS ECS with Fargate

Introduction

Amazon Elastic Container Service (ECS) with AWS Fargate enables developers to deploy and manage containers without managing the underlying infrastructure. Fargate eliminates the need to provision or scale EC2 instances, providing a serverless approach to containerized applications.

This guide walks through deploying a containerized application on AWS ECS with Fargate using AWS CLI, Terraform, or the AWS Management Console.

1. Understanding AWS ECS and Fargate

✅ What is AWS ECS?

Amazon ECS (Elastic Container Service) is a fully managed container orchestration service that allows running Docker containers on AWS.

✅ What is AWS Fargate?

AWS Fargate is a serverless compute engine for ECS that removes the need to manage EC2 instances, providing:

Automatic scaling

Per-second billing

Enhanced security (isolation at the task level)

Reduced operational overhead

✅ Why Choose ECS with Fargate?

✔ No need to manage EC2 instances

✔ Pay only for the resources your containers consume

✔ Simplified networking and security

✔ Seamless integration with AWS services (CloudWatch, IAM, ALB)

2. Prerequisites

Before deploying, ensure you have:

AWS Account with permissions for ECS, Fargate, IAM, and VPC

AWS CLI installed and configured

Docker installed to build container images

An existing ECR (Elastic Container Registry) repository

3. Steps to Deploy Containers on AWS ECS with Fargate

Step 1: Create a Dockerized Application

First, create a simple Dockerfile for a Node.js or Python application.

Example: Node.js Dockerfile

    FROM node:16-alpine
    WORKDIR /app
    COPY package.json .
    RUN npm install
    COPY . .
    EXPOSE 3000
    CMD ["node", "server.js"]

Build and push the image to AWS ECR:

    # Create the repository (skip this if it already exists)
    aws ecr create-repository --repository-name my-app

    # Build the image and tag it with the repository URI
    docker build -t my-app .
    docker tag my-app:latest <AWS_ACCOUNT_ID>.dkr.ecr.<REGION>.amazonaws.com/my-app:latest

    # Authenticate Docker against ECR, then push
    aws ecr get-login-password --region <REGION> | docker login --username AWS --password-stdin <AWS_ACCOUNT_ID>.dkr.ecr.<REGION>.amazonaws.com
    docker push <AWS_ACCOUNT_ID>.dkr.ecr.<REGION>.amazonaws.com/my-app:latest

Step 2: Create an ECS Cluster

Use the AWS CLI to create a cluster:

    aws ecs create-cluster --cluster-name my-cluster

Or use Terraform:

    resource "aws_ecs_cluster" "my_cluster" {
      name = "my-cluster"
    }

Step 3: Define a Task Definition for Fargate

The task definition specifies how the container runs.

Create a task-definition.json file:

    {
      "family": "my-task",
      "networkMode": "awsvpc",
      "executionRoleArn": "arn:aws:iam::<AWS_ACCOUNT_ID>:role/ecsTaskExecutionRole",
      "cpu": "512",
      "memory": "1024",
      "requiresCompatibilities": ["FARGATE"],
      "containerDefinitions": [
        {
          "name": "my-container",
          "image": "<AWS_ACCOUNT_ID>.dkr.ecr.<REGION>.amazonaws.com/my-app:latest",
          "portMappings": [{"containerPort": 3000, "hostPort": 3000}],
          "essential": true
        }
      ]
    }

Register the task definition:

    aws ecs register-task-definition --cli-input-json file://task-definition.json
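Registration fails if the task-level cpu and memory values are not a pairing that Fargate supports, so it can help to check the file first. The following is a minimal sketch, not an official validator: the function name `check_task_definition` is made up for illustration, and the table only covers the commonly documented sizes up to 4 vCPU (larger sizes exist).

```python
# Fargate accepts only certain CPU/memory pairings (CPU units -> memory in MiB).
# Assumption: this table lists the common sizes up to 4 vCPU only.
VALID_MEMORY_MIB = {
    256: [512, 1024, 2048],
    512: list(range(1024, 4096 + 1, 1024)),
    1024: list(range(2048, 8192 + 1, 1024)),
    2048: list(range(4096, 16384 + 1, 1024)),
    4096: list(range(8192, 30720 + 1, 1024)),
}


def check_task_definition(task_def: dict) -> list[str]:
    """Return a list of problems found; an empty list means the checks passed."""
    problems = []
    if "FARGATE" in task_def.get("requiresCompatibilities", []):
        if task_def.get("networkMode") != "awsvpc":
            problems.append("Fargate tasks must use networkMode 'awsvpc'")
        cpu = int(task_def.get("cpu", 0))
        memory = int(task_def.get("memory", 0))
        if cpu not in VALID_MEMORY_MIB:
            problems.append(f"cpu {cpu} is not in the table of known sizes")
        elif memory not in VALID_MEMORY_MIB[cpu]:
            problems.append(f"memory {memory} is not valid for cpu {cpu}")
    # With awsvpc networking, hostPort must match containerPort (or be omitted).
    for container in task_def.get("containerDefinitions", []):
        for mapping in container.get("portMappings", []):
            host_port = mapping.get("hostPort", mapping["containerPort"])
            if host_port != mapping["containerPort"]:
                problems.append(
                    f"{container.get('name')}: hostPort must equal containerPort"
                )
    return problems
```

In practice the dictionary would come from `json.load()` on task-definition.json; an empty result only means these particular checks passed, not that ECS will accept the definition.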

Step 4: Create an ECS Service

Use AWS CLI:

    aws ecs create-service \
      --cluster my-cluster \
      --service-name my-service \
      --task-definition my-task \
      --desired-count 1 \
      --launch-type FARGATE \
      --network-configuration "awsvpcConfiguration={subnets=[subnet-xyz],securityGroups=[sg-xyz],assignPublicIp=\"ENABLED\"}"

Or Terraform:

    resource "aws_ecs_service" "my_service" {
      name            = "my-service"
      cluster         = aws_ecs_cluster.my_cluster.id
      task_definition = aws_ecs_task_definition.my_task.arn
      desired_count   = 1
      launch_type     = "FARGATE"

      network_configuration {
        subnets          = ["subnet-xyz"]
        security_groups  = ["sg-xyz"]
        assign_public_ip = true
      }
    }

Step 5: Configure a Load Balancer (Optional)

If the service needs to receive traffic from the internet, put an Application Load Balancer (ALB) in front of it.

Create an ALB in your VPC.

Register the ECS service with the ALB's target group.

Configure a listener rule for routing traffic.

4. Monitoring & Scaling

🔹 Monitor ECS Service

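Use AWS CloudWatch to monitor logs and performance. For example, list the available log groups:

    aws logs describe-log-groups

Container logs appear in CloudWatch Logs only if the task definition configures the awslogs log driver, which the example task definition above does not.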

🔹 Auto Scaling ECS Tasks

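Register the service as a scalable target, which sets the minimum and maximum task counts:

    aws application-autoscaling register-scalable-target \
      --service-namespace ecs \
      --scalable-dimension ecs:service:DesiredCount \
      --resource-id service/my-cluster/my-service \
      --min-capacity 1 \
      --max-capacity 5

Then attach a scaling policy with aws application-autoscaling put-scaling-policy; without a policy, the task count never changes.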
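As a rough illustration of what a target-tracking policy does with those bounds, the sketch below scales the task count in proportion to how far a metric is from its target, then clamps it to the min/max capacity. This is a simplified model, not the service's actual algorithm (which also involves alarms and cooldown periods); the function name and the 60% target in the usage note are assumptions.

```python
import math


def desired_task_count(current_tasks, metric_value, target_value,
                       min_capacity=1, max_capacity=5):
    """Approximate the task count a target-tracking policy would aim for.

    metric_value and target_value use the same unit, e.g. average CPU percent.
    """
    if target_value <= 0:
        raise ValueError("target_value must be positive")
    # Scale proportionally: twice the target load needs about twice the tasks.
    raw = math.ceil(current_tasks * metric_value / target_value)
    # Never go outside the bounds registered on the scalable target.
    return max(min_capacity, min(max_capacity, raw))
```

For example, two tasks averaging 90% CPU against a 60% target would grow to three, while a result above five is capped at the registered maximum.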

5. Cleaning Up Resources

After testing, clean up resources to avoid unnecessary charges.

    aws ecs delete-service --cluster my-cluster --service my-service --force
    aws ecs delete-cluster --cluster my-cluster
    aws ecr delete-repository --repository-name my-app --force

Conclusion

AWS ECS with Fargate simplifies container deployment by eliminating the need to manage servers. By following this guide, you can deploy scalable, cost-efficient, and secure applications using serverless containers.

WEBSITE: https://www.ficusoft.in/aws-training-in-chennai/


Deploying a Microservices Architecture with AWS ECS and Fargate

Introduction Deploying a Microservices Architecture with AWS ECS and Fargate is a crucial step in modernizing your application architecture. This tutorial will guide you through the process of deploying a microservices architecture using Amazon Elastic Container Service (ECS) and Amazon Fargate. By the end of this tutorial, you will have a comprehensive understanding of how to deploy a…


AWS Live | AWS Fargate Tutorial | AWS Tutorial | AWS Certification Training | #Edureka #Fargate #DataScience #Datalytical #AWS 🔥AWS Training: This Edureka tutorial on AWS Fargate will help you understand how to run containers on Amazon ECS without having to configure & manage underlying virtual machines.

#aws architect training#aws certification training#aws ecs fargate tutorial#aws fargate ci#aws fargate ci cd#aws fargate cli#aws fargate deep dive#aws fargate demo#aws fargate deployment#aws fargate docker#aws fargate example#aws fargate introduction#aws fargate jenkins#aws fargate lambda#aws fargate load balancer#aws fargate overview#aws fargate vs ecs#aws fragate#aws fragate tutorial#aws training#aws tutorial#edureka#yt:cc=on


AWS Reserved Instances and Savings Plans: Challenges and Solutions

When Amazon Web Services got started way back in 2006, EC2 On-Demand pricing was an immediate success with developers. They loved the ease of spinning up a wide variety of AWS EC2 instance types whenever they needed them, all for a reasonable price, and then terminating them when done. This was a great improvement on waiting and waiting for IT to order and configure new machines or, more critically, being unable to run tests at all because the machines were far too expensive for short-term projects.

In this article, we will discuss how the new-found cloud freedoms quickly spawned a whole host of challenges, what AWS did to address these issues, and how the current overabundance of options has, in turn, created a unique set of challenges and potential solutions.

To dive deeper into these challenges and how to solve them, join Spotinst VP of Cloud Services, Patrick Gartlan, and Cloud Academy’s AWS Content & Security Lead, Stuart Scott on February 26 for AWS Cost Savings: Ending Decision Paralysis When Trying to Optimize Spend with Reserved Instances and Savings Plans.


Reserved Instances to the rescue for runaway EC2 costs

While EC2 On-Demand adoption was massive, the cost of running instances On-Demand for long periods — whether intentionally or by accident — was also quite massive, and most organizations were surprised by how quickly their cloud bill became a very significant part of their IT spend.

To address the intentional part of the rising costs, specifically steady-state, long-term usage, AWS introduced Reserved Instances (RIs) in 2009. Customers could make an upfront payment for a 1- or 3-year commitment and, in exchange, receive a discount of roughly 70% off On-Demand pricing.

Reserved Instances Characteristics

Challenge: Reserved Instance lock-in blocks adoption

As RIs initially were quite rigid in their pricing structure, customers with dynamic environments were very hesitant to use them, with the legitimate concern that they would get locked-in to a pricing structure that offered no flexibility for change.

Some customers who bought RIs and then had unexpected changes in their workload requirements would get stuck with a sunk investment, and even a negative return on investment (ROI). As a result, many AWS customers who could have benefited from RIs stayed away.

AWS continuously increases reserved flexibility

To remedy this issue with RIs, over the years AWS introduced various services and new pricing structures that allowed for more flexibility for more potential customers:

In 2012 the Reserved Instance Marketplace was opened as a secondary market where one could buy RIs from, and sell RIs to, other AWS customers. This potentially mitigates the risk of getting stuck with an unused RI.

In 2014 AWS allowed RI users to manually modify unused RIs and re-apply them within the same instance family, based on their normalization factors (this was done either by combining two RIs into a larger one, or breaking an RI down into two or more smaller ones).

In 2016 AWS introduced:

Convertible RIs which allow users to manually modify RIs across different family types, OS, and sizes.

Regional RIs, which automatically apply unused RIs to any other running EC2 instances within the same family (for Linux) or of the exact same instance size (for all other OSs), with the added flexibility of applying in any availability zone within that region.

In 2017 Instance Size Flexibility was introduced to allow for automatic application of an unused RI to other EC2 instances within the same family that match up, based on the normalization factor.

In 2019 AWS introduced Savings Plans (“EC2 Instance Savings Plans” and “Compute Savings Plans”), where customers commit to spend a set amount per hour, e.g. $35/hour, for either 1 or 3 years. In this example, usage up to the $35 commitment is charged at Savings Plans rates (savings of up to 72% for EC2 Instance Savings Plans and up to 66% for Compute Savings Plans). Any usage above the commitment is charged at On-Demand rates.
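The commitment mechanics can be worked through with a little arithmetic. The sketch below is a simplified model under stated assumptions: a single flat discount applies to all eligible usage (real plans have per-instance rates), and the 50% discount in the usage note is an illustrative number, not an AWS price.

```python
def hourly_charge(on_demand_usage: float, commitment: float, discount: float) -> float:
    """Charge for one hour under a Savings Plan.

    on_demand_usage: what the hour's eligible usage would cost at On-Demand rates.
    commitment: committed spend per hour, billed whether or not it is fully used.
    discount: fractional saving of plan rates versus On-Demand (0.5 means 50%).
    """
    if not 0 <= discount < 1:
        raise ValueError("discount must be in [0, 1)")
    # A commitment of C dollars at plan rates covers C / (1 - discount)
    # dollars' worth of usage measured at On-Demand rates.
    covered_on_demand = commitment / (1 - discount)
    # Anything beyond the covered amount is billed at On-Demand rates.
    overflow = max(0.0, on_demand_usage - covered_on_demand)
    return commitment + overflow
```

With a $35/hour commitment and an assumed 50% discount, the plan covers $70 of On-Demand-equivalent usage: an hour with $100 of usage costs $65, and a quiet hour with $40 of usage still costs the full $35.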

Reserved Instances vs. Savings Plans

With the introduction of AWS Savings Plans, the obvious question is whether RIs will remain relevant. For the time being there are sufficient differences between the two pricing options, creating pros and cons for AWS customers depending on their specific use case.

So now in early 2020, when planning your cloud finances, here are some points to keep in mind:

Savings Plans can only be applied to EC2 and Fargate, while Reserved Instances have broader applications for EC2, RDS, Redshift, and ElastiCache.

Convertible Reserved Instances allow for the increase of commitment (e.g., add additional reservations to cover more EC2 Instances) during the contracted term, without the need to increase the term. This is especially helpful when needs change and committing to a new 1- to 3-year term doesn’t make sense. With Savings Plans, any addition to the original contract is done with a new contract that starts from day 0.

EC2 Instance Savings Plans will apply usage across any given instance family, regardless of OS or tenancy. Standard Reserved Instances can also apply to usage across any given instance type family, but require the instances to be Linux and default tenancy.

Standard Reserved Instances can be bought and sold on the Reserved Instance Marketplace, allowing for greater flexibility, while Savings Plans cannot, requiring you to keep your committed spend at the level you defined.

Convertible Reserved Instances are scoped to a specific instance type, OS, tenancy, and region, while Compute Savings Plans will apply across all of your usage types in multiple regions.

Solution: The paradox of choice

One might think that all these different AWS pricing options would guarantee fully optimized cloud spend. But all too often, having so many choices can lead to “decision paralysis,” especially when handling large, complex deployments that require rigorous capacity planning, and cost-benefit analysis by DevOps and Finance teams.

In our experience enterprises invest a huge amount of human resources for a few weeks every quarter or two, just for RIs and Savings Plans planning and procurement.

First, various project or application teams need to give an estimate of how much compute resources they require for the upcoming period. This then needs review by the TechOps or DevOps teams to confirm that the planned project(s) indeed requires the requested compute power. Finally, the finance team needs to review the specific flavors of RIs or Savings Plans being suggested and ensure that the recommended reserved capacity will truly deliver a positive ROI.

Much of this is often done with cumbersome spreadsheets, tediously reviewing historical cloud usage and performing complex projections of expected compute capacity needs.

Fortunately, Spotinst Eco has an incredible track-record helping companies completely streamline the process of identifying when to buy AWS reserved capacity, and what type as well. Not stopping there, Spotinst Eco fully handles ongoing management of the reserved capacity, ensuring maximum utilization and ROI with the minimum amount of financial lock-in possible. Check out a few snapshots of the analysis dashboard using Spotinst Eco.

Source: https://cloudacademy.com/blog/aws-reserved-instances-and-savings-plans-challenges-and-solutions/


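An Introduction to Actcast's Architecture

Preface

In 2020, Actcast, the IoT platform service we provide, reached its official release. There is still plenty of room for improvement, but this post introduces the AWS architecture that currently supports Actcast.

Reference

Official release of the edge computing platform Actcast - PR TIMES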

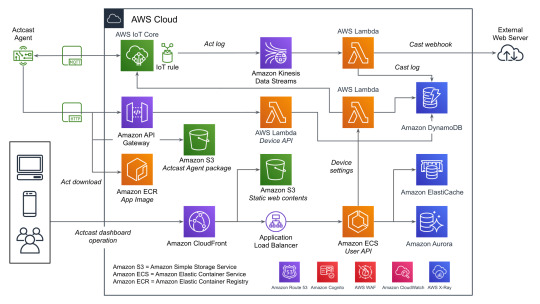

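Overview

There are three main components that exchange data. Keep them in mind when reading the diagrams below.

User API: Used by the web dashboard to manage groups, devices, and so on.

Device API: Used by edge devices to fetch device settings, credentials, and so on.

AWS IoT Core: Used over MQTT to send notifications to devices and to send data from devices.

The following diagram does not show everything; it focuses on the typical flow of data. (WAF, Cognito, and some other services appear only as icons for reasons of space.)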

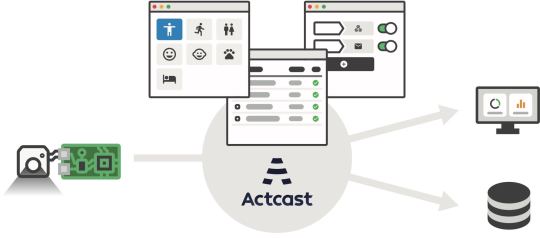

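We first quote the documentation on the Actcast-specific terms Act and Cast, then explain the whole picture.

What is an Act?

Software that runs on a device and makes it behave in various ways is called an Act. An Act is an application provided on Actcast combined with the settings of your choice.

Note: In the diagram above, applications are labeled App.

What is a Cast?

A Cast connects data arriving from an Act to the internet. A Cast consists of a trigger part, which specifies when to connect to the internet, and an action part, which specifies how to connect.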

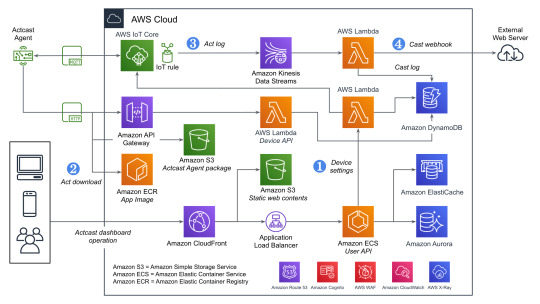

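Data flow in Actcast

Focusing on user operations and the flow of data on the edge device, the sequence is as follows.

1. An Actcast user installs Acts and configures Casts through the dashboard

2. The Actcast Agent running on the edge device launches the application according to the settings (Act)

3. The Act generates data as needed

4. The generated data is sent to an external system according to the Cast settings (webhook)

The following diagram is the earlier one annotated with the numbers above.

Each step is explained below with reference to the actual AWS resources.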

1. Device Settings

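The parts that are the same as in a typical web application are summarized briefly as a list.

Load balancing uses an ALB, fronted by CloudFront, AWS WAF, and so on

The application runs on ECS with Fargate (User API)

Data is persisted primarily in Amazon Aurora (PostgreSQL)

Caching uses ElastiCache (Redis)

Some data is read by edge devices, so the workload is hard to predict and scalability matters; that data is stored in DynamoDB. ECS tasks do not access DynamoDB directly so that DynamoDB access permissions stay isolated on the Lambda side. Originally there was a separate Lambda function for each DynamoDB access pattern, but for various reasons they were recently consolidated.

When a user performs an operation in the dashboard, the setting is saved to DynamoDB and, at the same time, a notification is sent to the Actcast Agent over AWS IoT's MQTT. Prompted by this notification, the Agent itself fetches the settings through the Device API. The Device API authenticates requests using AWS IoT device certificates.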

2. Act download

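After fetching its settings from DynamoDB, the Actcast Agent pulls the application image to run from ECR. (The ECR credentials are obtained from the Device API.)

It then runs the image as an Act according to the settings. Settings vary by application; typically they specify the conditions under which the Act log described below is generated (for example, using the confidence of an inference result).

3. Act log

Depending on conditions, an Act may generate data (an Act log). For example, an Act that estimates age and gender generates data like the following from the camera image.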

{ "age": 29.397336210511124, "style": "Male", "timestamp": 1579154844.8495722 }

The generated data passes through AWS IoT and is first appended to a Kinesis shard. Putting Kinesis in between makes data loss less likely even when the load on DynamoDB rises sharply.

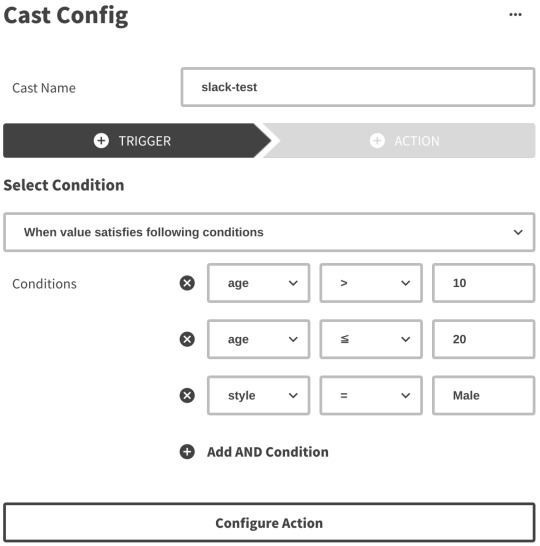

4. Cast webhook

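A Lambda consumer processes the data appended to the Kinesis shard. At this point it uses the Cast settings (Trigger and Action) to decide whether to send a webhook and where to send it.

A Trigger can be configured so that the Action runs only when certain conditions are met.

An Action lets you set the webhook URL, the body of the HTTP request to send, and so on; see the documentation for details.

Because the request destination depends on user settings, we also take measures to prevent problems such as SSRF.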
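The Trigger/Action decision described above might be sketched as follows. Everything here is hypothetical: the shape of the Cast settings (`trigger`, `action`, `field`, `op`, `value`), the function names, and the SSRF check are illustrative assumptions, not Actcast's actual implementation. In particular, the URL check only rejects non-HTTPS URLs and literal non-public IP addresses; a real guard would also need to vet the addresses a hostname resolves to.

```python
import ipaddress
from urllib.parse import urlparse

# Comparison operators a hypothetical trigger condition may use.
OPERATORS = {
    "eq": lambda actual, expected: actual == expected,
    "gte": lambda actual, expected: actual >= expected,
    "lte": lambda actual, expected: actual <= expected,
}


def trigger_matches(act_log, conditions):
    """True only if every condition holds for this Act log."""
    return all(
        cond["field"] in act_log
        and OPERATORS[cond["op"]](act_log[cond["field"]], cond["value"])
        for cond in conditions
    )


def url_allowed(url):
    """Partial SSRF guard: HTTPS only, and no literal private or local IPs."""
    parsed = urlparse(url)
    if parsed.scheme != "https" or not parsed.hostname:
        return False
    try:
        address = ipaddress.ip_address(parsed.hostname)
    except ValueError:
        # A hostname, not an IP literal. A production check must also
        # resolve the name and vet the resulting addresses.
        return True
    return address.is_global


def plan_webhook(act_log, cast):
    """Return the request to send for this Act log, or None to skip it."""
    if not trigger_matches(act_log, cast["trigger"]):
        return None
    url = cast["action"]["url"]
    if not url_allowed(url):
        return None
    return {"url": url, "body": act_log}
```

A Lambda consumer would call something like `plan_webhook` once per record read from the Kinesis shard and perform the HTTP request only when a plan is returned.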

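Pain points

AWS IoT

Device Shadow

When you register a Device in AWS IoT, a corresponding Shadow can be manipulated from AWS IoT. It is useful for syncing state set on the Shadow to the device, but the limit on how much data a Shadow can hold is quite strict, so we eventually switched to keeping equivalent data ourselves in DynamoDB.

Job

AWS IoT has a feature called Jobs, but its limits, such as the cap on concurrent executions (Active continuous jobs: max 100), are strict, so it was not usable for a service like Actcast where the number of devices keeps growing. As with Device Shadow, we built a similar mechanism ourselves on DynamoDB.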

Amazon Aurora

Amazon Aurora with PostgreSQL Compatibility

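One issue you face when using Aurora with PostgreSQL rather than MySQL is that Aurora provides no built-in way to upgrade the major version.

Upgrading an Aurora PostgreSQL DB Cluster Engine Version - Amazon Aurora

To upgrade while keeping downtime low, you need to prepare old and new Aurora clusters, keep the data in sync, and at some point switch the application's connection target (in reality it is a little more complicated).

Another difficulty is that whether PostgreSQL logical replication is available depends on whether the source version is 9.x or 10.x. On 9.x you need an external tool (such as bucardo).

If something equivalent to pg_upgrade were offered, the operational burden would drop considerably even with some downtime, but it does not seem to have materialized yet.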

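Future improvements

Log tracing

We already use X-Ray and check logs in CloudWatch Logs, but the system as a whole, including parts not covered here, has so many components that tracking down the relevant areas when a problem occurs is quite difficult. We are currently evaluating approaches to improve this.

Summary

AWS offers a wide range of services, and we were able to build everything, including the IoT parts, using AWS services alone. We will keep making improvements with a focus on stability and scalability.

Although not covered here, we also plan to publish a separate article about using Rust on ECS and Lambda, so stay tuned.

This article was brought to you by watiko.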

Introduction to AWS Fargate


See the rest of the story at http://recentupdates.fatherandsonhomeremodeling.com/introduction-to-aws-fargate/


Sheffield Devops - September 2018 Meetup

https://www.eventbrite.co.uk/e/sheffield-devops-september-2018-meetup-tickets-49425388668

The next Sheffield Devops event takes place on Thursday 13th September and is generously sponsored and hosted by CMS, with food and beers, wines and soft drinks provided on arrival.

Event opens at 6pm for food and drinks, with the first talk starting at 6:30pm.

6:00 - 6:30: Doors open

6:30 - 7:15: Chris Walker - Hack Days, Improvement & Developer Tooling in AWS

7:15 - 7:30: Break

7:30 - 8:15: Another cool Devops talk TBC

About the Speakers

The first talk, "Hack Days, Improvement & Developer Tooling in AWS", will be given by Chris Walker, a Lead Software Engineer at Sky Betting and Gaming.

On a recent hack day at Sky Bet, the focus was on "improvement". The talk will cover taking part in a hack day, getting SonarQube running in AWS (Fargate & RDS) and how running Developer Tooling in AWS can be a great introduction to AWS.

The second talk will be announced in due course.

Venue

CMS LLP 1 South Quay Victoria Quays Sheffield S2 5SY

Parking is available... just buzz in at the barriers and say you're here for the Sheffield DevOps event.

https://www.google.com/maps/dir/Current+Location/53.384683,-1.460541

Sponsors

CMS

CMS is proud to be a long-term sponsor of Sheffield Devops. CMS is an international law firm with a specific sector focus on the technology and digital sectors. Ben Hendry, a partner in CMS's Sheffield office, said “We are passionate about supporting the growth of the Sheffield City Region. Like the Sheffield DevOps team, we want Sheffield to be the UK's next big technology hub, attracting the best tech businesses and talent from across the UK and beyond, and encouraging innovation. Supporting organisations like Sheffield DevOps is a vital part of this strategy and we are very much looking forward to working with both in order to achieve our common goal.” For more information on CMS's technology focus, see http://www.nabarro.com/services/sectors/technology/ and https://cms.law/en/jurisdiction/global-reach/Europe/United-Kingdom/CMS-CMNO/TMT-Technology-Media-Telecommunications

More Info

If you'd like to find out more about Sheffield Devops then head to http://www.sheffielddevops.org.uk/ or talk to us on Twitter @sheffieldDevops. We look forward to seeing you there!

Code of Conduct: http://www.sheffielddevops.org.uk/post/166824850299/code-of-conduct


#364: Angular 5.1 Released


JavaScript Weekly

Issue 364 — December 8, 2017

Parcel: A Fast, Zero-Configuration Webapp Bundler

In this introductory article, Parcel’s creator explains how it solves key problems with existing bundlers like Browserify and Webpack: performance and complex configs.

Devon Govett

A Different Way of Understanding `this` in JavaScript

A perennial topic, but Dr. Axel has an interesting take on it that might clarify your thinking on how the this keyword works.

Dr. Axel Rauschmayer

Learn React Fundamentals and Advanced Patterns Courses

Two and a half hours of new (beginner and advanced) React material are now available for free on Egghead.

Kent C. Dodds

Build Fully Interactive JavaScript Charts 📈 In Minutes

Your solution for modern charting and visualization needs. ZingChart is fully featured, integrates with popular JS frameworks, and has a robust API with endless customization options. Get started with a free download.

ZingChart Sponsor

Angular 5.1 Released

The latest (minor) version of Angular is here, plus Angular CLI 1.6 and the first stable release of Angular Material.

Stephen Fluin

What People in Tech Said About JavaScript On Its Debut

JavaScript was first announced this week 22 years ago, but who was singing its praises in its earliest form?

Chris Brandrick

Webpack: A Gentle Introduction to the Module Bundler

Not ready for Parcel (above)? Here you can learn the basics of Webpack and how to configure it for your web application.

Prosper Otemuyiwa

Jobs

Full-stack JavaScript Developer at X-Team (Remote): We help our developers keep learning and growing every day. Unleash your potential. Work from anywhere. Join X-Team. (X-Team)

Software Engineer – Web Clients, ReactJS+Redux: Join a growing engineering team that builds highly performant video apps across Web, iOS, Android, FireTV, Roku, tvOS, Xbox and more! (Discovery Digital Media)

Looking for a Job at a Company That Prioritizes JavaScript? Try Vettery and we’ll connect you directly with thousands of companies looking for talented front-end devs. (Vettery)

In Brief

A Frontend Developer’s Guide to GraphQL tutorial A very gentle introduction if GraphQL seems confusing. CSS Tricks

Creating Neural Networks in JS with deeplearn.js tutorial Robin Wieruch

'await' vs 'return' vs 'return await': Picking The Right One tutorial Jake Archibald

How TypeScript 2.4's Weak Type Detection Helps You Avoid Bugs tutorial Marius Schulz

Creating a Heatmap of Your Location History with JS & Google Maps tutorial Brandon Morelli

Getting to Know the JavaScript Internationalization API tutorial A cursory introduction. Netanel Basal

`for-await-of` and Synchronous Iterables tutorial Dr. Axel Rauschmayer

How to Cancel Promises tutorial Seva Zaikov

Learn Everything About AWS’ New Container Products AWS ECS vs. AWS EKS vs. AWS Fargate - confused? Learn more in our latest blog post. Codeship Sponsor

6 Developers Reflect on JavaScript in 2017 opinion Tools like Prettier, Jest, and Next.js get big shoutouts. Sacha Greif

Angular... It’s You, Not Me: A Breakup Letter opinion Dan Ward

Will The Future of JavaScript Be Less JavaScript? opinion Daniel Borowski

JavaScript Metaprogramming: ES6 Proxy Use and Abuse video Eirik Vullum

Use SQL in Mongodb? But of Course You Can! We'll Show You How. And there's so much more to discover. But see for yourself - Download it for 14 days here. Studio 3T Sponsor

jsvu: JavaScript (Engine) Version Updater tools A tool for installing new JavaScript engines without compiling them. Google

Reshader: A Library to Get Shades of Colors code Guilherme Oderdenge

Muuri: A JS Layout Engine for Responsive and Sortable Grid Layouts code There’s a live demo here. Haltu

Unistore: A 650 Byte State Container with Component Actions for Preact code Jason Miller

Lowdb: A Small Local JSON Database Powered by Lodash code Supports Node, Electron and the browser. typicode

🚀 View and Annotate PDFs inside your Web App in No Time PSPDFKit Sponsor

Curated by Peter Cooper and published by Cooperpress.

Like this? You may also enjoy: FrontEnd Focus : Node Weekly : React Status


© Cooperpress Ltd. Fairfield Enterprise Centre, Lincoln Way, Louth, LN11 0LS, UK

via JavaScript Weekly http://ift.tt/2iIvniB

What Are the Benefits of Running ColdFusion on AWS Fargate?

#What Are the Benefits of Running ColdFusion on AWS Fargate?#Benefits of Running ColdFusion on AWS Fargate


Right-Sizing Your Resources: Optimizing AWS Costs

Introduction

AWS provides flexible cloud resources, but improper provisioning can lead to unnecessary expenses. Right-sizing ensures that your workloads run efficiently at the lowest possible cost. In this guide, we’ll explore how to analyze, optimize, and scale AWS resources for cost efficiency.

What Is Right-Sizing in AWS?

Right-sizing involves analyzing AWS workloads and adjusting resource allocations to match demand without over-provisioning or under-provisioning. This applies to EC2 instances, RDS databases, Lambda functions, EBS volumes, and more.

Benefits of Right-Sizing

✅ Lower cloud costs by eliminating unused or oversized resources.

✅ Improved performance by selecting the right resource type and size.

✅ Increased efficiency with automated scaling and monitoring.

Step 1: Analyzing Resource Utilization

1. Use AWS Cost Explorer

AWS Cost Explorer provides insights into spending patterns and underutilized resources.

Identify low-utilization EC2 instances.

View historical cost and usage trends; its rightsizing recommendations draw on CloudWatch utilization data.

2. Enable AWS Trusted Advisor

AWS Trusted Advisor offers cost optimization recommendations, including:

Underutilized EC2 instances.

Unattached EBS volumes.

Idle Elastic Load Balancers.

3. Leverage CloudWatch Metrics

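Monitor CPU, memory, disk I/O, and network traffic to determine if instances are over-provisioned or struggling with performance. For EC2, memory and disk-space metrics are not collected by default and require the CloudWatch agent.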

Step 2: Right-Sizing Compute Resources

1. Optimize EC2 Instances

Identify underutilized instances with low CPU or memory usage.

Switch to smaller instance types (e.g., m5.large → t3.medium).

Use EC2 Auto Scaling for demand-based adjustments.
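A minimal sketch of the "identify underutilized instances" step follows. It assumes you have already collected CPU utilization samples (in percent) for a lookback window, for example from CloudWatch; the function name and both thresholds are illustrative assumptions rather than AWS defaults.

```python
from statistics import mean


def is_underutilized(cpu_samples, avg_threshold=10.0, peak_threshold=40.0):
    """Flag an instance whose average AND peak CPU both stay low.

    cpu_samples: CPU utilization percentages over the lookback window.
    Checking the peak as well avoids downsizing a mostly idle instance
    that still needs its full capacity during occasional bursts.
    """
    if not cpu_samples:
        return False  # no data: make no recommendation
    return mean(cpu_samples) < avg_threshold and max(cpu_samples) < peak_threshold
```

An instance flagged this way is a candidate for a smaller type, subject to a memory check, since CPU alone does not show whether the workload would fit in less RAM.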

2. Consider AWS Compute Savings Plans

If workloads are predictable, Savings Plans offer discounts on EC2, Lambda, and Fargate.

Use Spot Instances for non-critical or batch workloads.

3. Optimize AWS Lambda Memory Allocation

Use AWS Lambda Power Tuning to find the best memory-to-performance ratio.

Avoid over-allocating memory for short-running functions.

Step 3: Optimizing Storage Costs

1. Reduce Unused EBS Volumes

Delete unattached EBS volumes to avoid unnecessary charges.

Move infrequent-use volumes to Amazon EBS Cold HDD (sc1).

2. Leverage S3 Storage Classes

Use S3 Intelligent-Tiering for automatic cost savings.

Move archived data to S3 Glacier for long-term storage.

3. Enable AWS Backup and Lifecycle Policies

Automate backup retention policies to prevent over-storage costs.

Set up S3 Lifecycle Rules to transition data to lower-cost storage.

Step 4: Optimizing Networking Costs

1. Reduce Data Transfer Costs

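Use VPC endpoints (AWS PrivateLink) to keep traffic to AWS services off NAT gateways and the public internet, which can reduce data processing and transfer charges.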

Consolidate traffic through AWS Transit Gateway instead of multiple VPC peering connections.

2. Optimize AWS CloudFront Usage

Use CloudFront with S3 to reduce outbound bandwidth costs.

Cache content at CloudFront edge locations to reduce origin fetches.

Step 5: Automating Cost Optimization

1. Use AWS Instance Scheduler

Automate start/stop schedules for non-production instances.

Reduce costs by running workloads only during business hours.
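The saving from a start/stop schedule is simple arithmetic, sketched below. The function names and the 12-hours-by-5-days schedule in the usage note are assumptions for illustration; the model covers compute charges only, since attached EBS volumes are still billed while an instance is stopped.

```python
HOURS_PER_WEEK = 24 * 7


def running_fraction(hours_per_day, days_per_week):
    """Fraction of the week an instance runs under a start/stop schedule."""
    if not (0 <= hours_per_day <= 24 and 0 <= days_per_week <= 7):
        raise ValueError("schedule is outside a 24x7 week")
    return hours_per_day * days_per_week / HOURS_PER_WEEK


def scheduled_compute_cost(always_on_cost, hours_per_day, days_per_week):
    """Compute cost under the schedule, given the cost of running 24x7."""
    return always_on_cost * running_fraction(hours_per_day, days_per_week)
```

Running 12 hours a day on 5 days a week is 60 of 168 hours, so compute cost falls to roughly 36% of the always-on figure.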

2. Enable Auto Scaling for Dynamic Workloads

Set up EC2 Auto Scaling to match traffic demand.

Implement Application Auto Scaling for DynamoDB, ECS, and Aurora replicas.

3. Implement AWS Budgets and Alerts

Create AWS Budgets to monitor cost thresholds.

Set up alerts via SNS notifications when usage exceeds limits.

Conclusion

Right-sizing AWS resources is key to cost savings, efficiency, and performance. By monitoring usage, selecting appropriate instance sizes, and leveraging automation, businesses can significantly reduce cloud costs without sacrificing performance.

WEBSITE: https://www.ficusoft.in/aws-training-in-chennai/


Understanding AWS Pricing: Tips to Save Money

Introduction

Amazon Web Services (AWS) provides a powerful cloud platform with flexible pricing, allowing businesses to pay only for what they use. However, without proper planning, AWS costs can quickly escalate. This guide will help you understand AWS pricing models and provide actionable strategies to optimize costs and save money.

1. AWS Pricing Models Explained

AWS offers multiple pricing models to cater to different workloads. Understanding these models can help you choose the most cost-effective option for your use case.

a) Pay-as-You-Go

Charged based on actual usage with no upfront commitments.

Ideal for startups and unpredictable workloads.

Example: Running an EC2 instance for a few hours and paying only for that time.

b) Reserved Instances (RIs)

Offers significant discounts (up to 72%) compared to on-demand pricing in exchange for a long-term commitment (1 or 3 years).

Best for applications with predictable, steady-state workloads.

Example: A database server that runs 24/7 would benefit from a Reserved Instance to reduce costs.
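Whether a reservation pays off depends on how many hours the instance actually runs. The sketch below works through the break-even point under simplifying assumptions: a 1-year term, a single flat discount, and a reservation billed for every hour of the term whether or not the instance is running. The rates in the usage note are made-up numbers, not AWS prices.

```python
HOURS_PER_YEAR = 8760


def yearly_costs(on_demand_hourly, ri_discount, hours_used):
    """Return (on_demand_cost, reserved_cost) for one year.

    The reserved cost does not depend on hours_used: the discounted
    rate is paid for every hour of the term.
    """
    on_demand = on_demand_hourly * hours_used
    reserved = on_demand_hourly * (1 - ri_discount) * HOURS_PER_YEAR
    return on_demand, reserved


def break_even_hours(ri_discount):
    """Hours of use per year above which the reservation is cheaper."""
    return (1 - ri_discount) * HOURS_PER_YEAR
```

At an assumed 50% discount the break-even is 4,380 hours, so a database that runs all 8,760 hours costs half as much reserved, while an instance used 2,000 hours a year is cheaper On-Demand.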

c) Savings Plans

Flexible alternative to Reserved Instances that provides savings based on a committed spend per hour.

Covers services like EC2, Fargate, and Lambda.

Example: Committing to $100 per hour on EC2 usage across any instance type rather than reserving a specific instance.

d) Spot Instances

Allows you to purchase unused EC2 capacity at steep discounts (up to 90%).

Ideal for batch processing, CI/CD pipelines, and machine learning workloads.

Example: Running a nightly data processing job using Spot Instances to save costs.

e) Free Tier & Budgeting Tools

AWS Free Tier offers limited services for free, ideal for small-scale experiments.

AWS Budgets & Cost Explorer help track and analyze cloud expenses.

Example: AWS Lambda includes 1 million free requests per month, reducing costs for event-driven applications.

2. Key Cost-Saving Strategies

Effectively managing AWS resources can lead to substantial cost reductions. Below are some best practices for optimizing your AWS expenses.

a) Right-Sizing Resources

Many businesses overprovision EC2 instances, leading to unnecessary costs.

Use AWS Compute Optimizer to identify underutilized instances and adjust them.

Example: Switching from an m5.xlarge instance to an m5.large if CPU utilization is consistently below 30% (m5.large is the smallest size in the m5 family).

b) Auto Scaling & Load Balancing

Automatically scales resources based on traffic demand.

Combine with Elastic Load Balancing (ELB) to distribute traffic efficiently.

Example: An e-commerce website that experiences traffic spikes during sales events can use Auto Scaling to avoid overpaying for unused capacity during off-peak times.

c) Storage Cost Optimization

AWS storage costs can be reduced by choosing the right storage class.

Move infrequently accessed data to S3 Intelligent-Tiering or S3 Glacier.

Example: Archive old log files using S3 Glacier, which is much cheaper than keeping them in standard S3 storage.

d) Optimize Data Transfer Costs

Inter-region and cross-AZ data transfers can be costly.

Use AWS PrivateLink, AWS Direct Connect, and Amazon CloudFront edge locations to reduce transfer costs.

Example: Keeping all resources within a single AWS region minimizes inter-region transfer fees.

e) Serverless & Managed Services

AWS Lambda, DynamoDB, and Fargate reduce infrastructure management costs.

Example: Instead of running an EC2 instance for a cron job, use AWS Lambda, which runs only when needed, reducing idle costs.
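A scheduled Lambda function that replaces a cron job might look like the minimal sketch below. It assumes the function is invoked by a scheduled rule whose event carries a `time` field; the job body is a placeholder, and the returned dictionary shape is hypothetical.

```python
def lambda_handler(event, context):
    """Entry point that Lambda calls on each scheduled invocation."""
    # Scheduled events include a timestamp; fall back gracefully if it is missing.
    triggered_at = event.get("time", "unknown")

    # Placeholder for the real work (for example, cleaning up old records).
    result = {"status": "ok", "triggered_at": triggered_at}

    # Printed output ends up in the function's CloudWatch Logs.
    print(result)
    return result


# Local dry run with a hand-written event.
lambda_handler({"time": "2024-01-01T00:00:00Z"}, None)
```

Because the function is billed only while it runs, there is no idle instance to pay for between invocations.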

f) Leverage Savings Plans & Reserved Instances

Choose Savings Plans for predictable workloads to reduce compute costs.

Reserve database instances (RDS, ElastiCache) for additional savings.

Example: Committing to a Savings Plan for consistent EC2 usage can reduce expenses significantly compared to on-demand pricing.

g) Monitor and Control Costs

Enable AWS Cost Anomaly Detection to identify unexpected charges.

Use AWS Budgets to set spending limits and receive alerts.

Example: Setting a budget limit of $500 per month and receiving alerts when 80% of the budget is reached.
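The alert logic in this example is simple threshold arithmetic, sketched below. AWS Budgets performs this check for you; the function name and the spend values are hypothetical and only show when the 80% alert would fire.

```python
def budget_alert(spend: float, limit: float = 500.0, threshold: float = 0.80) -> bool:
    """Return True once spend reaches the alert threshold of the budget limit."""
    return spend >= limit * threshold


# With a $500 limit and an 80% threshold, the alert fires at $400.
for spend in (250.0, 399.99, 400.0, 520.0):
    print(spend, budget_alert(spend))
```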

3. AWS Cost Management Tools

AWS provides several tools to help you monitor and optimize costs. Familiarizing yourself with these tools can prevent overspending.

a) AWS Cost Explorer

Helps visualize and analyze cost and usage trends.

Example: Identify which services are driving the highest costs and optimize them.
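Cost Explorer data can also be retrieved programmatically, for example with the boto3 `get_cost_and_usage` call grouped by service. The sketch below assumes a response of that shape and ranks services by total cost; the sample response is hand-written with made-up amounts, and `rank_services` is a hypothetical helper.

```python
from collections import defaultdict


def rank_services(response: dict) -> list:
    """Sum UnblendedCost per service across all periods and sort highest first."""
    totals = defaultdict(float)
    for period in response.get("ResultsByTime", []):
        for group in period.get("Groups", []):
            service = group["Keys"][0]
            # Amounts are returned as strings, so convert before summing.
            totals[service] += float(group["Metrics"]["UnblendedCost"]["Amount"])
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


# Made-up response covering two months, for illustration only.
sample_response = {
    "ResultsByTime": [
        {"Groups": [
            {"Keys": ["Amazon EC2"], "Metrics": {"UnblendedCost": {"Amount": "120.0"}}},
            {"Keys": ["Amazon S3"], "Metrics": {"UnblendedCost": {"Amount": "30.0"}}},
        ]},
        {"Groups": [
            {"Keys": ["Amazon EC2"], "Metrics": {"UnblendedCost": {"Amount": "80.0"}}},
            {"Keys": ["Amazon S3"], "Metrics": {"UnblendedCost": {"Amount": "25.0"}}},
        ]},
    ]
}

ranking = rank_services(sample_response)
print(ranking)
```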

b) AWS Budgets

Allows users to set custom spending limits and receive notifications.

Example: Setting a monthly budget for EC2 instances and receiving alerts when nearing the limit.

c) AWS Trusted Advisor

Provides recommendations on cost optimization, security, and performance based on automated checks of your account.

Example: Trusted Advisor flags unassociated Elastic IP addresses so you can release them and avoid unnecessary charges.

d) AWS Compute Optimizer

Provides right-sizing recommendations for EC2 instances.

Example: If an instance is underutilized, Compute Optimizer recommends switching to a smaller instance type.

Conclusion

AWS pricing is complex, but cost optimization strategies can help businesses save money. By understanding different pricing models, leveraging AWS cost management tools, and optimizing resource usage, companies can efficiently manage their AWS expenses.

Regularly reviewing AWS billing and usage reports ensures that organizations stay within budget and avoid unexpected charges.

WEBSITE: https://www.ficusoft.in/aws-training-in-chennai/


Serverless DevOps: How Lambda and Cloud Functions Fit In

Introduction to Serverless DevOps: What It Is and Why It Matters

1. What Is Serverless DevOps?

Serverless DevOps is a modern approach to software development and operations that uses serverless computing to automate CI/CD pipelines, infrastructure management, and deployments without provisioning or maintaining servers.

Key Features of Serverless DevOps

✅ No Server Management: Developers focus on writing code while cloud providers handle scaling and infrastructure.

✅ Event-Driven Automation: Functions (e.g., AWS Lambda, Google Cloud Functions) are triggered by events like code commits or API requests.

✅ Cost-Efficient: Pay only for execution time, reducing costs compared to always-on infrastructure.

✅ Scalability: Auto-scales based on demand, ensuring high availability.

✅ Faster Development & Deployment: CI/CD pipelines can be entirely serverless, improving deployment speed.

2. Why Does Serverless Matter for DevOps?

Traditional DevOps practices require managing infrastructure for CI/CD, monitoring, and deployments. Serverless DevOps eliminates this complexity, making DevOps workflows:

🔹 More Agile → Deploy new features quickly without worrying about infrastructure.

🔹 More Reliable → Auto-scaling and built-in fault tolerance ensure high availability.

🔹 More Cost-Effective → No need to run VMs or containers 24/7.

🔹 More Efficient → Automate workflows using functions instead of dedicated servers.

3. How Serverless DevOps Works

A typical Serverless DevOps workflow consists of:

1️⃣ Code Commit → Developer pushes code to a repository (GitHub, CodeCommit, Bitbucket).

2️⃣ Trigger Build Process → AWS Lambda or Cloud Functions start the CI/CD process.

3️⃣ Code Testing & Packaging → AWS CodeBuild or Cloud Build compiles and tests the code.

4️⃣ Deployment → Code is deployed to a serverless platform (AWS Lambda, Cloud Functions, or Fargate).

5️⃣ Monitoring & Logging → Serverless monitoring tools track performance and errors.
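The "Trigger Build Process" step can be sketched as a Lambda function that starts a CodeBuild build when a commit arrives. This is a minimal sketch under stated assumptions: the `commit_id` event field, the `BUILD_PROJECT` environment variable, and the `example-project` default are hypothetical, and the optional `codebuild` parameter exists only so the handler can be exercised locally without AWS credentials.

```python
import os


def lambda_handler(event, context, codebuild=None):
    """Start a CodeBuild build for the commit described in the event."""
    if codebuild is None:
        # boto3 is available in the Lambda Python runtime.
        import boto3
        codebuild = boto3.client("codebuild")

    # Hypothetical configuration and event shape, for illustration only.
    project = os.environ.get("BUILD_PROJECT", "example-project")
    commit_id = event["commit_id"]

    response = codebuild.start_build(projectName=project, sourceVersion=commit_id)
    return {"build_id": response["build"]["id"]}


class FakeCodeBuild:
    """Stand-in client used for a local dry run."""

    def start_build(self, projectName, sourceVersion):
        self.called_with = (projectName, sourceVersion)
        return {"build": {"id": f"{projectName}:local-1"}}


fake = FakeCodeBuild()
print(lambda_handler({"commit_id": "abc123"}, None, codebuild=fake))
```

In a real pipeline the event would come from the repository's webhook or notification, and its shape depends on the provider.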

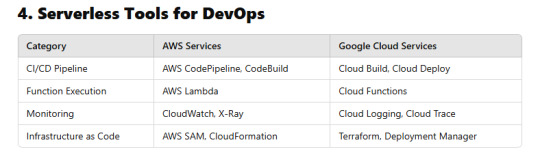

4. Serverless Tools for DevOps

5. Conclusion

Serverless DevOps accelerates software delivery, reduces costs, and improves scalability by automating deployments without managing servers.

Whether using AWS Lambda or Google Cloud Functions, integrating serverless into DevOps workflows enables faster, more efficient development cycles.

WEBSITE: https://www.ficusoft.in/devops-training-in-chennai/


A Practical Guide to Deploying Microservices with AWS ECS and Fargate

A Practical Guide to Deploying a Microservices Architecture with AWS ECS and Fargate

Introduction

In this comprehensive guide, we will walk you through the process of deploying a microservices architecture using Amazon Elastic Container Service (ECS) and AWS Fargate. This guide is designed for developers and system administrators who want to learn how to deploy a…


Deploying a Scalable Microservices Architecture on AWS Fargate

Introduction

Hands-on: Deploying a Scalable Microservices Architecture on AWS Fargate is a comprehensive tutorial that guides you through building a scalable microservices architecture on AWS Fargate. It is designed for developers and system administrators. In this tutorial, you will…
