#build ai agents with langGraph


Link

In this tutorial, we demonstrate how to build a multi-step, intelligent query-handling agent using LangGraph and Gemini 1.5 Flash. The core idea is to structure AI reasoning as a stateful workflow, where an incoming query passes through a series of purpose-built nodes: routing, analysis, research, response generation, and validation. Each node is a functional block with a well-defined role, which makes the agent analytical rather than merely reactive. Using LangGraph's StateGraph, we orchestrate these nodes into a loop that can re-analyze and improve its output until the response is validated as complete or a maximum iteration count is reached.

```
!pip install langgraph langchain-google-genai python-dotenv
```

First, the command above installs three Python packages used to build the agent workflow: langgraph enables graph-based orchestration of AI agents, langchain-google-genai provides integration with Google's Gemini models, and python-dotenv loads environment variables from .env files.

```python
import os
from typing import Dict, Any
from dataclasses import dataclass

from langgraph.graph import StateGraph, END
from langchain_google_genai import ChatGoogleGenerativeAI
# Message classes come from langchain_core, which langgraph and
# langchain-google-genai depend on; `langchain.schema` would require the
# separate `langchain` package, which the install command above does not include.
from langchain_core.messages import HumanMessage, SystemMessage

os.environ["GOOGLE_API_KEY"] = "Use Your API Key Here"
```

We import the modules needed for the workflow, including ChatGoogleGenerativeAI for interacting with Gemini models and StateGraph for managing the shared state. The line `os.environ["GOOGLE_API_KEY"] = "Use Your API Key Here"` stores the API key in an environment variable so the Gemini model can authenticate and generate responses.
```python
@dataclass
class AgentState:
    """State shared across all nodes in the graph"""
    query: str = ""
    context: str = ""
    analysis: str = ""
    response: str = ""
    next_action: str = ""
    iteration: int = 0
    max_iterations: int = 3
```

This AgentState dataclass defines the shared state that persists across the nodes of the LangGraph workflow. It tracks the user's query, the retrieved context, any analysis performed, the generated response, and the recommended next action. It also includes an iteration counter and a max_iterations limit that bound how many times the workflow can loop, enabling iterative reasoning by the agent.
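Each node in this workflow returns a dict containing only the fields it changed, and LangGraph merges that partial update into the shared state; for fields declared without a reducer, as in `AgentState`, the returned value simply replaces the old one. The framework-free sketch below models that overwrite behaviour with `dataclasses.replace`. It is a simplified illustration, not LangGraph's internal implementation, and the `router` stub is hypothetical.

```python
from dataclasses import dataclass, replace
from typing import Any, Callable, Dict


@dataclass(frozen=True)
class AgentState:
    """Same fields as the tutorial's state (frozen here so updates are explicit)."""
    query: str = ""
    context: str = ""
    analysis: str = ""
    response: str = ""
    next_action: str = ""
    iteration: int = 0
    max_iterations: int = 3


def apply_update(state: AgentState, update: Dict[str, Any]) -> AgentState:
    """Overwrite only the fields a node returned (last-value semantics)."""
    return replace(state, **update)


def router(state: AgentState) -> Dict[str, Any]:
    # Hypothetical stand-in for the LLM-backed router node
    return {"context": f"category for: {state.query}"}


def run_node(node: Callable[[AgentState], Dict[str, Any]], state: AgentState) -> AgentState:
    """Call a node and merge its partial update into a new state object."""
    return apply_update(state, node(state))


if __name__ == "__main__":
    s0 = AgentState(query="What is LangGraph?")
    s1 = run_node(router, s0)
    print(s1.context)  # the router's update
    print(s1.query)    # untouched fields keep their previous values
```

A misspelled key in a node's return value raises `TypeError` here, which is a cheap way to catch typos in state updates during offline testing.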
```python
class GraphAIAgent:
    def __init__(self, api_key: str = None):
        if api_key:
            os.environ["GOOGLE_API_KEY"] = api_key

        # Higher temperature for generation, lower for analysis/validation
        self.llm = ChatGoogleGenerativeAI(
            model="gemini-1.5-flash",
            temperature=0.7,
            convert_system_message_to_human=True
        )
        self.analyzer = ChatGoogleGenerativeAI(
            model="gemini-1.5-flash",
            temperature=0.3,
            convert_system_message_to_human=True
        )
        self.graph = self._build_graph()

    def _build_graph(self):
        """Build and compile the LangGraph workflow"""
        workflow = StateGraph(AgentState)

        workflow.add_node("router", self._router_node)
        workflow.add_node("analyzer", self._analyzer_node)
        workflow.add_node("researcher", self._researcher_node)
        workflow.add_node("responder", self._responder_node)
        workflow.add_node("validator", self._validator_node)

        workflow.set_entry_point("router")
        workflow.add_edge("router", "analyzer")
        # The routing function's return value is looked up in this mapping
        workflow.add_conditional_edges(
            "analyzer",
            self._decide_next_step,
            {"research": "researcher", "respond": "responder"}
        )
        workflow.add_edge("researcher", "responder")
        workflow.add_edge("responder", "validator")
        workflow.add_conditional_edges(
            "validator",
            self._should_continue,
            {"continue": "analyzer", "end": END}
        )
        return workflow.compile()

    def _router_node(self, state: AgentState) -> Dict[str, Any]:
        """Route and categorize the incoming query"""
        system_msg = """You are a query router. Analyze the user's query and provide context.
        Determine if this is a factual question, creative request, problem-solving task, or analysis."""
        messages = [
            SystemMessage(content=system_msg),
            HumanMessage(content=f"Query: {state.query}")
        ]
        response = self.llm.invoke(messages)
        return {"context": response.content}

    def _analyzer_node(self, state: AgentState) -> Dict[str, Any]:
        """Analyze the query and determine the approach"""
        system_msg = """Analyze the query and context. Determine if additional research is needed
        or if you can provide a direct response. Be thorough in your analysis."""
        messages = [
            SystemMessage(content=system_msg),
            HumanMessage(content=f"""
            Query: {state.query}
            Context: {state.context}
            Previous Analysis: {state.analysis}
            """)
        ]
        response = self.analyzer.invoke(messages)
        analysis = response.content

        # Simple keyword heuristic to choose the next branch
        if "research" in analysis.lower() or "more information" in analysis.lower():
            next_action = "research"
        else:
            next_action = "respond"

        return {"analysis": analysis, "next_action": next_action}

    def _researcher_node(self, state: AgentState) -> Dict[str, Any]:
        """Conduct additional research or information gathering"""
        system_msg = """You are a research assistant. Based on the analysis, gather relevant
        information and insights to help answer the query comprehensively."""
        messages = [
            SystemMessage(content=system_msg),
            HumanMessage(content=f"""
            Query: {state.query}
            Analysis: {state.analysis}
            Research focus: Provide detailed information relevant to the query.
            """)
        ]
        response = self.llm.invoke(messages)
        updated_context = f"{state.context}\n\nResearch: {response.content}"
        return {"context": updated_context}

    def _responder_node(self, state: AgentState) -> Dict[str, Any]:
        """Generate the final response"""
        system_msg = """You are a helpful AI assistant. Provide a comprehensive, accurate,
        and well-structured response based on the analysis and context provided."""
        messages = [
            SystemMessage(content=system_msg),
            HumanMessage(content=f"""
            Query: {state.query}
            Context: {state.context}
            Analysis: {state.analysis}
            Provide a complete and helpful response.
            """)
        ]
        response = self.llm.invoke(messages)
        return {"response": response.content}

    def _validator_node(self, state: AgentState) -> Dict[str, Any]:
        """Validate the response quality and completeness"""
        system_msg = """Evaluate if the response adequately answers the query.
        Return 'COMPLETE' if satisfactory, or 'NEEDS_IMPROVEMENT' if more work is needed."""
        messages = [
            SystemMessage(content=system_msg),
            HumanMessage(content=f"""
            Original Query: {state.query}
            Response: {state.response}
            Is this response complete and satisfactory?
            """)
        ]
        response = self.analyzer.invoke(messages)
        validation = response.content
        # Count passes here: the loop re-enters at "analyzer", not "router",
        # so this node runs once per pass and max_iterations can be reached
        return {
            "context": f"{state.context}\n\nValidation: {validation}",
            "iteration": state.iteration + 1,
        }

    def _decide_next_step(self, state: AgentState) -> str:
        """Decide whether to research or respond directly"""
        return state.next_action

    def _should_continue(self, state: AgentState) -> str:
        """Decide whether to continue iterating or end"""
        if state.iteration >= state.max_iterations:
            return "end"
        if "COMPLETE" in state.context:
            return "end"
        if "NEEDS_IMPROVEMENT" in state.context:
            return "continue"
        return "end"

    def run(self, query: str) -> str:
        """Run the agent with a query"""
        initial_state = AgentState(query=query)
        result = self.graph.invoke(initial_state)
        return result["response"]
```

The GraphAIAgent class defines a LangGraph-based workflow that uses Gemini models to iteratively analyze, research, respond to, and validate answers to user queries. It uses modular nodes (router, analyzer, researcher, responder, and validator) to reason through complex tasks, refining responses over a bounded number of iterations.
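Because the loop's termination depends only on `iteration`, `max_iterations` and the strings the validator returns, it can be checked offline with the LLM calls replaced by canned responses. The sketch below re-implements the analyze/respond/validate cycle with a hypothetical scripted validator (no Gemini calls) and the same decision rules as `_should_continue`, assuming `iteration` is incremented once per pass (for example in the validator node).

```python
from dataclasses import dataclass, replace
from typing import List


@dataclass(frozen=True)
class LoopState:
    context: str = ""
    iteration: int = 0
    max_iterations: int = 3


def should_continue(state: LoopState) -> str:
    """Same decision rules as GraphAIAgent._should_continue."""
    if state.iteration >= state.max_iterations:
        return "end"
    if "COMPLETE" in state.context:
        return "end"
    if "NEEDS_IMPROVEMENT" in state.context:
        return "continue"
    return "end"


def simulate(verdicts: List[str], max_iterations: int = 3) -> int:
    """Run the loop with canned validator verdicts; return passes before END."""
    state = LoopState(max_iterations=max_iterations)
    passes = 0
    while True:
        # Hypothetical validator stub: replay the next scripted verdict
        verdict = verdicts[passes] if passes < len(verdicts) else "NEEDS_IMPROVEMENT"
        passes += 1
        state = replace(
            state,
            context=f"{state.context}\n\nValidation: {verdict}",
            iteration=state.iteration + 1,  # one increment per pass
        )
        if should_continue(state) == "end":
            return passes


if __name__ == "__main__":
    print(simulate(["COMPLETE"]))
    print(simulate(["NEEDS_IMPROVEMENT", "COMPLETE"]))
    print(simulate([]))  # never satisfied: capped by max_iterations
```

If `iteration` never advances inside the loop, only a `COMPLETE` verdict or LangGraph's recursion limit (which raises an error) can stop the graph, so a test like this is a cheap guard against runaway loops.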
```python
def main():
    agent = GraphAIAgent("Use Your API Key Here")

    test_queries = [
        "Explain quantum computing and its applications",
        "What are the best practices for machine learning model deployment?",
        "Create a story about a robot learning to paint"
    ]

    print("🤖 Graph AI Agent with LangGraph and Gemini")
    print("=" * 50)

    for i, query in enumerate(test_queries, 1):
        print(f"\n📝 Query {i}: {query}")
        print("-" * 30)
        try:
            response = agent.run(query)
            print(f"🎯 Response: {response}")
        except Exception as e:
            print(f"❌ Error: {str(e)}")
        print("\n" + "=" * 50)


if __name__ == "__main__":
    main()
```

Finally, the main() function initializes the GraphAIAgent with a Gemini API key and runs it on a set of test queries covering technical, strategic, and creative tasks. It prints each query and the AI-generated response, showing how the LangGraph-driven agent handles diverse types of input using Gemini's reasoning and generation capabilities.

In conclusion, by combining LangGraph's structured state machine with Gemini's conversational abilities, this agent follows a workflow that mirrors the human reasoning cycle of inquiry, analysis, and validation. The tutorial provides a modular, extensible template for building AI agents that can handle tasks ranging from answering complex queries to generating creative content.

Check out the Notebook here.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.


Text

LangGraph: Building Smarter AI Agents with Graph-Based Workflows

In the world of AI development, creating smarter agents that can handle complex tasks requires more than just coding. It involves graph-based AI workflows, where logic, data, and decision-making flow seamlessly across systems. Enter LangGraph, a powerful framework that’s redefining the way we build AI agents and orchestrate AI workflows.

In this blog, we’ll explore how LangGraph helps you design smarter AI agents through graph-based workflows, and how it enables easy management, optimization, and scalability of AI-driven processes.

What is LangGraph?

LangGraph is a framework built to help developers create AI workflows using graph-based structures. The idea is simple: just like a flowchart or decision tree, you design the workflow as a graph in which each node represents a task or decision and the edges define which node runs next, while data moves between nodes through a shared state object. This approach makes complex AI logic easier to understand and manage.

At its core, LangGraph's architecture supports modular workflow design. By breaking tasks down into individual components or subgraphs, developers can build more flexible, reusable, and scalable AI solutions.
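To make the node-and-edge idea concrete, here is a framework-free sketch of what a graph runtime does: nodes are functions that read a shared state and return updates, fixed edges name the next node, and a conditional edge is a function that inspects the state to pick the next node. This is a simplified illustration under those assumptions, not LangGraph's API; in LangGraph the counterparts are `StateGraph.add_node`, `add_edge` and `add_conditional_edges`, and the `MiniGraph` class below is hypothetical.

```python
from typing import Callable, Dict, Union

State = Dict[str, object]
Node = Callable[[State], State]
Router = Callable[[State], str]

END = "__end__"


class MiniGraph:
    """Toy graph runtime: nodes update a shared dict, edges pick the next node."""

    def __init__(self, entry: str) -> None:
        self.entry = entry
        self.nodes: Dict[str, Node] = {}
        self.edges: Dict[str, Union[str, Router]] = {}

    def add_node(self, name: str, fn: Node) -> None:
        self.nodes[name] = fn

    def add_edge(self, src: str, dst: Union[str, Router]) -> None:
        # dst is either a fixed node name or a function that returns one
        self.edges[src] = dst

    def run(self, state: State, max_steps: int = 25) -> State:
        current = self.entry
        for _ in range(max_steps):
            state = {**state, **self.nodes[current](state)}  # merge partial update
            nxt = self.edges[current]
            current = nxt(state) if callable(nxt) else nxt
            if current == END:
                return state
        raise RuntimeError("step limit reached without hitting END")


if __name__ == "__main__":
    g = MiniGraph(entry="classify")
    g.add_node("classify", lambda s: {"kind": "question" if str(s["text"]).endswith("?") else "statement"})
    g.add_node("answer", lambda s: {"reply": "Let me look that up."})
    g.add_node("acknowledge", lambda s: {"reply": "Noted."})
    # Conditional edge: route on the state written by "classify"
    g.add_edge("classify", lambda s: "answer" if s["kind"] == "question" else "acknowledge")
    g.add_edge("answer", END)
    g.add_edge("acknowledge", END)
    print(g.run({"text": "What is LangGraph?"})["reply"])
    print(g.run({"text": "Hello"})["reply"])
```

The step limit plays the same role as a recursion limit in a real runtime: a cycle that never reaches `END` fails loudly instead of spinning forever.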

Why Use Graph-Based AI Workflows?

Building smarter AI agents requires managing complex decision-making processes. Traditional approaches to creating AI agents often involve long, tangled code or rigid, strictly sequential pipelines. Graph-based AI workflows change this by offering a more visual, intuitive way to organize and optimize these workflows.

With LangGraph, AI workflows become easier to design, visualize, and scale. You can quickly see how data flows through your system, where errors might occur, and how to optimize for better performance. Some key advantages of graph-based AI workflows include:

AI agent optimization through better management of data flow and decision-making.

Real-time AI agent management for more effective performance monitoring.

Efficient orchestration of multiple AI agents in one unified system.

LangGraph and Workflow Automation with AI

One of the biggest challenges in AI development is automating workflows effectively. With LangGraph, you can easily automate repetitive tasks and optimize the flow of data between different AI components.

Whether you're building a chatbot, a data analytics pipeline, or a machine learning model, workflow automation with AI helps processes complete faster and with fewer errors. Because routing is modelled as graph logic (conditional edges that inspect the current state), a workflow can adjust at run time, for example by re-routing to a retry or fallback step when something goes wrong.

This approach leads to faster iterations and reduced development time, which is crucial for businesses looking to stay competitive in today’s fast-paced AI landscape.
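One way to get the re-routing described above is to have a step record failures in the state instead of raising, and let a conditional edge read that field to choose between the normal path and a fallback. The sketch below shows just that routing decision in plain Python; the `fetch_prices` step, the `error` field and the cached-price fallback are hypothetical, and in LangGraph a function like `route_after_fetch` would be passed to `add_conditional_edges`.

```python
from typing import Any, Dict

State = Dict[str, Any]


def fetch_prices(state: State) -> State:
    """Hypothetical step that may fail; failures are recorded, not raised."""
    try:
        if state.get("simulate_outage"):
            raise ConnectionError("price service unavailable")
        return {"prices": [10.0, 12.5], "error": None}
    except ConnectionError as exc:
        return {"prices": [], "error": str(exc)}


def route_after_fetch(state: State) -> str:
    """Conditional edge: choose the next step from the current state."""
    return "use_cached_prices" if state.get("error") else "summarize"


def use_cached_prices(state: State) -> State:
    return {"prices": [9.5], "note": "served from cache"}


def summarize(state: State) -> State:
    return {"note": f"{len(state['prices'])} live prices"}


NEXT_STEPS = {"summarize": summarize, "use_cached_prices": use_cached_prices}


def run(state: State) -> State:
    # Sequential driver standing in for the graph runtime
    state = {**state, **fetch_prices(state)}
    next_step = NEXT_STEPS[route_after_fetch(state)]
    return {**state, **next_step(state)}


if __name__ == "__main__":
    print(run({})["note"])
    print(run({"simulate_outage": True})["note"])
```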

The Power of Modular AI Workflow Design

LangGraph stands out by encouraging a modular design for AI workflows. Instead of building monolithic systems, developers can break down workflows into smaller, reusable modules or subgraphs. This has a number of benefits:

Reusability: You can use the same subgraph in different parts of the project or across multiple projects.

Maintainability: Changes in one module won’t disrupt the entire system, making debugging and improvements easier.

Scalability: It becomes much easier to scale workflows by simply adding or modifying individual modules rather than reworking the whole system.

For instance, if you’re building a recommendation engine or data pipeline, LangGraph lets you design each step as a separate module, linking them together into a complete system. This makes your AI solution not only efficient but also easy to adapt to future needs.
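The subgraph idea boils down to this: a composed sub-workflow exposes the same interface as a single step (state in, updates out), so it can be dropped into a larger workflow unchanged. LangGraph supports this by letting a compiled graph be added as a node of a parent graph; the plain-Python sketch below only imitates that composition, and the `clean`, `tokenize` and `count` steps are hypothetical examples of a reusable text-preparation module.

```python
from functools import reduce
from typing import Callable, Dict, List

State = Dict[str, object]
Step = Callable[[State], State]


def pipeline(*steps: Step) -> Step:
    """Compose steps into one callable with the same signature as a single step."""
    def run(state: State) -> State:
        # Each step returns a partial update that is merged into the state
        return reduce(lambda s, step: {**s, **step(s)}, steps, state)
    return run


def clean(s: State) -> State:
    return {"text": " ".join(str(s["text"]).lower().split())}


def tokenize(s: State) -> State:
    return {"tokens": str(s["text"]).split(" ")}


# Reusable "subgraph": text preparation, usable in any parent workflow
prepare_text = pipeline(clean, tokenize)


def count(s: State) -> State:
    tokens: List[str] = s["tokens"]  # type: ignore[assignment]
    return {"n_tokens": len(tokens)}


# Parent workflow uses the subgraph exactly like any other step
analyze_text = pipeline(prepare_text, count)

if __name__ == "__main__":
    out = analyze_text({"text": "  Graph   Workflows ARE modular "})
    print(out["tokens"], out["n_tokens"])
```

Swapping `prepare_text` for a different implementation leaves `analyze_text` untouched, which is the maintainability benefit the list above describes.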

Visualizing AI Workflows

One of the key aspects of LangGraph is its focus on visualizing AI workflows. By mapping out workflows as graphs, developers can immediately see how data moves through the system, where decisions are made, and where potential bottlenecks lie.

This visual approach makes it much easier for teams to collaborate on AI projects and understand the logic behind each decision. LangGraph Studio, a companion visual tool, lets you inspect and debug workflows as they run, helping you make smarter decisions faster.
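Graph visualizations are usually generated from the same node and edge definitions that drive execution. LangGraph, for example, can render a compiled graph as a Mermaid diagram via `get_graph().draw_mermaid()`. The stdlib sketch below builds equivalent Mermaid flowchart text from a hand-written edge list; the edge list is a hypothetical router/analyzer/responder workflow, not output generated by LangGraph.

```python
from typing import List, Optional, Tuple

# (source, target, optional condition label)
Edge = Tuple[str, str, Optional[str]]


def to_mermaid(edges: List[Edge]) -> str:
    """Render an edge list as top-down Mermaid flowchart text."""
    lines = ["flowchart TD"]
    for src, dst, label in edges:
        arrow = f" -->|{label}| " if label else " --> "
        lines.append(f"    {src}{arrow}{dst}")
    return "\n".join(lines)


if __name__ == "__main__":
    edges: List[Edge] = [
        ("router", "analyzer", None),
        ("analyzer", "researcher", "research"),
        ("analyzer", "responder", "respond"),
        ("researcher", "responder", None),
        ("responder", "validator", None),
        ("validator", "analyzer", "continue"),
    ]
    print(to_mermaid(edges))
```

Pasting the output into any Mermaid renderer shows the branches and the validator loop at a glance.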

How LangGraph Enhances LangChain Integration

For those familiar with LangChain, LangGraph is a natural partner. LangChain integration with LangGraph lets you use LangChain's language model components inside LangGraph's graph-based workflow orchestration.

By linking LLM-based AI agents in a graph-based structure, you can better manage AI tasks and ensure that the logic flows smoothly across multiple systems. For example, if you're building a complex chatbot, LangGraph can help you orchestrate its components (such as natural language understanding, decision-making, and action-taking) in a clear, organized way.

This integration is key for businesses that rely on data-driven AI workflows, as it helps streamline the development of intelligent systems that are both scalable and easy to manage.

Real-Time AI Agent Management and Optimization

When building AI systems, real-time management is crucial. LangGraph excels in this area by offering powerful tools for real-time AI agent management. You can monitor workflows as they execute, see which paths are being followed, and make immediate adjustments to improve performance.

Because routing decisions in a LangGraph workflow are made at run time from the current state, AI agents can adapt based on real-time feedback. If a step isn't performing as expected, a conditional edge defined by the developer can re-route the task or send it back for another attempt, improving the overall process.

Conclusion: The Future of AI Workflows

In a world where scalable AI solutions are increasingly essential, LangGraph offers an innovative approach to managing complex AI workflows. Its combination of graph-based AI workflows, modular design, and real-time management helps developers build smarter, more efficient AI agents.

If you're looking to create advanced AI systems that can evolve and scale with your business, LangGraph is the framework you need. From visualizing AI workflows to orchestrating AI agents and automating tasks, LangGraph gives you the tools to build the next generation of intelligent applications.


Text

Week#4 DES 303

The Experience: Experiment #1

Incentive & Initial Research

Following the previous ideation phase, I wanted to explore the use of LLMs in a design context. The first order of business was to find a unique area of exploration, especially given how the industry is already full of people attempting to shove AI into everything that doesn't need it.

Drawing on a positive experience working with AI for a design project, I wanted the end result of the experiment to focus on human-computer interaction.

John the Rock is a project in which I handled the technical aspects. It had a positive reception despite having only rudimentary voice chat capability and no other functionalities.

Through initial research and personal experience, I identified two prominent, polar-opposite uses of LLMs in the field: as a tool or as a virtual companion.

In the first category, we have products like ChatGPT (obviously) and Google NotebookLM that focus on web search, text generation and summarisation tasks. They are mostly session-based and objective-focused, retaining little information about the users themselves between sessions. AI assistants in home automation consoles, including Siri and Alexa, also loosely fall into this category, as their primary function is to complete the immediate task and they do not attempt to build a connection with the user.

In the second category, we have products like Replika and character.ai that focus solely on creating a connection with the user, with few real functional uses. To me, this use case is extremely dangerous. These agents aim to build an "interpersonal" bond with the user. However, as users never have any control over these strictly cloud-based agents, this very real emotional bond can easily be exploited for manipulative monetisation and for harvesting sensitive personal information.

Testimony retrieved from Replika. What are you doing, Zuckerberg? How is this even a good thing to show off?

As such, I am very interested in exploring the technical feasibility of a local, private AI assistant that is entirely in the user's control, designed to build a connection with the user while being capable of completing more personalised tasks such as calendar management and home automation.

Goals & Plans

Critical success measurements for this experiment include:

Runnable on my personal PC, which is built around an RTX 3070 with 8 GB of VRAM (a mid-range graphics card from 2020).

Able to perform function calling, thus accessing other tools programmatically, unlike a pure conversational LLM.

Features that could be good to have include:

A long-term memory mechanism that builds a connection with the user over time.

Voice conversation capability.

The plan for approaching the experiments is as follows:

Evaluate open-source LLM capabilities for this use case.

Research existing frameworks, tooling and technologies for function calling, memory and voice capabilities.

Construct a prototype taking into account previous findings.

Gather and evaluate user responses to the prototype.

The personal PC in question is of a comparable size to an Apple HomePod. (Christchurch Metro card for scale)

Research & Experiments

This week's work revolved around researching:

Available LLMs that could run locally.

Frameworks to streamline function calling behaviour.

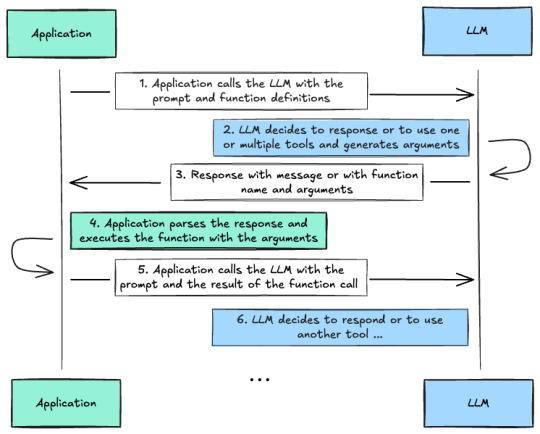

Function calling is the critical feature I wanted to explore in this experiment, as it would serve as the basis for any further development beyond toy projects.

A brief explanation of integrating function calling with LLM. Retrieved from HuggingFace.
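Without a framework, the function-calling loop has to be written by hand: send the conversation to the model, check whether it replied with a tool call, run the matching Python function, append the result to the log, and repeat until the model answers in plain text. The sketch below does exactly that with a scripted stand-in for the model. The JSON shape of the tool call, `get_weather` and `fake_model` are assumptions for illustration, not any particular model's or framework's format.

```python
import json
from typing import Callable, Dict, List

Message = Dict[str, str]


def get_weather(city: str) -> str:
    """Hypothetical tool; a real one would query a weather API."""
    return f"Sunny in {city}"


# Tool registry: name -> Python function the model is allowed to call
TOOLS: Dict[str, Callable[..., str]] = {"get_weather": get_weather}


def fake_model(messages: List[Message]) -> str:
    """Scripted stand-in for an LLM: first requests a tool, then answers."""
    if messages[-1]["role"] == "user":
        return json.dumps({"tool": "get_weather", "args": {"city": "Christchurch"}})
    return f"Here you go: {messages[-1]['content']}"


def run_agent(user_text: str, model: Callable[[List[Message]], str], max_turns: int = 5) -> str:
    messages: List[Message] = [{"role": "user", "content": user_text}]
    for _ in range(max_turns):
        reply = model(messages)
        try:
            call = json.loads(reply)
        except json.JSONDecodeError:
            call = None
        if not (isinstance(call, dict) and "tool" in call):
            return reply  # plain text: treat as the final answer
        # Log the tool request, execute it, and feed the result back
        messages.append({"role": "assistant", "content": reply})
        result = TOOLS[call["tool"]](**call["args"])
        messages.append({"role": "tool", "content": result})
    raise RuntimeError("model did not produce a final answer")


if __name__ == "__main__":
    print(run_agent("What's the weather?", fake_model))
```

All of the bookkeeping here (the message log, parsing, dispatch, the turn limit) is what the frameworks listed below take over.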

Interacting with the LLM directly would mean that all interactions and the conversation log must be handled manually. This would prove tedious from prior experience. A suitable framework could be utilised to handle this automatically. A quick search revealed the following candidates:

LangChain, optionally with LangGraph for more complex agentic flows.

smolagents by Hugging Face.

Pydantic AI.

AutoGen by Microsoft.

Before any of these frameworks could work, I first needed a platform to host and manage LLMs locally. Ollama was chosen as the initial tool, since it was the easiest to set up and to download models with. Additionally, it provides an OpenAI-compatible API, allowing simple programmatic access from almost all frameworks.
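Because Ollama serves an OpenAI-compatible endpoint (by default at `http://localhost:11434/v1/chat/completions`), a request in the OpenAI chat-completions format, including a `tools` list describing callable functions, can be sent to a local model. The stdlib sketch below builds that request and only sends it when run as a script; the model name `llama3.1` and the `get_weather` tool schema are placeholders, and whether a model actually emits tool calls depends on the model.

```python
import json
import urllib.request
from typing import Any, Dict, List, Optional

OLLAMA_URL = "http://localhost:11434/v1/chat/completions"  # Ollama's default port

WEATHER_TOOL: Dict[str, Any] = {
    "type": "function",
    "function": {
        "name": "get_weather",  # placeholder tool
        "description": "Get the current weather for a city",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}


def build_request(prompt: str, model: str = "llama3.1",
                  tools: Optional[List[Dict[str, Any]]] = None) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat-completions request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "tools": tools if tools is not None else [WEATHER_TOOL],
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # Requires a running Ollama server with the model already pulled
    req = build_request("What's the weather in Christchurch?")
    with urllib.request.urlopen(req) as resp:
        body = json.load(resp)
    # Tool calls, if any, appear under choices[0].message.tool_calls
    print(body["choices"][0]["message"])
```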

A deeper dive into each option can be summarised with the following table:

Of these options, LangChain was eliminated first due to many negative reviews on its poor developer experience. Reading AutoGen documentation revealed a "loose" inter-agent control, relying on user proxy and terminating on 'keywords' from model response. This does not inspire confidence, and thus the framework was not explored further.

PydanticAI stood out with its type-safe design. Smolagents stood out with its 'code agent' design, where the model utilises given tools by generating Python code instead of alternating between model output and tool calls. As such, these two frameworks were set up and explored further.
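The difference between the two styles is easiest to see side by side. A JSON tool-calling agent emits one structured call per step, while a code agent emits a short Python snippet that can call several tools, and combine their results, in a single step. The sketch below executes a hard-coded "model output" snippet against a dictionary of allowed tools; the tools and the snippet are hypothetical, and running model-written code with plain `exec` like this is not a sandbox (smolagents provides its own restricted executor and remote sandbox options for that purpose).

```python
from typing import Any, Callable, Dict


def search_calendar(day: str) -> list:
    """Hypothetical tool returning a day's events."""
    return ["09:00 standup", "14:00 design review"] if day == "monday" else []


def count_items(items: list) -> int:
    return len(items)


TOOLS: Dict[str, Callable[..., Any]] = {
    "search_calendar": search_calendar,
    "count_items": count_items,
}

# What a code agent's model might emit in ONE step; a JSON tool-calling
# agent would need two round trips (search, then count) for the same result.
MODEL_CODE = """
events = search_calendar("monday")
result = f"{count_items(events)} events: " + ", ".join(events)
"""


def run_code_action(code: str, tools: Dict[str, Callable[..., Any]]) -> Any:
    """Execute model-written code with only the given tools in scope.

    NOTE: plain exec is NOT a security boundary; use a real sandbox in practice.
    """
    namespace: Dict[str, Any] = dict(tools)
    exec(code, {"__builtins__": {}}, namespace)
    return namespace.get("result")


if __name__ == "__main__":
    print(run_code_action(MODEL_CODE, TOOLS))
```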

This concluded this week's work: identifying the gap in existing AI products and exploring technical tooling for further development of the experiment.

Reflection on Action

Identifying a meaningful gap before starting the experiment felt like an essential step. With the field so filled with half-baked chatbots in the Q&A section, diving straight into building without a clear direction would have risked creating another redundant product.

Spending a significant amount of time upfront to define the problem space, particularly the distinction between task-oriented AI tools and emotional companion bots, helped me anchor the project in a space that felt both personally meaningful and provided a set of unique technical constraints to work around.

However, this process was time-intensive. I wonder if investing so heavily in researching available technologies and alternatives, without encountering the practical roadblocks of building, could lead to over-optimisation for problems that might never materialise. There is also a risk of getting too attached to assumptions formed during research rather than letting real experimentation guide decisions. At the same time, running into technical issues like function calling and local deployment could prove even more time-consuming if encountered.

Ultimately, I will likely need to recalibrate the balance between research and action as the experiment progresses.

Theory

Since my work this week has been primarily focused on research, most of the relevant sources are already linked in the post above.

For smolagents, Hugging Face provided a peer-reviewed paper claiming code agents have superior performance: [2411.01747] DynaSaur: Large Language Agents Beyond Predefined Actions.

PydanticAI explained their type-checking behaviour in more detail in Agents - PydanticAI.

These provided context for my reasoning stated in previous sections and consolidated my decision to explore these two frameworks further.

Preparation

Having confirmed the frameworks to explore, the following courses of action are easy to plan:

Research lightweight models that can realistically run on the stated hardware.

Explore the behaviours of these small models within the two chosen frameworks.

Experiment with function calling.


Text

A while back, I wanted to go deep into AI agents—how they work, how they make decisions, and how to build them. But every good resource seemed locked behind a paywall.

Then, I found a goldmine of free courses.

No fluff. No sales pitch. Just pure knowledge from the best in the game. Here’s what helped me (and might help you too):

1️⃣ HuggingFace – Covers theory, design, and hands-on practice with AI agent libraries like smolagents, LlamaIndex, and LangGraph.

2️⃣ LangGraph – Teaches AI agent debugging, multi-step reasoning, and search capabilities—straight from the experts at LangChain and Tavily.

3️⃣ LangChain – Focuses on LCEL (LangChain Expression Language) to build custom AI workflows faster.

4️⃣ crewAI – Shows how to create teams of AI agents that work together on complex tasks. Think of it as AI teamwork at scale.

5️⃣ Microsoft & Penn State – Introduces AutoGen, a framework for designing AI agents with roles, tools, and planning strategies.

6️⃣ Microsoft AI Agents Course – A 10-lesson deep dive into agent fundamentals, available in multiple languages.

7️⃣ Google’s AI Agents Course – Teaches multi-modal AI, API integrations, and real-world deployment using Gemini 1.5, Firebase, and Vertex AI.

If you’ve ever wanted to build AI agents that actually work in the real world, this list is all you need to start. No excuses. Just free learning.

Which of these courses are you diving into first? Let’s talk!

#ai#cizotechnology#innovation#mobileappdevelopment#appdevelopment#ios#techinnovation#app developers#iosapp#mobileapps#AI#MachineLearning#FreeLearning


Text

AI Agent Development: A Complete Guide to Building Intelligent Autonomous Systems in 2025

In 2025, the world of artificial intelligence (AI) is no longer just about static algorithms or rule-based automation. The era of intelligent autonomous systems—AI agents that can perceive, reason, and act independently—is here. From virtual assistants that manage projects to AI agents that automate customer support, sales, and even coding, the possibilities are expanding at lightning speed.

This guide will walk you through everything you need to know about AI agent development in 2025—what it is, why it matters, how it works, and how to build intelligent, goal-driven agents that can drive real-world results for your business or project.

What Is an AI Agent?

An AI agent is a software entity capable of autonomous decision-making and action based on input from its environment. These agents can:

Perceive surroundings (input)

Analyze context using data and memory

Make decisions based on goals or rules

Execute tasks or respond intelligently

The key feature that sets AI agents apart from traditional automation is their autonomy—they don’t just follow a script; they reason, adapt, and even collaborate with humans or other agents.

Why AI Agents Matter in 2025

The rise of AI agents is being driven by major technological and business trends:

LLMs (Large Language Models) like GPT-4 and Claude now provide reasoning, summarization, and planning skills.

Multi-agent systems allow task delegation across specialized agents.

RAG (Retrieval-Augmented Generation) enhances agents with real-time, context-aware responses.

No-code/low-code tools make building agents more accessible.

Enterprise use cases are exploding in sectors like healthcare, finance, HR, logistics, and more.

📊 According to Gartner, by 2025, 80% of businesses will use AI agents in some form to enhance decision-making and productivity.

Core Components of an Intelligent AI Agent

To build a powerful AI agent, you need to architect it with the following components:

1. Perception (Input Layer)

This is how the agent collects data—text, voice, API input, or sensor data.

2. Memory and Context

Agents need persistent memory to reference prior interactions, goals, and environment state. Vector databases, Redis, and LangChain memory modules are popular choices.
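As a rough illustration of what a memory module does, the sketch below stores past snippets and retrieves the most relevant ones for a new query. It uses bag-of-words cosine similarity from the standard library as a stand-in for the learned embeddings a vector database would use; the `MemoryStore` class is hypothetical and not the API of any tool named above.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def _vectorize(text: str) -> Counter:
    """Bag-of-words term counts (stand-in for an embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class MemoryStore:
    def __init__(self) -> None:
        self._items: List[Tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        self._items.append((text, _vectorize(text)))

    def search(self, query: str, k: int = 2) -> List[str]:
        """Return up to k stored texts with nonzero similarity, best first."""
        q = _vectorize(query)
        scored = sorted(self._items, key=lambda item: _cosine(q, item[1]), reverse=True)
        return [text for text, vec in scored[:k] if _cosine(q, vec) > 0]


if __name__ == "__main__":
    memory = MemoryStore()
    memory.add("User prefers meetings after 10am")
    memory.add("User's favourite language is Python")
    memory.add("Project deadline is Friday")
    print(memory.search("When should I schedule meetings?", k=1))
```

Retrieved snippets would then be inserted into the model's prompt, which is how earlier interactions inform the agent's next decision.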

3. Reasoning Engine

This is where LLMs come in—models like GPT-4, Claude, or Gemini help agents analyze data, make decisions, and solve problems.

4. Planning and Execution

Agents break down complex tasks into sub-tasks using tools like:

LangGraph for workflows

Auto-GPT / BabyAGI for autonomous loops

Function calling / Tool use for real-world interaction
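The planning/execution split can be sketched as a plan-and-execute loop: a planner turns a goal into an ordered list of sub-tasks, and an executor runs each one with a matching tool, passing earlier results forward. In a real agent the planner would be an LLM call; here `plan` is a hard-coded stand-in and the email tools are hypothetical, so the sketch shows only the control flow.

```python
from typing import Any, Callable, Dict, List, Tuple

Task = Tuple[str, str]  # (tool name, description)


def plan(goal: str) -> List[Task]:
    """Stand-in planner; an LLM would normally produce this list from the goal."""
    return [
        ("read_inbox", "fetch unread customer emails"),
        ("extract_requests", "find emails asking for a meeting"),
        ("book_meetings", "create calendar entries"),
    ]


# Hypothetical tools; each receives the previous step's output
def read_inbox(_: Any) -> List[str]:
    return ["Can we meet Tuesday?", "Invoice attached", "Meeting Thursday 3pm?"]


def extract_requests(emails: List[str]) -> List[str]:
    return [e for e in emails if "meet" in e.lower()]


def book_meetings(requests: List[str]) -> List[str]:
    return [f"booked: {r}" for r in requests]


TOOLS: Dict[str, Callable[[Any], Any]] = {
    "read_inbox": read_inbox,
    "extract_requests": extract_requests,
    "book_meetings": book_meetings,
}


def execute(goal: str) -> Tuple[Any, List[str]]:
    """Run each planned sub-task in order, threading results through."""
    result: Any = None
    log: List[str] = []
    for tool_name, description in plan(goal):
        result = TOOLS[tool_name](result)
        log.append(f"{tool_name}: {description}")
    return result, log


if __name__ == "__main__":
    result, log = execute("Book meetings from customer emails")
    print("\n".join(log))
    print(result)
```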

5. Tools and Integrations

Agents often rely on external tools to act:

CRM systems (HubSpot, Salesforce)

Code execution (Python interpreters)

Browsers, email clients, APIs, and more

6. Feedback and Learning

Advanced agents can improve their performance over time using reinforcement learning, including reinforcement learning from human feedback (RLHF).

Tools and Frameworks to Build AI Agents

As of 2025, these tools and frameworks are leading the way:

LangChain: For chaining LLM operations and memory integration.

AutoGen by Microsoft: Supports collaborative multi-agent systems.

CrewAI: Focuses on structured agent collaboration.

OpenAgents: Open-source ecosystem for agent simulation.

Haystack, LlamaIndex, Weaviate: RAG and semantic search capabilities.

You can combine these with platforms like OpenAI, Anthropic, Google, or Mistral models based on your performance and budget requirements.

Step-by-Step Guide to AI Agent Development in 2025

Let’s break down the process of building a functional AI agent:

Step 1: Define the Agent’s Goal

What should the agent accomplish? Be specific. For example:

“Book meetings from customer emails”

“Generate product descriptions from images”

Step 2: Choose the Right LLM

Select a model based on needs:

GPT-4 or Claude for general intelligence

Gemini for multi-modal input

Local models (like Mistral or Llama 3) for privacy-sensitive use

Step 3: Add Tools and APIs

Enable the agent to act using:

Function calling / tool use

Plugin integrations

Web search, databases, messaging tools, etc.

Step 4: Build Reasoning + Memory Pipeline

Use LangChain, LangGraph, or AutoGen to:

Store memory

Chain reasoning steps

Handle retries, summarizations, etc.
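Retries are a common piece of this pipeline because LLM and tool calls can fail transiently (timeouts, rate limits). A minimal, framework-independent sketch of retrying with exponential backoff is shown below; the delays, attempt count and the `flaky_llm_call` stub are illustrative assumptions. LangChain runnables also offer a built-in `.with_retry()` that serves the same purpose.

```python
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def with_backoff(fn: Callable[[], T], attempts: int = 3, base_delay: float = 0.5,
                 retry_on: Tuple[Type[BaseException], ...] = (TimeoutError, ConnectionError),
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Call fn, retrying transient errors with delays base, 2*base, 4*base, ..."""
    if attempts < 1:
        raise ValueError("attempts must be >= 1")
    for attempt in range(attempts):
        try:
            return fn()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error
            sleep(base_delay * (2 ** attempt))
    raise AssertionError("unreachable")


if __name__ == "__main__":
    calls = {"n": 0}

    def flaky_llm_call() -> str:
        """Hypothetical LLM call that times out twice, then succeeds."""
        calls["n"] += 1
        if calls["n"] < 3:
            raise TimeoutError("model timed out")
        return "summary ready"

    print(with_backoff(flaky_llm_call, sleep=lambda s: print(f"retrying in {s}s")))
```

Non-transient errors (here, anything outside `retry_on`) propagate immediately, so a malformed request is not retried pointlessly.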

Step 5: Test in a Controlled Sandbox

Run simulations before live deployment. Analyze how the agent handles edge cases, errors, and decision-making.

Step 6: Deploy and Monitor

Use tools like LangSmith or Weights & Biases for agent observability. Continuously improve the agent based on user feedback.

Key Challenges in AI Agent Development

While AI agents offer massive potential, they also come with risks:

Hallucinations: LLMs may generate false outputs.

Security: Tool use can be exploited if not sandboxed.

Autonomy Control: Balancing autonomy vs. user control is tricky.

Cost and Latency: LLM queries and tool usage may get expensive.

Mitigation strategies include:

Grounding responses using RAG

Setting execution boundaries

Rate-limiting and cost monitoring
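Execution boundaries and cost monitoring can be combined in a small guard object that every tool call passes through: it enforces an allowlist of tools, a maximum number of steps, and a spending cap (true rate limiting would need a time-based limiter on top). The sketch below is illustrative only; the tool names, per-call costs and limits are made-up assumptions, not figures from any provider.

```python
from dataclasses import dataclass
from typing import Set


class BudgetExceeded(RuntimeError):
    pass


@dataclass
class AgentGuard:
    allowed_tools: Set[str]
    max_steps: int = 10
    max_cost_usd: float = 1.00
    steps: int = 0
    spent_usd: float = 0.0

    def check(self, tool: str, est_cost_usd: float) -> None:
        """Raise before a call that would break a boundary; otherwise record it."""
        if tool not in self.allowed_tools:
            raise PermissionError(f"tool not allowed: {tool}")
        if self.steps + 1 > self.max_steps:
            raise BudgetExceeded("step limit reached")
        if self.spent_usd + est_cost_usd > self.max_cost_usd:
            raise BudgetExceeded(f"cost cap of ${self.max_cost_usd:.2f} would be exceeded")
        self.steps += 1
        self.spent_usd += est_cost_usd


if __name__ == "__main__":
    guard = AgentGuard(allowed_tools={"web_search", "llm"}, max_steps=5, max_cost_usd=0.10)
    guard.check("llm", 0.03)
    guard.check("web_search", 0.01)
    print(guard.steps, round(guard.spent_usd, 2))
    try:
        guard.check("delete_files", 0.0)
    except PermissionError as exc:
        print("blocked:", exc)
```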

AI Agent Use Cases Across Industries

Here’s how businesses are using AI agents in 2025:

🏥 Healthcare

Symptom triage agents

Medical document summarizers

Virtual health assistants

💼 HR & Recruitment

Resume shortlisting agents

Onboarding automation

Employee Q&A bots

📊 Finance

Financial report analysis

Portfolio recommendation agents

Compliance document review

🛒 E-commerce

Personalized shopping assistants

Dynamic pricing agents

Product categorization bots

📧 Customer Support

AI service desk agents

Multi-lingual chat assistants

Voice agents for call centers

What’s Next for AI Agents in 2025 and Beyond?

Expect rapid evolution in these areas:

Agentic operating systems (Autonomous workplace copilots)

Multi-modal agents (Image, voice, video + text)

Agent marketplaces (Buy and sell pre-trained agents)

On-device agents (Running LLMs locally for privacy)

We’re moving toward a future where every individual and organization may have their own personalized AI team—a set of agents working behind the scenes to get things done.

Final Thoughts

AI agent development in 2025 is not just a trend—it’s a paradigm shift. Whether you’re building for productivity, innovation, or scale, AI agents are unlocking a new level of intelligence and autonomy.

With the right tools, frameworks, and understanding, you can start creating your own intelligent systems today and stay ahead in the AI-driven future.


Text

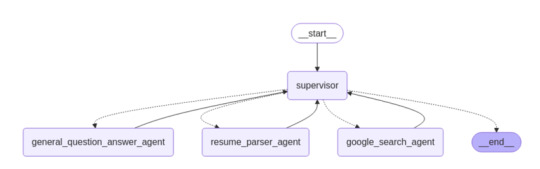

Building Multi-Agent Systems with LangGraph-Supervisor

In today’s rapidly evolving AI landscape, creating sophisticated agent systems that collaborate effectively remains a significant challenge. The LangChain team has addressed this need with the release of two powerful new Python libraries: langgraph-supervisor and langgraph-swarm. This post explores how langgraph-supervisor enables developers to build complex multi-agent systems with hierarchical…
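In the supervisor pattern, one coordinating agent receives each request, hands it to a specialised worker, and collects the result. The stdlib sketch below shows only that routing shape; the keyword-based `supervisor` is a stand-in for the LLM that would normally make the choice, the workers are hypothetical, and none of this is the langgraph-supervisor API.

```python
from typing import Callable, Dict, List, Tuple

Worker = Callable[[str], str]


# Hypothetical specialised worker agents
def math_agent(task: str) -> str:
    nums = [int(tok) for tok in task.split() if tok.isdigit()]
    return f"sum={sum(nums)}"


def research_agent(task: str) -> str:
    return f"notes on: {task}"


WORKERS: Dict[str, Worker] = {"math": math_agent, "research": research_agent}


def supervisor(task: str) -> str:
    """Stand-in for the supervising LLM: choose which worker gets the task."""
    return "math" if any(tok.isdigit() for tok in task.split()) else "research"


def handle(tasks: List[str]) -> List[Tuple[str, str]]:
    """Route each task through the supervisor and collect (worker, result)."""
    results = []
    for task in tasks:
        name = supervisor(task)
        results.append((name, WORKERS[name](task)))
    return results


if __name__ == "__main__":
    for worker, output in handle(["add 2 and 40", "history of LangGraph"]):
        print(worker, "->", output)
```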

