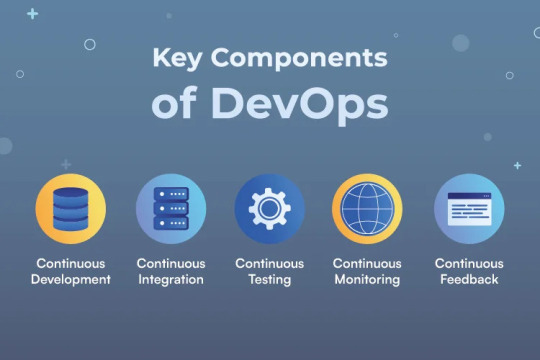

#components of devops lifecycle

The DevOps lifecycle is a methodology that software development teams use to bring products to market faster and more efficiently.

What Is Argo CD, and When Was It Established?

What Is Argo CD?

Argo CD is a declarative, GitOps continuous delivery tool for Kubernetes.

Within DevOps, Argo CD is a continuous delivery (CD) tool that has become popular for deploying applications to Kubernetes. It is based on the GitOps deployment methodology.

When Was Argo CD Established?

The Argo project was open-sourced in 2017 by Applatix founders Hong Wang, Jesse Suen, and Alexander Matyushentsev. Intuit acquired Applatix in 2018, and Argo CD was created at Intuit and made publicly available following that acquisition.

Why Argo CD?

Application definitions, configurations, and environments should be declarative and version controlled. Application deployment and lifecycle management should be automated, auditable, and easy to understand.

Getting Started

Quick Start

kubectl create namespace argocd
kubectl apply -n argocd -f https://raw.githubusercontent.com/argoproj/argo-cd/stable/manifests/install.yaml

Additional user-oriented documentation covers further features. To upgrade an existing Argo CD installation, see the upgrade guide. Developer-oriented documentation is available for anyone interested in building third-party integrations.

How it works

Following the GitOps pattern, Argo CD uses Git repositories as the source of truth for defining the desired application state. Kubernetes manifests can be specified in several ways:

Kustomize applications

Helm charts

Jsonnet files

A plain directory of YAML/JSON manifests

Any custom configuration management tool configured as a plugin

Argo CD automates the deployment of the desired application states to the specified target environments. Application deployments can track updates to branches or tags, or be pinned to a specific version of the manifests at a Git commit.

Architecture

Argo CD is implemented as a Kubernetes controller that continuously monitors running applications and compares their current, live state against the desired target state (as defined in the Git repository). A deployed application whose live state deviates from the target state is considered OutOfSync. Argo CD reports and visualizes the differences, and can sync the live state back to the desired target state either automatically or manually. Any changes made to the desired target state in the Git repository can be automatically applied and reflected in the designated target environments.
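The compare-and-sync loop described above can be sketched in Python. This is a minimal illustration, not Argo CD's implementation: the `App` class and the `diff` and `reconcile` functions are hypothetical, and each "state" is simplified to a dictionary mapping a resource name to its manifest. For brevity, syncing here also removes resources that are no longer in Git; Argo CD itself only prunes such resources when pruning is enabled.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Tuple


@dataclass
class App:
    """Hypothetical, simplified application: resource name -> manifest dict."""
    name: str
    desired: Dict[str, Any]                             # target state rendered from Git
    live: Dict[str, Any] = field(default_factory=dict)  # state currently in the cluster
    auto_sync: bool = False


def diff(desired: Dict[str, Any], live: Dict[str, Any]) -> Dict[str, Tuple[Any, Any]]:
    """Return {resource: (desired, live)} for every resource that differs."""
    changes = {}
    for name in desired.keys() | live.keys():
        if desired.get(name) != live.get(name):
            changes[name] = (desired.get(name), live.get(name))
    return changes


def reconcile(app: App) -> str:
    """One pass of the loop: compare, then sync only if auto-sync is enabled."""
    if not diff(app.desired, app.live):
        return "Synced"
    if app.auto_sync:
        # Replace the live state with the desired state. Unlike Argo CD's
        # default behavior, this also drops resources no longer in Git.
        app.live = dict(app.desired)
        return "Synced"
    return "OutOfSync"
```

Calling `reconcile` on a schedule (or when a Git webhook fires) mirrors the controller's behavior: with `auto_sync` off, drift is only reported, and an operator decides when to sync.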

Components

API Server

The API server is a gRPC/REST server that exposes the API consumed by the Web UI, CLI, and CI/CD systems. Its responsibilities include:

Status reporting and application management

Invoking application operations (such as sync, rollback, and user-defined actions)

Repository and cluster credential management (stored as Kubernetes Secrets)

RBAC enforcement

Authentication and auth delegation to external identity providers

Git webhook event listener/forwarder
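RBAC enforcement can be illustrated with a simplified policy check. Argo CD's RBAC policies are written as CSV lines such as `p, role:readonly, applications, get, */*, allow` (subject, resource, action, object, effect) and `g, alice, role:readonly` (role assignment). The sketch below parses lines in that shape and evaluates them with glob matching. It is a hypothetical approximation, not Argo CD's Casbin-based enforcer: it resolves only one level of role assignment, and it assumes that an explicit `deny` overrides any matching `allow`.

```python
from fnmatch import fnmatchcase
from typing import Dict, List, Set, Tuple

Rule = Tuple[str, ...]  # (subject, resource, action, object, effect)


def parse_policy(csv_text: str) -> Tuple[List[Rule], Dict[str, Set[str]]]:
    """Split policy lines into permission rules (p) and role assignments (g)."""
    rules: List[Rule] = []
    groups: Dict[str, Set[str]] = {}
    for line in csv_text.splitlines():
        parts = [p.strip() for p in line.split(",")]
        if parts[0] == "p" and len(parts) == 6:
            rules.append(tuple(parts[1:6]))
        elif parts[0] == "g" and len(parts) == 3:
            groups.setdefault(parts[1], set()).add(parts[2])
    return rules, groups


def enforce(rules: List[Rule], groups: Dict[str, Set[str]],
            subject: str, resource: str, action: str, obj: str) -> bool:
    """Allow if some rule allows the request and no matching rule denies it."""
    subjects = {subject} | groups.get(subject, set())
    allowed = False
    for sub, res, act, pattern, effect in rules:
        if (sub in subjects and res == resource
                and fnmatchcase(action, act) and fnmatchcase(obj, pattern)):
            if effect == "deny":
                return False  # assumption: deny always wins
            allowed = allowed or effect == "allow"
    return allowed
```

Requests are denied by default: a user with no matching rule, or with a matching `deny`, cannot perform the action.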

Repository Server

The repository server is an internal service that maintains a local cache of the Git repository holding the application manifests. It is responsible for generating and returning the Kubernetes manifests when given the following inputs:

URL of the repository

Revision (tag, branch, commit)

Path of the application

Template-specific settings: parameters and Helm values.yaml
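Because the repository server's output depends only on these inputs, rendered manifests can be cached on exactly those inputs. The sketch below is hypothetical: `ManifestCache` and the `render` callback stand in for the real Git checkout and Helm/Kustomize rendering. It assumes `revision` has already been resolved to a commit SHA, because caching on a moving branch name such as `main` would keep serving stale manifests after new commits.

```python
import hashlib
import json
from typing import Any, Callable, Dict, List, Optional

# render(repo_url, revision, path, params) -> list of manifest dicts
Renderer = Callable[[str, str, str, Dict[str, Any]], List[Dict[str, Any]]]


class ManifestCache:
    """Hypothetical cache of rendered manifests keyed by the server's inputs."""

    def __init__(self, render: Renderer):
        self._render = render
        self._cache: Dict[str, List[Dict[str, Any]]] = {}
        self.renders = 0  # how many times rendering actually ran

    @staticmethod
    def key(repo_url: str, revision: str, path: str, params: Dict[str, Any]) -> str:
        # sort_keys makes the key independent of parameter ordering.
        payload = json.dumps([repo_url, revision, path, params], sort_keys=True)
        return hashlib.sha256(payload.encode()).hexdigest()

    def get(self, repo_url: str, revision: str, path: str,
            params: Optional[Dict[str, Any]] = None) -> List[Dict[str, Any]]:
        params = params or {}
        k = self.key(repo_url, revision, path, params)
        if k not in self._cache:
            self._cache[k] = self._render(repo_url, revision, path, params)
            self.renders += 1
        return self._cache[k]
```

A second request for the same commit, path, and parameters is served from memory; a new commit SHA produces a new key and triggers a fresh render.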

Application Controller

The application controller is a Kubernetes controller that continuously monitors running applications and compares their current, live state against the desired target state (as defined in the repository). When it detects an OutOfSync application state, it can take corrective action. It is also responsible for invoking any user-defined hooks for lifecycle events (PreSync, Sync, and PostSync).
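The lifecycle hook ordering (PreSync, then Sync, then PostSync) can be sketched as follows. This is a simplified, hypothetical model: hooks are plain Python callables that return `True` on success, manifests are applied during the Sync phase after that phase's hooks, and the first failing hook stops the rollout so later phases never run. Argo CD also supports other hook types, such as SyncFail, that this sketch omits.

```python
from typing import Callable, Dict, List

# Lifecycle phases in the order they run.
PHASES = ("PreSync", "Sync", "PostSync")


def run_sync(hooks: Dict[str, List[Callable[[], bool]]],
             apply_manifests: Callable[[], None]) -> List[str]:
    """Run hooks phase by phase and return a log of what happened.

    Stops at the first failing hook, so a failed PreSync hook (for example,
    a database migration) prevents the manifests from being applied.
    """
    log: List[str] = []
    for phase in PHASES:
        for hook in hooks.get(phase, []):
            ok = hook()
            log.append(f"{phase}:{hook.__name__}:{'ok' if ok else 'failed'}")
            if not ok:
                return log
        if phase == "Sync":
            # Apply the rendered manifests once the Sync-phase hooks succeed.
            apply_manifests()
            log.append("Sync:apply")
    return log
```

In a canary or blue/green rollout, a PostSync hook is a natural place for a smoke test, since it only runs after the new manifests have been applied.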

Features

Automated deployment of applications to specified target environments

Support for multiple configuration management/templating tools (Kustomize, Helm, Jsonnet, and plain YAML)

Ability to manage and deploy to multiple clusters

SSO integration (OIDC, OAuth2, LDAP, SAML 2.0, Microsoft, LinkedIn, GitHub, GitLab)

Multi-tenancy and RBAC policies for authorization

Rollback/roll-anywhere to any application configuration committed in the Git repository

Health status analysis of application resources

Automated configuration drift detection and visualization

Automated or manual syncing of applications to their desired state

Web UI that provides a real-time view of application activity

CLI for automation and CI integration

Webhook integration (GitHub, Bitbucket, GitLab)

Access tokens for automation

PreSync, Sync, and PostSync hooks to support complex application rollouts (such as blue/green and canary upgrades)

Audit trails for application events and API calls

Prometheus metrics

Parameter overrides for overriding Helm parameters in Git
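Helm parameter overrides work by layering individual values on top of a chart's values file. The function below is a minimal sketch of that layering, using dotted paths in the style of Helm's `--set image.tag=...`. `apply_overrides` is a hypothetical helper, and it ignores Helm features such as list indices and escaped dots.

```python
import copy
from typing import Any, Dict


def apply_overrides(values: Dict[str, Any], overrides: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of `values` with dotted-path overrides applied.

    Example override key: "image.tag" sets values["image"]["tag"].
    Missing intermediate maps are created; the input is never mutated.
    """
    result = copy.deepcopy(values)
    for dotted, value in overrides.items():
        node = result
        *parents, leaf = dotted.split(".")
        for key in parents:
            node = node.setdefault(key, {})
        node[leaf] = value
    return result
```

Keeping the base values in Git and recording only the overrides makes it clear which settings differ per environment.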

Read more on Govindhtech.com

Azure DevOps Training

Azure DevOps Training Programs

In today's rapidly evolving tech landscape, mastering Azure DevOps has become indispensable for organizations aiming to streamline their software development and delivery processes. As businesses increasingly migrate their operations to the cloud, the demand for skilled professionals proficient in Azure DevOps continues to soar. In this comprehensive guide, we'll delve into the significance of Azure DevOps training and explore the myriad benefits it offers to both individuals and enterprises.

Understanding Azure DevOps:

Before we delve into the realm of Azure DevOps training, let's first grasp the essence of Azure DevOps itself. Azure DevOps is a robust suite of tools offered by Microsoft Azure that facilitates collaboration, automation, and orchestration across the entire software development lifecycle. From planning and coding to building, testing, and deployment, Azure DevOps provides a unified platform for managing and executing diverse DevOps tasks seamlessly.

Why Azure DevOps Training Matters:

As Azure DevOps has become a widely used platform for modern DevOps practices, proficiency in it has become valuable for IT professionals seeking to stay ahead of the curve. Azure DevOps training equips individuals with the knowledge and skills needed to use Microsoft's suite of tools effectively. Whether you're a developer, IT administrator, or project manager, Azure DevOps training can significantly enhance your career prospects and empower you to drive innovation within your organization.

Key Components of Azure DevOps Training Programs:

Azure DevOps training programs are meticulously designed to cover a wide array of topics essential for mastering the intricacies of Azure DevOps. From basic concepts to advanced techniques, these programs encompass the following key components:

Azure DevOps Fundamentals: An in-depth introduction to Azure DevOps, including its core features, functionalities, and architecture.

Agile Methodologies: Understanding Agile principles and practices, and how they align with Azure DevOps for efficient project management and delivery.

Continuous Integration (CI): Learning to automate the process of integrating code changes into a shared repository, thereby enabling early detection of defects and ensuring software quality.

Continuous Deployment (CD): Exploring the principles of continuous deployment and mastering techniques for automating the deployment of applications to production environments.

Azure Pipelines: Harnessing the power of Azure Pipelines for building, testing, and deploying code across diverse platforms and environments.

Infrastructure as Code (IaC): Leveraging Infrastructure as Code principles to automate the provisioning and management of cloud resources using tools like Azure Resource Manager (ARM) templates.

Monitoring and Logging: Implementing robust monitoring and logging solutions to gain insights into application performance and troubleshoot issues effectively.

Security and Compliance: Understanding best practices for ensuring the security and compliance of Azure DevOps environments, including identity and access management, data protection, and regulatory compliance.

The Benefits of Azure DevOps Certification:

Obtaining an Azure DevOps certification not only validates your expertise in Azure DevOps but also demonstrates your commitment to continuous learning and professional development. Microsoft's DevOps certifications are widely recognized and can open doors to career opportunities in domains such as cloud computing, software development, and DevOps engineering.

Conclusion:

In conclusion, Azure DevOps training is indispensable for IT professionals looking to enhance their skills and stay relevant in today's dynamic tech landscape. By undergoing comprehensive Azure DevOps training programs and obtaining relevant certifications, individuals can unlock a world of opportunities and propel their careers to new heights. Whether you're aiming to streamline your organization's software delivery processes or embark on a rewarding career journey, mastering Azure DevOps is undoubtedly a game-changer. So why wait? Start your Azure DevOps training journey today and pave the way for a brighter tomorrow.

Why DevOps Training Matters: A Deep Dive into the Benefits

In the ever-evolving landscape of software development and IT operations, DevOps has emerged as a transformative approach that promises to revolutionize the way organizations build, deploy, and manage software. However, embracing DevOps is not just about adopting a set of tools and practices; it's about fostering a culture of collaboration, automation, and continuous improvement. DevOps training is the linchpin that empowers professionals and organizations to unlock the full potential of this methodology.

In this comprehensive guide, we will delve into the myriad benefits of DevOps training, explore why it is crucial in today's tech-driven world, and highlight the role of ACTE Technologies in providing top-tier DevOps training programs.

Why is DevOps Training Crucial?

Before we dive into the specific advantages of DevOps training, it's essential to understand why training in this field is so pivotal:

1. A Paradigm Shift: DevOps represents a paradigm shift in software development and IT operations. It demands a new way of thinking, collaborating, and working. DevOps training equips professionals with the knowledge and skills needed to navigate this transformation successfully.

2. Evolving Skill Set: DevOps requires a diverse skill set that spans development, operations, automation, and collaboration. Training ensures that individuals are well-rounded in these areas, making them valuable assets to their organizations.

3. Continuous Learning: DevOps is not a one-time implementation; it's an ongoing journey of continuous improvement. DevOps training instills a mindset of continual learning and adaptation, ensuring that professionals stay relevant in a rapidly changing tech landscape.

The Key Benefits of DevOps Training:

Now that we've established the importance of DevOps training, let's explore its key benefits:

1. Improved Collaboration:

The main goal of DevOps is to eliminate silos between the development and operations teams. It fosters collaboration and communication throughout the software development lifecycle. DevOps training teaches professionals how to facilitate seamless interaction between these traditionally separate groups, resulting in faster issue resolution and enhanced efficiency.

2. Continuous Integration and Deployment (CI/CD):

One of the cornerstones of DevOps is the implementation of CI/CD pipelines. These pipelines automate code integration, testing, and deployment processes. DevOps training equips practitioners with the skills to design and manage CI/CD pipelines, leading to quicker releases, reduced errors, and improved software quality.

3. Automation Skills:

Automation is a fundamental aspect of DevOps. It streamlines repetitive tasks, reduces manual errors, and accelerates processes. DevOps training provides hands-on experience with automation tools and practices, enabling professionals to automate tasks such as infrastructure provisioning, configuration management, and testing.

4. Enhanced Problem-Solving:

DevOps encourages proactive problem-solving. Through real-time monitoring and alerting, professionals can identify and resolve issues swiftly, minimizing downtime and ensuring a seamless user experience. DevOps training imparts essential monitoring and troubleshooting skills.

5. Scalability:

As organizations grow, their software and infrastructure must scale to accommodate increased demand. DevOps training teaches professionals how to design and implement scalable solutions that can adapt to changing workloads and requirements.

6. Security:

Security is an integral part of DevOps, with "DevSecOps" practices being widely adopted. DevOps training emphasizes the importance of security measures throughout the development process, ensuring that security is not an afterthought but an integral component of every stage.

7. Cost Efficiency:

By automating processes and optimizing resource utilization, DevOps can lead to significant cost savings. DevOps training helps professionals identify cost-saving opportunities within their organizations, making them valuable assets in cost-conscious environments.

8. Career Advancement:

Professionals with DevOps skills are in high demand. DevOps training can open doors to better job opportunities, career growth, and higher salaries. It's a strategic investment in your career advancement.

In a tech landscape where agility, efficiency, and collaboration are paramount, DevOps training is the key to unlocking your potential as a DevOps professional. The benefits are undeniable, ranging from improved collaboration and problem-solving to career advancement and cost efficiency.

If you're considering pursuing DevOps training, ACTE Technologies can be your trusted partner on this transformative journey. Their expert guidance, comprehensive courses, and hands-on learning experiences will not only help you pass certification exams but also excel in your DevOps career.

Don't miss out on the opportunity to master this transformative methodology. Start your DevOps training journey today with ACTE Technologies and pave the way for a successful and fulfilling career in the world of DevOps!

Why DevOps and Microservices Are a Perfect Match for Modern Software Delivery

Today, businesses are adopting scalable, agile software development methods. Two of the most transformative approaches, DevOps and microservices, have gained substantial momentum. Each has its own advantages, but their full potential emerges when they are used together. DevOps brings automation and collaboration, while microservices break complex monolithic apps into manageable services. Together they form a powerful combination that enables faster releases, higher quality, and more scalable systems.

Here's why DevOps and microservices are ideal for modern software delivery:

1. Independent Deployments Align Perfectly with Continuous Delivery

One of the best features of microservices is that each service can be built, tested, and deployed separately. This decoupling lets businesses release features or changes without rebuilding or retesting the entire application. DevOps, with its focus on continuous integration and continuous delivery (CI/CD), thrives in this environment: each microservice can have its own CI/CD pipeline, enabling more frequent and dependable deployments. The result is faster innovation cycles and reduced risk, since smaller changes are easier to manage and roll back if needed.

2. Team Autonomy Enhances Ownership and Accountability

Microservices encourage small, cross-functional teams to take ownership of specialized services from start to finish. This is consistent with the DevOps principle of breaking down the division between development and operations. Teams that receive experienced DevOps consulting services are better equipped to handle the full lifecycle, from development and testing to deployment and monitoring, by implementing best practices and automation tools.

3. Scalability Is Easier to Manage with Automation

Scaling a monolithic application often entails scaling the entire thing, even if only a portion is under demand. Microservices address this by enabling each service to scale independently based on demand. DevOps approaches like infrastructure-as-code (IaC), containerization, and orchestration technologies like Kubernetes make scaling strategies easier to automate. Whether scaling up a payment module during the holiday season or shutting down less-used services overnight, DevOps automation complements microservices by ensuring systems scale efficiently and cost-effectively.
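Per-service scaling is typically driven by a simple ratio. The Kubernetes Horizontal Pod Autoscaler documents its core rule as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, skipping changes that fall within a tolerance (0.1 by default). The sketch below applies that rule to one service at a time. The function name, the min/max bounds, and the example services are illustrative assumptions, and the real autoscaler adds stabilization windows, pod-readiness handling, and multi-metric logic that are omitted here.

```python
import math


def desired_replicas(current: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Replica count for one service, following the HPA's core ratio rule."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    # Ignore small deviations so the service does not flap between sizes.
    if abs(ratio - 1.0) <= tolerance:
        return current
    wanted = math.ceil(current * ratio)
    # Keep the result inside the configured (hypothetical) bounds.
    return max(min_replicas, min(max_replicas, wanted))
```

For example, a payment service running 4 replicas at 90% CPU against a 60% target would be scaled to 6, while an idle catalog service at 15% CPU could shrink from 4 replicas to 1, each decided independently of the other.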

4. Fault Isolation and Faster Recovery with Monitoring

DevOps encourages proactive monitoring, alerting, and issue response, which are critical to the success of distributed microservices systems. Because microservices isolate failures inside specific components, they limit the potential impact of a crash or performance issue. DevOps tools monitor service health, collect logs, and evaluate performance data. This visibility allows for faster detection and resolution of issues, resulting in less downtime and a better user experience.

5. Shorter Development Cycles with Parallel Workflows

Microservices allow teams to work on multiple components in parallel without waiting for each other. Microservices development services help enterprises structure their applications as loosely coupled services. When combined with DevOps, which promotes CI/CD automation and streamlined approvals, teams can ship code changes more quickly and frequently. This parallelism greatly shortens development cycles and improves responsiveness to market demands.

6. Better Fit for Cloud-Native and Containerized Environments

Modern software delivery is increasingly cloud-native, and both microservices and DevOps support this trend. Microservices are typically deployed in containers, which are lightweight, portable, and isolated. DevOps tools automate deployment, scaling, and upgrades. Together, they support smooth delivery pipelines, consistent environments from development to production, and reliable rollbacks when required.

7. Streamlined Testing and Quality Assurance

Microservices allow for more modular testing. Each service may be unit-tested, integration-tested, and load-tested separately, increasing test accuracy and speed. DevOps incorporates test automation into the CI/CD pipeline, guaranteeing that every code push is validated without manual intervention. This collaboration results in greater software quality, faster problem identification, and reduced stress during deployments, especially in large, dynamic systems.

8. Security and Compliance Become More Manageable

Security can be implemented more accurately in a microservices architecture since services are isolated and can be managed by service-level access controls. DevOps incorporates DevSecOps, which involves integrating security checks into the CI/CD pipeline. This means security scans, compliance checks, and vulnerability assessments are performed early and frequently. Microservices and DevOps work together to help enterprises adopt a shift-left security approach. They make securing systems easier while not slowing development.

9. Continuous Improvement with Feedback Loops

DevOps and microservices work best with feedback. DevOps stresses real-time monitoring and feedback loops to continuously improve systems. Microservices make it easy to assess the performance of individual services, find inefficiencies, and improve them. When these feedback loops are integrated into the CI/CD process, teams can act quickly on insights, improving performance, reliability, and user satisfaction.

Conclusion

DevOps and microservices are not only compatible but complementary forces driving the next generation of software delivery. Microservices break complexity into manageable units, and DevOps ensures that those units are efficiently built, tested, deployed, and monitored. The combination enables teams to deliver high-quality software at scale, quickly and confidently. For enterprises seeking to remain competitive and agile in a rapidly changing market, adopting DevOps and microservices together is both helpful and increasingly necessary.

Developing and Deploying AI/ML Applications on Red Hat OpenShift AI (AI268)

As AI and Machine Learning continue to reshape industries, the need for scalable, secure, and efficient platforms to build and deploy these workloads is more critical than ever. That’s where Red Hat OpenShift AI comes in—a powerful solution designed to operationalize AI/ML at scale across hybrid and multicloud environments.

With the AI268 course – Developing and Deploying AI/ML Applications on Red Hat OpenShift AI – developers, data scientists, and IT professionals can learn to build intelligent applications using enterprise-grade tools and MLOps practices on a container-based platform.

🌟 What is Red Hat OpenShift AI?

Red Hat OpenShift AI (formerly Red Hat OpenShift Data Science) is a comprehensive, Kubernetes-native platform tailored for developing, training, testing, and deploying machine learning models in a consistent and governed way. It provides tools like:

Jupyter Notebooks

TensorFlow, PyTorch, Scikit-learn

Apache Spark

KServe & OpenVINO for inference

Pipelines & GitOps for MLOps

The platform ensures seamless collaboration between data scientists, ML engineers, and developers—without the overhead of managing infrastructure.

📘 Course Overview: What You’ll Learn in AI268

AI268 focuses on equipping learners with hands-on skills in designing, developing, and deploying AI/ML workloads on Red Hat OpenShift AI. Here’s a quick snapshot of the course outcomes:

✅ 1. Explore OpenShift AI Components

Understand the ecosystem—JupyterHub, Pipelines, Model Serving, GPU support, and the OperatorHub.

✅ 2. Data Science Workspaces

Set up and manage development environments using Jupyter notebooks integrated with OpenShift’s security and scalability features.

✅ 3. Training and Managing Models

Use libraries like PyTorch or Scikit-learn to train models. Learn to leverage pipelines for versioning and reproducibility.

✅ 4. MLOps Integration

Implement CI/CD for ML using OpenShift Pipelines and GitOps to manage lifecycle workflows across environments.

✅ 5. Model Deployment and Inference

Serve models using tools like KServe, automate inference pipelines, and monitor performance in real time.

🧠 Why Take This Course?

Whether you're a data scientist looking to deploy models into production or a developer aiming to integrate AI into your apps, AI268 bridges the gap between experimentation and scalable delivery. The course is ideal for:

Data Scientists exploring enterprise deployment techniques

DevOps/MLOps Engineers automating AI pipelines

Developers integrating ML models into cloud-native applications

Architects designing AI-first enterprise solutions

🎯 Final Thoughts

AI/ML is no longer confined to research labs—it’s at the core of digital transformation across sectors. With Red Hat OpenShift AI, you get an enterprise-ready MLOps platform that lets you go from notebook to production with confidence.

If you're looking to modernize your AI/ML strategy and unlock true operational value, AI268 is your launchpad.

👉 Ready to build and deploy smarter, faster, and at scale? Join the AI268 course and start your journey into Enterprise AI with Red Hat OpenShift.

For more details www.hawkstack.com

Self-Healing Test Automation: The Future of Reliable Testing with ideyaLabs

Revolutionize Your Quality Assurance with Self-Healing Test Automation

Self-Healing Test Automation stands at the forefront of the software development lifecycle. ideyaLabs brings this transformative approach to organizations that demand efficiency, agility, and reliability. Automated tests often break when the codebase changes, which slows down deployment and increases maintenance workloads. Self-Healing Test Automation resolves these challenges.

What Is Self-Healing Test Automation?

Self-Healing Test Automation uses advanced algorithms to detect and fix test script failures automatically. The system identifies changes in the user interface or back-end structure. When tests fail because elements have changed, the self-healing engine analyzes patterns, locates the new elements, and updates the scripts in real time. Testing teams monitor and validate the results without manual intervention.

How ideyaLabs Implements Self-Healing Test Automation

ideyaLabs tailors self-healing solutions to unique business needs. Automation frameworks integrate seamlessly with existing tools, and machine learning components track the behavior of test scripts. Automated systems ensure that test cases adjust dynamically when application interfaces are updated. Teams enjoy uninterrupted test execution cycles, projects stay on schedule, and defects stay under control.

Benefits of Self-Healing Test Automation with ideyaLabs

Organizations achieve significant time savings. Maintenance costs drop as test scripts self-correct without human intervention. Automation frameworks remain robust, even during rapid software releases. Teams can focus on valuable exploratory testing. Reliable tests accelerate go-to-market strategies, and ideyaLabs clients boost product quality and operational velocity.

Eliminate Manual Test Maintenance

Manual intervention often derails productivity. Self-Healing Test Automation eliminates mundane, repetitive test maintenance tasks. ideyaLabs empowers teams to shift their attention toward innovative work: engineers concentrate on developing new features while the self-healing engine maintains the test scripts.

Continuous Integration with Zero Downtime

Seamless integration with CI/CD pipelines is a key strength of Self-Healing Test Automation. ideyaLabs optimizes pipelines by automating script updates during every build and deployment, so software updates no longer break automated tests. Teams receive instant feedback on application stability.

Machine Learning Enhances Self-Healing Accuracy

Machine learning drives the efficiency of self-healing test scripts. ideyaLabs equips its frameworks with smart algorithms that recognize element locators, assess historical changes, and predict likely replacements. This improved accuracy makes tests resilient to UI and code updates.
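The locator-healing idea can be sketched without any machine learning. The example below swaps in a simple, deterministic technique: when the recorded locator no longer matches, it scores every element on the page by string similarity to a snapshot of the element's last known attributes, and adopts the best match above a threshold. Elements are plain dictionaries rather than browser objects, every element is assumed to carry an `id`, and `find_element`, `similarity`, and the 0.6 threshold are hypothetical choices, not ideyaLabs' implementation.

```python
from difflib import SequenceMatcher
from typing import Dict, List, Tuple

Element = Dict[str, str]  # hypothetical: attribute name -> value, always has "id"


def similarity(candidate: Element, snapshot: Element) -> float:
    """Average string similarity over the attributes recorded in the snapshot."""
    keys = [k for k, v in snapshot.items() if v]
    if not keys:
        return 0.0
    scores = [SequenceMatcher(None, candidate.get(k, ""), snapshot[k]).ratio() for k in keys]
    return sum(scores) / len(scores)


def find_element(page: List[Element], locator: Element, snapshot: Element,
                 threshold: float = 0.6) -> Tuple[Element, Element]:
    """Return (element, locator), healing the locator if the original no longer matches."""
    # 1. Try the recorded locator exactly as written.
    for el in page:
        if all(el.get(k) == v for k, v in locator.items()):
            return el, locator
    # 2. Fall back to the element most similar to the last known snapshot.
    best = max(page, key=lambda el: similarity(el, snapshot), default=None)
    if best is None or similarity(best, snapshot) < threshold:
        raise LookupError(f"no element resembles {snapshot}")
    # 3. "Heal" the locator so later runs find the element directly.
    return best, {"id": best["id"]}
```

When no candidate clears the threshold, the sketch fails loudly instead of guessing, which keeps a genuinely removed element from being silently replaced.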

Accelerate Digital Transformation

Businesses are shifting to digital-first approaches, and rapid updates remain vital in today's marketplaces. Self-Healing Test Automation allows organizations to innovate faster. ideyaLabs helps ensure that software releases meet high quality standards, supporting agile and DevOps methodologies.

Reduced Risk in Production Releases

Poorly maintained test scripts result in hidden bugs and failed deployments. Self-Healing Test Automation reduces these risks. ideyaLabs delivers proactive identification and correction of test script issues, so releases progress smoothly and operational confidence increases across engineering teams.

Scalability for Enterprise Applications

Large enterprise applications feature complex UIs and frequent changes. ideyaLabs deploys scalable self-healing automation frameworks to handle vast test suites. Test maintenance remains minimal regardless of application size or complexity, and QA departments experience less friction as they expand.

Elevate Collaboration Across Teams

Self-healing capabilities make test automation more approachable and efficient. ideyaLabs fosters cross-functional collaboration between testers, developers, and business users. Teams access real-time dashboards with test outcomes, and responsibility for quality becomes a shared objective.

Security and Compliance Prioritized

Automated adaptation of test scripts preserves test coverage for critical functionality. ideyaLabs frameworks comply with rigorous security and industry standards. Sensitive data remains protected during testing cycles, and compliance audits receive comprehensive evidence.

Faster Feedback Loops Drive Continuous Improvement

Feedback speed drives rapid improvement. ideyaLabs speeds up reporting cycles with real-time detection and healing of test failures. Developers receive insights sooner, and corrections happen earlier in the development stream.

Enhanced ROI from QA Investments

Self-healing technology increases the return on QA investment. ideyaLabs clients see measurable improvements in delivery speed, reliability, and cost savings. Automated processes reduce maintenance overhead, and business leaders realize faster payback periods.

Self-Healing Test Automation: A Must for Modern Organizations

Organizations demand predictable output and high software quality. Self-Healing Test Automation addresses these needs, and ideyaLabs provides cutting-edge services and solutions tailored to modern development challenges.

How to Start with ideyaLabs Self-Healing Test Automation

Starting a project requires collaborative assessment and planning. ideyaLabs experts consult with stakeholders to understand project requirements, and teams receive walkthroughs on integration with their current frameworks. The implementation phase unfolds with minimal disruption, with support at every step.

Case Studies Highlight Success Stories

ideyaLabs has led automation successes for clients in finance, healthcare, retail, and technology. In diverse projects, self-healing frameworks reduced test maintenance by up to 85%. Clients report improved system reliability and faster deployment cycles.

Preparing for the Future of Testing

Trends continue to shift, and ideyaLabs positions clients at the forefront of innovation. Self-Healing Test Automation is the bedrock of future-ready QA processes: automation adapts, learns, and evolves with each release.

Partner with ideyaLabs for Test Automation Excellence

Choose ideyaLabs for seamless, sustainable, and scalable test automation. Experience superior quality assurance with minimal maintenance effort. Contact ideyaLabs today to transform your testing approach with Self-Healing Test Automation.

From DevOps to DevSecOps: Building Security Into Every Step

In today’s fast-paced digital environment, security has become one of the most critical concerns for organizations. With the constant rise in cyber threats and data breaches, businesses need to adopt robust security measures that align with their development and operational workflows. Traditional security models often fall short in meeting the demand for rapid deployment and frequent updates. Enter DevOps, a methodology that blends development and operations to streamline workflows. And now, with the rise of DevSecOps, the integration of security into the DevOps process, organizations can enhance security without compromising the speed and agility that DevOps promises.

A DevOps provider plays a key role in helping businesses adopt and implement DevOps practices, ensuring that security is embedded throughout the entire development lifecycle. By making security an integral part of DevOps, organizations can proactively address security vulnerabilities, automate security testing, and mitigate risks before they affect production environments. In this blog, we will explore how DevOps improves security through DevSecOps, the benefits it offers, and the future of secure development practices.

What Is DevSecOps?

DevSecOps is a natural evolution of the DevOps methodology, which traditionally focuses on collaboration between development and operations teams to deliver software faster and more reliably. DevSecOps, however, takes it a step further by integrating security into every phase of the software development lifecycle (SDLC). In a DevSecOps framework, security is not a separate function or a last-minute addition to the process, but a continuous and collaborative effort across all teams involved in development and operations.

The goal of DevSecOps is to shift security "left" — meaning that security concerns are addressed from the very beginning of the development process, rather than being bolted on at the end. This proactive approach helps in identifying potential vulnerabilities early on and reduces the chances of security flaws being introduced into production environments.

How DevOps Improves Security

One of the key ways DevOps improves security is through automation. Automation tools integrated into the DevOps pipeline allow for continuous testing, monitoring, and vulnerability scanning, ensuring that security issues are detected and addressed as early as possible. By automating routine security tasks, teams can focus on more complex security challenges while maintaining a rapid development pace.

Another advantage of DevOps is the use of infrastructure as code (IaC). This enables the definition of security policies and configurations in a programmatic manner, making it easier to enforce consistent security standards across the infrastructure. IaC helps avoid configuration drift, where security settings could unintentionally change over time, introducing vulnerabilities into the environment.
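The following minimal Python sketch makes configuration drift concrete. It assumes two things. First, the desired security settings have already been extracted from your IaC definitions into a flat dictionary. Second, the live settings can be read into the same shape. The setting names are hypothetical. Real IaC tools perform this comparison themselves, for example through a plan or diff step, so this only illustrates the idea.

```python
from typing import Any

# Hypothetical desired security settings, as they might be declared in IaC.
DESIRED = {
    "storage.public_access": False,
    "storage.encryption": "aes256",
    "firewall.ssh_open_to_world": False,
}


def detect_drift(desired: dict[str, Any], actual: dict[str, Any]) -> dict[str, tuple[Any, Any]]:
    """Return {setting: (expected, observed)} for every setting that differs.

    A setting missing from `actual` is reported with an observed value of None.
    """
    drift = {}
    for key, expected in desired.items():
        observed = actual.get(key)
        if observed != expected:
            drift[key] = (expected, observed)
    return drift


if __name__ == "__main__":
    # Hypothetical live state: someone opened SSH to the world by hand.
    live = {**DESIRED, "firewall.ssh_open_to_world": True}
    for setting, (want, got) in detect_drift(DESIRED, live).items():
        print(f"DRIFT {setting}: expected {want!r}, found {got!r}")
```

Because the code is the source of truth, the usual fix for reported drift is to re-apply the IaC definition rather than patch the live system by hand.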

Security testing also becomes part of the Continuous Integration/Continuous Deployment (CI/CD) pipeline in DevSecOps. With each new code commit, automated security tests are performed to ensure that new code doesn’t introduce security vulnerabilities. This continuous testing enables teams to fix vulnerabilities before they reach production, reducing the risk of exploitation.
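A common way to make security tests block a commit is a small gate script. It reads a scanner's report and exits with a non-zero status when findings reach a severity threshold. The sketch below makes two assumptions: a hypothetical JSON report (a list of objects with `id` and `severity` fields) and a "high" threshold. Real scanners each have their own output format, and many offer a built-in fail-on-severity option.

```python
import json
import sys

# Severity labels and their ranking are assumptions; map them to your scanner's output.
SEVERITY_RANK = {"low": 1, "medium": 2, "high": 3, "critical": 4}


def blocking_findings(findings: list[dict], threshold: str = "high") -> list[dict]:
    """Return the findings whose severity is at or above `threshold`."""
    limit = SEVERITY_RANK[threshold]
    return [
        f for f in findings
        if SEVERITY_RANK.get(str(f.get("severity", "")).lower(), 0) >= limit
    ]


def gate(report_path: str, threshold: str = "high") -> int:
    """Load a JSON report and return a process exit code (non-zero fails the CI job).

    Assumed report format: a JSON list of {"id": ..., "severity": ...} objects.
    """
    with open(report_path, encoding="utf-8") as fh:
        findings = json.load(fh)
    blocked = blocking_findings(findings, threshold)
    for f in blocked:
        print(f"BLOCKING {f.get('id')}: severity {f.get('severity')}")
    return 1 if blocked else 0


if __name__ == "__main__" and len(sys.argv) == 2:
    # Usage in a CI step: python security_gate.py scan-report.json
    sys.exit(gate(sys.argv[1]))
```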

Moreover, DevSecOps emphasizes collaboration between security, development, and operations teams. Security is no longer the sole responsibility of the security team; it is a shared responsibility across all stages of development. This cultural shift fosters a more security-conscious environment and encourages proactive identification and resolution of potential threats.

The Role of Automation in DevSecOps

Automation is one of the most critical components of DevSecOps. By integrating automated security tools into the DevOps pipeline, organizations can ensure that security checks are continuously performed without slowing down the development process. Some key areas where automation plays a significant role include:

Vulnerability Scanning: Automated tools can scan the codebase and its third-party dependencies for known vulnerabilities on every commit or build, so potential threats are detected before they can be exploited.

Configuration Management: Automation ensures that security configurations are consistently applied across environments, reducing the risk of misconfigurations that could lead to security breaches.

Compliance Monitoring: DevSecOps tools can automatically check configurations against controls derived from regulations such as GDPR, HIPAA, or PCI DSS, helping ensure that applications and infrastructure meet those requirements.

Incident Response: Automation tools can help with rapid identification and response to security incidents, minimizing damage and reducing recovery time.

By automating these critical security tasks, businesses can achieve greater efficiency and consistency, while ensuring that security is always top of mind during development.
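Under the hood, dependency vulnerability scanning largely comes down to comparing the versions you pin against known-vulnerable version ranges. The sketch below uses a hard-coded, hypothetical advisory table. It only handles plain numeric versions like `1.4.2`. Real scanners query maintained advisory databases and handle many more version formats.

```python
# Hypothetical advisory data: package -> list of [introduced, fixed) version ranges.
ADVISORIES = {
    "examplelib": [("1.0.0", "1.4.2")],
    "otherpkg": [("2.0.0", "2.0.5"), ("3.0.0", "3.1.0")],
}


def parse_version(v: str) -> tuple[int, ...]:
    """Parse a plain dotted version like '1.4.2' into (1, 4, 2)."""
    return tuple(int(part) for part in v.split("."))


def is_vulnerable(package: str, version: str) -> bool:
    """True if `version` falls inside any [introduced, fixed) range for `package`."""
    v = parse_version(version)
    return any(
        parse_version(lo) <= v < parse_version(hi)
        for lo, hi in ADVISORIES.get(package, [])
    )


def scan(pinned: dict[str, str]) -> list[str]:
    """Return 'name==version' for every pinned dependency with a known advisory."""
    return [
        f"{name}=={ver}"
        for name, ver in sorted(pinned.items())
        if is_vulnerable(name, ver)
    ]
```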

If you're interested in exploring the benefits of DevOps solutions for your business, we encourage you to book an appointment with our team of experts.

DevSecOps in the Mobile App Development Process

Mobile app development presents unique security challenges due to the variety of platforms, devices, and networks that apps interact with. With cyber threats increasingly targeting mobile applications, integrating security into the mobile app development process is essential. DevSecOps provides a framework for embedding security into every stage of mobile app development, from planning to deployment.

For businesses developing mobile apps, understanding the costs associated with development is critical. Using a mobile app cost calculator can help estimate the budget required for developing a secure app while incorporating necessary security measures such as encryption, secure authentication, and code obfuscation. With DevSecOps practices in place, security can be seamlessly integrated into the mobile app development lifecycle, reducing the likelihood of vulnerabilities while controlling costs.

The iterative nature of DevSecOps means that developers can continuously monitor and improve the security of their mobile apps, ensuring that they remain protected from emerging threats as they evolve. By using DevSecOps principles, mobile app developers can create secure applications that meet both user expectations and regulatory compliance requirements.

The Future of DevSecOps

As cyber threats continue to grow in sophistication, the need for robust security measures will only increase. The future of DevSecOps is promising, with organizations recognizing that security is not just an afterthought but a core component of the software development lifecycle.

As the tools and technologies surrounding DevSecOps evolve, we can expect to see even more automation, deeper integrations with artificial intelligence (AI) and machine learning (ML), and improved threat detection capabilities. These advancements will further reduce manual efforts, enhance the speed of security checks, and improve the accuracy of identifying potential vulnerabilities.

Moreover, as the adoption of cloud-native technologies and microservices architecture increases, DevSecOps will play an even more critical role in securing complex, distributed environments. Organizations will need to continue adapting and evolving their security practices to stay ahead of emerging threats, and DevSecOps will remain at the forefront of these efforts.

Conclusion: The Role of DevOps Solutions in Security

Incorporating security into the DevOps process through DevOps solutions is no longer optional; it is a necessity for modern businesses. DevSecOps enables organizations to deliver secure software at speed, ensuring that security is built into the development process from the start. With automated security testing, infrastructure as code, and continuous monitoring, DevSecOps helps organizations identify vulnerabilities early, reduce risks, and accelerate time-to-market.

By embracing DevSecOps, organizations can foster a culture of security, enabling teams to work collaboratively and proactively to address security challenges. As threats continue to evolve, integrating security into every aspect of the development lifecycle will be key to maintaining the integrity of applications and protecting sensitive data.

Fuel Your Coding Journey with Full Stack Development Mastery

In today’s fast-paced digital world, technology is evolving rapidly—and so are the demands for skilled developers who can build, optimize, and maintain both the front-end and back-end of web applications. This is where full stack development enters the spotlight. If you’re aspiring to become a well-rounded developer, it’s time to fuel your coding journey with full stack development mastery.

Mastering full stack development opens doors to a variety of career paths in software engineering, product development, and tech entrepreneurship. Whether you're a beginner or an experienced programmer, choosing the right training institute plays a crucial role in shaping your future.

What is Full Stack Development?

Full stack development refers to the ability to work on both the client-side (frontend) and server-side (backend) of web applications. A full stack developer is a versatile professional capable of building complete, functional, and scalable digital products.

The core components of full stack development include:

Frontend Technologies: HTML, CSS, JavaScript, React, Angular

Backend Technologies: Java, Node.js, Python, PHP

Databases: MySQL, MongoDB, PostgreSQL

Version Control Systems: Git, GitHub

Deployment & DevOps: Docker, Jenkins, AWS

A comprehensive understanding of these technologies allows developers to create seamless and user-friendly applications from start to finish.

Why Choose Full Stack as a Career?

The demand for full stack developers continues to grow as companies seek professionals who can manage complete project lifecycles. Here are some compelling reasons to fuel your coding journey with full stack development mastery:

Versatility: Ability to work on multiple layers of technology.

High Demand: Recruiters prefer candidates who can take ownership of both backend and frontend tasks.

Lucrative Salaries: Skilled full stack developers command competitive compensation.

Entrepreneurial Edge: Perfect skillset for launching tech startups or freelance projects.

Job Security: A wide range of industries, from fintech to healthcare, need full stack expertise.

How to Start Your Full Stack Development Journey?

The first step is choosing the best full stack development training institute in Pune or your local area. Pune, being a rising IT hub, is home to several reputed institutes offering hands-on training with real-world projects.

A quality training institute should offer:

Practical Learning: Focus on live projects and real-time development.

Experienced Faculty: Mentors with real industry experience.

Career Support: Resume building, interview prep, and placement assistance.

Certifications: Industry-recognized credentials that enhance your profile.

Why Is Java Still Relevant in Full Stack?

Even though newer technologies are emerging, Java continues to be a cornerstone in enterprise-level development. It is stable, secure, and widely used in backend systems.

If you’re starting from scratch or looking to strengthen your backend development skills, enrolling in a Java training institute in Pune can be a great foundation. Java-based frameworks like Spring Boot and Hibernate are still highly in demand in full stack job roles.

Benefits of Learning Java for Full Stack Development:

Robust and platform-independent.

Easy integration with front-end frameworks.

Strong community support and libraries.

Powerful tools for backend development.

What Makes Pune a Learning Hotspot?

Pune has emerged as a favorite destination for aspiring developers due to its balance of affordable education and booming tech industry. With the presence of numerous MNCs and startups, Pune provides a dynamic environment for learners.

When looking for the best full stack development training institute in Pune, make sure the institute provides:

Updated curriculum aligned with industry needs.

Mentorship from software architects and engineers.

Job placement records and success stories.

Internship opportunities with local IT firms.

Features to Look for in a Full Stack Course

Before enrolling, ensure the course offers:

Complete Stack Coverage: HTML5, CSS3, Bootstrap, JavaScript, React, Node.js, Express.js, MongoDB/MySQL, Git.

Project-Based Learning: Build projects like e-commerce platforms, dashboards, or portfolio websites.

Interactive Classes: Regular assessments, code reviews, and Q&A sessions.

Capstone Projects: Opportunity to showcase your skills through a final project.

Kickstart Your Journey Today

Whether you’re passionate about building web apps or aiming for a career switch, full stack development is the ideal launchpad. It equips you with everything you need to stand out in today’s competitive job market.

Remember, the right mentorship can make all the difference. Seek out a Java training institute in Pune that also offers full stack development programs. Better still, opt for the best full stack development training institute in Pune that gives you exposure to multiple technologies, industry insights, and placement opportunities.

Final Thoughts

It’s not just about learning to code; it’s about transforming the way you think and create digital solutions. When you fuel your coding journey with full stack development mastery, you equip yourself with tools and knowledge that go beyond theory.

Take the leap. Choose the right course. Surround yourself with the right mentors. And soon, you’ll not just be coding—you’ll be building, innovating, and leading the digital future.

DevOps for Mobile: CI/CD Tools Every Flutter/React Native Dev Needs

Does shipping a new version of your mobile app feel like orchestrating a mammoth undertaking, prone to late nights, manual errors, and stressed-out developers? You're not alone. Many teams building with flexible frameworks like Flutter and React Native grapple with antiquated, laborious release processes. The dynamic landscape of mobile demands agility, speed, and unwavering quality – traits often antithetical to manual builds, testing, and deployment. Bridging this gap requires a dedicated approach: DevOps for Mobile. And central to that approach are robust CI/CD tools.

The Bottlenecks in Mobile App Delivery

Mobile application programming inherently carries complexity. Multiple platforms (iOS and Android), diverse device types, intricate testing matrices, app store submission hurdles, and the constant churn of framework and SDK updates contribute to a multifaceted environment. Without disciplined processes, delivering a high-quality, stable application with consistent velocity becomes a significant challenge.

Common Pitfalls Hindering Release Speed

Often, teams find themselves wrestling with several recurring issues that sabotage their release pipelines:

Manual Builds and Testing: Relying on developers to manually build app binaries for each platform is not only time-consuming but also highly susceptible to inconsistencies. Did you use the right signing certificate? Was the correct environment variable set? Manual testing on devices adds another layer of potential omission and delays.

Code Integration Nightmares: When multiple developers merge their code infrequently, the integration phase can devolve into a stressful period of resolving complex conflicts, often introducing unexpected bugs.

Inconsistent Environments: The "it works on my machine" syndrome is pervasive. Differences in SDK versions, build tools, or operating systems between developer machines and build servers lead to unpredictable outcomes.

Lack of Automated Feedback: Without automated testing and analysis, issues like code quality degradation, performance regressions, or critical bugs might only be discovered late in the development cycle, making them expensive and time-consuming to fix.

Laborious Deployment Procedures: Getting a mobile app from a built binary onto beta testers' devices or into the app stores often involves numerous manual steps: uploading artifacts, filling out metadata, and managing releases. This is tedious, error-prone work that is ripe for automation.

The aggregate effect of these bottlenecks is a slow, unpredictable release cycle that prevents teams from iterating quickly on user feedback and market demands. It is a persistent problem that needs a systemic solution.

What DevOps for Mobile Truly Means

DevOps for Mobile applies the foundational principles of the broader DevOps philosophy – collaboration, automation, continuous improvement – specifically to the mobile development lifecycle. It's about fostering a culture where development and operations aspects (though mobile operations are different from traditional server ops) work seamlessly.

Shifting Left and Automation Imperative

A core tenet is "shifting left": identifying and resolving problems as early as possible in the pipeline. Catching a build issue at commit time is far preferable to discovering it hours later during manual testing, or worse, after deployment. This early detection depends heavily on automation, which in DevOps for Mobile is not merely a convenience but an imperative. From automated code analysis and testing to automated building and distribution, automation handles the repetitive, error-prone tasks. This frees developers to focus on building features and solving complex problems, while improving the speed, reliability, and quality of releases. Teams that prioritize this shift tend to show higher morale and deliver better software.

Core Components of Mobile App Development Automation

Building an effective DevOps for Mobile pipeline, especially for Flutter or React Native apps, centers around implementing Continuous Integration (CI) and Continuous Delivery/Deployment (CD).

The CI/CD Tools Spectrum

Continuous Integration (CI): Every time a developer commits code to a shared repository, an automated process triggers a build. This build compiles the code, runs unit and integration tests, performs static code analysis, and potentially other checks. The goal is to detect integration problems immediately. A failed build means someone broke something, and the automated feedback loop notifies the team instantly.

Continuous Delivery (CD): Building on CI, this process automatically prepares the app for release after a successful build and testing phase. This could involve signing the application, packaging it, and making it available in a repository or artifact store, ready for manual deployment to staging or production environments.

Continuous Deployment (CD): The next step beyond Continuous Delivery. If all automated tests pass and other quality gates are met, the application is released automatically to production (e.g., submitted to the app stores, subject to store review, or pushed to internal distribution). This requires a high level of confidence in your automated testing and monitoring.
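The difference between these stages can be sketched as a fail-fast runner. Each hypothetical `Stage` wraps a check that returns `True` on success. Here, Continuous Delivery and Continuous Deployment differ only in whether a deploy stage is appended. This illustrates the gating logic; it is not how any particular CI server is implemented.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Stage:
    name: str
    run: Callable[[], bool]  # returns True when the stage's checks pass


def run_pipeline(ci: list[Stage], delivery: list[Stage],
                 deploy: Optional[Stage] = None) -> list[str]:
    """Run CI, then delivery, then (optionally) deployment, stopping at the first failure.

    deploy=None models Continuous Delivery: a releasable artifact is produced
    and a human decides when to ship. Passing a deploy stage models
    Continuous Deployment: every green pipeline is released automatically.
    """
    stages = ci + delivery + ([deploy] if deploy is not None else [])
    log = []
    for stage in stages:
        ok = stage.run()
        log.append(f"{stage.name}: {'ok' if ok else 'FAILED'}")
        if not ok:
            break  # fail fast: later stages (and the release) never run
    return log
```

For example, a failing unit-test stage stops the run before any signing or deployment stage executes.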

Implementing these components requires selecting the right CI/CD tools that understand the nuances of building for iOS and Android using Flutter and React Native.

Essential CI/CD Tools for Flutter & React Native Devs

The ecosystem of CI/CD tools is extensive, ranging from versatile, self-hosted platforms to specialized cloud-based mobile solutions. Choosing the right ones depends on team size, budget, technical expertise, and specific needs.

Picking the Right Platforms

Several platforms stand out for their capabilities in handling mobile CI/CD:

Jenkins: A venerable, open-source automation server. It's highly extensible via a myriad of plugins, offering immense flexibility. However, setting up mobile builds, especially on macOS agents for iOS, can be complex and require substantial configuration and maintenance effort.

GitLab CI/CD: Integrated directly into GitLab repositories, this offers a compelling, unified platform experience. Configuration is via a `.gitlab-ci.yml` file, making it part of the code repository itself. It's robust but also requires managing runners (build agents), including macOS ones.

GitHub Actions: Tightly integrated with GitHub repositories, Actions use YAML workflows (`.github/workflows`) to define automation pipelines. It provides hosted runners for Linux, Windows, and macOS, which makes iOS builds simpler out of the box than purely self-hosted options. It has become a common choice for projects hosted on GitHub.

Bitrise: A cloud-based CI/CD platform designed specifically for mobile apps. Bitrise offers pre-configured build Steps, which are combined into Workflows, along with integrations tailored for iOS, Android, Flutter, React Native, and more. This specialization greatly simplifies setup and configuration, though it is a managed service with associated costs.

App Center (Microsoft): Offers integrated CI/CD, testing, distribution, and analytics for mobile apps, including React Native; Flutter support relies on specific configurations rather than built-in support. Note that Microsoft has announced the retirement of Visual Studio App Center (scheduled for March 31, 2025), so evaluate alternatives before building new pipelines on it.

Fastlane: While not a CI server itself, Fastlane is an open-source toolset written in Ruby that simplifies cumbersome iOS and Android deployment tasks (like managing signing, taking screenshots, and uploading to stores). It is a near-indispensable complement to any mobile CI system, because the CI server can invoke Fastlane commands to handle complex distribution steps.

The selection often boils down to the build environment you need (especially macOS for iOS), the required level of customization, integration with your existing VCS, and whether you prefer a managed service or self-hosting.

Specific Flutter CI/CD Considerations

Flutter projects require the Flutter SDK to be present on the build agents. Both iOS and Android builds originate from the single Flutter codebase.

Setup: The CI system needs access to the Flutter SDK. Some platforms, like Bitrise, have steps explicitly for this. On Jenkins, GitLab CI/CD, or GitHub Actions, you'll need a step that installs (or restores from cache) the Flutter SDK and adds it to the `PATH`; running `flutter doctor` afterwards verifies that the toolchain is set up correctly.

Platform-Specific Builds: Within the CI pipeline, you'll trigger commands like `flutter build ios` (or `flutter build ipa`, which produces an archive and an IPA) and `flutter build apk` or `flutter build appbundle`.

Testing: `flutter test` should run unit and widget tests. You might need device/emulator setups or cloud testing services (like Firebase Test Lab, Sauce Labs, BrowserStack) for integration/end-to-end tests, though this adds complexity.

Signing: Signing both Android APKs/App Bundles and iOS IPAs is crucial and requires careful management of keystores and provisioning profiles on the CI server. Fastlane is particularly useful here for iOS signing complexity management.

In practice, the iOS signing process is often the hardest part of Flutter CI/CD to get working on a CI platform.
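As a rough sketch, a small Python script on the build agent could drive the Flutter steps above. The command order and release flags are assumptions to adapt. By default the script only prints the commands (a dry run), and it includes the iOS step only on macOS agents. Signing is deliberately left out, since it depends on how your CI platform stores credentials.

```python
import shlex
import subprocess
import sys

# Steps mirroring the list above; the exact commands and order are assumptions.
COMMON_STEPS = [
    ["flutter", "doctor", "-v"],          # verify the SDK/toolchain on the agent
    ["flutter", "pub", "get"],            # fetch Dart dependencies
    ["flutter", "analyze"],               # static analysis
    ["flutter", "test"],                  # unit and widget tests
    ["flutter", "build", "appbundle", "--release"],  # Android App Bundle
]
IOS_STEP = ["flutter", "build", "ipa", "--release"]  # needs a macOS agent + signing


def plan(include_ios: bool) -> list[list[str]]:
    """Return the ordered command list for this agent."""
    return COMMON_STEPS + ([IOS_STEP] if include_ios else [])


def run(steps: list[list[str]], dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False."""
    for cmd in steps:
        print("$", shlex.join(cmd))
        if not dry_run:
            # check=True raises CalledProcessError, which fails the CI step.
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run(plan(include_ios=sys.platform == "darwin"))
```

On a real agent you would call `run(..., dry_run=False)` once the output looks right.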

Specific React Native CI/CD Considerations

React Native projects involve native build tools (Xcode for iOS, Gradle for Android) in addition to Node.js and yarn/npm for the JavaScript parts.

Setup: The build agent needs Node.js, npm/yarn, Android SDK tools, and Xcode (on macOS). NVM (Node Version Manager) or similar tools are helpful for managing Node versions on the build agent.

Platform-Specific Steps: The CI pipeline will have distinct steps for Android (`./gradlew assembleRelease` or `./gradlew bundleRelease`, run from the `android` directory) and iOS (`xcodebuild archive` followed by `xcodebuild -exportArchive`).

Dependencies: Ensure npm/yarn dependencies (`yarn install` or `npm install`) and CocoaPods dependencies for iOS (`pod install` from within the `ios` directory) are handled by the pipeline before the native build steps.

Testing: Jest is common for unit tests. Detox or Appium are popular for end-to-end testing, often requiring dedicated testing infrastructure or cloud services.

Signing: Similar to Flutter, secure management of signing credentials (Android keystores, iOS certificates/profiles) is essential on the CI server. Fastlane is highly relevant for React Native iOS as well.

React Native CI/CD complexity often arises from the interaction between the JavaScript/Node layer and the native build processes, particularly dependency management (`node_modules`, CocoaPods) and environmental differences.
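The ordering constraint described above can be captured as a list of commands, each paired with the directory it must run in: JavaScript packages first, then CocoaPods, then the native build. In this sketch the workspace, scheme, and `ExportOptions.plist` names are hypothetical. The `--frozen-lockfile` flag assumes Yarn 1 (classic); Yarn 2+ uses `--immutable` instead.

```python
import subprocess
from pathlib import Path

# Hypothetical project names; replace with your workspace, scheme, and export options.
WORKSPACE, SCHEME = "MyApp.xcworkspace", "MyApp"
ARCHIVE = "build/MyApp.xcarchive"


def rn_steps(platform: str, use_yarn: bool = True) -> list[tuple[str, list[str]]]:
    """Return (working_dir, command) pairs in dependency order:
    JS packages first, then native dependencies, then the native build."""
    # npm ci installs exactly what package-lock.json pins.
    install = ["yarn", "install", "--frozen-lockfile"] if use_yarn else ["npm", "ci"]
    steps = [(".", install)]
    if platform == "android":
        steps.append(("android", ["./gradlew", "bundleRelease"]))
    elif platform == "ios":
        steps.append(("ios", ["pod", "install"]))
        steps.append(("ios", ["xcodebuild", "archive", "-workspace", WORKSPACE,
                              "-scheme", SCHEME, "-configuration", "Release",
                              "-archivePath", ARCHIVE]))
        steps.append(("ios", ["xcodebuild", "-exportArchive", "-archivePath", ARCHIVE,
                              "-exportOptionsPlist", "ExportOptions.plist",
                              "-exportPath", "build/export"]))
    else:
        raise ValueError(f"unsupported platform: {platform!r}")
    return steps


def execute(steps: list[tuple[str, list[str]]], project_root: Path) -> None:
    """Run each step from its directory; check=True stops at the first failure."""
    for cwd, cmd in steps:
        subprocess.run(cmd, cwd=project_root / cwd, check=True)
```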

Implementing a Robust Mobile CI/CD Pipeline

Building your Mobile App Development Automation pipeline is not a weekend project. It requires deliberate steps and iteration.

Phased Approach to Adoption

Approaching CI/CD implementation incrementally yields better results and less disruption.

Phase One: Code Quality and Basic CI

Set up automated linters (e.g., ESLint/Prettier for React Native, `flutter analyze` for Flutter).

Configure CI to run these linters on every push or pull request. Fail the build on lint errors.

Integrate unit and widget tests into the CI build process. Fail the build on test failures. This is your foundational CI.

Phase Two: Automated Building and Artifacts

Extend the CI process to automatically build unsigned Android APK/App Bundle and iOS IPA artifacts on successful commits to main/develop branches.

Store these artifacts securely (e.g., S3, built-in CI artifact storage).

Focus on ensuring the build environment is stable and consistent.

Phase Three: Signing and Internal Distribution (CD)

Securely manage signing credentials on your CI platform (using secrets management).

Automate the signing of Android and iOS artifacts.

Automate distribution to internal testers or staging environments (e.g., using Firebase App Distribution or TestFlight). This is where Fastlane becomes exceedingly helpful.

Phase Four: Automated Testing Enhancement

Integrate automated UI/integration/end-to-end tests (e.g., Detox, Appium) into your pipeline, running on emulators/simulators or device farms. Make passing these tests a mandatory step for deployment.

Consider performance tests or security scans if applicable.

Phase Five: App Store Distribution (Advanced CD)

Automate the process of uploading signed builds to the Apple App Store Connect and Google Play Console using tools like Fastlane or platform-specific integrations.

Start with automating beta releases to app stores.

Move towards automating production releases cautiously, building confidence in your automated tests and monitoring.

Integrating Testing and Code Signing

These two elements are the practical pillars of trust in your automated pipeline.

Testing: Automated tests at various levels (unit, integration, UI, E2E) are your primary quality gate. No pipeline step should proceed without relevant tests passing. This reduces the likelihood of bugs reaching users. Integrate code coverage tools into your CI to monitor test effectiveness.
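One way to monitor test effectiveness is a coverage gate. Both `flutter test --coverage` and Jest (whose default reporters include `lcov`) typically write an lcov report to `coverage/lcov.info`. The sketch below sums the `LF` (lines found) and `LH` (lines hit) records from such a report and fails below a threshold. The 80% minimum is an arbitrary assumption. An empty report deliberately fails.

```python
def line_coverage(lcov_text: str) -> float:
    """Percentage of instrumented lines hit, summed over all LF/LH records.

    Returns 0.0 when no lines were found, so an empty report fails the gate.
    """
    found = hit = 0
    for line in lcov_text.splitlines():
        if line.startswith("LF:"):
            found += int(line[3:])
        elif line.startswith("LH:"):
            hit += int(line[3:])
    return 100.0 * hit / found if found else 0.0


def coverage_gate(lcov_text: str, minimum: float = 80.0) -> bool:
    """Print the coverage figure and return True if it meets the minimum."""
    pct = line_coverage(lcov_text)
    print(f"line coverage {pct:.1f}% (minimum {minimum:.1f}%)")
    return pct >= minimum
```

In CI you would read `coverage/lcov.info` and exit non-zero when `coverage_gate` returns `False`.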

Code Signing: This is non-negotiable for distributing mobile apps. Your CI system must handle the complexities of managing and applying signing identities securely. Using features like secret variables on your CI platform to store certificates, keys, and keystore passwords is essential. Avoid hardcoding credentials.
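Here is a minimal sketch of keeping signing credentials out of the repository. The build reads them from environment variables populated by the CI platform's secret store. It fails fast, reporting only the names of missing secrets, and decodes a base64-encoded Android keystore into a temporary file. The variable names are hypothetical. Storing the keystore as base64 text is a common convention, not a requirement of any particular platform.

```python
import base64
import os
import tempfile
from typing import Mapping, Optional

# Hypothetical secret names; use whatever your CI platform's secret store exposes.
REQUIRED = ("ANDROID_KEYSTORE_BASE64", "ANDROID_KEYSTORE_PASSWORD", "ANDROID_KEY_ALIAS")


class MissingSecretError(RuntimeError):
    """Raised when a required secret is absent; the message lists names only."""


def load_signing_secrets(env: Optional[Mapping[str, str]] = None) -> dict[str, str]:
    """Read all required secrets, failing fast without ever printing their values."""
    env = os.environ if env is None else env
    missing = [name for name in REQUIRED if not env.get(name)]
    if missing:
        raise MissingSecretError("missing signing secrets: " + ", ".join(missing))
    return {name: env[name] for name in REQUIRED}


def write_keystore(b64_value: str) -> str:
    """Decode a base64-encoded keystore into a temp file and return its path.

    The file is created readable only by the current user; delete it after signing.
    """
    with tempfile.NamedTemporaryFile(suffix=".jks", delete=False) as fh:
        fh.write(base64.b64decode(b64_value))
        return fh.name
```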

Adopting a systematic approach, starting simple and progressively adding complexity and automation, is the recommended trajectory.

Common Errors and How to Navigate Them

Even with excellent tools, teams stumble during DevOps for Mobile adoption. Understanding common missteps helps circumvent them.

Avoiding Integration Headaches

Ignoring Native Layer Nuances: Flutter and React Native abstraction is powerful, but builds eventually hit the native iOS/Android toolchains. Errors often stem from misconfigured native environments (Xcode versions, Gradle issues, signing problems) on the CI agent. Ensure your CI environment precisely mirrors your development environment or uses reproducible setups (like Docker if applicable, though tricky for macOS).

Credential Management Snafus: Hardcoding API keys, signing credentials, or environment-specific secrets into code or build scripts is a critical security vulnerability. Always use the CI platform's secret management features.

Flaky Tests: If your automated tests are unreliable (sometimes passing, sometimes failing for no obvious code reason), they become a major bottleneck and erode trust. Invest time in making tests deterministic and robust, especially UI/E2E tests running on emulators/devices.
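One simple way to find flaky tests is to look for tests that both passed and failed on the same commit, for example when a CI job is retried. The sketch below assumes you can export test results as hypothetical `(commit_sha, test_name, passed)` records. A test that consistently fails on one commit counts as a genuine failure, not flakiness.

```python
from collections import defaultdict


def find_flaky(runs: list[tuple[str, str, bool]]) -> set[str]:
    """Flag tests that both passed and failed on the same commit.

    Same code plus different outcomes means the result did not depend on
    the code under test alone.
    """
    outcomes: dict[tuple[str, str], set[bool]] = defaultdict(set)
    for sha, test, passed in runs:
        outcomes[(sha, test)].add(passed)
    return {test for (sha, test), seen in outcomes.items() if len(seen) == 2}
```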

Maintaining Pipeline Health

Neglecting Pipeline Maintenance: CI/CD pipelines need attention. Dependency updates (SDKs, Fastlane versions, etc.), changes in app store requirements, or tool updates can break pipelines. Regularly allocate time for pipeline maintenance.

Slow Builds: Long build times kill productivity and developer flow. Continuously optimize build times by leveraging caching (Gradle cache, CocoaPods cache), using faster machines (if self-hosting), or optimizing build steps.

Over-Automating Too Soon: While the goal is automation, attempting to automate production deployment from day one without robust testing, monitoring, and rollback strategies is foolhardy. Progress gradually, building confidence at each phase.
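Caching works best when the cache key changes only when dependencies change. The sketch below derives a key from the contents of lockfiles such as `Podfile.lock` or `pubspec.lock`. GitHub Actions offers the same idea through its `hashFiles()` expression. The prefix and the 16-character truncation are arbitrary choices.

```python
import hashlib
from pathlib import Path


def cache_key(prefix: str, lockfiles: list[Path]) -> str:
    """Derive a cache key that changes only when a lockfile's path or contents change."""
    digest = hashlib.sha256()
    for path in sorted(lockfiles):
        digest.update(path.as_posix().encode())
        digest.update(b"\0")
        # A missing lockfile still yields a stable (but distinct) key.
        digest.update(path.read_bytes() if path.exists() else b"<missing>")
        digest.update(b"\0")
    return f"{prefix}-{digest.hexdigest()[:16]}"
```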

Frequent platform updates and shifting tool compatibility mean that pipeline maintenance requires continuous vigilance.

Future Trends in Mobile App Development Automation

The domain of Mobile App Development Automation isn't static. Emerging trends suggest even more sophisticated pipelines in 2025 and beyond.

AI/ML in Testing and Monitoring

We might see greater integration of Artificial Intelligence and Machine Learning:

AI-Assisted Test Case Generation: Tools suggesting new test cases based on code changes or user behavior data.

Smart Test Selection: ML models identifying which tests are most relevant to run based on code changes, potentially reducing build times for small changes.

Anomaly Detection: Using ML to monitor app performance and crash data, automatically flagging potential issues surfaced during or after deployment.
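The selection idea can be illustrated without ML. The sketch below is a deliberately simple, rule-based stand-in, not an ML model. It maps a changed file like `lib/cart.dart` to tests named `cart_test` or `cart.test`. Dependency manifests and any change it cannot map trigger the full suite. It will miss tests that exercise a file only indirectly, which is the gap that dependency graphs or learned models aim to close. The trigger list is an assumption.

```python
from pathlib import PurePosixPath

# Changes to these files can affect anything, so they trigger the full suite.
FULL_SUITE_TRIGGERS = {"pubspec.yaml", "pubspec.lock", "package.json",
                       "yarn.lock", "Podfile", "build.gradle"}


def select_tests(changed_files: list[str], all_tests: list[str]) -> list[str]:
    """Map each changed file to tests by naming convention (foo -> foo_test / foo.test).

    Any change that cannot be mapped falls back to the full suite.
    """
    selected: set[str] = set()
    for changed in changed_files:
        path = PurePosixPath(changed)
        if path.name in FULL_SUITE_TRIGGERS:
            return sorted(all_tests)
        if changed in all_tests:  # a test file itself changed
            selected.add(changed)
            continue
        wanted = {f"{path.stem}_test", f"{path.stem}.test"}
        matches = [t for t in all_tests if PurePosixPath(t).stem in wanted]
        if not matches:
            return sorted(all_tests)
        selected.update(matches)
    return sorted(selected)
```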

Low-Code/No-Code DevOps

As CI/CD tools mature, expect more platforms to offer low-code or no-code interfaces for building pipelines, abstracting away YAML or scripting complexities. This could make sophisticated DevOps for Mobile accessible to a wider range of teams. The paradigm is shifting towards usability.

Key Takeaways

Here are the essential points for Flutter and React Native developers considering or improving their DevOps for Mobile practice:

Manual mobile release processes are inefficient, error-prone, and hinder rapid iteration.

DevOps for Mobile, centered on CI/CD automation, is imperative for quality and speed.

CI/CD tools automate building, testing, and deploying, enabling faster feedback loops.

Choose CI/CD tools wisely, considering mobile-specific needs like macOS builds and signing.

Platforms like Bitrise specialize in mobile, while Jenkins, GitLab CI, and GitHub Actions are versatile options often enhanced by tools like Fastlane.

Implement your mobile CI/CD pipeline in phases, starting with code quality and basic CI and progressing to automated testing and distribution.

Prioritize automated testing at all levels and secure code signing management in your pipeline.

Be mindful of common errors such as native layer configuration issues, insecure credential handling, flaky tests, and neglecting pipeline maintenance.

The future involves more intelligent automation via AI/ML and more accessible pipeline configuration through low-code/no-code approaches.

Frequently Asked Questions

What are the key benefits of CI/CD for Flutter and React Native apps?

Adopting CI/CD speeds up mobile development considerably and makes releases more reliable.

How does CI/CD help reduce errors?

Automation within CI/CD pipelines removes many of the human errors common in manual build and release steps.

Why is CI/CD important for team collaboration?

CI detects code integration issues early, which leads to better collaboration and fewer conflicts.

Can CI/CD benefit small projects?

Yes. Even small teams gain significant stability and efficiency from automation.

Where does CI/CD save the most time?

The biggest time savings come from automating repetitive tasks such as building, testing, and distributing builds.

Recommendations

To streamline your Mobile App Development Automation, especially within the dynamic world of Flutter and React Native, embracing CI/CD is non-negotiable for competitive delivery. The choice of CI/CD tools will depend on your team's practical needs and infrastructure. Begin by automating the most painful parts of your current process, most likely building and basic testing. Then add sophistication incrementally, focusing on solid testing and secure distribution. Keep up with evolving tooling and methodologies so your pipeline stays fast and relevant. The investment in DevOps for Mobile pays off in developer satisfaction, product quality, and business agility. Start planning your CI/CD adoption strategy today and move from manual burden to automated excellence. Share your experiences or ask questions in the comments below to foster collective learning.

#DevOps#MobileDevOps#FlutterDevelopment#ReactNative#CI/CD#AppDevelopment#MobileAppDevelopment#FlutterDev#ReactNativeDev#DeveloperTools#SoftwareDevelopment#AutomationTools#MobileDevelopmentTips#ContinuousIntegration#ContinuousDeployment#CodePipeline#TechStack#BuildAutomation#AppDeployment#2025Development

The Role of AI in Software Testing: Empowering the Future with Genqe

Introduction

Software testing is an essential component of the software development lifecycle. It verifies that the software behaves as intended and helps uncover bugs, vulnerabilities, and defects before users do. As applications grow more complex and Agile and DevOps methodologies accelerate the pace of development, traditional manual testing methods are struggling to keep up. This is where Artificial Intelligence (AI) enters the scene.

AI is transforming many areas of technology, and software testing is no exception. Among the AI-driven testing tools available, Genqe is carving out a niche with its intuitive, intelligent test automation capabilities. In this article, we explore the evolving role of AI in software testing, with a closer look at how Genqe applies it.

Why Traditional Testing Is Not Enough

Manual testing, although thorough, is time-consuming, repetitive, and often prone to human error. Even traditional automation frameworks, while faster than manual efforts, require significant coding, setup, and maintenance. As software changes frequently, automation scripts must be updated continuously, which adds overhead.

Here are some common challenges faced by traditional testing teams:

Test maintenance becomes expensive and tedious.

Test coverage remains limited due to time constraints.

Human error can lead to overlooked bugs or flawed logic.

Regression testing is difficult to scale.

Lack of adaptability in existing test scripts.

AI addresses these pain points, and Genqe takes it a step further with an innovative, no-code platform built specifically for intelligent software testing.

Understanding the Role of AI in Testing

AI brings cognitive capabilities to testing tools, enabling them to learn from data, predict risks, generate test cases, and even self-heal test scripts. Here’s how AI adds value:

1. Test Case Generation

AI can analyze requirements, user stories, and application behavior to automatically generate test cases. This saves time and ensures broader test coverage. Genqe uses natural language processing to understand application flows and translate them into executable test cases.

2. Self-Healing Test Scripts

One of the biggest frustrations in test automation is scripts that break because of minor UI changes. AI-powered tools like Genqe can automatically detect these changes and update the affected scripts, greatly reducing the need for manual intervention.
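To make the self-healing idea concrete, here is a minimal rule-based sketch in Python. It is not Genqe's engine (the article describes that as AI-driven and proprietary); it only shows the underlying pattern of keeping several candidate locators per element and promoting whichever one still matches. The `Element` and `Locator` classes and the sample attribute values are hypothetical stand-ins for a real UI driver.

```python
from dataclasses import dataclass, field


@dataclass
class Element:
    """Hypothetical, simplified model of a rendered UI element."""
    tag: str
    attrs: dict


@dataclass
class Locator:
    """One logical element with ordered candidate locators, most specific first."""
    name: str
    candidates: list  # (attribute, value) pairs
    healed_from: list = field(default_factory=list)

    def find(self, page):
        """Return the first element matched by any candidate, healing if needed."""
        for index, (attr, value) in enumerate(self.candidates):
            for element in page:
                if element.attrs.get(attr) == value:
                    if index > 0:
                        # The primary locator no longer matches: remember it,
                        # then promote the candidate that worked to the front.
                        self.healed_from.append(self.candidates[0])
                        self.candidates.insert(0, self.candidates.pop(index))
                    return element
        raise LookupError(f"No candidate locator matched {self.name!r}")


login_button = Locator(
    "login button",
    [("id", "btn-login"), ("text", "Log in"), ("data-test", "login")],
)
old_page = [Element("button", {"id": "btn-login", "text": "Log in", "data-test": "login"})]
new_page = [Element("button", {"id": "submit-42", "text": "Log in", "data-test": "login"})]

login_button.find(old_page)        # matches on the original id
login_button.find(new_page)        # id changed, so it falls back to the button text
print(login_button.candidates[0])  # ('text', 'Log in')
print(login_button.healed_from)    # [('id', 'btn-login')]
```

A production tool would more likely score candidates by similarity instead of requiring exact attribute matches, but the fallback-and-promote loop is the core of the pattern.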

3. Smart Test Execution

AI prioritizes test cases based on risk, usage frequency, or historical defect data. Genqe optimizes execution by focusing on the most critical parts of your application first, saving time and resources.
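A simple way to picture risk-based prioritization is a weighted score per test. The sketch below assumes three signals (recent failure rate, share of user traffic hitting the covered feature, and whether the test covers code changed in the current commit) and hypothetical fixed weights. It illustrates the general technique and is not how Genqe computes its ordering.

```python
from dataclasses import dataclass


@dataclass
class CaseStats:
    """Per-test signals; field names and ranges are assumptions for this sketch."""
    name: str
    failure_rate: float          # fraction of recent runs that failed (0.0-1.0)
    usage_share: float           # share of user traffic on the covered feature (0.0-1.0)
    touches_changed_code: bool   # covers files changed in the current commit?


# Hypothetical fixed weights; a real tool might tune or learn them from history.
WEIGHTS = {"failure_rate": 0.5, "usage_share": 0.3, "changed": 0.2}


def risk_score(case: CaseStats) -> float:
    """Weighted sum of the three signals, giving a score between 0 and 1."""
    return (
        WEIGHTS["failure_rate"] * case.failure_rate
        + WEIGHTS["usage_share"] * case.usage_share
        + WEIGHTS["changed"] * (1.0 if case.touches_changed_code else 0.0)
    )


def prioritize(cases: list[CaseStats]) -> list[str]:
    """Return test names from highest to lowest risk."""
    return [c.name for c in sorted(cases, key=risk_score, reverse=True)]


suite = [
    CaseStats("test_profile_edit", failure_rate=0.02, usage_share=0.05, touches_changed_code=False),
    CaseStats("test_checkout", failure_rate=0.10, usage_share=0.40, touches_changed_code=True),
    CaseStats("test_login", failure_rate=0.05, usage_share=0.90, touches_changed_code=False),
]
print(prioritize(suite))  # ['test_checkout', 'test_login', 'test_profile_edit']
```

Running the suite in this order means the checkout test, which is both failure-prone and affected by the current change, reports first.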

4. Defect Prediction

By analyzing code history, bug patterns, and commit data, AI can predict where defects are most likely to occur. Genqe uses intelligent analytics to suggest focus areas for testing, reducing the chances of post-release bugs.
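One well-known, non-AI baseline for defect prediction is hotspot analysis: files that have needed many bug-fix commits in the past are likely to need more. The sketch assumes commit records have already been extracted (for example from `git log --name-only`) and uses a hypothetical keyword pattern to recognize bug-fix messages. It is not Genqe's analytics.

```python
import re
from collections import Counter

# Hypothetical commit records; real ones could be parsed from `git log --name-only`.
commits = [
    {"message": "Fix crash when cart is empty", "files": ["cart.py", "checkout.py"]},
    {"message": "Add wishlist feature", "files": ["wishlist.py", "cart.py"]},
    {"message": "fix: rounding bug in totals", "files": ["checkout.py"]},
    {"message": "Bugfix for login timeout", "files": ["auth.py"]},
    {"message": "Refactor styles", "files": ["theme.py"]},
]

# Assumed convention: bug-fix commits mention one of these words.
BUG_FIX_PATTERN = re.compile(r"\b(fix|bug|bugfix|defect)\b", re.IGNORECASE)


def bug_fix_counts(commit_log):
    """Count how many bug-fix commits touched each file."""
    counts = Counter()
    for commit in commit_log:
        if BUG_FIX_PATTERN.search(commit["message"]):
            counts.update(commit["files"])
    return counts


def hotspots(commit_log, top_n=2):
    """Return the files with the most bug-fix commits, most defect-prone first."""
    return [path for path, _ in bug_fix_counts(commit_log).most_common(top_n)]


print(hotspots(commits))  # ['checkout.py', 'cart.py']
```

Testing effort would then be focused on the top-ranked files first.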

5. Intelligent Test Maintenance

Instead of updating hundreds of scripts manually, Genqe uses AI to identify outdated tests, obsolete steps, or missing validations. This helps keep the test suite healthy and effective over time.
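The kind of check described here can be approximated without AI. This sketch assumes each test is described by the UI element IDs it touches and a count of its assertions (both hypothetical data shapes). It then flags tests that target elements missing from the current build or that validate nothing.

```python
# Hypothetical test definitions: element ids each test touches, plus its assertion count.
tests = {
    "test_login": {"element_ids": ["username", "password", "btn-login"], "assertions": 2},
    "test_promo_banner": {"element_ids": ["promo-banner"], "assertions": 1},
    "test_search": {"element_ids": ["search-box", "search-submit"], "assertions": 0},
}

# Element ids present in the latest build (assumed to come from a UI snapshot).
current_ids = {"username", "password", "btn-login", "search-box", "search-submit"}


def audit(test_defs, live_ids):
    """Flag tests that reference missing elements or never assert anything."""
    report = {}
    for name, spec in test_defs.items():
        issues = []
        missing = [eid for eid in spec["element_ids"] if eid not in live_ids]
        if missing:
            issues.append(f"references removed elements: {', '.join(missing)}")
        if spec["assertions"] == 0:
            issues.append("has no assertions (missing validation)")
        if issues:
            report[name] = issues
    return report


for test_name, problems in audit(tests, current_ids).items():
    print(test_name, "->", "; ".join(problems))
```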

6. Natural Language Interface

Genqe’s AI allows testers to write test cases in plain English. No coding required. Just describe what you want to test, and Genqe converts it into executable automation scripts using its proprietary AI engine.

Genqe: The Future of AI-Powered Testing

Genqe is a next-generation test automation platform that harnesses the full potential of AI to simplify and supercharge software testing. Unlike conventional tools that require manual coding and upkeep, Genqe offers a seamless, no-code environment where even non-technical users can create, execute, and maintain automated tests.

Key Features of Genqe:

AI-Based Test Case Creation: Write in natural language; Genqe converts it to automated scripts.

Cross-Platform Testing: Supports web, mobile, desktop, and API testing from a single interface.

Visual Testing: Genqe can compare UI elements pixel-by-pixel using AI vision to catch subtle changes.

Self-Healing Tests: Automatically updates locators and UI paths when the application changes.

Smart Dashboards: Real-time insights into test results, flaky tests, and potential risk areas.

Collaboration Ready: Integrates with CI/CD tools and collaboration platforms for team-based testing.

By reducing the technical barrier, Genqe empowers manual testers, business analysts, and QA engineers alike to participate in the test automation process.

How Genqe Enhances the Testing Lifecycle

Let’s walk through how Genqe transforms the typical testing lifecycle with AI:

1. Requirement Analysis

Genqe can interpret user stories and requirements to create a list of test scenarios. This ensures alignment with business goals and prevents missing test coverage.

2. Test Creation

Instead of writing lines of code, users type scenarios like:

“Login with valid credentials and verify the dashboard loads.”

Genqe instantly generates the automated script for it, complete with validations.

3. Test Execution

Tests can be scheduled, run in parallel, or triggered automatically from CI/CD pipelines. Genqe supports all major browsers and devices for comprehensive coverage.

4. Test Maintenance

When an element’s ID or structure changes, Genqe auto-corrects the test script using its AI engine. This drastically reduces downtime and manual rework.

5. Reporting & Insights

Genqe’s AI-driven analytics identify trends, pinpoint flaky tests, and provide actionable feedback to development teams — making quality assurance a strategic contributor, not just a checkpoint.

Use Cases of AI in Testing with Genqe

E-Commerce Platform

An online shopping website sees frequent updates. Genqe helps automate the entire purchase flow while adapting to daily UI changes, helping keep the checkout process working after every release.

Mobile App Testing

With hundreds of devices and screen sizes to cover, Genqe’s AI can detect responsive-layout issues and functional bugs across platforms, reducing the burden on QA teams.

Agile Sprint Testing

In fast-moving Agile teams, Genqe enables testers to generate and run tests within minutes, keeping up with rapid development cycles.

Benefits of Using Genqe

Faster Time to Market: Automation reduces regression testing time drastically. With Genqe, you can deploy confidently in shorter cycles.

Reduced Costs: No need for large automation teams or long training hours. Genqe simplifies testing and lowers overhead.

High Accuracy: AI reduces the likelihood of missing edge cases and inconsistencies, ensuring higher-quality releases.

Increased Collaboration: With Genqe’s no-code platform, developers, testers, and product managers can all contribute to quality assurance.

Conclusion

AI is no longer a futuristic add-on; it’s an essential component of modern software testing. Tools like Genqe are leading this transformation by making testing smarter, faster, and more accessible. Genqe’s unique ability to combine natural language processing, self-healing automation, and AI-driven analytics creates a seamless testing experience from creation to execution.

As organizations strive to release high-quality software at lightning speed, leveraging AI tools like Genqe is not just an advantage — it’s a necessity.

In a world where software quality defines brand reputation, Genqe ensures you’re always a step ahead.

Why Cross Platform Mobile App Development Services Dominate 2025

The demand for fast, scalable, and user-friendly mobile applications has led to the dominance of cross platform mobile app development services in 2025. Businesses now realize that developing separate apps for Android and iOS not only doubles their efforts but also increases maintenance costs. With cross-platform frameworks evolving rapidly, it has become easier to deliver native-like experiences on multiple devices using a single codebase.

By leveraging a single development cycle, cross-platform tools help companies achieve better time-to-market, broader user reach, and efficient use of resources. These advantages make such services an integral part of modern digital strategies.

In this article, we explore why cross-platform development continues to thrive and highlight companies like Autuskeyl, known for delivering cutting-edge app solutions.

The Cross-Platform Advantage

Cross-platform development enables developers to write code once and deploy it across various platforms. This not only reduces time but also ensures uniformity in app functionality and UI.

Key Benefits:

Faster development and deployment.

Lower cost compared to native app development.

Consistent user experience across devices.

Easier updates and maintenance.

Thanks to advancements in technology, cross-platform apps today can rival native apps in terms of performance, aesthetics, and usability.

Most Popular Cross-Platform Frameworks in 2025

Flutter

Flutter remains a favorite in 2025. Backed by Google, it compiles Dart code ahead of time to native machine code, giving apps near-native performance.

Offers beautiful, customizable UI components.

Built-in hot reload speeds up development.

Extensive documentation and community support.

Flutter’s efficiency makes it ideal for startups and enterprises looking to launch their products faster.

React Native

Developed by Meta, React Native is widely adopted thanks to its JavaScript foundation. It integrates well with existing web technologies and offers strong community support.

Enables up to 90% code reuse.

Smooth integration with native modules.

Trusted by big names like Facebook and Walmart.

React Native is well-suited for apps with dynamic user interfaces and regular feature updates.

Xamarin and .NET MAUI

Microsoft's C# stack is best for apps that need robust backend integration with Azure and .NET. Xamarin itself reached end of support in May 2024, so new projects in 2025 build on its successor, .NET MAUI.

Uses C# for logic, improving code manageability.

.NET MAUI simplifies cross-platform UI design with a single shared project.

Ideal for enterprise-grade applications.

It is often chosen by corporations needing reliability, security, and seamless cloud integration.

Emerging Players to Watch

Kotlin Multiplatform

Kotlin Multiplatform (KMP), formerly promoted as Kotlin Multiplatform Mobile (KMM), is gaining traction for its ability to share business logic while keeping platform-specific UIs.

Native performance with Kotlin’s simplicity.

Official support from JetBrains and Google.

Best for companies that want a mix of native UX and shared backend logic.

Ionic + Capacitor

Ionic has evolved with Capacitor, its native runtime, allowing better integration with native functionality.

Based on web technologies.

Allows progressive web app (PWA) development too.

Ideal for businesses focusing on web-first apps with mobile reach.

Autuskeyl: A Trusted Cross-Platform Development Partner

Among the leading companies offering cross platform mobile app development services, Autuskeyl stands out. Known for its strategic approach and technical proficiency, Autuskeyl builds apps that are scalable, secure, and visually engaging.

Why Choose Autuskeyl:

Expert teams proficient in Flutter, React Native, Xamarin, and more.

Emphasis on user experience, performance, and future readiness.

Complete product lifecycle management from concept to launch.

Autuskeyl’s transparent communication and focus on timely delivery have helped them build long-term relationships with clients across industries. They also integrate DevOps practices to ensure smooth deployment and maintenance.

Real-World Applications of Cross-Platform Apps

In 2025, industries ranging from healthcare to eCommerce are relying on cross-platform apps to engage their customers. These apps enable:

Appointment booking systems for clinics.

Seamless shopping experiences across mobile devices.

Secure banking and fintech apps with minimal code redundancy.

Logistics apps that function in real time across platforms.