#demo2

Explore tagged Tumblr posts

Photo

Source “Demo II: ZZT-OOP Programming Language” by David Pinkston (1996) [DEMO2.ZZT] - “Menu” Play This World Online

0 notes

Text

THE HELLHOUND DELIVERS. . .

ㅤㅤㅤㅤㅤㅤㅤㅤTHE GRIEFER DISCORD LAYOUT !!

ㅤㅤㅤㅤꔫㅤ ༽ ㅤnf2uㅤ.ㅤ.ㅤ.ㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤ ㅤㅤㅤㅤㅤnotes !ㅤィㅤyet another one, wake up blocktales fans self indulgent the little griefer is made by @/kelcair

#𝝑𝝔ㅤ𝓖raphics !ㅤᐢ..ᐢ#ㅤSelf Indulgent Graphics#rentry#rentry graphic#rentry stuff#rentry graphics#editing resources#rentry decor#rentry gif#rentry icon#rentry inspo#rentry layouts#discord#discord banner#discord pfp#discord layouts#blocktales#blocktales demo2#griefer#griefer blocktales

138 notes

Text

headcanon that griefer gains shape-shifting power after demo2

#and hatred again because I like it#especially hatred builderman#block tales#hatred#griefer#toxichero#myart

2K notes

Text

Random thoughts and ideas. I wanna make kinsonas, I like kinning characters... there are a few I kind of want to list, but the main ones I've been wanting to make are listed below,,, along with the reasons why.

1x1x1x1 (Forsaken version or smt) - purely because from what I've heard, he was the creation of hatred from Shedletsky. I more so want to put most of my anger and shit into this kinsona, and if I do make it... I want it to be pink,,,,, grins because silly

Shelly (Dandy's World) - more so just because of my average life in school and shit. I'm here, people acknowledge that I'm here, but it's not like I'm that important and I often get ignored (also why I really like Shelly, I want to give her the love she doesn't really get. I do this with a lot of my comfort characters, where I see them suffer similar things to me and I usually get really clingy or smt with them because I want to give them a better life than what I have sometimes???)

Yatta (Dandy's World) - pretty self-explanatory, I'M HYPER LOUD AND SHIT RAGHHHH, I feel like Yatta just overall fits me, she's just mecore. Me in another world 🫶

Griefer/Brad (Blocktales) - from what I've seen of Blocktales, he was arrogant and really "selfish" in demo2, but later on (at least until like. Demo4 came out) it turned out he was only doing what he did because he didn't feel like he was enough for his dad. Personally, THIS IS ME. If I get yelled at or like. Told not to talk abt smt I'm DESTINED TO HAVE OR PROTECT, I'M SUPPOSED TO DO OR SMT. Yeah, I'll feel like I'm not good enough either and I'd try to be as good as possible too man. (Also I'm so joyous that we get to save Brad vro 🫶)

These are like. My biggest ones that I want to make kinsonas out of. Oh and Nothing There because I like this thing.

#rant or smt#EGNORE ME PLEASE. I JUST NEED SOMEWHERE TO TALK ABOUT THIS UGH.#ig personal stuff?????#personal

4 notes

Note

I might’ve sent this already but whatever

so like it took me like a month to beat cruel king (I beat em in demo2 and uhh I started playing block tales a week after it released lol) and GOD I was so relieved to win and I wanted to tweak the hell out but I was on vacation so I couldn’t tweak out ;-; I beat him with someone who was also trying to beat him, shoutout to that guy LOL

-> VERSION.6

Well congrats! (I giggled, did you tweak out as soon as you got home?)

#BT IC Confessions#BT IC Version.6#block tales#blocktales#confession blog#block tales roblox#roblox block tales

3 notes

Text

2 notes

Text

A Booster That Distorts Even More by Chaining JFET Amplifier Stages

I made a few changes to the booster built around a JFET differential amplifier that I introduced last time.

Here's the previous article.

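The JFET differential amplifier used here can amplify a signal without any bias applied (a signal centred on 0 V that swings both positive and negative). So I simply connected two of them in series. The circuit looks like this.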

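This brings the gain to about 58.4 dB. In dB that's simply double the single-stage figure, but in amplitude terms the two stages multiply: 58.4 dB is roughly 830× (about 29× per stage, squared). That's a lot of gain.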
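A quick way to check this kind of gain arithmetic: voltage gain in dB is 20·log10 of the amplitude ratio, so cascaded stages add in dB and multiply as linear ratios. This is a minimal sketch assuming the two stages are identical (so 58.4 dB splits evenly into 29.2 dB each); the function names are just for illustration.

```python
import math


def db_to_amplitude_ratio(db: float) -> float:
    """Convert a voltage (amplitude) gain in dB to a linear ratio: 10^(dB/20)."""
    return 10 ** (db / 20)


def amplitude_ratio_to_db(ratio: float) -> float:
    """Convert a linear amplitude ratio to a gain in dB: 20 * log10(ratio)."""
    return 20 * math.log10(ratio)


total_db = 58.4               # total gain of the two chained stages
per_stage_db = total_db / 2   # assumption: both stages contribute equally

per_stage = db_to_amplitude_ratio(per_stage_db)
total = db_to_amplitude_ratio(total_db)

print(f"per stage: {per_stage_db:.1f} dB = {per_stage:.1f}x")
print(f"total:     {total_db:.1f} dB = {total:.0f}x")
# Cascading multiplies the linear ratios, which is the same as adding the dB values.
print(f"per_stage * per_stage = {per_stage * per_stage:.0f}x")
```

Running the reverse direction is a handy sanity check too: for example, `amplitude_ratio_to_db(625)` gives about 55.9 dB, not 58.4 dB.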

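When you simulate it with a triangle wave, it distorts heavily. And if you look closely, you'll see that the clipping is slightly asymmetric between the top and bottom of the waveform. This is because, compared with the previous version, I changed the resistor values in the current mirror (the circuit that limits the current flowing through the transistors), changing R7 and R20 from 12k to 10k. That lowered the differential amplifier's output voltage overall (equivalent to lowering the bias voltage in an ordinary transistor circuit), and as a result the transistor in the output buffer stage goes into hard clipping.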
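To see why an off-centre operating point gives lopsided clipping, here is a toy numerical model, not a circuit simulation: a triangle wave is multiplied by the total gain and passed through an ideal hard clipper whose positive and negative limits differ. The clip limits (+4.0 V / -3.0 V) and the 10 mV input level are hypothetical values chosen only to illustrate the effect; they are not taken from the actual circuit.

```python
def triangle(n_samples: int, period: int, amplitude: float) -> list[float]:
    """Symmetric triangle wave swinging between -amplitude and +amplitude."""
    out = []
    for i in range(n_samples):
        phase = (i % period) / period  # position within the current cycle, 0..1
        if phase < 0.5:
            v = -amplitude + 4 * amplitude * phase  # rising half: -A up to +A
        else:
            v = 3 * amplitude - 4 * amplitude * phase  # falling half: +A down to -A
        out.append(v)
    return out


def hard_clip(x: float, low: float, high: float) -> float:
    """Ideal hard clipper: x passes unchanged inside [low, high], else it is flattened."""
    return max(low, min(high, x))


# Hypothetical numbers for illustration only (not measured from the circuit):
GAIN = 10 ** (58.4 / 20)  # about 832x, the total gain quoted in the post
INPUT_PEAK = 0.01         # assumed 10 mV peak triangle input
CLIP_HIGH = 4.0           # assumed positive limit (V)
CLIP_LOW = -3.0           # assumed negative limit, closer to 0 V because the operating point sits off-centre

x = triangle(200, 100, INPUT_PEAK)
y = [hard_clip(GAIN * v, CLIP_LOW, CLIP_HIGH) for v in x]

clipped_high = sum(1 for v in y if v == CLIP_HIGH)
clipped_low = sum(1 for v in y if v == CLIP_LOW)
print(f"output peaks: {max(y):+.1f} V / {min(y):+.1f} V")
print(f"samples clipped: top {clipped_high}, bottom {clipped_low}")
```

Because the negative limit is nearer to the signal's centre, the bottom of the wave flattens earlier and for longer than the top, which is the same kind of asymmetry visible in the simulated waveform.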

Here's what it actually sounds like.

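In this circuit, changing the resistor values in the current mirror or the differential amplifier changes how it clips and where its operating point sits, which in turn changes the character of the distortion, so it seems worth experimenting with. (To be continued.)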

2 notes

Text

can i admit something. every time griefer called us punk in demo2 i got flustered

0 notes

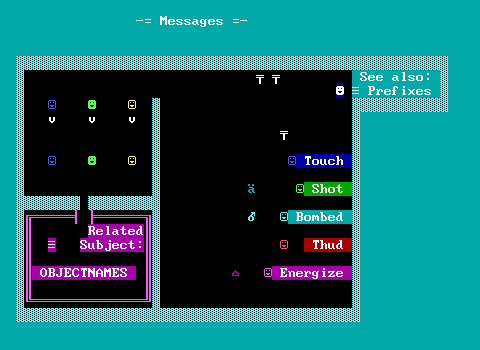

Photo

Source “Demo II: ZZT-OOP Programming Language” by David Pinkston (1996) [DEMO2.ZZT] - “Messages” Play This World Online

1 note

Audio

(moodybluezzzz)

0 notes

Text

flyswatter

demo2

buddha

cheshire cat

dude ranch

enema of the state

take off your pants and jacket

untitled

neighborhoods

california

nine

one more time...

1 note

Text

Recording gameplay for the next vid

And lemme tell ya rn I'M SUFFERING-WHY DOES EVERYONE HAVE MULTIPLE ATTACKS IN DEMO2-

0 notes

Text

Melody Ep7 (Demo2) + Orquestra

1 note

Text

alright ok

1 note
