#document parsing api

Note

sorry if this is too personal and pls ignore if you want to, i remember you said you work as a writer before. do you mind explaining what kind of writing you do and how you got into it?

i work as a technical writer. more specifically i work as a technical writer for a software company. right now i'm writing client-facing stuff but previously (at my last company) i worked on aws and rest api documentation for engineers

as for how i got into it, my degree is in something completely unrelated. but i went to a prestigious (relative to where i live) school with an extremely good internship program, and because the program wasn't restricted to only jobs related to your degree, i was able to get jobs in technical writing despite the zero correlation. i was really lucky to have my first few internships be with massive companies which helped build my resume for after i graduated

this is of course not the most representative experience. from what i can tell most technical writers are either a) people who majored in something like english and then took online certifications in coding, or b) people who majored in computer science, engineering, etc. who happen to be good writers

having an above average understanding of any coding language but especially javascript (typescript), go, python, and java helps a lot in landing jobs in tech. at my old job my coworker (who had the same responsibilities as me) really struggled with the workload that i found very easy, mainly because he didn't know any coding languages and so it took him a lot longer to parse the information we were given

Text

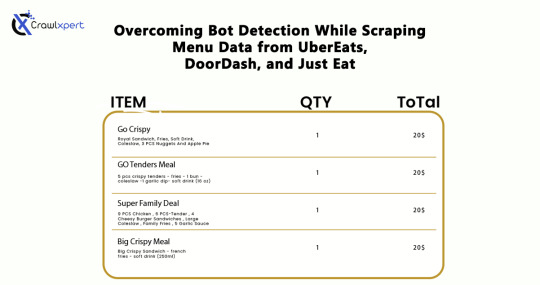

Overcoming Bot Detection While Scraping Menu Data from UberEats, DoorDash, and Just Eat

Introduction

Web scraping is a practical way to collect menu data from food delivery platforms such as UberEats, DoorDash, and Just Eat. However, these websites use elaborate bot detection methods to stop automated data collection. Getting past them calls for advanced scraping techniques: rotating IPs, headless browsing, CAPTCHA solving, and AI-based methods.

This guide discusses how to bypass bot detection during menu data scraping, the challenges involved, and best practices for seamless and ethical data extraction.

Understanding Bot Detection on Food Delivery Platforms

1. Common Bot Detection Techniques

Food delivery platforms use various methods to block automated scrapers:

IP Blocking – Detects repeated requests from the same IP and blocks access.

User-Agent Tracking – Inspects the User-Agent header and blocks requests whose value is missing or matches known automation tools.

CAPTCHA Challenges – Requires solving puzzles to verify human presence.

JavaScript Challenges – Serves scripts that a real browser must execute, so clients that fetch pages without running JavaScript fail the check.

Behavioral Analysis – Tracks mouse movements, scrolling, and keystrokes to differentiate bots from humans.

2. Rate Limiting and Request Patterns

Platforms monitor the frequency of requests coming from a specific IP or user session. If a scraper makes too many requests within a short time frame, it triggers rate limiting, causing the scraper to receive 403 Forbidden or 429 Too Many Requests errors.
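A minimal sketch of how a client might react to those status codes, assuming a `Retry-After` header given in seconds (the header can also carry an HTTP date, which this sketch does not handle). The function names and default values are illustrative, not taken from any particular library.

```python
import random

def backoff_delay(attempt, retry_after=None, base=1.0, cap=60.0):
    """Seconds to wait before retry number `attempt` (0-based)."""
    if retry_after is not None:
        # A server-supplied Retry-After value (in seconds) takes priority.
        return float(retry_after)
    # Exponential growth, capped, with full jitter so that many clients
    # do not retry at exactly the same moment.
    ceiling = min(cap, base * (2 ** attempt))
    return random.uniform(0, ceiling)

def should_retry(status_code):
    # 429 signals rate limiting and is worth retrying after a pause.
    # 403 usually means the request was refused outright, so this
    # sketch treats it as a signal to stop rather than retry.
    return status_code == 429
```

Waiting at least as long as the server asks keeps the request rate within the limit the platform has published for that session.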

3. Device Fingerprinting

Many websites use sophisticated techniques to detect unique attributes of a browser and device. This includes screen resolution, installed plugins, and system fonts. If a scraper's fingerprint matches a known bot signature, it gets flagged.

Techniques to Overcome Bot Detection

1. IP Rotation and Proxy Management

Using a pool of rotating IPs helps avoid detection and blocking.

Use residential proxies instead of data center IPs.

Rotate IPs with each request to simulate different users.

Leverage proxy providers like Bright Data, ScraperAPI, and Smartproxy.

Implement session-based IP switching to maintain persistence.

2. Mimic Human Browsing Behavior

To appear more human-like, scrapers should:

Introduce random time delays between requests.

Use browser automation tools like Puppeteer or Playwright, which drive headless browsers, to simulate real interactions.

Scroll pages and click elements programmatically to mimic real user behavior.

Randomize mouse movements and keyboard inputs.

Avoid loading pages at robotic speeds; introduce a natural browsing flow.

3. Bypassing CAPTCHA Challenges

Implement automated CAPTCHA-solving services like 2Captcha, Anti-Captcha, or DeathByCaptcha.

Use machine learning models to recognize and solve simple CAPTCHAs.

Avoid triggering CAPTCHAs by limiting request frequency and mimicking human navigation.

Employ AI-based CAPTCHA solvers that use pattern recognition to bypass common challenges.

4. Handling JavaScript-Rendered Content

Use Selenium, Puppeteer, or Playwright to interact with JavaScript-heavy pages.

Extract data directly from network requests instead of parsing the rendered HTML.

Load pages dynamically to prevent detection through static scrapers.

Emulate browser interactions by executing JavaScript code as real users would.

Cache previously scraped data to minimize redundant requests.
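The caching point in the list above can be sketched as a small in-memory cache with a time-to-live. This is an illustration only: the class name, the `ttl_seconds` parameter, and the injectable `clock` are all assumptions made for the example, and a production scraper would more likely persist entries to disk or a database.

```python
import time

class TTLCache:
    """Keeps values for `ttl_seconds`, then treats them as missing."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so the cache can be tested without sleeping
        self._store = {}

    def get(self, key):
        entry = self._store.get(key)
        if entry is None:
            return None
        stored_at, value = entry
        if self.clock() - stored_at >= self.ttl:
            # Entry is stale: drop it so the caller fetches a fresh copy.
            del self._store[key]
            return None
        return value

    def put(self, key, value):
        self._store[key] = (self.clock(), value)
```

A scraper would call `get(url)` before making a request and `put(url, body)` after a successful one, so a menu page fetched a few minutes ago is not requested again.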

5. API-Based Extraction (Where Possible)

Some food delivery platforms offer APIs to access menu data. If available:

Check the official API documentation for pricing and access conditions.

Use API keys responsibly and avoid exceeding rate limits.

Combine API-based and web scraping approaches for optimal efficiency.

6. Using AI for Advanced Scraping

Machine learning models can help scrapers adapt to evolving anti-bot measures by:

Detecting and avoiding honeypots designed to catch bots.

Using natural language processing (NLP) to extract and categorize menu data efficiently.

Predicting changes in website structure to maintain scraper functionality.

Best Practices for Ethical Web Scraping

While overcoming bot detection is necessary, ethical web scraping ensures compliance with legal and industry standards:

Respect Robots.txt – Follow site policies on data access.

Avoid Excessive Requests – Scrape efficiently to prevent server overload.

Use Data Responsibly – Extracted data should be used for legitimate business insights only.

Maintain Transparency – If possible, obtain permission before scraping sensitive data.

Ensure Data Accuracy – Validate extracted data to avoid misleading information.
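As a rough illustration of the robots.txt point above, Python's standard library includes `urllib.robotparser`. The robots.txt content, the bot name, and the URLs below are made up for the example; a real scraper would load the file from the target site instead of a string.

```python
from urllib.robotparser import RobotFileParser

def build_parser(robots_txt):
    """Parse robots.txt content that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser

# Hypothetical robots.txt content, used only for this example.
EXAMPLE_ROBOTS = """\
User-agent: *
Disallow: /checkout/
Crawl-delay: 5
"""

parser = build_parser(EXAMPLE_ROBOTS)
blocked = parser.can_fetch("menu-research-bot", "https://example.com/checkout/cart")
allowed = parser.can_fetch("menu-research-bot", "https://example.com/restaurants/1")
delay = parser.crawl_delay("menu-research-bot")
print(blocked, allowed, delay)  # False True 5
```

Checking `can_fetch` before each request and sleeping for `crawl_delay` seconds between requests covers the first two practices in the list.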

Challenges and Solutions for Long-Term Scraping Success

1. Managing Dynamic Website Changes

Food delivery platforms frequently update their website structure. Strategies to mitigate this include:

Monitoring website changes with automated UI tests.

Using resilient selectors (for example, XPath expressions keyed on stable attributes or visible text) instead of fixed positions in the HTML structure.

Implementing fallback scraping techniques in case of site modifications.

2. Avoiding Account Bans and Detection

If scraping requires logging into an account, prevent bans by:

Using multiple accounts to distribute request loads.

Avoiding excessive logins from the same device or IP.

Randomizing browser fingerprints using tools like Multilogin.

3. Cost Considerations for Large-Scale Scraping

Maintaining an advanced scraping infrastructure can be expensive. Cost optimization strategies include:

Using serverless functions to run scrapers on demand.

Choosing affordable proxy providers that balance performance and cost.

Optimizing scraper efficiency to reduce unnecessary requests.

Future Trends in Web Scraping for Food Delivery Data

As web scraping evolves, new advancements are shaping how businesses collect menu data:

AI-Powered Scrapers – Machine learning models will adapt more efficiently to website changes.

Increased Use of APIs – Companies will increasingly rely on API access instead of web scraping.

Stronger Anti-Scraping Technologies – Platforms will develop more advanced security measures.

Ethical Scraping Frameworks – Legal guidelines and compliance measures will become more standardized.

Conclusion

Uber Eats, DoorDash, and Just Eat pose significant challenges for menu data scraping, mainly because of their advanced bot detection systems. Nevertheless, by applying IP rotation, headless browsing, CAPTCHA solving, and JavaScript execution, augmented with AI tools, businesses can collect valuable data without tripping anti-scraping measures.

If you are looking for an automated and reliable web scraper, CrawlXpert specializes in tools and services that extract menu data efficiently while staying legally and ethically compliant. The right techniques, along with keeping up with recent trends in web scraping, will keep food delivery data collection successful over the long term.

Know More : https://www.crawlxpert.com/blog/scraping-menu-data-from-ubereats-doordash-and-just-eat

#ScrapingMenuDatafromUberEats#ScrapingMenuDatafromDoorDash#ScrapingMenuDatafromJustEat#ScrapingforFoodDeliveryData

Text

The best receipt OCR engine is the Tabscanner API

Why? It takes first place on:

Accuracy at 99% (amazing AI engineering and massive dataset testing)

Speed: receipt scanning, parsing and processing usually finishes within 2 seconds and is often sub-second

Specialization: Tabscanner specialises in POS receipts for all industries and markets. Other companies also handle invoices and other documents. Tabscanner handles some invoices, but only those formatted like receipts.

#tabscanner#receipt ocr#ocr receipt#api#python#json#csharp#javascript#dev#app developers#expenses#loyalty

Text

OCR-Driven PAN Verification with Real-Time Results

Users can simply click a photo of their PAN card, and SprintVerify’s KYC API extracts all relevant fields—name, DOB, PAN number—using OCR, and validates them with backend data. It’s quick, lightweight, and eliminates the need for manual entry or server-side document parsing. This is particularly helpful for platforms that require tax identity verification like stock brokers, NBFCs, or lending apps.
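Whatever an OCR step returns, a cheap format check on the extracted fields catches many misreads before any backend validation. The sketch below assumes a JSON response with `name` and `pan_number` keys. Those field names are hypothetical and are not SprintVerify's documented schema. The pattern reflects the standard PAN layout of five letters, four digits, and one letter.

```python
import json
import re

# Standard PAN layout: five letters, four digits, one letter.
PAN_PATTERN = re.compile(r"[A-Z]{5}[0-9]{4}[A-Z]")

def check_pan_fields(response_text):
    """Return a list of problems found in a (hypothetical) OCR response."""
    data = json.loads(response_text)
    problems = []
    pan = str(data.get("pan_number") or "").strip().upper()
    if not PAN_PATTERN.fullmatch(pan):
        problems.append("pan_number has unexpected format")
    if not str(data.get("name") or "").strip():
        problems.append("name is empty")
    return problems
```

An empty list means the fields look plausible. It does not mean the PAN exists, which still requires the backend check described above.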

Text

The Role of OCR in Digital KYC

OCR (Optical Character Recognition) enables automatic data extraction from user-submitted documents like Aadhaar, PAN, and Voter ID. SprintVerify’s OCR API reads these documents, parses names, addresses, and document numbers, and returns structured JSON data in seconds. This eliminates manual entry, reduces errors, and accelerates KYC workflows. Ideal for platforms handling high KYC volumes, OCR makes identity verification scalable and efficient. Combined with other SprintVerify APIs, it creates a complete, automated onboarding engine. Whether you're onboarding 100 or 10,000 users daily, OCR ensures your processes are fast, accurate, and ready for growth.

Text

How to Find Unit Number in Property Finder Listings Instantly:

A Complete Guide for Dubai Property Buyers

In Dubai’s fast-paced and transparent real estate market, having access to precise property details can make or break a deal. Among those key details is the unit number, which is especially relevant for buyers, investors, and agents looking to verify property listings or cross-reference ownership. However, finding unit numbers on listing platforms isn’t always straightforward. That’s why knowing how to find a unit number in Property Finder listings quickly matters more than ever in 2025.

This guide will walk you through the importance of unit numbers, the challenges users face, and the best tools or methods to reveal them—even when they're not visible at first glance.

Why Are Unit Numbers Important in Dubai Real Estate?

Dubai’s real estate sector is highly regulated, with each unit registered under a unique number through the Dubai Land Department (DLD). This unit number acts like a fingerprint for the property. It can be used to:

Verify the ownership and legal status of the property

Check mortgage or lien status through DLD services

Review exact floor location and floor plans

Match the property listing with official government records

For buyers, it ensures they’re investing in a verified and existing unit. For agents, it prevents the risk of duplicating or falsely listing unavailable properties.

But despite its importance, unit numbers are rarely shown on public listings—largely due to privacy rules and platform limitations.

Challenges of Finding Unit Numbers on Property Portals

On portals like Property Finder, Bayut, or Dubizzle, listings typically show details like community, building name, number of bedrooms, and size. But often, they stop short of revealing the unit number. This creates challenges for:

Verifying that the unit is real and currently on the market

Avoiding fake or duplicated listings

Conducting due diligence when purchasing off-plan or secondary properties

That's where the need to find unit numbers in Property Finder listings quickly comes in. Smart tools and techniques can now help you do just that.

How to Find Unit Number in Property Finder Listings Instantly

There are several ways you can attempt to retrieve unit numbers, depending on how the listing is structured:

1. Check Attached Brochures or Floor Plans

Sometimes agents upload official developer brochures or building floor plans. These files often contain unit numbers for each apartment or villa. Scrutinize these documents carefully.

2. Contact the Agent Directly

If you see a property you're interested in, call or WhatsApp the agent and request the unit number. Be professional and let them know you're doing due diligence.

3. Use a Property Finder Unit Number Finder Tool

Several Chrome extensions and AI-based tools have emerged in 2025 that allow users to extract hidden metadata or detect property information embedded in the page. A reliable unit number finder can analyze the HTML of the listing, parse attached documents, and even reference DLD APIs to suggest likely unit numbers.

These tools are becoming increasingly popular with brokers, investors, and even property tech startups.

Tips for Using a Property Finder Unit Number Finder Effectively

When using such tools, make sure to:

Only download browser extensions from trusted real estate technology providers

Cross-check results with Dubai Land Department data (via the Dubai REST app or the DLD website)

Always confirm the unit with the listing agent before proceeding with documentation

Keep in mind that while these tools are helpful, they are meant to assist—not replace—manual verification.

Conclusion: Get Ahead with Better Property Insight

In a competitive and digitally driven property market like Dubai, being informed is your greatest advantage. Knowing how to find a unit number in Property Finder listings saves time, increases transparency, and helps protect you from making the wrong investment. With the rise of smart real estate tools, a reliable unit number finder can help you unlock crucial data and boost your confidence in every deal.

Whether you're an agent working with high-volume listings or a buyer seeking investment property, mastering this process in 2025 gives you a serious edge.

Text

What Are the Key Technologies Behind Successful Generative AI Platform Development for Modern Enterprises?

The rise of generative AI has shifted the gears of enterprise innovation. From dynamic content creation and hyper-personalized marketing to real-time decision support and autonomous workflows, generative AI is no longer just a trend—it’s a transformative business enabler. But behind every successful generative AI platform lies a complex stack of powerful technologies working in unison.

So, what exactly powers these platforms? In this blog, we’ll break down the key technologies driving enterprise-grade generative AI platform development and how they collectively enable scalability, adaptability, and business impact.

1. Large Language Models (LLMs): The Cognitive Core

At the heart of generative AI platforms are Large Language Models (LLMs) like GPT, LLaMA, Claude, and Mistral. These models are trained on vast datasets and exhibit emergent abilities to reason, summarize, translate, and generate human-like text.

Why LLMs matter:

They form the foundational layer for text-based generation, reasoning, and conversation.

They enable multi-turn interactions, intent recognition, and contextual understanding.

Enterprise-grade platforms fine-tune LLMs on domain-specific corpora for better performance.

2. Vector Databases: The Memory Layer for Contextual Intelligence

Generative AI isn’t just about creating something new. It’s also about recalling relevant context. This is where vector databases and similarity-search libraries like Pinecone, Weaviate, Qdrant, and FAISS come into play.

Key benefits:

Store and retrieve high-dimensional embeddings that represent knowledge in context.

Facilitate semantic search and RAG (Retrieval-Augmented Generation) pipelines.

Power real-time personalization, document Q&A, and multi-modal experiences.
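The core operation behind the benefits listed above is nearest-neighbour search over embeddings. Here is a toy sketch in plain Python, assuming three-dimensional vectors and made-up document ids. Real systems use embeddings with hundreds or thousands of dimensions and approximate indexes rather than a full scan.

```python
import math

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction to compare
    return dot / (norm_a * norm_b)

def top_k(query, index, k=2):
    """Return the ids of the k stored vectors most similar to `query`."""
    scored = [(cosine_similarity(query, vec), doc_id) for doc_id, vec in index.items()]
    scored.sort(reverse=True)
    return [doc_id for _, doc_id in scored[:k]]

# Made-up embeddings, for illustration only.
index = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.1, 0.9, 0.0],
    "api-keys": [0.0, 0.1, 0.9],
}
print(top_k([0.8, 0.2, 0.0], index))  # ['refund-policy', 'shipping-times']
```

A vector database performs the same ranking, but with indexing structures that avoid comparing the query against every stored vector.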

3. Retrieval-Augmented Generation (RAG): Bridging Static Models with Live Knowledge

LLMs are powerful but static. RAG systems make them dynamic by injecting real-time, relevant data during inference. This technique combines document retrieval with generative output.

Why RAG is a game-changer:

Combines the precision of search engines with the fluency of LLMs.

Ensures outputs are grounded in verified, current knowledge—ideal for enterprise use cases.

Reduces hallucinations and enhances trust in AI responses.
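The "injecting data during inference" step usually comes down to placing retrieved passages into the prompt. A minimal sketch follows, assuming the passages have already been retrieved. The function name, the instruction wording, and the `max_chars` budget are illustrative choices, not a standard.

```python
def build_grounded_prompt(question, passages, max_chars=1500):
    """Assemble a prompt that asks the model to answer from numbered context."""
    context_lines = []
    used = 0
    for i, passage in enumerate(passages, start=1):
        line = f"[{i}] {passage.strip()}"
        if used + len(line) > max_chars:
            break  # stop before exceeding the context budget
        context_lines.append(line)
        used += len(line)
    context = "\n".join(context_lines)
    return (
        "Answer using only the numbered context below. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
    )
```

Numbering the passages lets the model cite which one it used, which makes answers easier to audit.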

4. Multi-Modal Learning and APIs: Going Beyond Text

Modern enterprises need more than text. Generative AI platforms now incorporate multi-modal capabilities—understanding and generating not just text, but also images, audio, code, and structured data.

Supporting technologies:

Vision models (e.g., CLIP, DALL·E, Gemini)

Speech-to-text and TTS (e.g., Whisper, ElevenLabs)

Code generation models (e.g., Code LLaMA, AlphaCode)

API orchestration for handling media, file parsing, and real-world tools

5. MLOps and Model Orchestration: Managing Models at Scale

Without proper orchestration, even the best AI model is just code. MLOps (Machine Learning Operations) ensures that generative models are scalable, maintainable, and production-ready.

Essential tools and practices:

ML pipeline automation (e.g., Kubeflow, MLflow)

Continuous training, evaluation, and model drift detection

CI/CD pipelines for prompt engineering and deployment

Role-based access and observability for compliance

6. Prompt Engineering and Prompt Orchestration Frameworks

Crafting the right prompts is essential to get accurate, reliable, and task-specific results from LLMs. Prompt engineering tools and libraries like LangChain, Semantic Kernel, and PromptLayer play a major role.

Why this matters:

Templates and chains allow consistency across agents and tasks.

Enable composability across use cases: summarization, extraction, Q&A, rewriting, etc.

Enhance reusability and traceability across user sessions.

7. Secure and Scalable Cloud Infrastructure

Enterprise-grade generative AI platforms require robust infrastructure that supports high computational loads, secure data handling, and elastic scalability.

Common tech stack includes:

GPU-accelerated cloud compute (e.g., AWS SageMaker, Azure OpenAI, Google Vertex AI)

Kubernetes-based deployment for scalability

IAM and VPC configurations for enterprise security

Serverless backend and function-as-a-service (FaaS) for lightweight interactions

8. Fine-Tuning and Custom Model Training

Out-of-the-box models can’t always deliver domain-specific value. Fine-tuning using transfer learning, LoRA (Low-Rank Adaptation), or PEFT (Parameter-Efficient Fine-Tuning) helps mold generic LLMs into business-ready agents.

Use cases:

Legal document summarization

Pharma-specific regulatory Q&A

Financial report analysis

Customer support personalization
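To give a sense of why LoRA counts as parameter-efficient: instead of updating a full weight matrix of shape (d_out, d_in), it trains two small matrices of shapes (d_out, r) and (r, d_in) whose product is added to the frozen weights. The sketch below only counts trainable parameters. The layer size and rank are example values, not recommendations.

```python
def full_update_params(d_out, d_in):
    # Fine-tuning the whole matrix trains every entry.
    return d_out * d_in

def lora_update_params(d_out, d_in, rank):
    # B has shape (d_out, rank) and A has shape (rank, d_in).
    return rank * (d_out + d_in)

full = full_update_params(4096, 4096)
lora = lora_update_params(4096, 4096, rank=8)
print(full, lora, full // lora)  # 16777216 65536 256
```

For this one layer, the low-rank update trains 256 times fewer parameters than full fine-tuning.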

9. Governance, Compliance, and Explainability Layer

As enterprises adopt generative AI, they face mounting pressure to ensure AI governance, compliance, and auditability. Explainable AI (XAI) technologies, model interpretability tools, and usage tracking systems are essential.

Technologies that help:

Responsible AI frameworks (e.g., Microsoft Responsible AI Dashboard)

Policy enforcement engines (e.g., Open Policy Agent)

Consent-aware data management (for HIPAA, GDPR, SOC 2, etc.)

AI usage dashboards and token consumption monitoring

10. Agent Frameworks for Task Automation

Generative AI platforms are evolving beyond chat. Modern solutions include autonomous agents that can plan, execute, and adapt to tasks using APIs, memory, and tools.

Tools powering agents:

LangChain Agents

AutoGen by Microsoft

CrewAI, BabyAGI, OpenAgents

Planner-executor models and tool calling (OpenAI function calling, ReAct, etc.)
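Underneath these frameworks, tool calling follows a simple pattern: the model emits a structured request naming a tool and its arguments, and the host program looks the tool up and runs it. The sketch below uses a made-up JSON shape with `name` and `arguments` keys. It is not the exact format of OpenAI function calling or of any framework listed above.

```python
import json

TOOLS = {}

def tool(fn):
    """Register a function so the executor can find it by name."""
    TOOLS[fn.__name__] = fn
    return fn

@tool
def add(a, b):
    return a + b

def execute_tool_call(call_json):
    """Run one tool request emitted by a model (hypothetical message shape)."""
    call = json.loads(call_json)
    name = call["name"]
    if name not in TOOLS:
        # Report the problem back to the model instead of raising.
        return {"error": f"unknown tool: {name}"}
    return {"result": TOOLS[name](**call["arguments"])}

print(execute_tool_call('{"name": "add", "arguments": {"a": 2, "b": 3}}'))  # {'result': 5}
```

Restricting execution to an explicit registry means the model can only trigger functions the developer has chosen to expose.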

Conclusion

The future of generative AI for enterprises lies in modular, multi-layered platforms built with precision. It's no longer just about having a powerful model—it’s about integrating it with the right memory, orchestration, compliance, and multi-modal capabilities. These technologies don’t just enable cool demos—they drive real business transformation, turning AI into a strategic asset.

For modern enterprises, investing in these core technologies means unlocking a future where every department, process, and decision can be enhanced with intelligent automation.

Text

Decoding Data in PHP: The Ultimate Guide to Reading File Stream Data to String in 2025

Reading file content into a string is one of the most common tasks in PHP development. Whether you're parsing configuration files like JSON or INI, processing uploaded documents, or consuming data from streams and APIs, being able to efficiently and correctly read file data into a string is essential. With PHP 8.x, developers have access to mature, robust file handling functions, but choosing the right one, and understanding how to handle character encoding, memory efficiency, and errors, is key to writing performant and reliable code.

In this comprehensive guide, we’ll walk through the best ways to read file stream data into a string in PHP as of 2025, complete with modern practices, working code, and real-world insights.

Why Read File Stream Data to String in PHP?

There are many scenarios in PHP applications where you need to convert a file's contents into a string:

Parsing Configuration Files: Formats like JSON, INI, and YAML are typically read as strings before being parsed into arrays or objects.

Reading Text Documents: Applications often need to display or analyze user-uploaded documents.

Processing Network Streams: APIs or socket streams may provide data that needs to be read and handled as strings.

General File Processing: Logging, data import/export, and command-line tools often require reading file data as text.

Methods for Reading and Converting File Stream Data to String in PHP

1. Using file_get_contents()

This is the simplest and most widely used method to read an entire file into a string.

✅ How it works: It takes a filename (or URL) and returns the file content as a string.

📌 Pros:

Very concise.

Ideal for small to medium-sized files.

⚠️ Cons:

Loads the entire file into memory, which can be problematic with large files.

Error handling must be added explicitly: on failure the function returns false and emits a warning rather than throwing an exception, so check the return value.

2. Using fread() with fopen()

This method provides more control, allowing you to read file contents in chunks or all at once.

📌 Pros:

Greater control over how much data is read.

Better for handling large files in chunks.

⚠️ Cons:

Requires manual file handling.

filesize() may not be reliable for network streams or special files.

3. Reading Line-by-Line Using fgets()

Useful when you want to process large files without loading them entirely into memory.

📌 Pros:

Memory-efficient.

Great for log processing or large data files.

⚠️ Cons:

Slower than reading in one go.

More code required to build the final string.

4. Using stream_get_contents()

Works well with generic stream resources (e.g., file streams, network connections).

📌 Pros:

Works with open file or network streams.

Less verbose than fread() in some contexts.

⚠️ Cons:

Still reads the entire remaining stream into memory.

Not ideal for very large data sets.

5. Reading Binary Data as a String

To read raw binary data, use binary mode 'rb' and understand the data's encoding.

📌 Pros:

Necessary for binary/text hybrids.

Ensures data integrity with explicit encoding.

⚠️ Cons:

You must know the original encoding.

Risk of misinterpreting binary data as text.

Handling Character Encoding in PHP

Handling character encoding properly is crucial when working with file data, especially in multilingual or international applications.

🔧 Best Practices:

Use UTF-8 wherever possible. It is the most compatible encoding.

Check the encoding of files before reading using tools like file or mb_detect_encoding().

Use mb_convert_encoding() to convert encodings explicitly:

$content = mb_convert_encoding($content, 'UTF-8', 'ISO-8859-1');

Set default encoding in php.ini:

default_charset = "UTF-8"

Be cautious when outputting string data to browsers or databases, and set correct headers (Content-Type: text/html; charset=UTF-8).

Error Handling in PHP File Operations

Proper error handling ensures your application fails gracefully.

✅ Tips:

Always check return values (fopen(), fread(), file_get_contents()).

Use try...catch blocks if using stream wrappers that support exceptions.

Log or report errors clearly for debugging.

Best Practices for Reading File Stream Data to String in PHP

✅ Use file_get_contents() for small files and quick reads.

✅ Use fread()/fgets() for large files or when you need precise control.

✅ Close file handles with fclose() to free system resources.

✅ Check and convert character encoding as needed.

✅ Implement error handling using conditionals or exceptions.

✅ Avoid reading huge files all at once. Use chunked or line-by-line methods.

✅ Use stream wrappers for non-file sources (e.g., php://input, php://memory).

Conclusion

Reading file stream data into a string is a foundational PHP skill that underpins many applications, from file processing to configuration management and beyond. PHP 8.x offers a robust set of functions to handle this task with flexibility and precision. Whether you’re using file_get_contents() for quick reads, fgets() for memory-efficient processing, or stream_get_contents() for stream-based applications, the key is understanding the trade-offs and ensuring proper character encoding and error handling. Mastering these techniques will help you write cleaner, safer, and more efficient PHP code, an essential skill for every modern PHP developer.

📘 External Resources:

PHP: file_get_contents() - Manual

PHP: fread() - Manual

PHP: stream_get_contents() - Manual

Text

Harnessing the Power of MuleSoft Intelligent Document Processing with RAVA Global Solutions

In the era of digital acceleration, businesses are constantly looking for smarter ways to manage growing volumes of documents, data, and processes. For companies in the United States seeking seamless data integration and automation, MuleSoft Intelligent Document Processing (IDP) is emerging as a game-changer. And when it comes to implementing this technology effectively, RAVA Global Solutions stands out as the best MuleSoft service provider USA businesses can trust.

What is MuleSoft Intelligent Document Processing?

Intelligent Document Processing (IDP) with MuleSoft goes beyond traditional OCR and basic data extraction. It leverages AI, machine learning, and natural language processing (NLP) to automatically ingest, interpret, and integrate structured and unstructured data from diverse documents — contracts, invoices, forms, emails, PDFs, and more — into core business systems.

Whether it's feeding parsed invoice data into Salesforce or routing customer forms to ServiceNow, MuleSoft ensures every piece of information flows intelligently and securely across your enterprise architecture.

Why MuleSoft for Document Processing?

MuleSoft’s Anypoint Platform is already well-known for its robust API-led integration capabilities. When combined with intelligent document processing features and AI integrations, it allows organizations to:

Eliminate manual data entry

Accelerate decision-making

Ensure data accuracy and compliance

Enhance customer experiences through faster turnaround

Reduce operational costs

From healthcare and legal firms to logistics and financial services, MuleSoft IDP is helping businesses automate what used to be labor-intensive workflows.

RAVA Global Solutions: Best MuleSoft Service Provider USA

At RAVA Global Solutions, we help businesses unlock the full power of MuleSoft with a strong focus on intelligent automation. Our certified MuleSoft experts design and deploy intelligent document workflows tailored to your industry, compliance requirements, and operational goals.

Here’s why U.S. enterprises consider RAVA the best MuleSoft service provider USA has to offer:

✅ Strategic MuleSoft Consulting

We understand that no two businesses are the same. Our team begins with a discovery phase, assessing your document lifecycle, data bottlenecks, and integration gaps.

✅ AI-Powered IDP Workflows

We implement custom AI models trained on your document types to extract, validate, and route information automatically — eliminating the need for human intervention.

✅ Seamless API Integration

Whether you use Salesforce, Workday, Oracle, or legacy systems, we ensure your extracted data is routed efficiently using MuleSoft APIs.

✅ Scalable and Secure

Our solutions are built for scale and compliant with standards and regulations like HIPAA, SOC 2, and GDPR, ensuring your data is always secure.

A Real-World Example: How We Transformed Document Workflows

A mid-sized insurance firm in the U.S. approached RAVA with a common challenge: they were manually processing hundreds of client claims each day. Each claim came with 5–6 documents, making manual data entry time-consuming and error-prone.

Using MuleSoft IDP, we built a workflow that:

Scanned incoming emails and attachments in real time

Used NLP to identify key claim data and extract it

Validated entries via MuleSoft’s business rules

Automatically pushed data into their claims management platform

Result:

✅ Processing time reduced by 70%

✅ Data errors reduced to nearly zero

✅ Increased team capacity without hiring

This transformation not only improved internal efficiency but drastically enhanced the customer experience — a critical competitive factor in insurance.

Why Now Is the Time for IDP

As remote work, digitization, and compliance pressure continue to rise, automating document workflows isn't a luxury — it’s a necessity. Businesses that invest in IDP today will be better positioned to compete tomorrow.

With MuleSoft intelligent document processing and the expertise of RAVA Global Solutions, your organization can future-proof its operations while reducing overhead and human error.

Partner with RAVA Global Solutions

Whether you're just beginning your automation journey or looking to enhance an existing MuleSoft infrastructure, RAVA Global Solutions is the partner to call. Recognized as the best MuleSoft service provider USA, we deliver more than technology — we deliver transformation.

📞 Get Started Today

Explore how MuleSoft IDP can revolutionize your business. Contact RAVA Global Solutions for a personalized consultation.

🔗 Related Reading:

Top Benefits of MuleSoft for Data Integration

How API-Led Connectivity Accelerates Business Growth

#salesforce#crm#it consulting#odoo#erp#agatha all along#artists on tumblr#mulesoft#software development#ravaglobalsolutions

Text

The Best APIs for Verifying International Addresses in Real-Time

In an increasingly globalized economy, businesses need accurate and verified address data to streamline operations, avoid delivery failures, and maintain a professional brand image. Real-time international address verification APIs ensure that address data entered into your system is accurate, standardized, and deliverable. Here’s an in-depth look at the best APIs for real-time international address verification in 2025.

1. Loqate by GBG

Loqate is one of the leading players in the global address verification space. With coverage across over 245 countries and territories, Loqate offers:

Real-time validation and autocompletion

Address parsing and formatting per country

Geocoding capabilities

High-speed performance and uptime

Businesses in ecommerce, logistics, finance, and government sectors rely on Loqate for its accuracy and robust global coverage.

2. Google Maps Address Validation API

Google’s Address Validation API brings the power of Google’s mapping and location data to address verification. Key features include:

Autocomplete with real-time suggestions

Parsing and component-level validation

Coverage in over 240 countries

Seamless integration with other Google services

While best suited for customer-facing applications, Google’s offering is a powerful tool for businesses needing intuitive, accurate data entry.

3. Melissa Global Address Verification API

Melissa has been a trusted data quality provider for decades. Their API offers:

Address verification in 240+ countries

Postal formatting and transliteration

Apartment/suite-level precision

Built-in duplicate detection

Melissa’s tools are particularly beneficial for large-scale database hygiene and CRM optimization.

4. Smarty (formerly SmartyStreets)

Smarty offers a high-performance international address verification API with features such as:

Intelligent address parsing

Local postal standard formatting

High accuracy and uptime

On-premise and cloud solutions

Smarty is known for its ease of integration and developer-friendly documentation.

5. PostGrid Address Verification API

PostGrid is a modern address verification platform built for developers and marketers. It provides:

Real-time address validation and autocomplete

CASS and SERP certifications

Global address standardization

Geolocation and ZIP+4 enhancements

With scalable pricing and robust APIs, PostGrid is perfect for startups and enterprises alike.

6. Experian Address Verification

Known for its data expertise, Experian’s address verification API includes:

Real-time and batch address verification

Coverage in 245+ countries

Integrated data enrichment tools

API or UI-based interaction

Experian’s API is enterprise-grade and ideal for regulated industries like banking and insurance.

7. HERE Location Services

HERE offers advanced geolocation tools along with powerful address verification. Its APIs provide:

Autocomplete and predictive address entry

Location-based verification

Regionalized formatting

Geocoding and routing capabilities

HERE is widely used in logistics, supply chain, and mobility solutions for its accurate mapping services.

8. Tomorrow.io’s Address Intelligence API

For businesses focused on logistics and environmental data, Tomorrow.io provides address validation alongside:

Real-time weather and climate data integration

Address clustering for regional delivery

Optimization of delivery routes

Perfect for companies in food delivery, outdoor event management, and field services.

Why Real-Time Verification Matters

Address verification APIs do more than just clean up data:

Reduce failed deliveries and returned mail

Lower shipping and logistics costs

Improve checkout and user experience

Ensure regulatory compliance

Support effective marketing segmentation

Key Features to Look for in an API

Global Coverage: The more countries and regions supported, the better.

Speed & Uptime: Real-time means nothing without fast, reliable responses.

Scalability: Choose APIs that scale with your traffic and data volume.

Data Privacy Compliance: GDPR, CCPA, and other regulatory considerations.

Support and Documentation: Quality developer support speeds up implementation.

Final Thoughts

Selecting the best real-time international address verification API depends on your business type, volume of addresses, and integration needs. Each of the APIs listed here offers robust functionality and global reach. By integrating a reliable address verification API, you can streamline operations, enhance data integrity, and deliver better customer experiences worldwide.

Investing in accurate, real-time address verification is not just a technical upgrade—it’s a strategic move that directly influences your bottom line and brand reputation.

1 note

·

View note

Text

Cognitive QA: The Next Evolution in Software Testing with AI

In the ever-accelerating digital landscape, conventional QA practices are struggling to keep pace with rapid releases, complex user experiences, and sprawling ecosystems of applications and APIs. As organizations push toward hyperautomation and smarter delivery pipelines, the concept of Cognitive QA has emerged as the next frontier in intelligent software testing.

Cognitive QA leverages the full spectrum of artificial intelligence — machine learning, natural language processing, predictive analytics, and reasoning — to go beyond traditional automation. It introduces a new paradigm where software can understand, learn, adapt, and recommend testing strategies much like a human would — but at machine scale and speed.

What is Cognitive QA?

Unlike conventional test automation that relies on scripted inputs and fixed outcomes, Cognitive QA mimics the way a human tester thinks. It understands the context of an application, analyzes test cases semantically, learns from past failures, and evolves continuously.

Core capabilities include:

Contextual understanding of requirements and test documentation

Self-healing test scripts that adapt to UI or logic changes

AI-generated test cases based on user behavior analytics

Continuous learning from production feedback and test outcomes

Predictive insights into defect trends and potential regressions

In essence, Cognitive QA fuses the precision of machines with the intuition of human testers, creating smarter, more responsive QA processes.

Key Components of a Cognitive QA Framework

1. Natural Language Processing (NLP)

Using NLP, Cognitive QA tools can parse user stories, requirements, and even emails to auto-generate relevant test cases — bridging the gap between business and engineering.

2. Machine Learning Models

Historical test data is fed into ML models to spot defect trends, suggest areas of risk, and prioritize test execution accordingly. Over time, the system becomes better at predicting where bugs are most likely to appear.

3. Cognitive Automation

This refers to autonomous test design, execution, and result analysis. These systems don’t just run tests — they reason through them, adapting test flows dynamically in real time based on application behavior.

4. Visual and Behavioral Testing

Cognitive QA platforms often incorporate visual validation tools and user journey simulations to ensure that changes don’t impact the perceived quality of the app — something traditional automation may miss.

Why Cognitive QA Matters Now

🔹 Faster Releases with Confidence

Modern software development is agile, fast, and iterative. Cognitive QA brings intelligent testing that scales with rapid changes — enabling faster releases without compromising quality.

🔹 Reducing Manual Overhead

By learning and adapting continuously, these systems reduce the need for manual intervention in test creation and maintenance — freeing up QA teams to focus on exploratory and creative testing.

🔹 Smart Test Prioritization

Instead of running thousands of regressions blindly, Cognitive QA can prioritize tests based on code changes, usage frequency, and defect probability — optimizing for both time and coverage.
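The prioritization idea above can be sketched as a weighted score over the three signals the paragraph names. This is a minimal illustration, not how any particular Cognitive QA platform works: the `RegressionTest` fields, the `priority` and `prioritize` helpers, and the default weights are all assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class RegressionTest:
    name: str
    touches_changed_code: bool  # does the test cover code in this change?
    usage_frequency: float      # 0..1, how often users hit the covered feature
    defect_probability: float   # 0..1, e.g. estimated from past failures


def priority(test, weights=(0.5, 0.2, 0.3)):
    """Weighted sum of the three signals; the weights are illustrative."""
    w_change, w_usage, w_defect = weights
    return (
        w_change * float(test.touches_changed_code)
        + w_usage * test.usage_frequency
        + w_defect * test.defect_probability
    )


def prioritize(tests, budget):
    """Return the `budget` highest-priority tests, highest score first."""
    return sorted(tests, key=priority, reverse=True)[:budget]
```

In practice the defect probability would come from a trained model and the weights would be tuned against historical escapes; a fixed linear score is only the simplest starting point.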

Use Cases Across Industries

Finance: Predicting where bugs may appear in high-risk workflows like transactions or reporting.

Retail: Simulating diverse user behaviors during seasonal surges or A/B tests.

Healthcare: Ensuring compliance and stability in applications handling sensitive patient data.

Enterprise SaaS: Automatically adapting test cases to evolving UI/UX designs and new features.

Platforms Powering the Shift

Leading platforms such as Genqe.ai are pioneering the adoption of Cognitive QA with solutions that integrate seamlessly into DevOps pipelines. These platforms use AI to dynamically assess quality metrics, generate intelligent test scenarios, and provide real-time insights into system health — all while learning from each test cycle.

By harnessing Genqe.ai capabilities, organizations can transition from reactive to proactive quality assurance, identifying issues long before they affect users.

The Future of QA is Cognitive

As software becomes more intelligent, interconnected, and user-driven, testing must evolve to match its complexity. Cognitive QA represents a move toward human-in-the-loop systems, where AI assists testers in making faster, smarter, and more informed decisions.

Far from replacing QA professionals, Cognitive QA amplifies their abilities — automating the repetitive while elevating the creative and strategic.

Conclusion: Embracing the Intelligent QA Revolution

In 2025 and beyond, businesses that embrace Cognitive QA will lead the race in digital quality and resilience. With AI as a co-pilot, QA shifts from a bottleneck to a competitive advantage, accelerating releases, reducing costs, and delighting users.

Whether you’re modernizing legacy testing frameworks or launching a new product at scale, integrating platforms like Genqe.ai into your pipeline could be the smartest next step toward cognitive, context-aware quality assurance.

0 notes

Text

How a Bank Statement Analyser Helps in Fast-Tracking Loan Approvals

In today’s fast-paced financial ecosystem, speed and accuracy are critical to lending. Whether it's a personal loan, a business loan, or a mortgage, applicants expect a swift response from lenders. One of the biggest bottlenecks in the loan approval process has traditionally been the manual assessment of bank statements. Fortunately, with the emergence of the Bank Statement Analyser, this process has become faster, more accurate, and significantly more efficient.

What is a Bank Statement Analyser?

A Bank Statement Analyser is a digital tool or software that automatically extracts, categorizes, and interprets financial data from bank statements. These statements can be uploaded in various formats such as PDF, Excel, or directly fetched through APIs. Once processed, the tool generates insights into income patterns, expenses, EMIs, overdrafts, and cash flows—crucial for evaluating a borrower’s creditworthiness.

Instead of sifting through pages of bank transactions manually, underwriters and credit officers can access a clean, categorized report in seconds.

Why Speed Matters in Lending

Time is money, especially in lending. Customers expect real-time decisions, and financial institutions are under pressure to reduce turnaround time (TAT) for loan processing. Traditional methods involve manual data entry, validation, and risk evaluation, which can take several days. A Bank Statement Analyser compresses this timeline to just a few minutes, enabling near-instant decisions.

Fast loan approval not only improves customer experience but also increases the lender’s competitive advantage and loan conversion rates.

How a Bank Statement Analyser Accelerates Loan Approvals

1. Automated Data Extraction

The tool automatically reads and extracts data from uploaded statements, eliminating human error and manual entry delays. This includes parsing unstructured data like salary credits, NEFT/IMPS/RTGS transfers, utility payments, and more.

2. Income & Expense Categorization

A robust Bank Statement Analyser uses AI or rule-based logic to categorize transactions into buckets such as salary, rent, EMI payments, discretionary spending, and cash deposits. This financial profiling is key for assessing an applicant’s repayment ability.
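A rule-based version of this categorization can be sketched with a small ordered list of keyword patterns. The rule set, the category names, and the `categorize` helper below are hypothetical; real statement narrations vary by bank, and a production analyser would need far broader rules or a trained model.

```python
import re

# Hypothetical keyword rules, checked in order; the first match wins.
RULES = [
    ("salary", re.compile(r"\b(salary|payroll)\b", re.IGNORECASE)),
    ("emi", re.compile(r"\b(emi|loan)\b", re.IGNORECASE)),
    ("rent", re.compile(r"\brent\b", re.IGNORECASE)),
    ("cash_deposit", re.compile(r"\bcash dep(osit)?\b", re.IGNORECASE)),
]


def categorize(description):
    """Map a transaction narration to a category bucket."""
    for category, pattern in RULES:
        if pattern.search(description):
            return category
    return "uncategorized"
```

Rule order matters here: a narration such as "SALARY LOAN RECOVERY" would land in `salary` because that rule is checked first, which is one reason rule-based systems need careful review.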

3. Cash Flow Analysis

Understanding monthly cash inflows and outflows is crucial for risk assessment. The analyser identifies consistent patterns, flagging anomalies like sudden dips in income or frequent overdrafts, which may indicate financial stress.
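As a rough sketch of the cash flow step, the snippet below totals inflows and outflows per month and flags months where income fell sharply. The input shape (ISO date string plus signed amount), the function names, and the 50% drop threshold are assumptions made for illustration.

```python
from collections import defaultdict


def monthly_cash_flow(transactions):
    """Total inflow and outflow per month.

    transactions: iterable of (date, amount) where date is 'YYYY-MM-DD',
    credits are positive and debits are negative.
    """
    flows = defaultdict(lambda: {"inflow": 0.0, "outflow": 0.0})
    for date, amount in transactions:
        month = date[:7]  # 'YYYY-MM'
        if amount >= 0:
            flows[month]["inflow"] += amount
        else:
            flows[month]["outflow"] += -amount
    return dict(sorted(flows.items()))


def income_dips(flows, drop_ratio=0.5):
    """Months whose inflow fell below drop_ratio times the previous month's."""
    months = list(flows)
    return [
        cur
        for prev, cur in zip(months, months[1:])
        if flows[cur]["inflow"] < drop_ratio * flows[prev]["inflow"]
    ]
```

This compares each month only with the previous month that has transactions, so a month with no activity at all is skipped rather than flagged; a fuller version would fill in missing months first.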

4. Real-Time Risk Indicators

The analyser can instantly surface red flags such as bounced cheques, frequent minimum balance penalties, or excessive cash withdrawals. These indicators help lenders reject high-risk applications early in the process, saving time and resources.

5. Fraud Detection

Advanced tools come with built-in fraud detection algorithms that identify manipulated or tampered bank statements. By flagging inconsistencies, they protect lenders from processing fake or altered financial documents.

6. Customizable Reports for Underwriters

The output from a Bank Statement Analyser is typically available in customizable dashboards or downloadable reports. These insights empower credit teams to make informed decisions based on real-time data rather than intuition or guesswork.

Benefits for Lenders

Faster TAT: Reduce loan processing time from days to minutes.

Higher Accuracy: Minimize errors from manual review.

Better Risk Assessment: Granular financial profiling helps mitigate defaults.

Improved Scalability: Process thousands of applications simultaneously without hiring more staff.

Enhanced Customer Experience: Instant loan decisions improve satisfaction and retention.

Final Thoughts

In a digital-first lending environment, automating the review process is not just a luxury—it’s a necessity. A Bank Statement Analyser empowers lenders to accelerate loan approvals, reduce risk, and deliver a seamless borrower experience. As competition in the lending space heats up, adopting smart tools like these will be key to staying ahead of the curve.

Whether you're a bank, NBFC, or fintech startup, integrating a Bank Statement Analyser into your loan processing workflow is a step toward faster, smarter, and more secure lending.

0 notes

Text

How Accurate Is the OCR API in Real Use Cases?

Built with AI and pre-trained models, the OCR API delivers high accuracy in reading printed and handwritten text. It intelligently parses names, dates, numbers, and other details—even from complex or low-quality documents—ensuring reliable output for critical business processes.

0 notes

Text

Aisentr: The Smart, Free Alternative to ChatGPT for Business

As businesses search for flexible and cost-effective AI tools, many are turning to Aisentr—a powerful alternative to ChatGPT free of complexity and unnecessary fees. While tools like ChatGPT AI chatbot and Playground Chat GPT are popular, they often come with limitations like token restrictions, unclear pricing, or lack of business-focused integrations.

ChatGPT Limitations Holding You Back?

If you're currently using tools like ChatGPT, you may be familiar with these challenges:

ChatGPT token limit that restricts how long or complex your interactions can be.

Questions like “Is ChatGPT API free?” that often reveal hidden costs.

Limited ability to upload PDF to ChatGPT, slowing down document-driven workflows.

Aisentr removes these roadblocks with:

Unlimited conversation length

Native support to parse and chat with PDFs

Transparent pricing designed for SMEs

True Business AI, Built for Growth

Aisentr isn’t just another chatbot—it’s a full AI platform that supports business machine learning needs with tools for:

Sales and customer support via free chatbot AI features

Staff onboarding with Homebase live chat integrations

Industry-specific uses like chatbot in healthcare or retail

Compare Platforms: Aisentr vs ChatGPT vs Others

Instead of juggling multiple disconnected tools such as Gpts Hunter and other tools or websites similar to ChatGPT, Aisentr gives you a single solution that:

Supports live engagement via Tidio live chat alternatives

Provides WhatsApp automation tools with attention to WhatsApp character limits

Allows embedding through iframe websites, Notion embed, or embed website options

Developer-Friendly, Ready for Scale

With built-in support for Slack API website workflows and an intuitive API for chatbot access, Aisentr works well with modern tech stacks. Whether you’re using Chat IO, AI bot talk, or building new integrations, Aisentr is flexible enough to support your roadmap.

Designed for Your Platform

From marketing to support, Aisentr works with your site, apps, and communication platforms:

Use it to power WhatsApp chatbot conversations

Deploy AI agents across web pages via chat bot platforms

Integrate seamlessly with Notion, Slack, or your internal CRM

Final Thoughts

If you’re searching for an alternative to ChatGPT that works across departments and scales with your business, Aisentr is your answer. It’s cost-effective, simple to implement, and powerful enough to grow alongside your company. Explore more at Aisentr and discover how easy smart automation can be. https://www.aisentr.com/

0 notes

Text

The Future of Alltick API: AI, Quantum Computing, and Beyond

Alltick isn’t just today’s fastest data feed—it’s building the future of trading tech.

This article explores:

✔ AI-driven predictive analytics

✔ Quantum-resistant encryption

✔ Decentralized data validation (Blockchain)

1. AI-Powered Predictive Markets

Alltick’s Nexus-9 AI now:

🤖 Predicts FOMC decisions with 89% accuracy

📈 Forecasts Bitcoin halving rallies 6 months early

2. Quantum Computing Integration

Quantum timestamps → Picosecond precision

Post-quantum encryption → Unhackable data streams

3. Decentralized Data Validation

Blockchain-based consensus → No single point of failure

Tokenized data rewards → Earn $ALLT for providing liquidity

The Next 5 Years: What’s Coming

🚀 2025: Neural network price forecasting

🛡️ 2026: AI-driven SEC compliance bots

🌐 2027: Global decentralized data mesh

Websocket

```python
import json

import websocket  # pip install websocket-client

# Special note:
# GitHub: https://github.com/alltick/realtime-forex-crypto-stock-tick-finance-websocket-api
# Token application: https://alltick.co
# Replace "testtoken" in the URL below with your own token.
# API address for forex, cryptocurrencies, and precious metals:
#   wss://quote.tradeswitcher.com/quote-b-ws-api
# Stock API address:
#   wss://quote.tradeswitcher.com/quote-stock-b-ws-api


class Feed(object):
    def __init__(self):
        # Enter your websocket URL here
        self.url = 'wss://quote.tradeswitcher.com/quote-stock-b-ws-api?token=testtoken'
        self.ws = None

    def on_open(self, ws):
        """Called when the websocket is opened.

        @ ws: the WebSocketApp object
        """
        print('A new WebSocketApp is opened!')

        # Start subscribing (an example)
        sub_param = {
            "cmd_id": 22002,
            "seq_id": 123,
            "trace": "3baaa938-f92c-4a74-a228-fd49d5e2f8bc-1678419657806",
            "data": {
                "symbol_list": [
                    {"code": "700.HK", "depth_level": 5},
                    {"code": "UNH.US", "depth_level": 5},
                ]
            },
        }

        # To run for a long time, modify the code to send heartbeats
        # periodically to avoid disconnection; refer to the API
        # documentation for details.
        sub_str = json.dumps(sub_param)
        ws.send(sub_str)
        print("Depth quotes are subscribed!")

    def on_data(self, ws, data, data_type, continue_flag):
        """Called for every data frame received (unused in this example).

        @ ws: the WebSocketApp object
        @ data: the data received from the server
        @ data_type: ABNF.OPCODE_TEXT or ABNF.OPCODE_BINARY
        @ continue_flag: if 0, the data continues in a later frame
        """

    def on_message(self, ws, message):
        """Called when a message is received.

        @ ws: the WebSocketApp object
        @ message: utf-8 data received from the server
        """
        # Parse the received message as JSON. Never eval() data that
        # arrives over the network.
        result = json.loads(message)
        print(result)

    def on_error(self, ws, error):
        """Called when an error occurs.

        @ ws: the WebSocketApp object
        @ error: exception object
        """
        print(error)

    def on_close(self, ws, close_status_code, close_msg):
        """Called when the connection is closed.

        @ ws: the WebSocketApp object
        @ close_status_code: status code sent by the server, if any
        @ close_msg: close message sent by the server, if any
        """
        print('The connection is closed!')

    def start(self):
        self.ws = websocket.WebSocketApp(
            self.url,
            on_open=self.on_open,
            on_message=self.on_message,
            on_data=self.on_data,
            on_error=self.on_error,
            on_close=self.on_close,
        )
        self.ws.run_forever()


if __name__ == "__main__":
    feed = Feed()
    feed.start()
```
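The code above notes that a long-running connection needs periodic heartbeats. One way to do that is a small background thread, sketched below. The `make_heartbeat` and `start_heartbeat` helpers are hypothetical, and so is the message shape (it simply mirrors the subscription request). The heartbeat `cmd_id` and the required interval are not given here and should be taken from the API documentation.

```python
import json
import threading
import uuid


def make_heartbeat(cmd_id, seq_id):
    """Build a heartbeat message shaped like the subscription request.

    The shape is an assumption; cmd_id must come from the API documentation.
    """
    return json.dumps({
        "cmd_id": cmd_id,
        "seq_id": seq_id,
        "trace": str(uuid.uuid4()),
        "data": {},
    })


def start_heartbeat(send, cmd_id, interval_seconds=10.0):
    """Call send(message) every interval_seconds on a daemon thread.

    Returns a threading.Event; set it to stop the loop.
    """
    stop = threading.Event()

    def loop():
        seq_id = 0
        # Event.wait returns False on timeout and True once stop is set.
        while not stop.wait(interval_seconds):
            seq_id += 1
            try:
                send(make_heartbeat(cmd_id, seq_id))
            except Exception as exc:  # e.g. the socket has already closed
                print("Heartbeat failed:", exc)
                break

    threading.Thread(target=loop, daemon=True).start()
    return stop
```

With the `Feed` class, this could be started in `on_open` with `ws.send` as the `send` argument, keeping the returned event on `self` so that `on_close` can call `.set()` on it.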

The Data Feed of Tomorrow

Alltick isn’t just keeping up with the future—it’s inventing it.

Join the revolution: [Explore Alltick.co]

0 notes

Text

Will AI Replace Developer Jobs? An In-Depth Analysis

The rise of artificial intelligence (AI) has sparked intense debate about its potential to disrupt industries, and software development is no exception. Tools like GitHub Copilot, ChatGPT, and Amazon CodeWhisperer have already begun transforming how developers work, automating repetitive tasks and accelerating coding processes. But does this mean AI will replace developers? The answer is nuanced: while AI will significantly reshape the role of developers, it is unlikely to eliminate the need for human expertise. Instead, it will augment productivity, redefine responsibilities, and create new opportunities.

1. The Current State of AI in Software Development

AI-powered tools are already embedded in developers’ workflows:

Code Generation: Tools like GitHub Copilot suggest code snippets, auto-complete functions, and even generate boilerplate code.

Debugging & Testing: AI algorithms scan codebases for vulnerabilities, optimize test cases, and predict bugs.

Documentation & Maintenance: AI can parse legacy code, generate documentation, and refactor outdated systems.

These tools act as "AI pair programmers," reducing grunt work and allowing developers to focus on complex problem-solving. However, they lack the creativity, intuition, and contextual understanding required for higher-level tasks.

2. What AI Can (and Can’t) Do

Tasks AI Can Handle:

Repetitive Coding: Automating boilerplate code for CRUD operations, APIs, or UI templates.

Code Optimization: Identifying inefficient algorithms or memory leaks.

Rapid Prototyping: Generating basic app frameworks based on natural language prompts.

Limitations of AI:

Understanding Context: AI struggles with ambiguous requirements, business logic, or user experience nuances.

Ethical Judgment: Decisions about privacy, fairness, and security require human oversight.

Innovation: AI can’t invent novel solutions, design architectures, or envision products that meet unmet market needs.

In essence, AI excels at execution but falters at strategy.

3. How Developer Roles Will Evolve

Rather than replacing developers, AI will shift their responsibilities:

From Coding to Orchestration: Developers will oversee AI-generated code, ensuring alignment with business goals and quality standards.

Focus on Complex Problems: Roles will prioritize system design, ethical AI integration, and cross-functional collaboration.

Upskilling Opportunities: Demand will grow for specialists in AI/ML engineering, prompt engineering, and AI ethics.

For example, a developer might use AI to draft a microservice but will still need to refine its logic, integrate it with other systems, and validate its performance.

4. Economic and Industry Implications

Job Displacement Concerns: Entry-level roles involving repetitive tasks (e.g., basic testing, code translation) may decline. However, history shows that technology often creates more jobs than it displaces (e.g., the rise of cloud computing).

Increased Productivity: AI could lower barriers to entry, enabling smaller teams to build robust software faster. This might expand the market for tech solutions, driving demand for skilled developers.

New Specializations: Roles like "AI Trainer" (fine-tuning models for coding) or "AI Auditor" (ensuring compliance and fairness) will emerge.

5. Case Studies: AI in Action

GitHub Copilot: GitHub reported in 2023 that Copilot generated an average of 46% of the code in files where it was enabled. It accelerates coding but requires human review to ensure accuracy.

Tesla’s Autopilot Team: Engineers use AI to generate simulation code but rely on human expertise to validate safety-critical systems.

Low-Code Platforms: While AI-powered tools like OutSystems enable non-developers to build apps, complex projects still require professional oversight.

6. The Human Edge: Why Developers Will Stay Relevant

Creativity: AI lacks the ability to brainstorm innovative features or pivot based on user feedback.

Domain Knowledge: Understanding industry-specific challenges (e.g., healthcare compliance, fintech security) requires human experience.

Soft Skills: Collaboration, communication, and leadership remain irreplaceable in cross-functional teams.

7. Preparing for an AI-Augmented Future

Developers can future-proof their careers by:

Embracing AI Tools: Learn to leverage AI for productivity gains.

Upskilling: Focus on system design, AI ethics, and domain-specific knowledge.

Adopting a Growth Mindset: Continuously adapt to new tools and methodologies.

Conclusion

AI is a transformative tool, not a replacement for developers. Just as compilers and IDEs revolutionized coding without eliminating jobs, AI will free developers from mundane tasks and empower them to tackle more ambitious challenges. The future belongs to developers who harness AI as a collaborator, combining its efficiency with human ingenuity to build better software, faster.

1 note

·

View note