#fiducial camera


Flexible circuit board manufacturing (JLCPCB, 2023)

An inkjet print head uses a fiducial camera to register an FPC panel; after alignment, it prints graphics with UV-curable epoxy in two passes
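The post does not say how the machine turns the fiducial camera's readings into an alignment, so the following is only a minimal sketch of one common approach: fitting a rotation, scale and shift from two fiducials. The function names `fit_similarity` and `to_machine`, and the coordinates in the usage note, are hypothetical.

```python
def fit_similarity(design_a, design_b, seen_a, seen_b):
    """Fit seen = a * design + b from two fiducials.

    Points are (x, y) tuples treated as complex numbers, so the single
    complex factor `a` encodes rotation and uniform scale, and `b` the shift.
    This is an illustrative assumption, not the method used by any vendor.
    """
    p0, p1 = complex(*design_a), complex(*design_b)
    q0, q1 = complex(*seen_a), complex(*seen_b)
    if p0 == p1:
        raise ValueError("fiducials must sit at distinct design positions")
    a = (q1 - q0) / (p1 - p0)
    b = q0 - a * p0
    return a, b


def to_machine(point, transform):
    """Map a design-space point into machine space with a fitted transform."""
    a, b = transform
    z = a * complex(*point) + b
    return (z.real, z.imag)
```

For example, if the design fiducials at (0, 0) and (100, 0) are seen at (10, 5) and (10, 105), the panel is rotated a quarter turn and shifted, and `to_machine((50, 20), fit_similarity((0, 0), (100, 0), (10, 5), (10, 105)))` gives the print position for the design point (50, 20). Two fiducials cannot capture skew or non-uniform stretch; a real process might use more marks and a least-squares fit.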

#jlcpcb#pcb#fpc#manufacturing#manufacture#factory#electronics#fiducial camera#camera#cv#opencv#computer vision


Race against time in Parliament before the summer break: night sittings in the Chamber to bring the holidays forward

Two decrees to convert into law and three confidence votes to win, plus the tax reform enabling law. The Senate is heading for closure by 4 August, and Montecitorio, which was due to finish its work on the 9th, is trying to fall into line and wrap up within the week: the final rush begins before the month-long break

(by Gabriele Bartoloni – repubblica.it) – ROME – The last day of July, and the usual bottleneck effect looms over the halls of power…


Aerial Photography Basics

Aerial Photography is the process of photographing the world from above. It can be used to capture a variety of subjects, including sports events, snippets of live action, property disputes, environmental assessments and mapping.

A Bird's-eye View

The ability to see the world from a bird's-eye perspective is one of the most exciting parts of aerial photography. It brings the landscape to life and offers a unique look at land size, use, colour and depth.

Throughout history, people have longed to capture the beauty of the world from the sky. Early users included explorers, archaeologists and military personnel, who used aerial reconnaissance to help them understand and map their surroundings.

Today, there are a number of affordable drones on the market that allow people with a camera to take aerial photos. While they aren't as high-tech as a full-size aircraft, they can still provide beautiful and high-quality pictures and videos that can be shared on social media.

Scale

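Aerial photos are commonly classified by scale as small scale or large scale. Fiducial marks are not a scale category: they are reference marks recorded at the edges or corners of each frame, used to locate the centre of the photo.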

Small Scale - Small scale photos (e.g. 1:50 000) cover areas of the ground in less detail than larger scale photos. They are useful for studying relatively large areas of the landscape with features that you're not looking to measure or map in great detail.
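To make the 1:50 000 figure concrete, here is a small sketch that converts a distance measured on a photo into a ground distance. The function name `ground_distance_m` and the 20 mm measurement are illustrative assumptions, and the calculation ignores terrain relief and camera tilt.

```python
def ground_distance_m(photo_distance_mm, scale_denominator):
    """Convert a distance measured on the photo (mm) to metres on the ground.

    A scale of 1:50 000 means one unit on the photo covers 50 000 of the
    same units on the ground, so we multiply and then convert mm to m.
    """
    if photo_distance_mm < 0 or scale_denominator <= 0:
        raise ValueError("distance must be >= 0 and scale denominator > 0")
    return photo_distance_mm * scale_denominator / 1000.0


# 20 mm on a 1:50 000 photo versus the same 20 mm on a 1:10 000 photo
small_scale = ground_distance_m(20, 50_000)
large_scale = ground_distance_m(20, 10_000)
```

The same 20 mm spans 1000 m on the small-scale photo but only 200 m on the large-scale one, which is why small-scale photos suit broad overviews rather than detailed measurement.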

While it may be tempting to shoot every detail in your aerial photo, try to keep your composition clean and simple. This will make your image more memorable and will draw viewers' attention to a specific point of interest in the photo.


Imagecast green button

DART Xtra interface docs

Here are the current calls into the DART Xtra. The commands that deal with cameras, video and marker tracking within video. Currently, only one camera can be open at a time.

Initialize the video subsystem, but don't open the camera yet. The first parameter is the path to the camera calibration data file.

The parameter is the camera configuration string.

Set the size of the video that will be fed in when replaying. Not actually used during replay in DART, since we can replay without the xtra, but this is needed to support loading images into texture memory, or running the ARToolkit on them. The parameters are the width, height and depth of the images.

Get the width (in pixels) of the video stream.

Get the height (in pixels) of the video stream.

Get the depth (in bits) of the video stream.

This method is used to distort the given x,y point using the current camera calibration file.

The first parameter is the x value of the (x,y) location for which to perform the undistortion. The second parameter is the y value of the (x,y) location for which to perform the undistortion. The return value is a list of the resulting un-distorted values. This method is used to undistort the given x,y point using the current camera calibration file.

Define where on your hard disk the data directory is. All paths to marker pattern files and camera calibration files are relative to this directory.

"videoMarkerTrackingInit string, int, string, int". Initialize marker tracking on the video stream. The first parameter is the path to the fiducial panel definition file. This file contains a list of all panels to use. You can add additional panels using the loadPanel command below. The second parameter is the ARToolkit threshold value. The third parameter is the lingo callback for marker poses. For each marker panel found, a message will be sent of the form: int panelId, int timestamp, poseMatrix. The fourth parameter is a boolean, saying if OpenCV should be used (should always be 1). Returns the number of fiducial panels (-1 on error).

The first parameter is the path to a single fiducial panel file to be loaded and used. This panel is added to the list of known panels. The panel number for this panel is returned.

Set the threshold for the ARToolkit fiducial tracker. The threshold is a number from 0 to 255, which defines the brightness of a pixel above which it should be considered white (pixels with brightness below this value are considered black).

Says whether to use the "lite" marker tracker (1) or not (0). The lite tracker does not take history into account, and should be used when there is a lot of noise and false recognition (which would cause problems with the history mechanism). When lighting is good, the history will cause the tracker to be smoother and have fewer drop-outs.

Find the texture to be used for background video. Assumes that the first large texture it finds is the correct one.

Read the next frame from the camera, saving it in the xtra. Return 0 if there was no video, and the timestamp if there was a video frame.

Copy the currently saved video frame to the provided texture.

Run marker tracking on the saved video frame.

"videoMarkerCornerRefinement int, int, float". Sets the ARToolkit to refine the corners before using the OpenCV tracker. The first parameter is size, the radius in pixels of the window to look for the best corner in. The second parameter is iterations, the maximum number of iterations to refine the estimate. If the pixel moves by less than this distance, stop refining. The correct values depend on many factors (lighting, the distance between markers, etc.).

The parameter is a boolean, indicating if debugging of the marker tracker should be turned on or not. If debugging is on, the thresholded image is computed when the marker tracker is run, and small red, blue and green dots are placed in the image. If corner refinement is used, red and green dots show the crude and refined corners. If corner refinement is not used, blue dots show the corners used.

If the thresholded image was generated (debugging is on), replace the current video image in the DART xtra with the thresholded image. This can then be copied to an image cast member or loaded into texture memory for viewing.
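The 0 to 255 threshold described for the ARToolkit fiducial tracker can be illustrated with a few lines of plain Python. This is only a sketch of the idea, not the Xtra's or ARToolkit's code: the function name `binarize` is hypothetical, and treating a pixel exactly equal to the threshold as black is an assumption, since the docs only cover values above and below it.

```python
def binarize(gray_rows, threshold):
    """Turn a grayscale image (rows of 0-255 ints) into black and white.

    Pixels brighter than `threshold` become white (255); all others,
    including pixels equal to the threshold (an assumption), become black (0).
    """
    if not 0 <= threshold <= 255:
        raise ValueError("threshold must be between 0 and 255")
    return [[255 if px > threshold else 0 for px in row] for row in gray_rows]


# A tiny 2x4 "frame": a dark marker border next to a bright background
frame = [
    [12, 40, 180, 230],
    [15, 100, 101, 255],
]
thresholded = binarize(frame, 100)
```

Raising the threshold turns more of the frame black and lowering it turns more white, which is why the right value depends on the lighting.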


What Exactly Is a Craniotomy?

An operation called a craniotomy enables a surgeon to enter the brain. Typically, this procedure involves removing a flap of skull bone. Craniotomies can be carried out for a variety of ailments. For example, craniotomies may be carried out for hydrocephalus or trigeminal neuralgia. A neurosurgical operation called an orbitozygomatic craniotomy enables doctors to access the cranial base while limiting brain retraction. The two basic versions of this approach involve raising operative angles and expanding the operating area. Except for removing the orbital roof, the surgery is comparable to a traditional craniotomy. In this article, the writers outline a condensed technique and review the procedure's justifications.

In 1982, the orbitozygomatic technique was first explained. Jane and colleagues modified a frontal craniotomy by creating a bone flap, including the orbital roof and the lateral frontal lobe. These modifications made it possible to reach the anterior skull base and orbital floor. A single bone flap is made using the personal approach, which connects three boreholes. A computer-based imaging module and fiducial markers are used in a specific cranial surgery called fragmentary frameless stereotaxy to guide the surgeons. During surgery, the imaging gives the surgeon continuous, "real-time" information and aids in pinpointing the exact position of a lesion. Large brain tumors can be surgically removed using this method with particular success.

The procedure is carried out while the patient is unconscious and requires a minor incision on the scalp. The surgical team uses cameras to record fiducials on the patient's scalp. Before surgery, a minimal area of the patient's scalp is shaved. The skull is then cleansed, and a small aperture is created. An opening about the size of a quarter exposes the dura, and a stereotactic biopsy needle is guided to the desired location using a neuronavigation device.

Recently, my cousin underwent a craniotomy for trigeminal neuralgia, but her symptoms persisted. Despite receiving several treatments, such as nerve blocks, a three-day IV infusion, botox, physical therapy, and natural cures, she has endured persistent pain for more than six months. She has also undergone three successful nerve decompression procedures. She has received various therapies, but sadly, her pain prevents her from working. Trigeminal neuralgia can affect the face, among other parts of the head. It affects the trigeminal nerve, which carries sensation from the sinus cavities, face, and mouth. Frequently, a particular action, like chewing food or smiling, sets off an episode, and episodes may grow more frequent or last longer.

Hydrocephalus, a buildup of cerebrospinal fluid in the brain, puts pressure on the brain's tissues. Though it can happen to people of any age, it mainly affects young children and older adults. The symptoms vary from person to person and can include increased intracranial pressure and loss of function. Through a series of tests, doctors can identify whether a patient has hydrocephalus.

Most of the time, patients receiving a craniotomy are laid on a table and given general anesthesia. Additionally, a ventilator-connected breathing tube might be given to them. This will guarantee that the patient gets oxygen during the entire procedure. The head is subsequently secured to the table using a 3-pin skull clamp. The area of skin and muscle around the intended incision line is shaved to a width of 1/4 inch before the procedure.

A surgical procedure called a craniotomy is used to treat childhood epilepsy. A tiny part of the skull must be removed and opened to access the brain, typically the hippocampus. To activate particular regions of the brain, electrodes may also be inserted there. The surgery is typically carried out while the patient is asleep. However, when testing the brain's functionality or pain management, the patient may occasionally be awake during the operation.

An evaluation of the patient by a medical team will come before any epilepsy surgery. The team will then test the proper surgical site and determine whether brain functions are compromised. These examinations can be carried out in a hospital or as an outpatient treatment. A baseline electroencephalogram, for instance, measures electrical activity in the brain when a patient is not having seizures and can help a doctor determine which parts of the brain may be impacted.

Hematoma is a common postoperative complication following craniotomies. This complication is largely preventable. Risk factors for postoperative hematoma have been established in studies by Fukamachi A, Koizumi H, and Nukui H. It is not yet known what causes cerebellar hematoma, though. The hematoma typically forms at the site of operation, though it can also happen in other parts of the brain. After supratentorial craniotomies, a cerebellar hematoma can occasionally develop.


Basic Concepts Of Aerial Photography

Photography is an excellent skill with a number of forms, and aerial photography is one of them. In simple terms, aerial photography is photography taken from the air. Aerial photography in Pensacola is used for various purposes. Generally, it is used to capture remote areas that are difficult to see from the ground. This kind of photography demands high efficiency and is captured at a vertical angle from an aircraft. Several factors set aerial photography apart from other kinds, such as film, scale, angle, and overlap. Furthermore, concepts like stereoscopic coverage, fiducial marks, focal length, roll and frame numbers, flight lines, and index maps are essential to learn in aerial photography.

Here, we have discussed some fundamental concepts of aerial photography that you should know if you are interested in it.

Characteristics of Aerial Photography

Following are the main characteristics of aerial photography.

● Synoptic view - Aerial photographers work from a bird's-eye vantage point, so they can capture stunning views in their spatial context.

● Time freezing - Aerial photographs record the spatial features of an area at a given moment, so they serve as historical evidence; each photograph differs with the angle and the area covered.

● Cost-efficient - Aerial photography in Pensacola is usually cheaper than photography from the ground. It is a good way to capture a large area at minimal cost.

● Easily available - Aerial photographers are available in most locations, because many industries use aerial photography services.

● Stereoscopic coverage - Aerial photography gives stereoscopic coverage when photographers capture the same location from two different positions.

● Large spectral range - Aerial photography can record beyond the range visible to the human eye, including the infrared and microwave portions of the spectrum.

Factors That Influence Aerial Photography

● Scale - Scale is the ratio of the distance between two points on an aerial photograph to the distance between the same two points on the ground. It can also be determined by finding the ratio between the camera's focal length and the plane's altitude. It can be expressed in three ways: unit equivalent, representative fraction, and ratio. It can vary because of different flying heights and displacements.

● Camera/Filter/Films - Special cameras with high geometric and radiometric accuracy are used for aerial photography in Panama City, FL. Aerial films are usually available in rolls with multi-layer emulsion, and they come in various types, with various filters for different locations.

● Atmospheric condition - The atmosphere affects the quality of aerial photography, as small particles such as dust, smoke, and dirt scatter light and reduce the contrast of the photograph.
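The focal-length rule for scale in the list above can be worked through in a few lines. This is a minimal sketch that assumes flat terrain and a vertical photo; the function name `scale_denominator`, the 152 mm lens and the 7600 m flying height are illustrative values, not figures from the article.

```python
def scale_denominator(focal_length_mm, flying_height_m):
    """Return N for a photo scale of 1:N.

    Scale = focal length / flying height above the ground, with both
    values in the same unit, so the height is converted from m to mm.
    """
    if focal_length_mm <= 0 or flying_height_m <= 0:
        raise ValueError("focal length and flying height must be positive")
    return flying_height_m * 1000.0 / focal_length_mm


# Hypothetical flight: 152 mm lens, 7600 m above the ground
n = scale_denominator(152, 7600)
print(f"representative fraction: 1:{round(n)}")
```

Flying the same camera at half the height halves the denominator, giving a larger-scale photo, which is one reason scale changes with flying height.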

To Sum Up

The ordinary view of the human eye cannot capture such fine views and angles. Aerial photography is an excellent way to capture the fine details of remote areas. There are many companies like Pelican Drones providing high-quality drones for aerial photography in Pensacola. You can get the best quality drones from them at a very low price.


Why Vote YES in the Referendum

by Marco Travaglio for "Il Fatto Quotidiano" (...) I will try to explain, with hard data and demonstrable arguments, why I said and still say Yes to cutting the number of deputies (from 630 to 400) and senators (from 315 to 200).

1. In fighting the counter-reforms of B. and of Renzi, we always said that the Constitution should not be overturned by a half or a third. Better to update it with surgical adjustments, in the spirit of Art. 138. Had Renzi limited himself to cutting the members of parliament (all of them, not just the senators) and the CNEL, he would have won the referendum by a landslide, with my vote too; indeed, nobody would have dreamed of troubling the voters over a foregone conclusion.

2. "Populism" has nothing to do with this reform, which many, especially on the left, have called for over more than 40 years: it is similar to that of the Bozzi commission (1983), identical to that of the Iotti-De Mita bicameral commission ('93), and in line with the Ulivo programme ('96). Does the fact that the 5 Star Movement got it through, with the overwhelming majority of both Chambers, turn Prodi, De Mita, Bozzi and Iotti into populists too? The never-before-seen sight of a Parliament that shrinks itself against the interests of its own members and saves the State 80-100 million a year (almost half a billion per legislature) is the exact opposite of opportunism. And it is the best antidote to anti-parliamentarism: citizens, who have been asked for years to make sacrifices, will appreciate an institution that finally sets a good example in its own house.

3. The Charter of the founding fathers has little to do with the current number of members of parliament, which was set not in 1948 but in '63: back then legislative power belonged exclusively to Parliament, whereas today many laws come from the EU and the Regions. Indeed elsewhere too, from London to Paris, there are plans to reduce the number of elected members.

4. It is true: the last three electoral laws and too many decrees and confidence votes have turned Parliament into an assembly of yes-men (willing ones, moreover). But that does not depend on their number: if the electoral law and the rules of procedure do not change, they will remain yes-men whether there are 945 of them or 600. Indeed, the cut requires a new electoral law which, one hopes, will wipe out the disgrace of blocked lists and restore power, dignity and authority to individual members of parliament. They would be more representative, recognisable and accountable, and a little less inclined to vote that Ruby is Mubarak's niece or to apply for the poverty bonus.

5. Reducing the number of members of parliament – as Parliament itself, not its enemies, has decided 4 times, with oceanic majorities (at the final reading 553 Yes, 14 No and 2 abstentions) – in no way implies "going beyond Parliament" (which the M5S certainly does not want, being the largest group in it) nor "presidentialism" (which only Salvini wants, isolated from everyone else, FI included). It implies precisely a "relaunch of Parliament" which, by becoming less bloated, will be more credible, efficient and functional because it will be made up of elected members who are less indistinct and therefore stronger, more autonomous and more authoritative. Defending a monster assembly of almost a thousand people, a third of whom skip one vote in three, two thirds of whom hold no role and only 10% of whom hold more than one post, is ridiculous.

6. It is false that the reform makes Italy the country with the fewest elected members relative to voters. The only other democracy with equal and elective bicameralism is the USA: it has six times our population and a Congress of 535 representatives and senators (65 fewer than our post-cut Parliament), who have never felt weak for being few, quite the opposite. As for the other democracies, the comparison should be made only with directly elected lower houses: the British House of Commons (630 elected members against our 600, but with 6 million more inhabitants); the German Bundestag (709, but with 20 million more); the French Assemblée Nationale (577, but with 7 million more). After the cut Italy would have 1 member of parliament for every 85 thousand voters, against an average of 1 per 190 thousand in democracies with more than 30 million inhabitants.

7. To say that the cut "will make the functioning and role" of the Chambers "difficult" is nonsense: the efficiency of an assembly is inversely proportional to the number of its members. And to claim that "proportionality will be impossible in the Senate in 9 Regions", that "many territories will be under-represented" and that we will have only 3 or 4 parties is to hide from voters that the majority has committed, in redrawing the constituencies after the cut, to avoiding those distortions: for example, by moving beyond the regional basis of the Senate, which will allow multi-regional constituencies, to the benefit of the smaller Regions and the minor parties. That is why I will vote Yes in the referendum.


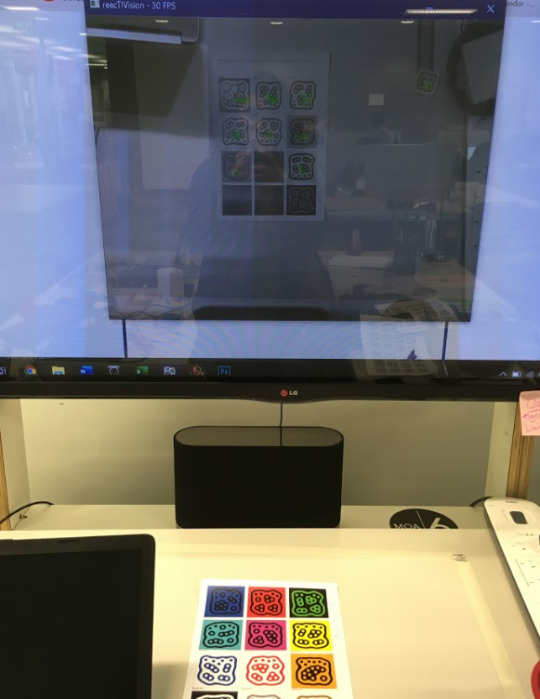

Studio III - Final Outcome - Full Catch-up

I haven’t really been good at keeping up the blog posts, I will admit that and so I’m gonna try my best to summarize as much as I can here...

Within the final week leading up to Open Studio, so many things have happened:

1. We had to change location a few times, moving from our initial work area to a new area, then again from there to a corner area.

2. We’ve had to change the rig a few times, from simply screwing into the wall, to sticking it to the new wall, to finally attaching using a small arm to the TV rig.

3. Some of the physical items had to change because they were either too small or too big. We had to change the size of the Fiducial codes every now and then because the camera height changed and it couldn't read the codes afterwards.

The next few days were calm. We wanted to add some more to our project and have a small business website as well. It seemed like a good idea, but it may have been a bit much, because one person ended up taking a lot on themselves, working on that as well as the illustrations for the items in the digital plane. Liam and Victoria sort of kicked off a bit because he was trying to finish off the program but she didn't have the illustrations he needed for a while. But in the end she pulled through and we got the final outcome to look amazing.

A few days left before Open Studio and we were finalizing everything we needed, items for the physical plane, decorations, posters, documents, instructions for the viewers, extra lighting just in case. From the looks of things everything was sorted... Now, we are a Work In Progress.


What Is Commercial Photography?

New Post has been published on https://www.davesonlocationphoto.com/what-is-commercial-photography/


Commercial photography is the art of taking photos for commercial use. This type of photography is usually associated with advertising, merchandising, product placements, sales pitches, and business brochures. It can help sell a product or service. Great commercial photos can increase your sales and profits over time. If you want to promote your service, investment, or any other business, commercial images can be used effectively for such purposes. A professional photographer can capture pictures of you, your business, service, or investment in the right way to promote your brand to the consumer. This article explains what commercial photography is.

There are many benefits to this type of photography. Today, marketers are spending millions of dollars to promote their brands. A marketer can use commercial photographs to improve the brand awareness of their product or service. It is more than just clicking a picture with the camera. In fact, a professional photographer knows how to display your product images and add a touch of emotion to attract the attention of the consumer. Commercial photography is the art of creating images to sell your brand. It enhances your corporate image in the long run. Innovative photography will help to increase the conversions and ROI of your business in the long term. That is why you should make use of this art to take your small business to the next level.


Common Types Of Commercial Photography

This field has multiple categories of photography under it. Here are a few photography categories that fall under commercial photography.

Advertising Photography

This type of photography is used to promote a product, service, individual, or company. The photos are featured in magazines, leaflets, newspapers, and other media. You may also come across this type of photography on websites, television, billboards, and digital ads. The process is sales-driven and performed by an advertising agency or design firm.

Architecture And Interior Photography

This type of photography is used to capture images of buildings, interiors, and various structures. The professional will use lighting techniques and smooth images to capture the beauty of the interior architecture of a building. These images are used to promote homes, restaurants, real estate businesses, and attract clients to them.

Aerial Photography

These photographs are usually taken from elevated positions with the use of drones and aircraft. The main concepts that are used in aerial photography may include fiducial marks, stereoscopic coverage, focal length, frame numbers, flight lines, and index maps.

Sports Photography

This type of photography captures the most critical moments in the sporting world. It covers sporting news in various media outlets. High-quality cameras and sharp images are used to capture players in action in this type of photography. The photographer should have extensive experience in the industry before getting involved in sports photography.

Product Photography

An excellent product image can help promote the brand awareness of a company. This type of photography is taken seriously by product-based companies across the world. It is critical to promoting the various features and benefits of products – which helps sell the products to consumers.

Journalism Photography

This is the art of shooting images that are newsworthy. The photographer working in this field should have extensive experience and be the best at what they do. In fact, a journalism photographer may often run into life-threatening risks during his or her career.

How To Make Commercial Photography Shoots Go Smoothly

Getting involved in commercial photography can be quite satisfying to the photographer. There are many things to consider to make a commercial photography shoot go smoothly. Here are some things that you need to consider.

The most important thing is to be clear on your client’s needs. Effective communication is key to the success in this area. Does the client need a set number of photos? Do they have a deadline? Do they need any unique wardrobe, props, or locations? Make sure you know what their budget is before deciding to commence the shoot.

Discuss the intended use of the images and usage rights before you start the project. This will affect the shooting process and the rate you need to quote. In addition, your crew and equipment should be ready at all times so the task can be performed smoothly.

If you are not sure if you need a professional photographer to do work for you, read my article on the Importance of Corporate Photography.



EDITION OF 30 JULY 2021

NO TO THE GREEN PASS MANDATE

No to the Green Pass mandate. Fratelli d'Italia will never agree to a measure that kills the economy and introduces a pass for taking part in social life. The right path is to persuade, not to impose compulsory vaccination by underhand means: the left should stop waging ideological battles that produce no effective results.

THE LATEST DELIRIOUS LIE FROM THE LEFT AND THE MAINSTREAM ABOUT THE GREEN PASS

DRAGHI OR FAUCI

WHO IS LYING?

GIORGIA MELONI IN THE SQUARE ALONGSIDE THE HOME CARE COMMITTEE

Doctors have shown in the field that, with properly administered home treatment, people can be kept from ending up in hospital intensive care. But Health Minister Speranza does not listen to them.

DONATE YOUR 2XMILLE TO FRATELLI D'ITALIA

WRITE C12 ON YOUR TAX RETURN.

IT COSTS YOU NOTHING

JUSTICE: A WATERED-DOWN COMPROMISE AND A SHAMEFUL CLIMATE

The government's Justice bill is a watered-down compromise to hold together a torn governing majority, rather than a tool for affirming citizens' inalienable rights and speeding up criminal trials.

THE GOVERNMENT RECALLS ARCURI TO HELP IT SPEND THE FUNDS BETTER? ARE WE ON 'SCHERZI A PARTE'?

LOCAL ELECTIONS, MELONI: POLITICAL FORCES ARE COMMITTED TO THE 10 OCTOBER DATE. THE GOVERNING PARTIES SHOULD SHOW THAT THE PD DOES NOT DICTATE THE LINE

President Giorgia Meloni's book can be bought in all bookshops. It is a good opportunity to support local trade and neighbourhood shops, which are in great difficulty because of the crisis and which Fratelli d'Italia has supported since the start of the pandemic. The book can also be bought on all online platforms:

Amazon - Amazon.it: Io sono Giorgia. Le mie radici le mie idee - Meloni, Giorgia - Libri

Feltrinelli - Libro Io sono Giorgia - G. Meloni - Rizzoli - Saggi italiani | LaFeltrinelli

IBS - Io sono Giorgia. Le mie radici, le mie idee - Giorgia Meloni - Libro - Rizzoli - Saggi italiani | IBS

Mondadori - Io sono Giorgia. Le mie radici le mie idee - Giorgia Meloni - Libro - Mondadori Store

THE PRESENTATION OF "IO SONO GIORGIA" AT THE FESTA DEI PATRIOTI. WATCH THE INTERVIEW BY GENNARO SANGIULIANO

THE DATES AND PLACES WHERE YOU CAN SIGN: CLICK HERE!

FROM THE CHAMBER AND SENATE

CHAMBER: GIOVANNI RUSSO JOINS THE FDI GROUP

Welcome to Giovanni Russo, who joins the Fratelli d'Italia group in the Chamber and our battles for consistency and freedom.

FAIR COMPENSATION, VARCHI: DO NOT HOLLOW OUT MELONI'S PROPOSAL

The bill on fair compensation, with Meloni as first signatory, must not be distorted with respect to the group of beneficiaries already identified. For us, fair compensation means solely protecting the rights of professionals.

CITIZENS' INCOME, FERRO: A BOTTOMLESS PIT FOR CRIMINALS

New arrests and almost 1 million euros illegally taken from the many Italians who fight every day to survive, keep their businesses open and pay the debts built up over this year and a half of Covid.

GOVERNMENT, CIRIANI: TWO CONFIDENCE VOTES IN A WEEK, YET ANOTHER HUMILIATION FOR PARLIAMENT

This week too the government imposed two confidence votes, on the Simplifications decree-law and on the Recruitment one. It is yet another humiliation for Parliament and for the Senate.

VACCINES, ZAFFINI: DOES SPERANZA WANT TO IMPOSE A MANDATE FOR MINORS?

SCHOOLS, IANNONE: A BATTLE FOR TEMPORARY RELIGION TEACHERS

There has been, and there is, no will on the part of this government or previous ones to address the situation of religion teachers in schools.

FROM GIOVENTÙ NAZIONALE

SARDINIA: LET'S HELP THE LIVESTOCK FARMERS HIT BY THE FIRE

FRATELLI D'ITALIA AROUND THE WORLD

ELECTRONIC VOTING FOR ITALIANS ABROAD: FDI'S PROPOSAL

Introduce electronic voting for Italians abroad. This is the proposal put forward by Fratelli d'Italia, which has tabled a bill to guarantee effective representation for 6 million of our fellow citizens.

JOIN THE PATRIOTS' TEAM TOO

To lend Fratelli d'Italia a hand in the upcoming local elections, fill in the form

FRATELLI D'ITALIA 2021 MEMBERSHIP NOW OPEN

CLICK HERE TO JOIN

CLICK HERE TO RECEIVE OUR NEWS


The U.S. Army has face recognition technology that works in the dark

Army researchers have developed an artificial intelligence and machine learning technique that produces a visible face image from a thermal image of a person's face captured in low-light or nighttime conditions. This development could lead to enhanced real-time biometrics and post-mission forensic analysis for covert nighttime operations.

Thermal cameras like FLIR, or Forward Looking Infrared, sensors are actively deployed on aerial and ground vehicles, in watch towers and at check points for surveillance purposes. More recently, thermal cameras are becoming available for use as body-worn cameras. The ability to perform automatic face recognition at nighttime using such thermal cameras is beneficial for informing a Soldier that an individual is someone of interest, like someone who may be on a watch list.

The motivations for this technology -- developed by Drs. Benjamin S. Riggan, Nathaniel J. Short and Shuowen "Sean" Hu, from the U.S. Army Research Laboratory -- are to enhance both automatic and human-matching capabilities.

"This technology enables matching between thermal face images and existing biometric face databases/watch lists that only contain visible face imagery," said Riggan, a research scientist. "The technology provides a way for humans to visually compare visible and thermal facial imagery through thermal-to-visible face synthesis."

He said under nighttime and low-light conditions, there is insufficient light for a conventional camera to capture facial imagery for recognition without active illumination such as a flash or spotlight, which would give away the position of such surveillance cameras; however, thermal cameras that capture the heat signature naturally emanating from living skin tissue are ideal for such conditions.

"When using thermal cameras to capture facial imagery, the main challenge is that the captured thermal image must be matched against a watch list or gallery that only contains conventional visible imagery from known persons of interest," Riggan said. "Therefore, the problem becomes what is referred to as cross-spectrum, or heterogeneous, face recognition. In this case, facial probe imagery acquired in one modality is matched against a gallery database acquired using a different imaging modality."

This approach leverages advanced domain adaptation techniques based on deep neural networks. The fundamental approach is composed of two key parts: a non-linear regression model that maps a given thermal image into a corresponding visible latent representation, and an optimization problem that projects the latent representation back into the image space.
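The two-part structure described above can be illustrated with a deliberately tiny sketch. Everything in it is an assumption made for illustration: the article's non-linear regression is swapped for a per-feature affine least-squares fit, the "feature extractor" is a fixed toy linear encoder, and the function names (`fit_affine`, `encode`, `invert`, `thermal_to_visible`) are hypothetical. It is not the ARL method, only the same shape of pipeline: regress into a visible latent, then optimize an image whose features match that latent.

```python
def fit_affine(xs, ys):
    """Least-squares fit of y ~ slope * x + intercept (stand-in for the regression stage)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / var_x
    return slope, mean_y - slope * mean_x


def encode(image, weights):
    """Toy feature extractor (assumption): each feature is a weighted sum of pixels."""
    return [sum(w * p for w, p in zip(row, image)) for row in weights]


def invert(target, weights, n_pixels, steps=500, lr=0.1):
    """Gradient descent on ||encode(image) - target||^2 to recover an image."""
    image = [0.0] * n_pixels
    for _ in range(steps):
        residual = [f - t for f, t in zip(encode(image, weights), target)]
        grad = [2 * sum(r * row[j] for r, row in zip(residual, weights))
                for j in range(n_pixels)]
        image = [p - lr * g for p, g in zip(image, grad)]
    return image


def thermal_to_visible(thermal_latent, slope, intercept, weights, n_pixels):
    """Stage 1: map thermal features to visible features. Stage 2: invert to an image."""
    visible_latent = [slope * t + intercept for t in thermal_latent]
    return invert(visible_latent, weights, n_pixels)
```

The step size and iteration count are tuned to the toy encoder only; a real feature extractor would need a proper optimizer and regularization.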

Details of this work were presented in March in a technical paper "Thermal to Visible Synthesis of Face Images using Multiple Regions" at the IEEE Winter Conference on Applications of Computer Vision, or WACV, in Lake Tahoe, Nevada, which is a technical conference comprised of scholars and scientists from academia, industry and government.

At the conference, Army researchers demonstrated that combining global information, such as features from across the entire face, and local information, such as features from discriminative fiducial regions (for example, the eyes, nose and mouth), enhanced the discriminability of the synthesized imagery. They showed how the thermal-to-visible mapped representations from both global and local regions in the thermal face signature could be used in conjunction to synthesize a refined visible face image.

The optimization problem for synthesizing an image attempts to jointly preserve the shape of the entire face and appearance of the local fiducial details. Using the synthesized thermal-to-visible imagery and existing visible gallery imagery, they performed face verification experiments using a common open source deep neural network architecture for face recognition. The architecture used is explicitly designed for visible-based face recognition. The most surprising result is that their approach achieved better verification performance than a generative adversarial network-based approach, which previously showed photo-realistic properties.
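Face verification of the kind described above usually reduces to comparing two embedding vectors and thresholding a similarity score. The sketch below assumes cosine similarity and a threshold of 0.8; the article names neither the metric nor the network, so both are illustrative choices, and the embeddings are taken as already computed by some face recognition model.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def verify(probe_embedding, gallery_embedding, threshold=0.8):
    """Return True if the two embeddings are judged to be the same identity.

    The threshold is a hypothetical value; in practice it is chosen on a
    validation set to trade false accepts against false rejects.
    """
    return cosine_similarity(probe_embedding, gallery_embedding) >= threshold
```

Here the probe would be the embedding of the synthesized visible image and the gallery entry the embedding of an enrolled visible photo.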

Riggan attributes this result to the fact that the game-theoretic objective for GANs immediately seeks to generate imagery that is sufficiently similar in dynamic range and photo-like appearance to the training imagery, while sometimes neglecting to preserve identifying characteristics. The approach developed by ARL preserves identity information to enhance discriminability, for example, by increasing recognition accuracy for both automatic face recognition algorithms and human adjudication.

As part of the paper presentation, ARL researchers showcased a near real-time demonstration of this technology. The proof of concept demonstration included the use of a FLIR Boson 320 thermal camera and a laptop running the algorithm in near real-time. This demonstration showed the audience that a captured thermal image of a person can be used to produce a synthesized visible image in situ. This work received the best paper award in the faces/biometrics session of the conference, out of more than 70 papers presented.

Riggan said he and his colleagues will continue to extend this research under the sponsorship of the Defense Forensics and Biometrics Agency to develop a robust nighttime face recognition capability for the Soldier.

9 notes

·

View notes

Text

Drone Photography - Capture Almost Everything

Like any other field, photography has advanced considerably over time. You can now capture subjects that were once almost impossible to photograph. Photography can change the entire look of a place or a person and make it more beautiful than we might imagine. It has kept improving over the years, and now it is used to make things easier.

Drone Photography Isle of Wight is one of a kind. To define the term: it is photography that captures ground-based places from an elevated position. Since a camera at ground level cannot take such pictures, the platforms used include rockets, aircraft, kites, blimps, parachutes and many more. An advanced, accurate camera is used for this type of photography. Some of its important concepts are:

Focal Length

Stereoscopic coverage

Frame Numbers

Roll

Fiducial Marks

Flight lines

Index Maps

Best Time to take Photographs

Some believe the best season for aerial photography is winter. Again, it depends on what you want to capture. For example, if you plan to photograph fields, do it when the land has not been ploughed for a long time and no crops are standing, because the features of the field are then clearly visible. If you are a photographer, you should also know about Drone Filming Isle of Wight, as it is one of the best ways to enhance your photography skills.

In archaeology, aerial photography and Video Editing Isle Of Wight have really helped in searching for lost monuments and in tracking features that cannot be seen from ground level or that are hidden beneath the soil. Such features are located through soil marks, parch marks, crop marks and so on. The photography also helps in geology, for example in understanding changes in natural processes and variations in soil. Geologists find it useful for learning about annual rainfall, whether the level of rainfall will be higher, lower or normal, and what its consequences on the earth will be.

Satellite imagery and digital mapping are more popular than aerial photography, but the photography is still used where practical applications are required, such as finding fuel and mineral deposits, recording geological changes, and water management. There are still certain areas, such as flood risk and drainage problems in urban development, where geological research can be done only through photography and other applications are not helpful.

So, to conclude, aerial photography is not only a boon for archaeology but also helpful in environmental studies, which include mapping forests, learning about landslides and planning water conservation systems.

0 notes

Text

How Oculus squeezed sophisticated tracking into pipsqueak hardware

Making the VR experience simple and portable was the main goal of the Oculus Quest, and it definitely accomplishes that. But going from things in the room tracking your headset to your headset tracking things in the room was a complex process. I talked with Facebook CTO Mike Schroepfer (“Schrep”) about the journey from “outside-in” to “inside-out.”

When you move your head and hands around with a VR headset and controllers, some part of the system has to track exactly where those things are at all times. There are two ways this is generally attempted.

One approach is to have sensors in the room you’re in, watching the devices and their embedded LEDs closely — looking from the outside in. The other is to have the sensors on the headset itself, which watches for signals in the room — looking from the inside out.

Both have their merits, but if you want a system to be wireless, your best bet is inside-out, since you don’t have to wirelessly send signals between the headset and the computer doing the actual position tracking, which can add hated latency to the experience.

Facebook and Oculus set a goal a few years back to achieve not just inside-out tracking, but make it as good as or better than the wired systems that run on high-end PCs. And it would have to run anywhere, not just in a set scene with boundaries set by beacons or something, and do so within seconds of putting it on. The result is the impressive Quest headset, which succeeded with flying colors at this task (though it’s not much of a leap in others).

What’s impressive about it isn’t just that it can track objects around it and translate that to an accurate 3D position of itself, but that it can do so in real time on a chip with a fraction of the power of an ordinary computer.

“I’m unaware of any system that’s anywhere near this level of performance,” said Schroepfer. “In the early days there were a lot of debates about whether it would even work or not.”

The term for what the headset does is simultaneous localization and mapping, or SLAM. It basically means building a map of your environment in 3D while also figuring out where you are in that map. Naturally robots have been doing this for some time, but they generally use specialized hardware like lidar, and have a more powerful processor at their disposal. All the new headsets would have are ordinary cameras.

“In a warehouse, I can make sure my lighting is right, I can put fiducials on the wall, which are markers that can help reset things if I get errors — that’s like a dramatic simplification of the problem, you know?” Schroepfer pointed out. “I’m not asking you to put fiducials up on your walls. We don’t make you put QR codes or precisely positioned GPS coordinates around your house.

“It’s never seen your living room before, and it just has to work. And in a relatively constrained computing environment — we’ve got a mobile CPU in this thing. And most of that mobile CPU is going to the content, too. The robot isn’t playing Beat Saber at the same time it’s cruising through the warehouse.”

It’s a difficult problem in multiple dimensions, then, which is why the team has been working on it for years. Ultimately several factors came together. One was simply that mobile chips became powerful enough that something like this is even possible. But Facebook can’t really take credit for that.

More important was the ongoing work in computer vision that Facebook’s AI division has been doing under the eye of Yann LeCun and others there. Machine learning models frontload a lot of the processing necessary for computer vision problems, and the resulting inference engines are lighter weight, if not necessarily well understood. Putting efficient, edge-oriented machine learning to work inched this problem closer to having a possible solution.

Most of the labor, however, went into the complex interactions of the multiple systems that interact in real time to do the SLAM work.

“I wish I could tell you it’s just this really clever formula, but there’s lots of bits to get this to work,” Schroepfer said. “For example, you have an IMU on the system, an inertial measurement unit, and that runs at a very high frequency, maybe 1000 Hz, much higher than the rest of the system [i.e. the sensors, not the processor]. But it has a lot of error. And then we run the tracker and mapper on separate threads. And actually we multi-threaded the mapper, because it’s the most expensive part [i.e. computationally]. Multi-threaded programming is a pain to begin with, but you do it across these three, and then they share data in interesting ways to make it quick.”
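The quote describes a fast but error-prone IMU running alongside a slower camera-based tracker. One common way to combine such sources is a complementary filter: integrate the IMU at full rate and nudge the estimate toward each camera fix when one arrives. The sketch below is a one-dimensional illustration under assumed numbers (1000 Hz IMU, one camera fix every 33 samples, a correction gain of 0.2). The interview does not say which fusion method the Quest uses, so this is only a plausible stand-in with hypothetical names.

```python
def fuse(imu_rates, camera_fixes, dt=0.001, camera_every=33, gain=0.2):
    """Fuse high-rate IMU rate readings with low-rate absolute camera fixes.

    imu_rates: one angular-rate sample per IMU tick (rad/s), possibly biased.
    camera_fixes: one absolute angle (rad) per `camera_every` IMU ticks.
    gain: fraction of the camera/estimate disagreement corrected per fix
          (0 disables correction, 1 trusts the camera completely).
    """
    angle = 0.0
    history = []
    for i, rate in enumerate(imu_rates):
        angle += rate * dt  # dead reckoning: fast, but accumulates drift
        if (i + 1) % camera_every == 0:
            fix = camera_fixes[(i + 1) // camera_every - 1]
            angle += gain * (fix - angle)  # slow, drift-free correction
        history.append(angle)
    return history
```

With a stationary headset and a constant IMU bias, calling `fuse` with `gain=0` shows the estimate drifting without bound, while a non-zero gain keeps the error small and bounded.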

Schroepfer caught himself here: “I’d have to spend like three hours to take you through all the grungy bits.”

Part of the process was also extensive testing, for which they used a commercial motion tracking rig as ground truth. They’d track a user playing with the headset and controllers, and using the OptiTrack setup measure the precise motions made.

Testing with the OptiTrack system.

To see how the algorithms and sensing system performed, they’d basically play back the data from that session to a simulated version of it: video of what the camera saw, data from the IMU, and any other relevant metrics. If the simulation was close to the ground truth they’d collected externally, good. If it wasn’t, the machine learning system would adjust its parameters and they’d run the simulation again. Over time the smaller, more efficient system drew closer and closer to producing the same tracking data the OptiTrack rig had recorded.
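The replay-and-adjust loop described above can be sketched as an offline tuning harness: run a tracker over a recorded session, score it against the externally measured ground truth, and keep the parameter setting with the lowest error. The tracker here is a toy with a single `gain` parameter, the error metric is RMSE, and the search is a plain grid search. All three are assumptions for illustration, since the article only says that parameters were adjusted until the output matched the OptiTrack data.

```python
import math


def replay(log, gain):
    """Run a toy tracker over recorded (imu_delta, camera_fix) samples."""
    estimate = 0.0
    trajectory = []
    for imu_delta, camera_fix in log:
        estimate += imu_delta                       # predict from the IMU
        estimate += gain * (camera_fix - estimate)  # correct from the camera
        trajectory.append(estimate)
    return trajectory


def rmse(estimates, truth):
    """Root-mean-square error between a replayed trajectory and ground truth."""
    return math.sqrt(sum((e - t) ** 2 for e, t in zip(estimates, truth)) / len(truth))


def tune(log, truth, candidates):
    """Return the candidate gain whose replay is closest to ground truth."""
    return min(candidates, key=lambda gain: rmse(replay(log, gain), truth))
```

Because the session is recorded once and replayed many times, every candidate setting is judged on exactly the same motion, which is what makes the comparison fair.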

Ultimately it needed to be as good as or better than the standard Rift headset. Years after the original, no one would buy a headset that was a step down in any way, no matter how much cheaper it was.

“It’s one thing to say, well my error rate compared to ground truth is whatever, but how does it actually manifest in terms of the whole experience?” said Schroepfer. “As we got towards the end of development, we actually had a couple passionate Beat Saber players on the team, and they would play on the Rift and on the Quest. And the goal was, the same person should be able to get the same high score or better. That was a good way to reset our micro-metrics and say, well this is what we actually need to achieve the end experience that people want.”

It doesn’t hurt that it’s cheaper, too. Lidar is expensive enough that even auto manufacturers are careful how they implement it, and time-of-flight or structured-light approaches like Kinect also bring the cost up. Yet they massively simplify the problem, being 3D sensing tools to begin with.

“What we said was, can we get just as good without that? Because it will dramatically reduce the long term cost of this product,” he said. “When you’re talking to the computer vision team here, they’re pretty bullish on cameras with really powerful algorithms behind them being the solution to many problems. So our hope is that for the long run, for most consumer applications, it’s going to all be inside-out tracking.”

I pointed out that VR is not considered by all to be a healthy industry, and that technological solutions may not do much to solve a more multi-layered problem.

Schroepfer replied that there are basically three problems facing VR adoption: cost, friction, and content. Cost is self-explanatory, but it would be wrong to say it’s gotten a lot cheaper over the years. PlayStation VR established a low-cost entry early on but “real” VR has remained expensive. Friction is how difficult it is to get from “open the box” to “play a game,” and historically has been a sticking point for VR. Oculus Quest addresses both these issues quite well, being priced at $400 and, as our review noted, very easy to just pick up and use. All that computer vision work wasn’t for nothing.

Content is still thin on the ground, though. There have been some hits, like Superhot and Beat Saber, but nothing to really draw crowds to the platform (if it can be called that).

“What we’re seeing is, as we get these headsets out, and in developers’ hands, that people come up with all sorts of creative ideas. I think we’re in the early stages — these platforms take some time to marinate,” Schroepfer admitted. “I think everyone should be patient, it’s going to take a while. But this is the way we’re approaching it, we’re just going to keep plugging away, building better content, better experiences, better headsets as fast as we can.”

from RSSMix.com Mix ID 8176395 https://techcrunch.com/2019/08/22/how-oculus-squeezed-sophisticated-tracking-into-pipsqueak-hardware/ via http://www.kindlecompared.com/kindle-comparison/

0 notes

Text

How Oculus squeezed sophisticated tracking into pipsqueak hardware

Making the VR experience simple and portable was the main goal of the Oculus Quest, and it definitely accomplishes that. But going from things in the room tracking your headset to your headset tracking things in the room was a complex process. I talked with Facebook CTO Mike Schroepfer (“Schrep”) about the journey from “outside-in” to “inside-out.”

When you move your head and hands around with a VR headset and controllers, some part of the system has to track exactly where those things are at all times. There are two ways this is generally attempted.

One approach is to have sensors in the room you’re in, watching the devices and their embedded LEDs closely — looking from the outside in. The other is to have the sensors on the headset itself, which watches for signals in the room — looking from the inside out.

Both have their merits, but if you want a system to be wireless, your best bet is inside-out, since you don’t have to wirelessly send signals between the headset and the computer doing the actual position tracking, which can add hated latency to the experience.

Facebook and Oculus set a goal a few years back to achieve not just inside-out tracking, but make it as good or better than the wired systems that run on high-end PCs. And it would have to run anywhere, not just in a set scene with boundaries set by beacons or something, and do so within seconds of putting it on. The result is the impressive Quest headset, which succeeded with flying colors at this task (though it’s not much of a leap in others).

Review: Oculus Quest could be the Nintendo Switch of VR

What’s impressive about it isn’t just that it can track objects around it and translate that to an accurate 3D position of itself, but that it can do so in real time on a chip with a fraction of the power of an ordinary computer.

“I’m unaware of any system that’s anywhere near this level of performance,” said Schroepfer. “In the early days there were a lot of debates about whether it would even work or not.”

Our hope is that for the long run, for most consumer applications, it’s going to all be inside-out tracking.

The term for what the headset does is simultaneous localization and mapping, or SLAM. It basically means building a map of your environment in 3D while also figuring out where you are in that map. Naturally robots have been doing this for some time, but they generally use specialized hardware like lidar, and have a more powerful processor at their disposal. All the new headsets would have are ordinary cameras.

“In a warehouse, I can make sure my lighting is right, I can put fiducials on the wall, which are markers that can help reset things if I get errors — that’s like a dramatic simplification of the problem, you know?” Schroepfer pointed out. “I’m not asking you to put fiducials up on your walls. We don’t make you put QR codes or precisely positioned GPS coordinates around your house.

“It’s never seen your living room before, and it just has to work. And in a relatively constrained computing environment — we’ve got a mobile CPU in this thing. And most of that mobile CPU is going to the content, too. The robot isn’t playing Beat Saber at the same time it’s cruising though the warehouse.”

It’s a difficult problem in multiple dimensions, then, which is why the team has been working on it for years. Ultimately several factors came together. One was simply that mobile chips became powerful enough that something like this is even possible. But Facebook can’t really take credit for that.

More important was the ongoing work in computer vision that Facebook’s AI division has been doing under the eye of Yann Lecun and others there. Machine learning models frontload a lot of the processing necessary for computer vision problems, and the resulting inference engines are lighter weight, if not necessarily well understood. Putting efficient, edge-oriented machine learning to work inched this problem closer to having a possible solution.

Most of the labor, however, went into the complex interactions of the multiple systems that interact in real time to do the SLAM work.

“I wish I could tell you it’s just this really clever formula, but there’s lots of bits to get this to work,” Schroepfer said. “For example, you have an IMU on the system, an inertial measurement unit, and that runs at a very high frequency, maybe 1000 Hz, much higher than the rest of the system [i.e. the sensors, not the processor]. But it has a lot of error. And then we run the tracker and mapper on separate threads. And actually we multi-threaded the mapper, because it’s the most expensive part [i.e. computationally]. Multi-threaded programming is a pain to begin with, but you do it across these three, and then they share data in interesting ways to make it quick.”

Schroepfer caught himself here; “I’d have to spend like three hours to take you through all the grungy bits.”

Part of the process was also extensive testing, for which they used a commercial motion tracking rig as ground truth. They’d track a user playing with the headset and controllers, and using the OptiTrack setup measure the precise motions made.

Testing with the OptiTrack system.

To see how the algorithms and sensing system performed, they’d basically play back the data from that session to a simulated version of it: video of what the camera saw, data from the IMU, and any other relevant metrics. If the simulation was close to the ground truth they’d collected externally, good. If it wasn’t, the machine learning system would adjust its parameters and they’d run the simulation again. Over time the smaller, more efficient system drew closer and closer to producing the same tracking data the OptiTrack rig had recorded.

Ultimately it needed to be as good or better than the standard Rift headset. Years after the original, no one would buy a headset that was a step down in any way, no matter how much cheaper it was.

“It’s one thing to say, well my error rate compared to ground truth is whatever, but how does it actually manifest in terms of the whole experience?” said Schroepfer. “As we got towards the end of development, we actually had a couple passionate Beat Saber players on the team, and they would play on the Rift and on the Quest. And the goal was, the same person should be able to get the same high score or better. That was a good way to reset our micro-metrics and say, well this is what we actually need to achieve the end experience that people want.”

the computer vision team here, they’re pretty bullish on cameras with really powerful algorithms behind them being the solution to many problems.

It doesn’t hurt that it’s cheaper, too. Lidar is expensive enough that even auto manufacturers are careful how they implement it, and time-of-flight or structured-light approaches like Kinect also bring the cost up. Yet they massively simplify the problem, being 3D sensing tools to begin with.

“What we said was, can we get just as good without that? Because it will dramatically reduce the long term cost of this product,” he said. “When you’re talking to the computer vision team here, they’re pretty bullish on cameras with really powerful algorithms behind them being the solution to many problems. So our hope is that for the long run, for most consumer applications, it’s going to all be inside-out tracking.”

I pointed out that VR is not considered by all to be a healthy industry, and that technological solutions may not do much to solve a more multi-layered problem.

Schroepfer replied that there are basically three problems facing VR adoption: cost, friction, and content. Cost is self-explanatory, but it would be wrong to say it’s gotten a lot cheaper over the years. PlayStation VR established a low-cost entry point early on, but “real” VR has remained expensive. Friction is how difficult it is to get from “open the box” to “play a game,” and it has historically been a sticking point for VR. Oculus Quest addresses both these issues quite well, being priced at $400 and, as our review noted, very easy to just pick up and use. All that computer vision work wasn’t for nothing.

Content is still thin on the ground, though. There have been some hits, like Superhot and Beat Saber, but nothing to really draw crowds to the platform (if it can be called that).

“What we’re seeing is, as we get these headsets out and in developers’ hands, that people come up with all sorts of creative ideas. I think we’re in the early stages; these platforms take some time to marinate,” Schroepfer admitted. “I think everyone should be patient, it’s going to take a while. But this is the way we’re approaching it, we’re just going to keep plugging away, building better content, better experiences, better headsets as fast as we can.”

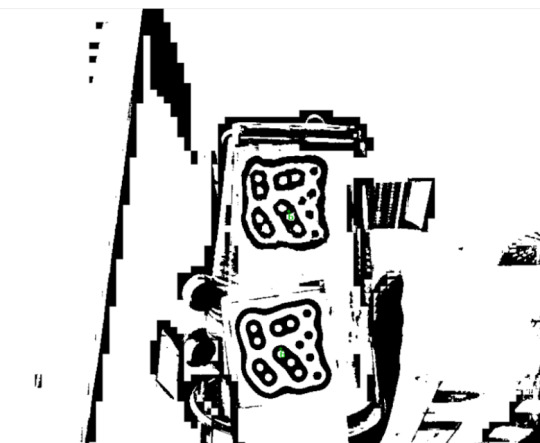

17# Colour testing midi

Today I thought I’d briefly test how well the webcam can read the fiducial codes. In Photoshop we quickly edited various images of fiducial codes in different colors. I thought we might make our presentation a bit nicer visually by making the fiducial codes a bit more discreet on the object. We found that the higher the contrast between the colors, the more likely the code is to be picked up by the camera, which makes sense. I also wanted to see if a fiducial code would work on, say, a clear plastic sheet. I had a plastic cup left over from the ITS final day shared lunch, so I tried drawing a fiducial code on it. To my surprise it worked, though it was less effective. Needless to say, a polished presentation is not our main concern at the moment, so who’s to say whether we’ll end up using this newfound information? It was interesting to find out, though.
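One rough way to put a number on "contrast between the colors" before printing a code is the WCAG contrast ratio, which compares the relative luminance of two sRGB colors. This is only a sketch: it assumes the tracking software effectively works on brightness rather than hue, which was not verified here, and the color pairs below are made-up examples, not the ones tested in the photos.

```python
def relative_luminance(rgb):
    """Relative luminance of an 8-bit sRGB color, using the WCAG 2.x formula."""
    def linearize(channel):
        c = channel / 255.0
        return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4

    r, g, b = (linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(color_a, color_b):
    """WCAG contrast ratio: 1.0 means identical brightness, 21.0 is black on white."""
    lum_a = relative_luminance(color_a)
    lum_b = relative_luminance(color_b)
    lighter, darker = max(lum_a, lum_b), min(lum_a, lum_b)
    return (lighter + 0.05) / (darker + 0.05)


# Hypothetical color pairs (marker color, background color) for comparison.
candidate_pairs = {
    "black on white": ((0, 0, 0), (255, 255, 255)),
    "navy on white": ((0, 0, 128), (255, 255, 255)),
    "yellow on white": ((255, 255, 0), (255, 255, 255)),
}

# Print the pairs from highest to lowest contrast.
for name, (fg, bg) in sorted(candidate_pairs.items(),
                             key=lambda item: -contrast_ratio(*item[1])):
    print(f"{name}: {contrast_ratio(fg, bg):.2f}")
```

Pairs near the top of the list should, under the assumption above, be easier for the webcam to pick up, while low-ratio pairs like yellow on white are the ones most likely to fail.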

Testing different colors

Replicating fiducial code on a clear plastic cup

Code seems to work
