#gesturerecognition

Explore tagged Tumblr posts

Text

#SmartGadgets#HighTech#NextGenGadgets#WearableInnovation#SmartAccessories#RingTechnology#TechGadgets#ModernWearables#AIWearables#FitnessTech#HealthTech#SmartDevice#AdvancedGadget#DigitalGear#SmartJewelry#ConnectedWearables#SmartRingTech#GestureRecognition#SmartNotifications#TechLifestyle#Innovation2025#GadgetHub#SmartDeviceReview#FutureWearables#TechExplained#GadgetUnboxing#HandsOnTech#TechComparison2025#TopSmartDevices#UltimateTechReview

Text

Gesture Recognition in Tech: The Next Big Thing in Consumer Electronics

Gesture recognition is reshaping the future of consumer electronics by providing intuitive, hands-free control over our devices. With a projected market size of $39,413.6 million by 2030, this technology is set to revolutionize how we interact with smartphones, TVs, and more. APAC's dominance and the growing application in smart TVs and mobile devices mark a major shift. From ease of use to improved ergonomics, gesture recognition is opening doors for new innovations across industries.

#gesturerecognition#consumerelectronics#smartdevices#futuretech#innovation#techtrends#AI#humanmachineinteraction#touchless#wearabletech

Text

Automotive Gesture Recognition System Market Opportunities, Regional Overview, Business Strategies, and Industry Size Forecast to 2030

The Automotive Gesture Recognition System Market Research Report 2024 begins with an overview of the market and traces its development. It presents a comprehensive analysis of all regional and major-player segments, giving closer insight into present market conditions and future opportunities, along with drivers, trending segments, consumer behaviour, pricing factors, market performance and estimates, and information on the leading global vendors. The forecast market information, SWOT analysis, market scenario, and feasibility study are the vital aspects analysed in this report.

The global automotive gesture recognition system market is expected to grow at a 17% CAGR from 2024 to 2030. It is expected to reach above USD 990.4 million by 2030 from USD 10,326.46 million in 2023.

Access Full Report:

https://exactitudeconsultancy.com/reports/13318/automotive-gesture-recognition-system-market/

#GestureRecognition#AutomotiveTechnology#DriverAssistance#GestureControl#CarTech#AutomotiveInnovation#HumanMachineInterface#VehicleSafety#FutureOfMobility#GestureControlledCars#AutomotiveElectronics#AdvancedDrivingSystems#VehicleTechnology#SmartCar#InCarTechnology#AutomotiveGadgets#TechInCars#GestureInterface#CarInteriors

Text

🎮 VR Gaming Boom: $12.1B to $57.5B by 2033 – Play in a New Dimension! 🚀

The Virtual Reality (VR) in Gaming market is revolutionizing the gaming experience by introducing interactive 3D environments that merge the virtual and physical worlds. From cutting-edge VR headsets to innovative content creation tools, the market caters to both consumers and enterprises, driving new levels of engagement and innovation in game design.

To request a sample report: https://www.globalinsightservices.com/request-sample/?id=GIS22306&utm_source=SnehaPatil&utm_medium=Article

Key Market Trends:

VR hardware, particularly headsets, dominates the market, delivering unparalleled immersion through advanced motion tracking, haptic feedback, and gesture recognition.

Software platforms and game development tools are the second-highest performers, enabling developers to craft diverse and interactive VR experiences.

Motion tracking, 3D audio, and haptic feedback are leading technological advancements, enhancing realism and user interaction.

Regional Insights:

North America leads the VR gaming market, thanks to a strong gaming industry, early technology adoption, and high consumer spending.

Europe follows closely, fueled by a rich gaming culture and robust technological infrastructure.

China emerges as a key player, driven by its vast gaming population and government support for VR technologies.

Applications Across Genres:

Action and Adventure Games: Offer immersive storytelling and dynamic gameplay.

Simulation and Sports Games: Provide realistic experiences with precision motion tracking.

Educational Games: Promote interactive learning in engaging 3D environments.

Future of VR in Gaming: As advancements in motion tracking, haptic feedback, and network connectivity continue, VR gaming is poised to redefine entertainment. Opportunities abound for stakeholders to create innovative, boundary-pushing experiences.

#VRGaming #VirtualReality #ImmersiveGaming #VRHeadsets #3DAudio #HapticFeedback #GamingInnovation #VRGameDevelopment #FutureOfGaming #VRAdventure #SimulationGames #MotionTracking #GestureRecognition #TechInGaming #GamersUnite

Text

Learning Nothing

How can we train a machine to recognise the difference between ‘something’ and ‘nothing’? Over the past few months, I have been working with Despina Papadopoulos on an R&D wearable project – Embodied Companionship, funded by Human Data Interaction.

“Embodied Companionship seeks to create a discursive relationship between machine learning and humans, centered around nuance, curiosity and second order feedback loops. Using machine learning to not only train and “learn” the wearers behaviour but create a symbiotic relationship with a technological artifact that rests on a mutual progression of understanding, the project aims to embody and make legible the process and shed some light on the black box.” – Text by Despina Papadopoulos

The project builds on the work we did last year in collaboration with Bless, where we created a prototype of a new form of wearable companion – Stylefree, a scarf that becomes ‘alive’ the more the wearer interacts with it. In Embodied Companionship, we wanted to further explore the theoretical, physical and cybernetic relationship between technology, the wearable (medium), and its wearer.

*Stylefree – a collaboration between Despina Papadopoulos, Umbrellium and Bless.

In this blog post, I want to share some of the interesting challenges I faced while experimenting with machine learning algorithms and wearable microcontrollers to recognise body movements and gestures. This post raises more questions than it answers, as this is a work in progress, but I hope to share more insights at the end of the project.

My research focuses on TensorFlow Lite, the open-source machine learning library, as developed for the Arduino Nano 33 BLE Sense. I have designed, fabricated and programmed many wearable projects over the years (e.g. Pollution Explorers – exploring air quality with communities using wearables and machine learning algorithms), large-scale performances (e.g. SUPERGESTURES – each audience member wore a gesture-sensing wearable to listen to geolocated audio stories and perform gestures created by young people) and platforms (e.g. WearON – a platform for designers to quickly prototype connected IoT wearables), and this board is a step up from any wearable-friendly controller I have used before. It contains many useful body-related sensors, such as a 9-axis inertial sensor and a microphone, along with a few environmental sensors such as light and humidity sensors. With these sensors embedded, it becomes much easier to create smaller wearables that can better sense the user’s position, movement and body gestures, depending on where the board is placed on the body. And with its TinyML support, which allows machine learning applications to run on the device itself (edge computing), we can (finally!) start to play with more advanced gesture recognition. For the purpose of our project, the board is positioned on the wearer’s arm.

*Image of the prototype wearable of Embodied Companionship

Training a Machine

With these constraints in mind, I started exploring a couple of fundamental questions: how does a machine understand a body gesture or a movement? How does it tell (or how can we tell it to tell…) one gesture apart from another? Any machine learning project requires training data. This data provides examples of the patterns that correspond to user-defined categories, so that in future the machine can compare incoming streams of data against those examples and try to match them. However, the algorithm doesn't simply match them; it returns a confidence level that the captured stream of data matches each particular pattern. TensorFlow offers a very good basic tutorial on gesture recognition using the Arduino board, but it is based on recognising simple, big gestures (e.g. arm flexing and punching), which are easily recognisable. For the machine to learn a wearer’s gestural behaviour, it will need to learn many different types of movement patterns that a person might perform with their arm. So our first task is to check whether we can use this Arduino and TensorFlow Lite to recognise more than 2 types of gestures.
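To make the idea of a "confidence level" concrete, here is a minimal sketch of how raw classifier scores are commonly turned into per-gesture confidences with a softmax. It assumes the model ends in a softmax output, which is typical for small classifiers of this kind but is not confirmed by the post; the gesture names and scores are hypothetical.

```python
import math

def softmax(scores):
    """Turn raw model scores into confidence levels that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical gesture names and raw scores, for illustration only.
GESTURES = ["punch", "flex", "wave"]
raw_scores = [2.0, 0.5, 0.1]

confidences = softmax(raw_scores)
for name, conf in zip(GESTURES, confidences):
    print(f"{name}: {conf:.2f}")
```

Because the confidences always sum to 1, adding more gesture classes with similar-looking data tends to spread the total across them rather than produce one clear winner.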

I started by adjusting various parameters of the machine learning code, e.g. training more than 2 sets of distinct gestures, training with more subtle gestures, increasing the training data set for each gesture, and increasing the number of epochs. The results were not satisfactory: the board could not recognise any of the gestures with high confidence, mainly because the data for each gesture was not distinct enough for the machine to tell apart, so it spread its confidence across the few gestures it had been taught. It also highlighted a key question for me: how would a machine ‘know’ when a gesture is happening and when it is not? Without an explicit button press to signal the start and end of a gesture (analogous to the Alexa or Siri wake word), I realised that it would also need to recognise when a gesture was not happening.

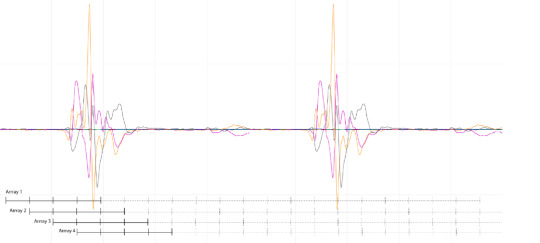

*How a gesture/movement is read on the serial plotter through its 3-axis accelerometer data

The original code from the tutorial starts detecting a gesture the moment significant motion is detected, which could be a problem if we are trying to recognise more subtle gestures such as a slow hand wave or a slow lift of the arm. I started experimenting with a couple of other ways for the Arduino board to recognise a gesture at the ‘right’ time. First, I programmed a button that the wearer presses to instruct the board to start recognising the gesture while it is being performed. This is not ideal, as the wearer has to consciously instruct the wearable whenever they perform a gesture, but it allows me to understand what constitutes a ‘right’ starting time for recognising a gesture. Second, I tried programming the board to capture buckets of data at multiple intervals a few milliseconds apart and run multiple analyses at once, comparing the buckets to determine which one returns the highest confidence level at any instant. However, that did not return any significantly better result, it is memory intensive for the board, and it reinforces the challenge: the machine needs to know when a person is not performing any gesture.

*Capturing buckets of data at multiple short, millisecond-scale intervals
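The bucket approach can be sketched as overlapping sliding windows over the sensor stream, each classified separately, with the most confident window kept. This is an illustration only, not the project's code: the function names, the window sizes and the stand-in classifier `toy_classify` are all hypothetical.

```python
def sliding_windows(samples, window_size, step):
    """Yield (start_index, window) pairs of overlapping fixed-length buckets."""
    for start in range(0, len(samples) - window_size + 1, step):
        yield start, samples[start:start + window_size]

def best_bucket(samples, classify, window_size, step):
    """Classify every bucket; return (start, label, confidence) of the most confident one."""
    best = None
    for start, window in sliding_windows(samples, window_size, step):
        label, confidence = classify(window)
        if best is None or confidence > best[2]:
            best = (start, label, confidence)
    return best

def toy_classify(window):
    """Hypothetical stand-in for the model: confidence grows with mean absolute value."""
    energy = sum(abs(v) for v in window) / len(window)
    return "gesture", min(1.0, energy)

# A made-up one-axis stream with a burst of movement in the middle.
stream = [0.0, 0.0, 0.1, 0.6, 0.9, 0.7, 0.2, 0.0, 0.0, 0.0]
result = best_bucket(stream, toy_classify, window_size=4, step=2)
print(result)
```

Every bucket costs one full inference and its own buffer, which is one way to see why the approach is memory intensive on a microcontroller. Note also that it always returns some bucket, even when the wearer did nothing at all.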

While the Arduino board might be good at distinguishing between 2 gestures, if you perform a 3rd gesture that the board has not been trained on, it will return one of the learnt gestures with a very low confidence level. This is because it was not taught with examples of other gestures. However, if we want it to learn a wearer’s behaviour over time, we need to teach the machine a set of gestures, just as any language comes with a strict set of components (e.g. an alphabet), and it is equally important to teach it to recognise when the wearer is not doing anything significant. That poses a major challenge: how much training do we need to teach a machine when the wearer is doing nothing?
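One common stopgap for untrained movements is to reject any prediction whose confidence falls below a threshold and report ‘nothing’ instead. The sketch below assumes that approach; the threshold value, labels and function name are hypothetical, and this is not a substitute for actually training a ‘nothing’ category.

```python
def label_or_nothing(confidences, labels, threshold=0.8):
    """Return the top label only if its confidence clears the threshold."""
    best_index = max(range(len(confidences)), key=lambda i: confidences[i])
    if confidences[best_index] < threshold:
        return "nothing"
    return labels[best_index]

LABELS = ["punch", "flex"]  # hypothetical trained gestures

# A clear punch versus an ambiguous, untrained movement.
print(label_or_nothing([0.95, 0.05], LABELS))
print(label_or_nothing([0.55, 0.45], LABELS))
```

The threshold only works when unfamiliar movements really do produce low confidence, and choosing its value still requires deciding what should count as ‘nothing’ for a given wearer.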

Making Sense of the Nuances

When it comes to making sense of body gestures, our recognition of any gesture is guided by our background, culture, history, experience and interaction with each other. This is something that, even in this day and age, an advanced machine is still incapable of doing, e.g. recognising different skin colours. Therefore, as much as we can train a machine to learn a body gesture through its x, y, z coordinates or its speed of movement, we cannot train it with cultural knowledge or experience, or teach it to detect the subtle nuances in the meaning of a gesture (e.g. the difference between crossing your arms when you are tired and when you are feeling defensive).

Photo of one of the SUPERGESTURES workshops where young people design body gestures that can be detected by the wearable on their arm, and represent their story and vision of Manchester

It is worthwhile to remember that while this R&D project explores the extent to which machine learning can help create a discursive interaction between the wearer and the machine, there are limitations to the capability of a machine and it is important for us as designers and developers to help define a set of parameters that ensure that the machine can understand the nuances in order to create interaction that is meaningful for people of all backgrounds and colours.

While machine learning in other familiar fields such as camera vision does have some ways of recognising “nothing” (e.g. background subtraction), the concept of recognising “nothing” gestures (e.g. should walking and standing up be considered ‘nothing’?) in wearable or body-based work is fairly new and has not been widely explored. A purely technological approach might say that ‘nothing’ simply requires adequate error detection or filtering. But I would argue that the complexity of deciding what constitutes ‘nothing’, and the widely varying ideas of what kinds of movement should be ‘ignored’ during training, are absolutely vital to consider if we want to develop a wearable device that is trained for, and useful to, unique and different people. As this is work in progress, I will be experimenting more with this to gather more insights.
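For reference, the ‘purely technological’ filtering view can be sketched as a simple stillness check on accelerometer data: a window counts as ‘nothing’ when the acceleration magnitude barely changes. This is a deliberately naive illustration, not the project's method; the threshold and sample data are invented.

```python
import math
import statistics

def magnitude(sample):
    """Length of one (x, y, z) acceleration sample."""
    ax, ay, az = sample
    return math.sqrt(ax * ax + ay * ay + az * az)

def looks_like_nothing(window, max_stdev=0.05):
    """Naive filter: treat a window as 'nothing' if its magnitude barely varies."""
    mags = [magnitude(s) for s in window]
    return statistics.pstdev(mags) < max_stdev

# Made-up data: an arm at rest (gravity only) and an arm swinging on one axis.
still = [(0.0, 0.0, 1.0)] * 20
waving = [(math.sin(i / 2), 0.0, 1.0) for i in range(20)]

print(looks_like_nothing(still))
print(looks_like_nothing(waving))
```

A filter like this would flag walking as ‘something’ and could miss a very slow arm lift, which illustrates why a single threshold does not settle what ‘nothing’ means for different people.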

A blogpost by Ling Tan

#wearable technology#embodiedcompanionship#machinelearning#gesturerecognition#arduino#humanmachine#humandata#embodiedexperience#blog

Text

The global #GestureRecognition market value is expected to surpass $4,848 million by 2023, growing at an estimated CAGR of more than 34.9% from 2018 to 2023. https://lnkd.in/gpTgsUb Key players: #Microsoft #SamsungElectronics #Intel #SonyCorporation #TexasInstruments #SoftKinetic #marketresearch #marketanalysis #marketintelligence #marketforecast #IndustryARC

Photo

https://www.variantmarketresearch.com/report-categories/semiconductor-electronics/gesture-recognition-market The Global Gesture Recognition Market Report, published by Variant Market Research, forecasts that the global market will reach $43.6 billion by 2024, growing at a CAGR of 16.2% from 2016 to 2024. Gesture recognition is a technology through which users can control their devices using body movements and gestures. Read More: #GesturerecognitionMarket #GestureRecognition #Gesture #variantmarketresearch
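As a quick way to read a forecast like this, the compound annual growth rate (CAGR) formula can be run backwards to see what starting value the figures imply. The sketch below assumes annual compounding over the eight years from 2016 to 2024; the 2016 base it prints is derived from the quoted numbers, not stated in the report.

```python
def implied_start_value(end_value, cagr, years):
    """Back out the starting value implied by an end value and a CAGR."""
    return end_value / (1.0 + cagr) ** years

def compound(start_value, cagr, years):
    """Project a value forward at a constant annual growth rate."""
    return start_value * (1.0 + cagr) ** years

# Figures quoted in the post: $43.6 billion by 2024 at a 16.2% CAGR from 2016.
base_2016 = implied_start_value(43.6, 0.162, 2024 - 2016)
print(f"Implied 2016 market size: ${base_2016:.1f} billion")
```

On these assumptions the implied 2016 market is roughly $13 billion, so the forecast amounts to a bit more than a threefold increase over the period.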

Text

Qualcomm's Next Mid-Range Android Chip Can Support Up to 16GB of RAM

Qualcomm has announced its second-generation mid-range mobile chip. The Snapdragon 7+ Gen 2 processor is built on the 4nm process. It’ll be available on Android smartphones starting this month. Read more…

#adreno#embeddedsystems#gesturerecognition#Logitech#mobilecomputers#mobilephones#Qualcomm#qualcommsnapdragon#razer#Realme#redmi#smartphones#technology2cinternet#touchscreenmobilephones#Xiaomi

Video

(via https://www.youtube.com/watch?v=0QNiZfSsPc0)

Project Soli is developing a new interaction sensor using radar technology. The sensor can track sub-millimeter motions at high speed and accuracy. It fits onto a chip, can be produced at scale and built into small devices and everyday objects.

Link

Gesture recognition technology allows users to read, recognise, process and analyse individual human gestures. In terms of data acquisition, the gesture recognition.

Video

$1 device that uses existing wireless signals to deliver "always on" gesture recognition.

Text

BlackBerry granted gesture recognition patent for touch-free image manipulation - published on: Technology Companies List

If BlackBerry lives to see 2014 (and beyond), it could end up delighting smartphone users with some neat gesture recognition tech. In a recently surfaced patent filing, the company formerly known as RIM outlines a method for selecting onscreen images using hand or finger movements above a display. By synthesizing a combo of images — one taken with IR, the other without — the software would be able to determine the intended area of…

Link:

BlackBerry granted gesture recognition patent for touch-free image manipulation

You can see the Listing Profile on: http://technologycompanieslist.com/blackberry-granted-gesture-recognition-patent-for-touch-free-image-manipulation/
