#hadoop data types

Short-Term vs. Long-Term Data Analytics Course in Delhi: Which One to Choose?

In today’s digital world, data is everywhere. From small businesses to large organizations, everyone uses data to make better decisions. Data analytics helps in understanding and using this data effectively. If you are interested in learning data analytics, you might wonder whether to choose a short-term or a long-term course. Both options have their benefits, and your choice depends on your goals, time, and career plans.

At Uncodemy, we offer both short-term and long-term data analytics courses in Delhi. This article will help you understand the key differences between these courses and guide you to make the right choice.

What is Data Analytics?

Data analytics is the process of examining large sets of data to find patterns, insights, and trends. It involves collecting, cleaning, analyzing, and interpreting data. Companies use data analytics to improve their services, understand customer behavior, and increase efficiency.

There are four main types of data analytics:

Descriptive Analytics: Understanding what has happened in the past.

Diagnostic Analytics: Identifying why something happened.

Predictive Analytics: Forecasting future outcomes.

Prescriptive Analytics: Suggesting actions to achieve desired outcomes.
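
To make the four types concrete, here is a small sketch that applies each one to the same tiny dataset. The monthly sales figures and the set of promotion months are made-up illustrative values, and the straight-line trend is just one simple way to forecast, not a method any particular course prescribes.

```python
from statistics import mean

# Hypothetical monthly sales figures (illustrative data only)
sales = {"Jan": 120, "Feb": 135, "Mar": 90, "Apr": 150}
promo_months = {"Feb", "Apr"}  # assumption: months in which a promotion ran

# Descriptive: what happened? Summarize the past.
average = mean(sales.values())

# Diagnostic: why did it happen? Compare months with and without a promotion.
with_promo = mean(v for m, v in sales.items() if m in promo_months)
without_promo = mean(v for m, v in sales.items() if m not in promo_months)

# Predictive: fit a least-squares straight-line trend and forecast month 5.
xs = list(range(1, len(sales) + 1))
ys = list(sales.values())
x_bar, y_bar = mean(xs), mean(ys)
slope = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / sum((x - x_bar) ** 2 for x in xs)
intercept = y_bar - slope * x_bar
forecast = intercept + slope * 5

# Prescriptive: recommend an action based on the diagnostic comparison.
action = "run promotion" if with_promo > without_promo else "skip promotion"
```

Here the average is 123.75, promotion months average 142.5 versus 105 without, the trend forecasts 135 for month 5, and the suggested action is "run promotion".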

Short-Term Data Analytics Course

A short-term data analytics course is a fast-paced program designed to teach you essential skills quickly. These courses usually last from a few weeks to a few months.

Benefits of a Short-Term Data Analytics Course

Quick Learning: You can learn the basics of data analytics in a short time.

Cost-Effective: Short-term courses are usually more affordable.

Skill Upgrade: Ideal for professionals looking to add new skills without a long commitment.

Job-Ready: Get practical knowledge and start working in less time.

Who Should Choose a Short-Term Course?

Working Professionals: If you want to upskill without leaving your job.

Students: If you want to add data analytics to your resume quickly.

Career Switchers: If you want to explore data analytics before committing to a long-term course.

What You Will Learn in a Short-Term Course

Introduction to Data Analytics

Basic Tools (Excel, SQL, Python)

Data Visualization (Tableau, Power BI)

Basic Statistics and Data Interpretation

Hands-on Projects

Long-Term Data Analytics Course

A long-term data analytics course is a comprehensive program that provides in-depth knowledge. These courses usually last from six months to two years.

Benefits of a Long-Term Data Analytics Course

Deep Knowledge: Covers advanced topics and techniques in detail.

Better Job Opportunities: Preferred by employers for specialized roles.

Practical Experience: Includes internships and real-world projects.

Certifications: You may earn industry-recognized certifications.

Who Should Choose a Long-Term Course?

Beginners: If you want to start a career in data analytics from scratch.

Career Changers: If you want to switch to a data analytics career.

Serious Learners: If you want advanced knowledge and long-term career growth.

What You Will Learn in a Long-Term Course

Advanced Data Analytics Techniques

Machine Learning and AI

Big Data Tools (Hadoop, Spark)

Data Ethics and Governance

Capstone Projects and Internships

Key Differences Between Short-Term and Long-Term Courses

| Feature | Short-Term Course | Long-Term Course |
|---|---|---|
| Duration | Weeks to a few months | Six months to two years |
| Depth of Knowledge | Basic and intermediate concepts | Advanced and specialized concepts |
| Cost | More affordable | Higher investment |
| Learning Style | Fast-paced | Detailed and comprehensive |
| Career Impact | Quick entry-level jobs | Better career growth and high-level jobs |
| Certification | Basic certificate | Industry-recognized certifications |
| Practical Projects | Limited | Extensive, real-world projects |

How to Choose the Right Course for You

When deciding between a short-term and long-term data analytics course at Uncodemy, consider these factors:

Your Career Goals

If you want a quick job or basic knowledge, choose a short-term course.

If you want a long-term career in data analytics, choose a long-term course.

Time Commitment

Choose a short-term course if you have limited time.

Choose a long-term course if you can dedicate several months to learning.

Budget

Short-term courses are usually more affordable.

Long-term courses require a bigger investment but can offer better long-term returns.

Current Knowledge

If you already know some basics, a short-term course will enhance your skills.

If you are a beginner, a long-term course will provide a solid foundation.

Job Market

Short-term courses can help you get entry-level jobs quickly.

Long-term courses open doors to advanced and specialized roles.

Why Choose Uncodemy for Data Analytics Courses in Delhi?

At Uncodemy, we provide top-quality training in data analytics. Our courses are designed by industry experts to meet the latest market demands. Here’s why you should choose us:

Experienced Trainers: Learn from professionals with real-world experience.

Practical Learning: Hands-on projects and case studies.

Flexible Schedule: Choose classes that fit your timing.

Placement Assistance: We help you find the right job after course completion.

Certification: Receive a recognized certificate to boost your career.

Final Thoughts

Choosing between a short-term and long-term data analytics course depends on your goals, time, and budget. If you want quick skills and job readiness, a short-term course is ideal. If you seek in-depth knowledge and long-term career growth, a long-term course is the better choice.

At Uncodemy, we offer both options to meet your needs. Start your journey in data analytics today and open the door to exciting career opportunities. Visit our website or contact us to learn more about our Data Analytics course in Delhi.

Your future in data analytics starts here with Uncodemy!

Wielding Big Data Using PySpark

Introduction to PySpark

PySpark is the Python API for Apache Spark, a distributed computing framework designed to process large-scale data efficiently. It enables parallel data processing across multiple nodes, making it a powerful tool for handling massive datasets.

Why Use PySpark for Big Data?

Scalability: Works across clusters to process petabytes of data.

Speed: Uses in-memory computation to enhance performance.

Flexibility: Supports various data formats and integrates with other big data tools.

Ease of Use: Provides SQL-like querying and DataFrame operations for intuitive data handling.

Setting Up PySpark

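To use PySpark, install it (for example, with pip install pyspark; a Java runtime is also required) and create a SparkSession, the entry point for DataFrame and SQL functionality. Once initialized, the session lets you read, process, and analyze large datasets.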

Processing Data with PySpark

PySpark can handle different types of data sources such as CSV, JSON, Parquet, and databases. Once data is loaded, users can explore it by checking the schema, summary statistics, and unique values.

Common Data Processing Tasks

Viewing and summarizing datasets.

Handling missing values by dropping or replacing them.

Removing duplicate records.

Filtering, grouping, and sorting data for meaningful insights.
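
The tasks above can be sketched in plain Python so the logic runs without a Spark installation. The records are hypothetical, and each step's comment names the PySpark DataFrame method that plays the same role (fillna, dropna, dropDuplicates, filter, groupBy, orderBy); in a real job those methods would run distributed across the cluster instead of over a Python list.

```python
from collections import defaultdict

# Hypothetical order records (illustrative only); None marks a missing value.
rows = [
    {"id": 1, "city": "Delhi", "amount": 250.0},
    {"id": 2, "city": "Mumbai", "amount": None},
    {"id": 1, "city": "Delhi", "amount": 250.0},  # exact duplicate of the first row
    {"id": 3, "city": None, "amount": 80.0},
    {"id": 4, "city": "Delhi", "amount": 140.0},
    {"id": 5, "city": "Mumbai", "amount": 120.0},
]

# 1. Replace missing amounts with 0.0   (PySpark: df.fillna({"amount": 0.0}))
filled = [{**r, "amount": 0.0 if r["amount"] is None else r["amount"]} for r in rows]

# 2. Drop rows that still contain a missing value   (PySpark: df.dropna())
complete = [r for r in filled if all(v is not None for v in r.values())]

# 3. Keep one copy of each exact duplicate   (PySpark: df.dropDuplicates())
seen, deduped = set(), []
for r in complete:
    key = tuple(sorted(r.items()))
    if key not in seen:
        seen.add(key)
        deduped.append(r)

# 4. Filter, group, and sort
#    (PySpark: df.filter(df.amount > 50).groupBy("city").sum("amount")
#              .orderBy("sum(amount)", ascending=False))
totals = defaultdict(float)
for r in deduped:
    if r["amount"] > 50:
        totals[r["city"]] += r["amount"]
ranked = sorted(totals.items(), key=lambda kv: kv[1], reverse=True)
```

After cleaning, four records remain, and ranked is [("Delhi", 390.0), ("Mumbai", 120.0)].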

Transforming Data with PySpark

Data can be transformed using SQL-like queries or DataFrame operations. Users can:

Select specific columns for analysis.

Apply conditions to filter out unwanted records.

Group data to find patterns and trends.

Add new calculated columns based on existing data.
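
The same transformation can be written in either style. As a minimal, runnable sketch, the example below expresses one transformation both as DataFrame-like steps over Python dicts and as a SQL query. The sales rows are hypothetical, and Python's built-in sqlite3 stands in for spark.sql(...) only to show the shape of the query; Spark SQL would run the equivalent statement over a distributed table.

```python
import sqlite3

# Hypothetical sales rows: (region, units, unit_price)
sales = [("north", 10, 2.5), ("south", 4, 10.0), ("north", 3, 20.0), ("east", 1, 5.0)]

# DataFrame style: select columns and add a calculated one (like select + withColumn),
# filter unwanted records (like filter), then aggregate (like groupBy().sum()).
with_revenue = [{"region": r, "units": u, "revenue": u * p} for r, u, p in sales]
big_orders = [row for row in with_revenue if row["units"] >= 3]
by_region = {}
for row in big_orders:
    by_region[row["region"]] = by_region.get(row["region"], 0.0) + row["revenue"]

# SQL style: the same transformation as a single query.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, units INTEGER, unit_price REAL)")
conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", sales)
sql_result = dict(conn.execute(
    "SELECT region, SUM(units * unit_price) AS revenue "
    "FROM sales WHERE units >= 3 GROUP BY region"
).fetchall())
conn.close()
```

Both approaches yield {"north": 85.0, "south": 40.0}; choosing between them is mostly a matter of readability.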

Optimizing Performance in PySpark

When working with big data, optimizing performance is crucial. Some strategies include:

Partitioning: Distributing data across multiple partitions for parallel processing.

Caching: Storing intermediate results in memory to speed up repeated computations.

Broadcast Joins: Optimizing joins by broadcasting smaller datasets to all nodes.
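
The partitioning and broadcast-join ideas can be illustrated in plain Python. In PySpark you would typically write df.repartition(n, "customer_id"), df.cache(), and df.join(broadcast(small_df), "customer_id") with broadcast imported from pyspark.sql.functions. The sketch below only models what happens conceptually: rows are hash-partitioned by key, and every partition receives a full copy of the small table, so the large table never needs to be shuffled. The tables and the partition count are hypothetical, and Python's hash() stands in for Spark's own hash function.

```python
from collections import defaultdict

NUM_PARTITIONS = 3  # assumption: a tiny partition count for illustration

# Large "fact" table of (customer_id, amount) and a small "dimension" table
orders = [(101, 20.0), (102, 35.0), (101, 15.0), (103, 50.0), (104, 5.0)]
customers = {101: "Asha", 102: "Ravi", 103: "Meera"}  # small enough to broadcast

def hash_partition(rows, n):
    """Assign each row to a partition by hashing its key, as repartition(n, key) does."""
    parts = defaultdict(list)
    for key, value in rows:
        parts[hash(key) % n].append((key, value))
    return [parts[i] for i in range(n)]

def broadcast_join(partition, lookup):
    """Inner-join one partition against a local copy of the broadcast small table."""
    return [(key, lookup[key], amount) for key, amount in partition if key in lookup]

partitions = hash_partition(orders, NUM_PARTITIONS)
# Each partition is joined independently, as executors would do in parallel.
joined = [row for part in partitions for row in broadcast_join(part, customers)]
```

Order 104 has no matching customer, so the inner join drops it. Each partition can be processed independently, which is what makes this approach parallel.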

Machine Learning with PySpark

PySpark includes MLlib, a machine learning library for big data. It allows users to prepare data, apply machine learning models, and generate predictions. This is useful for tasks such as regression, classification, clustering, and recommendation systems.
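
As an illustration of the "prepare, fit, predict" cycle for clustering, here is Lloyd's k-means in plain Python. MLlib's own estimator is pyspark.ml.clustering.KMeans, which fits on a DataFrame with a features vector column and chooses its own initial centers. This sketch uses hand-picked starting centroids and hypothetical 2-D points only to show what the algorithm does.

```python
import math

def kmeans(points, centroids, iterations=10):
    """Lloyd's k-means on 2-D points, starting from caller-supplied centroids."""
    clusters = []
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to its cluster mean (keep it if the cluster is empty)
        centroids = [
            (sum(x for x, _ in c) / len(c), sum(y for _, y in c) / len(c)) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
    return centroids, clusters

def predict(point, centroids):
    """Index of the centroid closest to a new point."""
    return min(range(len(centroids)), key=lambda i: math.dist(point, centroids[i]))

# Hypothetical feature vectors forming two obvious groups
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (9.0, 8.5), (8.5, 9.0)]
centroids, clusters = kmeans(points, centroids=[(0.0, 0.0), (10.0, 10.0)])
```

The second centroid settles at (8.5, 8.5), and predict((2.0, 2.0), centroids) returns 0.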

Running PySpark on a Cluster

PySpark can run on a single machine (local mode) or be deployed on a cluster managed by a cluster manager such as Hadoop YARN. This enables large-scale data processing with improved efficiency.

Conclusion

PySpark provides a powerful platform for handling big data efficiently. With its distributed computing capabilities, it allows users to clean, transform, and analyze large datasets while optimizing performance for scalability.

For free programming language tutorials, visit https://www.tpointtech.com/

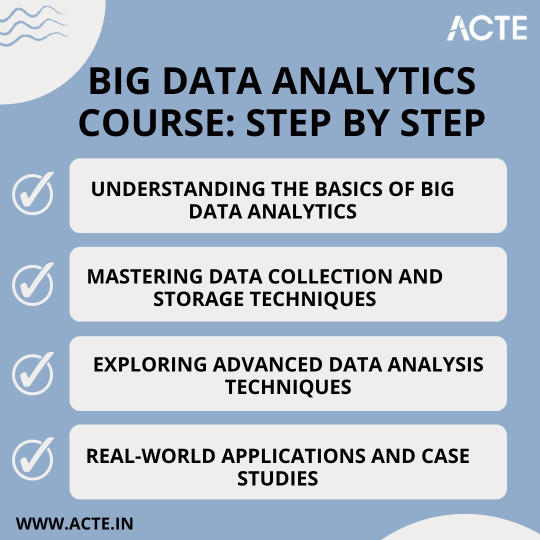

From Beginner to Pro: A Game-Changing Big Data Analytics Course

Are you fascinated by the vast potential of big data analytics? Do you want to unlock its power and become a proficient professional in this rapidly evolving field? Look no further! In this article, we will take you on a journey to traverse the path from being a beginner to becoming a pro in big data analytics. We will guide you through a game-changing course designed to provide you with the necessary information and education to master the art of analyzing and deriving valuable insights from large and complex data sets.

Step 1: Understanding the Basics of Big Data Analytics

Before diving into the intricacies of big data analytics, it is crucial to grasp its fundamental concepts and methodologies. A solid foundation in the basics will empower you to navigate through the complexities of this domain with confidence. In this initial phase of the course, you will learn:

The definition and characteristics of big data

The importance and impact of big data analytics in various industries

The key components and architecture of a big data analytics system

The different types of data and their relevance in analytics

The ethical considerations and challenges associated with big data analytics

By comprehending these key concepts, you will be equipped with the essential knowledge needed to kickstart your journey towards proficiency.

Step 2: Mastering Data Collection and Storage Techniques

Once you have a firm grasp on the basics, it's time to dive deeper and explore the art of collecting and storing big data effectively. In this phase of the course, you will delve into:

Data acquisition strategies, including batch processing and real-time streaming

Techniques for data cleansing, preprocessing, and transformation to ensure data quality and consistency

Storage technologies, such as Hadoop Distributed File System (HDFS) and NoSQL databases, and their suitability for different types of data

Understanding data governance, privacy, and security measures to handle sensitive data in compliance with regulations

By honing these skills, you will be well-prepared to handle large and diverse data sets efficiently, which is a crucial step towards becoming a pro in big data analytics.
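
The difference between batch processing and real-time streaming, together with a basic cleansing step, can be sketched in a few lines of Python. The sensor readings are hypothetical, and skipping missing values is only one of many possible cleansing rules. The batch function needs the whole dataset in memory, while the streaming function updates its answer as each record arrives and keeps only a count and a running mean.

```python
from typing import Iterable, Iterator, Optional

# Hypothetical sensor readings; None represents a dropped or corrupt record.
raw_readings: list[Optional[float]] = [10.0, None, 20.0, 30.0, None, 40.0]

def cleanse(records: Iterable[Optional[float]]) -> Iterator[float]:
    """Basic data cleansing: skip missing records."""
    return (r for r in records if r is not None)

def batch_average(readings: list[float]) -> float:
    """Batch processing: collect the full dataset first, then process it once."""
    return sum(readings) / len(readings)

def streaming_average(stream: Iterable[float]) -> Iterator[float]:
    """Streaming: update the result as each record arrives, using constant state."""
    count, running_mean = 0, 0.0
    for value in stream:
        count += 1
        running_mean += (value - running_mean) / count  # incremental mean update
        yield running_mean

batch_result = batch_average(list(cleanse(raw_readings)))
stream_results = list(streaming_average(cleanse(raw_readings)))
```

Both approaches end at 25.0, but the streaming version also produces intermediate answers (10.0, 15.0, 20.0) while the data is still arriving.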

Step 3: Exploring Advanced Data Analysis Techniques

Now that you have developed a solid foundation and acquired the necessary skills for data collection and storage, it's time to unleash the power of advanced data analysis techniques. In this phase of the course, you will dive into:

Statistical analysis methods, including hypothesis testing, regression analysis, and cluster analysis, to uncover patterns and relationships within data

Machine learning algorithms, such as decision trees, random forests, and neural networks, for predictive modeling and pattern recognition

Natural Language Processing (NLP) techniques to analyze and derive insights from unstructured text data

Data visualization techniques, ranging from basic charts to interactive dashboards, to effectively communicate data-driven insights

By mastering these advanced techniques, you will be able to extract meaningful insights and actionable recommendations from complex data sets, transforming you into a true big data analytics professional.
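
Hypothesis testing, the first technique in the list above, is compact enough to show directly. The sketch below computes Welch's two-sample t statistic, which does not assume equal variances in the two groups. The page-view numbers are hypothetical, and a real analysis would usually turn t into a p-value with a statistics library rather than comparing it to a table value by hand.

```python
import math
from statistics import mean, variance

def welch_t_test(a: list[float], b: list[float]) -> tuple[float, float]:
    """Welch's two-sample t statistic and Welch-Satterthwaite degrees of freedom."""
    se_a = variance(a) / len(a)  # squared standard error of mean(a)
    se_b = variance(b) / len(b)  # squared standard error of mean(b)
    t = (mean(a) - mean(b)) / math.sqrt(se_a + se_b)
    df = (se_a + se_b) ** 2 / (se_a ** 2 / (len(a) - 1) + se_b ** 2 / (len(b) - 1))
    return t, df

# Hypothetical daily page views (thousands) after and before a site redesign
after = [12, 14, 11, 13, 15]
before = [9, 10, 8, 11, 12]
t_stat, dof = welch_t_test(after, before)  # t = 3.0, df = 8.0
```

With 8 degrees of freedom, the two-sided 5% critical value is about 2.306, so t = 3.0 would lead to rejecting the null hypothesis of equal means at that level.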

Step 4: Real-world Applications and Case Studies

To solidify your learning and gain practical experience, it is crucial to apply your newfound knowledge in real-world scenarios. In this final phase of the course, you will:

Explore various industry-specific case studies, showcasing how big data analytics has revolutionized sectors like healthcare, finance, marketing, and cybersecurity

Work on hands-on projects, where you will solve data-driven problems by applying the techniques and methodologies learned throughout the course

Collaborate with peers and industry experts through interactive discussions and forums to exchange insights and best practices

Stay updated with the latest trends and advancements in big data analytics, ensuring your knowledge remains up-to-date in this rapidly evolving field

By immersing yourself in practical applications and real-world challenges, you will not only gain valuable experience but also hone your problem-solving skills, making you a well-rounded big data analytics professional.

Through a comprehensive, game-changing course at ACTE Institute, you can gain the knowledge and training needed to navigate the complexities of this field. By understanding the basics, mastering data collection and storage techniques, exploring advanced data analysis methods, and applying your knowledge in real-world scenarios, you can become a proficient professional capable of extracting valuable insights from big data.

Remember, the world of big data analytics is ever-evolving, with new challenges and opportunities emerging each day. Stay curious, seek continuous learning, and embrace the exciting journey ahead as you unlock the limitless potential of big data analytics.

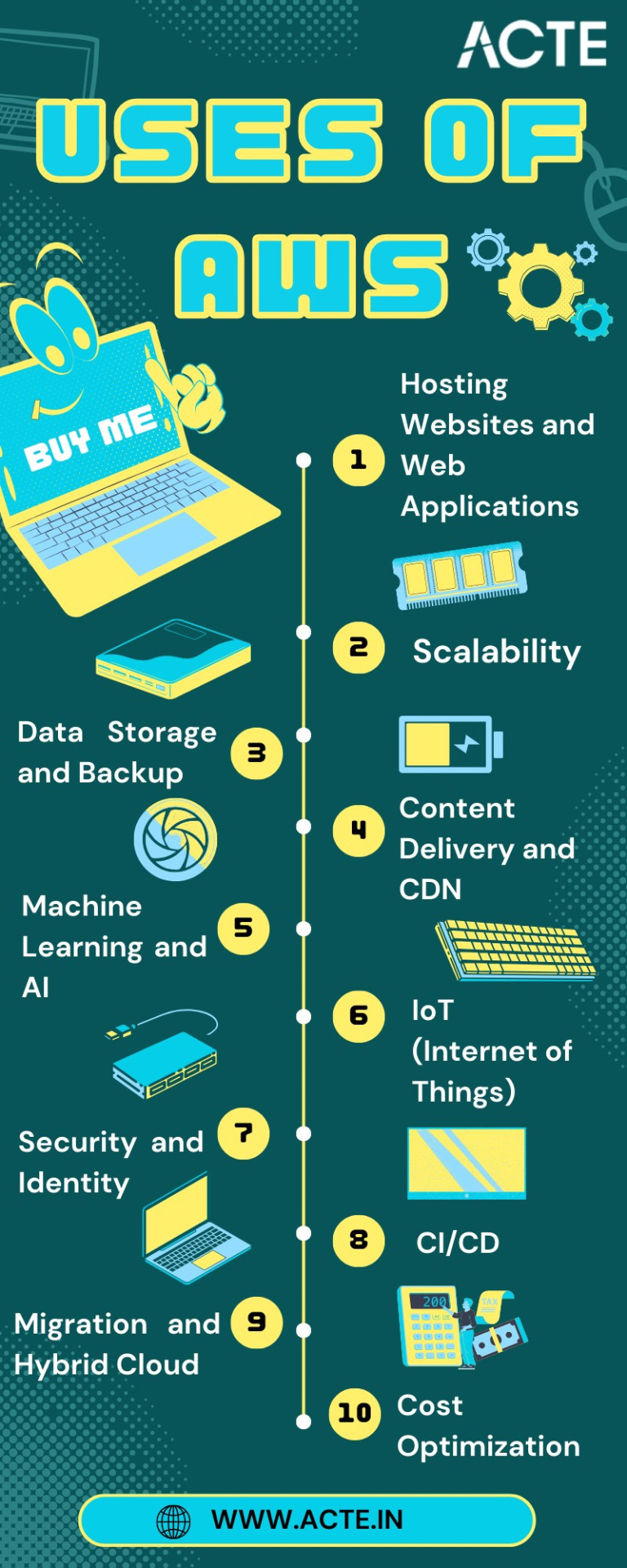

Your Journey Through the AWS Universe: From Amateur to Expert

In the ever-evolving digital landscape, cloud computing has emerged as a transformative force, reshaping the way businesses and individuals harness technology. At the forefront of this revolution stands Amazon Web Services (AWS), a comprehensive cloud platform offered by Amazon. AWS is a dynamic ecosystem that provides an extensive range of services, designed to meet the diverse needs of today's fast-paced world.

This guide is your key to unlocking the boundless potential of AWS. We'll embark on a journey through the AWS universe, exploring its multifaceted applications and gaining insights into why it has become an indispensable tool for organizations worldwide. Whether you're a seasoned IT professional or a newcomer to cloud computing, this comprehensive resource will illuminate the path to mastering AWS and leveraging its capabilities for innovation and growth. Join us as we demystify AWS and discover how it is reshaping the way we work, innovate, and succeed in the digital age.

Navigating the AWS Universe:

Hosting Websites and Web Applications: AWS provides a secure and scalable place for hosting websites and web applications. Services like Amazon EC2 and Amazon S3 empower businesses to deploy and manage their online presence with unwavering reliability and high performance.

Scalability: At the core of AWS lies its remarkable scalability. Organizations can seamlessly adjust their infrastructure according to the ebb and flow of workloads, ensuring optimal resource utilization in today's ever-changing business environment.

Data Storage and Backup: AWS offers a suite of robust data storage solutions, including the highly acclaimed Amazon S3 and Amazon EBS. These services cater to a diverse range of data types and are designed for high durability and availability.

Databases: AWS presents a panoply of database services such as Amazon RDS, DynamoDB, and Redshift, each tailored to meet specific data management requirements. Whether it's a relational database, a NoSQL database, or data warehousing, AWS offers a solution.

Content Delivery and CDN: Amazon CloudFront, AWS's content delivery network (CDN) service, distributes content globally from edge locations close to users, reducing latency and speeding up data transfer. This helps deliver a consistent user experience regardless of geographical location.

Machine Learning and AI: AWS boasts a rich repertoire of machine learning and AI services. Amazon SageMaker simplifies the development and deployment of machine learning models, while pre-built AI services cater to natural language processing, image analysis, and more.

Analytics: In the heart of AWS's offerings lies a robust analytics and business intelligence framework. Services like Amazon EMR enable the processing of vast datasets using popular frameworks like Hadoop and Spark, paving the way for data-driven decision-making.

IoT (Internet of Things): AWS IoT services provide the infrastructure for the seamless management and data processing of IoT devices, unlocking possibilities across industries.

Security and Identity: With an unwavering commitment to data security, AWS offers robust security features and identity management through AWS Identity and Access Management (IAM). Users wield precise control over access rights, ensuring data integrity.

DevOps and CI/CD: AWS simplifies DevOps practices with services like AWS CodePipeline and AWS CodeDeploy, automating software deployment pipelines and enhancing collaboration among development and operations teams.

Content Creation and Streaming: AWS Elemental Media Services facilitate the creation, packaging, and efficient global delivery of video content, empowering content creators to reach a global audience seamlessly.

Migration and Hybrid Cloud: For organizations seeking to migrate to the cloud or establish hybrid cloud environments, AWS provides a suite of tools and services to streamline the process, ensuring a smooth transition.

Cost Optimization: AWS's commitment to cost management and optimization is evident through tools like AWS Cost Explorer and AWS Trusted Advisor, which empower users to monitor and control their cloud spending effectively.
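
To show what the scalability point above means in practice, here is a generic proportional scaling rule of the kind that metric-based autoscaling follows. This is an illustrative assumption, not AWS's exact algorithm: the function name, the bounds, and the CPU figures are hypothetical. If the fleet's average load is above the target, capacity grows proportionally. If the load is below the target, capacity shrinks. In both cases the result is clamped to a minimum and maximum group size.

```python
import math

def desired_capacity(current: int, metric: float, target: float,
                     min_size: int = 1, max_size: int = 20) -> int:
    """Hypothetical proportional scaling rule (not AWS's exact algorithm).

    If `current` instances report an average `metric` (e.g. CPU %) and we want
    that average to sit at `target`, resize proportionally, then clamp the
    result to the group's minimum and maximum size.
    """
    wanted = math.ceil(current * metric / target)
    return max(min_size, min(max_size, wanted))

print(desired_capacity(4, 90.0, 60.0))    # busy: scale out from 4 to 6
print(desired_capacity(4, 30.0, 60.0))    # quiet: scale in from 4 to 2
print(desired_capacity(10, 300.0, 50.0))  # spike: capped at max_size (20)
```

Rounding up with ceil errs on the side of slightly more capacity, which is a common choice when under-provisioning is the costlier mistake.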

In this comprehensive journey through the expansive landscape of Amazon Web Services (AWS), we've embarked on a quest to unlock the power and potential of cloud computing. AWS, standing as a colossus in the realm of cloud platforms, has emerged as a transformative force that transcends traditional boundaries.

As we bring this odyssey to a close, one thing is abundantly clear: AWS is not merely a collection of services and technologies; it's a catalyst for innovation, a cornerstone of scalability, and a conduit for efficiency. It has revolutionized the way businesses operate, empowering them to scale dynamically, innovate relentlessly, and navigate the complexities of the digital era.

In a world where data reigns supreme and agility is a competitive advantage, AWS has become the bedrock upon which countless industries build their success stories. Its versatility, reliability, and ever-expanding suite of services continue to shape the future of technology and business.

Yet, AWS is not a solitary journey; it's a collaborative endeavor. Institutions like ACTE Technologies play an instrumental role in helping individuals master AWS. Through comprehensive training and education, learners are not merely equipped with knowledge; they are forged into skilled professionals ready to navigate the AWS universe with confidence.

As we contemplate the future, one thing is certain: AWS is not just a destination; it's an ongoing journey. It's a journey toward greater innovation, deeper insights, and boundless possibilities. AWS has not only transformed the way we work; it's redefining the very essence of what's possible in the digital age. So, whether you're a seasoned cloud expert or a newcomer to the cloud, remember that AWS is not just a tool; it's a gateway to a future where technology knows no bounds, and success knows no limits.

Implementing AI: Step-by-step integration guide for hospitals: Specifications Breakdown, FAQs, and More

The healthcare industry is experiencing a transformative shift as artificial intelligence (AI) technologies become increasingly sophisticated and accessible. For hospitals looking to modernize their operations and improve patient outcomes, implementing AI systems represents both an unprecedented opportunity and a complex challenge that requires careful planning and execution.

This comprehensive guide provides healthcare administrators, IT directors, and medical professionals with the essential knowledge needed to successfully integrate AI technologies into hospital environments. From understanding technical specifications to navigating regulatory requirements, we’ll explore every aspect of AI implementation in healthcare settings.

Understanding AI in Healthcare: Core Applications and Benefits

Artificial intelligence in healthcare encompasses a broad range of technologies designed to augment human capabilities, streamline operations, and enhance patient care. Modern AI systems can analyze medical imaging with remarkable precision, predict patient deterioration before clinical symptoms appear, optimize staffing schedules, and automate routine administrative tasks that traditionally consume valuable staff time.

The most impactful AI applications in hospital settings include diagnostic imaging analysis, where machine learning algorithms can detect abnormalities in X-rays, CT scans, and MRIs with accuracy that, for some specific tasks, matches or exceeds that of human radiologists. Predictive analytics systems monitor patient vital signs and electronic health records to identify early warning signs of sepsis, cardiac events, or other critical conditions. Natural language processing tools extract meaningful insights from unstructured clinical notes, while robotic process automation handles insurance verification, appointment scheduling, and billing processes.

Technical Specifications for Hospital AI Implementation

Infrastructure Requirements

Successful AI implementation demands robust technological infrastructure capable of handling intensive computational workloads. Hospital networks must support high-bandwidth data transfer, with minimum speeds of 1 Gbps for imaging applications and 100 Mbps for general clinical AI tools. Storage systems require scalable architecture with at least 50 TB initial capacity for medical imaging AI, expandable to petabyte-scale as usage grows.
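
A quick calculation shows why the bandwidth figures above matter for imaging. The 2 GB study size is a hypothetical round number, and the calculation assumes the full line rate is available. Real transfers are slower because of protocol overhead and shared links, which the optional efficiency factor is meant to approximate.

```python
def transfer_seconds(size_bytes: float, link_bits_per_second: float,
                     efficiency: float = 1.0) -> float:
    """Idealized transfer time. An `efficiency` below 1.0 can approximate protocol
    overhead and link sharing; the default assumes the full line rate."""
    return size_bytes * 8 / (link_bits_per_second * efficiency)

STUDY_BYTES = 2e9       # hypothetical 2 GB imaging study
GIGABIT = 1e9           # 1 Gbps link
HUNDRED_MEGABIT = 1e8   # 100 Mbps link

fast = transfer_seconds(STUDY_BYTES, GIGABIT)          # 16 seconds
slow = transfer_seconds(STUDY_BYTES, HUNDRED_MEGABIT)  # 160 seconds
studies_in_50_tb = 50e12 / STUDY_BYTES                 # 25,000 studies
```

Under these assumptions, a study that moves in about 16 seconds on a 1 Gbps link takes nearly three minutes at 100 Mbps, and 50 TB holds roughly 25,000 such studies before any growth.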

Server specifications vary by application type, but most AI systems require dedicated GPU resources for machine learning processing. NVIDIA Tesla V100 or A100 cards are common choices for medical imaging analysis, while CPU-intensive applications benefit from Intel Xeon or AMD EPYC processors with at least 32 cores and 128 GB of RAM per server node.

Data Integration and Interoperability

AI systems must seamlessly integrate with existing Electronic Health Record (EHR) platforms, Picture Archiving and Communication Systems (PACS), and Laboratory Information Systems (LIS). HL7 FHIR (Fast Healthcare Interoperability Resources) compliance ensures standardized data exchange between systems, while DICOM (Digital Imaging and Communications in Medicine) standards govern medical imaging data handling.
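
For a sense of what FHIR-based exchange looks like in code, the sketch below parses a minimal FHIR R4 Patient resource. The fields used (resourceType, id, name with family and given, gender, birthDate) are standard Patient elements, but the patient is fictional, and summarize_patient is a hypothetical helper. A real integration would fetch such resources from the EHR's FHIR REST endpoint (for example, GET [base]/Patient/[id]) and handle many more fields and edge cases.

```python
import json

# A minimal FHIR R4 Patient resource for a fictional patient
patient_json = """
{
  "resourceType": "Patient",
  "id": "example-001",
  "name": [{"use": "official", "family": "Sharma", "given": ["Priya"]}],
  "gender": "female",
  "birthDate": "1985-04-12"
}
"""

def summarize_patient(raw: str) -> dict:
    """Hypothetical helper: extract the fields an AI tool might need from a Patient resource."""
    resource = json.loads(raw)
    if resource.get("resourceType") != "Patient":
        raise ValueError("expected a FHIR Patient resource")
    name = resource["name"][0]
    return {
        "id": resource["id"],
        "display_name": " ".join(name.get("given", []) + [name["family"]]),
        "birth_date": resource["birthDate"],
    }

summary = summarize_patient(patient_json)
```

Checking resourceType before reading any fields is a cheap guard against feeding the wrong kind of resource into a downstream model.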

Database requirements include support for both structured and unstructured data formats, with MongoDB or PostgreSQL recommended for clinical data storage and Apache Kafka for real-time data streaming. Data lakes built on Hadoop or Apache Spark frameworks provide the flexibility needed for advanced analytics and machine learning model training.

Security and Compliance Specifications

Healthcare AI implementations must meet stringent security requirements, including HIPAA compliance, a SOC 2 Type II attestation, and FDA clearance or approval where applicable. Common encryption baselines are AES-256 for data at rest and TLS 1.3 for data in transit. Multi-factor authentication, role-based access controls, and comprehensive audit logging are mandatory components.
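
Two of these controls can be sketched with Python's standard library: a client-side TLS context that refuses anything older than TLS 1.3, and a role-based access check that writes every decision to an audit trail. The role names and permissions are hypothetical, and the in-memory log is only a stand-in for tamper-resistant audit storage. AES-256 encryption at rest is not shown because the standard library does not include AES.

```python
import ssl
from datetime import datetime, timezone

def make_client_context() -> ssl.SSLContext:
    """Client-side TLS context that refuses protocol versions older than TLS 1.3."""
    ctx = ssl.create_default_context()  # certificate and hostname verification enabled
    ctx.minimum_version = ssl.TLSVersion.TLSv1_3
    return ctx

# Hypothetical role-to-permission mapping for an AI imaging tool
ROLE_PERMISSIONS = {
    "radiologist": {"view_ai_findings", "sign_report"},
    "nurse": {"view_ai_findings"},
    "it_admin": {"manage_models"},
}

audit_log: list[dict] = []  # stand-in for durable, tamper-resistant audit storage

def authorize(user: str, role: str, action: str) -> bool:
    """Role-based access check that records every decision in the audit trail."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user, "role": role, "action": action, "allowed": allowed,
    })
    return allowed
```

Logging denied requests as well as granted ones matters, because refused attempts are often the most useful entries during a security review.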

Network segmentation isolates AI systems from general hospital networks using dedicated VLANs and firewall configurations. Regular penetration testing and vulnerability assessments maintain the security posture over time, while backup and disaster recovery systems help meet 99.99% uptime requirements.
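
An availability target is easier to plan around when it is expressed as a downtime budget. The arithmetic below converts 99.99% into allowed minutes of downtime. It assumes a 365-day year and a 30-day month.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes; leap years ignored

def downtime_budget_minutes(availability: float,
                            period_minutes: float = MINUTES_PER_YEAR) -> float:
    """Maximum downtime allowed in a period for a given availability target."""
    return (1 - availability) * period_minutes

yearly = downtime_budget_minutes(0.9999)                                 # about 52.6 minutes
monthly = downtime_budget_minutes(0.9999, period_minutes=30 * 24 * 60)  # about 4.3 minutes
```

At 99.99%, a single unplanned outage of 10 minutes uses more than twice the monthly budget, which is why redundant systems and tested failover matter more than fast manual recovery.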

Step-by-Step Implementation Framework

Phase 1: Assessment and Planning (Months 1–3)

The implementation journey begins with a comprehensive assessment of current hospital infrastructure, workflow analysis, and stakeholder alignment. Form a cross-functional implementation team including IT leadership, clinical champions, department heads, and external AI consultants. Conduct a thorough evaluation of existing systems, identifying integration points and potential bottlenecks.

Develop detailed project timelines, budget allocations, and success metrics. Establish clear governance structures with defined roles and responsibilities for each team member. Create communication plans to keep all stakeholders informed throughout the implementation process.

Phase 2: Infrastructure Preparation (Months 2–4)

Upgrade network infrastructure to support AI workloads, including bandwidth expansion and latency optimization. Install required server hardware and configure GPU clusters for machine learning processing. Implement security measures including network segmentation, access controls, and monitoring systems.

Establish data integration pipelines connecting AI systems with existing EHR, PACS, and laboratory systems. Configure backup and disaster recovery solutions ensuring minimal downtime during transition periods. Test all infrastructure components thoroughly before proceeding to software deployment.

Phase 3: Software Deployment and Configuration (Months 4–6)

Deploy AI software platforms in staged environments, beginning with development and testing systems before production rollout. Configure algorithms and machine learning models for specific hospital use cases and patient populations. Integrate AI tools with clinical workflows, ensuring seamless user experiences for medical staff.

Conduct extensive testing including functionality verification, performance benchmarking, and security validation. Train IT support staff on system administration, troubleshooting procedures, and ongoing maintenance requirements. Establish monitoring and alerting systems to track system performance and identify potential issues.

Phase 4: Clinical Integration and Training (Months 5–7)

Develop comprehensive training programs for clinical staff, tailored to specific roles and responsibilities. Create user documentation, quick reference guides, and video tutorials covering common use cases and troubleshooting procedures. Implement change management strategies to encourage adoption and address resistance to new technologies.

Begin pilot programs with select departments or use cases, gradually expanding scope as confidence and competency grow. Establish feedback mechanisms allowing clinical staff to report issues, suggest improvements, and share success stories. Monitor usage patterns and user satisfaction metrics to guide optimization efforts.

Phase 5: Optimization and Scaling (Months 6–12)

Analyze performance data and user feedback to identify optimization opportunities. Fine-tune algorithms and workflows based on real-world usage patterns and clinical outcomes. Expand AI implementation to additional departments and use cases following proven success patterns.

Develop long-term maintenance and upgrade strategies ensuring continued system effectiveness. Establish partnerships with AI vendors for ongoing support, feature updates, and technology evolution. Create internal capabilities for algorithm customization and performance monitoring.

Regulatory Compliance and Quality Assurance

Healthcare AI implementations must navigate complex regulatory landscapes including FDA approval processes for diagnostic AI tools, HIPAA compliance for patient data protection, and Joint Commission standards for patient safety. Establish quality management systems documenting all validation procedures, performance metrics, and clinical outcomes.

Implement robust testing protocols including algorithm validation on diverse patient populations, bias detection and mitigation strategies, and ongoing performance monitoring. Create audit trails documenting all AI decisions and recommendations for regulatory review and clinical accountability.
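
One simple form of bias detection is to compare a model's sensitivity across patient subgroups on validation data and flag any group that lags the best performer. In the sketch below, the validation records, group labels, and 5-percentage-point tolerance are all hypothetical. A real bias review would examine several metrics, use confidence intervals, and involve clinical judgment about which gaps matter.

```python
from collections import defaultdict

def sensitivity_by_group(records):
    """records: iterable of (group, has_condition, ai_flagged) tuples.

    Returns, for each group, the share of true cases that the AI flagged.
    """
    true_pos, positives = defaultdict(int), defaultdict(int)
    for group, has_condition, ai_flagged in records:
        if has_condition:
            positives[group] += 1
            if ai_flagged:
                true_pos[group] += 1
    return {g: true_pos[g] / positives[g] for g in positives}

def groups_with_gap(rates, max_gap=0.05):
    """Groups whose sensitivity trails the best-performing group by more than max_gap."""
    best = max(rates.values())
    return sorted(g for g, r in rates.items() if best - r > max_gap)

# Hypothetical validation results for two patient subgroups
records = (
    [("group_a", True, True)] * 9 + [("group_a", True, False)] * 1
    + [("group_a", False, False)] * 5
    + [("group_b", True, True)] * 7 + [("group_b", True, False)] * 3
)
rates = sensitivity_by_group(records)  # group_a: 0.9, group_b: 0.7
flagged = groups_with_gap(rates)       # ["group_b"]
```

Here the model catches 90% of true cases in group_a but only 70% in group_b, so group_b is flagged for investigation before wider rollout.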

Cost Analysis and Return on Investment

AI implementation costs vary significantly based on scope and complexity, with typical hospital projects ranging from $500,000 to $5 million for comprehensive deployments. Infrastructure costs including servers, storage, and networking typically represent 30–40% of total project budgets, while software licensing and professional services account for the remainder.

Expected returns include reduced diagnostic errors, improved operational efficiency, decreased length of stay, and enhanced staff productivity. Quantifiable benefits often justify implementation costs within 18–24 months, with long-term savings continuing to accumulate as AI capabilities expand and mature.
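
The budget split and payback period described above reduce to simple arithmetic. The $2 million project cost, 35% infrastructure share, and $100,000 monthly net benefit are hypothetical figures chosen to fall within the ranges quoted in the text. The payback calculation is undiscounted, so it ignores the time value of money and ongoing maintenance costs.

```python
import math

def split_budget(total: float, infrastructure_share: float) -> tuple[float, float]:
    """Split a project budget into infrastructure and software/services portions."""
    infrastructure = total * infrastructure_share
    return infrastructure, total - infrastructure

def payback_months(upfront_cost: float, monthly_net_benefit: float) -> int:
    """Simple, undiscounted payback period, rounded up to whole months."""
    return math.ceil(upfront_cost / monthly_net_benefit)

# Hypothetical figures within the ranges quoted in the text
infra, software_and_services = split_budget(2_000_000, 0.35)  # about 700,000 / 1,300,000
months = payback_months(2_000_000, 100_000)                    # 20 months
```

If the monthly benefit turned out to be $90,000 instead, the payback period would stretch to 23 months. This sensitivity is worth testing before committing to a business case.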

Frequently Asked Questions (FAQs)

1. How long does it typically take to implement AI systems in a hospital setting?

Complete AI implementation usually takes 12–18 months from initial planning to full deployment. This timeline includes infrastructure preparation, software configuration, staff training, and gradual rollout across departments. Smaller implementations focusing on specific use cases may complete in 6–9 months, while comprehensive enterprise-wide deployments can extend to 24 months or longer.

2. What are the minimum technical requirements for AI implementation in healthcare?

Minimum requirements include high-speed network connectivity (1 Gbps for imaging applications), dedicated server infrastructure with GPU support, secure data storage systems with 99.99% uptime, and integration capabilities with existing EHR and PACS systems. Most implementations require initial storage capacity of 10–50 TB and processing power equivalent to modern server-grade hardware with minimum 64 GB RAM per application.

3. How do hospitals ensure AI systems comply with HIPAA and other healthcare regulations?

Compliance requires comprehensive security measures including end-to-end encryption, access controls, audit logging, and regular security assessments. AI vendors must provide HIPAA-compliant hosting environments with signed Business Associate Agreements. Hospitals must implement data governance policies, staff training programs, and incident response procedures specifically addressing AI system risks and regulatory requirements.

4. What types of clinical staff training are necessary for AI implementation?

Training programs must address both technical system usage and clinical decision-making with AI assistance. Physicians require education on interpreting AI recommendations, understanding algorithm limitations, and maintaining clinical judgment. Nurses need training on workflow integration and alert management. IT staff require technical training on system administration, troubleshooting, and performance monitoring. Training typically requires 20–40 hours per staff member depending on their role and AI application complexity.

5. How accurate are AI diagnostic tools compared to human physicians?

AI diagnostic accuracy varies by application and clinical context. In medical imaging, AI systems often achieve accuracy rates of 85–95%, sometimes exceeding human radiologist performance for specific conditions like diabetic retinopathy or skin cancer detection. However, AI tools are designed to augment rather than replace clinical judgment, providing additional insights that physicians can incorporate into their diagnostic decision-making process.
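
A single accuracy figure can hide important behavior, so diagnostic tools are usually also judged by sensitivity, specificity, and positive predictive value. The confusion-matrix counts below describe a hypothetical screening of 1,000 patients, 50 of whom have the condition. They are not results from any real system.

```python
def diagnostic_metrics(tp: int, fp: int, tn: int, fn: int) -> dict[str, float]:
    """Standard confusion-matrix metrics for a binary diagnostic test."""
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "sensitivity": tp / (tp + fn),  # share of true cases that are caught
        "specificity": tn / (tn + fp),  # share of healthy cases correctly cleared
        "ppv": tp / (tp + fp),          # chance that a positive flag is a true case
    }

# Hypothetical screening of 1,000 patients, 50 of whom have the condition
m = diagnostic_metrics(tp=40, fp=60, tn=890, fn=10)
```

This tool reaches 93% accuracy. Even so, it misses one true case in five (sensitivity 0.8), and 60% of its positive flags are false alarms (PPV 0.4). These trade-offs are exactly why AI output should support clinical judgment rather than replace it.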

6. What ongoing maintenance and support do AI systems require?

AI systems require continuous monitoring of performance metrics, regular algorithm updates, periodic retraining with new data, and ongoing technical support. Hospitals typically allocate 15–25% of initial implementation costs annually for maintenance, including software updates, hardware refresh cycles, staff training, and vendor support services. Internal IT teams need specialized training to manage AI infrastructure and troubleshoot common issues.

7. How do AI systems integrate with existing hospital IT infrastructure?

Modern AI platforms use standard healthcare interoperability protocols including HL7 FHIR and DICOM to integrate with EHR systems, PACS, and laboratory information systems. Integration typically requires API development, data mapping, and workflow configuration to ensure seamless information exchange. Most implementations use middleware solutions to manage data flow between AI systems and existing hospital applications.
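To make the HL7 FHIR point concrete, here is a minimal sketch of the kind of JSON an AI service might exchange with an EHR: a FHIR R4 `Observation` for a heart-rate reading, coded with LOINC 8867-4 and UCUM units. The patient ID and value are hypothetical. A real integration would also need authentication, a FHIR server endpoint, and whatever profiles the hospital's EHR enforces, none of which is shown here.

```python
import json


def heart_rate_observation(patient_id: str, bpm: float) -> dict:
    """Build a minimal FHIR R4 Observation for one heart-rate reading.

    patient_id and bpm are hypothetical example inputs.
    """
    return {
        "resourceType": "Observation",
        "status": "final",  # status and code are the required elements
        "code": {
            "coding": [{
                "system": "http://loinc.org",
                "code": "8867-4",  # LOINC code for heart rate
                "display": "Heart rate",
            }]
        },
        "subject": {"reference": f"Patient/{patient_id}"},
        "valueQuantity": {
            "value": bpm,
            "unit": "beats/minute",
            "system": "http://unitsofmeasure.org",
            "code": "/min",  # UCUM code for "per minute"
        },
    }


# Serialize to JSON, the payload format a FHIR REST endpoint accepts
print(json.dumps(heart_rate_observation("example-123", 72), indent=2))
```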

8. What are the potential risks and how can hospitals mitigate them?

Primary risks include algorithm bias, system failures, data security breaches, and over-reliance on AI recommendations. Mitigation strategies include diverse training data sets, robust testing procedures, comprehensive backup systems, cybersecurity measures, and continuous staff education on AI limitations. Hospitals should maintain clinical oversight protocols ensuring human physicians retain ultimate decision-making authority.

9. How do hospitals measure ROI and success of AI implementations?

Success metrics include clinical outcomes (reduced diagnostic errors, improved patient safety), operational efficiency (decreased processing time, staff productivity gains), and financial impact (cost savings, revenue enhancement). Hospitals typically track key performance indicators including diagnostic accuracy rates, workflow efficiency improvements, patient satisfaction scores, and quantifiable cost reductions. ROI calculations should include both direct cost savings and indirect benefits like improved staff satisfaction and reduced liability risks.
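A basic ROI calculation along these lines can be sketched as follows. It assumes ROI = (total benefit - total cost) / total cost and treats annual maintenance as a fixed share of the initial implementation cost. The 15–25% maintenance range cited earlier in this FAQ is represented by an assumed 20% midpoint. All dollar figures are purely hypothetical.

```python
def simple_roi(initial_cost: float, annual_benefit: float, years: int,
               maintenance_rate: float = 0.20) -> float:
    """Return ROI over `years` as a fraction (0.125 means 12.5%).

    maintenance_rate: yearly upkeep as a share of the initial cost
    (assumed 20%, the midpoint of the 15-25% range).
    annual_benefit: direct savings plus any indirect benefits the
    hospital chooses to put a number on (hypothetical input).
    """
    total_cost = initial_cost + initial_cost * maintenance_rate * years
    total_benefit = annual_benefit * years
    return (total_benefit - total_cost) / total_cost


# Hypothetical figures: $1M implementation, $450k per year in benefits
for horizon in (3, 5):
    print(horizon, f"{simple_roi(1_000_000, 450_000, horizon):.1%}")
# 3 -15.6%
# 5 12.5%
```

With these made-up numbers the project is still negative after three years and turns positive by year five. This is why an ROI figure is only meaningful alongside its time horizon.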

10. Can smaller hospitals implement AI, or is it only feasible for large health systems?

AI implementation is increasingly accessible to hospitals of all sizes through cloud-based solutions, software-as-a-service models, and vendor partnerships. Smaller hospitals can focus on specific high-impact applications like radiology AI or clinical decision support rather than comprehensive enterprise deployments. Cloud platforms reduce infrastructure requirements and upfront costs, making AI adoption feasible for hospitals with 100–300 beds. Many vendors offer scaled pricing models and implementation support specifically designed for smaller healthcare organizations.


Conclusion: Preparing for the Future of Healthcare

AI implementation in hospitals represents a strategic investment in improved patient care, operational efficiency, and competitive positioning. Success requires careful planning, adequate resources, and sustained commitment from leadership and clinical staff. Hospitals that approach AI implementation systematically, with proper attention to technical requirements, regulatory compliance, and change management, will realize significant benefits in patient outcomes and organizational performance.

The healthcare industry’s AI adoption will continue accelerating, making early implementation a competitive advantage. Hospitals beginning their AI journey today position themselves to leverage increasingly sophisticated technologies as they become available, building internal capabilities and organizational readiness for the future of healthcare delivery.

As AI technologies mature and regulatory frameworks evolve, hospitals with established AI programs will be better positioned to adapt and innovate. The investment in AI implementation today creates a foundation for continuous improvement and technological advancement that will benefit patients, staff, and healthcare organizations for years to come.

0 notes

Text

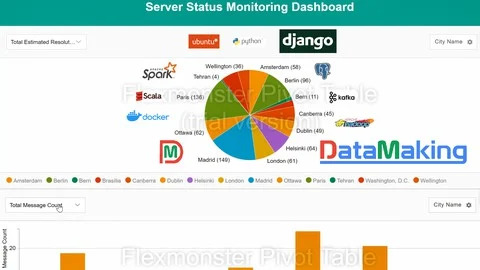

Real Time Spark Project for Beginners: Hadoop, Spark, Docker

🚀 Building a Real-Time Data Pipeline for Server Monitoring Using Kafka, Spark, Hadoop, PostgreSQL & Django

In today’s data centers, various types of servers constantly generate vast volumes of real-time event data—each event representing the server’s status. To ensure stability and minimize downtime, monitoring teams need instant insights into this data to detect and resolve issues swiftly.

To meet this demand, a scalable and efficient real-time data pipeline architecture is essential. Here’s how we’re building it:

🧩 Tech Stack Overview:

Apache Kafka acts as the real-time data ingestion layer, handling high-throughput event streams with minimal latency.

Apache Spark (Scala + PySpark), running on a Hadoop cluster (via Docker), performs large-scale, fault-tolerant data processing and analytics.

Hadoop enables distributed storage and computation, forming the backbone of our big data processing layer.

PostgreSQL stores the processed insights for long-term use and querying.

Django serves as the web framework, enabling dynamic dashboards and APIs.

Flexmonster powers data visualization, delivering real-time, interactive insights to monitoring teams.

🔍 Why This Stack?

Scalability: Each tool is designed to handle massive data volumes.

Real-time processing: The combination of Kafka and Spark keeps the lag in generating insights low.

Interactivity: Flexmonster with Django provides a user-friendly, interactive frontend.

Containerized: Docker simplifies deployment and management.

This architecture empowers data center teams to monitor server statuses live, quickly detect anomalies, and improve infrastructure reliability.
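The core of the processing step can be sketched in plain Python so that it runs without Kafka, Spark, or Docker. The step counts error events per server in fixed time windows and flags servers that cross a threshold. The event fields (`server_id`, `timestamp`, `status`), the 60-second window, and the threshold of 3 are assumptions for illustration. They are not the project's actual schema.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass
class ServerEvent:
    server_id: str   # hypothetical field names; the real Kafka schema may differ
    timestamp: int   # epoch seconds
    status: str      # e.g. "OK", "WARN", "ERROR"


def error_counts_by_window(events, window_seconds=60):
    """Count ERROR events per server inside tumbling (non-overlapping) windows."""
    counts = defaultdict(Counter)
    for e in events:
        window_start = e.timestamp - e.timestamp % window_seconds
        if e.status == "ERROR":
            counts[window_start][e.server_id] += 1
    return counts


def flag_anomalies(counts, threshold=3):
    """Return sorted (window_start, server_id) pairs at or above the threshold."""
    return sorted(
        (window, server)
        for window, per_server in counts.items()
        for server, n in per_server.items()
        if n >= threshold
    )


events = [
    ServerEvent("web-1", 5, "ERROR"),
    ServerEvent("web-1", 20, "ERROR"),
    ServerEvent("web-1", 59, "ERROR"),
    ServerEvent("web-2", 30, "OK"),
    ServerEvent("web-1", 61, "ERROR"),  # falls into the next window
]
print(flag_anomalies(error_counts_by_window(events)))  # [(0, 'web-1')]
```

In the real pipeline, this logic would live in a Spark Structured Streaming job that reads the Kafka topic. There, the equivalent is a `groupBy` over `pyspark.sql.functions.window(...)` and the server column. The results would then be written to PostgreSQL for the Django dashboard.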

Stay tuned for detailed implementation guides and performance benchmarks!


Future Directions and Opportunities for Data Science

Data science is driving innovation and transforming industries through data-driven decision-making. As technology progresses, people and organizations need to understand how data science is evolving in order to stay ahead of the curve. Enrolling in a data science course in Bangalore can help you understand how new technologies such as artificial intelligence (AI), machine learning, and big data analytics will shape data science over the next ten years and set new benchmarks for efficacy, creativity, and insight.

Data Science Explained

Data science traces its roots to the 1960s, with the rise of statistical computing and decision-making models. The digital age brought enormous growth in data volumes, which demanded more sophisticated tools and processes. Big data technologies such as Hadoop and Spark transformed how massive datasets are stored and processed, paving the way for modern data science.

Data science is essential to many industries, including retail, entertainment, healthcare, and finance. Its multidisciplinary nature lets specialists tackle problems such as fraud detection, personalized treatment, and recommendation systems, firmly establishing it as a pillar of technological innovation.

New technology is causing changes in data science

New technology is the main driver of the revolution in data science. Advances in automation and quantum computing are making data-driven insights more available, more accurate, and faster to obtain. This article examines the technologies that will shape data science in the future.

The Top Six Data Science Trends

Watch for the following data science advancements, covered in Data Science Online Training, during 2025–2026. You can also learn how these developments will give firms a competitive edge and what employment opportunities they will create.

1. Machine learning and artificial intelligence

Artificial intelligence and machine learning are two related but distinct subfields of computer science. Machine learning is a branch of artificial intelligence that allows computer systems to learn from data and improve over time without being explicitly programmed. Thus, all machine learning is AI, but not all AI is machine learning.
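Here is a minimal illustration of "learning from data." The sketch below never codes the rule y = 2x + 1. Instead, it estimates the slope and intercept from example points using ordinary least squares, one of the simplest learning algorithms. The data is made up for the example.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical training data produced by y = 2x + 1; the rule itself is never written in
xs = [0, 1, 2, 3, 4]
ys = [1, 3, 5, 7, 9]
slope, intercept = fit_line(xs, ys)
print(slope, intercept)  # 2.0 1.0
```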

2. Developments in Natural Language Processing (NLP)

Significant advances in NLP will enable machines to interpret language more precisely and in context. We expect these technologies to drive wider use of chatbots, virtual assistants, and automated content generation, making human-machine interaction feel more natural across platforms.

3. IoT and edge computing

Edge computing and the Internet of Things (IoT) will work together to enable real-time data processing. Processing data close to where it is generated reduces latency and bandwidth usage, enabling faster decisions in areas such as autonomous vehicles, smart cities, and industrial automation.
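Here is a minimal sketch of the idea, assuming a device that batches temperature readings. Instead of streaming every raw value upstream, the device sends a small summary plus any readings above a limit. The batch values and the 30 °C limit are hypothetical.

```python
from statistics import mean


def summarize_at_edge(readings, limit):
    """Reduce a batch of raw sensor readings to a compact summary on the device.

    Only this summary (including any out-of-range readings) would be sent
    upstream, instead of every raw value.
    """
    alerts = [r for r in readings if r > limit]
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": mean(readings),
        "alerts": alerts,
    }


# Hypothetical one-minute batch of temperature readings (deg C)
batch = [21.0, 21.5, 22.0, 35.2, 21.8, 22.1]
summary = summarize_at_edge(batch, limit=30.0)
print(summary["count"], summary["alerts"])  # 6 [35.2]
```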

4. Explainable AI (XAI)

As AI systems advance, accountability and transparency will become more crucial. Explainable AI will work to improve the understandability and interpretability of AI models to guarantee ethical use and regulatory compliance. This will be crucial for establishing equity, user confidence, and transparency in AI judgments.
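For a linear model, one simple and exact explanation attributes the prediction to each feature as weight × (value − baseline value). The contributions then add up to the difference between the prediction and the baseline prediction. The sketch below uses a hypothetical two-feature risk-score model. Non-linear models need dedicated tools such as SHAP or LIME, which this does not implement.

```python
def explain_linear(weights, bias, x, baseline):
    """Attribute a linear model's prediction to each feature.

    contribution_k = weights[k] * (x[k] - baseline[k]); the contributions
    sum to predict(x) - predict(baseline).
    """
    def predict(v):
        return bias + sum(weights[k] * v[k] for k in weights)

    contributions = {k: weights[k] * (x[k] - baseline[k]) for k in weights}
    return predict(x), predict(baseline), contributions


# Hypothetical model and inputs
weights = {"income": 0.5, "debt": -1.0}
pred, base, contrib = explain_linear(
    weights, 10.0, x={"income": 60, "debt": 20}, baseline={"income": 50, "debt": 10}
)
print(pred, base, contrib)  # 20.0 25.0 {'income': 5.0, 'debt': -10.0}
```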

5. Privacy and Data Security

As cybersecurity threats increase and regulations grow stricter, data security and privacy will become increasingly important. This will require new methods for anonymizing and encrypting data, and for protecting sensitive information with techniques such as secure multi-party computation, which increase user confidence. Businesses should invest significantly in security measures to safeguard their valuable information assets.
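Secure multi-party computation can sound abstract, so here is a toy secure sum built on additive secret sharing, one of its basic building blocks. Each party splits its private number into random shares that add up to the real value only modulo a prime, so an individual share reveals nothing about the value. All parties are simulated in one process and the figures are hypothetical. The sketch also assumes honest participants, so it is not a production protocol.

```python
import secrets

PRIME = 2**61 - 1  # a Mersenne prime; all share arithmetic is done modulo it


def share(value, n_parties):
    """Split `value` into n random shares that sum to it modulo PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % PRIME)
    return shares


def secure_sum(private_values):
    """Every party shares its value; party j adds up the j-th shares it receives.

    Only the final total is reconstructed; no party sees another's input.
    """
    n = len(private_values)
    all_shares = [share(v, n) for v in private_values]
    partial_sums = [sum(s[j] for s in all_shares) % PRIME for j in range(n)]
    return sum(partial_sums) % PRIME


# Three hypothetical organizations compute a combined total without revealing their own figures
print(secure_sum([52000, 61000, 58000]))  # 171000
```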

6. Augmented Analytics

Augmented analytics uses artificial intelligence to speed up data preparation, insight generation, and the search for explanations. It lets more business users base their decisions on data without specialized technical expertise. By making knowledge accessible at all organizational levels, it also encourages more informed decision-making.

Conclusion

Cloud-based analytics, AI automation, and quantum computing are among the cutting-edge technologies transforming data science. These advances are reshaping sectors such as finance, healthcare, and urban planning, underscoring the enormous potential of data science to address real-world problems. Professionals who want to thrive in today's fast-paced industry must embrace adaptability and continuous learning. To remain competitive, explore state-of-the-art resources, build the required skills, and keep up with market developments.


Top 5 Benefits of Implementing a Data Lake Solution

Implementing a data lake solution offers numerous strategic advantages for organizations aiming to harness the full potential of their data assets. With Flycatch, a data lake solutions company in Saudi Arabia, organizations can experience a seamless stream of insights that drives superior business decisions.

1. Enhanced Data Agility and Flexibility

Data lakes allow organizations to store data in its raw form, accommodating structured, semi-structured, and unstructured data. This flexibility enables:

Rapid ingestion of diverse data types without the need for upfront schema definitions.

Adaptability to evolving data requirements and analytical needs.

This agility supports faster decision-making and innovation by providing immediate access to a wide array of data sources.

2. Scalability and Cost-Efficiency

Built on scalable architectures like Hadoop or cloud-based object storage, data lakes can handle vast amounts of data efficiently. Benefits include:

Horizontal scaling to accommodate growing data volumes.

Cost savings through the use of low-cost storage solutions and pay-as-you-go models.

This scalability ensures that organizations can manage increasing data loads without significant infrastructure investments.

3. Advanced Analytics and Machine Learning Capabilities

Data lakes serve as a foundation for advanced analytics by:

Providing a centralized repository for diverse data types, facilitating comprehensive analysis.

Supporting machine learning and AI applications through access to large, varied datasets.

This capability enables organizations to uncover insights, predict trends, and make data-driven decisions.

4. Data Democratization and Collaboration

By centralizing data storage, data lakes promote:

Self-service access to data for various stakeholders, reducing dependency on IT teams.

Collaboration across departments by breaking down data silos.

This democratization fosters a data-driven culture and enhances organizational efficiency.

5. Consolidation of Data Silos

Data lake solutions integrate data from multiple sources into a single repository, leading to:

A unified view of organizational data, improving consistency and accuracy.

Simplified data management and governance.

This consolidation supports comprehensive analytics and streamlined operations.
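The raw-storage idea from point 1 (no upfront schema) and the consolidation in point 5 can be illustrated together with a small schema-on-read sketch. Records from two hypothetical source systems are kept exactly as they arrived, and a schema is applied only when they are read into a unified customer view. Field names and values are invented for the example. A real data lake would hold files in object storage or HDFS rather than a Python list.

```python
import json

# Raw zone: records landed exactly as two hypothetical source systems
# ("crm" and "billing") emitted them; no schema was enforced on write.
RAW_ZONE = [
    '{"source": "crm", "cust_id": "C1", "name": "Asha", "city": "Riyadh"}',
    '{"source": "billing", "customer": "C1", "amount": "120.50"}',
    '{"source": "billing", "customer": "C2", "amount": "80"}',
    '{"source": "crm", "cust_id": "C2", "name": "Omar"}',
]


def _empty_row():
    return {"name": None, "city": None, "total_billed": 0.0}


def read_with_schema(raw_lines):
    """Schema-on-read: map each source's fields onto one unified customer view."""
    view = {}
    for line in raw_lines:
        rec = json.loads(line)
        if rec["source"] == "crm":
            row = view.setdefault(rec["cust_id"], _empty_row())
            row["name"] = rec.get("name")
            row["city"] = rec.get("city")  # absent fields become None
        elif rec["source"] == "billing":
            row = view.setdefault(rec["customer"], _empty_row())
            row["total_billed"] += float(rec["amount"])  # types applied at read time
    return view


for cust, row in sorted(read_with_schema(RAW_ZONE).items()):
    print(cust, row)
```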


Learn Data Analytics in Noida – From Basics to Advanced

In today’s data-driven world, businesses rely heavily on data analytics to make informed decisions, improve operations, and gain a competitive edge. Whether you're a student, recent graduate, or working professional looking to upskill, learning data analytics in Noida offers a powerful pathway to a high-demand career in tech.

Why Learn Data Analytics?

Data analytics is the backbone of digital transformation. From retail to healthcare, finance to logistics, organizations are harnessing data to improve efficiency, predict trends, and tailor experiences to customers. By mastering data analytics, you gain the ability to extract meaningful insights from raw data — a skill that is both valuable and versatile.

Why Choose Noida for Data Analytics Training?

Noida, as one of India’s leading tech hubs, is home to numerous IT companies, startups, and MNCs that are actively hiring skilled data professionals. It also hosts top-rated institutes and training centers offering comprehensive data analytics programs tailored to current industry needs.

Here’s why Noida stands out:

Industry-Oriented Curriculum: Training programs cover real-world tools like Excel, SQL, Python, R, Tableau, Power BI, and advanced machine learning techniques.

Hands-On Learning: Most courses offer live projects, internships, and case studies to provide practical experience.

Placement Support: Institutes in Noida often have tie-ups with local tech firms, increasing your chances of landing a job right after training.

Flexible Modes: Choose from classroom, online, or hybrid formats based on your convenience.

What You’ll Learn: From Basics to Advanced

1. Beginner Level:

Introduction to data and its types

Excel for data manipulation

Basics of SQL for database querying

Data visualization fundamentals

2. Intermediate Level:

Python or R for data analysis

Exploratory Data Analysis (EDA)

Working with real-time datasets

Introduction to business intelligence tools (Tableau/Power BI)

3. Advanced Level:

Predictive analytics using machine learning

Time-series analysis

Natural Language Processing (NLP)

Big data tools (Hadoop, Spark – optional)

Capstone projects and portfolio building

Who Can Enroll?

College students from IT, engineering, or statistics backgrounds

Working professionals in finance, marketing, or operations

Freshers looking to start a career in data analytics

Entrepreneurs wanting to leverage data for better business decisions

No prior coding experience? No problem — many programs start from scratch and gradually build your skills.

Final Thoughts

Learning Data Analytics in Noida opens up a world of opportunities. With the right training, mentorship, and hands-on practice, you can transition into roles such as Data Analyst, Business Analyst, Data Scientist, or BI Developer. The demand for data-savvy professionals continues to grow — and there's no better time than now to dive in.


Upgrade Your Career with the Best Data Analytics Courses in Noida

In a world overflowing with digital information, data is the new oil—and those who can extract, refine, and analyze it are in high demand. The ability to understand and leverage data is now a key competitive advantage in every industry, from IT and finance to healthcare and e-commerce. As a result, data analytics courses have gained massive popularity among job seekers, professionals, and students alike.

If you're located in Delhi-NCR or looking for quality tech education in a thriving urban setting, enrolling in data analytics courses in Noida can give your career the boost it needs.

What is Data Analytics?

Data analytics refers to the science of analyzing raw data to extract meaningful insights that drive decision-making. It involves a range of techniques, tools, and technologies used to discover patterns, predict trends, and identify valuable information.

There are several types of data analytics, including:

Descriptive Analytics: What happened?

Diagnostic Analytics: Why did it happen?

Predictive Analytics: What could happen?

Prescriptive Analytics: What should be done?

Skilled professionals who master these techniques are known as Data Analysts, Data Scientists, and Business Intelligence Experts.

Why Should You Learn Data Analytics?

Whether you’re a tech enthusiast or someone from a non-technical background, learning data analytics opens up a wealth of opportunities. Here’s why data analytics courses are worth investing in:

📊 High Demand, Low Supply: There is a massive talent gap in data analytics. Skilled professionals are rare and highly paid.

Diverse Career Options: You can work in finance, IT, marketing, retail, sports, government, and more.

🌍 Global Opportunities: Data analytics skills are in demand worldwide, offering chances to work remotely or abroad.

💰 Attractive Salary Packages: Entry-level data analysts can expect starting salaries upwards of ₹4–6 LPA, which can grow quickly with experience.

📈 Future-Proof Career: As long as businesses generate data, analysts will be needed to make sense of it.

Why Choose Data Analytics Courses in Noida?

Noida, part of the Delhi-NCR region, is a fast-growing tech and education hub. Home to top companies and training institutes, Noida offers the perfect ecosystem for tech learners.

Here are compelling reasons to choose data analytics courses in Noida:

🏢 Proximity to IT & MNC Hubs: Noida houses leading firms like HCL, TCS, Infosys, Adobe, Paytm, and many startups.

🧑‍🏫 Expert Trainers: Courses are conducted by professionals with real-world experience in analytics, machine learning, and AI.

🖥️ Practical Approach: Institutes focus on hands-on learning through real datasets, live projects, and capstone assignments.

🎯 Placement Assistance: Many data analytics institutes in Noida offer dedicated job support, resume writing, and interview prep.

🕒 Flexible Batches: Choose from online, offline, weekend, or evening classes to suit your schedule.

Core Modules Covered in Data Analytics Courses

A comprehensive data analytics course typically includes:

Fundamentals of Data Analytics

Excel for Data Analysis

Statistics & Probability

SQL for Data Querying

Python or R Programming

Data Visualization (Power BI/Tableau)

Machine Learning Basics

Big Data Technologies (Hadoop, Spark)

Business Intelligence Tools

Capstone Project/Internship

Who Should Join Data Analytics Courses?

These courses are suitable for a wide audience:

✅ Fresh graduates (B.Sc, BCA, B.Tech, BBA, MBA)

✅ IT professionals seeking domain change

✅ Non-IT professionals like sales, marketing, and finance executives

✅ Entrepreneurs aiming to make data-backed decisions

✅ Students planning higher education in data science or AI

Career Opportunities After Completing Data Analytics Courses

After completing a data analytics course, learners can pursue roles such as:

Data Analyst

Business Analyst

Data Scientist

Data Engineer

Data Consultant

Operations Analyst

Marketing Analyst

Financial Analyst

BI Developer

Risk Analyst

Top Recruiters Hiring Data Analytics Professionals

Companies in Noida and across India actively seek data professionals. Some top recruiters include:

HCL Technologies

TCS

Infosys

Accenture

Cognizant

Paytm

Genpact

Capgemini

EY (Ernst & Young)

ZS Associates

Startups in fintech, health tech, and e-commerce

Tips to Choose the Right Data Analytics Course in Noida

When selecting a training program, consider the following:

🔍 Course Content: Does it cover the latest tools and techniques?

🧑‍🏫 Trainer Background: Are trainers experienced and industry-certified?

🛠️ Hands-On Practice: Does the course include real-time projects?

📜 Certification: Is it recognized by companies and institutions?

💬 Reviews and Ratings: What do past students say about the course?

🎓 Post-Course Support: Is job placement or internship assistance available?

Certifications That Add Value

A good institute will prepare you for globally recognized certifications such as:

Microsoft Data Analyst Associate

Google Data Analytics Professional Certificate

IBM Data Analyst Certificate

Tableau Desktop Specialist

Certified Analytics Professional (CAP)

These certifications can boost your credibility and help you stand out in job applications.

Final Thoughts: Your Future Starts Here

In this competitive digital era, data is everywhere, but professionals who can understand and use it effectively are still rare. Taking the right data analytics courses in Noida is not just a step toward upskilling; it's an investment in your future.

Whether you're aiming for a job switch, career growth, or knowledge enhancement, data analytics courses offer a versatile, high-growth pathway. With industry-relevant skills, real-time projects, and expert guidance, you’ll be prepared to take on the most in-demand roles of the decade.


Data Analytics for IoT: Unlocking the Power of Connected Intelligence

In today’s hyper-connected world, the Internet of Things (IoT) is reshaping how industries, cities, and consumers interact with the environment. From smart homes to connected factories, IoT devices are generating massive volumes of data every second. But raw data, on its own, holds little value unless transformed into actionable insights — and that’s where data analytics for IoT becomes essential.

What is Data Analytics for IoT?

Data analytics for IoT refers to the process of collecting, processing, and analyzing data generated by interconnected devices (sensors, machines, wearables, etc.) to extract meaningful insights. These analytics can help improve decision-making, automate operations, and enhance user experiences across sectors like healthcare, manufacturing, agriculture, transportation, and more.

IoT data analytics can be categorized into four main types:

Descriptive Analytics – What happened?

Diagnostic Analytics – Why did it happen?

Predictive Analytics – What is likely to happen?

Prescriptive Analytics – What should be done about it?

Why is IoT Data Analytics Important?

With the number of IoT devices expected to surpass 30 billion by 2030, businesses need robust analytics systems to handle the massive influx of data. Here’s why IoT analytics is critical:

Operational Efficiency: Identify bottlenecks, monitor machine performance, and reduce downtime.

Predictive Maintenance: Avoid costly failures by predicting issues before they occur.

Real-Time Decision Making: Monitor systems and processes in real time for quick responses.

Customer Insights: Understand usage patterns and improve product design or customer service.

Sustainability: Optimize energy usage and reduce waste through smart resource management.

Key Technologies Powering IoT Data Analytics

To extract valuable insights, several technologies work hand-in-hand with IoT analytics:

Big Data Platforms: Tools like Hadoop, Apache Spark, and cloud storage solutions manage vast data sets.

Edge Computing: Analyzing data closer to where it’s generated to reduce latency and bandwidth.

Artificial Intelligence & Machine Learning (AI/ML): Automating pattern detection, anomaly identification, and forecasting.

Cloud Computing: Scalable infrastructure for storing and analyzing IoT data across multiple devices.

Data Visualization Tools: Platforms like Tableau, Power BI, and Grafana help interpret complex data for decision-makers.
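The anomaly identification mentioned above can start with something as simple as a rolling z-score, before any machine learning is involved. This sketch flags a reading that sits more than three standard deviations from the mean of the preceding five readings. The window size, the limit, and the temperature stream are assumptions chosen for illustration.

```python
from statistics import mean, stdev


def rolling_zscore_anomalies(values, window=5, z_limit=3.0):
    """Return indices whose value lies more than z_limit standard deviations
    from the mean of the preceding `window` values."""
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu, sigma = mean(history), stdev(history)
        # Skip perfectly flat history to avoid dividing by zero
        if sigma > 0 and abs(values[i] - mu) / sigma > z_limit:
            anomalies.append(i)
    return anomalies


# Hypothetical motor-temperature stream (deg C); index 7 is a spike
temps = [60.1, 60.3, 59.9, 60.2, 60.0, 60.4, 60.1, 75.0, 60.2]
print(rolling_zscore_anomalies(temps))  # [7]
```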

Applications of Data Analytics in IoT

1. Smart Manufacturing

IoT sensors monitor production lines and machinery in real time. Data analytics helps detect inefficiencies, forecast equipment failures, and optimize supply chains.

2. Healthcare

Wearables and smart medical devices generate health data. Analytics tools help doctors track patient vitals remotely and predict health risks.

3. Smart Cities

Cities use IoT analytics to manage traffic, reduce pollution, optimize energy usage, and improve public safety through connected infrastructure.

4. Agriculture

Smart farming tools monitor soil moisture, weather, and crop health. Farmers use analytics to increase yield and manage resources efficiently.

5. Retail

IoT data from shelves, RFID tags, and customer devices helps track inventory, understand consumer behavior, and personalize shopping experiences.

Challenges in IoT Data Analytics

Despite its benefits, there are significant challenges to consider:

Data Privacy and Security: IoT data is sensitive and prone to breaches.

Data Volume and Velocity: Managing the massive scale of real-time data is complex.

Interoperability: Devices from different manufacturers often lack standard protocols.

Scalability: Analytics systems must evolve as the number of devices grows.

Latency: Real-time processing demands low-latency infrastructure.

The Future of IoT Analytics

The future of IoT data analytics lies in autonomous systems, AI-driven automation, and decentralized processing. Technologies like 5G, blockchain, and advanced AI models will further empower real-time, secure, and scalable analytics solutions. Businesses that harness these advancements will gain a strategic edge in innovation and efficiency.

Conclusion

As IoT devices continue to spread into every corner of our world, data analytics will serve as the backbone that turns their data into actionable intelligence. Whether it's a smart thermostat learning your habits or an industrial robot flagging maintenance issues before breakdown, the fusion of IoT and analytics is transforming how we live, work, and think.

Organizations that invest in IoT data analytics today are not just staying competitive — they’re shaping the intelligent, connected future.

0 notes

Text

Unleashing the Power of Big Data Analytics: Mastering the Course of Success

In today's digital age, data has become the lifeblood of successful organizations. The ability to collect, analyze, and interpret vast amounts of data has revolutionized business operations and decision-making. This is where big data analytics truly excels. By harnessing data analytics, businesses can gain valuable insights that guide them toward success. To unleash this power, however, it is essential to understand data analytics and the various types of courses that teach it. In this article, we will explore the different types of data analytics courses available and how they can help individuals and businesses navigate the complex world of big data.

Education: The Gateway to Becoming a Data Analytics Expert

Before delving into the different types of data analytics courses, it is crucial to highlight the significance of education in this field. Data analytics is an intricate discipline that requires a solid foundation of knowledge and skills. While practical experience is valuable, formal education in data analytics serves as the gateway to becoming an expert in the field. By enrolling in relevant courses, individuals can gain a comprehensive understanding of the theories, methodologies, and tools used in data analytics.

Data Analytics Courses Types: Navigating the Expansive Landscape

When it comes to data analytics courses, there is a wide range of options available, catering to individuals with varying levels of expertise and interests. Let's explore some of the most popular types of data analytics courses:

1. Introduction to Data Analytics

This course serves as a perfect starting point for beginners who want to dip their toes into the world of data analytics. The course covers the fundamental concepts, techniques, and tools used in data analytics. It provides a comprehensive overview of data collection, cleansing, and visualization techniques, along with an introduction to statistical analysis. By mastering the basics, individuals can lay a solid foundation for further exploration in the field of data analytics.

2. Advanced Data Analytics Techniques

For those looking to deepen their knowledge and skills in data analytics, advanced courses offer a treasure trove of insights. These courses delve into complex data analysis techniques, such as predictive modeling, machine learning algorithms, and data mining. Individuals will learn how to discover hidden patterns, make accurate predictions, and extract valuable insights from large datasets. Advanced data analytics courses equip individuals with the tools and techniques necessary to tackle real-world data analysis challenges.

3. Specialized Data Analytics Courses

As the field of data analytics continues to thrive, specialized courses have emerged to cater to specific industry needs and interests. Whether it's healthcare analytics, financial analytics, or social media analytics, individuals can choose courses tailored to their desired area of expertise. These specialized courses delve into industry-specific data analytics techniques and explore case studies to provide practical insights into real-world applications. By honing their skills in specialized areas, individuals can unlock new opportunities and make a significant impact in their chosen field.

4. Big Data Analytics Certification Programs

In the era of big data, the ability to navigate and derive meaningful insights from massive datasets is in high demand. Big data analytics certification programs offer individuals the chance to gain comprehensive knowledge and hands-on experience in handling big data. These programs cover topics such as Hadoop, Apache Spark, and other big data frameworks. By earning a certification, individuals can demonstrate their proficiency in handling big data and position themselves as experts in this rapidly growing field.

Education, and mastering data analytics through courses such as those at ACTE Institute, is essential to unleashing the power of big data analytics. With the right educational foundation, such as the one ACTE Institute provides, individuals can navigate the complex landscape of data analytics with confidence and efficiency. Whether starting with an introductory course or diving into advanced techniques, the world of data analytics offers endless opportunities for personal and professional growth. By staying ahead of the curve and continuously expanding their knowledge, individuals can become true masters of the field, leading businesses toward success in the era of big data.


How to Create an EMR Notebook in Amazon EMR Studio

How to Make an EMR Notebook

Amazon Web Services (AWS) has incorporated Amazon EMR Notebooks into Amazon EMR Studio Workspaces in the new Amazon EMR console. The integration aims to provide a single environment for creating notebooks and processing large-scale data. In the new console, notebooks are created with the “Create Workspace” button.

To create an EMR notebook with the previous (old) console, open the Amazon EMR console at the supplied web URL and follow the old console's procedure: select “Notebooks”, then “Create notebook”.

When creating a notebook, users enter a name and a description. The next critical step is attaching the notebook to an Amazon EMR cluster that will run its code.

There are two basic ways to associate a cluster:

Select an existing cluster

If a suitable EMR cluster is already running, users can click “Choose”, select it from the list, and click “Choose cluster” to confirm. According to the documentation, EMR Notebooks impose requirements on the cluster; these prerequisites, the supported EMR release versions, and security considerations are covered in dedicated sections.

Create a cluster

Users can instead choose “Create a cluster” to have Amazon EMR create a cluster specifically for the notebook, and can give that cluster a name. This workflow defaults to the latest supported EMR release version and essential applications such as Hadoop, Spark, and Livy; however, some settings, such as the release version and the pre-selected applications, may not be modifiable.

Users can customise the instances by choosing an EC2 instance type and entering the number of instances. One instance serves as the primary node and the rest as core nodes. The instance type determines the maximum number of notebooks that can attach to the cluster concurrently, subject to the documented limits.

During cluster setup, users also choose the EC2 instance profile and the EMR role, using either the default roles or custom ones; the console links to more information about these service roles. Users can optionally choose an EC2 key pair for SSH connections to the cluster instances.

With Amazon EMR release 5.30.0 or 6.1.0 and later, users can optionally enable auto-termination by selecting the checkbox that shuts the cluster down automatically after a period of inactivity. For both the primary instance and the notebook client instance, users can keep the default security groups or choose custom ones from the cluster's VPC.
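The cluster options above can also be expressed as a request to the EMR `RunJobFlow` API. The Python sketch below only builds the keyword arguments for boto3's `run_job_flow` and sends nothing. The cluster name, the instance type, and the use of the default role names `EMR_EC2_DefaultRole` and `EMR_DefaultRole` are assumptions for illustration, and the release check simply encodes the 5.30.0 / 6.1.0 thresholds stated in this post. Note that this covers only the cluster: per this post, the notebook itself cannot be created through the API or CLI.

```python
import re

# Minimum releases for idle auto-termination, as stated in the post (5.30.0 / 6.1.0).
_MIN_AUTO_TERMINATION = {5: (5, 30, 0), 6: (6, 1, 0)}


def supports_auto_termination(release_label):
    """Return True if an 'emr-X.Y.Z' label meets the stated auto-termination minimums."""
    match = re.fullmatch(r"emr-(\d+)\.(\d+)\.(\d+)", release_label)
    if not match:
        raise ValueError(f"unexpected release label: {release_label!r}")
    version = tuple(int(part) for part in match.groups())
    major = version[0]
    if major in _MIN_AUTO_TERMINATION:
        return version >= _MIN_AUTO_TERMINATION[major]
    # Assumption: majors after 6 support it; majors before 5 do not.
    return major > 6


def notebook_cluster_request(name, release_label, instance_type, instance_count,
                             key_name=None, idle_timeout_seconds=None):
    """Build keyword arguments for boto3's EMR run_job_flow call (nothing is sent)."""
    if instance_count < 1:
        raise ValueError("need at least the primary node")
    instances = {
        # The API still uses "Master"/"Slave" for the primary/core nodes.
        "MasterInstanceType": instance_type,
        "SlaveInstanceType": instance_type,
        # One instance becomes the primary node; the rest become core nodes.
        "InstanceCount": instance_count,
        # Keep the cluster running with no steps so notebooks can attach to it.
        "KeepJobFlowAliveWhenNoSteps": True,
    }
    if key_name:
        instances["Ec2KeyName"] = key_name  # Optional EC2 key pair for SSH.
    request = {
        "Name": name,
        "ReleaseLabel": release_label,
        # The applications the post lists for notebook clusters.
        "Applications": [{"Name": app} for app in ("Hadoop", "Spark", "Livy")],
        "Instances": instances,
        "JobFlowRole": "EMR_EC2_DefaultRole",  # Default EC2 instance profile.
        "ServiceRole": "EMR_DefaultRole",      # Default EMR service role.
    }
    if idle_timeout_seconds is not None:
        if not supports_auto_termination(release_label):
            raise ValueError("auto-termination needs EMR 5.30.0+ or 6.1.0+")
        request["AutoTerminationPolicy"] = {"IdleTimeout": idle_timeout_seconds}
    return request
```

To actually launch the cluster you would pass the result to `boto3.client("emr").run_job_flow(**request)`, which needs AWS credentials and creates billable resources; the sketch deliberately stops short of that call.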

Notebook creation covers notebook-specific settings as well as cluster settings. Choose a default or custom AWS service role for the notebook client instance. The Amazon S3 notebook location is where the notebook file is stored: users can pick their own bucket and folder, or let Amazon EMR create one if none exists. Within that location, Amazon EMR creates a folder named after the notebook ID and saves the notebook in it as NotebookName.ipynb.
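The storage layout described above (a folder named after the notebook ID, holding `NotebookName.ipynb`) can be sketched as a small path helper. This is a hypothetical Python illustration: the bucket, prefix, and notebook ID in the usage below are made up, and the helpers only build strings, without calling S3.

```python
def notebook_s3_uri(location, notebook_id, notebook_name):
    """Return the S3 URI where the notebook file is expected to be stored.

    Layout as described in the post: <location>/<notebook ID>/<notebook name>.ipynb
    """
    if not location.startswith("s3://"):
        raise ValueError("location must be an s3:// URI")
    return f"{location.rstrip('/')}/{notebook_id}/{notebook_name}.ipynb"


def split_s3_uri(uri):
    """Split an s3:// URI into (bucket, key), the form most S3 APIs expect."""
    bucket, _, key = uri[len("s3://"):].partition("/")
    return bucket, key


if __name__ == "__main__":
    uri = notebook_s3_uri("s3://my-bucket/notebooks/", "e-EXAMPLE123", "sales")
    print(uri, split_s3_uri(uri))
```

Splitting into bucket and key is useful because S3 SDK calls generally take those two values separately rather than a single URI.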

If an encrypted Amazon S3 location is used, the Service role for EMR Notebooks (EMR_Notebooks_DefaultRole) must be set up as a key user for the AWS KMS key used for encryption. To add key users to key policies, see AWS KMS documentation and support pages.
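For the encrypted-bucket case, the key policy needs a statement that names the notebook service role as a key user. The Python sketch below builds such a statement; it mirrors the usage permissions that the AWS KMS console grants to key users by default, assumes the role sits at the default IAM path, and uses a hypothetical `Sid` and account ID. Check the AWS KMS documentation before applying it to a real key.

```python
import json


def kms_key_user_statement(account_id, role_name="EMR_Notebooks_DefaultRole"):
    """Build a key-policy statement that lets a role use a KMS key as a key user."""
    if not (len(account_id) == 12 and account_id.isdigit()):
        raise ValueError("AWS account IDs are 12 digits")
    return {
        "Sid": "AllowEmrNotebooksUseOfTheKey",  # Hypothetical statement ID.
        "Effect": "Allow",
        # Assumes the role was created at the default IAM path ("/").
        "Principal": {"AWS": f"arn:aws:iam::{account_id}:role/{role_name}"},
        "Action": [
            "kms:Encrypt",
            "kms:Decrypt",
            "kms:ReEncrypt*",
            "kms:GenerateDataKey*",
            "kms:DescribeKey",
        ],
        # In a key policy, "*" refers to the key the policy is attached to.
        "Resource": "*",
    }


if __name__ == "__main__":
    print(json.dumps(kms_key_user_statement("123456789012"), indent=2))
```

The statement would be appended to the key policy's existing `Statement` list rather than replacing it, so the key's administrators keep their access.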

Users can also link a Git-based repository to the notebook: select “Git repository”, click “Choose repository”, and pick a repository from the list.

Finally, users can add tags to the notebook as key-value pairs. The documentation notes that Amazon EMR automatically applies a default tag whose key is creatorUserID and whose value is the user's IAM user ID. Because IAM policies can use this tag for access control, users should not change or delete it. After configuring all options, click “Create Notebook” to finish.

Note that these instructions apply to the old console; in the new console, EMR Notebooks appear as EMR Studio Workspaces. EMR Notebooks users need additional IAM role permissions to open existing notebooks as Workspaces or to create new ones with the “Create Workspace” option. Notebooks cannot be created with the Amazon EMR API or the AWS CLI.

Detailed creation instructions in some current documentation still follow the old console interface, but the transition signals AWS's intention to centralise notebook creation in EMR Studio.


The tech field is still growing strongly, and qualified workers are in high demand. Yet for recent graduates to land the most sought-after roles, your skills need to be up to scratch. The industry evolves constantly alongside the rapid growth of technology, so employees who want better-paying jobs and more security need to stay current with the latest innovations. With that in mind, here are five IT skills that students in particular may want to pay attention to.

1. Programming And Coding

Perhaps the number one skill most likely to land you a job in the IT industry straight out of university is the ability to program or code. IT departments all over the world are on the hunt for talented programmers who are well trained in the latest platforms and can code in multiple languages. Learning to program is an obvious and essential part of a successful IT career. There are many popular programming languages for a student to start with: choose your favorite and pick up a good programming book to learn to code. Although coding is seen as highly technical, even beginners can pick up introductory skills through online courses and tutorials. For example, IT courses at training.com.au often start with this basic, always-in-demand skill.

2. Database Administration

Big data is a term that's thrown around constantly these days, which is why there's such a need for people skilled in database administration. The job involves sifting through and analysing high volumes of data while setting up a logical database architecture to keep everything in check. Because data volumes grow rapidly every day, demand for qualified workers will only increase. If you have some experience wrangling data through your studies, you'll be well positioned to land a plum role in the organization of your choice.

Many beginners are tempted to jump straight to Hadoop, but it is a good idea to first understand basic databases and relational storage. The majority of corporate software still runs on relational databases such as Oracle and MySQL. Knowing Hadoop may help, but it may not be enough to excel in your job; managing big data takes a broader skill set.

3. UX Design

Web design today is all about the user experience, or UX. UX designers think about how the eventual user will interact with a system, whether it's an application or a website, and they analyse and test its efficiency to make it more user-friendly. It's a different way of looking at web design, and one that all designers increasingly need to be aware of. Perhaps you already have some web design experience from your foundation courses; adding UX courses to your CV can't hurt. Knowing about responsive design will also help: check out some inspiring responsive examples to keep up with the latest web design trends, and make sure you know how to use the tools of web design.

4. Mobile Expertise

App development, mobile marketing, and responsive web design are all hot trends this year, as most companies move to build a viable mobile presence. Businesses now need a strong mobile strategy to compete, and many are moving toward taking payments via smartphone or tablet. Mobile and cloud computing are also converging to shape the future of technology, with apps that can be used across numerous devices. A strong understanding of mobile technology and app design will put you ahead of the curve.

5. Networking

If you have some experience setting up and monitoring a network, you might want to update this skill set to land a job. IT departments in a variety of industries are looking for people who can handle IP routing, firewall filtering, and other basic networking tasks with ease. Most security tester and ethical hacker jobs require an in-depth understanding of networking concepts.

Naturally, needs will vary depending on the type of company you're thinking of working for. But for students keen on entering the workforce, upskilling in these IT-related areas will put you in a very good position. Can you think of a skill that we missed out?
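The networking skill mentioned in this post, handling IP routing and firewall filtering, rests on two simple lookups: a router forwards a packet along the most specific (longest-prefix) route that contains the destination, and a basic firewall applies the first rule that matches. The Python sketch below shows both with the standard `ipaddress` module; the routes, next-hop names, and rules are hypothetical, and real routers and firewalls are far more involved.

```python
import ipaddress


def build_table(routes):
    """Parse (prefix, next_hop) pairs into (network, next_hop) tuples."""
    return [(ipaddress.ip_network(prefix), hop) for prefix, hop in routes]


def lookup(table, address):
    """Return the next hop for the most specific route containing address, or None."""
    ip = ipaddress.ip_address(address)
    matches = [(net, hop) for net, hop in table if ip in net]
    if not matches:
        return None
    # Longest-prefix match: the route with the largest prefix length wins.
    return max(matches, key=lambda entry: entry[0].prefixlen)[1]


def filter_packet(rules, source):
    """Return the action of the first rule whose network contains source (default deny)."""
    ip = ipaddress.ip_address(source)
    for network, action in rules:
        if ip in ipaddress.ip_network(network):
            return action
    return "deny"


if __name__ == "__main__":
    table = build_table([("0.0.0.0/0", "isp"), ("10.0.0.0/8", "core"), ("10.1.0.0/16", "lab")])
    print(lookup(table, "10.1.2.3"), lookup(table, "8.8.8.8"))
    rules = [("192.168.1.0/24", "allow"), ("192.168.0.0/16", "deny")]
    print(filter_packet(rules, "192.168.1.7"))
```

Note the contrast in design: routing picks the best match among all routes, while the firewall stops at the first match, so rule order matters for the firewall but not for the routing table.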


Zoople Technologies: Your Launchpad into the World of Data Science in Kochi

In today's data-driven world, the ability to analyze, interpret, and leverage data is no longer a luxury but a necessity. Data science has emerged as a critical field, and demand for skilled data professionals is soaring. For people in Kochi who aspire to build a successful career in this dynamic domain, Zoople Technologies stands out as a prominent institute offering comprehensive data science training. This article explores the key aspects that make Zoople Technologies a compelling choice for your data science education in Kochi.

Comprehensive Data Science Curriculum Designed for Success:

Zoople Technologies offers a meticulously designed data science course that aims to equip learners with a holistic understanding of the data science lifecycle. Their curriculum goes beyond theoretical concepts, emphasizing practical application and industry-relevant skills. Key components of their data science training typically include:

Foundational Programming with Python: Python is the workhorse of data science, and Zoople Technologies likely provides a strong foundation in Python programming, covering essential libraries such as NumPy for numerical computation and Pandas for data manipulation and analysis.

Statistical Foundations: A solid understanding of statistics is crucial for data analysis. The course likely covers essential statistical concepts, probability, hypothesis testing, and different types of statistical analysis.

Data Wrangling and Preprocessing: Real-world data is often messy. Zoople Technologies likely trains students on techniques for cleaning, transforming, and preparing data for analysis, a critical step in any data science project.

Exploratory Data Analysis (EDA): Understanding the data through visualization and summary statistics is key. The course likely covers EDA techniques using libraries like Matplotlib and Seaborn to uncover patterns and insights.

Machine Learning Algorithms: The core of predictive modeling. Zoople Technologies' curriculum likely introduces various machine learning algorithms, including supervised learning (regression, classification), unsupervised learning (clustering, dimensionality reduction), and model evaluation metrics.

Big Data Technologies (Potentially): Depending on the course level and focus, Zoople Technologies might also introduce big data tools and frameworks like Hadoop and Spark to handle large datasets efficiently.

Database Management with SQL: The ability to extract and manage data from databases is essential. The course likely covers SQL for querying and manipulating data.

Data Visualization and Communication: Effectively communicating findings is crucial. Zoople Technologies likely emphasizes data visualization techniques and tools to present insights clearly and concisely.

Real-World Projects and Case Studies: To solidify learning, the course likely incorporates hands-on projects and case studies that simulate real-world data science challenges. This provides practical experience and builds a strong portfolio.
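To make the listed stages concrete, here is a minimal end-to-end sketch: SQL extraction, cleaning, summary statistics, and a simple regression model. It is not Zoople's course material. A course would likely use Pandas and NumPy for these steps; this version sticks to Python's standard library (`sqlite3`, `statistics`) so it runs without extra installs, and the table name, columns, and data are hypothetical.

```python
import sqlite3
import statistics


def load(rows):
    """Load (ad_spend, sales) rows into an in-memory SQLite table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (ad_spend REAL, sales REAL)")
    conn.executemany("INSERT INTO sales VALUES (?, ?)", rows)  # None becomes NULL.
    return conn


def clean_pairs(conn):
    """Wrangling step: use SQL to drop rows with missing values."""
    query = ("SELECT ad_spend, sales FROM sales "
             "WHERE ad_spend IS NOT NULL AND sales IS NOT NULL "
             "ORDER BY ad_spend")
    return conn.execute(query).fetchall()


def summarize(values):
    """EDA step: basic summary statistics (stdev needs at least two values)."""
    return {
        "n": len(values),
        "mean": statistics.fmean(values),
        "stdev": statistics.stdev(values),
    }


def fit_line(pairs):
    """Modeling step: ordinary least squares for sales = slope * ad_spend + intercept."""
    xs = [x for x, _ in pairs]
    ys = [y for _, y in pairs]
    mean_x, mean_y = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("ad_spend has no variation; slope is undefined")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in pairs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


if __name__ == "__main__":
    raw = [(1.0, 3.0), (2.0, 5.0), (None, 4.0), (3.0, 7.0), (4.0, None)]
    pairs = clean_pairs(load(raw))
    print(summarize([y for _, y in pairs]))
    print(fit_line(pairs))
```

Keeping each stage in its own function mirrors the lifecycle the curriculum describes: each step can be inspected, tested, or swapped (for example, replacing `fit_line` with a library model) independently.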

Experienced Instructors and a Supportive Learning Environment:

While specific instructor profiles aren't publicly detailed, Zoople Technologies emphasizes having experienced trainers, and positive student reviews often highlight the faculty's helpfulness and knowledge. A supportive learning environment is crucial for grasping complex data science concepts, and Zoople Technologies aims to provide just that. Its focus on practical learning likely involves interactive sessions and opportunities for students to clarify doubts.

Emphasis on Practical Skills and Industry Relevance:

Zoople Technologies appears to prioritize practical skills development, recognizing that the data science field demands hands-on experience. Their curriculum is likely designed to bridge the gap between theoretical knowledge and real-world application. The inclusion of projects and case studies ensures that students gain the confidence and practical abilities sought by employers.

Career Support and Placement Assistance: