#important unix commands for oracle dba

Useful Linux commands for ORACLE DBA

Here are some useful Linux commands for the DBA.

pwd: show the present working directory.
$pwd
/home/oracle

ls: list all files and directories at a given location; if no location is given, it lists the current directory.
$ls
$ls /u01
$ls -l (list file details)
$ls -a (show hidden files)

cd: change (switch) directory.
$cd…

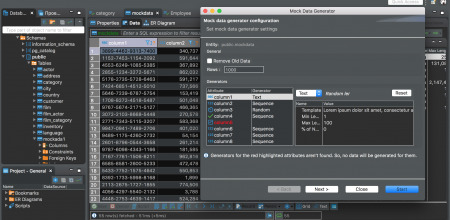

Sql Tools For Mac

Download SQL Server Data Tools (SSDT) for Visual Studio. SSDT is a modern development tool for building SQL Server relational databases, databases in Azure SQL, and Analysis Services (AS) data models.

SQLite's code is in the public domain, which makes it free for commercial or private use.

I use MySQL GUI clients mostly for SQL programming, and I often keep SQL in files. My current favorite is DBVisualizer, which is not free.

Oracle SQL Developer is a free development environment that simplifies the management of Oracle Database in both traditional and Cloud deployments. It offers development of your PL/SQL applications, query tools, a DBA console, a reports interface, and more.

Full MySQL Support

Sequel Pro is a fast, easy-to-use Mac database management application for working with MySQL databases.

Perfect Web Development Companion

Whether you are a Mac Web Developer, Programmer or Software Developer your workflow will be streamlined with a native Mac OS X Application!

Flexible Connectivity

Sequel Pro gives you direct access to your MySQL Databases on local and remote servers.

Easy Installation

Simply download, and connect to your database. Use these guides to get started:

Get Involved

Sequel Pro is open source and built by people like you. We’d love your input – whether you’ve found a bug, have a suggestion or want to contribute some code.

Get Started

New to Sequel Pro and need some help getting started? No problem.

APPLIES TO: SQL Server Azure SQL Database Azure Synapse Analytics (SQL Data Warehouse) Parallel Data Warehouse

SQL Server Data Tools (SSDT) is a modern development tool for building SQL Server relational databases, databases in Azure SQL, Analysis Services (AS) data models, Integration Services (IS) packages, and Reporting Services (RS) reports. With SSDT, you can design and deploy any SQL Server content type with the same ease as you would develop an application in Visual Studio.

SSDT for Visual Studio 2019

Changes in SSDT for Visual Studio 2019

The core SSDT functionality to create database projects has remained integral to Visual Studio.

With Visual Studio 2019, the required functionality to enable Analysis Services, Integration Services, and Reporting Services projects has moved into the respective Visual Studio (VSIX) extensions only.

Note

There's no SSDT standalone installer for Visual Studio 2019.

Install SSDT with Visual Studio 2019

If Visual Studio 2019 is already installed, you can edit the list of workloads to include SSDT. If you don’t have Visual Studio 2019 installed, then you can download and install Visual Studio 2019 Community.

To modify the installed Visual Studio workloads to include SSDT, use the Visual Studio Installer.

Launch the Visual Studio Installer. In the Windows Start menu, you can search for 'installer'.

In the installer, select the edition of Visual Studio that you want to add SSDT to, and then choose Modify.

Select SQL Server Data Tools under Data storage and processing in the list of workloads.

For Analysis Services, Integration Services, or Reporting Services projects, you can install the appropriate extensions from within Visual Studio with Extensions > Manage Extensions or from the Marketplace.

SSDT for Visual Studio 2017

Changes in SSDT for Visual Studio 2017

Starting with Visual Studio 2017, the functionality of creating Database Projects has been integrated into the Visual Studio installation. There's no need to install the SSDT standalone installer for the core SSDT experience.

Now to create Analysis Services, Integration Services, or Reporting Services projects, you still need the SSDT standalone installer.

Install SSDT with Visual Studio 2017

To install SSDT during Visual Studio installation, select the Data storage and processing workload, and then select SQL Server Data Tools.

If Visual Studio is already installed, use the Visual Studio Installer to modify the installed workloads to include SSDT.

Launch the Visual Studio Installer. In the Windows Start menu, you can search for 'installer'.

In the installer, select the edition of Visual Studio that you want to add SSDT to, and then choose Modify.

Select SQL Server Data Tools under Data storage and processing in the list of workloads.

Install Analysis Services, Integration Services, and Reporting Services tools

To install Analysis Services, Integration Services, and Reporting Services project support, run the SSDT standalone installer.

The installer lists available Visual Studio instances to add SSDT tools. If Visual Studio isn't already installed, selecting Install a new SQL Server Data Tools instance installs SSDT with a minimal version of Visual Studio, but for the best experience, we recommend using SSDT with the latest version of Visual Studio.

SSDT for VS 2017 (standalone installer)

Important

Before installing SSDT for Visual Studio 2017 (15.9.6), uninstall Analysis Services Projects and Reporting Services Projects extensions if they are already installed, and close all VS instances.

The inbox component Power Query Source for SQL Server 2017 has been removed. Power Query Source for SQL Server 2017 and 2019 is now provided as an out-of-box component that is downloaded separately.

To design packages that use the Oracle and Teradata connectors and target a SQL Server version earlier than SQL 2019, you need to install the corresponding version of Microsoft Connector for Oracle and Teradata by Attunity in addition to the Microsoft Oracle Connector for SQL 2019 and the Microsoft Teradata Connector for SQL 2019.

Release Notes

For a complete list of changes, see Release notes for SQL Server Data Tools (SSDT).

System requirements

SSDT for Visual Studio 2017 has the same system requirements as Visual Studio.

Available Languages - SSDT for VS 2017

This release of SSDT for VS 2017 can be installed in the following languages:

Considerations and limitations

You can’t install the community version offline

To upgrade SSDT, you need to follow the same path used to install SSDT. For example, if you added SSDT using the VSIX extensions, then you must upgrade via the VSIX extensions. If you installed SSDT via a separate install, then you need to upgrade using that method.

Offline install

To install SSDT when you’re not connected to the internet, follow the steps in this section. For more information, see Create a network installation of Visual Studio 2017.

First, complete the following steps while online:

Download the SSDT standalone installer.

Download vs_sql.exe.

While still online, execute one of the following commands to download all the files required for installing offline. The --layout option is the key: it downloads the actual files for the offline installation. Replace <filepath> with the actual layouts path where the files should be saved.

For a specific language, pass the locale: vs_sql.exe --layout c:\<filepath> --lang en-us (a single language is ~1 GB).

For all languages, omit the --lang argument: vs_sql.exe --layout c:\<filepath> (all languages are ~3.9 GB).
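The two download variants differ only in whether --lang is passed. As a minimal sketch, the argument list can be assembled like this; the helper name `layout_command` and the path `c:\ssdt_layout` are made up for illustration, and only the `--layout` and `--lang` options mentioned above are used.

```python
from typing import List, Optional


def layout_command(layout_path: str, lang: Optional[str] = None) -> List[str]:
    """Build the vs_sql.exe argument list for an offline layout download.

    layout_path: folder that will receive the layout (hypothetical example path).
    lang: a locale such as "en-us"; None means download all languages.
    """
    args = ["vs_sql.exe", "--layout", layout_path]
    if lang:
        # A single language keeps the download smaller (~1 GB per the text above).
        args += ["--lang", lang]
    return args


if __name__ == "__main__":
    # Hypothetical layout folder, for illustration only.
    print(" ".join(layout_command(r"c:\ssdt_layout", "en-us")))
    print(" ".join(layout_command(r"c:\ssdt_layout")))
```

On a Windows machine with vs_sql.exe in the current folder, the returned list could be handed to `subprocess.run`; the sketch only prints the command lines so it runs anywhere.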

After completing the previous steps, the following steps can be done offline:

Run vs_setup.exe --NoWeb to install the VS2017 Shell and SQL Server Data Project.

From the layouts folder, run SSDT-Setup-ENU.exe /install and select SSIS/SSRS/SSAS. For an unattended installation, run SSDT-Setup-ENU.exe /INSTALLALL[:vsinstances] /passive.

For available options, run SSDT-Setup-ENU.exe /help

Note

If using a full version of Visual Studio 2017, create an offline folder for SSDT only, and run SSDT-Setup-ENU.exe from this newly created folder (don’t add SSDT to another Visual Studio 2017 offline layout). If you add the SSDT layout to an existing Visual Studio offline layout, the necessary runtime (.exe) components are not created there.

Supported SQL versions

| Project Templates | SQL Platforms Supported |
| --- | --- |
| Relational databases | SQL Server 2005* - SQL Server 2017 (use SSDT 17.x or SSDT for Visual Studio 2017 to connect to SQL Server on Linux); Azure SQL Database; Azure Synapse Analytics (supports queries only; database projects aren't yet supported) |
| Analysis Services models, Reporting Services reports | SQL Server 2008 - SQL Server 2017 |
| Integration Services packages | SQL Server 2012 - SQL Server 2019 |

\* SQL Server 2005 support is deprecated; move to an officially supported SQL version.

DacFx

SSDT for Visual Studio 2015 and 2017 both use DacFx 17.4.1: Download Data-Tier Application Framework (DacFx) 17.4.1.

Previous versions

To download and install SSDT for Visual Studio 2015, or an older version of SSDT, see Previous releases of SQL Server Data Tools (SSDT and SSDT-BI).

Next steps

After installing SSDT, work through these tutorials to learn how to create databases, packages, data models, and reports using SSDT.

DBA interview Question and Answer part 23

What is the basic difference between V$ and GV$ views, or between V$ and V_$ views?

The V_$ views (V$ is the public synonym for the V_$ views) are called dynamic performance views. They are continuously updated while a database is open and in use, and their contents relate primarily to performance.

    SELECT owner, object_name, object_type FROM dba_objects WHERE object_name LIKE '%SESSION' AND object_name LIKE 'V%';

    OWNER OBJECT_NAME       OBJECT_TYPE
    ----- ----------------- -----------
    SYS   V_$HS_SESSION     VIEW
    SYS   V_$LOGMNR_SESSION VIEW
    SYS   V_$PX_SESSION     VIEW
    SYS   V_$SESSION        VIEW

GV$ views, in contrast, are global dynamic performance views. They retrieve information about all started instances accessing one RAC database, whereas the V$ views retrieve information about the local instance only. GV$ views have an additional column, INST_ID, which identifies the instance in a RAC environment. GV$ views use a special form of parallel execution: the parallel execution coordinator runs on the instance that the client connects to, and one slave is allocated on each instance to query the underlying V$ view for that instance.

What is the purpose of the default tablespace in an Oracle database?

Each user should have a default tablespace. When a user creates a schema object and specifies no tablespace to contain it, Oracle stores the object in the user's default tablespace. The default setting for the default tablespace of all users is the SYSTEM tablespace. If a user is likely to create any type of object, assign that user a default tablespace explicitly. Note: using a tablespace other than SYSTEM reduces contention between data dictionary objects and user objects for the same datafiles, so it is not advisable to store user data in the SYSTEM tablespace.

    SELECT USERNAME, DEFAULT_TABLESPACE FROM DBA_USERS WHERE USERNAME='EDSS';
    SQL> Alter user EDSS default tablespace XYZ;
    SELECT USERNAME, DEFAULT_TABLESPACE FROM DBA_USERS WHERE USERNAME='EDSS';

Changing a user's default tablespace does not move existing objects. Objects that were created earlier without an explicit tablespace stay in the previous default tablespace (tablespace A), and new objects are created in the new default tablespace (tablespace B). In the example above, the existing objects for EDSS stay in the ORAJDA_DB tablespace and any new object is stored in the ORAJDA_DB1 tablespace.

What is the Identity Columns feature in Oracle 12c?

Before Oracle 12c there was no direct equivalent of AutoNumber or Identity functionality; when needed, it was implemented with a combination of sequences and triggers. Oracle 12c introduces the ability to define an identity clause for a table column of a numeric type.

    GENERATED [ ALWAYS | BY DEFAULT [ ON NULL ] ] AS IDENTITY

Using ALWAYS forces the use of the identity. Using BY DEFAULT lets the identity be used when the column isn't referenced in the insert statement. Using BY DEFAULT ON NULL lets the identity be used even when the identity column is referenced and a NULL value is specified.

How do you find which user truncated a table?

If LogMiner is already configured for your database, there is nothing more to set up: query the v$logmnr_contents view to find the information. Otherwise, you first need to configure LogMiner for the database.

Why use a materialized view instead of a table?

Materialized views are used to increase query performance because they store the results of a query. For reporting, they should be used instead of a table for faster execution.

How does a session communicate with the server process?

Server processes execute the SQL received from user processes.

Which SGA memory structure cannot be resized dynamically after instance startup?

The log buffer.

Which activity generates the least UNDO data?

Insert.

What happens when a user issues a COMMIT?

LGWR flushes the log buffer to the online redo log.

When does the SMON process perform ICR?

Only at startup after a shutdown abort.

What is the purpose of a synonym in Oracle?

A synonym lets an application function without modification regardless of which user owns the table or view, and regardless of which database holds it. It masks the real name and owner of an object and provides location transparency for tables, views, or program units of a remote database.

    CREATE SYNONYM pay_payment_master FOR HRMS.pay_payment_master;
    CREATE PUBLIC SYNONYM pay_payment_master FOR [email protected];

How many memory layers are in the shared pool?

The shared pool of the SGA has three layers: the library cache, which contains parsed SQL statements, cursor information, execution plans, and so on; the dictionary cache, which caches user account information, privilege information, and datafile, segment, and extent information; and buffers for parallel execution messages and control structures.

What is the cache hit ratio, and what impact does it have on performance?

It measures how often a requested block is found in the buffer cache without requiring disk access. The ratio is computed from the view V$SYSSTAT. The buffer cache hit ratio can be used to verify the physical I/O predicted by V$DB_CACHE_ADVICE.

    SELECT name, value FROM v$sysstat WHERE name IN ('db block gets', 'consistent gets', 'physical reads');

The cache hit ratio is calculated as follows:

    Hit ratio = 1 - (physical reads / (db block gets + consistent gets))

If the cache hit ratio goes below 90%, increase the initialization parameter DB_CACHE_SIZE.

Which environment variables are critical to run OUI?

ORACLE_BASE, ORACLE_HOME, and ORACLE_SID.

What is the Cluster Verification Utility in a RAC environment?

The Cluster Verification Utility (CVU) is a validation tool that you can use to check all the important components that need to be verified at different stages of deployment in a RAC environment.

How do you identify the voting disk in a RAC environment, and why is there always an odd number of them?

Every node is interconnected with the others and pings the voting disks in the cluster to show that it is alive. With an even number of voting disks, a split can leave both nodes counted as survivors, which creates multiple brains in the same cluster. With an odd number, only one node can reach the greater count of voting disks, and the cluster is saved from split-brain syndrome. You can identify the voting disks with the following command:

    #crsctl query css votedisk

What are the components of the physical database structure? What is the use of control files?

An Oracle database consists of three main categories of files: one or more datafiles, two or more redo log files, and one or more control files. When an instance of an Oracle database is started, its control file is used to identify the database and redo log files that must be opened for database operation to proceed. It is also used in database recovery.

What is the difference between database refreshing and cloning?

Refreshing means that the data in the target environment is synchronized with a copy of production. This can be done by restoring from a backup of the production database. Cloning means that an identical copy of production is taken and restored to the target environment.

When do we need to clone or refresh the database?

There are a few scenarios in which cloning should be performed:
1. Creating a new environment with the same or a different DBNAME.
2. Applying patches or other major configuration changes, where a copy of the environment is needed to test the effect of the change.
3. In a software development environment, before any major development effort takes place, it is good practice to re-clone the dev and test environments to keep them in sync.

A refresh is enough only when you are sure that the environments are already in sync and you only need to apply the latest data changes.

What is the OERR utility?

The OERR (Oracle Error) utility is provided only with Oracle databases on UNIX platforms. OERR is not an executable but a shell script that extracts error messages, with suggested actions, from the installed standard Oracle message files. It is very useful because it can extract OS-specific errors that are not in the generic Error Messages and Codes Manual.

What do you mean by logfile mirroring?

Keeping copies of a redo log file is called mirroring. It is done by grouping log files together, so that LGWR automatically writes to all the members of the current online redo log group. If a group fails, the database automatically switches over to the next group. Mirroring diminishes performance.

What is the use of the large pool? In which cases do you need it?

You need to set the large pool if you use multi-threaded server (MTS) or RMAN backups. It prevents RMAN and MTS from competing with other subsystems for the same memory. RMAN uses the large pool for backup and restore when you set the DBWR_IO_SLAVES or BACKUP_TAPE_IO_SLAVES parameters to simulate asynchronous I/O. If neither parameter is enabled, Oracle allocates backup buffers from local process memory rather than shared memory, and the large pool is not used.

What will be your first steps if you get the message "Application is running slow"?

Gather statistics (a Statspack or AWR report) to find the top 5 wait events, or run the top command in Linux to see CPU usage. Then run vmstat, sar, and prstat to get more information on CPU and memory usage and possible blocking. If the cause is poorly written statements, run EXPLAIN PLAN on them and see whether a new index or a hint brings the cost of the SQL down.

How do you add more or subsequent block size specifications?

Re-create the control file to specify the new block size for specific datafiles, or take the database offline and bring it back online with a new block size specification.
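The cache hit ratio formula from the answer above can be worked through with a few numbers. This is a minimal sketch: the function name and the sample statistic values are made up for illustration, and in practice the three inputs would come from the corresponding rows of V$SYSSTAT.

```python
def buffer_cache_hit_ratio(physical_reads: int,
                           db_block_gets: int,
                           consistent_gets: int) -> float:
    """Hit ratio = 1 - (physical reads / (db block gets + consistent gets))."""
    logical_reads = db_block_gets + consistent_gets
    if logical_reads == 0:
        # No logical reads recorded yet, so the ratio is undefined.
        raise ValueError("db block gets + consistent gets must be greater than zero")
    return 1 - physical_reads / logical_reads


# Sample values, invented for this example (not taken from a real system).
ratio = buffer_cache_hit_ratio(physical_reads=12_000,
                               db_block_gets=40_000,
                               consistent_gets=160_000)
print(f"hit ratio: {ratio:.2%}")  # 1 - 12000/200000 = 94.00%

# The 90% threshold is the one quoted in the answer above.
if ratio < 0.90:
    print("below 90%: consider increasing DB_CACHE_SIZE")
```

With these sample values the ratio is 94%, so the 90% rule of thumb would not trigger.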

DBA Daily/Weekly/Monthly or Quarterly Checklist

In response of some fresher DBA I am giving quick checklist for a production DBA. Here I am including reference of some of the script which I already posted as you know each DBA have its own scripts depending on database environment too. Please have a look on into daily, weekly and quarterly checklist. Note: I am not responsible of any of the script is harming your database so before using directly on Prod DB. Please check it on Test environment first and make sure then go for it.Please send your corrections, suggestions, and feedback to me. I may credit your contribution. Thank you.------------------------------------------------------------------------------------------------------------------------Daily Checks:Verify all database, instances, Listener are up, every 30 Min. Verify the status of daily scheduled jobs/daily backups in the morning very first hour.Verify the success of archive log backups, based on the backup interval.Check the space usage of the archive log file system for both primary and standby DB. Check the space usage and verify all the tablespace usage is below critical level once in a day. Verify Rollback segments.Check the database performance, periodic basis usually in the morning very first hour after the night shift schedule backup has been completed.Check the sync between the primary database and standby database, every 20 min. Make a habit to check out the new alert.log entry hourly specially if getting any error.Check the system performance, periodic basis.Check for the invalid objectsCheck out the audit files for any suspicious activities. Identify bad growth projections.Clear the trace files in the udump and bdump directory as per the policy.Verify all the monitoring agent, including OEM agent and third party monitoring agents.Make a habit to read DBA Manual.Weekly Checks:Perform level 0 or cold backup as per the backup policy. Note the backup policy can be changed as per the requirement. 
Don’t forget to check out the space on disk or tape before performing level 0 or cold backup.Perform Export backups of important tables.Check the database statistics collection. On some databases this needs to be done every day depending upon the requirement.Approve or plan any scheduled changes for the week.Verify the schedule jobs and clear the output directory. You can also automate it.Look for the object that break rule. Look for security policy violation. Archive the alert logs (if possible) to reference the similar kind of error in future. Visit the home page of key vendors.Monthly or Quarterly Checks:Verify the accuracy of backups by creating test databases.Checks for the critical patch updates from oracle make sure that your systems are in compliance with CPU patches.Checkout the harmful growth rate. Review Fragmentation. Look for I/O Contention. Perform Tuning and Database Maintenance.Verify the accuracy of the DR mechanism by performing a database switch over test. This can be done once in six months based on the business requirements.------------------------------------------------------------------------------------------------------------------------------------------------------- Below is the brief description about some of the important concept including important SQL scripts. You can find more scripts on my different post by using blog search option.Verify all instances are up: Make sure the database is available. Log into each instance and run daily reports or test scripts. You can also automate this procedure but it is better do it manually. Optional implementation: use Oracle Enterprise Manager's 'probe' event.Verify DBSNMP is running:Log on to each managed machine to check for the 'dbsnmp' process. For Unix: at the command line, type ps –ef | grep dbsnmp. There should be two dbsnmp processes running. 
If not, restart DBSNMP.Verify success of Daily Scheduled Job:Each morning one of your prime tasks is to check backup log, backup drive where your actual backup is stored to verify the night backup. Verify success of database archiving to tape or disk:In the next subsequent work check the location where daily archiving stored. Verify the archive backup on disk or tape.Verify enough resources for acceptable performance:For each instance, verify that enough free space exists in each tablespace to handle the day’s expected growth. As of , the minimum free space for : . When incoming data is stable, and average daily growth can be calculated, then the minimum free space should be at least days’ data growth. Go to each instance, run query to check free mb in tablespaces/datafiles. Compare to the minimum free MB for that tablespace. Note any low-space conditions and correct it.Verify rollback segment:Status should be ONLINE, not OFFLINE or FULL, except in some cases you may have a special rollback segment for large batch jobs whose normal status is OFFLINE. Optional: each database may have a list of rollback segment names and their expected statuses.For current status of each ONLINE or FULL rollback segment (by ID not by name), query on V$ROLLSTAT. For storage parameters and names of ALL rollback segment, query on DBA_ROLLBACK_SEGS. That view’s STATUS field is less accurate than V$ROLLSTAT, however, as it lacks the PENDING OFFLINE and FULL statuses, showing these as OFFLINE and ONLINE respectively.Look for any new alert log entries:Connect to each managed system. Use 'telnet' or comparable program. For each managed instance, go to the background dump destination, usually $ORACLE_BASE//bdump. Make sure to look under each managed database's SID. At the prompt, use the Unix ‘tail’ command to see the alert_.log, or otherwise examine the most recent entries in the file. 
If any ORA-errors have appeared since the previous time you looked, note them in the Database Recovery Log and investigate each one. The recovery log is in .Identify bad growth projections.Look for segments in the database that are running out of resources (e.g. extents) or growing at an excessive rate. The storage parameters of these segments may need to be adjusted. For example, if any object reached 200 as the number of current extents, upgrade the max_extents to unlimited. For that run query to gather daily sizing information, check current extents, current table sizing information, current index sizing information and find growth trendsIdentify space-bound objects:Space-bound objects’ next_extents are bigger than the largest extent that the tablespace can offer. Space-bound objects can harm database operation. If we get such object, first need to investigate the situation. Then we can use ALTER TABLESPACE COALESCE. Or add another datafile. Run spacebound.sql. If all is well, zero rows will be returned.Processes to review contention for CPU, memory, network or disk resources:To check CPU utilization, go to =>system metrics=>CPU utilization page. 400 is the maximum CPU utilization because there are 4 CPUs on phxdev and phxprd machine. We need to investigate if CPU utilization keeps above 350 for a while.Make a habit to Read DBA Manual:Nothing is more valuable in the long run than that the DBA be as widely experienced, and as widely read, as possible. Readingsshould include DBA manuals, trade journals, and possibly newsgroups or mailing lists.Look for objects that break rules:For each object-creation policy (naming convention, storage parameters, etc.) have an automated check to verify that the policy is being followed. Every object in a given tablespace should have the exact same size for NEXT_EXTENT, which should match the tablespace default for NEXT_EXTENT. 
As of 10/03/2012, default NEXT_EXTENT for DATAHI is 1 gig (1048576 bytes), DATALO is 500 mb (524288 bytes), and INDEXES is 256 mb (262144 bytes). To check settings for NEXT_EXTENT, run nextext.sql. To check existing extents, run existext.sqlAll tables should have unique primary keys:To check missing PK, run no_pk.sql. To check disabled PK, run disPK.sql. All primary key indexes should be unique. Run nonuPK.sql to check. All indexes should use INDEXES tablespace. Run mkrebuild_idx.sql. Schemas should look identical between environments, especially test and production. To check data type consistency, run datatype.sql. To check other object consistency, run obj_coord.sql.Look for security policy violations:Look in SQL*Net logs for errors, issues, Client side logs, Server side logs and Archive all Alert Logs to historyVisit home pages of key vendors:For new update information made a habit to visit home pages of key vendors such as: Oracle Corporation: http://www.oracle.com, http://technet.oracle.com, http://www.oracle.com/support, http://www.oramag.com Quest Software: http://www.quests.comSun Microsystems: http://www.sun.com Look for Harmful Growth Rates:Review changes in segment growth when compared to previous reports to identify segments with a harmful growth rate. Review Tuning Opportunities and Perform Tuning Maintainance:Review common Oracle tuning points such as cache hit ratio, latch contention, and other points dealing with memory management. Compare with past reports to identify harmful trends or determine impact of recent tuning adjustments. Make the adjustments necessary to avoid contention for system resources. This may include scheduled down time or request for additional resources.Look for I/O Contention:Review database file activity. Compare to past output to identify trends that could lead to possible contention.Review Fragmentation:Investigate fragmentation (e.g. 
row chaining, etc.), Project Performance into the FutureCompare reports on CPU, memory, network, and disk utilization from both Oracle and the operating system to identify trends that could lead to contention for any one of these resources in the near future. Compare performance trends to Service Level Agreement to see when the system will go out of bounds. -------------------------------------------------------------------------------------------- Useful Scripts: -------------------------------------------------------------------------------------------- Script: To check free, pct_free, and allocated space within a tablespace SELECT tablespace_name, largest_free_chunk, nr_free_chunks, sum_alloc_blocks, sum_free_blocks , to_char(100*sum_free_blocks/sum_alloc_blocks, '09.99') || '%' AS pct_free FROM ( SELECT tablespace_name, sum(blocks) AS sum_alloc_blocks FROM dba_data_files GROUP BY tablespace_name), ( SELECT tablespace_name AS fs_ts_name, max(blocks) AS largest_free_chunk , count(blocks) AS nr_free_chunks, sum(blocks) AS sum_free_blocks FROM dba_free_space GROUP BY tablespace_name ) WHERE tablespace_name = fs_ts_name; Script: To analyze tables and indexes BEGIN dbms_utility.analyze_schema ( '&OWNER', 'ESTIMATE', NULL, 5 ) ; END ; Script: To find out any object reaching SELECT e.owner, e.segment_type , e.segment_name , count(*) as nr_extents , s.max_extents , to_char ( sum ( e.bytes ) / ( 1024 * 1024 ) , '999,999.90') as MB FROM dba_extents e , dba_segments s WHERE e.segment_name = s.segment_name GROUP BY e.owner, e.segment_type , e.segment_name , s.max_extents HAVING count(*) > &THRESHOLD OR ( ( s.max_extents - count(*) ) < &&THRESHOLD ) ORDER BY count(*) desc; The above query will find out any object reaching level extents, and then you have to manually upgrade it to allow unlimited max_extents (thus only objects we expect to be big are allowed to become big. Script: To identify space-bound objects. If all is well, no rows are returned. 
SELECT a.table_name, a.next_extent, a.tablespace_name FROM all_tables a,( SELECT tablespace_name, max(bytes) as big_chunk FROM dba_free_space GROUP BY tablespace_name ) f WHERE f.tablespace_name = a.tablespace_name AND a.next_extent > f.big_chunk; Run the above query to find the space bound object . If all is well no rows are returned if found something then look at the value of next extent. Check to find out what happened then use coalesce (alter tablespace coalesce;). and finally, add another datafile to the tablespace if needed. Script: To find tables that don't match the tablespace default for NEXT extent. SELECT segment_name, segment_type, ds.next_extent as Actual_Next , dt.tablespace_name, dt.next_extent as Default_Next FROM dba_tablespaces dt, dba_segments ds WHERE dt.tablespace_name = ds.tablespace_name AND dt.next_extent !=ds.next_extent AND ds.owner = UPPER ( '&OWNER' ) ORDER BY tablespace_name, segment_type, segment_name; Script: To check existing extents SELECT segment_name, segment_type, count(*) as nr_exts , sum ( DECODE ( dx.bytes,dt.next_extent,0,1) ) as nr_illsized_exts , dt.tablespace_name, dt.next_extent as dflt_ext_size FROM dba_tablespaces dt, dba_extents dx WHERE dt.tablespace_name = dx.tablespace_name AND dx.owner = '&OWNER' GROUP BY segment_name, segment_type, dt.tablespace_name, dt.next_extent; The above query will find how many of each object's extents differ in size from the tablespace's default size. If it shows a lot of different sized extents, your free space is likely to become fragmented. If so, need to reorganize this tablespace. 
Script: To find tables without PK constraint

    SELECT table_name FROM all_tables WHERE owner = '&OWNER'
    MINUS
    SELECT table_name FROM all_constraints
    WHERE owner = '&&OWNER' AND constraint_type = 'P';

Script: To find out which primary keys are disabled

    SELECT owner, constraint_name, table_name, status
    FROM all_constraints
    WHERE owner = '&OWNER' AND status = 'DISABLED' AND constraint_type = 'P';

Script: To find tables with nonunique PK indexes.

    SELECT index_name, table_name, uniqueness
    FROM all_indexes
    WHERE index_name LIKE '&PKNAME%'
      AND owner = '&OWNER'
      AND uniqueness = 'NONUNIQUE';

    SELECT c.constraint_name, i.tablespace_name, i.uniqueness
    FROM all_constraints c, all_indexes i
    WHERE c.owner = UPPER ( '&OWNER' )
      AND i.uniqueness = 'NONUNIQUE'
      AND c.constraint_type = 'P'
      AND i.index_name = c.constraint_name;

Script: To check datatype consistency between two environments

    SELECT table_name, column_name, data_type, data_length, data_precision, data_scale, nullable
    FROM all_tab_columns                 -- first environment
    WHERE owner = '&OWNER'
    MINUS
    SELECT table_name, column_name, data_type, data_length, data_precision, data_scale, nullable
    FROM all_tab_columns@&my_db_link     -- second environment
    WHERE owner = '&OWNER2'
    ORDER BY table_name, column_name;

Script: To find out any difference in objects between two instances

    SELECT object_name, object_type FROM user_objects
    MINUS
    SELECT object_name, object_type FROM user_objects@&my_db_link;

For more scripts and daily DBA or monitoring tasks, search my other posts. For important monitoring scripts, follow the link below: http://shahiddba.blogspot.com/2012/04/oracle-dba-daily-checklist.html


[Udemy] Oracle DBA 11g/12c - Database Administration for Junior DBA

Learn to become an Oracle Database Administrator (DBA) in 6 weeks and get a well-paid job as a Junior DBA.

What Will I Learn?

- Final goal: get a job as an Oracle Database Administrator (Oracle DBA).
- As an Oracle Database Administrator (Oracle DBA), you would be able to understand the database architecture, which will help you perform your DBA duties with better understanding.
- As an Oracle Database Administrator (Oracle DBA), you would be able to install the necessary Oracle software and database.
- As an Oracle Database Administrator (Oracle DBA), you would be able to administer user accounts in the database.
- As an Oracle Database Administrator (Oracle DBA), you would be able to manage tablespaces to provide the required space for the data.
- As an Oracle Database Administrator (Oracle DBA), you would be able to perform backup and recovery as needed.
- As an Oracle Database Administrator (Oracle DBA), you would be able to diagnose problems and, if required, work with Oracle Support.
- As an Oracle Database Administrator (Oracle DBA), you would be able to configure the listeners that users connect through.

Requirements

- Students should have basic SQL knowledge.
- Students should know the basic UNIX/Linux commands.
- An open mind to understand the architecture before jumping into demos.

Description

The ‘Oracle 11g/12c DBA’ course follows a step-by-step methodology in introducing concepts and demos so that students can learn with ease. If you want to become an Oracle Database Administrator (Oracle DBA), this course is right for you!

In almost every organization, you will find Database Administrators (DBAs) who maintain the organization’s database. Becoming a good DBA depends on your knowledge of the overall architecture of Oracle Database.

In this course, I have covered both Oracle Database 11g and Oracle Database 12c so that you get to know the differences and can work in organizations that are still on 11g.

The step-by-step methodology followed is:

- Learn the fundamentals of databases to set the stage for the Oracle architecture.
- Understand the process and memory architecture (this is important, as understanding it will help you solve a lot of critical performance issues).
- Install Oracle Database 11g and 12c (manual method and using DBCA).
- Tablespace management to manage the space that stores the data.
- UNDO tablespace management to manage undo space.
- REDO log file management to manage redo space.
- Manage users and security.
- Networking (configuring listeners and TNS entries).
- Understand various data dictionary tables and views.
- Diagnose issues using diagnostic data and generate packages for Oracle Support.
- Backup and recovery (user managed).
- Backup and recovery (RMAN).
- Materialized views.
- Table partitioning.
- Project work.

Let’s start, and I will take you step by step on your journey into the DBA world!

Who is the target audience?

- Students who want to start their career in database administration.

source https://ttorial.com/oracle-dba-11g12c-database-administration-junior-dba

source https://ttorialcom.tumblr.com/post/176920098178
