#improved project data recalculations


Text

The Mechanism by Which Large Families Accelerate Poverty: The Economic Correlation Between Educational Investment and Birth Rates

Discussions of poverty often judge the poor against a standard of "rational behavior" borrowed from developed countries. The case of India shows why that standard misleads: when income rises, households tend to buy expensive, low-nutrition food rather than stockpile affordable staples, and they save by building houses piece by piece, leaving them unfinished for long periods. Both habits are deeply influenced by cultural and psychological factors. This article uses data to examine three issues: deficiencies in the government healthcare system (a 56% absenteeism rate in clinics), Ethiopia's birth rate of 6.12, and the contradiction that absenteeism rates of up to 50% persist even though education is free.

Table of Contents

1. Three Factors Distorting Statistics from the Consumption Behavior of the Poor

2. The Reality of Government Dysfunction Expanding Healthcare Disparities

3. The Process by Which Rising Birth Rates Deplete National Resources

4. The Fundamental Reasons Why Educational Investment Does Not Lead to Poverty Escape

5. Conclusion: A Paradigm Shift in Poverty Measurement

1. Three Factors Distorting Statistics from the Consumption Behavior of the Poor

"Irrational" Food Choices Driven by Cultural Capital

In rural India, 40% of spending during festivals is linked to an "invisible debt repayment system" associated with the caste system. Spending on gifts for higher castes is prioritized over food expenses to secure future employment opportunities.

Unique Savings Systems Formed by Time Preference Rates

In Bangladesh, the "mobile building material savings" system involves handwritten "asset certificates" issued by sellers when purchasing blocks, which circulate as local currency. In 47% of cases, one block is used as a unit for wedding dowries, creating a unique credit system in a region with a financial inclusion rate of only 2%.

2. The Reality of Government Dysfunction Expanding Healthcare Disparities

The Moral Hazard Structure of Public Healthcare Workers

In the Udaipur district, doctors earn $2,800, which is 11 times the salary of nurses, accelerating the decline in workplace morale due to salary disparities.

Successful Private Contracting Examples Indicating Solutions

In Kenya, a private contracting initiative for HIV testing has shown that an AI diagnostic system developed by a pharmacy chain improved diagnostic accuracy by 38% compared to public facilities.

3. The Process by Which Rising Birth Rates Deplete National Resources

The Danger of Applying Malthusian Traps to Modern Times

Ethiopia’s education budget is $23 per person; however, an analysis of its breakdown reveals that 78% is spent on textbooks, while only 3% is allocated for teacher training.

Unexpected Side Effects of Population Control Policies

In Rwanda, a policy promoting contraceptive use resulted in 94% of traditional midwives losing their income sources, leading to a collapse of obstetric care.

4. The Fundamental Reasons Why Educational Investment Does Not Lead to Poverty Escape

The Paradox of Increased Enrollment Not Reflecting Labor Productivity

The fact that the starting salaries of vocational school graduates in Tanzania are on par with unskilled workers is exacerbating distrust in education.

The Mechanism by Which Health Issues Offset Educational Benefits

In Malawi, a deworming project showed that the cost of distributing treatment was $0.80 per person per year, while the estimated lifetime income increase from improved academic performance was calculated at $317.

5. Conclusion: A Paradigm Shift in Poverty Measurement

In Nigeria, estimates of the "Ceremonial Economy Index" indicate that when invisible expenditures are considered, the poverty rate recalculates from the official statistic of 28% to 43%. A study on "wall art savings" in Brazilian favelas found that artistic value was included in asset evaluations in 61% of cases, reflecting advancements in measuring value systems previously overlooked by conventional indicators.

0 notes

Text

Introducing Predictor 2020!

It’s New, Improved, and Better Than Ever (Part 1)

The Predictor is Duggar Data’s favorite forecasting tool. It’s a massive Excel workbook, written by yours truly, that tracks all of the data (i.e., dates) about relationships and pregnancies, crunches that data, and then plugs all of it into an algorithm to predict future events. Predictor 1.0 hit the scene almost 2 Years Ago... Since then, I’ve improved it several times, but Predictor 2020 is by far the most significant revision. Honestly, it’s a total rebuild, which incorporates more data than ever before, tweaks the algorithm to hopefully be more accurate and realistic, and allows for future expansion. (Now, I can easily add new families, or make specific predictions for the 4th Generation!)

Here’s a quick overview of Predictor 2020—what’s the same, what’s different. Part 1 focuses on what’s the same. Part 2 focuses on what’s changed.

What’s The Same—

Like all Predictors, Predictor 2020 creates personal forecasts for each Duggar, Bates, Maxwell, etc., based on data from that individual and their family.

Like previous versions, Predictor 2020 is fueled by Averages (AKA Means) and Standard Deviations (SDs). Average (or Mean) is a measure of typicality, which identifies a specified value as the most ‘ordinary’ value within a given data set. It’s calculated with the formula: Average = (Sum of All Data Points) / (# of Data Points). While all sorts of fancy means exist in statistics, Duggar Data uses an ordinary arithmetic mean for all purposes. (I don’t claim to be very statistically rigorous!) Standard Deviation (SD) is the other data type used in the Predictor, and comes into play whenever an event is ‘late.’ SD is a measure of variability, which uses a single value to represent how consistent data points are within a specified data set. If you’re nostalgic for your high school statistics class, you can read all about the SD formula here.
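For readers who would rather see the two statistics as code than as formulas, here is a minimal Python sketch. The numbers are made up for illustration (they are not Duggar Data's), and the choice of the sample form of the standard deviation is an assumption, since the post does not say which form the workbook uses.

```python
import statistics

# Hypothetical courtship lengths in days (illustrative values only).
courtship_lengths = [90, 120, 75, 150, 105]

# Arithmetic mean: (sum of all data points) / (number of data points).
average = sum(courtship_lengths) / len(courtship_lengths)

# Sample standard deviation. Whether the workbook uses the sample or the
# population form is not stated in the post, so this is an assumption.
sd = statistics.stdev(courtship_lengths)

print(f"average = {average:.1f} days, SD = {sd:.1f} days")
```

A small SD means the family's courtships are all about the same length; a large SD means they vary a lot.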

Predictor 2020, and all the previous versions, are also chronological. By this, I mean that each event builds on the one before it. For individuals with no data to speak of (e.g., Josie Duggar, who is far too young to be in a relationship) it starts by calculating a Courtship Start Date. Then, it calculates a Proposal Date, assuming a Duggar-average length courtship. A Duggar-average length engagement adds onto that, and you get a projected Wedding Date, which is adjusted to a nearby Friday or Saturday, if needed, etc., etc.

Here’s a brief summary of how Predictor 2020 calculates each date—

Courtship Start Date is based on age, and is sex specific. Females will start courting at their family’s Average Age at C.S. for Females (Born–In and In–Laws), and males will start courting at their family’s Average Age at C.S. for Males (Born–In and In–Laws).

Engagement Date = Courtship Start Date + Average Length Courtship, with average length being calculated from the relevant family’s data.

Wedding Date = Engagement Date + Average Length Engagement, per the relevant family’s data. If necessary, the date is then slightly adjusted to fall on the closest Friday or Saturday.

Pregnancy Announcement #1 = Wedding Date + Average Marriage–to–Firstborn Spacing – Average Timing of Birth (vs. Due Date) – (280 – Mean Timing of Pregnancy Announcement), all based on family data.

DOB of Child #1 = Wedding Date + Average Marriage–to–Firstborn Spacing

Subsequent Pregnancy Announcement = Last Birth + Average Spacing Between Births – Average Timing of Birth (vs. Due Date) – (280 – Average Timing of Pregnancy Announcements), all based on the specific couple’s data (if available) or the relevant family’s data (Duggar, Bates, etc.)

Subsequent Children’s DOBs = Last Birth + Average Child Spacing

Fertility Cut–Off is based on the age at which a woman’s mother’s final child was born. Age 40 is used if Duggar Data can’t find information on the woman’s mother, her mother wasn’t quiverfull, or her mother hasn’t yet completed her own quiver and she’s still under Age 40.
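The chain of formulas above can be sketched in Python roughly as follows. This is only an illustration, not the Predictor itself (which is an Excel workbook): the function and parameter names are hypothetical, all durations are assumed to be in days, "Timing of Birth (vs. Due Date)" is assumed to be a signed number of days (negative when babies arrive early), and "Timing of Pregnancy Announcement" is assumed to be the day of a 280-day pregnancy on which couples typically announce.

```python
from datetime import date, timedelta


def nearest_friday_or_saturday(d: date) -> date:
    """Return the Friday or Saturday closest to d (d itself if it already is one).

    A Tuesday is equally far from the previous Saturday and the next Friday;
    this sketch picks the earlier date in that case, which is an assumption.
    """
    # weekday(): Monday=0 ... Friday=4, Saturday=5, Sunday=6
    candidates = [d + timedelta(days=offset) for offset in range(-3, 4)]
    fri_sat = [c for c in candidates if c.weekday() in (4, 5)]
    return min(fri_sat, key=lambda c: abs((c - d).days))


def project_first_events(birth_date, age_at_courtship, courtship_len,
                         engagement_len, marriage_to_firstborn,
                         birth_vs_due, announcement_day):
    """Project the chain of dates; every duration argument is in days."""
    courtship_start = birth_date + timedelta(days=age_at_courtship)
    engagement = courtship_start + timedelta(days=courtship_len)
    wedding = nearest_friday_or_saturday(
        engagement + timedelta(days=engagement_len))
    first_child_dob = wedding + timedelta(days=marriage_to_firstborn)
    # Step back from the projected birth to the due date, then back again
    # by the part of the pregnancy that follows a typical announcement.
    announcement = (first_child_dob
                    - timedelta(days=birth_vs_due)
                    - timedelta(days=280 - announcement_day))
    return {
        "courtship_start": courtship_start,
        "engagement": engagement,
        "wedding": wedding,
        "announcement": announcement,
        "first_child_dob": first_child_dob,
    }


# Hypothetical family averages, for illustration only.
events = project_first_events(
    birth_date=date(2000, 1, 1), age_at_courtship=7300, courtship_len=120,
    engagement_len=90, marriage_to_firstborn=400,
    birth_vs_due=-3, announcement_day=100)
for name, when in events.items():
    print(name, when.isoformat())
```

Because each date is computed from the one before it, changing any single input shifts every later event, which is the "chronological" behavior described above.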

Note that, when real data is available, the Predictor always uses that instead of its projection. So... The Predictor thought Katie + Travis would begin courting at a specific time, and based all subsequent events on that date. When Katie + Travis actually announced their courtship, I added the real date (or, in this case, my best estimate) in, and the Predictor recalculated everything based on that.

Additionally, with Children’s DOBs... Once a pregnancy is announced, the due date provided by the couple (or my best estimate) controls the projected DOB, not the longer formula listed above. Once there’s a known pregnancy, the DOB formula is quite simply: Due Date + Typical Timing of Birth (vs. Due Date).
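The two override rules can be sketched in a few lines of Python. The helper names are hypothetical, and the timing of birth is again assumed to be a signed number of days relative to the due date.

```python
from datetime import date, timedelta
from typing import Optional


def resolve(actual: Optional[date], projected: date) -> date:
    """Prefer a real (or best-estimate) date over the projection."""
    return actual if actual is not None else projected


def dob_from_due_date(due_date: date, typical_birth_vs_due: int) -> date:
    """Due Date + Typical Timing of Birth (vs. Due Date), in signed days."""
    return due_date + timedelta(days=typical_birth_vs_due)


# Illustrative values: no real courtship date yet, so the projection stands.
courtship_start = resolve(None, date(2020, 5, 1))

# Illustrative values: babies in this family typically arrive 4 days early.
projected_dob = dob_from_due_date(date(2020, 6, 10), -4)
```

Every later event would then be recomputed from whichever date `resolve` returns.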

Next up... What’s New + Improved for Predictor 2020!

14 notes


Text

Project Data Conversion to PDF, Image Formats & Project Calendar Data are Enhanced using .NET

What’s new in this release?

The Aspose team is pleased to announce the release of Aspose.Tasks for .NET 17.8.0. This month’s release adds support for working with rate scale information in post-2013 MPP file formats. It also fixes several bugs that were reported against the previous version of the API. Aspose.Tasks for .NET already supported reading and writing the rate scale information of resource assignments for MPP 2013 and earlier versions. With this release, the API also supports reading and writing rate scale data for MSP 2013 and later MPP file formats. Loading project data from a Microsoft Project Database was supported in an earlier version of the API, but the functionality broke when the Microsoft Project Database version was updated. That issue has now been fixed, and you can load project data from a Project database using the latest version of the API. The new features and fixes in this release are listed below.

Add support of RateScale reading/writing for MSP 2013+

TasksReadingException while using MspDbSettings

Error on adding a resource with 0 units to parent task

ActualFinish of a zero-day milestone task not set properly

MPP with Subproject File causes exception while loading into project

Wrong Percent complete in MPP as compared to XML output

Fix difference in Task duration in MSP 2010 and 2013

MPP to XLSX: Resultant file doesn't contain any data

ExtendedAttribute Lookup values mixed up for the same task

Lookup extended attribute with CustomFieldType.Duration can't be saved along with other lookup attributes

Custom field with Cost type and lookup can't be saved to MPP

Tsk.ActualDuration and Tsk.PercentComplete are not calculated after setting of Assn.ActualWork property

Unassigned resource assignment work rendered as 0h

Other most recent bug fixes are also included in this release

Newly added documentation pages and articles

New tips and articles have been added to the Aspose.Tasks for .NET documentation to briefly guide users through tasks such as the following.

Read Write Rate Scale Information

Importing Project Data From Microsoft Project Database

Overview: Aspose.Tasks for .NET

Aspose.Tasks is a non-graphical .NET Project management component that enables .NET applications to read, write and manage Project documents without utilizing Microsoft Project. With Aspose.Tasks you can read and change tasks, recurring tasks, resources, resource assignments, relations and calendars. Aspose.Tasks is a very mature product that offers stability and flexibility. As with all of the Aspose file management components, Aspose.Tasks works well with both WinForm and WebForm applications.

More about Aspose.Tasks for .NET

Homepage of Aspose.Tasks for .NET

Download Aspose.Tasks for .NET

Online documentation of Aspose.Tasks for .NET

#Improved Project Calculations#Project Data Conversion to PDF#project Documents Loading#improved project data recalculations#Project Data Conversion to Images#.NET Project management

0 notes

Text

How Mobile Payroll Software Helps in Construction Payroll

Payroll is a necessary process for any business, but it's even more essential in the construction industry, because it ensures that workers are paid correctly and on time. For a construction company, payroll software can help automate the process of computing salaries and tax deductions. It also offers other functions that help streamline the process and enhance productivity.

Accurate Time Tracking

Construction time tracking software helps construction companies improve productivity and work efficiency by giving employees a clear view of how long they spend on a job or project. This information can also be used to produce more precise projections for upcoming projects. It can help you reduce labor costs as well: many construction projects require overtime to be completed on schedule, and without a proper tracking system your company could end up paying a significant amount of overtime. A good construction time tracker should also help you comply with the Fair Labor Standards Act (FLSA) and other government regulations. For example, a good system will give managers real-time information about the hours their crews are working so they can determine if they're approaching overtime thresholds. The right time-tracking software will also help ensure employees aren't committing time theft, a growing issue in construction that can cost a company thousands of dollars each year in lost productivity and payments. Some construction time tracking apps are designed with this in mind. For example, they use GPS to identify workers' locations and can set geofences around your work sites to prevent employees from clocking in before they're on site. They also collect important compliance information from employees every time they clock out, so you can easily review it for compliance purposes.
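As a rough illustration of the overtime and geofence checks described above, here is a minimal Python sketch. It assumes the federal FLSA baseline (overtime above 40 hours in a workweek, paid at 1.5 times the regular rate); state rules can be stricter. The function names, the 4-hour warning margin, and the 150-meter geofence radius are hypothetical choices for this example, not features of any particular product.

```python
import math

FLSA_WEEKLY_THRESHOLD_HOURS = 40.0  # federal baseline; states may differ
OVERTIME_MULTIPLIER = 1.5           # minimum overtime premium under the FLSA


def weekly_pay(hours: float, hourly_rate: float) -> float:
    """Gross weekly pay with overtime applied above the weekly threshold."""
    regular = min(hours, FLSA_WEEKLY_THRESHOLD_HOURS)
    overtime = max(hours - FLSA_WEEKLY_THRESHOLD_HOURS, 0.0)
    return regular * hourly_rate + overtime * hourly_rate * OVERTIME_MULTIPLIER


def approaching_overtime(hours_so_far: float, warn_within: float = 4.0) -> bool:
    """True once a worker is within warn_within hours of the threshold."""
    return hours_so_far >= FLSA_WEEKLY_THRESHOLD_HOURS - warn_within


def distance_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters (haversine formula)."""
    earth_radius_m = 6_371_000.0
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    return 2 * earth_radius_m * math.asin(math.sqrt(a))


def can_clock_in(worker_lat: float, worker_lon: float,
                 site_lat: float, site_lon: float,
                 radius_m: float = 150.0) -> bool:
    """Allow clock-in only when the worker is inside the site's geofence."""
    return distance_m(worker_lat, worker_lon, site_lat, site_lon) <= radius_m
```

A manager-facing dashboard could call `approaching_overtime` on each crew member's running weekly total, while the mobile app calls `can_clock_in` before accepting a punch.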

Employee Self-Service

A mobile payroll solution with employee self-service features can help your employees feel comfortable and confident at work. It's also a good way to give employees a closer connection with your company. For construction companies, it's critical to have a payroll software system that is purpose-built for the industry. A unified, scalable solution can help you automate and streamline your payroll process from paycheck calculation to wage reporting and compliance management. Payroll for construction requires a system that can handle complex payroll calculations, union payroll requirements and tax codes, multiple company and project payrolls, labor hours and labor rates matching, and more. Moreover, construction-specific payroll software should be cloud-based and offer real-time processing capabilities for your labor data. Construction-specific payroll software can help you avoid costly fines and penalties due to manual errors. It can also automatically distribute time cards to jobs or project phases and recalculate pay rates based on those time distributions. Another feature to look for in construction payroll software is the ability to calculate workers' compensation payments. This helps ensure your employees are paid according to local labor standards and tax laws.

Time Off Tracking

Construction companies need to keep track of their employees' time accurately. The accuracy of this process helps the business stay on top of its finances and avoid any potential cash flow problems. Payroll for construction is an essential component of the project costing process and keeping track of staff hours. The right time card software ensures that labor costs are accounted for properly, avoiding errors in project costing. Besides time tracking, many mobile payroll solutions also offer time off tracking. These programs are a great way to track employee vacation and sick leave requests, generate reports, and ensure compliance. Some time off tracking software also has a self-service portal for employees to submit their requests and receive approval from management. These systems can help businesses reduce unnecessary travel, reduce time spent on manual tasks, and improve efficiency. Most time tracking apps allow users to send their weekly hours to their accounting system for automatic payroll calculation. It helps save time and reduces the need for manually calculating employee payroll. Moreover, it helps users keep track of their hours more precisely and avoid any time theft.

Employee Attendance Tracking

Employee attendance tracking with mobile payroll software can save your company time and energy. It automates many time-consuming tasks that are tedious to do manually. In addition, it helps you keep track of when employees are present, absent, or late to their shifts, and how many hours they work per day. Time and attendance software also allows you to calculate payroll quickly and easily. After the data is collected from the app, it will automatically generate a timesheet for hourly paid employees. Field service businesses like construction companies need accurate time and attendance data. They need this information to avoid incorrect timesheets, ensure better accuracy on job-related expenses, and process paychecks efficiently. For this reason, you should look for a time and attendance solution that has all the features you need in one place and integrates with your payroll system. This way, your field team's work hours are logged against the right clients and projects. In addition, your time and attendance software should make it easy to comply with overtime and break rules in your state. It should also allow you to track lunch breaks to ensure your workers get their scheduled break time. When choosing an employee attendance tracker for your construction business, it is best to consider a vendor with experience serving the industry. Moreover, it should have customer testimonials that speak to the reliability of its product.

0 notes

Text

Why we use React for Website Development

React not only brings technical advantages, but it also simplifies development.

React is a JavaScript software library that provides a basic framework for the output of user interface components from websites (web framework). Components are structured hierarchically in React and can be represented in its syntax as self-defined HTML tags. The React model promises the simple but high-performance structure of even complex applications thanks to the concepts of unidirectional data flow and the “Virtual DOM”. [Wiki: https://en.wikipedia.org/wiki/React_(JavaScript_library) ]

Today, React is often used for modern web applications/projects, from small and simple (e.g. Single Page Application) to large and complex. It’s very flexible and can be used for UI Web Design projects of any size and platform, including mobile, web, and desktop development. Many world-famous companies, such as Instagram and Airbnb, therefore rely on React for their products.

The features and benefits of React

Virtual DOM

Performance is the biggest challenge in today’s dynamic web applications. In order to enable dynamic websites, a DOM (Document Object Model) is built in the browser, which saves all elements, no matter how small, in a tree structure in order to carry out operations such as the calculation of positions or properties such as color, size, etc. If the DOM or some of its properties are to be changed, the DOM must be recalculated. For this purpose, jQuery is typically used, which carries out the operations via the browser DOM. Since the elements can influence each other in different ways, these changes or calculations are quite time-consuming.

Virtual DOM solves this problem in React: By storing the DOM in memory and only updating it selectively, it can be changed very quickly and the number of updates in the browser is minimized.
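The idea of keeping a tree in memory and updating the browser only where something changed can be illustrated with a toy example. The sketch below is written in Python purely to show the concept; it is not React's reconciliation algorithm, and the `VNode` class, the `diff` function, and the patch tuples are all hypothetical names invented for this illustration.

```python
from dataclasses import dataclass, field


@dataclass
class VNode:
    """A tiny stand-in for a virtual DOM node."""
    tag: str
    props: dict = field(default_factory=dict)
    children: list = field(default_factory=list)


def diff(old: VNode, new: VNode, path: str = "root") -> list:
    """Return the minimal list of patches that turns old into new."""
    if old.tag != new.tag:
        # A different element type: replace the whole subtree.
        return [("replace", path, new.tag)]
    patches = []
    if old.props != new.props:
        patches.append(("set_props", path, new.props))
    shared = min(len(old.children), len(new.children))
    for i in range(shared):
        patches.extend(diff(old.children[i], new.children[i], f"{path}/{i}"))
    for i in range(shared, len(new.children)):
        patches.append(("append", f"{path}/{i}", new.children[i].tag))
    for i in range(shared, len(old.children)):
        patches.append(("remove", f"{path}/{i}", old.children[i].tag))
    return patches


old_tree = VNode("ul", {}, [VNode("li", {"text": "a"}),
                            VNode("li", {"text": "b"})])
new_tree = VNode("ul", {}, [VNode("li", {"text": "a"}),
                            VNode("li", {"text": "c"}),
                            VNode("li", {"text": "d"})])

patches = diff(old_tree, new_tree)
print(patches)
```

Only the second list item and the newly added third item produce patches; the unchanged first item and the list itself are never touched, which is the saving the paragraph above describes.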

Components

Components allow you to break your user interface into independent, reusable parts and view each part in isolation. The components are JavaScript functions or classes (since ES6) with a render method.

One way data flow

React only transmits the data in one direction, towards the components. This simplifies debugging.

JSX

A fundamental concept of React is JSX. JSX is an extension of the JavaScript programming language and enables HTML-like elements to be used seamlessly in JavaScript. With JSX, React is simply connected to the display (view layer). The idea behind JSX is no longer to separate according to technology, but according to responsibility. This concept is called Separation of Concerns (SoC). Since a component no longer has to be combined from two entities, JSX helps to write self-contained components that are themselves very easy to reuse.

Advantages of React for Development

Simplicity

React has a gentle learning curve because it is very easy to build components with. A component in React is little more than a JavaScript class that contains all of the logic to represent the component. You don’t even have to learn a template language to write a component because the UI part of a component is written in React using JavaScript. JSX improves readability, especially by making it easier to write DOM elements.

Scalability and flexibility

The core of React is its components and their composition into an application. The components are divided into classes that can be easily extended and reused. This allows scalable and flexible applications to be developed. In addition, the component state can be checked and changed at any time using the React API.

Simplified development complexity and reusability

Another big advantage of React Js Web Development is the JSX syntax extension, which offers excellent reusability. The standard mechanics at React suggest using standardized inline styles described with JavaScript objects. JSX combines HTML and CSS in one JavaScript code and frees you from the pain of having to insert links to other files via attributes. Each component can be freely defined anywhere in the project by assigning it a certain unique status.

Active community and sufficient reusable components

React with its ecosystem is a kind of flexible framework. You can choose libraries yourself and add them to the core of the application.

Summary

React continues to grow and is sought after by many, which is why most web development companies in Bangalore have increased their hiring of web developers who are well versed in React JS web development.

0 notes

Text

Still rolling through this. Almost done with the game!! It’s so good guys. Also side note: I’m planning on traveling out of the country soon so internet will be spotty. I’ve written some parts but just letting you know if I disappear I’m not dead.

[ Artificially Genuine ] ft. Connor

[ 01 Markus | Kara ] [ 02 Markus | Kara ]

It’s fixed but the organization decides to use the downtime to move forward with the testing of other candidates. The lapse in time aggravates your progression timeline but you use the opportunity to proactively prepare for future challenges to avoid this upset. With each week, it seemed, the selection pool drew more narrow and somehow your admission remained viable. Apparently there was too much going right to completely disregard all your work. If it was to select one sole core alibi, advancement would be the marching theme for Cyberlife.

Naturally this results in less hours at home and more frequencies in your lab just to remain afloat. Overtime was a commodity in your line of work but groundbreaking results certainly paid better than any hourly wage.

Leaned at an uncomfortable angle, you lazed over the wide screen, eyes blinking in tandem with the patient cursor awaiting your next command. The preceding numbers and letters were the extent of your presumptive calculations as you took into account every possible altercation, fine tuning the decision map to know when to act and when to investigate for stimulus. In terms of pages, you were making amicable progress but without a clear end point there was really no scope of ground covered. As if the prospect of another prototype exceeding where Connor had failed wasn’t frustrating enough.

As if drawn to the specimen at the thought, your eyes flittered briefly over the impassive android. It wasn’t currently in stasis as you often had questions but still you hadn’t noticed any movement in the last hour.

Was it bored?

Your nose turned at the consideration, immediately disregarding the notion of it feeling such a sense of emptiness. It was simply awaiting its next order, as it should be. In order to be at a maximum capacity for receptiveness it had to be attentive to the slightest order. Programming minimal loading abilities just seemed silly, like some kind of archaic screen saver to diverge from black screens.

Yet.

“Do you require my assistance?”

Well it was certainly performing at full magnitude.

Rather than be startled given your current assessment, instead you sank deeper into the spiral of consideration. What if this was another layer of complexity to tackle? The organization certainly didn’t care much for the image of humanity in their projects but there was an advantage to them being able to connect in the real world. The less they seemed like robots the more their work would be accepted. Though forbearance was trivial to results, you’d argue as you took in the quietness of the empty lab.

“Ridiculous,” you scoffed and resumed your attention on the screen, the android knowing well enough to take it as an offhand comment rather than to inquire. As long as it did its job, who cared how it functioned outside of command lists. Alternatively to wreaking havoc, it would be a welcomed assistance.

Biting your lip, you tried not to think about the memory of leering glances and collective whispers of your colleagues as they huddled in their circles. To you it was simply a distraction to them and yourself. Clocking in meant you were there to improve progress not gossip and slow it. This was why you were a step above them, if not consequently another step or several away from them.

You functioned better this way though. No one thought like you making the prospect of collaborating not only invasive but detrimental. You’d spend more time explaining than experimenting. Besides, look how far you’d come on your own.

As your thoughts drowned in the limitless abyss of alternate realities and vague reminiscing you found yourself peering considerably back at the static android.

“For fuck’s sake.”

Kicking away from the desk, you wheeled near one of your tool containers, rummaging recklessly through the inventory. You could practically hear its processors whirling with the turn of events, attempting to evaluate the sudden shift in the environment for possible outcomes. Rolling your eyes as you came up short, you cruised to another. It was difficult to not come up short when you weren’t quite sure what you were looking for. Outside of sequenced codes, there wasn’t much stimulus to add. Connor was programmed to take in its surroundings and recalculate probability and projected scenarios. In lieu of murder, it wasn’t meant to do much else. That was what the ‘wait for instruction’ coding that was ingrained in every android despite its purpose was for.

And still, again.

Connor’s hand shot into the air, catching the rashly thrown item with little difficulty. As you returned, he stared at you inquisitively, hand still hiding it. You had no doubt in that short moment of reflex he’d already analyzed it. Which of course would lead to the endless run of calculations streaming across its face.

“What am I meant to do with this?”

God this was so stupid, why did you even bother?

Groaning, you found yourself struggling to justify such a pointless interaction. A simple whim had grown into an action so unnecessary yet plaguing you with such curiosity. You waved him away with feigned disinterest as you realigned your erratic mess.

“I don’t know. Play with it or something.”

“Play?” It sounded so utterly perplexed, if that was even the right word to describe that sequence of processing. One of the struggles of programming for new android designs was the speculation of overloading servers. There were still no official shortcomings to what all they could handle and confer. The shift from store clerk to house attendant was a marginally invasive turn of events that took time and consideration to introduce to the public. Now technology was doing more than scratch the surface, and integrating more firmly as a permanent fixture.

“Just manipulate it with your hands, Connor … it’s not that difficult,” you muttered under your breath but of course it wasn’t lost to it.

The expression on its face never changed but it was hard to miss the heavy implication of consideration as he turned the silver coin over in its hands. Your head remained trained forward, but every now and again your gaze strays to its progress.

It had progressed to testing mass and gravity, tossing the quarter back and forth between hands to analyze more data. And when all possibilities were exhausted, it returned it to one hand and closed its fist around it. Just when you thought the idea was a waste of time, the coin seemed to appear out of nowhere, wedged between two fingers before flipping effortlessly along its knuckles from one side to the next. The action was so simple yet motorly complex in a way you hadn’t considered.

The motion abruptly ended with a closed hand and Connor turned its head to you.

“Is this what you instructed?”

“I-” Your tongue felt cool to the touch of air, and you quickly snapped it shut to get rid of the awed expression on your face. It wasn’t even that impressive. He’d likely recalled a cinematic event from some source. Even in experimenting with the item, there was no way he’d flawlessly execute such movement without a prompt. You were a damn good engineer but even that was leaps and bounds above your potential. Not to mention hinting at autonomous proficiency. Not even a daring mind could manage such a feat.

Simmering on satisfaction, you were able to allocate more attention to your work with the distraction well taken care of. “Yes, keep that on you and resume that activity in pending commands.”

It nods in confirmation, the cognitive circle whirling to life.

Eventually your fingers gain pace, the action suddenly becoming more smooth as the mind block seemed to finally lift.

Not even a minute later the coin resumes its dance.

#dbh connor x reader#dbh connor#detriot become human connor x reader#detroit become human x reader#dbh writing

93 notes

·

View notes

Text

Why we use React for Website Development

FOLLOW ME LET'S BE FRIENDS LINKED IN INSTAGRAM +91 8041732999+91 [email protected]

📷COMPANY SERVICES PRODUCTS TECHNOLOGY PORTFOLIO BLOG CONTACT US

2nd of April, 2021 In: Web Design/UI/UX

React not only brings technical advantages, but it also simplifies development. React is a JavaScript library that provides a basic framework for rendering user interface components on websites. Components are structured hierarchically in React and can be represented in its syntax as self-defined HTML tags. Thanks to the concepts of unidirectional data flow and the “Virtual DOM”, the React model promises a simple but high-performance structure even for complex applications. [Wiki: https://en.wikipedia.org/wiki/React_(JavaScript_library) ]

Today, React is often used for modern web applications, from small and simple (e.g. a single-page application) to large and complex. It is very flexible and can be used for UI web design projects of any size and platform, including mobile, web, and desktop development. Many world-famous companies, such as Instagram and Airbnb, therefore rely on React for their products.

The features and benefits of React

Virtual DOM

Performance is the biggest challenge in today’s dynamic web applications. To enable dynamic websites, the browser builds a DOM (Document Object Model), which stores all elements, no matter how small, in a tree structure in order to carry out operations such as calculating positions or properties such as color and size. If the DOM or some of its properties change, the DOM must be recalculated. jQuery is typically used for this purpose; it carries out its operations directly on the browser DOM. Since the elements can influence each other in different ways, these changes and calculations are quite time-consuming.

The Virtual DOM solves this problem in React: by keeping a representation of the DOM in memory and updating the browser DOM only selectively, changes can be made very quickly and the number of updates in the browser is minimized.

Components

Components allow you to break your user interface into independent, reusable parts and view each part in isolation. Components are JavaScript functions, or classes (since ES6) with a render method.

One-way data flow

React only passes data in one direction, down towards the components. This simplifies debugging.

JSX

A fundamental concept of React is JSX. JSX is an extension of the JavaScript language that lets HTML-like elements be used seamlessly in JavaScript. With JSX, React is tied directly to the display (view layer). The idea behind JSX is to separate code not by technology but by responsibility. This concept is called Separation of Concerns (SoC). Since a component no longer has to be assembled from two separate parts, JSX helps to write self-contained components that are very easy to reuse.

Advantages of React for Development

Simplicity

React has a gentle learning curve because it is very easy to build components with. A component in React is little more than a JavaScript class or function that contains all of the logic to represent the component. You don’t even have to learn a template language to write a component, because the UI part of a component is written in JavaScript. JSX improves readability, especially by making it easier to write DOM elements.
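To make the Virtual DOM idea more concrete, here is a minimal sketch of comparing two in-memory trees and collecting only the changes that would need to reach the browser. It is a simplified illustration, not React's actual reconciliation algorithm; the names `VNode`, `Patch`, `h`, and `diff` are hypothetical and chosen only for this example.

```typescript
// A virtual node: a tag, a flat map of properties, and child nodes.
interface VNode {
  tag: string;
  props: { [key: string]: string };
  children: VNode[];
}

// One change that would have to be applied to the real browser DOM.
interface Patch {
  path: string; // position in the tree, e.g. "0.1" = second child of the root
  kind: "replace" | "setProp" | "removeProp" | "append" | "remove";
  detail: string;
}

// Small helper for building virtual nodes (hypothetical, for illustration).
function h(tag: string, props: { [key: string]: string } = {}, children: VNode[] = []): VNode {
  return { tag, props, children };
}

// Compare two virtual trees and list the minimal set of changes found.
function diff(oldNode: VNode, newNode: VNode, path: string = "0"): Patch[] {
  // A different tag means the whole subtree is replaced.
  if (oldNode.tag !== newNode.tag) {
    return [{ path, kind: "replace", detail: newNode.tag }];
  }
  const patches: Patch[] = [];
  // Properties that were added or changed.
  for (const key in newNode.props) {
    if (oldNode.props[key] !== newNode.props[key]) {
      patches.push({ path, kind: "setProp", detail: key + "=" + newNode.props[key] });
    }
  }
  // Properties that disappeared.
  for (const key in oldNode.props) {
    if (!(key in newNode.props)) {
      patches.push({ path, kind: "removeProp", detail: key });
    }
  }
  // Children are compared position by position.
  const shared = Math.min(oldNode.children.length, newNode.children.length);
  for (let i = 0; i < shared; i++) {
    const childPatches = diff(oldNode.children[i], newNode.children[i], path + "." + i);
    for (const p of childPatches) {
      patches.push(p);
    }
  }
  for (let i = shared; i < newNode.children.length; i++) {
    patches.push({ path: path + "." + i, kind: "append", detail: newNode.children[i].tag });
  }
  for (let i = shared; i < oldNode.children.length; i++) {
    patches.push({ path: path + "." + i, kind: "remove", detail: oldNode.children[i].tag });
  }
  return patches;
}

const oldTree = h("ul", { className: "list" }, [h("li", { text: "one" }), h("li", { text: "two" })]);
const newTree = h("ul", { className: "list" }, [
  h("li", { text: "one" }),
  h("li", { text: "2" }),
  h("li", { text: "three" }),
]);

// Only the changed list item and the new list item produce patches.
console.log(diff(oldTree, newTree));
```

The unchanged root and first list item produce no patches at all, which is the point of the technique: the expensive browser DOM is touched only where the two trees actually differ.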

0 notes

Text

Key steps for wind turbine power performance testing

Contributed by Matt Cramer, ArcVera Renewables

Running power performance tests (PPTs) on operational assets is a highly complex task that requires a significant amount of experience.

When should I carry out power performance tests?

Power performance testing is carried out to determine the economic value of a wind project and to ensure that projects are performing to expectations. Performance testing allows investors to identify project underperformance, ensure that projects are generating the expected return on investment, and manage investment risk.

By plotting the power generated against the wind speed, the measured power curve can be compared with the warranted power curve in order to identify any deviations or anomalies, which are then analyzed to pinpoint the root cause. The OEM will often recommend actions for improving performance, such as adding vortex generators, adjusting control parameters, or increasing the rated power to maximize the annual energy production and potential revenue of a wind farm.
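As a rough illustration of how a measured power curve can be set against a warranted one, the sketch below averages 10-minute records into wind-speed bins and computes the deviation per bin. The 0.5 m/s bin width, the field names, and all numbers are assumptions made for this example; a real test follows the test plan and the applicable IEC edition, including the normalizations and filters that are omitted here.

```typescript
// One 10-minute averaged record (field names are illustrative).
interface Sample {
  windSpeed: number; // m/s at hub height
  power: number; // kW
}

interface BinResult {
  binCenter: number; // m/s
  meanSpeed: number; // m/s
  meanPower: number; // kW
  count: number; // number of records in the bin
}

// Assumed bin width; bins are centered on multiples of this value.
const BIN_WIDTH = 0.5;

// Average the samples bin by bin to obtain a measured power curve.
function measuredPowerCurve(samples: Sample[]): BinResult[] {
  const sums: { [key: string]: { speed: number; power: number; count: number } } = {};
  for (const s of samples) {
    const key = String(Math.round(s.windSpeed / BIN_WIDTH));
    if (!sums[key]) {
      sums[key] = { speed: 0, power: 0, count: 0 };
    }
    sums[key].speed += s.windSpeed;
    sums[key].power += s.power;
    sums[key].count += 1;
  }
  const indices = Object.keys(sums).map(Number).sort((a, b) => a - b);
  const curve: BinResult[] = [];
  for (const index of indices) {
    const bin = sums[String(index)];
    curve.push({
      binCenter: index * BIN_WIDTH,
      meanSpeed: bin.speed / bin.count,
      meanPower: bin.power / bin.count,
      count: bin.count,
    });
  }
  return curve;
}

// Deviation of measured from warranted power, in percent of warranted.
function deviationPercent(measuredKw: number, warrantedKw: number): number {
  return ((measuredKw - warrantedKw) / warrantedKw) * 100;
}

// Made-up records and a made-up warranted curve, for illustration only.
const records: Sample[] = [
  { windSpeed: 7.9, power: 1500 },
  { windSpeed: 8.1, power: 1560 },
  { windSpeed: 8.0, power: 1530 },
  { windSpeed: 8.6, power: 1800 },
  { windSpeed: 8.4, power: 1760 },
];
const warrantedKwByBin: { [binCenter: string]: number } = { "8": 1550, "8.5": 1800 };

for (const bin of measuredPowerCurve(records)) {
  const warranted = warrantedKwByBin[String(bin.binCenter)];
  console.log(bin.binCenter, bin.meanPower, deviationPercent(bin.meanPower, warranted).toFixed(2) + " %");
}
```

Bins with a persistent negative deviation are the ones an analyst would investigate further.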

Beyond turbine performance verification, power performance testing is also conducted for regulatory compliance and warranty verification. Performance testing is warranted for new turbine models or models with inconsistent PPT results. PPTs are also recommended for projects in simple terrain and are a must-do under site conditions, such as complex terrain, high elevation, low temperature, and extreme precipitation, for which the selected wind turbine generator (WTG) model has no proven performance. Terrain deviations in wind projects can have significant impacts on the turbine performance over the project lifetime.

The effects of wakes, terrain-induced turbulence, up flow, veer, and shear due to the proximity of turbines to terrain features and to each other, as well as atmospheric conditions, all tend to have a negative impact on turbine performance. Newer methods for power performance testing can account for many of these conditions, as well as reduce the cost of testing, by utilizing remote sensing to quantify shear, veer, turbulence, inflow angles, and other factors to enable normalization of data for these atmospheric factors. The use of remote sensing can also reduce the cost of testing by enabling the use of shorter meteorological towers (or no towers at all in the case of nacelle-mounted LIDAR testing).

How do I select the right PPT provider?

Because testing is carried out according to a standard, PPT methodologies do not vary significantly among providers. However, this is not to say that all providers are equal in quality of service; differences will be apparent in a provider’s troubleshooting methodologies and in the recommendations it gives on when to run a test and how to deploy instruments efficiently and accurately. Because the quality of a provider’s methodologies may be difficult to evaluate right off the bat, it is essential to be able to identify other markers of a good PPT provider beforehand. Listed below are two of the most critical parameters for turbine owners to look for when selecting their PPT provider:

The most crucial consideration is experience. Experienced PPT professionals will have established best practices for gathering all the relevant information to accurately scope, manage, and deliver the project. They can efficiently resolve or identify problems with the test and keep it on schedule. Experience with factors such as annual weather variations and their impact on PPT helps seasoned professionals determine optimal conditions for testing that can save clients time and money.

Experience with the physical deployment of hardware generally improves understanding of results. This is also true for fieldwork; field staff must possess the kind of data analysis experience that enhances the understanding of the test. Best practices in hardware deployment are informed by an understanding of the data, data quality, and uncertainty requirements of the test.

How can providers ensure high levels of confidence in their power performance test results?

A high level of confidence in test results is achieved by ensuring that on-site measurements have high data availability and low scatter.

The quality of the data collected (high versus low data availability) is partly dependent on the weather. For example, tests that run in weather windows with high winds and mild temperatures will generally be time-efficient due to high data availability. On the other hand, cold weather, ice, lightning, and other extreme weather can cause instrument failure and low data availability.

In addition to conducive weather, securing high data availability also requires the constant monitoring of sensors. Monitoring sensors around the clock allows for catching problems early, promptly troubleshooting, and repairing or replacing sensors as needed. Using reliable, high-quality equipment and having a responsive site manager and meteorological tower (met tower) crew available to troubleshoot are prerequisites for successful sensor monitoring.

Scatter is driven mainly by real-world conditions and wind turbine performance. It can be reduced by placing the met tower closer to the turbine (within the lower end of the 2D to 4D range allowed by the IEC, where D is the rotor diameter). It should be noted, however, that moving the met tower closer to the turbine can also exaggerate the much-discussed “blockage effect” on the power curve test. This method of scatter reduction is indeed a trade-off, so the pros and cons must be weighed carefully.

Additionally, beyond the minimum requirements of the IEC standard, restricting the wind-direction sector can also help reduce scatter. This is done by utilizing the blockage effect, which can sometimes be quantified by segregating data into two different categories: 1) when the wind comes from a direction that places the met tower upwind of the test turbine and 2) when the wind comes from a direction that places the met tower to the side of the test turbine where it is not subjected to blockage from the turbine rotor.
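The segregation into two wind-direction categories described above could be sketched as follows. The sector boundaries are purely hypothetical; in practice they depend on the site layout and the test plan. The only subtle point is that a sector may wrap through north (for example 340° to 20°).

```typescript
type SectorLabel = "upwind" | "side" | "excluded";

// A direction sector in degrees, running clockwise from start to end.
interface Sector {
  start: number;
  end: number;
}

// True if the wind direction falls inside the sector, including sectors
// that wrap through north (start greater than end).
function inSector(direction: number, sector: Sector): boolean {
  const d = ((direction % 360) + 360) % 360; // normalize to [0, 360)
  if (sector.start <= sector.end) {
    return d >= sector.start && d <= sector.end;
  }
  return d >= sector.start || d <= sector.end;
}

// Assign a record to one of the two categories, or exclude it.
function classifyDirection(direction: number, upwind: Sector, side: Sector): SectorLabel {
  if (inSector(direction, upwind)) {
    return "upwind";
  }
  if (inSector(direction, side)) {
    return "side";
  }
  return "excluded";
}

// Hypothetical sectors: met tower upwind of the turbine for northerly winds,
// and beside the rotor (outside its blockage zone) for easterly winds.
const upwindSector: Sector = { start: 340, end: 20 };
const sideSector: Sector = { start: 70, end: 110 };

const directions = [350, 10, 90, 200];
for (const d of directions) {
  console.log(d, classifyDirection(d, upwindSector, sideSector));
}
```

Power curves computed separately for the "upwind" and "side" groups can then be compared to get a rough feel for the size of the blockage effect.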

Scenario testing, or “what if” analyses, can be used to troubleshoot inconsistent results and thus increase confidence in those results. By restricting data or, for example, comparing data between different turbine operating states, it becomes easier to identify what might be driving any discrepancies in turbine performance results. A large, geographically diverse wind turbine operational database is required to support this analysis. ArcVera’s extensive PPT database for wind farms across the United States is one of the tools that aids ArcVera in its ability to proficiently perform such detailed analyses.

What are the key steps involved in the delivery of successful power performance tests?

While power performance testing comprises several processes, in general, a PPT requires three overarching phases:

Test planning

The test plan is a highly detailed document that is written in accordance with the IEC 61400-12 standard and depicts all details concerning test methodology. The test plan is a preliminary document that helps the client and test supplier make sure that the test methodology will meet the client’s requirements and that the goals of the test are mutually understood. It requires significant effort and input from multiple parties and involves reducing risks related to compliance with the Turbine Supply Agreement (TSA), IEC 61400-12 standard, test laboratory accreditation requirements, and turbine vendor requirements.

Hardware selection and deployment

Hardware must meet wind turbine vendor, client, and IEC requirements. Further requirements are sometimes placed on the hardware by the grid operator. Selection and specification of appropriate and compliant hardware is a crucial capability acquired through comprehensive experience with test execution. Particular attention should be given to calibration requirements. Calibration certificates are required by many accreditation bodies, so ensuring that the supplier has those certificates during procurement will help to avoid problems later. Hardware field performance and compatibility with the data acquisition system (DAS) are other important considerations. Equipment shipping must be coordinated and the enclosure setup tested. Hardware should be shipped directly to the site and installed according to procedures so that the test hardware installation meets the accreditation requirements.

Data collection, analysis, and reporting

Clear and transparent reporting is key to a successful test. Data must be regularly checked for errors and immediately input into a database that calculates bin completion. Errors due to meteorological events are often challenging for unseasoned analysts to recognize, which is another reason prioritizing a PPT provider’s experience level is so important. For example, one weather condition that is often not identified until after data analysis is icing, which can often lead to detrimental sensor failures. Icing is likely to be filtered out during post-processing, but it can lead to undetected sensor damage if the analyst does not apply deliberate scrutiny. Sensor damage can invalidate test results if unchecked or not addressed promptly.
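A database that tracks bin completion, as mentioned above, might work along the lines of this sketch. The thresholds used here (at least 30 minutes of data per 0.5 m/s bin and at least 180 hours overall, with 10-minute records) are the figures commonly cited for IEC 61400-12-1, but they are stated as assumptions and should be checked against the edition and test plan in force. The function and field names are hypothetical.

```typescript
interface CompletionReport {
  incompleteBins: number[]; // centers (m/s) of bins still short of data
  totalHours: number; // hours covered by all valid records
  complete: boolean;
}

// Assumed values; verify against the governing standard and test plan.
const RECORD_MINUTES = 10;
const MIN_MINUTES_PER_BIN = 30;
const MIN_TOTAL_HOURS = 180;

// windSpeeds holds one value per valid (already filtered) 10-minute record.
function binCompletion(
  windSpeeds: number[],
  firstBin: number,
  lastBin: number,
  binWidth: number = 0.5
): CompletionReport {
  const firstIndex = Math.round(firstBin / binWidth);
  const lastIndex = Math.round(lastBin / binWidth);

  // Count the records that fall into each required bin.
  const counts: number[] = [];
  for (let i = firstIndex; i <= lastIndex; i++) {
    counts.push(0);
  }
  for (const v of windSpeeds) {
    const index = Math.round(v / binWidth);
    if (index >= firstIndex && index <= lastIndex) {
      counts[index - firstIndex] += 1;
    }
  }

  // A bin is incomplete if it holds less than the minimum amount of data.
  const incompleteBins: number[] = [];
  for (let k = 0; k < counts.length; k++) {
    if (counts[k] * RECORD_MINUTES < MIN_MINUTES_PER_BIN) {
      incompleteBins.push((firstIndex + k) * binWidth);
    }
  }

  const totalHours = (windSpeeds.length * RECORD_MINUTES) / 60;
  return {
    incompleteBins,
    totalHours,
    complete: incompleteBins.length === 0 && totalHours >= MIN_TOTAL_HOURS,
  };
}

// Six made-up records checked against required bins from 4.0 to 5.0 m/s.
console.log(binCompletion([4.0, 4.1, 3.9, 4.6, 4.4, 5.0], 4.0, 5.0));
```

Running such a check after every data upload shows which wind-speed bins still need data, which helps estimate how much longer the campaign has to run.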

Experience level also comes into play if the wind turbine vendor requirements include filtering out periods of precipitation, as this involves a degree of professional judgment to decide if conditions such as heavy fog or light mist should be regarded as meaningful precipitation.

Automated data processing and reporting tools exist to allow experts to recalculate the power curve quickly and easily. These tools will not, however, remove the need for experts. For example, subsets of the collected data can be selected by the expert analyst to restrict the test period, valid direction sector, turbine availability definition, wind shear filters, turbulence filters, and other data filters to help troubleshoot inconsistent results. With the proper expertise, DAS and sensor failures due to lightning strikes or other causes can be accurately diagnosed by examining the data.

Despite the fact that troubleshooting is a regular occurrence that requires experienced personnel, it is not always possible to require such a stipulation within a time-bound proposal process. Therefore, it is critical for turbine owners to do their due diligence beforehand and seek out PPT providers with enough experience to be able to effectively troubleshoot any unexpected issues, should they arise.

Should I opt for lidar or met towers?

There are two main ways of assessing a site’s wind resource: met towers and lidar.

Meteorological towers (met towers) configured with industry-standard anemometers to gather wind-speed measurements are the wind industry’s most widely accepted wind measurement methodology. Anemometer-based measurements have been used for decades in wind energy applications to provide technical support for project financing.

This long history of use translates to an accepted level of measurement uncertainty for project finance technical due diligence requirements. Met towers are particularly cost-effective as tilt-up versions; however, tilt-up towers above 80 meters are typically more expensive and are more difficult to permit. In the United States and many other countries, reliable and expensive aviation lighting is required for towers higher than 60 meters (200 feet), and depending on the structure, towers taller than 60 meters may have decreased weather ratings.

Higher met towers are also structures of unique designs, using steel or aluminum welded lattice structural geometry that introduces wind flow obstruction properties that are not well understood and require unique sensor mounting hardware to mitigate.

Adding to the cost equation, met towers are simply difficult to reuse: decommissioning and re-shipping the tower is costly, taller towers often require a concrete foundation that must be removed, and the sensors must be either replaced or recalibrated after each use. The highest-quality anemometers use a bearing system that is not user-serviceable, requiring the time-consuming and expensive process of sending the sensor back to the factory for bearing replacement and calibration.

Lidar, on the other hand, is small, mobile, and reusable but is not generally accepted as a permanent monitoring system. Lidar has only recently been allowed for commercial tests under Edition 2 of IEC 61400-12-1, though the standard does not allow for the use of lidar in complex terrain. With lower test data scatter, good uncertainty numbers, much lower costs than traditional power curve testing, and faster test time due to reduced time for planning met tower logistics, it is quite likely that lidar will be more widely accepted in the future.

The upcoming IEC 61400-50-3 standard for measuring wind using nacelle-mounted lidar in flat terrain could soon become a game-changer, as the need for met towers could be eliminated entirely for many sites.

While lidar may remove the need for a hub height tower and thereby reduce costs and permitting complications, there are nevertheless other issues and logistical challenges to consider, such as power supply. As well, lidar verification must be done at intervals, potentially leading to longer, more expensive test campaigns. Unlike the anemometer, the lidar data recovery rate is generally not 100 percent, which reduces data availability and could induce delays in test completion, so any cost benefits may be lost.

How do larger turbine sizes impact performance testing requirements?

Larger rotors lead to more scatter in the data, which has partly inspired some changes in Edition 2 of the IEC 61400-12-1 standard, including allowing the use of lidar to measure shear and veer. Because of this new allowance and of the growing frequency of larger rotors, lidar measurement is likely to gain popularity in the coming years.

Since performing PPTs on individual WTGs is likely to become more expensive with higher hub heights, owners will end up testing fewer machines per megawatt of installed capacity, since IEC and warranty requirements do not change with increased size. The number of tests required is typically calculated as a percentage of the number of turbines rather than the overall project capacity. For example, a project consisting of one hundred 1 MW turbines would require more tests than a project of the same size with twenty 5 MW turbines.

The use of a short met tower and lidar (as prescribed under Edition 2 of the IEC standard) is less expensive and reduces some of the logistical challenges associated with the installation of a hub height met tower; however, there is also higher uncertainty in this type of test. Because of this, ArcVera clients would generally be advised to stick with hub height met tower installation, as prescribed under Edition 1 of the IEC standard, in order to minimize test uncertainty and maximize coverage under the power curve warranty.

Hub height met towers are likely to continue to be the gold standard, especially since they are still feasible for hub heights of up to at least 120 meters. Nacelle anemometry can be accurate if used together with one or more met towers to create a nacelle transfer function. This method has been successfully used in the past both for warranty verification and to allow evaluation of the performance of all wind turbines at a wind farm.

Going forward, it is likely that nacelle lidar will be used instead of nacelle-mounted anemometers for many applications. This is particularly probable in the case of offshore wind farms. Offshore sites differ significantly from onshore sites in terms of layout and turbine size. The installation of met towers in the ocean has proven to be costly, time-consuming, and as such, impractical. As the terrain and elevation are consistent, nacelle-mounted lidar tests have become standard practice.

Conclusion

Power performance testing is executed in order to determine the economic value of a wind project and to ensure that projects are performing as they are supposed to, in turn allowing investors to identify project underperformance and manage investment risk. Power performance testing is also conducted for regulatory compliance and warranty verification and is recommended for projects in simple terrain and other types of site conditions.

Although PPT methodologies do not vary significantly among providers due to the guidance of industry-utilized IEC standards, not all providers are equal in quality of service; differences are most apparent in a provider’s troubleshooting methodologies, test execution recommendations, and instrument deployment approach. Because informed judgment in making those pivotal decisions is what sets providers apart, and because power performance testing is such a complex task, depth of experience is the most crucial consideration for owners to look for when choosing a provider. The best PPT professionals will have used the totality of their experience to thoughtfully establish best practices for accurately scoping, managing, and delivering projects.

PPT services, while standardized, are complex and nuanced. Experienced providers recognize the value of planning as well as the most expensive, time-delaying pitfalls. Procurement of PPT services should include careful attention to the firm’s experience performing PPTs in a variety of locations. It is also critically important to find out who will actually be engaged in the assignment, since some firms tend to staff their PPT work with inexperienced, lower-cost personnel, presumably to maximize their margins. Acquiring an experienced PPT provider means having effective field personnel who know how to think through issues, attain reliable data, and maintain the plan-defined PPT schedule, lowering the risk of weather-related and other cost overruns.


About the author:

Matt Cramer, ArcVera Renewables’ Business Development Manager & PPT Technical Services Lead

Matthew co-founded Turbine Test Services LLC. (TTS), an accredited wind turbine testing company specializing in loads testing and power performance testing and analysis. Matthew has performed extensive data analysis, deployed tests, sourced hardware, and was involved in all technical aspects of testing. Matthew joined ArcVera in October 2020 specifically to do business development for ArcVera’s PPT group with client-focused services that are responsive to client needs and closely managed and implemented by senior-level engineers.

from https://ift.tt/3AhbNA6

0 notes

Text

How CPAs helped save businesses during COVID-19

As the COVID-19 pandemic changed the business environment, clients turned to their CPAs to keep their businesses alive. CPAs offering client advisory services (CAS) were well positioned to help struggling clients stabilize and reposition their businesses. The term CAS can encompass everything from outsourced accounting to outsourced CFO and controller services to management consulting services. CPAs who offer CAS have real-time knowledge of their clients’ finances, which gives them the opportunity to offer a better strategic perspective on their clients’ businesses. For example, CPAs working with clients in affected sectors used CAS to help those clients reimagine what is possible.

Let restaurants cook

About 75% of Nick Swedberg’s customers are restaurants and small craft breweries in Minnesota. These types of companies were “some of the hardest hit” during the pandemic shutdown, he said.

To help his clients stay in business, Swedberg, a partner at Boyum Barenscheer in Bloomington, Minnesota, advised them on a variety of solutions, such as to-go sales and deliveries. Since they planned to start deliveries to replace in-person dining, they had to work through questions about the profitability and feasibility of the move, including analysis of employee compensation, auto insurance, and the handling of tips, which is highly regulated in his state. He was able to do this with Excel, “set up some advanced formulas so there are only a few key metrics” that he had to adjust to test possible outcomes for different scenarios, he said.

Swedberg also helped his clients reach out to local government leaders for temporary exemptions from regulations. For example, some of his restaurant-owner clients got permission from their cities to expand outdoors when indoor dining was banned. One city let a restaurant take over a city parking lot, while another closed its main street to allow al fresco dining. When a brewery-owner client asked the local health board whether beer delivery could be legalized, the board worked with the company to make it possible.

Swedberg supported this process by quantifying how the special permits sought by clients could save their restaurants and the jobs of their employees, helping them make convincing arguments to local government.

As clients got more creative, Swedberg helped them implement their ideas. For example, a Mexican restaurant owner turned half of its space into a market where customers could buy salsa, meat, and other ingredients to use in preparing meals at home. As Swedberg predicted, the cost of the pivot was minimal, the restaurant business got a boost, and the market did well. “It was fun to see how it comes to fruition,” he said. On the flip side, another client decided not to pursue plans to convert its space into a home for four types of restaurants when the projected cost proved too high.

“My job is to get them to put their thoughts in order and then come up with a real financial forecast,” to see if their plans were viable, Swedberg said. “Do you remember in math when you had to convert a word problem into an equation?

Maintaining the health of service-related practices

Sandy Shecter, CPA, CGMA, is the firmwide director of Rehmann Solutions, a division of the 900-person firm Rehmann, which has offices in three states. Based in Detroit, Shecter serves clients that include doctors, dentists, and surgical centers, ranging in size from one provider to more than 350. Their ability to open and their patient volumes were affected when many states ordered closures last spring.

Shecter stepped in to help clients apply for the Paycheck Protection Program and other loans or grants. Her firm also worked with clients to provide data and forecasting for decisions on management issues such as putting employees on leave; introducing new ways to work with patients, such as telemedicine; and solving critical technology problems, such as cybersecurity or the complete outsourcing of a client’s IT department.

Her firm also assists with a variety of outsourced services, including recruiting to reduce staff costs, technology expertise that enables telemedicine, and outsourced bookkeeping. “Providing these services on an outsourced basis enables customers to customize their usage as needed,” she said.

By the end of September 2020, most of Shecter’s clients were almost back to pre-pandemic patient levels, but she predicts permanent change. Her strategic planning with clients includes options such as making greater use of patient portals for communication and moving back-office employees to off-site locations.

She advises CPAs to think beyond compliance and focus on delivering more to clients in terms of business consulting. “The pandemic underscored the value that customers place on real-time information that they can use to manage their business,” she said. “This is really crucial.” For example, her clients often ask her for help with cash flow management, debt aging analysis, and budget recalculation and monitoring.

Finding the key to real estate sustainability

When rental income declined during the pandemic, Brandon Hall, CPA, who serves as his clients’ outsourced CFO, analyzed the portfolios of his real estate investor clients to see how they could improve rental income performance. He also developed tax-minimization and cost-segregation strategies to help them improve cash flow.

Hall, the CEO of the 24-employee firm The Real Estate CPA in Raleigh, N.C., creates custom dashboards for clients using Google Sheets, spreadsheets that are easy for both him and his clients to access and collaborate on. The client’s QuickBooks Online accounting data can be fed live into this dashboard. “The dashboard keeps track of what’s important to the customer,” said Hall, which usually includes information about utilization, budget-to-cost comparisons, rehab expenses, cash-on-cash return, return on equity, and internal rate of return.

Hall was initially concerned about the economic uncertainty the pandemic was causing, as he hadn’t seen a similar disruption in his career. His business coach gave him advice that he now uses with his own clients and recommends to other practitioners: Create a financial plan for the next 12 months, divided into one- to two-month tranches, and set the expected financial performance metrics for each tranche. Decide what action to take if you miss your metrics by 10%, 20%, and so on, for example staff or expense cuts. If you hit your metrics, no changes are necessary.
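The tranche-based plan described above can be expressed as a small lookup: for each tranche, compare the actual result with the target and pick the response decided in advance for that size of shortfall. The sketch below is one possible way to write this down; the revenue figures and the wording of the actions are hypothetical, and only the 10% and 20% thresholds come from the text.

```typescript
interface Tranche {
  label: string; // e.g. "Months 1-2"
  targetRevenue: number;
}

interface ResponseRule {
  shortfallPercent: number; // rule applies when the miss is at least this large
  action: string;
}

// How far the actual result fell short of the target, in percent of target.
function shortfallPercent(target: number, actual: number): number {
  return ((target - actual) / target) * 100;
}

// Pick the pre-agreed action for the largest threshold that was reached.
function plannedAction(target: number, actual: number, rules: ResponseRule[]): string {
  const miss = shortfallPercent(target, actual);
  const sorted = rules.slice().sort((a, b) => a.shortfallPercent - b.shortfallPercent);
  let chosen = "no change";
  for (const rule of sorted) {
    if (miss >= rule.shortfallPercent) {
      chosen = rule.action;
    }
  }
  return chosen;
}

// A 12-month plan in two-month tranches (all figures are made up).
const plan: Tranche[] = [
  { label: "Months 1-2", targetRevenue: 100000 },
  { label: "Months 3-4", targetRevenue: 100000 },
  { label: "Months 5-6", targetRevenue: 110000 },
  { label: "Months 7-8", targetRevenue: 110000 },
  { label: "Months 9-10", targetRevenue: 120000 },
  { label: "Months 11-12", targetRevenue: 120000 },
];

// Hypothetical responses, decided before the year starts.
const responseRules: ResponseRule[] = [
  { shortfallPercent: 10, action: "cut discretionary expenses" },
  { shortfallPercent: 20, action: "reduce staffing costs" },
];

console.log(plan[0].label, plannedAction(plan[0].targetRevenue, 88000, responseRules));
```

Because the responses are fixed in advance, reviewing a tranche becomes a matter of looking up the planned action rather than deciding under pressure.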

Just having a plan of what to do when outcomes are good and bad can reduce customer stress and improve their response to setbacks, Hall said. “You cannot control the economy, but you can control how you react to it,” he said.

Helping construction companies build stronger businesses

In northern Colorado, due to the dynamism of the local economy, the pandemic had minimal economic impact on construction clients, said Ralph Shinn, CPA/PFS, partner at ClearPath, a nine-employee consulting firm in Fort Collins. Surprisingly, the real challenge for these clients was hiring enough workers or contractors, as some decided to stop working and start receiving unemployment benefits during the pandemic. When this forced clients to postpone some projects, the firm helped them use that time as an opportunity to reevaluate their hiring practices.

The firm also helped its clients bill for additional pandemic-related expenses or prepare quotes that include them, such as the cost of personal protective equipment required on construction sites and the additional mileage and vehicle costs that employees incur when carpooling is no longer feasible. “Lots [of clients] didn’t take that cost into account,” Shinn said.

Shinn’s practice also has professional services clients ranging from physical therapists to companies providing hospital administration services. Many of these clients were unfamiliar with remote working, so Shinn’s firm, which has been working remotely for more than 20 years, offered them free training on the remote-work environment and access to the cloud-based packages it uses. “We wanted to show them that working in a cloud-based and paperless environment can make businesses more efficient, effective and profitable,” Shinn said. The firm also offered advice on the tax benefits of setting up home offices and training remote workers.

CPAs can step in when customers struggle

In uncertain times, many clients not only seek tax and accounting help, but also business advice. As companies grappled with the economic upheaval caused by the pandemic, CPAs stepped in to identify customers’ needs based on their existing knowledge of their companies. Offering CAS services can strengthen the role of CPAs as trusted business advisors who can provide practical and effective solutions to business challenges and valuable advice on how to make the most of opportunities.

source https://seedfinance.net/2021/08/12/how-cpas-helped-save-businesses-during-covid-19/

0 notes

Text

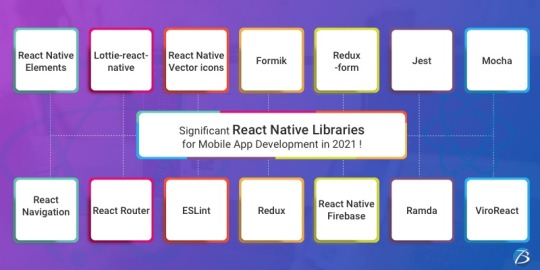

Significant React Native Libraries for Mobile App Development in 2021

React Native happens to be one of the most sought-after app development frameworks across the globe as it comes with a host of advantages like a cost-effective developmental cycle, faster time-to-market, high performance, modular and intuitive architecture, and many more.

One of the unique benefits of this framework is the availability of countless third-party libraries that expedite the development and prove highly convenient for every React Native App Development Company. However, owing to the presence of thousands of React Native libraries, selecting the apt ones becomes a herculean task. As a consequence, development teams often have to spare a great deal of time and effort for picking the right tool or library that would prove fruitful.

To ease this task, I have listed the most significant tools and libraries that complement the React Native framework. A quick read will help you find the one that best suits your requirements.

Tools and Libraries for Various React Native App Development Categories

Category: User Interface

React Native Elements

This UI library, built using JavaScript, has earned 20.5k stars and 4.2k forks on GitHub.

This library comes with cross-platform compatibility and supports Expo.

It is easy to use, customizable, and community-driven.

Lottie-react-native

This library created by Airbnb enables adding attractive animations to React Native applications.

React Native developers can either select from the free animations available or design and add their animations employing “Adobe After Effects.”

Functioning: The animation data is exported in JSON format with Bodymovin and rendered natively on mobile.

Styled Components

This library enables developers to write CSS code for styling components.

It removes the mapping between styles and components, making it easier to use components as a low-level styling construct.

The styles can be reused several times, resulting in less code.

React Native Vector icons

React Native Vector icons is a library that offers numerous icons of various types, designed for the React Native Apps.

Each element can be fully customized

Category: Forms

Formik

It’s a small library that helps to build forms in React.

Formik helps to validate form values, display error messages, and submit the form.
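To make the validate, show errors, submit cycle concrete, here is a minimal sketch in plain JavaScript. It does not use Formik itself; the function names `validate` and `submitForm`, the field names, and the rules are assumptions chosen for illustration. The shape of the validation function (form values in, an object of error messages out) follows the pattern Formik's `validate` option expects.

```javascript
// Hypothetical validation function: maps form values to an errors object.
// An empty object means the form is valid.
function validate(values) {
  const errors = {};
  if (!values.email) {
    errors.email = "Required";
  } else if (!/^[^\s@]+@[^\s@]+\.[^\s@]+$/.test(values.email)) {
    errors.email = "Invalid email address";
  }
  if (!values.password || values.password.length < 8) {
    errors.password = "Must be at least 8 characters";
  }
  return errors;
}

// Hypothetical submit helper: calls onSubmit only when there are no errors,
// and returns the errors so the UI can display them next to the fields.
function submitForm(values, onSubmit) {
  const errors = validate(values);
  const isValid = Object.keys(errors).length === 0;
  if (isValid) onSubmit(values);
  return { isValid, errors };
}

const attempt = submitForm({ email: "ada@example", password: "short" }, () => {});
console.log(attempt.isValid, attempt.errors);
```

A form library adds the bookkeeping around this core, such as tracking which fields were touched and when to re-run validation.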

Redux-form

Redux-form manages form state in Redux.

It tracks the most common form states, such as the fields contained in the form, the focused field, field values, and the fields the user has interacted with.

Category: Testing

Jest

This is a popular testing framework, designed and maintained by Facebook, and is used for testing JavaScript code. This versatile testing tool is compatible with any JavaScript framework or library, including React, Angular, VueJS, etc. Uber, Airbnb, and Intuit are some of the top brands that have leveraged this tool. Its offerings are:

High-speed performance

Standard syntax with report guide

Mocks functions, with the inclusion of third-party node_module libraries

Conducts parallelization, snapshot, and async method tests

Enables managing tests with bigger objects, by using live snapshots

Mocha

Mocha is a JavaScript test framework used for testing React and React Native apps. It gives developers full control over which plugins and tools they use while testing applications. Its major highlights are:

Runs on Node.js

Provides support for asynchronous front-end and back-end testing, test coverage reports, and the use of any assertion library

Helps to track errors

Excels in mocking tests

Enzyme

Enzyme is another testing tool developed by Airbnb.

It comes with API wrappers that ease developers’ tasks such as manipulating, asserting on, and traversing the React DOM.

It supports full DOM rendering, shallow rendering, and static rendering.

Besides, it is compatible with several other testing frameworks and libraries like Mocha and Jest.

Chai

It’s an assertion library for the browser and Node.js

Chai supports both behavior-driven (BDD) and test-driven (TDD) assertion styles

Compatible with various testing tools and can be paired with any JS testing framework

Its functionality can be extended by using custom plugins

Moreover, it enables developers to create their own plugins and share them with the community

Category: Navigation

React Navigation

This component supports navigational patterns like tabs, stacks, and drawers

It is based on JavaScript and is simple to use

It enables developers to effortlessly set up app screens

Can be completely customized as well as extended

React Router

This is a library of navigational components that compose declaratively with the app.

It allows one to specify named components, create various types of layouts, and pass layout components.

Category: App’s State Management

Redux

Redux, a standalone library and a predictable state container, is predominantly used along with React's UI components. Besides the React ecosystem, one can also use Redux with other frameworks and setups such as Vue, Angular, Ember, and vanilla JS. Its principal offerings are:

Can be used with back-end as well as front-end libraries

Enables developers to write consistent code

Allows live code editing

Functions well in various environments – Server-side, client-side, and native

Connects pieces of state to React components, minimizing the need to pass props or callbacks.
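The idea of a "predictable state container" can be sketched in a few lines of plain JavaScript. This is a simplified stand-in, not Redux's own code: the method names `getState`, `dispatch`, and `subscribe` mirror the names on a Redux store, while `counterReducer` and its action types are assumptions made up for this example.

```javascript
// Minimal stand-in for a predictable state container (not Redux's implementation).
function createStore(reducer, initialState) {
  let state = initialState;
  const listeners = [];
  return {
    getState: () => state,
    dispatch(action) {
      // The reducer is the only place where the next state is computed.
      state = reducer(state, action);
      listeners.forEach((listener) => listener());
      return action;
    },
    subscribe(listener) {
      listeners.push(listener);
      // Return a function that removes the listener again.
      return () => {
        const index = listeners.indexOf(listener);
        if (index >= 0) listeners.splice(index, 1);
      };
    },
  };
}

// Hypothetical reducer: a pure function of (state, action) that never mutates state.
function counterReducer(state, action) {
  switch (action.type) {
    case "increment":
      return { count: state.count + 1 };
    case "add":
      return { count: state.count + action.amount };
    default:
      return state;
  }
}

const store = createStore(counterReducer, { count: 0 });
const seenCounts = [];
store.subscribe(() => seenCounts.push(store.getState().count));

store.dispatch({ type: "increment" });
store.dispatch({ type: "add", amount: 4 });
console.log(store.getState()); // { count: 5 }
```

Because every change goes through `dispatch` and a pure reducer, the same sequence of actions always produces the same state, which is what makes the container predictable.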

Category: Linting and checking Types

ESLint

It’s an open-source linter for JavaScript

ESLint is configurable and pluggable

It improves code consistency and helps catch bugs early

It evaluates patterns in the code and can fix many problems automatically, which enhances the overall code quality.

It helps detect flaws in JavaScript code that don’t comply with the chosen guidelines

It lets React Native developers create their own linting rules

Flow

Developed by Facebook, Flow is a static type checker for JavaScript

It identifies problems while the code is being written

It proves beneficial in crafting large applications, as it prevents bad rebases when several people are working on a single program.

The main objective of Flow is to make the code more precise and enhance the speed of the coding process

Category: Networking

Networking tools are used to establish a networking flow in React Native projects. Let us have a look at a few of them.

react-native-firebase is a lightweight layer on top of the native Firebase libraries. It provides a JavaScript bridge to the native Firebase SDKs, which eases the use of Firebase in React Native Application Development projects.

Apollo Client is a flexible client for applications that use GraphQL. It assists in creating a user interface that pulls data with GraphQL.

Axios, a lightweight JavaScript HTTP client, was built to send asynchronous HTTP requests to REST endpoints and can be used to perform CRUD operations.

react-native-ble-manager is a plugin that helps in connecting and transmitting data between a mobile handset and BLE peripherals.

Category: Utils

The ready-made tools below simplify and speed up common utility tasks while developing React Native apps.

Ramda is a library that eases the creation of functional pipelines without mutating user data.

Lodash, a toolkit of JavaScript functions, offers your development team clean and effective ways of working with collections and objects.

Reselect builds memoized selectors, which avoid unnecessary recalculation and redrawing of data. This can also speed up your app.

Moment works with various date formats and is capable of parsing, manipulating, and validating dates and times in JavaScript.

Validate.js, designed by Wrapp, offers app developers a declarative way to validate JS objects.
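The selector caching mentioned for Reselect above can be illustrated with a small sketch. It is written in plain JavaScript and is not Reselect's implementation: the helper `createMemoizedSelector`, its `recomputations` counter, and the todo data are assumptions invented for this example. The point it shows is that the expensive computation is skipped as long as the selected inputs are the same references as last time.

```javascript
// Hypothetical helper in the spirit of a memoized selector.
function createMemoizedSelector(inputSelectors, compute) {
  let lastInputs = null;
  let lastResult;
  let recomputations = 0;

  function selector(state) {
    const inputs = inputSelectors.map((select) => select(state));
    const unchanged =
      lastInputs !== null &&
      inputs.every((value, index) => value === lastInputs[index]);
    if (!unchanged) {
      // Only recompute when at least one input reference changed.
      lastResult = compute(...inputs);
      lastInputs = inputs;
      recomputations += 1;
    }
    return lastResult;
  }

  selector.recomputations = () => recomputations;
  return selector;
}

const selectVisibleTodos = createMemoizedSelector(
  [(state) => state.todos, (state) => state.showDone],
  (todos, showDone) => todos.filter((todo) => showDone || !todo.done)
);

const todos = [
  { title: "Write", done: true },
  { title: "Test", done: false },
];
const state1 = { todos, showDone: false, unrelated: 1 };
const state2 = { ...state1, unrelated: 2 }; // todos and showDone are untouched

const first = selectVisibleTodos(state1);
const second = selectVisibleTodos(state2);
console.log(first === second, selectVisibleTodos.recomputations()); // true 1
```

Returning the same array reference matters in a React app, because components that compare props by reference can then skip re-rendering.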

Category: Analytics

The following libraries act as bridges for integrating popular analytics tools into React Native Mobile App Development projects.

react-native-mixpanel is a wrapper for the Mixpanel library that lets developers use Mixpanel's features from React Native.

react-native-google-analytics-bridge acts as a bridge for establishing compatibility between Google Analytics tools and React Native projects.

Category: Localization

react-native-i18n helps in localizing or internationalizing applications. It integrates the i18n-js library in JavaScript for React Native applications.

Category: In-app Purchases

react-native-in-app-utils is a small library used to implement the in-app billing procedure for iOS apps. It can be effortlessly installed and is simple to work with.

react-native-billing is used for adding in-app billing to applications meant for the Android platform. It possesses a simple UI and wraps anjlab’s InApp Billing library to function as a bridge.

Category: AR and VR

ViroReact is used to speedily develop native cross-platform VR/AR apps in React Native. Its key functionalities are:

It has an easy learning curve

It comes with a high-performing native 3D rendering engine as well as a custom extension of React for creating VR and AR solutions.

It provides support for all kinds of platforms in VR including Samsung Gear VR, Google Cardboard, Google Daydream, etc. for Android and iOS; and AR including Android ARCore and iOS ARKit platforms.

It requires React-Viro-CLI and React-Native-CLI for writing cross-platform native code

Final Verdict:

I hope the aforesaid information was helpful and has given you a clear idea of which library/libraries would be most suitable for your next project.


Text

React For Website Development | Web Design and Development Company in Bangalore Limra Softech

React is a JavaScript library that provides a basic framework for rendering the user interface components of websites (a web framework). Components are structured hierarchically in React and can be written in its syntax as self-defined HTML tags. Thanks to the concepts of unidirectional data flow and the “Virtual DOM”, the React model promises a simple yet high-performance structure even for complex applications. [Wiki: https://en.wikipedia.org/wiki/React_(JavaScript_library) ]

Today, React is often used for modern web applications and projects, from small and simple ones (e.g. single-page applications) to large and complex ones. It is very flexible and can be used for UI Web Design projects of any size and on any platform, including mobile, web, and desktop development. Many world-famous companies, such as Instagram and Airbnb, therefore rely on React for their products.

The features and benefits of React

Virtual DOM

Performance is the biggest challenge in today’s dynamic web applications. To enable dynamic websites, the browser builds a DOM (Document Object Model), which stores every element, no matter how small, in a tree structure so that operations such as calculating positions or properties (color, size, etc.) can be carried out. If the DOM or some of its properties change, the affected parts must be recalculated. Traditionally, libraries such as jQuery have been used for this, carrying out the operations directly on the browser DOM. Since elements can influence each other in different ways, these changes and calculations are quite time-consuming.

The Virtual DOM solves this problem in React: by keeping a representation of the DOM in memory and updating the browser DOM only selectively, changes can be computed very quickly and the number of updates in the browser is minimized.
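The selective update described above can be sketched as a comparison of two in-memory trees that yields a short list of changes. The following plain JavaScript is a toy illustration under stated assumptions, not React's reconciliation algorithm, which is considerably more involved: the helpers `h` and `diff`, the patch types, and the path notation are all invented for this example.

```javascript
// Hypothetical virtual node: { tag, props, children }, where children are nodes or strings.
function h(tag, props = {}, ...children) {
  return { tag, props, children };
}

// Compare two virtual trees and collect the changes needed to turn the old one into the new one.
function diff(oldNode, newNode, path = "root", patches = []) {
  if (oldNode === undefined) {
    patches.push({ type: "CREATE", path, node: newNode });
  } else if (newNode === undefined) {
    patches.push({ type: "REMOVE", path });
  } else if (typeof oldNode === "string" || typeof newNode === "string") {
    // Text nodes: replace only when the text (or node kind) differs.
    if (oldNode !== newNode) patches.push({ type: "REPLACE", path, node: newNode });
  } else if (oldNode.tag !== newNode.tag) {
    patches.push({ type: "REPLACE", path, node: newNode });
  } else {
    // Same tag: compare props, then recurse into the children.
    const keys = new Set([...Object.keys(oldNode.props), ...Object.keys(newNode.props)]);
    for (const key of keys) {
      if (oldNode.props[key] !== newNode.props[key]) {
        patches.push({ type: "SET_PROP", path, key, value: newNode.props[key] });
      }
    }
    const length = Math.max(oldNode.children.length, newNode.children.length);
    for (let i = 0; i < length; i++) {
      diff(oldNode.children[i], newNode.children[i], `${path}/${i}`, patches);
    }
  }
  return patches;
}

const oldTree = h("ul", { class: "list" }, h("li", {}, "Apple"), h("li", {}, "Pear"));
const newTree = h("ul", { class: "list" }, h("li", {}, "Apple"), h("li", {}, "Plum"), h("li", {}, "Fig"));

const patches = diff(oldTree, newTree);
console.log(patches.map((patch) => `${patch.type} ${patch.path}`));
```

For these two trees only two patches result (one replaced text node and one created list item), so the unchanged `ul` and the first `li` would never be touched in the real DOM.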

Components

Components allow you to break your user interface into independent, reusable parts and consider each part in isolation. Components are JavaScript functions, or classes (since ES6) with a render method.

One-way data flow

React passes data in one direction only, down towards the components. This simplifies debugging.

JSX

A fundamental concept of React is JSX. JSX is a syntax extension of the JavaScript programming language that allows HTML-like elements to be used seamlessly in JavaScript. With JSX, React connects directly to the display (the view layer). The idea behind JSX is to separate code no longer by technology but by responsibility. This concept is called Separation of Concerns (SoC). Since a component no longer has to be assembled from two separate parts, JSX helps to write self-contained components that are themselves very easy to reuse.
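To show what "HTML-like elements in JavaScript" amounts to, the sketch below writes out by hand the kind of function calls a JSX compiler generates (classically `React.createElement(type, props, ...children)`). It runs without React: `createElement` here is a simplified stand-in, and `Greeting` and `toHtml` are hypothetical names introduced only for this example.

```javascript
// Simplified stand-in for React.createElement: builds a plain element description.
function createElement(type, props, ...children) {
  return { type, props: { ...(props || {}), children } };
}

// A component: a function from props to an element description.
function Greeting(props) {
  // JSX form:  <p className="greeting">Hello, {props.name}!</p>
  return createElement("p", { className: "greeting" }, "Hello, ", props.name, "!");
}

// JSX form:  <Greeting name="Ada" />
const element = createElement(Greeting, { name: "Ada" });

// Hypothetical renderer that turns element descriptions into an HTML string.
// It does no escaping, so it is for illustration only.
function toHtml(node) {
  if (typeof node === "string") return node;
  if (typeof node.type === "function") return toHtml(node.type(node.props));
  const { children, className } = node.props;
  const attrs = className ? ` class="${className}"` : "";
  return `<${node.type}${attrs}>${children.map(toHtml).join("")}</${node.type}>`;
}

console.log(toHtml(element)); // <p class="greeting">Hello, Ada!</p>
```

Markup and logic live in the same function, which is the "separate by responsibility, not by technology" idea in practice.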

Advantages of React for Development

Simplicity

React has a gentle learning curve because it is very easy to build components with. A component in React is little more than a JavaScript function or class that contains all of the logic to represent the component. You don’t even have to learn a separate template language to write a component, because the UI part of a component is written in JavaScript. JSX improves readability, especially by making it easier to write DOM elements.

Scalability and flexibility

The core of React is components and their composition into an application. Components are divided into classes that can be easily extended and reused. This allows scalable and flexible applications to be developed. In addition, the component state can be inspected and changed at any time using the React API.

Simplified development complexity and reusability

Another big advantage of React Js Web Development is the JSX syntax extension, which offers excellent reusability. React's standard approach suggests using inline styles described with JavaScript objects. JSX lets you keep markup and styling together in one JavaScript file and frees you from the pain of linking to other files via attributes. Each component can be defined freely anywhere in the project and given its own state.

Active community and sufficient reusable components

React with its ecosystem is a kind of flexible framework. You can choose libraries yourself and add them to the core of the application.

To know more about Limra Web Development Company in Bangalore, kindly visit us at https://www.limrasoftech.com/web-design-company-bangalore/

Contact Details: +91 8041732999


Text

Why we use React for website development

React not only brings technical advantages, but it also simplifies development.

React is a JavaScript library that provides a basic framework for rendering the user interface components of websites (a web framework). Components are structured hierarchically in React and can be written in its syntax as self-defined HTML tags. Thanks to the concepts of unidirectional data flow and the “Virtual DOM”, the React model promises a simple yet high-performance structure even for complex applications. [Wiki: https://de.wikipedia.org/wiki/React ]