#index CSV XML HTML Google

Complete List of File Types Indexable by Google

Discover all file types indexable by Google, including PDFs, DOCX, images, videos, and code files. Learn how to optimize non-HTML formats for search visibility and use the filetype: operator effectively.

File Types Indexable by Google: A Comprehensive Guide for Webmasters and SEOs

When it comes to search engine optimization (SEO), content is king, but so is the format that content comes in.…

#DOCX SEO#file types indexed by Google#filetype operator Google Search#Google indexable file types#Google indexing media files#Googlebot file support#image formats indexable#index CSV XML HTML Google#optimize PDFs for SEO#PDF indexing Google#search file types in Google#searchable documents Google#SEO document formats#text files indexed by Google#video formats indexable by Google

How to Scrape AliExpress Review Data With Python?

Introduction

Scraping data from e-commerce websites like AliExpress can provide valuable insights into product performance, customer satisfaction, and market trends. AliExpress, one of the largest online retail platforms, hosts a vast amount of review data that is useful for data analysis, sentiment analysis, and business intelligence. This blog will guide you through scraping AliExpress review data using Python.

About AliExpress

AliExpress is a global online retail platform launched in 2010 by the Alibaba Group. It connects international buyers with Chinese sellers, offering a wide range of products including electronics, fashion, home goods, and more. Known for its affordable prices and vast product selection, AliExpress caters to both individual consumers and businesses looking for wholesale deals. The platform supports multiple languages and currencies, enhancing its accessibility and appeal to a global audience. AliExpress is also known for its buyer protection policies, ensuring secure transactions and customer satisfaction. The platform's review system allows customers to share their experiences and rate products, providing valuable feedback to other shoppers. With its comprehensive logistics network and various shipping options, AliExpress has become a popular choice for online shopping, especially for those seeking cost-effective alternatives to local marketplaces.

Prerequisites

Before we dive into the actual scraping process, ensure you have the following tools and libraries installed:

Python: Make sure you have Python installed on your system. You can download it from python.org.

BeautifulSoup: A library used for parsing HTML and XML documents.

Requests: A simple HTTP library for Python.

Pandas: A data manipulation and analysis library.

Selenium: A browser automation tool, useful if JavaScript rendering is required.

Install these libraries using pip:

pip install beautifulsoup4 requests pandas selenium

Understanding the AliExpress Website Structure

Before we start scraping, it's crucial to understand the structure of the AliExpress review pages. Reviews are often loaded dynamically with JavaScript, which means we may need to use Selenium to render the JavaScript content before extracting the data.

Scraping AliExpress Review Data

Step 1: Setting Up Selenium

First, let's set up Selenium to automate the browser and fetch the dynamically loaded content.

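Download the appropriate WebDriver for your browser (e.g., ChromeDriver for Google Chrome) from its official download page. Recent Selenium releases (4.6 and later) can also fetch a matching driver automatically through Selenium Manager.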

Place the WebDriver executable in a directory included in your system's PATH.
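
A minimal sketch of the browser setup, assuming Chrome and Selenium 4. The helper names chrome_arguments and build_driver and the window size are illustrative choices, not part of Selenium itself.

```python
def chrome_arguments(headless: bool = True) -> list:
    """Return command-line switches for Chrome (illustrative defaults)."""
    args = ["--window-size=1280,900"]
    if headless:
        # "--headless=new" selects Chrome's current headless mode.
        args.append("--headless=new")
    return args


def build_driver(headless: bool = True):
    """Start a Chrome session; needs selenium and a local Chrome install."""
    # Imported here so the helper above stays usable without Selenium.
    from selenium import webdriver
    from selenium.webdriver.chrome.options import Options

    options = Options()
    for arg in chrome_arguments(headless):
        options.add_argument(arg)
    return webdriver.Chrome(options=options)
```

Calling build_driver(headless=False) opens a visible browser window, which is handy while you are still working out which elements to target.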

Step 2: Navigating to the Product Page
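
A minimal sketch of this step, assuming a Selenium driver is already running. The URL pattern and the helper names are assumptions for illustration; check the pattern against a real product link before relying on it.

```python
def product_url(item_id: str) -> str:
    """Build a product page URL from an item ID (assumed URL pattern)."""
    return f"https://www.aliexpress.com/item/{item_id}.html"


def open_product_page(driver, item_id: str) -> str:
    """Point the browser at the product page and return the URL used."""
    url = product_url(item_id)
    # driver.get() blocks until the initial page load has finished;
    # content loaded later by JavaScript may still be missing.
    driver.get(url)
    return url
```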

Step 3: Locating and Loading Reviews
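
Because reviews are loaded dynamically, one common approach is to scroll until the page stops growing. The sketch below assumes that scrolling triggers loading; on the live site you may instead need to click a reviews tab or a "load more" button, whose selectors are not covered here. The function name and defaults are illustrative.

```python
import time


def scroll_until_stable(driver, pause: float = 1.0, max_rounds: int = 10) -> int:
    """Scroll to the bottom repeatedly until the page height stops changing.

    Returns the number of scroll rounds performed.
    """
    last_height = driver.execute_script("return document.body.scrollHeight")
    rounds = 0
    for rounds in range(1, max_rounds + 1):
        driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")
        time.sleep(pause)  # give lazy-loaded content time to arrive
        new_height = driver.execute_script("return document.body.scrollHeight")
        if new_height == last_height:
            break  # nothing new was loaded in this round
        last_height = new_height
    return rounds
```

The max_rounds cap keeps the loop from running forever on pages that keep appending content.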

Step 4: Extracting Review Data
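
Once the reviews are rendered, the page HTML (for example driver.page_source) can be parsed into records. The sketch below uses the standard library's html.parser in place of BeautifulSoup so that it runs without extra packages. The class names review-item, review-rating, review-text, and review-date are hypothetical placeholders; inspect the live page to find the real ones.

```python
from html.parser import HTMLParser

# Hypothetical class names; replace them after inspecting the real page.
REVIEW_CLASS = "review-item"
FIELD_CLASSES = {
    "review-rating": "rating",
    "review-text": "text",
    "review-date": "date",
}


class ReviewParser(HTMLParser):
    """Collect one dict per review container found in the HTML."""

    def __init__(self):
        super().__init__()
        self.reviews = []
        self._current = None  # review dict being filled
        self._field = None    # field currently capturing text

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if REVIEW_CLASS in classes:
            self._current = {"rating": "", "text": "", "date": ""}
            self.reviews.append(self._current)
            return
        if self._current is not None:
            for cls in classes:
                if cls in FIELD_CLASSES:
                    self._field = FIELD_CLASSES[cls]

    def handle_endtag(self, tag):
        # Simplification: assumes each field holds plain text only.
        self._field = None

    def handle_data(self, data):
        if self._current is not None and self._field:
            self._current[self._field] += data.strip()


def extract_reviews(html: str) -> list:
    parser = ReviewParser()
    parser.feed(html)
    return parser.reviews
```

The resulting list of dicts can be passed straight to pandas.DataFrame(...) to build a table of reviews.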

Step 5: Saving the Data

Finally, save the extracted review data to a CSV file for further analysis. Here df is assumed to be the pandas DataFrame holding the extracted reviews:

df.to_csv('aliexpress_reviews.csv', index=False)

Handling Anti-Scraping Measures

AliExpress, like many other websites, has measures in place to prevent automated scraping. Here are some tips to avoid getting blocked:

Use Proxies: Rotate proxies to distribute your requests and avoid getting banned.

Random Delays: Introduce random delays between requests to mimic human behavior.

User-Agent Rotation: Rotate user-agent strings to make your requests appear as if they are coming from different browsers.

Implementing Proxies and User-Agent Rotation
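
A minimal sketch of the three tips above, assuming the Requests library for the actual HTTP call. The proxy addresses are placeholders, the user-agent strings are examples only, and the helper names are illustrative.

```python
import itertools
import random
import time

# Example user-agent strings; keep this list current in real use.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.4 Safari/605.1.15",
]

# Placeholder proxies; substitute addresses you are allowed to use.
PROXIES = [
    "http://proxy1.example.com:8080",
    "http://proxy2.example.com:8080",
]
_proxy_pool = itertools.cycle(PROXIES)


def next_request_settings() -> dict:
    """Pick the next proxy in rotation and a random user agent."""
    proxy = next(_proxy_pool)
    return {
        "headers": {"User-Agent": random.choice(USER_AGENTS)},
        "proxies": {"http": proxy, "https": proxy},
    }


def random_delay(min_seconds: float = 2.0, max_seconds: float = 6.0) -> float:
    """Sleep for a random interval and return how long we slept."""
    delay = random.uniform(min_seconds, max_seconds)
    time.sleep(delay)
    return delay


def fetch(url: str) -> str:
    """Fetch a page with rotated settings, then pause before returning."""
    import requests  # third-party; imported lazily

    response = requests.get(url, timeout=30, **next_request_settings())
    response.raise_for_status()
    random_delay()
    return response.text
```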

Use Cases of Scraping AliExpress Review Data

Market Research and Competitor Analysis

Extracting AliExpress review data can provide invaluable insights for market research and competitor analysis. With review data in hand, businesses can analyze customer feedback on competitors' products to understand their strengths and weaknesses. This information can guide product development and marketing strategies, helping companies stay competitive in the e-commerce landscape.

Sentiment Analysis

Performing sentiment analysis on AliExpress review data allows businesses to gauge customer satisfaction and identify common pain points. By scraping and analyzing reviews, companies can detect positive and negative trends in customer feedback. This enables them to make informed decisions on product improvements and customer service enhancements.

Product Development

Product developers can benefit from AliExpress review data extraction by better understanding customer preferences and needs. Reviews often contain detailed information about product performance, quality, and features that customers value. This data can be used to refine existing products or develop new ones that better meet market demands.

Pricing Strategy

E-commerce data scraping from AliExpress reviews can help businesses optimize their pricing strategies. By analyzing reviews, companies can identify how price changes impact customer satisfaction and sales. This insight allows for the adjustment of pricing models to maximize revenue while maintaining customer loyalty.

Enhancing Customer Experience

Extracting AliExpress review data can significantly enhance the customer experience by identifying common issues and areas for improvement. Businesses can use this data to address frequent complaints, improve product descriptions, and enhance customer support. This proactive approach can lead to higher customer satisfaction and retention rates.

Influencer and Affiliate Marketing

Marketers can scrape AliExpress review data to identify potential influencers and affiliates. Reviews often highlight individuals who are particularly enthusiastic about specific products. These individuals can be approached for collaborations to promote products, leveraging their positive experiences to reach a broader audience.

Trend Analysis

AliExpress review data extraction helps identify emerging trends in consumer preferences and market demands. By continuously monitoring reviews, businesses can stay ahead of market shifts and adapt their offerings accordingly. This ensures they remain relevant and competitive in a fast-evolving e-commerce environment.

By leveraging the insights gained from scraping AliExpress review data, businesses can make data-driven decisions to improve their products, marketing strategies, and customer relations, ultimately driving growth and success in the e-commerce sector.

How Can Datazivot Help You with AliExpress Review Data Extraction?

Comprehensive Data Extraction Services

Datazivot specializes in e-commerce review data scraping, offering robust solutions to scrape AliExpress review data efficiently and accurately. By leveraging advanced scraping technologies, Datazivot can extract detailed review data from AliExpress, including ratings, customer comments, timestamps, and more. This comprehensive data extraction service ensures you receive all the necessary information to make informed business decisions.

Customized Solutions

Understanding that each business has unique needs, Datazivot provides customized AliExpress review data extraction solutions tailored to your specific requirements. Whether you need data on specific products, categories, or timeframes, Datazivot can tailor its scraping services to meet your exact specifications. This customization ensures you get the most relevant and valuable insights from the data.

High-Quality Data and Accuracy

Datazivot employs state-of-the-art scraping techniques to ensure the data extracted is accurate and of high quality. The company's sophisticated algorithms and tools can handle large volumes of data without compromising on accuracy. This high level of precision is crucial for businesses that rely on detailed and reliable data for market analysis, product development, and strategic planning.

Real-Time Data Extraction

In the fast-paced e-commerce environment, having access to real-time data is essential. Datazivot offers real-time AliExpress review data extraction, enabling businesses to stay up-to-date with the latest customer feedback and market trends. This real-time access helps businesses react promptly to changes in consumer sentiment and market dynamics.

Data Integration and Analysis

Beyond just scraping data, Datazivot assists with the integration and analysis of the extracted AliExpress review data. The company provides tools and services to help you seamlessly integrate the data into your existing systems and workflows. Additionally, Datazivot offers analytical support to help you derive actionable insights from the data, enhancing your decision-making process.

Compliance and Ethical Scraping

Datazivot adheres to strict ethical guidelines and legal standards in its product review data scraping practices. The company ensures that its methods comply with AliExpress’s terms of service and relevant data protection regulations. This commitment to ethical scraping provides peace of mind that your data extraction activities are conducted responsibly and legally.

Conclusion

Scraping AliExpress review data, with or without a Reviews Scraping API, involves navigating dynamic content and handling anti-scraping measures effectively. By leveraging libraries like Selenium, BeautifulSoup, and Requests, you can extract valuable review data for analysis and insights. Remember to respect the website's terms of service and use scraping ethically.

With Datazivot’s expertise in e-commerce data scraping, you can streamline the process of extracting AliExpress review data and gain actionable insights for your projects using Reviews Scraping API. Contact Datazivot today to learn how we can help you harness the power of AliExpress review data for your business!

#ScrapeAliExpressReviewData#ExtractAliExpressReviewData#ProductReviewDataScraping#AliExpressReviewDataScraping#AliExpressReviewDataExtraction#AliExpressReviewDataScraper#EcommerceReviewDataScraping

Python in Data Engineering: Powering Your Data Processes

Python is a globally recognized programming language that consistently ranks high in surveys. For instance, it took first position in the PYPL (PopularitY of Programming Language) index and second in the TIOBE index. Moreover, the 2021 Stack Overflow survey listed Python as the most sought-after and third most adored programming language.

Predominantly regarded as the language of choice for data scientists, Python has also made significant strides in data engineering, becoming a critical tool in the field.

Data Engineering in the Cloud

Data engineers and data scientists often encounter similar challenges, particularly concerning data processing. However, in the realm of data engineering, our primary focus is on robust, reliable, and efficient industrial processes like data pipelines and ETL (Extract-Transform-Load) jobs, irrespective of whether the solution is for on-premise or cloud platforms.

Python has showcased its suitability for cloud environments, prompting cloud service providers to integrate Python for controlling and implementing their services. Major players in the cloud arena like Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure have incorporated Python solutions in their services to address various problems.

In the serverless computing domain, Python is one of the few programming languages supported by AWS Lambda Functions, GCP Cloud Functions, and Azure Functions. These services enable on-demand triggering of data ETL processes without the need for a perpetually running server.

For big data problems where ETL jobs require heavy processing, parallel computing becomes essential. PySpark, the Python wrapper for the Spark engine, is supported by AWS Elastic MapReduce (EMR), GCP's Dataproc, and Azure's HDInsight.

Each of these platforms offers APIs, which are critical for programmatic data retrieval or job triggering, and these are conveniently wrapped in Python SDKs: boto3 for AWS, the google-cloud-* packages for GCP, and azure-sdk-for-python for Azure.

Python's Role in Data Ingestion

Business data can come from various sources such as SQL and NoSQL databases, flat files like CSVs, spreadsheets, external systems, APIs, and web documents. Python's popularity has led to the development of numerous libraries and modules for accessing these data, such as SQLAlchemy for SQL databases, and Scrapy, Beautiful Soup, and Requests for web-originated data.

A noteworthy library is Pandas, which facilitates reading data into "DataFrames" from various formats, including CSV, TSV, JSON, XML, HTML, SQL, Microsoft Excel and OpenDocument spreadsheets, and several binary formats. It can also export DataFrames to LaTeX.
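
To make the ingestion idea concrete, here is a small extract-transform-load sketch that reads CSV text and loads it into a SQL table. It uses only the standard library (csv and sqlite3) rather than Pandas or SQLAlchemy so that it runs anywhere; the table name, column names, and sample data are invented for illustration.

```python
import csv
import io
import sqlite3


def load_csv_into_sqlite(csv_text: str, conn: sqlite3.Connection) -> int:
    """Extract rows from CSV text, clean them, and load them into SQLite."""
    reader = csv.DictReader(io.StringIO(csv_text))
    rows = [
        (row["product"].strip(), float(row["price"]))
        for row in reader
        if row["price"]  # skip rows with a missing price
    ]
    conn.execute("CREATE TABLE IF NOT EXISTS products (name TEXT, price REAL)")
    conn.executemany("INSERT INTO products VALUES (?, ?)", rows)
    conn.commit()
    return len(rows)


# Invented sample data: one row has padding, one has no price.
sample = "product,price\n widget ,9.50\ngadget,\ngizmo,12\n"
conn = sqlite3.connect(":memory:")
loaded = load_csv_into_sqlite(sample, conn)
```

With Pandas, the same flow is typically a read_csv call followed by DataFrame.to_sql.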

Parallel Computing with PySpark

Apache Spark, an open-source engine for processing large volumes of data, leverages parallel computing principles in a highly efficient and fault-tolerant manner. PySpark, a Python interface for Spark, is extensively used and offers a straightforward way to develop ETL jobs for those familiar with Pandas.

Job Scheduling with Apache Airflow

Cloud platforms have commercialized popular Python-based tools as "managed" services for easier setup and operation. One such example is Amazon's Managed Workflows for Apache Airflow. Apache Airflow, written in Python, is an open-source workflow management platform, allowing you to author and schedule workflow processing sequences programmatically.

Conclusion

Python plays a significant role in data engineering and is an indispensable tool for any data engineer. With its ability to implement and control most relevant technologies and processes, Python has been a natural choice for Mindfire Solutions, allowing us to offer data engineering services and web development solutions in Python. If you're looking for data engineering services, please feel free to contact us at Mindfire Solutions. We're always ready to discuss your needs and find out how we can assist you in meeting your business goals.

Photo

Full technical SEO audit of your website

"He provided very detailed and neat work. Also, he has a way of explaining things that makes you want to listen to him, and you feel like you can learn. He carried out a technical SEO audit for a website and I am 100% sure that I would hire him again for this service or another."

WHAT'S WRONG WITH YOUR WEBSITE/ONLINE BUSINESS?

If your website has any technical problems, they could impact many things: your position in Google, how quickly your site loads on phones, getting new customers, and making more money.

Meet Terry O, SEO Consultant. He will run a technical SEO audit report on your website. This will list any technical on-page SEO issues affecting your SEO performance. That includes: crawlability issues and errors, Google indexability status, link issues, redirects, social tagging issues, page speed issues, slow page issues, image optimisation issues, problems with JavaScript, CSS issues, HTTP status codes, crawl depth, XML sitemaps check, HTML sitemaps check, bot access, site architecture, URL structure, site content, title tags, social sharing, and popups and notifications.

THIS AUDIT IS ONE OF THE MOST IMPORTANT THINGS YOU CAN DO FOR YOUR SITE. This report will save you $1000's on hiring an expensive digital design agency to fix your website.

What you get: a video review of your audit and printouts; a scored rating out of 100 for your website; a list of errors or warnings in .csv files and Excel sheets that you can review; and where the problems are on your site.

Contact Now: https://lnkd.in/gWYvci-3 #seo #seoaudit #seoauditexpert #searchengineoptimizationaudit #websiteaudit

6 Free SEO Tools to Powercharge Your SEO Campaign

Every SEO professional agrees that doing SEO barehanded is a dead-end deal. SEO software makes that time-consuming and painstaking job much quicker and easier. There is a great variety of SEO tools designed to serve every purpose of website optimization. They give you a helping hand at every stage of SEO, from keyword research to the analysis of your SEO campaign results. (SANWELLS)

Nowadays you can find a wide range of completely free SEO tools on the web. This overview of the most popular free SEO tools will help you pick the ones that can make your website's popularity soar.

Google AdWords Keyword Tool

Keywords lay the foundation of every SEO campaign. Hence keyword discovery and refinement is the first step on the way to Google's top. Of course you can put on your thinking cap and make up the list of keywords on your own. But that is like a shot in the dark, since your ideas may differ significantly from the terms people actually enter in Google.

Here the Google AdWords Keyword Tool comes in handy. Although this tool was originally aimed at Google AdWords advertisers, you can use it for keyword research too. It helps you find out which keywords to target, shows the competition for the selected keywords, lets you see estimated traffic volumes, and provides a list of suggestions on popular keywords. The Google AdWords Keyword Tool has a user-friendly interface and, moreover, it is completely free. You can use paid alternatives like Wordtracker or Keyword Discovery, which can be effective as well, but the Google AdWords Keyword Tool is the ultimate leader among free keyword research tools.

XML Sitemaps Generators

To make sure all your pages get crawled and indexed, you should set up sitemaps for your website. They are like ready-to-crawl webs for Google's spiders that let them quickly find out which pages are in place and which ones have been recently updated. Sitemaps can also be useful for human visitors, since they organize the whole structure of a website's content and make site navigation much easier. The XML Sitemap Generator lets you create XML and ROR sitemaps that can be submitted to Google, Yahoo!, and plenty of other search engines. This SEO tool also lets you generate HTML sitemaps that improve website navigation for humans and make your website visitor-friendly.
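
For readers curious what such a generator produces, the sketch below builds a small XML sitemap with Python's standard library, following the sitemaps.org protocol (a urlset element containing url entries with loc and lastmod). The function name and the example.com URLs are placeholders for illustration.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages) -> str:
    """Build sitemap XML from (url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod  # e.g. "2024-01-15"
    return ET.tostring(urlset, encoding="unicode")


xml_text = build_sitemap([
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/about", "2024-02-01"),
])
```

When saving the result as sitemap.xml, add an XML declaration at the top of the file and use UTF-8 encoding.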

SEO Book's Rank Checker

To check whether your optimization campaign is blowing hot or cold, you need a good rank checking tool to measure the fluctuations of your website's rankings. SEO Book's Rank Checker can be of great help with that. It's a Firefox plug-in that lets you check rankings in the Big Three (Google, Yahoo!, and Bing) and easily export the collected data. All you have to do is enter your website's URL and the keywords you want to check your positions for. That's it: in a few seconds SEO Book's Rank Checker presents you with the results for your rankings. It's fast, easy to use, and free.

Backlink Watch

Links are like the ace of trumps in the Google popularity game. The point is that the more quality links are in your backlink profile, the higher your website ranks. That's why a good SEO tool for link research and analysis is a must-have in your arsenal. Backlink Watch is an online backlink checker that not only shows which websites link to your page, but also gives you some data for SEO analysis, such as the title of the linking page, the anchor text of the link, and whether the link has a dofollow or nofollow tag. The only downside of this tool is that it gives you only 1,000 backlinks per website, regardless of the actual number of backlinks a website has.

Compete

Compete[dot]com offers a huge pool of analytical data to fish from. It's an online tool for monitoring and analyzing online competition that provides two categories of services: free Site Analytics and subscription-based paid Search Analytics, which lets you take advantage of some additional features. Compete is an out-and-out SEO tool that lets you see traffic and engagement metrics for a specific website and find sites for affiliate and link building purposes. Compete is also a good keyword analyzer, since it lets you analyze your online competitors' keywords. Other features worth mentioning are subdomain analysis, export to CSV, tagging, and so on.

SEO PowerSuite

SEO PowerSuite is an all-in-one SEO toolkit that lets you cover all aspects of website optimization. It consists of four SEO tools to nail all SEO tasks. WebSite Auditor is a great leg-up for creating smashing content for your website. It analyzes your top 10 online competitors and works out a surefire plan based on the best optimization practices in your niche. Rank Tracker excels at monitoring your website's positions and generating the most click-productive keywords. SEO SpyGlass is powercharged SEO software for backlink checking and analysis. It is the only SEO tool that lets you discover up to 50,000 backlinks per website and generate reports with a ready-to-use website optimization strategy. And the last and most advanced in this row is LinkAssistant. It is a feature-rich powerhouse SEO tool for link building and management that shoulders the main aspects of off-page optimization.

Free versions of these four tools let you tackle the main optimization challenges. You can also purchase an extended version of SEO PowerSuite with advanced features to make your optimization campaign complete.

Summing things up, we can conclude that there are plenty of SEO tools that won't burn a hole in your pocket and that let you run your website optimization campaign effectively with a minimum of cost and effort.

You can find more information on the best free SEO tools and SEO software that turn your SEO into child's play.

Which Is The Best PostgreSQL GUI? 2021 Comparison

PostgreSQL graphical user interface (GUI) tools help open source database users manage, manipulate, and visualize their data. In this post, we discuss the top 6 GUI tools for administering your PostgreSQL hosting deployments. PostgreSQL is the fourth most popular database management system in the world, and it is heavily used in applications of all sizes. The traditional way to work with databases is the command-line interface (CLI); however, this interface presents a number of issues:

It has a steep learning curve before you can get the best out of the DBMS.

The console display may not be to your liking, and it shows very little information at a time.

It is difficult to browse databases and tables, check indexes, and monitor databases through the console.

Many still prefer CLIs over GUIs, but that group is steadily shrinking. I believe anyone who came into programming after 2010 will tell you GUI tools increase their productivity over a CLI solution.

Why Use a GUI Tool?

Now that we understand the issues users face with the CLI, let’s take a look at the advantages of using a PostgreSQL GUI:

Shortcut keys make it easier to use, and much easier to learn for new users.

Offers great visualization to help you interpret your data.

You can remotely access and navigate another database server.

The window-based interface makes it much easier to manage your PostgreSQL data.

Easier access to files, features, and the operating system.

So, bottom line, GUI tools make PostgreSQL developers’ lives easier.

Top PostgreSQL GUI Tools

Today I will tell you about the 6 best PostgreSQL GUI tools. If you want a quick overview of this article, feel free to check out our infographic at the end of this post. Let’s start with the first and most popular one.

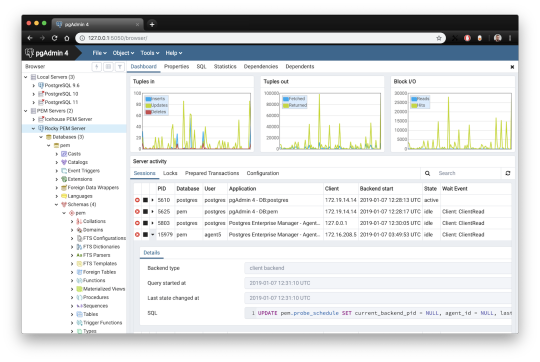

1. pgAdmin

pgAdmin is the de facto GUI tool for PostgreSQL, and the first tool anyone would use for PostgreSQL. It supports all PostgreSQL operations and features while being free and open source. pgAdmin is used by both novice and seasoned DBAs and developers for database administration.

Here are some of the top reasons why PostgreSQL users love pgAdmin:

Create, view, and edit all common PostgreSQL objects.

Offers a graphical query planning tool with color syntax highlighting.

The dashboard lets you monitor server activities such as database locks, connected sessions, and prepared transactions.

Since pgAdmin is a web application, you can deploy it on any server and access it remotely.

The pgAdmin UI consists of detachable panels that you can arrange to your liking.

Provides a procedural language debugger to help you debug your code.

pgAdmin has a portable version which can help you easily move your data between machines.

There are several cons of pgAdmin that users have generally complained about:

The UI is slow and non-intuitive compared to paid GUI tools.

pgAdmin uses too many resources.

pgAdmin can be used on Windows, Linux, and Mac OS. We listed it first as it’s the most used GUI tool for PostgreSQL, and the only native PostgreSQL GUI tool in our list. As it’s dedicated exclusively to PostgreSQL, you can expect it to update with the latest features of each version. pgAdmin can be downloaded from their official website.

pgAdmin Pricing: Free (open source)

2. DBeaver

DBeaver is a major cross-platform GUI tool for PostgreSQL that both developers and database administrators love. DBeaver is not a native GUI tool for PostgreSQL, as it supports all the popular databases like MySQL, MariaDB, Sybase, SQLite, Oracle, SQL Server, DB2, MS Access, Firebird, Teradata, Apache Hive, Phoenix, Presto, and Derby – any database which has a JDBC driver (over 80 databases!).

Here are some of the top DBeaver GUI features for PostgreSQL:

Visual Query builder helps you to construct complex SQL queries without actual knowledge of SQL.

It has one of the best editors – multiple data views are available to support a variety of user needs.

Convenient navigation among data.

In DBeaver, you can generate fake data that looks like real data allowing you to test your systems.

Full-text data search against all chosen tables/views with search results shown as filtered tables/views.

Metadata search among rows in database system tables.

Import and export data with many file formats such as CSV, HTML, XML, JSON, XLS, XLSX.

Provides advanced security for your databases by storing passwords in secured storage protected by a master password.

Automatically generated ER diagrams for a database/schema.

Enterprise Edition provides a special online support system.

One of the cons of DBeaver is it may be slow when dealing with large data sets compared to some expensive GUI tools like Navicat and DataGrip.

You can run DBeaver on Windows, Linux, and macOS, and easily connect DBeaver to PostgreSQL with or without SSL. It has a free open-source edition as well as an enterprise edition. You can buy the standard license for the enterprise edition at $199, or by subscription at $19/month. The free version is good enough for most companies, and many DBeaver users will tell you the free edition is better than pgAdmin.

DBeaver Pricing: Free community, $199 standard license

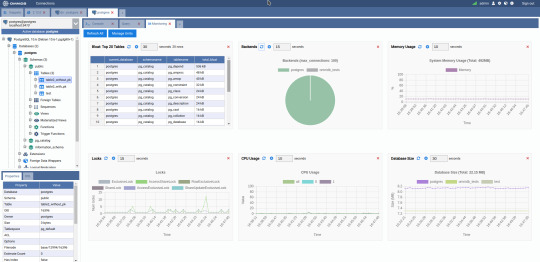

3. OmniDB

The next PostgreSQL GUI we’re going to review is OmniDB. OmniDB lets you add, edit, and manage data and all other necessary features in a unified workspace. Although OmniDB supports other database systems like MySQL, Oracle, and MariaDB, their primary target is PostgreSQL. This open source tool is mainly sponsored by 2ndQuadrant. OmniDB supports all three major platforms, namely Windows, Linux, and Mac OS X.

There are many reasons why you should use OmniDB for your Postgres developments:

You can easily configure it by adding and removing connections, and leverage encrypted connections when remote connections are necessary.

The smart SQL editor helps you write SQL code through autocomplete and syntax highlighting features.

Add-on support is available for debugging PostgreSQL functions and procedures.

You can monitor your database from a dashboard of customizable charts that show real-time information.

Query plan visualization helps you find bottlenecks in your SQL queries.

It allows access from multiple computers with encrypted personal information.

Developers can add and share new features via plugins.

There are a couple of cons with OmniDB:

OmniDB lacks community support in comparison to pgAdmin and DBeaver. So, you might find it difficult to learn this tool, and could feel a bit alone when you face an issue.

It doesn’t have as many features as paid GUI tools like Navicat and DataGrip.

OmniDB users have favorable opinions about it, and you can download OmniDB for PostgreSQL from here.

OmniDB Pricing: Free (open source)

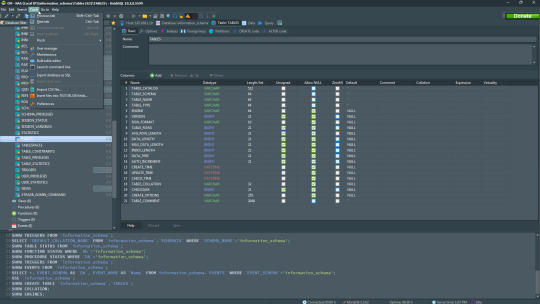

4. DataGrip

DataGrip is a cross-platform integrated development environment (IDE) that supports multiple database environments. The most important thing to note about DataGrip is that it’s developed by JetBrains, one of the leading brands for developing IDEs. If you have ever used PhpStorm, IntelliJ IDEA, PyCharm, WebStorm, you won’t need an introduction on how good JetBrains IDEs are.

There are many exciting features to like in the DataGrip PostgreSQL GUI:

The context-sensitive and schema-aware auto-complete feature suggests more relevant code completions.

It has a beautiful and customizable UI along with an intelligent query console that keeps track of all your activities so you won’t lose your work. Moreover, you can easily add, remove, edit, and clone data rows with its powerful editor.

There are many ways to navigate schema between tables, views, and procedures.

It can immediately detect bugs in your code and suggest the best options to fix them.

It has an advanced refactoring process – when you rename a variable or an object, it can resolve all references automatically.

DataGrip is not just a GUI tool for PostgreSQL, but a full-featured IDE that has features like version control systems.

There are a few cons in DataGrip:

The obvious issue is that it's not native to PostgreSQL, so it lacks PostgreSQL-specific features. For example, it is not easy to debug errors, as not all of them are shown.

Not only DataGrip, but most JetBrains IDEs have a steep learning curve, which can be a bit overwhelming for beginner developers.

It consumes a lot of resources, like RAM, from your system.

DataGrip supports a tremendous list of database management systems, including SQL Server, MySQL, Oracle, SQLite, Azure Database, DB2, H2, MariaDB, Cassandra, HyperSQL, Apache Derby, and many more.

DataGrip supports all three major operating systems, Windows, Linux, and Mac OS. One of the downsides is that JetBrains products are comparatively costly. DataGrip has two different prices for organizations and individuals. DataGrip for Organizations will cost you $19.90/month, or $199 for the first year, $159 for the second year, and $119 for the third year onwards. The individual package will cost you $8.90/month, or $89 for the first year. You can test it out during the free 30 day trial period.

DataGrip Pricing: $8.90/month to $199/year
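To make the tiered organization pricing quoted above easier to compare, here is a minimal arithmetic sketch. It assumes the monthly price stays at $19.90 for the whole period and that the third-year price applies to every later year; the function names are illustrative only.

```python
# Prices in US cents, taken from the organization plan quoted above.
MONTHLY_CENTS = 1990
YEARLY_CENTS = [19900, 15900, 11900]  # year 1, year 2, year 3 onwards


def yearly_plan_total(years: int) -> int:
    """Total cost in cents of the annual plan over `years` years."""
    total = 0
    for year in range(years):
        # From the third year onwards the price stays at the last tier.
        tier = min(year, len(YEARLY_CENTS) - 1)
        total += YEARLY_CENTS[tier]
    return total


def monthly_plan_total(years: int) -> int:
    """Total cost in cents of paying month by month (assumed constant price)."""
    return MONTHLY_CENTS * 12 * years


for years in (1, 3, 5):
    yearly = yearly_plan_total(years) / 100
    monthly = monthly_plan_total(years) / 100
    print(f"{years} year(s): annual plan ${yearly:.2f}, monthly plan ${monthly:.2f}")
```

Working in integer cents avoids floating-point rounding when the prices are summed.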

5. Navicat

Navicat is an easy-to-use graphical tool that targets both beginner and experienced developers. It supports several database systems such as MySQL, PostgreSQL, and MongoDB. One of the special features of Navicat is its collaboration with cloud databases like Amazon Redshift, Amazon RDS, Amazon Aurora, Microsoft Azure, Google Cloud, Tencent Cloud, Alibaba Cloud, and Huawei Cloud.

Important features of Navicat for Postgres include:

It has a very intuitive and fast UI. You can easily create and edit SQL statements with its visual SQL builder, and the powerful code auto-completion saves you a lot of time and helps you avoid mistakes.

Navicat has a powerful data modeling tool for visualizing database structures, making changes, and designing entire schemas from scratch. You can manipulate almost any database object visually through diagrams.

Navicat can run scheduled jobs and notify you via email when the job is done running.

Navicat is capable of synchronizing different data sources and schemas.

Navicat has an add-on feature (Navicat Cloud) that offers project-based team collaboration.

It establishes secure connections through SSH tunneling and SSL ensuring every connection is secure, stable, and reliable.

You can import and export data to diverse formats like Excel, Access, CSV, and more.

Despite all the good features, there are a few cons that you need to consider before buying Navicat:

The license is locked to a single platform. You need to buy different licenses for PostgreSQL and MySQL. Considering its high price, this can be hard on a small company or a freelancer.

It has so many features that a newcomer will need some time to get going.

You can use Navicat in Windows, Linux, Mac OS, and iOS environments. The quality of Navicat is endorsed by its world-popular clients, including Apple, Oracle, Google, Microsoft, Facebook, Disney, and Adobe. Navicat comes in three editions called enterprise edition, standard edition, and non-commercial edition. Enterprise edition costs you $14.99/month up to $299 for a perpetual license, the standard edition is $9.99/month up to $199 for a perpetual license, and then the non-commercial edition costs $5.99/month up to $119 for its perpetual license. You can get full price details here, and download the Navicat trial version for 14 days from here.

Navicat Pricing: $5.99/month up to $299/license

6. HeidiSQL

HeidiSQL is a new addition to our best PostgreSQL GUI tools list in 2021. It is a lightweight, free open source GUI that helps you manage tables, logs and users, edit data, views, procedures and scheduled events, and is continuously enhanced by the active group of contributors. HeidiSQL was initially developed for MySQL, and later added support for MS SQL Server, PostgreSQL, SQLite and MariaDB. Invented in 2002 by Ansgar Becker, HeidiSQL aims to be easy to learn and provide the simplest way to connect to a database, fire queries, and see what’s in a database.

Some of the advantages of HeidiSQL for PostgreSQL include:

Connects to multiple servers in one window.

Generates nice SQL-exports, and allows you to export from one server/database directly to another server/database.

Provides a comfortable grid to browse and edit table data, and perform bulk table edits such as move to database, change engine or collation.

You can write queries with customizable syntax-highlighting and code-completion.

It has an active community helping to support other users and GUI improvements.

Allows you to find specific text in all tables of all databases on a single server, and to optimize and repair tables in batches.

Provides a dialog for quick grid/data exports to Excel, HTML, JSON, PHP, even LaTeX.

There are a few cons to HeidiSQL:

Does not offer a procedural language debugger to help you debug your code.

Built for Windows, and currently only supports Windows (which is not a con for our Windows readers!)

HeidiSQL does have a lot of bugs, but the author is very attentive and active in addressing issues.

If HeidiSQL is right for you, you can download it here and follow updates on their GitHub page.

HeidiSQL Pricing: Free (open source)

Conclusion

Let’s summarize our top PostgreSQL GUI comparison. Almost everyone starts PostgreSQL with pgAdmin. It has great community support, and there are a lot of resources to help you if you face an issue. pgAdmin usually satisfies most developers’ needs, so most of them do not look for other GUI tools. That’s why pgAdmin remains the most popular GUI tool.

If you are looking for an open source solution that has a better UI and visual editor, then DBeaver and OmniDB are great solutions for you. For users looking for a free lightweight GUI that supports multiple database types, HeidiSQL may be right for you. If you are looking for more features than what’s provided by an open source tool, and you’re ready to pay a good price for it, then Navicat and DataGrip are the best GUI products on the market.


While I believe one of these tools should surely support your requirements, there are other popular GUI tools for PostgreSQL that you might like, including Valentina Studio, Adminer, DbVisualizer, and SQL Workbench. I hope this article will help you decide which GUI tool suits your needs.

Which Is The Best PostgreSQL GUI? 2019 Comparison

Here are the top PostgreSQL GUI tools covered in our previous 2019 post:

pgAdmin

DBeaver

Navicat

DataGrip

OmniDB

Original source: ScaleGrid Blog


Text

Sqlite For Mac Os X

Sqlite For Mac Os X El Capitan

Sqlite Viewer Mac

Sqlite Mac Os X Install

If you are looking for a free SQLite editor, whether in the public domain, under a Creative Commons license, or under the GPL (General Public License), for commercial or non-commercial use, here is a shortlist of SQLite editors available on the web as free downloads.

SQLite is famous for its zero-configuration design, which means no complex setup or administration is needed. This chapter will take you through the process of setting up SQLite on Windows, Linux and Mac OS X. Install SQLite on Windows. Step 1 − Go to the SQLite download page, and download precompiled binaries from the Windows section.

Core Data is an object graph and persistence framework provided by Apple in the macOS and iOS operating systems. It was introduced in Mac OS X 10.4 Tiger and iOS with iPhone SDK 3.0. It allows data organized by the relational entity–attribute model to be serialized into XML, binary, or SQLite stores. The data can be manipulated using higher level objects representing entities.

SQLite viewer for Mac OS X: I need to inspect an SQLite file on Mac. Requirements: free, ideally open source; browse schema and data; run queries; bonus if updated in near real time when the file is. Since I develop on Windows, Linux and OS X, it helps to have the same tools available on each. I also tried SQLite Admin (Windows, so irrelevant to the question anyway) for a while, but it seems unmaintained these days, and has the most annoying hotkeys of any application I've ever used - Ctrl-S clears the current query, with no hope of undo.

These tools work on macOS, Windows, Linux and most Unix operating systems.

SQLite is not a client library used to connect to a separate database server; SQLite is the server. The SQLite library reads and writes directly to and from the database files on disk. SQLite is used by Mac OS X software such as NetNewsWire and SpamSieve. When you download SQLite and build it on a stock Mac OS X system, the sqlite tool has a.
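The point that the SQLite library reads and writes database files directly can be seen with Python's built-in sqlite3 module. This is a minimal sketch; the file name, table, and row are made up for illustration.

```python
import os
import sqlite3
import tempfile

# Hypothetical file name; any writable path works.
db_path = os.path.join(tempfile.mkdtemp(), "example.db")

# Connecting creates the file if it does not exist; no server is started.
conn = sqlite3.connect(db_path)
conn.execute("CREATE TABLE notes (id INTEGER PRIMARY KEY, body TEXT)")
conn.execute("INSERT INTO notes (body) VALUES (?)", ("hello from a plain file",))
conn.commit()
conn.close()

# The whole database is this single file on disk.
print(os.path.exists(db_path), os.path.getsize(db_path) > 0)

# Reopening the same path in a fresh connection sees the stored row.
conn = sqlite3.connect(db_path)
rows = conn.execute("SELECT id, body FROM notes").fetchall()
conn.close()
print(rows)
```

Because the database is just a file, any of the editors listed in this post can open the same path once the script has finished.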

1. SQLiteStudio

Link : http://sqlitestudio.pl/

SQLiteStudio Database manager has the following features :

A small single executable binary file, so there is no need to install or uninstall.

Open source and free - Released under GPLv2 licence.

Good UI with SQLite3 and SQLite2 features.

Supports Windows 9x/2k/XP/2003/Vista/7, Linux, MacOS X, Solaris, FreeBSD and other Unix Systems.

Language support : English, Polish, Spanish, German, Russian, Japanese, Italian, Dutch, Chinese,

Exporting Options : SQL statements, CSV, HTML, XML, PDF, JSON, dBase

Importing Options : CSV, dBase, custom text files, regular expressions

UTF-8 support

2. Sqlite Expert

Link : http://www.sqliteexpert.com/download.html

SQLite Expert is not in the public domain, but it is free for commercial use and is available in two flavours.

a. Personal Edition


It is free for personal and commercial use, but covers only basic SQLite features.

It is freeware and does not have an expiration date.

b. Professional Edition

It costs $59 (one-time fee, with free lifetime updates).

It covers in-depth SQLite features.

Features :

Visual SQL Query Builder : with auto formatting, sql parsing, analysis and syntax highlighting features.

Powerful restructure capabilities : Restructure any complex table without losing data.

Import and Export data : CSV files, SQL script or SQLite. Export data to Excel via clipboard.

Data editing : using powerful in-place editors

Image editor : JPEG, PNG, BMP, GIF and ICO image formats.

Full Unicode Support.

Support for encrypted databases.

Lua and Pascal scripting support.

3. Database Browser for SQLite

Link : http://sqlitebrowser.org/

Database Browser for SQLite is a high quality, visual, open source tool to create, design, and edit database files compatible with SQLite.

Database Browser for SQLite is bi-licensed under the Mozilla Public License Version 2, as well as the GNU General Public License Version 3 or later.

You can modify or redistribute it under the conditions of these licenses.

Features :

You can Create, define, modify and delete tables

You can Create, define and delete indexes

You can Browse, edit, add and delete records

You can Search records

You can Import and export records as

You can Import and export tables from/to text, CSV, SQL dump files

You can Issue SQL queries and inspect the results

You can See Log of all SQL commands issued by the application
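The CSV and SQL dump exports mentioned in the feature list can also be reproduced from a script. The sketch below uses Python's standard sqlite3 and csv modules rather than any of the GUI tools; the table and sample rows are invented for illustration.

```python
import csv
import io
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE people (id INTEGER PRIMARY KEY, name TEXT, city TEXT)")
conn.executemany(
    "INSERT INTO people (name, city) VALUES (?, ?)",
    [("Ada", "London"), ("Linus", "Helsinki")],
)
conn.commit()

# CSV export: header row from the cursor description, then one row per record.
cursor = conn.execute("SELECT id, name, city FROM people ORDER BY id")
csv_buffer = io.StringIO()
writer = csv.writer(csv_buffer, lineterminator="\n")
writer.writerow([column[0] for column in cursor.description])
writer.writerows(cursor)
csv_text = csv_buffer.getvalue()
print(csv_text)

# SQL dump export: iterdump() yields the statements that recreate the database.
sql_dump = "\n".join(conn.iterdump())
print(sql_dump)
conn.close()
```

Writing to an in-memory buffer keeps the sketch self-contained; swapping `io.StringIO()` for an opened file would produce an actual .csv file.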

4. SQLite Manager for Firefox Browser

Link : https://addons.mozilla.org/en-US/firefox/addon/sqlite-manager/

This is an add-on for the Firefox browser.

Features :

Manage any SQLite database on your computer.

An intuitive hierarchical tree showing database objects.

Helpful dialogs to manage tables, indexes, views and triggers.

You can browse and search the tables, as well as add, edit, delete and duplicate the records.

Facility to execute any sql query.

The views can be searched too.

A dropdown menu helps with the SQL syntax thus making writing SQL easier.

Easy access to common operations through menu, toolbars, buttons and context-menu.

Export tables/views/database in csv/xml/sql format. Import from csv/xml/sql (both UTF-8 and UTF-16).

Possible to execute multiple sql statements in Execute tab.

You can save the queries.

Support for ADS on Windows


Text

Omegat google translate

#Omegat google translate how to

#Omegat google translate pdf

#Omegat google translate download

#Omegat google translate mac

Anaphraseus is an OpenOffice extension for professional translators. Although it is a memory tool, it has additional features such as integration with online translation engines like Google Translator, Bing or Apertium. As a memory tool it includes text segmentation, fuzzy search and integration with the OmegaT format.

MateCat and SmartCAT are two web applications serving the same functions as the memory tools named above. Their advantage is their huge database and dictionaries; MateCat has a database with over 20 billion definitions. It does not require installation and may be the best option to get started with CAT tools.

Captioning/Subtitling tools:

Jubler is a great subtitling tool with unique features like spell checking with the option to select dictionaries. It is available for Linux, Mac and Windows and lets you preview subtitles in real time. It also lets you split and join files, convert frame rates, colour subtitles and more.

Also available for Linux, Mac and Windows, Gaupol is another open source subtitles editor, written in Python and released under the GPL license.

Though very old, Aegisub is among the most popular tools to add or edit subtitles in media files (both video and audio files). It supports Linux, Mac and Windows, and the subtitling process is really easy and intuitive.

Bitext2Mx is the most popular alignment tool. It lets you keep translated content aligned with the original, or save differential rules to align segments automatically. Bitext2Mx helps translators keep a proper paragraph structure and associate text segments.

LF Aligner, also available for Linux, Windows and Mac, is another memory and alignment tool. It supports autoalign for txt, docx, rtf, pdf and more formats. It can download and align websites and can align texts in up to 100 languages simultaneously.

OCR: Optical Character Recognition with Tesseract

Tesseract, originally developed at Hewlett-Packard and later sponsored by Google, is one of the leading OCR systems on the market. As a language professional, you will often receive scanned documents and images with long text and embedded content that you can’t just copy to edit. OCR tools let us extract text content from images, handwriting or scanned papers. It is also a great way to count the words in such sources to give your customers a proper quote. In this article you can find how to install and get started with Tesseract.

Beagle is a terminology indexer that lets us search content among our files and applications. Although it is not intended for professional translators, Beagle is a great aid for people working with documents. Beagle was featured on Linux for Translators as an interesting tool for language professionals.

The Okapi framework is a set of cross-platform tools and components to help in translation and localization tasks. Those applications are built on top of Java libraries that you can also use directly to develop your own programs and scripts. The framework is useful to develop workflows to process files before and after translation, or to perform different tasks on translation-related data at any point. For example, you can use Rainbow, one of the tools built on top of the libraries, to prepare documents in many different formats for translation, and to post-process them after translation. It supports the creation of different packages you can translate using tools such as OmegaT, Virtaal, and even commercial tools. Other utilities include encoding conversion, source-target text alignment, pseudo-translation, translation comparison, RTF conversion, search and replace, and more. The framework also makes it easy to create new utilities using a pipeline mechanism.

The framework provides a collection of filters. They all have a common API and can be used to perform different types of actions on the translatable content of many different file formats. The filters include support for formats such as HTML, XLIFF, TMX, PO, XML (supports ITS), OpenDocument (ODT, ODS, ODP, etc.), MS Office 2007 (DOCX, XLSX, PPTX, etc.), Properties, CSV, and more. Additional formats can be supported by defining custom configurations, for example using regular-expression-based parameters.

Google Translate API pricing is based on monthly usage in terms of millions of characters. It costs $20 per 1 million characters for translation or language detection. Price is per character sent to the API for processing, including whitespace characters. Empty queries are charged for one character.

I hope you found this article informative and useful. Keep following LinuxHint for future tips and updates on Linux.
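The per-character pricing rules quoted in this post (a rate of 20 per 1 million characters, whitespace counted, empty queries charged as one character) can be turned into a rough cost estimator. This is only a sketch: it assumes the rate is in US dollars and ignores any free tier or volume discount, and the function names are hypothetical.

```python
RATE_PER_MILLION_CHARS = 20.0  # assumed to be US dollars


def billable_characters(text: str) -> int:
    """Characters charged for one request, whitespace included."""
    # An empty query is still charged as one character.
    return max(len(text), 1)


def estimate_cost(texts: list[str]) -> float:
    """Estimated cost of sending every string in `texts` for translation."""
    total_chars = sum(billable_characters(t) for t in texts)
    return total_chars * RATE_PER_MILLION_CHARS / 1_000_000


segments = ["Hello world", "", "A" * 999_988]
print(sum(billable_characters(s) for s in segments))
print(estimate_cost(segments))
```

A translator could run an estimate like this over the segments of a project before sending them to a machine translation engine, to include the cost in a customer quote.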


Text

How to Scrape IMDb Top Box Office Movies Data using Python?

Different Libraries for Data Scraping

Python has libraries for many different purposes. We will use the following:

BeautifulSoup: It is used in web scraping to pull data out of HTML and XML files. It builds a parse tree from the page source, which can be used to extract data in a structured, readable way.

Requests: It lets you send HTTP/1.1 requests from Python. With it, you can add content such as headers, form data, multipart files, and parameters using simple Python data structures, and access the response data in the same way.

Pandas: It is a software library written for the Python programming language for data analysis and manipulation. In particular, it provides data structures and operations for manipulating numerical tables and time series.

To scrape data with Python, follow these basic steps:

1: Finding the URL:

Here, we will scrape the IMDb website for the movie title, gross, weekend earnings, and total weeks for the top box office movies in the US. The URL of the page is https://www.imdb.com/chart/boxoffice/?ref_=nv_ch_cht

2: Reviewing the Page

Right-click the element you want and click the “Inspect” option.

3: Get the Required Data to Scrape

Here, we will scrape the movie title, weekend earnings, overall gross, and total weeks in release, each of which sits in its own tag in the chart table.

4: Writing the Code

You can use Jupyter Notebook or Google Colab for this. We are using Google Colab here:

Import libraries:

import requests
from bs4 import BeautifulSoup
import pandas as pd

Create empty lists, which we will use later to store the data for each column.

TitleName = []
Gross = []
Weekend = []
Week = []

Open the URL and download the page content.

url = "https://www.imdb.com/chart/boxoffice/?ref_=nv_ch_cht"
r = requests.get(url).content

Using BeautifulSoup’s find and find_all methods, we extract the data and store it in variables.

soup = BeautifulSoup(r, "html.parser")
# Each <tr> in the table body is one movie.
rows = soup.find("tbody", {"class": ""}).find_all("tr")
for i in rows:
    title = i.find("td", {"class": "titleColumn"})
    gross = i.find("span", {"class": "secondaryInfo"})
    weekend = i.find("td", {"class": "ratingColumn"})
    week = i.find("td", {"class": "weeksColumn"})

Still inside the loop, we append each value to the lists we created earlier.

    TitleName.append(title.text)
    Gross.append(gross.text)
    Weekend.append(weekend.text)
    Week.append(week.text)
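One caveat with the append step: BeautifulSoup's find returns None when no matching tag exists, so calling .text on the result raises an AttributeError if a row is missing a cell. A small helper, sketched below, is one way to guard against that. The name safe_text is hypothetical, and FakeTag is only a stand-in for a parsed tag so the sketch runs without bs4 installed.

```python
def safe_text(node, default=""):
    """Return the stripped text of a parsed element, or `default` if it is None."""
    if node is None:
        return default
    return node.text.strip()


class FakeTag:
    """Stand-in for a BeautifulSoup Tag so the sketch runs without bs4."""

    def __init__(self, text):
        self.text = text


print(repr(safe_text(FakeTag("\n  Movie Title  \n"))))
print(repr(safe_text(None, default="N/A")))
```

In the loop, a call such as TitleName.append(safe_text(title)) would then replace TitleName.append(title.text), which also trims the surrounding whitespace that table cells often carry.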

5. Storing Data in the Sheet. We Store Data in the CSV Format

df = pd.DataFrame({'Movie Title': TitleName, 'Weekend': Weekend, 'Gross': Gross, 'Week': Week})
df.to_csv('DS-PR1-18IT012.csv', index=False, encoding='utf-8')

6. It’s Time to Run the Entire Code

All the information is saved as DS-PR1-18IT012.csv in the same directory as the Python file.

For more information, contact 3i Data Scraping or ask for a free quote about IMDb Top Box Office Movies Data Scraping services.


Text

The Quality Magento 2 Extensions for your web-store that you need to compete

Magento is a friendly platform for e-commerce and online business websites; it is, without doubt, a very effective open source system developed specifically for e-commerce. Magento 2 extensions are designed to improve your web store in areas such as security, store management, store administration, SEO, navigation, and many more, helping you expand your business in a short time.

Magento 1 is nearing its end of life, so make sure you migrate to Magento 2. The data migration tool migrates all data from the Magento 1 database to the Magento 2 database. Magento 1.x users are at a crossroads: migrate, or re-platform completely.

We have searched for highly effective free Magento 2 extensions that will help you boost your site's sales and increase organic traffic: plugins that will supercharge your e-commerce store.

Ultimate Magento 2 SEO Extension

The Magento 2 SEO Extension inherits the default features and adds more advanced functions to make your site user-friendly.

This SEO plugin for Magento 2, offered by Fme Extensions, dramatically improves on the default SEO features. The extension helps improve your web store's ranking, boost sales, and increase organic traffic by letting you optimize the meta tags, meta descriptions, and meta titles of your web store for multiple search engines such as Google, Bing, and Yahoo.

It also supports advanced Magento rich snippets, provides SEO for layered navigation pages, and lets you resolve duplicate content issues.

Auto-create SEO Meta Title, Description & Keywords

Generate SEO Optimized Alt Tags for Product Images

Build XML & HTML Sitemaps for Store

Add Extended Google Rich Snippet Tags

Handle Content Language Duplicates with Hreflang Tags

Add No Index No Follow Tags to Any Page

Add Canonical Tags to Prevent Duplication

Store Locator Magento 2 Extension

How can the Store Locator Magento 2 Extension help boost your sales? A store locator is now a necessity for any Magento-based website. If you also have a physical store, this extension can help you double your web-store sales in a short time.

It makes it easy for users to reach your physical store with one click, helping customers find the nearest store location and get driving directions.

Creates A Dedicated Store Locator Page

List Your Store Locations as well as Show them on Integrated Google Map

Allow Customers to Search Stores by Address

Add Store Tags and Let Customers Filter Stores Listing by Tags

Add or Import Stores using CSV File Upload

Separate Landing Page for Each Store Location to Display Store Description, Products, Opening Hours & Holidays

Configure Default Map Radius

Add Link in Footer & Set Standard Longitude & Latitude

Extensive SEO Configurations

Magento 2 Services Development

Fme Extensions is counted among the best providers of quality Magento extensions, which help boost your sales, improve your site's performance, and grow your business in a short time.

As a leading Magento 2 development company, FmeExtensions offers its clients top-quality custom development services at an affordable price. Our professionals spend as much time as needed helping you boost your Magento store’s efficiency.

Experience of developing 150+ extensions & 1000+ custom development projects

Affordable and Customer-centric Magento solutions

Responsive and Mobile Friendly Magento 2 Website Development

Free Revision of Projects with support and on time delivery


Text

SEOquake - A Free and Powerful SEO Plugin You Should Know!

Are you looking for a free tool that lets you analyze your website's SEO? Do you want to do SEO for your website with a single click? If you answered YES to either question, SEOquake is the right tool for you! From SERP analysis to page diagnosis, SEOquake provides the data you need. So what features does SEOquake have, and how do you use it? Let's find out with TopOnSeek!

What is SEOquake?

SEOquake is a free browser plugin that provides analysis data at the click of a button. SEOquake is compatible with Chrome, Firefox, Opera, and Safari. There is also an equivalent app for iOS called SEOquake Go.

SEOquake helps you run a basic analysis of a domain or landing page with data such as URLs, canonical, meta data, headings, keyword density, internal links, and external links. It also offers more advanced metrics such as Google Index, Alexa rank, SEMrush ranking data, organic research data, Webarchive age, Facebook likes, and more. These figures come directly from the SERPs. Now that you understand what SEOquake is, let's install it and try it out!

How to install SEOquake

Installing the SEOquake plugin is easy. All you have to do is open the browser you want to use SEOquake with, go to its web store, search for the plugin, and install it. The step-by-step process for Chrome:

Step 1: Open the Chrome browser and search

Step 2: Search in “Search the store”

Step 3: Click “Add to Chrome”

Step 4: When the confirmation dialog appears, click “Add extension”. The plugin installs into your browser within a few seconds.

Step 5: When it finishes, the SEOquake logo appears at the top right of your browser, right next to the URL bar/address bar.

If you want to install SEOquake on an iPhone, go to the Apple App Store, search for SEOquake Go, and install it the same way.

How to use SEOquake

1. SEO bar

When you enter a URL into the browser's address bar, the SEO bar appears automatically and shows data for various parameters. The data displayed includes:

Facebook likes

Number of pages indexed in Google

Alexa rank

SEMrush rank

Webarchive age

Keyword difficulty

…and more! You can choose which parameters appear on the SEO bar by clicking the Settings gear on the right side of the bar.

2. Dashboard

If the metrics on the SEO bar are not displayed the way you like or are not what you are looking for, just click the SEOquake icon. A dashboard appears that shows the same parameters as the SEO bar, but in a different layout.

3. SERP Overlay

Keyword research and competitor research are important steps in an SEO project. SERP Overlay supports you while you gather data. Specifically, it shows parameters and criteria you can choose from to filter down to the data you are actually looking for.

Just type a keyword into Google and SERP Overlay automatically shows all the data. Keyword difficulty is also shown below Google's search bar, which helps you estimate how hard a keyword is and plan better to beat your competitors.

4. Page SEO Audit

This is the most important part of the SEOquake tool. Page SEO Audit helps you check whether your website or landing page has any errors. Click the SEOquake icon and choose the “Diagnosis” tab to start the diagnosis. The “Diagnosis” page opens in a separate tab, and the data is shown in three sections: Page Analysis, Mobile Compliance, and Site Compliance.

Page Analysis: This section analyzes your page's markup, including URL, canonical, headings, meta tags, HTML attributes, ALT tags, and so on.

Mobile Compliance: This section checks how compliant the website or landing page is on mobile devices. Optimizing AMP (Accelerated Mobile Pages) is a way to make pages fast on mobile devices. Optimizing the meta viewport helps mobile pages rank better.

Site Compliance: This section looks at the whole website rather than one specific page like the features above. Site Compliance checks the status of XML sitemaps, the robots.txt file, the language declaration, the favicon, the Google Analytics installation on the website, encoding, and doctype.

You can learn more about each item by clicking the “Tips” arrow on the right; the explanation is shown as a drop-down list.

5. Keyword density

This section shows all the keywords found on the page being analyzed. The keywords are grouped into 1-word, 2-word, 3-word, and 4-word keywords. The accompanying metrics are density, prominence, found in (where the keyword appears), and repeat (number of occurrences).
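To illustrate what a keyword density report over 1-word to 4-word phrases involves, here is a minimal sketch. It assumes density means a phrase's share of all phrases of the same length on the page; SEOquake's own formula is not given in this post and may differ, and the function name and sample text are made up.

```python
import re
from collections import Counter


def keyword_density(text: str, max_words: int = 4) -> dict:
    """Map phrase length -> top (phrase, repeats, density %) tuples."""
    words = re.findall(r"\w+", text.lower())
    report = {}
    for n in range(1, max_words + 1):
        # Every run of n consecutive words counts as one phrase.
        phrases = [" ".join(words[i:i + n]) for i in range(len(words) - n + 1)]
        counts = Counter(phrases)
        total = len(phrases)
        report[n] = [
            (phrase, count, round(100 * count / total, 2))
            for phrase, count in counts.most_common(3)
        ]
    return report


sample = "free seo tool free seo plugin free tool"
report = keyword_density(sample)
for n, rows in report.items():
    print(n, rows)
```

A real audit would run this on the visible text of a page and would usually filter out stop words before counting.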

6. Link tools

Click the “Internal” and “External” tabs to see the list of links and anchor text along with their metrics. You can export this data in CSV format.

7. URL comparison tool

The “Compare url” tab lets you compare individual domains or landing pages, both your own and your competitors'. Enter the domains or URLs you want to compare and click “Process URL”; the values for each page are shown for the parameters you selected. This gives you a quick analysis of which page is outperforming the others. You can also easily export the data in CSV format.

SEOquake parameters

1. Page-specific parameters

Google cache date - the last time Google created a cached version of the queried page.

Facebook likes - the number of Facebook likes received by the page in question

Google PlusOne - the number of Google +1s received by the page in question

Page source - a link to the page source

Pinterest Pin count - pins received by the queried page

Linkedin share count - shares received by the queried page

2. Domain parameters

SEMrush rank - the website's SEMrush rank

Google Index - the number of pages indexed by Google

Bing Index - the number of pages indexed by Bing

Alexa rank - the site's Alexa score (the lower, the better)

Webarchive Age - the date archive.org first discovered the site

Baidu Index - the number of pages indexed by Baidu

Yahoo Index - the number of pages indexed by Yahoo

SEMrush SE Traffic - the average monthly traffic estimated by SEMrush

SEMrush SE Traffic Price - the estimated average monthly cost of bidding on the keywords that the queried domain ranks for in SEMrush.

SEMrush Video Adv - the number of YouTube ads the domain is using

Compete Rank - based on the number of unique visitors

Whois - the Whois record for the domain

3. Backlinks

Google Links - the number of backlinks found by Google

SEMrush Links - the number of backlinks found by SEMrush

SEMrush Linkdomain - the number of backlinks SEMrush found for the specific www/non-www version of the domain

SEMrush Link domain 2 - the number of backlinks SEMrush found for the entire domain

Baidu Link - the number of backlinks found by Baidu

With this overview, TopOnSeek hopes you now have a clearer understanding of SEOquake, one of the most powerful free SEO analysis tools. The data and metrics SEOquake provides are so extensive that you will hardly believe it is free! Are you ready to try SEOquake?


How To Build a (Low Cost) PBN Fast With WordPress Alpha

One of the advantages of using WordPress Alpha is that we can use it to create a Private Blog Network with very little effort. In fact, it can be done in just 3 Steps! (Literally 4 CLICKS Per Site) Let me show you how...

How To Build A Private Blog Network One Site (4 Clicks) At A Time For Under $15 Per Site

The steps below are for the actual site building and assume that you have already found a domain and that you have spam checked the domain too.

Personally, I use VidSpy Alpha to find niche-relevant expired domains. There are far more comprehensive tools out there, but this one does the job for me and finds great domains. After rebuilding a site and adding fresh content, I can easily boost its SEO metrics with some basic link strategies and by indexing the site's existing backlinks using Link Control Alpha.

The third tool in this system is Expired Domain Alpha, which rebuilds sites from archive.org (Wayback Machine) with the exact design they had before, regardless of whether they were WordPress sites or not, and will even recreate the exact internal and outbound links that the site had previously.

The Expired Domain Alpha plugin costs from as little as $5 per site. In contrast, if you tried to buy that on fiverr.com it would cost you a minimum of $80 for the same in-depth rebuild, but without the internal and outbound links feature.

And Yes...

It takes just one click and is part of the 4 click PBN site building system below...

Click ONE

Deploy Your Site In WordPress Alpha Choosing Your First Host Server...

Digital Ocean has servers in 8 cities worldwide and 12 data centers in total, in locations such as London, New York, Singapore, San Francisco and Amsterdam. (See screenshot below.)

Each host (droplet) that you deploy costs $5 per month. You can add multiple sites to each, but you should use only one droplet per PBN site unless you take advantage of the Cloudflare API, which is one way to add more sites but carries a risk that you need to weigh up yourself.

Choose the option of one host with multiple sites, as we can use the server for other types of sites later (such as traffic-sucking Local Biz Alpha directory sites, which are not part of a PBN and can be hosted anywhere, giving you more value for money).

Digital Ocean's 12 Datacenter Locations

DigitalOcean's datacenters are in the following locations:

NYC1, NYC2, NYC3: New York City, United States.

AMS2, AMS3: Amsterdam, the Netherlands.

SFO1, SFO2: San Francisco, United States.

SGP1: Singapore

LON1: London, United Kingdom.

FRA1: Frankfurt, Germany.

TOR1: Toronto, Canada.

BLR1: Bangalore, India.
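
As a quick sanity check on the "8 cities, 12 data centers" figures, the list above can be tallied in a few lines of Python. This is only a sketch of the arithmetic: the dictionary is copied from the list in this post (with the San Francisco slugs written SFO1 and SFO2), not pulled from Digital Ocean, and the name `DATACENTERS` is made up for illustration.

```python
# Datacenter slugs grouped by city, copied from the list in this post.
# Nothing here talks to Digital Ocean; it is just a tally.
DATACENTERS = {
    "New York City": ["NYC1", "NYC2", "NYC3"],
    "Amsterdam": ["AMS2", "AMS3"],
    "San Francisco": ["SFO1", "SFO2"],
    "Singapore": ["SGP1"],
    "London": ["LON1"],
    "Frankfurt": ["FRA1"],
    "Toronto": ["TOR1"],
    "Bangalore": ["BLR1"],
}


def summarize(datacenters):
    """Return (number of cities, total number of data centers)."""
    cities = len(datacenters)
    total = sum(len(slugs) for slugs in datacenters.values())
    return cities, total


cities, total = summarize(DATACENTERS)
print(f"{cities} cities, {total} data centers")
```

Spreading PBN sites across the cities in this table, rather than stacking them in one location, is the point of choosing the host server per site.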

Digital Ocean Pricing

One of the great features of Digital Ocean is the automatic expansion of server resources when needed.

When you deploy a site with WordPress Alpha it creates a droplet in Digital Ocean with the configuration that costs $5 per month.

Digital Ocean WordPress Hosting Options

Remember that you can add multiple sites but the more you add the slower your sites are likely to be.

However, Digital Ocean offers good-quality WordPress hosting options that can also be cheap. You can scale up as needed when you have sites that start to drive more traffic.

We suggest a maximum of 10 to 12 sites per server, unless they are Local Biz Alpha sites, as those tend to run into tens of thousands of pages.
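
To put numbers on that trade-off, here is a rough sketch of the hosting cost per site when several sites share one droplet. The $5 monthly price is the figure quoted in this post; splitting it evenly between sites is a simplifying assumption, and the function name is invented for the example.

```python
DROPLET_MONTHLY_USD = 5.00  # droplet price quoted in this post


def hosting_cost_per_site(sites_on_droplet, droplet_usd=DROPLET_MONTHLY_USD):
    """Monthly hosting cost per site, assuming the droplet is shared evenly."""
    if sites_on_droplet < 1:
        raise ValueError("a droplet needs at least one site")
    return droplet_usd / sites_on_droplet


# One site per droplet (the PBN case) versus 10 or 12 sites on a shared server.
for n in (1, 10, 12):
    print(f"{n:>2} site(s) per droplet: ${hosting_cost_per_site(n):.2f} per site per month")
```

A dedicated droplet per PBN site costs the full $5, while a shared server for non-PBN sites drops the hosting share to well under a dollar each.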

Click TWO

Set Your DNS Within WordPress Alpha, It's just one click and done

Wait a few minutes for your site to propagate

Your site will be set up with pre configured plugins

You can add personalization to your site and tweak some settings
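
If you would rather check than guess when the DNS change has propagated, a small polling loop can do it. This is a minimal sketch using only Python's standard library, not something WordPress Alpha provides; `wait_for_dns` and its parameters are hypothetical, and the result only reflects what the resolver on your own machine currently sees.

```python
import socket
import time


def wait_for_dns(hostname, expected_ip, resolve=socket.gethostbyname,
                 attempts=10, delay_seconds=30.0, sleep=time.sleep):
    """Poll until hostname resolves to expected_ip; return True on success.

    resolve and sleep are injectable so the loop can be tried out
    without touching the network or actually waiting.
    """
    for attempt in range(1, attempts + 1):
        try:
            if resolve(hostname) == expected_ip:
                return True
        except OSError:
            pass  # the name does not resolve yet; try again later
        if attempt < attempts:
            sleep(delay_seconds)
    return False
```

You would call it with your domain and the droplet's IP address, for example `wait_for_dns("example.com", "203.0.113.10")`, where both values are placeholders.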

Click THREE

(Optional But Highly Recommended)

Recreate Your Expired Domain From Wayback Machine In One Click!

Or you can add the Expired Domain Alpha plugin and restore the website from the Wayback Machine with the exact design it had previously (regardless of whether it was a WordPress site or not) and with its exact internal and outbound links! - Super Powerful Huh?

The Expired Domain Alpha plugin automatically pulls in snapshots from archive.org (Wayback Machine) for you to choose which one you want to deploy.

The plugin will show a progress bar when it is deployed. As it is rebuilding the entire site together with all internal and outbound links, it needs time to work. It will provide an estimated time (in days) for the rebuild to be complete. It starts by deploying pages and design, then goes through each page, rebuilding it exactly as it was.
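
For a feel of what a snapshot list from archive.org looks like, the Wayback Machine's public CDX search endpoint can be queried directly. The sketch below is not how the Expired Domain Alpha plugin works internally (the post does not say); it is an independent illustration, and the function names are made up. The endpoint returns JSON rows whose first row is a header.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

CDX_ENDPOINT = "https://web.archive.org/cdx/search/cdx"


def cdx_query_url(domain, limit=20):
    """Build a CDX query for successful captures, at most one per day."""
    params = {
        "url": domain,
        "output": "json",
        "filter": "statuscode:200",
        "collapse": "timestamp:8",  # first 8 digits of the timestamp = the day
        "limit": str(limit),
    }
    return f"{CDX_ENDPOINT}?{urlencode(params)}"


def parse_cdx(rows):
    """Turn CDX JSON rows (header row first) into dicts with a snapshot URL."""
    if not rows:
        return []
    header, *records = rows
    snapshots = []
    for record in records:
        fields = dict(zip(header, record))
        fields["snapshot_url"] = (
            f"https://web.archive.org/web/{fields['timestamp']}/{fields['original']}"
        )
        snapshots.append(fields)
    return snapshots


def list_snapshots(domain, limit=20):
    """Fetch and parse the snapshot list (makes a network request)."""
    with urlopen(cdx_query_url(domain, limit), timeout=30) as response:
        return parse_cdx(json.load(response))
```

Calling `list_snapshots("example.com")` (a placeholder domain) returns one entry per captured day, each with a `snapshot_url` you can open in a browser to judge which version of the old site is worth restoring.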

Install SSL which is literally just one click and done.

Click FOUR

Deploy SSL To Make Your Site https

How to make a secure website with WordPress Alpha?

Super simple, it takes just one click to make your site secure.

This is required, and sites that are not https may be disadvantaged in Google's search results.

It takes one click within WordPress Alpha. (If you're logged in to the site, log out and back in again after clicking the https deployment button.)

That's it!

Of course, there are numerous things in between these 4 clicks: personalising your site, finalising the settings on some plugins, adding a logo and so on. But this is as close as we can get to fully automated PBN building, right down to recreating the expired domain in one click.

Quick Tips For Building A PBN With WordPress Alpha…

I suggest that you wait a few weeks before starting to add links. It is so easy to add these sites that you can build out multiple sites per week pretty effortlessly and just leave them to mature.

As WordPress Alpha evolves we will add more detailed site management features like tagging, notes and labels, so that you can keep track of sites and even export them by tag into CSV.

We have rebuilt sites with WordPress Alpha using HTML within WordPress; you can still add more content in the normal way, or even just run an invisible autoblog to keep the content unique and fresh.

The WordPress Alpha “PBN” Template will be ready within a few days and will have the exact plugins that I recommend, with settings already done and with advanced options for you to choose from, such as:

Option To Hide WordPress

Option To Block Bots

Analytics Tools That Do Not Need Google Analytics

Option For Complete Link Control For Bulk Management Of Internal and Outbound Links Using Link Control Alpha

With Link Control Alpha You Can Even Discover The Expired Domains Inbound Links and Send To Indexer In Two Clicks!

Ever wondered how to backlink an expired domain PBN site? It should already have backlinks if you choose a good domain; just index them on a drip feed.

Rebuild and Ping Sitemaps For XML Sitemap, as well as Video XML, Image XML and Mobile XML with the WP Alpha Template for PBN Sites (takes one click to set up and one click to ping Google per sitemap)

WordPress RSS Tools For Fast Indexing and Syndication

Optional Autoblog Content From Any RSS Feed With Built In Spinner And Automated One Page SEO For Every Post Using Autoblog Alpha Plugin (Best Add This To A Category That Does Not Show In Navigation)

Pre-Configured Settings For Yoast/All In One SEO, Hummingbird, GDPR, Statistics and More

Built In HTML Sitemap, Schema, AMP

Choice Of Fast Themes To Switch Between On Each Site

Marketing Elements Built In To Make The Sites Look REAL such as call to action, list building optin forms (slide in, full page overlay, smart bar options)
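
For readers who have not looked inside an XML sitemap like the ones mentioned in the list above, here is a minimal sketch of building one by hand with Python's standard library. It follows the sitemaps.org format (a `urlset` of `url` entries with `loc` and optional `lastmod`); it is an illustration only and is not taken from the WP Alpha template, and `build_sitemap` is a made-up name.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Build sitemap XML from (loc, lastmod) pairs; lastmod may be None."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Placeholder URLs and dates, purely for illustration.
print(build_sitemap([
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/about", None),
]))
```

The video, image and mobile sitemaps named in the list use additional namespaces on top of this same basic structure.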

In the meantime you can build adequate PBN sites with the existing Generic Blog template, and you can check out the core plugins and features that we have pre-configured on this post about How & Why To Set Up A Site With WordPress Alpha.

We will even have a service from my own PBN site builder guy, whom I have been using for years (long before WordPress Alpha). In fact, he has built loads of my PBN sites in Easy Blog Networks and configured them to look like real sites.

He is available to build all this out for you if you want to take it much further with CTA, logo, optin forms, auto content and more. However, I suggest that you only do this if you have a traffic breakout site (a site that starts ranking and driving traffic) so that the cost can be recovered quickly.

One more thing… Beyond those 8 servers that Digital Ocean provides, you can also use Cloudflare for DNS. That can create unique Class C IPs for extra sites outside of the first 8 sites. It's not a perfect way to do it, but it can work if you do it properly, and I will explain how in the course that comes with the WP Alpha Private Blog Network template training. Essentially, what we do is build some “concept” PBN sites.

What's a Concept PBN site?

Tune in when we launch the PBN template and learn in detail what this is and how easy it is to do. No One Else Is Teaching This As A Strategy (It's One Of My Own Unique Tricks!) All of this will be covered in step-by-step detail in video tutorials, which is what I do with every new template, and will include building a 25 site PBN for under $350.

Source: wordpressalpha.com


Using Google Search Console to manage ‘search enhancement’ validation errors and employing the API

Wrapping up our series on Google Search Console we’ll look at reporting features that deal with front-end markup errors and some data management options. Note that what we’re discussing here are Google “Search Enhancements” validation errors, so when it comes to validating HTML and CSS you’ll need to look elsewhere.

Search enhancements

We covered page-level error reporting using the URL Inspection Tool. The Enhancements section details site-wide warnings and error reporting.

Mobile Usability, AMP-HTML, and Rich results information can be found when the corresponding markup is detected. Google has a small number of dedicated validation services and it’s nice to see some replication of these in Google Search Console. Notably missing, however, are Lighthouse findings.

In the Mobile Usability report, you can view errors that aren’t necessarily validation errors but, for some reason, Google has flagged them as being mobile-unfriendly.

Ideally, you won’t see any errors, but keep in mind that perfectly valid markup can be reported as having Mobile Usability “errors.” It’s important to know the difference for relating Mobile Usability feedback to developers. The errors shown above indicate totally valid markup for a page that is otherwise not mobile-friendly.

On the other hand, when it comes to AMP and Structured Data reporting, validation errors will broadly affect your appearance in Google Search — not just mobile search. Charting here is practically unnecessary; you will want to see the data table with its details. It’s common to find only a fraction of your indexed pages in reporting. Google API documentation refers to the fraction as a “samples list.” These data serve as a hint that pages where such markup has been detected could possibly qualify to power corresponding Google “Search Enhancement” features.

Catch up with previous installments of the Google Search Console series

Managing Sitemap XML with Google Search Console

Getting started with Google Search Console

Security & manual actions

The most vital issues you would likely ever see reported in Google Search Console are found in the Security & Manual Actions section. You’ll want to be notified to correct these issues as soon as possible, as they could cause you to be removed from appearing in search altogether until they are remedied and Google registers your site as safe again. (See Search Engine Land’s Guide to Google Penalties for all the details.)

Links

Google is well-known for being a search engine that deeply analyzes the Web, beginning with the voluminous hypertext links among URLs. Although it’s generally true that links are important, it’s too simple to presume a high link count will always boost your rankings. Even when you rightly avoid buying links for the purpose of artificial ranking, you’ll likely find plenty of link cruft in your GSC Links report because the link building economy surrounding Google is so massive.

Downloads & GSC API

Downloads from the Google Search Console are going to be woefully inadequate for all but individual practitioner use. Select a date range covering up to 16 months' worth of history and download in CSV or Google Spreadsheet format.

A quick CSV can be used for importing to Excel, where it can be marked up as a deliverable for a stakeholder’s specific purpose. Beyond this type of application, you’re not likely to make much more use of downloads as you already have the Search Console charts and data table at your disposal. You’re more likely to graduate to using Google’s Search Console Data Studio connector.
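
If Excel is more than you need, a downloaded CSV can also be summarized with a few lines of Python. The sketch below is a minimal example under stated assumptions: the column names `Query`, `Clicks` and `Impressions` are placeholders, so check them against the header row of your own export, and the sample data is invented.

```python
import csv
import io


def top_queries(csv_text, limit=5):
    """Return the rows with the most clicks, adding a computed CTR."""
    rows = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        clicks = int(row["Clicks"])
        impressions = int(row["Impressions"])
        rows.append({
            "Query": row["Query"],
            "Clicks": clicks,
            "Impressions": impressions,
            # Guard against division by zero for rows with no impressions.
            "CTR": clicks / impressions if impressions else 0.0,
        })
    rows.sort(key=lambda r: r["Clicks"], reverse=True)
    return rows[:limit]


# Invented sample standing in for a real export file.
SAMPLE_EXPORT = """Query,Clicks,Impressions
widget repair,10,100
blue widgets,30,120
"""

for row in top_queries(SAMPLE_EXPORT):
    print(f"{row['Query']}: {row['Clicks']} clicks, CTR {row['CTR']:.1%}")
```

For a real file, read it with `open(path, newline="", encoding="utf-8").read()` and pass the text to `top_queries`.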