

So much of 18Missy2 is like. Can we stop hurting each other so much? Maybe not, but can we bring it to- to normal levels, can we find a way to live with it. Can the entity-formerly-known-as-the-Master stop being kind of an awful person? Maybe not, but there are one million entitled assholes in the universe, right, the issue is Scale of Harm. can we find something we can live with?

#where's that post that's like #Enemies To Lovers Shouldn't Be M/F If I Wanted To See A Man And A Woman Fighting I'd Go Look At My Parents' Marriage #im burning that down w a fancy gas lighter. #the Dysfunction is still insane- or i hope to manage to make it be- but it like. #instead of going like. a vertical Rift. a major Thing #its like. horizontal. permeating widely into every aspect of this very normal sort of life. #i felt so fuckin Understood in my goals by Shalka. they're literally still insane they're just weirdly Chill in each other's toxic insanity


Porting Falcon Age to the Oculus Quest

There have already been several blog posts and articles on how to port an existing VR game to the Quest. So we figured what better way to celebrate Falcon Age coming to the Oculus Quest than to write another one!

So what we did was reduce the draw calls, reduce the poly counts, and remove some visual effects to lower the CPU and GPU usage, allowing us to keep a constant 72 Hz. Just like everyone else!

Thank you for coming to our Tech talk. See you next year!

...

Okay, you probably want more than that.

Falcon Age

So let's talk a bit about the original PlayStation VR and PC versions of the game and a couple of the things we thought were important about that experience we wanted to keep beyond the basics of the game play.

Loading Screens

Once you’re past the main menu and into the game, Falcon Age has no loading screens. We felt this was important to make the world feel like a real place the player could explore. But this comes at some cost: we need to be mindful of the number of objects active at one time and, in some ways even more importantly, the number of objects that are enabled or disabled at one time. In Unity there can be a not insignificant cost to enabling an object. So much so that this was a consideration even on the PlayStation 4, where loading a new area could cause a massive spike in frame time and make the frame rate drop. Going to the Quest, this would only be more of an issue.

Lighting & Environmental Changes

While the game doesn’t have a dynamic time of day, different areas have different environmental setups. We dynamically fade between different types of lighting, skies, fog, and post processing to give areas a unique feel. There are also events and player actions in the game that can trigger these changes. This meant all of our lighting and shadows were real time, along with having custom systems for handling transitions between skies and our custom gradient fog.

Our skies are all hand painted cloud and horizon cube maps on top of Procedural Sky from the asset store, which handles the sky color and sun circle, with some minor tweaks to allow fading between different cube maps. Having the sun in the sky box be dynamic allowed the direction to change without requiring totally new sky boxes to be painted.

Our gradient fog works by having a color gradient ramp stored in a 1 by 64 pixel texture that is sampled using spherical distance exp2 fog opacity as the UVs. We can fade between different fog types just by blending between different textures and sampling the blended result. This is functionally similar to the fog technique popularized by Campo Santo’s Firewatch, though it is not applied as a post process as it was for that game. Instead all shaders used in the game were hand modified to use this custom fog instead of Unity’s built in fog.
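As a rough illustration of the idea, here is a minimal CPU-side sketch in Python rather than shader code. The function names, the exact opacity formula, and the choice to reuse the opacity as the blend factor are all assumptions made for the example, not the game's actual shader.

```python
import math

def fog_opacity(distance, density):
    """Exponential-squared fog opacity for a spherical (radial) distance.
    The exact formula is an assumption; it mirrors common exp2-style fog."""
    return 1.0 - math.exp(-((density * distance) ** 2))

def sample_ramp(ramp, u):
    """Linearly sample a 1D color ramp (list of RGB tuples) at u in [0, 1]."""
    u = min(max(u, 0.0), 1.0)
    pos = u * (len(ramp) - 1)
    i = int(pos)
    j = min(i + 1, len(ramp) - 1)
    t = pos - i
    return tuple(a + (b - a) * t for a, b in zip(ramp[i], ramp[j]))

def blend_ramps(ramp_a, ramp_b, t):
    """Fade between two fog types by blending their ramps texel by texel."""
    return [tuple(a + (b - a) * t for a, b in zip(ca, cb))
            for ca, cb in zip(ramp_a, ramp_b)]

def apply_fog(surface_rgb, distance, density, ramp):
    """Use the fog opacity as the ramp coordinate, then blend toward that color.
    Reusing the opacity as the blend factor is an assumption of this sketch."""
    opacity = fog_opacity(distance, density)
    fog_rgb = sample_ramp(ramp, opacity)
    return tuple(s + (f - s) * opacity for s, f in zip(surface_rgb, fog_rgb))

# Two hypothetical 64-entry ramps standing in for the 1 by 64 textures.
day_ramp = [(i / 63, i / 63, 1.0) for i in range(64)]
dusk_ramp = [(1.0, i / 63, i / 63) for i in range(64)]
halfway = blend_ramps(day_ramp, dusk_ramp, 0.5)
fogged = apply_fog((0.2, 0.6, 0.2), distance=150.0, density=0.01, ramp=halfway)
```

Because the ramp is only 64 entries long, blending two of them is cheap, which is what makes fading between fog types practical.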

Post processing was mostly handled by Unity’s own Post Processing Stack V2, which includes the ability to fade between volumes which the custom systems extended. While we knew not all of this would be able to translate to the Quest, we needed to retain as much of this as possible.

The Bird

At its core, Falcon Age is about your interactions with your bird. Petting, feeding, playing, hunting, exploring, and cooperating with her. One of the subtle but important aspects of how she “felt” to the player was her feathers, and the ability for the player to pet her and have her and her feathers react. She also has special animations for perching on the player’s hand or even individual fingers, and head stabilization. If at all possible we wanted to retain as much of this aspect of the game as we could, even if it came at the cost of other parts.

You can read more about the work we did on the bird interactions and AI in a previous dev blog post here: https://outerloop.tumblr.com/post/177984549261/anatomy-of-a-falcon

Taking on the Quest

Now, there had to be some compromises, but how bad was it really? The first thing we did was we took the PC version of the game (which natively supports the Oculus Rift) and got that running on the Quest. We left things mostly unchanged, just with the graphics settings set to very low, similar to the base PlayStation 4 PSVR version of the game.

It ran at less than 5 fps. Then it crashed.

Ooph.

But there were some obvious things we could do to fix a lot of that. Post processing had to go. Just about any post processing is too expensive on the Quest, so it was disabled entirely. We forced all the textures in the game to 1/8th resolution, which mostly stopped the crashes, as we had been running out of memory. Next up were real time shadows, which also got disabled entirely. Then we turned off grass and pulled in some of the LOD distances. These weren’t necessarily changes we would keep, just ones to see what it would take to get the performance better. And after that we were doing much better.

A real, solid … 50 fps.

Yeah, nope.

That is still a big divide between where we were and the 72 fps we needed to be at. It became clear that the game would not run on the Quest without more significant changes and removal of assets. Not to mention the game did not look especially nice at this point. So instead of taking the game as it was on the PlayStation VR and PC and trying to make it look like a version of that with the quality sliders set to potato, we chose to go for a slightly different look. Something that would feel a little more deliberate while retaining the overall feel.

Something like this.

Optimize, Optimize, Optimize (and when that fails delete)

Vertex & Batch Count

One of the first and really obvious things we needed to do was to bring down the mesh complexity. On the PlayStation 4 we were pushing somewhere between 250,000 and 500,000 vertices each frame. The long time rule of thumb for mobile VR has been to be somewhere closer to 100,000 vertices, maybe 200,000 max for the Quest.

This was in some ways actually easier than it sounds for us. We turned off shadows. That cut the vertex count down significantly in many areas, as much of the scene’s total vertex count comes from rendering the shadow maps. But the worst case areas were still a problem.

We also needed to reduce the total number of objects and number of materials being used at one time to help with batching. If you’ve read any other “porting to Quest” posts by other developers this is all going to be familiar.

This means combining textures from multiple objects into atlases and modifying the UVs of the meshes to match their new position in the atlas. In our case it meant completely re-texturing all of the rocks with a generic atlas rather than having every rock use a custom texture set.
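The UV side of atlasing is simple arithmetic. Here is a minimal Python sketch, assuming the original UVs sit in the 0 to 1 range (no tiling) and that each texture gets a rectangle in the atlas given as normalized (x, y, width, height). The function name and rectangle layout are made up for the example.

```python
def remap_uv(uv, atlas_rect):
    """Map a mesh UV in [0, 1] into its texture's rectangle inside the atlas.

    atlas_rect is (x, y, width, height) in normalized atlas coordinates.
    Assumes the original UVs do not tile outside the 0 to 1 range.
    """
    u, v = uv
    x, y, w, h = atlas_rect
    return (x + u * w, y + v * h)

# A hypothetical rock texture placed in the bottom-right quarter of the atlas.
rock_rect = (0.5, 0.0, 0.5, 0.5)
rock_uvs = [(0.0, 0.0), (1.0, 0.0), (0.5, 0.5)]
atlas_uvs = [remap_uv(uv, rock_rect) for uv in rock_uvs]
```

Meshes that relied on tiling UVs can’t be remapped this way, which is one reason some objects need re-texturing instead of a straight repack.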

Now you might think we would also want to reduce the mesh complexity by a ton. And that’s true to an extent. Counter intuitively some of the environment meshes on the Quest are more complex than the original version. Why? Because as I said we were looking to change the look. To that end some meshes ended up being optimized to far lower vertex counts, and others ended up needing a little more mesh detail to make up for the loss in shading detail and unique texturing. But we went from almost every mesh in the game having a unique texture to the majority of environment objects sharing a small handful of atlases. This improved batching significantly, which was a much bigger win than reducing the vertex count for most areas of the game.

That’s not to say vertex count wasn’t an issue still. A few select areas were completely pulled out and rebuilt as new custom merged meshes in cases where other optimizations weren’t enough. Most of the game’s areas are built using kit bashing, reusing sets of common parts to build out areas. Parts like those rocks above, or many bits of technical & mechanical detritus used to build out the refineries in the game. Making bespoke meshes let us remove more hidden geometry, further reduce object counts, and lower vertex counts in those problem areas.

We also saw a significant portion of the vertex count coming from the terrain. We are using Unity’s built in terrain system. And thankfully we didn’t have to start from total scratch here as simply increasing the terrain component's Pixel Error automatically reduces the complexity of the rendered terrain. That dropped the vertex count even more getting us closer to the target budget without significantly changing the appearance of the geometry.

After that many smaller details were removed entirely. I mentioned before we turned off grass entirely. We also removed several smaller meshes from the environment in various places where we didn’t think their absence would be noticed. In some problem areas we also removed NPCs, or disabled out of view NPCs more aggressively.

Shader Complexity

Another big cost was that most of the game used either a lightly modified version of Unity’s Standard shader, or the excellent Toony Colors Pro 2 PBR shader. The terrain also used the excellent and highly optimized MicroSplat. But these were just too expensive to use as they were. So I wrote custom simplified shaders for nearly everything.

The environment objects use a simplified diffuse shading only shader. It has support for an albedo, normal, and (rarely used) occlusion texture. Compared to how we were using the built in Standard shader this cut down the number of textures a single material could use by more than half in some cases. It still has support for the customized gradient fog we used throughout the game, as well as a few other unique options. Support for height fog was built into the shader to cover a few spots in the game where we’d previously used post processing style methods to achieve the effect. I also added support for layering with the terrain’s texture to hide a few places where there were transitions from terrain to mesh.

Toony Colors Pro 2 is a great tool, and is deservedly popular. But the PBR shader we were using for characters is more expensive than even the Standard shader! This is because it’s implemented as mostly the original Standard shader with some code on top to modify the output. Toony Colors Pro 2 has a large number of options for modifying and optimizing what settings to use. But in the end I wrote a new shader from scratch that mimicked some of the aspects we liked about it. Like the environment shader it was limited to diffuse shading, but added a Fresnel shine.

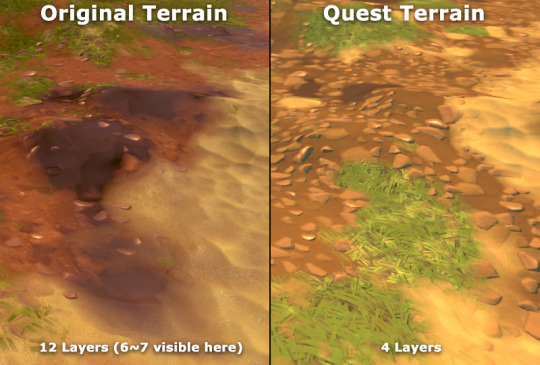

The PSVR and PC terrain used MicroSplat with 12 different terrain layers. MicroSplat makes these very fast and much cheaper to render than the built in terrain rendering. But after some testing we found we couldn’t support more than 4 terrain layers at a time without really significant drops in performance. So we had to go through and completely repaint the entire terrain, limiting ourselves to only 4 texture layers.

Also, like the other shaders mentioned above, the terrain was limited to diffuse only shading. MicroSplat’s built in shader options made this easy, and apart from the same custom fog support added for the original version, it didn’t require any modifications.

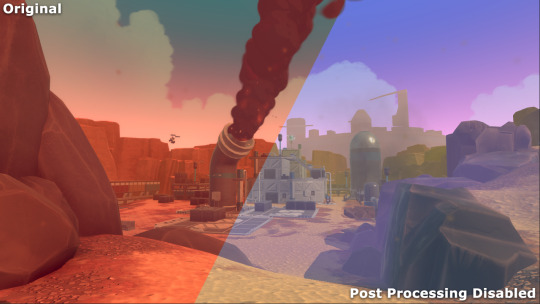

Post Processing, Lighting, and Fog

The PSVR and PC versions of Falcon Age make use of color grading, ambient occlusion, bloom, and depth of field. The Quest is extremely fill rate limited, meaning full screen passes of anything are extremely expensive, regardless of how simple the shader is. So instead of trying to get this working we opted to disable all post processing. However this resulted in the game being significantly less saturated, and in extreme cases looking completely different. To make up for this, the colors of the lighting and the gradient fog were tweaked. This is probably the single biggest factor in the original versions of the game and the Quest version not looking quite the same.

Also as mentioned before we disabled real time shadows. We discussed doing what many other games have done, which is to move to baked lighting, or at least pre-baked shadows. We decided against this for a number of reasons. Not the least of which was that our game is mostly outdoors, so shadows weren’t as important as they might have been for many other games. We’ve also found that simple real time lighting can often be faster than baked lighting, and that certainly proved to be true for this game.

However the lack of shadows and screen space ambient occlusion meant that there was a bit of a disconnect between characters in the world and the ground. So we added simple old school blob shadows. These are simple sprites that float just above the terrain or collision geometry, using a raycast from a character’s center of mass, and sometimes from individual feet. There’s a small selection of basic blob shapes and a few unique shapes for certain feet shapes to add a little extra bit of ground connection. These are faded out quickly in the distance to reduce the number of raycasts needed.
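A rough Python sketch of the blob shadow logic follows, with the engine raycast replaced by a hypothetical `ground_height_at` callback. The fade distances, the hover offset, and every name here are assumptions for illustration only.

```python
def blob_shadow_alpha(camera_distance, fade_start, fade_end):
    """Fade the blob out with distance so far-away characters need no raycast."""
    if camera_distance >= fade_end:
        return 0.0
    if camera_distance <= fade_start:
        return 1.0
    return (fade_end - camera_distance) / (fade_end - fade_start)

def place_blob(origin, ground_height_at, camera_distance,
               fade_start=10.0, fade_end=20.0, hover=0.02):
    """Return (position, alpha) for a blob sprite, or None when fully faded.

    ground_height_at(x, z) stands in for a downward raycast against the
    terrain or collision geometry; it is only called when the blob is visible.
    """
    alpha = blob_shadow_alpha(camera_distance, fade_start, fade_end)
    if alpha <= 0.0:
        return None  # skip the raycast entirely
    x, _, z = origin
    ground_y = ground_height_at(x, z)
    return ((x, ground_y + hover, z), alpha)

# Flat ground at height 0.5 as a stand-in for the real scene query.
flat_ground = lambda x, z: 0.5
near = place_blob((1.0, 2.0, 3.0), flat_ground, camera_distance=5.0)
far = place_blob((1.0, 2.0, 3.0), flat_ground, camera_distance=30.0)
```

Checking the fade before the ground query is the point of the sketch: the expensive part only runs for blobs that will actually be drawn.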

Falcon

Apart from the aforementioned changes to the shading, which was also applied to the falcon’s custom shaders, we did almost nothing to the bird. All the original animations, reaction systems, and feather interactions remained. The only thing we did to the bird was simplify a few of the bird equipment and toy models. The bird models themselves remained intact.

I did say we thought this was important at the start. Early on we basically drew a line in the sand and said we were going to keep everything enabled on the bird unless absolutely forced to disable it.

There was one single sacrifice to the optimization gods we couldn’t avoid though. That’s the trails on the bird’s wings. We were making use of Ara Trails, which produce very high quality and configurable trails with a lot more control than Unity’s built in systems. These weren’t really a problem for rendering on the GPU, but CPU usage was enough that it made sense to pull them.

Selection Highlights

This is perhaps an odd thing to call out, but the original game used a multi pass post process based effect to draw the highlight outlines on objects for both interaction feedback and damage indication. These proved to be far too expensive to use on the Quest. So I had to come up with a different approach. Something like your basic inverted shell outline, like so many toon stylized games use, would seem like the perfect approach. However we never built the meshes to work with that kind of technique, and even though we were rebuilding large numbers of the meshes in the game anyway, some objects we wanted to highlight proved difficult for this style of outline.

With some more work it would have been possible to make this an option. But instead I found an easier to implement approach that, on the face of it, should have been super slow. It turns out the Quest is very efficient at handling stencil masking. This is a technique that lets you mark certain pixels of the screen so that meshes rendered afterwards can be told not to draw into them. So I render the highlighted object 6 times! With 4 of those times slightly offset in screen space in the 4 diagonal directions. The result is a fairly decent looking outline that works on arbitrary objects, and was cheap enough to be left enabled on everything that it had been on before, including objects that might cover the entire screen when being highlighted.
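To show why the offset passes produce an outline, here is a toy Python model on a pixel grid. It assumes one pass marks the object’s pixels in the stencil and four passes then draw the outline color at diagonal offsets wherever the stencil is not set. What the remaining passes do isn’t covered here, and none of this is the actual shader setup.

```python
def outline_pixels(object_pixels, offset=1):
    """Return the pixels an offset-pass outline would color.

    object_pixels: iterable of (x, y) pixels covered by the highlighted object.
    The set 'mask' plays the role of the stencil buffer: offset passes are
    rejected wherever the object itself has already been marked.
    """
    mask = set(object_pixels)
    diagonals = ((-offset, -offset), (-offset, offset),
                 (offset, -offset), (offset, offset))
    outline = set()
    for dx, dy in diagonals:
        for x, y in mask:
            shifted = (x + dx, y + dy)
            if shifted not in mask:  # stencil test: skip marked pixels
                outline.add(shifted)
    return outline

single = outline_pixels({(5, 5)})
block = {(0, 0), (1, 0), (0, 1), (1, 1)}
block_outline = outline_pixels(block)
```

With only diagonal offsets, very thin features get a slightly gappy outline, which fits the “fairly decent looking” description above.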

Particles and General VFX

For the PSVR version of the game, we already had two levels of VFX to support the base PlayStation 4 and PlayStation 4 Pro with different kinds of particle systems. The Quest version started out with the lower end particle systems, but it wasn’t enough. Across the board the number and size of particles had to be reduced, with some effects removed or replaced entirely. This was both for CPU performance, as the sheer number of particles was a problem, and for GPU performance, as the screen area the particles covered became a problem given the Quest’s limited fill rate.

For example the baton had an effect that included a few very simple circular glows on top of electrical arcs and trailing embers. The glows covered enough of the screen to cause a noticeable drop in framerate even just holding it by your side. Holding it up in front of your face proved too expensive to keep framerate in even the simplest of scenes.

Similarly, the number of embers had to be reduced to lessen the CPU impact. The above comparison image only shows the removal of the glow and already has the reduced particle count applied.

Another more substantive change was the large smoke plumes. You may have already noticed the difference in some of the previous comparisons above. In the original game these used regular sprites. But even after cutting the particle count in half the rendering cost was too much. So these were replaced with mesh cylinders using a shader that makes them ripple and fade out. Before this change, the areas with smoke plumes were unable to hold 72 fps any time the plumes were in view, sometimes dipping as low as 48 fps. Afterwards they ceased to be a performance concern.

Those smoke plumes originally made use of a stylized smoke / explosion effect. That same style of effect is reused frequently in the game for any kind of smoke puff or explosion. So while they were removed for the smoke stacks, they still appeared frequently. Every time you took out a sentry or drone your entire screen was filled with these smoke effects, and the frame rate would dip below the target. With some experimentation we found that, counter to a lot of information out there, alpha tested (or more specifically alpha to coverage) particles were far more efficient to render than the original alpha blended particles, with a very similar overall appearance. So that, plus some other optimizations to those shaders and the particle counts of those effects, meant multiple full screen explosions no longer caused a loss in frame rate.

The two effects are virtually identical in appearance, ignoring the difference in lighting and post processing. The main difference here is the Quest explosion smoke is using dithered alpha to coverage transparency. You can see it if you look closely enough, even with the gif color dithering.
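For anyone unfamiliar with dithered alpha to coverage, here is a simplified Python model of the idea. It assumes 4x MSAA and a 2x2 ordered dither pattern. Real GPUs decide the coverage mask in their own implementation specific way, so treat the numbers and names as illustrative only.

```python
import math

# 2x2 ordered dither thresholds (an assumption; any small pattern would do).
DITHER_2X2 = ((0.125, 0.625),
              (0.875, 0.375))

def covered_samples(alpha, x, y, samples=4):
    """How many MSAA samples a pixel writes for a given alpha.

    The per-pixel dither threshold nudges the rounding so that neighboring
    pixels round differently and the average coverage tracks alpha closely.
    """
    threshold = DITHER_2X2[y % 2][x % 2]
    count = math.floor(alpha * samples + threshold)
    return max(0, min(samples, count))

def average_coverage(alpha, samples=4):
    """Mean fraction of covered samples over one 2x2 dither tile."""
    total = sum(covered_samples(alpha, x, y, samples)
                for y in range(2) for x in range(2))
    return total / (4 * samples)

for a in (0.0, 0.3, 0.5, 1.0):
    print(a, average_coverage(a))
```

In this model an alpha of 0.3 averages out to 5 of 16 samples across the tile, where plain rounding without the dither would snap every pixel to 1 of 4. Because each sample is either written or not, no blending against the existing frame buffer is needed.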

Success!

So after all that we finally got to the goal of a 72 Hz frame rate! Coming soon to an Oculus Quest near you!

https://www.oculus.com/experiences/quest/2327302830679091/


Why Virtual Reality Is a Whole Lot More Important Than You Think

https://120profit.com/?p=2372&utm_source=SocialAutoPoster&utm_medium=Social&utm_campaign=Tumblr

Virtual reality is best described through the moments, or rather the memories, of the things you experience when you have that headset on.

When I put on the HTC Vive and experienced virtual reality for the first few times: I played fetch with a robot dog in a futuristic laboratory. I threw a baseball cap into the sun. I leapt away from the low groan of a zombie behind me. I tried to use my virtual hand to scratch my real nose when it was itchy.

In virtual reality, I became an active participant in an environment completely removed from reality. And that’s what makes VR a wildly different experience from watching a movie or even playing a video game. Unlike most forms of media, virtual reality blocks out the rest of the world in a way that doesn’t just encourage us to suspend our disbelief, but actually takes our senses for a ride and immerses us wholly in the experience.

From the outside, bystanders will see someone wearing a headset, waving their hands around, wearing a smile or a serious, focused expression on their face. This is what VR looks like to people on the outside:

But to the person wearing the headset, they’re completely drawn into a whole other world, where they can actually affect and interact with their environment in a way that just isn’t possible in any other medium. This is what they actually see:

It’s easy to be skeptical about virtual reality if you haven’t tried it. I know I was. After all, attempts at virtual reality go back to the 1960s, all of which just never caught on. But in the case of today’s VR, after trying a few different experiences, it’s clear why Facebook spent $2 billion to acquire the Oculus Rift virtual reality system in 2014.

There are two kinds of people: Those who think VR will change the world. And those who haven’t tried VR.

Words don’t do it justice. The best way to understand virtual reality is to experience it yourself. The second best way to understand VR is probably to understand what it isn’t.

What Virtual Reality Is Not

Before I tried VR, I had already formed an (inaccurate) opinion about what it’d be like. And after comparing my experience with others, I realize this is a mistake that a lot of people will make. So before we go into the implications of VR, let’s clear up a few things first.

1. Virtual Reality Isn’t a Fad

VR isn’t “the next step after 3D movies”. It isn’t “like having a small TV strapped to your face” either. And it’s not “just for gamers”. VR is an emerging breed of technology that will take some time to fully take root, like the automobile or even the personal computer.

This excerpt from Commodore Magazine published in 1987 illustrates the atmosphere of skepticism that tends to accompany new technology, in this case the personal computer:

“Experts predicted that within five years, every household would have a computer. Dad would run his business on it. Mom would store her recipes on it. The kids would do their homework on it. Today only 15% of American homes have a computer – and the other 85% don't seem the least bit interested. There is a general feeling that the home computer was a fad and that there is really no practical purpose for a computer in the home.”

We can rely on technology to grow at an exponential rate. How well we adopt that technology, however, depends on many other variables. But unlike the smartwatch and other relatively new devices, there’s more buy-in around VR, from Google’s Cardboard, Valve’s Steam, Facebook’s Oculus, HTC’s Vive, and Sony’s PlayStation VR. We can expect adoption to be a lot smoother and faster than it was for other emerging technology. And that’s a good thing, because virtual reality isn’t an incremental step up in terms of innovation. It’s a huge leap forward.

2. Virtual Reality Is Not the Same As Augmented Reality

You’ve probably experienced augmented reality before, especially if you’ve used Snapchat’s video filters to vomit rainbows or swap faces with a friend. Augmented reality (AR), as the name suggests, augments reality by applying a layer over your view of the real world. Like a heads-up display (HUD), AR enhances your perception. It doesn’t try to replace it entirely.

Virtual reality, on the other hand, removes you from reality and that’s what affords it a level of immersion we’ve never been able to achieve before. And while it’s possible to combine the two so that parts of what you see are drawn from the real world and parts of it are virtual, the full immersion you get with “true VR” is what makes it drastically different from anything else you’ve tried.

3. Google’s Cardboard And The HTC VIVE Are Virtual Worlds Apart

There are essentially two very different VR experiences you can try right now. One is affordable and accessible, consisting largely of 360 degree videos. You might have encountered these on YouTube or Facebook. You slot your smartphone into a Google Cardboard, Samsung Gear VR or other headset, fire up a compatible VR app and put it on. Your smartphone’s accelerometer enables your point of view to correspond with the movement of your headset.

Considering its incredibly low price, Google’s Cardboard headset isn’t a bad way to get a small taste of VR in its most rudimentary form. But it doesn't quite compare to the experience of true VR. Riding a rollercoaster in a 360 degree video only scratches the surface of the VR experience.

To experience virtual reality at its best right now, you’ll need a powerful PC and over $799 USD for the HTC Vive’s headset, two motion controllers, and motion sensors. You’ll also need a dedicated room to set it all up in. But this takes the experience to a whole other level by adding environmental interaction, depth, and movement in a 3D space.

A Shopify Unite conference attendee experiences VR with the HTC Vive.

While 360 degree video is a step in a different direction, it’d be a big mistake to assume you’ve really experienced virtual reality if that’s the only thing you’ve tried.

The Future of Commerce in VR Isn’t What You Think

When we think about shopping in virtual reality, it’s easy to assume it’ll look something like the brick and mortar experience we’re already familiar with: Pushing a cart around, picking up the items we want to buy, taking those items to the checkout, but all from the comfort of our homes. But that’s a poor use of both VR and the human imagination, especially when virtual environments present a blank 3-Dimensional canvas that can be filled with literally anything.

If you could set up shop anywhere, why do it in a store? For example, instead of laying out your camping equipment in a cluttered retail space, why not showcase your products far away from any cities, with a sun setting and a fire crackling, and transport your customers there instead of leaving it to their imagination?

VR offers a whole new way for brands to tell stories around their products. Consider how effective video content is at holding up a mirror to consumers and helping them see themselves in others using the products they might want to own. Now picture what VR can achieve as it puts consumers in the very shoes of someone enjoying those products.

What’s more, VR affords consumers the ability to get a sense of scale with your products, something that’s always been difficult for even the best product pages to properly convey online. Naturally, specific verticals will benefit greatly from VR, such as the furniture industry, as consumers could view tables or couches in a virtual room for a better idea of how these items would look in their own homes. They can even customize these products, changing the color or the size, and see their choices come to life virtually in front of them, just a purchase away from being actually in front of them.

VR Isn't Just for Consumers

The role VR will play in the future of commerce won’t rest solely on the consumer’s side. There will be implications for business owners too.

While you probably won’t be managing your online store in VR, you might be spending a few hours at a time with games and entertainment. You’re going to want a way to stay immersed in VR so you don’t have to take off the headset for every text message, phone call or real world distraction. The HTC Vive already connects with your phone so that you can respond to calls and messages with the headset on. The same could be done with order notifications, or support questions. A merchant could perform basic operations for their store while they are within a game or other experience.

VR can also potentially help business owners plan out the look of a retail space, or even visualize product concepts before they’re created. In fact, VR can do more than just show you the product in front of you. It could blow it up to show all of its components, and allow you to better understand how it all fits together. This can be incredibly useful for training purposes, or even to show a manufacturer the intended steps to assemble your product.

The Metaverse: An Inevitable Social Network in VR

One thing to keep in mind when it comes to VR is that, like television, video game consoles or your smartphone, it’ll be something that people routinely plug into to consume content or interact with others all over the world. The hard-to-ignore question, then, becomes what kind of social networks will VR spawn?

In a virtual environment there’s opportunity to interact with others in a way that’s even more “face-to-face” than a FaceTime video call. This hypothetical social network has been dubbed the “metaverse” and, once realized, it will be a major tipping point for VR.

vTime is just one early attempt at a "Second Life" in VR.

Once a consistent, connected social experience is established, like Facebook or Twitter, it can then grow into a new channel for businesses to engage with consumers, like Facebook or Twitter.

Virtual Reality Has Finally Become a Reality

If you haven’t tried VR yet, I strongly encourage you to do so if you ever have the chance. Because one thing that will surprise you (just as it surprised me) is how good VR is right now, and how easy it is to lose yourself in the experience.

When you consider the inevitable improvements that are to come for this technology, as well as the still-growing library of content for VR, it’s safe to say that, after decades of attempts, virtual reality is no longer something only found between the pages of a science fiction novel. Virtual reality is now a reality.

About The Author

Braveen Kumar is a Content Crafter at Shopify where he develops resources to empower entrepreneurs to start and succeed in business.
