#kubernetes explained


Text

#kubernetes controller manager#kubernetes controller golang#kubernetes controller explained#kubernetes controller example#kubernetes controller development

0 notes

Text

something that sucks about programming jobs is that it's really hard to talk about them. if you're talking with other computer bitches there's this implicit dick-measuring contest at all times. non-computers ppl don't really ask about work at all. I think this is because it takes a lot of explaining to figure out what the hell you're even talking about, much less the relevant context. I tried to explain Kubernetes to an ex once and it took several hours to build up the concepts to give a rough sketch of why I was frustrated with it. I think we really take for granted the tower of abstractions that you need to engage with this work. I feel quite lonely in this regard.

17 notes


Text

The. Stack. Must. Grow.

how did i replace factorio with Kubernetes? Can someone explain this to me?

5 notes


Text

Tumblr has been making a lot of controversial changes lately, and this post has some great points that inspired me to say more.

It seems like people are very split on whether our hellsite doing stupid anti-user stuff means that we need to show more support or show less support. In my opinion, it's a cry for help that means we need more support (especially monetary), and I'll explain why.

Tumblr is currently a financially sinking ship. It's costing more money in upkeep than it's making. Automattic, the company that owns it, is trying to make it profitable, because they're a business. It's what they do. In my opinion, they have much better intentions than the previous overlords. Matt Mullenweg, the CEO of Automattic, said (a bit indirectly) in a blog post that he wants to open source Tumblr. That was 12 August 2019.

At the time of writing this post (23 August 2023), they're doing a damn good job of it. Looking through the blog of Tumblr's engineering team, they've already open-sourced several of the site's components:

StreamBuilder (the thing that makes the dashboard)

Kanvas (media editor and camera)

Tumblr's custom Kubernetes system (this is what allows them to scale the site's software to a huge number of servers to handle all the traffic)

webpack-web-app-manifest-plugin (I have no idea what this one does, maybe some JavaScript developer can enlighten me)

and that's great! More importantly, it shows that they have good intentions. Making the site open source is a very pro-consumer thing to do, because it means they care about consumers having good services more than they care about being profitable. If they only cared about profit, they would avoid the risk of inadvertently assisting competitors with the open source effort.

My point here is that they are genuinely trying to balance keeping users happy with not having Tumblr die completely.

So at this point, their options are:

sit still and let the platform die

change stuff until the platform is profitable

and since doing #1 would be stupid, they're doing #2. Needless to say, they are not doing a great job of it, for many many reasons. The most direct thing we can do is give them money so that the platform becomes profitable, that way they're no longer being held hostage by their finances. However compelling user feedback may be, it's not more compelling than the company dying. So we save the site from dying financially, then we work on improving the other stuff.

Some people think that giving them money is endorsing what they're doing right now, like disproportionately applying the mature label to trans folks, and twitterifying the dashboard. I disagree.

Giving money to Tumblr is saying "I think you can do better with more resources, why don't you show me."

They clearly need more resources to moderate properly, and to figure out how their decisions are impacting users. In the first post I linked it talks about how running experiments on people's behavior (and getting meaningful results) is really hard. They clearly need more resources for that, so they can accurately quantify how shit their decisions are, and then make better ones. They can't do that if the site is fucking dead.

Tumblr can't get better if it's fucking dead.

so buy crabs, support the site, and have faith that it will improve eventually. If it doesn't, we can all jump ship to cohost or something, but I would prefer to stay here.

8 notes


Text

Full Stack Development: Using DevOps and Agile Practices for Success

In today’s fast-paced and highly competitive tech industry, the demand for Full Stack Developers is steadily on the rise. These versatile professionals possess a unique blend of skills that enable them to handle both the front-end and back-end aspects of software development. However, to excel in this role and meet the ever-evolving demands of modern software development, Full Stack Developers are increasingly turning to DevOps and Agile practices. In this comprehensive guide, we will explore how the combination of Full Stack Development with DevOps and Agile methodologies can lead to unparalleled success in the world of software development.

Full Stack Development: A Brief Overview

Full Stack Development refers to the practice of working on all aspects of a software application, from the user interface (UI) and user experience (UX) on the front end to server-side scripting, databases, and infrastructure on the back end. It requires a broad skill set and the ability to handle various technologies and programming languages.

The Significance of DevOps and Agile Practices

The environment for software development has changed significantly in recent years. The adoption of DevOps and Agile practices has become a cornerstone of modern software development. DevOps focuses on automating and streamlining the development and deployment processes, while Agile methodologies promote collaboration, flexibility, and iterative development. Together, they offer a powerful approach to software development that enhances efficiency, quality, and project success. In this blog, we will delve into the following key areas:

Understanding Full Stack Development

Defining Full Stack Development

We will start by defining Full Stack Development and elucidating its pivotal role in creating end-to-end solutions. Full Stack Developers are akin to the Swiss Army knives of the development world, capable of handling every aspect of a project.

Key Responsibilities of a Full Stack Developer

We will explore the multifaceted responsibilities of Full Stack Developers, from designing user interfaces to managing databases and everything in between. Understanding these responsibilities is crucial to grasping the challenges they face.

DevOps’s Importance in Full Stack Development

Unpacking DevOps

A collection of principles known as DevOps aims to eliminate the divide between development and operations teams. We will delve into what DevOps entails and why it matters in Full Stack Development. The benefits of embracing DevOps principles will also be discussed.

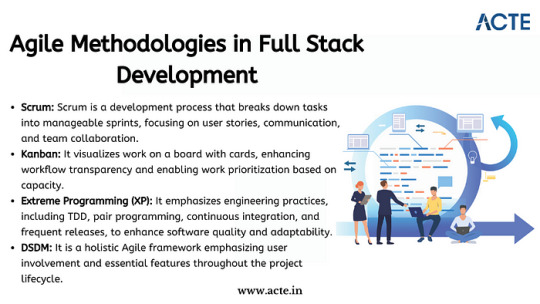

Agile Methodologies in Full Stack Development

Introducing Agile Methodologies

Agile methodologies like Scrum and Kanban have gained immense popularity due to their effectiveness in fostering collaboration and adaptability. We will introduce these methodologies and explain how they enhance project management and teamwork in Full Stack Development.

Synergy Between DevOps and Agile

The Power of Collaboration

We will highlight how DevOps and Agile practices complement each other, creating a synergy that streamlines the entire development process. By aligning development, testing, and deployment, this synergy results in faster delivery and higher-quality software.

Tools and Technologies for DevOps in Full Stack Development

Essential DevOps Tools

DevOps relies on a suite of tools and technologies, such as Jenkins, Docker, and Kubernetes, to automate and manage various aspects of the development pipeline. We will provide an overview of these tools and explain how they can be harnessed in Full Stack Development projects.

Implementing Agile in Full Stack Projects

Agile Implementation Strategies

We will delve into practical strategies for implementing Agile methodologies in Full Stack projects. Topics will include sprint planning, backlog management, and conducting effective stand-up meetings.

Best Practices for Agile Integration

We will share best practices for incorporating Agile principles into Full Stack Development, ensuring that projects are nimble, adaptable, and responsive to changing requirements.

Learning Resources and Real-World Examples

To gain a deeper understanding, ACTE Institute presents case studies and real-world examples of successful Full Stack Development projects that leveraged DevOps and Agile practices. These stories will offer valuable insights into best practices and lessons learned. Consider enrolling in an accredited full stack developer training course to increase your full stack proficiency.

Challenges and Solutions

Addressing Common Challenges

No journey is without its obstacles, and Full Stack Developers using DevOps and Agile practices may encounter challenges. We will identify these common roadblocks and provide practical solutions and tips for overcoming them.

Benefits and Outcomes

The Fruits of Collaboration

In this section, we will discuss the tangible benefits and outcomes of integrating DevOps and Agile practices in Full Stack projects. Faster development cycles, improved product quality, and enhanced customer satisfaction are among the rewards.

In conclusion, this blog has explored the dynamic world of Full Stack Development and the pivotal role that DevOps and Agile practices play in achieving success in this field. Full Stack Developers are at the forefront of innovation, and by embracing these methodologies, they can enhance their efficiency, drive project success, and stay ahead in the ever-evolving tech landscape. We emphasize the importance of continuous learning and adaptation, as the tech industry continually evolves. DevOps and Agile practices provide a foundation for success, and we encourage readers to explore further resources, courses, and communities to foster their growth as Full Stack Developers. By doing so, they can contribute to the development of cutting-edge solutions and make a lasting impact in the world of software development.

#web development#full stack developer#devops#agile#education#information#technology#full stack web development#innovation

2 notes


Text

CNAPP Explained: The Smartest Way to Secure Cloud-Native Apps with EDSPL

Introduction: The New Era of Cloud-Native Apps

Cloud-native applications are rewriting the rules of how we build, scale, and secure digital products. Designed for agility and rapid innovation, these apps demand security strategies that are just as fast and flexible. That’s where CNAPP—Cloud-Native Application Protection Platform—comes in.

But simply deploying CNAPP isn’t enough.

You need the right strategy, the right partner, and the right security intelligence. That’s where EDSPL shines.

What is CNAPP? (And Why Your Business Needs It)

CNAPP stands for Cloud-Native Application Protection Platform, a unified framework that protects cloud-native apps throughout their lifecycle—from development to production and beyond.

Instead of relying on fragmented tools, CNAPP combines multiple security services into a cohesive solution:

Cloud Security

Vulnerability management

Identity access control

Runtime protection

DevSecOps enablement

In short, it covers the full spectrum—from your code to your container, from your workload to your network security.

Why Traditional Security Isn’t Enough Anymore

The old way of securing applications with perimeter-based tools and manual checks doesn’t work for cloud-native environments. Here’s why:

Infrastructure is dynamic (containers, microservices, serverless)

Deployments are continuous

Apps run across multiple platforms

You need security that is cloud-aware, automated, and context-rich—all things that CNAPP and EDSPL’s services deliver together.

Core Components of CNAPP

Let’s break down the core capabilities of CNAPP and how EDSPL customizes them for your business:

1. Cloud Security Posture Management (CSPM)

Checks your cloud infrastructure for misconfigurations and compliance gaps.

See how EDSPL handles cloud security with automated policy enforcement and real-time visibility.
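As a rough illustration of the kind of check a CSPM tool automates, here is a minimal Python sketch. The `StorageBucket` record and both rules are assumptions invented for this example; they are not EDSPL's implementation or any cloud provider's API.

```python
from dataclasses import dataclass


@dataclass
class StorageBucket:
    """Hypothetical, simplified view of a cloud storage resource."""
    name: str
    public_read: bool
    encrypted: bool


def find_misconfigurations(buckets):
    """Return (bucket name, issue) pairs for every rule a bucket violates."""
    findings = []
    for bucket in buckets:
        # Rule 1 (assumed policy): storage must not be world-readable.
        if bucket.public_read:
            findings.append((bucket.name, "publicly readable"))
        # Rule 2 (assumed policy): data must be encrypted at rest.
        if not bucket.encrypted:
            findings.append((bucket.name, "encryption at rest disabled"))
    return findings


inventory = [
    StorageBucket("logs", public_read=False, encrypted=True),
    StorageBucket("uploads", public_read=True, encrypted=False),
]

for name, issue in find_misconfigurations(inventory):
    print(f"{name}: {issue}")
```

A real platform would pull the inventory from the provider's APIs and evaluate far more rules, but the shape is the same: a declared policy, a scan, and a list of findings.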

2. Cloud Workload Protection Platform (CWPP)

Protects virtual machines, containers, and functions from attacks.

This includes deep integration with application security layers to scan, detect, and fix risks before deployment.

3. Cloud Infrastructure Entitlement Management (CIEM)

Monitors access rights and roles across multi-cloud environments.

Your network, routing, and storage environments are covered with strict permission models.

4. DevSecOps Integration

CNAPP shifts security left—early into the DevOps cycle. EDSPL’s managed services ensure security tools are embedded directly into your CI/CD pipelines.

5. Kubernetes and Container Security

Containers need runtime defense. Our approach ensures zero-day protection within compute environments and dynamic clusters.

How EDSPL Tailors CNAPP for Real-World Environments

Every organization’s tech stack is unique. That’s why EDSPL never takes a one-size-fits-all approach. We customize CNAPP for your:

Cloud provider setup

Mobility strategy

Data center switching

Backup architecture

Storage preferences

This ensures your entire digital ecosystem is secure, streamlined, and scalable.

Case Study: CNAPP in Action with EDSPL

The Challenge

A fintech company using a hybrid cloud setup faced:

Misconfigured services

Shadow admin accounts

Poor visibility across Kubernetes

EDSPL’s Solution

Integrated CNAPP with CIEM + CSPM

Hardened their routing infrastructure

Applied real-time runtime policies at the node level

✅ The Results

75% drop in vulnerabilities

Improved time to resolution by 4x

Full compliance with ISO, SOC2, and GDPR

Why EDSPL’s CNAPP Stands Out

While most providers stop at integration, EDSPL goes beyond:

🔹 End-to-End Security: From app code to switching hardware, every layer is secured.

🔹 Proactive Threat Detection: Real-time alerts and behavior analytics.

🔹 Customizable Dashboards: Unified views tailored to your team.

🔹 24x7 SOC Support: With expert incident response.

🔹 Future-Proofing: Our background vision keeps you ready for what’s next.

EDSPL’s Broader Capabilities: CNAPP and Beyond

While CNAPP is essential, your digital ecosystem needs full-stack protection. EDSPL offers:

Network security

Application security

Switching and routing solutions

Storage and backup services

Mobility and remote access optimization

Managed and maintenance services for 24x7 support

Whether you’re building apps, protecting data, or scaling globally, we help you do it securely.

Let’s Talk CNAPP

You’ve read the what, why, and how of CNAPP — now it’s time to act.

📩 Reach us for a free CNAPP consultation. 📞 Or get in touch with our cloud security specialists now.

Secure your cloud-native future with EDSPL — because prevention is always smarter than cure.

0 notes

Text

Helm Tutorials: Simplify Kubernetes Package Handling | Waytoeasylearn

Helm is a tool designed to simplify managing applications on Kubernetes. It makes installing, updating, and sharing software on cloud servers easier. This article explains what Helm is, how it functions, and why many people prefer it. It highlights its key features and shows how it can improve your workflow.

Master Helm Effortlessly! 🚀 Dive into the Best Waytoeasylearn Tutorials for Streamlined Kubernetes & Cloud Deployments.➡️ Learn Now!

What You Will Learn

✔ What Helm Is: Understand why Helm is important for Kubernetes and what its main parts are.

✔ Helm Charts & Templates: Learn to create and modify Helm charts using templates, variables, and built-in features.

✔ Managing Repositories: Set up repositories, host your charts, and track different versions with ChartMuseum.

✔ Handling Charts & Dependencies: Perform upgrades, rollbacks, and manage dependencies easily.

✔ Helm Hooks & Kubernetes Jobs: Use hooks to run tasks before or after installation and updates.

✔ Testing & Validation: Check Helm charts through linting, status checks, and organized tests.

Why Take This Course?

🚀 Simplifies Kubernetes Workflows: Automate the process of deploying applications with Helm.

💡 Hands-On Learning: Use real-world examples and case studies to see how Helm works.

⚡ Better Management of Charts & Repositories: Follow best practices for organizing and handling charts and repositories.

After this course, you will be able to manage Kubernetes applications more efficiently using Helm’s tools for automation and packaging.

0 notes

Text

How to Choose the Right Azure DevOps Consulting Services for Your Business

In the current digital era, every company, big or small, wants to ship software faster, more reliably, and with greater assurance of quality. To get there, many organizations are turning to DevOps Consulting Services, especially those built on Azure, Microsoft's cloud platform. The right Azure DevOps Consulting Services can help your business bridge development and operations, automate workflows, and maximize productivity.

But with so many options available, how do you choose the right DevOps consulting company for your needs?

This guide will explain to you what DevOps is, why Azure is a smart choice, and how to join hands with your best consulting partner in working toward your goals.

What is Azure DevOps Consulting Services

Microsoft Azure DevOps Consulting Services are expert-led, custom solutions that help your organization learn, adopt, and scale DevOps practices using the Microsoft Azure DevOps toolset. These on-demand, personalized services are provided by Microsoft-certified, Azure-based DevOps consultants who collaborate with you to design, deploy, and manage your Azure-based DevOps infrastructure and workflows.

Here’s why to consider ByteDance for Azure DevOps consulting services.

Faster and more reliable software releases

Automated testing and deployment pipelines

Improved collaboration between developers and IT operations

Better visibility and control over the development lifecycle

Enterprise-ready, scalable cloud infrastructure with the security and compliance features customers expect from Microsoft

Whether you’re new to DevOps or looking to improve your practice, an experienced consulting firm can help you better navigate the landscape.

Why You Should Consider Azure for DevOps

Before we get into the details of how to select a consultant, why is Azure becoming the go-to platform for DevOps?

1. Integrated Tools

Azure provides an end to end DevOps experience with Azure Repos (source control), Azure Pipelines (CI/CD), Azure Boards (work tracking), Azure Test Plans (testing) and Azure Artifacts (package management).

2. Scalability and Flexibility

Whether you choose cloud-native or hybrid solutions, Azure provides the flexibility to expand your DevOps processes as your business grows.

3. Security and Compliance

Azure provides a secure and compliant foundation, with more than 113 certifications, built-in security features, and role-based access control, so you can integrate security into your DevOps process.

4. Streamlined Implementation

Azure DevOps includes deep third-party integrations with dozens of tools and services such as GitHub, Jenkins, Docker and Kubernetes providing you the freedom to easily plug it into your current tech stack.

Top 6 Factors to Consider When Selecting a DevOps Consulting Company

Hopefully now you’re sold on the tremendous value you stand to receive by leveraging the power of Azure & DevOps, so now let’s talk about how you can start determining who the right consulting partner is going to be for you.

1. Expertise and Experience with Azure

Not all DevOps consultants are Azure specialists. Look for a DevOps consulting company that’s proven themselves by deploying and managing Azure DevOps environments.

What to Check:

Certifications (e.g., Microsoft Partner status)

Case studies with Azure projects

In-depth knowledge of Azure tools and services

2. Understanding of Your Business Goals

There is no one-size-fits-all approach to adopting DevOps. The right consultant will dig deep in the beginning to understand your business requirements, what makes you unique, your pain points and your vision for sustainable, future growth.

Questions to Ask:

Have they worked with businesses of your size or industry?

Do they offer tailored strategies or only pre-built solutions?

3. Deep-Dive Integrated Delivery

The best Azure DevOps Consulting Services guide you through the entire DevOps lifecycle — from planning and design, to implementation and ongoing, continuous monitoring.

Look For:

Assessment and gap analysis

Infrastructure setup

CI/CD pipeline creation

Monitoring and support

4. Training and Knowledge Transfer

A reliable consulting firm doesn’t just build the system — they also empower your internal teams to manage and scale the system after implementation.

Ensure They Provide:

User training sessions

Documentation

Long-term support if needed

5. Automation and Cost optimization

A good DevOps consulting company will help you pinpoint areas where cloud deployment can accelerate automation, minimize manual processes, improve efficiency, and bring your operational costs down.

Tools and Areas:

Automated testing and deployments

Infrastructure as Code (IaC)

Resource optimization on Azure

6. Flexibility and Support

Just as your business environment is constantly changing, your DevOps processes should change and grow with it. Select a strategic partner that is willing and able to provide flexible solutions, quick turnaround, and ongoing support.

Things to Consider Before You Hire Azure DevOps Consultants

For a smart decision, ask these questions first.

Which Azure DevOps technologies are your primary focus?

Can you share some success stories or case studies?

Do you offer cloud migration or integration services with Azure?

What does your onboarding process look like for new users?

What do you do to head off issues or slipping deadlines on a project?

By asking these questions you should get a full picture of their experience, their process and most importantly if they are trustworthy or not.

Pitfalls to Look Out for When Hiring DevOps Consulting Services

No one is above making the wrong call, and that includes long-established, mature companies. These are some of the traps that lead many to fail; don’t be one of those people who fall through the cracks.

1. Focusing Only on Cost

Choosing the cheapest option may save money short-term but could lead to poor implementation or lack of support. Look for value, not just price.

2. Ignoring Customization

Generic DevOps solutions often fail to address unique business needs. Make sure the consultants offer customizable services.

3. Skipping Reviews and References

Follow up on testimonials and reviews, or request client references, to ensure you’re working with a trusted provider.

Here’s How Azure DevOps Consulting Services Can Help Your Business

Here’s a more in-depth look at what enterprises have to gain by working with the right Azure DevOps partner on board.

Faster Time to Market

Automated CI/CD pipelines let you ship new features, fix issues, and keep customers happy in less time.

Greater Quality and Reliability

Increased test automation helps ensure higher code quality and fewer defects reaching production.

Better Collaboration among Teams

Shared tools and shared dashboards enable development and operations teams to work together in a much more collaborative, unified fashion.

Cost Efficiency

With automation and scalable cloud resources, businesses can reduce costs while increasing output.

A Retail Company Gets Agile on Azure DevOps

A $1 billion mid-sized retail company was struggling with its release cycles: a release took five months or more, and frequent deployments were out of reach. To get its developers building and its operators deploying, it hired an Azure DevOps consulting firm.

What Changed:

CI/CD pipelines reduced deployment time from days to minutes

Azure Boards improved work tracking across teams

Cost savings of 30% through automation

These changes resulted in quicker update cycles, increased customer satisfaction, and quantifiable business returns.

What You Should Expect from a Leading DevOps Consulting Firm

A quality consulting partner will:

Provide an initial DevOps maturity assessment

Define clear goals and success metrics

Use best practices for Azure DevOps implementation

Offer proactive communication and project tracking

Stay updated with new tools and features from Azure

Getting Started with Azure DevOps Consulting Services

If you are looking to get started with Azure DevOps Consulting Services, here’s a short roadmap to get started.

Step 1: Understand Your Existing Process

Identify the gaps in your development and deployment processes.

Step 2: Develop a Goal-Oriented Framework

Decide what you want most: faster releases, fewer bug fixes, or better user engagement.

Share these goals with prospective providers to help you identify those that are a good fit for your needs and values.

Step 3: Research and Shortlist Providers

Compare prospective DevOps consulting companies on their experience with Azure, customer reviews and ratings, and the services they provide.

Step 4: Ask for a Consultation

Get individualized advice, ideas, and assistance. Meet with their industry experts, put your questions to them, and receive a customized proposal designed to meet your unique needs.

Step 5: Start Small

Engage in a small-scale pilot project before rolling DevOps out enterprise-wide.

In this day and age, smart growth could be the most powerful brand on earth.

Conclusion

Choosing the best Azure DevOps Consulting Services will ensure that your enterprise gets the most out of its efficiency potential and fosters a culture of innovation. Microsoft Azure DevOps Consulting Services will transform how your enterprise creates new software solutions and modernizes current software practices. Realizing that transformation requires a smart partner, one that can get you there quickly and intelligently while reducing the cost of operations.

So make sure you choose a DevOps consulting company that not only possesses extensive Azure knowledge, but also aligns with your aspirations and objectives and can help you execute on a long-term strategy. Slow down, get curious, take time to ask better questions, and make a joint commitment to a partnership that advances the work in meaningful ways.

Googling how to train your team in DevOps?

Find out how we can help you accelerate your migration to Azure with our proven Azure migration services and begin your digital transformation today.

0 notes

Text

52013l4 in Modern Tech: Use Cases and Applications

In a technology-driven world, identifiers and codes are more than just strings—they define systems, guide processes, and structure workflows. One such code gaining prominence across various IT sectors is 52013l4. Whether it’s in cloud services, networking configurations, firmware updates, or application builds, 52013l4 has found its way into many modern technological environments. This article will explore the diverse use cases and applications of 52013l4, explaining where it fits in today’s digital ecosystem and why developers, engineers, and system administrators should be aware of its implications.

Why 52013l4 Matters in Modern Tech

In the past, loosely defined build codes or undocumented system identifiers led to chaos in large-scale environments. Modern software engineering emphasizes observability, reproducibility, and modularization. Codes like 52013l4:

Help standardize complex infrastructure.

Enable cross-team communication in enterprises.

Create a transparent map of configuration-to-performance relationships.

Thus, 52013l4 isn’t just a technical detail—it’s a tool for governance in scalable, distributed systems.

Use Case 1: Cloud Infrastructure and Virtualization

In cloud environments, maintaining structured builds and ensuring compatibility between microservices is crucial. 52013l4 may be used to:

Tag versions of container images (like Docker or Kubernetes builds).

Mark configurations for network load balancers operating at Layer 4.

Denote system updates in CI/CD pipelines.

Cloud providers like AWS, Azure, or GCP often reference such codes internally. When managing firewall rules, security groups, or deployment scripts, engineers might encounter a 52013l4 identifier.

Use Case 2: Networking and Transport Layer Monitoring

Given its likely relation to Layer 4, 52013l4 becomes relevant in scenarios involving:

Firewall configuration: Specifying allowed or blocked TCP/UDP ports.

Intrusion detection systems (IDS): Tracking abnormal packet flows using rules tied to 52013l4 versions.

Network troubleshooting: Tagging specific error conditions or performance data by Layer 4 function.

For example, a DevOps team might use 52013l4 as a keyword to trace problems in TCP connections that align with a specific build or configuration version.
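To make the firewall scenario concrete, here is a minimal Python sketch of first-match rule evaluation on protocol and port. The `L4Rule` type, the `is_allowed` helper, and the example rule set are all assumptions made for illustration; real firewalls also match on addresses, interfaces, and connection state.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class L4Rule:
    """Hypothetical transport-layer rule: one protocol, one port, one verdict."""
    protocol: str  # "tcp" or "udp"
    port: int
    allow: bool


def is_allowed(rules, protocol, port, default=False):
    """Return the verdict of the first matching rule, else the default."""
    for rule in rules:
        if rule.protocol == protocol.lower() and rule.port == port:
            return rule.allow
    return default


# Example rule set, imagined as belonging to a build tagged 52013l4.
RULES = [
    L4Rule("tcp", 443, allow=True),   # HTTPS
    L4Rule("udp", 53, allow=True),    # DNS
    L4Rule("tcp", 23, allow=False),   # Telnet explicitly blocked
]

print(is_allowed(RULES, "TCP", 443))
print(is_allowed(RULES, "tcp", 23))
print(is_allowed(RULES, "udp", 9999))
```

Defaulting to deny for unmatched traffic is a design choice in this sketch, not something the identifier itself implies.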

Use Case 3: Firmware and IoT Devices

In embedded systems or Internet of Things (IoT) environments, firmware must be tightly versioned and managed. 52013l4 could:

Act as a firmware version ID deployed across a fleet of devices.

Trigger a specific set of configurations related to security or communication.

Identify rollback points during over-the-air (OTA) updates.

A smart home system, for instance, might roll out firmware_52013l4.bin to thermostats or sensors, ensuring compatibility and stable transport-layer communication.

Use Case 4: Software Development and Release Management

Developers often rely on versioning codes to track software releases, particularly when integrating network communication features. In this domain, 52013l4 might be used to:

Tag milestones in feature development (especially for APIs or sockets).

Mark integration tests that focus on Layer 4 data flow.

Coordinate with other teams (QA, security) based on shared identifiers like 52013l4.

Use Case 5: Cybersecurity and Threat Management

Security engineers use identifiers like 52013l4 to define threat profiles or update logs. For instance:

A SIEM tool might generate an alert tagged as 52013l4 to highlight repeated TCP SYN floods.

Security patches may address vulnerabilities discovered in the 52013l4 release version.

An organization’s SOC (Security Operations Center) could use 52013l4 in internal documentation when referencing a Layer 4 anomaly.

By organizing security incidents by version or layer, organizations improve incident response times and root cause analysis.
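The SYN flood example can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the packet format (a source IP plus a set of TCP flag names), the threshold, and the alert dictionary are all hypothetical, and no real SIEM product's API is shown.

```python
from collections import Counter

ALERT_TAG = "52013l4"  # label taken from the article; its meaning is assumed


def detect_syn_floods(packets, threshold):
    """Flag sources that sent more than `threshold` bare SYN packets.

    `packets` is an iterable of (source_ip, flags) pairs, where `flags`
    is a set of TCP flag names such as {"SYN"} or {"SYN", "ACK"}.
    """
    syn_counts = Counter(
        src for src, flags in packets
        if "SYN" in flags and "ACK" not in flags  # handshake opener only
    )
    return [
        {"tag": ALERT_TAG, "source": src, "syn_count": count}
        for src, count in sorted(syn_counts.items())
        if count > threshold
    ]


sample = [("10.0.0.5", {"SYN"})] * 5 + [
    ("10.0.0.9", {"SYN"}),
    ("10.0.0.9", {"SYN", "ACK"}),
]

for alert in detect_syn_floods(sample, threshold=3):
    print(alert)
```

A production detector would count over a sliding time window rather than a whole capture, but the grouping by source and the shared tag are what make the incidents easy to correlate.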

Use Case 6: Testing and Quality Assurance

QA engineers frequently simulate different network scenarios and need clear identifiers to catalog results. Here’s how 52013l4 can be applied:

In test automation tools, it helps define a specific test scenario.

Load-testing tools like Apache JMeter might reference 52013l4 configurations for transport-level stress testing.

Bug-tracking software may log issues under the 52013l4 build to isolate issues during regression testing.

What is 52013l4?

At its core, 52013l4 is an identifier, potentially used in system architecture, internal documentation, or as a versioning label in layered networking systems. Its format suggests a structured sequence: “52013” might represent a version code, build date, or feature reference, while “l4” is widely interpreted as Layer 4 of the OSI Model, the Transport Layer.

Because of this association, 52013l4 is often seen in contexts that involve network communication, protocol configuration (e.g., TCP/UDP), or system behavior tracking in distributed computing.
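To make the "code plus layer suffix" reading concrete, here is a small Python sketch that splits such an identifier into its two parts. The pattern (digits, then `l`, then a layer number from 1 to 7) is an assumption drawn from the interpretation above, not a documented format, and `parse_identifier` is a hypothetical helper.

```python
import re
from typing import NamedTuple, Optional

# The seven OSI layers, keyed by layer number.
OSI_LAYERS = {
    1: "Physical",
    2: "Data Link",
    3: "Network",
    4: "Transport",
    5: "Session",
    6: "Presentation",
    7: "Application",
}


class ParsedId(NamedTuple):
    code: str        # the numeric prefix, e.g. "52013"
    layer: int       # the OSI layer number from the suffix
    layer_name: str  # human-readable layer name


# Assumed shape: one or more digits, the letter "l", then a single layer digit.
_PATTERN = re.compile(r"^(?P<code>\d+)[lL](?P<layer>[1-7])$")


def parse_identifier(identifier: str) -> Optional[ParsedId]:
    """Split an identifier like '52013l4'; return None if it does not fit the assumed shape."""
    match = _PATTERN.match(identifier.strip())
    if match is None:
        return None
    layer = int(match.group("layer"))
    return ParsedId(match.group("code"), layer, OSI_LAYERS[layer])
```

Calling `parse_identifier("52013l4")` yields the code `"52013"` and the layer name `"Transport"`; anything that does not fit the assumed shape returns `None` rather than a guess.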

FAQs About 52013l4 Applications

Q1: What kind of systems use 52013l4? Ans. 52013l4 is commonly used in cloud computing, networking hardware, application development environments, and firmware systems. It's particularly relevant in Layer 4 monitoring and version tracking.

Q2: Is 52013l4 an open standard? Ans. No, 52013l4 is not a formal standard like HTTP or an ISO specification. It’s more likely an internal or semi-standardized identifier used in technical implementations.

Q3: Can I change or remove 52013l4 from my system? Ans. Only if you fully understand its purpose. Arbitrarily removing references to 52013l4 without context can break dependencies or configurations.

Conclusion

As modern technology systems grow in complexity, having clear identifiers like 52013l4 ensures smooth operation, reliable communication, and maintainable infrastructures. From cloud orchestration to embedded firmware, 52013l4 plays a quiet but critical role in linking performance, security, and development efforts. Understanding its uses and applying it strategically can streamline operations, improve response times, and enhance collaboration across your technical teams.

The Most Underrated Tech Careers No One Talks About (But Pay Well)

Published by Prism HRC – Leading IT Recruitment Agency in Mumbai

Let’s be real. When people say “tech job,” most of us instantly think of software developers, data scientists, or full-stack engineers.

But here's the thing: tech is way deeper than just coding roles.

There’s a whole world of underrated, lesser-known tech careers that are not only in high demand in 2025 but also pay surprisingly well, sometimes even more than the jobs people brag about on LinkedIn.

Whether you’re tired of following the herd or just want to explore offbeat (but profitable) options, this is your roadmap to smart career choices that don’t get the spotlight — but should.

1. Technical Writer

Love explaining things clearly? Got a thing for structure and detail? You might be sitting on one of the most overlooked goldmines in tech.

What they do: Break down complex software, tools, and systems into user-friendly documentation, manuals, tutorials, and guides.

Why it’s underrated: People underestimate writing. But companies are paying top dollar to folks who can explain their tech to customers and teams.

Skills:

Writing clarity

Markdown, GitHub, API basics

Tools like Notion, Confluence, and Snagit

Average Salary: ₹8–18 LPA (mid-level, India)

2. DevOps Engineer

Everyone talks about developers, but DevOps folks are the ones who actually make sure your code runs smoothly from deployment to scaling.

What they do: Bridge the gap between development and operations. Automate, monitor, and manage infrastructure.

Why it’s underrated: It’s not flashy, but this is what keeps systems alive. DevOps engineers are like the emergency room doctors of tech.

Skills:

Docker, Jenkins, Kubernetes

Cloud platforms (AWS, Azure, GCP)

CI/CD pipelines

Average Salary: ₹10–25 LPA

3. UI/UX Researcher

Designers get the spotlight, but researchers are the ones shaping how digital products actually work for people.

What they do: Conduct usability tests, analyze user behavior, and help design teams create better products.

Why it’s underrated: It's not about drawing buttons. It's about knowing how users think, and companies pay big for those insights.

Skills:

Research methods

Figma, heatmaps, analytics tools

Empathy and communication

Average Salary: ₹7–18 LPA

4. Site Reliability Engineer (SRE)

A hybrid of developer and operations wizard. SREs keep systems reliable, scalable, and disaster-proof.

What they do: Design fail-safe systems, ensure uptime, and prepare for worst-case tech scenarios.

Why it’s underrated: It’s a high-responsibility, high-reward role. Most people don’t realize how crucial this is until something crashes.

Skills:

Monitoring (Prometheus, Grafana)

Cloud & infrastructure knowledge

Scripting (Shell, Python)

Average Salary: ₹15–30 LPA

5. Product Analyst

If you're analytical but not super into coding, this role is the perfect balance of tech, data, and strategy.

What they do: Track user behavior, generate insights, and help product teams make smarter decisions.

Why it’s underrated: People don’t realize how data-driven product decisions are. Analysts who can turn numbers into narratives are game-changers.

Skills:

SQL, Excel, Python (basics)

A/B testing

Tools like Mixpanel, Amplitude, GA4

Average Salary: ₹8–20 LPA

6. Cloud Solutions Architect (Entry-Mid Level)

Everyone knows cloud is booming, but few realize how many roles exist that don’t involve hardcore backend coding.

What they do: Design and implement cloud-based solutions for companies across industries.

Why it’s underrated: People assume you need 10+ years of experience. You don’t. Get certified, build projects, and you’re in.

Skills:

AWS, Azure, or GCP

Virtualization, network design

Architecture mindset

Average Salary: ₹12–22 LPA (entry to mid-level)

Prism HRC’s Take

At Prism HRC, we’ve seen candidates with these lesser-known skills land incredible offers, often outpacing their peers who went the “mainstream” route.

In fact, hiring managers now ask us for “hybrid profiles”: people who can write documentation and automate deployment, or who blend design sense with behavioral insight.

Your edge in 2025 isn’t just what you know; it’s knowing where to look.

Before you go

If you’re tired of chasing the same roles as everyone else or feel stuck trying to “become a developer,” it’s time to zoom out.

These underrated careers are less crowded, more in demand, and often more stable.

Start learning. Build a project. Apply smartly. And if you need guidance?

Prism HRC is here to help you carve a unique path and own it. Based in Gorai-2, Borivali West, Mumbai Website: www.prismhrc.com Instagram: @jobssimplified LinkedIn: Prism HRC

#underratedtechjobs#hiddenjobsintech#techcareers2025#UIUXresearcher#PrismHRC#BestITRecruitmentAgencyinMumbai#midleveltechjobs#noncodingtechroles#technicalwriter#devopsengineer#cloudsolutionsarchitect

Is ChatGPT Easy to Use? Here’s What You Need to Know

Introduction: A Curious Beginning

I still remember the first time I stumbled upon ChatGPT; my heart raced at the thought of talking to an AI. I was a fresh-faced IT enthusiast, eager to explore how a “gpt chat” interface could transform my workflow. Yet, as excited as I was, I also felt a tinge of apprehension: Would I need to learn a new programming language? Would I have to navigate countless settings? Spoiler alert: Not at all. In this article, I’m going to walk you through my journey and show you why ChatGPT is as straightforward as chatting with a friend. By the end, you’ll know exactly “how to use ChatGPT” in your day-to-day IT endeavors, whether you’re exploring the “chatgpt app” on your phone or logging into “ChatGPT online” from your laptop.

What Is ChatGPT, Anyway?

If you’ve heard of “chat openai,” “chat gbt ai,” or “chatgpt openai,” you already know that OpenAI built this tool to mimic human-like conversation. ChatGPT, sometimes written as “Chat gpt,” is an AI-powered chatbot that understands natural language and responds with surprisingly coherent answers. With each new release (remember the buzz around “chatgpt 4”?), OpenAI has refined its approach, making the bot smarter at understanding context, coding queries, creative brainstorming, and more.

GPT Chat: A shorthand term some people use, but it really means the same as ChatGPT; it’s just another way to search for or tag the service.

ChatGPT Online vs. App: Although many refer to “chatgpt online,” you can also download the “chatgpt app” on iOS or Android for on-the-go access.

Free vs. Paid: There’s even a “chatgpt gratis” option for users who want to try without commitment, while premium plans unlock advanced features.

Getting Started: Signing Up for ChatGPT Online

1. Creating Your Account

First things first: head over to the ChatGPT website. You’ll see a prompt to sign up or log in. If you’re wondering about “chat gpt free,” you’re in luck: OpenAI offers a free tier that anyone can access (though it has usage limits). Here’s how I did it:

Enter your email (or use Google/Microsoft single sign-on).

Verify your email with the link they send, usually within seconds.

Log in, and voila, you’re in!

No complex setup, no plugin installations, just a quick email verification and you’re ready to talk to your new AI buddy. Once you’re “ChatGPT online,” you’ll land on a simple chat window: type a question, press Enter, and watch ChatGPT respond.

Navigating the ChatGPT App

While “ChatGPT online” is perfect for desktop browsing, I quickly discovered the “chatgpt app” on my phone. Here’s what stood out:

Intuitive Interface: A text box at the bottom, a menu for adjusting settings, and conversation history links on the side.

Voice Input: On some versions, you can tap the microphone icon—no need to type every query.

Seamless Sync: Whatever you do on mobile shows up in your chat history on desktop.

For example, one night I was troubleshooting a server config while waiting for a train. Instead of squinting at the station’s Wi-Fi, I opened the “chat gpt free” app on my phone, asked how to tweak a Dockerfile, and got a working snippet in seconds. That moment convinced me: whether you’re using “chatgpt online” or the “chatgpt app,” the learning curve is minimal.

Key Features of ChatGPT 4

You might have seen “chatgpt 4” trending; this iteration boasts numerous improvements over earlier versions. Here’s why it feels so effortless to use:

Better Context Understanding: Unlike older “gpt chat” bots, ChatGPT 4 remembers what you asked earlier in the same session. If you say, “Explain SQL joins,” and then ask, “How does that apply to Postgres?”, it knows you’re still talking about joins.

Multi-Turn Conversations: Complex troubleshooting often requires back-and-forth questions. I once spent 20 minutes configuring a Kubernetes cluster entirely through a multi-turn conversation.

Code Snippet Generation: Want Ruby on Rails boilerplate or a Python function? ChatGPT 4 can generate working code that requires only minor tweaks. Even if you make a mistake, simply pasting your error output back into the chat usually gets you an explanation.

These features mean that even non-developers (say, a project manager looking to automate simple Excel tasks) can learn “how to use ChatGPT” with just a few chats. And if you’re curious about “chat gbt ai” in data analytics, hop on and ask: ChatGPT can translate your plain-English requests into practical scripts.
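One way to picture why a follow-up like “How does that apply to Postgres?” still makes sense to the model: the earlier turns travel along with each new question. The Python sketch below uses the role/content message shape found in OpenAI’s chat API; the helper names are hypothetical, and the assumption that the ChatGPT app handles history exactly this way is mine, not something OpenAI documents in these terms.

```python
def new_conversation(opening_instruction):
    """Start a history with a single instruction that sets the assistant's role."""
    return [{"role": "system", "content": opening_instruction}]


def add_turn(history, user_message, assistant_reply):
    """Return a new history with one completed question/answer exchange appended."""
    return history + [
        {"role": "user", "content": user_message},
        {"role": "assistant", "content": assistant_reply},
    ]


def build_request(history, next_user_message):
    """Return everything the model would see for the next question: all prior turns plus the new one."""
    return history + [{"role": "user", "content": next_user_message}]


history = new_conversation("You are an IT mentor. Explain in beginner terms.")
history = add_turn(
    history,
    "Explain SQL joins",
    "A join combines rows from two tables based on a related column.",
)
request = build_request(history, "How does that apply to Postgres?")
```

Because `request` still contains the earlier exchange about joins, the word “that” in the follow-up has something to refer to. Start a fresh session and that context is gone.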

Tips for First-Time Users

I’ve coached colleagues on “how to use ChatGPT” in the last year, and these small tips always come in handy:

Be Specific: Instead of “Write a Python script,” try “Write a Python 3.9 script that reads a CSV file and prints row sums.” The more detail, the more precise the answer.

Ask Follow-Up Questions: Stuck on part of the response? Simply type, “Can you explain line 3 in more detail?” This keeps the flow natural—just like talking to a friend.

Use System Prompts: At the very start, you can say, “You are an IT mentor. Explain in beginner terms.” That “meta” instruction shapes the tone of every response.

Save Your Favorite Replies: If you stumble on a gem—say, a shell command sequence—star it or copy it to a personal notes file so you can reference it later.
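To show what the “Be Specific” prompt is actually asking for, here is one plausible answer to “reads a CSV file and prints row sums,” written by hand as a sketch rather than copied from ChatGPT. It assumes the file has a header row and that every remaining cell is numeric; `row_sums` is just a name I picked.

```python
import csv
import io


def row_sums(csv_text, has_header=True):
    """Return the sum of each data row in a CSV document whose cells are all numeric."""
    reader = csv.reader(io.StringIO(csv_text))
    if has_header:
        next(reader, None)  # skip the header row if there is one
    sums = []
    for row in reader:
        if not row:
            continue  # ignore blank lines
        sums.append(sum(float(cell) for cell in row))
    return sums


sample = "a,b,c\n1,2,3\n4,5,6\n"
for total in row_sums(sample):
    print(total)
```

Notice how much the vague version (“Write a Python script”) leaves open: which file format, whether there is a header, what to do with each row. Every detail you add to the prompt removes one guess.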

When a coworker asked me how to connect a React frontend to a Flask API, I typed exactly that into the chat. Within seconds, I had boilerplate code, NPM install commands, and even a short security note: “Don’t forget to add CORS headers.” That level of assistance took just three minutes, demonstrating why “gpt chat” can feel like having a personal assistant.

Common Challenges and How to Overcome Them

No tool is perfect, and ChatGPT is no exception. Here are a few hiccups you might face and how to fix them:

Occasional Inaccuracies: Sometimes, ChatGPT can confidently state something that’s outdated or just plain wrong. My trick? Cross-check any critical output. If it’s a code snippet, run it; if it’s a conceptual explanation, ask follow-up questions like, “Is this still true for Python 3.11?”

Token Limits: On the “chatgpt gratis” tier, you might hit usage caps or get slower response times. If you encounter this, try simplifying your prompt or wait a few minutes for your quota to reset. If you need more, consider upgrading to a paid plan.

Overly Verbose Answers: ChatGPT sometimes loves to explain every little detail. If that happens, just say, “Can you give me a concise version?” and it will trim down its response.

Over time, you learn how to phrase questions so that ChatGPT delivers exactly what you need quickly—no fluff, just the essentials. Think of it as learning the “secret handshake” to get premium insights from your digital buddy.

Comparing Free and Premium Options

If you search “chat gpt free” or “chatgpt gratis,” you’ll see that OpenAI’s free plan offers basic access to ChatGPT 3.5. It’s great for light users: students looking for homework help, writers brainstorming ideas, or aspiring IT pros tinkering with small scripts. Here’s a quick breakdown:

| Feature | Free Tier (ChatGPT 3.5) | Paid Tier (ChatGPT 4) |
| --- | --- | --- |
| Response Speed | Standard | Faster (priority access) |
| Daily Usage Limits | Lower | Higher |
| Access to Latest Model | ChatGPT 3.5 | ChatGPT 4 (and beyond) |
| Advanced Features (e.g., Code) | Limited | Full access |
| Chat History Storage | Shorter retention | Longer session memory |

For someone just dipping their toes into “chat openai,” the free tier is perfect. But if you’re an IT professional juggling multiple tasks and you want the speed and accuracy of “chatgpt 4,” the upgrade is usually worth it. I switched to a paid plan within two weeks of experimenting because my productivity jumped tenfold.

Real-World Use Cases for IT Careers

As an IT blogger, I’ve seen ChatGPT bridge gaps in various IT roles. Here are some examples that might resonate with you:

Software Development: Generating boilerplate code, debugging error messages, or even explaining complex algorithms in simple terms. When I first learned Docker, ChatGPT walked me through building an image, step by step.

System Administration: Writing shell scripts, explaining how to configure servers, or outlining best security practices. One colleague used ChatGPT to set up an Nginx reverse proxy without fumbling through documentation.

Data Analysis: Crafting SQL queries, parsing data using Python pandas, or suggesting visualization libraries. I once asked, “How to use chatgpt for data cleaning?” and got a concise pandas script that saved hours of work.

Project Management: Drafting Jira tickets, summarizing technical requirements, or even generating risk-assessment templates. If you ever struggled to translate technical jargon into plain English for stakeholders, ChatGPT can be your translator.

In every scenario, I’ve found that the real magic isn’t just the AI’s knowledge, but how quickly it can prototype solutions. Instead of spending hours googling or sifting through Stack Overflow, you can ask a direct question and get an actionable answer in seconds.

Security and Privacy Considerations

Of course, when dealing with AI, it’s wise to think about security. Here’s what you need to know:

Data Retention: OpenAI may retain conversation data to improve their models. Don’t paste sensitive tokens, passwords, or proprietary code you can’t risk sharing.

Internal Policies: If you work for a company with strict data guidelines, check whether sending internal data to a third-party service complies with your policy.

Public Availability: Remember that anyone else could ask ChatGPT similar questions. If you need unique, private solutions, consult official documentation or consider an on-premises AI solution.

I routinely use ChatGPT for brainstorming and general code snippets, but for production credentials or internal proprietary logic, I keep those aspects offline. That balance lets me benefit from “chatgpt openai” guidance without compromising security.
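If you want a safety net for that habit, a small scrubber can catch the obvious cases before you paste a log or config into a chat. This is a minimal Python sketch. The patterns are my own guesses at common secret shapes and will miss plenty, so treat it as a first pass and not as a guarantee.

```python
import re

# Order matters: bearer tokens are handled first so the generic rule below
# cannot consume the word "Bearer" and leave the token itself behind.
_PATTERNS = [
    # HTTP bearer tokens, e.g. "Authorization: Bearer abc.def"
    (re.compile(r"(?i)\bBearer\s+[A-Za-z0-9._~+/=-]+"), "Bearer [REDACTED]"),
    # "name = value" or "name: value" where the name ends in a secret-looking word
    (
        re.compile(r"(?i)(password|passwd|secret|token|api[_-]?key)(\s*[:=]\s*)(\S+)"),
        r"\1\2[REDACTED]",
    ),
]


def redact(text):
    """Replace values that look like credentials with a placeholder."""
    for pattern, replacement in _PATTERNS:
        text = pattern.sub(replacement, text)
    return text


print(redact("DB_PASSWORD=hunter2"))
print(redact("Authorization: Bearer abc.def-123"))
```

Anything the patterns do not recognise passes through untouched, which is exactly why a quick read-through before hitting Enter is still worth it.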

Is ChatGPT Right for You?

At this point, you might be wondering, “Okay, but is it really easy enough for me?” Here’s my honest take:

Beginners who have never written a line of code can still ask ChatGPT to explain basic IT concepts, no jargon needed.

Intermediate users can leverage the “chatgpt app” on mobile to troubleshoot on the go, turning commute time into learning time.

Advanced professionals will appreciate how ChatGPT 4 handles multi-step instructions and complex code logic.

If you’re seriously exploring a career in IT, learning “how to use ChatGPT” is almost like learning to use Google in 2005: essential. Sure, there’s a short learning curve to phrasing your prompts for maximum efficiency, but once you get the hang of it, it becomes second nature, just like typing “ls -la” into a terminal.

Conclusion: Your Next Steps

So, is ChatGPT easy to use? Absolutely. Between the intuitive “chatgpt app,” the streamlined “chatgpt online” interface, and the powerful capabilities of “chatgpt 4,” most users find themselves up and running within minutes. If you haven’t already, head over to the ChatGPT website and create your free account. Experiment with a few prompts (maybe ask it to explain “how to use chatgpt”) and see how it fits into your daily routine.

Remember:

Start simple. Ask basic questions, then gradually dive deeper.

Don’t be afraid to iterate. If an answer isn’t quite right, refine your prompt.

Keep security in mind. Never share passwords or sensitive data.

Whether you’re writing your first “gpt chat” script, drafting project documentation, or just curious how “chat gbt ai” can spice up your presentations, ChatGPT is here to help. Give it a try, and in no time, you’ll wonder how you ever managed without your AI sidekick.

Unlock Kubernetes Power with the HELM MasterClass: Your Complete Guide to Kubernetes Packaging

Managing Kubernetes applications can feel like trying to solve a Rubik’s Cube in the dark. You've got dozens of YAML files, configuration dependencies, and update issues—it's easy to get lost. That’s where Helm, the Kubernetes package manager, becomes your guiding light.

But let’s be honest: learning Helm from scratch can be overwhelming unless you’ve got the right roadmap. That’s why the HELM MasterClass: Kubernetes Packaging Manager is an absolute game-changer for developers, DevOps engineers, and anyone who wants to truly master container orchestration.

This course doesn’t just teach Helm—it empowers you to build, deploy, and manage production-grade Kubernetes applications like a pro.

What is Helm? And Why Should You Care?

Before we jump into the details of the course, let’s simplify what Helm actually does.

Helm is often called the “Yum” or “Apt” for Kubernetes. It’s a package manager that helps you define, install, and upgrade even the most complex Kubernetes applications.

Imagine writing a single command and deploying an entire app stack—database, backend, frontend, configurations—all bundled up neatly. That’s Helm in action. It takes the repetitive and error-prone YAML work and transforms it into clean, reusable packages called charts.
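The core idea behind a chart, one template plus a set of values, can be sketched in a few lines of Python. This is only an analogy: real Helm charts use Go templates with `{{ .Values.name }}` placeholders, not Python’s `string.Template`, and the manifest and values below are made up for illustration.

```python
from string import Template

# A stripped-down Deployment manifest with placeholders where a chart would use values.
DEPLOYMENT_TEMPLATE = Template("""\
apiVersion: apps/v1
kind: Deployment
metadata:
  name: $name
spec:
  replicas: $replicas
  selector:
    matchLabels:
      app: $name
  template:
    metadata:
      labels:
        app: $name
    spec:
      containers:
        - name: $name
          image: $image:$tag
""")


def render(values):
    """Fill the template from a dict of values; a missing key raises KeyError."""
    return DEPLOYMENT_TEMPLATE.substitute(values)


staging = render({"name": "web", "replicas": 1, "image": "nginx", "tag": "1.27"})
production = render({"name": "web", "replicas": 3, "image": "nginx", "tag": "1.27"})
print(production)
```

The same template produces both environments; only the values differ. That is the repetition Helm removes from hand-written YAML.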

Here’s why Helm is crucial:

✅ Simplifies Kubernetes deployments

✅ Reusable templates save hours of repetitive YAML writing

✅ Handles configuration management with grace

✅ Supports seamless updates and rollbacks

✅ It’s production-tested and cloud-native

If you’re aiming to level up in DevOps or SRE roles, Helm isn’t optional—it’s essential.

Why the HELM MasterClass is the Ultimate Training Ground

There are plenty of YouTube videos and random blog posts on Helm, but most leave you with more questions than answers.

The HELM MasterClass: Kubernetes Packaging Manager cuts through the noise. Designed to take you from complete beginner to confident chart-builder, this course delivers structured, hands-on lessons in a way that just makes sense.

Here’s what makes the course stand out:

🎯 Beginner to Advanced – Step-by-Step

Whether you’ve never used Helm before or want to fine-tune your skills, this course walks you through:

The Helm ecosystem

Creating and using Helm Charts

Templating YAML files

Managing dependencies

Using Helm in production environments

You’ll build confidence gradually—with zero jargon and plenty of real-world examples.

🧠 Real Projects, Real Practice

You don’t just watch someone else write code—you build alongside the instructor. Every module comes with practice scenarios, which means you’re learning Helm the way it’s meant to be learned: by doing.

📦 Chart Your Own Apps

One of the biggest takeaways is that you’ll learn how to package your own apps into Helm charts. This gives you true independence—you won’t have to rely on third-party charts for your deployments.

💥 Troubleshooting and Debugging

The course goes beyond theory. It shows you how to tackle real-world problems like:

Helm chart versioning conflicts

Managing values.yaml files smartly

Debugging failed deployments

Setting up CI/CD pipelines with Helm

Who Should Enroll in the HELM MasterClass?

This course is tailored for:

Kubernetes beginners who feel overwhelmed by configuration chaos

DevOps engineers aiming to optimize deployments

Site Reliability Engineers (SREs) who want stable, repeatable processes

Backend or Full-stack developers working with microservices

Cloud architects creating infrastructure as code pipelines

If you're someone looking to gain confidence in production-grade deployments, this course will become your go-to reference.

What Will You Learn?

Let’s break down the highlights of the HELM MasterClass: Kubernetes Packaging Manager so you know exactly what you’re getting:

Module 1: Introduction to Helm

Why Helm exists

Helm vs raw YAML

Installing Helm CLI

Module 2: Helm Charts Explained

What are charts?

Structure of a chart directory

Finding and using public charts

Module 3: Templating Magic

Writing your first templates

Understanding template functions

Using control structures in Helm templates

Module 4: Chart Dependencies

Managing dependent charts

requirements.yaml (Helm 2; Helm 3 declares dependencies in Chart.yaml)

Chart locking and best practices

Module 5: Custom Values and Overrides

values.yaml deep dive

Using --set flag

Merging strategies

Module 6: Production-Ready Workflows

Installing charts with CI/CD

Managing Helm releases

Rollbacks and version control

Debugging Helm deployments

Module 7: Advanced Techniques

Helm Hooks and lifecycle events

Using Helmfile and Helm Secrets

Integrating Helm with GitOps tools like ArgoCD

By the end of this course, you won’t just “use Helm”—you’ll master it.
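For a feel of what “merging strategies” in Module 5 refers to, here is a Python sketch of how override values are commonly layered on top of chart defaults. As I understand Helm’s behaviour, nested maps are merged key by key while lists are replaced wholesale. The function and sample values are illustrative and do not come from the course or from Helm’s source.

```python
import copy


def merge_values(base, override):
    """Layer `override` on top of `base`: dicts merge recursively, everything else is replaced."""
    result = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = merge_values(result[key], value)
        else:
            # Scalars and lists from the override win outright.
            result[key] = copy.deepcopy(value)
    return result


defaults = {
    "image": {"repository": "nginx", "tag": "1.25"},
    "replicaCount": 1,
    "ports": [80, 443],
}
production = {"image": {"tag": "1.27"}, "ports": [8080]}

merged = merge_values(defaults, production)
print(merged)
```

The override only had to mention the tag and the ports; the repository and replica count carry over from the defaults. The list behaviour is the usual surprise: `ports` ends up as just `[8080]`, not the two lists combined.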

What Makes This Course Special?

With the fast-moving Kubernetes ecosystem, having up-to-date knowledge is key. This course is updated regularly to reflect the latest Helm versions and Kubernetes updates. You’re not learning outdated material—you’re gaining cutting-edge skills.

Plus, it’s all broken down in plain language. No complex acronyms or assumptions. Just clear, hands-on learning.

Benefits of Learning Helm

Still wondering if Helm is worth the effort? Here are some tangible benefits:

🚀 Faster Deployments

Helm reduces manual YAML editing, so you ship faster with fewer errors.

🔁 Easy Rollbacks

Made a mistake in production? Helm makes rolling back simple with one command.

🧩 Modularity

Break your apps into reusable modules. Perfect for microservices architecture.

🔐 Security and Secrets Management

Keep sensitive values out of your templates: pass them in through values files that stay out of version control, or encrypt them with the Helm Secrets plugin. Say goodbye to hard-coded passwords.

☁️ Cloud-Native Ready

All major cloud platforms (AWS, Azure, GCP) support Helm as part of their Kubernetes tooling. That means you're gaining a skill that translates globally.

What Students Say

People who’ve taken this course are seeing the impact in their careers and projects:

“I finally understand how Helm works—this course explains the ‘why’ and not just the ‘how.’” – Sarah T., DevOps Engineer

“I deployed a production-grade app using Helm in just a day after completing this course. Incredible!” – Rajiv M., Cloud Architect

“Clear, concise, and packed with real examples. Easily one of the best Kubernetes-related courses I’ve taken.” – Lisa G., Software Developer

Final Thoughts: Time to Master Kubernetes the Smart Way

Kubernetes is powerful, but it’s not exactly beginner-friendly. Helm bridges that gap and makes managing Kubernetes apps approachable—even enjoyable.

And if you’re serious about mastering Helm, there’s no better way than diving into the HELM MasterClass: Kubernetes Packaging Manager.

This course is not just a tutorial. It’s a transformation. From struggling with YAML to deploying apps effortlessly, your Kubernetes journey gets a major upgrade.

Challenges with Enterprise AI Integration—and How to Overcome Them

Enterprise AI is no longer experimental. It’s operational. From predictive maintenance and process optimization to hyper-personalized experiences, large organizations are investing heavily in AI to unlock productivity and long-term advantage. But what looks promising in a POC often meets resistance, complexity, or underperformance at enterprise scale.

Integrating AI into core systems, workflows, and decision-making layers isn’t about layering models—it’s about aligning technology with infrastructure, data, compliance, and business priorities. And for most enterprises, that’s where the friction starts.

Here’s a breakdown of the most common challenges businesses face during AI integration—and how the most resilient enterprises are solving them:

1. Legacy Systems and Data Silos

Enterprise environments rarely start from scratch. Legacy systems run mission-critical processes. Departmental silos own fragmented data. And AI models often struggle to integrate with monolithic, outdated tech stacks.

What works:

API-first strategies to create interoperability between AI modules and legacy systems—without deep refactoring.

Building a centralized data fabric that unifies siloed data stores and provides real-time access across teams.

Introducing AI middleware layers that can abstract complexity and serve as a modular intelligence layer over existing infrastructure.

Read More: Can AI Agents Be Integrated With Existing Enterprise Systems

2. Model Governance, Compliance, and Explainability

In industries like finance, healthcare, and insurance, it’s not just about accuracy. It’s about transparency, auditability, and the ability to explain how a decision was made. Black-box AI can trigger compliance flags and stall adoption.

What works:

Implementing ModelOps frameworks to standardize model lifecycle management—training, deployment, monitoring, and retirement.

Embedding explainable AI (XAI) principles into model development to ensure decisions can be interpreted by stakeholders and auditors.

Running scenario testing and audit trails to meet regulatory standards and reduce risk exposure.

3. Organizational Readiness and Change Management

AI isn’t just a technology shift—it’s a culture shift. Teams need to trust AI outcomes, understand when to act on them, and adapt workflows. Without internal buy-in, AI gets underused or misused.

What works:

Creating AI playbooks and training paths for business users, not just data scientists.

Setting up cross-functional AI councils to govern use cases, ethical boundaries, and implementation velocity.

Demonstrating quick wins through vertical-specific pilots that solve visible business problems and show ROI.

4. Data Privacy, Security, and Cross-Border Compliance

AI initiatives can get stuck navigating enterprise security policies, data residency requirements, and legal obligations across jurisdictions. Especially when models require access to sensitive, proprietary, or regulated data.

What works:

Leveraging federated learning for training on distributed data sources without moving the data.

Anonymizing sensitive fields and encrypting data both at rest and in transit.

Working with cloud providers with built-in compliance tools for HIPAA, GDPR, PCI DSS, etc., to reduce overhead.
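The federated learning point can be made concrete with its simplest aggregation step, often called federated averaging: each site trains locally and shares only model weights, which a coordinator combines in proportion to how much data each site holds. The Python sketch below treats a model as a flat list of floats and skips the training itself; the function name and sample numbers are illustrative.

```python
def federated_average(client_updates):
    """Combine per-client weights into one model, weighting each client by its sample count.

    client_updates: list of (weights, num_samples) pairs, where weights is a list of floats.
    """
    total_samples = sum(num_samples for _, num_samples in client_updates)
    if total_samples == 0:
        raise ValueError("at least one client must contribute samples")
    size = len(client_updates[0][0])
    averaged = [0.0] * size
    for weights, num_samples in client_updates:
        if len(weights) != size:
            raise ValueError("all clients must send weight vectors of the same length")
        for i, weight in enumerate(weights):
            averaged[i] += weight * num_samples / total_samples
    return averaged


# Two sites: the second holds three times as much data, so it pulls the average toward itself.
global_weights = federated_average([
    ([1.0, 2.0], 1),
    ([3.0, 4.0], 3),
])
print(global_weights)
```

Only the weight vectors and sample counts cross the boundary between sites; the underlying records never leave their jurisdiction, which is the property that matters for data residency.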

5. Scalability and Performance Under Load

Many AI models perform well in test environments but start failing at production scale, when latency requirements, real-time processing, or large numbers of concurrent users push the system.

What works:

Deploying models in containerized environments (Kubernetes, Docker) to allow elastic scaling based on load.

Optimizing inference speed using GPU acceleration, edge computing, or lightweight models like DistilBERT instead of full-scale LLMs.

Monitoring model performance metrics in real-time, including latency, failure rates, and throughput, as part of observability stacks.
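As a sketch of the three metrics named in the last point, the Python below summarizes one monitoring window of inference requests. It assumes each request is recorded as a latency in milliseconds plus a success flag, and it uses the nearest-rank method for the percentile; in a real observability stack these figures would normally come from the metrics backend, not hand-rolled code.

```python
def summarize_window(requests, window_seconds, percentile=95):
    """Summarize a monitoring window.

    requests: list of (latency_ms, succeeded) pairs observed during the window.
    Returns the chosen latency percentile, the failure rate, and requests per second.
    """
    count = len(requests)
    if count == 0:
        return {"latency_ms": None, "failure_rate": 0.0, "throughput_rps": 0.0}
    latencies = sorted(latency for latency, _ in requests)
    # Nearest-rank percentile: the smallest value with at least `percentile`% of samples at or below it.
    rank = max(1, (percentile * count + 99) // 100)
    failures = sum(1 for _, succeeded in requests if not succeeded)
    return {
        "latency_ms": latencies[rank - 1],
        "failure_rate": failures / count,
        "throughput_rps": count / window_seconds,
    }


# 100 requests over 50 seconds; every tenth one fails.
window = [(float(i), i % 10 != 0) for i in range(1, 101)]
print(summarize_window(window, window_seconds=50))
```

Tracking a high percentile rather than the mean matters here, because an average can look healthy while a meaningful share of users wait far longer.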

6. Misalignment Between Tech and Business

Even sophisticated models can fail if they don’t directly support core business goals. Enterprises that approach AI purely from an R&D angle often find themselves with outputs that aren’t actionable.

What works:

Building use-case-first roadmaps, where AI initiatives are directly linked to OKRs, cost savings, or growth targets.

Running joint design sprints between AI teams and business units to co-define the problem and solution scope.

Measuring success not by model metrics (like accuracy), but by business outcomes (like churn reduction or claim processing time).

Key Takeaway

Enterprise AI integration isn’t just about building smarter models—it’s about aligning people, data, governance, and infrastructure. The enterprises that are seeing real returns are the ones that solve upstream complexity early: breaking silos, standardizing operations, and building trust across the board. AI doesn’t deliver returns in isolation—it scales when it’s embedded where decisions happen.

Kubernetes vs. Traditional Infrastructure: Why Clusters and Pods Win

In today’s fast-paced digital landscape, agility, scalability, and reliability are not just nice-to-haves—they’re necessities. Traditional infrastructure, once the backbone of enterprise computing, is increasingly being replaced by cloud-native solutions. At the forefront of this transformation is Kubernetes, an open-source container orchestration platform that has become the gold standard for managing containerized applications.

But what makes Kubernetes a superior choice compared to traditional infrastructure? In this article, we’ll dive deep into the core differences, and explain why clusters and pods are redefining modern application deployment and operations.

Understanding the Fundamentals

Before drawing comparisons, it’s important to clarify what we mean by each term:

Traditional Infrastructure

This refers to monolithic, VM-based environments typically managed through manual or semi-automated processes. Applications are deployed on fixed servers or VMs, often with tight coupling between hardware and software layers.

Kubernetes

Kubernetes abstracts away infrastructure by using clusters (groups of nodes) to run pods (the smallest deployable units of computing). It automates deployment, scaling, and operations of application containers across clusters of machines.

Key Comparisons: Kubernetes vs Traditional Infrastructure

| Feature | Traditional Infrastructure | Kubernetes |
| --- | --- | --- |
| Scalability | Manual scaling of VMs; slow and error-prone | Auto-scaling of pods and nodes based on load |
| Resource Utilization | Inefficient due to over-provisioning | Efficient bin-packing of containers |
| Deployment Speed | Slow and manual (e.g., SSH into servers) | Declarative deployments via YAML and CI/CD |
| Fault Tolerance | Rigid failover; high risk of downtime | Self-healing, with automatic pod restarts and rescheduling |
| Infrastructure Abstraction | Tightly coupled; app knows about the environment | Decoupled; Kubernetes abstracts compute, network, and storage |
| Operational Overhead | High; requires manual configuration, patching | Low; centralized, automated management |
| Portability | Limited; hard to migrate across environments | High; deploy to any Kubernetes cluster (cloud, on-prem, hybrid) |
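To make the "bin-packing" row concrete, here is a toy sketch in Python. It is not the real kube-scheduler, which filters and scores nodes rather than running this heuristic; it is a plain first-fit-decreasing packer, and the workload names, CPU requests, and node size are invented for illustration.

```python
from typing import Dict, List


def first_fit_decreasing(requests_millicores: Dict[str, int],
                         node_capacity: int) -> List[List[str]]:
    """Pack workloads onto nodes using the first-fit-decreasing heuristic.

    Illustration only: the real scheduler uses filtering and scoring plugins.
    """
    nodes: List[List[str]] = []  # workload names placed on each node
    free: List[int] = []         # remaining CPU (millicores) on each node

    # Place the largest requests first; this tends to leave fewer gaps.
    ordered = sorted(requests_millicores.items(),
                     key=lambda kv: kv[1], reverse=True)
    for name, cpu in ordered:
        if cpu > node_capacity:
            raise ValueError(f"{name} does not fit on any node")
        for i, remaining in enumerate(free):
            if cpu <= remaining:
                nodes[i].append(name)
                free[i] -= cpu
                break
        else:
            # No existing node has room, so open a new one.
            nodes.append([name])
            free.append(node_capacity - cpu)
    return nodes


# Hypothetical workloads with CPU requests in millicores.
workloads = {"api": 1500, "worker": 1200, "cache": 800,
             "cron": 300, "metrics": 200}
packed = first_fit_decreasing(workloads, node_capacity=2000)
print(packed)  # [['api', 'cron', 'metrics'], ['worker', 'cache']]
```

Five workloads that might each have had a dedicated VM fit on two 2000-millicore nodes here, which is the utilization gain the table refers to.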

Why Clusters and Pods Win

1. Decoupled Architecture

Traditional infrastructure often binds application logic tightly to specific servers or environments. Kubernetes promotes microservices and containers, isolating app components into pods. These can run anywhere without knowing the underlying system details.

2. Dynamic Scaling and Scheduling

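In a Kubernetes cluster, workloads can scale automatically based on real-time demand. The Horizontal Pod Autoscaler (HPA) changes the number of pod replicas in response to metrics such as CPU utilization, and the Cluster Autoscaler adds or removes nodes when pods cannot be scheduled or nodes sit underused. Matching this in a traditional setup usually means custom scripting or manual provisioning.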
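The HPA's core rule is documented as desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric), with no change when the ratio is within a tolerance of 1.0 (0.1 by default). The Python sketch below applies that rule; the function name and the min/max defaults are assumptions for illustration, and the real controller also handles missing metrics, pod readiness, and stabilization windows.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified version of the HPA scaling rule."""
    ratio = current_metric / target_metric
    # Within the tolerance band the autoscaler leaves the workload alone.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, wanted))


print(desired_replicas(4, current_metric=90, target_metric=60))   # 6
print(desired_replicas(4, current_metric=63, target_metric=60))   # 4
print(desired_replicas(6, current_metric=30, target_metric=60))   # 3
print(desired_replicas(8, current_metric=120, target_metric=60))  # 10
```

The last call would ask for 16 replicas but is capped at max_replicas. If the cluster has no room for the extra pods, that is the point where the Cluster Autoscaler would add nodes.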

3. Resilience and Self-Healing

Kubernetes watches your workloads continuously. If a container crashes, the kubelet restarts it in place; if a node fails, pods managed by a controller such as a Deployment are recreated on healthy nodes. This built-in self-healing reduces downtime and the manual intervention needed to recover from failures.
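Self-healing comes from a control loop that keeps comparing desired state with observed state and acting on the difference. The Python sketch below is a toy model of that idea, not Kubernetes code: the Cluster class, the pod naming, and the least-loaded placement rule are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Cluster:
    nodes: Dict[str, bool]                              # node name -> healthy?
    pods: Dict[str, str] = field(default_factory=dict)  # pod name -> node name
    next_id: int = 0


def reconcile(cluster: Cluster, desired_replicas: int) -> List[str]:
    """Drive the observed pod count toward the desired count."""
    actions: List[str] = []

    # Pods on failed nodes are treated as lost.
    for pod, node in list(cluster.pods.items()):
        if not cluster.nodes.get(node, False):
            del cluster.pods[pod]
            actions.append(f"lost {pod} on {node}")

    healthy = sorted(n for n, ok in cluster.nodes.items() if ok)
    if not healthy:
        return actions  # nowhere to place replacements yet

    # Start replacements on the least-loaded healthy node.
    while len(cluster.pods) < desired_replicas:
        target = min(
            healthy,
            key=lambda n: sum(1 for v in cluster.pods.values() if v == n),
        )
        name = f"web-{cluster.next_id}"
        cluster.next_id += 1
        cluster.pods[name] = target
        actions.append(f"start {name} on {target}")
    return actions


cluster = Cluster(nodes={"node-a": True, "node-b": True})
reconcile(cluster, desired_replicas=3)   # initial rollout across both nodes

cluster.nodes["node-a"] = False          # simulate a node failure
recovery = reconcile(cluster, desired_replicas=3)
print(recovery)
print(cluster.pods)
```

After the simulated failure, the loop replaces the two pods that were on node-a, so three replicas are running again, all on node-b. Nobody had to log in and restart anything, which is the point of the pattern.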

4. Faster, Safer Deployments

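With declarative configurations and GitOps workflows, teams can deploy with speed and confidence. Rolling updates and rollbacks are built into Deployments. Canary and blue/green releases are not first-class objects, but they can be assembled from labels and Services or delegated to add-ons such as Argo Rollouts or Flagger, which streamlines what’s often a risky manual process in traditional environments.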
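To show why these deployments are safer, here is a rough Python simulation of a rolling update, the default Deployment strategy. The maxSurge and maxUnavailable settings are real Deployment fields; everything else is a simplification, in particular the assumption that new pods become ready instantly and the use of absolute numbers rather than percentages.

```python
from typing import List, Tuple


def rolling_update_steps(replicas: int,
                         max_surge: int = 1,
                         max_unavailable: int = 0) -> List[Tuple[int, int]]:
    """Return (old_pods, new_pods) after each step of a simplified rollout."""
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("max_surge and max_unavailable cannot both be 0")

    old, new = replicas, 0
    steps = [(old, new)]
    while old > 0 or new < replicas:
        # Scale up: total pods may not exceed replicas + max_surge.
        can_add = min(replicas - new, replicas + max_surge - (old + new))
        new += max(can_add, 0)
        # Scale down: running pods may not drop below
        # replicas - max_unavailable.
        can_remove = min(old, (old + new) - (replicas - max_unavailable))
        old -= max(can_remove, 0)
        steps.append((old, new))
    return steps


print(rolling_update_steps(3, max_surge=1, max_unavailable=0))
# [(3, 0), (2, 1), (1, 2), (0, 3)]
print(rolling_update_steps(3, max_surge=0, max_unavailable=1))
# [(3, 0), (2, 0), (1, 1), (0, 2), (0, 3)]
```

With max_unavailable=0 the rollout never drops below three running pods, at the cost of briefly running a fourth. With max_surge=0 it never exceeds three, at the cost of temporarily running with two.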

5. Unified Management Across Environments

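Whether you're deploying to AWS, Azure, GCP, or on-premises, Kubernetes provides a consistent API and toolchain. Provider-specific pieces such as load balancers, storage classes, and identity integration still differ, but the application manifests themselves need far less re-engineering for each environment.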

Addressing Common Concerns

“Kubernetes is too complex.”

Yes, Kubernetes has a learning curve. But its complexity replaces ad hoc operational work with standardized automation. Tools like Helm and Argo CD, and managed services (e.g., GKE, EKS, AKS), help simplify the onboarding process.

“Traditional infra is more secure.”

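Security in traditional environments often depends on network perimeter controls. Kubernetes offers finer-grained building blocks: RBAC for API access, NetworkPolicies for restricting pod-to-pod traffic, and integration with service meshes like Istio for granular, zero-trust-style policies. These controls have to be configured deliberately, since pods accept traffic from any other pod by default.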

Real-World Impact

Companies like Spotify, Shopify, and Airbnb have migrated from legacy infrastructure to Kubernetes to:

Reduce infrastructure costs through efficient resource utilization

Accelerate development cycles with DevOps and CI/CD

Enhance reliability through self-healing workloads

Enable multi-cloud strategies and avoid vendor lock-in

Final Thoughts

Kubernetes is more than a trend—it’s a foundational shift in how software is built, deployed, and operated. While traditional infrastructure served its purpose in a pre-cloud world, it can’t match the agility and scalability that Kubernetes offers today.

Clusters and pods don’t just win—they change the game.

Mercans Tech Leaders Empower Digital Transformation in Global Payroll with Stateless Architecture

As global businesses scale operations across borders, managing payroll has become an increasingly complex challenge. Mercans, a global leader in payroll technology and Employer of Record (EOR) services, is changing that narrative with its cutting-edge platform and forward-thinking strategy. In a recent discussion, Mercans’ Global Head of Engineering Eero Plato and Chief Technology Officer Andre Voolaid offered an inside look into how the company is revolutionizing global payroll with automation, AI, and a unified technology infrastructure.

Operating in more than 160 countries, Mercans’ platform, HR Blizz, is engineered to streamline global payroll processes while meeting the highest standards of compliance and data security. With a single-codebase architecture and seamless integrations with major HCM providers like SAP, Dayforce, and Workday, Mercans enables multinational organizations to manage payroll with consistency and speed—regardless of geography.

Thinking Global from the Ground Up

What differentiates Mercans is its foundational design philosophy. The company has deliberately moved away from building country-specific payroll solutions and instead focuses on a global-first model.

“Our technology is built on three core principles,” explains Plato. “We seek country-specific exceptions rather than delivering country-specific solutions. This perspective allows us to build a global solution and focus on the differentiators between countries.”

This approach simplifies expansion for international companies, allowing them to operate without setting up local payroll infrastructures in each market. The platform’s cloud-native design ensures scalability and robust performance while maintaining end-to-end encryption and AI-based data anonymization to protect employee information.

Automation and AI at the Heart of Payroll

Mercans is not just digitizing payroll; it is automating it. A major innovation on the roadmap is instant payroll calculation. By embedding AI and machine learning (ML) directly into the core platform, the company aims to have payroll updates happen in real time.

“One key change is making payroll calculations instant,” says Voolaid. “When an employee’s data is updated, the system automatically recalculates the payroll.”

“So essentially, it simplifies the long and complex payroll process as much as possible,” he adds. “These are our goals. And, additionally, AI and ML, these areas play an increasing role, particularly in automated payroll validations and anomaly detections.”

The addition of AI and ML doesn’t just boost efficiency—it enhances accuracy and enables predictive analytics, giving HR teams the tools they need to make more informed decisions about their workforce.

Staying Ahead in Compliance and Security

Global payroll comes with the heavy responsibility of regulatory compliance. Mercans manages this through a unique blend of technology and local expertise. With a globally distributed workforce possessing in-depth local knowledge, the company can swiftly adapt to evolving rules and tax laws.

“Compliance is the basis of payroll, so local compliance,” notes Voolaid. “We navigate these challenges through local prowess and dedicated compliance mechanisms in the business.”

This strategy is supported by a specialized compliance certification program, ensuring accuracy across 100+ countries. Combined with strict data governance policies and access controls, Mercans helps its clients maintain compliance while reducing administrative burden.

Scaling Smart with Talent and Cloud

As part of its growth strategy, Mercans is expanding its presence in North America, while strengthening its foothold in Western Europe, Latin America, and the Middle East. To support this growth, the company has transitioned its infrastructure to a Kubernetes-powered cloud-native environment, offering both private and public cloud flexibility.

The company is also focused on attracting top engineering talent by offering innovative, technically challenging projects.

“The key drivers to retain that talent is to offer developers technically challenging and innovative projects especially,” says Plato. “It’s with top talent that it is not so much, maybe the soft aspects of the job. It’s rather like how challenging and technically comprehensive the solutions that we are delivering are.”

Looking Ahead

With its bold vision, unified platform, and commitment to innovation, Mercans is setting a new standard for global payroll technology. As it continues to evolve into a full-scale technology provider, the company remains focused on its mission to simplify and secure payroll for businesses worldwide—one real-time calculation at a time.

#HR Tech News#HR Tech Articles#Human Resource Trends#Human Resource Current Updates#HR Tech#HR Technology

Jenkins vs GitLab CI/CD: Key Differences Explained

In the world of DevOps and software automation, choosing the right CI/CD tool can significantly impact your team's productivity and the efficiency of your development pipeline. Two of the most popular tools in this space are Jenkins and GitLab CI/CD. While both are designed to automate the software delivery process, they differ in structure, usability, and integration capabilities. Below is a detailed look at the differences between Jenkins and GitLab CI/CD, helping you make an informed decision based on your project requirements.

1. Core integration and setup

Jenkins is a stand-alone open-source automation server that requires you to set up everything manually, including integrations with source control systems, plugins, and build environments. This setup can be powerful but complex, especially for smaller teams or those new to CI/CD tools. GitLab CI/CD, on the other hand, comes as an integrated part of the GitLab platform. From code repositories to issue tracking and CI/CD pipelines, everything is included in one interface. This tight integration makes it more user-friendly and easier to manage from day one.

2. Plugin dependency vs built-in tools

One of Jenkins’ biggest strengths, and weaknesses, is its plugin ecosystem. With over 1,800 plugins available, Jenkins allows deep customization and support for almost any development environment. However, this heavy reliance on plugins also means users must spend time managing compatibility, updates, and security. In contrast, GitLab CI/CD provides most essential features out of the box, reducing the need for third-party plugins. Whether you need container support, Auto DevOps, or security testing, GitLab includes these tools natively, making maintenance much easier.

3. Pipeline configuration methods

Jenkins pipelines can be configured using a web interface or through a Jenkinsfile written in a Groovy-based DSL, in either declarative or scripted syntax. While powerful, this approach requires familiarity with Jenkins syntax and structure, which can add complexity to your workflow. GitLab CI/CD uses a YAML-based file named .gitlab-ci.yml placed in the root of your repository. This file is easy to read and version-controlled, allowing teams to manage pipeline changes along with their codebase. The simplicity of YAML makes GitLab pipelines more accessible, especially to developers with limited DevOps experience.
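To give a feel for how little structure a .gitlab-ci.yml needs, the Python sketch below models a minimal pipeline as a dictionary and works out the order in which jobs would run. The stages, stage, and script keys are real GitLab CI/CD keywords; the job names and make targets are hypothetical, and the sketch ignores other reserved top-level keys such as variables or default.

```python
from typing import Dict, List, Tuple

# Roughly what a small .gitlab-ci.yml looks like once parsed.
pipeline: Dict[str, object] = {
    "stages": ["build", "test", "deploy"],
    "compile": {"stage": "build", "script": ["make build"]},
    "unit-tests": {"stage": "test", "script": ["make test"]},
    "lint": {"stage": "test", "script": ["make lint"]},
    "release": {"stage": "deploy", "script": ["make deploy"]},
}


def jobs_by_stage(config: Dict[str, object]) -> List[Tuple[str, List[str]]]:
    """Group jobs under their stage, in the order the stages are declared."""
    stages = list(config["stages"])
    plan: Dict[str, List[str]] = {stage: [] for stage in stages}
    for name, job in config.items():
        if name == "stages":
            continue  # not a job definition
        plan[job["stage"]].append(name)
    return [(stage, sorted(plan[stage])) for stage in stages]


for stage, jobs in jobs_by_stage(pipeline):
    print(f"{stage}: {', '.join(jobs)}")
# build: compile
# test: lint, unit-tests
# deploy: release
```

Stages run one after another, while jobs in the same stage (lint and unit-tests here) can run in parallel on available runners. Expressing the same flow in a Jenkinsfile is just as possible, but it requires learning the pipeline DSL first.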

4. User interface and experience

Jenkins’ UI is considered outdated by many users, with limited design improvements over the years. While functional, it’s not the most intuitive experience, especially when managing complex builds and pipeline jobs. GitLab CI/CD offers a modern and clean interface, providing real-time pipeline status, logs, and visual job traces directly from the dashboard. This improves transparency and makes debugging or monitoring easier for teams.

5. Scalability and performance

Jenkins can scale to support complex builds and large organizations, especially with the right infrastructure. However, this flexibility comes at a cost: teams are responsible for maintaining, upgrading, and scaling Jenkins nodes manually. GitLab CI/CD supports scalable runners that can be configured for distributed builds. It also works well with Kubernetes and cloud environments, enabling easier scalability without extensive manual setup.

6. Community and support

Jenkins, being older, has a large community and long-standing documentation. This makes it easier to find help or solutions for common problems. GitLab CI/CD, though newer, benefits from active development and enterprise support, with frequent updates and a growing user base.

To explore the topic in more depth, check out this guide on the differences between Jenkins and GitLab CI/CD, which breaks down the tools in more technical detail.