#kubernetes overview


Full Stack Development: Using DevOps and Agile Practices for Success

In today’s fast-paced and highly competitive tech industry, demand for Full Stack Developers keeps rising. These versatile professionals combine the skills needed to handle both the front end and the back end of an application. To keep pace with the changing demands of modern software development, many Full Stack Developers are turning to DevOps and Agile practices. In this guide, we explore how combining Full Stack Development with DevOps and Agile methodologies leads to faster, more reliable software delivery.

Full Stack Development: A Brief Overview

Full Stack Development refers to the practice of working on all aspects of a software application, from the user interface (UI) and user experience (UX) on the front end to server-side scripting, databases, and infrastructure on the back end. It requires a broad skill set and the ability to handle various technologies and programming languages.

The Significance of DevOps and Agile Practices

The environment for software development has changed significantly in recent years. The adoption of DevOps and Agile practices has become a cornerstone of modern software development. DevOps focuses on automating and streamlining the development and deployment processes, while Agile methodologies promote collaboration, flexibility, and iterative development. Together, they offer a powerful approach to software development that enhances efficiency, quality, and project success. In this blog, we will delve into the following key areas:

Understanding Full Stack Development

Defining Full Stack Development

We will start by defining Full Stack Development and elucidating its pivotal role in creating end-to-end solutions. Full Stack Developers are akin to the Swiss Army knives of the development world, capable of handling every aspect of a project.

Key Responsibilities of a Full Stack Developer

We will explore the multifaceted responsibilities of Full Stack Developers, from designing user interfaces to managing databases and everything in between. Understanding these responsibilities is crucial to grasping the challenges they face.

The Importance of DevOps in Full Stack Development

Unpacking DevOps

DevOps is a set of principles that aims to bridge the divide between development and operations teams. We will look at what DevOps entails, why it matters in Full Stack Development, and the benefits of embracing its principles.

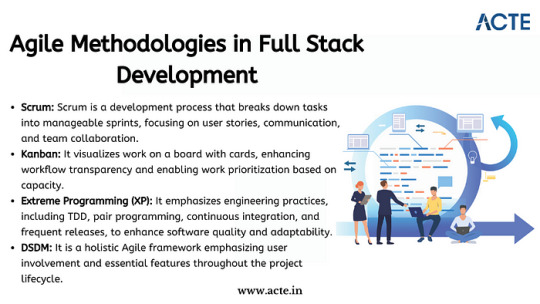

Agile Methodologies in Full Stack Development

Introducing Agile Methodologies

Agile methodologies like Scrum and Kanban have gained immense popularity due to their effectiveness in fostering collaboration and adaptability. We will introduce these methodologies and explain how they enhance project management and teamwork in Full Stack Development.

Synergy Between DevOps and Agile

The Power of Collaboration

We will highlight how DevOps and Agile practices complement each other, creating a synergy that streamlines the entire development process. By aligning development, testing, and deployment, this synergy results in faster delivery and higher-quality software.

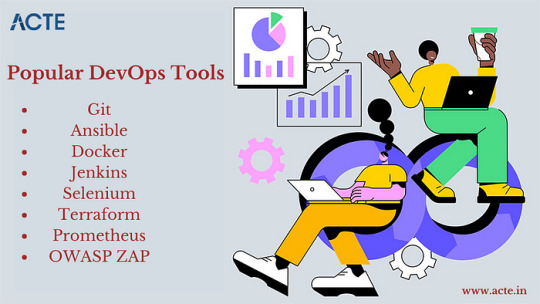

Tools and Technologies for DevOps in Full Stack Development

Essential DevOps Tools

DevOps relies on a suite of tools and technologies, such as Jenkins, Docker, and Kubernetes, to automate and manage various aspects of the development pipeline. We will provide an overview of these tools and explain how they can be harnessed in Full Stack Development projects.

Implementing Agile in Full Stack Projects

Agile Implementation Strategies

We will delve into practical strategies for implementing Agile methodologies in Full Stack projects. Topics will include sprint planning, backlog management, and conducting effective stand-up meetings.
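As a rough illustration of the sprint-planning and backlog-management ideas above, here is a minimal Python sketch that fills a sprint with the highest-priority backlog items that still fit the team's capacity. The `BacklogItem` type, the priority convention (lower number = more important), story-point capacity, and the greedy strategy are all assumptions for illustration; in practice teams negotiate sprint scope together rather than automating it.

```python
from dataclasses import dataclass


@dataclass
class BacklogItem:
    title: str
    priority: int      # hypothetical convention: lower number = more important
    story_points: int  # relative effort estimate


def plan_sprint(backlog: list[BacklogItem], capacity: int) -> list[BacklogItem]:
    """Greedily pull the highest-priority items that still fit the remaining capacity."""
    selected: list[BacklogItem] = []
    remaining = capacity
    for item in sorted(backlog, key=lambda i: i.priority):
        if item.story_points <= remaining:
            selected.append(item)
            remaining -= item.story_points
    return selected


if __name__ == "__main__":
    backlog = [
        BacklogItem("Login API", priority=1, story_points=5),
        BacklogItem("Dashboard UI", priority=2, story_points=8),
        BacklogItem("CI pipeline", priority=3, story_points=3),
        BacklogItem("Dark mode", priority=4, story_points=2),
    ]
    for item in plan_sprint(backlog, capacity=10):
        print(item.title)
```

Note that a greedy fill can skip a large high-priority item in favour of smaller ones; that trade-off is exactly what a sprint-planning conversation is meant to surface.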

Best Practices for Agile Integration

We will share best practices for incorporating Agile principles into Full Stack Development, ensuring that projects are nimble, adaptable, and responsive to changing requirements.

Learning Resources and Real-World Examples

To deepen your understanding, ACTE Institute presents case studies and real-world examples of successful Full Stack Development projects that leveraged DevOps and Agile practices. These stories offer valuable insights into best practices and lessons learned. Consider enrolling in an accredited full stack developer training course to increase your full stack proficiency.

Challenges and Solutions

Addressing Common Challenges

No journey is without its obstacles, and Full Stack Developers using DevOps and Agile practices may encounter challenges. We will identify these common roadblocks and provide practical solutions and tips for overcoming them.

Benefits and Outcomes

The Fruits of Collaboration

In this section, we will discuss the tangible benefits and outcomes of integrating DevOps and Agile practices in Full Stack projects. Faster development cycles, improved product quality, and enhanced customer satisfaction are among the rewards.

In conclusion, this blog has explored the dynamic world of Full Stack Development and the pivotal role that DevOps and Agile practices play in achieving success in this field. Full Stack Developers are at the forefront of innovation, and by embracing these methodologies, they can enhance their efficiency, drive project success, and stay ahead in the ever-evolving tech landscape. We emphasize the importance of continuous learning and adaptation, as the tech industry continually evolves. DevOps and Agile practices provide a foundation for success, and we encourage readers to explore further resources, courses, and communities to foster their growth as Full Stack Developers. By doing so, they can contribute to the development of cutting-edge solutions and make a lasting impact in the world of software development.


Migrating Virtual Machines to Red Hat OpenShift Virtualization with Ansible Automation Platform

As enterprises modernize their infrastructure, migrating traditional virtual machines (VMs) to container-native platforms is no longer just a trend — it’s a necessity. One of the most powerful solutions for this evolution is Red Hat OpenShift Virtualization, which allows organizations to run VMs side-by-side with containers on a unified Kubernetes platform. When combined with Red Hat Ansible Automation Platform, this migration can be automated, repeatable, and efficient.

In this blog, we’ll explore how enterprises can leverage Ansible to seamlessly migrate workloads from legacy virtualization platforms (like VMware or KVM) to OpenShift Virtualization.

🔍 Why OpenShift Virtualization?

OpenShift Virtualization extends OpenShift’s capabilities to include traditional VMs, enabling:

Unified management of containers and VMs

Native integration with Kubernetes networking and storage

Simplified CI/CD pipelines that include VM-based workloads

Reduction of operational overhead and licensing costs

🛠️ The Role of Ansible Automation Platform

Red Hat Ansible Automation Platform ties infrastructure automation together, offering:

Agentless automation using SSH or APIs

Pre-built collections for platforms like VMware, OpenShift, KubeVirt, and more

Scalable execution environments for large-scale VM migration

Role-based access and governance through automation controller (formerly Tower)

🧭 Migration Workflow Overview

A typical migration flow using Ansible and OpenShift Virtualization involves:

1. Discovery Phase

Inventory the source VMs using Ansible VMware/KVM modules.

Collect VM configuration, network settings, and storage details.

2. Template Creation

Convert the discovered VM configurations into KubeVirt VirtualMachine manifests.

Define OpenShift-native templates to match the workload requirements.

3. Image Conversion and Upload

Use tools like virt-v2v or Ansible roles to export VM disk images (VMDK/QCOW2).

Upload the disk images into PersistentVolumeClaims (PVCs) using the Containerized Data Importer (CDI).

4. VM Deployment

Deploy converted VMs as KubeVirt VirtualMachines via Ansible Playbooks.

Integrate with OpenShift networking and storage (Multus, OpenShift Data Foundation (formerly OCS), etc.).

5. Validation & Post-Migration

Run automated smoke tests or app-specific validation.

Integrate monitoring and alerting via Prometheus/Grafana.

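Example playbook for the VM deployment step:

```yaml
# Runs on the control node and talks to the OpenShift API (requires the kubernetes.core collection)
- name: Deploy VM on OpenShift Virtualization
  hosts: localhost
  gather_facts: false
  tasks:
    # PersistentVolumeClaim that will hold the converted VM disk
    - name: Create PVC for VM disk
      kubernetes.core.k8s:
        state: present
        definition: "{{ lookup('file', 'vm-pvc.yaml') }}"

    # KubeVirt VirtualMachine resource defined in vm-definition.yaml
    - name: Deploy VirtualMachine
      kubernetes.core.k8s:
        state: present
        definition: "{{ lookup('file', 'vm-definition.yaml') }}"
```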
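The Template Creation step can be sketched as a small Python function that turns discovered VM facts into a KubeVirt `VirtualMachine` manifest. This is a minimal sketch, not HawkStack's tooling: the input field names, the `rootdisk` disk name, `runStrategy: Always`, and the virtio disk bus are assumptions, and it presumes the disk image has already been imported into a PVC (for example with CDI) and that the guest has virtio drivers.

```python
import json


def to_kubevirt_vm(name: str, cpu_cores: int, memory_mib: int, pvc_name: str) -> dict:
    """Build a minimal KubeVirt VirtualMachine manifest from discovered VM facts."""
    return {
        "apiVersion": "kubevirt.io/v1",
        "kind": "VirtualMachine",
        "metadata": {"name": name},
        "spec": {
            "runStrategy": "Always",  # assumption: start the VM as soon as it is created
            "template": {
                "spec": {
                    "domain": {
                        "cpu": {"cores": cpu_cores},
                        "resources": {"requests": {"memory": f"{memory_mib}Mi"}},
                        "devices": {
                            # The disk name must match the volume name below
                            "disks": [{"name": "rootdisk", "disk": {"bus": "virtio"}}]
                        },
                    },
                    "volumes": [
                        {
                            "name": "rootdisk",
                            # PVC that holds the converted disk image
                            "persistentVolumeClaim": {"claimName": pvc_name},
                        }
                    ],
                }
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical facts gathered during the discovery phase
    discovered = {"name": "legacy-app-01", "cpus": 2, "memory_mib": 4096, "disk_pvc": "legacy-app-01-disk"}
    manifest = to_kubevirt_vm(
        discovered["name"], discovered["cpus"], discovered["memory_mib"], discovered["disk_pvc"]
    )
    print(json.dumps(manifest, indent=2))
```

Because JSON is valid YAML, the printed output could be saved to a file such as `vm-definition.yaml` and applied with the `kubernetes.core.k8s` module or `oc apply -f`.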

🔐 Benefits of This Approach

✅ Consistency – Every VM migration follows the same process.

✅ Auditability – Track every step of the migration with Ansible logs.

✅ Security – Ansible integrates with enterprise IAM and RBAC policies.

✅ Scalability – Migrate tens or hundreds of VMs using automation workflows.

🌐 Real-World Use Case

At HawkStack Technologies, we’ve successfully helped enterprises migrate large-scale critical workloads from VMware vSphere to OpenShift Virtualization using Ansible. Our structured playbooks, coupled with Red Hat-supported tools, ensured zero data loss and minimal downtime.

🔚 Conclusion

As cloud-native adoption grows, merging the worlds of VMs and containers is no longer optional. With Red Hat OpenShift Virtualization and Ansible Automation Platform, organizations get the best of both worlds — a powerful, policy-driven, scalable infrastructure that supports modern and legacy workloads alike.

If you're planning a VM migration or modernizing your data center, reach out to HawkStack Technologies, a Red Hat Certified Partner, to accelerate your transformation. For more details, visit www.hawkstack.com.


Fix Deployment Fast with a Docker Course in Ahmedabad

Are you tired of hearing, or saying, "It works on my machine"? That phrase signals a broken deployment process: code that runs fine locally but fails in staging or production.

For developers and DevOps teams, this is frustrating and, frankly, a drain on resources. The solution is containerisation, and a local Docker Course in Ahmedabad offers a fast way to master it.

The Benefits of Docker for Developers

Docker is more than a buzzword: it solves the problem of inconsistent environments, and it has become the tool of choice for containerisation. Docker lets you package your application together with all of its dependencies into a container image that runs the same way on any host with a compatible container runtime. As a result, "it works on my machine" stops being an excuse.

With hands-on exercises tailored to local learners, a Docker Course in Ahmedabad teaches you how to write Dockerfiles, manage containers, and push images to Docker Hub. The course gives you the chance to build, deploy, and scale containerised apps.

Combining Docker with DevOps Classes and Training in Ahmedabad for Seamless Deployment Mastery

DevOps takes Docker to the next level and further reduces the chance of deployment errors. Where other courses give only a broad overview of containers, DevOps Classes and Training in Ahmedabad dive into automation, CI/CD pipelines (with tools such as Jenkins), monitoring, and orchestration (with Kubernetes).

Docker skills combined with DevOps practices mean that you’re no longer simply coding but rather deploying with greater speed while reducing errors. Companies, especially those with siloed systems, appreciate this multifaceted skill set.

Real-World Impact: What You’ll Gain

Speed: Save up to 80% of deployment time.

Reliability: Your application behaves consistently across dev, test, and production environments.

Confidence: Deployment problems are caught and resolved before they ever reach end users.

These skills can give your career a major boost.

Conclusion: Transform Every DevOps Weakness into a Strategic Advantage

Fewer bugs and a faster release cadence are universal team goals, and confidence in every deployment is every developer's dream. A comprehensive Docker Course in Ahmedabad or DevOps Classes and Training in Ahmedabad can help you achieve both. Don't let deployment obstacles hold you back. Highsky IT Solutions turns deployment challenges into success through practical training focused on boosting your career with Docker and DevOps.


Mastering Kubernetes Networking: From Basics to Best Practices

Kubernetes is a powerful platform for container orchestration, but its networking model is often misunderstood. To use Kubernetes effectively, you need to understand how networking works within the platform. In this guide, we'll explore the fundamentals of Kubernetes networking, including network policies, service discovery, and network topologies.

The first step is to understand the components involved. Pods are the basic execution units in Kubernetes, and each pod gets its own IP address. Services give a set of pods a stable network identity (a virtual IP and a DNS name) and load-balance traffic across them. Deployments manage the rollout of new versions of an application; because pods are replaced during rollouts and receive new IPs, clients should reach them through a Service.

Kubernetes itself defines only the networking model: every pod can reach every other pod without NAT. A CNI (Container Network Interface) plugin implements that model, either by routing pod traffic directly over the underlying network or by encapsulating it in an overlay network such as VXLAN. Isolation between workloads comes from NetworkPolicies, which take effect only if the CNI plugin enforces them. IAMDevBox.com provides a comprehensive overview of both approaches.

Managing networking in Kubernetes can be challenging, especially in large-scale deployments. Understanding common issues such as network latency, packet loss, and security breaches helps you implement effective solutions and optimize your network architecture. Read more: https://www.iamdevbox.com/posts/
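To make service discovery concrete, here is a minimal Python sketch of how a Service name maps to DNS inside a cluster, and which names a pod's resolver tries for a short name. It assumes the common default cluster domain `cluster.local` and the typical pod `resolv.conf` search list with `ndots:5`; both are configurable, so treat this as an illustration rather than a resolver implementation.

```python
def service_fqdn(service: str, namespace: str, cluster_domain: str = "cluster.local") -> str:
    """DNS name that cluster DNS gives a Service: <service>.<namespace>.svc.<domain>."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def resolution_candidates(name: str, pod_namespace: str,
                          cluster_domain: str = "cluster.local", ndots: int = 5) -> list[str]:
    """Names a pod's resolver tries, in order, when looking up `name`."""
    if name.endswith("."):
        return [name.rstrip(".")]  # trailing dot = fully qualified, search list is skipped
    search = [f"{pod_namespace}.svc.{cluster_domain}", f"svc.{cluster_domain}", cluster_domain]
    expanded = [f"{name}.{domain}" for domain in search]
    # Names with at least `ndots` dots are tried as-is first; shorter names use the search list first
    if name.count(".") >= ndots:
        return [name] + expanded
    return expanded + [name]


if __name__ == "__main__":
    print(service_fqdn("web", "shop"))
    for candidate in resolution_candidates("web", "shop"):
        print(candidate)
```

This is why a pod can reach a Service in its own namespace by its short name (`web`) and one in another namespace as `web.shop`.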


AI/ML Training in Indore – Future-Proof Your Tech Career with Infograins TCS

Introduction – Master AI & Machine Learning with Industry Experts

In the fast-evolving digital era, Artificial Intelligence (AI) and Machine Learning (ML) are revolutionizing the tech world. Whether you're an aspiring data scientist or a developer looking to pivot, enrolling in AI/ML Training in Indore can give you a competitive edge. Infograins TCS offers a practical, project-based learning environment to help you gain expertise in AI and ML, and prepare for high-demand job roles in top companies.

Overview – Deep Learning to Data Science, All in One Course

Our AI/ML Training in Indore covers the full spectrum of artificial intelligence and machine learning — from Python programming and data handling to deep learning, neural networks, natural language processing (NLP), and model deployment. The training is designed to be hands-on, incorporating real-time projects that mimic real-world business problems. This ensures every learner gains practical exposure and problem-solving skills needed for today’s data-driven ecosystem.

Key Benefits – Why Our AI/ML Training Is the Right Choice

Infograins TCS offers more than just theoretical knowledge. With our training, you will:

Gain hands-on experience with real-world AI/ML projects

Learn from industry experts with years of domain experience

Work with essential tools like TensorFlow, Scikit-learn, and Python

Receive job support and internship opportunities

This comprehensive approach ensures you're ready for both entry-level and advanced roles in data science, AI engineering, and analytics.

Why Choose Us – Elevate Your Career with Infograins TCS

Infograins TCS stands out as a trusted AI/ML Training Institute in Indore because of our consistent focus on quality, practical learning, and placement outcomes. With personalized mentoring, updated course content, and real-time learning environments, we ensure every student gets the tools and confidence to succeed in this competitive field. Our goal isn’t just to train you—it’s to launch your career.

Certification Programs at Infograins TCS

After completing the course, students receive a professional certificate that validates their expertise in AI and Machine Learning. Our AI/ML certification in Indore is recognized by employers and lets you showcase your skills on your resume and LinkedIn profile. The certification can open the door to roles such as Machine Learning Engineer, AI Developer, and Data Scientist.

After Certification – What Opportunities Await You?

Post-certification, we support your journey with job assistance, resume workshops, and interview preparation. We also provide internship opportunities to bridge the gap between theory and application. This helps you gain industry exposure, build a real-world portfolio, and network with professionals in the AI/ML community—boosting your career from learning to landing.

Explore Our More Courses – Broaden Your Tech Skillset

In addition to AI/ML Training in Indore, Infograins TCS offers a range of other career-boosting IT courses:

Data Science with Python

Full Stack Development

Cloud Computing (AWS & Azure)

DevOps and Kubernetes

Business Analyst Training

Each program is designed with market demand in mind, ensuring you're equipped with in-demand skills.

Why Choose Us as Your Learning Partner – Beyond Just Training

Infograins TCS is not just an institute; we are your long-term learning partner. We understand that AI and ML are complex domains and require continued support, practical application, and mentoring. We go beyond traditional classroom training to offer one-on-one mentorship, job-matching guidance, and career tracking. Our AI/ML Training in Indore is designed to give learners lasting success—not just a certificate.

FAQs – Professional Answers to Your Common Questions

1. Who is eligible for the AI/ML training course? This course is open to graduates, working professionals, and anyone with a basic understanding of programming and mathematics.

2. Will I receive a certificate after completing the course? Yes, we offer a professional AI/ML certification in Indore recognized by industry leaders and tech recruiters.

3. What tools and technologies will I learn? You’ll work with Python, Scikit-learn, TensorFlow, Pandas, NumPy, and more, as part of our hands-on learning methodology.

4. Are there internship opportunities available after the course? Yes, eligible students will be offered internships that involve real-world AI/ML projects to enhance their practical knowledge and resume.

5. Do you offer placement assistance? Absolutely. Our dedicated career support team provides job readiness training, mock interviews, and connects you with top recruiters in the tech industry.

Start Your Journey with AI/ML Training in Indore

The future of technology is intelligent—and you can be at the forefront of it. Join AI/ML Training in Indore at Infograins TCS and turn your ambition into a thriving tech career. Enroll now and take the first step toward becoming an AI & ML professional.


North America Cloud Security Market Size, Revenue, End Users And Forecast Till 2028

The North America cloud security market is expected to grow from US$ 17,168.84 million in 2022 to US$ 42,944.12 million by 2028. It is estimated to grow at a CAGR of 16.5% from 2022 to 2028.
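As a quick consistency check on the figures above, the compound annual growth rate implied by the 2022 and 2028 values can be computed directly as CAGR = (end / start)^(1 / years) - 1. The sketch below uses only the numbers quoted in the report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `rate` once per year for `years` years."""
    return start_value * (1 + rate) ** years


if __name__ == "__main__":
    # Market size in US$ million, 2022 -> 2028 (six years of growth)
    rate = cagr(17_168.84, 42_944.12, 2028 - 2022)
    print(f"Implied CAGR: {rate:.1%}")  # prints 16.5%, matching the stated rate
    print(f"2028 value at exactly 16.5%: US$ {project(17_168.84, 0.165, 6):,.2f} million")
```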

The surge in managed container services is fueling the growth of the North America cloud security market

The use of containers in the IT sector has increased sharply in recent years. Many businesses use managed or native Kubernetes orchestration; well-known managed services include Amazon Elastic Kubernetes Service (EKS), Azure Kubernetes Service (AKS), and Google Kubernetes Engine (GKE). These managed platforms have simplified the management, deployment, and scaling of containerized workloads. As container use grows, enterprises need to ensure the right security controls are in place. For instance, by default, pods in a Kubernetes cluster accept traffic from any source, which creates security risks across the organization. To prevent attacks on vulnerable networks, enterprises implement network policies for their managed Kubernetes services. Thus, the adoption of managed container services is bolstering the growth of the North America cloud security market.
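The network-policy point above can be made concrete with a minimal Python sketch that builds two standard `networking.k8s.io/v1` NetworkPolicy manifests: a default-deny rule for all inbound traffic in a namespace, and a narrow allow rule for one app. The namespace, the `app` label key, the app names, and the port are hypothetical, and policies only take effect if the cluster's CNI plugin enforces NetworkPolicy.

```python
import json


def default_deny_ingress(namespace: str) -> dict:
    """Select every pod in the namespace and allow no inbound traffic."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        "spec": {
            "podSelector": {},           # empty selector matches all pods in the namespace
            "policyTypes": ["Ingress"],  # Ingress type with no ingress rules = deny all inbound
        },
    }


def allow_from_app(namespace: str, target_app: str, source_app: str, port: int) -> dict:
    """Allow TCP traffic on `port` to pods labeled app=<target_app> from pods labeled app=<source_app>."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"allow-{source_app}-to-{target_app}", "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": {"app": target_app}},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    "from": [{"podSelector": {"matchLabels": {"app": source_app}}}],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


if __name__ == "__main__":
    # JSON manifests can be applied directly, e.g. with kubectl apply -f policy.json
    for policy in (default_deny_ingress("shop"), allow_from_app("shop", "api", "frontend", 8080)):
        print(json.dumps(policy, indent=2))
```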

Download the sample PDF to learn more: https://www.businessmarketinsights.com/sample/BMIRE00028041

North America Cloud Security Market Overview

The US, Canada, and Mexico are among the major economies in North America. The region's high concentration of large and mid-sized companies and its growing number of hosted servers have been accompanied by an increasing frequency of cyber-attacks. Rising cybercrime, the emergence of new attack types, and the surge in the use of cloud-based solutions are major factors driving the adoption of cloud security solutions and services. In addition, as organizations enhance their IT infrastructure and adopt technologies such as AI and ML, adoption of cloud security is growing, further contributing to market growth. There is also substantial growth potential in industries such as energy, manufacturing, and utilities, which are steadily moving toward digitally transformed operations and focusing on data protection. Major companies such as Microsoft, Google, Cisco, McAfee, Palo Alto Networks, FireEye, and Fortinet, along with start-ups, provide cloud security solutions and services in the North America market.

North America Cloud Security Strategic Insights

Strategic insights for the North America cloud security market provide data-driven analysis of the industry landscape, including current trends, key players, and regional nuances. These insights offer actionable recommendations that help readers differentiate themselves from competitors by identifying untapped segments or developing unique value propositions. By leveraging data analytics, industry players, whether investors, manufacturers, or other stakeholders, can anticipate market shifts and position themselves for long-term success in this dynamic region. Ultimately, effective strategic insights empower readers to make informed decisions that drive profitability and achieve their business objectives within the market.

Market leaders and key company profiles

Amazon Web Services

Microsoft Corp

International Business Machines Corp

Oracle Corp

Trend Micro Incorporated

VMware, Inc.

Palo Alto Networks, Inc.

Cisco Systems Inc

Check Point Software Technologies Ltd.

Google LLC

North America Cloud Security Regional Insights

The geographic scope of the North America cloud security market refers to the specific areas in which a business operates and competes. Understanding local distinctions, such as differing customer requirements, economic conditions, and regulatory environments, is crucial for tailoring strategies to specific markets. Businesses can expand their reach by identifying underserved areas or adapting their offerings to meet local demands. A clear market focus allows for more effective resource allocation, targeted marketing campaigns, and better positioning against local competitors, ultimately driving growth in those targeted areas.

North America Cloud Security Market Segmentation

The North America cloud security market is segmented into service model, deployment model, enterprise size, solution type, industry vertical, and country. Based on service model, the North America cloud security market is segmented into infrastructure as a service (IaaS), platform as a service (PaaS), and software as a service (SaaS). The software-as-a-service (SaaS) segment registered the largest market share in 2022.

Based on deployment model, the North America cloud security market is segmented into public cloud, private cloud, and hybrid cloud. The public cloud segment registered the largest market share in 2022.

Based on enterprise size, the North America cloud security market is segmented into small and medium-sized enterprises (SMEs) and large enterprises. The large enterprises segment registered the larger market share in 2022.

About Us:

Business Market Insights is a market research platform that provides subscription service for industry and company reports. Our research team has extensive professional expertise in domains such as Electronics & Semiconductor; Aerospace & Defence; Automotive & Transportation; Energy & Power; Healthcare; Manufacturing & Construction; Food & Beverages; Chemicals & Materials; and Technology, Media, & Telecommunications.


Building Scalable Machine Learning Pipelines with Kubeflow & Kubernetes

1. Introduction

1.1 Overview

Building scalable machine learning (ML) pipelines is crucial for modern data-driven applications. These pipelines automate the end-to-end ML workflow, from data preparation to model deployment, enabling efficient and reproducible processes. Kubeflow and Kubernetes provide a robust framework…
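The excerpt is truncated, but the core idea of an ML pipeline (ordered steps, each consuming the previous step's output, with results reused when inputs have not changed) can be illustrated in plain Python. This is not the Kubeflow Pipelines API: it is a minimal sketch in which the step functions are hypothetical stand-ins and step inputs are assumed to be JSON-serializable so they can be hashed into a cache key. Kubeflow Pipelines itself runs each step as a container on Kubernetes and offers its own step caching.

```python
import hashlib
import json
from typing import Any, Callable

# A pipeline step is a (name, function) pair; each function takes the previous step's output.
Step = tuple[str, Callable[[Any], Any]]


def run_pipeline(steps: list[Step], data: Any, cache: dict[str, Any]) -> Any:
    """Run steps in order, reusing cached results when a step sees the same input again."""
    for name, fn in steps:
        # Cache key = step name + canonical JSON of the step's input
        payload = f"{name}:{json.dumps(data, sort_keys=True)}"
        key = hashlib.sha256(payload.encode("utf-8")).hexdigest()
        if key not in cache:
            cache[key] = fn(data)  # only execute the step on a cache miss
        data = cache[key]
    return data


# Hypothetical toy steps standing in for data preparation, training, and evaluation
def prepare(raw: list[float]) -> list[float]:
    peak = max(raw)
    return [x / peak for x in raw]  # scale values into [0, 1]


def train(features: list[float]) -> dict[str, float]:
    return {"mean": sum(features) / len(features)}  # "model" = one learned statistic


def evaluate(model: dict[str, float]) -> dict[str, Any]:
    return {"model": model, "passed": 0.0 < model["mean"] <= 1.0}


if __name__ == "__main__":
    steps: list[Step] = [("prepare", prepare), ("train", train), ("evaluate", evaluate)]
    cache: dict[str, Any] = {}
    print(run_pipeline(steps, [3.0, 6.0, 9.0], cache))
    # A second run with the same input is served entirely from the cache
    print(run_pipeline(steps, [3.0, 6.0, 9.0], cache))
```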


Effective Kubernetes cluster monitoring simplifies the management of containerized workloads by measuring uptime, resource use (such as memory, CPU, and storage), and interaction between cluster components. It also lets cluster managers discover issues such as inadequate resources, errors, pods that fail to start, and nodes that cannot join the cluster. In short, monitoring lets you manage Kubernetes clusters proactively rather than reactively.

What Kubernetes Metrics Should You Measure?

Monitoring Kubernetes metrics is critical for the reliability, performance, and efficiency of applications in a cluster. Because Kubernetes continuously creates, reschedules, and replaces containers, tracking key metrics lets you spot issues early, optimize resource allocation, and preserve overall system integrity. Several areas are critical to watch:

Cluster monitoring - Monitors the health of the whole Kubernetes cluster: how many apps are running on each node, whether nodes are performing efficiently and at the right capacity, and how many resources the cluster requires overall.

Pod monitoring - Tracks issues affecting individual pods, including resource use, application metrics, and replication or autoscaling metrics.

Ingress metrics - Monitoring ingress traffic helps you discover and manage a variety of issues. Depending on the controller, ingress controllers can be configured to expose network traffic information and workload health.

Persistent storage - Kubernetes can report volume health through CSI (Container Storage Interface) volume health monitoring, provided the CSI driver supports it. You can also use the external health monitor controller to track node failures.

Control plane metrics - Watching the scheduler, controllers, and API server lets you track and visualize cluster performance and troubleshoot problems.
Node metrics - Tracking each node's CPU and memory usage helps ensure nodes never run out. A node's status is described by conditions such as Ready, MemoryPressure, DiskPressure, PIDPressure, and NetworkUnavailable.

Monitoring and Troubleshooting Kubernetes Clusters Using the Kubernetes Dashboard

The Kubernetes Dashboard is a web-based user interface for Kubernetes. It lets you deploy containerized apps to a cluster, see an overview of the applications running on it, and manage cluster resources. It also lets you:

Debug containerized applications by examining the health of your cluster's resources and any anomalies that have occurred.

Create and modify individual Kubernetes resources, including Deployments, Jobs, DaemonSets, and StatefulSets.

Control your Kubernetes environment directly from the browser.

The Dashboard is not deployed by default; it is an optional add-on that you install into the cluster and typically access through kubectl proxy or a port-forward. Once deployed, you can open it in a web browser to inspect detailed information about your cluster and perform operations such as scaling deployments, creating new resources, and updating application configurations.

Kubernetes Dashboard Essential Features

Kubernetes Dashboard comes with several features that help you manage and monitor clusters efficiently:

Cluster overview: The dashboard displays information about your cluster, including the number of nodes, pods, and services, as well as current CPU and memory use (when metrics-server is installed).

Resource management: The dashboard lets you manage Kubernetes resources, including deployments, services, and pods. You can add, update, and delete resources and view detailed information about them.
Application monitoring: The dashboard lets you monitor the status and performance of Kubernetes-based apps. You can view logs and resource usage to troubleshoot issues.

Customizable views: The dashboard lets you filter views by namespace so you can focus on the resources that matter most to you.

Kubernetes Monitoring Best Practices

Here are some recommended practices for monitoring and debugging Kubernetes deployments:

1. Monitor Kubernetes Metrics

Kubernetes microservices require an understanding of granular resource data such as memory, CPU, and load. However, these metrics can be complex and hard to act on. API-level indicators such as request rate, error rate, and latency are the most effective KPIs for identifying service faults, because they immediately reveal degradation in a microservices application's components.

2. Ensure Monitoring Systems Have Enough Data Retention

Scalable monitoring lets you keep watching your cluster as it grows and evolves. As the cluster expands, so does the volume of data it generates, and your monitoring systems must be able to store and query that data for as long as you need it. If they cannot scale, they may be overwhelmed by the volume and fail to return accurate or relevant results.

3. Integrate Monitoring Systems Into Your CI/CD Pipeline

Integrating Kubernetes monitoring with your CI/CD pipeline lets you monitor apps and infrastructure as they are deployed, rather than afterward. By connecting monitoring to your continuous integration and delivery pipeline, you automatically collect and process data from infrastructure and applications as they are delivered, so you can identify potential issues early and act before they get worse.

4. Create Alerts

With the right alerts in place, you can identify problems in your Kubernetes cluster early and fix them before they escalate.
In practice, if you configure alerts for crucial metrics such as CPU or memory usage, you are notified when those metrics cross specific thresholds, so you can act before your cluster is overwhelmed.

Conclusion

Kubernetes can run a large number of containerized applications within its clusters, each of which has nodes that manage the containers. Efficient observability across the various machines and components is critical for successful Kubernetes container orchestration. Kubernetes has built-in monitoring facilities for its control plane, but they may not be sufficient for thorough analysis and granular insight into application workloads, event logging, and other microservice metrics within Kubernetes clusters.
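Practice 1 above recommends request rate, error rate, and latency as fault indicators. The following is a minimal sketch of computing them from raw request records. The `Request` record format, the choice to count only HTTP 5xx responses as errors, and the nearest-rank percentile method are all assumptions for illustration; in a real cluster these figures usually come from a metrics system such as Prometheus rather than hand-written code.

```python
from dataclasses import dataclass


@dataclass
class Request:
    """One observed API call (hypothetical record format)."""
    status: int         # HTTP status code
    latency_ms: float   # end-to-end latency in milliseconds


def red_metrics(requests: list[Request], window_seconds: float, percentile: int = 95) -> dict:
    """Compute request rate, error rate and a nearest-rank latency percentile."""
    total = len(requests)
    if total == 0:
        return {"rate_per_s": 0.0, "error_rate": 0.0, "latency_ms": None}
    # Count server-side failures (5xx) as errors.
    errors = sum(1 for r in requests if r.status >= 500)
    latencies = sorted(r.latency_ms for r in requests)
    # Nearest-rank method: rank = ceil(p/100 * N), computed with integer arithmetic.
    rank = max(1, -(-percentile * total // 100))
    return {
        "rate_per_s": total / window_seconds,
        "error_rate": errors / total,
        "latency_ms": latencies[rank - 1],
    }
```

For 100 requests observed over a 10-second window, 5 of which returned HTTP 500, this reports 10 requests per second and a 5% error rate.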

Developing and Deploying AI/ML Applications on Red Hat OpenShift AI (AI268)

As AI and Machine Learning continue to reshape industries, the need for scalable, secure, and efficient platforms to build and deploy these workloads is more critical than ever. That’s where Red Hat OpenShift AI comes in—a powerful solution designed to operationalize AI/ML at scale across hybrid and multicloud environments.

With the AI268 course – Developing and Deploying AI/ML Applications on Red Hat OpenShift AI – developers, data scientists, and IT professionals can learn to build intelligent applications using enterprise-grade tools and MLOps practices on a container-based platform.

🌟 What is Red Hat OpenShift AI?

Red Hat OpenShift AI (formerly Red Hat OpenShift Data Science) is a comprehensive, Kubernetes-native platform tailored for developing, training, testing, and deploying machine learning models in a consistent and governed way. It provides tools like:

Jupyter Notebooks

TensorFlow, PyTorch, Scikit-learn

Apache Spark

KServe & OpenVINO for inference

Pipelines & GitOps for MLOps

The platform ensures seamless collaboration between data scientists, ML engineers, and developers—without the overhead of managing infrastructure.

📘 Course Overview: What You’ll Learn in AI268

AI268 focuses on equipping learners with hands-on skills in designing, developing, and deploying AI/ML workloads on Red Hat OpenShift AI. Here’s a quick snapshot of the course outcomes:

✅ 1. Explore OpenShift AI Components

Understand the ecosystem—JupyterHub, Pipelines, Model Serving, GPU support, and the OperatorHub.

✅ 2. Data Science Workspaces

Set up and manage development environments using Jupyter notebooks integrated with OpenShift’s security and scalability features.

✅ 3. Training and Managing Models

Use libraries like PyTorch or Scikit-learn to train models. Learn to leverage pipelines for versioning and reproducibility.

✅ 4. MLOps Integration

Implement CI/CD for ML using OpenShift Pipelines and GitOps to manage lifecycle workflows across environments.

✅ 5. Model Deployment and Inference

Serve models using tools like KServe, automate inference pipelines, and monitor performance in real time.
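To make the model-serving outcome concrete, here is a minimal sketch of a client for a model served with KServe's V1 inference protocol. That protocol accepts a JSON body of the form `{"instances": [...]}` at `/v1/models/<name>:predict` and returns `{"predictions": [...]}`. The host name, model name, and input values below are hypothetical placeholders, and the sketch uses only the Python standard library rather than any OpenShift AI client library.

```python
import json
import urllib.request


def build_predict_request(base_url: str, model_name: str, instances: list) -> urllib.request.Request:
    """Build (but do not send) a KServe V1-protocol prediction request."""
    # V1 protocol path: /v1/models/<model_name>:predict
    url = f"{base_url.rstrip('/')}/v1/models/{model_name}:predict"
    body = json.dumps({"instances": instances}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_predictions(raw: bytes) -> list:
    """Extract the `predictions` field from a V1-protocol response body."""
    return json.loads(raw)["predictions"]


if __name__ == "__main__":
    # Hypothetical endpoint; replace with the URL of your InferenceService.
    req = build_predict_request("http://sklearn-iris.example.com", "sklearn-iris", [[6.8, 2.8, 4.8, 1.4]])
    print(req.full_url)
    # To call a running service:
    # with urllib.request.urlopen(req) as resp:
    #     print(parse_predictions(resp.read()))
```

Building the request separately from sending it keeps the payload logic testable without a live cluster.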

🧠 Why Take This Course?

Whether you're a data scientist looking to deploy models into production or a developer aiming to integrate AI into your apps, AI268 bridges the gap between experimentation and scalable delivery. The course is ideal for:

Data Scientists exploring enterprise deployment techniques

DevOps/MLOps Engineers automating AI pipelines

Developers integrating ML models into cloud-native applications

Architects designing AI-first enterprise solutions

🎯 Final Thoughts

AI/ML is no longer confined to research labs—it’s at the core of digital transformation across sectors. With Red Hat OpenShift AI, you get an enterprise-ready MLOps platform that lets you go from notebook to production with confidence.

If you're looking to modernize your AI/ML strategy and unlock true operational value, AI268 is your launchpad.

👉 Ready to build and deploy smarter, faster, and at scale? Join the AI268 course and start your journey into Enterprise AI with Red Hat OpenShift.

For more details www.hawkstack.com

DevOps with Docker and Kubernetes Coaching by Gritty Tech

Introduction

In the evolving world of software development and IT operations, the demand for skilled professionals in DevOps with Docker and Kubernetes is growing rapidly. Organizations are seeking individuals who can streamline workflows, automate processes, and enhance deployment efficiency using modern tools like Docker and Kubernetes.

Gritty Tech, a leading global platform, offers comprehensive DevOps with Docker and Kubernetes coaching that combines hands-on learning with real-world applications. With an expansive network of expert tutors across 110+ countries, Gritty Tech ensures that learners receive top-quality education with flexibility and support.

What is DevOps with Docker and Kubernetes?

Understanding DevOps

DevOps is a culture and methodology that bridges the gap between software development and IT operations. It focuses on continuous integration, continuous delivery (CI/CD), automation, and faster release cycles to improve productivity and product quality.

Role of Docker and Kubernetes

Docker allows developers to package applications and dependencies into lightweight containers that can run consistently across environments. Kubernetes is an orchestration tool that manages these containers at scale, handling deployment, scaling, and networking with efficiency.

Coaching in DevOps with Docker and Kubernetes brings these pieces together, equipping professionals with the tools and practices to deploy faster, maintain better control, and ensure system resilience.
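As an illustration of what Kubernetes manages, the following sketch builds a minimal `apps/v1` Deployment manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts as well as YAML. The application name, image, and port are hypothetical. The `RollingUpdate` strategy settings shown are one reasonable choice, not a recommendation taken from the coaching program.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int = 3, port: int = 8080) -> dict:
    """Return a minimal apps/v1 Deployment manifest as a plain dictionary."""
    labels = {"app": name}  # the selector must match the pod template labels
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "strategy": {
                "type": "RollingUpdate",
                # Start one extra pod before stopping an old one during updates.
                "rollingUpdate": {"maxSurge": 1, "maxUnavailable": 0},
            },
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical image reference.
    print(json.dumps(deployment_manifest("web", "registry.example.com/web:1.0"), indent=2))
```

Saving the output as `web.json` and running `kubectl apply -f web.json` would ask the cluster to keep three replicas of the container running, with updates rolled out without reducing available pods.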

Why Gritty Tech is the Best for DevOps with Docker and Kubernetes Coaching

Top-Quality Education, Affordable Pricing

Gritty Tech believes that premium education should not come with a premium price tag. Our DevOps with Docker and Kubernetes coaching is designed to be accessible, offering robust training programs without compromising quality.

Global Network of Expert Tutors

With educators across 110+ countries, learners benefit from diverse expertise, real-time guidance, and tailored learning experiences. Each tutor is a seasoned professional in DevOps, Docker, and Kubernetes.

Easy Refunds and Tutor Replacement

Gritty Tech prioritizes your satisfaction. If you're unsatisfied, we offer a no-hassle refund policy. Want a different tutor? We offer tutor replacements swiftly, without affecting your learning journey.

Flexible Payment Plans

Whether you prefer monthly billing or paying session-wise, Gritty Tech makes it easy. Our flexible plans are designed to suit every learner’s budget and schedule.

Practical, Hands-On Learning

Our DevOps with Docker and Kubernetes coaching focuses on real-world projects. You'll learn to set up CI/CD pipelines, containerize applications, deploy using Kubernetes, and manage cloud-native applications effectively.

Key Benefits of Learning DevOps with Docker and Kubernetes

Streamlined Development: Improve collaboration between development and operations teams.

Scalability: Deploy applications seamlessly across cloud platforms.

Automation: Minimize manual tasks with scripting and orchestration.

Faster Delivery: Enable continuous integration and continuous deployment.

Enhanced Security: Learn secure deployment techniques with containers.

Job-Ready Skills: Gain competencies that top tech companies are actively hiring for.

Curriculum Overview

Our DevOps with Docker and Kubernetes coaching covers a wide array of modules that cater to both beginners and experienced professionals:

Module 1: Introduction to DevOps Principles

DevOps lifecycle

CI/CD concepts

Collaboration and monitoring

Module 2: Docker Fundamentals

Containers vs. virtual machines

Docker installation and setup

Building and managing Docker images

Networking and volumes

Module 3: Kubernetes Deep Dive

Kubernetes architecture

Pods, deployments, and services

Helm charts and configurations

Auto-scaling and rolling updates
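The auto-scaling topic in Module 3 rests on a simple rule. The Kubernetes documentation describes the Horizontal Pod Autoscaler's core calculation as desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue), and it skips scaling when that ratio is within a tolerance (0.1 by default). The function below is a simplified sketch of the rule with min/max clamping. It ignores the real controller's stabilization windows, pod readiness handling, and missing-metric logic.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Simplified HPA rule: ceil(current * currentMetric / targetMetric), clamped."""
    ratio = current_metric / target_metric
    # Close enough to the target: leave the replica count unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 3 pods averaging 200m CPU against a 100m target -> scale to 6.
    print(desired_replicas(3, 200, 100))
```

Rounding up with `ceil` biases the rule toward slightly over-provisioning rather than under-provisioning.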

Module 4: CI/CD Integration

Jenkins, GitLab CI, or GitHub Actions

Containerized deployment pipelines

Monitoring tools (Prometheus, Grafana)

Module 5: Cloud Deployment

Deploying Docker and Kubernetes on AWS, Azure, or GCP

Infrastructure as Code (IaC) with Terraform or Ansible

Real-time troubleshooting and performance tuning

Who Should Take This Coaching?

The DevOps with Docker and Kubernetes coaching program is ideal for:

Software Developers

System Administrators

Cloud Engineers

IT Students and Graduates

Anyone transitioning into DevOps roles

Whether you're a beginner or a professional looking to upgrade your skills, this coaching offers tailored learning paths to meet your career goals.

What Makes Gritty Tech Different?

Personalized Mentorship

Unlike automated video courses, our live sessions with tutors ensure all your queries are addressed. You'll receive personalized feedback and career guidance.

Career Support

Beyond just training, we assist with resume building, interview preparation, and job placement resources so you're confident in entering the job market.

Lifetime Access

Enrolled students receive lifetime access to updated materials and recorded sessions, helping you stay up to date with evolving DevOps practices.

Student Success Stories

Thousands of learners across continents have transformed their careers through our DevOps with Docker and Kubernetes coaching. Many have secured roles as DevOps Engineers, Site Reliability Engineers (SRE), and Cloud Consultants at leading companies.

Their success is a testament to the effectiveness and impact of our training approach.

FAQs About DevOps with Docker and Kubernetes Coaching

What is DevOps with Docker and Kubernetes coaching?

DevOps with Docker and Kubernetes coaching is a structured learning program that teaches you how to integrate Docker containers and manage them using Kubernetes within a DevOps lifecycle.

Why should I choose Gritty Tech for DevOps with Docker and Kubernetes coaching?

Gritty Tech offers experienced mentors, practical training, flexible payments, and global exposure, making it the ideal choice for DevOps with Docker and Kubernetes coaching.

Is prior experience needed for DevOps with Docker and Kubernetes coaching?

No. While prior experience helps, our coaching is structured to accommodate both beginners and professionals.

How long does the DevOps with Docker and Kubernetes coaching program take?

The average duration is 8 to 12 weeks, depending on your pace and session frequency.

Will I get a certificate after completing the coaching?

Yes. A completion certificate is provided, which adds value to your resume and validates your skills.

What tools will I learn in DevOps with Docker and Kubernetes coaching?

You’ll gain hands-on experience with Docker, Kubernetes, Jenkins, Git, Terraform, Prometheus, Grafana, and more.

Are job placement services included?

Yes. Gritty Tech supports your career with resume reviews, mock interviews, and job assistance services.

Can I attend DevOps with Docker and Kubernetes coaching part-time?

Absolutely. Sessions are scheduled flexibly, including evenings and weekends.

Is there a money-back guarantee for DevOps with Docker and Kubernetes coaching?

Yes. If you’re unsatisfied, we offer a simple refund process within a stipulated period.

How do I enroll in DevOps with Docker and Kubernetes coaching?

You can register through the Gritty Tech website. Our advisors are ready to assist you with the enrollment process and payment plans.

Conclusion

Choosing the right platform for DevOps with Docker and Kubernetes coaching can define your success in the tech world. Gritty Tech offers a powerful combination of affordability, flexibility, and expert-led learning. Our commitment to quality education, backed by global tutors and personalized mentorship, ensures you gain the skills and confidence needed to thrive in today’s IT landscape.

Invest in your future today with Gritty Tech — where learning meets opportunity.

**The Future of Cloud Services: How New York Companies Can Leverage Microsoft and Google Technologies**

Introduction

In today's rapidly evolving digital landscape, cloud services have transformed the way businesses operate. Particularly for organizations in New York, leveraging platforms like Microsoft Azure and Google Cloud can boost operational efficiency, foster innovation, and ensure robust security measures. This article explores the future of cloud services and offers insights on how New York companies can harness the power of Microsoft and Google technologies to remain competitive in their respective industries.

The Future of Cloud Services: How New York Companies Can Leverage Microsoft and Google Technologies

The future of cloud services isn't just about storage; it's about creating a flexible environment that supports innovation across sectors. For New York companies, adopting technologies from giants like Microsoft and Google can lead to greater agility, better data management capabilities, and stronger security protocols. As organizations increasingly shift toward digital solutions, understanding these technologies becomes crucial for sustained growth.

Understanding Cloud Services

What Are Cloud Services?

Cloud services refer to a range of computing resources delivered over the internet (the "cloud"). These include:

Infrastructure as a Service (IaaS): Virtualized computing resources over the internet.

Platform as a Service (PaaS): Platforms that let developers build applications without managing the underlying infrastructure.

Software as a Service (SaaS): Software delivered over the internet, eliminating the need for local installation.

Key Benefits of Cloud Services

Cost Efficiency: Reduces capital expenditure on hardware.

Scalability: Easily scales resources based on demand.

Accessibility: Access data and applications from anywhere.

Security: Advanced security features protect sensitive information.

Microsoft's Role in Cloud Computing

Overview of Microsoft Azure

Microsoft Azure is one of the leading cloud service providers, offering a range of services such as virtual machines, databases, analytics, and AI capabilities.

Core Features of Microsoft Azure

Virtual Machines: Create scalable VMs with a variety of operating systems.

Azure SQL Database: A managed database service for app development.

AI & Machine Learning: Integrate AI capabilities seamlessly into applications.

Google's Impact on Cloud Technologies

Introduction to Google Cloud Platform (GCP)

Google's cloud offering emphasizes high-performance computing and machine learning capabilities tailored for businesses seeking innovative solutions.

Distinct Features of GCP

BigQuery: A powerful analytics tool for large datasets.

Cloud Functions: Event-driven serverless compute platform.

Kubernetes Engine: Manage containerized applications…

Implementing Microservices in Node.js with Docker & Kubernetes

This is a condensed version of the tutorial: each section is summarized and the code snippets are simplified, so a full implementation would need additional details and code.

1. Introduction

1.1 Overview

Microservices architecture is a design approach that structures an application as a collection of loosely coupled,…

Human-Centric Exploration of Generative AI Development

Generative AI is more than a buzzword. It’s a transformative technology shaping industries and igniting innovation across the globe. From creating expressive visuals to designing personalized experiences, it allows organizations to build powerful, scalable solutions with lasting impact. As tools like ChatGPT and Stable Diffusion continue to gain traction, investors and businesses alike are exploring the practical steps to develop generative AI solutions tailored to real-world needs.

Why Generative AI is the Future of Innovation

The rapid rise of generative AI in sectors like finance, healthcare, and media has drawn immense interest and funding. OpenAI's valuation crossed $25 billion, and Microsoft has backed the company with over $1 billion, signaling confidence in generative models even amid broader tech downturns. The market is projected to reach $442.07 billion by 2031, driven by the technology's ability to generate text, code, images, music, and more. For companies looking to gain a competitive edge, investing in generative AI is not just a trend but a strategic move.

What Makes Generative AI a Business Imperative?

Generative AI increases efficiency by automating tasks, drives creative ideation beyond human limits, and enhances decision-making through data analysis. Its applications include marketing content creation, virtual product design, intelligent customer interactions, and adaptive user experiences. It also reduces operational costs and helps businesses respond faster to market demands.

How to Create a Generative AI Solution: A Step-by-Step Overview

1. Define Clear Objectives: Understand what problem you're solving and what outcomes you seek.

2. Collect and Prepare Quality Data: Whether it's image, audio, or text-based, the dataset's quality sets the foundation.

3. Choose the Right Tools and Frameworks: Utilize Python, TensorFlow, PyTorch, and cloud platforms like AWS or Azure for development.

4. Select Suitable Architectures: From GANs to VAEs, LSTMs to autoregressive models, align the model type with your solution needs.

5. Train, Fine-Tune, and Test: Iteratively improve performance through tuning hyperparameters and validating outputs.

6. Deploy and Monitor: Deploy using Docker, Flask, or Kubernetes and monitor with MLflow or TensorBoard.
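Step 4 mentions autoregressive models, which generate output one token at a time, each step conditioned on what came before. The smallest possible example is a character-level bigram model. The sketch below (hypothetical helper names, standard library only) trains one by counting adjacent character pairs and then samples from those counts. It is a teaching illustration of the autoregressive idea, not a production generative AI architecture.

```python
import random
from collections import Counter, defaultdict


def train_bigram(corpus: str) -> dict[str, Counter]:
    """Count how often each character is followed by each other character."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for prev, nxt in zip(corpus, corpus[1:]):
        counts[prev][nxt] += 1
    return counts


def generate(counts: dict[str, Counter], start: str, length: int, seed: int = 0) -> str:
    """Sample up to `length` characters autoregressively, starting from `start`."""
    rng = random.Random(seed)  # seeded for reproducible output
    out = [start]
    while len(out) < length:
        successors = counts.get(out[-1])
        if not successors:
            break  # dead end: this character was never followed by anything
        chars, weights = zip(*successors.items())
        # The next character is drawn in proportion to observed pair frequencies.
        out.append(rng.choices(chars, weights=weights, k=1)[0])
    return "".join(out)


if __name__ == "__main__":
    model = train_bigram("the cat sat on the mat")
    print(generate(model, "t", 20, seed=42))
```

Real generative models replace the count table with a learned neural network, but the generation loop (predict a distribution, sample, append, repeat) has the same shape.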

Explore a comprehensive guide here: How to Create Your Own Generative AI Solution

Industry Applications That Matter

Healthcare: Personalized treatment plans, drug discovery

Finance: Fraud detection, predictive analytics

Education: Tailored learning modules, content generation

Manufacturing: Process optimization, predictive maintenance

Retail: Customer behavior analysis, content personalization

Partnering with the Right Experts

Building a successful generative AI model requires technical know-how, domain expertise, and iterative optimization. This is where generative AI consulting services come into play. A reliable generative AI consulting company like SoluLab offers tailored support—from strategy and development to deployment and scale.

Whether you need generative AI consultants to help refine your idea or want a long-term partner among top generative AI consulting companies, SoluLab stands out with its proven expertise. Explore our Gen AI Consulting Services

Final Thoughts

Generative AI is not just shaping the future—it’s redefining it. By collaborating with experienced partners, adopting best practices, and continuously iterating, you can craft AI solutions that evolve with your business and customers. The future of business is generative—are you ready to build it?

Developing and Deploying AI/ML Applications on Red Hat OpenShift AI with Hawkstack

Artificial Intelligence (AI) and Machine Learning (ML) are driving innovation across industries—from predictive analytics in healthcare to real-time fraud detection in finance. But building, scaling, and maintaining production-grade AI/ML solutions remains a significant challenge. Enter Red Hat OpenShift AI, a powerful platform that brings together the flexibility of Kubernetes with enterprise-grade ML tooling. And when combined with Hawkstack, organizations can supercharge observability and performance tracking throughout their AI/ML lifecycle.

Why Red Hat OpenShift AI?

Red Hat OpenShift AI (formerly Red Hat OpenShift Data Science) is a robust enterprise platform designed to support the full AI/ML lifecycle—from development to deployment. Key benefits include:

Scalability: Native Kubernetes integration allows seamless scaling of ML workloads.

Security: Red Hat’s enterprise security practices ensure that ML pipelines are secure by design.

Flexibility: Supports a variety of tools and frameworks, including Jupyter Notebooks, TensorFlow, PyTorch, and more.

Collaboration: Built-in tools for team collaboration and continuous integration/continuous deployment (CI/CD).

Introducing Hawkstack: Observability for AI/ML Workloads

As you move from model training to production, observability becomes critical. Hawkstack, a lightweight and extensible observability framework, integrates seamlessly with Red Hat OpenShift AI to provide real-time insights into system performance, data drift, model accuracy, and infrastructure metrics.

Hawkstack + OpenShift AI: A Powerful Duo

By integrating Hawkstack with OpenShift AI, you can:

Monitor ML Pipelines: Track metrics across training, validation, and deployment stages.

Visualize Performance: Dashboards powered by Hawkstack allow teams to monitor GPU/CPU usage, memory footprint, and latency.

Enable Alerting: Proactively detect model degradation or anomalies in your inference services.

Optimize Resources: Fine-tune resource allocation based on telemetry data.

Workflow: Developing and Deploying ML Apps

Here’s a high-level overview of what a modern AI/ML workflow looks like on OpenShift AI with Hawkstack:

1. Model Development

Data scientists use tools like JupyterLab or VS Code on OpenShift AI to build and train models. Libraries such as scikit-learn, XGBoost, and Hugging Face Transformers are pre-integrated.

2. Pipeline Automation

Using Red Hat OpenShift Pipelines (Tekton), you can automate training and evaluation pipelines. Integrate CI/CD practices to ensure robust and repeatable workflows.

3. Model Deployment

Leverage OpenShift AI’s serving layer to deploy models using Seldon Core, KServe, or OpenVINO Model Server—all containerized and scalable.

4. Monitoring and Feedback with Hawkstack

Once deployed, Hawkstack takes over to monitor inference latency, throughput, and model accuracy in real time. Anomalies can be fed back into the training pipeline, enabling continuous learning and adaptation.

Real-World Use Case

A leading financial services firm recently implemented OpenShift AI and Hawkstack to power their loan approval engine. Using Hawkstack, they detected a model drift issue caused by seasonal changes in application data. Alerts enabled retraining to be triggered automatically, ensuring their decisions stayed fair and accurate.
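Drift detection like that described above can be approached in several ways. One common, simple technique is the Population Stability Index (PSI), which compares the binned distribution of a feature in recent traffic against a training-time baseline. The sketch below is not Hawkstack's method (the source does not say how it detects drift). It is a standard-library illustration that uses equal-width bins taken from the baseline range. A frequently cited rule of thumb treats a PSI above roughly 0.25 as a significant shift, but thresholds should be tuned per feature.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index of `actual` relative to the `expected` baseline."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Equal-width bins over the baseline range; out-of-range values
            # are clamped into the first or last bin.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor empty bins at eps so the logarithm stays finite.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    # PSI = sum over bins of (actual - expected) * ln(actual / expected)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

A retraining trigger could then compare `psi(training_values, recent_values)` against the chosen threshold for each monitored feature.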

Conclusion

Deploying AI/ML applications in production doesn’t have to be daunting. With Red Hat OpenShift AI, you get a secure, scalable, and enterprise-ready foundation. And with Hawkstack, you add observability and performance intelligence to every stage of your ML lifecycle.

Together, they empower organizations to bring AI/ML innovations to market faster—without compromising on reliability or visibility.

For more details www.hawkstack.com
