#mongodb charts


The Technology Stack Behind a Fast Cryptocurrency Exchange Script

In the fast-paced world of crypto trading, speed, security, and scalability are everything. Whether you're launching a new platform or upgrading an existing one, the technology stack behind your cryptocurrency exchange script plays a crucial role in user experience and platform success.

Let’s break down what goes into building a lightning-fast and secure crypto exchange.

🔹 Frontend Technologies

Speedy UI/UX is critical. Most modern exchanges use React.js or Vue.js to build interactive, responsive interfaces. These frameworks ensure real-time updates for charts, orders, and wallets without page reloads.

🔹 Backend Frameworks

A high-performance backend is the engine. Node.js, Go, and Python (Django) are popular choices: Node.js offers a non-blocking event loop, Go offers lightweight concurrency, and Django offers a mature framework for APIs and admin tooling. Together they handle everything from API requests to the order matching engine.
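To make "order matching engine" concrete, here is a minimal sketch of price-time priority matching for limit orders. The `OrderBook` class and its `submit` method are hypothetical names chosen for illustration, quantities are integers to sidestep rounding, and a production engine would also need persistence, cancellation, and fee handling.

```python
import heapq
from itertools import count


class OrderBook:
    """Toy limit-order book with price-time priority (illustrative only)."""

    def __init__(self):
        self._bids = []      # max-heap via negated price: (-price, seq, [qty])
        self._asks = []      # min-heap: (price, seq, [qty])
        self._seq = count()  # arrival order breaks ties at the same price

    def submit(self, side, price, qty):
        """Match an incoming limit order; return a list of (price, qty) fills."""
        fills = []
        if side == "buy":
            book, opposite = self._bids, self._asks
        else:
            book, opposite = self._asks, self._bids
        while qty > 0 and opposite:
            key, _, resting = opposite[0]
            # Asks store the price directly; bids store it negated.
            best_price = key if side == "buy" else -key
            crosses = price >= best_price if side == "buy" else price <= best_price
            if not crosses:
                break
            traded = min(qty, resting[0])
            fills.append((best_price, traded))  # trade at the resting order's price
            qty -= traded
            resting[0] -= traded
            if resting[0] == 0:
                heapq.heappop(opposite)
        if qty > 0:
            # Any unfilled remainder rests on the book.
            key = -price if side == "buy" else price
            heapq.heappush(book, (key, next(self._seq), [qty]))
        return fills
```

The two heaps keep the best bid and best ask at index 0, so each match step is cheap; the sequence counter ensures that, at equal prices, the earlier order is filled first.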

🔹 Database Layer

Handling massive transactional data? Databases like PostgreSQL and MongoDB are often used for storing trade history, user data, and wallet balances, with an in-memory store like Redis in front for caching and fast lookups. Some platforms use this kind of hybrid structure to combine speed and consistency.

🔹 Blockchain Integration

A core component of any cryptocurrency exchange script is wallet integration. Secure APIs connect your platform to major blockchains like Bitcoin, Ethereum, BNB Chain, and others for deposits, withdrawals, and transaction tracking.

🔹 Security Stack

Cyber threats are real. A solid exchange script includes 2FA, anti-DDoS protection, KYC/AML modules, and encrypted wallets to ensure compliance and protect user funds.
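As an illustration of the 2FA piece, the sketch below implements time-based one-time passwords as specified in RFC 6238 (built on RFC 4226) using only the Python standard library. The function names and the one-step drift window are assumptions made for this example; a real deployment would normally rely on a vetted library and add rate limiting.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226)."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation offset
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, now: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over the time-step index."""
    if now is None:
        now = time.time()
    return hotp(secret, int(now // step), digits)


def verify_totp(secret: bytes, submitted: str, now: Optional[float] = None,
                step: int = 30, window: int = 1) -> bool:
    """Accept codes from adjacent time steps to tolerate small clock drift."""
    if now is None:
        now = time.time()
    return any(
        hmac.compare_digest(totp(secret, now + i * step, step), submitted)
        for i in range(-window, window + 1)
    )
```

`hmac.compare_digest` is used for the comparison so that verification time does not leak how many leading digits matched.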

🔹 Real-Time Engines

For instant order execution and price updates, technologies like WebSockets and Kafka enable real-time data flow, critical for high-frequency traders.

🔹 DevOps & Deployment

Using Docker, Kubernetes, and cloud platforms like AWS or Azure ensures that your exchange is scalable, highly available, and easy to maintain.

A solid cryptocurrency exchange script backed by this stack not only performs faster but also earns user trust. Are you using the right stack to build your crypto platform?


Understanding Data Science: The Backbone of Modern Decision-Making

Data science is the multidisciplinary field that blends statistical analysis, programming, and domain knowledge to extract actionable insights from complex datasets. It plays a critical role in everything from predicting customer behavior to detecting fraud, personalizing healthcare, and optimizing supply chains.

What is Data Science?

At its core, data science is about turning data into knowledge. It combines tools and techniques from statistics, computer science, and mathematics to analyze large volumes of data and solve real-world problems.

A data scientist’s job is to:

Ask the right questions

Collect and clean data

Analyze and interpret trends

Build models and algorithms

Present results in an understandable way

It’s not just about numbers; it’s about finding patterns and making smarter decisions based on those patterns.

Why is Data Science Important?

Data is often called the new oil, but just like oil, it needs to be refined before it becomes valuable. That’s where data science comes in.

Here’s why it matters:

Business Growth: Data science helps businesses forecast trends, improve customer experience, and create targeted marketing strategies.

Automation: It enables automation of repetitive tasks through machine learning and AI, saving time and resources.

Risk Management: Financial institutions use data science to detect fraud and manage investment risks.

Innovation: From healthcare to agriculture, data science drives innovation by providing insights that lead to better decision-making.

Key Components of Data Science

To truly understand data science, it’s important to know its main components:

Data Collection: Gathering raw data from various sources like databases, APIs, sensors, or user behavior logs.

Data Cleaning and Preprocessing: Raw data is messy; cleaning involves handling missing values, correcting errors, and formatting for analysis.

Exploratory Data Analysis (EDA): Identifying patterns, correlations, and anomalies using visualizations and statistical summaries.

Machine Learning & Predictive Modeling: Building algorithms that learn from data and make predictions, such as spam filters or recommendation engines.

Data Visualization: Communicating findings through charts, dashboards, or storytelling tools to help stakeholders make informed decisions.

Deployment & Monitoring: Integrating models into real-world systems and constantly monitoring their performance.
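The components above can be sketched end to end on a toy dataset. The records, field meanings (ad spend versus sales), and function names below are all made up for illustration, and the example sticks to the standard library; in practice Pandas and Scikit-learn would do this work.

```python
from statistics import mean, median

# Hypothetical records: (ad_spend, sales); None marks a missing value.
raw = [
    (10.0, 25.0), (20.0, 45.0), (None, 50.0),
    (30.0, 65.0), (40.0, None), (50.0, 105.0),
]


def clean(rows):
    """Cleaning: drop rows that have a missing field."""
    return [(x, y) for x, y in rows if x is not None and y is not None]


def summarize(values):
    """EDA: a basic statistical summary of one column."""
    return {"n": len(values), "mean": mean(values), "median": median(values),
            "min": min(values), "max": max(values)}


def fit_line(rows):
    """Modeling: ordinary least squares for y = slope * x + intercept."""
    xs, ys = zip(*rows)
    x_bar, y_bar = mean(xs), mean(ys)
    slope = (sum((x - x_bar) * (y - y_bar) for x, y in rows)
             / sum((x - x_bar) ** 2 for x in xs))
    return slope, y_bar - slope * x_bar


rows = clean(raw)                       # collection + cleaning
summary = summarize([y for _, y in rows])  # exploratory summary of sales
slope, intercept = fit_line(rows)       # predictive model


def predict(x):
    """Deployment stand-in: score a new input with the fitted line."""
    return slope * x + intercept
```

Each function maps to one stage, which is roughly how larger pipelines are organized too: separate, testable steps for cleaning, exploration, and modeling.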

Popular Tools & Languages in Data Science

A data scientist’s toolbox includes several powerful tools:

Languages: Python, R, SQL

Libraries: Pandas, NumPy, Matplotlib, Scikit-learn, TensorFlow

Visualization Tools: Tableau, Power BI, Seaborn

Big Data Platforms: Hadoop, Spark

Databases: MySQL, PostgreSQL, MongoDB

Python remains the most preferred language due to its simplicity and vast library ecosystem.

Applications of Data Science

Data science isn’t limited to tech companies. Here’s how it’s applied across different industries:

Healthcare: Predict disease outbreaks, personalize treatments, manage patient data.

Retail: Track customer behavior, manage inventory, and enhance recommendations.

Finance: Detect fraud, automate trading, and assess credit risk.

Marketing: Segment audiences, optimize campaigns, and analyze consumer sentiment.

Manufacturing: Improve supply chain efficiency and predict equipment failures.

Careers in Data Science

Demand for data professionals is skyrocketing. Some popular roles include:

Data Scientist: Builds models and interprets complex data.

Data Analyst: Creates reports and visualizations from structured data.

Machine Learning Engineer: Designs and deploys AI models.

Data Engineer: Focuses on infrastructure and pipelines for collecting and processing data.

Business Intelligence Analyst: Turns data into actionable business insights.

According to LinkedIn and Glassdoor, data science is one of the most in-demand and well-paying careers globally.

How to Get Started in Data Science

You don’t need a Ph.D. to begin your journey. Start with the basics:

Learn Python or R: Focus on data structures, loops, and libraries like Pandas and NumPy.

Study Statistics and Math: Understanding probability, distributions, and linear algebra is crucial.

Work on Projects: Real-world datasets from platforms like Kaggle or the UCI Machine Learning Repository can help you build your portfolio.

Stay Curious: Read blogs, follow industry news, and never stop experimenting with data.

Final Thoughts

Data science is more than a buzzword; it’s a revolution in how we understand the world around us. Whether you're a student, professional, or entrepreneur, learning data science opens the door to endless possibilities.

In a future driven by data, the question is not whether you can afford to invest in data science but whether you can afford not to.


The Future of Forex Trading Is Software—Here’s How to Build It

The forex (foreign exchange) market is one of the busiest markets in the world. Every day, people trade trillions of dollars by buying and selling currencies.

Thanks to new technology, forex trading is changing fast. Today, many traders use software to trade better, faster, and smarter. In this blog, I’ll explain why forex software is the future - and how you can build your own.

Why Forex Trading Is Going Digital

In the past, traders had to call brokers to place a trade. Now, everything happens online. With mobile phones, artificial intelligence (AI), and cloud technology, forex trading has become faster and easier. Here’s why software is now so important:

1. Fast and Efficient

The forex market moves very quickly. Prices can change in seconds. Trading software lets you react instantly, so you don’t miss any chances.

2. Automatic Trading

Software can trade for you using rules you set. This is called automated or algorithmic trading. The software can work for you all day—even while you sleep.

3. Smart Data Use

Trading software can look at large amounts of data in just a few seconds. It can find patterns and help you make better decisions.

4. Lower Costs

Using software can save money. Automation can lower the cost of each trade, and you won’t need a big team, since software can do most of the work.

What Good Forex Trading Software Needs

If you want to build forex trading software, make sure it has these key features:

Fast and Stable

The software must work in real-time and place trades quickly. Even a one-second delay can cost money.

Simple to Use

The design should be clean and easy to understand. Beginners and experienced users should both feel comfortable using it.

Safe and Secure

Security is very important. Your software must protect user data and money with tools like strong passwords and two-factor login.

Flexible

Let users change the settings, choose different indicators, and set alerts. This way, they can trade in their own style.

Works on Phones

Many traders use their phones. Your software should work smoothly on both computers and mobile devices.

Test Strategies

Let users test their strategies using old market data. This helps them learn and avoid mistakes when using real money.
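The strategy-testing feature can be sketched as a small backtest over historical closing prices. The moving-average crossover rule, the function names, and the default window sizes are assumptions picked for illustration; the sketch ignores spread, fees, and slippage, so real results would be worse.

```python
def sma(prices, window):
    """Simple moving average; None until enough data points exist."""
    return [
        None if i + 1 < window else sum(prices[i + 1 - window:i + 1]) / window
        for i in range(len(prices))
    ]


def backtest(prices, fast=3, slow=5):
    """Hold a long position while the fast SMA is above the slow SMA.

    Returns the total return as a fraction (0.05 means +5%).
    """
    if fast >= slow:
        raise ValueError("fast window must be shorter than slow window")
    fast_ma, slow_ma = sma(prices, fast), sma(prices, slow)
    equity, in_position = 1.0, False
    for i in range(1, len(prices)):
        if in_position:
            equity *= prices[i] / prices[i - 1]
        # Decide the position for the next bar using only data up to bar i,
        # so the test never peeks at future prices.
        in_position = slow_ma[i] is not None and fast_ma[i] > slow_ma[i]
    return equity - 1.0
```

The position for each bar is decided from the previous bar's averages, which avoids look-ahead bias, one of the most common mistakes in strategy testing.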

How to Build Forex Trading Software

Now let’s look at the steps to create your own forex trading platform:

1. Know Your Users

Start by understanding who will use your software. Are they new traders or professionals? Study other platforms and look for ways to improve.

2. Pick the Right Tools

You’ll need:

A good frontend (what users see) like React or Vue

A strong backend (how the software works) like Python or Node.js

A database to store user info (like PostgreSQL or MongoDB)

APIs to get real-time forex prices (such as Alpha Vantage or ForexFeed)

3. Design a Simple Interface

Work with a designer to build a clear and easy layout. Users should be able to open accounts, add money, trade, and check charts easily.

4. Add Key Trading Features

Include tools like:

Buy and sell orders (market, limit, stop)

Profit and loss settings

Charts and indicators

Live news and price updates

5. Add Automation

Let users create or choose trading bots. You can offer simple tools like drag-and-drop builders or let advanced users write their own code.

6. Test Everything

Before you launch, test your software well. Check for bugs, run simulations, and make sure it works on all devices and under heavy use.

7. Launch and Keep Improving

After launch, listen to user feedback. Keep updating the software with better tools, more features, and stronger security.

How AI Can Help in Forex Trading

Artificial Intelligence (AI) is becoming a big part of trading. It can:

Predict future price moves using past data

Warn users about risky trades

Suggest strategies based on the user’s trading habits

By using AI, your software can become smarter and more helpful to users.

Final Thoughts

Forex trading is changing, and software is leading the way. With the right tools, traders can trade faster and make smarter decisions. If you want to build forex trading software, now is a great time.

Focus on making it fast, simple, secure, and smart. Add helpful tools like automation and AI. If you do it right, your platform won’t just be another app—it will help shape the future of forex trading.


Inventory Management System Development

Inventory management is essential for businesses that deal with physical goods. An efficient inventory system helps track stock levels, manage orders, reduce waste, and improve overall operational efficiency. In this blog post, we’ll explore the key components and programming approach for building an Inventory Management System (IMS).

Core Features of an Inventory Management System

Product Catalog: Add, edit, delete, and categorize products.

Stock Tracking: Monitor stock levels in real-time.

Purchase & Sales Records: Track incoming and outgoing items.

Supplier & Customer Management: Manage business relationships.

Reports & Analytics: Generate sales, inventory, and purchase reports.

Alerts: Notify when stock is low or out of stock.

Tech Stack Suggestions

Frontend: React.js, Vue.js, or Angular

Backend: Node.js, Django, Laravel, or Spring Boot

Database: MySQL, PostgreSQL, or MongoDB

Authentication: JWT, OAuth, or Firebase Auth

Deployment: Docker + AWS/GCP/Heroku

Basic Database Structure

Products Table:
- product_id (PK)
- name
- category
- quantity
- price
- supplier_id (FK)

Suppliers Table:
- supplier_id (PK)
- name
- contact_info

Sales Table:
- sale_id (PK)
- product_id (FK)
- quantity_sold
- date

Purchases Table:
- purchase_id (PK)
- product_id (FK)
- quantity_purchased
- date
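One way to realize this structure is shown below with SQLite through Python's built-in `sqlite3` module. The column types, the `CHECK` constraint, and the helper names (`open_db`, `record_sale`, `low_stock`) are assumptions added for the sketch; the table and column names follow the outline above.

```python
import sqlite3

SCHEMA = """
CREATE TABLE suppliers (
    supplier_id  INTEGER PRIMARY KEY,
    name         TEXT NOT NULL,
    contact_info TEXT
);
CREATE TABLE products (
    product_id  INTEGER PRIMARY KEY,
    name        TEXT NOT NULL,
    category    TEXT,
    quantity    INTEGER NOT NULL DEFAULT 0 CHECK (quantity >= 0),
    price       REAL NOT NULL,
    supplier_id INTEGER REFERENCES suppliers(supplier_id)
);
CREATE TABLE sales (
    sale_id       INTEGER PRIMARY KEY,
    product_id    INTEGER NOT NULL REFERENCES products(product_id),
    quantity_sold INTEGER NOT NULL,
    date          TEXT NOT NULL
);
CREATE TABLE purchases (
    purchase_id        INTEGER PRIMARY KEY,
    product_id         INTEGER NOT NULL REFERENCES products(product_id),
    quantity_purchased INTEGER NOT NULL,
    date               TEXT NOT NULL
);
"""


def open_db(path=":memory:"):
    """Create a connection with foreign keys enforced and the schema loaded."""
    conn = sqlite3.connect(path)
    conn.execute("PRAGMA foreign_keys = ON")
    conn.executescript(SCHEMA)
    return conn


def record_sale(conn, product_id, qty, date):
    """Decrement stock and log the sale in a single transaction."""
    with conn:  # commits on success, rolls back if either statement fails
        conn.execute(
            "UPDATE products SET quantity = quantity - ? WHERE product_id = ?",
            (qty, product_id),
        )
        conn.execute(
            "INSERT INTO sales (product_id, quantity_sold, date) VALUES (?, ?, ?)",
            (product_id, qty, date),
        )


def low_stock(conn, threshold=5):
    """Names of products at or below the alert threshold."""
    rows = conn.execute(
        "SELECT name FROM products WHERE quantity <= ? ORDER BY name",
        (threshold,),
    )
    return [name for (name,) in rows]
```

Wrapping the stock update and the sale record in one transaction keeps the two tables consistent, and the `CHECK (quantity >= 0)` constraint rejects a sale that would oversell.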

Sample API Endpoints (Node.js Example)

GET /products – List all products

POST /products – Add a new product

PUT /products/:id – Update product details

DELETE /products/:id – Remove a product

GET /inventory/report – Generate inventory report
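The routing behind these endpoints can be sketched without committing to a framework. The example below is in Python rather than Node.js, keeps products in memory, and uses a hypothetical `InventoryAPI` class whose `handle` method a real web framework would call; the status codes and the report fields are assumptions.

```python
import json
import re


class InventoryAPI:
    """In-memory stand-in for the product endpoints (hypothetical helper)."""

    def __init__(self):
        self.products = {}
        self.next_id = 1

    def handle(self, method, path, body=None):
        """Return (status_code, json_text) for one request."""
        if (method, path) == ("GET", "/inventory/report"):
            value = sum(p["quantity"] * p["price"] for p in self.products.values())
            return 200, json.dumps({"items": len(self.products), "stock_value": value})
        match = re.fullmatch(r"/products(?:/(\d+))?", path)
        if match is None:
            return 404, json.dumps({"error": "not found"})
        pid = int(match.group(1)) if match.group(1) else None
        if pid is None:
            if method == "GET":    # GET /products
                return 200, json.dumps(list(self.products.values()))
            if method == "POST":   # POST /products
                product = {**body, "id": self.next_id}
                self.products[self.next_id] = product
                self.next_id += 1
                return 201, json.dumps(product)
        elif pid in self.products:
            if method == "PUT":    # PUT /products/:id (the id itself is immutable)
                changes = {k: v for k, v in body.items() if k != "id"}
                self.products[pid].update(changes)
                return 200, json.dumps(self.products[pid])
            if method == "DELETE":  # DELETE /products/:id
                del self.products[pid]
                return 204, ""
        else:
            return 404, json.dumps({"error": "no such product"})
        return 405, json.dumps({"error": "method not allowed"})
```

Keeping the handler a plain function of method, path, and body makes it easy to unit test before wiring it into Express, Django, or any other framework.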

Frontend Functionality Tips

Use modals for adding/editing items

Display stock levels using color indicators (e.g., red for low stock)

Enable filtering/searching by product category or supplier

Use charts for visual stock and sales analytics

Bonus Features to Consider

Barcode Scanning: Integrate barcode scanning for quick item lookup

Role-Based Access: Allow different permissions for admin, staff, and viewer

Mobile Access: Build a mobile-responsive UI or companion app

Data Export: Export inventory reports to Excel/PDF

Conclusion

Building an inventory management system can significantly benefit any business that handles products or stock. By designing a system with clean UI, efficient backend logic, and accurate data handling, you can help companies stay organized and save time. Start simple, scale gradually, and always prioritize usability and security in your system design.


Top 15 Data Collection Tools in 2025: Features, Benefits

In the data-driven world of 2025, the ability to collect high-quality data efficiently is paramount. Whether you're a seasoned data scientist, a marketing guru, or a business analyst, having the right data collection tools in your arsenal is crucial for extracting meaningful insights and making informed decisions. This blog will explore 15 of the best data collection tools you should be paying attention to this year, highlighting their key features and benefits.

Why the Right Data Collection Tool Matters in 2025:

The landscape of data collection has evolved significantly. We're no longer just talking about surveys. Today's tools need to handle diverse data types, integrate seamlessly with various platforms, automate processes, and ensure data quality and compliance. The right tool can save you time, improve accuracy, and unlock richer insights from your data.

Top 15 Data Collection Tools to Watch in 2025:

Apify: A web scraping and automation platform that allows you to extract data from any website. Features: Scalable scraping, API access, workflow automation. Benefits: Access to vast amounts of web data, streamlined data extraction.

ParseHub: A user-friendly web scraping tool with a visual interface. Features: Easy point-and-click interface, IP rotation, cloud-based scraping. Benefits: No coding required, efficient for non-technical users.

SurveyMonkey Enterprise: A robust survey platform for large organizations. Features: Advanced survey logic, branding options, data analysis tools, integrations. Benefits: Scalable for complex surveys, professional branding.

Qualtrics: A comprehensive survey and experience management platform. Features: Advanced survey design, real-time reporting, AI-powered insights. Benefits: Powerful analytics, holistic view of customer experience.

Typeform: Known for its engaging and conversational survey format. Features: Beautiful interface, interactive questions, integrations. Benefits: Higher response rates, improved user experience.

Jotform: An online form builder with a wide range of templates and integrations. Features: Customizable forms, payment integrations, conditional logic. Benefits: Versatile for various data collection needs.

Google Forms: A free and easy-to-use survey tool. Features: Simple interface, real-time responses, integrations with Google Sheets. Benefits: Accessible, collaborative, and cost-effective.

Alchemer (formerly SurveyGizmo): A flexible survey platform for complex research projects. Features: Advanced question types, branching logic, custom reporting. Benefits: Ideal for in-depth research and analysis.

Formstack: A secure online form builder with a focus on compliance. Features: HIPAA compliance, secure data storage, integrations. Benefits: Suitable for regulated industries.

MongoDB Atlas Charts: A data visualization tool for data stored in MongoDB Atlas; it sits downstream of collection rather than gathering data itself. Features: Real-time data updates, interactive charts, MongoDB integration. Benefits: Seamless for MongoDB users, visual data exploration.

Amazon Kinesis Data Streams: A scalable and durable real-time data streaming service. Features: High throughput, real-time processing, integration with AWS services. Benefits: Ideal for collecting and processing streaming data.

Apache Kafka: A distributed streaming platform for building real-time data pipelines. Features: High scalability, fault tolerance, real-time data processing. Benefits: Robust for large-scale streaming data.

Segment: A customer data platform that collects and unifies data from various sources. Features: Data integration, identity resolution, data governance. Benefits: Holistic view of customer data, improved data quality.

Mixpanel: A product analytics platform that tracks user interactions within applications. Features: Event tracking, user segmentation, funnel analysis. Benefits: Deep insights into user behavior within digital products.

Amplitude: A product intelligence platform focused on understanding user engagement and retention. Features: Behavioral analytics, cohort analysis, journey mapping. Benefits: Actionable insights for product optimization.

Choosing the Right Tool for Your Needs:

The best data collection tool for you will depend on the type of data you need to collect, the scale of your operations, your technical expertise, and your budget. Consider factors like:

Data Type: Surveys, web data, streaming data, product usage data, etc.

Scalability: Can the tool handle your data volume?

Ease of Use: Is the tool user-friendly for your team?

Integrations: Does it integrate with your existing systems?

Automation: Can it automate data collection processes?

Data Quality Features: Does it offer features for data cleaning and validation?

Compliance: Does it meet relevant data privacy regulations?

Elevate Your Data Skills with Xaltius Academy's Data Science and AI Program:

Mastering data collection is a crucial first step in any data science project. Xaltius Academy's Data Science and AI Program equips you with the fundamental knowledge and practical skills to effectively utilize these tools and extract valuable insights from your data.

Key benefits of the program:

Comprehensive Data Handling: Learn to collect, clean, and prepare data from various sources.

Hands-on Experience: Gain practical experience using industry-leading data collection tools.

Expert Instructors: Learn from experienced data scientists who understand the nuances of data acquisition.

Industry-Relevant Curriculum: Stay up-to-date with the latest trends and technologies in data collection.

By exploring these top data collection tools and investing in your data science skills, you can unlock the power of data and drive meaningful results in 2025 and beyond.


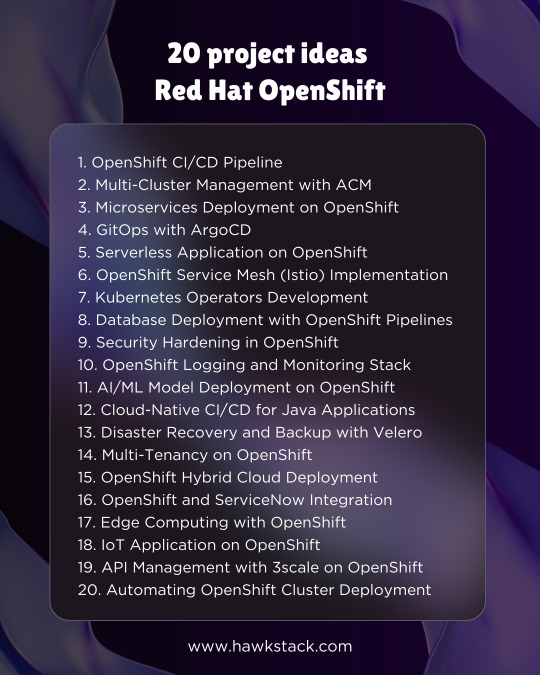

20 project ideas for Red Hat OpenShift

1. OpenShift CI/CD Pipeline

Set up a Jenkins or Tekton pipeline on OpenShift to automate the build, test, and deployment process.

2. Multi-Cluster Management with ACM

Use Red Hat Advanced Cluster Management (ACM) to manage multiple OpenShift clusters across cloud and on-premise environments.

3. Microservices Deployment on OpenShift

Deploy a microservices-based application (e.g., e-commerce or banking) using OpenShift, Istio, and distributed tracing.

4. GitOps with ArgoCD

Implement a GitOps workflow for OpenShift applications using ArgoCD, ensuring declarative infrastructure management.

5. Serverless Application on OpenShift

Develop a serverless function using OpenShift Serverless (Knative) for event-driven architecture.

6. OpenShift Service Mesh (Istio) Implementation

Deploy Istio-based service mesh to manage inter-service communication, security, and observability.

7. Kubernetes Operators Development

Build and deploy a custom Kubernetes Operator using the Operator SDK for automating complex application deployments.

8. Database Deployment with OpenShift Pipelines

Automate the deployment of databases (PostgreSQL, MySQL, MongoDB) with OpenShift Pipelines and Helm charts.

9. Security Hardening in OpenShift

Implement OpenShift compliance and security best practices, including Security Context Constraints (SCCs), Pod Security Admission, RBAC, and image scanning.

10. OpenShift Logging and Monitoring Stack

Set up EFK (Elasticsearch, Fluentd, Kibana) or Loki for centralized logging and use Prometheus-Grafana for monitoring.

11. AI/ML Model Deployment on OpenShift

Deploy an AI/ML model using Red Hat OpenShift AI (built on the upstream Open Data Hub project) for real-time inference with TensorFlow or PyTorch.

12. Cloud-Native CI/CD for Java Applications

Deploy a Spring Boot or Quarkus application on OpenShift with automated CI/CD using Tekton or Jenkins.

13. Disaster Recovery and Backup with Velero

Implement backup and restore strategies using Velero for OpenShift applications running on different cloud providers.

14. Multi-Tenancy on OpenShift

Configure OpenShift multi-tenancy with RBAC, namespaces, and resource quotas for multiple teams.

15. OpenShift Hybrid Cloud Deployment

Deploy an application across on-prem OpenShift and cloud-based OpenShift (AWS, Azure, GCP) using OpenShift Virtualization.

16. OpenShift and ServiceNow Integration

Automate IT operations by integrating OpenShift with ServiceNow for incident management and self-service automation.

17. Edge Computing with OpenShift

Deploy OpenShift at the edge to run lightweight workloads on remote locations, using Single Node OpenShift (SNO).

18. IoT Application on OpenShift

Build an IoT platform using Kafka on OpenShift for real-time data ingestion and processing.

19. API Management with 3scale on OpenShift

Deploy Red Hat 3scale API Management to control, secure, and analyze APIs on OpenShift.

20. Automating OpenShift Cluster Deployment

Use Ansible and Terraform to automate the deployment of OpenShift clusters and configure infrastructure as code (IaC).

For more details www.hawkstack.com

#OpenShift #Kubernetes #DevOps #CloudNative #RedHat #GitOps #Microservices #CICD #Containers #HybridCloud #Automation


Road Map for Data Science: A Complete Guide to Becoming a Data Scientist

In the digital-first world of today, data science is one of the most in-demand career paths. Every industry, from healthcare to finance and e-commerce, relies on data-driven decision-making, which makes data science a rewarding and future-proof career.

But where should you begin? Which tools do you need to know? What kinds of careers exist? This guide acts as a roadmap, showing aspiring data science professionals the path toward a sustainable and thriving career.

Step 1: Knowing What Data Science Is

Data science is essentially the art of extracting insights from data. It combines statistics, programming, and domain expertise to analyze large volumes of information and drive business decisions.

Key Areas in Data Science

To become a good data scientist, one has to be skilled in:

✔ Data Collection & Cleaning: Gathering raw data and making it usable.

✔ Exploratory Data Analysis (EDA): Understanding patterns and trends.

✔ Machine Learning & AI: Building predictive models for automation.

✔ Big Data Technologies: Handling large-scale datasets efficiently.

✔ Data Visualization: Communicating insights effectively using charts and graphs.

As data-driven decision-making becomes more prevalent, demand for people proficient in these areas is growing across all industries.

Step 2: Tools and Technologies to Master

Real-world analytics requires mastering the right tools. Some of the most popular tools used in the industry are:

Programming Languages: Python (most in-demand), R, SQL

Data Manipulation: Pandas, NumPy

Machine Learning Frameworks: Scikit-learn, TensorFlow, PyTorch

Big Data & Cloud Platforms: Apache Spark, AWS, Google Cloud, Azure

Data Visualization: Matplotlib, Seaborn, Tableau, Power BI

Databases: SQL databases (such as PostgreSQL or MySQL), MongoDB

A data scientist doesn't need to learn all of these at once. Python, SQL, and a machine learning framework make a good starting point.

Step 3: Establishing a Mathematical and Statistical Backbone

Mathematics and statistics are the backbone of data science, so it is worth mastering the following topics:

✅ Linear Algebra: Matrices and vectors underpin most machine learning models.

✅ Probability & Statistics: Used for prediction and for understanding data distributions.

✅ Calculus: Used in the optimization techniques behind machine learning algorithms.

While coding is important, mathematical intuition does help a data scientist build better models and make quality decisions.
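To see how calculus shows up in practice, here is a minimal sketch of gradient descent fitting a line by minimizing mean squared error. The learning rate, step count, and function name are assumptions chosen so that this small example converges; they are not universal defaults.

```python
def gradient_descent(xs, ys, lr=0.05, steps=2000):
    """Fit y = w * x + b by gradient descent on mean squared error."""
    w = b = 0.0
    n = len(xs)
    for _ in range(steps):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Partial derivatives of MSE = (1/n) * sum(error^2) with respect to w and b.
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        # Step against the gradient to reduce the error.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b
```

Calling `gradient_descent([0, 1, 2, 3, 4], [1, 3, 5, 7, 9])` recovers values very close to `w = 2` and `b = 1`, the line that generated the data. The same idea, with many more parameters, is how most machine learning models are trained.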

Step 4: Acquiring Practical Experience via Projects

Theoretical knowledge is not sufficient; real-world projects are needed to close the gap between learning and application.

Here's how one can acquire practical experience:

Work on datasets from Kaggle, the UCI Machine Learning Repository, or real-world business cases.

Build machine learning models to predict trends (such as stock prices or customer churn).

Build dashboards using Tableau or Power BI.

Take part in hackathons and competitions to put your skills to the test.

A robust portfolio of projects with real-world applications is one of the greatest ways to communicate expertise to prospective employers.

Step 5: Career Opportunities in Data Science

Data scientists are in high demand because businesses see the value of data-driven strategy. Some career paths include the following:

1. Data Scientist

The most common role; works on machine learning models and data insights.

2. Data Analyst

Cleans and visualizes data and builds reports using SQL, Excel, and BI tools.

3. Machine Learning Engineer

Develops and deploys AI-based applications. The role requires strong knowledge of deep learning and cloud platforms.

4. Business Intelligence Analyst

Interprets company data and develops dashboards that guide executive decisions.

5. AI Research Scientist

Works on emerging AI innovations and model development.

More and more companies are now using AI for automation, so a career in data science has never looked better.

Structured data analytics courses, for beginners as well as professionals looking to upskill, can provide guided learning and industry-specific training.

India and the Global Boom in Data Science

India is at the threshold of a massive shift in data science and AI. With initiatives such as Digital India, AI for All, and Smart Cities, the country is rapidly adopting big data analytics and AI across industries.

Several key factors are shaping the data science revolution in India:

✔ Growing IT Ecosystem: TCS, Infosys, Wipro, and Accenture are already investing in AI solutions.

✔ Booming Startups: India has become a hub for AI startups in fintech, e-commerce, and healthcare.

✔ Government Support: The Indian government is actively investing in AI research and innovation.

✔ Skilled Workforce: India produces thousands of engineers and data scientists every year.

As AI-driven solutions grow, the most in-demand skills today are Python, SQL, Pandas, and machine learning.

Step 6: Keeping Up and Networking

Data science is a constantly evolving field. To keep up, professionals must:

✅ Follow AI research papers and case studies.

✅ Join data science communities and forums like Kaggle, GitHub, and Stack Overflow.

✅ Attend conferences and webinars.

✅ Network with industry professionals through LinkedIn and local meetups.

Building connections and staying up-to-date with new tools and trends ensures long-term success in the field.

Conclusion

The road to becoming a data scientist requires dedication, continuous learning, and hands-on experience. By mastering programming, machine learning, statistics, and business intelligence tools, one can build a rewarding career in this high-growth field.

As India continues its AI-driven transformation, the need for skilled professionals will only grow. Whether you are a fresher or an experienced professional, the scope of data science is vast, offering many avenues to innovate, grow, and make a difference.

Start now and be a part of the future of AI and analytics!


How to Create a Binance Clone: A Comprehensive Guide for Developers

Binance is one of the world's leading cryptocurrency exchanges, providing a platform to buy, sell, and trade a wide variety of cryptocurrencies. Creating a Binance clone is an ambitious project that involves understanding both the core features of the exchange and the complex technologies required to manage secure financial transactions.

This guide will cover the features, technology stack, and step-by-step process required to develop a Binance clone.

1. Understanding the main features of Binance

Before diving into development, it is important to identify the key features of Binance that make it popular:

Spot Trading: The main feature of Binance, where users can buy and sell cryptocurrencies.

Futures Trading: Allows users to trade contracts based on the price of a cryptocurrency rather than the asset itself.

Staking: Users can lock their cryptocurrencies in exchange for rewards.

Peer-to-Peer (P2P) Trading: Binance allows users to buy and sell directly with each other.

Wallet Management: A secure and easy way for users to store and withdraw cryptocurrencies.

Security Features: Includes two-factor authentication (2FA), cold storage, and encryption to ensure secure transactions.

Market Data: Real-time updates on cryptocurrency prices, trading volumes, and other market analysis.

API for Trading: For advanced users to automate their trading strategies and integrate with third-party platforms.

2. Technology Stack to Build a Binance Clone

Building a Binance clone requires a strong technology stack, as the platform needs to handle millions of transactions, provide real-time market data, and ensure high-level security. Here is a recommended stack:

Frontend: React or Angular for building the user interface, ensuring it is interactive and responsive.

Backend: Node.js with Express to handle user authentication, transaction management, and API requests. Alternatively, Python can be used with Django or Flask for better management of numerical data.

Database: PostgreSQL or MySQL for relational data storage (user data, transaction logs, etc.). For cryptocurrency data, consider using a NoSQL database like MongoDB.

Blockchain Integration: Integrate with blockchain networks, like Bitcoin, Ethereum, and others, to handle cryptocurrency transactions.

WebSocket: To provide real-time updates on cryptocurrency prices and trades.

Cloud Storage: AWS, Google Cloud, or Microsoft Azure for secure file storage, especially for KYC documents or transaction logs.

Payment Gateway: Integrate with payment gateways like Stripe or PayPal to allow fiat currency deposits and withdrawals.

Security: SSL/TLS encryption, 2FA (Google Authenticator or SMS-based), IP whitelisting, and cold wallets for cryptocurrency storage.

Containerization and Scaling: Use Docker for containerization, Kubernetes for orchestration, and microservices to scale and handle traffic spikes.

Crypto API: For real-time market data, price alerts, and order book management. APIs like CoinGecko or CoinMarketCap can be integrated.

3. Designing the User Interface (UI)

The UI of a cryptocurrency exchange should be user-friendly, easy to navigate, and visually appealing. Here are the key components to focus on:

Dashboard: A simple yet informative dashboard where users can view their portfolio, balance, recent transactions and price trends.

Trading screen: An advanced trading view with options for spot trading, futures trading, limit orders, market orders and more. Include charts, graphs and candlestick patterns for real-time tracking.

Account settings: Allow users to manage their personal information, KYC documents, two-factor authentication, and security settings.

Deposit/Withdrawal Screen: An interface for users to deposit and withdraw both fiat and cryptocurrencies.

Transaction History: A comprehensive history page where users can track all their deposits, withdrawals, trades and account activities.

Order Book and Market Data: Display buy/sell orders, trading volume and price charts in real time.
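The candlestick charts mentioned for the trading screen are built by grouping individual trades into fixed time buckets. Below is a minimal Python sketch of that aggregation. The `Candle` class, the `build_candles` function, and the `(timestamp, price, quantity)` trade tuple are illustrative assumptions, not part of any exchange API, and the trades are assumed to arrive sorted by time.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass
class Candle:
    start: int        # bucket start time in Unix seconds
    open: Decimal
    high: Decimal
    low: Decimal
    close: Decimal
    volume: Decimal


def build_candles(trades, interval_seconds=60):
    """Group (timestamp, price, quantity) trades into fixed-width OHLCV candles.

    Hypothetical helper: assumes trades are already sorted by timestamp.
    """
    candles = []
    for timestamp, price, quantity in trades:
        bucket = timestamp - (timestamp % interval_seconds)
        if candles and candles[-1].start == bucket:
            candle = candles[-1]
            candle.high = max(candle.high, price)
            candle.low = min(candle.low, price)
            candle.close = price          # the latest trade sets the close
            candle.volume += quantity
        else:
            # First trade in a new bucket sets open, high, low and close.
            candles.append(Candle(bucket, price, price, price, price, quantity))
    return candles


sample_trades = [
    (120, Decimal("100"), Decimal("1")),
    (130, Decimal("105"), Decimal("2")),
    (179, Decimal("98"), Decimal("1")),
    (180, Decimal("99"), Decimal("3")),
    (200, Decimal("101"), Decimal("1")),
]
for candle in build_candles(sample_trades):
    print(candle)
```

In a live platform the same update would run on every trade pushed over the real-time feed, so that only the newest candle changes on the chart.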

4. Key Features for Implementation

The key features that need to be implemented in your Binance clone include:

User Registration and Authentication: Users should be able to sign up via email or social login (Google, Facebook). Implement two-factor authentication (2FA) for added security.

KYC (Know Your Customer): Verify the identity of users before allowing them to trade or withdraw large amounts. You can integrate KYC services using third-party providers like Jumio or Onfido.

Spot and futures trading: Implement spot and futures trading functionality where users can buy, sell or trade cryptocurrencies.

Order Types: Include different order types such as market orders, limit orders and stop-limit orders for advanced trading features.

Real-time data feed: Use WebSockets to provide real-time market data including price updates, order book and trade history.

Wallet management: Create secure wallets for users to store their cryptocurrencies. You can use hot wallets for frequent transactions and cold wallets for long-term storage.

Deposit and withdrawal system: Allow users to deposit and withdraw both fiat and cryptocurrencies. Make sure you integrate with a trusted third-party payment processor for fiat withdrawals.

Security: Implement multi-layered security measures like encryption, IP whitelisting, and DDoS protection. Cold storage for large amounts of cryptocurrencies and real-time fraud monitoring should also be prioritized.
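To make the order types above more concrete, here is a minimal Python sketch of how limit orders could be matched with price-time priority, a common rule in which the best price trades first and earlier orders win ties. The `OrderBook` class and its `submit` method are hypothetical names for illustration. This is not how Binance implements its engine, and a production matcher would also need market and stop-limit orders, cancellation, persistence, and balance checks.

```python
import heapq
import itertools
from decimal import Decimal


class OrderBook:
    """Toy limit order book with price-time priority (illustration only)."""

    def __init__(self):
        self._bids = []  # heap keyed on negated price, so the highest bid is first
        self._asks = []  # heap keyed on price, so the lowest ask is first
        self._arrival = itertools.count()  # earlier orders win ties at the same price

    def submit(self, side, price, quantity):
        """Match an incoming limit order and return its fills as (price, quantity)."""
        if side not in ("buy", "sell"):
            raise ValueError("side must be 'buy' or 'sell'")
        if side == "buy":
            own, opposite = self._bids, self._asks
        else:
            own, opposite = self._asks, self._bids
        fills = []
        while quantity > 0 and opposite:
            key, arrival, resting_price, resting_qty = opposite[0]
            if side == "buy":
                crosses = resting_price <= price
            else:
                crosses = resting_price >= price
            if not crosses:
                break
            traded = min(quantity, resting_qty)
            fills.append((resting_price, traded))  # trade at the resting order's price
            quantity -= traded
            if traded == resting_qty:
                heapq.heappop(opposite)
            else:
                # Partial fill: keep the same key and arrival number so the
                # resting order does not lose its place in the queue.
                heapq.heapreplace(
                    opposite, (key, arrival, resting_price, resting_qty - traded)
                )
        if quantity > 0:
            # Whatever is left rests on the book until a matching order arrives.
            key = -price if side == "buy" else price
            heapq.heappush(own, (key, next(self._arrival), price, quantity))
        return fills


book = OrderBook()
book.submit("sell", Decimal("101"), Decimal("1"))
book.submit("sell", Decimal("100"), Decimal("2"))
print(book.submit("buy", Decimal("101"), Decimal("2.5")))
```

In the example, the buy order first takes the cheaper ask at 100 and then part of the ask at 101. Prices and quantities use `Decimal` rather than floats to avoid rounding drift in balances.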

5. Blockchain Integration

One of the most important aspects of building a Binance clone is integrating the right blockchain network for transaction processing. You will need to implement the following:

Blockchain nodes: Set up nodes for popular cryptocurrencies like Bitcoin, Ethereum, Litecoin, etc. to process transactions and verify blocks.

Smart contracts: Use smart contracts for secure transactions, especially for tokenized assets or ICOs (Initial Coin Offerings).

Cryptocurrency wallet: Develop wallet solutions supporting major cryptocurrencies. Make sure wallets are secure and easy to use for transactions.

6. Security Measures

Security is paramount when building a cryptocurrency exchange, and it is important to ensure that your platform is protected from potential threats. Here are the key security measures to implement:

SSL/TLS encryption: Encrypt all data exchanged between the server and the user's device.

Two-Factor Authentication (2FA): Implement Google Authenticator or SMS-based 2FA for user login and transaction verification.

Cold storage: Store most user funds in cold storage wallets to reduce the risk of hacks.

Anti-phishing: Implement anti-phishing features to protect users from fraudulent websites and attacks.

Audit trails: Keep detailed logs of all transactions and account activities for transparency and security audits.
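Authenticator-app 2FA of the kind listed above is typically based on time-based one-time passwords (TOTP, RFC 6238, built on HOTP from RFC 4226). The Python sketch below shows the core calculation using only the standard library. The function names and the one-step drift window are illustrative choices, and a real deployment would add per-user secret storage, rate limiting, and replay protection.

```python
import hashlib
import hmac
import struct
import time


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password as described in RFC 4226."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation: low nibble picks the offset
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at=None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password: HOTP over the current 30-second step."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify_totp(secret: bytes, submitted: str, at=None, step: int = 30, window: int = 1) -> bool:
    """Accept codes from the current step and `window` steps either side.

    The tolerance window is an assumption here; it allows for small clock
    differences between the server and the user's phone.
    """
    now = time.time() if at is None else at
    counter = int(now // step)
    for drift in range(-window, window + 1):
        candidate = counter + drift
        if candidate < 0:
            continue
        expected = hotp(secret, candidate).encode()
        # Constant-time comparison avoids leaking how many digits matched.
        if hmac.compare_digest(expected, submitted.encode()):
            return True
    return False


# Shared secret taken from the RFC test vectors, for demonstration only.
demo_secret = b"12345678901234567890"
print(totp(demo_secret, at=59))
```

The secret is shared with the user's authenticator app once, usually through a QR code, and never sent again; only the short-lived codes travel over the network.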

7. Monetization Strategies for Your Binance Clone

There are several ways to earn revenue from a Binance clone:

Trading fees: Charge a small fee (either fixed or percentage-based) for each trade made on the platform.

Withdrawal fee: Charge a fee for cryptocurrency withdrawals or fiat withdrawals to bank accounts.

Margin trading: Provide margin trading services for advanced users and charge interest on borrowed funds.

Token listings: Charge projects a fee for listing their tokens on your platform.

Affiliate Program: Provide referral links for users to invite others to join the platform, thereby earning a percentage of their trading fees.
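As a rough illustration of the percentage-based trading fee and the affiliate share described above, here is a short Python sketch. The 0.1% fee rate and the 20% referral share are placeholder values chosen for the example, not figures from Binance or from this guide, and the function names are hypothetical.

```python
from decimal import Decimal, ROUND_HALF_UP

EIGHT_PLACES = Decimal("0.00000001")  # round to 8 decimal places


def trading_fee(price, quantity, fee_rate=Decimal("0.001")):
    """Percentage-based fee on the notional value of a trade (rate is assumed)."""
    notional = price * quantity
    return (notional * fee_rate).quantize(EIGHT_PLACES, rounding=ROUND_HALF_UP)


def split_fee(fee, referral_share=Decimal("0.20")):
    """Split a fee between the platform and the referring affiliate (share is assumed)."""
    referral = (fee * referral_share).quantize(EIGHT_PLACES, rounding=ROUND_HALF_UP)
    return fee - referral, referral


fee = trading_fee(Decimal("30000"), Decimal("0.5"))
platform_cut, affiliate_cut = split_fee(fee)
print(fee, platform_cut, affiliate_cut)
```

Using `Decimal` with an explicit rounding rule keeps the platform and affiliate amounts adding up exactly to the fee that was charged.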

8. Challenges in Creating a Binance Clone

Creating a Binance clone is not without challenges:

Security: Handling the security of users' funds and data is critical, as crypto exchanges are prime targets for cyber attacks.

Regulations: Depending on your target market, you will need to comply with various financial and crypto regulations (e.g., AML/KYC regulations).

Scalability: The platform must be able to handle millions of transactions, especially during market surges.

Real-time data: Handling real-time market data and ensuring low latency is crucial for a trading platform.

Conclusion

Creating a Binance clone is a complex but rewarding project that requires advanced technical skills in blockchain integration, secure payment processing, and real-time data management. By focusing on key features, choosing the right tech stack, and implementing strong security measures, you can build a secure and feature-rich cryptocurrency exchange.

If you are involved in web development with WordPress and want to add a crypto payment system to your site, or if you want to integrate cryptocurrency features, feel free to get in touch! I will be happy to guide you in this.


Text

Astrology App Development Costs Revealed: Unlocking the Secrets of Astrotalk

The astrology app industry is booming, with users seeking insights into their lives through horoscopes and astrological advice. This surge in demand has led to a growing interest in developing astrology apps. Understanding the costs involved can help potential developers navigate this exciting market effectively. When talking about astrology apps, Astrotalk is the first name that comes to mind. The platform’s forecasts, in-depth analysis, and reliable services have built user confidence and a dedicated community.

In this blog, we look at developing an app like Astrotalk, including its features and how much it costs to build.

The Booming Astrology App Market: Statistics and Growth Projections

The popularity of astrology apps continues to rise. As of 2023, the global astrology app market is valued at over $2 billion, with projections indicating it could reach $5 billion by 2025. This growth is fueled by the increasing adoption of mobile technology and a growing interest in personal well-being.

Why Develop an Astrology App? Market Opportunities and User Demands

Astrology apps attract diverse demographics. With an ever-increasing number of users seeking guidance from the stars, a well-designed astrology app meets a clear market necessity. Features such as daily forecasts, compatibility reports, and personalized readings are in high demand. Developers can tap into a dedicated audience eager for insightful content.

Navigating the Development Landscape: A Preview of Cost Factors

When embarking on the journey of astrology app development, various factors affect the overall cost. Understanding these can help in planning your budget effectively:

Complexity of Features: The more advanced the features, the higher the costs.

Design Quality: Investing in high-quality design impacts user retention.

Development Approach: Choosing between native, hybrid, or cross-platform affects costs significantly.

Phase 1: Planning and Design – Setting a Budget Foundation

Defining App Features and Functionality: Core Astrology Features vs. Premium Options

Start by identifying essential features users expect:

Daily horoscopes

Birth chart calculations

Compatibility insights

Personalized readings

Premium features might include:

In-app consultations with astrologers

Exclusive content and tools

Advanced analytical features
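To give a sense of what the simplest astrology feature involves, here is a small Python sketch that maps a birth date to a sun sign using commonly cited tropical zodiac date ranges. The `sun_sign` function is a hypothetical helper; sign boundaries can shift by a day depending on the year and the source, and a full birth chart calculation additionally needs the birth time, location, and planetary ephemeris data, which is where much of the development effort goes.

```python
from datetime import date

# (month, day) on which each sign begins, using commonly cited tropical dates.
# These boundaries are approximate and vary slightly between sources.
SIGN_STARTS = [
    ((1, 20), "Aquarius"),
    ((2, 19), "Pisces"),
    ((3, 21), "Aries"),
    ((4, 20), "Taurus"),
    ((5, 21), "Gemini"),
    ((6, 21), "Cancer"),
    ((7, 23), "Leo"),
    ((8, 23), "Virgo"),
    ((9, 23), "Libra"),
    ((10, 23), "Scorpio"),
    ((11, 22), "Sagittarius"),
    ((12, 22), "Capricorn"),
]


def sun_sign(birth_date: date) -> str:
    """Return the sun sign for a birth date (simplified lookup, not a chart)."""
    key = (birth_date.month, birth_date.day)
    sign = "Capricorn"  # covers January 1-19, before Aquarius begins
    for start, name in SIGN_STARTS:
        if key >= start:
            sign = name  # keep the latest sign whose start date has passed
    return sign


print(sun_sign(date(1990, 3, 21)))
```

A daily horoscope feature can then be keyed on this sign, while compatibility insights and personalized readings build on the fuller chart data.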

UI/UX Design and Prototyping: Investing in User Experience

A user-friendly design is crucial for app success. Invest in creating a simple, engaging interface. Prototyping helps streamline the design process and allows for user feedback before full development.

Market Research and Competitive Analysis: Understanding Your Target Audience

Conduct thorough market research to identify trends and user preferences. Analyzing competitors can offer insights into what works and what doesn't, helping you carve out a unique space in the market.

Phase 2: Development – The Core of Your Investment

Choosing a Development Approach: Native, Hybrid, or Cross-Platform

Your choice of development approach impacts both cost and performance. Native apps deliver better performance but require separate development for iOS and Android, leading to higher costs. Hybrid apps are more cost-effective and allow for a single codebase but may sacrifice some performance. Select a development approach that aligns with your target audience:

Native apps offer the best performance but come with higher costs.

Hybrid apps balance functionality and cost.

Cross-platform apps are budget-friendly but may lack some features.

Technology Stack Selection: Languages, Frameworks, and Databases

Choose the right technologies to support your app:

Front-end: React Native, Flutter, or Swift

Back-end: Node.js, Ruby on Rails, or Python

Database: Firebase, MySQL, or MongoDB

Backend Development and Server Infrastructure: Scalability and Security

A robust backend is essential. Choose secure hosting and data management options to ensure user data safety. Costs vary depending on the choice of servers and services. Cloud solutions like AWS or Google Cloud can offer scalable options that grow with your user base.

Phase 3: Testing and Quality Assurance – Ensuring a Smooth Launch

Types of Testing: Unit, Integration, and User Acceptance Testing

Testing is critical to delivering a quality app. Catching issues early prevents poor user experiences and negative reviews. Use several types of testing to cover the app from different angles:

Unit testing focuses on individual components.

Integration testing checks how components work together.

User acceptance testing validates the app with real users.

Bug Fixing and Iteration: Addressing User Feedback and Performance Issues

Testing usually surfaces bugs, so be prepared to fix them promptly. Iterating based on user feedback improves the app experience and builds loyalty.

Quality Assurance Costs: Internal vs. External Testing Teams

Deciding between internal or external testing teams impacts costs. Internal teams may save money but require training and resources. External teams bring expertise but come at a higher initial cost.

Phase 4: Launch and Post-Launch Activities – Sustaining Growth

App Store Optimization (ASO): Maximizing Visibility and Downloads

Optimize your app for search in app stores. Use keywords effectively in the app title and description. Good ASO can significantly boost downloads.

Marketing and Promotion: Reaching Your Target Audience

Marketing strategies are essential for driving downloads. Social media, influencer partnerships, and targeted advertising can help reach even more users. Offering referral bonuses may also encourage word-of-mouth promotion.

Ongoing Maintenance and Updates: Adapting to Evolving User Needs

Regular updates keep your app relevant and your users engaged and loyal. Pay attention to user feedback, emerging trends, and technology advances, and adapt your app accordingly.

Phase 5: Monetization Strategies – Generating Revenue

In-App Purchases (IAP): Offering Premium Features and Content

In-app purchases allow users to access premium features. This can include exclusive content, personalized readings, or advanced tools.

Subscription Models: Recurring Revenue Streams

Subscription models provide consistent revenue. Offer monthly or yearly plans with different tiers to cater to various user preferences.

Advertising and Partnerships: Exploring Additional Revenue Sources

Partnering with brands or including ads can generate extra income. Ensure these align with your user base for the best results.

Conclusion: Making Astrotalk a Reality – Key Takeaways and Actionable Steps

The journey to develop a successful astrology app like Astrotalk involves careful planning, strategic investment, and continuous learning.

Next Steps: Planning Your Astrology App Development Journey

Create a detailed plan that outlines your app’s unique features, conduct market research, and determine your budget. Engage with development teams to start building your vision.

Resources and Tools: Useful Links and Further Information

Explore online resources for insights into app development. Websites like Stack Overflow, GitHub, and Medium provide valuable information to support your project.

Creating an astrology app can be rewarding. With careful planning and strategic investments, you can tap into the thriving astrology market and cater to a growing audience eager for insight from the stars.

For more info visit us: https://deorwine.com/blog/astrotalk-like-astrology-app-development-cost-features/

Contact Us:

Website: https://deorwine.com

Email id: [email protected]

Skype: deorwineinfotech

For Any Query Call Us at: +91-9116115717

#Astrology app development#Astrology app development cost#Astrology app feature#Astrology app development company#mobile app development company


Text

Fitness App Development 2025: A Step-by-Step Guide

Fitness apps are gaining popularity as more and more people become aware of their health and well-being. With a huge increase in the number of health-conscious people, this is an ideal time for health and fitness businesses to build fitness apps. From customized diet charts to workout schedules, modern fitness apps offer a plethora of features to help users take control of their lifestyle.

A fitness app succeeds when it goes beyond the essential features. The idea is to create a custom fitness app that’s intuitive, easy to use, and aligned with user requirements. The core of a winning app lies in its clean interface, which allows users to navigate easily and move toward their goals without any help. The app should provide them with an actionable roadmap while also motivating them to stay consistent.

Fitness App Market: What’s the Future?

During the pandemic, people were forced to stay indoors. Gyms, fitness centers, and public parks were closed for workouts. As a result, the demand for reliable fitness apps and wearable devices like the Apple Watch increased. These apps provided people with workout videos, motivational tips, personalized diet plans, and a safe alternative to the crowded gyms they would otherwise visit.

Fast-forward to today, and the fitness app development business is driven by people’s positive attitude towards health and by advances in the IT sector. The fitness app market is growing so rapidly that it is expected to reach around $14.7 billion by 2026. With numbers like these, a fitness app is no longer a luxury but a necessity.

As these trends show no sign of slowing, modern fitness apps will soon become essential to people’s lives. Just like other everyday apps (calendar, messages, contacts, WhatsApp), they will come already integrated into mobile devices in the future.

How To Develop A Fitness App?

Creating a winning fitness app involves following some essential steps. Each step is critical to ensure your app is unique and meets the requirements of the user to perform well in today’s competitive market.

1) Understanding Your Target Audience– Before settling on the idea for your fitness app, it’s essential to understand who your target audience is. What are they looking for? What are their pain points? Identify your target audience and their fitness goals. Once you have, your goal is to design an app that solves their problem and stands out from the competition. You can focus on any one of these trending demands: nutrition and diet apps, activity tracker apps, mental wellness apps, and workout apps.

2) Conduct Market Research– After you have identified the target audience for your app, it’s time to review the market. Shortlist some winning fitness apps to see which features users appreciate and where these apps fall short. This will help you spot opportunities. For example, if existing apps lack personalized diet plans to go with the workouts, you can offer them and attract more users. AI integration is another trend that you can hop on. AI can automate things and offer smart suggestions to your users.

3) Selecting the Right Technology– Choose the right technology to build your fitness app. This step includes selecting the programming languages and software that best fit your app’s needs. For example, if you want your app to function seamlessly on various devices, select a tech stack that supports this requirement. For frontend development, you can opt for React Native or Flutter. For backend development, opt for Node.js or Django so that your app can handle heavy user traffic. Use APIs to connect your app with services like social media, fitness wearables, etc. For the database, you may try MongoDB, which efficiently stores and manages data.

4) Focus On User Experience– A positive user experience makes your app a winning one. Focus on creating a user-friendly design that is easy to navigate and fun to use. Ensure your app looks good and works seamlessly across smartphones and laptops. Furthermore, a simple design creates a clutter-free environment for the user and avoids confusion in locating features.

5) Build A Scalable Security System– Develop a scalable security system that can handle heavy user traffic and works well across devices. Ensure the system can scale as the app’s popularity grows. A sound system architecture keeps operation smooth as more users join, preventing crashes or faults.

6) Test and Improve Your App– The next step is to test your app to find any issues before launch. Collect feedback from test users to learn which features they appreciate and where there is room for improvement. Fix any issues you find. Regular updates based on feedback help maintain performance and keep the app relevant.

Trending Categories in Fitness Apps

1) Workout and Exercise Apps– These apps provide workout and exercise videos to users. Easy access to these videos helps users maintain their workout routines and supports personal fitness plans. These apps also provide community support to keep users moving and let them share their fitness journey with like-minded people. The well-known Nike Training Club app offers 100+ styles of workouts, including cardio, yoga, pilates, weight training, and Zumba.

2) Activity Tracking Apps– Activity tracking apps monitor your daily physical activities, such as the total steps you walk in a day, calories burnt, and stairs climbed. This gives you a detailed view of your daily fitness activities, along with suggestions to improve them. For instance, a very popular app in this category is MyFitnessPal, which can track users’ diets and exercises. It lets users log their meals and track daily calorie intake.

3) Nutrition and Diet Apps– This is another popular category of fitness apps that help users manage their eating habits. Expert diet recommendations help users plan meals, find recipes, and track nutrients and calories. “MyPlate Calorie Counter” is one such example that is worth the hype. Besides tracking your nutrition and regular diet, it offers recipe ideas and personalized meal plans. Plus, a community support feature provides insights, tips, and daily motivation.

4) Yoga and Meditation Apps– The craze for yoga and meditation is not limited to India; it extends to other countries such as the UK, France, and Germany. It will be a multi-million dollar industry very soon. Apps in this category help users relax and practice meditation for calmness and an uncluttered mind. Deep breathing exercises, mindfulness, and yoga poses are a few of the practices on offer. “Headspace” is one popular app that provides 1-2 minute videos to help with sleep, stress, and mindfulness. It has helped millions of users achieve a better lifestyle.

5) Outdoor Fitness Apps– Outdoor fitness apps include running and cycling apps, which are favorites of outdoor adventurers. Users can track their rides and runs and see how many calories they burn during an activity. These apps feature GPS routes, performance analysis, and route planning. “Strava” is one app popular amongst runners and cyclists. It shows performance metrics, and you can even connect with your social media friends to compete with them.
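For the GPS route tracking that outdoor fitness apps rely on, the distance of a run or ride can be estimated by summing the great-circle distance between consecutive GPS points. The Python sketch below uses the haversine formula for that. The function names are illustrative, the Earth is treated as a sphere with a mean radius of 6,371 km, and real apps typically also smooth noisy GPS samples before summing.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; a spherical approximation


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def route_distance_km(points):
    """Total length of a route given as a list of (lat, lon) tuples."""
    return sum(
        haversine_km(lat1, lon1, lat2, lon2)
        for (lat1, lon1), (lat2, lon2) in zip(points, points[1:])
    )


route = [(48.8566, 2.3522), (48.8606, 2.3376), (48.8738, 2.2950)]
print(round(route_distance_km(route), 2), "km")
```

Dividing the distance by the elapsed time between the first and last sample gives the average pace or speed shown in performance summaries.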

Must-Have Features to Include in Your Fitness Application

Onboarding– Instead of throwing long, boring questions at your users, use engaging, fun quizzes to make the onboarding process entertaining for them.

User profile– A detailed user profile where users can describe their workout goals and diet preferences is a good start for your app.

Video Libraries– Make a separate section for video tutorials and guides to give users clear direction on the exercises and how to do them correctly.

Goal Setting and Progress Tracking– Implement features to track user activities and allow users to set goals, log workouts, and feel motivated to move towards their fitness goals.

Getting Social– Encourage your community members to share their fitness achievements, participate in daily challenges, and share tips with everyone.

Push Notifications– Instead of sending your users general tips or promotions, give them personalized notifications based on their daily activities to keep them engaged.

Voice Recognition and Control– This is a more advanced feature that you can embed in your custom fitness app. It lets users communicate with the app during workouts without touching their mobile device. Users can give voice commands to the app, for example asking a question about a particular exercise and getting an answer in return.

Movement Analysis– This feature is supported by AI, which allows your app to analyze the user’s movements while performing an exercise, and provide them instant feedback to correct their posture. This feature works in real time. The app can identify deviations in the user’s position and guide them to correct the posture with audio prompts. Embedding this feature in your app requires help from experienced AI engineers, but it is worth the money and effort.
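The goal setting and progress tracking feature in the list above comes down to a little bookkeeping over logged workouts. Here is a minimal Python sketch, assuming workouts are logged as minutes per session and one date per workout day. The function names and the weekly-minutes goal are illustrative assumptions rather than a prescribed design.

```python
from datetime import date, timedelta


def goal_progress(logged_minutes, weekly_goal_minutes):
    """Fraction of the weekly goal completed, capped at 1.0."""
    if weekly_goal_minutes <= 0:
        raise ValueError("weekly_goal_minutes must be positive")
    return min(1.0, sum(logged_minutes) / weekly_goal_minutes)


def current_streak(workout_dates, today):
    """Number of consecutive days with a workout, counting back from today."""
    days = set(workout_dates)
    streak = 0
    day = today
    while day in days:
        streak += 1
        day -= timedelta(days=1)
    return streak


workouts = [date(2025, 1, 1), date(2025, 1, 3), date(2025, 1, 4), date(2025, 1, 5)]
print(current_streak(workouts, today=date(2025, 1, 5)))
print(goal_progress([30, 45, 20], weekly_goal_minutes=150))
```

Values like these can also drive the personalized push notifications mentioned above, for example a reminder when a streak is about to break.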

Conclusion

Brain Inventory, as a fitness Android app development company, has all the resources and staff you need to build a fitness app for your business. We create apps of any level of complexity and creativity. We have in-house engineers, UI/UX designers, project managers, QA experts, and fitness app developers to help build a winning app. Whether you need to fill gaps or hire a full team of experts, just contact us!


Text

Prefab Cloud Spanner And PostgreSQL: Flexible And Affordable

Prefab’s Cloud Spanner with PostgreSQL: Adaptable, dependable, and reasonably priced for any size

PostgreSQL is a fantastic OLTP database that can serve the same purposes as Redis for real-time access, MongoDB for schema flexibility, and Elastic for data that doesn’t cleanly fit into tables or SQL. It’s like having a Swiss Army knife in the world of databases. PostgreSQL manages everything with elegance, whether you need it for analytics queries or JSON storage. Its transaction integrity is likewise flawless.

NoSQL databases, such as HBase, Cassandra, and DynamoDB, are at the other end of the database spectrum. Unlike PostgreSQL’s adaptability, these databases are notoriously difficult to set up, comprehend, and work with. However, their unlimited scalability compensates for their inflexibility. NoSQL databases are the giants of web-scale databases because they can handle enormous amounts of data and rapid read/write performance.

However, is there a database that can offer both amazing scale and versatility?

After our experience with Spanner, we believe you might be able to have it both ways.

Why use the PostgreSQL interface from Spanner?

At Prefab, we use dynamic logging, feature flags, and secrets management to help developers ship apps more quickly. We use Cloud Spanner as the data store for our customers’ configurations, feature flags, and generated client telemetry. That data lets us build essential features, including evaluation charts, that help us with operations, scaling, and product improvement.

The following are some of the main features that attracted us to Spanner:

99.99% uptime by default (multi-availability zone); if you operate in many regions, you can reach up to 99.999% uptime.

Robust ACID transactions

Scaling horizontally, even for writes

Clients, queries, and schemas in PostgreSQL

To put it another way, Spanner offers the ease of use and portability that make PostgreSQL so alluring, along with the robustness and uptime of a massively replicated database on the scale of Google.

How Spanner is used in Prefab

Because Prefab’s architecture is divided into two sections, it made perfect sense for us to have a separate database for each section. This allowed us to select the most appropriate technology for each task. The two sides of our architecture are as follows:

Using our software development kits (SDKs), developers call the core Prefab APIs to serve their own customers.

Our customers use a web application to monitor and manage their app settings.

In addition to providing incredibly low latency, our feature flag services must be scalable to satisfy the needs of the developers’ downstream clients. With Spanner behind them, Java and the Java virtual machine (JVM) are the ideal choice for this high-throughput, low-latency, highly scalable side. The web user interface (UI) has much lower throughput, but it must still let us deliver features to our customers quickly. We use PostgreSQL, React, and Ruby on Rails for this side of our architecture.

Spanner in operation

One feature that currently uses Cloud Spanner is the backend for volume tracking in our dynamic logging. Our SDK detects log requests in our customers’ apps and sends the volume for each logger and log level to Spanner. In the Prefab UI, we then use this information to help users determine how many log statements would be sent to their log aggregator if they enabled logging at different levels.

We need a table with the following shape to capture this:

CREATE TABLE logger_rollup (
  id varchar(36) NOT NULL,
  start_at timestamptz NOT NULL,
  end_at timestamptz NOT NULL,
  project_id bigint NOT NULL,
  project_env_id bigint NOT NULL,
  logger_name text NOT NULL,
  trace_count bigint NOT NULL,
  debug_count bigint NOT NULL,
  info_count bigint NOT NULL,
  warn_count bigint NOT NULL,
  error_count bigint NOT NULL,
  fatal_count bigint NOT NULL,
  created_at spanner.commit_timestamp,
  client_id bigint,
  api_key_id bigint,
  PRIMARY KEY (project_env_id, logger_name, id)
);

As customers send telemetry for our dynamic logging, this table grows quickly and unpredictably. Yes, a time series database or some clever windowing and data removal techniques could potentially handle this. For the purposes of this post, however, it is a simple way to show how Spanner helps manage performance for a table holding a large amount of data.
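The volume estimate described above can be sketched in a few lines of Python. The example assumes rollup rows have been read into dictionaries keyed by the column names of the `logger_rollup` table; the `projected_volume` function is a hypothetical illustration of the idea, not Prefab's actual implementation.

```python
# Log levels in increasing severity, matching the *_count columns of logger_rollup.
LEVELS = ["trace", "debug", "info", "warn", "error", "fatal"]


def projected_volume(rollups, min_level):
    """Count the log statements that would be emitted at `min_level` or above.

    `rollups` is an iterable of dict-like rows with trace_count ... fatal_count.
    """
    threshold = LEVELS.index(min_level)  # raises ValueError for unknown levels
    enabled_columns = [f"{level}_count" for level in LEVELS[threshold:]]
    return sum(row[column] for row in rollups for column in enabled_columns)


rows = [
    {"logger_name": "app.checkout", "trace_count": 1000, "debug_count": 500,
     "info_count": 100, "warn_count": 10, "error_count": 5, "fatal_count": 0},
    {"logger_name": "app.search", "trace_count": 200, "debug_count": 50,
     "info_count": 20, "warn_count": 2, "error_count": 1, "fatal_count": 1},
]
for level in LEVELS:
    print(level, projected_volume(rows, level))
```

In practice the same sum could be pushed into a SQL query filtered by `project_env_id` and a time range, so that only the aggregated totals leave the database.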

Get 100X storage with no downtime for ⅓ of the cost

In production, we must replicate Prefab’s database across several zones. Because feature flags and dynamic configuration systems are single points of failure by design, reliability is crucial.

Here, we take a belt-and-suspenders approach, but our “belt” is a strong one thanks to Spanner’s uptime SLA and multi-availability zone replication. To achieve the same with PostgreSQL, you would need to triple the cost of a single instance. Replication and automatic failover, however, are included in Cloud Spanner pricing right out of the box. Additionally, you only pay for the bytes you use, and with Spanner’s latest improvements each node has plenty of storage space, up to 10TB. This gives the comparison the following appearance for:

The best practice of having a database instance for each environment can become exorbitantly costly at small scales. This was a problem when I initially looked into Spanner a few years back, because the smallest instance size was 1,000 processing units (PUs), or one node. Spanner has since changed to scale down to less than a whole node, which makes our choice much simpler. It also allows us to scale up whenever we need to without having to restructure our apps or deal with outages.

Recent enhancements to the Google Cloud ecosystem with Spanner

When we first started using the PostgreSQL interface for Spanner, we encountered several difficulties. Because Google Cloud is always developing and improving its products and services, we are thrilled that most of the initial issues we ran into have since been resolved.

Here are a few of our favorite updates:

Query editor: Having a query editor in the Google Cloud console is quite handy as it enables us to examine and optimize any queries that perform poorly.

Key Visualizer: Understanding row keys is crucial when working with large-volume NoSQL databases such as HBase. With the Key Visualizer, we can identify typical problems that lead to hotspots and examine Cloud Spanner data access trends over time.

In brief

Although we have extensive prior experience with HBase and PostgreSQL, we are quite happy with our choice of Spanner as Prefab’s preferred horizontally scalable operational database. For our requirements, we have found it simple to use, offering all the same scaling capabilities as HBase without the hassle of running it ourselves. Fewer possible points of failure and fewer things to manage save both time and money.

Consider broadening your horizons if you’re afraid of large tables but haven’t explored options other than PostgreSQL. Spanner’s PostgreSQL interface combines the dependable and scalable nature of Cloud Spanner and Google Cloud with the portability and user-friendliness of PostgreSQL.

Start Now

Spanner is available for free for the first ninety days, or for as low as $65 a month after that. We would also be delighted to connect with you, and we would appreciate it if you took a look at our Feature Flags, Dynamic Logging, and Secret Management, which are built on top of Cloud Spanner.

Read more on Govindhtech.com

#Prefab#CloudSpanner#PostgreSQL#database#SQL#DynamoDB#SDK#News#Technews#Technology#Technologynews#Technologytrends#govindhtech


Text

Angular has emerged as a powerful front-end framework designed to address the problems of single-page application development. It is currently the first choice of many front-end developers for building interactive, dynamic applications. It supports cross-platform development, and many coders prefer it because it is built on JavaScript. The popular MEAN stack covers an entire application with JavaScript technologies: MongoDB, ExpressJS, AngularJS, and NodeJS. Developers therefore do not need to learn several languages to build a complete application.

Learning Angular can be very beneficial for anyone who wants to establish themselves in front-end or full-stack development. Angular training teaches you to create interactive designs, templates, and dynamic content for your application. The only prerequisite is a basic understanding of common web technologies such as HTML and CSS.

Many Angular libraries are freely available online. With them you can add interactive features such as charts, navigation, and popups to your web application. Here are a few libraries that can make front-end development easier and more effective for end users.

PrimeNG

PrimeNG has a large collection of UI components for Angular applications. It is an open-source package distributed under the MIT license and created by PrimeTek Informatics. It comprises 80+ components that simplify UI design. For mobile pages, it offers touch-optimized elements that support responsive design. Its customizable templates let you start designing in very little time. Currently, PrimeNG is used by more than one million developers, and more than 500 government, private, and non-profit organizations have adopted it.

Material 2

Material 2 is the official Angular component library from Google. It is built with Angular and TypeScript and provides components that follow the Material Design specification. Form controls, navigation, layout, buttons and indicators, popups, modals, and data tables are all included, so you can organize your content to suit your objective. The package is available on GitHub and provides a comprehensive, modern user interface that runs across web, mobile, and desktop.

NG-Lightning

This package provides native Angular components and directives. It is written from scratch in TypeScript on top of the Lightning Design System CSS framework. If you run into interoperability issues such as cross-domain restrictions when using Salesforce Lightning Design System (SLDS) icons, you can keep a copy of sprite files such as symbols.svg and serve them locally from your own server. The components are stateless and depend only on their input properties, which improves performance and flexibility.

NG2 Charts

If you need basic charts, NG2 Charts is a strong option. It supports line, polar area, doughnut, pie, radar, and bar charts, and it offers properties, events, and colors for adding interactive charts to your Angular application. The package can be downloaded from GitHub, where it is published under the MIT license.

Onsen-UI

Onsen-UI is another open-source framework, licensed under Apache 2.0 and written in JavaScript. It helps developers build hybrid apps with web technologies such as HTML5, Cascading Style Sheets (CSS), and JavaScript. It comes with tabs, a side menu, navigation, and other useful components such as lists and forms. It provides iOS and Android (Material Design) styling, and the best part is that the same source code runs on both iOS and Android.

Fuel-UI

For those who want new UI patterns, this library is one of the better options. Fuel-UI is an evolving set of UI components that can be used in Angular 2 and Bootstrap 4 projects. It offers a variety of components, directives, and pipes. Integrate it to add alert boxes, scrolling effects, popups, tags, bars, navigation, and tabs to your templates. It also offers directives for animations, highlighting, and tooltips, along with several pipes that cover the more technical aspects of development.

Thus, you can see how Angular is taking front-end development to another level through its large set of libraries.


Text

How to Select Classes for Data Science That Will Help You Get a Job

Entering a career in data science is both exciting and challenging. As more organizations adopt big data in their operations, demand for data scientists is likely to grow. How well you are trained largely determines whether you succeed in this competitive field, so choosing the right courses is essential. This step-by-step guide will help you build a realistic plan for which classes to take to acquire skills and make yourself more marketable to employers. In this article, Advanto Software guides you through selecting classes for data science.

Defining the Essence of Classes for Data Science

Before comparing courses, define the basic skills a data scientist’s position requires. In simple terms, data science is an interdisciplinary field that combines statistical analysis, programming, and domain knowledge. The primary skills needed include:

Statistical Analysis and Probability

Programming Languages (Python, R)

Machine Learning Algorithms

Data Visualization Techniques

Big Data Technologies

Data Wrangling and Cleaning

1. Concentrate on the Disciplines That Form the Basis of Data Science

Statistical Analysis and Probability

Statistical analysis is the foundation of data science. It requires an understanding of distributions, hypothesis testing, and drawing inferences from data. Classes in statistical analysis will cover:

Descriptive Statistics: Mean, median, mode, and measures of variation.

Inferential Statistics: Confidence intervals, hypothesis testing, and regression analysis.

Probability Theory: Bayes’ Theorem, probability density and distribution functions, and stochastic processes.
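As a rough illustration of what these topics look like in practice, here is a minimal Python sketch using only the standard library. The exam scores, the function names, and the 1% / 95% / 5% figures in the Bayes example are all made up for illustration, and the confidence interval uses a simple normal approximation rather than any method a particular course would teach.

```python
import math
import statistics


def describe(values):
    """Descriptive statistics: mean, median, mode, and sample standard deviation."""
    return {
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "mode": statistics.mode(values),
        "stdev": statistics.stdev(values),
    }


def mean_confidence_interval(values, z=1.96):
    """Approximate 95% confidence interval for the mean (normal approximation)."""
    centre = statistics.mean(values)
    standard_error = statistics.stdev(values) / math.sqrt(len(values))
    return (centre - z * standard_error, centre + z * standard_error)


def bayes_posterior(prior, likelihood, false_positive_rate):
    """Bayes' theorem for a yes/no hypothesis: P(H | E) = P(E | H) P(H) / P(E)."""
    evidence = likelihood * prior + false_positive_rate * (1 - prior)
    return likelihood * prior / evidence


# Hypothetical exam scores, used only to exercise the functions above.
scores = [72, 85, 85, 90, 68, 77, 85, 93]
summary = describe(scores)
low, high = mean_confidence_interval(scores)

# Hypothetical screening test: 1% base rate, 95% sensitivity, 5% false positives.
posterior = bayes_posterior(prior=0.01, likelihood=0.95, false_positive_rate=0.05)

print(summary)
print(f"95% CI for the mean: ({low:.2f}, {high:.2f})")
print(f"Posterior probability: {posterior:.3f}")
```

The Bayes example shows why the topic matters: even with an accurate test, a low base rate keeps the posterior probability far below the test's sensitivity.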

Programming for Data Science

A data scientist cannot afford to have poor programming skills. Python and R are the two most popular languages in the field. Look for classes that offer:

Python Programming: Practical skills with libraries such as Pandas, NumPy, and Scikit-learn.

R Programming: A focus on packages such as ggplot2, dplyr, and caret.

Data Manipulation and Analysis: Approaches to data management and analysis.
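To give a sense of what data manipulation involves, the sketch below cleans a small, made-up CSV sample and computes a per-city average. To stay dependency-free it uses only Python's standard library; a course would more likely teach the same steps with Pandas. The data, column names, and function names are assumptions chosen for illustration.

```python
import csv
import io
from collections import defaultdict

# Made-up sample with typical problems: blank values, stray spaces,
# inconsistent capitalisation, and a non-numeric reading.
RAW_CSV = """city,temperature_c
Pune,31.5
Pune,
Mumbai,29.0
mumbai ,30.0
Delhi,n/a
Delhi,35.0
"""


def clean_rows(text):
    """Parse CSV text, normalise city names, and drop unusable readings."""
    cleaned = []
    for row in csv.DictReader(io.StringIO(text)):
        city = row["city"].strip().title()
        try:
            temperature = float(row["temperature_c"])
        except (TypeError, ValueError):
            continue  # skip missing or non-numeric temperatures
        cleaned.append({"city": city, "temperature_c": temperature})
    return cleaned


def mean_by_city(rows):
    """Group cleaned rows by city and average the temperatures."""
    groups = defaultdict(list)
    for row in rows:
        groups[row["city"]].append(row["temperature_c"])
    return {city: sum(temps) / len(temps) for city, temps in groups.items()}


rows = clean_rows(RAW_CSV)
averages = mean_by_city(rows)
print(averages)
```

Cleaning before aggregating is the important design choice here: without the normalisation step, "Mumbai" and "mumbai " would be counted as two different cities.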

2. Master Advanced Data Science Concepts

Machine Learning and AI

Machine Learning is an important aspect of data science. Advanced courses should delve into:

Statistical Analysis and Probability