#multioutputs

Photo

The AdventureUltra packs a power punch and perspires Gatorade. Designed to replace the heavy, bulky generators used when tailgating or camping, the AdventureUltra is a streamlined power source that can run a 42-inch television for up to three hours. It has a maximum power output of 45 watts, enough to run a fan or recharge a drone battery. It can also be used with 2-in-1 laptops and new laptops, such as the 12-inch MacBook, that draw less than 45 watts, whether they are powered off and solely charging or powered on and charging simultaneously. It houses a 13,400 mAh battery that can charge a 1960 mAh iPhone 7 nearly 7 times. BUY IT NOW $129 Learn More At https://mycharge.com/collections/adventure-series/products/adventureultra #batterypack #coldgrove #multiOutputs #outdoorhandy #cargear #cargadgets #lifesaver #compact #onthego #longlasting #weekendtrips #ononecharge #wherehaveyoubeen #likeThis #IfYouLikeThis #fullbattery #fullbattery🔋

Text

How to evaluate regression models?

Data Science Interview Questions around model evaluation metrics

Vimarsh Karbhari

Dec 18

Validating and evaluating a data science model adds colour to our hypothesis and helps us compare models to find the ones that give better results on our data. The metrics below are what we use to evaluate our models.

What Big-O is to coding, validation and evaluation are to data science models.

There are three main metrics used to evaluate regression models: mean absolute error (MAE), mean squared error (MSE), and the R² score.

Mean Absolute Error (MAE)

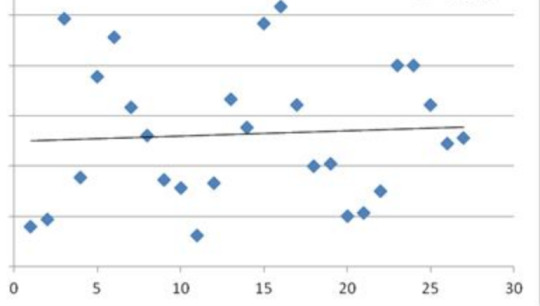

Source: Regression Docs

Let's take an example where we have some points and a line that fits them. If we sum the absolute distances from the points to the line and divide by the number of points, we get the mean absolute error. The problem with this metric is that the absolute value is not differentiable where a residual is exactly zero, which makes it less convenient for gradient-based optimization. Let's translate this into how we can use scikit-learn to calculate the metric:

```python
>>> from sklearn.metrics import mean_absolute_error
>>> y_true = [3, -0.5, 2, 7]
>>> y_pred = [2.5, 0.0, 2, 8]
>>> mean_absolute_error(y_true, y_pred)
0.5
>>> y_true = [[0.5, 1], [-1, 1], [7, -6]]
>>> y_pred = [[0, 2], [-1, 2], [8, -5]]
>>> mean_absolute_error(y_true, y_pred)
0.75
>>> mean_absolute_error(y_true, y_pred, multioutput='raw_values')
array([0.5, 1. ])
>>> mean_absolute_error(y_true, y_pred, multioutput=[0.3, 0.7])
0.85...
```
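To make the definition concrete, here is a minimal, dependency-free Python sketch of MAE that reproduces the scikit-learn numbers above, including how multi-output errors are combined. It assumes that `multioutput='raw_values'` returns one error per output column and that a list of weights gives a weighted average of those per-column errors, which is consistent with the outputs shown. The helper names (`mae`, `mae_per_output`, `_as_rows`) are hypothetical and not part of scikit-learn.

```python
# Dependency-free sketch of mean absolute error (MAE), including per-output
# ("raw_values") and weighted averaging. Helper names are hypothetical,
# not scikit-learn functions.


def _as_rows(y):
    """Treat a flat list as a single-output target: [3, 2] -> [[3], [2]]."""
    return [row if isinstance(row, (list, tuple)) else [row] for row in y]


def mae_per_output(y_true, y_pred):
    """Return one MAE per output column: sum of |y - y_hat| divided by n."""
    rows_true, rows_pred = _as_rows(y_true), _as_rows(y_pred)
    n_samples = len(rows_true)
    n_outputs = len(rows_true[0])
    return [
        sum(abs(t[j] - p[j]) for t, p in zip(rows_true, rows_pred)) / n_samples
        for j in range(n_outputs)
    ]


def mae(y_true, y_pred, weights=None):
    """Average the per-output MAEs uniformly, or with the given weights."""
    per_output = mae_per_output(y_true, y_pred)
    if weights is None:
        return sum(per_output) / len(per_output)
    return sum(w * e for w, e in zip(weights, per_output)) / sum(weights)


y_true = [[0.5, 1], [-1, 1], [7, -6]]
y_pred = [[0, 2], [-1, 2], [8, -5]]
print(mae_per_output(y_true, y_pred))            # [0.5, 1.0]
print(mae(y_true, y_pred))                       # 0.75
print(mae(y_true, y_pred, weights=[0.3, 0.7]))   # approximately 0.85
```

Averaging the per-column errors [0.5, 1.0] uniformly gives 0.75, and weighting them 0.3 and 0.7 gives 0.85, the same values `mean_absolute_error` printed above.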

Mean Squared Error (MSE)

Mean squared error solves the differentiability problem of MAE. Consider the same diagram as above, with a line that fits the points. If we sum the squares of the distances from the points to the line and divide by the number of points, we get the mean squared error. In scikit-learn it looks like:

```python
>>> from sklearn.metrics import mean_squared_error
>>> y_true = [3, -0.5, 2, 7]
>>> y_pred = [2.5, 0.0, 2, 8]
>>> mean_squared_error(y_true, y_pred)
0.375
>>> y_true = [[0.5, 1], [-1, 1], [7, -6]]
>>> y_pred = [[0, 2], [-1, 2], [8, -5]]
>>> mean_squared_error(y_true, y_pred)
0.708...
>>> mean_squared_error(y_true, y_pred, multioutput='raw_values')
array([0.41666667, 1.        ])
>>> mean_squared_error(y_true, y_pred, multioutput=[0.3, 0.7])
0.825...
```
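The differentiability point becomes visible when we write out the derivative of each metric with respect to a single prediction. This plain-Python sketch (hypothetical function names, not a library API) computes MSE and both gradients on the single-output example above. At a residual of exactly zero, MAE has no derivative; the sketch assumes the common subgradient convention of returning 0 there.

```python
# Dependency-free sketch comparing how MSE and MAE respond to a change in
# one prediction. Function names are hypothetical, not library APIs.


def mse(y_true, y_pred):
    """Mean of squared residuals for a single-output target."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def mse_grad(y_true, y_pred):
    """Partial derivative of MSE w.r.t. each prediction: -2 * (y - y_hat) / n.

    Defined everywhere and proportional to the size of the residual.
    """
    n = len(y_true)
    return [-2 * (t - p) / n for t, p in zip(y_true, y_pred)]


def mae_grad(y_true, y_pred):
    """Partial derivative of MAE w.r.t. each prediction: -sign(y - y_hat) / n.

    |r| has no derivative at r = 0 (slopes -1 and +1 meet in a kink), so this
    returns 0 there, a common subgradient convention.
    """
    n = len(y_true)

    def sign(r):
        return (r > 0) - (r < 0)

    return [-sign(t - p) / n for t, p in zip(y_true, y_pred)]


y_true = [3, -0.5, 2, 7]
y_pred = [2.5, 0.0, 2, 8]
print(mse(y_true, y_pred))       # 0.375
print(mse_grad(y_true, y_pred))  # [-0.25, 0.25, 0.0, 0.5]
print(mae_grad(y_true, y_pred))  # [-0.25, 0.25, 0.0, 0.25]
```

The last point's residual (-1) is twice the size of the first two (0.5 and -0.5): its MSE gradient is twice as large, while its MAE gradient has the same magnitude. The MSE gradient is smooth and shrinks as a prediction approaches its target, which is one reason MSE is convenient for gradient-based fitting.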

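The mathematical representations of MAE and MSE, for $n$ points with true values $y_i$ and predictions $\hat{y}_i$, are below:

$$\text{MAE} = \frac{1}{n}\sum_{i=1}^{n} \lvert y_i - \hat{y}_i \rvert \qquad \text{MSE} = \frac{1}{n}\sum_{i=1}^{n} \left(y_i - \hat{y}_i\right)^2$$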

Source: Stackexchange

R2 Score

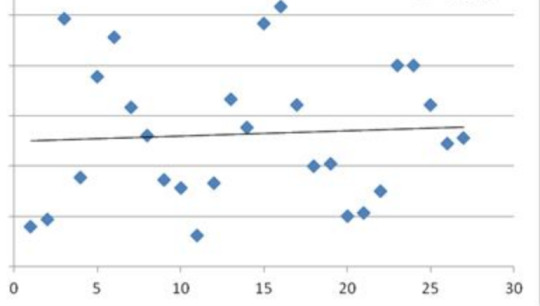

Let us take a naive approach: predict the average of all the points for every input, which amounts to drawing a horizontal line through them. We can then calculate the MSE for this simple model.

Source: R2 Score

Comparing the two tells us whether this simple model has a larger error than the linear regression model. In terms of metrics, though, what we need to know is how much larger, and that is what the R² score captures:

$$R^2 = 1 - \frac{\text{MSE}_{\text{linear regression}}}{\text{MSE}_{\text{simple average}}}$$

The best possible score is 1.0, and the score can be negative because a model can be arbitrarily worse than the simple average. A constant model that always predicts the expected value of y, disregarding the input features, would get an R² score of 0.0. In scikit-learn it looks like:

```python
>>> from sklearn.metrics import r2_score
>>> y_true = [3, -0.5, 2, 7]
>>> y_pred = [2.5, 0.0, 2, 8]
>>> r2_score(y_true, y_pred)
0.948...
>>> y_true = [[0.5, 1], [-1, 1], [7, -6]]
>>> y_pred = [[0, 2], [-1, 2], [8, -5]]
>>> r2_score(y_true, y_pred, multioutput='variance_weighted')
0.938...
>>> y_true = [1, 2, 3]
>>> y_pred = [1, 2, 3]
>>> r2_score(y_true, y_pred)
1.0
>>> y_true = [1, 2, 3]
>>> y_pred = [2, 2, 2]
>>> r2_score(y_true, y_pred)
0.0
>>> y_true = [1, 2, 3]
>>> y_pred = [3, 2, 1]
>>> r2_score(y_true, y_pred)
-3.0
```
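R² is one minus the ratio of the model's MSE to the MSE of a baseline that always predicts the mean of the targets, and that definition fits in a few lines of plain Python. The function names below are hypothetical. For the multi-output case, the sketch assumes that `multioutput='variance_weighted'` weights each output's R² by that output's variance; under that reading the weighted average simplifies to one minus the total residual sum of squares over the total sum of squares around the column means, which reproduces the 0.938 shown above.

```python
# Dependency-free sketch of R^2 as "1 - model error / baseline error", where
# the baseline always predicts the mean of y_true.
# Function names are hypothetical, not scikit-learn APIs.


def mse(y_true, y_pred):
    """Mean of squared residuals for a single-output target."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def r2(y_true, y_pred):
    """R^2 for a single output; assumes y_true is not constant."""
    mean_y = sum(y_true) / len(y_true)
    baseline = [mean_y] * len(y_true)  # horizontal line through the average
    return 1 - mse(y_true, y_pred) / mse(y_true, baseline)


def r2_variance_weighted(y_true, y_pred):
    """Multi-output R^2 with each output's score weighted by its variance.

    Weighting per-output scores by their total sum of squares reduces to
    1 - (all residual squares) / (all squares around the column means).
    """
    n_outputs = len(y_true[0])
    ss_res = ss_tot = 0.0
    for j in range(n_outputs):
        col_true = [row[j] for row in y_true]
        col_pred = [row[j] for row in y_pred]
        mean_j = sum(col_true) / len(col_true)
        ss_res += sum((t - p) ** 2 for t, p in zip(col_true, col_pred))
        ss_tot += sum((t - mean_j) ** 2 for t in col_true)
    return 1 - ss_res / ss_tot


print(r2([3, -0.5, 2, 7], [2.5, 0.0, 2, 8]))  # approximately 0.9486
print(r2([1, 2, 3], [2, 2, 2]))               # 0.0 (same as the baseline)
print(r2([1, 2, 3], [3, 2, 1]))               # -3.0 (worse than the baseline)
print(r2_variance_weighted([[0.5, 1], [-1, 1], [7, -6]],
                           [[0, 2], [-1, 2], [8, -5]]))  # approximately 0.938
```

Predicting the mean everywhere scores exactly 0.0, and reversing the targets scores -3.0, matching the `r2_score` outputs above.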

Conclusion

Model evaluation points a data scientist in the right direction when selecting or tuning an appropriate model. Data science interviews test candidates' fundamentals in the same way: in any interview, knowing these metrics and terms for the problems being discussed is table stakes.

For more such answers to important Data Science questions, please visit Acing AI.

Subscribe to our Acing AI newsletter; I promise not to spam, and it's FREE!

Text

It’s Sunday! My goal is to finish my homework before 10pm tonight so I can go to bed at a decent hour and not be completely stressed out. Here’s where I stand as of right now:

Naive Bayes Classification Lecture Video

Week 1 Lecture Video

Week 2 Lecture Video

Performance Measures - completed at 11:47am

Multiclass Classification - completed at 12:01pm

Error Analysis - completed at 12:09pm

Multilabel Classification - completed at 12:13pm

Multioutput Classification - completed at 12:16pm

Linear Regression - completed at 1:41pm

Gradient Descent - completed at 2:30pm

Polynomial Regression - completed at 2:34pm

Learning Curves - completed at 2:58pm

Regularized Linear Models

Logistic Regression

Week 3 Discussion Post - completed at 8:38pm

Assignment 3 - completed at 8:26pm

My goal is to finish my reading by noon and to start on the homework assignment shortly after lunch. I put together a new schedule for myself so I can hopefully stop waiting until the last minute to do homework and also incorporate some new habits I want to build (like eating healthy and exercising).

Text

It’s Saturday and no, I definitely didn’t get my homework done. I’m a terrible procrastinator, and it’s hard not to procrastinate. Does anyone have any tips or tricks for that? Anyhow, here’s a list of stuff I want to get done today, but we’ll see how it goes:

Prepare the Data for Machine Learning Algorithms - completed at 4:50pm

Select and Train the Model - completed at 4:56pm

Fine Tune Your Model - completed at 9:14pm

Launch, Monitor, and Maintain Your System - completed at 9:26pm

Naive Bayes Classification Lecture Video

Week 1 Lecture Video

Week 2 Lecture Video

Performance Measures

Multiclass Classification

Error Analysis

Multilabel Classification

Multioutput Classification

Linear Regression

Gradient Descent

Polynomial Regression

Learning Curves

Regularized Linear Models

Logistic Regression

Week 3 Discussion Post

Assignment 3

Text

It’s Tuesday. I was exhausted yesterday and didn’t get a ton done, so today’s agenda is the same as yesterday’s. Hopefully I’ll be able to finish some of these today, since I’m actually getting started on them.

Working with Real Data - completed at 5:32pm

Look at the Big Picture - completed at 5:44pm

Get the Data - completed at 5:59pm

Discover and Visualize the Data to Gain Insights - completed at 6:02pm

Prepare the Data for Machine Learning Algorithms

Select and Train the Model

Fine Tune Your Model

Launch, Monitor, and Maintain Your System

Naive Bayes Classification Lecture Video

Week 1 Lecture Video

Week 2 Lecture Video

Performance Measures

Multiclass Classification

Error Analysis

Multilabel Classification

Multioutput Classification

Linear Regression

Gradient Descent

Polynomial Regression

Learning Curves

Regularized Linear Models

Logistic Regression

Week 3 Discussion Post

Assignment 3

Text

hey guys! it’s early Monday morning. I finished my homework assignment late last night and I hated it. I hate getting things done last minute, so my goal is to actually finish my homework before this weekend. I only have 30 pages of reading this week, but I also need to catch up on taking notes from the reading I skipped. That said, I still think I can get all my homework done before the end of the week. Here’s what I’ve got for this week:

Working with Real Data

Get the Data

Discover and Visualize the Data to Gain Insights

Prepare the Data for Machine Learning Algorithms

Select and Train the Model

Fine Tune Your Model

Launch, Monitor, and Maintain Your System

Performance Measures

Multiclass Classification

Error Analysis

Multilabel Classification

Multioutput Classification

Linear Regression

Gradient Descent

Polynomial Regression

Learning Curves

Regularized Linear Models

Logistic Regression

Week 3 Discussion Post

Assignment 3

Fingers crossed that I can actually get ahead this week!

Text

Hey guys. It’s Sunday morning. I have homework due tonight, so time to get on it. I went apple picking yesterday, which was fairly fun, but I was anxious about school the whole time. I sometimes wish I didn’t have school to worry about alongside work, trying to get healthy, and having a social life.

I got a B- on my homework assignment from last week, which is terrifying to me. I’ve got straight As so far. Literally 5 classes stand between me and being a straight-A student since junior high. Anyhow, I have a lot to get done today. We’ll see how this goes.

Watch lecture video

Naive Bayes classification lecture

Naive Bayes interactive module

Performance Measures

Multiclass Classification

Error Analysis

Multilabel Classification

Multioutput Classification

Logistic Regression

Assignment 2

Fit classification model

Logistic Regression Method

Naive Bayes Classification Method

Predict Binary Response Variable

Cross Validation Evaluation

ROC AUC Classification Performance

Modeling Method Recommendations

Client Targeting Suggestions

Data preparation, exploration, visualization

Research Design and Modeling Methods

Result evaluation/model evaluation

Problem description/recommendation

Text

hello! It’s Saturday morning. No one is awake just yet, but I figured I would at least get going on some of my homework. I didn’t end up doing much work this week, but I don’t know. I get mad at myself for not doing more throughout the week, but I will try harder during week 3. Hopefully it’ll become easier.

I just took a look at Week 3’s reading and I only have 30 pages to read, which is very exciting. Odds are that means the discussion and the assignment will be harder, but it gives me a really great opportunity to actually get stuff done during the week and maybe have some semblance of a weekend. Anyhow, first I need to take care of Week 2. I’m going apple picking with my roommate, and I need to finish at least parts of my homework/reading, otherwise I’m not going to have fun tomorrow. We’ll see how this goes. This is what I have left to do:

Week 2 Discussion Post - completed at 6:38am

Watch lecture video

Naive Bayes classification lecture

Naive Bayes interactive module

MNIST - completed at 6:46am

Training a Binary Classifier - completed at 6:55am

Performance Measures - completed at

Multiclass Classification

Error Analysis

Multilabel Classification

Multioutput Classification

Logistic Regression

Scope Assignment 2

Text

hello again! I’m back trying to start up on week 2 of my classes. I’m trying to go apple picking with my roommate this Saturday, so I gotta get my homework and reading done before Saturday. I know I technically have until Sunday to get my homework done, but how amazing would it be to actually have a weekend just for fun? We’ll see. I’m skipping working out today because I got home late from work, and I would’ve been home even later had I gone to the gym. Anyhow, this is what I would like to get done today:

Sports Analytics Paper - completed 5:31am

Week 2 Discussion Post

Watch lecture video

Naive Bayes classification lecture

Naive Bayes interactive module

MNIST

Training a Binary Classifier

Performance Measures

Multiclass Classification

Error Analysis

Multilabel Classification

Multioutput Classification

Logistic Regression

Scope Assignment 2
