#nltk

Text

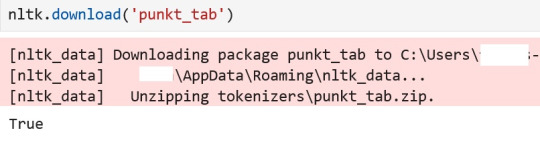

Resource punkt_tab not found - for NLTK (NLP)

Over the past few years, I have seen quite a few folks STILL getting this error in Jupyter Notebook. And it means, again, a lot of troubleshooting on my part. The instructor for the course I am taking (one of several Gen AI courses) did not get that error, or they have an environment set up for that specific .ipynb file. As such, they did not comment on it. After I did that last…
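For readers hitting the same error, here is a minimal sketch of the usual workaround. It assumes a recent NLTK release in which the tokenizer looks for the `punkt_tab` data package, and it needs network access the first time it runs. The helper name `ensure_nltk_resource` is hypothetical and not part of NLTK; only `nltk.data.find` and `nltk.download` are library calls.

```python
def ensure_nltk_resource(resource_path="tokenizers/punkt_tab", package="punkt_tab"):
    """Download an NLTK data package only if it is not already installed.

    Hypothetical helper: the name and defaults are illustrative assumptions.
    """
    import nltk  # imported lazily so this file loads even without NLTK

    try:
        nltk.data.find(resource_path)  # raises LookupError when the data is missing
        return True
    except LookupError:
        return nltk.download(package)  # returns True when the download succeeds
```

Calling `ensure_nltk_resource()` in the first notebook cell, before any `word_tokenize` call, should be enough in most environments.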

Text

The concept of sentiment dictionaries and why they are fundamental to sentiment analysis.

What are sentiment dictionaries and how do they work? Imagine a dictionary that, instead of defining words, classifies them according to the emotion they express. These are sentiment dictionaries. They are a kind of “emotional thesaurus” that assigns each word a score indicating whether it is positive, negative, or neutral. How do they work? Lexicon: They contain an extensive…
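As a rough illustration of the idea, the sketch below scores a sentence by summing per-word polarity values. The four-word lexicon and the function names are invented for this example; real sentiment dictionaries are far larger and also handle negation and intensity.

```python
import re

# Hypothetical mini-lexicon: word -> polarity score (negative < 0 < positive).
SENTIMENT_LEXICON = {
    "excellent": 2.0,
    "good": 1.0,
    "bad": -1.0,
    "terrible": -2.0,
}

def score_text(text, lexicon=SENTIMENT_LEXICON):
    """Sum the polarity of every known word; unknown words count as neutral (0)."""
    words = re.findall(r"[a-záéíóúñü]+", text.lower())
    return sum(lexicon.get(word, 0.0) for word in words)

def label(score):
    """Map a numeric score to positive / negative / neutral."""
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"
```

For example, `label(score_text("The food was excellent but the service was bad"))` comes out positive, because the +2.0 for "excellent" outweighs the -1.0 for "bad".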

#alicante#sentiment analysis#manual annotation#machine learning#comunidad valenciana#context#corpus#sentiment dictionaries#local businesses.#F1-score#government#Google Cloud Natural Language API#sentiment analysis tools#IBM Watson#artificial intelligence#intensity#MonkeyLearn#NLTK#polarity#precision#natural language processing#RapidMiner#recall#neural networks#spaCy#tourism

Text

#nlp libraries#natural language processing libraries#python libraries#nodejs nlp libraries#python and libraries#javascript nlp libraries#best nlp libraries for nodejs#nlp libraries for java script#best nlp libraries for javascript#nlp libraries for nodejs and javascript#nltk library#python library#pattern library#python best gui library#python library re#python library requests#python library list#python library pandas#python best plotting library

Text

in what situation is the word "oh" a proper noun

#🍯 talks#using nltk for my project and skimming through to fix some mistakes#and it keeps tagging oh as nnp

Text

Can't believe I had to miss my morphology lecture because comp sci has no concept of timetables

#it's so sad#i literally only took it as a minor to get a leg up on python for nltk#and i'm doing intensive french with it as well so i'm swamped and i'm only two weeks in#bro i just wanna do linguistics :(

Text

Want to make NLP tasks a breeze? Explore how NLTK streamlines text analysis in Python, making it easier to extract valuable insights from your data. Discover more https://bit.ly/487hj9L

Text

Hi! I work in social science research, and wanted to offer a little bit of nuance into the notes of this post. A lot of people seem to be referring to LLMs like ChatGPT/Claude/Deepseek as purely ‘generative AI’ and used to ‘fix’ problems that don’t actually exist and while that is 99% true (hell in my field we’re extremely critical of the use of generative AI in the general public and how it is used), immediately demonizing LLMs as useless overlooks how great of a research tool it is for fields outside of STEM.

Tl;dr for below the cut: even ‘generative AI’ like ChatGPT can be used as an analytical tool in research. In fact, that’s one of the things it’s actually built for.

In social sciences and humanities we deal with a lot of rich qualitative data. It’s great! We capture some really specific and complex phenomena! But there is a drawback to this: it’s bloody hard to get through large amounts of this data.

Imagine you had just spent 12 months studying a particular group or community in the workplace, and as part of that you interviewed different members to gain better insight into the activities/behaviours/norms etc. By the end of this fieldwork stint you have over 20 hours’ worth of interviews, which transcribed is a metric fuckton of written data (and that’s not even mentioning the field notes or observational data you may have accrued).

The traditional way of handling this was to spend hours and hours and days and days poring over the data with human eyes, develop a coding scheme, apply codes to sections by hand using programs like Atlas.ti or Nvivo (think Advanced Digital Highlighters), and then generate a new (or validate an existing) theory about People In The Place. This process of ‘coding’ takes a really long fucking time, and a lot of researchers, if they have the money, outsource it to poor grad students and research assistants to do it for them.

We developed computational methods to handle this somewhat (using natural language processing libraries like NLTK) but these analyse the data on a word-to-word level, which creates limitations in what kind of coding you can apply, and how it can be applied reliably (if at all). NLP like NLTK could recognize a word as a verb, adjective, or noun, and even identify how ‘related’ words could be to one another (e.g. ‘tree’ is more closely related to ‘park’ than it is to ‘concrete’). They couldn’t keep track of a broader context, however. They’re good for telling you whether something is positive or negative in tone (in what we call sentiment analysis) but bad for telling you a phrase might be important when you relate it back to the place or person or circumstance.

LLMs completely change the game in that regard. They’re literally the next step of these Natural Language Processing programs we’ve been using for years, but are much much better at the context level. You can use it to contextualise not just a word, but a whole sentence or phrase against a specific background. This is really helpful when you’re doing what we call deductive coding - when you have a list of codes that relate to a rule or framework or definition that you’re applying to the data. Advanced LLMs like ChatGPT analysis mode can produce a level of reliability that matches human reliability for deductive coding, especially when given adequate context and examples.

But the even crazier thing? It can do inductive coding. Inductive coding is where the codes emerge from the data itself, not from an existing theory or framework. Now this definitely comes with limitations - it’s still the job of the researcher to pull these codes into a coherent and applicable finding, and of course the codes themselves are limited by the biases within the model (so not great for anything that deals with ‘sensitive issues’ or intersectionality).

Some fields like those in metacognition have stacks of historical data from things like protocol studies (people think aloud while doing a task) that were conducted to test individual theories and frameworks, but have never been revisited because the sheer amount of time it would take to hand code them makes the task economically and physically impossible. But now? Researchers are already doing in minutes what historically took them months or years, and the insights they’re gaining are applicable to broader and broader contexts.

People are still doing the necessary work of synthesizing the info that LLMs provide, but now (written) qual data is much more accessibly handled in large amounts - something that qualitative researchers have been trying to achieve for decades.

Midjourney and other generative image programs can still get fucked though.

#sorry I don’t normally add to posts and definitely never this much#but I want to offer a slightly different perspective#TO BE CLEAR I HATE HOW CHATGPT IS BEING USED IN THE BROADER WORK SOCIETY#BUT#please please please remember that qual research exists and LLMs like ChatGPT emerged out of the need to analyse this qual data

Text

How Python Can Be Used in Finance: Applications, Benefits & Real-World Examples

In the rapidly evolving world of finance, staying ahead of the curve is essential. One of the most powerful tools at the intersection of technology and finance today is Python. Known for its simplicity and versatility, Python has become a go-to programming language for financial professionals, data scientists, and fintech companies alike.

This blog explores how Python is used in finance, the benefits it offers, and real-world examples of its applications in the industry.

Why Python in Finance?

Python stands out in the finance world because of its:

Ease of use: Simple syntax makes it accessible to professionals from non-programming backgrounds.

Rich libraries: Packages like Pandas, NumPy, Matplotlib, Scikit-learn, and PyAlgoTrade support a wide array of financial tasks.

Community support: A vast, active user base means better resources, tutorials, and troubleshooting help.

Integration: Easily interfaces with databases, Excel, web APIs, and other tools used in finance.

Key Applications of Python in Finance

1. Data Analysis & Visualization

Financial analysis relies heavily on large datasets. Python’s libraries like Pandas and NumPy are ideal for:

Time-series analysis

Portfolio analysis

Risk assessment

Cleaning and processing financial data

Visualization tools like Matplotlib and Seaborn are mainly used for static charts, while Plotly supports interactive charts and dashboards.

2. Algorithmic Trading

Python is a favorite among algo traders because strategies are quick to prototype and test.

Backtesting strategies using libraries like Backtrader and Zipline

Live trading integration with brokers via APIs (e.g., Alpaca, Interactive Brokers)

Strategy optimization using historical data
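To make the backtesting idea concrete, here is a minimal standard-library sketch of a moving-average crossover test. It is not how Backtrader or Zipline work internally; the function names, window sizes, and the rule itself are assumptions chosen for illustration, and fees, slippage, and dividends are ignored.

```python
def moving_average(prices, window):
    """Simple moving average; None until enough history exists."""
    return [
        None if i + 1 < window else sum(prices[i + 1 - window : i + 1]) / window
        for i in range(len(prices))
    ]

def backtest_crossover(prices, fast=2, slow=3):
    """Hold the asset on days after the fast average closed above the slow one.

    Returns the strategy's total return as a fraction (0.10 means +10%).
    """
    fast_ma = moving_average(prices, fast)
    slow_ma = moving_average(prices, slow)
    equity = 1.0
    for day in range(1, len(prices)):
        signal_known = fast_ma[day - 1] is not None and slow_ma[day - 1] is not None
        # Trade on yesterday's signal to avoid look-ahead bias.
        if signal_known and fast_ma[day - 1] > slow_ma[day - 1]:
            equity *= prices[day] / prices[day - 1]
    return equity - 1.0
```

Strategy optimization then amounts to rerunning `backtest_crossover` over a grid of `fast` and `slow` values on historical prices, ideally holding back some data to check that the best pair is not just overfitted.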

3. Risk Management & Analytics

With Python, financial institutions can simulate market scenarios and model risk using:

Monte Carlo simulations

Value at Risk (VaR) models

Stress testing

These help firms manage exposure and regulatory compliance.
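A Monte Carlo estimate of Value at Risk can be sketched in a few lines. The version below assumes one-period returns are normally distributed, which real portfolios often violate; the function name, parameters, and default values are illustrative assumptions.

```python
import random

def monte_carlo_var(portfolio_value, mean_return, volatility,
                    confidence=0.95, n_simulations=10_000, seed=42):
    """Estimate one-period Value at Risk by simulating normally distributed returns."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    simulated_pnl = sorted(
        portfolio_value * rng.gauss(mean_return, volatility)
        for _ in range(n_simulations)
    )
    # The (1 - confidence) quantile of simulated P&L is the loss threshold.
    cutoff_index = int((1 - confidence) * n_simulations)
    return -simulated_pnl[cutoff_index]  # reported as a positive loss
```

With a 1,000,000 portfolio, zero mean return, and 2% volatility, the 95% figure should land near the analytical value of about 1.645 × 0.02 × 1,000,000 ≈ 32,900, which is a quick sanity check on the simulation.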

4. Financial Modeling & Forecasting

Python can be used to build predictive models for:

Stock price forecasting

Credit scoring

Loan default prediction

Scikit-learn, TensorFlow, and XGBoost are popular libraries for machine learning applications in finance.

5. Web Scraping & Sentiment Analysis

Real-time data from financial news, social media, and websites can be scraped using BeautifulSoup and Scrapy. Python’s NLP tools (like NLTK, spaCy, and TextBlob) can be used for sentiment analysis to gauge market sentiment and inform trading strategies.

Benefits of Using Python in Finance

✅ Fast Development

Python allows for quick development and iteration of ideas, which is crucial in a dynamic industry like finance.

✅ Cost-Effective

As an open-source language, Python reduces licensing and development costs.

✅ Customization

Python empowers teams to build tailored solutions that fit specific financial workflows or trading strategies.

✅ Scalability

From small analytics scripts to large-scale trading platforms, Python can handle applications of various complexities.

Real-World Examples

💡 JPMorgan Chase

Developed a proprietary Python-based platform called Athena to manage risk, pricing, and trading across its investment banking operations.

💡 Quantopian (shut down in 2020; team joined Robinhood)

Used Python for developing and backtesting trading algorithms. Users could write Python code to create and test strategies on historical market data.

💡 BlackRock

Utilizes Python for data analytics and risk management to support investment decisions across its portfolio.

💡 Robinhood

Leverages Python for backend services, data pipelines, and fraud detection algorithms.

Getting Started with Python in Finance

Want to get your hands dirty? Here are a few resources:

Books:

Python for Finance by Yves Hilpisch

Machine Learning for Asset Managers by Marcos López de Prado

Online Courses:

Coursera: Python and Statistics for Financial Analysis

Udemy: Python for Financial Analysis and Algorithmic Trading

Practice Platforms:

QuantConnect

Alpaca

Interactive Brokers API

Final Thoughts

Python is transforming the financial industry by providing powerful tools to analyze data, build models, and automate trading. Whether you're a finance student, a data analyst, or a hedge fund quant, learning Python opens up a world of possibilities.

As finance becomes increasingly data-driven, Python will continue to be a key differentiator in gaining insights and making informed decisions.

Do you work in finance or aspire to? Want help building your first Python financial model? Let me know, and I’d be happy to help!

#outfit#branding#financial services#investment#finance#financial advisor#financial planning#financial wellness#financial freedom#fintech

Text

Highest Paying Machine Learning Jobs in Chennai and How to Get Them

Machine Learning (ML) is transforming the way industries operate—from automating business decisions to enabling predictive insights in healthcare, finance, and retail. Chennai, one of India’s fastest-growing tech hubs, has seen a significant rise in demand for skilled ML professionals. With global IT giants and promising startups setting up shop here, the city is offering some of the highest-paying machine learning jobs in India.

If you're planning to take a Machine Learning Course in Chennai, you're already on the right path. But what exactly are the roles that pay the most? And how can you land one of them?

This guide will walk you through the top-paying ML jobs in Chennai, salary expectations, required skills, and how to get hired.

Why Are Machine Learning Jobs Booming in Chennai?

1. Presence of Tech Giants

Companies like TCS, Infosys, Accenture, Cognizant, and HCL have large-scale operations in Chennai and are increasingly adopting AI/ML technologies.

2. Rise of Startups and FinTech

Chennai’s startup ecosystem is vibrant, with a growing number of FinTech, HealthTech, and EduTech ventures actively hiring for AI and ML roles.

3. Strong Educational Infrastructure

With prestigious institutions like IIT Madras, Anna University, and top training providers offering ML programs, the city breeds highly skilled talent—fueling industry growth.

Top High-Paying Machine Learning Jobs in Chennai

Here are the most lucrative ML jobs in Chennai, with salary insights and what it takes to land them.

1. Machine Learning Engineer

Average Salary: ₹9–18 LPA

Top Employers: Amazon, Zoho, TCS, Tiger Analytics

What You Do: Develop, train, and deploy ML models to solve real-world problems such as fraud detection, recommendation systems, and process automation.

Must-Have Skills: Python, Scikit-learn, TensorFlow, data preprocessing, model optimization

2. Data Scientist (ML Focus)

Average Salary: ₹10–20 LPA

Top Employers: LatentView, Freshworks, Ford Analytics, BankBazaar

What You Do: Use ML algorithms to analyze data, uncover patterns, and build predictive models that support decision-making.

Must-Have Skills: Python, R, SQL, data visualization, ML algorithms, business acumen

3. AI/ML Research Scientist

Average Salary: ₹12–25 LPA

Top Employers: IIT Madras Research Park, Accenture AI Labs, Saama Technologies

What You Do: Conduct advanced research in neural networks, deep learning, and NLP, often publishing papers or filing patents.

Must-Have Skills: Python, PyTorch, reinforcement learning, mathematical modeling, publications

4. Computer Vision Engineer

Average Salary: ₹10–22 LPA

Top Employers: Detect Technologies, Mad Street Den, L&T Smart World

What You Do: Create AI models that interpret images and video, often used in facial recognition, surveillance, and automation.

Must-Have Skills: OpenCV, TensorFlow, image processing, deep learning, CNNs

5. NLP Engineer (Natural Language Processing)

Average Salary: ₹9–18 LPA

Top Employers: Zoho, Kissflow, ThoughtWorks

What You Do: Design models that process and analyze human language, such as chatbots, translation engines, and sentiment analyzers.

Must-Have Skills: NLTK, spaCy, BERT, Transformers, sentiment analysis

6. Machine Learning Consultant

Average Salary: ₹15–28 LPA

Top Employers: Deloitte, KPMG, PwC, Cognizant AI Advisory

What You Do: Advise businesses on implementing ML solutions to improve efficiency and reduce costs.

Must-Have Skills: Business strategy, ML tools, client management, cross-functional communication

7. AI Product Manager (with ML background)

Average Salary: ₹20–35 LPA

Top Employers: Freshworks, Chargebee, Zoho

What You Do: Oversee the development of AI-powered products, manage teams, and translate technical features into business value.

Must-Have Skills: Product lifecycle, ML understanding, Agile, UX design thinking, stakeholder communication

How to Get High-Paying ML Jobs in Chennai

Now that you know which roles pay well, here’s how to get one:

1. Enroll in a Machine Learning Course in Chennai

A well-structured Machine Learning Course in Chennai gives you the foundation to pursue these roles. Choose a program that offers:

Classroom interaction with experts

Hands-on projects and capstone assignments

Resume and placement assistance

Certifications recognized by industry leaders

2. Build a Strong Portfolio

Employers want proof that you can apply your skills in the real world. Some project ideas include:

Fraud detection using logistic regression

Customer churn prediction

Image classification with CNNs

Resume screening using NLP

Host your work on GitHub, showcase it on LinkedIn, and write about it on Medium or Kaggle.

3. Get Certified

Supplement your classroom training with certifications from:

Google (ML Crash Course)

IBM Applied AI Professional Certificate

AWS Machine Learning Specialty

TensorFlow Developer Certification

Certifications increase credibility and visibility on platforms like Naukri, LinkedIn, and Glassdoor.

4. Practice Interview Questions

Use platforms like:

LeetCode

InterviewBit

StrataScratch

HackerRank

Focus on ML-specific questions, data structures, and algorithms.

5. Attend Chennai-Based ML Meetups & Conferences

Events like PyData Chennai, DataHack, and The Fifth Elephant provide networking opportunities and expose you to real-world problems.

You’ll also discover new job openings and get tips directly from recruiters and hiring managers.

Mistakes to Avoid When Starting an ML Career in Chennai

Focusing only on theory: Practical project work matters more in interviews.

Skipping fundamentals: Without understanding data structures, algorithms, and math, you’ll struggle in advanced ML.

No specialization: Try to develop niche skills like NLP, Computer Vision, or MLOps.

Ignoring soft skills: Communication and business understanding are essential, especially in consulting and product roles.

Final Thoughts

Chennai is no longer just a back-end IT city. It's evolving into a machine learning and AI powerhouse. With companies across sectors investing heavily in AI, now is the perfect time to upskill and capitalize on the demand.

Enrolling in a well-rounded Machine Learning Course in Chennai is your first step toward high-paying, future-ready jobs. With the right guidance, hands-on experience, and career support, such as that offered by Boston Institute of Analytics, you can break into top-paying roles and build a stable, high-growth career in this exciting domain.

#Best Data Science Courses in Chennai#Artificial Intelligence Course in Chennai#Data Scientist Course in Chennai#Machine Learning Course in Chennai

Text

Chatbot Python projects in chennai

Build intelligent assistants with Chatbot Python Projects in Chennai! Learn to develop AI-powered chatbots using Python libraries like NLTK, TensorFlow, and Rasa. Project centers offer hands-on training, real-time implementation, and expert mentorship. Ideal for final year students, these projects enhance your skills in natural language processing and automation. Kickstart your career with practical chatbot development experience in Chennai!

Text

What It Takes to Build a Modern AI Chatbot: Tools, Tech, and Tactics

Artificial intelligence has fundamentally transformed how businesses interact with customers, and AI chatbots are at the forefront of this change. These intelligent systems are now integrated into websites, apps, and messaging platforms to provide real-time support, automate tasks, and deliver enhanced user experiences. But what goes into developing an AI chatbot that is not only technically sound but also user-friendly? This blog dives deep into the full spectrum of AI chatbot development—from the algorithms powering their intelligence to the nuances of user experience design.

Understanding the Core of AI Chatbots

AI chatbots are software applications designed to simulate human-like conversations with users through natural language. Unlike rule-based bots that rely on pre-defined scripts and decision trees, AI-powered chatbots use natural language processing (NLP), machine learning (ML), and, in more advanced cases, large language models (LLMs) to understand and respond intelligently. The core components of AI chatbots typically include a language understanding module, a dialogue management system, and a natural language generation component. These modules work together to interpret user queries, determine intent, and formulate responses that feel natural and contextually appropriate.

Natural Language Processing: The Brain Behind the Bot

Natural language processing is at the heart of every AI chatbot. NLP allows machines to understand, interpret, and generate human language in a meaningful way. It involves several sub-processes such as tokenization, stemming, part-of-speech tagging, named entity recognition, and sentiment analysis. These processes enable the chatbot to break down user input and derive meaningful insights. More advanced NLP systems incorporate context management, enabling the chatbot to remember previous parts of a conversation and respond in a way that makes the interaction feel coherent. Libraries such as spaCy, NLTK, and Hugging Face Transformers provide the foundation for building these language pipelines.
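The first two sub-processes, tokenization and stemming, can be sketched without any library. This is a deliberately naive illustration: the suffix list and function names are assumptions, and production stemmers (for example the Porter stemmer shipped with NLTK) apply far more careful rules.

```python
import re

SUFFIXES = ("ing", "ed", "ly", "es", "s")  # illustrative, far from complete

def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())

def naive_stem(token):
    """Strip the first matching suffix, keeping at least three characters."""
    for suffix in SUFFIXES:
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token
```

Running `[naive_stem(t) for t in tokenize("Tracking my orders")]` reduces "tracking" and "orders" to "track" and "order", so that different surface forms of a word map to the same feature downstream.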

Machine Learning Models That Drive Intelligence

Machine learning takes chatbot development beyond static scripting into the realm of dynamic learning. Through supervised, unsupervised, or reinforcement learning, chatbots can be trained to improve over time. Supervised learning involves training models on labeled datasets, allowing the bot to understand what correct responses look like. Unsupervised learning helps in clustering and categorizing large volumes of user queries, which is helpful for refining intent recognition. Reinforcement learning, although more complex, allows the chatbot to learn through interaction, optimizing its responses based on feedback loops. These models are trained using frameworks like TensorFlow, PyTorch, or Keras, depending on the complexity and desired outcome of the chatbot.
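As a toy illustration of the supervised case, the sketch below learns word counts per intent from labeled queries and scores new queries against them. It stands in for the neural models mentioned above and is not a substitute for them; the function names and the sample intents are hypothetical.

```python
from collections import Counter, defaultdict

def train_intent_model(labeled_examples):
    """Count word occurrences per intent from (text, intent) pairs."""
    word_counts = defaultdict(Counter)
    for text, intent in labeled_examples:
        word_counts[intent].update(text.lower().split())
    return word_counts

def predict_intent(model, text):
    """Pick the intent whose training words overlap most with the query."""
    words = text.lower().split()
    scores = {
        intent: sum(counts[word] for word in words)
        for intent, counts in model.items()
    }
    return max(scores, key=scores.get)

# Hypothetical labeled training data.
TRAINING_DATA = [
    ("where is my order", "track_order"),
    ("track my package", "track_order"),
    ("i want a refund", "refund"),
    ("refund my money", "refund"),
]
```

Retraining on newly labeled conversations is the simplest form of the "improve over time" loop: append the corrected examples to the training data and call `train_intent_model` again.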

Dialogue Management: Orchestrating the Conversation Flow

While NLP and ML handle the interpretation and learning aspects, dialogue management governs how a chatbot responds and keeps the conversation flowing. This component determines the chatbot’s next action based on the identified intent, user history, and business goals. A good dialogue manager manages state transitions, tracks user inputs across turns, and routes conversations toward successful resolutions. Frameworks like Rasa and Microsoft Bot Framework offer built-in dialogue management capabilities that support contextual conversations, fallback mechanisms, and multi-turn dialogue flows.
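One simple way to picture a dialogue manager is as a state machine keyed on the current state and the detected intent. The sketch below is an assumption-laden toy, not the Rasa or Microsoft Bot Framework API; the states, intents, and replies are invented.

```python
class DialogueManager:
    """Tiny state machine: (state, intent) -> (next state, reply)."""

    TRANSITIONS = {
        ("start", "track_order"): ("awaiting_order_id", "Sure, what is your order number?"),
        ("awaiting_order_id", "provide_order_id"): ("start", "Thanks, looking that up now."),
    }
    FALLBACK_REPLY = "Sorry, I did not understand. Could you rephrase?"

    def __init__(self):
        self.state = "start"
        self.history = []  # intents seen so far, across turns

    def handle(self, intent):
        """Advance the conversation and return the bot's reply."""
        self.history.append(intent)
        # Unknown (state, intent) pairs keep the state and trigger the fallback.
        next_state, reply = self.TRANSITIONS.get(
            (self.state, intent), (self.state, self.FALLBACK_REPLY)
        )
        self.state = next_state
        return reply
```

Keeping the fallback inside the transition lookup means an unexpected intent never loses the user's place in a multi-turn flow; the bot simply asks again from the same state.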

Integrating APIs and External Systems

Modern chatbots are rarely standalone systems. They are often integrated with CRM platforms, databases, e-commerce engines, and other enterprise systems through APIs. This connectivity allows the chatbot to perform actions like retrieving order details, booking appointments, or updating user profiles in real-time. API integration plays a critical role in turning the chatbot from a passive responder into an active digital assistant. Developers must ensure these integrations are secure, scalable, and responsive to avoid delays or data inconsistencies in user interactions.

Designing Conversational UX: Balancing Functionality and Usability

Beyond algorithms and data structures, chatbot development demands an equal focus on conversational user experience (UX). This involves designing dialogue flows that feel intuitive, natural, and helpful. A chatbot’s UX determines how users perceive the quality of the interaction. Key aspects of conversational UX include tone of voice, prompt design, context handling, and error recovery. A good UX avoids robotic responses, manages user frustration gracefully, and keeps the conversation aligned with user intent. Developers and designers often collaborate using tools like Botmock, Voiceflow, or Adobe XD to prototype and test conversational flows before implementation.

Choosing the Right Platform for Deployment

Once the chatbot is developed, choosing the right deployment platform is crucial. Depending on the target audience, the bot may be deployed on websites, mobile apps, social media platforms, or messaging services like WhatsApp, Facebook Messenger, and Slack. Each platform comes with its own user behavior patterns and technical constraints. For example, web-based chatbots might require live chat handover capabilities, while messaging platforms need to comply with message rate limits and approval policies. Developers need to account for platform-specific SDKs and APIs while ensuring a consistent brand voice across channels.

Data Collection and Continuous Improvement

The work doesn't end after deployment. AI chatbots require continuous monitoring, feedback collection, and optimization. Data from user interactions must be anonymized and analyzed to understand where the chatbot performs well and where it falls short. Developers use this feedback loop to retrain models, refine dialogue flows, and improve response accuracy. Features such as analytics dashboards, A/B testing, and heatmaps help teams track user engagement and conversion metrics. The goal is to ensure the chatbot evolves in alignment with user needs and business objectives.

Addressing Privacy, Ethics, and Compliance

As AI chatbots handle increasing volumes of personal data, developers must prioritize privacy, ethics, and legal compliance. Depending on the jurisdiction, data regulations such as GDPR, CCPA, or HIPAA may apply. Developers must implement proper data encryption, anonymization techniques, and secure data storage practices. Moreover, ethical considerations like bias in language models, inappropriate content filtering, and transparency in AI decision-making must be addressed. Providing users with clear disclaimers and opt-out options is not just a best practice—it’s a requirement for building trust.
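A basic form of anonymization is redacting obvious identifiers before a message is logged. The sketch below uses two illustrative regular expressions for e-mail addresses and phone-like numbers; they are assumptions that will miss many formats, and they do not by themselves satisfy GDPR, CCPA, or HIPAA.

```python
import re

# Illustrative patterns only; real PII detection needs much broader coverage.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_PATTERN = re.compile(r"\+?\d[\d\s().-]{7,}\d")

def redact_pii(message):
    """Replace e-mail addresses and phone-like numbers with placeholders."""
    message = EMAIL_PATTERN.sub("[EMAIL]", message)
    return PHONE_PATTERN.sub("[PHONE]", message)
```

Applying `redact_pii` at the point where transcripts are written to storage keeps raw identifiers out of analytics and retraining datasets, while short numbers such as order IDs pass through unchanged.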

Leveraging Pre-Trained Models and LLMs

In recent years, the availability of large pre-trained language models such as GPT, BERT, and Claude has accelerated chatbot development. These models offer advanced conversational abilities out of the box and can be fine-tuned on domain-specific data to create highly intelligent and responsive bots. While using LLMs reduces development time, it also raises considerations around cost, performance latency, and content moderation. Developers can either use APIs from providers like OpenAI or build private LLMs on secure infrastructure for better data control.

Multilingual and Multimodal Capabilities

As businesses expand globally, multilingual capabilities in chatbots have become essential. Some multilingual NLP models now cover around 100 languages, allowing chatbots to interact with users across regions, though quality varies by language. Additionally, multimodal chatbots that combine text, voice, and visual elements offer richer interactions. For instance, a chatbot can respond with product images, QR codes, or even generate voice responses for accessibility. Incorporating these features enhances the overall user experience and makes the chatbot more inclusive.

Challenges in AI Chatbot Development

Despite the advancements, AI chatbot development comes with its share of challenges. Achieving high intent recognition accuracy in ambiguous queries, managing long contextual conversations, and avoiding inappropriate or irrelevant responses remain technical hurdles. There is also the risk of over-promising the chatbot’s capabilities, leading to user frustration. Striking a balance between automation and human handoff is critical for ensuring reliability. These challenges require a combination of strong engineering, rigorous testing, and ongoing user feedback to overcome effectively.

Custom-Built vs. White-Label Chatbots

Businesses often face the decision of building custom chatbots from scratch or leveraging white-label solutions. Custom-built chatbots offer more flexibility, brand alignment, and control over features. However, they require greater investment in time, expertise, and resources. On the other hand, white-label chatbot platforms offer pre-built functionalities, faster deployment, and reduced costs, making them ideal for SMEs and startups. The choice depends on the complexity of use cases, scalability needs, and long-term strategic goals.

Future of AI Chatbots: Beyond Text-Based Interactions

The future of AI chatbot development points toward hyper-personalization, emotion-aware interactions, and integration with advanced AI agents. With advancements in sentiment analysis and affective computing, chatbots will soon be able to adapt their tone and responses based on a user’s emotional state. Integration with IoT, AR/VR, and wearable devices will further extend the capabilities of AI bots beyond text and voice, enabling them to assist users in immersive environments. This evolution will make chatbots central to the next generation of human-computer interaction.

Conclusion: Building Intelligent Bots That Users Actually Want

AI chatbot development is a multidisciplinary journey that spans algorithms, design, engineering, and psychology. A successful chatbot must be intelligent enough to understand nuanced language, yet simple and empathetic enough to engage users effectively. From core NLP and machine learning models to conversational UX and ethical compliance, each layer contributes to the bot’s performance and perception. As businesses adopt more AI-driven solutions, the role of chatbots will only grow in scope and significance. Developing them thoughtfully—with a balance of innovation, usability, and responsibility—is key to delivering experiences users can trust and enjoy.

Text

What AI Skills Will Make You the Most Money in 2025? Here's the Inside Scoop

If you’ve been even slightly tuned into the tech world, you’ve heard it: AI is taking over. But here’s the good news—it’s not here to replace everyone; it’s here to reward those who get ahead of the curve. The smartest move you can make right now? Learn AI skills that are actually in demand and highly paid.

We're stepping into a world where AI is not just automating jobs, it’s creating new, high-paying careers—and they’re not all for coders. Whether you’re a techie, creative, strategist, or entrepreneur, there’s something in AI that can fuel your next big leap.

So, let’s break down the 9 most income-generating AI skills for 2025, what makes them hot, and how you can start developing them today.

1. Machine Learning (ML) Engineering

Machine learning is the brain behind modern AI. From YouTube recommendations to fraud detection, it powers everything.

Why it pays: Businesses are using ML to cut costs, boost sales, and predict customer behavior. ML engineers can expect salaries from $130,000 to $180,000+ depending on experience and location.

What to learn: Python, TensorFlow, PyTorch, data modeling, algorithms

Pro tip: Get hands-on with Kaggle competitions to build your portfolio.

2. Natural Language Processing (NLP)

NLP is how machines understand human language—think ChatGPT, Alexa, Grammarly, or AI content moderation.

Why it pays: NLP is exploding thanks to chatbots, AI customer support, and automated content. Salaries range between $110,000 to $160,000.

What to learn: spaCy, NLTK, BERT, GPT models, tokenization, sentiment analysis

Real-life bonus: If you love languages and psychology, NLP blends both.
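To make "tokenization" and "sentiment analysis" a little more concrete, here is a minimal sketch in plain Python. It does not use spaCy or NLTK; the tiny `LEXICON` and its scores are made up purely for illustration, and real tools rely on much larger lexicons or trained models.

```python
import re

# Toy sentiment lexicon. These words and scores are hypothetical,
# chosen only to illustrate the idea of lexicon-based scoring.
LEXICON = {
    "love": 2.0,
    "great": 1.5,
    "good": 1.0,
    "bad": -1.0,
    "terrible": -2.0,
    "hate": -2.0,
}


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def sentiment_score(text):
    """Sum the lexicon scores of all tokens; unknown words count as 0."""
    return sum(LEXICON.get(token, 0.0) for token in tokenize(text))


def label(text):
    """Map the numeric score to a coarse sentiment label."""
    score = sentiment_score(text)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


if __name__ == "__main__":
    for sentence in ["I love this, it's great!", "The service was terrible."]:
        print(sentence, "->", tokenize(sentence), label(sentence))
```

A word-counting approach like this misses negation and sarcasm, which is one reason the field moved toward models such as BERT.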

3. AI Product Management

Not all high-paying AI jobs require coding. AI Product Managers lead AI projects from concept to launch.

Why it pays: Every tech company building AI features needs a PM who gets it. These roles can bring in $120,000 to $170,000, and more in startups with equity.

What to learn: Basics of AI, UX, Agile methodologies, data analysis, prompt engineering

Starter tip: Learn how to translate business problems into AI product features.

4. Computer Vision

This is the tech that lets machines "see" — powering facial recognition, self-driving cars, and even AI-based medical imaging.

Why it pays: Industries like healthcare, retail, and automotive are investing heavily in vision-based AI. Salaries are typically $130,000 and up.

What to learn: OpenCV, YOLO, object detection, image classification, CNNs (Convolutional Neural Networks)

Why it’s hot: The AR/VR boom is only just beginning—and vision tech is at the center.

5. AI-Driven Data Analysis

Data is gold, but AI turns it into actionable insights. Data analysts who can use AI to automate reports and extract deep trends are in high demand.

Why it pays: AI-powered analysts often pull $90,000 to $130,000, and can climb higher in enterprise roles.

What to learn: SQL, Python (Pandas, NumPy), Power BI, Tableau, AutoML tools

Great for: Anyone who loves solving puzzles with numbers.

6. Prompt Engineering

Yes, it’s a real job now. Prompt engineers design inputs for AI tools like ChatGPT or Claude to get optimal results.

Why it pays: Businesses pay up to $250,000 a year for prompt experts because poorly written prompts can cost time and money.

What to learn: How LLMs work, instruction tuning, zero-shot vs. few-shot prompting, language logic

Insider fact: Even content creators are using prompt engineering to boost productivity and generate viral ideas.
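The difference between zero-shot and few-shot prompting is easy to see in code. The sketch below only builds prompt strings; it does not call any AI service, and the function names and the "Text:/Label:" layout are assumptions made for this example rather than a required format.

```python
def zero_shot_prompt(task, text):
    """Build a prompt that states the task and gives no worked examples."""
    return f"{task}\n\nText: {text}\nLabel:"


def few_shot_prompt(task, examples, text):
    """Build a prompt that shows a few labeled examples before the real input."""
    lines = [task, ""]
    for example_text, example_label in examples:
        lines.append(f"Text: {example_text}")
        lines.append(f"Label: {example_label}")
        lines.append("")  # blank line between examples
    lines.append(f"Text: {text}")
    lines.append("Label:")
    return "\n".join(lines)


if __name__ == "__main__":
    task = "Classify the review as positive or negative."
    examples = [
        ("Fast shipping and great quality.", "positive"),
        ("Broke after two days.", "negative"),
    ]
    review = "Customer support never answered my emails."

    print(zero_shot_prompt(task, review))
    print("-" * 40)
    print(few_shot_prompt(task, examples, review))
```

Few-shot prompts cost more tokens, but the examples show the model the exact output format you expect.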

7. AI Ethics and Policy

As AI becomes mainstream, the need for regulation, fairness, and transparency is growing fast. Enter AI ethicists and policy strategists.

Why it pays: Roles range from $100,000 to $160,000, especially in government, think tanks, and large corporations.

What to learn: AI bias, explainability, data privacy laws, algorithmic fairness

Good fit for: People with legal, social science, or philosophical backgrounds.

8. Generative AI Design

If you’re a designer, there’s gold in gen AI tools. Whether it’s building AI-powered logos, animations, voiceovers, or 3D assets—creativity now meets code.

Why it pays: Freelancers can earn $5,000+ per project, and full-time creatives can make $100,000+ if they master the tools.

What to learn: Midjourney, Adobe Firefly, RunwayML, DALL·E, AI video editors

Hot tip: Combine creativity with some basic scripting (Python or JavaScript) and you become unstoppable.

9. AI Integration & Automation (No-Code Tools)

Not a tech whiz? No problem. If you can use tools like Zapier, Make.com, or Notion AI, you can build automation flows that solve business problems.

Why it pays: Businesses pay consultants $80 to $200+ per hour to set up custom AI workflows.

What to learn: Zapier, Make, Airtable, ChatGPT APIs, Notion, AI chatbots

Perfect for: Entrepreneurs and freelancers looking to scale fast without hiring.

How to Get Started Without Burning Out

Pick one lane. Don’t try to learn everything. Choose one skill based on your background and interest.

Use free platforms. Coursera, YouTube, and Google’s AI courses offer incredible resources.

Practice, don’t just watch. Build projects, join AI communities, and ask for feedback.

Show your work. Post projects on GitHub, Medium, or LinkedIn. Even small ones count.

Stay updated. AI changes fast. Follow influencers, subscribe to newsletters, and keep tweaking your skills.

Real Talk: Do You Need a Degree?

Nope. Many high-earning AI professionals are self-taught. What really counts is your ability to solve real-world problems using AI tools. If you can do that and show results, you’re golden.

Even companies like Google, Meta, and OpenAI look at what you can do, not just your college transcript.

Final Thoughts

AI isn’t some far-off future—it’s happening right now. The people who are getting rich off this tech are not just coding geniuses or math wizards. They’re creators, problem-solvers, and forward thinkers who dared to learn something new.

The playing field is wide open—and if you start today, 2025 could be your most profitable year yet.

So which skill will you start with?

0 notes

Text

Chatbot Python Projects in Chennai

Looking to build smart chatbots using Python? Explore the best opportunities for chatbot Python projects in Chennai, where innovation meets real-world applications. Python is the preferred language for AI-driven chatbots, thanks to libraries like NLTK, TensorFlow, and ChatterBot. Project centers in Chennai offer hands-on training in designing, developing, and deploying chatbots for the customer service, education, and e-commerce sectors.

0 notes

Text

Chatbot Python Projects in Chennai

Building Chatbots with Python in Chennai: A Growing Tech Trend

Chatbots are revolutionizing customer interactions, and Chennai is becoming a hub for AI-driven chatbot development. With Python’s powerful libraries like NLTK, TensorFlow, and Flask, developers are creating intelligent bots for businesses. From e-commerce to customer support, chatbot technology is reshaping industries. Aspiring developers in Chennai can explore courses, internships, and projects to dive into this fast-evolving field.


0 notes

Text

Chatbot Python Projects in Chennai

Python chatbot development addresses the growing demand for conversational AI solutions. Students work with NLTK, spaCy, and Rasa to create intelligent virtual assistants for various industries. These projects cover natural language understanding and dialogue management. Chatbot implementation can reduce customer service costs by 50% while improving user satisfaction and response times. Chennai's service-oriented industries, including banking and healthcare, actively adopt chatbot technologies, creating excellent career opportunities.
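As a rough illustration of natural language understanding and response selection, here is a minimal keyword-based sketch in plain Python. It does not use the NLTK, spaCy, or Rasa APIs; the intent names, keywords, and replies are hypothetical, and production systems typically replace the keyword overlap with a trained intent classifier.

```python
import re

# Hypothetical intents and trigger keywords for a banking/healthcare-style bot.
INTENT_KEYWORDS = {
    "greet": {"hello", "hi", "hey"},
    "check_balance": {"balance", "account"},
    "book_appointment": {"appointment", "book", "doctor"},
}

# Hypothetical canned replies, one per intent plus a fallback.
RESPONSES = {
    "greet": "Hello! How can I help you today?",
    "check_balance": "I can help with that. Please confirm your account number.",
    "book_appointment": "Sure. Which day works best for your appointment?",
    "fallback": "Sorry, I did not understand that. Could you rephrase?",
}


def detect_intent(message):
    """Pick the intent whose keywords overlap most with the message tokens."""
    tokens = set(re.findall(r"[a-z]+", message.lower()))
    best_intent, best_overlap = "fallback", 0
    for intent, keywords in INTENT_KEYWORDS.items():
        overlap = len(tokens & keywords)
        if overlap > best_overlap:
            best_intent, best_overlap = intent, overlap
    return best_intent


def reply(message):
    """Return the canned response for the detected intent."""
    return RESPONSES[detect_intent(message)]


if __name__ == "__main__":
    for message in ["Hi there", "What is my account balance?", "Tell me a joke"]:
        print(f"User: {message}")
        print(f"Bot:  {reply(message)}")
```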

0 notes

Text

Project Title: AI-Driven Social Media Sentiment Temporal Evolution Analysis using Pandas, VADER, and Dynamic Topic Modeling

Part 1: Here is your advanced AI/ML/Data Science project, now available as a code file in the canvas below:

    # ai_driven_social_media_sentiment_analysis_dashboard.py
    import streamlit as st
    import pandas as pd
    import numpy as np
    import seaborn as sns
    import matplotlib.pyplot as plt
    import nltk
    from nltk.sentiment.vader import SentimentIntensityAnalyzer
    from sklearn.feature_extraction.text import…

0 notes