#numeric data types in sql


Structured Query Language (SQL): A Comprehensive Guide

Structured Query Language, popularly called SQL (pronounced "ess-cue-ell" or sometimes "sequel"), is the standard language used for managing and manipulating relational databases. Developed in the early 1970s by IBM researchers Donald D. Chamberlin and Raymond F. Boyce, SQL has since become the dominant language for database systems around the world.

Structured query language commands with examples

Today, virtually every major relational database management system (RDBMS), such as MySQL, PostgreSQL, Oracle, SQL Server, and SQLite, uses SQL as its core query language.

What is SQL?

SQL is a domain-specific language used to:

Retrieve data from a database.

Insert, update, and delete data.

Create and modify database structures (tables, indexes, views).

Manage access permissions and security.

Perform data analytics and reporting.

In simple terms, SQL lets users communicate with databases to store and retrieve structured data.

Key Characteristics of SQL

Declarative Language: SQL focuses on what to do, not how to do it. For instance, when you write SELECT * FROM users, you don’t need to tell SQL how to fetch the data; the database figures that out.

Standardized: SQL has been standardized by bodies such as ANSI and ISO, with most database systems implementing the core language and adding their own extensions.

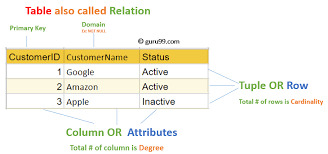

Relational Model-Based: SQL is designed to work with tables (also called relations), in which data is organized in rows and columns.

Core Components of SQL

SQL can be broken down into several main categories of commands, each with a distinct purpose.

1. Data Definition Language (DDL)

DDL commands are used to define or modify the structure of database objects such as tables, schemas, and indexes.

Common DDL commands:

CREATE: To create a new table or database.

ALTER: To modify an existing table (add or remove columns).

DROP: To delete a table or database.

TRUNCATE: To delete all rows from a table but keep its structure.

Example:


CREATE TABLE employees (
    id INT PRIMARY KEY,
    name VARCHAR(100),
    salary DECIMAL(10,2)
);
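To try the DDL commands without installing a database server, here is a minimal sketch that runs them through Python's built-in `sqlite3` module against a throwaway in-memory SQLite database. It assumes SQLite's dialect: SQLite has no `TRUNCATE` statement, so a `DELETE` without a `WHERE` clause stands in for it, and the `hire_date` column is a hypothetical addition for the `ALTER` step.

```python
import sqlite3

# In-memory database: nothing is written to disk.
conn = sqlite3.connect(":memory:")

# CREATE: define a table with an id, a name, and a salary.
conn.execute("""
    CREATE TABLE employees (
        id INT PRIMARY KEY,
        name VARCHAR(100),
        salary DECIMAL(10,2)
    )
""")


def column_names(connection, table):
    """Return a table's column names via SQLite's table_info pragma.

    The table name is formatted into the SQL, so only pass trusted names.
    """
    return [row[1] for row in connection.execute(f"PRAGMA table_info({table})")]


# ALTER: add a (hypothetical) hire_date column to the existing table.
conn.execute("ALTER TABLE employees ADD COLUMN hire_date TEXT")
print(column_names(conn, "employees"))  # ['id', 'name', 'salary', 'hire_date']

# TRUNCATE equivalent in SQLite: delete every row but keep the table itself.
conn.execute("INSERT INTO employees (id, name, salary) VALUES (1, 'Alice Johnson', 75000.00)")
conn.execute("DELETE FROM employees")
print(conn.execute("SELECT COUNT(*) FROM employees").fetchone()[0])  # 0

# DROP: remove the table and its definition entirely.
conn.execute("DROP TABLE employees")
print(column_names(conn, "employees"))  # []
```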

2. Data Manipulation Language (DML)

DML commands are used for data operations such as inserting, updating, or deleting records.

Common DML commands:

SELECT: Retrieve data from one or more tables.

INSERT: Add new records.

UPDATE: Modify existing records.

DELETE: Remove records.

Example:


INSERT INTO employees (id, name, salary)
VALUES (1, 'Alice Johnson', 75000.00);
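The four DML commands can be exercised together in a short sketch, again assuming Python's built-in `sqlite3` module and an in-memory SQLite database; the rows other than Alice Johnson's are made-up sample data. The `?` placeholders are the module's way of binding values, which is safer than formatting them into the SQL string.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE employees (id INT PRIMARY KEY, name VARCHAR(100), salary DECIMAL(10,2))"
)

# INSERT: add several records in one call; values are bound to the ? placeholders.
conn.executemany(
    "INSERT INTO employees (id, name, salary) VALUES (?, ?, ?)",
    [
        (1, "Alice Johnson", 75000.00),
        (2, "Bob Smith", 58000.00),   # hypothetical sample row
        (3, "Carol Diaz", 91000.00),  # hypothetical sample row
    ],
)

# UPDATE: modify an existing record.
conn.execute("UPDATE employees SET salary = ? WHERE id = ?", (62000.00, 2))

# DELETE: remove a record.
conn.execute("DELETE FROM employees WHERE id = ?", (3,))
conn.commit()

# SELECT: read back what is left (Alice, plus Bob with his updated salary).
remaining = conn.execute("SELECT id, name, salary FROM employees ORDER BY id").fetchall()
print(remaining)
```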

3. Data Query Language (DQL)

Some experts separate SELECT from DML and treat it as its own category: DQL.

Example:


SELECT name, salary FROM employees WHERE salary > 60000;

This command retrieves names and salaries of employees earning more than 60,000.
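As a sketch, the salary filter can be wrapped in a small Python function using the built-in `sqlite3` module; the three employees are hypothetical sample data, and the `ORDER BY name` is an addition so the output order is predictable (without it, SQL makes no ordering guarantee).

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (id INT PRIMARY KEY, name VARCHAR(100), salary DECIMAL(10,2))")
conn.executemany(
    "INSERT INTO employees VALUES (?, ?, ?)",
    [(1, "Alice Johnson", 75000), (2, "Bob Smith", 58000), (3, "Carol Diaz", 91000)],
)


def earners_above(connection, threshold):
    """Return (name, salary) pairs for employees earning more than threshold."""
    return connection.execute(
        "SELECT name, salary FROM employees WHERE salary > ? ORDER BY name",
        (threshold,),
    ).fetchall()


print(earners_above(conn, 60000))  # [('Alice Johnson', 75000), ('Carol Diaz', 91000)]
```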

4. Data Control Language (DCL)

DCL commands handle permissions and access control.

Common DCL commands:

GRANT: Give access to users.

REVOKE: Remove access.

Example:


GRANT SELECT, INSERT ON employees TO john_doe;

5. Transaction Control Language (TCL)

TCL commands manage transactions to ensure data integrity.

Common TCL commands:

BEGIN: Start a transaction.

COMMIT: Save changes.

ROLLBACK: Undo changes.

SAVEPOINT: Set a savepoint inside a transaction.

Example:


BEGIN;
UPDATE employees SET salary = salary * 1.10;
COMMIT;
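The following sketch shows `BEGIN`, `ROLLBACK`, `SAVEPOINT`, and `COMMIT` in action, using Python's built-in `sqlite3` module and an in-memory SQLite database. It assumes `isolation_level=None`, which stops the Python driver from opening transactions on its own so that the SQL statements control them directly; the salary figures are made up.

```python
import sqlite3

# isolation_level=None: the driver issues no implicit BEGIN, so the
# transaction statements below are sent exactly as written.
conn = sqlite3.connect(":memory:", isolation_level=None)
conn.execute("CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, salary INTEGER)")
conn.execute("INSERT INTO employees VALUES (1, 'Alice Johnson', 75000)")


def salary_of(connection, emp_id):
    """Return the current salary of one employee."""
    row = connection.execute("SELECT salary FROM employees WHERE id = ?", (emp_id,)).fetchone()
    return row[0]


# BEGIN ... ROLLBACK: the whole change is undone.
conn.execute("BEGIN")
conn.execute("UPDATE employees SET salary = salary * 2")
conn.execute("ROLLBACK")
print(salary_of(conn, 1))  # 75000

# SAVEPOINT: undo only part of a transaction, then COMMIT the rest.
conn.execute("BEGIN")
conn.execute("UPDATE employees SET salary = salary + 5000")    # kept
conn.execute("SAVEPOINT before_bonus")
conn.execute("UPDATE employees SET salary = salary + 100000")  # undone below
conn.execute("ROLLBACK TO SAVEPOINT before_bonus")
conn.execute("COMMIT")
print(salary_of(conn, 1))  # 80000
```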

SQL Clauses and Syntax Elements

WHERE: Filters rows.

ORDER BY: Sorts results.

GROUP BY: Groups rows that share the same values.

HAVING: Filters groups.

JOIN: Combines rows from two or more tables.
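To see how WHERE, GROUP BY, HAVING, and ORDER BY fit together in a single query, here is a sketch on a hypothetical `employees` table with a `dept` column, run through Python's built-in `sqlite3` module. The SQL comments note what each clause does; the sample rows are chosen so that each clause changes the result.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (name TEXT, dept TEXT, salary INTEGER)")
conn.executemany(
    "INSERT INTO employees VALUES (?, ?, ?)",
    [
        ("Alice", "Engineering", 90000),
        ("Bob", "Engineering", 70000),
        ("Carol", "Sales", 50000),
        ("Dan", "Sales", 45000),
        ("Frank", "Sales", 20000),    # removed by WHERE
        ("Eve", "Support", 60000),    # its group is removed by HAVING
    ],
)

query = """
    SELECT dept, COUNT(*) AS headcount, AVG(salary) AS avg_salary
    FROM employees
    WHERE salary >= 30000          -- WHERE filters individual rows first
    GROUP BY dept                  -- GROUP BY collapses the rows per department
    HAVING COUNT(*) >= 2           -- HAVING filters the resulting groups
    ORDER BY avg_salary DESC       -- ORDER BY sorts the final result
"""
result = conn.execute(query).fetchall()
print(result)  # [('Engineering', 2, 80000.0), ('Sales', 2, 47500.0)]
```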

Example with JOIN:


SELECT employees.name, departments.name
FROM employees
JOIN departments ON employees.dept_id = departments.id;

Types of Joins in SQL

INNER JOIN: Returns records with matching values in both tables.

LEFT JOIN: Returns all records from the left table, and the matched records from the right table.

RIGHT JOIN: The mirror image of LEFT JOIN; returns all records from the right table, and the matched records from the left table.

FULL JOIN: Returns all records from both tables, combining rows wherever there is a match.

SELF JOIN: Joins a table to itself.
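The difference between the join types is easiest to see on a tiny dataset. This sketch, using Python's built-in `sqlite3` module, compares INNER, LEFT, and SELF joins; the `manager_id` column and all rows are hypothetical. RIGHT and FULL joins are left out because SQLite only supports them from version 3.39.0 onward, and the Python build in use may bundle an older release.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE departments (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE employees (
        id INTEGER PRIMARY KEY,
        name TEXT,
        dept_id INTEGER,
        manager_id INTEGER      -- hypothetical column used for the self join
    );
    INSERT INTO departments VALUES (1, 'Engineering'), (2, 'Sales');
    INSERT INTO employees VALUES
        (1, 'Alice', 1, NULL),
        (2, 'Bob',   1, 1),
        (3, 'Carol', NULL, 1);  -- Carol has no department yet
""")

# INNER JOIN: only employees whose dept_id matches a department.
inner = conn.execute("""
    SELECT employees.name, departments.name
    FROM employees
    JOIN departments ON employees.dept_id = departments.id
    ORDER BY employees.id
""").fetchall()

# LEFT JOIN: every employee; the department is NULL (None) where nothing matches.
left = conn.execute("""
    SELECT employees.name, departments.name
    FROM employees
    LEFT JOIN departments ON employees.dept_id = departments.id
    ORDER BY employees.id
""").fetchall()

# SELF JOIN: the employees table joined to itself to pair staff with managers.
managers = conn.execute("""
    SELECT e.name, m.name
    FROM employees AS e
    JOIN employees AS m ON e.manager_id = m.id
    ORDER BY e.id
""").fetchall()

print(inner)     # [('Alice', 'Engineering'), ('Bob', 'Engineering')]
print(left)      # [('Alice', 'Engineering'), ('Bob', 'Engineering'), ('Carol', None)]
print(managers)  # [('Bob', 'Alice'), ('Carol', 'Alice')]
```

Note that the empty Sales department appears in none of these results; only a RIGHT or FULL join would surface it.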

Subqueries and Nested Queries

A subquery is a query nested inside another query.

Example:


SELECT name FROM employees
WHERE salary > (SELECT AVG(salary) FROM employees);

This finds employees who earn more than the average salary.
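A runnable version of the subquery, sketched with Python's built-in `sqlite3` module and three made-up salaries, makes the two steps visible: the inner `SELECT AVG(salary)` produces a single number, and the outer query compares each row against it.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (name TEXT, salary INTEGER)")
conn.executemany(
    "INSERT INTO employees VALUES (?, ?)",
    [("Alice", 90000), ("Bob", 60000), ("Carol", 45000)],  # hypothetical data
)

# The inner query yields one value (here the average, 65000.0);
# the outer query keeps only the rows whose salary exceeds it.
above_average = conn.execute("""
    SELECT name FROM employees
    WHERE salary > (SELECT AVG(salary) FROM employees)
    ORDER BY name
""").fetchall()

print(above_average)  # [('Alice',)]
```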

Functions in SQL

SQL includes built-in functions for performing calculations and formatting:

Aggregate Functions: SUM(), AVG(), COUNT(), MAX(), MIN()

String Functions: UPPER(), LOWER(), CONCAT()

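Date Functions: NOW(), CURDATE(), DATEADD() (availability varies by database: CURDATE() is MySQL-specific, while DATEADD() is SQL Server syntax)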

Conversion Functions: CAST(), CONVERT()
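Function names vary between database systems, so this sketch uses SQLite's equivalents through Python's built-in `sqlite3` module: `||` for string concatenation (SQLite's `CONCAT()` only arrived in version 3.44.0), the `date()` function with a modifier in place of `DATEADD()`, and `CAST` for conversion. The table and its rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (first_name TEXT, last_name TEXT, salary INTEGER, hired TEXT)")
conn.executemany(
    "INSERT INTO employees VALUES (?, ?, ?, ?)",
    [("alice", "johnson", 75000, "2021-03-15"), ("bob", "smith", 58000, "2023-07-01")],
)

# Aggregate functions: one result for the whole table.
totals = conn.execute(
    "SELECT SUM(salary), AVG(salary), COUNT(*), MAX(salary), MIN(salary) FROM employees"
).fetchone()

# String functions: UPPER/LOWER, joined with the || concatenation operator.
names = conn.execute(
    "SELECT UPPER(last_name) || ', ' || LOWER(first_name) FROM employees ORDER BY last_name"
).fetchall()

# Date and conversion functions: date() with a '+1 year' modifier shifts a date,
# and CAST turns the text year into an integer.
dates = conn.execute(
    "SELECT date(hired, '+1 year'), CAST(substr(hired, 1, 4) AS INTEGER) "
    "FROM employees ORDER BY hired"
).fetchall()

print(totals)  # (133000, 66500.0, 2, 75000, 58000)
print(names)   # [('JOHNSON, alice',), ('SMITH, bob',)]
print(dates)   # [('2022-03-15', 2021), ('2024-07-01', 2023)]
```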

Indexes in SQL

An index is used to speed up searches.

Example:


CREATE INDEX idx_name ON employees(name);

Indexes help improve the performance of queries on large tables.
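One way to check that an index is actually used is to ask the database for its query plan. This sketch assumes SQLite (through Python's built-in `sqlite3` module) and its `EXPLAIN QUERY PLAN` command, whose exact wording differs between SQLite versions, so it only looks for the word SCAN and the index name. The 1,000 rows are synthetic.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, salary INTEGER)")
conn.executemany(
    "INSERT INTO employees (name, salary) VALUES (?, ?)",
    [(f"employee_{i}", 40000 + i) for i in range(1000)],  # synthetic rows
)


def plan_for(connection, sql, params=()):
    """Return the human-readable 'detail' text of each step in SQLite's query plan."""
    return [row[-1] for row in connection.execute("EXPLAIN QUERY PLAN " + sql, params)]


lookup = "SELECT salary FROM employees WHERE name = ?"

# Without an index on name, SQLite has to scan every row.
before = plan_for(conn, lookup, ("employee_500",))

# With the index, it can search idx_name instead.
conn.execute("CREATE INDEX idx_name ON employees(name)")
after = plan_for(conn, lookup, ("employee_500",))

print(before)
print(after)
```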

Views in SQL

A view is a virtual table defined by a query.

Example:


CREATE VIEW high_earners AS
SELECT name, salary FROM employees WHERE salary > 80000;

Views are beneficial for:

Security (hide certain columns)

Simplifying complex queries

Reusability
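This sketch, again using Python's built-in `sqlite3` module, shows two of those benefits: the view exposes only the columns it selects (the `ssn` column is a hypothetical sensitive field), and because a view stores a query rather than data, changes to the underlying table appear through it immediately.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- ssn is a hypothetical sensitive column the view should hide.
    CREATE TABLE employees (name TEXT, salary INTEGER, ssn TEXT);
    INSERT INTO employees VALUES
        ('Alice', 95000, '111-11-1111'),
        ('Bob',   60000, '222-22-2222');
    CREATE VIEW high_earners AS
        SELECT name, salary FROM employees WHERE salary > 80000;
""")

# Query the view like a table; only name and salary are visible.
cursor = conn.execute("SELECT * FROM high_earners ORDER BY name")
view_columns = [description[0] for description in cursor.description]
first_result = cursor.fetchall()

# The view stores no rows of its own, so an update to the base table
# is reflected the next time the view is queried.
conn.execute("UPDATE employees SET salary = 85000 WHERE name = 'Bob'")
second_result = conn.execute("SELECT name FROM high_earners ORDER BY name").fetchall()

print(view_columns)   # ['name', 'salary']
print(first_result)   # [('Alice', 95000)]
print(second_result)  # [('Alice',), ('Bob',)]
```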

Normalization in SQL

Normalization is the process of organizing data to reduce redundancy. It involves breaking a database into multiple related tables and defining foreign keys to link them.

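1NF: No repeating groups; each column holds a single, atomic value.

2NF: No partial dependency; every non-key column depends on the whole primary key.

3NF: No transitive dependency; non-key columns depend only on the key, not on other non-key columns.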
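As a sketch of the idea, assuming SQLite through Python's built-in `sqlite3` module: instead of repeating a department name on every employee row, the name is stored once in a `departments` table and referenced by a foreign key. SQLite only enforces foreign keys after `PRAGMA foreign_keys = ON`; the tables and rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite enforces foreign keys only when this pragma is on (per connection).
conn.execute("PRAGMA foreign_keys = ON")

# Normalized layout: the department name lives in one place (departments)
# instead of being repeated on every employee row.
conn.executescript("""
    CREATE TABLE departments (
        id   INTEGER PRIMARY KEY,
        name TEXT NOT NULL UNIQUE
    );
    CREATE TABLE employees (
        id      INTEGER PRIMARY KEY,
        name    TEXT NOT NULL,
        dept_id INTEGER NOT NULL REFERENCES departments(id)
    );
    INSERT INTO departments VALUES (1, 'Engineering');
    INSERT INTO employees VALUES (1, 'Alice', 1), (2, 'Bob', 1);
""")

# Renaming the department is a single-row update that every employee sees.
conn.execute("UPDATE departments SET name = 'Platform Engineering' WHERE id = 1")
staff = conn.execute("""
    SELECT employees.name, departments.name
    FROM employees JOIN departments ON employees.dept_id = departments.id
    ORDER BY employees.id
""").fetchall()

# The foreign key rejects an employee pointing at a department that does not exist.
try:
    conn.execute("INSERT INTO employees VALUES (3, 'Carol', 99)")
    rejected = False
except sqlite3.IntegrityError:
    rejected = True

print(staff)     # [('Alice', 'Platform Engineering'), ('Bob', 'Platform Engineering')]
print(rejected)  # True
```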

SQL in Real-World Applications

Web Development: Most web apps use SQL to manage users, sessions, orders, and content.

Data Analysis: SQL is widely used alongside data analytics tools such as Power BI, Tableau, and even Excel (through Power Query).

Finance and Banking: SQL handles transaction logs, audit trails, and reporting systems.

Healthcare: Managing patient records, treatment histories, and billing.

Retail: Inventory systems, sales analysis, and customer data.

Government and Research: Storing and querying large datasets.

Popular SQL Database Systems

MySQL: Open-source and widely used in web apps.

PostgreSQL: Advanced features and standards compliance.

Oracle DB: Commercial, highly scalable, enterprise-grade.

SQL Server: Microsoft’s relational database.

SQLite: Lightweight, file-based database used in mobile and desktop apps.

Limitations of SQL

SQL can be verbose and complex for certain operations.

Not ideal for unstructured data (NoSQL databases such as MongoDB are better suited).

Vendor-specific extensions can reduce portability.


VPS Windows Hosting in India: The Ultimate Guide for 2024

In the ever-evolving landscape of web hosting, Virtual Private Servers (VPS) have become a preferred choice for both businesses and individuals. Striking a balance between performance, cost-effectiveness, and scalability, VPS hosting serves those seeking more than what shared hosting provides without the significant expense of a dedicated server. Within the myriad of VPS options, VPS Windows Hosting stands out as a popular choice for users who have a preference for the Microsoft ecosystem.

This comprehensive guide will explore VPS Windows Hosting in India, shedding light on its functionality, key advantages, its relevance for Indian businesses, and how to select the right hosting provider in 2024.

What is VPS Windows Hosting?

VPS Windows Hosting refers to a hosting type where a physical server is partitioned into various virtual servers, each operating with its own independent Windows OS. Unlike shared hosting, where resources are shared among multiple users, VPS provides dedicated resources, including CPU, RAM, and storage, which leads to enhanced performance, security, and control.

Why Choose VPS Windows Hosting in India?

The rapid growth of India’s digital landscape and the rise in online businesses make VPS hosting an attractive option. Here are several reasons why Windows VPS Hosting can be an optimal choice for your website or application in India:

Seamless Compatibility: Windows VPS is entirely compatible with Microsoft applications such as ASP.NET, SQL Server, and Microsoft Exchange. For websites or applications that depend on these technologies, Windows VPS becomes a natural option.

Scalability for Expanding Businesses: A notable advantage of VPS hosting is its scalability. As your website or enterprise grows, server resources can usually be upgraded quickly, often with little downtime and without a cumbersome migration. This aspect is vital for startups and SMEs in India aiming to scale economically.

Localized Hosting for Improved Speed: Numerous Indian hosting providers have data centers within the country, minimizing latency and enabling quicker access for local users, which is particularly advantageous for targeting audiences within India.

Enhanced Security: VPS hosting delivers superior security compared to shared hosting, which is essential in an era where cyber threats are increasingly prevalent. Dedicated resources ensure your data remains isolated from others on the same physical server, diminishing the risk of vulnerabilities.

Key Benefits of VPS Windows Hosting

Dedicated Resources: VPS Windows hosting ensures dedicated CPU, RAM, and storage, providing seamless performance, even during traffic surges.

Full Administrative Control: With Windows VPS, you gain full administrator access, allowing you to customize server settings, install applications, and make necessary adjustments.

Cost Efficiency: VPS hosting provides the advantages of dedicated hosting at a more economical price point. This is incredibly beneficial for businesses looking to maintain a competitive edge in India’s market.

Configurability: Whether you require specific Windows applications or custom software, VPS Windows hosting allows you to tailor the server to meet your unique needs.

Managed vs. Unmanaged Options: Depending on your technical ability, you can opt for managed VPS hosting, where the provider manages server maintenance, updates, and security, or unmanaged VPS hosting, where you retain full control of the server and its management.

How to Select the Right VPS Windows Hosting Provider in India

With a plethora of hosting providers in India offering VPS Windows hosting, selecting one that meets your requirements is crucial. Here are several factors to consider:

Performance & Uptime: Choose a hosting provider that guarantees a minimum uptime of 99.9%. Reliable uptime ensures your website remains accessible at all times, which is crucial for any online venture.

Data Center Location: Confirm that the hosting provider has data centers located within India or in proximity to your target users. This will enhance loading speeds and overall user satisfaction.

Pricing & Plans: Evaluate pricing plans from various providers to ensure you’re receiving optimal value. Consider both initial costs and renewal rates, as some providers may offer discounts for longer commitments.

Customer Support: Opt for a provider that offers 24/7 customer support, especially if you lack an in-house IT team. Look for companies that offer support through various channels like chat, phone, and email.

Security Features: Prioritize providers offering robust security features such as firewall protection, DDoS mitigation, automatic backups, and SSL certificates.

Backup and Recovery: Regular backups are vital for data protection. Verify if the provider includes automated backups and quick recovery options for potential issues.

Top VPS Windows Hosting Providers in India (2024)

To streamline your research, here's a brief overview of some of the top VPS Windows hosting providers in India for 2024:

Host.co.in

Recognized for its competitive pricing and exceptional customer support, Host.co.in offers a range of Windows VPS plans catering to businesses of various sizes.

BigRock

Among the most well-known hosting providers in India, BigRock guarantees reliable uptime, superb customer service, and diverse hosting packages, including Windows VPS.

MilesWeb

MilesWeb offers fully managed VPS hosting solutions at attractive prices, making it a great option for businesses intent on prioritizing growth over server management.

GoDaddy

As a leading name in hosting, GoDaddy provides flexible Windows VPS plans designed for Indian businesses, coupled with round-the-clock customer support.

Bluehost India

Bluehost delivers powerful VPS solutions for users requiring high performance, along with an intuitive control panel and impressive uptime.

Conclusion

VPS Windows Hosting in India is an outstanding option for individuals and businesses in search of a scalable, cost-effective, and performance-oriented hosting solution. With dedicated resources and seamless integration with Microsoft technologies, it suits websites that experience growing traffic or require ample resources.

As we advance into 2024, the necessity for VPS Windows hosting is expected to persist, making it imperative to choose a hosting provider that can accommodate your developing requirements. Whether launching a new website or upgrading your existing hosting package, VPS Windows hosting is a strategic investment for the future of your online endeavors.

FAQs

Is VPS Windows Hosting costly in India?

While VPS Windows hosting is pricier than shared hosting, it is much more affordable than dedicated servers and many providers in India offer competitive rates, making it accessible for small and medium-sized enterprises.

Can I upgrade my VPS Windows Hosting plan easily?

Yes, VPS hosting plans offer significant scalability. You can typically increase resources such as CPU, RAM, and storage with little or no downtime, though this depends on the provider.

What type of businesses benefit from VPS Windows Hosting in India?

Businesses that demand high performance, improved security, and scalability find the most advantage in VPS hosting. It’s particularly ideal for sites that utilize Windows-based technologies like ASP.NET and SQL Server.


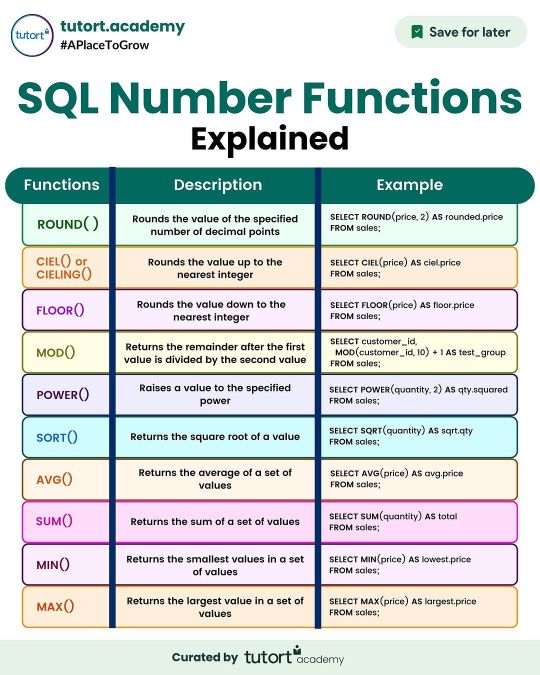

SQL Number Functions🎯

SQL’s numeric functions perform operations on numbers. They take one or more numbers as arguments and return a number as output. Note that numbers may be of different data types, including integers, doubles, and floats💻

SQL numeric functions can be divided into scalar and aggregate functions. Scalar functions compute a result for each input row individually. For example, applying the ABS() function to a column produces the absolute value for each row within that column. On the other hand, aggregate functions operate on values across multiple rows to produce an output – e.g. applying the MAX() function to a column yields the highest value among all rows within that column👩💻
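The scalar/aggregate split can be seen side by side in a short sketch using Python's built-in `sqlite3` module, with made-up readings chosen to be exactly representable as binary floats. The last query also touches on numeric data types: in SQLite (and some other systems, such as PostgreSQL), dividing two integers truncates the result, while involving a floating-point value does not.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE readings (value REAL)")
conn.executemany("INSERT INTO readings VALUES (?)", [(-3.75,), (2.25,), (-1.5,)])

# Scalar function: ABS() produces one output per input row.
per_row = conn.execute("SELECT value, ABS(value) FROM readings ORDER BY rowid").fetchall()

# Aggregate functions: MAX(), MIN(), COUNT() produce one output for all rows.
summary = conn.execute("SELECT MAX(value), MIN(value), COUNT(value) FROM readings").fetchone()

# Numeric types matter: integer / integer truncates, a REAL operand does not.
division = conn.execute("SELECT 7 / 2, 7 / 2.0, CAST(7 AS REAL) / 2").fetchone()

print(per_row)   # [(-3.75, 3.75), (2.25, 2.25), (-1.5, 1.5)]
print(summary)   # (2.25, -3.75, 3)
print(division)  # (3, 3.5, 3.5)
```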

✅ Do follow for more such content👉 @tutort-academy 📍


Computer Language

Computer languages, also known as programming languages, are formal languages used to communicate instructions to a computer. These instructions are written in a syntax that computers can understand and execute. There are numerous programming languages, each with its own syntax, semantics, and purpose. Here are some of the main types of programming languages:

1. Low-Level Languages:

Machine Language: This is the lowest level of programming language, consisting of binary code (0s and 1s) that directly corresponds to instructions executed by the computer's hardware. It is specific to the computer's architecture.

Assembly Language: Assembly language uses mnemonic codes to represent machine instructions. It is a human-readable form of machine language and is closely tied to the computer's hardware architecture.

2. High-Level Languages:

Procedural Languages: Procedural languages, such as C, Pascal, and BASIC, focus on defining sequences of steps or procedures to perform tasks. They use constructs like loops, conditionals, and subroutines.

Object-Oriented Languages: Object-oriented languages, like Java, C++, and Python, organize code around objects, which are instances of classes containing data and methods. They emphasize concepts like encapsulation, inheritance, and polymorphism.

Functional Languages: Functional languages, such as Haskell, Lisp, and Erlang, treat computation as the evaluation of mathematical functions. They emphasize immutable data and higher-order functions.

Scripting Languages: Scripting languages, like JavaScript, PHP, and Ruby, are designed for automating tasks, building web applications, and gluing together different software components. They typically have dynamic typing and are interpreted rather than compiled.

Domain-Specific Languages (DSLs): DSLs are specialized languages tailored to a specific domain or problem space. Examples include SQL for database querying, HTML/CSS for web development, and MATLAB for numerical computation.

3. Other Types:

Markup Languages: Markup languages, such as HTML, XML, and Markdown, are used to annotate text with formatting instructions. They are not programming languages in the traditional sense but are essential for structuring and presenting data.

Query Languages: Query languages, like SQL (Structured Query Language), are used to interact with databases by retrieving, manipulating, and managing data.

Constraint Programming Languages: Constraint and logic programming languages, such as Prolog (often extended with constraint-solving libraries), focus on specifying constraints and relationships among variables to solve combinatorial and optimization problems.


Learn Data Analytics: Grow Skills, Make it Truth Future

In a world that's increasingly shaped by information, being able to understand and interpret data isn't just a specialized skill anymore; it's a crucial ability for navigating today’s landscape. At its heart, data analytics involves digging into raw data to uncover meaningful patterns, draw insightful conclusions, and guide decision-making. When individuals get a handle on this discipline, they can turn raw numbers into actionable insights, paving the way for a more predictable and 'truthful' future for themselves and the organizations they work with. This article explores the compelling reasons to learn data analytics, highlighting the key skills involved and how they help build a future rooted in verifiable facts.

The Foundational Power of Data Literacy

At the heart of a data-driven future lies data literacy – the ability to read, understand, create, and communicate data as information. This fundamental understanding is the first step towards leveraging analytics effectively. Without it, individuals and businesses risk making decisions based on intuition or outdated information, which can lead to missed opportunities and significant errors.

Understanding Data's Language

Learning data analytics begins with grasping how data is generated and structured. This involves:

Data Sources: Recognizing where data comes from, whether it's from website clicks, sales transactions, sensor readings, or social media interactions.

Data Types: Differentiating between numerical, categorical, textual, and temporal data, as each requires different analytical approaches.

Data Quality: Appreciating the importance of clean, accurate, and complete data. Flawed data inevitably leads to flawed conclusions, rendering efforts useless.

Essential Skills for Data Analytics Growth

To truly make a "truth future" through data, a blend of technical proficiency, analytical thinking, and effective communication is required.

Technical Proficiencies

The journey into data analytics necessitates acquiring specific technical skills:

Statistical Foundations: A solid understanding of statistical concepts (e.g., probability, hypothesis testing, regression) is crucial for interpreting data accurately and building robust models.

Programming Languages: Python and R are the industry standards. They offer powerful libraries for data manipulation, statistical analysis, machine learning, and visualization. Proficiency in at least one of these is non-negotiable.

Database Management: SQL (Structured Query Language) skills are vital for querying, extracting, and managing data from relational databases, which are the backbone of many business operations.

Data Visualization Tools: Tools like Tableau, Power BI, or Qlik Sense enable analysts to transform complex datasets into intuitive charts, graphs, and dashboards, making insights accessible to non-technical audiences.

Analytical Thinking and Problem-Solving

Beyond tools, the analytical mindset is paramount. This involves:

Critical Thinking: The ability to question assumptions, identify biases, and evaluate the validity of data and its interpretations.

Problem Framing: Defining business problems clearly and translating them into analytical questions that can be answered with data.

Pattern Recognition: The knack for identifying trends, correlations, and anomalies within datasets that might not be immediately obvious.

Communication Skills

Even the most profound data insights are useless if they cannot be effectively communicated.

Storytelling with Data: Presenting findings in a compelling narrative that highlights key insights and their implications for decision-making.

Stakeholder Management: Understanding the needs and questions of different audiences (e.g., executives, marketing teams, operations managers) and tailoring presentations accordingly.

Collaboration: Working effectively with cross-functional teams to integrate data insights into broader strategies.

Making the "Truth Future": Applications of Data Analytics

The skills acquired in data analytics empower individuals to build a future grounded in verifiable facts, impacting various domains.

Business Optimization

In the corporate world, data analytics helps to:

Enhance Customer Understanding: By analyzing purchasing habits, browsing behavior, and feedback, businesses can create personalized experiences and targeted marketing campaigns.

Improve Operational Efficiency: Data can reveal bottlenecks in supply chains, optimize resource allocation, and predict equipment failures, leading to significant cost savings.

Drive Strategic Decisions: Whether it's market entry strategies, product development, or pricing models, analytics provides the evidence base for informed choices, reducing risk and increasing profitability.

Personal Empowerment

Data analytics isn't just for corporations; it can profoundly impact individual lives:

Financial Planning: Tracking spending patterns, identifying savings opportunities, and making informed investment decisions.

Health and Wellness: Analyzing fitness tracker data, sleep patterns, and dietary information to make healthier lifestyle choices.

Career Advancement: Understanding job market trends, in-demand skills, and salary benchmarks to strategically plan career moves and upskilling efforts.

Societal Impact

On a broader scale, data analytics contributes to a more 'truthful' and efficient society:

Public Policy: Governments use data to understand demographic shifts, optimize public services (e.g., transportation, healthcare), and allocate resources effectively.

Scientific Discovery: Researchers analyze vast datasets in fields like genomics, astronomy, and climate science to uncover new knowledge and accelerate breakthroughs.

Urban Planning: Cities leverage data from traffic sensors, public transport usage, and environmental monitors to design more sustainable and livable urban environments.

The demand for skilled data analytics professionals continues to grow across the nation, from the vibrant tech hubs to emerging industrial centers. For those looking to gain a comprehensive and practical understanding of this field, pursuing dedicated training is a highly effective path. Many individuals choose programs that offer hands-on experience and cover the latest tools and techniques. For example, a well-regarded Data analytics training course in Noida, along with similar opportunities in Kanpur, Ludhiana, Moradabad, Delhi, and other cities across India, provides the necessary foundation for a successful career. These courses are designed to equip students with the skills required to navigate and contribute to the data-driven landscape.

Conclusion

Learning data analytics goes beyond just picking up technical skills; it’s really about developing a mindset that looks for evidence, values accuracy, and inspires thoughtful action. By honing these vital abilities, people can not only grasp the intricacies of our digital landscape but also play an active role in shaping a future that’s more predictable, efficient, and fundamentally rooted in truth. In a world full of uncertainties, data analytics provides a powerful perspective that helps us find clarity and navigate a more assured path forward.


SQL provides powerful functions for transforming and analyzing data. In the best data analyst course, you’ll master three essential types: string, numeric, and conditional functions, each crucial for turning raw data into insights.
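A minimal sketch of all three function types working together on messy input, assuming SQLite through Python's built-in `sqlite3` module; the `orders` table, its rows, and the 1.18 tax multiplier are hypothetical. `TRIM`/`UPPER` clean the text, `COALESCE`/`ROUND` handle the numbers (including a missing amount), and `CASE` assigns a label.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (customer TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("  alice ", 120.0), ("BOB", None), ("carol", 35.5)],  # deliberately messy data
)

cleaned = conn.execute("""
    SELECT
        UPPER(TRIM(customer))                 AS customer,   -- string functions
        ROUND(COALESCE(amount, 0) * 1.18, 2)  AS with_tax,   -- numeric, NULL treated as 0
        CASE
            WHEN amount IS NULL THEN 'missing'
            WHEN amount >= 100  THEN 'large'
            ELSE 'small'
        END                                   AS size_band   -- conditional
    FROM orders
    ORDER BY customer
""").fetchall()

for row in cleaned:
    print(row)
```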


What Are the Different Types of Power BI Certification Courses in Pune?

Introduction: Power BI and Its Rising Demand

Data is not just information; it's power. In the current digital era, organizations rely on data visualization and analytics to stay competitive. Power BI, Microsoft's leading business analytics tool, empowers professionals to visualize, analyze, and share insights from data in real time. As businesses increasingly move towards data-driven decisions, the demand for skilled Power BI professionals is soaring.

Types of Power BI Certification Courses in Pune

Beginner-Level Power BI Courses

Ideal for those dipping their toes into data analytics. These courses introduce Power BI’s interface, dashboards, and basic DAX formulas. Expect hands-on tutorials and interactive visual projects.

Intermediate Power BI Certification Programs

For those with some experience in analytics, intermediate courses delve into data modeling, Power Query transformations, and advanced visualizations. They are the perfect bridge between theory and industry practice.

Advanced Power BI Certification Tracks

Targeting professionals with previous BI experience, advanced certifications concentrate on enterprise-level solutions, Power BI integration with Azure, and embedded analytics. Mastering these modules paves the way for senior data analyst roles.

Microsoft Power BI Certification: The Global Standard

The Microsoft Certified Data Analyst Associate credential is the gold standard. Based on the PL-300 exam, it validates your ability to prepare, model, visualize, and analyze data using Power BI. Many Pune institutes structure their training to directly support this certification.

Course Format Options in Pune

Classroom-Based Training

For those who thrive in a physical learning environment, classroom sessions offer instructor support, peer interaction, and a structured learning pace. Several training centers in Pune, especially in areas like Kothrud and Hinjewadi, offer weekend and weekday batches.

Online Instructor-Led Power BI Courses

Blending flexibility with real-time support, these courses offer live virtual classes from certified trainers. They are perfect for working professionals who need schedule flexibility.

Self-Paced Learning Programs

For the self-motivated learner, these programs provide recorded lectures, downloadable resources, and practice datasets. They are often more budget-friendly and can be completed at your own pace.

Duration and Structure of Power BI Certification Courses

Most beginner-level courses last 4 to 6 weeks, while intermediate and advanced tracks may stretch to 8 to 12 weeks. Courses generally follow a modular structure, gradually introducing learners to data cleaning, modeling, visualization, and reporting.

Real-Time Projects and Case Studies: What to Expect

Expect projects involving sales dashboards, customer segmentation, and operational analytics. Real-time case studies ensure you are not just learning theory but applying it to business scenarios.

Tools and Technologies Covered Alongside Power BI

Many courses integrate tools like SQL, Excel, Python for data visualization, and Azure Synapse Analytics to give learners a comprehensive BI toolkit.

Benefits of Enrolling in a Power BI Course in Pune

Pune, being a tech hub, offers excellent faculty, practical exposure, and networking opportunities. Students often benefit from local internship tie-ups and placement support.

Top Institutes Offering Power BI Certification Course in Pune

Some well-regarded names include:

Ethans Tech

3RI Technologies

SevenMentor

Edureka (online presence with Pune support)

These institutes boast qualified trainers, solid course material, and placement support.

How to Choose the Right Certification Course for You

Define your learning goal: are you a beginner, a career switcher, or an advanced analyst? Review the syllabus, read reviews, assess trainer credibility, and ask about real-world project work. Don’t forget post-course support and placement help.

Average Cost of Power BI Certifications in Pune

Prices range from ₹8,000 to ₹25,000 depending on course level and format. Online courses may offer EMI options and discounts.

Career Opportunities After Certification

Certified professionals can land roles like:

Business Intelligence Analyst

Data Visualization Specialist

Power BI Developer

Data Analyst

Companies hiring include TCS, Infosys, Cognizant, and mid-level startups.

Salary Prospects in Pune vs. Other Metropolises

A certified Power BI analyst in Pune earns ₹4 to 7 LPA on average. In contrast, salaries in New York or San Francisco may start from $70,000 to $90,000 annually, reflecting cost-of-living differences.

Comparing Pune’s Offerings to Other Metropolises

Power BI Certification Course in Washington

A Power BI Certification Course in Washington offers cutting-edge content backed by government and corporate collaboration, but the cost of training and living is significantly higher than in Pune.

Power BI Certification Course in San Francisco

Known for its Silicon Valley edge, a Power BI Certification Course in San Francisco often leans into cloud integration and AI-powered BI tools. But again, Pune offers more affordable options with solid content depth.

Power BI Certification Course in New York

Courses in New York emphasize financial and enterprise data analytics. Pune’s growing finance and IT sectors are catching up fast, making it a good contender at a fraction of the price.

Tips to Ace Your Power BI Certification Exam

Practice daily using Microsoft’s sample datasets.

Recreate dashboards from scratch.

Focus on DAX and data modeling.

Join Power BI forums for tips and challenges.

Conclusion – The Road Ahead for BI Professionals

Whether you are in Pune, Washington, San Francisco, or New York, a Power BI Certification Course can turbocharge your career in data analytics. As businesses embrace data for every decision, certified professionals will continue to be in high demand.


Learn Data Analytics in Noida – From Basics to Advanced

In today’s data-driven world, businesses rely heavily on data analytics to make informed decisions, improve operations, and gain a competitive edge. Whether you're a student, recent graduate, or working professional looking to upskill, learning data analytics in Noida offers a powerful pathway to a high-demand career in tech.

Why Learn Data Analytics?

Data analytics is the backbone of digital transformation. From retail to healthcare, finance to logistics, organizations are harnessing data to improve efficiency, predict trends, and tailor experiences to customers. By mastering data analytics, you gain the ability to extract meaningful insights from raw data — a skill that is both valuable and versatile.

Why Choose Noida for Data Analytics Training?

Noida, as one of India’s leading tech hubs, is home to numerous IT companies, startups, and MNCs that are actively hiring skilled data professionals. It also hosts top-rated institutes and training centers offering comprehensive data analytics programs tailored to current industry needs.

Here’s why Noida stands out:

Industry-Oriented Curriculum: Training programs cover real-world tools like Excel, SQL, Python, R, Tableau, Power BI, and advanced machine learning techniques.

Hands-On Learning: Most courses offer live projects, internships, and case studies to provide practical experience.

Placement Support: Institutes in Noida often have tie-ups with local tech firms, increasing your chances of landing a job right after training.

Flexible Modes: Choose from classroom, online, or hybrid formats based on your convenience.

What You’ll Learn: From Basics to Advanced

1. Beginner Level:

Introduction to data and its types

Excel for data manipulation

Basics of SQL for database querying

Data visualization fundamentals

2. Intermediate Level:

Python or R for data analysis

Exploratory Data Analysis (EDA)

Working with real-time datasets

Introduction to business intelligence tools (Tableau/Power BI)

3. Advanced Level:

Predictive analytics using machine learning

Time-series analysis

Natural Language Processing (NLP)

Big data tools (Hadoop, Spark – optional)

Capstone projects and portfolio building

Who Can Enroll?

College students from IT, engineering, or statistics backgrounds

Working professionals in finance, marketing, or operations

Freshers looking to start a career in data analytics

Entrepreneurs wanting to leverage data for better business decisions

No prior coding experience? No problem — many programs start from scratch and gradually build your skills.

Final Thoughts

Learning Data Analytics in Noida opens up a world of opportunities. With the right training, mentorship, and hands-on practice, you can transition into roles such as Data Analyst, Business Analyst, Data Scientist, or BI Developer. The demand for data-savvy professionals continues to grow — and there's no better time than now to dive in.

Data Analyst Interview Questions: A Comprehensive Guide

Preparing for an interview as a Data Analyst is difficult, given the broad skills needed. Technical skill, business knowledge, and problem-solving abilities are assessed by interviewers in a variety of ways. This guide will assist you in grasping the kind of questions that will be asked and how to answer them.

General Data Analyst Interview Questions

These questions help interviewers assess your understanding of the role and your basic approach to data analysis.

Can you describe what a Data Analyst does? A Data Analyst collects, processes, and analyzes data to help businesses make data-driven decisions and identify trends or patterns.

What are the key responsibilities of a Data Analyst? Responsibilities include data collection, data cleaning, exploratory data analysis, reporting insights, and collaborating with stakeholders.

What tools are you most familiar with? Mention tools like Excel, SQL, Python, Tableau, or Power BI, and describe how you have used them in past projects.

What are the different types of data? Describe structured, semi-structured, and unstructured data, using examples such as database tables, JSON files, and images or videos.

Technical Data Analyst Interview Questions

Technical questions evaluate your tool knowledge, techniques, and your ability to manipulate and interpret data.

What is the difference between an inner join and a left join in SQL? An inner join returns only the rows that have matching keys in both tables, whereas a left join returns every row from the left table plus the matching rows from the right table, filling in NULLs where there is no match.
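To make the difference concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The `customers` and `orders` tables and their rows are hypothetical, chosen only so that one customer (Ben) has no orders.

```python
import sqlite3

# In-memory database with two small, hypothetical tables.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
    INSERT INTO customers VALUES (1, 'Asha'), (2, 'Ben'), (3, 'Chen');
    INSERT INTO orders VALUES (10, 1, 250.0), (11, 1, 80.0), (12, 3, 40.0);
""")

# INNER JOIN: only customers that have at least one matching order.
inner = conn.execute("""
    SELECT c.name, o.amount
    FROM customers AS c
    INNER JOIN orders AS o ON o.customer_id = c.id
    ORDER BY o.id
""").fetchall()

# LEFT JOIN: every customer; amount is NULL (None in Python) when no order matches.
left = conn.execute("""
    SELECT c.name, o.amount
    FROM customers AS c
    LEFT JOIN orders AS o ON o.customer_id = c.id
    ORDER BY c.id, o.id
""").fetchall()

print(inner)  # [('Asha', 250.0), ('Asha', 80.0), ('Chen', 40.0)]
print(left)   # [('Asha', 250.0), ('Asha', 80.0), ('Ben', None), ('Chen', 40.0)]
```

Ben appears only in the left join result, with `None` in place of an amount.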

How do you deal with missing data in a dataset? Common approaches include removing rows, mean/median imputation, or forward-fill/backward-fill, depending on the context and the proportion of missing data.
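A minimal sketch of these strategies in plain Python, assuming a hypothetical list of readings where `None` marks a missing value. The `forward_fill` helper is illustrative, not a library function.

```python
from statistics import mean, median

# Hypothetical daily readings; None marks a missing value.
readings = [12.0, None, 15.0, 14.0, None, 18.0]
observed = [x for x in readings if x is not None]

# Option 1: drop missing entries entirely.
dropped = observed

# Option 2: replace missing entries with the mean or median of the observed values.
fill_mean, fill_median = mean(observed), median(observed)
mean_filled = [x if x is not None else fill_mean for x in readings]
median_filled = [x if x is not None else fill_median for x in readings]

# Option 3: forward-fill, carrying the last observed value forward.
def forward_fill(values):
    filled, last = [], None
    for x in values:
        if x is not None:
            last = x
        filled.append(last)  # stays None if no earlier value exists
    return filled

ffilled = forward_fill(readings)
```

In pandas the same ideas map to `dropna()`, `fillna()`, and `ffill()`; which one is appropriate depends on why the data is missing.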

Can you describe normalization and why it's significant? Normalization reduces data redundancy and improves data integrity by organizing data into related tables so that each fact is stored in only one place.
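As an illustration (hypothetical schema, using Python's `sqlite3`), the sketch below stores customer details once and references them from orders by key, so an update happens in one place and a foreign key blocks orphaned rows.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled

# Normalized design: customer details live in one table and are referenced by key,
# instead of repeating name and city on every order row.
conn.executescript("""
    CREATE TABLE customers (
        id   INTEGER PRIMARY KEY,
        name TEXT NOT NULL,
        city TEXT NOT NULL
    );
    CREATE TABLE orders (
        id          INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(id),
        amount      REAL NOT NULL
    );
    INSERT INTO customers VALUES (1, 'Asha', 'Pune');
    INSERT INTO orders VALUES (10, 1, 250.0), (11, 1, 80.0);
""")

# Changing the city touches a single row, so order data cannot drift out of sync.
conn.execute("UPDATE customers SET city = 'Mumbai' WHERE id = 1")

rows = conn.execute("""
    SELECT o.id, c.name, c.city, o.amount
    FROM orders AS o JOIN customers AS c ON c.id = o.customer_id
    ORDER BY o.id
""").fetchall()

# The foreign key rejects an order that points at a non-existent customer.
try:
    conn.execute("INSERT INTO orders VALUES (12, 99, 10.0)")
    orphan_rejected = False
except sqlite3.IntegrityError:
    orphan_rejected = True
```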

What Python libraries are frequently used for data analysis? Common choices include Pandas for data manipulation, NumPy for numerical computation, Matplotlib/Seaborn for plotting, and SciPy for scientific computing.

How would you write a query to find duplicate values in a table? Use a GROUP BY clause on the column or columns that define a duplicate, with HAVING COUNT(*) > 1 to keep only the groups that occur more than once.
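A small runnable sketch with Python's `sqlite3` and a hypothetical `contacts` table, showing duplicates on one column and on a combination of columns:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE contacts (id INTEGER PRIMARY KEY, email TEXT, city TEXT);
    INSERT INTO contacts (email, city) VALUES
        ('a@example.com', 'Noida'),
        ('b@example.com', 'Pune'),
        ('a@example.com', 'Noida'),
        ('c@example.com', 'Kochi'),
        ('a@example.com', 'Delhi');
""")

# Duplicates on a single column: emails that appear more than once.
dup_emails = conn.execute("""
    SELECT email, COUNT(*) AS n
    FROM contacts
    GROUP BY email
    HAVING COUNT(*) > 1
""").fetchall()

# Duplicates on a combination of columns: same email AND same city.
dup_pairs = conn.execute("""
    SELECT email, city, COUNT(*) AS n
    FROM contacts
    GROUP BY email, city
    HAVING COUNT(*) > 1
""").fetchall()
```

Here `dup_emails` reports `a@example.com` three times, while `dup_pairs` only counts the two rows that also share the city Noida.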

Behavioral and Situational Data Analyst Interview Questions

These assess your soft skills, work values, and how you deal with actual situations.

Describe an instance where you managed a challenging stakeholder. Describe how you actively listened, recognized their requirements, and provided insights that supported business objectives despite issues with communication.

Tell us about a project in which you needed to analyze large datasets. Describe how you broke the dataset down into manageable pieces, what tools you used, and what you learned from the analysis.

Data Analysis: Turning Information into Insight

In today's digital age, data has become a vital asset for businesses, researchers, governments, and individuals alike. However, raw data on its own holds little value until it is interpreted and understood. This is where data analysis comes into play. Data analysis is the systematic process of inspecting, cleaning, transforming, and modeling data with the objective of discovering useful information, drawing conclusions, and supporting decision-making.

What is Data Analysis?

At its core, data analysis involves extracting meaningful insights from datasets. These datasets can range from small, structured spreadsheets to large, unstructured data lakes. The primary aim is to make sense of data to answer questions, solve problems, or identify trends and patterns that are not immediately apparent.

Data analysis is used in virtually every industry, from healthcare and finance to marketing and education. It enables organizations to make evidence-based decisions, improve operational efficiency, and gain competitive advantages.

Types of Data Analysis

There are several types of data analysis, each serving a distinct purpose:

1. Descriptive Analysis

Descriptive analysis answers the question: “What happened?” It summarizes raw data into digestible formats such as averages, percentages, or counts. For example, a store might analyze last month’s sales to determine which products performed best.
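A minimal descriptive summary of the store example in plain Python; the product names and unit counts are hypothetical:

```python
from collections import defaultdict

# Hypothetical (product, units sold) records for last month.
sales = [
    ("tea", 30), ("coffee", 45), ("tea", 25),
    ("juice", 10), ("coffee", 20), ("juice", 15),
]

totals = defaultdict(int)
counts = defaultdict(int)
for product, units in sales:
    totals[product] += units
    counts[product] += 1

# Per product: total units, number of transactions, and average units per transaction.
summary = {
    p: {"total": totals[p], "orders": counts[p], "average": totals[p] / counts[p]}
    for p in totals
}
best_seller = max(totals, key=totals.get)
```

Each figure only describes what already happened; explaining or predicting it is the job of the other types below.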

2. Diagnostic Analysis

This type of analysis explores the reasons behind past outcomes. It answers: “Why did it happen?” For example, if a company sees a sudden drop in website traffic, diagnostic analysis can help pinpoint whether it was due to a technical problem, changes in SEO ranking, or competitor actions.

3. Predictive Analysis

Predictive analysis uses historical data to forecast future outcomes. It answers: “What is likely to happen?” This involves statistical models and machine learning algorithms that identify patterns and predict future trends, such as customer churn or product demand.

4. Prescriptive Analysis

Prescriptive analysis provides recommendations based on data. It answers: “What should we do?” This is the most advanced type of analysis and often combines insights from predictive analysis with optimization and simulation techniques to guide decision-making.

The Data Analysis Process

The data analysis process typically follows these steps:

1. Define the Objective

Before diving into the data, it’s essential to clearly understand the question or problem at hand. A well-defined objective guides the entire analysis and ensures that efforts are aligned with the desired outcome.

2. Collect Data

Data can come from various sources, including databases, surveys, sensors, APIs, or social media. It’s important to ensure that the data is relevant, timely, and of sufficient quality.

3. Clean and Prepare Data

Raw data is often messy: it may contain missing values, duplicates, inconsistencies, or errors. Data cleaning involves addressing these problems. Preparation may include formatting, normalization, or creating new variables.
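These cleaning steps can be sketched in plain Python on a few hypothetical records. Min-max scaling is used here as one example of normalization; other schemes, such as z-scores, are equally common.

```python
# Hypothetical raw records: a duplicate row, inconsistent casing/whitespace, a missing value.
raw = [
    {"city": "Pune ", "revenue": 120.0},
    {"city": "pune", "revenue": 120.0},
    {"city": "Kochi", "revenue": None},
    {"city": "Noida", "revenue": 300.0},
    {"city": "kochi", "revenue": 60.0},
]

# 1. Standardize text so "Pune " and "pune" compare equal.
standardized = [{**r, "city": r["city"].strip().title()} for r in raw]

# 2. Drop rows with missing revenue (one option; imputation is another).
complete = [r for r in standardized if r["revenue"] is not None]

# 3. Remove exact duplicates while preserving the original order.
seen, deduped = set(), []
for r in complete:
    key = (r["city"], r["revenue"])
    if key not in seen:
        seen.add(key)
        deduped.append(r)

# 4. Min-max normalization rescales revenue into [0, 1]
#    (assumes at least two distinct revenue values, so hi > lo).
lo = min(r["revenue"] for r in deduped)
hi = max(r["revenue"] for r in deduped)
prepared = [{**r, "revenue_scaled": (r["revenue"] - lo) / (hi - lo)} for r in deduped]
```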

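4. Analyze the Data

This step applies statistical techniques, queries, or models to the prepared data to answer the original question. Tools like Excel, SQL, Python, R, or specialized software such as Tableau, Power BI, and SAS are commonly used.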

5. Interpret Results

Analysis isn't just about numbers; it’s about meaning. Interpreting results involves drawing conclusions, explaining findings, and linking insights back to the original objective.

6. Communicate Findings

Insights must be communicated effectively to stakeholders. Visualization tools such as charts, graphs, dashboards, and reports play a vital role in telling the story behind the data.

7. Make Decisions and Take Action

The ultimate goal of data analysis is to inform decisions. Whether it’s optimizing a marketing campaign, improving customer service, or refining a product, actionable insights turn data into real-world results.

Tools and Technologies for Data Analysis

A wide range of tools is available for data analysis, each suited to different tasks and skill levels:

Excel: Great for small datasets and quick analysis. Offers functions, pivot tables, and charts.

Python: Powerful for complex data manipulation and modeling. Popular libraries include Pandas, NumPy, Matplotlib, and Scikit-learn.

R: A programming language widely used for statistical analysis and data visualization.

SQL: Essential for querying and managing data stored in relational databases.

Tableau & Power BI: User-friendly business intelligence tools that turn data into interactive visualizations and dashboards.

Applications of Data Analysis

Healthcare: Analyzing patient data to improve treatment plans, predict outbreaks, and manage resources.

Finance: Detecting fraud, managing risk, and guiding investment strategies.

Retail: Personalizing advertising campaigns, managing inventory, and optimizing pricing.

Sports: Enhancing performance through player data and game analysis.

Public Policy: Informing decisions on education, transportation, and economic development.

Challenges in Data Analysis

Data Quality: Incomplete, outdated, or incorrect data can lead to misleading conclusions.

Data Privacy: Handling sensitive data requires strict adherence to privacy regulations like GDPR.

Skill Gaps: There is a growing demand for skilled data analysts who can interpret complex datasets.

Integration: Combining data from disparate sources can be technically difficult.

Bias and Misinterpretation: Poorly designed analysis can introduce bias or lead to wrong assumptions.

The Future of Data Analysis

As data continues to grow exponentially, the field of data analysis is evolving rapidly. Emerging trends include:

Artificial Intelligence (AI) & Machine Learning: Automating analysis and generating predictive models at scale.

Real-Time Analytics: Enabling decisions based on live data streams for faster response.

Data Democratization: Making data accessible and understandable to everyone in an organization.

Reading and Importing Data in SAS: CSV, Excel, and More

In the world of data analytics, efficient data importation is a fundamental skill. SAS (Statistical Analysis System), a powerful platform for data analysis and statistical computing, offers robust tools to read and import data from various formats, including CSV, Excel, and more. Regardless of whether you are a beginner or overseeing analytics at an enterprise level, understanding how to import data into SAS is the initial step towards obtaining valuable insights.

This article breaks down the most common methods of importing data in SAS, along with best practices and real-world applications—offering value to everyone from learners in a Data Analyst Course to experienced professionals refining their workflows.

Why Importing Data Matters in SAS

Before any analysis begins, the data must be accessible. Importing data correctly ensures integrity, compatibility, and efficiency in processing. SAS supports a range of formats, allowing analysts to work with data from different sources seamlessly. The most common among these are CSV and Excel files due to their ubiquity in business and research environments.

Understanding how SAS handles these files can drastically improve productivity, particularly when working with large datasets or performing repetitive tasks in reporting and modelling.

Importing CSV Files into SAS

Comma-Separated Values (CSV) files are lightweight, easy to generate, and commonly used to exchange data. In SAS, importing CSVs is straightforward.

When importing a CSV file, SAS treats each line as an observation and each comma as a delimiter between variables. This format is ideal for users who deal with exported data from databases or web applications.
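SAS itself would typically do this with PROC IMPORT (DBMS=CSV) or a DATA step using INFILE with the DSD option. As an illustration of the same parsing rules outside SAS, here is a short Python sketch using the standard `csv` module on a hypothetical export. The quoted field keeps its embedded comma, and an empty field is turned into a missing value; the `to_float` helper is illustrative.

```python
import csv
import io

# A small hypothetical CSV export; the quoted name contains a comma.
raw_csv = """customer_id,name,city,spend
101,Asha,Pune,250.50
102,"Rao, Ben",Mumbai,80
103,Chen,Thane,
"""

# Header row -> variable names; each later line -> one observation (record).
records = list(csv.DictReader(io.StringIO(raw_csv)))

# csv returns every value as text, so convert types explicitly;
# an empty field becomes None to represent a missing value.
def to_float(text):
    return float(text) if text.strip() else None

for row in records:
    row["customer_id"] = int(row["customer_id"])
    row["spend"] = to_float(row["spend"])
```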

Best Practices:

Clean your CSV files before importing—ensure no missing headers, extra commas, or encoding issues.

Use descriptive variable names in the first row of the CSV to streamline your SAS workflow.

Always review the imported data to verify that variable types and formats are interpreted correctly.

Professionals undertaking a Data Analyst Course often begin with CSV files due to their simplicity, making this an essential foundational skill.

Importing Excel Files into SAS

Excel files are the go-to format for business users and analysts. They often contain multiple sheets, merged cells, and various data types, which adds complexity to the import process.

SAS provides built-in tools for reading Excel files, including engines like XLSX and the Import Wizard, which are available in SAS Studio or Enterprise Guide. These tools allow users to preview sheets, specify ranges, and even convert date formats during import.

Key Considerations:

Ensure the Excel file is not open during import to avoid access errors.

Use consistent formatting in Excel—SAS may misinterpret mixed data types within a single column.

If your Excel workbook contains multiple sheets, decide whether you need to import one or all of them.
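SAS stores each variable as either numeric or character, so a column that mixes numbers and text may be imported as character or lose values. A quick pre-import check can flag such columns. This Python sketch is illustrative only; the column names and the `cell_type` and `mixed_columns` helpers are hypothetical.

```python
# Hypothetical column values as exported from a spreadsheet (all read as text).
columns = {
    "unit_price": ["12.5", "9", "", "7.25"],
    "quantity": ["3", "5", "two", "7"],
    "region": ["North", "South", "East", "West"],
}

def cell_type(value):
    """Classify a raw text cell as 'number' or 'text'."""
    try:
        float(value)
        return "number"
    except ValueError:
        return "text"

def mixed_columns(cols):
    """Return names of columns whose non-blank cells hold more than one type."""
    flagged = []
    for name, values in cols.items():
        kinds = {cell_type(v) for v in values if v.strip()}  # blank cells are ignored
        if len(kinds) > 1:
            flagged.append(name)
    return flagged
```

Running `mixed_columns(columns)` flags only `quantity`, where the word "two" sits among numeric cells.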

Advanced users and those enrolled in a Data Analytics Course in Mumbai often work with Excel as part of larger data integration pipelines, making mastery of these techniques critical.

Importing Data from Other Sources

Beyond CSV and Excel, SAS supports numerous other data formats, including:

Text files (.txt): Often used for raw data exports or logs.

Database connections: Through SAS/ACCESS, users can connect to databases like Oracle, SQL Server, or MySQL.

JSON and XML: Increasingly used in web-based and API data integrations.

SAS Datasets (.sas7bdat): Native format with optimised performance for large datasets.

Each format comes with its own import nuances, such as specifying delimiters, encoding schemes, or schema mappings. Familiarity with these enhances flexibility in working with diverse data environments.

Tips for Efficient Data Importing

Here are a few practical tips to improve your SAS data importing skills:

Automate repetitive imports using macros or scheduled jobs.

Validate imported data against source files to catch discrepancies early.

Log and document your import steps—especially when working in team environments or preparing data for audits.

Stay updated: SAS frequently updates its procedures and import capabilities to accommodate new formats and security standards.
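Validating an import can be as simple as comparing row counts, keys, and a column total between the source and the loaded data. A minimal Python sketch, assuming a hypothetical two-column source file with integer `id` keys; the `validate_import` helper is illustrative, not a SAS procedure.

```python
import csv
import io

# Hypothetical source file contents and the records an import step produced.
source_text = """id,amount
1,10.0
2,20.5
3,7.25
"""
imported = [
    {"id": 1, "amount": 10.0},
    {"id": 2, "amount": 20.5},
]

def validate_import(source, records, key="id", measure="amount"):
    """Compare row counts, missing keys, and a column total between source and import."""
    source_rows = list(csv.DictReader(io.StringIO(source)))
    issues = []
    if len(source_rows) != len(records):
        issues.append(f"row count: source={len(source_rows)} imported={len(records)}")
    # Assumes integer keys in the source file.
    missing = {int(r[key]) for r in source_rows} - {r[key] for r in records}
    if missing:
        issues.append(f"missing {key} values: {sorted(missing)}")
    src_total = sum(float(r[measure]) for r in source_rows)
    imp_total = sum(r[measure] for r in records)
    if abs(src_total - imp_total) > 1e-9:
        issues.append(f"{measure} total: source={src_total} imported={imp_total}")
    return issues

problems = validate_import(source_text, imported)
```

An empty list means the checks passed; here the missing third row is reported three ways.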

Learning and Upskilling with SAS

Importing data is just one piece of the SAS puzzle. For aspiring data professionals, structured training offers the advantage of guided learning, hands-on practice, and industry context. A Data Analyst training program typically begins with data handling techniques, setting the stage for more advanced topics like modelling, visualisation, and predictive analytics.

For learners in metro regions, a Data Analytics Course in Mumbai can provide local networking opportunities, expert mentorship, and exposure to real-world projects involving SAS. These programs often include training in data import techniques as part of their curriculum, preparing students for the demands of modern data-driven roles.

Final Thoughts

Reading and importing data into SAS is a vital skill that underpins all subsequent analysis. Whether you're working with CSV files exported from a CRM, Excel spreadsheets from finance teams, or direct connections to enterprise databases, mastering these tasks can significantly enhance your efficiency and accuracy.

By understanding the nuances of each data format and leveraging SAS's powerful import tools, you’ll be better equipped to manage data workflows, ensure data quality, and drive valuable insights. And for those committed to building a career in analytics, a course could be the stepping stone to mastering not just SAS but the entire data science pipeline.

Business name: ExcelR- Data Science, Data Analytics, Business Analytics Course Training Mumbai

Address: 304, 3rd Floor, Pratibha Building, Three Petrol Pump, Lal Bahadur Shastri Rd, opposite Manas Tower, Pakhdi, Thane West, Thane, Maharashtra 400602

Phone: 09108238354

Are You Job-Ready After a Java Course in Coimbatore (2025)?

1. The Enduring Power of Java in 2025

Java remains a leading language in 2025 for enterprise applications, Android, and cloud services due to its platform independence, security, and strong community. In Coimbatore's growing IT sector, Java skills are highly sought after. A good Java Course in Coimbatore offers a head start.

Java's cross-platform ("Write Once, Run Anywhere") capability.

High performance and scalability for demanding applications.

Significant job market demand in India and globally.

Access to extensive open-source libraries and tools.

Continued relevance in backend and cloud development.

Staying updated with practical training is crucial for 2025.

2. Key Elements of a Job-Focused Java Course in 2025

Job readiness in 2025 requires more than Core Java knowledge; hands-on experience with industry tools and frameworks is essential. A comprehensive Java Course in Coimbatore should emphasize project-based learning.

Coverage of Core Java fundamentals (OOP, data types, etc.).

Inclusion of Advanced Java concepts (Servlets, JSP, JDBC, MVC).

Training on frameworks like Spring and Spring Boot.

Experience with tools such as Git/GitHub and Maven.

Database integration with MySQL or PostgreSQL.

Opportunities to build and deploy full-stack applications.

Preparation for technical and HR interviews is also vital.

3. Expanding Your Skill Set Beyond Java

Becoming a well-rounded developer in 2025 involves complementary skills alongside Java. Employers value versatility. When choosing a Java Course in Coimbatore, consider if it includes or encourages these:

Basic frontend skills (HTML5, CSS3, JavaScript).

Database skills (SQL).

Understanding of web technologies (REST APIs, JSON).

Familiarity with IDEs (IntelliJ, Eclipse).

Proficiency in version control (Git/GitHub).

Basic exposure to cloud platforms or deployment tools.

Understanding the full-stack perspective is increasingly important.

4. The Java Job Landscape in Coimbatore for 2025

Coimbatore's growing IT sector presents numerous opportunities for Java developers in 2025. Java is in high demand for backend, application development, and integration roles.

Common job roles include Java Developer, Backend Developer, Full Stack Java Developer, and Android Developer.

Key employers in Coimbatore include Cognizant, Bosch, and local startups.

Employers value skills in Java, Spring Boot, and database connectivity.

Demand is increasing in sectors like finance, health tech, and logistics.

Training aligned with market needs is crucial for job seekers.

5. Comprehensive Placement Support and Valuable Feedback

Effective placement support is a key aspect of a good Java Course in Coimbatore, going beyond just teaching.

Includes resume building and LinkedIn optimization.

Offers mock technical and HR interviews.

Provides opportunities for live project demos.

May include direct referrals to hiring partners.

Positive feedback from past students highlights the impact of this support.

Alumni success stories reflect the trustworthiness of the training.

6. Conclusion: Achieving Your Developer Aspirations

A well-structured Java Course in Coimbatore can indeed be sufficient to land a developer job in 2025, provided it offers the right content, practical experience, and placement assistance. Java remains in high demand, but continuous learning and a broader skillset are crucial for success.

Choose a course covering Core and Advanced Java.

Gain experience with essential tools and frameworks.

Build a portfolio through real-world projects.

Utilize resume and interview preparation.

Consider learning complementary technologies.

A job-focused course in Coimbatore can be a great launchpad for your tech career.

Zoople Technologies: Your Launchpad into the World of Data Science in Kochi

In today's data-driven world, the ability to analyze, interpret, and leverage data is no longer a luxury but a necessity. Data science has emerged as a critical field, and the demand for skilled data professionals is soaring. For individuals in Kochi aspiring to carve a successful career in this dynamic domain, Zoople Technologies stands out as a prominent institute offering comprehensive data science training. This content explores the key aspects that make Zoople Technologies a compelling choice for your data science education journey in Kochi.

Comprehensive Data Science Curriculum Designed for Success:

Zoople Technologies offers a meticulously designed data science course that aims to equip learners with a holistic understanding of the data science lifecycle. Their curriculum goes beyond theoretical concepts, emphasizing practical application and industry-relevant skills. Key components of their data science training typically include:

Foundational Programming with Python: Python is the workhorse of data science, and Zoople Technologies likely provides a strong foundation in Python programming, covering essential libraries such as NumPy for numerical computation and Pandas for data manipulation and analysis.

Statistical Foundations: A solid understanding of statistics is crucial for data analysis. The course likely covers essential statistical concepts, probability, hypothesis testing, and different types of statistical analysis.

Data Wrangling and Preprocessing: Real-world data is often messy. Zoople Technologies likely trains students on techniques for cleaning, transforming, and preparing data for analysis, a critical step in any data science project.

Exploratory Data Analysis (EDA): Understanding the data through visualization and summary statistics is key. The course likely covers EDA techniques using libraries like Matplotlib and Seaborn to uncover patterns and insights.

Machine Learning Algorithms: The core of predictive modeling. Zoople Technologies' curriculum likely introduces various machine learning algorithms, including supervised learning (regression, classification), unsupervised learning (clustering, dimensionality reduction), and model evaluation metrics.

Big Data Technologies (Potentially): Depending on the course level and focus, Zoople Technologies might also introduce big data tools and frameworks like Hadoop and Spark to handle large datasets efficiently.

Database Management with SQL: The ability to extract and manage data from databases is essential. The course likely covers SQL for querying and manipulating data.

Data Visualization and Communication: Effectively communicating findings is crucial. Zoople Technologies likely emphasizes data visualization techniques and tools to present insights clearly and concisely.

Real-World Projects and Case Studies: To solidify learning, the course likely incorporates hands-on projects and case studies that simulate real-world data science challenges. This provides practical experience and builds a strong portfolio.

Experienced Instructors and a Supportive Learning Environment:

Specific instructor profiles are not widely published, but Zoople Technologies emphasizes having experienced trainers. Positive student reviews often highlight the helpfulness and knowledge of the faculty. A supportive learning environment is crucial for grasping complex data science concepts, and Zoople Technologies aims to provide just that. Its focus on practical learning likely involves interactive sessions and opportunities for students to clarify doubts.

Emphasis on Practical Skills and Industry Relevance:

Zoople Technologies appears to prioritize practical skills development, recognizing that the data science field demands hands-on experience. Their curriculum is likely designed to bridge the gap between theoretical knowledge and real-world application. The inclusion of projects and case studies ensures that students gain the confidence and practical abilities sought by employers.

Career Support and Placement Assistance:

A significant aspect of choosing a data science institute is the career support it offers. Zoople Technologies highlights placement assistance, including resume building, interview preparation, and potentially connecting students with job opportunities. While specific placement records aren't always publicly available, their focus on job-ready skills suggests an intent to support students in their career transitions.

Why Choose Zoople Technologies for Data Science in Kochi?

Comprehensive Curriculum: Covering the breadth of essential data science topics.

Focus on Practical Learning: Emphasizing hands-on experience through projects and case studies.

Experienced Trainers: Providing guidance and mentorship in a supportive environment.

Industry-Relevant Skills: Equipping students with the tools and techniques demanded by the data science industry.

Career Support: Offering assistance in navigating the job market.

To make an informed decision about Zoople Technologies' data science course in Kochi, prospective students are encouraged to:

Inquire about the detailed course syllabus: Understand the specific topics covered and the depth of each module.

Ask about the instructors' backgrounds and experience: Learn about their expertise in the data science field.

Seek information on the types of projects and case studies involved: Understand the practical learning opportunities.

Inquire about their placement assistance program: Get details on the support provided for career transitions.

Read recent student reviews and testimonials: Gain insights from past students' experiences.

By offering a comprehensive curriculum, emphasizing practical skills, and providing career support, Zoople Technologies aims to be a valuable launchpad for individuals seeking to embark on a successful and rewarding career in the exciting field of data science in Kochi.

Data Analytics Training in Kochi: Transforming Data into Career Opportunities

In today's digital-first world, data has become the foundation of intelligent decision-making and competitive advantage. From small startups to global enterprises, organizations are increasingly relying on data analytics to refine their strategies, understand customer behavior, predict trends, and improve operational efficiency. As this demand grows, so does the need for skilled professionals who can analyze, interpret, and derive actionable insights from data.

If you're aiming to join this booming field, Data Analytics training in Kochi can be your launching pad into a high-growth, future-proof career.

What Is Data Analytics?

Data analytics is the process of collecting, organizing, and analyzing large sets of data to uncover patterns, trends, and insights. It allows organizations to make better business decisions, optimize processes, and even automate decision-making.

The discipline is broadly classified into four types:

Descriptive Analytics – Analyzes past data to understand what happened.

Diagnostic Analytics – Explains why something happened by finding the root cause.

Predictive Analytics – Forecasts future trends using statistical models and machine learning.

Prescriptive Analytics – Recommends actions based on predictive insights.

These analytics processes are used across every industry including finance, healthcare, e-commerce, manufacturing, logistics, and even public administration.

Why Data Analytics Skills Are in Demand

The ability to work with data has become a core skill in nearly every domain. Businesses today collect massive amounts of structured and unstructured data from customers, internal operations, and digital channels. Without skilled analysts, much of that data remains underutilized.

Here’s why learning data analytics is a valuable move:

High Career Growth: Roles in data analytics are consistently listed among the top emerging jobs globally.

Cross-Industry Relevance: Data skills are required in nearly every industry.

Attractive Salaries: According to LinkedIn and Glassdoor, data analysts and scientists earn above-average salaries compared to other IT roles.

Remote Work Opportunities: Data analytics can be done remotely, offering location flexibility.

Why Choose Kochi for Data Analytics Training?

Kochi, one of Kerala's fastest-growing IT hubs, offers an ideal environment for tech-focused training and employment. Choosing data analytics training in Kochi has several advantages:

1. Proximity to IT Parks and MNCs

With tech parks like Infopark and SmartCity hosting numerous IT and analytics companies, Kochi offers excellent internship and job opportunities post-training.

2. Growing Startup Ecosystem

The city has a dynamic startup culture, making it a great place for data professionals to find meaningful roles early in their careers.

3. Cost-Effective Living and Learning

Compared to other metros, Kochi offers affordable education and a lower cost of living.

4. Quality Training Institutes

Kochi has multiple reputed training centers offering comprehensive courses tailored to industry needs.

What You Will Learn in a Data Analytics Training Program

A robust data analytics training institute in Kochi should provide hands-on experience with real-world tools and techniques. Here’s what a comprehensive course typically includes:

1. Foundations in Statistics and Probability

Understanding data begins with solid statistical knowledge—essential for analyzing data sets and making logical inferences.

2. Programming for Data Analysis

Courses usually cover:

Python (Pandas, NumPy, Matplotlib, Seaborn)

R programming (Optional, but valuable)

3. Database Management and SQL

SQL remains a vital tool for querying and managing relational databases.

4. Data Visualization

Tools like Tableau, Power BI, or Excel are used to create visual representations of data that help in business storytelling and decision-making.

5. Machine Learning Basics

Courses also introduce machine learning models such as:

Linear regression

Decision trees

Clustering and classification algorithms
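Linear regression, the first model listed, can be sketched in a few lines of plain Python using ordinary least squares for a single feature. The ad-spend data is hypothetical and chosen to fit a line exactly; libraries such as scikit-learn (`LinearRegression`) handle multiple features and real, noisy data.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept (one feature)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # slope = covariance(x, y) / variance(x), both as sums of centered products
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical data: monthly ad spend (thousands) vs. units sold.
spend = [1.0, 2.0, 3.0, 4.0, 5.0]
units = [3.0, 5.0, 7.0, 9.0, 11.0]

slope, intercept = fit_line(spend, units)
forecast = slope * 6.0 + intercept  # predicted units at a spend of 6.0
```

With this data the fit recovers slope 2 and intercept 1, so the forecast for a spend of 6.0 is 13 units.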

6. Real-Time Projects and Case Studies

Good programs include capstone projects based on live data sets from various industries such as retail, finance, or health care.

Career Paths After Data Analytics Training

Once you complete a Data Analytics training in Kochi, you open the doors to a range of high-demand roles such as:

Data Analyst

Business Analyst

Data Scientist (with advanced learning)

BI Developer

Data Engineer

Operations Analyst

Each of these positions offers strong growth potential, job security, and opportunities to work with global teams.

How to Choose the Right Institute for Data Analytics Training

When selecting a training provider in Kochi, here are key factors to consider:

Curriculum Quality: Ensure it covers both foundational and advanced analytics tools.

Experienced Trainers: Instructors with industry experience provide valuable real-world insights.

Hands-On Learning: Look for practical, project-based learning rather than just theory.

Placement Support: Institutes with strong industry ties help with resume building, interviews, and job referrals.

Certification: Make sure the course provides recognized certifications to boost your credibility.

Among several training centers in Kochi, Zoople Technologies stands out as a top-tier choice.

Why Zoople Technologies Is a Leader in Data Analytics Training in Kochi

When it comes to professional, job-oriented Data Analytics training in Kochi, Zoople Technologies has established itself as a reliable and respected institution. With years of experience in tech training and a strong commitment to practical education, Zoople has empowered hundreds of students and professionals to enter the data field confidently.

What Sets Zoople Technologies Apart?

Industry-Aligned Curriculum: Courses are updated regularly to match current industry requirements and trends.

Expert Instructors: Trainers at Zoople are experienced data professionals from leading organizations.

Real-Time Projects: Students get to work on real-world business problems using actual datasets.

Comprehensive Tools: Training includes Python, SQL, Tableau, Power BI, and introductory machine learning concepts.

Flexible Modes: Both classroom and hybrid online formats are available for learners with different schedules.

Placement Support: Zoople offers dedicated placement assistance, mock interviews, and resume preparation.

Zoople Technologies has helped learners from diverse backgrounds—from commerce graduates to IT professionals—build successful careers in analytics. Its practical and mentorship-driven approach ensures that students are not just learning but becoming job-ready.

Conclusion

In a world that thrives on data, acquiring analytics skills is no longer optional—it's essential. With businesses depending more on data-driven decision-making, the job market for analysts continues to expand rapidly. Enrolling in Data Analytics training in Kochi gives you the right foundation to enter this lucrative field, especially when you choose a training institute that emphasizes hands-on, industry-relevant skills.

Among the many options available, Zoople Technologies stands out as a beacon of quality and credibility in Kerala’s tech education space. With its comprehensive curriculum, experienced faculty, and excellent placement record, Zoople is the go-to choice for anyone serious about building a career in data analytics.

If you're looking to turn your passion for data into a profession, Zoople Technologies is the perfect place to start.

Top Data Analysis Methods in 2025: A Complete Guide for Beginners and Professionals

🚀 Introduction: Why Data Analysis Methods Matter Today

We live in a world overflowing with data, from social media stats and website clicks to sales transactions and customer feedback. But raw data on its own tells us very little. It's only through data analysis methods that we can extract actionable insights and make informed decisions.

Whether you’re a business owner, student, analyst, or entrepreneur, understanding data analysis methods is no longer optional—it’s essential.

In this article, we’ll explore the most widely used data analysis methods, their benefits, tools, use cases, expert opinions, and FAQs—all written in a human-friendly, easy-to-understand tone.

🔍 What Are Data Analysis Methods?

Data analysis methods are systematic approaches used to examine, transform, and interpret data to discover patterns, trends, and insights. These methods range from simple descriptive statistics to complex predictive algorithms.

By using the right method, businesses and analysts can:

📈 Identify trends

💡 Solve business problems

🔮 Forecast future outcomes

🎯 Improve performance

📘 Types of Data Analysis Methods

Here’s a detailed breakdown of the major types of data analysis methods you should know in 2025:

1. Descriptive Analysis

Goal: Summarize historical data to understand what has happened. Example: Monthly revenue report, user growth trends.

Techniques Used:

Mean, median, mode

Frequency distribution

Data visualization (charts, graphs)

Best Tools: Excel, Tableau, Google Data Studio (now Looker Studio)
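For readers who want to see the techniques in code, here is a minimal sketch using only Python's standard library (`statistics` and `collections`). The `describe` helper and the revenue figures are hypothetical and exist only to illustrate mean, median, mode and a frequency distribution.

```python
from collections import Counter
from statistics import mean, median, multimode

def describe(values):
    """Return basic descriptive statistics for a list of numbers (hypothetical helper)."""
    return {
        "mean": mean(values),
        "median": median(values),
        "modes": multimode(values),  # every value tied for most frequent
        "frequency": dict(sorted(Counter(values).items())),  # value -> count
    }

# Hypothetical monthly revenue figures (in thousands), for illustration only
monthly_revenue = [12, 15, 15, 18, 20, 15, 22, 18]
summary = describe(monthly_revenue)
print(summary)
```

For this sample the mean is 16.875, the median is 16.5 and the single mode is 15. The frequency dictionary is exactly what a bar chart or histogram would plot.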

2. Exploratory Data Analysis (EDA)

Goal: Explore the dataset to uncover initial patterns, detect outliers, and identify relationships. Example: Discovering patterns in customer purchase history.

Techniques Used:

Box plots, scatter plots, heat maps

Correlation matrix

Data cleaning

Best Tools: Python (Pandas, Matplotlib), R, Power BI

3. Inferential Analysis

Goal: Make predictions or generalizations about a larger population based on sample data. Example: Predicting election results based on sample polling.

Techniques Used:

Hypothesis testing

Confidence intervals

T-tests, chi-square tests

Best Tools: SPSS, R, Python (SciPy)
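The polling example can be made concrete with a 95% confidence interval for a proportion. This is a minimal sketch, assuming the simple normal-approximation (Wald) interval and a hypothetical poll. Tools like SciPy or R offer more robust intervals and proper t-tests. The Wald interval can be unreliable for small samples or proportions near 0 or 1.

```python
from math import sqrt
from statistics import NormalDist

def proportion_ci(successes, n, confidence=0.95):
    """Normal-approximation (Wald) confidence interval for a population proportion."""
    p_hat = successes / n
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    margin = z * sqrt(p_hat * (1 - p_hat) / n)
    return p_hat - margin, p_hat + margin

# Hypothetical poll: 540 of 1,000 sampled voters favour candidate A
low, high = proportion_ci(540, 1000)
print(f"95% CI: {low:.3f} to {high:.3f}")
```

The interval runs from roughly 0.509 to 0.571. Because it lies entirely above 0.5, the sample suggests (but does not guarantee) that candidate A leads in the wider population.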

4. Diagnostic Analysis

Goal: Determine the causes of a past event or outcome. Example: Why did the bounce rate increase last month?

Techniques Used:

Root cause analysis

Regression analysis

Data mining

Best Tools: SQL, Power BI, SAS
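Since SQL is listed as a diagnostic tool, here is a minimal sketch of a root-cause drill-down for the bounce-rate question. It breaks the rate down by month and traffic source to see which segment moved. It uses Python's built-in `sqlite3` module. The `sessions` table, its columns and all the numbers are hypothetical.

```python
import sqlite3

# In-memory database with a hypothetical sessions table (schema is an assumption)
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sessions (month TEXT, source TEXT, bounced INTEGER)")
rows = (
    [("2025-05", "search", 1)] * 20 + [("2025-05", "search", 0)] * 80
    + [("2025-05", "social", 1)] * 10 + [("2025-05", "social", 0)] * 40
    + [("2025-06", "search", 1)] * 21 + [("2025-06", "search", 0)] * 79
    + [("2025-06", "social", 1)] * 30 + [("2025-06", "social", 0)] * 20
)
conn.executemany("INSERT INTO sessions VALUES (?, ?, ?)", rows)

# Bounce rate (%) per month and traffic source: which segment changed?
query = """
    SELECT month, source, ROUND(AVG(bounced) * 100, 1) AS bounce_pct
    FROM sessions
    GROUP BY month, source
    ORDER BY source, month
"""
results = conn.execute(query).fetchall()
for month, source, pct in results:
    print(month, source, pct)
```

In this made-up data, search traffic barely changes (20% to 21%) while social traffic jumps from 20% to 60%. That points the investigation at social campaigns or their landing pages.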

5. Predictive Analysis

Goal: Forecast future outcomes based on historical data. Example: Predicting next month’s sales based on seasonal trends.

Techniques Used:

Machine learning (decision trees, random forest)

Time series analysis

Neural networks

Best Tools: Python (Scikit-learn, TensorFlow), IBM Watson
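Before reaching for machine learning, a simple seasonal baseline is often worth computing for comparison. The sketch below is not one of the techniques listed above. It is a seasonal naive forecast with a growth adjustment: take the value from the same month last year and scale it by the most recent year-over-year growth. The function name and the sales figures are hypothetical.

```python
def seasonal_naive_forecast(history, season_length=12):
    """Forecast the next period as the value one season earlier, scaled by the
    ratio of the most recent season's total to the season before it."""
    if len(history) < 2 * season_length:
        raise ValueError("need at least two full seasons of history")
    last_season = history[-season_length:]
    prior_season = history[-2 * season_length:-season_length]
    growth = sum(last_season) / sum(prior_season)
    return history[-season_length] * growth

# Hypothetical monthly sales for two years (January to December, twice)
year1 = [100, 90, 110, 120, 130, 150, 160, 155, 140, 130, 170, 200]
year2 = [110, 99, 121, 132, 143, 165, 176, 171, 154, 143, 187, 220]
forecast = seasonal_naive_forecast(year1 + year2)
print(f"Next January forecast: {forecast:.1f}")
```

Here the forecast is about 121: last January's 110, grown by roughly 10%. A decision tree or neural network earns its place only if it beats a baseline like this on held-out data.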

6. Prescriptive Analysis

Goal: Recommend actions based on predicted outcomes. Example: Suggesting product pricing for maximum profitability.

Techniques Used:

Optimization

Simulation modeling

Decision trees

Best Tools: MATLAB, Excel Solver, Gurobi
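The pricing example can be sketched as a tiny optimization problem. This minimal version assumes a hypothetical linear demand curve and a fixed unit cost, then simply tries every candidate price. Solvers like Excel Solver or Gurobi handle the same idea at scale and with many constraints.

```python
def demand(price):
    """Hypothetical linear demand curve: 1,000 units at price 0, 20 fewer per unit of price."""
    return max(0, 1000 - 20 * price)

def profit(price, unit_cost):
    """Profit = margin per unit x units sold at that price."""
    return (price - unit_cost) * demand(price)

def best_price(candidates, unit_cost):
    """Exhaustively evaluate candidate prices and return the most profitable one."""
    return max(candidates, key=lambda p: profit(p, unit_cost))

price = best_price(range(10, 51), unit_cost=10)  # whole-number prices 10..50
print(price, profit(price, 10))
```

Under these assumptions the recommended price is 30, giving a profit of 8,000. Higher prices lose too many customers, and lower prices give away margin.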

7. Quantitative Analysis

Goal: Focus on numerical data to understand trends and measure outcomes. Example: Measuring website conversion rates.

Techniques Used:

Statistical modeling

Data aggregation

Regression
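Measuring website conversion rates is mostly a matter of data aggregation. Here is a minimal sketch in plain Python. The `(page, converted)` record format and the page names are hypothetical.

```python
from collections import defaultdict

def conversion_rates(events):
    """Aggregate (page, converted) records into a conversion rate per page."""
    visits = defaultdict(int)
    conversions = defaultdict(int)
    for page, converted in events:
        visits[page] += 1
        conversions[page] += int(converted)  # True counts as 1, False as 0
    return {page: conversions[page] / visits[page] for page in visits}

# Hypothetical visit log: which landing page, and whether the visit converted
events = [
    ("landing_a", True), ("landing_a", False), ("landing_a", False), ("landing_a", True),
    ("landing_b", False), ("landing_b", True), ("landing_b", False), ("landing_b", False),
]
rates = conversion_rates(events)
print(rates)
```

This gives 50% for `landing_a` and 25% for `landing_b`. With only a handful of visits per page, an inferential test would be needed before calling one page better.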

8. Qualitative Analysis

Goal: Analyze non-numerical data like text, images, or videos. Example: Analyzing customer reviews or survey responses.

Techniques Used:

Sentiment analysis

Thematic coding

Content analysis

Best Tools: NVivo, Lexalytics, Google Cloud Natural Language API
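To show the basic idea behind sentiment analysis, here is a deliberately simple lexicon-based sketch. The tiny word list and its weights are hypothetical. Real tools use far larger, weighted lexicons or trained models, and they handle negation and sarcasm, which this does not.

```python
import re

# Tiny hypothetical sentiment lexicon (word -> weight), for illustration only
LEXICON = {"great": 1, "love": 1, "fast": 1, "helpful": 1,
           "slow": -1, "broken": -1, "bad": -1, "refund": -1}

def sentiment(review):
    """Label a review by summing the lexicon weights of its words."""
    words = re.findall(r"[a-z']+", review.lower())
    score = sum(LEXICON.get(w, 0) for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

reviews = [
    "Love the app, support was fast and helpful!",
    "Checkout is slow and the coupon page is broken.",
    "It arrived on Tuesday.",
]
labels = [sentiment(r) for r in reviews]
print(labels)
```

The three reviews are labelled positive, negative and neutral respectively.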

💼 Use Cases of Data Analysis Methods in the Real World

Here’s how businesses use these methods across industries:

🛍 Retail

Method Used: Predictive & diagnostic

Purpose: Forecast demand, understand sales dips

💳 Banking

Method Used: Inferential & prescriptive

Purpose: Detect fraud, assess risk

🏥 Healthcare

Method Used: Diagnostic & descriptive

Purpose: Patient outcome analysis, treatment optimization

📱 Tech Companies

Method Used: Exploratory & predictive

Purpose: App usage patterns, churn prediction

🛠 Best Tools for Applying Data Analysis Methods

| Tool Name | Key Features | Suitable For |
|---|---|---|
| Excel | Charts, pivot tables, formulas | Beginners |
| Python | ML, EDA, statistical analysis | Intermediate to Expert |
| R Language | Statistical modeling, data visualization | Intermediate |
| Tableau | Visual dashboards | Business analysts |
| Power BI | Integration with Microsoft apps | Enterprises |
| SQL | Querying large datasets | Data engineers |

🌟 Real Reviews From Experts

“I started with Excel for simple descriptive analysis and gradually moved to Python for predictive modeling. The transition was smoother than I expected.” – Neha D., Data Analyst at a Startup

“We used prescriptive methods in Power BI to optimize our logistics routes. Saved us 20% in transport costs within three months.” – Arjun K., Supply Chain Manager

“Using EDA methods helped us detect user drop-off points in our app, which we quickly fixed.” – Priya S., UX Designer

📌 Step-by-Step Guide to Choosing the Right Data Analysis Method

Define Your Objective: What do you want to find out?

Identify Data Type: Is it qualitative or quantitative?

Choose Your Tool: Based on your team’s skill level.

Clean the Data: Remove duplicates, and deal with null values and outliers.

Apply the Method: Use the appropriate model/technique.

Visualize & Interpret: Create charts to simplify interpretation.

Take Action: Use insights to make data-driven decisions.
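The "Clean the Data" step is where many projects spend most of their time. Here is a minimal sketch of it in plain Python. The `clean` helper, the order records and the plausible-range bounds are all hypothetical. The sketch simply drops missing values and values outside a domain-defined range. In practice you might fill missing values instead, or use a statistical rule for outliers.

```python
def clean(records, field, low, high):
    """Return records with exact duplicates removed, missing `field` values dropped,
    and `field` values outside the plausible range [low, high] excluded."""
    seen = set()
    cleaned = []
    for rec in records:
        key = tuple(sorted(rec.items()))  # hashable fingerprint of the whole record
        if key in seen:
            continue  # exact duplicate
        seen.add(key)
        value = rec.get(field)
        if value is None:
            continue  # missing value
        if not low <= value <= high:
            continue  # implausible value, treated as an outlier
        cleaned.append(rec)
    return cleaned

# Hypothetical order records with typical data-quality problems
orders = [
    {"id": 1, "amount": 120.0},
    {"id": 1, "amount": 120.0},    # duplicate
    {"id": 2, "amount": None},     # missing amount
    {"id": 3, "amount": 95.5},
    {"id": 4, "amount": -40.0},    # negative amount
    {"id": 5, "amount": 99999.0},  # implausibly large amount
]
result = clean(orders, "amount", 0, 10_000)
print(result)
```

Only orders 1 and 3 survive. Logging what each rule removed, rather than discarding it silently, makes the cleaning step easier to review later.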

❓ Frequently Asked Questions (FAQs)

🔹 Q1. What is the difference between data analysis methods and data analysis techniques?

A: Methods refer to the broad approach (e.g., descriptive, predictive), while techniques are specific tools or processes (e.g., regression, clustering).

🔹 Q2. Which data analysis method should I use as a beginner?

A: Start with descriptive and exploratory analysis. These are easy to learn and highly insightful.

🔹 Q3. Do I need coding skills to use these methods?

A: Not always. Tools like Excel, Tableau, and Power BI require minimal to no coding. For advanced analysis (e.g., machine learning), coding helps.

🔹 Q4. Can I use multiple methods in one project?

A: Absolutely! Many real-world projects use a combination of methods for deeper insights.

🔹 Q5. Which is the most powerful data analysis method?

A: That depends on your goal. For forecasting, predictive analysis is powerful. For decision-making, prescriptive analysis works best.

🧠 Tips to Master Data Analysis Methods in 2025

📝 Take online courses (Coursera, Udemy, DataCamp)

💻 Practice with real datasets (Kaggle, Google Dataset Search)

🧮 Understand the math behind techniques

📊 Visualize findings to communicate better

👥 Collaborate with other analysts and teams

✅ Conclusion: Your Data, Your Power

Data is no longer just for analysts or IT professionals. In 2025, knowing how to use data analysis methods can set you apart in virtually any profession. From optimizing marketing campaigns to launching new products, these methods empower you to make data-driven decisions with confidence.

So whether you’re just starting out or looking to level up, keep experimenting, keep analyzing, and let your data tell the story.

🌐 Read more expert data analysis content at diglip7.com 📩 Have questions? Drop a comment or connect with us for consultation.

Intro to Web Scraping

Chances are, if you have access to the internet, you have heard of Data Science. Beyond the buzz generated by the title 'Data Scientist', though, only a few people working in relevant fields can claim to understand what data science is. Most people, if they think about it at all, picture a data scientist as a mad-scientist type who manipulates statistics and computers to magically generate crazy visuals and insights seemingly out of thin air.

Given the plethora of definitions of data science found in books and across the internet, the layman's image of a data scientist may not be that far off.

While the exact definition of ‘data science’ is still a work in progress, most in the know would agree that the data science universe encompasses fields such as:

Big Data

Analytics

Machine Learning

Data Mining

Visualization

Deep Learning

Business Intelligence

Predictive Modeling

Statistics

Data Source: Top keywords