#power-efficient FPGA

Microchip unveils PolarFire Core to cut FPGA costs for power-efficient applications

May 29, 2025 /Semi/ — Microchip Technology has launched the PolarFire® Core family of FPGAs and SoCs, targeting customers seeking cost-effective, low-power programmable logic solutions. By eliminating integrated serial transceivers, the new lineup reduces bill-of-material costs by up to 30%, while preserving the core strengths of PolarFire: power efficiency, security, and reliability. The…

#electronic components news#Electronic components supplier#Electronic parts supplier#embedded systems design#FPGA cost optimization#Microchip FPGA#mid-range FPGA#PolarFire Core#power-efficient FPGA#RISC-V SoC

Agilex 3 FPGAs: Next-Gen Edge-To-Cloud Technology At Altera

Agilex 3 FPGA

Today, Altera, an Intel company, launched a line of FPGA hardware, software, and development tools to expand the market and use cases for its programmable solutions. At its annual developer conference, Altera unveiled new development kits and software support for its Agilex 5 FPGAs, along with new details on its next-generation, cost- and power-optimized Agilex 3 FPGA.

Altera

Why It Matters

Altera is the sole independent provider of FPGAs, offering full-stack solutions designed for next-generation communications infrastructure, intelligent edge applications, and high-performance accelerated computing systems. Its extensive FPGA range gives customers adaptable hardware that adjusts quickly to the shifting market demands of the era of intelligent computing. With Agilex FPGAs that include AI Tensor Blocks, and with the Altera FPGA AI Suite, which speeds up FPGA development for AI inference using popular frameworks such as TensorFlow, PyTorch, and the OpenVINO toolkit alongside proven FPGA development flows, Altera is leading the industry in the use of FPGAs for AI inference workloads.

Intel Agilex 3

What Agilex 3 FPGAs Offer

Altera today revealed additional product details for its Agilex 3 FPGA, which is designed to meet the power, performance, and size requirements of embedded and intelligent edge applications. With densities ranging from 25K to 135K logic elements, Agilex 3 FPGAs offer faster performance, improved security, and higher levels of integration in a smaller package than their predecessors.

The FPGA family features an on-chip dual Arm Cortex-A55 hard processor subsystem alongside a programmable fabric enhanced with artificial intelligence capabilities. For intelligent edge applications, the FPGA enables real-time computation in time-sensitive uses such as autonomous vehicles and the industrial Internet of Things (IoT). For smart factory automation technologies, including robotics and machine vision, Agilex 3 FPGAs integrate smoothly with sensors, drivers, actuators, and machine learning algorithms.

Agilex 3 FPGAs provide numerous major security advancements over the previous generation, such as bitstream encryption, authentication, and physical anti-tamper detection, to fulfill the needs of both defense and commercial projects. Critical applications in industrial automation and other fields benefit from these capabilities, which guarantee dependable and secure performance.

Agilex 3 FPGAs offer a 1.9× boost in performance over the previous generation by utilizing Altera’s HyperFlex architecture. Extending the HyperFlex architecture to Agilex 3 FPGAs makes high clock frequencies achievable in an FPGA that is optimized for both cost and power. Added support for LPDDR4X memory and integrated high-speed transceivers capable of up to 12.5 Gbps allow for increased system performance.

Agilex 3 FPGA software support is scheduled to begin in Q1 2025, with development kits and production shipments following in the middle of the year.

How FPGA Software Tools Speed Market Entry

Quartus Prime Pro

Altera also highlighted new features in its Quartus Prime Pro software, which gives developers industry-leading compilation times, enhanced designer productivity, and faster time-to-market. The upcoming Quartus Prime Pro 24.3 release adds enhanced support for embedded applications and access to additional Agilex devices.

With this forthcoming release, customers can design with the Agilex 5 FPGA D-series, which targets an even wider range of use cases than the Agilex 5 FPGA E-series, which is optimized for efficient computing in edge applications. To help lower entry barriers for its mid-range FPGA family, Altera provides software support for the Agilex 5 FPGA E-series through a free license in the Quartus Prime software.

This software release also supports embedded applications that use either Altera’s RISC-V solution, the Nios V soft-core processor that can be instantiated in the FPGA fabric, or an integrated hard processor subsystem. Agilex 5 FPGA design examples that highlight Nios V features such as lockstep, complete ECC, and branch prediction are now available to customers. New OS and RTOS support for the Agilex 5 SoC FPGA-based hard processor subsystem is available through the most recent versions of Linux, VxWorks, and Zephyr.

How to Begin for Developers

Altera and its ecosystem partners announced the release of 11 additional Agilex 5 FPGA-based development kits and system-on-modules (SoMs), adding to the extensive range of Agilex 5 and Agilex 7 FPGA-based solutions available to help developers get started.

With FPGA development kits, developers can access Altera hardware easily and affordably, gain firsthand knowledge of the features and advantages Agilex FPGAs offer, and transition quickly to full-volume production.

Kits are available for a wide range of use cases and in all geographic regions. To find out how to buy, go to Altera’s Partner Showcase website.

Read more on govindhtech.com

#Agilex3FPGA#NextGen#CloudTechnology#TensorFlow#Agilex5FPGA#OpenVINO#IntelAgilex3#artificialintelligence#InternetThings#IoT#FPGA#LPDDR4XMemory#Agilex5FPGAEseries#technology#Agilex7FPGAs#QuartusPrimePro#technews#news#govindhtech

FPGA Market - Exploring the Growth Dynamics

The FPGA market is growing rapidly and finding a foothold among many of today’s technologies. FPGAs are versatile components that can be programmed and reprogrammed to perform specialized tasks, which keeps them at the forefront of innovation across industries such as telecommunications, automotive, aerospace, and consumer electronics. Traditional fixed-function chips cannot be adapted to a new application, whereas FPGAs can. This enables fast prototyping and iteration, which is extremely important in fast-changing technology fields such as telecommunications and data centers. FPGAs are also designed to execute complex algorithms and process data at high speed, making them well positioned to handle the demands of next-generation networks and cloud computing infrastructures.

In the aerospace and defense industries, FPGAs have contributed critically to improving system performance and reliability. Their flexibility enables the complex signal processing, encryption, and communication systems that defense-related applications require. FPGAs provide the speed and flexibility needed to meet the most stringent specifications of aerospace and defense projects, such as satellite communications, radar systems, and electronic warfare. Continuing improvements in FPGA technology, in the form of higher processing power and lower power consumption, are fueling demand in these critical areas.

Consumer electronics is another emerging application area for FPGAs. From smartphones to smart devices to the IoT, demand for low-power, high-performance computing is on the rise. FPGAs can integrate a wide array of functions onto a single chip, cutting the number of components required and thereby saving space and power. This is useful to consumer electronics manufacturers that want state-of-the-art products with advanced features and high efficiency. As IoT devices proliferate, FPGAs will continue to foster innovation in this area.

Competition and investment are growing within the FPGA market as key players develop more advanced and efficient products. Investment in R&D increases FPGA performance, expands feature sets, and lowers cost. This competitive environment drives innovation and gives end users a wider choice, contributing to the growth of the market as a whole.

Author Bio -

Akshay Thakur

Senior Market Research Expert at The Insight Partners

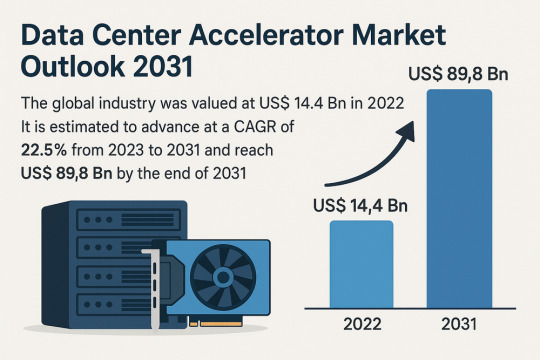

Data Center Accelerator Market Set to Transform AI Infrastructure Landscape by 2031

The global data center accelerator market is poised for exponential growth, projected to rise from USD 14.4 Bn in 2022 to a staggering USD 89.8 Bn by 2031, advancing at a CAGR of 22.5% during the forecast period from 2023 to 2031. Rapid adoption of Artificial Intelligence (AI), Machine Learning (ML), and High-Performance Computing (HPC) is the primary catalyst driving this expansion.
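As a quick sanity check on these headline figures, the compound annual growth rate can be recomputed from the two endpoint values. The sketch below is illustrative only: the function name `cagr` is our own, and it assumes nine annual compounding periods between 2022 and 2031.

```python
def cagr(start_value: float, end_value: float, periods: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10% per year)."""
    if start_value <= 0 or periods <= 0:
        raise ValueError("start_value and periods must be positive")
    return (end_value / start_value) ** (1.0 / periods) - 1.0


# Endpoints quoted in the report: USD 14.4 Bn (2022) and USD 89.8 Bn (2031).
# Assumption: growth compounds over 2031 - 2022 = 9 annual periods.
implied = cagr(14.4, 89.8, 2031 - 2022)
print(f"Implied CAGR: {implied:.2%}")
```

Under that assumption the implied rate lands within about a tenth of a percentage point of the quoted 22.5%, so the endpoint values and the stated growth rate are mutually consistent.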

Market Overview: Data center accelerators are specialized hardware components that improve computing performance by efficiently handling intensive workloads. These include Graphics Processing Units (GPUs), Tensor Processing Units (TPUs), Field Programmable Gate Arrays (FPGAs), and Application-Specific Integrated Circuits (ASICs), which complement CPUs by expediting data processing.

Accelerators enable data centers to process massive datasets more efficiently, reduce reliance on servers, and optimize costs, a significant advantage in a data-driven world.

Market Drivers & Trends

Rising Demand for High-performance Computing (HPC): The proliferation of data-intensive applications across industries such as healthcare, autonomous driving, financial modeling, and weather forecasting is fueling demand for robust computing resources.

Boom in AI and ML Technologies: The computational requirements of AI and ML are driving the need for accelerators that can handle parallel operations and manage extensive datasets efficiently.

Cloud Computing Expansion: Major players like AWS, Azure, and Google Cloud are investing in infrastructure that leverages accelerators to deliver faster AI-as-a-service platforms.

Latest Market Trends

GPU Dominance: GPUs continue to dominate the market, especially in AI training and inference workloads, due to their capability to handle parallel computations.

Custom Chip Development: Tech giants are increasingly developing custom chips (e.g., Meta’s MTIA and Google's TPUs) tailored to their specific AI processing needs.

Energy Efficiency Focus: Companies are prioritizing the design of accelerators that deliver high computational power with reduced energy consumption, aligning with green data center initiatives.

Key Players and Industry Leaders

Prominent companies shaping the data center accelerator landscape include:

NVIDIA Corporation – A global leader in GPUs powering AI, gaming, and cloud computing.

Intel Corporation – Investing heavily in FPGA and ASIC-based accelerators.

Advanced Micro Devices (AMD) – Recently expanded its EPYC CPU lineup for data centers.

Meta Inc. – Introduced Meta Training and Inference Accelerator (MTIA) chips for internal AI applications.

Google (Alphabet Inc.) – Continues deploying TPUs across its cloud platforms.

Other notable players include Huawei Technologies, Cisco Systems, Dell Inc., Fujitsu, Enflame Technology, Graphcore, and SambaNova Systems.

Recent Developments

March 2023 – NVIDIA introduced a comprehensive Data Center Platform strategy at GTC 2023 to address diverse computational requirements.

June 2023 – AMD launched new EPYC CPUs designed to complement GPU-powered accelerator frameworks.

2023 – Meta Inc. revealed the MTIA chip to improve performance for internal AI workloads.

2023 – Intel announced a four-year roadmap for data center innovation focused on Infrastructure Processing Units (IPUs).

Gain an understanding of key findings from our Report in this sample - https://www.transparencymarketresearch.com/sample/sample.php?flag=S&rep_id=82760

Market Opportunities

Edge Data Center Integration: As computing shifts closer to the edge, opportunities arise for compact and energy-efficient accelerators in edge data centers for real-time analytics and decision-making.

AI in Healthcare and Automotive: As AI adoption grows in precision medicine and autonomous vehicles, demand for accelerators tuned for domain-specific processing will soar.

Emerging Markets: Rising digitization in emerging economies presents substantial opportunities for data center expansion and accelerator deployment.

Future Outlook

With AI, ML, and analytics forming the foundation of next-generation applications, the demand for enhanced computational capabilities will continue to climb. By 2031, the data center accelerator market will likely transform into a foundational element of global IT infrastructure.

Analysts anticipate increasing collaboration between hardware manufacturers and AI software developers to optimize performance across the board. As digital transformation accelerates, companies investing in custom accelerator architectures will gain significant competitive advantages.

Market Segmentation

By Type:

Central Processing Unit (CPU)

Graphics Processing Unit (GPU)

Application-Specific Integrated Circuit (ASIC)

Field-Programmable Gate Array (FPGA)

Others

By Application:

Advanced Data Analytics

AI/ML Training and Inference

Computing

Security and Encryption

Network Functions

Others

Regional Insights

Asia Pacific dominates the global market due to explosive digital content consumption and rapid infrastructure development in countries such as China, India, Japan, and South Korea.

North America holds a significant share due to the presence of major cloud providers, AI startups, and heavy investment in advanced infrastructure. The U.S. remains a critical hub for data center deployment and innovation.

Europe is steadily adopting AI and cloud computing technologies, contributing to increased demand for accelerators in enterprise data centers.

Why Buy This Report?

Comprehensive insights into market drivers, restraints, trends, and opportunities

In-depth analysis of the competitive landscape

Region-wise segmentation with revenue forecasts

Includes strategic developments and key product innovations

Covers historical data from 2017 and forecast till 2031

Delivered in convenient PDF and Excel formats

Frequently Asked Questions (FAQs)

1. What was the size of the global data center accelerator market in 2022? The market was valued at US$ 14.4 Bn in 2022.

2. What is the projected market value by 2031? It is projected to reach US$ 89.8 Bn by the end of 2031.

3. What is the key factor driving market growth? The surge in demand for AI/ML processing and high-performance computing is the major driver.

4. Which region holds the largest market share? Asia Pacific is expected to dominate the global data center accelerator market from 2023 to 2031.

5. Who are the leading companies in the market? Top players include NVIDIA, Intel, AMD, Meta, Google, Huawei, Dell, and Cisco.

6. What type of accelerator dominates the market? GPUs currently dominate the market due to their parallel processing efficiency and widespread adoption in AI/ML applications.

7. What applications are fueling growth? Applications like AI/ML training, advanced analytics, and network security are major contributors to the market's growth.

Explore Latest Research Reports by Transparency Market Research:

Tactile Switches Market: https://www.transparencymarketresearch.com/tactile-switches-market.html

GaN Epitaxial Wafers Market: https://www.transparencymarketresearch.com/gan-epitaxial-wafers-market.html

Silicon Carbide MOSFETs Market: https://www.transparencymarketresearch.com/silicon-carbide-mosfets-market.html

Chip Metal Oxide Varistor (MOV) Market: https://www.transparencymarketresearch.com/chip-metal-oxide-varistor-mov-market.html

About Transparency Market Research

Transparency Market Research, a global market research company registered at Wilmington, Delaware, United States, provides custom research and consulting services. Our exclusive blend of quantitative forecasting and trends analysis provides forward-looking insights for thousands of decision makers. Our experienced team of Analysts, Researchers, and Consultants uses proprietary data sources and various tools and techniques to gather and analyze information. Our data repository is continuously updated and revised by a team of research experts, so that it always reflects the latest trends and information. With a broad research and analysis capability, Transparency Market Research employs rigorous primary and secondary research techniques in developing distinctive data sets and research material for business reports.

Contact:

Transparency Market Research Inc.
CORPORATE HEADQUARTER DOWNTOWN, 1000 N. West Street, Suite 1200, Wilmington, Delaware 19801 USA
Tel: +1-518-618-1030
USA - Canada Toll Free: 866-552-3453
Website: https://www.transparencymarketresearch.com
Email: [email protected]

Point of Load Power Chip Market: Opportunities in Commercial and Residential Sectors

MARKET INSIGHTS

The global Point of Load Power Chip Market size was valued at US$ 1,340 million in 2024 and is projected to reach US$ 2,450 million by 2032, at a CAGR of 9.27% during the forecast period 2025-2032. This growth trajectory follows a broader semiconductor industry trend, where the worldwide market reached USD 580 billion in 2022 despite macroeconomic headwinds.
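The growth rate implied by two endpoint values depends on which year is treated as the base. The sketch below recomputes it under both readings of the forecast window; the helper name `implied_cagr` is ours, not the report's, and the report may rely on a base value or rounding convention that the text does not state.

```python
def implied_cagr(start_value: float, end_value: float,
                 start_year: int, end_year: int) -> float:
    """Growth rate (as a fraction) that compounds start_value into end_value."""
    periods = end_year - start_year
    if start_value <= 0 or periods <= 0:
        raise ValueError("need a positive start value and end_year > start_year")
    return (end_value / start_value) ** (1.0 / periods) - 1.0


START_MUSD = 1340.0  # quoted 2024 market size, US$ million
END_MUSD = 2450.0    # quoted 2032 projection, US$ million

# Reading 1: compound from the 2024 valuation year (8 periods).
from_2024 = implied_cagr(START_MUSD, END_MUSD, 2024, 2032)
# Reading 2: compound over the stated 2025-2032 forecast window (7 periods).
from_2025 = implied_cagr(START_MUSD, END_MUSD, 2025, 2032)

print(f"Base year 2024: {from_2024:.2%}")
print(f"Base year 2025: {from_2025:.2%}")
```

Both readings come out somewhat below the quoted 9.27%, which suggests the published rate rests on a base value or convention not given here.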

Point-of-load (PoL) power chips are voltage regulator ICs designed for localized power conversion near high-performance processors, FPGAs, and ASICs. These compact solutions provide precise voltage regulation, improved transient response, and higher efficiency compared to centralized power architectures. Key variants include single-channel (dominant with 65% market share) and multi-channel configurations, deployed across industrial (32% share), automotive (25%), and aerospace (18%) applications.

The market expansion is driven by escalating power demands in 5G infrastructure, AI servers, and electric vehicles—each requiring advanced power management solutions. Recent innovations like Infineon’s 12V/48V multi-phase controllers and TI’s buck-boost converters demonstrate how PoL technology addresses modern efficiency challenges. However, supply chain constraints and geopolitical factors caused Asia-Pacific revenues to dip 2% in 2022, even as Americas grew 17%.

MARKET DYNAMICS

MARKET DRIVERS

Expanding Demand for Energy-Efficient Electronics to Accelerate Market Growth

The global push toward energy efficiency is creating substantial demand for point-of-load (POL) power chips across multiple industries. These components play a critical role in reducing power consumption by delivering optimized voltage regulation directly to processors and other sensitive ICs rather than relying on centralized power supplies. Current market analysis reveals that POL solutions can improve overall system efficiency by 15-30% compared to traditional power architectures, making them indispensable for modern electronics. The rapid proliferation of IoT devices, 5G infrastructure, and AI-driven applications further amplifies this demand, as these technologies require precise power management at minimal energy loss.
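One reason regulating at the load helps is simple conduction loss: for the same delivered power, distributing at a higher bus voltage means less current in the board's copper, and resistive loss scales with the square of current. The sketch below illustrates that effect only. The load, path resistance, and bus voltages are hypothetical values chosen for illustration, and the converter's own losses are deliberately ignored.

```python
def conduction_loss_w(load_power_w: float, bus_voltage_v: float,
                      path_resistance_ohm: float) -> float:
    """I^2 * R loss in the distribution path for a given delivered power."""
    current_a = load_power_w / bus_voltage_v
    return current_a ** 2 * path_resistance_ohm


LOAD_W = 30.0      # hypothetical processor load
PATH_OHM = 0.005   # hypothetical 5 milliohm distribution path

# Centralized supply: the 1.0 V rail itself crosses the board (30 A).
centralized_w = conduction_loss_w(LOAD_W, 1.0, PATH_OHM)
# Point of load: a 12 V bus crosses the board (2.5 A), converted next to the load.
point_of_load_w = conduction_loss_w(LOAD_W, 12.0, PATH_OHM)

print(f"Centralized path loss:   {centralized_w:.3f} W")
print(f"Point-of-load path loss: {point_of_load_w:.3f} W")
```

A real comparison would also account for the regulator's conversion efficiency, so this shows the direction of the effect rather than the 15-30% system-level figure cited above.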

Automotive Electrification Trends to Fuel Adoption Rates

Automakers worldwide are accelerating their transition to electric vehicles (EVs) and advanced driver-assistance systems (ADAS), creating unprecedented opportunities for POL power chips. These components are essential for managing power distribution to onboard computing modules, sensors, and infotainment systems with minimal electromagnetic interference. Industry projections estimate that automotive applications will account for over 25% of the total POL power chip market by 2027, driven by increasing semiconductor content per vehicle. Recent advancements in autonomous driving technology particularly benefit from the high current density and fast transient response offered by next-generation POL regulators.

Data Center Infrastructure Modernization to Sustain Market Expansion

Hyperscale data centers are undergoing significant architectural changes to support AI workloads and edge computing, with POL power delivery emerging as a critical enabling technology. Modern server designs increasingly adopt distributed power architectures to meet the stringent efficiency requirements of advanced CPUs, GPUs, and memory modules. This shift comes amid forecasts predicting global data center power consumption will reach 8% of worldwide electricity usage by 2030, making efficiency improvements economically imperative. Leading chip manufacturers have responded with innovative POL solutions featuring digital interfaces for real-time voltage scaling and load monitoring capabilities.

MARKET RESTRAINTS

Supply Chain Disruptions and Material Shortages to Constrain Market Potential

While demand for POL power chips continues growing, the semiconductor industry faces persistent challenges in securing stable supply chains for critical materials. Specialty substrates, such as silicon carbide (SiC) and gallium nitride (GaN), which enable high-efficiency POL designs, remain subject to allocation due to fabrication capacity limitations. Market intelligence suggests lead times for certain power semiconductors exceeded 52 weeks during recent supply crunches, creating bottlenecks for electronics manufacturers. These constraints particularly impact automotive and industrial sectors where component qualification processes limit rapid supplier substitutions.

Thermal Management Challenges to Limit Design Flexibility

As POL regulators push toward higher current densities in smaller form factors, thermal dissipation becomes a significant constraint for system designers. Contemporary applications often require POL solutions to deliver upwards of 30A from packages smaller than 5mm x 5mm, creating localized hot spots that challenge traditional cooling approaches. This thermal limitation forces compromises between power density, efficiency, and reliability—particularly in space-constrained applications like smartphones or wearable devices. Manufacturers continue investing in advanced packaging technologies to address these limitations, but thermal considerations remain a key factor in POL architecture decisions.

MARKET OPPORTUNITIES

Integration of AI-Based Power Optimization to Create New Value Propositions

Emerging artificial intelligence applications in power management present transformative opportunities for the POL chip market. Adaptive voltage scaling algorithms powered by machine learning can dynamically optimize power delivery based on workload patterns and environmental conditions. Early implementations in data centers demonstrate potential energy savings of 10-15% through AI-driven POL adjustments, with similar techniques now being adapted for mobile and embedded applications. This technological convergence enables POL regulators to evolve from static components into intelligent power nodes within larger system architectures.
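The payoff from voltage scaling follows from the standard first-order CMOS dynamic power model, P = α·C·V²·f, in which power falls with the square of the supply voltage. The sketch below is not an AI-based controller, only the arithmetic such a controller would exploit; the activity factor, capacitance, frequency, and voltages are all hypothetical.

```python
def dynamic_power_w(activity: float, capacitance_f: float,
                    voltage_v: float, frequency_hz: float) -> float:
    """First-order CMOS dynamic power: alpha * C * V^2 * f."""
    return activity * capacitance_f * voltage_v ** 2 * frequency_hz


# Hypothetical operating point: alpha = 0.2, C = 1 nF, f = 2 GHz.
nominal_w = dynamic_power_w(0.2, 1e-9, 1.00, 2e9)
# Same workload with the rail lowered by 5% (frequency held constant).
scaled_w = dynamic_power_w(0.2, 1e-9, 0.95, 2e9)

saving = 1.0 - scaled_w / nominal_w
print(f"Dynamic power saving from a 5% voltage reduction: {saving:.2%}")
```

Because the saving depends only on the voltage ratio in this model, a 5% lower rail yields a little under 10% lower dynamic power regardless of the other assumed values. Static leakage is ignored.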

Medical Electronics Miniaturization to Open New Application Verticals

The healthcare sector’s accelerating adoption of portable and implantable medical devices creates substantial growth potential for compact POL solutions. Modern diagnostic equipment and therapeutic devices increasingly incorporate multiple voltage domains that must operate reliably within strict safety parameters. POL power chips meeting medical safety standards (IEC 60601) currently represent less than 15% of the total market, signaling significant expansion capacity as device manufacturers transition from linear regulators to more efficient switching architectures. This transition aligns with broader healthcare industry trends toward battery-powered and wireless solutions.

MARKET CHALLENGES

Design Complexity and Verification Costs to Impact Time-to-Market

Implementing advanced POL architectures requires sophisticated power integrity analysis and system-level verification—processes that significantly extend development cycles. Power delivery networks incorporating multiple POL regulators demand extensive simulation to ensure stability across all operating conditions, with analysis suggesting power subsystem design now consumes 30-40% of total PCB development effort for complex electronics. These challenges are compounded by the need to comply with evolving efficiency standards and electromagnetic compatibility requirements across different geographic markets.

Intense Price Competition to Pressure Profit Margins

The POL power chip market faces ongoing pricing pressures as the technology matures and experiences broader adoption. While premium applications like servers and telecom infrastructure tolerate higher component costs, consumer electronics and IoT devices demonstrate extreme price sensitivity. Market analysis indicates that average selling prices for basic POL regulators have declined by 7-12% annually over the past three years, forcing manufacturers to achieve economies of scale through architectural innovations and process technology advancements. This relentless pricing pressure creates significant challenges for sustaining research and development investments.

POINT OF LOAD POWER CHIP MARKET TRENDS

Rising Demand for Efficient Power Management in Electronic Devices

The global Point of Load (PoL) power chip market is experiencing robust growth, driven by the increasing complexity of electronic devices requiring localized voltage regulation. As modern integrated circuits (ICs) operate at progressively lower voltages with higher current demands, PoL solutions have become critical for minimizing power loss and optimizing efficiency. The automotive sector alone accounts for over 30% of the market demand, as electric vehicles incorporate dozens of PoL regulators for advanced driver assistance systems (ADAS) and infotainment. Meanwhile, 5G infrastructure deployment is accelerating adoption in telecommunications, where base stations require precise voltage regulation for RF power amplifiers.

Other Trends

Miniaturization and Integration Advancements

Manufacturers are pushing the boundaries of semiconductor packaging technologies to develop smaller, more integrated PoL solutions. Stacked die configurations and wafer-level packaging now allow complete power management ICs (PMICs) to occupy less than 10mm² board space. This miniaturization is particularly crucial for portable medical devices and wearable technologies, where space constraints demand high power density. Recent innovations in gallium nitride (GaN) and silicon carbide (SiC) technologies are further enhancing power conversion efficiency, with some PoL converters now achieving over 95% efficiency even at load currents exceeding 50A.
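To see why efficiency matters at these currents, the heat a converter must shed can be estimated from its output power and efficiency. In the sketch below, the 50 A and 95% figures come from the paragraph above, while the 1.0 V output rail is an assumption made for illustration.

```python
def converter_loss_w(output_voltage_v: float, output_current_a: float,
                     efficiency: float) -> float:
    """Power dissipated inside a converter at a given efficiency (0 < eta <= 1)."""
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in (0, 1]")
    output_power_w = output_voltage_v * output_current_a
    input_power_w = output_power_w / efficiency
    return input_power_w - output_power_w


# Assumption: 1.0 V core rail. 50 A and 95% are the figures quoted above.
loss_w = converter_loss_w(1.0, 50.0, 0.95)
print(f"Heat dissipated in the converter: {loss_w:.2f} W")
```

Even at 95% efficiency this works out to a few watts concentrated in a very small package, which is the thermal constraint that drives the packaging advances described here.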

Industry 4.0 and Smart Manufacturing Adoption

The fourth industrial revolution is driving significant demand for industrial-grade PoL solutions as factories deploy more IoT-enabled equipment and robotics. Unlike commercial-grade components, these industrial PoL converters feature extended temperature ranges (-40°C to +125°C operation) and enhanced reliability metrics. Market analysis indicates industrial applications will grow at a CAGR exceeding 8% through 2030, as manufacturers increasingly adopt predictive maintenance systems requiring robust power delivery. Furthermore, the aerospace sector’s shift toward more electric aircraft (MEA) architectures is creating specialized demand for radiation-hardened PoL regulators capable of withstanding harsh environmental conditions.

COMPETITIVE LANDSCAPE

Key Industry Players

Semiconductor Giants Compete Through Innovation and Strategic Expansions

The global Point of Load (PoL) power chip market features a highly competitive landscape dominated by established semiconductor manufacturers, with Analog Devices and Texas Instruments collectively holding over 35% market share in 2024. These companies maintain leadership through continuous R&D investment – Analog Devices alone allocated approximately 20% of its annual revenue to product development last year.

While traditional power management leaders maintain strong positions, emerging players like Infineon Technologies are gaining traction through specialized automotive-grade solutions. The Germany-based company reported 18% year-over-year growth in its power segment during 2023, fueled by increasing electric vehicle adoption.

Market dynamics show regional variations in competitive strategies. Renesas Electronics and ROHM Semiconductor dominate the Asia-Pacific sector with cost-optimized solutions, whereas North American firms focus on high-efficiency chips for data center applications. This regional specialization creates multiple growth avenues across market segments.

Recent years have seen accelerated consolidation, with NXP Semiconductors acquiring three smaller power IC developers since 2022 to expand its PoL portfolio. Such strategic moves, combined with ongoing technological advancements in wide-bandgap semiconductors, are reshaping competitive positioning across the value chain.

List of Key Point of Load Power Chip Manufacturers

Analog Devices, Inc. (U.S.)

Infineon Technologies AG (Germany)

Texas Instruments Incorporated (U.S.)

NXP Semiconductors N.V. (Netherlands)

STMicroelectronics N.V. (Switzerland)

Renesas Electronics Corporation (Japan)

ROHM Semiconductor (Japan)

Dialog Semiconductor (Germany)

Microchip Technology Inc. (U.S.)

Segment Analysis:

By Type

Multi-channel Segment Dominates Due to Growing Demand for Higher Efficiency Power Management

The market is segmented based on type into:

Single Channel

Subtypes: Non-isolated, Isolated

Multi-channel

Subtypes: Dual-output, Triple-output, Quad-output

By Application

Automotive Segment Leads Owing to Increasing Electronic Content in Vehicles

The market is segmented based on application into:

Industrial

Aerospace

Automotive

Medical

Others

By Form Factor

Surface-Mount Devices Gaining Traction Due to Miniaturization Trends

The market is segmented based on form factor into:

Through-hole

Surface-mount

By Voltage Rating

Low Voltage Segment Prevails in Consumer Electronics Applications

The market is segmented based on voltage rating into:

Low Voltage (Below 5V)

Medium Voltage (5V-24V)

High Voltage (Above 24V)

Regional Analysis: Point of Load Power Chip Market

North America

The North American Point of Load (PoL) power chip market is driven by strong demand from automotive, industrial, and aerospace applications, particularly in the U.S. and Canada. The region benefits from advanced semiconductor manufacturing infrastructure and high investments in next-generation power management solutions. With automotive electrification trends accelerating, such as the shift toward electric vehicles (EVs) and ADAS (Advanced Driver Assistance Systems), demand for efficient PoL power chips is rising. Additionally, data center expansions and 5G infrastructure deployments are fueling growth. The U.S. holds the majority share, supported by key players like Texas Instruments and Analog Devices, as well as increasing government-backed semiconductor investments such as the CHIPS and Science Act.

Europe

Europe’s PoL power chip market is shaped by stringent energy efficiency regulations and strong industrial automation adoption, particularly in Germany and France. The automotive sector remains a key driver, with European OEMs integrating advanced power management solutions to comply with emissions regulations and enhance EV performance. The presence of leading semiconductor firms like Infineon Technologies and STMicroelectronics strengthens innovation, focusing on miniaturization and high-efficiency chips. Challenges include economic uncertainties and supply chain disruptions, but demand remains resilient in medical and renewable energy applications, where precise power distribution is critical.

Asia-Pacific Asia-Pacific dominates the global PoL power chip market, led by China, Japan, and South Korea, which account for a majority of semiconductor production and consumption. China’s rapid industrialization, coupled with its aggressive investments in EVs and consumer electronics, fuels demand for multi-channel PoL solutions. Meanwhile, Japan’s automotive and robotics sectors rely on high-reliability power chips, while India’s expanding telecom and renewable energy infrastructure presents new opportunities. Despite supply chain vulnerabilities and export restrictions impacting the region, local players like Renesas Electronics and ROHM Semiconductor continue to advance technologically.

South America South America’s PoL power chip market is still in a nascent stage, with Brazil and Argentina showing gradual growth in industrial and automotive applications. Local infrastructure limitations and heavy reliance on imports hinder market expansion, but rising investments in automotive manufacturing and renewable energy projects could spur future demand. Political and economic instability remains a barrier; however, increasing digitization in sectors like telecommunications and smart grid development provides a foundation for long-term PoL adoption.

Middle East & Africa The Middle East & Africa’s PoL power chip market is emerging but constrained by limited semiconductor infrastructure. Gulf nations like Saudi Arabia and the UAE are investing in smart city projects, data centers, and industrial automation, driving demand for efficient power management solutions. Africa’s market is more fragmented, though increasing mobile penetration and renewable energy initiatives present growth avenues. Regional adoption is slower due to lower local manufacturing capabilities, but partnerships with global semiconductor suppliers could accelerate market penetration.

Report Scope

This market research report provides a comprehensive analysis of the Global Point of Load Power Chip market, covering the forecast period 2025–2032. It offers detailed insights into market dynamics, technological advancements, competitive landscape, and key trends shaping the industry.

Key focus areas of the report include:

Market Size & Forecast: Historical data and future projections for revenue, unit shipments, and market value across major regions and segments. The Global Point of Load Power Chip market was valued at USD 1.2 billion in 2024 and is projected to reach USD 2.8 billion by 2032, growing at a CAGR of 11.3%.

Segmentation Analysis: Detailed breakdown by product type (Single Channel, Multi-channel), application (Industrial, Aerospace, Automotive, Medical, Others), and end-user industry to identify high-growth segments and investment opportunities.

Regional Outlook: Insights into market performance across North America, Europe, Asia-Pacific, South America, and the Middle East & Africa. Asia-Pacific currently dominates with 42% market share due to strong semiconductor manufacturing presence.

Competitive Landscape: Profiles of leading market participants including Analog Devices, Texas Instruments, and Infineon Technologies, including their product offerings, R&D focus (notably in automotive and industrial applications), and recent developments.

Technology Trends & Innovation: Assessment of emerging technologies including integration with IoT devices, advanced power management solutions, and miniaturization trends in semiconductor design.

Market Drivers & Restraints: Evaluation of factors driving market growth (increasing demand for energy-efficient devices, growth in automotive electronics) along with challenges (supply chain constraints, semiconductor shortages).

Stakeholder Analysis: Insights for component suppliers, OEMs, system integrators, and investors regarding strategic opportunities in evolving power management solutions.
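The figures quoted under Market Size & Forecast can be sanity-checked with the standard compound annual growth rate (CAGR) formula. The following is a minimal Python sketch, assuming 2024 is the base year and 2032 the end year; the report does not state its exact base period, so the 8-year span is an assumption, and the function name `cagr` is chosen here for illustration.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Assumption: growth is measured from 2024 (USD 1.2 billion)
# to 2032 (USD 2.8 billion), i.e. over 8 years.
rate = cagr(1.2, 2.8, 2032 - 2024)
print(f"Implied CAGR: {rate:.1%}")
```

Under these assumptions the implied rate comes out at roughly 11% per year, in the same range as the quoted CAGR; choosing a different base year would shift the figure.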

Related Reports:

https://semiconductorblogs21.blogspot.com/2025/06/laser-diode-cover-glass-market-valued.html

https://semiconductorblogs21.blogspot.com/2025/06/q-switches-for-industrial-market-key.html

https://semiconductorblogs21.blogspot.com/2025/06/ntc-smd-thermistor-market-emerging_19.html

https://semiconductorblogs21.blogspot.com/2025/06/lightning-rod-for-building-market.html

https://semiconductorblogs21.blogspot.com/2025/06/cpe-chip-market-analysis-cagr-of-121.html

https://semiconductorblogs21.blogspot.com/2025/06/line-array-detector-market-key-players.html

https://semiconductorblogs21.blogspot.com/2025/06/tape-heaters-market-industry-size-share.html

https://semiconductorblogs21.blogspot.com/2025/06/wavelength-division-multiplexing-module.html

https://semiconductorblogs21.blogspot.com/2025/06/electronic-spacer-market-report.html

https://semiconductorblogs21.blogspot.com/2025/06/5g-iot-chip-market-technology-trends.html

https://semiconductorblogs21.blogspot.com/2025/06/polarization-beam-combiner-market.html

https://semiconductorblogs21.blogspot.com/2025/06/amorphous-selenium-detector-market-key.html

https://semiconductorblogs21.blogspot.com/2025/06/output-mode-cleaners-market-industry.html

https://semiconductorblogs21.blogspot.com/2025/06/digitally-controlled-attenuators-market.html

https://semiconductorblogs21.blogspot.com/2025/06/thin-double-sided-fpc-market-key.html

0 notes

Text

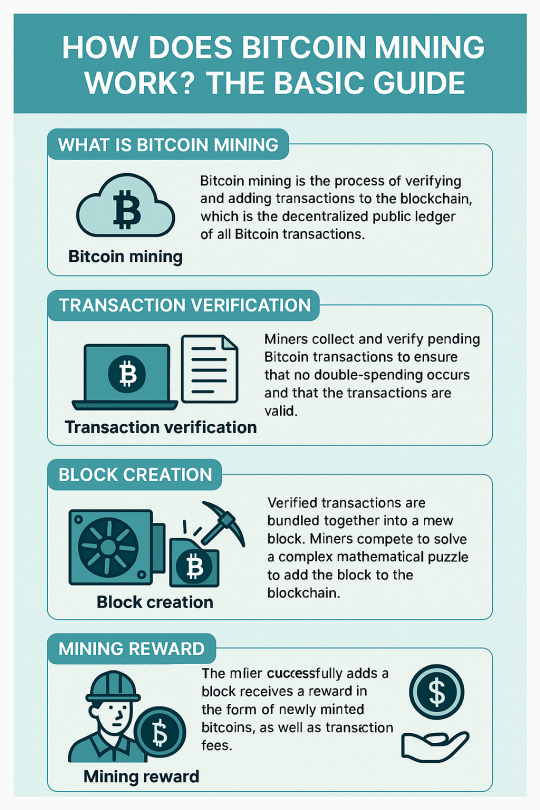

How Does Bitcoin Mining Work? The Basic Guide

Bitcoin mining is a foundational process in the world of cryptocurrency. It plays a critical role in maintaining the integrity of the Bitcoin network, enabling transactions, and introducing new bitcoins into circulation. For anyone looking to understand how Bitcoin functions or even considering mining as a potential pursuit, understanding its underlying mechanisms is essential. This guide breaks down Bitcoin mining in a simple and comprehensive way.

What is Bitcoin Mining?

Bitcoin mining is the process of validating and recording transactions on the Bitcoin blockchain, a decentralized public ledger. Miners use specialized hardware to solve complex mathematical problems. When a problem is solved, the miner adds a new block of transactions to the blockchain and is rewarded with newly minted bitcoins and transaction fees.

Why Is Mining Necessary?

Mining serves several essential functions:

Validation of Transactions: It ensures that every transaction is legitimate and prevents double-spending.

Decentralization: Because mining is done by many users worldwide, it helps keep the Bitcoin network decentralized.

Issuance of New Bitcoins: It introduces new bitcoins into circulation at a controlled and predictable rate.

How Does Bitcoin Mining Work?

The process involves several key steps:

Transaction Verification: When people send bitcoins, those transactions are grouped into a "block." Each block can contain thousands of transactions.

Hashing Function: Miners take the block's header data and run it through the SHA-256 cryptographic hash function (applied twice in Bitcoin), generating a fixed-length 256-bit value known as a hash.

Proof of Work: Miners must find a hash that meets a specific condition: interpreted as a number, it must fall below a network-defined target, which in practice means it starts with a certain number of zeros. This requires changing a small piece of data in the block (called a nonce) repeatedly and rehashing until the condition is met.

Block Addition: When a miner successfully finds a valid hash, the block is broadcast to the network and verified by other nodes. If accepted, it is added to the blockchain.

Reward Distribution: The successful miner receives a reward in bitcoins, known as the block reward, along with transaction fees from the included transactions.
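The hashing and proof-of-work steps above can be sketched in a few lines of Python. This is a toy illustration, not the real Bitcoin header format: the header bytes, the `zero_bits` difficulty knob, and the function names are all assumptions made for the example, and real mining works on an 80-byte header with a much harder target.

```python
import hashlib


def double_sha256(data: bytes) -> bytes:
    """Apply SHA-256 twice, as Bitcoin does for block headers."""
    return hashlib.sha256(hashlib.sha256(data).digest()).digest()


def mine(header: bytes, zero_bits: int, max_nonce: int = 2**32):
    """Try nonces until the hash, read as an integer, is below the target.

    `zero_bits` is a toy difficulty setting: a valid hash must start
    with at least that many zero bits.
    """
    target = 1 << (256 - zero_bits)
    for nonce in range(max_nonce):
        digest = double_sha256(header + nonce.to_bytes(4, "little"))
        if int.from_bytes(digest, "big") < target:
            return nonce, digest.hex()
    return None  # nonce space exhausted without finding a valid hash


# Hypothetical header bytes; 16 zero bits keeps the search fast.
result = mine(b"toy block header", zero_bits=16)
if result is not None:
    nonce, digest_hex = result
    print(f"nonce={nonce} hash={digest_hex}")
```

Raising `zero_bits` by one roughly doubles the expected number of attempts, which is the sense in which the network can make mining harder or easier.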

Equipment Used in Mining

Initially, mining could be done using ordinary computers (CPUs). As difficulty increased, miners switched to:

GPUs (Graphics Processing Units): More efficient than CPUs for parallel processing.

FPGAs (Field-Programmable Gate Arrays): Reprogrammable chips that can be configured specifically for mining tasks.

ASICs (Application-Specific Integrated Circuits): The most powerful and efficient hardware designed specifically for Bitcoin mining.

Mining Pools

Due to the increasing difficulty of mining and competition, most miners join mining pools. A mining pool is a group of miners who combine their computational power to increase their chances of solving a block. Rewards are distributed proportionally based on the amount of computing power each miner contributes.
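A proportional split like the one described above might look as follows in Python. This is a simplified sketch: the miner names and share counts are made up, pool fees are ignored, and real pools use a variety of payout schemes, so treat `split_reward` as a hypothetical helper rather than any pool's actual method.

```python
from typing import Dict


def split_reward(reward_sats: int, shares: Dict[str, int]) -> Dict[str, int]:
    """Divide a block reward (in satoshis) in proportion to submitted shares.

    Integer division is used, so a few satoshis of rounding remainder
    may stay undistributed.
    """
    total_shares = sum(shares.values())
    if total_shares <= 0:
        raise ValueError("at least one share is required")
    return {
        miner: reward_sats * count // total_shares
        for miner, count in shares.items()
    }


# Hypothetical pool: 'alice' contributed three times the work of 'bob'.
payouts = split_reward(312_500_000, {"alice": 3, "bob": 1})
print(payouts)
```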

Bitcoin Mining Difficulty

Mining difficulty is a measure of how hard it is to find a valid hash. This difficulty adjusts every 2016 blocks (approximately every two weeks) to ensure blocks are mined roughly every 10 minutes. If blocks are being mined too quickly, the difficulty increases; if too slowly, it decreases.
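The adjustment rule can be expressed as a simple ratio between the expected and actual time taken for the last 2016 blocks. The sketch below is a simplified Python model: it works on a floating-point difficulty value rather than the compact target encoding used in practice, and the factor-of-four limit on a single adjustment is stated here as an assumption of the model.

```python
BLOCKS_PER_PERIOD = 2016
TARGET_BLOCK_SECONDS = 10 * 60
EXPECTED_SECONDS = BLOCKS_PER_PERIOD * TARGET_BLOCK_SECONDS  # about two weeks


def retarget(old_difficulty: float, actual_seconds: float) -> float:
    """Scale difficulty so the next period should again take about two weeks.

    The measured time is clamped so one adjustment changes difficulty
    by at most a factor of four in either direction (model assumption).
    """
    clamped = min(max(actual_seconds, EXPECTED_SECONDS / 4), EXPECTED_SECONDS * 4)
    return old_difficulty * EXPECTED_SECONDS / clamped


# Blocks arrived twice as fast as intended, so difficulty doubles.
print(retarget(100.0, EXPECTED_SECONDS / 2))
```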

Bitcoin Halving

Approximately every four years, the block reward is halved in an event known as Bitcoin halving. This reduces the number of new bitcoins issued and helps control inflation. Initially, the reward was 50 BTC; since the April 2024 halving, it has been 3.125 BTC per block.
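The halving schedule is easy to compute from the block height. Here is a minimal Python sketch, assuming the protocol's interval of 210,000 blocks between halvings (about four years at ten minutes per block) and working in satoshis to avoid floating-point rounding; the function name is illustrative.

```python
HALVING_INTERVAL = 210_000               # blocks between halvings
INITIAL_SUBSIDY_SATS = 50 * 100_000_000  # 50 BTC expressed in satoshis


def block_subsidy(height: int) -> int:
    """Return the newly minted reward, in satoshis, for a block height."""
    halvings = height // HALVING_INTERVAL
    if halvings >= 64:
        return 0  # the subsidy has been shifted away entirely
    return INITIAL_SUBSIDY_SATS >> halvings


for height in (0, 210_000, 420_000, 630_000, 840_000):
    print(height, block_subsidy(height) / 100_000_000, "BTC")
```

Because each halving is an integer right shift, the subsidy eventually reaches zero, which is why the total supply is capped.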

Environmental Impact

Bitcoin mining consumes a significant amount of electricity, leading to concerns about its environmental impact. Some solutions include:

Using renewable energy sources.

Transitioning to more energy-efficient equipment.

Encouraging innovations in sustainable mining practices.

Is Bitcoin Mining Profitable?

Profitability depends on several factors:

Electricity Costs: Mining is energy-intensive, and lower electricity rates lead to higher profits.

Mining Hardware: More efficient ASICs reduce power consumption and increase output.

Bitcoin Price: Higher prices make mining more lucrative.

Network Difficulty: Increased difficulty can reduce the chances of earning rewards.
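These factors can be combined into a rough back-of-the-envelope estimate. The Python sketch below is only an illustration: every input value is a made-up placeholder, transaction fees, pool fees, and hardware costs are ignored, and the figure of 144 blocks per day simply follows from the 10-minute block target.

```python
def daily_profit_usd(
    miner_ths: float,
    network_ths: float,
    block_reward_btc: float,
    btc_price_usd: float,
    power_watts: float,
    usd_per_kwh: float,
    blocks_per_day: int = 144,
) -> float:
    """Expected daily revenue minus electricity cost, in USD."""
    share_of_network = miner_ths / network_ths
    revenue = share_of_network * blocks_per_day * block_reward_btc * btc_price_usd
    energy_cost = (power_watts / 1000) * 24 * usd_per_kwh
    return revenue - energy_cost


# Placeholder inputs for illustration only, not real market data.
profit = daily_profit_usd(
    miner_ths=1.0,
    network_ths=1000.0,
    block_reward_btc=3.125,
    btc_price_usd=100_000.0,
    power_watts=1000.0,
    usd_per_kwh=0.10,
)
print(f"Estimated daily profit: ${profit:,.2f}")
```

The estimate is an expected value; a solo miner's actual income is far lumpier, which is one reason miners join pools.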

Risks Involved

Volatility: Bitcoin's price is highly volatile, affecting profitability.

Regulatory Changes: Governments may impose restrictions or bans on mining.

Hardware Obsolescence: Rapid advancements in technology can render current hardware obsolete.

Future of Bitcoin Mining

As Bitcoin continues to grow, mining will evolve:

Greater use of green energy.

Advancements in chip technology.

Movement toward more decentralized mining models.

Community focus on reducing carbon footprint and improving sustainability.

Bitcoin mining is a complex but fascinating process. It not only supports the Bitcoin network but also offers an opportunity for users to earn cryptocurrency. However, it comes with significant costs, risks, and environmental implications. Whether you're looking to mine or simply understand how it works, grasping the fundamentals of Bitcoin mining helps you appreciate the broader ecosystem of blockchain and digital finance.

Data center processors are the foundational computing engines that power today’s digital infrastructure, enabling everything from cloud services and big data analytics to artificial intelligence and edge computing. Designed to handle vast amounts of data with high speed and efficiency, these processors have evolved far beyond traditional CPUs to include specialized accelerators like GPUs, FPGAs, and custom AI chips. As data centers face increasing demands for performance, scalability, and energy efficiency, the development of advanced processors plays a critical role in shaping the future of computing and digital transformation across industries.

Understanding the DMA Card: Applications, Benefits, and Selection Guide

What is a DMA Card? An Overview of Direct Memory Access

Defining the DMA Card and Its Purpose

A Direct Memory Access (DMA) card is a specialized hardware component that allows certain peripherals to access system memory independently of the central processing unit (CPU). This capability enables high-speed data transfer without placing additional load on the CPU. Traditional data transfer methods rely on the CPU to oversee every byte of data transmission, which can create bottlenecks, especially in high-demand applications such as gaming and data analysis. DMA cards streamline these processes, providing a more efficient way to move data between devices and memory.

The DMA card connects to a computer’s motherboard through slots like PCIe, allowing it to interface with various peripherals, including graphics cards, storage devices, and more. By managing data transfers themselves, DMA cards can significantly enhance system performance and responsiveness.

Key Technologies Behind DMA Implementation

The core technology behind DMA cards relies on a separate DMA controller, which acts as an intermediary between the memory and peripheral devices. This controller can execute transactions such as reading or writing data directly without CPU intervention. For users, this results in improved processing speeds and reduced latencies. The process typically unfolds in several steps:

Request: The peripheral device sends a request to the DMA controller to transfer data.

Grant: After processing, the controller grants the request and manages the data transfer directly to or from the memory.

Completion: The DMA controller signals the peripheral, and typically the CPU via an interrupt, that the transfer has been completed.

This method of operation frees up the CPU to perform other tasks, allowing for a multi-tasking environment that does not compromise on speed and performance.
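The request, grant, and completion steps can be modeled with a small Python simulation. This is purely a conceptual sketch: `ToyDMAController` and its methods are invented for illustration, system memory is represented by a `bytearray`, and real controllers are programmed through hardware registers and descriptors rather than method calls.

```python
class ToyDMAController:
    """Toy model of the request / grant / completion handshake."""

    def __init__(self, memory: bytearray):
        self.memory = memory
        self.log = []  # records the steps in the order they occur

    def request(self, source: bytes, dest_addr: int) -> None:
        # Request: a peripheral asks for its data to be written to memory.
        self.log.append("request")
        self._grant(source, dest_addr)

    def _grant(self, source: bytes, dest_addr: int) -> None:
        # Grant: the controller performs the copy itself, so the code
        # standing in for the CPU never touches the individual bytes.
        end = dest_addr + len(source)
        if dest_addr < 0 or end > len(self.memory):
            raise ValueError("transfer does not fit in memory")
        self.log.append("grant")
        self.memory[dest_addr:end] = source
        self._complete()

    def _complete(self) -> None:
        # Completion: signal that the transfer has finished.
        self.log.append("complete")


memory = bytearray(16)
dma = ToyDMAController(memory)
dma.request(b"\x01\x02\x03\x04", dest_addr=4)
print(dma.log, memory.hex())
```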

Historical Context and Evolution of DMA Cards

The concept of direct memory access has roots dating back to the early days of computing. Initially designed for mainframe systems, the technology was recognized for its efficiency in offloading tasks from the CPU. As computers evolved, so did the implementation of DMA. The introduction of PCIe technology in the early 2000s allowed for faster data communication, further enhancing the capabilities of DMA cards.

Over time, advancements in semiconductor technology led to the development of smaller, more powerful DMA cards, such as those equipped with FPGA (Field Programmable Gate Array) functionalities. These modern cards offer customizable performance settings, making them ideal for gaming, video rendering, and complex data processing tasks.

Applications of DMA Cards in Modern Computing

Use Cases in Gaming: Enhancing Performance with DMA

In the gaming industry, performance is paramount. Game developers and players alike benefit from the high-speed data transfer provided by DMA cards. For example, DMA cards enable faster loading times by allowing game data to be streamed directly into memory without CPU intervention. This can lead to significant improvements in frame rates and overall gameplay experience.

DMA technology has also become a concern for game anti-cheat systems. Because DMA cards allow read and write access to memory without CPU involvement, they can operate stealthily, making them difficult for traditional anti-cheat software to detect.

Implementing DMA Cards for Data Transfer Efficiency

DMA cards are not limited to gaming; they play a vital role in data-centric applications across industries. For instance, in video production, the use of DMA significantly accelerates the transfer of large video files from capture devices to editing software. By streamlining this process, creatives can focus more on their craft and less on technical delays.

Additionally, in machine learning applications, DMA cards can facilitate rapid data retrieval, improving training times for complex models. As datasets grow in size and complexity, the efficiency offered by DMA becomes increasingly essential.

Industry-Specific Applications: From Gaming to Industrial Automation

Beyond gaming and data processing, DMA cards find utility in various sectors such as industrial automation, telecommunications, and scientific research. In industrial settings, they are used to manage data flow from sensors to processing units without overwhelming the CPU. This efficient data management enables timely responses in systems critical for safety and compliance.

In the telecommunications sector, DMA technology supports the demanding data transfer requirements of modern networks. Here, DMA cards improve throughput and reduce latency, allowing service providers to deliver high-quality streaming services and manage large volumes of concurrent users.

Benefits of Using DMA Cards

Increased Data Transfer Speeds

The primary advantage of using DMA cards is the significant increase in data transfer speeds. By bypassing the CPU for direct memory access, data can be moved more quickly and efficiently. This is especially valuable in applications requiring high bandwidth, such as video streaming or data-heavy software applications.

Reducing CPU Load for Improved Performance

With DMA cards handling data transfers independently, the CPU is free to execute other processes. This reduction in CPU load can lead to enhanced system performance, especially in multitasking environments where many applications run simultaneously. Users can experience smoother performance as the system becomes more responsive under load.

Enhanced Reliability and Support for High-Volume Tasks

DMA cards are designed to handle high data volumes reliably. In industries where data integrity and loss prevention are paramount, such as finance and healthcare, the use of DMA cards ensures that transfers occur without interruption or error. Their built-in redundancy and error-checking capabilities further enhance their reliability in mission-critical applications.

Choosing the Right DMA Card for Your Needs

Key Features to Consider: Speed, Compatibility, and Support

When selecting a DMA card, several factors come into play. First and foremost, assess the speed ratings of the card, which indicate how rapidly data can be processed. Next, compatibility with existing hardware is crucial; ensure that the card matches an interface your system provides (PCIe slot, USB port, etc.) and is compatible with your operating system.

Additionally, factor in manufacturer support for firmware updates and customization options. A card with robust support can provide long-term value as technologies evolve.

Comparison of Popular DMA Card Models

The market for DMA cards is populated with various models, each catering to specific needs. Popular choices include the FPGA-based cards known for their flexibility and performance. For instance, the 75T and 35T models offer broad compatibility and high throughput rates, making them ideal for both gamers and data scientists alike. Comparisons can involve assessing factors such as speed, supported features, and user reviews.

Understanding Firmware and Customization Options

Many modern DMA cards come with customizable firmware settings, offering users the ability to tweak performance profiles according to their requirements. Understanding how to leverage these options can lead to improved performance and reliability. For users with specific needs, such as gameplay optimization or intensive data processing, detailed knowledge of firmware can make a significant difference.

Best Practices for Using and Maintaining DMA Cards

Installation Tips and Common Pitfalls

Proper installation is critical to harnessing the full potential of DMA cards. Always refer to the manufacturer’s guidelines. Common pitfalls include improper seating of the card in its slot and incompatible peripheral setups. Ensuring a secure connection minimizes issues and maximizes performance.

Regular Maintenance for Optimal Performance

Like any hardware, DMA cards benefit from regular maintenance. Keep software and firmware updated to capitalize on performance enhancements and security patches. Regularly monitoring temperatures and ensuring good air circulation can prevent overheating and prolong the lifespan of the card.

Upgrading: When is it Time to Replace Your DMA Card?

Signs that it may be time to upgrade your DMA card include consistently low performance metrics, compatibility issues with new hardware, or lack of manufacturer support for updates. Evaluating your current needs against the capabilities of your card can help inform your decision to upgrade.

CGMiner quidminer.com

CGMiner quidminer.com is a powerful tool for miners looking to maximize their profits in the world of cryptocurrency mining. With the increasing popularity of virtual currencies, more and more individuals are turning to mining as a way to earn money. However, not all mining software is created equal, and that's where CGMiner comes into play.

CGMiner is known for its efficiency and reliability, making it a common choice for both novice and experienced miners. It supports ASIC and FPGA hardware (GPU mining support was dropped in later releases), so users should check that their equipment is supported before building a setup around it. With suitable tuning, miners may achieve higher hash rates and lower power consumption, which can improve profitability.

To further enhance your mining experience, consider visiting https://paladinmining.com. This platform offers valuable resources and tools that can help you fine-tune your mining operations. Whether you're looking for the latest mining news, tutorials, or community support, Paladin Mining has got you covered.

Moreover, integrating CGMiner with platforms like quidminer.com can provide additional benefits. Quidminer.com offers a user-friendly interface and comprehensive analytics, allowing you to monitor your mining activities closely. This combination ensures that you stay on top of your game and make informed decisions to boost your earnings.

In conclusion, CGMiner quidminer.com is an excellent choice for anyone serious about virtual coin mining. By leveraging the power of CGMiner and the resources provided by https://paladinmining.com, you can unlock your full mining potential and start earning more efficiently. So, don't wait any longer—embark on your mining journey today and reap the rewards of the crypto world!

The Growing Demand for VLSI Professionals

In today’s digitally driven world, the semiconductor industry stands as a core pillar supporting innovation in electronics, telecommunications, and computing. One of the most crucial technologies in this sector is VLSI (Very Large Scale Integration), which refers to the process of creating integrated circuits by combining very large numbers of transistors, today millions or even billions, into a single chip. As the demand for smarter, faster, and more energy-efficient electronic devices grows, so does the need for skilled VLSI engineers. Hyderabad, known as a major IT and tech hub in India, is becoming a popular destination for those looking to build a career in this field. This article explores how the right VLSI training can open doors to a rewarding future, and why Hyderabad stands out as a top choice for aspiring professionals.

The Role of Structured VLSI Training in Career Growth

Quality education is essential for anyone aiming to enter the semiconductor industry. VLSI design and verification are complex domains that require in-depth knowledge, hands-on practice, and understanding of industry-grade tools and protocols. A structured training program provides the technical foundation along with real-world exposure through live projects and mentorship. Hyderabad offers a competitive edge in this regard due to its established ecosystem of electronics companies and technical institutions. Choosing the best VLSI training institute in Hyderabad with placement can significantly enhance employability, giving students a clear pathway to jobs in top MNCs and semiconductor firms. Institutes that provide placement assistance not only offer academic learning but also act as a bridge to industry entry.

Why Hyderabad is Emerging as a VLSI Education Hub

Hyderabad’s technological infrastructure, along with its steady demand for electronics and chip design professionals, has made it a leading destination for VLSI education. The city hosts several design centers and research units that regularly seek out skilled VLSI engineers. Apart from this, the availability of experienced faculty, advanced labs, and industry partnerships has made VLSI training programs in Hyderabad highly effective. Whether someone is a fresh engineering graduate or a working professional looking to upskill, Hyderabad provides options tailored to different learning needs.

Key Components of a Comprehensive VLSI Curriculum

An effective VLSI course generally includes modules on digital design, analog design, RTL coding, verification techniques, FPGA implementation, and industry tools like Cadence and Synopsys. Besides theoretical knowledge, practical training sessions ensure learners become job-ready. Soft skill development, resume preparation, and mock interviews are also integral parts of top-tier VLSI training programs. These elements make a noticeable difference when candidates face actual job interviews or begin work on real-time projects.

The Flexibility of Online Learning in Modern Education

With the rise of digital platforms, online education has transformed the learning experience across all technical fields. Online VLSI training in Hyderabad has made high-quality education accessible to a broader audience, including students from remote locations and working professionals with tight schedules. These programs allow learners to attend live classes, access recorded sessions, interact with instructors, and complete assignments at their convenience. The flexibility of online training, combined with the technical excellence of Hyderabad-based institutes, offers a powerful solution for anyone eager to enter the semiconductor field without compromising current commitments.

Conclusion: Building a Future in VLSI Technology

In summary, VLSI is a promising domain for those passionate about technology and innovation. With a growing global demand for skilled semiconductor professionals, getting the right training is more important than ever. Hyderabad offers an ideal setting with top-tier institutes, industry exposure, and placement support. Among the many options available, Takshila Institute of VLSI Technologies stands out for delivering expert-led training programs designed to meet industry standards. By enrolling in the best VLSI training institute in Hyderabad with placement or opting for online VLSI training in Hyderabad, individuals can take confident steps toward a successful and future-ready career in chip design and electronics.

Designing the Future: How Embedded Systems Enhance Medical Device Reliability

Technology is making a huge impact in healthcare, and at the core of many of those breakthroughs is smart engineering. Voler Systems has become a trusted name in developing medical technologies that help people live healthier, safer lives. Whether it's designing advanced medical devices, building embedded systems, or creating custom FPGA solutions, Voler Systems is helping bring the future of healthcare to life.

Turning Ideas Into Life-Saving Medical Devices

Bringing a medical device to market is about more than just having a great idea: it requires deep technical knowledge, strict regulatory compliance, and careful planning. Voler Systems specializes in medical devices development, working closely with clients from early design to final production. Their team understands the high stakes involved and focuses on building devices that are accurate, reliable, and user-friendly. Whether it’s for remote patient monitoring or in-clinic diagnostic equipment, their experience helps turn concepts into real-world healthcare solutions. To learn more, visit the Medical Devices Development page.

Reliable Embedded Systems That Power Healthcare Devices

Behind most smart medical devices is a powerful system running in the background. These systems are known as embedded systems, and getting them right is critical. Voler Systems has led numerous projects in embedded systems, creating customized designs that power everything from wearable monitors to imaging machines. The focus is always on low power usage, fast response time, and reliability in demanding healthcare settings. Find out how embedded tech powers medical innovation at Projects Embedded Systems.

Smarter Hardware with FPGA Development

In situations where performance and speed are non-negotiable, FPGAs (Field Programmable Gate Arrays) provide a unique advantage. These reprogrammable chips allow for fast, custom hardware-level functionality, ideal for critical healthcare applications. Voler Systems offers expert FPGA development services, helping healthcare companies create high-performance systems for imaging, diagnostics, and real-time processing. Explore the possibilities at FPGA Development.

A Trusted Technology Partner in Healthcare

Developing smart medical technologies requires more than just technical skills: it requires a deep understanding of both engineering and healthcare needs. That’s where Voler Systems stands out. Whether it’s creating intelligent devices, building efficient embedded platforms, or accelerating performance with FPGA solutions, their work helps healthcare innovators bring safe and effective products to life.

If you're looking to bring a new medical product to market—or improve an existing one—Voler Systems offers the engineering expertise and industry knowledge to help make it happen.

#wearable medical device#electronic design services#medical device design#embedded systems design#projects embedded systems#medical device development company

Autonomous Vehicle Hardware

Introduction

Self-driving automobiles, often known as autonomous vehicles (AVs), are among the most revolutionary developments in contemporary mobility. They promise to revolutionize transportation by providing benefits in sustainability, accessibility, efficiency, and safety. Advanced software algorithms and a highly complex array of hardware components work together to provide a seamless and intelligent driving experience. The Autonomous Vehicle Hardware provides the physical framework that permits sensing, processing, and actuation, while the software makes high-level choices.

This article examines the main hardware elements of autonomous vehicles, along with their functions, advantages, drawbacks, and wider implications for the future of mobility.

Key Hardware Elements of Autonomous Vehicles

1. Sensors: Autonomous Vehicles’ Eyes and Ears

The main means by which AVs sense their surroundings are sensors. To create a 360-degree situational map in real time, they collect information on objects, traffic signs, road markings, and dynamic road users. Typical sensors include:

Light Detection and Ranging, or LiDAR

LiDAR creates intricate 3D maps of the environment using laser pulses. It provides precise object detection and great spatial resolution, which are essential for recognizing cars, pedestrians, and road borders.

Radar (Radio Detection and Ranging)

Radar, in contrast to LiDAR, measures object speed and distance using radio waves, and it works consistently in inclement weather, such as rain, fog, and snow.

Cameras

Visual information from high-definition cameras is used for pedestrian identification, traffic sign recognition, lane detection, and object categorization. They enable the AV to understand intricate situations when paired with computer vision.

Ultrasonic Sensors

These short-range sensors are frequently utilized for low-speed movements and parking assistance since they can identify surrounding obstructions.

Global Positioning System, or GPS

When combined with high-definition maps and inertial measurement units (IMUs), GPS’s geolocation and time data allow for accurate localization and route planning.

2. Computing Hardware: Automation’s Brain

High-performance computing is necessary for autonomous cars to process enormous amounts of real-time sensor data. Among the computer hardware are:

CPU, or central processing unit

The CPU carries out system-level coordination, general-purpose computations, and sensor data interpretation.

Graphics Processing Unit (GPU)

Deep learning activities like object tracking and image identification require GPUs, which are designed for parallel processing.

FPGAs, or field-programmable gate arrays

FPGAs provide low-power customizable logic for data fusion, real-time signal processing, and bespoke hardware acceleration.

ASICs, or application-specific integrated circuits

Large-scale autonomous fleets benefit from increased efficiency and speed thanks to ASICs, which are specially made processors tailored for particular AI tasks.

Units for Sensor Fusion

Better object detection, path planning, and control decisions are made possible by these devices, which combine input from several sensors into a cohesive environmental model.
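As a rough illustration of combining input from several sensors, the sketch below fuses two distance estimates with an inverse-variance weighted average, so the more certain sensor counts for more. This is one simple fusion technique, not the method any particular AV stack uses. The `RangeReading` type, the `fuse` function, and the LiDAR and radar numbers are all hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class RangeReading:
    """A distance estimate in metres with its variance (hypothetical type)."""
    distance_m: float
    variance: float


def fuse(readings: Sequence[RangeReading]) -> RangeReading:
    """Combine independent readings with an inverse-variance weighted average."""
    if not readings:
        raise ValueError("need at least one reading")
    # A reading with a smaller variance gets a proportionally larger weight.
    weights = [1.0 / r.variance for r in readings]
    total = sum(weights)
    distance = sum(w * r.distance_m for w, r in zip(weights, readings)) / total
    # The fused variance is smaller than that of any single input.
    return RangeReading(distance, 1.0 / total)


# Illustrative values only: a precise LiDAR reading and a noisier radar reading.
lidar = RangeReading(distance_m=20.0, variance=0.01)
radar = RangeReading(distance_m=21.0, variance=0.04)
fused = fuse([lidar, radar])
print(f"fused distance: {fused.distance_m:.2f} m, variance: {fused.variance:.4f}")
```

The fused estimate sits closer to the LiDAR value because its variance is lower. Production systems typically use richer models, such as Kalman filters, that also track motion over time.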

3. Control Systems: Regulating Vehicle Motion

By transforming processed data into actual movements, control systems enable the car to steer, brake, accelerate, and shift gears as needed.

Actuators

The mechanical operations necessary for driving are carried out by actuators. They convert commands into motion responses after receiving them from the control unit.

Drive-by-Wire Systems

By substituting electronic control systems for mechanical linkages, drive-by-wire enhances accuracy and responsiveness while facilitating the seamless integration of autonomous control.

Units for Electronic Brake and Stability Control

Even when traversing intricate metropolitan settings, these guarantee that brakes and vehicle stability are preserved in challenging driving situations.

4. Communication Systems: Facilitating Instantaneous Communication

AVs can interface to external systems using communication devices to improve safety and coordination.

V2X, or vehicle-to-everything

V2X includes communication between pedestrians (V2P), infrastructure (V2I), and vehicles (V2V). Predictive navigation, hazard alerts, and cooperative traffic management are made possible by this real-time information sharing.

Dedicated Short-Range Communications (DSRC) and 5G

These technologies provide high-bandwidth, low-latency communication that is necessary to enable remote system updates and high-speed data transmission.

5. Safety and Redundancy Systems: Guaranteeing Fail-Safe Function

Safety is of the utmost importance in autonomous driving; therefore, systems for redundancy and backup are specifically designed to reduce failures.

Sensors and computation modules that are redundant

If one sensor or processor fails, a backup takes over immediately to keep the vehicle operating safely.
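The failover idea can be sketched in a few lines: try each redundant source in priority order and use the first one that returns a valid value. This is a minimal sketch. The function names and the simulated fault are hypothetical, and a real vehicle would add health monitoring, timeouts, and cross-checking between sources.

```python
from typing import Callable, Optional, Sequence


def read_with_failover(sources: Sequence[Callable[[], Optional[float]]]) -> float:
    """Return the first valid reading, trying sources in priority order."""
    for read in sources:
        try:
            value = read()
        except Exception:
            # Treat any error from a source as a fault and move to the next one.
            continue
        if value is not None:
            return value
    raise RuntimeError("all redundant sources failed")


def primary_sensor() -> Optional[float]:
    """Hypothetical primary sensor that is currently offline."""
    raise IOError("primary sensor offline")


def backup_sensor() -> Optional[float]:
    """Hypothetical backup sensor returning a speed in m/s."""
    return 12.5


speed = read_with_failover([primary_sensor, backup_sensor])
print(f"speed from first healthy source: {speed} m/s")
```

If every source fails, the function raises an error instead of returning a stale or made-up value, which lets the caller trigger a safe stop.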

Systems for Power Backup and Emergency Braking

In the event of a major malfunction, these mechanisms keep essential systems running and ensure that the car can stop safely.

Systems of Isolation

Isolating electrical and communication systems helps guard against hardware malfunctions and cyber intrusions.

6. Improving User Experience through Human-Machine Interface (HMI)

Although self-driving cars operate autonomously, human interaction remains crucial. Therefore, HMI systems play a vital role in making it easier for users to interact with and understand the AV.

Voice assistants, visual displays, and touchscreens

These interfaces provide status updates, route information, and a manual override when necessary.

Systems for Monitoring Drivers (DMS)

In semi-autonomous settings, DMS helps ensure that human drivers stay attentive and ready to take control.

Autonomous Vehicle Hardware Benefits

1. Increased Safety on the Road

Since the majority of road accidents are caused by human error, such as fatigue and distraction, advanced hardware helps to reduce these risks. Rapid reaction times and real-time 360° awareness further improve threat detection and avoidance.

2. Congestion Reduction and Traffic Efficiency

AVs can select the best route choices, cut down on idle time, and alleviate traffic jams by interacting with other cars and infrastructure, especially in crowded urban areas.

3. Reduced Emissions and Enhanced Fuel Economy

Reduced fuel usage and greenhouse gas emissions are two benefits of hardware-driven precision in driving patterns, such as smoother braking and acceleration.

4. Improved Availability

Autonomous vehicles empower people with impairments, the elderly, and those without driving experience to live more independently. Additionally, autonomous ride-hailing services have expanded mobility options for underprivileged neighbourhoods.

5. Decrease in Traffic Deaths

The integration of predictive AI, collision avoidance technology, and redundant safety measures can considerably reduce road deaths.

6. Intelligent Parking and Use of Urban Space

There is less need for large parking facilities because autonomous cars can self-park in constrained areas and drop off passengers at entrances.

7. Economical Models of Transportation

By eliminating the need for private vehicle ownership, fleet-based autonomous services not only reduce transportation costs but also lessen environmental impact.

8. Improved Systems for Traffic Management

City infrastructure can use real-time data from AVs to enhance emergency response systems, manage traffic flows, and optimize signal timings.

Challenges and Limitations

1. Expensive upfront expenses

LiDAR units, high-performance computers, and redundancy systems add considerably to vehicle prices, which limits early-stage affordability.

2. Complexity of the System

Integrating multiple software and hardware layers complicates the overall design, making testing, debugging, and long-term maintenance more challenging.

3. Dependability of Hardware

Despite the presence of redundant systems, hardware failures, environmental deterioration, and aging components still pose significant risks to safety and durability.

4. Risks Associated with Cybersecurity

To protect user safety and data privacy, hardware interfaces must be protected against hacking, tampering, and unwanted data access.

5. Ethical Decision-Making

Hard-coded ethical rules raise difficult moral dilemmas that the system must resolve, such as choosing between pedestrian and passenger safety.

6. Risks of Job Displacement

Widespread AV adoption could significantly affect jobs in the driving, logistics, and delivery industries and may require workforce reskilling.

7. Incompatibility of Infrastructure

Currently, urban infrastructure and roads do not adequately accommodate AVs; therefore, a significant investment in smart infrastructure is necessary to support V2X communication and ensure precise navigation.

8. Privacy Issues with Data

Since AVs gather enormous volumes of environmental and personal data, the absence of strict data protection measures could lead to a decline in public confidence.

Conclusion

Just as important as the software algorithms that drive autonomous cars is the Autonomous Vehicle Hardware that supports them. Every hardware layer, from sensing and computation to actuation and communication, is essential to maintaining performance, safety, and dependability. Despite tremendous advancements, governments, tech companies, and automakers still need to work together to address issues like high costs, cybersecurity, and infrastructure preparedness.

Strong Autonomous Vehicle Hardware will be essential to developing safer, greener, and more equitable transportation networks as the future of mobility develops.

For more information on Dorleco’s Autonomous Vehicle Hardware solutions and staffing solutions, please visit our website or contact us by email at [email protected]


Intel VTune Profiler For Data Parallel Python Applications

Intel VTune Profiler tutorial

This brief tutorial will show you how to use Intel VTune Profiler to profile the performance of a Python application using the NumPy and Numba example applications.

Analysing Performance in Applications and Systems

For HPC, cloud, IoT, media, storage, and other applications, Intel VTune Profiler optimises system performance, application performance, and system configuration.

Optimise the performance of the entire application, not just the accelerated part, using the CPU, GPU, and FPGA.

Multilingual: Profile SYCL, C, C++, C#, Fortran, OpenCL code, Python, Google Go, Java, .NET, Assembly, or any combination of languages.

Application or System: Obtain detailed results mapped to source code or coarse-grained system data for a longer time period.

Power: Maximise efficiency without resorting to thermal or power-related throttling.

VTune platform profiler

It has the following features.

Optimisation of Algorithms

Find your code’s “hot spots,” or the sections that take the longest.

Use Flame Graph to see hot code paths and the amount of time spent in each function and its callees.

Bottlenecks in Microarchitecture and Memory

Use microarchitecture exploration analysis to pinpoint the major hardware problems affecting your application’s performance.

Identify memory-access-related concerns, such as cache misses and high-bandwidth problems.

Accelerators and XPUs

Improve data transfers and GPU offload schema for SYCL, OpenCL, Microsoft DirectX, or OpenMP offload code. Determine which GPU kernels take the longest to optimise further.

Examine GPU-bound programs for inefficient kernel algorithms or microarchitectural restrictions that may be causing performance problems.

Examine FPGA utilisation and the interactions between CPU and FPGA.

Technical summary: Determine the most time-consuming operations that are executing on the neural processing unit (NPU) and learn how much data is exchanged between the NPU and DDR memory.

Parallelism

Check the threading efficiency of the code. Determine which threading problems are affecting performance.

Examine compute-intensive or throughput HPC programs to determine how well they utilise memory, vectorisation, and the CPU.

Interface and Platform

Find the points in I/O-intensive applications where performance is stalled. Examine the hardware’s ability to handle I/O traffic produced by integrated accelerators or external PCIe devices.

Use System Overview to get a detailed view of short-running workloads.

Multiple Nodes

Characterise the performance of large-scale Message Passing Interface (MPI) and OpenMP workloads.

Determine any scalability problems and receive suggestions for a thorough investigation.

Intel VTune Profiler

To improve Python performance while using Intel systems, install and utilise the Intel Distribution for Python and Data Parallel Extensions for Python with your applications.

Set up VTune Profiler for use with Python.

To find performance issues and areas for improvement, profile three distinct Python application implementations. The pairwise distance calculation algorithm commonly used in machine learning and data analytics will be demonstrated in this article using the NumPy example.
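For orientation, a pairwise distance calculation in plain NumPy might look like the sketch below. It is not the code from the Intel example: the function name, the array shape, and the random input are assumptions made for illustration. It computes Euclidean distances between all rows using the identity ||a - b||² = ||a||² + ||b||² - 2 a·b.

```python
import numpy as np


def pairwise_distance(points: np.ndarray) -> np.ndarray:
    """Return the (n, n) matrix of Euclidean distances between rows of points."""
    # Squared norm of every row, shape (n,).
    sq_norms = np.sum(points ** 2, axis=1)
    # Broadcasting builds ||a||^2 + ||b||^2 - 2 a.b for every pair of rows.
    sq_dist = sq_norms[:, None] + sq_norms[None, :] - 2.0 * (points @ points.T)
    # Rounding can leave tiny negative values; clamp them before the square root.
    np.maximum(sq_dist, 0.0, out=sq_dist)
    return np.sqrt(sq_dist)


# Illustrative input: 5 random points in 3 dimensions.
rng = np.random.default_rng(0)
points = rng.random((5, 3))
distances = pairwise_distance(points)
print(distances.shape)
```

The matrix product dominates the run time for large inputs, which makes a function like this a natural candidate to inspect in a hotspot profile.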

The following packages are used by the three distinct implementations.

Intel-optimised NumPy

Data Parallel Extension for NumPy

Data Parallel Extension for Numba on GPU

NumPy and Data Parallel Extension for Python

By providing optimised heterogeneous computing, Intel Distribution for Python and Intel Data Parallel Extension for Python offer a straightforward way to develop high-performance machine learning (ML) and scientific applications.

The Intel Distribution for Python adds:

Scalability across laptops, PCs, and powerful servers, using every available CPU core.

Assistance with the most recent Intel CPU instruction sets.

Accelerating core numerical and machine learning packages with libraries such as the Intel oneAPI Math Kernel Library (oneMKL) and Intel oneAPI Data Analytics Library (oneDAL) allows for near-native performance.

Productivity tools for compiling Python code into optimised instructions.

Important Python bindings to help your Python project integrate Intel native tools more easily.

Three core packages make up the Data Parallel Extensions for Python:

Data Parallel Extension for NumPy (dpnp)

Data Parallel Extension for Numba (numba_dpex)

Data Parallel Control library (dpctl), which provides device selection, data allocation on devices, tensor data structure support, and support for user-defined data parallel extensions for Python.