
The Biggest Problem With Obama Runtz Strain Info, And How You Can Fix It

The Main Principles Of Marijuana Obama Runtz Review


Was it just the viral video that made the Obama Runtz strain popular in 2020? With everyone rapping about this bud or selling it at high prices, you may be wondering what all the hype is about. Here is your guide to the hugely popular strain that had everyone paying $100 for a fraction of a bag.

Yung Pound even went on to release a track called "Obomba Runtz." The streets loved the strain, its elusive availability, and its ties to viral meme culture. Smoking accessories, T-shirts, hats, chains, and all kinds of merch were printed with Obama Runtz. It didn't hurt that the Runtz strain itself was the weed of 2020 for many stoners.

The hype does have some reason behind it: this comforting, hazy strain is one to relax with and calm your nerves. Along with its sweet aroma, its equally sweet flavors resemble vanilla cake and plenty of fruit. Some stoners report a berry-like taste, and others add that it has some spiciness to it as well.

Little-Known Incorrect Statements About Obama Runtz Strain Reviews

Much like being amid the crowds of people who want a piece of the flower, you can feel increasingly spacey with each puff. In an instant, it can make your head buzz and chill you out. The euphoria comes over you in waves as it lifts your mood and carries you into the high.

There is very little information available on how to grow it. Growers might say that, given its profile as a 70% indica-leaning strain, it is probably hardy. It may easily resist the mites and diseases that some cannabis plants suffer from. With a flowering time of around 49 to 63 days, the yield should be worth the effort.

Much of the hype around the strain and the viral video may simply come from its name and the status it carries. Still, the aroma, flavors, and effects that come with it are legitimate, if perhaps overrated. If you're a casual smoker, you might not even understand why it is so revered.

Obama Runtz Strain Review & Information Can Be Fun For Everyone

Obama Runtz grows into medium-sized plants. You can see the buds above: they are covered in trichomes, the frosty white specks that contain the wealth of THC and other cannabinoids. Together with terpenes, these produce an entourage effect that gets you higher and gives better pain relief than any single cannabinoid or terpene on its own.

But it is a weed, so don't be intimidated. If you have seeds, remember that weeds want to grow; they want to live and experience life, so it's not all on you. Indoors, you can try setting up a grow tent. You can buy one either in a store or online.

Grow tents have a reflective coating on the inside to concentrate light on your plants. You will need ventilation from a fan, intake, and exhaust system to keep fresh air and carbon dioxide moving in and out. Remember, your plants need to breathe the stuff that comes out of your lungs.

10 Easy Facts About Obama Runtz Marijuana Strain Information & Reviews Described

Now, these are pot plants, and weeds will grow whether you want them to or not; the goal here is to bring out the best qualities in your weed. You need some high-intensity discharge (HID) lamps to provide the light your plants will be feeding on.

Germinating seeds is so easy that people even do it behind bars. All you need is two plates and two paper napkins. Take one plate and lay a damp napkin on it. Put your seeds on the damp napkin. They need to absorb some of that water to initiate the genetic program packed inside.

Then cover them with the other plate, leaving room in between for airflow. Your seeds will sprout a small leaf and a tiny root in 3 to 10 days. Some will not sprout and should be thrown away. Some plants will be males, and you only want females, because the males will pollinate the females and the buds will be smaller.

Buy Obama Runtz Online for Dummies

So, if you want seeds, keep the males; otherwise, move the males elsewhere or destroy them. During the vegetative stage, your seedlings will grow in the soil for about three weeks, sprouting some leaves and eventually a typical, recognizable cannabis leaf. During the flowering stage, you will be able to tell which plants are male and which are female, and you may want to remove the males to get bigger buds.

Flowering will last 8 to 9 weeks, during which your big buds will form. When they are ready to harvest, cut them in bundles and hang them to dry. Once dried, you can store them in tinted mason jars. You should get about 18 oz per square meter of space, or 18 oz per plant.

THC levels are not high, while terpene levels are strong. This makes it a good option for newcomers using it for chronic pain, anxiety, or depression. It may help relieve back pain without being so strong that it interferes with your ability to function. Do not operate heavy machinery while under the influence.

What Does Buy Obama Runtz Online Mean?

It starts with a pleasant, cerebral rush. It is something you can smoke and still work on projects. It is a good weed for creative pursuits and may help you get past creative blocks. It is also a good weed to share with friends, experienced and inexperienced alike. It is mild-mannered, like the president it is named for.

Planted with love and grown with compassion, Treehouse Delights brings the Emerald Triangle to your door.

The flavors of Obama Runtz are sweet and spicy. The strain has a cherry taste on the inhale and produces an herbal smoke on the exhale. It has a cherry and floral aroma with berry-like notes. You can infer some information about how to grow Obama Runtz from how its parent strains are grown.

The Facts About Obama Runtz Strain Review: Indica-leaning Hybrid Revealed

The aroma is basically the same, though with a sharp, bright pineapple note that becomes slightly stronger as the sticky little nugs are broken apart and smoked. You can get Obama Runtz weed strains here; they are top quality and lab tested to meet international standards.

With our research and technology in cannabis products, we have cultivated top-quality strains that are safe for you. You can easily buy Obama Runtz weed here in one go. We also have several deals and offers. Check it out.

Unfortunately, Obama Runtz was not created by, nor is it associated with, the former president. While the strain name may be a bit confusing, it was nonetheless inspired by Barack Obama's fondness for marijuana in his youth and his well-known message of change. The strain is said to energize and inspire, which may produce creative and motivating effects.

The Definitive Guide to Obama Runtz Strain Reviews

Runtz, also known as Runtz OG, was bred by the Cookies Fam from a cross of two parent strains. Both parents are sweet and fruity, making Runtz one of the tastiest strains around. Runtz may also provide euphoric, uplifting highs, which might make you more talkative and social. It was also named Leafly's 'Strain of the Year' in 2020.


VidPrimo Review⚡💻📲All-In-One Video Hosting & Marketing Platform📲💻⚡Get FREE +350 Bonuses💲💰💸

from Digital IT Product Reviews To Make Money Online https://kaleemzreviews.blogspot.com/2022/09/vidprimo-reviewall-in-one-video-hosting.html


The 3 Easiest Ways For Newbies To Start In Affiliate Marketing

With the aid of the Internet, you can have almost everything right at your fingertips. With just a few clicks, you get access to thousands or even millions of pieces of information and data on virtually any field of interest. As the years pass, the Internet continues to effect radical changes in many facets of human endeavor, including commerce. Experts say that the information space commonly known as the "World Wide Web" grows by over a million pages every day as more and more people use the Internet for information, education, entertainment, business, and other personal reasons. It doesn't take a business-oriented individual to realize that this phenomenon can bring sky-high financial gains. The Internet's fast-growing popularity in recent years is an opportunity that no entrepreneur would want to miss.

You might think only businesspeople can make much money on the Internet, right? Think again. You too can earn big bucks through the Internet even if you don't have products to sell or a high-profile, established company. How? Through affiliate marketing. You may have come across the term while surfing the net. Affiliate marketing is a revenue-sharing arrangement between a merchant and an affiliate, who gets paid for referring customers to or promoting the merchant's products and services. It is one of today's booming industries because it has proven to be a cost-efficient and measurable way of earning considerable profit for the merchant, the affiliate, and other players in the affiliate program, such as the affiliate network or affiliate solution provider.

Affiliate marketing works well for both the merchant and the affiliate. The merchant gains opportunities to advertise his products to a larger market, which increases his chances to earn. The more affiliate websites or hard-working affiliates he gets, the more sales he can expect. By getting affiliates to market his products and services, he saves the time, effort, and money he would otherwise spend looking for markets and customers. When a client clicks the link on the affiliate's website and buys the product, recommends it to others looking for the same item, or buys it again, the merchant's chances of earning multiply. The affiliate marketer, in turn, benefits from each customer who clicks the link on his website and actually purchases the product or uses the service provided by the merchant. In most cases, the affiliate earns a commission per sale, which can be a fixed percentage or a fixed amount.
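The two payout models mentioned above can be illustrated with a small calculation. This is a minimal sketch, assuming a per-sale payout in a single currency; the function name, the 8% rate, and the 5.00 flat fee are hypothetical examples, not terms from any real affiliate program.

```python
from decimal import Decimal, ROUND_HALF_UP
from typing import Optional

CENT = Decimal("0.01")

def commission(sale_amount: Decimal,
               rate: Optional[Decimal] = None,
               flat_fee: Optional[Decimal] = None) -> Decimal:
    """Return the affiliate's commission for one referred sale (hypothetical helper).

    Pass exactly one of:
      rate:     a fraction of the sale, e.g. Decimal("0.08") for 8%
      flat_fee: a fixed amount paid per sale, regardless of the sale value
    """
    if (rate is None) == (flat_fee is None):
        raise ValueError("pass exactly one of rate or flat_fee")
    amount = sale_amount * rate if rate is not None else flat_fee
    # Round to whole cents, since payouts are made in currency units.
    return amount.quantize(CENT, rounding=ROUND_HALF_UP)

# Hypothetical example: a 49.99 sale under each payout model.
print(commission(Decimal("49.99"), rate=Decimal("0.08")))   # 4.00
print(commission(Decimal("49.99"), flat_fee=Decimal("5")))  # 5.00
```

`Decimal` is used instead of `float` so that cent amounts are rounded predictably; a percentage commission grows with the price of the product, while a flat fee does not.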

If you want to become an affiliate marketer and make a fortune on the Internet, you can follow these three basic, easy steps to start an effective affiliate marketing program. First, identify something you are interested in or passionate about, so you won't get bored or feel forced to develop your affiliate website later on. Focusing on a specific area you know well will help you bring out your best with less risk and effort. You can add a personal touch to your site and give your visitors, who are potential buyers, the impression that you are an expert in your field. In this way, you gain their trust and eventually encourage them to buy the products you endorse. Next, look for well-paying merchants and products or services related to your interest, and then create a website. In choosing the products, you must also consider their conversion rates.
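Conversion rate is commonly defined as the share of visitors who go on to buy. The rough sketch below assumes you can count referral clicks and resulting sales per product. Multiplying the conversion rate by the commission gives earnings per click, which is one common way to compare a low-commission product that converts well with a high-commission product that rarely sells. The figures are hypothetical.

```python
def conversion_rate(clicks: int, sales: int) -> float:
    """Share of referral clicks that ended in a sale (0.0 if there were no clicks)."""
    if clicks <= 0:
        return 0.0
    return sales / clicks

def earnings_per_click(clicks: int, sales: int, commission_per_sale: float) -> float:
    """Average commission earned per referral click."""
    return conversion_rate(clicks, sales) * commission_per_sale

# Hypothetical numbers: 1,000 clicks, 25 sales, 4.00 commission per sale.
print(f"{conversion_rate(1000, 25):.1%}")           # 2.5%
print(f"{earnings_per_click(1000, 25, 4.00):.2f}")  # 0.10
```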


Case Study: How the Cookie Monster Ate 22% of Our Visibility

Last year, the team at Homeday, one of the leading property tech companies in Germany, decided to migrate to a new content management system (CMS). The goals of the migration included increasing page speed and creating a state-of-the-art, future-proof website with all the necessary features. One of the main motivators was to let content editors create pages more freely, without the help of developers.

After evaluating several CMS options, we decided on Contentful for its modern technology stack, with a superior experience for both editors and developers. From a technical viewpoint, Contentful, as a headless CMS, allows us to choose which rendering strategy we want to use.

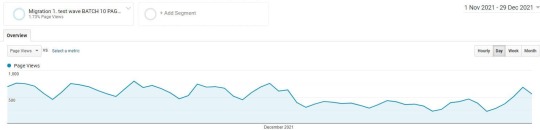

We’re currently carrying out the migration in several stages, or waves, to reduce the risk of problems that have a large-scale negative impact. During the first wave, we encountered an issue with our cookie consent, which led to a visibility loss of almost 22% within five days. In this article I'll describe the problems we were facing during this first migration wave and how we resolved them.

Setting up the first test wave

For the first test wave, we chose 10 SEO pages with high traffic but low conversion rates. We set up infrastructure for reporting on and monitoring those 10 pages:

Rank tracking for the most relevant keywords

SEO dashboard (DataStudio, Moz Pro, SEMRush, Search Console, Google Analytics)

Regular crawls

After a comprehensive planning and testing phase, we migrated the first 10 SEO pages to the new CMS in December 2021. Although several challenges came up during the testing phase (increased loading times, a bigger HTML Document Object Model, etc.), we decided to go live, as we didn't see any major blockers and wanted to migrate the first test wave before Christmas.

First performance review

Very excited about completing the first step of the migration, we looked at the performance of the migrated pages the next day.

What we saw next really didn't please us.

Overnight, the visibility of tracked keywords for the migrated pages dropped from 62.35% to 53.59%: we lost 8.76 percentage points of visibility in one day!

As a result of this steep drop in rankings, we conducted another extensive round of testing. Among other things, we tested for coverage and indexing issues, checked whether all meta tags were included, and reviewed structured data, internal links, page speed, and mobile friendliness.

Second performance review

All the articles had a cache date after the migration, and the content was fully indexed and being read by Google. Moreover, we could rule out several migration risk factors (changes to URLs, content, meta tags, layout, etc.) as sources of error, as none of them had changed.

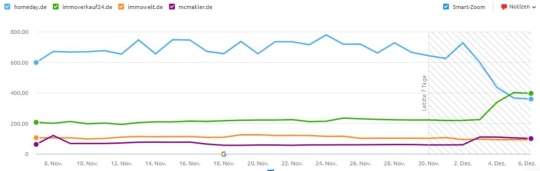

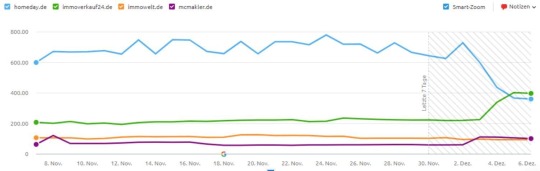

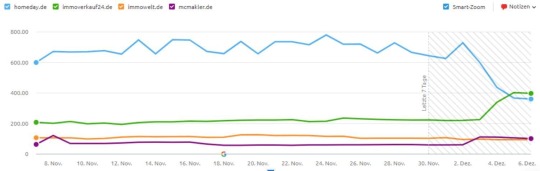

Over the next few days, visibility of our tracked keywords dropped further to 40.60%, a total drop of almost 22 percentage points within five days. The drop was also clearly visible in comparison with the competition for the tracked keywords (the chart shows "estimated traffic", but visibility looked analogous).
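Because visibility is itself a percentage, a drop can be stated in two ways: in percentage points, or relative to the starting value. The "22%" in the title corresponds to percentage points (62.35 minus 40.60 is 21.75); relative to the pre-migration level, that is roughly a 35% loss. A small helper, using only the figures reported in this post:

```python
def visibility_drop(before: float, after: float) -> tuple:
    """Return (drop in percentage points, relative drop in percent)."""
    points = before - after
    relative = points / before * 100
    return round(points, 2), round(relative, 1)

print(visibility_drop(62.35, 53.59))  # (8.76, 14.0)   after the first day
print(visibility_drop(62.35, 40.60))  # (21.75, 34.9)  after five days
```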

Since the other migration risk factors and Google updates had been ruled out as sources of error, it had to be a technical issue. Too much JavaScript, low Core Web Vitals scores, or a larger, more complex Document Object Model (DOM) could all be potential causes. The DOM represents a page as objects and nodes so that programming languages like JavaScript can interact with the page and change, for example, its style, structure, and content.
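One rough way to put a number on a "larger, more complex DOM" is to count elements and measure the maximum nesting depth. The minimal sketch below uses Python's standard-library `HTMLParser`. It does not build a real DOM, because browsers repair unclosed tags in their own way, and it only sees the HTML you pass in. Nodes injected by JavaScript will appear only if you give it the rendered HTML instead of the source. The class and function names are illustrative.

```python
from html.parser import HTMLParser

# Void elements never have a closing tag, so they must not increase nesting depth.
VOID_ELEMENTS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
                 "link", "meta", "source", "track", "wbr"}

class DomSizeCounter(HTMLParser):
    """Approximate element count and maximum nesting depth of an HTML string."""

    def __init__(self):
        super().__init__()
        self.elements = 0
        self.depth = 0
        self.max_depth = 0

    def handle_starttag(self, tag, attrs):
        self.elements += 1
        if tag not in VOID_ELEMENTS:
            self.depth += 1
            self.max_depth = max(self.max_depth, self.depth)

    def handle_startendtag(self, tag, attrs):
        # Self-closing syntax (<br/>, <img/>): count the element, don't nest into it.
        self.elements += 1

    def handle_endtag(self, tag):
        if tag not in VOID_ELEMENTS and self.depth > 0:
            self.depth -= 1

def dom_size(html):
    """Return (element_count, max_depth) for the given HTML."""
    counter = DomSizeCounter()
    counter.feed(html)
    counter.close()
    return counter.elements, counter.max_depth

print(dom_size("<html><body><div><p>Hi<br></p><img src='a.png'></div></body></html>"))
# (6, 4)
```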

Following the cookie crumbs

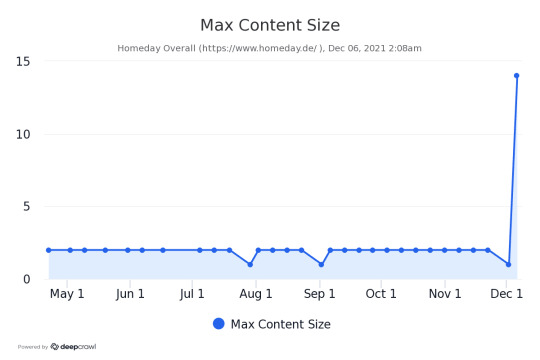

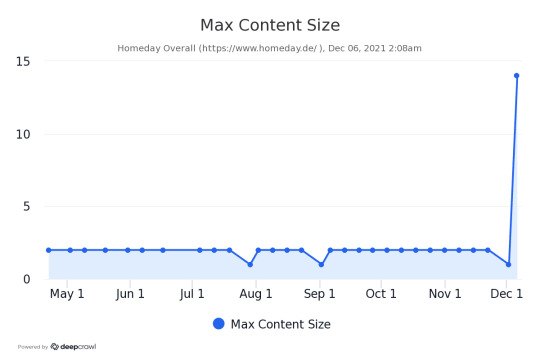

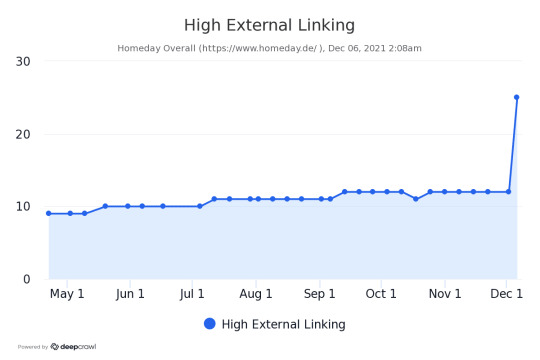

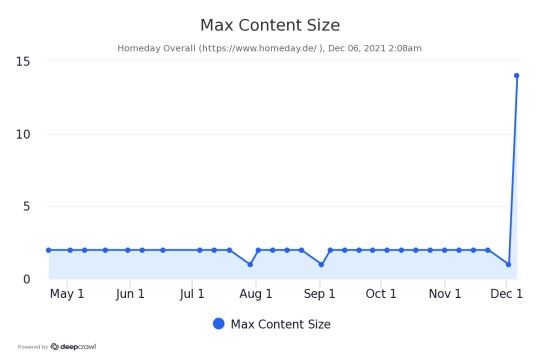

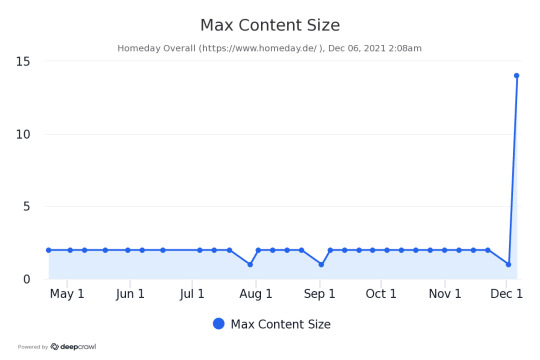

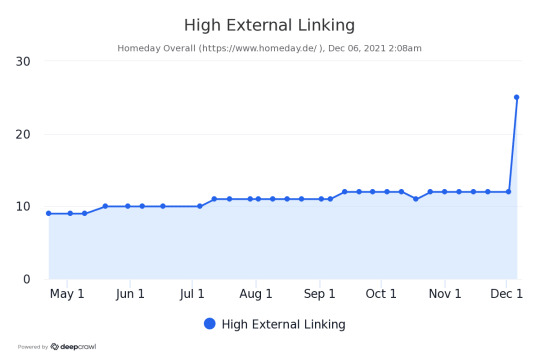

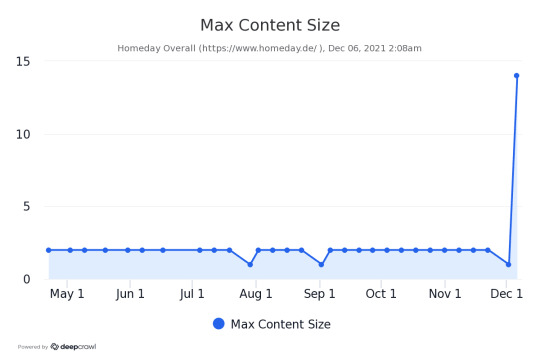

We had to identify the issues as quickly as possible, fix bugs fast, and minimize further negative effects and traffic drops. We finally got the first real hint of the technical cause when one of our tools showed that the number of pages with high external linking, as well as the number of pages at the maximum content size, had gone up. It is important that pages don't exceed the maximum content size, as pages with a very large amount of body content may not be fully indexed. As for external links, it is important that all of them are trustworthy and relevant for users. It was suspicious that the number of external links had suddenly gone up like this.
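The post doesn't name the tool that flagged these two metrics or the thresholds it used. The hypothetical per-page audit below sketches how both could be measured from a page's HTML: it lists links that point to a different host and counts the characters of visible text, skipping script and style contents. As with any static parse, copy injected by JavaScript only counts if you feed it the rendered HTML.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse

class PageAudit(HTMLParser):
    """Collect outgoing external links and count visible body-text characters."""

    # Text inside these elements is not visible copy, so it is not counted.
    NON_TEXT = {"script", "style", "noscript", "template"}

    def __init__(self, page_url):
        super().__init__()
        self.page_url = page_url
        # Note: a different subdomain (e.g. www. vs. none) counts as external here.
        self.page_host = urlparse(page_url).hostname
        self.external_links = []
        self.text_chars = 0
        self._skip = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.NON_TEXT:
            self._skip += 1
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:
                target = urlparse(urljoin(self.page_url, href))
                if target.scheme in ("http", "https") and target.hostname != self.page_host:
                    self.external_links.append(target.geturl())

    def handle_endtag(self, tag):
        if tag in self.NON_TEXT and self._skip > 0:
            self._skip -= 1

    def handle_data(self, data):
        if self._skip == 0:
            self.text_chars += len(data.strip())

def audit(page_url, html):
    """Return (external_links, visible_text_chars) for one page."""
    parser = PageAudit(page_url)
    parser.feed(html)
    parser.close()
    return parser.external_links, parser.text_chars

# Hypothetical page: one internal link, one external link, one inline script.
html = ('<p>Sell your flat</p><a href="/sell">Guide</a>'
        '<a href="https://consent.example.net/privacy">Privacy</a>'
        '<script>var x = 1;</script>')
print(audit("https://www.example.com/guide/", html))
# (['https://consent.example.net/privacy'], 26)
```

Running this over a migrated page and its pre-migration version side by side would show whether new external links and extra copy came with the migration.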

Both metrics were disproportionately high compared to the number of pages we migrated. But why?

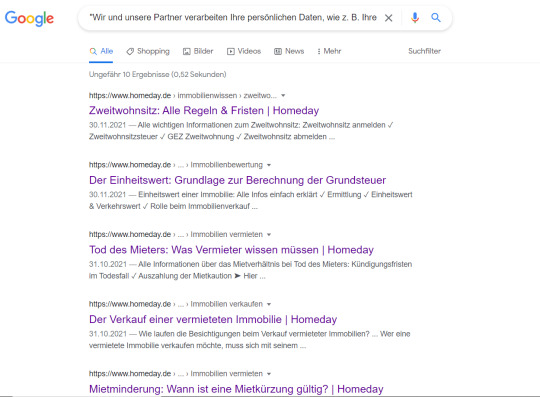

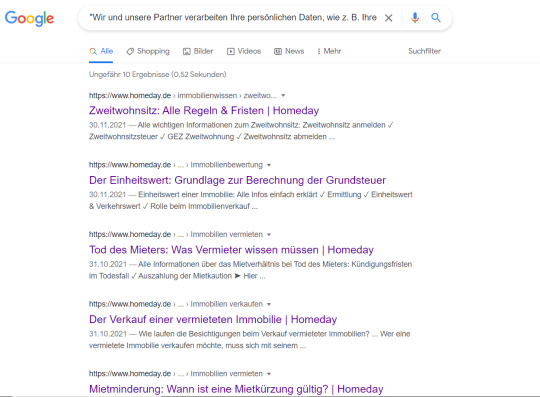

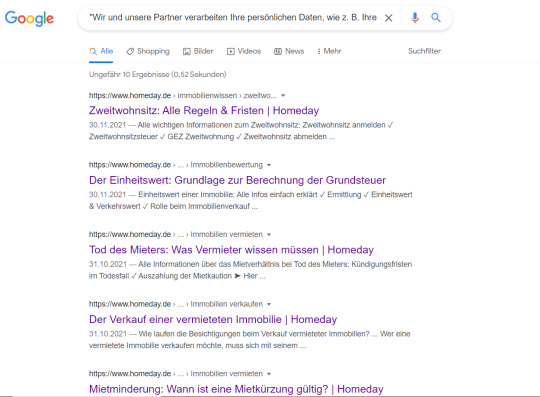

After checking which external links had been added to the migrated pages, we saw that Google was reading and indexing the cookie consent form on all migrated pages. We performed a site search for the content of the cookie consent and saw our theory confirmed.

This led to several problems:

A large amount of duplicate content was created for each page because the cookie consent form was indexed.

The content size of the migrated pages drastically increased. This is a problem as pages with a very large amount of body content may not be fully indexed.

The number of external outgoing links drastically increased.

Our snippets suddenly showed a date on the SERPs. This would suggest a blog or news article, while most articles on Homeday are evergreen content. In addition, because of the date, the meta description was cut off.

But why was this happening? According to our service provider, Cookiebot, search engine crawlers access websites by simulating full consent. Hence, they can access all content, and the copy from the cookie consent banner is not indexed.

So why wasn't this the case for the migrated pages? We crawled and rendered the pages with different user agents, but still couldn't find a trace of Cookiebot in the source code.
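Part of the reason a check like this can come up empty is that fetching a page only returns the HTML the server sends, before any JavaScript runs. The sketch below fetches the same URL with different `User-Agent` headers and searches the raw HTML for a marker string. It assumes plain HTTP(S) access. The user-agent strings and the helper names are examples, not the exact setup used here. If consent markup is fetched by a script at runtime, none of these raw responses will contain it.

```python
import urllib.request

# Example user-agent strings; adjust to the crawlers and browsers you want to emulate.
USER_AGENTS = {
    "googlebot": "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
    "desktop-browser": ("Mozilla/5.0 (Windows NT 10.0; Win64; x64) "
                        "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0 Safari/537.36"),
}

def fetch_raw_html(url, user_agent, timeout=10.0):
    """Return the HTML the server sends for this user agent. No JavaScript runs."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")

def mentions(html, marker):
    """Case-insensitive substring check, e.g. for 'cookiebot'."""
    return marker.lower() in html.lower()

def check_user_agents(url, marker):
    """Map each user-agent name to whether its raw HTML mentions the marker."""
    return {name: mentions(fetch_raw_html(url, ua), marker)
            for name, ua in USER_AGENTS.items()}
```

Usage would look like `check_user_agents("https://www.example.com/some-page/", "cookiebot")`. Seeing what Google actually renders requires a tool that executes JavaScript, such as the URL Inspection tool.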

Investigating Google DOMs and searching for a solution

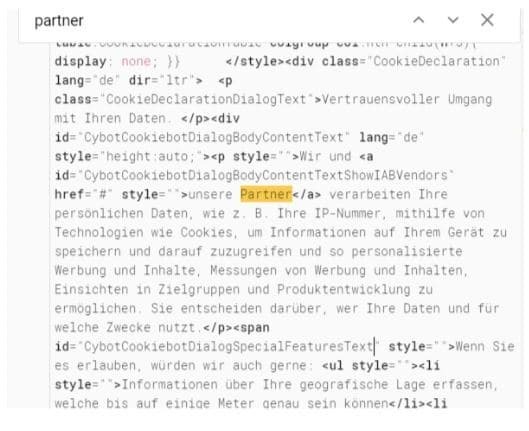

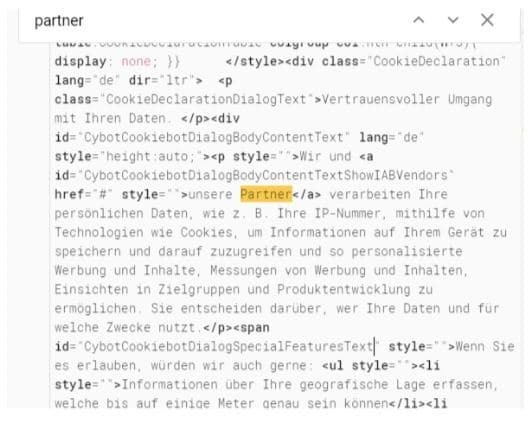

The migrated pages are rendered with dynamic data that comes from Contentful and from plugins. The plugins contain only JavaScript code, and some of them come from partners. One of these plugins came from our cookie manager partner, and it fetches the cookie consent HTML from outside our code base. That is why we didn't find a trace of the cookie consent HTML in the HTML source files in the first place. We did see a larger DOM, but traced that back to the default DOM of Nuxt, the JavaScript framework we work with, which is larger and more complex.

To validate that Google was reading the copy from the cookie consent banner, we used the URL Inspection tool in Google Search Console and compared the DOM of a migrated page with the DOM of a non-migrated page. Within the DOM of the migrated page, we finally found the cookie consent content.
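A comparison like this can also be scripted once the two rendered DOMs are saved as HTML, for example by copying them out of the URL Inspection tool. The minimal sketch below extracts the visible text chunks from each snapshot, ignoring script and style contents and collapsing whitespace. It then lists the chunks that appear only on the migrated page. The function names and sample markup are hypothetical.

```python
import re
from html.parser import HTMLParser

class TextExtractor(HTMLParser):
    """Collect non-empty text chunks, skipping script and style contents."""

    def __init__(self):
        super().__init__()
        self.chunks = []
        self._skip = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        text = re.sub(r"\s+", " ", data).strip()
        if text and not self._skip:
            self.chunks.append(text)

def text_chunks(html):
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return parser.chunks

def only_in_migrated(old_html, new_html):
    """Text chunks present in the migrated page's DOM but not in the old one."""
    old = set(text_chunks(old_html))
    return [chunk for chunk in text_chunks(new_html) if chunk not in old]

old = "<main><h1>Sell your flat</h1></main>"
new = ("<main><h1>Sell your flat</h1></main>"
       "<div id='consent'><p>This website uses cookies.</p></div>")
print(only_in_migrated(old, new))  # ['This website uses cookies.']
```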

Something else that caught our attention was the difference between the JavaScript files loaded on our old pages and those loaded on our migrated pages. Our website uses two scripts for the cookie consent banner, provided by a third party: one that shows the banner and records the consent (uc) and one that imports the banner content (cd).

The only script loaded on our old pages was uc.js, which is responsible for the cookie consent banner. It is the one script we need on every page to handle user consent. It displays the cookie consent banner without its content being indexed and saves the user's decision (whether they agree or disagree to the use of cookies).

On the migrated pages, a cd.js file was loading in addition to uc.js. The cd.js file is only needed on a page where we want to show users more information about our cookies and have that cookie data indexed. We had assumed the two files depended on each other, which is not correct: uc.js can run on its own. The cd.js file was the reason the content of the cookie banner got rendered and indexed.

It took a while to find this because we thought the second file was simply a prerequisite for the first one. We concluded that removing cd.js from the migrated pages would be the solution.
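A check along these lines could confirm which consent scripts a rendered page loads and catch cd.js if it ever reappears, for example as part of a post-deploy test. This is a sketch under stated assumptions: the script URLs are hypothetical, and scripts are matched only by file name. Because a plugin can inject script tags at runtime, the check should be run on rendered HTML, not on the source templates.

```python
import posixpath
from html.parser import HTMLParser
from urllib.parse import urlparse

class ScriptCollector(HTMLParser):
    """Collect the src attribute of every <script> tag."""

    def __init__(self):
        super().__init__()
        self.srcs = []

    def handle_starttag(self, tag, attrs):
        if tag == "script":
            src = dict(attrs).get("src")
            if src:
                self.srcs.append(src)

def script_filenames(html):
    """Return the file names (e.g. 'uc.js') of all external scripts in the HTML."""
    collector = ScriptCollector()
    collector.feed(html)
    collector.close()
    # urlparse drops query strings, so 'cd.js?v=2' is reported as 'cd.js'.
    return [posixpath.basename(urlparse(src).path) for src in collector.srcs]

def consent_script_report(html, required="uc.js", unwanted=("cd.js",)):
    """Check that the consent script loads and that unwanted scripts do not."""
    names = set(script_filenames(html))
    return {
        "has_required": required in names,
        "unwanted_loaded": sorted(name for name in unwanted if name in names),
    }

rendered = ('<script src="https://consent.example.com/uc.js"></script>'
            '<script src="https://consent.example.com/abc123/cd.js"></script>')
print(consent_script_report(rendered))
# {'has_required': True, 'unwanted_loaded': ['cd.js']}
```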

Performance review after implementing the solution

The day we deleted the file, our keyword visibility was at 41.70%, still about 21 percentage points lower than before the migration.

However, the day after deleting the file, our visibility increased to 50.77%, and the next day it was almost back to normal at 60.11%. The estimated traffic behaved similarly. What a relief!

Conclusion

I imagine many SEOs have dealt with tiny issues like this. It seems trivial, but it led to a significant drop in visibility and traffic during the migration. This is why I suggest migrating in waves and setting aside enough time to investigate technical errors before and after the migration. Moreover, keeping a close eye on the site's performance in the weeks after the migration is crucial. These are my key takeaways from this migration wave. We completed the second migration wave at the beginning of May 2022, and so far no major bugs have appeared. We have two more waves to go and hope to complete the migration successfully by the end of June 2022.
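Close monitoring after each wave can be partly automated with a simple day-over-day alert on the rank tracker's visibility figures. The sketch below is hypothetical: the 5-point threshold is an arbitrary example and should be set from the tracker's normal daily noise. The sample series use the consecutive-day values reported in this post.

```python
def flag_drops(daily_visibility, threshold_points=5.0):
    """Return (day_index, drop) for every day-over-day drop >= threshold_points.

    daily_visibility: visibility values in percent, one per day, oldest first.
    """
    alerts = []
    for day in range(1, len(daily_visibility)):
        drop = daily_visibility[day - 1] - daily_visibility[day]
        if drop >= threshold_points:
            alerts.append((day, round(drop, 2)))
    return alerts

print(flag_drops([62.35, 53.59]))         # [(1, 8.76)]  the overnight drop
print(flag_drops([41.70, 50.77, 60.11]))  # []           the recovery after the fix
```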

The performance of the migrated pages is almost back to normal now, and we will continue with the next wave.

0 notes

Text

Case Study: How the Cookie Monster Ate 22% of Our Visibility

Last year, the team at Homeday — one of the leading property tech companies in Germany — made the decision to migrate to a new content management system (CMS). The goals of the migration were, among other things, increased page speed and creating a state-of-the-art, future-proof website with all the necessary features. One of the main motivators for the migration was to enable content editors to work more freely in creating pages without the help of developers.

After evaluating several CMS options, we decided on Contentful for its modern technology stack, with a superior experience for both editors and developers. From a technical viewpoint, Contentful, as a headless CMS, allows us to choose which rendering strategy we want to use.

We’re currently carrying out the migration in several stages, or waves, to reduce the risk of problems that have a large-scale negative impact. During the first wave, we encountered an issue with our cookie consent, which led to a visibility loss of almost 22% within five days. In this article I'll describe the problems we were facing during this first migration wave and how we resolved them.

Setting up the first test-wave

For the first test-wave we chose 10 SEO pages with high traffic but low conversion rates. We established an infrastructure for reporting and monitoring those 10 pages:

Rank-tracking for most relevant keywords

SEO dashboard (DataStudio, Moz Pro, SEMRush, Search Console, Google Analytics)

Regular crawls

After a comprehensive planning and testing phase, we migrated the first 10 SEO pages to the new CMS in December 2021. Although several challenges occurred during the testing phase (increased loading times, bigger HTML Document Object Model, etc.) we decided to go live as we didn't see big blocker and we wanted to migrate the first testwave before christmas.

First performance review

Very excited about achieving the first step of the migration, we took a look at the performance of the migrated pages on the next day.

What we saw next really didn't please us.

Overnight, the visibility of tracked keywords for the migrated pages reduced from 62.35% to 53.59% — we lost 8.76% of visibility in one day!

As a result of this steep drop in rankings, we conducted another extensive round of testing. Among other things we tested for coverage/ indexing issues, if all meta tags were included, structured data, internal links, page speed and mobile friendliness.

Second performance review

All the articles had a cache date after the migration and the content was fully indexed and being read by Google. Moreover, we could exclude several migration risk factors (change of URLs, content, meta tags, layout, etc.) as sources of error, as there hasn't been any changes.

Visibility of our tracked keywords suffered another drop to 40.60% over the next few days, making it a total drop of almost 22% within five days. This was also clearly shown in comparison to the competition of the tracked keywords (here "estimated traffic"), but the visibility looked analogous.

As other migration risk factors plus Google updates had been excluded as sources of errors, it definitely had to be a technical issue. Too much JavaScript, low Core Web Vitals scores, or a larger, more complex Document Object Model (DOM) could all be potential causes. The DOM represents a page as objects and nodes so that programming languages like JavaScript can interact with the page and change for example style, structure and content.

Following the cookie crumbs

We had to identify issues as quickly as possible and do quick bug-fixing and minimize more negative effects and traffic drops. We finally got the first real hint of which technical reason could be the cause when one of our tools showed us that the number of pages with high external linking, as well as the number of pages with maximum content size, went up. It is important that pages don't exceed the maximum content size as pages with a very large amount of body content may not be fully indexed. Regarding the high external linking it is important that all external links are trustworthy and relevant for users. It was suspicious that the number of external links went up just like this.

Both metrics were disproportionately high compared to the number of pages we migrated. But why?

After checking which external links had been added to the migrated pages, we saw that Google was reading and indexing the cookie consent form for all migrated pages. We performed a site search, checking for the content of the cookie consent, and saw our theory confirmed:

This led to several problems:

There was tons of duplicated content created for each page due to indexing the cookie consent form.

The content size of the migrated pages drastically increased. This is a problem as pages with a very large amount of body content may not be fully indexed.

The number of external outgoing links drastically increased.

Our snippets suddenly showed a date on the SERPs. This would suggest a blog or news article, while most articles on Homeday are evergreen content. In addition, due to the date appearing, the meta description was cut off.

But why was this happening? According to our service provider, Cookiebot, search engine crawlers access websites simulating a full consent. Hence, they gain access to all content and copy from the cookie consent banners are not indexed by the crawler.

So why wasn't this the case for the migrated pages? We crawled and rendered the pages with different user agents, but still couldn't find a trace of the Cookiebot in the source code.

Investigating Google DOMs and searching for a solution

The migrated pages are rendered with dynamic data that comes from Contentful and plugins. The plugins contain just JavaScript code, and sometimes they come from a partner. One of these plugins was the cookie manager partner, which fetches the cookie consent HTML from outside our code base. That is why we didn't find a trace of the cookie consent HTML code in the HTML source files in the first place. We did see a larger DOM but traced that back to Nuxt's default, more complex, larger DOM. Nuxt is a JavaScript framework that we work with.

To validate that Google was reading the copy from the cookie consent banner, we used the URL inspection tool of Google Search Console. We compared the DOM of a migrated page with the DOM of a non-migrated page. Within the DOM of a migrated page, we finally found the cookie consent content:

Something else that got our attention were the JavaScript files loaded on our old pages versus the files loaded on our migrated pages. Our website has two scripts for the cookie consent banner, provided by a 3rd party: one to show the banner and grab the consent (uc) and one that imports the banner content (cd).

The only script loaded on our old pages was uc.js, which is responsible for the cookie consent banner. It is the one script we need in every page to handle user consent. It displays the cookie consent banner without indexing the content and saves the user's decision (if they agree or disagree to the usage of cookies).

For the migrated pages, aside from uc.js, there was also a cd.js file loading. If we have a page, where we want to show more information about our cookies to the user and index the cookie data, then we have to use the cd.js. We thought that both files are dependent on each other, which is not correct. The uc.js can run alone. The cd.js file was the reason why the content of the cookie banner got rendered and indexed.

It took a while to find it because we thought the second file was just a pre-requirement for the first one. We determined that simply removing the loaded cd.js file would be the solution.

Performance review after implementing the solution

The day we deleted the file, our keyword visibility was at 41.70%, which was still 21% lower than pre-migration.

However, the day after deleting the file, our visibility increased to 50.77%, and the next day it was almost back to normal at 60.11%. The estimated traffic behaved similarly. What a relief!

Conclusion

I can imagine that many SEOs have dealt with tiny issues like this. It seems trivial, but led to a significant drop in visibility and traffic during the migration. This is why I suggest migrating in waves and blocking enough time for investigating technical errors before and after the migration. Moreover, keeping a close look at the site's performance within the weeks after the migration is crucial. These are definitely my key takeaways from this migration wave. We just completed the second migration wave in the beginning of May 2022 and I can state that so far no major bugs appeared. We’ll have two more waves and complete the migration hopefully successfully by the end of June 2022.

The performance of the migrated pages is almost back to normal now, and we will continue with the next wave.

https://ift.tt/Aero3fG

WebPull Review⚡💻📲All-In-One Website Builder To Create & Sell Insane Websites📲💻⚡FREE +250 Bonuses💲💰💸

from Digital IT Product Reviews To Make Money Online https://kaleemzreviews.blogspot.com/2022/07/webpull-reviewall-in-one-website.html

0 notes

Text

WebPull Review⚡💻📲All-In-One Website Builder To Create & Sell Insane Websites📲💻⚡FREE +250 Bonuses💲💰💸

from Digital IT Product Reviews To Make Money Online https://kaleemzreviews.blogspot.com/2022/07/webpull-reviewall-in-one-website.html

0 notes

Text

Case Study: How the Cookie Monster Ate 22% of Our Visibility

Last year, the team at Homeday — one of the leading property tech companies in Germany — made the decision to migrate to a new content management system (CMS). The goals of the migration were, among other things, increased page speed and creating a state-of-the-art, future-proof website with all the necessary features. One of the main motivators for the migration was to enable content editors to work more freely in creating pages without the help of developers.

After evaluating several CMS options, we decided on Contentful for its modern technology stack, with a superior experience for both editors and developers. From a technical viewpoint, Contentful, as a headless CMS, allows us to choose which rendering strategy we want to use.

We’re currently carrying out the migration in several stages, or waves, to reduce the risk of problems that have a large-scale negative impact. During the first wave, we encountered an issue with our cookie consent, which led to a visibility loss of almost 22% within five days. In this article I'll describe the problems we were facing during this first migration wave and how we resolved them.

Setting up the first test-wave

For the first test-wave we chose 10 SEO pages with high traffic but low conversion rates. We established an infrastructure for reporting and monitoring those 10 pages:

Rank-tracking for most relevant keywords

SEO dashboard (DataStudio, Moz Pro, SEMRush, Search Console, Google Analytics)

Regular crawls

After a comprehensive planning and testing phase, we migrated the first 10 SEO pages to the new CMS in December 2021. Although several challenges occurred during the testing phase (increased loading times, bigger HTML Document Object Model, etc.) we decided to go live as we didn't see big blocker and we wanted to migrate the first testwave before christmas.

First performance review

Very excited about achieving the first step of the migration, we took a look at the performance of the migrated pages on the next day.

What we saw next really didn't please us.

Overnight, the visibility of tracked keywords for the migrated pages reduced from 62.35% to 53.59% — we lost 8.76% of visibility in one day!

As a result of this steep drop in rankings, we conducted another extensive round of testing. Among other things we tested for coverage/ indexing issues, if all meta tags were included, structured data, internal links, page speed and mobile friendliness.

Second performance review

All the articles had a cache date after the migration and the content was fully indexed and being read by Google. Moreover, we could exclude several migration risk factors (change of URLs, content, meta tags, layout, etc.) as sources of error, as there hasn't been any changes.

Visibility of our tracked keywords suffered another drop to 40.60% over the next few days, making it a total drop of almost 22% within five days. This was also clearly shown in comparison to the competition of the tracked keywords (here "estimated traffic"), but the visibility looked analogous.

As other migration risk factors plus Google updates had been excluded as sources of errors, it definitely had to be a technical issue. Too much JavaScript, low Core Web Vitals scores, or a larger, more complex Document Object Model (DOM) could all be potential causes. The DOM represents a page as objects and nodes so that programming languages like JavaScript can interact with the page and change for example style, structure and content.

Following the cookie crumbs

We had to identify issues as quickly as possible and do quick bug-fixing and minimize more negative effects and traffic drops. We finally got the first real hint of which technical reason could be the cause when one of our tools showed us that the number of pages with high external linking, as well as the number of pages with maximum content size, went up. It is important that pages don't exceed the maximum content size as pages with a very large amount of body content may not be fully indexed. Regarding the high external linking it is important that all external links are trustworthy and relevant for users. It was suspicious that the number of external links went up just like this.

Both metrics were disproportionately high compared to the number of pages we migrated. But why?

After checking which external links had been added to the migrated pages, we saw that Google was reading and indexing the cookie consent form for all migrated pages. We performed a site search, checking for the content of the cookie consent, and saw our theory confirmed:

This led to several problems:

There was tons of duplicated content created for each page due to indexing the cookie consent form.

The content size of the migrated pages drastically increased. This is a problem as pages with a very large amount of body content may not be fully indexed.

The number of external outgoing links drastically increased.

Our snippets suddenly showed a date on the SERPs. This would suggest a blog or news article, while most articles on Homeday are evergreen content. In addition, due to the date appearing, the meta description was cut off.

But why was this happening? According to our service provider, Cookiebot, search engine crawlers access websites as if full consent had been given. As a result, they can access all content, and the copy of the cookie consent banner is not indexed.

So why wasn't this the case for the migrated pages? We crawled and rendered the pages with different user agents, but still couldn't find any trace of Cookiebot in the source code.
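A check like this can be scripted by requesting the same URL with several User-Agent headers and searching each response for a marker string. The sketch below uses Python's urllib; marker_by_user_agent, the marker text you pass in and the browser user-agent string are illustrative assumptions. It only sees the HTML the server returns and does not execute JavaScript, so content that a script injects later will not show up. Sending Googlebot's user-agent string also does not guarantee that a server or a third-party script treats the request as coming from Google.

```python
import urllib.request
from typing import Callable

Fetcher = Callable[[str, str], str]

# Googlebot's desktop user-agent string and a generic browser string (example only).
USER_AGENTS = {
    "googlebot": "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
    "browser": "Mozilla/5.0 (Windows NT 10.0; Win64; x64)",
}


def fetch_html(url: str, user_agent: str) -> str:
    """Fetch the raw HTML of `url`, sending the given User-Agent header."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    with urllib.request.urlopen(request, timeout=10) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


def marker_by_user_agent(url: str, marker: str,
                         user_agents: dict[str, str],
                         fetch: Fetcher = fetch_html) -> dict[str, bool]:
    """Report, per user agent, whether `marker` occurs in the returned HTML."""
    return {name: marker in fetch(url, ua) for name, ua in user_agents.items()}
```

The fetch parameter exists so the comparison can be tested without network access, or pointed at a headless-browser renderer instead of a plain HTTP request.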

Investigating Google DOMs and searching for a solution

The migrated pages are rendered with dynamic data from Contentful and from plugins. The plugins contain only JavaScript code, and some of them come from partners. One of these plugins came from our cookie management partner and fetches the cookie consent HTML from outside our code base. That is why we hadn't found any trace of the cookie consent HTML in the HTML source files. We had noticed a larger DOM, but we attributed it to the more complex default DOM of Nuxt, the JavaScript framework we work with.

To verify that Google was reading the copy of the cookie consent banner, we used the URL Inspection tool in Google Search Console and compared the DOM of a migrated page with that of a non-migrated page. In the DOM of the migrated page, we finally found the cookie consent content.
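The comparison can be narrowed down by diffing a page's raw HTML source against its rendered HTML, such as the HTML shown by the URL Inspection tool. Anything that only exists after rendering was added by JavaScript, which is where injected consent copy would appear. This is a minimal Python sketch; the helper names are hypothetical, and it compares whitespace-normalized text fragments rather than a real DOM tree, so it is a rough filter rather than a precise diff.

```python
from html.parser import HTMLParser

# Elements whose contents are not visible page text.
SKIPPED = {"script", "style", "noscript"}


class TextExtractor(HTMLParser):
    """Collects visible text fragments, skipping script and style content."""

    def __init__(self) -> None:
        super().__init__()
        self.fragments: list[str] = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in SKIPPED:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in SKIPPED and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = " ".join(data.split())  # collapse runs of whitespace
        if text and not self._skip_depth:
            self.fragments.append(text)


def visible_text(html: str) -> list[str]:
    extractor = TextExtractor()
    extractor.feed(html)
    extractor.close()
    return extractor.fragments


def rendered_only_text(source_html: str, rendered_html: str) -> list[str]:
    """Text in the rendered HTML that never appears in the raw HTML source."""
    in_source = set(visible_text(source_html))
    return [t for t in visible_text(rendered_html) if t not in in_source]
```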

Something else caught our attention: the JavaScript files loaded on our old pages differed from those loaded on our migrated pages. Our website has two third-party scripts for the cookie consent banner: one that shows the banner and records the consent (uc) and one that imports the banner content (cd).

The only script loaded on our old pages was uc.js, which is responsible for the cookie consent banner. It is the one script we need on every page to handle user consent: it displays the banner without the content being indexed and saves the user's decision (whether they agree or disagree to the use of cookies).

On the migrated pages, a cd.js file was loaded in addition to uc.js. cd.js is only needed on a page where we want to show users more detailed information about our cookies and have that cookie data indexed. We had assumed that the two files depend on each other, but that is not the case: uc.js can run on its own. The cd.js file was the reason the content of the cookie banner got rendered and indexed.
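Listing the script files each version of a page loads makes a difference like the extra cd.js easy to spot. A minimal Python sketch, assuming you have the HTML of an old page and of a migrated page; the helper names are hypothetical. It only sees script tags present in the HTML you give it, so for scripts injected by other scripts, feed it the rendered HTML.

```python
import posixpath
from html.parser import HTMLParser
from urllib.parse import urlparse


class ScriptCollector(HTMLParser):
    """Collects the file names of all external <script src=...> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.files: set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag == "script":
            src = dict(attrs).get("src")
            if src:
                # Keep only the file name, e.g. ".../uc.js?v=2" -> "uc.js".
                self.files.add(posixpath.basename(urlparse(src).path))


def script_files(html: str) -> set[str]:
    collector = ScriptCollector()
    collector.feed(html)
    collector.close()
    return collector.files


def extra_scripts(old_html: str, new_html: str) -> set[str]:
    """Script files loaded by the new page but not by the old one."""
    return script_files(new_html) - script_files(old_html)
```

Once a fix is in place, the same helper can serve as a regression guard, for example by asserting that "cd.js" is not in script_files(html) for every migrated page, so the script does not quietly return in a later wave.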

It took us a while to find this because we thought the second file was simply a prerequisite for the first. We concluded that removing cd.js from the migrated pages would solve the problem.

Performance review after implementing the solution

On the day we removed the file, our keyword visibility was 41.70%, still about 21 percentage points below its pre-migration level of 62.35%.

The day after we removed the file, our visibility rose to 50.77%, and the day after that it was almost back to normal at 60.11%. The estimated traffic followed a similar pattern. What a relief!
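All visibility values in this article are percentages, so the drops are differences in percentage points, which is not the same as a relative decline. A small calculation with the figures reported in this article (62.35% before the migration, 40.60% at the low point, 41.70% on the day of the fix, and 60.11% two days later) makes the distinction explicit. The helper names are only for illustration.

```python
BASELINE = 62.35  # keyword visibility (%) before the migration


def point_drop(before: float, after: float) -> float:
    """Absolute difference between two percentages, in percentage points."""
    return before - after


def relative_drop(before: float, after: float) -> float:
    """Drop relative to the starting value, in percent."""
    return (before - after) / before * 100


for label, value in [("low point", 40.60), ("day of fix", 41.70), ("two days later", 60.11)]:
    print(f"{label}: {point_drop(BASELINE, value):.2f} points, "
          f"{relative_drop(BASELINE, value):.2f}% relative")
```

For the low point this gives 21.75 percentage points, which is where "almost 22%" comes from; relative to the pre-migration level, that is a loss of roughly 35% of the visibility.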

Conclusion

I imagine many SEOs have dealt with small issues like this one. It seems trivial, but it led to a significant drop in visibility and traffic during the migration. This is why I recommend migrating in waves and blocking out enough time to investigate technical errors before and after each wave. Keeping a close eye on the site's performance in the weeks after a migration is also crucial. These are my key takeaways from this migration wave. We completed the second migration wave at the beginning of May 2022, and so far no major bugs have appeared. Two more waves are planned, and we hope to complete the migration successfully by the end of June 2022.
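Keeping a close eye on performance after each wave can be partly automated with a simple alert rule. The following is a minimal sketch, not our actual monitoring setup: it assumes one visibility value per day from a rank tracker, and the function name and both thresholds (5 points below the baseline, 3 points within a single day) are arbitrary example values.

```python
def flag_visibility_drops(baseline: float, daily: list[float],
                          max_total_drop: float = 5.0,
                          max_daily_drop: float = 3.0) -> list[tuple[int, float, list[str]]]:
    """Return (day, visibility, reasons) for days that should trigger an alert.

    Day 1 is the first day after the migration; all values are percentages,
    and both thresholds are in percentage points.
    """
    alerts = []
    previous = baseline
    for day, value in enumerate(daily, start=1):
        reasons = []
        if baseline - value > max_total_drop:
            reasons.append("below baseline")
        if previous - value > max_daily_drop:
            reasons.append("sharp daily drop")
        if reasons:
            alerts.append((day, value, reasons))
        previous = value
    return alerts
```

With the pre-migration baseline of 62.35% and the first-day value of 53.59% reported in this article, both rules would have fired on day one.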

The performance of the migrated pages is almost back to normal now, and we will continue with the next wave.

