#runtimeclass

What are autoboxing and unboxing? For more questions about Java, check this link: https://bit.ly/3kw8py6

#wrapperclass#boxing#unboxing#objectcloning#serialization#externalizable#IOstream#finalize#runtimeclass#anonymousinnerclass#localinnerclass#memberinnerclass#interface#garbagecollector#polymorphism#java#constructor#thiskeyword#computersciencemajor#javatpoint

OpenStack Magnum is an OpenStack API service developed by the OpenStack Containers Team to ease the deployment of container orchestration engines (COEs) such as Kubernetes, Docker Swarm, and Apache Mesos on the OpenStack cloud platform. Of the three supported engines, Kubernetes is the most popular, so this article focuses entirely on Kubernetes. The OpenStack Heat service is used as the orchestration engine for cluster creation and management operations. Magnum integrates well with other OpenStack services, such as Cinder for volume management and Octavia for load balancing, which gives a seamless integration with core Kubernetes. The primary image used to build Kubernetes instances in this guide is Fedora CoreOS.

This guide assumes you already have a running OpenStack cloud platform with Cinder configured. Our lab environment is based on OpenStack Victoria running on CentOS 8. The installation was done using the guide in the link below:

Install OpenStack Victoria on CentOS 8 With Packstack

You should also have the OpenStack client configured on your workstation, with access to the OpenStack API endpoints:

OpenStack client installation and configuration

The Cinder Block Storage service must have a configured backend to be used for Docker volumes and instance data storage.
If you use LVM with Cinder, the volume group should have free space for Docker volumes:

```
$ sudo vgs
  VG             #PV #LV #SN Attr   VSize   VFree
  cinder-volumes   1   8   0 wz--n- 986.68g
```

Container Infra > Cluster Templates > Create Cluster Template

If some pods fail to start after cluster creation with the error “forbidden: PodSecurityPolicy: unable to admit pod: []”, consider adding the labels below:

```
--labels admission_control_list="NodeRestriction,NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,TaintNodesByCondition,Priority,DefaultTolerationSeconds,DefaultStorageClass,StorageObjectInUseProtection,PersistentVolumeClaimResize,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,RuntimeClass"
```

Step 4: Create a Kubernetes Cluster on OpenStack Magnum

If the cloud_provider_enabled label is set to true, you must set cluster_user_trust to true in the [trust] section of magnum.conf:

```
$ sudo vim /etc/magnum/magnum.conf
[trust]
cluster_user_trust = true
```

If you made the change, restart the Magnum API and Conductor services:

```
for i in api conductor; do sudo systemctl restart openstack-magnum-$i; done
```

Use the ClusterTemplate name as the template for cluster creation. This cluster will have one Kubernetes master node and two minion nodes:

```
openstack coe cluster create k8s-cluster-01 \
  --cluster-template k8s-cluster-template \
  --master-count 1 \
  --node-count 2
```

List Kubernetes clusters to check the creation status:

```
$ openstack coe cluster list --column name --column status --column health_status
+----------------+--------------------+---------------+
| name           | status             | health_status |
+----------------+--------------------+---------------+
| k8s-cluster-01 | CREATE_IN_PROGRESS | None          |
+----------------+--------------------+---------------+
```

Once the cluster setup is complete, status and health_status will change.
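Instead of re-running the list command by hand, the wait can be scripted. The sketch below is a hypothetical helper (wait_for_cluster is our own name, not part of Magnum). It assumes a configured openstack client and uses `openstack coe cluster show` with `-c status -f value` so that only the status string is printed; the poll interval and limit are arbitrary defaults.

```shell
# Hypothetical helper: poll a Magnum cluster until creation finishes.
# Return codes: 0 = CREATE_COMPLETE, 1 = any *_FAILED status,
# 2 = the openstack call itself failed, 3 = gave up after max_polls.
wait_for_cluster() {
  name="$1"
  interval="${2:-30}"    # seconds between polls (assumed default)
  max_polls="${3:-60}"   # polls before giving up (assumed default)
  i=0
  while [ "$i" -lt "$max_polls" ]; do
    # Print only the value of the "status" field
    cluster_status=$(openstack coe cluster show "$name" -c status -f value) || return 2
    echo "$(date +%H:%M:%S) $name: $cluster_status"
    case "$cluster_status" in
      CREATE_COMPLETE) return 0 ;;
      *_FAILED) return 1 ;;
    esac
    i=$((i + 1))
    sleep "$interval"
  done
  return 3
}
```

For example, `wait_for_cluster k8s-cluster-01 30 60 && openstack coe cluster list --column name --column status --column health_status` prints the table only once the cluster has reached CREATE_COMPLETE.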
```
$ openstack coe cluster list --column name --column status --column health_status
+----------------+-----------------+---------------+
| name           | status          | health_status |
+----------------+-----------------+---------------+
| k8s-cluster-01 | CREATE_COMPLETE | HEALTHY       |
+----------------+-----------------+---------------+
```

You should see Cinder volumes created and attached to the instances:

```
$ openstack volume list --column Name --column Status --column Size
+------------------------------------------+--------+------+
| Name                                     | Status | Size |
+------------------------------------------+--------+------+
| k8s-cluster-01-ohx564ufdsma-kube_minions | in-use |   10 |
| k8s-cluster-01-ohx564ufdsma-kube_minions | in-use |   10 |
| k8s-cluster-01-ohx564ufdsma-kube_masters | in-use |   10 |
+------------------------------------------+--------+------+
```

To list the created virtual machines, use the command below, which limits the number of columns displayed:

```
$ openstack server list --column Name --column Image --column Status
+--------------------------------------+--------+------------------+
| Name                                 | Status | Image            |
+--------------------------------------+--------+------------------+
| k8s-cluster-01-ohx564ufdsma-node-0   | ACTIVE | Fedora-CoreOS-34 |
| k8s-cluster-01-ohx564ufdsma-node-1   | ACTIVE | Fedora-CoreOS-34 |
| k8s-cluster-01-ohx564ufdsma-master-0 | ACTIVE | Fedora-CoreOS-34 |
| mycirros                             | ACTIVE | Cirros-0.5.2     |
+--------------------------------------+--------+------------------+
```

List of Servers as seen in Web UI:

List node groups:

```
$ openstack coe nodegroup list k8s-cluster-01
```

To check the list of all cluster stacks:

```
$ openstack stack list
```

For a completed setup, “Stack Status” should show CREATE_COMPLETE:

```
$ openstack stack list --column 'Stack Name' --column 'Stack Status'
+-----------------------------+-----------------+
| Stack Name                  | Stack Status    |
+-----------------------------+-----------------+
| k8s-cluster-01-ohx564ufdsma | CREATE_COMPLETE |
+-----------------------------+-----------------+
```

To check an individual cluster’s stack:

```
$ openstack stack show <stack-name>
```

To monitor the cluster status in detail (e.g., creating, updating):

```
K8S_CLUSTER_HEAT_NAME=$(openstack stack list | awk "/\sk8s-cluster-/{print \$4}")
echo $K8S_CLUSTER_HEAT_NAME
openstack stack resource list $K8S_CLUSTER_HEAT_NAME
```

Accessing Kubernetes Cluster nodes

The login user for Fedora CoreOS is core.
List the OpenStack instances and show network information:

```
$ openstack server list --column Name --column Networks
+--------------------------------------+---------------------------------------+
| Name                                 | Networks                              |
+--------------------------------------+---------------------------------------+
| k8s-cluster-01-ohx564ufdsma-node-0   | private=172.10.10.63, 150.70.145.201  |
| k8s-cluster-01-ohx564ufdsma-node-1   | private=172.10.10.115, 150.70.145.202 |
| k8s-cluster-01-ohx564ufdsma-master-0 | private=172.10.10.151, 150.70.145.200 |
+--------------------------------------+---------------------------------------+
```

Log in to a VM as the core user using its public IP. Use the private key of the SSH key pair used to deploy the Kubernetes cluster:

```
$ ssh core@masterip
$ ssh core@workerip
```

Example of SSH access to the master node:

```
$ ssh core@150.70.145.200
Enter passphrase for key '/Users/jmutai/.ssh/id_rsa':
Fedora CoreOS 34.20210427.3.0
Tracker: https://github.com/coreos/fedora-coreos-tracker
Discuss: https://discussion.fedoraproject.org/c/server/coreos/

[core@k8s-cluster-01-ohx564ufdsma-master-0 ~]$
```

To get OS release information, run:

```
$ cat /etc/os-release
NAME=Fedora
VERSION="34.20210427.3.0 (CoreOS)"
ID=fedora
VERSION_ID=34
VERSION_CODENAME=""
PLATFORM_ID="platform:f34"
PRETTY_NAME="Fedora CoreOS 34.20210427.3.0"
ANSI_COLOR="0;38;2;60;110;180"
LOGO=fedora-logo-icon
CPE_NAME="cpe:/o:fedoraproject:fedora:34"
HOME_URL="https://getfedora.org/coreos/"
DOCUMENTATION_URL="https://docs.fedoraproject.org/en-US/fedora-coreos/"
SUPPORT_URL="https://github.com/coreos/fedora-coreos-tracker/"
BUG_REPORT_URL="https://github.com/coreos/fedora-coreos-tracker/"
REDHAT_BUGZILLA_PRODUCT="Fedora"
REDHAT_BUGZILLA_PRODUCT_VERSION=34
REDHAT_SUPPORT_PRODUCT="Fedora"
REDHAT_SUPPORT_PRODUCT_VERSION=34
PRIVACY_POLICY_URL="https://fedoraproject.org/wiki/Legal:PrivacyPolicy"
VARIANT="CoreOS"
VARIANT_ID=coreos
OSTREE_VERSION='34.20210427.3.0'
DEFAULT_HOSTNAME=localhost
```

List the running containers on the node:

```
$ sudo docker ps
```

The system containers for etcd, Kubernetes, and the heat-agent are installed with podman:

```
$ sudo podman ps
```

Here is my screenshot of Podman containers:

Docker containers on Worker node:

Step 5: Retrieve the config of a cluster using CLI

If the cluster creation was successful, you should be able to pull the Kubernetes configuration file. A Kubernetes configuration file contains the information needed to access your cluster, i.e., the URL, access credentials, and certificates. Magnum allows you to retrieve the config for a specific cluster.

Create a directory to store your config:

```
mkdir kubeconfigs
```

List clusters:

```
$ openstack coe cluster list --column name --column status --column health_status
+----------------+-----------------+---------------+
| name           | status          | health_status |
+----------------+-----------------+---------------+
| k8s-cluster-01 | CREATE_COMPLETE | HEALTHY       |
+----------------+-----------------+---------------+
```

Then pull the cluster configuration:

```
export cluster=k8s-cluster-01
openstack coe cluster config $cluster --dir ./kubeconfigs
```

A file called config is created:

```
$ ls ./kubeconfigs
config
```

Install kubectl tool

Follow the official kubectl installation guide to install the tool on your operating system.
Installation on Linux

```
curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl"
sudo install -o root -g root -m 0755 kubectl /usr/local/bin/kubectl
```

Installation on macOS

```
curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/darwin/amd64/kubectl"
chmod +x ./kubectl
sudo mv ./kubectl /usr/local/bin/kubectl
sudo chown root: /usr/local/bin/kubectl
```

After installation, check that the installed version is up to date:

```
$ kubectl version --client
Client Version: version.Info{Major:"1", Minor:"21", GitVersion:"v1.21.1", GitCommit:"5e58841cce77d4bc13713ad2b91fa0d961e69192", GitTreeState:"clean", BuildDate:"2021-05-12T14:18:45Z", GoVersion:"go1.16.4", Compiler:"gc", Platform:"linux/amd64"}
```

Export the KUBECONFIG variable, which contains the path to the downloaded Kubernetes config:

```
export KUBECONFIG=<path-to-config>
```

In my setup this will be:

```
$ export KUBECONFIG=./kubeconfigs/config
```

Verify that the config works:

```
$ kubectl cluster-info
Kubernetes control plane is running at https://150.70.145.200:6443
CoreDNS is running at https://150.70.145.200:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

$ kubectl get nodes
NAME                                   STATUS   ROLES    AGE     VERSION
k8s-cluster-01-ohx564ufdsma-master-0   Ready    master   5h23m   v1.18.2
k8s-cluster-01-ohx564ufdsma-node-0     Ready    <none>   5h21m   v1.18.2
k8s-cluster-01-ohx564ufdsma-node-1     Ready    <none>   5h21m   v1.18.2
```

Confirm that you can run containers in the cluster:

```
$ kubectl create ns sandbox
namespace/sandbox created
$ kubectl run --image=nginx mynginx -n sandbox
pod/mynginx created
$ kubectl get pods -n sandbox
NAME      READY   STATUS    RESTARTS   AGE
mynginx   1/1     Running   0          8s
$ kubectl delete pod mynginx -n sandbox
pod "mynginx" deleted
```

Reference: OpenStack Victoria Magnum user guides, Magnum Quickstart guide
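The sandbox check in this guide can be wrapped in a small repeatable script. This is a hypothetical sketch (smoke_test_cluster is our own name); it assumes kubectl is installed and KUBECONFIG points at the Magnum-generated config, and it uses `kubectl wait` so the script blocks until the pod reports Ready instead of polling `kubectl get pods`.

```shell
# Hypothetical helper: start a throwaway nginx pod, wait for it to become Ready,
# then delete the scratch namespace (which also deletes the pod).
# Returns 0 if the pod became Ready within the timeout, 1 otherwise.
smoke_test_cluster() {
  ns="${1:-sandbox}"
  wait_timeout="${2:-120s}"
  kubectl create namespace "$ns" || return 1
  kubectl run mynginx --image=nginx -n "$ns" || { kubectl delete namespace "$ns"; return 1; }
  # kubectl wait exits non-zero if the condition is not met before the timeout
  if kubectl wait --for=condition=Ready "pod/mynginx" -n "$ns" --timeout="$wait_timeout"; then
    result=0
  else
    result=1
  fi
  kubectl delete namespace "$ns"
  return "$result"
}
```

Running `smoke_test_cluster sandbox 120s` right after exporting KUBECONFIG gives a single pass/fail exit code, which is convenient in CI. Deleting the namespace rather than the pod keeps cleanup to one call.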

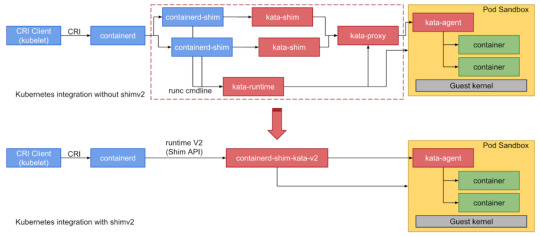

Running Percona Kubernetes Operator for Percona XtraDB Cluster with Kata Containers

Kata containers are containers that use hardware virtualization technologies for workload isolation with almost no performance penalty. Top use cases are untrusted workloads and tenant isolation (for example, in a shared Kubernetes cluster). This blog post describes how to run Percona Kubernetes Operator for Percona XtraDB Cluster (PXC Operator) using Kata containers.

Prepare Your Kubernetes Cluster

Setting up Kata containers and Kubernetes is well documented in the official GitHub repo (cri-o, containerd, Kubernetes DaemonSet). We will just cover the most important steps and pitfalls.

Virtualization Support

First of all, remember that Kata containers require hardware virtualization support from the CPU on the nodes. To check whether your Linux system supports it, run on the node:

```
$ egrep '(vmx|svm)' /proc/cpuinfo
```

VMX (Virtual Machine Extension) and SVM (Secure Virtual Machine) are Intel and AMD features that add instructions allowing a guest OS to run with full privileges while keeping the host OS protected. For example, on AWS only i3.metal and r5.metal instances provide VMX capability.

Containerd

Kata containers are OCI (Open Container Initiative) compliant, which means that they work well with CRI (Container Runtime Interface) and are therefore well supported by Kubernetes. To use Kata containers, make sure your Kubernetes nodes use the CRI-O or containerd runtime. The image below describes how Kubernetes works with Kata.

Hint: GKE and kops allow you to start your cluster with containerd out of the box and skip the manual steps.

Setting Up Nodes

To run Kata containers, Kubernetes nodes need to have kata-runtime installed and the runtime configured properly. The easiest way is to use a DaemonSet, which installs the required packages on every node and reconfigures containerd.
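The egrep check prints every matching cpuinfo line, which is noisy on hosts with many cores. A hypothetical helper (has_hw_virt, our own name) that only counts matching lines and returns a usable exit status could look like the sketch below; the optional path argument is an assumption added so the check can also run against a saved copy of cpuinfo.

```shell
# Hypothetical helper: succeed if any CPU entry advertises Intel VT-x (vmx)
# or AMD-V (svm). Returns 2 if the cpuinfo file cannot be read.
has_hw_virt() {
  cpuinfo="${1:-/proc/cpuinfo}"
  [ -r "$cpuinfo" ] || return 2
  # -w: whole-word matches only; -c: print the number of matching lines.
  # grep -c still prints 0 when nothing matches, so "|| true" only hides its exit status.
  count=$(grep -E -c -w 'vmx|svm' "$cpuinfo" || true)
  echo "CPU entries with vmx/svm: $count"
  [ "$count" -gt 0 ]
}
```

Something like `has_hw_virt || echo "this node cannot run Kata containers"` can then gate node preparation before the DaemonSet is applied.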
As a first step, apply the following YAML files to create the DaemonSet:

```
$ kubectl apply -f https://raw.githubusercontent.com/kata-containers/packaging/master/kata-deploy/kata-rbac/base/kata-rbac.yaml
$ kubectl apply -f https://raw.githubusercontent.com/kata-containers/packaging/master/kata-deploy/kata-deploy/base/kata-deploy.yaml
```

The DaemonSet reconfigures containerd to support multiple runtimes by changing /etc/containerd/config.toml. Note that some tools (e.g., kops) keep the containerd configuration in a separate file, config-kops.toml. In that case, copy the configuration created by the DaemonSet into the corresponding file and restart containerd.

Create RuntimeClasses for Kata. RuntimeClass is a feature that lets you pick the runtime for a container when it is created. It has been available as Beta since Kubernetes 1.14.

```
$ kubectl apply -f https://raw.githubusercontent.com/kata-containers/packaging/master/kata-deploy/k8s-1.14/kata-qemu-runtimeClass.yaml
```

Everything is set. Deploy a test nginx pod and set the runtime:

```
$ cat nginx-kata.yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-kata
spec:
  runtimeClassName: kata-qemu
  containers:
  - name: nginx
    image: nginx

$ kubectl apply -f nginx-kata.yaml
$ kubectl describe pod nginx-kata | grep "Container ID"
    Container ID:  containerd://3ba8d62be5ee8cd57a35081359a0c08059cf08d8a53bedef3384d18699d13111
```

On the node, verify with the ctr tool that Kata is used for this container:

```
# ctr --namespace k8s.io containers list | grep 3ba8d62be5ee8cd57a35081359a0c08059cf08d8a53bedef3384d18699d13111
3ba8d62be5ee8cd57a35081359a0c08059cf08d8a53bedef3384d18699d13111    sha256:f35646e83998b844c3f067e5a2cff84cdf0967627031aeda3042d78996b68d35    io.containerd.kata-qemu.v2
```

The runtime shows kata-qemu.v2, as requested.

The latest stable PXC Operator version at the time of writing (1.6) does not support runtimeClassName. It is still possible to run Kata containers by specifying the io.kubernetes.cri.untrusted-workload annotation.
To make sure containerd supports this annotation, add the following to the configuration TOML file on the node:

```
# cat <<EOF >> /etc/containerd/config.toml
[plugins.cri.containerd.untrusted_workload_runtime]
runtime_type = "io.containerd.kata-qemu.v2"
EOF
# systemctl restart containerd
```

Install the Operator

We will install the operator with the regular runtime but put the PXC cluster into Kata containers.

Create the namespace and switch the context:

```
$ kubectl create namespace pxc-operator
$ kubectl config set-context $(kubectl config current-context) --namespace=pxc-operator
```

Get the operator from GitHub:

```
$ git clone -b v1.6.0 https://github.com/percona/percona-xtradb-cluster-operator
```

Deploy the operator into your Kubernetes cluster:

```
$ cd percona-xtradb-cluster-operator
$ kubectl apply -f deploy/bundle.yaml
```

Now let’s deploy the cluster. Before that, we need to explicitly add an annotation to the PXC pods that marks them as untrusted, which forces Kubernetes to use the Kata containers runtime. Edit deploy/cr.yaml:

```
pxc:
  size: 3
  image: percona/percona-xtradb-cluster:8.0.20-11.1
  …
  annotations:
    io.kubernetes.cri.untrusted-workload: "true"
```

Now, let’s deploy the PXC cluster:

```
$ kubectl apply -f deploy/cr.yaml
```

The cluster is up and running (using 1 node for the sake of the experiment):

```
$ kubectl get pods
NAME                                               READY   STATUS    RESTARTS   AGE
pxc-kata-haproxy-0                                 2/2     Running   0          5m32s
pxc-kata-pxc-0                                     1/1     Running   0          8m16s
percona-xtradb-cluster-operator-749b86b678-zcnsp   1/1     Running   0          44m
```

In the ctr output you should see the Percona XtraDB Cluster container running with the Kata runtime:

```
# ctr --namespace k8s.io containers list | grep percona-xtradb-cluster | grep kata
448a985c82ae45effd678515f6cf8e11a6dfca159c9abf05a906c7090d297cba    docker.io/percona/percona-xtradb-cluster:8.0.20-11.2    io.containerd.kata-qemu.v2
```

We are working on adding support for the runtimeClassName option to our operators. Support for this feature will let users freely choose any container runtime.
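Both ctr checks in this post rely on grep over the whole line. A slightly stricter sketch, assuming the default three-column output of `ctr containers list` (CONTAINER, IMAGE, RUNTIME), matches on the container ID column only and also accepts the `containerd://` form printed by `kubectl describe`. The function names are hypothetical, and like the original commands it must run on the node with access to containerd.

```shell
# Hypothetical helper: print the runtime containerd recorded for a container ID
# (full ID or unique prefix) in the k8s.io namespace used by the CRI plugin.
container_runtime() {
  id="${1#containerd://}"   # strip the prefix shown by kubectl describe
  [ -n "$id" ] || return 2
  # NR > 1 skips the header row; index($1, id) == 1 matches full IDs or prefixes
  ctr --namespace k8s.io containers list |
    awk -v id="$id" 'NR > 1 && index($1, id) == 1 { print $3 }'
}

# Hypothetical helper: succeed only if that runtime is a Kata runtime.
is_kata_container() {
  case "$(container_runtime "$1")" in
    *kata*) return 0 ;;
    *) return 1 ;;
  esac
}
```

For example, `is_kata_container containerd://3ba8d62be5ee8cd57a35081359a0c08059cf08d8a53bedef3384d18699d13111 && echo "running under Kata"` checks the nginx-kata pod from earlier without copying the ID by hand into grep.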
Conclusions

Running databases in containers is an ongoing trend, and keeping data safe is always the top priority for a business. Kata containers provide security isolation through mature and extensively tested QEMU virtualization, with little to no change to the existing environment.

Deploy Percona XtraDB Cluster with ease in your Kubernetes cluster with our Operator and Kata containers for better isolation without performance penalties.

https://www.percona.com/blog/2020/11/04/running-percona-kubernetes-operator-for-percona-xtradb-cluster-with-kata-containers/

Kata Containers in Screwdriver

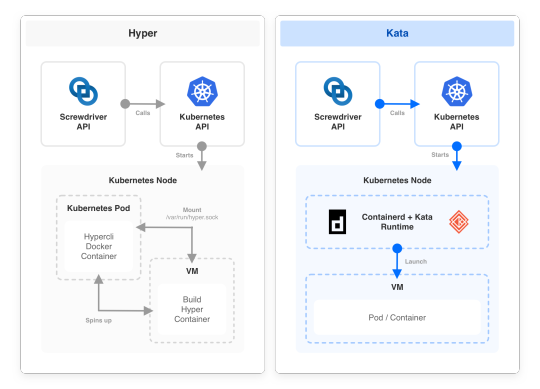

Screwdriver is a scalable CI/CD solution that uses Kubernetes to manage user builds. Screwdriver build workers interface with Kubernetes using either “executor-k8s” or “executor-k8s-vm”, depending on the required build isolation.

executor-k8s runs builds directly as Kubernetes pods, while executor-k8s-vm uses HyperContainers along with Kubernetes for stricter build isolation with containerized virtual machines (VMs). This setup was ideal for running builds in an isolated, ephemeral, and lightweight environment. However, HyperContainer is now deprecated and unsupported, is based on an older Docker runtime, and requires a non-native Kubernetes setup for build execution. Therefore, it was time to find a new solution.

Why Kata Containers?

Kata Containers is an open source project and community that builds a standard implementation of lightweight virtual machines (VMs) that perform like containers but provide the workload isolation and security advantages of VMs. It combines the benefits of a hypervisor, such as enhanced security, with the container orchestration capabilities provided by Kubernetes. It comes from the same team behind HyperD, which successfully merged the best parts of Intel Clear Containers with Hyper.sh runV. As a Kubernetes runtime, Kata lets us deprecate executor-k8s-vm and use executor-k8s exclusively for all Kubernetes-based builds.

Screwdriver journey to Kata

As we faced a growing number of instabilities with HyperD, such as network and devicemapper issues and IP cleanup workarounds, we started our initial evaluation of Kata in early 2019 (https://github.com/screwdriver-cd/screwdriver/issues/818#issuecomment-482239236) and identified two major blockers to moving ahead with Kata:

1. Security concerns with privileged mode (required to run the Docker daemon in Kata)

2. Disk performance.

We started reevaluating Kata in early 2020 after containerd/cri provided a fix to “add flag to overload default privileged host device behaviour” (https://github.com/containerd/cri/pull/1225). We still faced disk performance issues, so we switched from overlayfs to devicemapper, which yielded a significant improvement. With our two major blockers resolved and initial tests with Kata looking promising, we moved ahead with Kata.

Screwdriver Build Architecture

Replacing Hyper with Kata led to a simpler build architecture. We were able to remove the custom build setup scripts to launch Hyper VM and rely on native Kubernetes setup.

Setup

To use Kata containers for running user builds in a Screwdriver Kubernetes build cluster, a cluster admin needs to configure Kubernetes to use the containerd container runtime with the CRI plugin.

Components:

Screwdriver build Kubernetes cluster nodes (minimum Kubernetes version 1.14) must have the following components set up to use Kata containers for user builds.

Containerd:

containerd is a container runtime that manages the complete lifecycle of a container.

Reference: https://containerd.io/docs/getting-started/

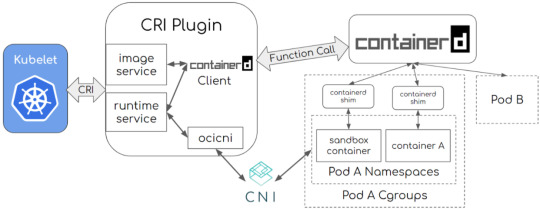

CRI-Containerd plugin:

cri-containerd is a containerd plugin that implements the Kubernetes Container Runtime Interface (CRI). The CRI plugin interacts with containerd to manage containers.

Reference: https://github.com/containerd/cri

Image credit: containerd / cri. Photo licensed under CC-BY-4.0.

Architecture:

Image credit: containerd / cri. Photo licensed under CC-BY-4.0.

Installation:

Reference: https://github.com/containerd/cri/blob/master/docs/installation.md, https://github.com/containerd/containerd/blob/master/docs/ops.md

Crictl:

A command-line tool for CRI-compatible runtimes, used to debug, inspect, and manage pods, containers, and container images.

Reference: https://github.com/containerd/cri/blob/master/docs/crictl.md
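crictl needs to know which CRI endpoint to talk to. A minimal sketch, assuming containerd's usual socket at /run/containerd/containerd.sock, writes that endpoint to a crictl configuration file; crictl reads /etc/crictl.yaml by default, while the helper name and optional path argument are our own.

```shell
# Hypothetical helper: point crictl at containerd's CRI socket.
# The socket path is containerd's common default and may differ on your nodes.
write_crictl_config() {
  conf="${1:-/etc/crictl.yaml}"
  cat > "$conf" <<'EOF'
runtime-endpoint: unix:///run/containerd/containerd.sock
image-endpoint: unix:///run/containerd/containerd.sock
timeout: 10
EOF
}
# Afterwards, for example:
#   crictl pods     # list pod sandboxes
#   crictl ps       # list running containers
#   crictl images   # list images
```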

Kata:

Builds lightweight virtual machines that plug seamlessly into the container ecosystem.

Architecture:

Image credit: kata-containers Project licensed under Apache License Version 2.0

Installation:

https://github.com/kata-containers/documentation/blob/master/Developer-Guide.md#run-kata-containers-with-kubernetes

https://github.com/kata-containers/documentation/blob/master/how-to/containerd-kata.md

https://github.com/kata-containers/documentation/blob/master/how-to/how-to-use-k8s-with-cri-containerd-and-kata.md

https://github.com/kata-containers/documentation/blob/master/how-to/containerd-kata.md#kubernetes-runtimeclass

https://github.com/kata-containers/documentation/blob/master/how-to/containerd-kata.md#configuration

Routing builds to Kata in Screwdriver build cluster

Screwdriver uses Runtime Class to route builds to Kata nodes in Screwdriver build clusters. The Screwdriver plugin executor-k8s config handles this based on:

1. Pod configuration:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: kata-pod
  namespace: sd-build-namespace
  labels:
    sdbuild: "sd-kata-build"
    app: screwdriver
    tier: builds
spec:
  runtimeClassName: kata
  containers:
  - name: "sd-build-container"
    image: <<image>>
    imagePullPolicy: IfNotPresent
```

2. Update the plugin to use k8s in your buildcluster-queue-worker configuration

https://github.com/screwdriver-cd/buildcluster-queue-worker/blob/master/config/custom-environment-variables.yaml#L4-L83
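The pod spec above only names a RuntimeClass called kata; that object must also exist in the cluster. One way to make such a class route pods to dedicated Kata nodes is the RuntimeClass scheduling.nodeSelector field, which the RuntimeClass admission controller merges into every pod that uses the class. This is a hypothetical sketch, not necessarily how executor-k8s is set up: the handler name kata must match the runtime name configured in containerd on those nodes, the sd-runtime=kata node label is made up for illustration, and node.k8s.io/v1 requires Kubernetes 1.20 or later.

```shell
# Hypothetical sketch: print a RuntimeClass "kata" whose pods are scheduled
# only onto nodes labelled sd-runtime=kata (an illustrative label).
kata_runtimeclass_manifest() {
  cat <<'EOF'
apiVersion: node.k8s.io/v1
kind: RuntimeClass
metadata:
  name: kata
handler: kata
scheduling:
  nodeSelector:
    sd-runtime: kata
EOF
}
# Usage: label the Kata nodes, then apply the manifest.
#   kubectl label node <kata-node> sd-runtime=kata
#   kata_runtimeclass_manifest | kubectl apply -f -
```

Keeping the node selection in the RuntimeClass means the build pod spec only needs runtimeClassName: kata and does not have to repeat node selectors itself.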

Performance

The tables below compare build setup time and overall execution time for Kata and Hyper, with the image either pre-cached or not cached.

Known problems

While the new Kata implementation offers a lot of advantages, there are some known problems we are aware of with fixes or workarounds:

Containers running RHEL 6-based images don't start and exit immediately:

Pre-2.15 glibc: we enabled kernel_params = "vsyscall=emulate" (see Kata issue https://github.com/kata-containers/runtime/issues/1916) to fix problems running binaries that use glibc older than 2.15.

yum install hangs forever: we enabled kernel_params = "init=/usr/bin/kata-agent" (see Kata issue https://github.com/kata-containers/runtime/issues/1916), which also gives a better boot time and a small footprint.
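Both workarounds above are applied through kernel_params in the Kata runtime configuration file. A hypothetical helper (set_kata_kernel_params is our own name) that rewrites that line with sed might look like the sketch below. The default path is an assumption: packaged installs typically ship /usr/share/defaults/kata-containers/configuration.toml, and an edited copy at /etc/kata-containers/configuration.toml takes precedence over it.

```shell
# Hypothetical helper: set kernel_params in a Kata configuration.toml.
# Rewrites every uncommented "kernel_params = ..." line (normally just the one in
# the hypervisor section) and keeps a .bak copy. The params string must not contain
# "|" or "&", which are special in this sed expression.
set_kata_kernel_params() {
  params="$1"
  conf="${2:-/etc/kata-containers/configuration.toml}"
  [ -w "$conf" ] || return 2
  sed -i.bak "s|^kernel_params = .*|kernel_params = \"$params\"|" "$conf"
}
```

kernel_params holds a single space-separated string, so if both workarounds are wanted they would be passed together, e.g. `set_kata_kernel_params "vsyscall=emulate init=/usr/bin/kata-agent"`.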

32-bit executables cannot be loaded (see Kata issue https://github.com/kata-containers/runtime/issues/886). As a workaround, we maintain a container exclusion list that routes those builds to the current HyperD setup, and we plan to end-of-life these containers by Q4 of this year.

containerd IO snapshotter (overlayfs vs. devicemapper as the storage driver): devicemapper gives better performance. Writing 1GB took 19.325605 seconds with overlayfs, but only 5.860671 seconds with devicemapper.
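As a quick check on those numbers, the ratio of the two timings gives the speedup of devicemapper over overlayfs for that 1GB write. The snippet uses awk for floating-point division; speedup is a hypothetical helper name.

```shell
# Ratio of two timings (slow / fast), rounded to two decimals.
speedup() {
  awk -v slow="$1" -v fast="$2" 'BEGIN { printf "%.2f\n", slow / fast }'
}
# speedup 19.325605 5.860671   # prints 3.30
```

So devicemapper completed the same write roughly 3.3 times faster in this measurement.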

Compatibility List

In order to use this feature, you will need these minimum versions:

API - v0.5.902

UI - v1.0.515

Build Cluster Queue Worker - v1.18.0

Launcher - v6.0.71

Contributors

Thanks to the following contributors for making this feature possible:

Lakshminarasimhan Parthasarathy

Suresh Visvanathan

Nandhakumar Venkatachalam

Pritam Paul

Chester Yuan

Min Zhang

Questions & Suggestions

We’d love to hear from you. If you have any questions, please feel free to reach out here. You can also visit us on Github and Slack.

Getting Started with K8s from Scratch: Understanding RuntimeClass and Using Multiple Container Runtimes

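RuntimeClass is a built-in cluster resource in Kubernetes, mainly used to solve the problem of mixing multiple container runtimes. This article mainly introduces how to use RuntimeClass.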
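As a minimal illustration of mixing runtimes, the sketch below prints two RuntimeClass objects and a pod that selects one of them. The class names and the handlers runc and kata are assumptions: a handler must match a runtime name configured in the node's CRI runtime (for containerd, a runtime entry in its CRI plugin config), and node.k8s.io/v1 requires Kubernetes 1.20 or later.

```shell
# Hypothetical example manifests: two RuntimeClasses backed by different
# handlers, plus a pod that opts into the sandboxed one.
mixed_runtime_manifests() {
  cat <<'EOF'
apiVersion: node.k8s.io/v1
kind: RuntimeClass
metadata:
  name: runc
handler: runc
---
apiVersion: node.k8s.io/v1
kind: RuntimeClass
metadata:
  name: kata
handler: kata
---
apiVersion: v1
kind: Pod
metadata:
  name: sandboxed-nginx
spec:
  runtimeClassName: kata   # pods without this field use the node's default runtime
  containers:
  - name: nginx
    image: nginx
EOF
}
# mixed_runtime_manifests | kubectl apply -f -
```

Because the choice lives in a single pod field, trusted and untrusted workloads can share one cluster while running under different runtimes.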

1. Where the need for RuntimeClass comes from

The evolution of container runtimes

Let us first look at how container runtimes have evolved. The whole process can be roughly divided into three stages:

Stage 1: 2014

… from Getting Started with K8s from Scratch: Understanding RuntimeClass and Using Multiple Container Runtimes, via KKNEWS

What is a heavyweight component? For more questions about Java, check this link: https://bit.ly/3kw8py6

#strictfp#lightweightcomponent#heavyweightcomponent#reflection#Classclass#Systemclass#wrapperclass#boxing#unboxing#objectcloning#serialization#externalizable#IOstream#finalize#runtimeclass#anonymousinnerclass#localinnerclass#memberinnerclass#interface#garbagecollector#polymorphism#java#constructor#thiskeyword#computersciencemajor#javatpoint

What is a lightweight component? For more questions about Java, check this link: https://bit.ly/3kw8py6

#strictfp#lightweightcomponent#heavyweightcomponent#reflection#Classclass#Systemclass#wrapperclass#boxing#unboxing#objectcloning#serialization#externalizable#IOstream#finalize#runtimeclass#anonymousinnerclass#localinnerclass#memberinnerclass#interface#garbagecollector#polymorphism#java#constructor#thiskeyword#computersciencemajor#javatpoint

What is the purpose of the strictfp keyword? For more questions about Java, check this link: https://bit.ly/3kw8py6

#strictfp#lightweightcomponent#heavyweightcomponent#reflection#Classclass#Systemclass#wrapperclass#boxing#unboxing#objectcloning#serialization#externalizable#IOstream#finalize#runtimeclass#anonymousinnerclass#localinnerclass#memberinnerclass#interface#garbagecollector#polymorphism#java#constructor#thiskeyword#computersciencemajor#javatpoint

What is the purpose of the System class? For more questions about Java, check this link: https://bit.ly/3kw8py6

#reflection#Classclass#Systemclass#wrapperclass#boxing#unboxing#objectcloning#serialization#externalizable#IOstream#finalize#runtimeclass#anonymousinnerclass#localinnerclass#memberinnerclass#interface#garbagecollector#polymorphism#java#constructor#thiskeyword#computersciencemajor#javatpoint

What are the ways to instantiate the Class class? For more questions about Java, check this link: https://bit.ly/3kw8py6

#reflection#Classclass#Systemclass#wrapperclass#boxing#unboxing#objectcloning#serialization#externalizable#IOstream#finalize#runtimeclass#anonymousinnerclass#localinnerclass#memberinnerclass#interface#garbagecollector#polymorphism#java#constructor#thiskeyword#computersciencemajor#javatpoint

What is reflection? For more questions about Java, check this link: https://bit.ly/3kw8py6

#reflection#Classclass#Systemclass#wrapperclass#boxing#unboxing#objectcloning#serialization#externalizable#IOstream#finalize#runtimeclass#anonymousinnerclass#localinnerclass#memberinnerclass#interface#garbagecollector#polymorphism#java#constructor#thiskeyword#computersciencemajor#javatpoint

What is object cloning? For more questions about Java, check this link: https://bit.ly/3kw8py6

#wrapperclass#boxing#unboxing#objectcloning#serialization#externalizable#IOstream#finalize#runtimeclass#anonymousinnerclass#localinnerclass#memberinnerclass#interface#garbagecollector#polymorphism#java#constructor#thiskeyword#computersciencemajor#javatpoint

What are wrapper classes? For more questions about Java, check this link: https://bit.ly/3kw8py6

#wrapperclass#boxing#unboxing#objectcloning#serialization#externalizable#IOstream#finalize#runtimeclass#anonymousinnerclass#localinnerclass#memberinnerclass#interface#garbagecollector#polymorphism#java#constructor#thiskeyword#computersciencemajor#javatpoint

Improvements and Fixes

Jithin Emmanuel, Engineering Manager, Verizon Media

API

Feature: Slack notification messages can be customized for each job (PR-24); see the guide for usage

Enhancement: executor-k8s can use Kubernetes runtimeClass to target specific build nodes PR-2072

Enhancement: Performance boost when using executor-k8s to run builds, by preventing unnecessary copying of Habitat packages PR-121

Bugfix: PR comments using meta will try to edit the pre-existing comment PR-152

Bugfix: Prevent GitHub tag pushes from starting jobs that use branch filtering

Bugfix: Restarting builds works with chainPR #2029

Bugfix: Git clone on branches with & in name works as expected #2059

Bugfix: Performance enhancement when deleting pipeline jobs PR-445

Bugfix: Ensure workflow join job’s build is started in the correct event #2070

UI

Bugfix: Redirect to 404 if pipeline page does not exist PR-545

Queue Service

Bugfix: Add Redis-based locking to prevent build timeouts from being processed multiple times PR-15

Bugfix: Handle API quirks on return status code to prevent unwanted retries PR-15

See the queue-service announcement post for more context

Compatibility List

In order to have these improvements, you will need these minimum versions:

API - v0.5.896

Queue-Service - v1.0.17

UI - v1.0.505

Contributors

Thanks to the following contributors for making this feature possible:

adong

jweingarten

kumada626

parthasl

pritamstyz4ever

sakka2

supra08

tkyi

yoshwata

yuichi10

Questions and Suggestions

We’d love to hear from you. If you have any questions, please feel free to reach out here. You can also visit us on Github and Slack.
