
aaaaaaaAAAAAAAAAAA PRESENTATIONS BAD

#I gotta present ALL my work this year to my lab group tomorrow#and like in theory it’s a chill informal thing AND YET#I have fully given up on making nice slides. they get white default with the fonts changed to look fancier#genuinely looks better anyway#I’ve made all my graphs for 2/3 of my sections now it’s just the most annoying one left#and it is. 11:15pm. I’m gonna be up for at least another hour trying to figure this out#my graphs are so pretty but at what cost#look upon my works ye mighty and despair#spatial effects. I have four variables. all of the differences in those need to be shown first before I go factorial#and of the response variables only one is interesting enough to show. so that’s then one plot with 8 bars. 16 with sex. two plots then with#8. I can make that work#truly terrible how every time I start shouting abt analysis here it actually helps me get my head together#OKAY. that’s very easy actually. I just have 6 more plots to make which is smth I can do#and then it’s just cleaning up the slides to make them presentable and then I can sleep#bc I’ve already figured out the setup content about like why do we care abt this and how did I do it#okay! cool! I can do this!! hopefully no more than 2 hours#luke.txt


Biomed Grid | Update on Parkinson’s Disease

Abstract

Parkinson’s disease (PD) is a progressive nervous system disorder that affects movement and produces other symptoms that can differ from person to person; the exact cause of the underlying damage is still unknown. Parkinson’s disease cannot be cured, but medications and surgery may significantly improve its symptoms. These treatments must be prescribed by a neurologist, yet it is important to understand how the medications work, what to expect from them and what other options are available today. The goal of this review is to introduce and explain PD to patients and their caregivers: its symptoms, stages, treatments, causes and types.

Keywords: Parkinson’s disease; Tremors; Movement disorders; Postural instability; Levodopa; Parkinsonism; Idiopathic Parkinson’s; Atypical parkinsonism

Abbreviations: PD: Parkinson’s Disease; CNS: Central Nervous System; MRI: Magnetic Resonance Imaging; STN: Subthalamic Nucleus; GPi: Globus Pallidus Internus; DBS: Deep Brain Stimulation; IPG: Implanted Pulse Generator; UPDRS: Unified Parkinson’s Disease Rating Scale; L-DOPA: Levodopa; LRRK2: Leucine-Rich Repeat Kinase 2; PARK7: Parkinsonism Associated Deglycase; PRKN: Parkin Rbr E3 Ubiquitin Protein Ligase; TMS: Transcranial Magnetic Stimulation; THC: Tetrahydrocannabinol; CBD: Cannabidiol; TCE: Trichloroethylene; CSE: Chronic Solvent Encephalopathy; MSA: Multiple System Atrophy; PSP: Progressive Supranuclear Palsy; CBS: Corticobasal Syndrome; DLB: Dementia with Lewy Bodies; VP: Vascular Parkinsonism; PBA: Pseudobulbar Affect

Introduction

Parkinson’s Disease (PD) is a progressive neurodegenerative disease in which neuronal cell death causes a loss of dopamine production in the brain area known as the substantia nigra [1]. This alters the central nervous system (CNS; the brain and spinal cord) and affects the regulation of human movement and emotion. The exact cause of this damage is still unknown, and there is currently no cure for Parkinson’s disease. PD is a form of extrapyramidal disorder, a movement disorder caused by damage to the extrapyramidal tract, a network of nerves that controls movement.

PD is a typical movement disorder [2] and the second most common neurodegenerative condition after Alzheimer’s disease. It is a progressive neurological disorder in which symptoms gradually worsen [3]. PD is also highly variable: different patients have different combinations of symptoms, and those symptoms can occur at varying levels of severity.

Symptoms of PD

The main symptoms of PD are of three kinds: primary motor, secondary motor and non-motor [4]:

Primary Motor (Directly Related to Movement)

a. Tremors (shaking) in the limbs,

b. Rigidity (muscle stiffness),

c. Bradykinesia (slowness of movements),

d. Postural instability (impaired balance or difficulty standing or walking).

Secondary Motor (Consequence of Movement Disorders)

a. Hypomimia – loss of facial expression, also known as the Parkinson mask.

b. Freezing of gait or shuffling gait – the gait, or way of walking, may be affected by a temporary hesitation (freezing) or dragging of the feet (shuffling).

c. Unwanted accelerations – movements which are too quick, which may appear in movement or in speech.

d. Speech difficulty or changes in speech – including slurred speech or softness of voice.

e. Stooped posture – the body leans forward, and the head may be slightly turned down.

f. Dystonia – prolonged muscle contractions that can cause twisting of body parts or repetitive movements.

g. Impaired fine motor dexterity – difficulty with precise hand and finger movement, such as in writing, sewing, or fastening buttons.

h. Poverty of movement – lack of natural, subtle movements like the decreased arm swing during walking

i. Akathisia – restless movement, which may appear as being jumpy or fidgety.

j. Difficulty swallowing – challenges swallowing can also cause drooling or excess saliva.

k. Cramping – muscles may stay in a contracted position and cause pain

l. Sexual dysfunction – decreased sex drive, inability to orgasm, erectile dysfunction in men, decreased lubrication in women, or pain with intercourse in women.

Non-Motor (Not Related to Movement Disorders)

a. Fatigue – excessive tiredness that isn’t relieved with sleep

b. Digestive issues – difficulty swallowing, nausea, bloating, and/or constipation

c. Sleep problems – including difficulty falling asleep, staying asleep, vivid dreams, physically acting out dreams, sleep apnea and sleep attacks (patients may be suddenly overcome with drowsiness and fall asleep).

d. Orthostatic hypotension – low blood pressure that occurs when rising to a standing position.

e. Increased sweating.

f. Increased drooling.

g. Pain – which may accompany muscle rigidity.

h. Hyposmia – reduced sense of smell.

i. Mood changes – including apathy, depression, anxiety and pseudobulbar affect (PBA), that is, frequent, involuntary and uncontrollable outbursts of crying or laughing.

j. Cognitive changes – including memory difficulties, slowed thinking, confusion, impaired visual-spatial skills (such as getting lost in familiar locations), and dementia.

k. Psychotic symptoms – including hallucinations, paranoia, and agitation.

l. Incontinence – urinary problems.

m. Orthostatic hypotension (OH) - change of arterial pressure with postural changes

n. Melanoma - an invasive form of skin cancer that has been found to develop more often in people with Parkinson’s.

PD stages

Parkinson’s disease follows typical patterns of progression that are defined in five stages. Not everyone will experience all the symptoms of Parkinson’s, and those who do won’t necessarily experience them in the same order or at the same intensity. The typical five stages are summarized in Table 1.

Table 1: The typical five stages of Parkinson’s.

PD Treatments

A variety of treatments can help manage the motor symptoms and improve quality of life, but there is no known treatment that stops or slows disease progression, which differs from patient to patient. The treatments currently available must be prescribed by a neurologist and fall into three groups: medications, surgical treatments and complementary/alternative therapies.

Medications

Levodopa is the most effective medication for PD; it is converted to dopamine in the brain. When levodopa treatment is first started, it improves symptoms throughout the day and night. Over time, however, patients develop OFF time: periods during the day when PD symptoms return or worsen. OFF time typically affects 40% of patients within 5 years and 90% within 10 years [1]. Symptoms during OFF time may be primary motor, secondary motor or non-motor. OFF time is the sum of four situations in which levodopa is not working:

a. Early morning Off – patients typically have poor motor function in the morning when they wake up, before the first dose of levodopa [6],

b. Wearing Off - symptoms of Parkinson’s start to return or worsen before the next dose of levodopa is due [7],

c. Delayed On – delay in the onset of benefit of a levodopa dose [8],

d. Dose Failure - when there is no benefit from a dose of levodopa [9].

Current medications for PD [10].

Levodopa - The most potent medication for Parkinson’s disease (PD) is levodopa. Its development in the late 1960s represents one of the most important breakthroughs in the history of medicine. Levodopa in pill form is absorbed into the blood from the small intestine and travels through the bloodstream to the brain, where it is converted into dopamine, which the body needs for movement. Taken on its own, levodopa causes nausea and vomiting.

a. Carbidopa/levodopa remains the most effective drug to treat PD. The addition of carbidopa prevents levodopa from being converted into dopamine prematurely in the bloodstream, allowing more of it to get to the brain. Therefore, a smaller dose of levodopa is needed to treat symptoms [11].

b. Carbidopa/levodopa (Sinemet®) - levodopa is combined with carbidopa to prevent the side effects of nausea and vomiting.

c. Carbidopa/levodopa (Rytary®) is a capsule that combines long- and short-acting components.

d. Carbidopa/levodopa (Parcopa®) is a formulation that dissolves in the mouth without water.

e. Carbidopa/levodopa (Stalevo®) is a combined formulation that includes the COMT inhibitor entacapone.

f. Dopamine Agonists [12]. They stimulate the parts of the human brain influenced by dopamine; in effect, the brain is tricked into thinking it is receiving the dopamine it needs. Dopamine agonists can be taken alone or in combination with medications containing levodopa. The most commonly prescribed are the oral pills pramipexole (Mirapex), ropinirole (Requip) and bromocriptine (Parlodel®), and the rotigotine transdermal system (Neupro®). A special case is apomorphine (Apokyn), a powerful and fast-acting injectable medication that relieves PD symptoms within minutes but provides only 30 to 60 minutes of benefit. Its main advantage is its rapid effect. It is used by people who experience sudden wearing-off spells, in which their PD medication abruptly stops working and leaves them unexpectedly immobile.

g. Amantadine. It is a mild agent that is used in early PD to help with tremor. In recent years, amantadine has also been found useful in reducing dyskinesias that occur with dopamine medication.

h. COMT Inhibitors are used to prolong the effect of levodopa by blocking its metabolism. They are used primarily to help with “wearing off,” in which the effect of levodopa becomes short-lived. The most common are carbidopa/levodopa/entacapone (Stalevo®), entacapone (Comtan®) and tolcapone (Tasmar®).

i. Anticholinergic Drugs. They decrease the activity of acetylcholine, a neurotransmitter that regulates movement. They can be helpful for tremor and may ease dystonia associated with wearing-off or peak-dose effects. The most common are trihexyphenidyl (Artane®), benztropine mesylate (Cogentin®) and procyclidine (no longer available in the U.S.), among others.

j. MAO-B Inhibitors. They block an enzyme in the brain that breaks down dopamine, which makes more dopamine available and reduces some of the motor symptoms of PD. When used together with other medications, MAO-B inhibitors may reduce “off” time and extend “on” time. They have been shown to delay the need for Sinemet when prescribed in the earliest stage of PD, and they have been approved for use in later stages of PD to boost the effects of Sinemet. The most commonly used are selegiline, also called deprenyl (Eldepryl® and Zelapar®), and rasagiline (Azilect®).

Caution: PD medications may interact with certain foods, other medications, vitamins, herbal supplements, over-the-counter cold pills and other remedies. Anyone taking a PD medication should talk to their doctor and pharmacist about potential drug interactions.

A summary of new and future treatments for Parkinson’s disease is shown in Table 2 [5].

Table 2: Summary of new and future treatments for Parkinson’s disease

Surgical Treatments

Surgical treatments can be an effective option for various symptoms of Parkinson’s disease (PD), but only symptoms that previously improved with levodopa have the potential to improve after surgery. Surgery is reserved for PD patients who have exhausted medical treatment for PD tremor or who suffer profound motor fluctuations (wearing off and dyskinesias). Surgical treatments do not cure PD and do not slow its progression. They include:

a. Carbidopa/levodopa intestinal infusion pump (DUOPA™). This gel formulation of the drug requires a surgically placed tube and provides 16 continuous hours of carbidopa and levodopa for motor symptoms. The small, portable infusion pump delivers carbidopa and levodopa directly into the small intestine, resulting in better ON time.

b. Deep brain stimulation (DBS) [13]. DBS is only recommended for people who have had PD for at least four years and whose motor symptoms are not adequately controlled with medication. In DBS surgery, electrodes are inserted into a targeted area of the brain, guided by MRI (magnetic resonance imaging) and by recordings of brain cell activity during the procedure. In a second procedure, an implanted pulse generator (IPG), a battery-powered device similar to a pacemaker, is placed under the collarbone or in the abdomen. The IPG delivers electrical impulses to a part of the brain involved in motor function. People who undergo DBS surgery are given a controller to turn the device on or off. The most commonly used brain targets are the subthalamic nucleus (STN) and the globus pallidus internus (GPi), and the choice of target should be tailored to each patient’s needs: the STN seems to allow a greater reduction in medication, while the GPi may be slightly safer for language and cognition. Although most people still need to take medication after DBS, many experience a considerable reduction in their PD symptoms and can greatly reduce their medications. The amount of reduction varies from person to person.

Future Surgical Treatments

PD Stem Cell Therapy. Cells that have been transplanted in PD patients include [14-16] adrenal medullary, retinal and carotid body cells, the patient’s own fat (adipose tissue), and human and porcine fetal cells. Historically, transplantation of cells from aborted human embryos 6 to 9 weeks old evolved as the most promising approach. One of the major concerns in cell transplantation for PD is the host immune response to the grafted tissue, and cell therapy for PD has been associated with significant concerns and complications.

Although the brain is often considered “immune-privileged”, there is in fact evidence that intracerebral immunologically mediated graft rejection can and does occur [17]. Still, the open-label studies and the functional engraftment of the transplanted tissue are enough to provide hope that improved cell replacement strategies for PD could have a tremendous positive impact on patients’ lives. The U.S. Food and Drug Administration (FDA) has not yet approved stem cell therapy as a treatment for Parkinson’s disease: although clinical studies have demonstrated safety and potential efficacy, the FDA requires further investigation before such treatments can be approved.

Complementary/Alternative Therapies

Many alternative therapies are available for PD, and it is recommended that you speak with your doctor before embarking on one. The most common are [18]:

a. Acupuncture. Acupuncture is recognized as a viable treatment for various illnesses and conditions. Acupuncture may improve PD-related fatigue, but real acupuncture offers no greater benefit than sham treatment. PD-related fatigue should therefore be added to the growing list of conditions that acupuncture helps primarily through nonspecific or placebo effects [19].

b. Guided imagery (guided meditation) is a gentle but powerful technique that focuses the imagination in proactive, positive ways. Motor imagery is a mental process by which an individual rehearses or simulates a given action. It is widely used in sport training as mental practice of action and in neurological rehabilitation, and it has also been employed as a research paradigm in cognitive neuroscience and cognitive psychology to investigate the content and structure of the covert (i.e., unconscious) processes that precede the execution of action. Motor imagery is thought to be helpful in treating neurological motor disabilities caused by stroke, Parkinson’s disease and spinal cord injuries [20].

c. Chiropractic. The theory of chiropractic care is based on the idea that a properly adjusted body, particularly the spine, is essential for health, with life force and good health attained through spinal manipulation therapy to remove subluxations. The dosage of chiropractic care depends on the practitioner [21]. Some reports have shown that alternative treatments such as chiropractic procedures appeared to help with Parkinson’s disease signs and symptoms [22].

d. Yoga. Yoga is one of the most widely used forms of mind-body medicine for health promotion and disease prevention, and it is a possible treatment modality for neurological disorders. Among the various types of mind-body exercise, yoga was reported to produce the largest and most significant beneficial effect in reducing Unified Parkinson’s Disease Rating Scale (UPDRS) III scores in people with mild to moderate PD [23].

e. Hypnosis might represent an interesting complementary therapeutic approach to movement disorders, as it considers not only symptoms, but also well-being, and empowers patients to take a more active role in their treatment. Well-designed studies considering some specific methodological challenges are needed to determine the possible therapeutic utility of hypnosis in movement disorders. In addition to the potential benefits for such patients, hypnosis might also be useful for studying the neuroanatomical and functional underpinnings of normal and abnormal movements [24].

f. Biofeedback seems to be a promising tool to improve gait outcomes for both healthy individuals and patient groups. However, due to differences in study designs and outcome measurements, it remains uncertain how different forms of feedback affect gait outcomes [25].

g. Aromatherapy uses plant materials and aromatic plant oils, including essential oils, and other aroma compounds to alter a person’s mood or cognitive, psychological or physical well-being. Reported benefits of aromatherapy in PD include reduced restlessness, anxiety and nausea, improved mood, relaxation, alertness and sleep, and a general calming effect [26].

h. Herbal remedies are medications prepared from plants, and they include most of the world’s traditional remedies for disease. Many herbal remedies may be useful for PD, but systematic approaches to testing each one in neurologic diseases like PD are lacking. For example, resveratrol, a natural polyphenolic compound extracted from red grapes, exerts neuroprotective effects against oxidative and neuronal damage through its antioxidant and anti-inflammatory properties. Resveratrol has the potential to treat PD by inhibiting neuroinflammation and apoptosis and by promoting neuronal survival, and it could serve as a complementary medicine to reduce the L-DOPA dose needed to ameliorate PD [27].

i. Magnetic therapy. Many studies conclude that further research on magnetic therapy for PD is needed. Investigations using repetitive transcranial magnetic stimulation (TMS) to assess motor system function are still at an early phase and need further evaluation. Altogether, the value of TMS for studying the physiology and pathophysiology of the motor system is beyond doubt, and the limits of these techniques have not yet been reached [28].

j. Massage. Parkinson’s disease typically causes muscle stiffness and rigidity, and individuals who use massage therapy find that it helps to alleviate joint and muscle stiffness.

k. Marijuana, also called cannabis, contains two major components: tetrahydrocannabinol (THC) and cannabidiol (CBD). THC is the component that causes one to feel “high.” THC can cause hallucinations and anxiety and should therefore be used with caution, if at all, in Parkinson’s disease. CBD, by comparison, may help with sleep and anxiety. A few studies have suggested that marijuana helps with some aspects of Parkinson’s and may allow a person to reduce his or her use of prescription medications, but there are no definitive data on which doses of which components of marijuana are helpful or harmful in Parkinson’s disease. The possible association of cannabinoid receptors with the ubiquitin pathway needs further study to understand its extensive role in PD. Furthermore, the epigenetic modifications resulting from cannabinoid receptor activation need to be elucidated, as they may be crucial to following the pathology of the disease [29].

PD causes

Around 80% of PD cases are considered idiopathic because their cause is unknown, whereas the remaining 20% are presumed to be genetic. Variations in certain genes elevate the risk of PD: studies have reported that mutations in LRRK2 (leucine-rich repeat kinase 2), PARK7 (Parkinsonism Associated Deglycase), PRKN (Parkin RBR E3 Ubiquitin Protein Ligase), PINK1 (PTEN-induced putative kinase 1) or SNCA (alpha-synuclein) contribute to the risk of PD [30].

Risk factors for Parkinson’s disease

Generally, scientists speculate that the interaction between gene mutations and environmental exposures can contribute to PD progression. Studies have listed a few modifiable risk factors for PD, and the following are considered some of its causative factors [31,32]:

Exposure to pesticides: The evidence that pesticide and herbicide use is associated with an increased risk of PD raises the question of whether specific pesticides are most concerning. When data on this topic are collected in large populations, participants are often unaware of which specific pesticides or herbicides they have been exposed to, which makes it difficult to determine which ones to avoid. Nevertheless, paraquat and rotenone have emerged from these data as the two most concerning pesticides [33], and glyphosate as a concerning herbicide [34]:

a. Paraquat’s mechanism of action is the production of reactive oxygen species, intracellular molecules that cause oxidative stress and damage cells.

b. Rotenone’s mechanism of action is disruption of the mitochondria, the component of the cell that creates energy for cell survival.

c. Glyphosate is the world’s most heavily applied herbicide, and an active ingredient in Roundup®

d. Well-water drinking. Rural residents who drink water from private wells are much more likely to have Parkinson’s disease, a finding that bolsters theories that farm pesticides may be partially to blame, according to a new California study [35].

e. Heavy metals, such as iron and manganese, are involved in neurologic disease. Most often these diseases are associated with abnormal environmental exposures or abnormal accumulations of heavy metals in the body. Many epidemiological studies have shown an association between PD and exposure to metals such as: mercury, lead, manganese, copper, iron, aluminium, bismuth, thallium, and zinc [38]. The combination of high concentration of iron and the neurotransmitter, dopamine, may contribute to the selective vulnerability of the brain in the substantia nigra pars compacta (SNpc) in the basal ganglia [36].

f. Solvents. Case reports of parkinsonism, including PD, have been associated with exposure to various solvents, most notably trichloroethylene (TCE) [37]. Both the peripheral and the central nervous system can be targeted. Prolonged workplace exposure to organic solvents can induce a chronic solvent encephalopathy (CSE) that persists even after the exposure has ended [38].

g. Calcium. An international team led by the University of Cambridge found that calcium can mediate the interaction between alpha-synuclein, the protein associated with Parkinson’s disease, and small membranous structures inside nerve endings that are important for neuronal signaling in the brain. Excess levels of either calcium or alpha-synuclein may be what starts the chain reaction that leads to the death of brain cells [39]. Ca²⁺ dysregulation also has direct consequences for mitochondrial health in PD [40].

h. Age. Parkinson’s disease can be either early- or late-onset. Many processes affected in Parkinson’s disease are linked to factors associated with age. The risk of Parkinson’s disease increases dramatically in individuals over the age of 60, and it is estimated that more than 1% of all seniors have some form of the condition [41].

i. Gender. More men than women are diagnosed with Parkinson’s disease (PD), and several gender differences have been documented in this disorder. One possible source of male-female differences in the clinical and cognitive characteristics of PD is the effect of estrogen on dopaminergic neurons and pathways in the brain [42].

Parkinson’s disease types

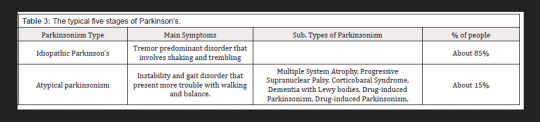

Parkinsonism is a constellation of signs and symptoms that are characteristically observed in Parkinson’s disease (PD) but are not necessarily due to PD. It is the primary type of hypokinetic movement disorder, and its signs include slowness (bradykinesia), stiffness (rigidity), tremor and imbalance (postural instability). There are two general types of Parkinson’s: idiopathic Parkinson’s and atypical parkinsonism [43] (Table 3).

Table 3: Parkinson’s disease types.

Idiopathic Parkinson’s

Idiopathic Parkinson’s is the most common form of Parkinsonism. It is a tremor predominant disorder that involves shaking and trembling. About 85% of people with parkinsonism have idiopathic Parkinson’s. This type of Parkinson’s disease can begin at an earlier age but progresses more slowly. It has a lower risk of cognitive (brain function) decline, but the tremors may be more difficult to treat than other symptoms [44].

Atypical parkinsonism

Atypical parkinsonism is less common. It is an instability and gait disorder that causes more trouble with walking and balance. About 15% of people with parkinsonism have atypical parkinsonism disorders; these are rarer conditions and more difficult to treat. This type of parkinsonism begins at an older age but tends to progress quickly. Although people may experience fewer tremors or none at all, they have a higher risk of cognitive decline. Atypical parkinsonism includes the following variations:

a. Multiple System Atrophy (MSA) encompasses several neurodegenerative disorders in which one or more body systems deteriorate, causing incoordination (ataxia) and dysfunction of the autonomic nervous system, which automatically controls things such as blood pressure and bladder function. These occur in addition to variable degrees of parkinsonism, including symptoms such as slowness, stiffness and imbalance. The average age of onset is in the mid-50s.

b. Progressive Supranuclear Palsy (PSP) is the most common degenerative type of atypical parkinsonism. Symptoms tend to progress more rapidly than in PD. People with PSP may fall frequently early in the course of the disease. Later symptoms include limited eye movements, particularly looking up and down, which also contributes to falls. People with PSP also often have problems with swallowing (dysphagia), difficulty producing speech (dysarthria), sleep problems, and memory and thinking problems (dementia). The average age of onset is in the mid-60s.

c. Corticobasal Syndrome (CBS) is the least common of the atypical causes of parkinsonism. It usually begins with symptoms affecting one limb. In addition to parkinsonism, symptoms can include abnormal posturing of the affected limb (dystonia), fast, jerky movements (myoclonus), difficulty with some motor tasks despite normal muscle strength (apraxia) and difficulty with language (aphasia), among others. It typically begins after age 60 [45].

d. Dementia with Lewy bodies (DLB) is a progressive neurodegenerative disorder in which abnormal deposits of a protein called alpha-synuclein build up in multiple areas of the brain. It is second only to Alzheimer’s disease as the most common cause of degenerative dementia. It first causes progressive problems with memory and fluctuations in thinking, as well as hallucinations. Later in the course of the disease, these symptoms are joined by parkinsonism, with slowness, stiffness and other PD-like symptoms.

e. Drug-induced Parkinsonism is the most common form of what is known as secondary parkinsonism. Side effects of some drugs, especially those affecting brain dopamine levels (antipsychotic or antidepressant medications), can cause parkinsonism. Although tremor and postural instability may be less severe, this condition can be difficult to distinguish from Parkinson’s. Medications that can cause parkinsonism include antipsychotics, some antidepressants, reserpine, some calcium channel blockers and others. After these medications are stopped, parkinsonism usually disappears gradually over weeks to months, though symptoms may last for up to a year.

f. Vascular Parkinsonism (VP): evidence suggests that multiple small strokes in key areas of the brain may cause parkinsonism. A severe onset of parkinsonism immediately following (or progressively occurring within a year of) a stroke may indicate VP.

Conclusions

Parkinson’s disease is a neurologic disease that affects the functioning of the brain, altering its neural connectivity through cell death. All the medications and procedures available today only help to improve patients’ quality of life, so there is a great need to find more ways to accelerate research on PD. One example would be a cloud database with information about disease development, newly available medications, new surgical procedures, new methods of early detection, new criteria and many other factors.

Read More About this Article: https://biomedgrid.com/fulltext/volume2/update-on-parkinsons-disease.000614.php

For more about journals on biomedical science: Biomed Grid

#Biomedgrid#American medical journal#List of open access medical journal#Journals on medical research#medical and medicinal journal#Open access clinical and medical journal


I have been thinking quite a bit about the problem of theatrical space lately. Open any standard work of theatre history, and you are likely to find a fairly standardized account of how the spaces — the buildings, mostly — used for theatrical performances in the West have developed. Without too too much distortion, one could describe that account as the story of the rise and fall of the proscenium stage, as theatrical space moves from its democratic origins through monarchic single-point perspective back to a supposedly democratic (in-the-round, open-concept, black box, or thrust-stage) architectural form.

A curious feature of this standard account is that it tends to treat buildings as if they weren’t built to last, as if the emergence of a new kind of theatre meant the disappearance of other theatrical venues. This may be a problem of teleology, or perhaps just the inevitable side-effect of avantgardist thinking — a focus on the cutting edge that loses sight of the (usually much larger) rest of the knife. And because I am spending most of my time thinking about the 1920s at the moment, I have noticed another curious displacement: that of the actual in favour of the theoretical. Thus, the “Total Theatre” Walter Gropius designed for Erwin Piscator in 1927 regularly features in narratives about theatrical experiments in this period, even though Gropius’s design was never built; the drawings for the proposed building have been frequently reprinted. But the theatres in which Piscator actually realized all of his theatrical experiments with simultaneous stages and projections — the Volksbühne, the Staatliche Schauspielhaus, and, most extensively, the Theater am Nollendorfplatz — are rarely discussed in any detail. None of them, not even the relatively “democratic” Volksbühne, easily fit into the standard progressive narrative: they all were proscenium theatres. The Staatstheater, least progressive of all, was an imposing Neoclassical building designed by Karl Friedrich Schinkel for the Prussian royal court. (One of Berlin’s theatres, Max Reinhardt’s Grosses Schauspielhaus, might be seen as a uniquely progressive space, with its Greek-theatre-style seating, hugely variable stage, and enormous capacity, but it never became an engine of theatrical innovation and was soon used exclusively for popular entertainments. I will therefore not consider it in this discussion.)

Piscator himself complained about the many ways in which these theatres hindered his ability to realize his stage experiments. Even the Volksbühne, one of the most technically advanced theatres in Berlin at the time, did not have the necessary equipment, and needed to acquire better projection devices to serve Piscator’s needs. The Theater am Nollendorfplatz lacked the Volksbühne’s permanent dome horizon and didn’t have a scene dock, making turnovers between rehearsals and productions immensely complicated (and costly). From the artist’s perspective, these were limiting spaces: the kind of theatre Piscator wanted to make could only have been realized in the kind of venue Gropius had envisaged for him. But from the perspective of theatre history, the kind of theatre that actually ended up being made is arguably at least as important — as are the actual spaces that provided the conditions under which it was realized.

The Theater am Nollendorfplatz in the early 1940s. (TU Berlin Architekturmuseum, Inv Nr TBS 044, 10)

Take, for instance, Traugott Müller’s famous design for the production that opened the Piscatorbühne’s first season at the Nollendorfplatz house: Ernst Toller’s Hoppla, wir leben! The set was a massive grid of iron tubes (3-inch gas pipes, in fact), weighing almost four metric tons, 11 metres wide, 3 metres deep, and over 8.5 metres tall. It created six small rooms, stacked on either side of a tall central chamber, which had a small, domed eighth space on top of it. All eight chambers could be used and lit individually or simultaneously; each also did double duty as a projection screen. Those screens could be moved to the front of the chamber, allowing for invisible scene transitions behind them; they were also used for back-projections when the chambers were in use, those projections taking the place of all scenic paint work. Two sets of stairs on either side allowed external access and brought the total width of the structure to 13 metres. The entire edifice sat on tracks, so it could easily be moved closer to or further away from the audience, and it was placed on top of a revolve, so scenes could also take place on the stage in front of or behind it. Finally, in some scenes the proscenium was covered with a white and a black gauze drop onto which film was projected frontally, while the screen in the structure’s large central chamber was used for film projections from behind; on occasion, film clips would be projected simultaneously from front and back, with actors performing, spotlit, between the two projections. (For a very detailed analysis of this production, see Klaus Schwind, “Die Entgrenzung des Raum- und Zeiterlebnisses im ‘vierdimensionalen Theater’: Plurimediale Bewegungsrhythmen in Piscators Inszenierung von Hoppla, wir leben! (1927),” in Erika Fischer-Lichte, ed., TheaterAvantgarde: Wahrnehmung — Körper — Sprache [Tübingen and Basel, 1995], 58-88.)

Piscator and Müller’s set was obviously a strikingly inventive design; it almost makes the kind of work Robert Lepage is doing now, 90 years later, look unambitious by comparison. But it was invented for, and thus enabled by, the Theater am Nollendorfplatz’s specific stage: the proscenium opening there was 10 metres wide and 8.4 metres tall, so that, set back from the edge of the stage, the entire structure would have been visible. The revolve, with a diameter of 15.5 metres, was well wide enough for the set. Only three other theatres in 1920s Berlin had as large a proscenium opening: the Volksbühne, the Staatliche Schauspielhaus, and the Schiller-Theater. None of the others, even though some had larger stages than the Nollendorfplatz theatre, could have accommodated Piscator and Müller’s concept. Max Reinhardt’s Deutsches Theater is a case in point: its stage was considerably larger, as was its revolve (with a diameter of 18 metres), but its proscenium opening was (and still is) only 8.5 metres wide, too narrow to allow for the three chambers to be placed side-by-side with sufficient distance from the back projectors.

For all of Piscator’s griping about the venue’s limitations, in a very real sense his production of Hoppla, wir leben! was only possible because this particular theatre was at his disposal in 1927. His declared goal may have been to “blow up the limited space of the proscenium stage,” but, paradoxically, it was the specific spatial conditions of a particular proscenium theatre that allowed him to pursue that project.

I have gone on at rather too great length about a single example, so let me get to my broader point: theatres are spaces designed with particular aesthetic and technical systems in mind, but they don’t enshrine those same systems. Over the course of their histories as buildings, they can accommodate entirely different, unanticipated aesthetic programs; they can be used in ways unimaginable to their architects. But they offer restrictions as well as opportunities. And the specific limits and possibilities they offer shape the theatrical imaginations of the artists that work in them. Shape, not control: of course artists have frequently rejected the spaces they inherited altogether; they have remade them; they have ignored them. But even so, those inherited spaces form a kind of base condition of theatrical creativity — and the differences between the inventory of such spaces in different cities and countries may be a significant factor in how theatre cultures develop their specific idiosyncratic histories. Let me make this less abstract.

By the 1930s, 13 of the 15 Berlin theatres regularly used to stage plays (rather than operas, or operettas, or cabarets, or variety shows, etc.) were equipped with revolves. The average stage was over 16 metres deep (ignoring the “Hinterbühne,” the backstage area not normally used for performance, and often significantly narrower than the stage itself) and almost 20 metres wide. And most of those theatres had fewer than 1,000 seats. Just for comparison’s sake, the Old Vic’s stage was 15 metres wide and 10 metres deep and didn’t have a revolve; but the theatre had room for 1,800 spectators (nowadays, the stage is a bit deeper, at 11.6 metres, and the theatre only seats around 1,000, but there’s still no revolve). In fact, the smallest theatre in Berlin then, the Kammerspiele of the Deutsches Theater (a venue established by Max Reinhardt specifically for modern plays and a more intimate style), had a stage over 13 metres deep, and a revolve, while seating just over 400; only FOUR London theatres had (and have!) deeper stages than that, and only one of them was equipped with a revolve. That theatre, the Coliseum, held six times as many spectators as the Kammerspiele, though. On the other hand, a venue such as London’s Ambassadors Theatre, with a capacity comparable to the Kammerspiele, had to make do with a stage barely half as deep, at 6.9 metres. No stages as shallow as that were used for the production of plays in Berlin. A sense of what was normal also informed the public discourse: thus the stage of the Theater in der Königgrätzer Straße (today’s HAU 1), over 16 metres wide and almost 14 metres deep, appeared “extraordinarily small” to the author of a 1922 article about technical theatre innovations; I don’t know if anyone would have described the much smaller stage of the Old Vic in similar terms.

Theatre artists making new work under those circumstances could therefore expect certain spatial conditions: they could assume that their stage would be almost as deep as the auditorium, and often deeper; they could assume that they would have the use of a revolve; they could assume that they would have to contend with a relatively narrow proscenium opening, always much narrower than the stage’s depth. (That is another signal difference between the inventory of venues in Berlin and London: only a handful of theatres in London have a stage that is much deeper than the width of the proscenium; over half of London playhouses have a proscenium opening that is as wide as the stage is deep, or significantly wider.) Those specific circumstances would have been the creative starting point for their work. It’s not that a director or a designer in a different location might not, say, devise a set that requires circular movement, just as a director or designer working on a very deep stage may decide to reduce its depth dramatically and work in an artificially shallow space instead. But the former is a decision that requires complex construction and has serious budgetary consequences: it’s unlikely that a revolve (to take one example) designed and built for a specific production would be scrapped if it turns out, in the rehearsal process, that it’s not as effective or useful as director and designer initially thought. An artificial wall is easy to scrap; a real wall cannot as easily be moved.

I don’t know how far I would want to push this kind of argument: are London productions in general, historically and now, more likely than Berlin productions to adopt sets and stagings that emphasize width over depth? I have no idea. But in specific instances, spatial conditions seem a clear and crucial factor in innovations in staging, as the material through, with, and against which new theatrical ideas are developed.

I wonder, for instance, if the fact that the Staatstheater, Berlin’s grandest and most important theatre of the Weimar years, did not have a revolve — and did not gain one until the early 1930s — may not have played a key role in the development of Leopold Jessner’s shockingly reductive stage aesthetics, the minimal, architectural sets for which “Jessnertreppe” (Jessner Stairs) almost instantly became an inaccurate shorthand. Jessner strongly propagated the importance of tempo in his stagings, and wanted to avoid lengthy scene breaks; the space in which he worked was monumentally large, but lacked the equipment for fast changes between elaborate sets that filled the depth of the stage. After a reconstruction effort in 1905, the Staatstheater had a side stage with a very large scene wagon, wide enough to completely fill the proscenium, and about 8 metres deep.

Genzmer, Felix (1856-1929), Schauspielhaus, Berlin. Reconstruction; floorplan. Note the deep and wide side stage on the right, which required extensive demolition and rebuilding work. (TU Berlin Architekturmuseum Inv. Nr. BZ-H 39,003)

Relatively shallow sets in front of a perspectival backdrop could thus be changed out quickly, but only at the cost of reducing the acting space to almost a third of the stage’s depth. Jessner’s approach, on the other hand, was to use the entire stage and add or remove simple, monumental structural elements upstage, downstage, or from above. In his 1920 Richard III, for instance, Emil Pirchan’s design initially consisted of little more than a tall upstage wall cutting across the entire stage, with a lower second wall in front of it: this formed an elevated platform and had a central opening which served as a gateway and as an interior space (for instance, as Clarence’s prison cell). After Richard’s rise to power, the famous stairs appeared, now running from the top of that wall towards the edge of the stage, painted blood red, widening progressively. I am not aware of an account of how this was accomplished, but the specific technical setup of the Staatstheater makes it likely that the stairs were waiting on the scene wagon and were simply moved in from the side stage. The lower of the two walls must thus have been at least seven metres upstage of the curtain line, with nothing in front of it: no wonder Jessner’s stages were so often described as “empty.”

The critic Max Osborn identified as particularly revolutionary Jessner’s ability to understand and shape “the cube of the stage” — and what a cube he had in the Staatstheater, almost 20 metres deep, 26 metres wide, soaring over 21 metres high below the grid, and with the second-tallest proscenium arch in the city at 8.4 metres: almost 11,000 cubic metres of space. Of course there were means of reducing the sheer presence of that empty space, rendering it dynamic, breaking it up into smaller units, making it feel smaller. Neither Schinkel nor the architect overseeing the 1904 reconstruction, Felix Genzmer, had intended all that emptiness to be made visible. But that space was there, as a challenge, and as an opportunity — and in the absence of a revolve, it was a space that almost demanded to be taken seriously as a whole, to be “understood” and “shaped” in its totality.

That space changed dramatically in 1935, when Göring, in his role as Prime Minister of Prussia and particular patron of the Staatstheater, commissioned a massive reconstruction project. This involved replacing the existing houses behind the theatre, across the Charlottenstrasse, with a large storage and construction facility for the theatre, which was directly connected to the backstage wall by a covered bridge through which preassembled scene wagons could move on two sets of tracks. This became, in effect, an enormous new backstage area, over 21 metres deep, over 11 metres wide, and almost 9 metres tall. Theoretically, with all the gates wide open, spectators seated in the middle of the stalls or the first circle could have seen all the way to the back wall of the storage building, over 80 metres beyond the proscenium. The goal of the reconstruction effort was not to create an impossibly deep stage, though. It was to equip the Staatstheater with the machinery necessary to combine elaborate, lavish sets with the greatest possible efficiency and speed for scene changes. A large revolve was installed, which, combined with the two scene wagons on the backstage tracks and the side stage that was retained, allowed for up to four separate sets, each as wide as the proscenium and taking up about half the revolve, to be assembled and made ready independently. The new facilities would allow for film-like scene transitions.

And yet, when Jürgen Fehling directed Richard III on this new stage, he ignored all those new possibilities, with the exception of the new depth of the space. The result was an emptiness almost designed to remind audiences of Jessner’s, in 1937, seven years after the Jewish Social Democrat Jessner had been driven from office by reactionary critics and four years after he had fled Germany when the Nazis came to power. Paul Fechter described it as a “luminous box of space” that tore “one’s gaze far into the gigantic depths of this stage”: the raked floor painted in a light colour, the walls covered in bright cloth, steps far, far upstage descending who-knows-where, and finally, another bright cloth wall at the very back. As Hans-Thies Lehmann reports, some reviews vastly misjudged the depth of the set, thinking it to be almost twice as deep as its actual (and already unprecedented) 44 metres: that space, as remarkable as the production was for other reasons, was its most sensational and immediately overwhelming feature. Fehling and his designer Traugott Müller did not exactly transform an old space for new purposes, of course: their stage still smelled of fresh paint. But they took a facility constructed for one kind of theatrical aesthetics and used it (exposed it, really) in the service of an aesthetic program diametrically opposed to the Nazi theatre functionaries’ intentions. No wonder Göring apparently left in the first intermission, muttering about “cultural bolshevism.”

The 1935 reconstruction of the Staatstheater was the opposite of an avant-garde project. But despite its reactionary intentions, it produced a space that created new possibilities for artists reimagining theatrical space. Just as Jessner’s productions put an Imperial theatre in the service of a radically reduced aesthetic and a Republican ethos, just as Piscator turned a standard proscenium venue into a site for technical, theatre-aesthetical, and political radicalism, Fehling pushed Jessner’s spatial thinking further and played with political fire, on the very stage designed as the Nazi state’s most impressive theatrical venue.

Innovation in theatre can be driven by new plays or new spaces. But as often as not, theatre reinvents itself through an engagement with old things: old plays, old styles, old rooms. Even the theatrical avant-garde has a habit of looping back, of looking backwards to go forwards. In that sense, there is no contradiction at all in making radical art in traditional spaces. It is simply the kind of thing theatre tends to do. But as theatre historians, we need to pay attention both to where those radical artists went in their work and to the seemingly negligible traditional places (and plays) where that work took place, and by which that work was enabled, because even though the artists may not say so, and though it may not be obvious, the work would have been different in different spaces. Theatre is always local, in its making and in its reception; and if we don’t understand a production’s specific local preconditions, we can’t really understand the process of its making, the nature of its reception, or, ultimately, the work itself.

Old Spaces, New Art: The Theatrical Avant-Garde and the Proscenium Stage


The Puzzle of Greater Male Variance

Abstract

Greater male variability on tests of mental ability would explain why males predominate not only at the highest levels in mathematics and science, but in business, politics, and nearly all aspects of life. The literature on sex differences in mental test scores was reviewed to determine whether males in fact exhibit greater variability. Two methods were used to compare sex differences in variability: ratios of total test score variances, and ratios of the number of males and females scoring at or above extreme score cutoffs (“tail ratios”). Most samples exceeded 10,000. The review also included studies of physical traits such as height, weight, and blood parameters; brain volume measures; and studies of variability in four taxa: mammals, birds, insects, and butterflies. In the vast majority of total test score ratios, males were more variable than females. Males were more likely to score in the extreme right tail, indicating higher aptitude, on tests of mathematics and spatial ability, in which mean sex differences favor males. On tests of writing, vocabulary, and spelling, in which mean differences favor females, males were more extreme in the left tail, indicating lower ability. Males also exhibited greater variance in physical traits, blood parameters, and brain volume measures. Similar variance differences were also found in animals. Genetic theory proposes that greater variability depends on which sex is heterogametic, that is, has two different sex chromosomes. In mammals and insects, this is the male sex, with the XY sex chromosome pair. In birds and butterflies, this is the female sex, with the ZW sex chromosome pair. It is heterogamety and not sex that determines which sex is more variable. This is because in the heterogametic sex, recessive alleles on the single X or Z chromosome are fully expressed, generating a binomial distribution with higher trait variance, while in the homogametic sex, recessive traits are averaged, generating a normal distribution with lower trait variance. Females have improved their performance on mathematics tests for the gifted, with ratios falling from 13:1 favoring males in 1980 to 3:1 favoring males from 1990 onward, but the improvement has stopped. Heterogamety predicts that females, because they are homogametic, will never equal males not only in the right extreme of mathematics ability and other ability distributions but in the distribution of any trait influenced by the X chromosome.

In 2007, Psychological Science in the Public Interest published an issue devoted to sex differences in science and mathematics, written by a group of contributors from fields such as neuroscience, gifted studies, cognitive development, cognitive gender differences, and evolutionary psychology (Halpern, Benbow, Geary, Gur, Hyde, & Gernsbacher, 2007). Each author reviewed material from their specialty relating to the problem of female under-representation in mathematics- and science-based fields in academia, research, and industry. The group concluded that observed sex differences result from both genetic influences and sociocultural differences in the treatment of males and females, most certainly an anodyne conclusion of the kind expected from a committee of disparate experts.

The 2007 article was in part a response to the controversy following statements made two years earlier by Lawrence Summers, then president of Harvard University, to the effect that there were fewer women in academia and industry because fewer women score at the highest levels on tests of aptitude predicting success in mathematics and the hard sciences. This was tantamount to saying that, at the highest ability levels, women are inferior to men. Summers’ statement about women was a major factor in his 2006 resignation as Harvard’s president. Regardless of campus politics, which also played a large role in his departure, the question remains: why do men dominate at the highest levels of mathematics, science, business, and industry?

Last year, the issue of sex differences arose again, this time in the citadels of technology, in particular the Silicon Valley offices of Google and the claim by one software engineer, James Damore (2017), that, among other things, “differences in distributions of traits between men and women may in part explain why we don't have 50% representation of women in tech and leadership.” This was Summers’ explanation exactly, and for offering it, Damore experienced a fate similar to Summers’: He was fired.

The topic of cognitive gender differences being much too broad for any one article, this paper considers only the issue of greater male variance on mental ability tests. This review updates the 2007 Public Interest article now that more than 10 years have passed and considers new findings, which include much larger samples of test data than were then available, and recent research published by geneticists and behavior geneticists shedding new light on this controversy.

The approach I’ve taken in this review was dictated by the issue under study: the variance of mental ability as reported in a wide range of aptitude and achievement tests of children and adolescents. While it might seem preferable to perform a meta-analysis of many study results, the variance statistic does not lend itself to this approach. Pooling effect sizes from different samples can give a better estimate of the true population mean difference than individual studies can. But when a meta-analysis is performed on heterogeneous variances, it is difficult to know what group the result represents. Studies of variance should be based on large, nationally representative samples, not on heterogeneous collections of studies, some of them based on selected samples, thrown together and christened a meta-analysis, leaving the reader to ask, “What is the population whose parameter is being described?” For a brief yet astute discussion of the perils of using meta-analysis to study sex differences in variance, see Hedges and Nowell (1995, pp. 41-42).

If meta-analysis is inappropriate, what other approach can one take? In the studies reviewed here, a wide variety of tests were given to children ranging in age from early childhood through late adolescence. I have chosen to review the 17 studies individually, presenting reported sex differences in means, variances, and tail ratios in separate tables for each study. After reviewing each study, I discuss its merits, asking, for example: Did the study assess aptitude or achievement? Did subject age affect the results? What patterns exist across tests of domains such as verbal, mathematical, spatial, and science aptitude, and how have variances changed over time?

None of the data in the studies reviewed here were generated by the researchers for the purpose of comparing male and female variance, nor could they have been; the sample sizes required are too large. In every instance, tests were given either to meet an institutional requirement, such as college entrance, or by a government agency assessing student progress or teacher and school effectiveness. The sample sizes were large, some having tested or screened millions of students randomly sampled from large countries such as the U.S. and UK, and in one case, the PISA test of 276,165 15-year-old students from 41 developed and developing nations. The results were data sets that largely spoke for themselves, with little data manipulation needed. In most cases, I have reproduced the key tables from each study to enable inspection of the supporting evidence for the issue under review.

Requirements to Show Sex Differences in Variability

Sources of Differences in the Right Tail of Distributions

Of interest here is the difference in the frequency of extreme scores in the tails of test-score distributions. More males will be found in the extreme right tail of a distribution if males have a higher mean and both sexes have the same variance, if both sexes have the same mean but males have a greater variance, or if males have both a higher mean and a greater variance. With the exception of Nowell and Hedges (1998), who used a method developed by Lewis and Willingham (1995), no studies attempt to partition the relative frequencies of extreme scores into components due to mean differences and variance differences.
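The three routes to an excess of males in the right tail can be illustrated with a minimal sketch. It assumes normally distributed scores and equal numbers of males and females, and it compares the expected share of each sex above a cutoff. The function names, the 0.1 SD mean shift, the variance ratio of 1.10, and the 2 SD cutoff are all hypothetical values chosen for illustration. None of them comes from the reviewed studies.

```python
import math

def upper_tail(cutoff: float, mean: float, sd: float) -> float:
    """Share of a normal(mean, sd) population scoring at or above cutoff."""
    z = (cutoff - mean) / sd
    # For a normal distribution, P(X >= cutoff) = 0.5 * erfc(z / sqrt(2)).
    return 0.5 * math.erfc(z / math.sqrt(2))

def tail_ratio(cutoff: float, mean_m: float, sd_m: float,
               mean_f: float = 0.0, sd_f: float = 1.0) -> float:
    """Expected male:female count ratio above cutoff, assuming equal group sizes."""
    return upper_tail(cutoff, mean_m, sd_m) / upper_tail(cutoff, mean_f, sd_f)

# Hypothetical scenarios on a female-standardized scale (female mean 0, SD 1).
SCENARIOS = {
    "higher male mean, equal variance": (0.1, 1.0),
    "equal means, greater male variance (VR = 1.10)": (0.0, math.sqrt(1.10)),
    "higher male mean and greater variance": (0.1, math.sqrt(1.10)),
}

if __name__ == "__main__":
    for label, (mean_m, sd_m) in SCENARIOS.items():
        print(f"{label}: tail ratio above 2 SD = {tail_ratio(2.0, mean_m, sd_m):.2f}")
```

Under these assumptions, each scenario gives a tail ratio above 1.0, and the combined scenario gives the largest. In the equal-means case, the ratio also grows as the cutoff moves further into the tail. This is why tail ratios alone cannot tell a mean difference apart from a variance difference.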

Measures of Sex Differences

Virtually all of the reviewed studies of sex differences report the mean sex difference and the total test score variance ratio (VR). They also report “tail ratios,” which compare the number of males and females scoring above a given cutoff in the extremes of distributions.

Means are universally compared using Cohen’s d (1988), the mean difference standardized by the pooled within-groups standard deviation:

$$d = \frac{\mathrm{Mean}_M - \mathrm{Mean}_F}{\sqrt{(\mathrm{Var}_M + \mathrm{Var}_F)/2}}$$

Cohen’s (1988) criteria for assessing the importance of d are .20, small; .50, medium; and .80, large. In most of the studies reviewed below, d was calculated as male mean – female mean. In the few studies where it was calculated as female mean – male mean, I reversed the sign and noted this in the footnotes to the tables.
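Here is a minimal sketch of the d computation and the sign convention described above. It assumes roughly equal group sizes, so the pooled standard deviation is √((VarM + VarF)/2). The function names and the "below small" label are illustrative choices, not the source's. Cohen's benchmarks are rough guides rather than hard thresholds.

```python
import math

def cohens_d(mean_m: float, var_m: float, mean_f: float, var_f: float) -> float:
    """Standardized mean difference, male minus female.

    Assumes roughly equal group sizes, so the pooled variance is the simple
    average of the two group variances. Positive values favor males.
    """
    pooled_sd = math.sqrt((var_m + var_f) / 2)
    return (mean_m - mean_f) / pooled_sd

def cohen_label(d: float) -> str:
    """Cohen's (1988) benchmarks (.20 small, .50 medium, .80 large) applied to |d|."""
    size = abs(d)
    if size >= 0.80:
        return "large"
    if size >= 0.50:
        return "medium"
    if size >= 0.20:
        return "small"
    return "below small"

def to_male_minus_female(d_female_minus_male: float) -> float:
    """Reverse the sign of a d reported as female minus male."""
    return -d_female_minus_male
```

For example, with means of 105 and 100 and both variances equal to 100, the pooled SD is 10 and `cohens_d(105, 100, 100, 100)` is 0.5, which is a medium effect.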

The standard deviation is the most commonly used measure of test-score dispersion, but its square, the variance, is a better measure of variability because its ratio can be used to compare the variability of different groups. To do this for males and females, the simple ratio of male over female variance is computed:

$$\mathrm{VR} = \frac{\mathrm{Var}_M}{\mathrm{Var}_F}$$

A ratio of 1.0 indicates equal male and female variances. Ratios larger (smaller) than 1.0 indicate greater (lesser) male variance. Feingold (1992) suggested that a difference of 10 percent in the variance, or a variance ratio of 1.10, is the minimum required for the difference to have substantive importance. In all of the studies, the variance ratios were calculated as male variance/female variance.
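Here is a minimal sketch of computing VR from raw scores and checking it against Feingold's 10 percent criterion. The function names are hypothetical. Reading VR ≤ 1/1.10 as the mirror-image case of greater female variance is an assumption of this sketch, not something the text specifies.

```python
from statistics import variance

def variance_ratio(male_scores, female_scores) -> float:
    """VR: male sample variance divided by female sample variance."""
    return variance(male_scores) / variance(female_scores)

def meets_feingold(vr: float, threshold: float = 1.10) -> bool:
    """True if the two variances differ by at least 10 percent.

    Treating vr <= 1 / threshold as the mirror-image case (greater female
    variance) is an assumption, not part of Feingold's stated criterion.
    """
    return vr >= threshold or vr <= 1 / threshold
```

For instance, `variance_ratio([1, 2, 3, 4, 5], [2, 3, 3, 4])` divides a variance of 2.5 by one of 2/3, giving a VR of 3.75.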

Tail ratios are a simple means of comparing performance at the extremes of test score distributions. The counts of males and females scoring at or above the top 10%, 5%, and .01% cutoffs provide the ratio of males to females performing at or above each level:

$$\text{Tail ratio} = \frac{\mathrm{Count}_M}{\mathrm{Count}_F}$$

Much like the VR, a tail ratio of 1.0 indicates that equal numbers of males and females score above a given cutoff. Ratios larger (smaller) than 1.0 indicate that more (fewer) males scored at or above the cutoff. An increase in tail ratios with successively higher cutoffs indicates that a greater disproportion of males is scoring at higher levels and thus possesses to a greater degree whatever latent trait the test measures. In all of the studies, the tail ratios were calculated as male count/female count.
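Here is a minimal sketch of computing tail ratios from raw scores. It assumes each cutoff is the lowest score inside the top fraction of the combined male and female distribution. That definition of the cutoff, along with the function name and the toy data, is hypothetical, since the reviewed studies define their cutoffs in various ways.

```python
def tail_ratios(male_scores, female_scores, top_fractions=(0.10, 0.05)):
    """Male:female count ratio at or above each cutoff.

    Each cutoff is the lowest score inside the top `frac` of the combined
    distribution (a hypothetical choice). Scores tied with the cutoff are
    counted, so a slice may hold slightly more than `frac` of all scores.
    Expects sequences (e.g. lists), since each is scanned more than once.
    """
    combined = sorted(list(male_scores) + list(female_scores), reverse=True)
    ratios = {}
    for frac in top_fractions:
        k = max(1, int(len(combined) * frac))  # size of the top slice
        cutoff = combined[k - 1]               # lowest score in that slice
        n_m = sum(1 for s in male_scores if s >= cutoff)
        n_f = sum(1 for s in female_scores if s >= cutoff)
        ratios[frac] = n_m / n_f if n_f else float("inf")
    return ratios
```

Take the toy scores `[10, 9, 5, 4, 1]` for males and `[8, 7, 6, 5, 3]` for females. The top 30 percent cutoff (a score of 8) gives a ratio of 2.0, while the top 50 percent cutoff (a score of 6) gives 2/3. The ratio rises as the cutoff moves up, which is the pattern the text describes.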

Some studies look only at the right tail of the distribution. Samples selected for high scorers will have reduced variance because the left side of the distribution is truncated. For these studies, it is not useful to estimate the population variance; it is more informative to compare the counts of males and females scoring at or above a given cutoff score, that is, tail ratios. Lewis and Willingham (1995) found that the mean sex difference in restricted samples was correlated with the variance difference.
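To see how much selecting only high scorers shrinks the variance, here is a sketch using the standard formula for a normal distribution truncated from below at a. The variance is 1 + aλ − λ², where λ = φ(a)/(1 − Φ(a)). Treating scores as standard normal is an assumption the source does not make, and the function name is hypothetical.

```python
import math

def truncated_variance(a: float) -> float:
    """Variance of a standard normal restricted to scores >= a (left-truncated)."""
    density = math.exp(-a * a / 2) / math.sqrt(2 * math.pi)  # phi(a)
    upper = 0.5 * math.erfc(a / math.sqrt(2))                # P(Z >= a)
    lam = density / upper                                    # inverse Mills ratio
    return 1 + a * lam - lam ** 2
```

Under this model, keeping only scores at or above the mean (a = 0) leaves about 36 percent of the original variance (exactly 1 − 2/π). A cutoff one SD above the mean leaves about 20 percent.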

Volunteers are known to differ from the general population. Children who volunteer for enrichment programs, or whose parents do the volunteering, are likely to be more motivated than, and otherwise different from, a nationwide sample of non-volunteer same-age children in intelligence, SES, race-ethnicity, etc.

Factors Affecting Variance Differences

Age. Haworth, Wright, Luciano, Martin, de Geus, van Beijsterveldt, & Plomin (2014) found that the heritability of general intelligence increases with age, from .41 to .55 to .66 at ages 9, 11, and 17, respectively, in a study that pooled 10,689 MZ and DZ twin pairs from six studies done in four countries. Others have suggested that heritability is as high as .80 in late adulthood (Johnson, Carothers, & Deary, 2009; Plomin & Deary, 2015). At the very least, tests should be given to young adults, although there is no large-scale testing of persons older than those taking graduate school admissions tests such as the GRE.

Range Restriction. Because we are interested in the relative number of males and females scoring in the tails of the test distribution, there should be no ceiling or floor effects. An ideal test would have few or no zero or perfect scores to assure that the difficulty of the test matched the ability of the test takers.

Unselected Samples. Ideally, samples should be unselected to ensure that the full distribution of ability within a population is tested. This can be achieved with a procedure such as national probability sampling, which is the best means to obtain a truly representative sample of the nation as a whole. There are large samples of selected populations such as the SAT and GRE, tests used to screen students for college and graduate school. But these samples are neither random nor representative despite samples numbering in the millions.

Aptitude Versus Achievement Tests. Aptitude tests measure student ability, and achievement tests measure student learning and school effectiveness. It is better to study tests of aptitude rather than achievement if we are studying ability, although it is impossible to study any ability divorced from previous learning experience. Unfortunately, there are few large-scale studies of “culture-free” tests of intelligence, such as the Raven Progressive Matrices. Societies economically developed enough to do large-scale testing also have compulsory schooling, usually through the American equivalent of high school. Large-scale testing is done with school children and adolescents to monitor their progress and to screen for college and graduate school admissions. An example of using large-scale testing over a range of ages is the No Child Left Behind program, which required regular testing of elementary and high school children to determine whether they were achieving specific learning goals and whether teachers were performing up to standard (Zelizer, 2015). Some schools whose students failed to progress adequately were closed. The diversion of classroom time and school resources away from instruction to prepare students for these achievement tests has been a source of parental complaints (Strauss, 2015). To the extent that test preparation becomes “teaching to the test,” sex differences in the means and variances will be reduced.

Genetic Theory

This paper will show that the sex difference in variance is due to the difference in chromosome allotment between the sexes, namely the sex chromosomes: XX for females and XY for males. There is no difference between males and females with regard to the 22 pairs of non-sex chromosomes, the autosomes. Both sexes have the same autosomes, with the same coding regions and the same pool of alleles, and the random process by which alleles are assigned is the same for both sexes. With the sex chromosomes, X and Y, the genetic allotment is different. Because the Y chromosome that men receive is vestigial, the X chromosome is left unpaired, so that not only dominant alleles but also recessive alleles on it are fully expressed. In females, the pairing of two X chromosomes means that a recessive trait is expressed only if recessive alleles are present on both X chromosomes. This reduces the probability that the recessive trait is expressed to the square of the probability for males, one source of lower female variability.
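The squaring argument can be checked with a few lines of arithmetic. This sketch assumes random mating (Hardy-Weinberg genotype frequencies) and ignores X inactivation; the recessive allele frequency `q` and the function name are illustrative choices, not taken from the source.

```python
def recessive_expression(q):
    """Probability that an X-linked recessive trait is expressed, given the
    recessive allele frequency q. Assumes Hardy-Weinberg proportions and
    ignores X inactivation."""
    male = q        # one X: whatever allele it carries is expressed
    female = q * q  # two X's: both must carry the recessive allele
    return male, female

for q in (0.5, 0.1, 0.01):
    m, f = recessive_expression(q)
    print(f"q={q}: males {m:.4f}, females {f:.4f}, ratio {m / f:.0f}:1")
```

The male-to-female ratio is 1/q, so the rarer the recessive allele, the more lopsided its expression between the sexes.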

Johnson et al. (2009) demonstrated how this works in a simple model of a single gene with two alleles, A and a, on the single male X chromosome. The variance of such a two-valued trait is p(1 − p), where p is the frequency of A, and it reaches its maximum when the allele frequencies are equal:

$$p(1-p) = 0.5 \times 0.5 = 0.25$$

For both the dominant and the recessive allele, the probability of expression is equal to its frequency in the population.

Matters are different for females, who have three possible genotypes arising from the same two alleles, because each of their two X chromosomes can carry either A or a. With equal allele frequencies, the genotype frequencies are

$$P(AA) = 0.25,\quad P(Aa) = 0.50,\quad P(aa) = 0.25$$

In the simplest case, this would lead to reduced variance in females because, with complete dominance, the phenotypic expression of AA and Aa is the same. The dominant phenotype then appears with probability 0.25 + 0.50 = 0.75, giving a lower population variance of

$$(0.25 + 0.50) \times (1.0 - 0.75) = 0.75 \times 0.25 \approx 0.188$$
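The two variances in the Johnson et al. example can be reproduced directly. This sketch assumes Hardy-Weinberg genotype frequencies and a 0/1 coding of the phenotype; the helper name `bernoulli_variance` is illustrative.

```python
def bernoulli_variance(p):
    """Variance of a two-valued (0/1) phenotype that appears with probability p."""
    return p * (1 - p)

p_A = 0.5  # frequency of the dominant allele A (equal frequencies, as in the text)

# Males: one X, so the phenotype is A with probability p_A.
male_var = bernoulli_variance(p_A)

# Females, complete dominance: AA and Aa look the same, so the dominant
# phenotype appears with probability P(AA) + P(Aa).
p_AA = p_A ** 2
p_Aa = 2 * p_A * (1 - p_A)
female_var = bernoulli_variance(p_AA + p_Aa)

print(male_var, female_var)
```

Under these assumptions the male variance is 0.25 and the female variance 0.1875, the 0.188 given above.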

But the fact that females have two X chromosomes while males have one complicates matters. Nature corrects this imbalance by silencing, or “inactivating,” one of the two female X chromosomes. Which X chromosome is silenced is determined at random early in gestation, when the embryo has between 8 and 16 cells (Craig et al., 2009). In a heterozygous Aa female, roughly half of these cells will keep chromosome X1 with allele A active, and half will keep chromosome X2 with allele a active. This equal split between the two X chromosomes and their different alleles leads to a phenotypic expression that is the average of alleles A and a. Because half of females have the heterozygous genotype Aa, and one-quarter each have the homozygous AA and aa genotypes, their distribution is closer to normal and has a smaller variance than the two-valued (binomial) distribution of A and a in males. In short, this is the source of greater male variance, and it is discussed in greater detail below.
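The averaging effect of X inactivation can be sketched as a small discrete distribution. This is an idealization: it assumes allele A contributes 1 and allele a contributes 0 (a hypothetical coding), equal allele frequencies, and that an Aa female expresses exactly the average of the two alleles because each X is active in half her cells.

```python
def variance(values_and_probs):
    """Population variance of a discrete distribution given (value, prob) pairs."""
    mean = sum(v * p for v, p in values_and_probs)
    return sum(p * (v - mean) ** 2 for v, p in values_and_probs)

# Hypothetical coding: allele A contributes 1, allele a contributes 0.
male = [(1.0, 0.5), (0.0, 0.5)]                   # hemizygous: A or a
female = [(1.0, 0.25), (0.5, 0.50), (0.0, 0.25)]  # AA, Aa (mosaic average), aa

male_var = variance(male)
female_var = variance(female)
print(male_var, female_var, male_var / female_var)
```

Under these assumptions the female variance (0.125) is half the male variance (0.25), even though both sexes have the same mean; in real tissue the split between active X chromosomes varies from person to person, so the ratio would not be exactly 2.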

Published Studies

The following section presents in some detail what I think is the most comprehensive review extant of the literature bearing on the issue of male and female variance in mental ability testing. The studies reviewed unmistakably make the case that 1) males are more variable on virtually all tests of mathematical, spatial, and science aptitude and achievement, at both the high and low ends of the respective test score distributions, and 2) males are also more variable on many tests of verbal aptitude and achievement, especially at the low end of the test score distributions.

Benbow and Stanley (1980, 1983)

The issue of differential variance was given its current prominence by Benbow and Stanley, who reported in two papers (1980 and 1983) on sex differences in mathematics based on large samples of mostly 7th-grade children who were given both the verbal and mathematics sections of the Scholastic Aptitude Test as part of the Study of Mathematically Precocious Youth (SMPY).

The SAT-Mathematics test (SAT-M) is normally taken by college-bound high school seniors, who, at age 17 or 18, are 5 to 6 years older than the SMPY 7th graders, all of whom were age 12 except for a small number of 13-year-olds in the early years of the study. Few of the SMPY children had taken algebra or had any formal training in the skills needed for the SAT-M. Benbow and Stanley (1980) gave the SAT-M not to test mathematical aptitude but because the test was so far above the skill level of 7th graders that it would be a test of their “numerical judgment, relational thinking, and logical reasoning.” Spearman (1904) would recognize the ability Benbow and Stanley were testing as general intelligence, or g.

The 1980 report was based on the scores of 9,927 students who were recruited from the greater Baltimore region between 1972 and 1979 after scoring in the top 2 to 5 percent on a mathematics screening test. Tables 1 and 2 show the results for both the verbal and mathematics scores. Excluding the 8th-grade scores for December 1976 because of the small N, the mean d value of -.03 for the verbal scores shows that males and females were about equal in verbal reasoning, and the mean variance ratio of 1.04 also suggests parity in variability. But the mean d value of .50 and the mean variance ratio of 1.58 for the mathematics scores show that males scored half a standard deviation higher and were nearly 60 percent more variable than females. The extreme mathematics scores were even more disparate: 16.6% of the males scored above 600, but only 2.1% of the females did.
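For readers unfamiliar with the two statistics used above, here is a sketch of how d and the variance ratio are commonly computed from group summaries. It uses one common convention for d (difference in means divided by the pooled SD); the exact formula used in the original papers is not stated here, and the example means, SDs, and group sizes are hypothetical.

```python
import math

def cohens_d(mean_m, sd_m, n_m, mean_f, sd_f, n_f):
    """Standardized mean difference (male minus female) using the pooled SD."""
    pooled_var = ((n_m - 1) * sd_m**2 + (n_f - 1) * sd_f**2) / (n_m + n_f - 2)
    return (mean_m - mean_f) / math.sqrt(pooled_var)

def variance_ratio(sd_m, sd_f):
    """Male variance divided by female variance; above 1 means males vary more."""
    return sd_m**2 / sd_f**2

# Hypothetical summary statistics, for illustration only.
d = cohens_d(450, 90, 1000, 410, 80, 1000)
vr = variance_ratio(90, 80)
print(f"d = {d:.2f}, variance ratio = {vr:.2f}")
```

A positive d means the male mean is higher; a variance ratio of 1.58 means the male variance is 58 percent larger than the female variance.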

In 1983, Benbow and Stanley reported SAT-M results from nearly 40,000 students in the mid-Atlantic region and from another large group in a nationwide talent search within and beyond the Johns Hopkins talent search area. All students were under age 13. The results were similar to those from the earlier study. No difference was found on the SAT-Verbal, with male and female means of 367 and 365, respectively (standard deviations were not reported). But there was a 30-point mean difference on the SAT-Mathematics, with male and female means of 416 and 386, respectively. The variance ratio was 1.38, somewhat smaller than the 1980 ratio of 1.58 but again showing that males are more variable than females. More importantly, as shown in Table 3, the number of boys scoring above 700 on the SAT-M across both national search samples was 13 times the number of girls (260:20), despite equal numbers of boys and girls taking the screening test.
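The 13:1 figure is a ratio of counts, which equals the ratio of proportions only because the two groups taking the test were the same size. This sketch makes that step explicit; the group size of 10,000 is a placeholder standing in for "equal numbers", not the actual N.

```python
def tail_ratio(count_m, n_m, count_f, n_f):
    """Male-to-female ratio of the proportions scoring above a cutoff.
    Equals count_m / count_f only when the two groups are the same size."""
    return (count_m / n_m) / (count_f / n_f)

# Counts above 700 from the text; n is a hypothetical placeholder.
n = 10_000
print(tail_ratio(260, n, 20, n))  # ~13, the 13:1 ratio

# If twice as many boys as girls had been tested, the same counts
# would correspond to only half the ratio.
print(tail_ratio(260, 2 * n, 20, n))
```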

Comment. Benbow and Stanley’s findings gave enormous impetus to research on sex differences in cognitive ability generally and to sex differences in variability specifically.

The extreme 13-to-1 ratio has become ingrained in the literature on sex differences in variability even though it has been out of date since 1990; the ratio is now 2.8:1, or in round numbers 3:1. Use of the SAT allowed Benbow and Stanley to avoid ceiling effects: few students scored above 700, and in many years no one scored a perfect 800. Their samples were young and highly selected, making it possible to generalize only to the very brightest students rather than to the population of seventh graders as a whole. The students were volunteers, a special group that probably differs in many ways from students in general, although it is unlikely that these factors substantially affected the differential pattern of scoring. Comparing means and total score variances between two groups, all of whose members are in the right tail of the score distribution, is questionable.
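Why tail ratios are so sensitive to where the cutoff falls can be illustrated with two normal distributions. This is a sketch, not a reanalysis of the SMPY data: it assumes both sexes are normally distributed, standardizes females to mean 0 and SD 1, and uses a hypothetical variance ratio of 1.2 with equal means.

```python
from statistics import NormalDist

def tail_ratio_normal(d, var_ratio, cutoff_sd):
    """Male:female ratio of the proportions above a cutoff, assuming normal
    distributions. Females have mean 0 and SD 1; males have mean d and SD
    sqrt(var_ratio). The cutoff is given in female SD units."""
    female = NormalDist(mu=0.0, sigma=1.0)
    male = NormalDist(mu=d, sigma=var_ratio ** 0.5)
    return (1 - male.cdf(cutoff_sd)) / (1 - female.cdf(cutoff_sd))

# Equal means, modestly larger male variance (hypothetical values).
for cutoff in (1.0, 2.0, 3.0):
    print(cutoff, round(tail_ratio_normal(0.0, 1.2, cutoff), 2))
```

Even with identical means, the ratio grows as the cutoff moves farther into the tail, so a ratio reported for one cutoff and one selected sample does not carry over directly to another.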

Benbow and Stanley (1980, 1983) were among the earliest to note that the traditional arguments made to explain the lower numbers of extremely able females in mathematics, such as lack of opportunity to study math and social attitudes discouraging females from pursuing careers in math and science, would create mean differences between the sexes, not variance differences. Benbow and Stanley also noted that through 11th grade, boys and girls take the same math courses, obtain about the same grades, and rate their liking for mathematics and their perception of its importance similarly. Summarizing their assessment of theories explaining male superiority at the highest score levels, Benbow and Stanley stated in their 1980 report that treating “boy-versus-girl socialization” as the only acceptable explanation of the sex difference is “premature,” and in 1983 said that the reasons boys “dominate the highest ranges of mathematical reasoning ability were unclear.”
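The mean-versus-variance argument rests on a simple property: subtracting the same amount from every score moves the mean but leaves the variance untouched. This sketch assumes, for illustration only, that a social disadvantage acts as an identical penalty on every student; the scores and penalty are hypothetical.

```python
import math
import statistics

# Hypothetical scores for one group; illustrative numbers only.
scores = [380, 420, 450, 470, 510, 560, 600]

# Model a uniform disadvantage as the same penalty subtracted from every score.
penalty = 30
shifted = [s - penalty for s in scores]

mean_drop = statistics.mean(scores) - statistics.mean(shifted)
same_spread = math.isclose(statistics.pvariance(scores), statistics.pvariance(shifted))

print(f"mean drops by {mean_drop:.1f}; variance unchanged: {same_spread}")
```

A disadvantage that affected some students more than others would change the variance as well, so the argument applies most cleanly to influences that act roughly uniformly.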



New Post has been published on https://pagedesignpro.com/the-future-car-automobile-a-subjective-insight/

The Future Car Automobile, A Subjective Insight

The future car, let’s say a 2025 model, will be a very different machine from its present-day grandfather. Future cars will be packed with computers and safety devices ensuring that not even a stunt driver can crash, and they will run on carbon dioxide and emit strawberry-scented oxygen. Well, they might.

Predictions of future vehicles are usually wildly inaccurate; by now we should all have space programs to rival NASA based in our backyards. However, some of the cutting-edge automotive technology emerging now may well make it into production models of the future. But how much of this technology will be needed or even wanted? Car design is already influenced by politicians, bureaucrats, and health, safety, and emissions regulations, so the stunning concept seen at the motor show emerges into the world as a 1.0-liter shopping cart. But at least it’s legal.

Personally, I like the idea of fuel-efficient hybrid cars and fuel cell cars emitting nothing but water. However, like most enthusiasts, I also like powerful, loud convertibles with the ability to snap vertebrae at 10 paces. A sensible mix is therefore required, and the job entrusted to the car designers and engineers of the future will be to make a politically correct car that is also desirable.

Another imminent problem facing designers of the future is coming up with styling ideas that are fresh and new. Some of the more recent concepts are certainly striking but not necessarily beautiful in the classic sense of the word. But if there is one thing the automobile industry is good at, it is innovation, and I for one am confident that radical and extreme concepts never before imagined will continue to appear at motor shows around the world.

Computer technology has already taken a firm hold of the automobile, and the modern driver is less and less responsible for the actions of the vehicle. Soon crash victims will try to sue electronics companies for accidents they themselves caused, because the computer system failed to brake the car while they were fast asleep at the wheel on a three-lane highway. In my mind, until every single vehicle on every single road is automated, computer-driven cars are just not feasible: there are far too many on-the-road variables, and with all the logic in the world you cannot beat a brain. Computer-assisted driving is already available in certain Mercedes models, which brake for you if you’re not looking where you’re going and you’re too close to the car in front, but there is still a driver in control of the car. Or is there? What if, for some reason, you needed to get closer to the car in front, and if you didn’t, something terrible would happen to civilization? Where do you draw the line with vehicle automation?

In the advanced cities of the future, CCTV will be so prevalent that visible crimes such as car theft are all but wiped out, but that still won’t stop the determined thief. GPS (Global Positioning System) units fitted as standard to all new automobiles will be able to track any vehicle anytime, anywhere; this technology is already common, and more widespread use is inevitable. In the UK, the government is already talking about fitting GPS to charge motorists according to which roads they travel on and at what times, to cut spiraling congestion. This technology, coupled with improved mobile phone and Bluetooth networks, can be used to track and recover stolen vehicles. When a vehicle is found to be stolen, a call can be made that shuts down its engine. At the same time, an alert is sent to available police nearby, and by using the GPS installed in both the stolen car and the police car, officers can track the car even if they cannot see it. The police will also have much more insight into a vehicle’s record when out on patrol: using Bluetooth technology, a police car can tail a vehicle and receive information on the owner, the current driver and their driving history, and even recent top speed and acceleration figures. Of course, who wants that? You think you’ve just had a fun little blast on your favorite bit of road, then you get pulled over five miles later and the officer gives you a speeding ticket for something he didn’t see. But if this technology is mandatory, what choice do you have?