#stereoscopic sensor array

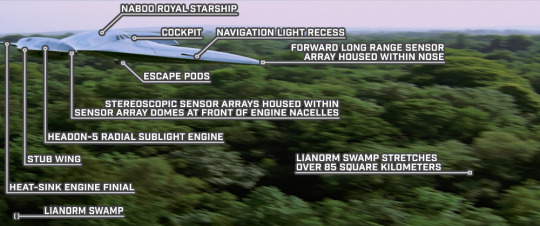

The Royal Starship Flies Over the Swamps

STAR WARS EPISODE I: The Phantom Menace 01:38:16

#Star Wars#Episode I#The Phantom Menace#Naboo#Lianorm Swamp#Naboo Royal Starship#cockpit#navigation light recess#forward long range sensor array#escape pod#stereoscopic sensor array#sensor array dome#engine nacelle#Headon-5 radial sublight engine#stub wing#heat-sink finial

Eye-Tracking 3D Display: The Future of Immersive Technology

WhatsApp & Wechat: +86 18038197291

www.xygledscreen.com

What is an Eye-Tracking Function?

Eye tracking is a technology that follows where your eyes are looking and how they move. In a 3D display, it makes the image feel more real by knowing the direction you are viewing it from. Cameras and sensors locate your eyes, and the display adjusts the image so that you see it in 3D, reproducing the way our eyes naturally perceive depth.

Eye tracking has been developing for many years, and as the technology improves it is now being built into 3D screens. It is used in many fields, such as art and film, and in medical training, where it helps simulate clinical situations.

Like any new technology, eye tracking still has problems to solve, chiefly accuracy and reliability, and manufacturers work constantly to improve both. Because it can follow several viewers' eyes at once, it could change how we watch movies, play games, and use digital media. In short, eye tracking is central to glasses-free 3D displays, making viewing more realistic and engaging.

Understanding Eye-Tracking Technology

The technology combines two essential components: eye tracking and a lenticular lens array. Eye tracking uses sensors and cameras to follow how the eyes move, while the lenticular lens array shows 3D content without special glasses or headsets. Both have been developed over many years and are now being combined in 3D screens.

Eye-tracking 3D relies on the principle of stereoscopic vision, which is how our eyes perceive depth. Because our eyes sit a few centimeters apart, each one sees the same scene from a slightly different position, producing two very similar images with small differences. Our brain combines these images into a sense of depth, letting us see in 3D. An eye-tracking display imitates this natural process: it follows where your eyes are and adjusts what it shows so that each eye receives the appropriate image.
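To make the geometry concrete, here is a minimal sketch of how far apart the left-eye and right-eye images of one point must be drawn on the screen. It assumes a simplified setup the post does not describe: both eyes on a horizontal line centered in front of the screen, an interpupillary distance (IPD) of about 63 mm, the screen at distance D, and a point straight ahead at distance Z. Similar triangles give a disparity of IPD × (1 − D/Z). The function name and values are illustrative only.

```python
def screen_disparity(ipd_m: float, screen_dist_m: float, point_dist_m: float) -> float:
    """On-screen separation (right-eye image minus left-eye image), in meters.

    Simplified geometry (an assumption for illustration): eyes at x = -ipd/2 and
    x = +ipd/2, the screen plane at screen_dist_m, a point straight ahead at
    point_dist_m. Positive = point appears behind the screen, negative = in front.
    """
    if point_dist_m <= 0 or screen_dist_m <= 0:
        raise ValueError("distances must be positive")
    # Projecting the point onto the screen from each eye gives
    # x_left = -ipd/2 * (1 - D/Z) and x_right = +ipd/2 * (1 - D/Z).
    return ipd_m * (1.0 - screen_dist_m / point_dist_m)


if __name__ == "__main__":
    ipd = 0.063    # assumed typical adult interpupillary distance, meters
    screen = 0.6   # assumed viewing distance to the display, meters
    for depth in (0.4, 0.6, 1.2, 100.0):
        d_mm = screen_disparity(ipd, screen, depth) * 1000
        print(f"point at {depth} m -> disparity {d_mm:+.1f} mm")
```

A point at the screen distance needs no disparity; points farther away approach the full IPD. An eye-tracking display would redo this calculation using the measured eye positions rather than fixed assumed ones.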

The other key component of an eye-tracking 3D display is the lenticular lens array. It places many tiny lenses over the screen to redirect light rays, creating the impression of depth. The lenses let each eye see a different image, a stereoscopic effect that mimics how our eyes normally see in 3D. This removes the need for glasses or headsets and gives a more immersive, natural viewing experience.
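As a rough illustration of how a lens sheet can send different images to each eye, the sketch below builds a frame for a hypothetical two-view panel by interleaving pixel columns from a left-eye and a right-eye image. This is a deliberate simplification: real lenticular panels often interleave at the subpixel level under slanted lenses and use more than two views. The `phase` parameter, which swaps which columns go to which eye, stands in for the kind of adjustment an eye tracker could make as the viewer moves sideways.

```python
from typing import List, Sequence

Image = List[List[int]]  # rows of grayscale pixel values, for simplicity


def interleave_two_views(left: Sequence[Sequence[int]],
                         right: Sequence[Sequence[int]],
                         phase: int = 0) -> Image:
    """Column-interleave two equally sized views for a two-view lenticular panel.

    Columns where (x + phase) is even take the left-eye pixel; the others take
    the right-eye pixel. Flipping `phase` between 0 and 1 swaps the eyes.
    """
    if len(left) != len(right) or any(len(a) != len(b) for a, b in zip(left, right)):
        raise ValueError("left and right views must have identical dimensions")
    frame: Image = []
    for row_l, row_r in zip(left, right):
        frame.append([l if (x + phase) % 2 == 0 else r
                      for x, (l, r) in enumerate(zip(row_l, row_r))])
    return frame


if __name__ == "__main__":
    left_view = [[1, 1, 1, 1], [1, 1, 1, 1]]
    right_view = [[2, 2, 2, 2], [2, 2, 2, 2]]
    print(interleave_two_views(left_view, right_view))
    print(interleave_two_views(left_view, right_view, phase=1))
```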

The technology has limits, such as tracking accuracy and the range of viewing angles. Eye-tracking accuracy can vary with external factors such as distance, lighting, and movement, and there is a slight delay between an eye movement and the corresponding update on screen. Manufacturers are continually working to make eye tracking more precise and dependable to address these problems.
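One common way to hide part of this delay, not something the post itself describes, is to predict where the eyes will be by the time the next frame is shown. Here is a minimal sketch, assuming a constant-velocity model, a tracker that reports timestamped horizontal eye positions, and a known end-to-end latency. The `EyeSample` type and `predict_position` function are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class EyeSample:
    t: float  # timestamp, seconds
    x: float  # horizontal eye position, meters (hypothetical tracker coordinates)


def predict_position(prev: EyeSample, curr: EyeSample, latency_s: float) -> float:
    """Extrapolate the eye position latency_s seconds past the latest sample.

    Assumes the eye keeps the velocity measured between the two most recent
    samples; this is an illustrative predictor, not the post's method.
    """
    dt = curr.t - prev.t
    if dt <= 0:
        return curr.x  # no usable velocity estimate; fall back to the last reading
    velocity = (curr.x - prev.x) / dt
    return curr.x + velocity * latency_s


if __name__ == "__main__":
    # Eye moving 0.1 m/s to the right; compensate for an assumed 20 ms latency.
    print(predict_position(EyeSample(0.00, 0.000), EyeSample(0.01, 0.001), 0.02))
```

Constant-velocity prediction is cheap but amplifies tracker noise, so in practice it would usually be paired with some smoothing.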

The Uses of Eye-Tracking 3D Display

As mentioned above, the technology has a broad range of uses, and the list grows as it improves. One major use is in design and art, where 3D models and designs can be created more precisely. Designers and artists can view their work more realistically, which makes the design process better and faster.

In healthcare, eye-tracking 3D displays are used in training sessions for doctors and students. The more immersive experience makes training feel realistic and helps trainees learn, and it lets them practice careful, precise procedures without endangering patients.

In the entertainment industry, 3D screens that track your eyes could change how we watch movies and shows. Because several viewers can be tracked at once, people can play 3D games together without headsets or glasses, making gaming more enjoyable.

Eye-tracking 3D screens are also being explored in education, where they could improve learning. They can show three-dimensional content without special glasses, which makes it easier for students to learn and keeps lessons engaging.

In advertising, eye-tracking 3D displays can make ads more eye-catching. Because the display follows where a viewer is looking, it allows for personalized ads, opening new ways to sell products and connect with customers.

The potential uses are wide-ranging, and as the technology improves we will likely see it in more industries and markets. It can greatly improve how we use technology and media, giving users a more engaging experience with the content they interact with.

The Future Market of Eye-Tracking 3D Display Technology

As eye-tracking 3D display technology improves and becomes cheaper, it is expected to have a big effect on the display market. Today, the 3D market is dominated by glasses and headsets, which can be uncomfortable over long periods. Manufacturers are using eye tracking to reach new markets such as consumer electronics and home entertainment.

Demand for the technology is expected to grow thanks to better eye tracking and rising interest in 3D content. A report by Springer projects that the global market for eye-tracking technology will be worth $3.4 billion in 2025, with much of it coming from technology used to display three-dimensional images on screen.

In conclusion, eye-tracking 3D screens are a major step forward in three-dimensional technology. Their ability to deliver glasses-free, shared viewing opens up new possibilities in entertainment, design, and education. As the technology improves and becomes easier to use, it is likely to become a normal part of everyday life.

FAQs about eye-tracking 3D displays

Is an eye-tracking 3D display suitable for multiple users? How does it work?

A common question about eye-tracking 3D displays is whether they work for more than one person. The answer is yes, because the technology can track several viewers' eyes at once, which opens up multiplayer games and group activities. Cameras and sensors follow where each person's eyes are, and the display adjusts its output so that each viewer sees the content in 3D.
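The post does not say how a display serves several viewers at once. As a minimal sketch under stated assumptions, the function below takes hypothetical tracked eye positions for each viewer (meters, relative to the screen center), skips anyone beyond an assumed 3 m tracking range, and computes each remaining viewer's horizontal viewing angle, which a renderer could use to choose that viewer's perspective. The data layout and function name are illustrative.

```python
import math
from typing import Dict, Tuple

Point = Tuple[float, float]  # (x, z) in meters: x = sideways offset, z = distance from screen


def viewer_angles(viewers: Dict[str, Tuple[Point, Point]],
                  max_range_m: float = 3.0) -> Dict[str, float]:
    """Return each tracked viewer's horizontal viewing angle, in degrees.

    `viewers` maps a hypothetical viewer ID to (left_eye, right_eye) positions.
    The angle is measured from the screen's center normal to the midpoint
    between the eyes. Viewers beyond max_range_m (an assumed limit) are skipped.
    """
    angles: Dict[str, float] = {}
    for viewer_id, ((lx, lz), (rx, rz)) in viewers.items():
        mx, mz = (lx + rx) / 2, (lz + rz) / 2  # midpoint between the two eyes
        if math.hypot(mx, mz) > max_range_m:
            continue  # out of tracking range
        angles[viewer_id] = math.degrees(math.atan2(mx, mz))
    return angles


if __name__ == "__main__":
    tracked = {
        "a": ((-0.03, 1.0), (0.03, 1.0)),  # straight ahead, 1 m away
        "b": ((0.97, 1.0), (1.03, 1.0)),   # 1 m to the side, 1 m away
        "c": ((-0.03, 5.0), (0.03, 5.0)),  # too far away to track
    }
    print(viewer_angles(tracked))
```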

How far away can an eye-tracking 3D display track a viewer?

The tracking distance depends on the technology each manufacturer uses. Currently, most eye-tracking 3D displays can detect eyes up to 3 meters away, and this range is expected to grow as eye-tracking technology improves.

How large can an eye-tracking 3D display be?

Eye-tracking 3D displays come in many sizes, from small desktop units to large screens for homes and business advertising. Screen size does not limit eye tracking; it works as long as the cameras and sensors are positioned to see the viewers' eyes.

Is it suitable for viewing from all angles?

Yes. Unlike conventional 3D displays, which require you to stay in a particular spot to see the 3D effect, an eye-tracking display follows where your eyes go and adjusts the image accordingly, so you can see the 3D effect from different angles without having to hold still.

A Brief Introduction to Immersive Systems: History of VR (part 3)

In 2001, SAS Cube becomes the first PC-based cubic room. It leads to the Virtools VR Pack. Virtools, a software developer and vendor founded in 1993, was owned by Dassault Systèmes from July 2005; its software is no longer updated and was taken down in March 2009.

In 2007, Google launches Street View. Immersive Media is identified as the contractor that captures the imagery for four of the five cities initially mapped by Street View, using its patented dodecahedral camera array mounted on a moving car.

In 2010, Google introduces a stereoscopic 3D mode for Street View. The same year, Palmer Luckey creates the first prototype of the Oculus Rift headset. It features a 90-degree field of view, unprecedented at the time, and relies on a computer’s processing power to deliver the images. This development renews interest in VR.

In 2012, Luckey launches a Kickstarter campaign for the Oculus Rift which raises $2.4 million.

In 2014, Facebook buys the Oculus VR company for $2 billion. This is a defining moment in VR’s history because VR gains momentum rapidly after this.

Sony announces that it is working on Project Morpheus, a VR headset for the PlayStation 4 (PS4).

Google releases Cardboard, a low-cost, do-it-yourself stereoscopic viewer for smartphones. Interestingly, Cardboard’s design is reminiscent of a stereoscope or a View-Master: regardless of how advanced the technology gets, it keeps following the same basic principles.

Samsung announces the Samsung Gear VR, a headset that uses a Samsung Galaxy smartphone as a viewer.

More people start exploring the possibilities of VR, including innovative accessories. For example, Cratesmith, an independent developer, recreates a hoverboard scene from Back to the Future by pairing the Oculus Rift with a Wii Balance Board.

In 2015, VR possibilities start becoming widely available to the general public, for example:

The BBC creates a 360-degree video where users view a Syrian migrant camp.

RYOT, a media company, exhibits Confinement, a short VR film about solitary confinement in US prisons.

Gloveone is successful in its Kickstarter campaign. These gloves let users feel and interact with virtual objects.

By 2016, hundreds of companies are developing VR products. Most headsets have dynamic binaural audio, but haptic interfaces are still underdeveloped. Haptic interfaces are systems that let people interact with a computer through touch and movement, like the Gloveone gloves in development; because they are immature, hand controllers are typically button-operated. In 2016, HTC releases its HTC VIVE SteamVR headset, the first commercial headset with sensor-based tracking that lets users move freely in a space.

In 2017, many companies are developing their own VR headsets, including HTC, Google, Apple, Amazon, Microsoft, Sony, and Samsung. Sony may be developing location-tracking technology similar to HTC VIVE’s for the PlayStation 4.

In 2018, at Facebook F8, Oculus demonstrates a new headset prototype, the Half Dome, a varifocal headset with a 140-degree field of view. Virtual reality has progressed significantly and is now used in many ways: providing immersive gaming experiences, helping treat psychological disorders, teaching new skills, and even taking terminally ill people on virtual journeys. With the rise of smartphone technology, VR will become even more accessible, and with many companies competing, novel controllers being explored, and a wide range of uses, the field can only improve.

Barnard, Dom. "History of VR - Timeline of Events and Tech Development." Virtualspeech. August 06, 2019. https://virtualspeech.com/blog/history-of-vr.

#vr#virtual reality#history of vr#history of virtual reality#headsets#sony#google#facebook#playstation#playstation vr#oculus rift#immersion#immersive technology#wearable technology#technology#technology of the future

2020 Subaru Legacy Tech Dive: EyeSight, DriverFocus, Starlink Shine

Subaru Legacy Touring XT 2020 (April)

The 2020 Subaru Legacy is a near-perfect car if you’re looking for solid transportation and extensive safety technology across all trim lines. Every Legacy has all-wheel drive and enough driver-assist technology (called Subaru EyeSight) to be virtually self-driving on highways while protecting pedestrians in town. The Legacy can also track and alert inattentive drivers (DriverFocus) and call for help in an accident (Subaru Starlink).

The new, 2020 seventh-generation Legacy also has front cupholders deep enough to not spill a 32-ounce Big Gulp, were the car capable of a 4-second 0-60 run (it’s more like 7 to 9 seconds, depending on the engine). The engine’s “boxer” technology, similar to what Porsche uses, lowers the car’s center of gravity. The front and back rows are spacious and the trunk is enormous. Highway mileage is in the upper thirties.

So what’s not to like? Not much. This Subie won’t move the excitement needle quite like Mazda or Honda does among midsize sedans. It’s not as dazzling as the 2020 Hyundai Sonata. There’s less ground clearance than the similar Subaru Outback crossover. The new infotainment system and navigation had a few quirks, the kind a firmware upgrade typically cures, and stop-start twisted the steering wheel and my thumb a couple of times (more below).

The Nappa leather cockpit of the 2020 Subaru Legacy.

The Car for Inattentive Drivers?

You say you’re a good driver; I say I’m a good driver. Yet surveys find that a majority of Americans describe themselves as above-average drivers, which is statistically impossible. And we all know people close to us whose driving skills or cognition worry us: teenagers and others in their first few years of driving, aging parents, a spouse or partner who’s had a couple of fender-benders that were the fault of “the other guy,” and people who text or create playlists on the fly even when they know it’s unsafe.

Subaru is a leader among automakers in making virtually all its safety technology standard across every one of the six trim lines, or model variants, of this new 2020 car. Buy any Legacy Base, Premium, Sport, Limited, Limited XT, or Touring XT and you get:

A dual front-facing camera system, Subaru EyeSight, that keeps you in your driving lane, warns of and brakes for possible forward collisions, and detects and brakes for pedestrians at speeds up to 20 mph.

Full-range adaptive cruise control as part of EyeSight.

An active driving assistance system that controls speed and lane centering, pacing any car in front of you, also part of EyeSight.

LED low and high-beam headlamps with automatic high-beam control.

All-wheel-drive for extra grip in snow or rain, or on gravel roads.

Any Legacy other than the base model has safety telematics (called Subaru Starlink) standard. Blind-spot warning is available, optional on two trim lines and standard on three; it also includes rear cross-traffic alert and automatic braking while backing up. An excellent eye-tracking driver distraction system, DriverFocus, is standard on the top two trim lines and optional on a third.

One feature not offered is a surround-view camera array that primarily improves tight-spaces parking, but it also protects you (if you watch the screen) from running into kids’ tricycles or kids on tricycles. Rear auto-braking provides that protection.

With the 260-hp turbo engine (top two trim lines only), you’ll hit 60 mph in 6-7 seconds. Add 2 seconds for the 182-hp engine on other Legacies.

Legacy on the Road: Mostly Smooth Sailing

I drove the top-of-the-line 2020 Subaru Legacy Touring XT, about $37,000 including shipping, with warm brown Nappa leather, moonroof, an 11-inch portrait-orientation center stack LCD, vented front seats, heated fronts and rears, onboard navigation, and immense amounts of back-seat legroom and trunk room.

Subaru lie-flat boxer engine: two cylinders go left, two cylinders go right.

With the new 2.4-liter turbo engine of 260 hp and continuously variable transmission on the Limited and Touring XTs, it was quick, hitting 60 mph in 6-7 seconds. Highway miles went by quickly. Under foot-down acceleration, there wasn’t much noise from the CVT; some testers have noted it on the non-turbo Legacy, which has to be pushed harder to get up to highway speeds.

Most four-cylinder cars have an inline engine. Most Subarus, including the Legacy, have horizontally opposed (flat, or boxer) engines. They are effectively V engines where the angle is 180 degrees, not the 60 or 90 degrees of V6 or V8 engines. The engine is more compact, has less inherent vibration, gives the car a lower center of gravity, and allows for a lower hood and better driver sightlines. Against that, the engine requires two cylinder heads. Porsche also uses flat-six engines in the 911, Cayman, Spyder, and Boxster. The term boxer alludes not to the small crate it fits in, but rather to the in-out motion of the two adjacent pistons, which looks like a boxer’s fists.

Where most automakers use a combination of radar and a camera for driver assists, Subaru’s EyeSight system uses stereoscopic cameras. It’s standard on the 2020 Legacy, Forester, Outback, and Ascent, and available on the Impreza, Crosstrek, and WRX.
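How does a pair of cameras measure distance without radar? Subaru’s exact processing is not described here, but the textbook relation for two parallel, calibrated cameras is Z = f × B / d: depth equals focal length (in pixels) times the camera spacing (baseline), divided by the pixel shift (disparity) of an object between the left and right images. The sketch below uses that relation; the focal length and baseline are assumptions, not EyeSight specifications.

```python
def stereo_depth_m(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Distance to an object seen by two parallel, calibrated cameras.

    Pinhole stereo relation Z = f * B / d, with f the focal length in pixels,
    B the distance between the cameras in meters, and d the horizontal shift
    of the object between the left and right images, in pixels.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero means the object is at infinity)")
    return focal_px * baseline_m / disparity_px


if __name__ == "__main__":
    focal_px = 1400.0   # assumed focal length in pixels
    baseline_m = 0.35   # assumed spacing between the two cameras
    for d in (49.0, 48.0, 10.0, 9.0):
        print(f"disparity {d} px -> {stereo_depth_m(focal_px, baseline_m, d):.1f} m")
```

With these assumed values, a one-pixel change near 49 pixels moves the estimate by about 0.2 m, while near 10 pixels it moves it by more than 5 m, which is why stereo range estimates get coarser with distance.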

Pedestrian Detection Saves Another Jaywalker

Highway driving was enjoyable thanks to the driver assists, a nicely sound-insulated cabin, very good Harman Kardon premium audio, Wi-Fi on Starlink telematics cars, and USB jacks for four people. In town, the driver assists work well: a jaywalker who popped out mid-block was detected and the car came to a quick (sudden) stop. But spirited back-roads driving was not as much fun as in some other cars in its class, notably the Mazda6 and Honda Accord. The 2020 Subaru Legacy is based on the same new platform as the 2020 Subaru Outback crossover-almost-wagon, but the Legacy’s ground clearance is 5.9 inches versus 8.7 inches for the Outback. So the Legacy is fine in rain, snow, and on gravel roads, but not the first choice among Subarus if the road to your country cabin is deeply rutted.

Subaru has rudimentary self-driving capabilities utilizing EyeSight, although Subaru doesn’t consider it to be formal self-drive tech and has no Eye-something shorthand name such as, say, EyeDrive. (BMW might not be amused.) Once activated, it centers you on a highway and proceeds at a pre-set speed, slowing for cars in front of you. It combines Subaru’s Advanced Adaptive Cruise Control feature with Lane Centering. As with other vehicles, activation is a multi-step process.
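Subaru does not publish EyeSight’s control logic, so the following is only a generic, simplified picture of what “proceeds at a pre-set speed, slowing for cars in front of you” means. It is a time-gap style cruise controller: with no car ahead it holds the set speed; with a car ahead it matches that car’s speed plus a correction proportional to how far the gap is from a desired gap. Every parameter (2-second gap, 5 m standstill gap, 0.3 gain) is an illustrative assumption, and lane centering is not modeled.

```python
from typing import Optional


def acc_target_speed(set_speed: float,
                     lead_distance: Optional[float],
                     lead_speed: Optional[float],
                     time_gap_s: float = 2.0,
                     min_gap_m: float = 5.0,
                     gain: float = 0.3) -> float:
    """Target speed in m/s for a simplified adaptive cruise control.

    The desired gap grows with the lead car's speed (a simplification; many
    controllers use the host car's own speed). The result is clamped between
    0 and the driver's set speed.
    """
    if lead_distance is None or lead_speed is None:
        return set_speed  # open road: hold the set speed
    desired_gap = min_gap_m + time_gap_s * lead_speed
    gap_error = lead_distance - desired_gap  # positive = more room than needed
    target = lead_speed + gain * gap_error
    return max(0.0, min(set_speed, target))


if __name__ == "__main__":
    print(acc_target_speed(30.0, None, None))    # no car ahead
    print(acc_target_speed(30.0, 45.0, 25.0))    # slower car, gap too short
    print(acc_target_speed(30.0, 200.0, 25.0))   # slower car, far ahead
```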

DriverFocus, on upper trim lines, combines a camera and infrared illuminator. It watches to see if the driver’s eyes are on the road ahead.

DriverFocus: Big Brother Is on Your Side

Take your eyes off the road, and the DriverFocus eye-tracker tells you to pay attention.

Subaru DriverFocus, an eyebrow module at the top of the center stack, contains a camera and IR illuminator to track where the driver is looking, and rats you out after 10-15 seconds of not looking ahead. GM’s highly regarded Super Cruise self-driving technology uses eye-tracking also.
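The alerting behavior described here reduces to a timer that starts when the driver’s gaze leaves the road and resets when it returns. A minimal sketch, assuming a gaze classifier already reports “eyes on road” per sample; the 10-second threshold is taken from the low end of the review’s 10-15 second range, and the class is hypothetical, not Subaru’s implementation.

```python
from typing import Optional


class EyesOffRoadMonitor:
    """Raise an alert when the driver has not looked ahead for too long."""

    def __init__(self, threshold_s: float = 10.0) -> None:
        self.threshold_s = threshold_s            # assumed alert threshold, seconds
        self._away_since: Optional[float] = None  # when the gaze first left the road

    def update(self, t: float, eyes_on_road: bool) -> bool:
        """Feed one gaze sample at time t (seconds); return True if an alert is due."""
        if eyes_on_road:
            self._away_since = None  # looking ahead resets the timer
            return False
        if self._away_since is None:
            self._away_since = t
        return t - self._away_since >= self.threshold_s


if __name__ == "__main__":
    monitor = EyesOffRoadMonitor()
    for t, on_road in [(0, False), (5, False), (10, False), (11, True), (12, False)]:
        print(t, monitor.update(t, on_road))
```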

Some driver-attention monitors count the micro-movements a driver continually makes as he or she drives.

I had two concerns with my test car: I was startled a couple of times by the gas-saving stop-start system. Occasionally as the engine came to a stop at a traffic light, the steering wheel on my test car abruptly turned a couple of inches and twice caught my thumb that was loosely holding the wheel next to the spoke. After the second time, I decided to keep my thumbs off the thumb grips once this car stopped at a light.

The infotainment system had trouble parsing some spoken commands, wanted to drive me to the intersecting street with the same name plus “Extension” at the end, and occasionally would not connect an iPhone using two different Apple cables or with Bluetooth. On sunny days, the LCD was sometimes hard to read and the brushed chrome-look trim strip around the center display reflected the sun’s glare.

The Subaru Legacy instrument panel. The center multi-information display looks busy. (It is.) But it also gives the driver lots of information at a glance. If this feels like TMI, you can switch to simpler views.

EyeSight Is Improved, Still Unique

Subaru says EyeSight has been improved and I sensed that both in the ability to pick up a car ahead from a greater distance and to be less affected in the rain. In some ways, EyeSight in snowy conditions may be better than radar in that windshield wipers clear the paths in front of the two cameras. If snow blocks the radar sensor, you have to get out and scrape it off with a brush or your gloved hand, assuming the driver knows where the sensor is located in the grille. Also, snow and rain reduce the effectiveness of radar to some degree.

Subaru Legacy Touring XT, the top trim line.

Safety Features Abound

Even if you are a statistically good driver, a car such as the Legacy improves your odds of staying safe. It also improves pedestrians’ odds: a 2019 Insurance Institute for Highway Safety (IIHS) study found that EyeSight-equipped Subarus reduce pedestrian-injury claims by 35 percent. IIHS also found that Subarus with second-generation EyeSight did better than those with first-generation systems dating to 2010. IIHS said it found no significant self-selection bias, that is, no evidence that safety-conscious good drivers were simply choosing safe-seeming Subarus and Volvos. Separately, IIHS found Subarus with EyeSight had fewer rear-end collisions and passenger injuries.

How solid is Subaru on driver assists and safety technology? Here’s a rundown:

2020 Subaru Legacy Key Safety Technology, Driver Assists

| Feature | Entry | Middle | Top |
|---|---|---|---|
| Lane departure warning | Std | Std | Std |
| Lane-keeping assist | Std | Std | Std |
| Lane centering assist | Std | Std | Std |
| Blind-spot warning | – | $ / Std | Std |
| Adaptive cruise control | Std | Std | Std |
| Forward collision warning | Std | Std | Std |
| Auto emergency braking | Std | Std | Std |
| Pedestrian detection/braking | Std | Std | Std |
| Safety telematics (Starlink) | – | Std | Std |
| Driver-assist package (EyeSight) | Std | Std | Std |
| Driver monitoring (DriverFocus) | – | – / $ / Std | Std |
| Active driving assistance | Std | Std | Std |

The table shows features as standard (Std), optional ($), or not available (–) on entry (Legacy base), middle (Premium, Sport, Limited, Limited XT), and top (Touring XT) trim lines.

Should You Buy?

The 2020 Subaru Legacy is a solid midsize car for people who don’t need a status symbol. The Legacy wins a lot of awards but not all of them. Consumer Reports has it as the best midsize sedan and one of only 10 CR Top Picks among 300 models for 2020. In contrast, Car and Driver put the Legacy eighth behind the Honda Accord, Hyundai Sonata, and the Mazda Mazda6, among others. Guess which publication favors safety features and comfortable ride versus spirited handling? The Legacy is also a 2020 IIHS Top Safety Pick+, which means good ratings in crash tests, advanced or superior ratings in available front crash prevention, and (the plus part) acceptable or good headlamps standard.

We like the Legacy a lot, even if within Subaru this is an outlier, a sedan in a company known for outdoorsy crossovers and SUVs: Crosstrek, Forester, Outback, Ascent. The Ascent had arguably been the best midsize SUV until the Kia Telluride / Hyundai Palisade came along last year. The Legacy had been unique in offering all-wheel-drive, but the Nissan Altima and Toyota Camry added it for 2020.

The steering wheel has big buttons and rockers, all legibly labeled. If only all cars were this clear with switchgear.

Subaru is a relatively reliable brand. The car is eminently practical. From the side, though, it’s hard to distinguish from a half-dozen other brands. Fuel economy is good, an EPA combined rating of 23 mpg for the turbo models, 29 mpg for the non-turbo. Real-world mileage should be several mpg higher, and with judicious driving, the non-turbo could approach 40 on the highway.

If you’re shopping Subaru for max safety, we’d suggest: Move past the Legacy base ($23,645 with freight) because you can’t get blind-spot warning / rear-cross-traffic alert or safety telematics, and past the Legacy Premium ($25,895) because you can get BSW / RCTA, but not reverse automatic braking (RAB). Blind-spot warning matters: Not all young drivers know to check side mirrors and look over their shoulders; older drivers may know, but may not have the dexterity to turn their heads sideways.

Every Legacy has dual front USB jacks (above) and, except for the base model (below), two more jacks in the back. Note how every jack and switch is nicely and legibly lettered.

The Legacy Sport ($27,845) lets you get BSW-RCTA-RAB in a $2,245 options package, along with a power moonroof and onboard navigation, for $30,090 total. Or for $30,645, you can get the Legacy Limited that includes BSW-RCTA-RAB, and the one options package, $2,045, gives you the moonroof again, a heated steering wheel, and DriverFocus. The top two trim lines, the Limited XT ($35,095) and Touring XT ($36,795), give you nice and nicer leather, DriverFocus, and the moonroof. So the sweet spot may be the Legacy Sport plus the options package, or the Legacy Limited, at about $30K each. Cross-brand shoppers comparing front-drive-only midsize competitors should attribute about $1,500 of Subaru’s price to AWD.
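The sweet-spot comparison above is simple arithmetic; this small sketch reproduces it from the quoted prices so the totals can be checked or re-run if prices change. The constant and function names are illustrative, and the $1,500 AWD figure is the review’s own rough estimate.

```python
# Prices as quoted in the review, in US dollars.
SPORT_BASE = 27_845
SPORT_PACKAGE = 2_245     # BSW / RCTA / RAB, power moonroof, onboard navigation
LIMITED_BASE = 30_645
LIMITED_PACKAGE = 2_045   # moonroof, heated steering wheel, DriverFocus
AWD_PREMIUM = 1_500       # the review's estimate of what AWD adds to the price


def configured_price(base: int, package: int = 0) -> int:
    """Price of a trim plus an optional options package."""
    return base + package


def awd_adjusted(price: int) -> int:
    """Price to compare against a front-drive-only rival, per the review's estimate."""
    return price - AWD_PREMIUM


if __name__ == "__main__":
    for label, price in [
        ("Legacy Sport + package", configured_price(SPORT_BASE, SPORT_PACKAGE)),
        ("Legacy Limited", configured_price(LIMITED_BASE)),
        ("Legacy Limited + package", configured_price(LIMITED_BASE, LIMITED_PACKAGE)),
    ]:
        print(f"{label}: ${price:,} (about ${awd_adjusted(price):,} vs. a FWD rival)")
```

The Sport plus package comes to $30,090, matching the text; the Limited with its package comes to $32,690.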

The Subaru Legacy should be at the top of your consideration set along with the Hyundai Sonata, the ExtremeTech 2020 Car of the Year. If you want a sporty car, look to the Mazda6, the Honda Accord, or – this is not a joke – the segment best-seller Toyota Camry with the TRD Sport, as in Toyota Racing Division.

from ExtremeTech https://www.extremetech.com/extreme/309080-2020-subaru-legacy-review from Blogger http://componentplanet.blogspot.com/2020/04/2020-subaru-legacy-tech-dive-eyesight.html

Digital Mortar: Your In-Store Tracking Partner

Analyzing what happens in your retail environment is one of the best ways to optimize customer experience, staffing strategies, merchandising, and layout. With Digital Mortar hardware and software, an entire store, whether a supermarket, mall, big box, or flagship, can be tracked. The resulting data are individual, anonymized customer records that provide end-to-end paths, with positions updated at sub-second intervals and location accuracy better than 1 foot (0.3 meters). This information is placed on a flexible map of the store to analyze where customers go first, next, and last, which areas engage them, how well displays convert, whether sections are understaffed or overstaffed, and when cashwrap queues form.
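To show how a tracked path turns into “first/next/last” and engagement numbers, here is a minimal sketch. Digital Mortar’s actual record format is not described in this post, so it assumes each shopper’s path is a list of timestamped (t, x, y) positions in meters and that store zones are axis-aligned rectangles; all names are hypothetical. Each interval between consecutive samples is credited to the zone of the earlier sample.

```python
from typing import Dict, List, Tuple

Sample = Tuple[float, float, float]            # (t_seconds, x_m, y_m)
Zone = Tuple[str, float, float, float, float]  # (name, x_min, y_min, x_max, y_max)


def zone_at(x: float, y: float, zones: List[Zone]) -> str:
    """Return the first zone whose rectangle contains (x, y), or 'other'."""
    for name, x0, y0, x1, y1 in zones:
        if x0 <= x <= x1 and y0 <= y <= y1:
            return name
    return "other"


def summarize_path(path: List[Sample], zones: List[Zone]) -> Tuple[List[str], Dict[str, float]]:
    """Turn one shopper's samples into a visit order and seconds spent per zone."""
    visits: List[str] = []
    dwell: Dict[str, float] = {}
    for i, (t, x, y) in enumerate(path):
        zone = zone_at(x, y, zones)
        if not visits or visits[-1] != zone:
            visits.append(zone)  # consecutive samples in one zone count as one visit
        if i + 1 < len(path):
            dwell[zone] = dwell.get(zone, 0.0) + (path[i + 1][0] - t)
    return visits, dwell


if __name__ == "__main__":
    store = [("entrance", 0, 0, 2, 2), ("produce", 2, 0, 6, 4)]
    shopper = [(0.0, 1, 1), (1.0, 1.5, 1), (2.0, 3, 1), (5.0, 4, 2), (6.0, 8, 8)]
    print(summarize_path(shopper, store))
```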

For retailers serious about optimizing their stores, in-store shopper tracking deserves strong consideration.

Deploying full-journey tracking well presents challenges, but Digital Mortar offers several advantages.

1. Metrics. Digital Mortar is the most advanced retail customer analytics tool on the market and consolidates a broad range of shopper behaviors into a set of metrics. These make it easy to compare performance across multiple locations, within a single store, across days and times of day, seasonally, and more. Available metrics include traffic, time spent, shop rate, conversion rate, shopper-to-associate ratio, and more. What’s more, metrics are available for any tracked area of the store, down to a single square foot.

2. Hardware. Digital Mortar uses the most advanced people tracking hardware available including 3D/stereoscopic cameras, LiDAR sensors and Bluetooth tracking. The software platform is hardware agnostic so clients can pick the type of tracking hardware that best meets their analytical needs, is most conducive for the store environment and fits within their budget.

3. Services. Analytical professional services are available for clients who want to use Digital Mortar’s expertise in maximizing the value of our in-store tracking data. Digital Mortar can support the rollout of in-store A/B testing programs or create and deliver analytic roadmaps that provide new insights on an ongoing basis. Digital Mortar can also train your own internal team.
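The ratio metrics listed under Metrics are straightforward once per-area counts exist. This post does not define them precisely, so the sketch below assumes simple definitions: shop rate = visitors who stopped to shop / visitors, conversion rate = purchasers / visitors, and shopper-to-associate ratio = average shoppers present / average associates present. The `AreaCounts` type and field names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class AreaCounts:
    """Hypothetical totals for one tracked store area over one time period."""
    visitors: int                  # shoppers who entered the area (traffic)
    engaged: int                   # visitors who stopped to shop (assumed definition)
    purchasers: int                # visitors who bought something (assumed definition)
    avg_shoppers_present: float    # average number of shoppers in the area at once
    avg_associates_present: float  # average number of staff in the area at once


def _ratio(num: float, den: float) -> Optional[float]:
    """Safe division: None when the denominator is zero."""
    return num / den if den else None


def area_metrics(c: AreaCounts) -> Dict[str, Optional[float]]:
    """Compute a few per-area metrics under the assumed definitions above."""
    return {
        "traffic": float(c.visitors),
        "shop_rate": _ratio(c.engaged, c.visitors),
        "conversion_rate": _ratio(c.purchasers, c.visitors),
        "shopper_to_associate": _ratio(c.avg_shoppers_present, c.avg_associates_present),
    }


if __name__ == "__main__":
    print(area_metrics(AreaCounts(200, 80, 30, 12.0, 3.0)))
```

Returning `None` for an empty denominator keeps an unvisited or unstaffed area from raising an error or showing a misleading zero.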

In the future, it will be essential to understand your physical stores with the same data and detail as your e-commerce presence. You cannot optimize what you do not measure, and smart retailers will increasingly rely on customer journey data to drive decisions.

With a product like Digital Mortar in place a retailer can expect to increase sales through better layout and merchandising decisions while reducing labor costs through more efficient staffing models.

For more information, visit: https://digitalmortar.com/

Original source: https://bityl.co/ATCF

Neuralink: Can We Control A Computer Through Our Thoughts?

Computers have become the new backbone of humanity. Our economies, businesses, infrastructure, and markets are dependent on the paradigm of global interconnectedness. This isn’t seen on the macro level alone; it is also true for individuals. How often do you find yourself mindlessly scrolling through your Instagram feed? How often do you negotiate extra time with yourself so you can read one final Reddit thread? Personally, I find myself using my phone on and off for the better part of the day; it has truly become a critical part of our lived experience. Computers of varying sizes have become the extension through which we offload much of our cognitive function and they have enabled us to extend far beyond our biological limitations. In a sense, we have already become cyborgs.

Our phones and computers act as a tertiary layer to our brain (the limbic system and neocortex being the primary and secondary layers, respectively). It is only a matter of time before these devices become invisible and are integrated into our biology. Elon Musk’s company Neuralink aims to bridge this gap, providing the ability to interface with computers without physically interacting with them. This has been tried before, but most of the devices don’t offer high bandwidth (i.e., they have a slow rate of data transfer). Before jumping into the details of this burgeoning technology, we first need to ask, is the ability to control computers with our brain actually required? Why do we need to control computers with our brain? At first glance, this may seem like an unnecessary venture, just another feature in our ever-upgrading technological dependence. However, this piece of tech could have a life-changing effect, as the potential benefits outlined below clearly show. Computer control for people with severe motor impairment Being a paraplegic and needing assistance to work with a computer leads to even more frustration and feelings of isolation. A direct cognitive interface with computers would be a godsend for patients suffering from partial or total paralysis, making them increasingly independent (almost every household chore/errand can be done online these days) and giving them an extended community through the internet. This is not just a boon for communication; these chips could eventually help patients with spine-related injuries by restoring their movement! It could even assist in the treatment of debilitating neurological diseases like Alzheimer’s and Parkinson’s disease. Understanding the Brain Our brain is probably the most complex machine we have encountered in this universe. It is a 3-pound piece of matter sitting inside our skull that is simultaneously managing thousands of biological functions. 
Given that complexity, several questions about the brain remain unanswered: How are memories stored and retrieved? What is consciousness? These interfaces could shed light on such age-old questions.

Combating the looming Artificial Intelligence (AI) threat to humanity

How Artificial Intelligence develops in the coming century will determine the ultimate state of humanity and our chances of survival. If the premise of the Singularity holds and computers reach the intelligence level of an average human (known as Artificial General Intelligence), then it is only a matter of time before they surpass us and become Artificial Super Intelligence. We could then be at the mercy of a super-intelligent overlord, to whom we would be what ants presently are to us. The future could be a grim dystopia or an era of unfathomable prosperity, depending on our ability to tame this tech. One way to do this would be to create a symbiosis with AI through brain-machine interfaces.

Neuralink’s leap

As you may know, the brain forms a large network of neurons connected through synapses. Neurons communicate at these junction points using chemical signals called neurotransmitters. A neuron releases neurotransmitters at its synapses when an electrical signal called an ‘action potential’ reaches them. When a receiving cell gets the right kind of neurotransmitter input, it can fire an action potential of its own, setting off a chain reaction that relays the message from neuron to neuron.

Neurons communicating through synapses (Photo Credit: VectorMine/Shutterstock)

Action potentials produce an electric field that spreads out from the neuron and can be detected by electrodes placed nearby, which allows the information represented by that neuron to be recorded. Neuralink’s first prototype, the N1, uses arrays of electrodes to communicate with neurons. A single array contains 96 threads, with 3,072 electrodes distributed across them. Each array is encased in a small implantable device that contains a custom wireless chip (4 mm × 4 mm). Attached to the case is a USB-C connector for power and data transfer.
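The post doesn’t say how the N1 turns raw electrode readings into detected action potentials. A common textbook approach is threshold crossing: estimate the noise level of the signal, then flag samples that dip well below it. The sketch below assumes a list of voltage samples from a single electrode; the function name `detect_spikes`, the 4.5-standard-deviation threshold and the 30-sample refractory gap are illustrative choices, not Neuralink’s parameters.

```python
import statistics


def detect_spikes(samples, threshold_sd=4.5, refractory=30):
    """Return indices where the signal first dips below a noise-scaled threshold.

    Illustrative sketch only. Extracellular spikes usually appear as
    negative-going deflections, so the threshold sits below the median.
    `refractory` is the minimum number of samples between two detections,
    so one spike spanning several samples isn't counted twice.
    """
    median = statistics.median(samples)
    # Median absolute deviation / 0.6745 approximates the noise standard
    # deviation while staying robust to the spikes themselves.
    mad = statistics.median(abs(s - median) for s in samples)
    noise_sd = mad / 0.6745
    threshold = median - threshold_sd * noise_sd

    spikes = []
    last = -refractory  # allows a detection at index 0
    for i, value in enumerate(samples):
        if value < threshold and i - last >= refractory:
            spikes.append(i)
            last = i
    return spikes
```

The median-based noise estimate is used instead of a plain standard deviation because large spikes would otherwise inflate the noise estimate and hide themselves.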

A packaged sensor device. A. Individual neural processing ASIC capable of processing 256 channels of data. This particular packaged device contains 12 of these chips for a total of 3,072 channels. B. Polymer threads. C. Titanium enclosure. D. Digital USB-C connector for power and data. (Photo Credit: biorxiv.org)

The threads are minuscule, about one-tenth the width of a human hair, and each carries 32 electrodes. They are far too fine to insert by hand, so the company designed a custom robot to perform the task. A tiny needle at the end of the robot inserts the threads into the brain one at a time with micron precision. The needle measures approximately 24 microns in diameter.

Needle pincher cartridge (NPC) compared with a penny for scale. A. Needle. B. Pincher. C. Cartridge. (Photo Credit: biorxiv.org)

The robot can insert six threads (192 electrodes) per minute. The company’s goal is to make the procedure as safe and painless as possible, and as hassle-free as LASIK.
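The figures in the packaged-device caption (12 chips of 256 channels each) can be cross-checked against the 96 threads per device and the robot’s six-threads-per-minute speed with a little arithmetic. This sketch assumes one electrode per recording channel and a robot inserting threads continuously at its quoted rate; the constant and function names are illustrative.

```python
CHANNELS_PER_ASIC = 256   # from the packaged-device caption
ASICS_PER_DEVICE = 12     # from the packaged-device caption
THREADS_PER_DEVICE = 96
THREADS_PER_MINUTE = 6    # quoted insertion speed of the robot


def n1_arithmetic():
    """Derive per-thread and per-minute figures, assuming one electrode per channel."""
    channels = CHANNELS_PER_ASIC * ASICS_PER_DEVICE
    electrodes_per_thread = channels // THREADS_PER_DEVICE
    electrodes_per_minute = electrodes_per_thread * THREADS_PER_MINUTE
    # Idealized: pure insertion time, ignoring targeting and any pauses.
    minutes_to_insert_all = THREADS_PER_DEVICE / THREADS_PER_MINUTE
    return {
        "channels": channels,
        "electrodes_per_thread": electrodes_per_thread,
        "electrodes_per_minute": electrodes_per_minute,
        "minutes_to_insert_all": minutes_to_insert_all,
    }
```

Running it gives 3,072 channels, 32 electrodes per thread, 192 electrodes per minute and 16 minutes of pure insertion time for one 96-thread device. The last figure is arithmetic only, not a claimed surgery duration.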

A. Loaded needle pincher cartridge. B. Low-force contact brain position sensor. C. Light modules with multiple independent wavelengths. D. Needle motor. E. One of four cameras focused on the needle during insertion. F. Camera with a wide-angle view of the surgical field. G. Stereoscopic cameras. (Photo Credit: biorxiv.org)

As many as 10 implants can be inserted into a brain hemisphere. These implants connect through very small wires under the scalp to a conductive coil behind the ear. This coil connects wirelessly through the skin to a wearable device that the company calls the Link, which contains a Bluetooth radio and battery. A single USB-C cable provides full-bandwidth data streaming from the device to a phone or computer, simultaneously recording all chip channels. The Link is controlled by a smartphone app and can receive software updates and bug fixes over Bluetooth, avoiding the need to tamper with the chip. When the Link is offline, the device shuts down.

The chip’s encased electrodes connect to the Link, which can be used to control devices. (Photo Credit: skvalval/Shutterstock)

The device can read action potentials from at least 10,000 neurons in the brain. The closest FDA-approved device on the market today, a deep brain stimulator for Parkinson’s disease, has just 10 electrodes, so the N1 outpaces this older technology by a factor of about 1,000!

Key takeaways

This new technology could prove a massive boon for patients diagnosed with neurological diseases and could even help restore movement in people with spinal cord injuries. It could further enhance our relationship with computers and usher in completely new ways of interacting, not just with computers, but also with other people! The question is: will Neuralink be able to achieve this? Only time will tell.

https://ift.tt/2pV7WsQ . Foreign Articles October 25, 2019 at 02:08PM


Photo

Nintendo managed to make 3D gaming remotely mainstream with its 3DS line of handheld consoles, and now a similar feature may be coming to Nintendo’s home consoles. The United States Patent and Trademark Office (USPTO) recently published a Nintendo patent detailing eye-tracking 3D visuals for home consoles that have been almost a decade in the making. The patent, which began in earnest back in 2010, was filed by Nintendo in September 2018 and published by the USPTO a few days before the time of writing. It describes and illustrates how a game console can provide glasses-free 3D gaming through a TV. The invention involves a sensor array placed atop the television, which is used for head and eye tracking. The sensor array superimposes two images to create a 2D stereoscopic picture, rendered in such a way as to generate a 3D visual effect for the player. While the system is designed to be used without a headset or accessory, players can use an additional marker attached to some..
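The post only says the sensor array tracks the player’s head and eyes to render a stereoscopic picture; it doesn’t describe the maths. A first step in any head-tracked stereo renderer is turning a tracked head position into two eye positions, one virtual camera per eye. The sketch below assumes a head position in metres relative to the screen, a head turn (yaw) about the vertical axis, and a 63 mm interpupillary distance as a typical adult value; `Vec3` and `eye_positions` are hypothetical names, not anything taken from Nintendo’s patent.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Vec3:
    x: float  # metres, to the viewer's right
    y: float  # metres, up
    z: float  # metres, from the screen toward the viewer


def eye_positions(head: Vec3, yaw_rad: float, ipd_m: float = 0.063) -> Tuple[Vec3, Vec3]:
    """Estimate left and right eye positions from a tracked head centre.

    Hypothetical sketch: the eyes are assumed to sit at head height,
    half the interpupillary distance either side of the head centre.
    Positive yaw follows the right-hand rule about the +y (up) axis.
    """
    half = ipd_m / 2.0
    # Unit vector from the left eye to the right eye after rotating by yaw.
    rx, rz = math.cos(yaw_rad), -math.sin(yaw_rad)
    left = Vec3(head.x - half * rx, head.y, head.z - half * rz)
    right = Vec3(head.x + half * rx, head.y, head.z + half * rz)
    return left, right
```

Each eye position would then drive its own virtual camera, giving the two images that a glasses-free display steers to the matching eye.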

#Games - #video -


Text

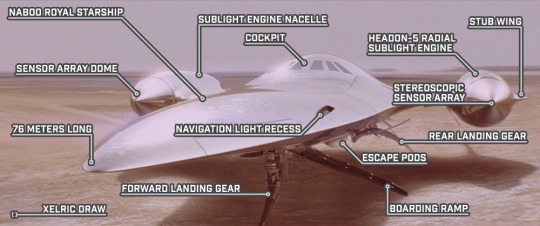

The Royal Starship Lifts Off

STAR WARS EPISODE I: The Phantom Menace 01:17:16

#Star Wars#Episode I#The Phantom Menace#Tatooine#Xelric Draw#Naboo Royal Starship#sensor array dome#landing gear#navigation light recess#sublight engine nacelle#cockpit#Headon-5 radial sublight engine#stub wing#stereoscopic sensor array#escape pod#boarding ramp


Text

Jaunt ONE Camera Now Available to Consumers

Virtual reality (VR) production company Jaunt has become one of the biggest names in immersive content and distribution, with its app available on most platforms and regular experiences making their way to the service. Jaunt has also been busy developing its own 360-degree camera, the Jaunt ONE, previously codenamed NEO. The Jaunt ONE has been available to the company’s partners since 2015, and from today it will also be available to consumers.

Jaunt ONE is a professional-grade camera, so it isn’t aimed at the entry-level end of the market like, for example, the Samsung Gear 360.

The device features a 24-camera array with synchronized global shutter sensors, enabling it to provide full 360-degree stereoscopic coverage at up to 8K output resolution. Other specs include 18-stop exposure control, onboard media storage for up to 20 hours of continuous recording, and a rugged design to withstand harsh weather conditions.

For the 2017 National Association of Broadcasters Show (NAB), Jaunt has also unveiled several new features coming to the camera, including support for 120 frames-per-second capture, a real-time video feed, and updates to white balance correction in the Jaunt ONE’s companion software suite, Jaunt Cloud Services (JCS).

“Demand for the Jaunt ONE has been unwavering since we introduced it to rental houses last summer,” said Koji Gardiner, VP of Hardware Engineering at Jaunt. “The natural next step was to provide filmmakers the ability to own the camera. These filmmakers are pushing the creative boundaries as they eagerly explore the medium and we could not be more proud to provide them with the only professional-grade VR camera designed specifically for their craft.”

Jaunt hasn’t yet stated how much the camera will retail for, but it will be available through B&H as well as the previous rental outlets AbelCine and Radiant Images.

For further coverage of Jaunt and the Jaunt ONE, keep reading VRFocus.

from VRFocus http://ift.tt/2pxVpXL


Video


A collaboration between Mark-David Hosale, Jim Madsen, and Robert Allison.

ICECUBE LED Display [ILDm^3] is a cubic-meter model of the IceCube Neutrino Observatory, a cubic-kilometer telescope made of ice deep below the surface at the South Pole. ILDm^3 sits low to the ground on a wooden base that supports 86 acrylic rods, each with 60 full-colour LEDs (5,160 in total), with each LED representing a sensor within the IceCube array. A small interactive interface is used to control the work. The IceCube Neutrino Display represents an epistemological nexus between art and science. While scientifically precise, the display uses art methodologies as an optimal means for expressing imperceptible astrophysical events as sound, light and colour in the domain of the human sensorium. The result is an experience that is as aesthetically critical as it is facilitatory to an intuitive understanding of sub-atomic astrophysical data, leading to new ways of knowing about our Universe and its processes.
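The write-up doesn’t describe the installation’s software. As a hypothetical sketch, assume each of the 86 rods stands for one IceCube string and each of its 60 LEDs for one sensor on that string (the real detector likewise has 86 strings of 60 sensors, 5,160 in all). A flat LED-buffer index and a simple time-to-colour ramp might then look like this. The function names and the red-to-blue colour choice are assumptions, not the artists’ implementation.

```python
NUM_RODS = 86        # one rod per IceCube string (assumed mapping)
LEDS_PER_ROD = 60    # one LED per sensor on that string (assumed mapping)


def led_index(rod, led):
    """Flat position in an LED buffer for 1-based rod and LED numbers."""
    if not (1 <= rod <= NUM_RODS and 1 <= led <= LEDS_PER_ROD):
        raise ValueError("rod or LED number out of range")
    return (rod - 1) * LEDS_PER_ROD + (led - 1)


def time_to_rgb(t, t_start, t_end):
    """Colour a sensor hit by arrival time: earliest hits red, latest blue."""
    if t_end == t_start:
        frac = 0.0
    else:
        frac = (t - t_start) / (t_end - t_start)
    frac = min(max(frac, 0.0), 1.0)  # clamp hits outside the event window
    return (round(255 * (1 - frac)), 0, round(255 * frac))
```

A frame could then be a list of `NUM_RODS * LEDS_PER_ROD` colour tuples, with `frame[led_index(rod, led)] = time_to_rgb(hit_time, t_start, t_end)` for each recorded hit.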

Mark-David Hosale is a computational artist and composer whose work has been exhibited internationally at venues such as SIGGRAPH Art Gallery (2005), International Symposium on Electronic Art (ISEA2006), BlikOpener Festival, Delft, The Netherlands (2010), the Dutch Electronic Art Festival (DEAF2012), Toronto’s Nuit Blanche (2012), Art Souterrain, Montréal (2013), and a Collateral event at the Venice Biennale (2015). His interdisciplinary practice is often built on collaborations with architects, scientists, and other artists in the field of computational arts, resulting in interactive and immersive installation artworks and performances that explore the boundaries between the virtual and the physical world.

Jim Madsen is the chair of the physics department at the University of Wisconsin–River Falls and an associate director of the IceCube Neutrino Observatory, where he leads the education and outreach team. He has deployed three times to Antarctica and presented science talks on five continents. He enjoys providing opportunities to participate in astrophysics research that range from one-time talks for general audiences to extended research experiences for teachers and students, including field deployments at the South Pole.

Robert Allison. Associate Professor, Centre for Vision Research, Electrical Engineering and Computer Science at York University. My main work involves basic and applied research on stereoscopic depth perception and virtual reality. I study how the brain, or a machine, can reconstruct a three-dimensional percept of the world around us from the two-dimensional images on the retinas and how we use this information to move about and interact with our environment. I am also interested in the measurement and analysis of eye movements and the applications of this technology.
Artist Mark-David Hosale (York University, Toronto, Canada) and physicist James Madsen (University of Wisconsin–River Falls, USA) have been working regularly with each other since 2012 and have realized several projects that explore the visualization and sonification of data sets collected at the IceCube Neutrino Observatory. Rob Allison (York University, Toronto, Canada) joined the collaboration in 2015.


Text

Photography History 3 via /#bestofcanvas


Techniques

A large variety of photographic techniques and media are used in the process of capturing images for photography. These include the camera; stereoscopy; dual photography; full-spectrum, ultraviolet and infrared media; light field photography; and other imaging techniques.

Cameras

The camera is the image-forming device, and a photographic plate, photographic film or a silicon electronic image sensor is the capture medium. The respective recording medium can be the plate or film itself, or a digital magnetic or electronic memory.

Photographers control the camera and lens to “expose” the light recording material to the required amount of light to form a “latent image” (on plate or film) or RAW file (in digital cameras) which, after appropriate processing, is converted to a usable image. Digital cameras use an electronic image sensor based on light-sensitive electronics such as charge-coupled device (CCD) or complementary metal-oxide-semiconductor (CMOS) technology. The resulting digital image is stored electronically but can be reproduced on paper.

The camera (or ‘camera obscura’) is a dark room or chamber from which, as far as possible, all light is excluded except the light that forms the image. Painters were already using it by the 16th century. The subject being photographed, however, must be illuminated. Cameras range from small to very large: a camera can even be a whole room kept dark while the object to be photographed sits, properly illuminated, in another room. This was common for reproduction photography of flat copy when large film negatives were used (see Process camera).
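The image a camera obscura forms can be described with the idealized pinhole model: a point at horizontal and vertical offsets X and Y, a distance Z in front of the hole, lands on a screen a distance f behind it at (-fX/Z, -fY/Z). That is why the projected image is upside down and reversed left to right, and why farther objects appear smaller. A minimal sketch that ignores diffraction and lens effects; `project_pinhole` is a hypothetical helper name.

```python
def project_pinhole(point, focal_length):
    """Project a 3-D point through an ideal pinhole onto the image plane.

    `point` is (X, Y, Z) with Z the distance in front of the hole; the image
    plane sits `focal_length` behind it. The minus signs produce the
    inverted image seen in a camera obscura.
    """
    X, Y, Z = point
    if Z <= 0:
        raise ValueError("the point must be in front of the pinhole (Z > 0)")
    return (-focal_length * X / Z, -focal_length * Y / Z)
```

Doubling the distance Z halves the size of the projected image, which matches the everyday observation that distant objects look smaller.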

As soon as photographic materials became “fast” (sensitive) enough for taking candid or surreptitious pictures, small “detective” cameras were made, some actually disguised as a book or handbag or pocket watch (the Ticka camera) or even worn hidden behind an Ascot necktie with a tie pin that was really the lens.

The movie camera is a type of photographic camera which takes a rapid sequence of photographs on a recording medium. In contrast to a still camera, which captures a single snapshot at a time, the movie camera takes a series of images, each called a “frame”. This is accomplished through an intermittent mechanism. The frames are later played back in a movie projector at a specific speed, called the “frame rate” (number of frames per second). While viewing, a person’s eyes and brain merge the separate pictures to create the illusion of motion.
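The relationship between frames, frame rate and playback time is simple arithmetic. A minimal sketch, assuming a constant frame rate (24 frames per second is used only as an example); the helper names are hypothetical.

```python
import math


def frame_interval_ms(fps):
    """Time each frame stays on screen, in milliseconds."""
    return 1000.0 / fps


def frame_count(duration_s, fps):
    """Number of frames needed to fill `duration_s` seconds of footage."""
    return round(duration_s * fps)


def frame_on_screen(time_s, fps):
    """Zero-based index of the frame shown `time_s` seconds into playback."""
    return math.floor(time_s * fps)
```

At 24 frames per second, ten seconds of footage needs 240 frames and each frame is on screen for roughly 41.7 ms, which is short enough for the eyes and brain to merge the frames into apparent motion.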

Stereoscopic

Photographs, both monochrome and color, can be captured and displayed through two side-by-side images that emulate human stereoscopic vision. Stereoscopic photography was the first to capture figures in motion. While known colloquially as “3-D” photography, the more accurate term is stereoscopy. Such cameras have long been realized using film and, more recently, digital electronic methods (including cell phone cameras).

Dual Photography

Dualphotography consists of photographing a scene from both sides of a photographic device at once (e.g. camera for back-to-back dual photography, or two networked cameras for portal-plane dual photography). The dualphoto apparatus can be used to simultaneously capture both the subject and the photographer, or both sides of a geographical place at once, thus adding a supplementary narrative layer to that of a single image.

Full-Spectrum, Ultraviolet And Infrared

Ultraviolet and infrared films have been available for many decades and employed in a variety of photographic avenues since the 1960s. New technological trends in digital photography have opened a new direction in full spectrum photography, where careful filtering choices across the ultraviolet, visible and infrared lead to new artistic visions.

Modified digital cameras can detect some ultraviolet, all of the visible and much of the near infrared spectrum, as most digital imaging sensors are sensitive from about 350 nm to 1000 nm. An off-the-shelf digital camera contains an infrared hot mirror filter that blocks most of the infrared and a bit of the ultraviolet that would otherwise be detected by the sensor, narrowing the accepted range from about 400 nm to 700 nm.[48]

Replacing a hot mirror or infrared blocking filter with an infrared pass or a wide spectrally transmitting filter allows the camera to detect the wider spectrum of light at greater sensitivity. Without the hot mirror, the red, green and blue (or cyan, yellow and magenta) colored micro-filters placed over the sensor elements pass varying amounts of ultraviolet (through the blue window) and infrared (primarily through the red micro-filters and, to a lesser extent, the green and blue ones).

Full-spectrum photography is used in fine art photography, geology, forensics and law enforcement.

Light Field

See also: Light-field camera

Digital methods of image capture and display processing have enabled the new technology of “light field photography” (also known as synthetic aperture photography). This process allows the plane of focus to be chosen after the photograph has been captured. Michael Faraday first proposed, in 1846, that light be understood as a field; the “light field” is now described as 5-dimensional, with each point in 3-D space having two additional angle attributes that define the direction of each ray passing through that point.

These additional vector attributes can be captured optically through the use of microlenses at each pixel point within the 2-dimensional image sensor. Every pixel of the final image is actually a selection from each sub-array located under each microlens, as identified by a post-image capture focus algorithm.
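One widely used way to refocus after capture is “shift-and-add”. Collecting the same pixel position from under every microlens gives a sub-aperture image, a view of the scene from a slightly different spot in the lens aperture. Shifting those views in proportion to their aperture offset and averaging them brings one depth plane into alignment (sharp) and leaves the others misaligned (blurred). The toy sketch below assumes the sub-aperture images have already been extracted and uses whole-pixel shifts; the names `refocus`, `subapertures` and `alpha` are hypothetical, and this is not the post-capture algorithm of any particular camera.

```python
def refocus(subapertures, alpha):
    """Shift-and-add refocusing over sub-aperture images.

    subapertures: dict mapping integer aperture offsets (u, v) to 2-D lists
    of pixel values, all the same size.
    alpha: integer pixel shift per unit of (u, v); changing it moves the
    synthetic plane of focus.
    """
    first = next(iter(subapertures.values()))
    h, w = len(first), len(first[0])
    out = [[0.0] * w for _ in range(h)]
    for (u, v), img in subapertures.items():
        du, dv = alpha * u, alpha * v
        for y in range(h):
            for x in range(w):
                sy, sx = y + dv, x + du
                # Samples shifted in from outside the frame contribute zero,
                # so borders come out darker in this simple version.
                if 0 <= sy < h and 0 <= sx < w:
                    out[y][x] += img[sy][sx]
    n = len(subapertures)
    return [[value / n for value in row] for row in out]
```

For example, a point that appears at x = 1 in the left-hand view and x = 3 in the right-hand view is blurred into two half-bright dots with `alpha=0`, but lands as one full-brightness dot at x = 2 with `alpha=1`.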

Other

Besides the camera, other methods of forming images with light are available. For instance, a photocopy or xerography machine forms permanent images but uses the transfer of static electrical charges rather than a photographic medium, hence the term electrophotography. Photograms are images produced by the shadows of objects cast on the photographic paper, without the use of a camera. Objects can also be placed directly on the glass of an image scanner to produce digital pictures.

Source

Submitted December 16, 2019 at 04:13PM via #bestofcanvas https://www.reddit.com/r/u_HoustonCanvas/comments/ebm42b/photography_history_3/?utm_source=ifttt


Link

New Nintendo Switch: everything we want to see from the next Switch console

New Nintendo Switch, Nintendo Switch 2, Switch Pro, Switch Mini, or a pair of new Switch consoles: whatever the new handheld from Nintendo is called, there's going to be at least one other Switch model coming down the line at some point soon.

With the Nintendo Switch coming up to the two-year mark, it's safe to say the hybrid home/portable console has taken the world by storm. It's the fastest-selling console ever in the US and is making Nintendo an absolute shedload of money. But hardware ages quickly nowadays, and Nintendo will be looking to a hardware upgrade to ensure its console stays relevant and attractive to today's gamers. So how do you build on a success like the Switch?

Hardware upgrades are nothing new for consoles, let alone those from Nintendo. The Japanese gaming giant has a fruitful record of iterating on its handheld consoles: there have been multiple models of the 3DS family in different sizes and shapes, with varying 3D capability, all able to play the same game cartridges. Even Sony and Microsoft have come round to iterative releases, offering mid-cycle upgrades such as the Xbox One S, Xbox One X, PS4 Slim and PS4 Pro.

So when will we see a new Nintendo Switch, and what new features will it offer? While we wait for more definitive answers, we've run through the likely (and unlikely) possibilities below...

[Update: Sony official confirms PlayStation 5 details.]

New Nintendo Switch release date

With the average console lifecycle floating around the five or six year mark, an announcement this summer followed by a winter release would put Nintendo on track for a mid-generation console refresh. It's far from unusual for Nintendo: everything from the Game Boy to the Wii to the 3DS received multiple updates across its lifespan.
But given that Nintendo hasn't officially confirmed there's a Nintendo Switch 2 in the works (the latest rumors seem solid but are based on a Wall Street Journal report), it's hard to guess what kind of release date we’re looking at. We know that the company is hoping the Switch will go further than the standard five-to-six-year lifecycle of a console, after Shigeru Miyamoto told investors as much in a Q&A. Prior to this, Nintendo's consoles have usually topped out at the five-to-six-year mark before moving on to the next generation.

Clearly Nintendo has something up its sleeve for the Switch 2, and we wonder if it's going to follow in the footsteps of Sony and Microsoft, both of which launch hardware upgrades at strategic intervals to prolong the current generation. Based on these industry trends, it would make sense for Nintendo to release an upgraded version of the Switch hardware between two and three years into its lifetime, which would mean we could see Nintendo Switch 2.0 in 2019 or 2020. With an iterative approach that sees a power upgrade and some design refinements, the Switch line could easily run into 2022.

We'd expect Nintendo to release a new model before the Xbox Two or PS5 hits shelves (likely in late 2019), chiming with a report from the Wall Street Journal that predicted a new Nintendo Switch model in mid-to-late 2019. Nintendo president Shuntaro Furukawa has since shut those rumors down, however, saying no successor was currently in the works. But if the new Nintendo Switch ends up with a 2020 release, it's likely to be in direct competition with the next Xbox and PS5, as Sony has already confirmed we won't see the PS5 in 2019 and we haven't got high hopes of seeing Xbox Scarlett by the end of the year, even if we do expect the next Xbox to be announced at E3 2019...
New Nintendo Switch price

Obviously redesigns cost money, and redesigns with improved hardware doubly so. Rather than jack up the price, though, we imagine the new model will match the current £279 / $299 pricing, alongside a price drop for what will then become the outdated model. If, however, the new Nintendo Switch model is actually a premium or 'Pro' version that sits alongside the standard console, or even a cheaper handheld-only 'Switch Mini', then we could see that figure move considerably in either direction. (Given the naming conventions of the 3DS, we think 'New Nintendo Switch' is a likely moniker.)

New Nintendo Switch news and leaks

Now, despite the fact that Nintendo hasn’t confirmed it’s planning to release a Nintendo Switch 2, there have been reports and leaks which suggest plans are, at the very least, being made.

Two new Nintendo Switch devices

Rather than a straight-up Nintendo Switch 2, a Wall Street Journal report suggests that Nintendo is working on two individual variants that, rather than making the current Nintendo Switch obsolete, would put it in the middle of a range growing to suit all budgets.

According to the publication's sources, the first of these new devices will be aimed at budget gamers and will present the Switch in a more traditional handheld-first format. It'll replace removable Joy-Cons with fixed ones and ditch their HD Rumble feature in order to bring costs down, presumably making it more difficult to play in docked mode on a TV without buying a separate controller.

The second new version of the Nintendo Switch, going by the rumor, is a little harder to pin down, but would be a premium version of the console with "enhanced features targeted at avid videogamers."
That's not to suggest it would aim for 4K or HDR visuals; it would more likely have features and services baked in to accommodate the modern obsession with streaming to platforms like Twitch.

Could a cheaper Switch model say goodbye to detachable controllers?

E3 rumors

A report by Bloomberg suggested we could see the budget model launch as early as June, with E3 2019 proving a natural time to showcase the product. Nintendo has since quashed rumors of an E3 reveal, though the general timeline might not be far off.

The 5.0 firmware dig

Though the Nintendo Switch’s 5.0 firmware update wasn’t up to much on the surface, hackers on Switchbrew dug into the upgrade and found evidence suggesting that a hardware refresh is in the works. Switchbrew discovered references to a new T214 chip (which would be a small improvement on the current T210) as well as an updated printed circuit board and 8GB of RAM instead of the current 4GB.

While any kind of chip upgrade could simply be Nintendo’s response to hardware security problems that have emerged with the current SoC (such as those used by the homebrew hackers who have started creating pirated Switch titles), the new PCB and increased RAM suggest something more than this: a more powerful device.

Labo's cardboard accessories show Nintendo's intense focus on peripherals

It's important to note that the files uncovered by these Switchbrew members contain neither a timescale nor a definitive statement of intent from Nintendo. The Switch is proof that state-of-the-art technology isn't the sole key to success, but it’s important to remember that, as far as handhelds are concerned, the Switch is at the cutting edge and Nintendo will need to keep it there. If these hardware upgrade rumors turn out to be true, Nintendo has plenty of time before it has to do any kind of refresh.
AR and VR support

VR and AR support for the Switch never looked likely, with the MD of Nintendo France citing a lack of mainstream appeal for the technology as recently as 2018, but the new Labo VR Kit shows Nintendo has changed its mind about the viability of VR. The Switch doesn't have the high resolution of most VR gaming rigs, so we could see an advanced model with 2K or 4K resolution to improve those close-up VR experiences. However, given the VR Kit is still focused mainly on children, we'd be surprised to see a visual overhaul simply for this one peripheral.

Don't expect to see this anytime soon

What about 3D?

Another Nintendo patent came to light in early 2019: this time for a 3D sensor array that sits above your television and creates a stereoscopic image in a similar way to the 3DS, meaning you wouldn't even need glasses. 3D visuals felt like a passing gimmick even on Nintendo's handheld consoles, and the troubled Microsoft Kinect camera will no doubt keep Nintendo wary of unnecessary TV peripherals. But getting convincing 3D imaging on standard 2D TVs may be the step needed to bring 3D gaming into the mainstream.

Could it support 4K?

While Sony and Microsoft push at the 4K market, there isn’t really any big reason for Nintendo, the company that staunchly sets itself apart from other hardware producers, to follow suit. In the same interview where he dismissed VR, Nintendo France General Manager Philippe Lavoué also brushed off 4K, saying that the technology has “not been adopted by the majority” and it would, therefore, be too early for Nintendo to jump in.

Nintendo didn’t enter the HD console market until 2012, when it released the Wii U. This was around four years after Sony and Microsoft, at the point when more than 75% of US households actually had HD displays in their homes. Miyamoto, however, has said he wished Nintendo had made the jump to HD sooner, saying that the display technology became popular around three years before Nintendo expected it to.
It's expected that by 2020, 50% of US households will have adopted 4K technology, and it might be at this point that Nintendo decides to join the 4K fray, rather than waiting for 75% market saturation as it did with HD.

New Nintendo Switch: what we want to see

More screen, less bezel

One way of iterating productively on the Nintendo Switch would be cutting back its rather sizeable bezel. An upgraded screen could cut into that dark space without changing the console's general dimensions, and could even pave the way for 1080p play on the handheld itself, instead of its current 720p resolution.

What will likely prevent Nintendo from messing with its formula too much are the Joy-Cons. If the body of the console changes considerably, the millions of Joy-Cons already on the market would suddenly become obsolete to upgrading players: maybe a good money-spinner for new peripherals, but something that would very easily irritate its player base.

Charge less for necessary accessories or sell better bundles

While in theory everything you need to start playing your Nintendo Switch is in the box, some sold-separately accessories still feel pretty essential, yet they’re very expensive. Things like Joy-Con-compatible steering wheels are fun, utterly unnecessary and relatively cheap. Additional Joy-Cons and charging grips, on the other hand, are surprisingly expensive.

For Nintendo Switch 2, we’d either like to see things like Joy-Con charging grips come as standard, or see a wider range of bundles which include accessories like these for a more reasonable price. At least with this console the charger came as standard, unlike the 3DS. Baby steps, we suppose.

Keep it iterative

The basic concept of the Nintendo Switch is great as it is, and other than some small hardware advancements we actually don't want Nintendo to change too much.
What we definitely don’t want is for the current Nintendo Switch library to be unusable. If Nintendo is going to bring out a second-generation Switch console, we want it to take Microsoft's backwards-compatibility approach with games. Switch games aren't cheap and we want them to last as long as possible. Given that the Nintendo 3DS can play all Nintendo DS games, we know this is something Nintendo isn't against.

A smaller, more portable dock

While we like the design of the Nintendo Switch at the moment, when it comes to the console’s docking station we’d like to see something smaller and more portable, something which third-party manufacturers are already delivering, even if Nintendo seems set on bricking consoles that use them.

Third parties are releasing smaller, more portable docks

More internal memory

We love the fact that the Nintendo Switch has expandable memory, with some great Nintendo Switch SD cards out there, but we’d like to not have to rely on it quite as quickly as we’ve had to. If a Nintendo Switch 2 is in the works, we’d like to have an option with more internal memory for those who rely largely on a digital library. Given that a large number of Switch games (particularly indie titles) are digital only, this seems especially important.

Battery life that lives on

We're hoping Nintendo will use a hardware upgrade as an opportunity to improve the console's rather short three-hour battery life in handheld mode. Complaints about the battery life have somewhat subsided as players realised they didn't need a battery that lasts as long as their day-long smartphones. There are also plenty of workarounds, like portable chargers and battery banks. But stretching the battery to a six-hour charge might be what cements the console as a truly practical handheld device.

(Image credits: Nintendo)

Looking forward to the next generation of gaming?
Read more about what we want to see from the Xbox Two and PS5 #Newsytechno.com #Latest_Technology_Trends #Cool_Gadgets


Text

How Microsoft is making its most sensitive HoloLens depth sensor yet

Sensors, including the depth camera, in the original HoloLens.

Image: Microsoft

The next-generation HoloLens sensor is a feat of research and design that crams advanced signal processing and silicon engineering into a tiny, reliable module that Microsoft intends to sell to customers and other manufacturers as Project Kinect for Azure. The company will also use the new sensor in its own products, including the next HoloLens.

At Build this year, Satya Nadella promised it would be “the most powerful sensor with the least amount of noise”, with an ultra-wide field of view. It’s small enough and low-power enough to use in mobile devices. But what does the sensor do, and how will a HoloLens (or anything else using it) ‘see’ the world into which it mixes 3D holograms?

There are different ways of measuring depth. Some researchers have tried ultrasound, while traditional geometric methods use precisely structured patterns of light in stereo and rely on triangulation: with two beams of light hitting the same object, you can calculate the distance from the angle between the beams. Microsoft has used stereoscopic sensors in the past, but this time took a different approach called ‘phased time-of-flight’.
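The following sketch makes the geometric idea concrete with basic triangulation. It assumes an idealised setup that the article doesn't describe. Two observation points sit a known baseline apart, and each measures the angle to the same spot on the object relative to that baseline. The function name `triangulate_distance` is hypothetical.

```python
import math


def triangulate_distance(baseline_m: float, angle_a_deg: float, angle_b_deg: float) -> float:
    """Perpendicular distance from the baseline to a target seen from both ends.

    angle_a_deg and angle_b_deg are the interior angles (degrees) between the
    baseline and each line of sight. From the law of sines:
        h = b * sin(a) * sin(b) / sin(a + b)
    """
    a = math.radians(angle_a_deg)
    b = math.radians(angle_b_deg)
    if not (0 < a < math.pi and 0 < b < math.pi and a + b < math.pi):
        raise ValueError("angles must form a valid triangle")
    return baseline_m * math.sin(a) * math.sin(b) / math.sin(a + b)


# Two viewpoints 10 cm apart, each seeing the target at 80 degrees from the baseline.
print(round(triangulate_distance(0.10, 80.0, 80.0), 3))  # 0.284
```

As both angles approach 90 degrees, a tiny angular error moves the computed distance a long way. This illustrates why stereo systems depend on optics held in precise alignment.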

Cyrus Bamji, the hardware architect who led the team to build Microsoft’s first time-of-flight (ToF) camera into the Kinect sensor that came with Xbox One, explained to ZDNet how the new sensor works.

It starts by illuminating the scene with a number of lasers, each with about 100 milliwatts of power, that act like a floodlight. The light is uniform everywhere, covers the whole scene at once and turns on and off very quickly.

As the light from the lasers bounces off 3D objects like walls and people, it reflects back at the sensor. The distance isn’t measured directly by how quickly the light is reflected back (like a radar gun), but by how much the phase of the returning light envelope has shifted from the original signal the lasers emit. Compare the difference in phase (removing any harmonics introduced by minor changes in voltage or temperature to clean up the signal along the way), and you get a very precise measurement of how far away the point that reflected the light is.
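The sketch below shows the basic arithmetic behind phase-based ranging. It rests on assumptions the article doesn't spell out. The illumination is taken to be sinusoidally modulated. The demodulation is the common textbook "four-bucket" scheme, which samples the correlation at 0, 90, 180 and 270 degrees. Microsoft's actual pipeline, including its harmonic cleanup, is not modelled here, and all function names are hypothetical. The light travels to the object and back, so a phase shift φ at modulation frequency f corresponds to a distance d = cφ / (4πf).

```python
import math

C = 299_792_458.0  # speed of light in m/s


def simulate_buckets(distance_m, freq_hz, amplitude=1.0, ambient=0.5):
    """Hypothetical correlation samples s_k = B + A*cos(phi - k*pi/2), k = 0..3."""
    phi = (4 * math.pi * freq_hz * distance_m / C) % (2 * math.pi)
    return [ambient + amplitude * math.cos(phi - k * math.pi / 2) for k in range(4)]


def estimate_phase(buckets):
    """Four-bucket phase estimate; the constant ambient term B cancels in each difference."""
    s0, s1, s2, s3 = buckets
    return math.atan2(s1 - s3, s0 - s2) % (2 * math.pi)


def phase_to_distance(phase_rad, freq_hz):
    """Round trip: phase = 2*pi*f*(2d/c), so d = c*phase / (4*pi*f)."""
    return C * phase_rad / (4 * math.pi * freq_hz)


freq = 50e6  # 50 MHz, the example frequency the article uses later
buckets = simulate_buckets(1.25, freq)
print(round(phase_to_distance(estimate_phase(buckets), freq), 6))  # 1.25
```

Taking differences between opposite buckets removes any constant background. This is one reason the scheme tolerates steady ambient light in this idealised model.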

That depth information isn’t just useful for scaling holograms to the right size as you look around in mixed reality; it’s also critical for making computer vision more accurate. Think of trick photographs of people pushing over the Leaning Tower of Pisa: with a depth camera you can immediately see that it’s a trick shot.

Microsoft’s phased time-of-flight sensors are small and thin enough to fit into small mobile devices, and robust enough to go into consumer gadgets that might get knocked around. That’s because, unlike stereo vision systems, they don’t depend on pricey precision optics that have to be kept in precise alignment. They can cope with a signal made noisier than usual by a slight dip in supply voltage or the equipment warming up, and they use simple calculations that need less processing power than the complex algorithms stereo vision requires.

Depending on how you want to use it, the sensor lets you pick different depth ranges, frame rates and image resolutions, as well as a medium or large field of view.


The next-generation HoloLens sensor in action at the Microsoft 2018 Faculty Research Summit.

And best of all, phased ToF sensors are silicon that can be mass-produced in high volume in a fab using standard CMOS processes at low cost. That’s just what you need for a consumer product, or for something cheap enough to go into large-scale industrial systems.

Small, cheap and fast

The new sensor makes a number of technical breakthroughs: lower power, higher frequency, higher resolution (1,024 by 1,024 pixels), and smaller pixels that determine depth more accurately from further away. Often, improvements in one area mean trade-offs in others — frequency and distance, for example. “It turns out that if you increase the frequency, you get better resolution; the higher the frequency the less the jitter, but you also get a smaller and smaller operating range,” Bamji explains.

A low frequency covers a longer distance from the sensor, but is less accurate. The problem is that while a higher frequency gives more precise results, the same reading can correspond to multiple distances, because the phase follows a curve that repeats over and over again. At a frequency of 50MHz, light bouncing off something 3 metres away has the same phase as light bouncing off an object 6 metres or 9 metres away.
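We can quickly check the 50MHz example. The round-trip phase wraps every c / (2f) ≈ 3.00 metres, which the article rounds to 3. As a result, targets that are one, two or three wrap intervals apart give identical single-frequency readings. The helper name and the 0.4 m starting distance are illustrative.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def wrapped_phase(distance_m, freq_hz):
    """Round-trip phase shift reduced to [0, 2*pi), which is all one reading reveals."""
    return (4 * math.pi * freq_hz * distance_m / C) % (2 * math.pi)


f = 50e6
wrap = C / (2 * f)  # about 2.998 m at 50 MHz
base = 0.4          # arbitrary target distance in metres
for k in range(4):
    d = base + k * wrap
    print(f"{d:.3f} m -> phase {wrapped_phase(d, f):.6f} rad")
```

All four lines print the same phase, so a single 50MHz measurement cannot tell these distances apart.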

The Microsoft team used a clever mathematical trick (called phase unwrapping) to avoid that confusion so they could increase the frequency and also operate at longer distances. The sensor uses multiple different frequencies at the same time and the firmware combines the results. That could be low frequencies that tell you approximately where something is and high frequencies to locate it precisely, or different high frequencies that have different phases so they only line up at one particular distance. That way, says Bamji, “we were able to keep increasing the frequency and get better and better accuracy and still maintain the operating range. We found a way to have our cake and eat it too!”
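The article doesn't describe the firmware's actual unwrapping algorithm. The sketch below uses a simple brute-force variant of the "different high frequencies that only line up at one particular distance" idea. It tries every wrap count for each of two frequencies and keeps the pair of candidate distances that agree. Two frequencies f1 and f2 jointly repeat only every c / (2·gcd(f1, f2)). The 80MHz and 100MHz pair is an arbitrary illustration, not Microsoft's choice, and gives a combined range of about 7.5 metres.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def wrapped_phase(distance_m, freq_hz):
    """Round-trip phase reduced to [0, 2*pi): what a single frequency measures."""
    return (4 * math.pi * freq_hz * distance_m / C) % (2 * math.pi)


def unwrap_two_freq(phi1, phi2, f1_hz, f2_hz):
    """Brute-force two-frequency phase unwrapping (illustrative, not Microsoft's firmware).

    Frequencies must be whole numbers of hertz. Within the combined unambiguous
    range c / (2 * g), where g = gcd(f1, f2), frequency f wraps exactly f / g times,
    so those are the only wrap counts worth trying.
    """
    g = math.gcd(f1_hz, f2_hz)
    r1 = C / (2 * f1_hz)  # single-frequency wrap distances
    r2 = C / (2 * f2_hz)
    best_err, best_d = math.inf, None
    for n1 in range(f1_hz // g):
        d1 = (phi1 / (2 * math.pi) + n1) * r1
        for n2 in range(f2_hz // g):
            d2 = (phi2 / (2 * math.pi) + n2) * r2
            if abs(d1 - d2) < best_err:
                best_err, best_d = abs(d1 - d2), (d1 + d2) / 2
    return best_d


F1, F2 = 80_000_000, 100_000_000  # arbitrary pair; combined range about 7.49 m
true_d = 5.3
d = unwrap_two_freq(wrapped_phase(true_d, F1), wrapped_phase(true_d, F2), F1, F2)
print(round(d, 6))  # 5.3
```

Each frequency on its own wraps within 2 metres. Together they pin the target down anywhere inside about 7.5 metres. With real, noisy phases the best pair will agree only approximately, so averaging the two candidates is a reasonable final estimate.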

Phase unwrapping allowed the team to take the frequency of the sensor up from just 20MHz in the earliest research to 320MHz. Higher frequencies allow the sensor to have smaller pixels, which deliver better depth resolution in the 3D image. The new sensor has high enough resolution to show the wrinkles in someone’s clothes as they walk past the camera, or the curve of a ping-pong ball in flight (just 2 centimetres from the centre to the edge) from a metre away. It does this without the loss of performance and accuracy that smaller pixels would cause at lower frequencies.

The fourth-generation phased time-of-flight sensor can pick up the curve of a ping-pong ball as it flies through the air.

Image: Microsoft / IEEE

Small pixels have another big advantage: the sensor itself can be smaller. “If you have small pixels, that brings down the optical stack height,” Bamji points out. “Our array is small, which means it can go into devices that are thin.”

The new sensor also manages to deliver small, accurate pixels using only one-eighth the power consumption of previous versions (the overall system power is between 225 and 950 milliwatts). It’s a trade-off that Bamji characterises as equivalent to Moore’s Law. “If you just put in enough power you can get good quality, but for a consumer product that’s not good enough. You increase the frequency and that burns more power, but it increases the accuracy and then you decrease the pixel size. As you go through the cycle, you end up where you were but with smaller pixels.”

The pixels in the imaging array started out at 50 by 50 microns. That went down to 15 and then 10 microns, and now the pixels are just 3.5 by 3.5 microns each. That’s larger than the pixels in a smartphone camera, which are usually 1 to 2 microns square, while the state of the art for RGB sensors is about 0.8 microns square.
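Some back-of-the-envelope arithmetic shows why pixel pitch matters for a thin device: the width of the active array is pixel count times pitch. For comparison only, the sketch assumes that every generation had the new sensor's 1,024-pixel width, which the article does not claim. The helper name is hypothetical.

```python
def array_width_mm(pixels, pitch_um):
    """Width of a row of square pixels: pixel count times pitch, microns -> millimetres."""
    return pixels * pitch_um / 1000


# Hypothetical comparison: a 1,024-pixel-wide array at each pitch the article mentions.
for pitch in (50, 15, 10, 3.5):
    print(f"{pitch:>4} um pitch -> {array_width_mm(1024, pitch):.1f} mm wide")
```

At 3.5 microns the array is roughly 3.6 mm across. At the original 50-micron pitch the same pixel count would span more than 5 cm.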

On the other hand, the pixels in the Microsoft sensor have a global shutter. Rather than a physical cover that blocks further light from interfering with the reflected light the sensor has already captured, a global shutter is built right into the silicon. It tells the sensor to switch off and stop being sensitive to light until it’s time to take the next measurement. At the end of 2017, the smallest pixels with global shutters were about 3 microns square, but they weren’t time-of-flight pixels like these.

A complex silicon dance

However, pixels this small can run into quantum mechanical problems with getting electrons to go where you want them to. The sensor detects light by collecting photo charges that it converts into voltages, and those need to be measured precisely.

Bamji compares the problem to inflating a tyre. When you remove the pump and put the cap back on, some air always comes back out. The same thing happens when you reset the part of the sensor where the photo charge is stored: some of the charge can get sucked back in as the reset happens, resulting in what’s called kTC noise (shorthand for the formula used to calculate how much noise can get added to the signal). The amount of charge that gets sucked back in varies from reset to reset, so you can’t automatically correct for it.
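The "kTC" name comes from the underlying physics. Resetting a capacitance C at absolute temperature T leaves a random charge with variance kTC, where k is Boltzmann's constant. That is equivalent to an RMS voltage of √(kT/C). The sketch evaluates the formula. The 1 femtofarad capacitance is an assumed, illustrative value, not a figure for Microsoft's pixel.

```python
import math

K_B = 1.380649e-23     # Boltzmann constant, J/K
Q_E = 1.602176634e-19  # elementary charge, C


def ktc_noise(capacitance_f, temperature_k=300.0):
    """Return (RMS voltage in volts, RMS charge in electrons) of reset (kTC) noise."""
    v_rms = math.sqrt(K_B * temperature_k / capacitance_f)
    electrons_rms = math.sqrt(K_B * temperature_k * capacitance_f) / Q_E
    return v_rms, electrons_rms


v, e = ktc_noise(1e-15)  # assumed 1 fF charge-storage node at room temperature
print(f"{v * 1000:.2f} mV, {e:.1f} electrons RMS")
```

The two quantities move in opposite directions as C shrinks: the voltage noise rises while the charge noise, counted in electrons, falls.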

kTC noise only happens where the charge can flow in both directions — air can come back out of a tyre, but sand can’t flow back up into the top half of an hourglass. If there’s a complete transfer of the charge, you don’t get the noise.

So the Microsoft team gets around the problem by storing the photo charges as what are called minority carriers (the less common charge-carrying particles in the semiconductor, which move more slowly). To convert these charges into a voltage that can be read, they’re transferred into what’s called a floating diffusion. The sensor resets the floating diffusion and measures its voltage immediately after the reset, then moves the photo charge into the floating diffusion and measures the new voltage. Subtracting the first value, taken right after the reset, circumvents the problem of kTC noise.
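The reset-then-subtract scheme is generally known as correlated double sampling. In the toy model below, each reset leaves a random offset on the floating diffusion, and transferring the photo charge adds a fixed signal on top. Sign conventions are simplified, since in real pixels the voltage typically falls as charge arrives, and read noise is ignored. Subtracting the post-reset sample removes the offset entirely. A naive read against a nominal reset level does not. Function names and numbers are illustrative.

```python
import random


def read_pixel(signal_v, reset_noise_v, rng):
    """Toy floating-diffusion readout; returns (naive_estimate, cds_estimate) in volts."""
    nominal_reset = 1.0
    # Each reset leaves a different random offset (the kTC noise).
    actual_reset = nominal_reset + rng.gauss(0.0, reset_noise_v)
    sample_after_reset = actual_reset                 # first sample, right after reset
    sample_after_transfer = actual_reset + signal_v   # photo charge moved in
    naive = sample_after_transfer - nominal_reset     # assumes a perfect reset
    cds = sample_after_transfer - sample_after_reset  # subtracts the measured reset level
    return naive, cds


rng = random.Random(42)
for _ in range(3):
    naive, cds = read_pixel(signal_v=0.25, reset_noise_v=0.002, rng=rng)
    print(f"naive {naive:.4f} V   cds {cds:.4f} V")
```

The naive estimates scatter around 0.25 V by a few millivolts. The correlated double sampling estimates come out at 0.25 V every time in this noise-free model.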

The new sensor is the first time-of-flight system to use this technique, which increases the complexity of the silicon engineering required. “But we had to do that because as pixels are becoming smaller and smaller, this problem becomes more and more acute,” Bamji explains.

When you get all the way down to each individual (tiny) pixel, it’s made up of two interlocking polysilicon ‘photofingers’. Earlier generations of the sensor had four or eight fingers, but there’s only room for two in the new, smaller pixel.

The fingers take turns being driven to a low or high (3.3V) voltage. This creates a ‘drift’ field that pushes the photo-generated charges towards the finger at the high voltage faster than they’d normally diffuse across the material. They need to move fast because at the full 320MHz the other finger takes over after less than 2 nanoseconds, and any charges that haven’t been captured by then aren’t useful any more. At 320MHz, 78 percent of charges make it all the way to the correct finger.
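The timing constraint follows directly from the modulation frequency. With two fingers alternating, each one is active for half of every modulation period. The quick calculation below assumes a 50 percent duty cycle, which the article doesn't state, and the helper name is hypothetical.

```python
def finger_window_ns(freq_hz):
    """Time each of the two fingers stays high, assuming a 50% duty cycle."""
    period_ns = 1e9 / freq_hz
    return period_ns / 2


for mhz in (20, 50, 320):
    print(f"{mhz:>3} MHz: {finger_window_ns(mhz * 1e6):.3f} ns per finger")
```

At 320MHz that leaves about 1.56 nanoseconds per finger, compared with 25 nanoseconds at the 20MHz of the earliest research.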

This finger structure makes the sensors easy to manufacture — even though few fabs have any experience making this new kind of silicon device — because it’s much less sensitive than other kinds of time-of-flight sensors to any defects in the CMOS process. “We are building a hunk of silicon out of CMOS, but it’s unlike the other pieces of silicon on the same chip,” Bamji says. “It’s a standard CMOS process, but we’re building a structure that’s unlike any of the other transistors.”

In the real world

After all the work to make the pixels smaller, it turns out that smaller pixels aren’t ideal for every scene. So to make the sensor more flexible, it can fake having larger pixels by grouping four pixels into one and reading the values from them at the same time (the technical term for that is ‘binning’). Which pixel size works best depends on how far away things are and how much of the laser light makes it back to the array in the sensor.
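Binning can be sketched in a few lines. The sketch assumes the four pixels form a square 2x2 block whose values are summed. The article only says four pixels are read together, and it doesn't state the hardware's exact grouping or whether it sums or averages. The function name is hypothetical.

```python
def bin_2x2(frame):
    """Sum each 2x2 block of a frame (list of equal-length rows) into one output pixel.

    Height and width must be even. The output has half the rows and half the columns.
    """
    h, w = len(frame), len(frame[0])
    if h % 2 or w % 2:
        raise ValueError("frame dimensions must be even")
    return [
        [frame[r][c] + frame[r][c + 1] + frame[r + 1][c] + frame[r + 1][c + 1]
         for c in range(0, w, 2)]
        for r in range(0, h, 2)
    ]


frame = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]
print(bin_2x2(frame))  # [[14, 22], [46, 54]]
```

Suppose photon shot noise dominates. Then summing four pixels collects four times the signal and raises the signal-to-noise ratio by about √4 = 2. That is why bigger effective pixels help when the sensor is starved for laser light.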

“If you have an object that’s close up with plenty of light, you can afford to have small pixels. If you want high resolution for facial recognition, if it’s sitting 60cm away from you and you have plenty of light, you want small pixels,” says Bamji. “Whereas if you’re looking at an object that’s very far, at the back of the room, you may not be able to see it accurately with the small pixels and bigger pixels would be better. Where you’re starving for laser light, or the laser light has been corrupted by sunlight, you need bigger pixels.”

The global shutter helps here, but how much of a problem is sunlight or other ambient light? Sitting in a meeting room on Microsoft’s Silicon Valley campus in the afternoon, Bamji estimates the lighting is 200-300 lux; bright sunlight coming in through the window might take it up to 300 or 400 lux. “Our spec is up to 3,000 lux, and the camera functions up to about 25,000 lux. Outdoors on the beach at noon with the sun at full blast somewhere like Cancun, that’s 100,000 lux.”

Obviously that’s something we won’t be able to test until products are available, but demos we’ve seen in normal office environments seem to bear those figures out (and if you were on the beach, you wouldn’t be able to see a screen either).

How expensive is this fast, powerful sensor going to be? That depends on manufacturing volumes, but if it’s popular enough to be made in large numbers, Bamji expects it to be ‘affordable’ — “Affordable, chip-wise, means single digits. This contains silicon and a laser, and in high volumes those are also single digits.”

What’s still up in the air is which devices beyond HoloLens will use the new sensor, and whether they will sell in large enough numbers to bring the price down through economies of scale.

Project Kinect for Azure.

Image: Microsoft

Microsoft seems unusually open to selling the sensor to other hardware vendors, but industrial partners are likely to be the first buyers, through Project Kinect for Azure. Imagine an industrial image recognition camera that could see all the way to the back of the fridge for stock checking, with built-in machine learning to recognise what’s in the fridge. Devices like that are an ideal example of the ‘intelligent edge’ that Microsoft is so fond of talking about. If this sensor could be made at scale as cheaply as, say, a smartphone camera, we could see lots of devices that take advantage of precise 3D imaging.
