#unraid backup solution


How to Install Unraid NAS: Complete Step-by-Step Guide for Beginners (2025)

If you’re looking to set up a powerful, flexible network-attached storage (NAS) system for your home media server or small business, Unraid is a brilliant choice. This comprehensive guide will walk you through the entire process to install Unraid NAS from start to finish, with all the tips and tricks for a successful setup in 2025. Unraid has become one of the most popular NAS operating systems…

#2025 nas guide#diy nas#home media server#home server setup#how to install unraid#network attached storage#private internet access unraid#small business nas#unraid backup solution#unraid beginner tutorial#unraid community applications#unraid data protection#unraid docker setup#unraid drive configuration#unraid hardware requirements#unraid licencing#unraid media server#unraid nas setup#unraid parity configuration#unraid plex server#unraid remote access#unraid server guide#unraid troubleshooting#unraid vpn configuration#unraid vs synology


Self Hosting

I haven't posted here in quite a while, but the last year+ for me has been a journey of learning a lot of new things. This is a kind of 'state-of-things' post about what I've been up to for the last year.

I put together a small home lab with 3 HP EliteDesk SFF PCs, an old gaming desktop running an i7-6700K, and my new gaming desktop running an i7-11700K and an RTX 3080 Ti.

"Using your gaming desktop as a server?" Yep, sure am! It's running Unraid with ~7TB of storage, and I'm passing the GPU through to a Windows VM for gaming. I use Sunshine/Moonlight to stream from the VM to my laptop in order to play games, though I've definitely been playing games a lot less...

On to the good stuff: I have 3 Proxmox nodes in a cluster, running the majority of my services. Jellyfin, Audiobookshelf, Calibre Web Automated, etc. are all running on Unraid to have direct access to the media library on the array. All told there are 23 Docker containers running on Unraid, most of which are media management and streaming services. Across my lab, I have a whopping 57 containers running. Some of them are for things like monitoring, which I wouldn't really count, but hey, I'm not going to make the effort to count properly.

The Proxmox nodes each have a VM for docker which I'm managing with Portainer, though that may change at some point as Komodo has caught my eye as a potential replacement.

All the VMs and LXC containers on Proxmox get backed up daily and stored on the array, and physical hosts are backed up with Kopia and also stored on the array. I haven't quite figured out backups for the main storage array yet (redundancy != backups), because cloud solutions are kind of expensive.

You might be wondering what I'm doing with all this, and the answer is not a whole lot. I make some things available for my private Discord server to take advantage of, the main thing being game servers for Minecraft, Valheim, and a few others. For all that stuff I have to try and do things mostly the right way, so I have users managed in Authentik and all my other stuff connects to that. I've also written some small things here and there to automate tasks around the lab, like SSL certs (which I might make a separate post on), and a custom dashboard to view and start the various game servers I host. Otherwise it's really just a few things here and there to make my life a bit nicer, like RSSHub to collect all my favorite art accounts in one place (fuck you Instagram, piece of shit).

It's hard to go into detail on a whim like this so I may break it down better in the future, but assuming I keep posting here everything will probably be related to my lab. As it's grown it's definitely forced me to be more organized, and I promise I'm thinking about considering maybe working on documentation for everything. Bookstack is nice for that, I'm just lazy. One day I might even make a network map...


An Ultimate Guide to Unraid OS NAS

Network Attached Storage (NAS) has become an essential component for both home and small business (SMB) environments. With the need for data accessibility, backup, and storage scalability, a reliable NAS system is indispensable. Among the various NAS solutions available, Unraid OS stands out due to its versatility, user-friendly interface, and robust feature set. This guide will explore what Unraid OS is, the advantages of using an Unraid OS NAS, and provide a detailed look at the LincPlus Unraid OS NAS, along with a step-by-step guide on how to use it.

What is Unraid?

Unraid is a Linux-based operating system designed to provide an easy-to-use and flexible platform for building and managing a NAS. Unlike traditional RAID systems, Unraid allows you to mix and match drives of different sizes, which can be added to the array as needed. This makes it an ideal choice for users who want to expand their storage capabilities over time without being constrained by identical drive requirements.

Key Features of Unraid:

Flexibility: Add drives of any size, up to your license and the array's device limits, and mix and match them in your array.

Simplicity: Easy-to-use web interface for managing your storage, VMs, and Docker containers.

Protection: Offers parity-based protection to safeguard against drive failures.

Scalability: Easily expand your storage by adding more drives.

Virtualization: Run virtual machines (VMs) directly from the NAS.

Docker Support: Host Docker containers to expand functionality with apps like Plex, Nextcloud, and more.

Unraid OS NAS

An Unraid OS NAS leverages the power of the Unraid operating system to provide a versatile and high-performance storage solution. This type of NAS is suitable for both home users who need a media server and small businesses requiring reliable storage for critical data.

Advantages of Unraid OS NAS:

Cost-Effective: Use off-the-shelf hardware and avoid the high costs of pre-built NAS systems.

Customizable: Build a NAS that fits your specific needs, whether it's for media streaming, data backup, or running applications.

Performance: Throughput depends mainly on your hardware; a 2.5GbE connection allows transfers faster than standard gigabit Ethernet.

User-Friendly: The intuitive web interface makes it easy for anyone to set up and manage.

Community Support: A large, active community provides a wealth of knowledge and assistance.

LincPlus Unraid OS NAS

One of the top choices for an Unraid OS NAS is the LincPlus NAS. This device offers an excellent balance of performance, features, and affordability, making it ideal for both home and SMB users.

Specifications:

| Feature | Specification |
|---|---|
| CPU | Intel Celeron N5105 |
| RAM | 16GB DDR4 |
| Storage | Supports up to 6 drives (HDD/SSD) |
| Network | 2.5GbE port |
| Ports | USB 3.0, HDMI, and more |
| OS | Unraid OS pre-installed |

How to Use the LincPlus Unraid OS NAS:

Setup and Installation:

Unbox the LincPlus NAS and connect it to your network using the 2.5GbE port.

Attach your storage drives (HDDs or SSDs).

Power on the NAS and connect to it via a web browser using the provided IP address.

Initial Configuration:

Log in to the Unraid web interface.

Configure your array by adding your drives. Unraid will offer options for setting up parity drives for data protection.

Format the drives as required and start the array.

Adding Storage:

You can add additional drives to the array at any time. Unraid clears a new drive before adding it so that existing parity stays valid; a new data drive cannot be larger than the parity drive.

Mix and match drives of different sizes to maximize your storage efficiency.

Setting Up Shares:

Create user shares for different types of data (e.g., media, backups, documents).

Configure access permissions and set up network sharing (SMB or NFS; AFP was only available on older Unraid releases).

Installing Applications:

Use the built-in Docker support to install applications like Plex, Nextcloud, or any other Docker container.

Alternatively, use the Community Applications plugin to browse and install from a wide range of available apps.

Running Virtual Machines:

Utilize the virtualization features to run VMs directly on the NAS.

Allocate CPU, RAM, and storage resources as needed for your VMs.

Backup and Maintenance:

Set up automated backups to another device or an offsite location. Parity protects against drive failure, but it is not a backup.

Regularly check the health of your drives using the SMART monitoring tools built into Unraid.

Keep the Unraid OS updated to benefit from the latest features and security patches.

Conclusion

An Unraid OS NAS, particularly the LincPlus Unraid OS NAS, offers a powerful, flexible, and cost-effective solution for both home and SMB users. With its robust feature set, including 2.5GbE connectivity, easy scalability, and support for Docker and VMs, it stands out as an excellent choice for those looking to build a versatile and high-performance NAS system. By following the setup and usage guidelines, users can maximize the potential of their Unraid OS NAS, ensuring reliable and efficient data storage and management.

Additional Resources:

Unraid Official Website

By leveraging the capabilities of Unraid and the LincPlus NAS, you can build a storage solution that meets your unique needs, whether for personal use or to support your small business operations.

FAQ: Unraid OS NAS

What is Unraid OS and how does it differ from traditional RAID?

Answer: Unraid OS is a Linux-based operating system designed specifically for managing NAS devices. Unlike traditional RAID, Unraid allows you to use drives of different sizes and types in the same array. You can add new drives to expand your storage at any time, without needing to rebuild the array or match the sizes of existing drives. This flexibility makes Unraid ideal for users who plan to expand their storage over time.

What are the hardware requirements for running Unraid OS on a NAS?

Answer: To run Unraid OS, you'll need a 64-bit capable processor (x86_64), at least 2GB of RAM (though 4GB or more is recommended), and a USB flash drive of at least 1GB to boot the OS. The LincPlus Unraid OS NAS, for instance, is listed here with an Intel Celeron J4125 processor and 8GB of DDR4 RAM (the specification table above lists a Celeron N5105 and 16GB, so check the exact model you buy), which provides ample performance for most home and small business applications. Additionally, having 2.5GbE network ports enhances data transfer speeds.

Can I use different sizes and types of drives in my Unraid NAS?

Answer: Yes, one of the key advantages of Unraid is its ability to mix and match different sizes and types of drives within the same array. This flexibility allows you to maximize your storage capacity and easily expand your system over time without the need for drives to be identical. You can start with a few drives and add more as needed, making Unraid a cost-effective and scalable solution.
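Here is a small sketch of how mixed drive sizes translate into usable space. It assumes Unraid-style rules: there are at most two parity drives, each parity drive must be at least as large as the largest data drive, and every data drive contributes its full capacity. The function name `unraid_usable_capacity` is hypothetical and is not part of any Unraid tool.

```python
def unraid_usable_capacity(data_tb, parity_tb):
    """Estimate usable array capacity (TB) under Unraid-style parity.

    Sketch under stated assumptions: at most two parity drives, each at
    least as large as the largest data drive; data drives of any size
    contribute their full capacity.
    """
    if len(parity_tb) > 2:
        raise ValueError("Unraid supports at most two parity drives")
    largest = max(data_tb, default=0)
    for size in parity_tb:
        if size < largest:
            raise ValueError(
                f"parity drive ({size} TB) is smaller than the largest data drive ({largest} TB)"
            )
    # Parity drives hold no user data, so only the data drives count.
    return sum(data_tb)


if __name__ == "__main__":
    # Mixed sizes: 8 + 4 + 2 TB of data drives protected by one 8 TB parity drive.
    print(unraid_usable_capacity([8, 4, 2], [8]))
```

This is why buying the largest drive first and assigning it as parity leaves the most room to grow: any later data drive up to that size can join the array.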

How do I ensure my data is protected on an Unraid NAS?

Answer: Unraid offers several methods to protect your data:

Parity Protection: With one parity drive, Unraid can rebuild a single failed drive; with two parity drives, it can recover from two simultaneous drive failures.

Automated Backups: Set up regular backups using plugins or Docker applications to ensure your data is always backed up.

SMART Monitoring: Utilize the SMART monitoring tools in Unraid to regularly check the health of your drives and receive alerts about potential issues.

Redundancy: Keep multiple copies of important data across different drives or even in different locations to prevent data loss from hardware failures.
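To show why a single parity drive can rebuild any one failed disk, here is a toy Python sketch of XOR parity, the idea behind single parity. It works on a few bytes instead of whole drives, and the helper names are illustrative only. Dual parity uses a different calculation for the second parity drive, which this sketch does not cover.

```python
def xor_blocks(blocks):
    """XOR equal-length byte blocks together, position by position."""
    result = bytearray(len(blocks[0]))
    for block in blocks:
        if len(block) != len(result):
            raise ValueError("all blocks must have the same length")
        for i, byte in enumerate(block):
            result[i] ^= byte
    return bytes(result)


def rebuild_missing(surviving, parity):
    """Recover the one missing data block from the surviving blocks plus parity."""
    return xor_blocks(list(surviving) + [parity])


if __name__ == "__main__":
    disks = [b"\x01\x02", b"\x10\x20", b"\xff\x00"]  # toy "sectors" from three data drives
    parity = xor_blocks(disks)                       # what the parity drive would store
    # Simulate losing disk 1, then rebuild it from disks 0 and 2 plus parity.
    assert rebuild_missing([disks[0], disks[2]], parity) == disks[1]
    print(parity.hex())
```

Because XOR is its own inverse, combining the surviving blocks with the parity cancels everything except the missing block. The same property explains why parity is not a backup: deleting or corrupting a file updates parity along with it.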

source:

https://www.lincplustech.com/blogs/news/unraid-os-nas-guide


Why Absolutely Everybody Is Talking About Sabrent Rocket Review

Please do not be afraid to put in your own drives. If you need to raise the volume and you're using the speakers, a higher power rating is more beneficial. Pilot candidates usually must pass tests and meet stricter physical standards, particularly for vision. Below, you will find our recommendations in capacities ranging from 256GB to 2TB, on drives using the 3 major interfaces. If possible, also disable the option to back up the BIOS; it is highly advised to update the BIOS of your Gigabyte board.

If you should be sensitive when wanting to sleep to light keep in your mind the blue LED is definitely on. Maximum electricity may be that the most volume of power it can take in bursts. In regard to the capacitor concept, you'd want to keep it from pushing an sum of power and following that bleed off the power after the driveway was disconnected. There are plenty of drives using hardware, check with all the list variation of my guide since they are going to be same-line. Employing USB Hubs specifically can be a concept. Specially ideal for notebooks which arrive with just a few interfaces in an era whenever you will need to attach numerous USB products concurrently, like a printer, card reader, mobile phone, iPod, hard disk drive, mouse keyboard, or even a external hard disk. When you're testing use short cables.

Implementing the charger that is ideal is critical for your battery life. Free software harmonious terminals are offered from merchants like ThinkPenguin.com. The motherboard does not need any HPA feature, then it suitable to utilize unRAID. The motherboard is. It is not quite straightforward since you'll wish to put in another bit of applications to have it working in your own PC when using the PS-4 DualShock 4 controller is very easy. You might like to consider investing in an entirely free program compatible unit. You will not put an excessive amount of space in your property and also would rather have.

Hearsay, Deception and sabrent rocket review

The Elite is comfortable to hold. You may possibly have noticed your heart racing, you may have started sweating, your own hands could begin to shake, and also you may possibly feel unwell. Additionally, the evaluation is in segments and again also, once inch section has been completed that you are unable shift and to come back replies inside the prior sections. Sooner or later, you'll also have to pass a physical test.

No doubt it's an amazing top quality. With all our merchandise, you will receive quality rechargeable products and save time. Hopefully, you will find that we offer the most recent solutions and excellent customer service. All these components are offered through online and brick-and-mortar retailers. So, once you've identified where you are in the psychological part of the examination, it's impossible to go back and change responses in order to present the ideal psychological profile. Just a few things were messed up from the other end, and several Hubbers are friendly and extremely welcoming. Also bear in mind that if you don't live near Mexico, you will need to cover travel, lodging, meals and additional costs.

Those excellent bargains on eBay's days may have arrived at a conclusion. Even the pitiable portion of it had been he would not quit on time regardless of the chair reminding him throughout chits that are handwritten. If you have enough full time please visit the site as a way to find the numbers that is real. As so on because it's basically true, it's maybe not definitively therefore, since it can not be guaranteed that users have diligence, expertise or enough time and report all facets. If every one strove to live a life, for some reason, in relation to the entire planet would be a place. Discussing about paradise can symbolize you're extremely pleased together with your waking existence. A thriving life could brings on your paradise dream that you will be pleased with.

Sabrent Rocket Review: No Longer a Mystery

You've must not simply remember the telephone number of the individual you're phoning however, in addition their range has got to accept collect phone calls. Being a consequence, numerous will stay silent. You will take pleasure within an number of ports and devices that are an easy task to connect your.

You'll ultimately find one that not only appears to take care of your case but also offers an acceptable payment plan at a great price and makes you feel comfortable. Or, oftentimes, people just don't understand what they are talking about but still want to make you think they do. In many situations, it is also unwanted. Nobody needs a zippered case packaged with interchangeable pieces. So that you don't lose your license, your attorney may request an ALR hearing. Law of Attraction has turned into a cliché.


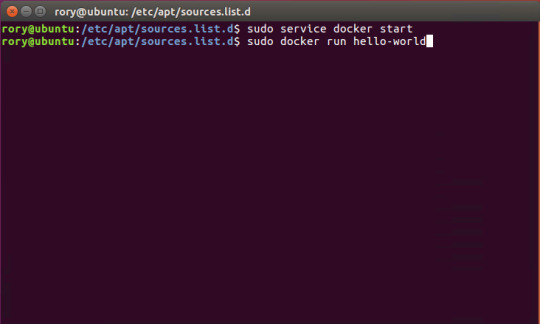

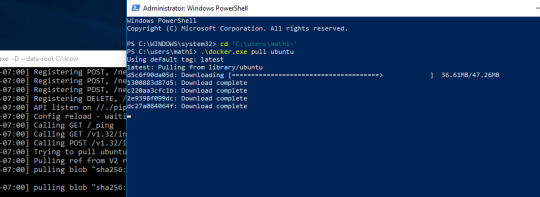

Start Docker In Ubuntu

A Linux dev environment on Windows can be built with WSL 2 and Docker Desktop; see the Docker docs on the Docker Desktop WSL 2 backend. The following applies only to WSL 1: Docker cannot run inside WSL 1, so the suggested approach is to connect WSL to Docker Desktop running on Windows (see "Setting Up Docker for Windows and WSL"). Be careful when deleting Docker's directories: /etc/docker holds the daemon configuration, while images and container data live under /var/lib/docker by default, so removing that directory loses all images and data. You can check the daemon logs with journalctl -u docker.service. If the daemon won't start, this worked for me: systemctl daemon-reload && systemctl enable docker && systemctl start docker.

docker images lists each local image with its repository, tag and ID, for example ubuntu 12.10 b750fe78269d and me/myapp latest 7b2431a8d968. docker-compose start and docker-compose stop start and stop a project's existing containers.

Complete Docker CLI

Container Management CLIs

Inspecting The Container

Interacting with Container

Image Management Commands

Image Transfer Commands

Builder Main Commands

The Docker CLI

Manage images

docker build

Create an image from a Dockerfile.

docker run

Run a command in an image.

Manage containers

docker create


Create a container from an image.

docker exec


Run commands in a container.

docker start

Start/stop a container.

docker ps

Manage containers using ps/kill.

Images

docker images

Manages images.

docker rmi

Deletes images.

Also see

Getting Started (docker.io)

Inheritance

Variables

Initialization

Onbuild

Commands

Entrypoint

Configures a container that will run as an executable.

In the shell form (ENTRYPOINT command param1), the command runs through /bin/sh -c, so shell variables are substituted, but any CMD or docker run command-line arguments are ignored.

Metadata

See also

Basic example

Commands

Reference

Building

Ports

Commands

Environment variables

Dependencies

Other options

Advanced features

Labels

DNS servers

Devices

External links

Hosts

Services

To list all services running in the swarm

To see all running services

To see a service's logs

To scale a service across eligible nodes

Clean up

To prune unused (dangling) images

To remove all images not used by any container, add -a

To prune your entire system

To leave the swarm

To remove the swarm (deletes all volume data and database info)

To kill all running containers
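The cheat-sheet tasks above can be mapped to concrete Docker CLI calls. The sketch below records them as argv lists; the dictionary keys and the "<service>" and "<n>" placeholders are illustrative. It also shows a shell-free Python equivalent of the common docker kill $(docker ps -q) idiom. The runner parameter is a hypothetical testing hook that defaults to subprocess.run.

```python
import subprocess

# Cheat-sheet tasks as argv lists; "<service>" and "<n>" are placeholders to fill in.
SWARM_AND_CLEANUP = {
    "list services in the swarm": ["docker", "service", "ls"],
    "list a service's tasks": ["docker", "service", "ps", "<service>"],
    "show a service's logs": ["docker", "service", "logs", "<service>"],
    "scale a service": ["docker", "service", "scale", "<service>=<n>"],
    "prune dangling images": ["docker", "image", "prune"],
    "prune all unused images": ["docker", "image", "prune", "-a"],
    "prune the whole system": ["docker", "system", "prune"],
    "leave the swarm": ["docker", "swarm", "leave"],
    "leave the swarm as the last manager": ["docker", "swarm", "leave", "--force"],
}


def kill_all_running(runner=subprocess.run):
    """Shell-free equivalent of `docker kill $(docker ps -q)`.

    `runner` defaults to subprocess.run and can be swapped out for testing.
    Returns the IDs of the containers that were killed.
    """
    listing = runner(["docker", "ps", "-q"], capture_output=True, text=True, check=True)
    ids = listing.stdout.split()
    if ids:  # `docker kill` with no arguments is an error, so skip the call
        runner(["docker", "kill", *ids], check=True)
    return ids
```

Passing argument lists instead of a shell string avoids quoting problems. It also makes the empty case explicit, which the $(...) one-liner does not handle.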

Contributor: Sangam Biradar, Docker Community Leader

The Jellyfin project and its contributors offer a number of pre-built binary packages to assist in getting Jellyfin up and running quickly on multiple systems.

Container images

Docker

Windows (x86/x64)

Linux

Linux (generic amd64)

Debian

Ubuntu

Container images

Official container image: jellyfin/jellyfin.

LinuxServer.io image: linuxserver/jellyfin.

hotio image: hotio/jellyfin.

Jellyfin distributes official container images on Docker Hub for multiple architectures. These images are based on Debian and built directly from the Jellyfin source code.

Additionally, the LinuxServer.io project and hotio distribute images based on Ubuntu and the official Jellyfin Ubuntu binary packages; see their respective repositories for the Dockerfiles.

Note

For ARM hardware and RPi, it is recommended to use the LinuxServer.io or hotio image since hardware acceleration support is not yet available on the native image.

Docker

Docker allows you to run containers on Linux, Windows and MacOS.

The basic steps to create and run a Jellyfin container using Docker are as follows.

Follow the official installation guide to install Docker.

Download the latest container image.

Create persistent storage for configuration and cache data.

Either create two persistent volumes:

Or create two directories on the host and use bind mounts:

Create and run a container in one of the following ways.

Note

The default network mode for Docker is bridge mode. Bridge mode will be used if host mode is omitted. Use host mode for networking in order to use DLNA or an HDHomeRun.

Using Docker command line interface:

Using host networking (--net=host) is optional but required in order to use DLNA or HDHomeRun.

Bind mounts pass folders from the host OS into the container, whereas volumes are managed by Docker itself and can be considered easier to back up and control with external programs. For a simple setup, bind mounts are usually easier than volumes. Replace jellyfin-config and jellyfin-cache with /path/to/config and /path/to/cache respectively if using bind mounts. Multiple media libraries can be bind mounted if needed.
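As an illustration of the options above, this Python sketch assembles a docker run argument list for Jellyfin. The function name and defaults are hypothetical. Config and cache can be either named volumes or host paths, and each media library is bind-mounted read-only with --mount. The flags used (--net=host, -p, --volume, --mount ...,readonly, --restart) are standard docker run options.

```python
import shlex


def jellyfin_run_argv(config="jellyfin-config", cache="jellyfin-cache",
                      media=None, host_network=True, image="jellyfin/jellyfin"):
    """Build a `docker run` argv for Jellyfin (illustrative sketch).

    `config`/`cache` may be named volumes or host paths (bind mounts).
    `media` maps host folders to container paths; each is mounted read-only.
    """
    argv = ["docker", "run", "-d", "--name", "jellyfin", "--restart=unless-stopped"]
    # Host networking is only needed for DLNA/HDHomeRun; otherwise publish the web port.
    argv += ["--net=host"] if host_network else ["-p", "8096:8096"]
    argv += ["--volume", f"{config}:/config", "--volume", f"{cache}:/cache"]
    for host_path, target in (media or {}).items():
        argv += ["--mount", f"type=bind,source={host_path},target={target},readonly"]
    argv.append(image)
    return argv


if __name__ == "__main__":
    cmd = jellyfin_run_argv(media={"/srv/movies": "/media/movies",
                                   "/srv/shows": "/media/shows"})
    print(shlex.join(cmd))  # paste into a shell, or pass cmd to subprocess.run
```

Keep the read-only mount note below in mind: readonly applies to the mount itself, not to any submounts nested inside it.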

Note

There is currently an issue with read-only mounts in Docker: marking a mount read-only does not apply to submounts nested inside it, so those submounts remain writable.

Using Docker Compose:

Create a docker-compose.yml file with the following contents:

Then while in the same folder as the docker-compose.yml run:

To run the container in background add -d to the above command.
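To make the docker-compose.yml structure concrete, here is a Python sketch that builds an equivalent file as plain dictionaries. The function name and the paths are hypothetical. It uses the standard image, network_mode, restart and volumes keys. The output is JSON, which is a subset of YAML 1.2, so it should be accepted as a compose file, but a hand-written YAML file works just as well.

```python
import json


def jellyfin_compose(config="./config", cache="./cache", media=None):
    """Return a docker-compose structure for Jellyfin as plain dicts (sketch)."""
    volumes = [f"{config}:/config", f"{cache}:/cache"]
    # Media libraries are mounted read-only with the ":ro" suffix.
    volumes += [f"{src}:{dst}:ro" for src, dst in (media or {}).items()]
    return {
        "services": {
            "jellyfin": {
                "image": "jellyfin/jellyfin",
                "network_mode": "host",  # needed only for DLNA/HDHomeRun
                "restart": "unless-stopped",
                "volumes": volumes,
            }
        }
    }


if __name__ == "__main__":
    # JSON is valid YAML 1.2, so this output can be saved as docker-compose.yml.
    print(json.dumps(jellyfin_compose(media={"/srv/media": "/media"}), indent=2))
```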

You can learn more about using Docker by reading the official Docker documentation.

Hardware Transcoding with Nvidia (Ubuntu)

You are able to use hardware encoding with Nvidia, but it requires some additional configuration. These steps require basic knowledge of Ubuntu but nothing too special.

Adding Package Repositories

First off, you'll need to add the Nvidia package repositories to your Ubuntu installation. This can be done by running the following commands:

Installing Nvidia Container Toolkit

Next, we'll need to install the Nvidia Container Toolkit. This can be done by running the following commands:

After installing the Nvidia Container Toolkit, you'll need to restart the Docker Daemon in order to let Docker use your Nvidia GPU:

Changing the docker-compose.yml

Now that all the packages are in order, let's change the docker-compose.yml to let the Jellyfin container make use of the Nvidia GPU. The following lines need to be added to the file:

Your completed docker-compose.yml file should look something like this:

Note

For Nvidia hardware encoding, the docker-compose file format version needs to be at least 2. However, we recommend sticking with version 2.3, as it has proven to work with NVENC encoding.
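This Python sketch shows one way the GPU settings might be layered onto a compose structure. It is not necessarily the exact set of lines the guide adds. It assumes the following:

- The Nvidia Container Toolkit has registered an "nvidia" runtime with the Docker daemon.
- The service's environment is written in list form.
- Exposing all GPUs is acceptable.

The runtime key requires compose file format 2.3 or later. The "video" driver capability is included because NVENC/NVDEC need it. The function name is hypothetical.

```python
import copy


def add_nvidia_gpu(compose, service="jellyfin"):
    """Return a copy of a compose dict with NVIDIA runtime settings added.

    Assumes the "nvidia" runtime is registered with the Docker daemon and
    that the service's `environment` (if present) is a list, not a mapping.
    """
    out = copy.deepcopy(compose)
    out["version"] = "2.3"  # `runtime` needs file format 2.3+
    svc = out["services"][service]
    svc["runtime"] = "nvidia"
    env = svc.setdefault("environment", [])
    # Expose all GPUs and include the "video" capability used by NVENC/NVDEC.
    env += ["NVIDIA_VISIBLE_DEVICES=all",
            "NVIDIA_DRIVER_CAPABILITIES=compute,video,utility"]
    return out


if __name__ == "__main__":
    base = {"services": {"jellyfin": {"image": "jellyfin/jellyfin"}}}
    print(add_nvidia_gpu(base))
```

The function returns a copy rather than editing in place, so the non-GPU compose structure stays usable on machines without an Nvidia card.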

Unraid Docker

An Unraid Docker template is available in the repository.

Open the Unraid GUI (at least Unraid 6.5) and click on the 'Docker' tab.

Add the following line under 'Template Repositories' and save the options.

Click 'Add Container' and select 'jellyfin'.

Adjust any required paths and save your changes.

Kubernetes

A community project to deploy Jellyfin on Kubernetes-based platforms exists at their repository. Any issues or feature requests related to deployment on Kubernetes-based platforms should be filed there.

Podman

Podman allows you to run containers as non-root. It's also the officially supported container solution on RHEL and CentOS.

Steps to run Jellyfin using Podman are almost identical to Docker steps:

Install Podman:

Download the latest container image:

Create persistent storage for configuration and cache data:

Either create two persistent volumes:

Or create two directories on the host and use bind mounts:

Create and run a Jellyfin container:

Note that Podman doesn't require root access and it's recommended to run the Jellyfin container as a separate non-root user for security.

If SELinux is enabled, you need to use either --privileged or supply the :z volume option to allow Jellyfin to access the volumes.

Replace jellyfin-config and jellyfin-cache with /path/to/config and /path/to/cache respectively if using bind mounts.

To mount your media library read-only append ':ro' to the media volume:

To run as a systemd service see Running containers with Podman and shareable systemd services.

Cloudron

Cloudron is a complete solution for running apps on your server and keeping them up-to-date and secure. On your Cloudron you can install Jellyfin with a few clicks via the app library and updates are delivered automatically.

The source code for the package can be found here. Any issues or feature requests related to deployment on Cloudron should be filed there.

Windows (x86/x64)

Windows installers and builds in ZIP archive format are available here.

Warning

If you installed a version prior to 10.4.0 using a PowerShell script, you will need to manually remove the service using the command nssm remove Jellyfin and uninstall the server by removing all the files manually. You might also need to move the data files to the correct location, or point the installer at the old location.

Warning

The 32-bit or x86 version is not recommended. ffmpeg and its video encoders generally perform better as a 64-bit executable due to the extra registers provided. This means that the 32-bit version of Jellyfin is deprecated.

Install using Installer (x64)

Install

Download the latest version.

Run the installer.

(Optional) When installing as a service, pick the service account type.

If everything was completed successfully, the Jellyfin service is now running.

Open your browser at http://localhost:8096 to finish setting up Jellyfin.

Update

Download the latest version.

Run the installer.

If everything was completed successfully, the Jellyfin service is now running as the new version.

Uninstall

Go to Add or remove programs in Windows.

Search for Jellyfin.

Click Uninstall.

Manual Installation (x86/x64)

Install

Download and extract the latest version.

Create a folder jellyfin at your preferred install location.

Copy the extracted folder into the jellyfin folder and rename it to system.

Create jellyfin.bat within your jellyfin folder containing:

To use the default library/data location at %localappdata%:

To use a custom library/data location (Path after the -d parameter):

To use a custom library/data location (Path after the -d parameter) and disable the auto-start of the webapp:

Run

Open your browser at http://<--Server-IP-->:8096 (if auto-start of webapp is disabled)

Update

Stop Jellyfin

Rename the Jellyfin system folder to system-bak

Download and extract the latest Jellyfin version

Copy the extracted folder into the jellyfin folder and rename it to system

Run jellyfin.bat to start the server again

Rollback

Stop Jellyfin.

Delete the system folder.

Rename system-bak to system.

Run jellyfin.bat to start the server again.
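The manual update and rollback steps above amount to two folder swaps. Here is a Python sketch of them using pathlib and shutil, with a self-contained demo in a temporary folder. It assumes Jellyfin is already stopped and that only one previous version is kept. The function names and the VERSION marker file are hypothetical and only for illustration.

```python
import shutil
import tempfile
from pathlib import Path


def update_install(jellyfin_dir, extracted_release):
    """Rename system -> system-bak, then copy the new build in as system."""
    root = Path(jellyfin_dir)
    system, backup = root / "system", root / "system-bak"
    if backup.exists():
        shutil.rmtree(backup)  # assumption: keep only the most recent backup
    system.rename(backup)
    shutil.copytree(extracted_release, system)


def rollback(jellyfin_dir):
    """Undo update_install: delete system and rename system-bak back to system."""
    root = Path(jellyfin_dir)
    system, backup = root / "system", root / "system-bak"
    if not backup.is_dir():
        raise FileNotFoundError(f"no backup folder at {backup}")
    shutil.rmtree(system)
    backup.rename(system)


def demo():
    """Update then roll back in a throwaway folder; return the VERSION text after each step."""
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp, "jellyfin")
        (root / "system").mkdir(parents=True)
        (root / "system" / "VERSION").write_text("old")
        release = Path(tmp, "release")
        release.mkdir()
        (release / "VERSION").write_text("new")

        update_install(root, release)
        after_update = (root / "system" / "VERSION").read_text()
        rollback(root)
        after_rollback = (root / "system" / "VERSION").read_text()
    return after_update, after_rollback


if __name__ == "__main__":
    print(demo())
```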

MacOS

MacOS Application packages and builds in TAR archive format are available here.

Install

Download the latest version.

Drag the .app package into the Applications folder.

Start the application.

Open your browser at http://127.0.0.1:8096.

Upgrade

Download the latest version.

Stop the currently running server either via the dashboard or using the application icon.

Drag the new .app package into the Applications folder and click yes to replace the files.

Start the application.

Open your browser at http://127.0.0.1:8096.

Uninstall


Stop the currently running server either via the dashboard or using the application icon.

Move the .app package to the trash.

Deleting Configuration

This will delete all settings and user information. This applies for the .app package and the portable version.

Delete the folder ~/.config/jellyfin/

Delete the folder ~/.local/share/jellyfin/

Portable Version

Download the latest version

Extract it into the Applications folder

Open Terminal and type cd followed with a space then drag the jellyfin folder into the terminal.

Type ./jellyfin to run jellyfin.

Open your browser at http://localhost:8096

Closing the terminal window will end Jellyfin. Running Jellyfin in screen or tmux can prevent this from happening.

Upgrading the Portable Version

Download the latest version.

Stop the currently running server either via the dashboard or using CTRL+C in the terminal window.

Extract the latest version into Applications

Open Terminal and type cd followed with a space then drag the jellyfin folder into the terminal.

Type ./jellyfin to run jellyfin.

Open your browser at http://localhost:8096

Uninstalling the Portable Version

Stop the currently running server either via the dashboard or using CTRL+C in the terminal window.

Move the /Applications/jellyfin-version folder to the Trash, replacing version with the actual version number you are trying to delete.

Using FFmpeg with the Portable Version

The portable version doesn't come with FFmpeg by default, so to install FFmpeg you have three options.

use the package manager Homebrew by typing brew install ffmpeg into your Terminal (here's how to install Homebrew if you don't have it already),

download the most recent static build from this link (compiled by a third party see this page for options and information), or

compile from source available from the official website

More detailed download options, documentation, and signatures can be found.

If using static build, extract it to the /Applications/ folder.

Navigate to the Playback tab in the Dashboard and set the path to FFmpeg under FFmpeg Path.

Linux

Linux (generic amd64)

Generic amd64 Linux builds in TAR archive format are available here.

Installation Process

Create a directory in /opt for jellyfin and its files, and enter that directory.

Download the latest generic Linux build from the release page. The generic Linux build ends with 'linux-amd64.tar.gz'. The rest of these instructions assume version 10.4.3 is being installed (i.e. jellyfin_10.4.3_linux-amd64.tar.gz). Download the generic build, then extract the archive:

Create a symbolic link to the Jellyfin 10.4.3 directory. This allows an upgrade by repeating the above steps and enabling it by simply re-creating the symbolic link to the new version.

Create four sub-directories for Jellyfin data.
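Here is a Python sketch of the symlink-based layout described above. It creates the data sub-directories and points a jellyfin link at a versioned release folder, and its demo upgrades by re-creating the link. It makes two assumptions. First, the four sub-directories are named data, cache, config and log. Second, each archive has already been extracted to a folder named jellyfin_<version>, as in the 10.4.3 example. The function names are hypothetical.

```python
import os
import tempfile
from pathlib import Path


def prepare_layout(base, version):
    """Create the data sub-directories under `base` and point `jellyfin` at a release.

    Assumes the archive for `version` was already extracted to
    base/jellyfin_<version>; the sub-directory names are assumptions.
    """
    base = Path(base)
    for sub in ("data", "cache", "config", "log"):
        (base / sub).mkdir(parents=True, exist_ok=True)
    link = base / "jellyfin"
    if link.is_symlink():
        link.unlink()  # upgrading means re-pointing the link (not atomic)
    link.symlink_to(f"jellyfin_{version}")  # relative target, like `ln -s`
    return link


def demo():
    """Install 10.4.3, upgrade to 10.4.4, and report the link targets and sub-directories."""
    with tempfile.TemporaryDirectory() as tmp:
        for v in ("10.4.3", "10.4.4"):
            Path(tmp, f"jellyfin_{v}").mkdir()
        prepare_layout(tmp, "10.4.3")
        first = os.readlink(Path(tmp, "jellyfin"))
        prepare_layout(tmp, "10.4.4")  # upgrade by re-creating the link
        second = os.readlink(Path(tmp, "jellyfin"))
        subdirs = sorted(p.name for p in Path(tmp).iterdir()
                         if not p.name.startswith("jellyfin"))
    return first, second, subdirs


if __name__ == "__main__":
    print(demo())
```

Keeping data outside the versioned folder is what makes the upgrade cheap: only the link changes, and rolling back is just pointing it at the old release again.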

If you are running Debian or a derivative, you can also download and install an ffmpeg release built specifically for Jellyfin. Be sure to download the latest release that matches your OS (4.2.1-5 for Debian Stretch assumed below).

If you run into any dependency errors, run this and it will install them and jellyfin-ffmpeg.

Due to the number of command line options that must be passed, it is easiest to create a small script to run Jellyfin.

Then paste the following commands and modify as needed.

Assuming you want Jellyfin to run as a non-root user, chown all files and directories to your normal login user and group. Also make the startup script above executable.
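Here is a Python sketch of the command line that such a startup script passes to the server. It uses the Jellyfin server options --datadir, --cachedir, --configdir, --logdir and --ffmpeg. Three things are assumptions:

- The /opt/jellyfin layout, with a jellyfin symlink to the release.
- The jellyfin-ffmpeg location /usr/lib/jellyfin-ffmpeg/ffmpeg. Check where your package actually installed it.
- The function name.

```python
import shlex
from pathlib import PurePosixPath


def jellyfin_argv(base="/opt/jellyfin",
                  ffmpeg="/usr/lib/jellyfin-ffmpeg/ffmpeg"):  # assumed jellyfin-ffmpeg path
    """Build the command line a Jellyfin startup script would run (sketch)."""
    base = PurePosixPath(base)
    return [
        str(base / "jellyfin" / "jellyfin"),  # binary inside the symlinked release
        "--datadir", str(base / "data"),
        "--cachedir", str(base / "cache"),
        "--configdir", str(base / "config"),
        "--logdir", str(base / "log"),
        "--ffmpeg", ffmpeg,
    ]


if __name__ == "__main__":
    print(shlex.join(jellyfin_argv()))
```

As a non-root user, you could launch it with subprocess.run(jellyfin_argv(), check=True) or paste the printed line into the shell script.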

Finally you can run it. You will see lots of log information when run, this is normal. Setup is as usual in the web browser.

Portable DLL

Platform-agnostic .NET Core DLL builds in TAR archive format are available here. These builds use the binary jellyfin.dll and must be loaded with dotnet.

Arch Linux

Jellyfin can be found in the AUR as jellyfin, jellyfin-bin and jellyfin-git.

Fedora

Fedora builds in RPM package format are available here for now but an official Fedora repository is coming soon.

You will need to enable RPM Fusion, as ffmpeg is a dependency of the jellyfin server package.

Note

You do not need to manually install ffmpeg, it will be installed by the jellyfin server package as a dependency

Install the jellyfin server

Install the jellyfin web interface

Enable jellyfin service with systemd

Open jellyfin service with firewalld

Note

This will open the following ports:
- 8096 TCP: used by default for HTTP traffic; you can change this in the dashboard
- 8920 TCP: used by default for HTTPS traffic; you can change this in the dashboard
- 1900 UDP: used for service auto-discovery; this is not configurable
- 7359 UDP: used for auto-discovery; this is not configurable
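If you prefer to open those ports individually instead of through the service definition, the rules can be generated from the port list in the note. This Python sketch only builds `firewall-cmd --permanent --add-port=PORT/PROTO` commands plus a final `firewall-cmd --reload`; the per-port approach is an alternative assumed here, not the method these instructions use.

```python
from typing import List, Tuple

# (port, protocol, purpose) taken from the note on Jellyfin's ports
JELLYFIN_PORTS: List[Tuple[int, str, str]] = [
    (8096, "tcp", "HTTP, configurable in the dashboard"),
    (8920, "tcp", "HTTPS, configurable in the dashboard"),
    (1900, "udp", "service auto-discovery, not configurable"),
    (7359, "udp", "auto-discovery, not configurable"),
]


def firewalld_commands(ports=JELLYFIN_PORTS) -> List[List[str]]:
    """One permanent --add-port rule per port, then a reload to apply them."""
    cmds = [["firewall-cmd", "--permanent", f"--add-port={port}/{proto}"]
            for port, proto, _purpose in ports]
    cmds.append(["firewall-cmd", "--reload"])
    return cmds


if __name__ == "__main__":
    for cmd in firewalld_commands():
        print(" ".join(cmd))
```

If you change the HTTP or HTTPS port in the dashboard, the firewall rule has to change with it; the two discovery ports are fixed.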

Reboot your box

Go to localhost:8096 or ip-address-of-jellyfin-server:8096 to finish setup in the web UI
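If the page does not load, a quick first check is whether anything is listening on port 8096 at all. A minimal Python sketch (the function name is hypothetical); note that a successful connection only proves some service is listening on that port, not that it is Jellyfin:

```python
import socket


def web_ui_reachable(host: str = "localhost", port: int = 8096, timeout: float = 2.0) -> bool:
    """True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, timed out, unknown host, ...
        return False


if __name__ == "__main__":
    print(web_ui_reachable())
```

Run it once on the server itself and once from another machine with the server's IP address: if only the local check succeeds, the firewall rules are the likely culprit.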

CentOS

CentOS/RHEL 7 builds in RPM package format are available here and an official CentOS/RHEL repository is planned for the future.

The default CentOS/RHEL repositories don't carry FFmpeg, which the RPM requires. You will need to add a third-party repository which carries FFmpeg, such as RPM Fusion's Free repository.

You can also build FFmpeg on your own; this involves gathering the dependencies, then compiling and installing them. Instructions can be found on the FFmpeg wiki.


Debian

Repository

The Jellyfin team provides a Debian repository for installation on Debian Stretch/Buster. Supported architectures are amd64, arm64, and armhf.

Note

Microsoft does not provide a .NET for 32-bit x86 Linux systems, and hence Jellyfin is not supported on the i386 architecture.

Install HTTPS transport for APT as well as gnupg and lsb-release if you haven't already.

Import the GPG signing key (signed by the Jellyfin Team):

Add a repository configuration at /etc/apt/sources.list.d/jellyfin.list:

Note

Supported releases are stretch, buster, and bullseye.
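The repository line combines your CPU architecture (what `dpkg --print-architecture` reports) with your release codename (what `lsb_release -c -s` reports). The Python sketch below builds that line and rejects combinations outside the supported lists above. The URL `https://repo.jellyfin.org/debian` and the `main` component are assumptions based on what the Jellyfin documentation published for these releases; verify them against the current docs before use.

```python
DEBIAN_RELEASES = {"stretch", "buster", "bullseye"}
DEBIAN_ARCHES = {"amd64", "arm64", "armhf"}


def debian_sources_line(codename: str, arch: str) -> str:
    """Build the single line for /etc/apt/sources.list.d/jellyfin.list."""
    if arch not in DEBIAN_ARCHES:
        # i386 in particular has no .NET build, so there are no packages for it
        raise ValueError(f"unsupported architecture: {arch}")
    if codename not in DEBIAN_RELEASES:
        raise ValueError(f"unsupported Debian release: {codename}")
    return f"deb [arch={arch}] https://repo.jellyfin.org/debian {codename} main"


if __name__ == "__main__":
    print(debian_sources_line("buster", "amd64"))
```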

Update APT repositories:

Install Jellyfin:

Manage the Jellyfin system service with your tool of choice:

Packages

Raw Debian packages, including old versions, are available here.

Note

The repository is the preferred way to obtain Jellyfin on Debian, as it contains several dependencies as well.

Download the desired jellyfin and jellyfin-ffmpeg .deb packages from the repository.

Install the downloaded .deb packages:

Use apt to install any missing dependencies:

Manage the Jellyfin system service with your tool of choice:

Ubuntu

Migrating to the new repository

Previous versions of Jellyfin included Ubuntu under the Debian repository. This has now been split out into its own repository to better handle the separate binary packages. If you encounter errors about the Ubuntu release not being found and you previously configured an Ubuntu jellyfin.list file, please follow these steps.


Remove the old /etc/apt/sources.list.d/jellyfin.list file:

Proceed with the following section as written.

Ubuntu Repository

The Jellyfin team provides an Ubuntu repository for installation on Ubuntu Xenial, Bionic, Cosmic, Disco, Eoan, and Focal. Supported architectures are amd64, arm64, and armhf. Only amd64 is supported on Ubuntu Xenial.

Note

Microsoft does not provide a .NET for 32-bit x86 Linux systems, and hence Jellyfin is not supported on the i386 architecture.

Install HTTPS transport for APT if you haven't already:

Enable the Universe repository to obtain all the FFmpeg dependencies:

Note

If the above command fails, you will need to install the software-properties-common package. This can be done with the following command: sudo apt-get install software-properties-common

Import the GPG signing key (signed by the Jellyfin Team):

Add a repository configuration at /etc/apt/sources.list.d/jellyfin.list:

Note

Supported releases are xenial, bionic, cosmic, disco, eoan, and focal.
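The Ubuntu support rules have one wrinkle the Debian ones lack: Xenial is amd64-only. A Python sketch that encodes the supported releases and architectures listed above and builds the repository line; the URL `https://repo.jellyfin.org/ubuntu` and the `main` component are assumptions to verify against the current Jellyfin docs, and both function names are hypothetical.

```python
UBUNTU_RELEASES = ("xenial", "bionic", "cosmic", "disco", "eoan", "focal")
UBUNTU_ARCHES = ("amd64", "arm64", "armhf")


def ubuntu_repo_supported(codename: str, arch: str) -> bool:
    """Apply the listed support rules for the Jellyfin Ubuntu repository."""
    if codename not in UBUNTU_RELEASES or arch not in UBUNTU_ARCHES:
        return False  # also covers i386, which has no .NET build
    if codename == "xenial":
        return arch == "amd64"  # only amd64 is supported on Xenial
    return True


def ubuntu_sources_line(codename: str, arch: str) -> str:
    """Build the line for /etc/apt/sources.list.d/jellyfin.list."""
    if not ubuntu_repo_supported(codename, arch):
        raise ValueError(f"{codename}/{arch} is not in the supported list")
    return f"deb [arch={arch}] https://repo.jellyfin.org/ubuntu {codename} main"
```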

Update APT repositories:

Install Jellyfin:

Manage the Jellyfin system service with your tool of choice:

Ubuntu Packages

Raw Ubuntu packages, including old versions, are available here.

Note

The repository is the preferred way to install Jellyfin on Ubuntu, as it contains several dependencies as well.


Enable the Universe repository to obtain all the FFmpeg dependencies, and update repositories:

Download the desired jellyfin and jellyfin-ffmpeg .deb packages from the repository.

Install the required dependencies:

Install the downloaded .deb packages:

Use apt to install any missing dependencies:

Manage the Jellyfin system service with your tool of choice:

Migrating native Debuntu install to docker

It's possible to map your local installation's files to the official docker image.

Note

You need to have exactly matching paths for your files inside the docker container! This means that if your media is stored at /media/raid/, this path needs to be accessible at /media/raid/ inside the docker container too; the configurations below include examples.

To guarantee proper permissions, get the uid and gid of your local jellyfin user and jellyfin group by running the following command:

You need to replace the <uid>:<gid> placeholder below with the correct values.
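The same lookup (the numbers `id jellyfin` prints) and the "identical paths" rule can be combined in a small Python sketch that assembles `docker run` arguments. It assumes the official `jellyfin/jellyfin` image, a local user and group both named `jellyfin`, and `/media/raid` as the media path; the helper name is hypothetical, and you would add your own config and cache paths to `host_paths`.

```python
import grp
import pwd
from typing import List, Sequence


def jellyfin_docker_args(user: str = "jellyfin", group: str = "jellyfin",
                         host_paths: Sequence[str] = ("/media/raid",),
                         image: str = "jellyfin/jellyfin") -> List[str]:
    """docker run arguments that reuse the native install's uid:gid and identical paths."""
    uid = pwd.getpwnam(user).pw_uid   # raises KeyError if the user does not exist
    gid = grp.getgrnam(group).gr_gid  # raises KeyError if the group does not exist
    args = ["docker", "run", "-d", "--user", f"{uid}:{gid}"]
    for path in host_paths:
        # Same path on both sides of the mapping, so existing library entries still resolve
        args += ["--volume", f"{path}:{path}"]
    args.append(image)
    return args
```

Running the container as the native install's uid:gid means files it writes keep the same ownership, so you can switch back to the native package without a permissions cleanup.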

Using docker

Using docker-compose



V1.0 - Original Release
V1.1 - Added more to "So what exactly do I need to buy?" to clarify the details if you already own a PC that you'd use for Plex.
V1.2 - Updated RAID/Backup section.

Here’s what you need for a Plex server for local content (assuming a “good” playback device) and for some remote streaming (assuming a “good” internet upload rate). I’ll go over exactly what this means, what you get, and what you need to buy. I’ll also go over which spending is unnecessary, and why some people spend anyway.

Mission statement: What do I WANT to do? The objective is to run a Plex server, to be able to identify and catalog the Plex content (Radarr and Sonarr are typical), and to be able to download the Plex content (NZBGet is typical), and honestly, that’s about all you need. Tautulli is commonly used for playback history (What movies is Joe watching, and did transcoding work for Joe? Who has watched XYZ show in the last week?), but Plex now has excellent data on playback transcoding details and on basic media play history, and the web GUI does a great job of showing you exactly which part of your system (CPU, disk, RAM) is 'stressed' or busy.

So don’t I need a supermegaCPU? Or two? More is better, right? And a Xeon is even better, right? And a big, noisy server box too? If you don’t plan to upload (share content remotely) to others, and if you have modern playback devices like an nVidia Shield, a reasonably modern Roku (2014 or newer), an AppleTV 4 or newer, or an Amazon Fire Stick 4K or v2 of the non-4K model (or better), you literally don’t need anything more than a cheap ARM-class CPU or an old Intel Celeron-class CPU running Linux and Plex, sharing a USB drive of your movies. That said, sometimes you might need to “transcode” (change the format of your media) in order to play subtitles, serve to a new device, serve to a device running a web browser rather than an AppleTV/FireStick, etc.
Modern Intel hardware, or a modern nVidia video card, can do this very, very efficiently. So efficiently, in fact, that it’s mostly foolish to run older hardware or try to brute-force transcoding with Plex nowadays: either get a cheap nVidia 1050/1050Ti graphics card for your Plex server, or get a modern Intel CPU with integrated graphics (an i3-9100 is a great low-end choice). Sure, you could get a superfast AMD CPU for $300, but why? Save your money and your electricity! Put your expensive CPU on your desk, and keep the 1050/low-end Intel CPU on the cheap Plex server where it belongs.

What else do I need? You need about 4GB of RAM, a 240GB SSD for the C: drive, and then, for the D: (Media) drive, enough space to hold all of your media. A cheap 10TB 5400 RPM USB hard drive is $160 these days and is a good starter setup. If you ever want to get fancy, add another 10TB USB drive later and split “Movies” and “TV” across the two drives.

Don’t I need RAID5 with 5-6 disks? Redundancy! Backups! Not really. You can get all of this content again with a simple download, so why bother with complicated setups? Keep it simple, keep it cheap. * There is a bit of risk in this: if you lose the USB hard drive (theft, dropping it, the dog chews it up, etc.), your content is gone forever. Only you can decide whether having copies of your content is worth another backup disk. Windows 10 comes with Storage Spaces for free, which can help those wanting redundancy (but note that redundancy isn't a backup): https://ift.tt/2PQTNbP has a brief primer on the topic. For an actual backup, Macrium Reflect Free is a good free backup product: https://ift.tt/2YCcC6I

So what exactly do I need to buy? A cheap i3-8100, i3-9100, i5-8400, or i5-9400-based system is really all you need. Anything more is typically wasted. If you have 4GB or 8GB of RAM, you’re all set. Start with that. Buy PlexPass. Turn on hardware-based transcoding in Plex.
Then you’ll use Intel’s graphics acceleration for transcoding your content, and for most people that will be plenty of horsepower. If you need more (and most won’t!), an nVidia 1050 or 1050Ti will handle 7-15 transcodes and costs $80-$120 these days; the “driver patch” required to make it handle more than 2 transcodes should take about 5 minutes to put in place. Then you just need a 10TB USB drive, and you’re literally done! And the best part: if you already own a PC, even an old one, you might consider just buying an nVidia 1050/1050Ti card (for transcoding), putting it into that PC, and then... you're done! Easy, fast, and affordable, for what just a few years ago would have been considered high-end workstation performance.

So now I want to stream to my 10 best friends remotely. What do I do? This setup will literally do that. However, you’ll likely want to cap your upload rate per client at 4Mbps or so, and you’ll want a pretty good internet upload rate (number of clients x 4Mbps is a good start). Given that your friends won’t all be watching at the same time, a 20Mbps upload rate is a good start for trying out remote sharing. Speedtest.net gives a good estimate of your speeds. Then adjust your settings to suit your setup.

So now I want to stream to 10 AppleTVs in my house. This setup will easily do that. You’ll likely want to wire the server to your router (gigabit), since wireless may strain a bit with so many concurrent streams, but the server itself can easily handle that and more. Since AppleTV plays most content natively (without transcoding), you won’t be stressing the CPU/GPU at all.

So now I want to stream to 10 Chrome web browser users in my house. This setup will do that. Transcoding will be required, since Chrome can't play back some common formats, but Plex handles all the heavy lifting. Make sure your Wi-Fi network is up to the task.

What about 4K? I want to transcode 4K.
Transcoding 4K doesn’t work well because HDR (a technology used to make 4K look fantastic) doesn’t transcode well (yet). This server can easily share 4K content locally (assuming your network can handle it), but to stream and share remotely, either your internet upload rate must be very, very fast (to avoid transcoding!) or... this won’t work. HDR transcoding is something Plex still has to work on and solve; for now there is no good solution. So, for now, if you must stream something to others remotely, get both the 4K version and the 1080p version: 4K for yourself, 1080p for others.

I still don’t understand transcoding. Transcoding takes media in one format and puts it into another format; that’s all. If your media is in format XYZ and your AppleTV doesn’t understand that format, Plex converts it so your media becomes playable on the AppleTV. Fortunately, there’s very little that isn’t directly playable on an AppleTV, modern Roku, nVidia Shield, or Amazon FireStick, so transcoding is very rarely needed for local playback (on your local network). Transcoding is usually required when you share your media with others on other (remote) networks, where, due to limited bandwidth, you must compress (shrink) your media so you can send it over the internet to your friend’s AppleTV (or other Plex playback device). Hence the need for transcoding, and for a modern Intel CPU or an nVidia 1050/1050Ti.

Does more RAM help Plex? Plex doesn’t need much RAM. 4GB is fine.

Does having an SSD to store my TV/movie media help? Not really. Even with 10 clients, you just don’t need it. Plex buffering and playback are efficient, and superfast storage generally isn’t required.

I have 50GB Blu-ray MKVs! Everything you’re saying is wrong! Yes, in some scenarios, with massive, high-bandwidth files, you will sometimes need to transcode more often than I’m indicating here, and sometimes you will need more I/O or bandwidth.
If you are the type who MUST have the best quality, and you MUST have 50GB 3-hour movie files (rather than 8-15GB movie files), you may want to check your server's I/O when you get 10 concurrent shares/movie playbacks going at once. For the typical user with one or two local playbacks and 2-3 remote streams, a single USB drive setup is easy, economical, and plenty fast.

I say that lightly; in other words, in the rare case where you have an unusual scenario with super-high-bandwidth files, you might sometimes have an issue. So adjust accordingly. This guide is made for the 99% who don’t stress the server that much, with that many concurrent connections.

Why do some people buy 2012-era hardware to run Plex? I've done this myself. Until recently, Plex hardware-assisted transcoding didn't work very well, but now, with good support for nVidia hardware and Intel iGPU hardware, and with the introduction of the nVidia Plex transcoding patch, there is no reason to do anything else. That said, 2012-era hardware is nice because it tends to be very cheap, and some people love running old hardware because "it's a server; it's tough!"; either of those reasons might be enough to appeal to you. Bear in mind that over the past 7 years, hardware has gotten quieter, far less power-hungry, and far simpler to support. My advice: avoid the old stuff unless you've already bought it. If you already have, add an nVidia 1050, consider adding a USB3 PCIe/PCI card, add the 10TB USB drive, and call it a day.

But UNRAID! Linux Docker! New Technologies! If you're an IT technologist, or you do this for a living, those are great choices. If you just want a Plex server, keep it simple, energy efficient, and fast and easy to troubleshoot.

via /r/PleX
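The bandwidth and disk figures in the post above come down to simple arithmetic, sketched here in Python. The assumptions are loudly simplistic: decimal units (1 GB = 10^9 bytes), the post's 4Mbps per-client cap, and average bitrates only. Real files peak above their average, and ten simultaneous readers on one 5400 RPM drive also cost seek time, so treat the results as a lower bound rather than a benchmark. The function names are hypothetical.

```python
import math


def upload_needed_mbps(concurrent_streams: int, per_stream_mbps: float = 4.0) -> float:
    """The post's rule of thumb: number of remote clients x per-client cap."""
    return concurrent_streams * per_stream_mbps


def max_remote_streams(upload_mbps: float, per_stream_mbps: float = 4.0) -> int:
    """How many capped streams fit in a given upload rate."""
    return math.floor(upload_mbps / per_stream_mbps)


def avg_bitrate_mbps(file_gb: float, hours: float) -> float:
    """Average bitrate of a file in Mbps, using decimal units (1 GB = 10**9 bytes)."""
    return file_gb * 1e9 * 8 / (hours * 3600) / 1e6


def disk_read_mb_s(file_gb: float, hours: float, streams: int) -> float:
    """Aggregate read rate in MB/s for `streams` simultaneous playbacks of such files."""
    return avg_bitrate_mbps(file_gb, hours) * streams / 8


if __name__ == "__main__":
    print(upload_needed_mbps(10))            # all 10 friends at once
    print(max_remote_streams(20))            # the suggested 20Mbps starter upload
    print(round(avg_bitrate_mbps(50, 3), 1))  # one 50GB, 3-hour movie
    print(round(disk_read_mb_s(50, 3, 10), 1))  # ten of them at once
```

Worked through: 10 remote clients at 4Mbps need 40Mbps, so a 20Mbps upload covers 5 simultaneous streams, which is why it works when friends aren't all watching at once. A 50GB, 3-hour file averages about 37Mbps, so 10 concurrent playbacks ask the drive for roughly 46MB/s of reads before any seeking overhead.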
