

Let’s talk about the Veil

I’m gonna start out by saying this is fully theorizing: complete spinfoil, little evidence but big dreams. This post is not going to be about the campaign; I have a different post on my blog talking about it if you really want to know. As you might expect, huge spoilers for the campaign. Alright, it’s lore time babey!

Alright so the Veil is super mysterious. As mentioned in the campaign, there is very little we understand about the Veil. Osiris has never seen anything like it, the Neomuni really just use it as a battery. We really only know three things:

The Witness used it to open a portal (of some sort).

It interacts with both the Light and the Darkness.

It powers Neomuna and specifically is integral to the operation of the CloudArk.

That is really not a lot to go on. However, I do think it is actually enough to figure out what is going on. I believe that the Veil acts as a gateway between the Light and Darkness, or more specifically the physical and the metaphysical. Now that is a pretty wild claim but here’s why.

During the campaign, Osiris muses on the nature of Light and Dark. Specifically, he mentions that the Light deals with the physical: causing things to grow, letting us bend the rules of physics, etc. On the other side, the Darkness deals with the metaphysical: dreams and nightmares (Nezarec alert!), feelings of control, emotions, etc. He notes that these two forces are fundamentally incompatible. Frankly, I disagree, I think this is a case of Osiris purposely coming to the incorrect conclusion to have a reveal in Final Shape but that’s a different theory entirely. For now, the important thing to note is that there are a lot of questions around what happens when Light and Dark collide (Strand, Tormentors, Savathun, both “sides” using both powers, etc).

Now let’s look at the Ishtar Collective facility and Neomuna. In the final mission, Nimbus notes that a device we see is a CloudArk prototype. Later we see some wacky chairs that probably were part of that project. I cannot fully remember the details, but I recall the implication that the Veil is used in part of the upload process to the CloudArk. Here, we see minds crossing into a digital space, disconnected from their physical body. They go from entirely physical to entirely mental.

Okay Witness time. We see the Witness carve a giant triangle into the Traveler and then open a Veil portal, passing beyond it. I am reading this as the Witness has entered the Traveler. I’ll come back to that. But again, the Witness has passed into an unknown space, Darkness goes into a space that is ostensibly defined by the Light. Furthermore, the Radial Mast is specifically identified as a source of Light during the campaign and our ghost (a creation of the Light) is used to channel the Veil’s power with Osiris telling us to get our ghost away from the Veil. Again, the convergence of Light and Dark.

Alright let’s bring it all together. In all of these cases, we see the Veil being used as a sort of translation layer between physical and nonphysical states or more specifically, Light and Dark. I think the Veil allows for Light and Darkness to coexist in a way that is otherwise impossible. This is why Strand is present on Neomuna (our Light powers getting reflected into a metaphysical state), this is why our ghost can be semi-hijacked by the Witness, this is why Neomuni can be uploaded to the CloudArk which really feels a lot like the VexNet, and this is why the Radial Mast / a source of Light (us) was needed for the Witness to interact with the Veil. It plays really nicely on the phrase “beyond the Veil” which, while often used in conjunction with death, actually just refers to a mysterious division between worlds. Again, plays really nicely with the ideas I mention here about moving between worlds, and moving between the domains of Light and Dark.

So that begs the question: what the heck is going on with the Witness and the Traveler? Well take a trip with me into deep theory territory here cause I’m moving from little evidence to zero evidence. In the final cutscene, we see the Witness send its Pyramid ships into the portal before following. We know a couple of things. According to Osiris, the Light deals with the physical. We also know the Pyramid ships respond, at least in part, to our thoughts and desires. What if the inside of the Traveler is a massive space, able to fit in the relatively small Traveler ball Tardis style because of the physics-warping nature of the Light, like the thought-warping nature of the Darkness in the Pyramids? The Witness needs some way to enter that space, but literally cannot because it is such a channeller of the Darkness. So, to get its ships and forces inside, it needs a translation layer. Something that will allow it to enter this space and allow the Darkness and Light to mix. That’s why it needs the Veil, to pass beyond, to actually get to the heart of whatever the Traveler is, whether that’s a Witness-like creature or something more abstract.

Also, I am wondering if the reason why nothing we do gets past the Veil is because we are all physical and Light based. Would explain why the Witness converts its ships into smokey wisps to pass through. So perhaps the answer is we have to become heavy on the Darkness side or... think our way through? Not sure about this one tbh XD. Either way, I am sure we will see more about the Veil in the lead up to Final Shape.

#destiny#destiny 2#destiny spoilers#destiny 2 spoilers#lightfall#lightfall spoilers#I mean look in reality we have no idea what the veil is and neither do the characters#and maybe the raid and nezarec will have some more info but it really is a situation of like#well maybe this 10 seconds of video content will explain a giant mystery and the entire next campaign hmmm yes#I do think the idea of it acting as a gateway is sound though given the cloudark of it all#we have the gate just gotta figure out the key#and maybe the key is some sweet loot#or a god in a gun given destiny's track record


Avatar Aang

First of all I’m so sorry that this is so late, I had a lot of thoughts to sort out and then once I had written about half of this review my computer crashed and it didn’t get saved so I had to write the whole thing again. Save your drafts people! Anyway this review was supposed to be for just the last part of the finale, but I’m kind of treating it as a review for the whole finale. So this is gonna be a long one. Full review under the cut! Enjoy!

Okay. This finale was absolutely SO GOOD I love this show so much!! And I’m sad it’s over but man what a perfect ending. I really think that ending was so great for this show, it accomplished everything it set out to do, the whole story wrapped up so nicely and every character and story arc was completed. That’s what happens when a show is planned out from the beginning, it has a good ending instead of going on for too long and eventually losing sight of the end goal and going off the rails by the end. (not naming any names but I’m sure we all know those shows.)

We completed the main objective, the goal we got at the very beginning: defeat the fire lord. And I knew for a while that it wasn’t just going to be a cut and dry, “beat the boss in a video game” type deal. There was gonna be something more to it, some kind of nuance added to make it interesting, but I didn’t know what. But oh boy did I love what we got!

I will say, all the stuff with the lion turtle was a little confusing and it kinda came out of nowhere. Like Aang needed a way to defeat Ozai without killing him, and then this giant island that is actually a talking animal/spirit thing just rolls up and gives him a way to do it. Because I guess LT (lion turtle) sensed that Aang needed help so he came and taught him that energy bending thing? Which is also a little confusing and I wish they had explained that more. I had no idea what was going on until Aang said “I took your firebending away.” But that’s not really a bad thing, I think it’s kinda cool that it was kept a mystery. I just wanna know more about it!

(Maybe if we knew beforehand that taking someone’s bending away was a thing that could happen, like they mentioned at some point “there was a legend long ago that this happened” or something and then Aang actively tries to learn it instead of just like.. absorbing the ability from LT. But idk I’m kinda just nitpicking. I still like the way it was handled in the episode)

But let’s get to what Aang actually did which was take Ozai’s bending away! I didn’t expect that at all but it was honestly so perfect. The only proper punishment for him (aside from death) is to take away all his power, and what gave him his power? Firebending. (And also control of a fascist government but firebending is a good representation for that.) Now he can sit in prison for the rest of his life while a bunch of his son’s friends insult him and he can’t do anything about it. It’s what he deserves.

And Zuko is the new fire lord now! Oh my god I’m SO proud of him!! Like he’s gonna be such a good leader, him and Aang and the others are all just kids but they literally are gonna save the whole world and reverse the effects of a hundred year long war. Good for them.

And when Zuko was talking to Ozai and he’s recognized that he never needed his father’s love,, and his banishment was actually the one good thing his father did for him,,, my GOD he has come so far :’) And now he’s getting closure about his mother which is good. I love Zuko very much I’m gonna make a separate post about him.

Then there’s Azula.. holy shit what happened to her was so sad man. I know she was a terrible person but she was FOURTEEN..she didn’t deserve this, to be raised in the environment she was, having immense pressure put on her to please her father as the firebending prodigy, to be pitted against her own brother from a young age, it’s all just tragic. I got so sad watching that last scene with her and like.. I hope she ends up okay! Idk if it’s in the comics with like, her getting better and unlearning all this toxic behavior, but I would really love to see it. Her character is so so interesting.

Also in this episode we got the scene with Sokka, Toph and Suki, and there were three characters but it was really Sokka’s time to shine. That whole time I was thinking about how far he’s come, like the scene where him and Toph are hanging off the airship about to fall (that was rly sad) but he was just so strong. The whole time. As my second fave character I am proud of him as well. (And then Suki came to save the day which was great.)

I loved the very last scene, where they’re in ba sing se and everyone’s together and happy and Sokka’s painting everybody and they’re all just having a good time.. and we see Aang and Katara with the beautiful sunset (which I did end up making my desktop wallpaper) and they kiss and AAH! that’s what I LIKE TO SEE!! It was just,, so peaceful? It gave me such good vibes and was such a good note to end on, I was crying by then so yeah. Good show. 10/10.

Uh there’s a lot of other stuff I want to talk about but that’s probably it for now.. I really want to make posts about each of the individual characters and what I thought of them and their arcs and stuff so you can hopefully expect that soon. And I’m also gonna answer some asks so feel free to send those! Congrats if you read this whole thing, you get a special bonus point. And uh, yeah thanks for reading my liveblog!! It’s been real fun :) and don’t worry I’m totally gonna post more content after this as well. See ya then!


TerraMythos' 2020 Reading Challenge - Book 22 of 26

Title: House of Leaves (2000)

Author: Mark Z. Danielewski

Genre/Tags: Horror, Fiction, Metafiction, Weird, First-Person, Third-Person, Unreliable Narrator

Rating: 6/10

Date Began: 7/28/2020

Date Finished: 8/09/2020

House of Leaves follows two narrative threads. One is the story of Johnny Truant, a burnout in his mid-twenties who finds a giant manuscript written by a deceased, blind hermit named Zampanò. The second is said manuscript -- The Navidson Record -- a pseudo-academic analysis of a found-footage horror film that doesn’t seem to exist. In it, Pulitzer Prize-winning photojournalist Will Navidson moves into a suburban home in Virginia with his partner Karen and their two children. Navidson soon makes the uncomfortable discovery that his new house is bigger on the inside than it is on the outside. Over time he discovers more oddities -- a closet that wasn’t there before, and eventually a door that leads into an impossibly vast, dark series of rooms and hallways.

While Johnny grows more obsessed with the work, his life begins to take a turn for the worse, as told in the footnotes of The Navidson Record. At the same time, the mysteries of the impossible, sinister house on Ash Tree Lane continue to deepen.

To get a better idea try this: focus on these words, and whatever you do don’t let your eyes wander past the perimeter of this page. Now imagine just beyond your peripheral vision, maybe behind you, maybe to the side of you, maybe even in front of you, but right where you can’t see it, something is quietly closing in on you, so quiet in fact you can only hear it as silence. Find those pockets without sound. That’s where it is. Right at this moment. But don’t look. Keep your eyes here. Now take a deep breath. Go ahead and take an even deeper one. Only this time as you start to exhale try to imagine how fast it will happen, how hard it’s gonna hit you, how many times it will stab your jugular with its teeth or are they nails? don’t worry, that particular detail doesn’t matter, because before you have time to even process that you should be moving, you should be running, you should at the very least be flinging up your arms--you sure as hell should be getting rid of this book-- you won’t have time to even scream.

Don’t look.

I didn’t.

Of course I looked.

Some story spoilers under the cut.

Whoo boy do I feel torn on this one. House of Leaves contains some really intriguing ideas, and when it’s done right, it’s some of the best stuff out there. Unfortunately, there are also several questionable choices and narrative decisions that, for me, tarnish the overall experience. It’s certainly an interesting read, even if the whole is ultimately less than the sum of its parts.

First of all, I can see why people don’t like this book, or give up on it early (for me this was attempt number three). Despite an interesting concept and framing device, the first third or so of the book is pretty boring. Johnny is just not an interesting character. He does a lot of drugs and has a lot of (pretty unpleasant) sex and... that’s pretty much it, at least at the beginning. There’s occasional horror sections that are more interesting, where Johnny’s convinced he’s being hunted by something, but they’re few and far between. Meanwhile, the story in The Navidson Record seems content to focus on the relationship issues between two affluent suburbanites rather than the much more interesting, physically impossible house they live in. The early “exploration” sections are a little bit better, but overall I feel the opening act neglects the interesting premise.

However, unlike many, I love the gimmick. The academic presentation of the Navidson story is replete with extensive (fake) footnotes, and there’s tons of self-indulgent rambling in both stories. I personally find it hilarious; it’s an intentionally dense parody of modern academic writing. Readers will note early that the typographical format is nonstandard, with the multiple concurrent stories denoted by different typefaces, certain words in color, footnotes within footnotes, etc. House of Leaves eventually goes off the chain with this concept, gracing us with pages that look like (minor spoilers) this or this. This leads into the best part of this book, namely...

Its visual presentation! House of Leaves excels in conveying story and feeling through formatting decisions. The first picture I linked is one of many like it in a chapter about labyrinths. And reading it feels like navigating a labyrinth! It features a key “story”, but also daunting, multi-page lists of irrelevant names, buildings, architectural terms, etc. There are footnotes that don’t exist, then footnote citations that don’t seem to exist until one finds them later in the chapter. All this while physically turning the book or even grabbing a mirror to read certain passages. In short, it feels like navigating the twists, turns, and dead ends of a labyrinth. And that’s just one example -- other chapters utilize placement of the text to show where a character is in relation to others, what kind of things are happening around them, and so on. One chapter near the end features a square of text that gets progressively smaller as one turns the pages, which mirrors the claustrophobic feel of the narrative events. This is the coolest shit to me; I adore when a work utilizes its format to convey certain story elements. I usually see this in poetry and video games, but this is the first time I’ve seen it done so well in long-form fiction. City of Saints and Madmen and Shriek: An Afterword by Jeff VanderMeer, both of which I reviewed earlier this year, do something similar, and are clearly inspired by House of Leaves in more ways than one.

And yes, the story does get a little better, though it never wows me. The central horror story is not overtly scary, but eeriness suffices, and I have a soft spot for architectural horror. Even Johnny and the Navidsons become more interesting characters over time. For example, I find Karen pretty annoying and generic for most of the book, but her development in later chapters makes her much more interesting. While I question the practical need for Johnny’s frame story, it does become more engaging as he descends into paranoia and madness.

So why the relatively low rating? Well... as I alluded to earlier, there’s some questionable stuff in House of Leaves that leaves (...hah?) a bad taste in my mouth. The first is a heavy focus on sexual violence against women. I did some extensive thinking on this throughout my read, but I just cannot find a valid reason for it. The subject feels thrown in for pure shock value, and especially from a male author, it seems tacky and voyeuristic. If it came up once or twice I’d probably be able to stomach this more easily, but it’s persistent throughout the story, and doesn’t contribute anything to the plot or horror (not that that would really make it better). I’m not saying books can’t have that content, but it’s just not explored in any meaningful way, and it feels cheap and shitty to throw in something that traumatizing just to shock the audience. It’s like a bad jump scare but worse on every level. There’s even a part near the end written in code, which I took the time to decode, only to discover it’s yet another example of this. Like, really, dude?

Second, this book’s portrayal of mental illness is not great. (major spoilers for Johnny’s arc.) One of the main things about Johnny’s story is he’s an unreliable narrator. From the outset, Johnny has occasional passages that can either be interpreted as genuine horror, or delusional breaks from reality. Reality vs unreality is a core theme throughout both stories. Is The Navidson Record real despite all evidence to the contrary? Is it real as in “is the film an actual thing” or “the events of the film are an actual thing”? and so on and so forth. Johnny’s sections mirror this; he’ll describe certain events, then later state they didn’t happen, contradict himself, or even describe a traumatic event through a made-up story. Eventually, the reader figures out parts of Johnny’s actual backstory, namely that when he was a small child, his mother was institutionalized for violent schizophrenia. Perhaps you can see where this is going...

Schizophrenia-as-horror is ridiculously overdone. But it also demonizes mental illness, and schizophrenia in particular, in a way that is actively harmful. Don’t misunderstand me, horror can be a great way to explore mental illness, but when it’s done wrong? Woof. Unfortunately House of Leaves doesn’t do it justice. While it avoids some cliches, it equates the horror elements of Johnny’s story to the emergence of his latent schizophrenia. This isn’t outwardly stated, and there are multiple interpretations of most of the story, but in lieu of solid and provable horror, it’s the most reasonable and consistent explanation. There’s also an emphasis on violent outbursts related to schizophrenia, which just isn’t an accurate portrayal of the condition.

To Danielewski’s credit, it’s not entirely black and white. We do see how Johnny’s descent into paranoia negatively affects his life and interpersonal relationships. There’s a bonus section where we see all the letters Johnny’s mother wrote him while in the mental hospital, and we can see her love and compassion for him in parallel to the mental illness. But the experimental typographical style returns here to depict just how “scary” schizophrenia is, and that comes off as tacky to me. I think this is probably an example of a piece of media not aging well (after all, this book just turned 20), and there’s been a definite move away from this kind of thing in horror, but that doesn’t change the impression it leaves. For a book as supposedly original/groundbreaking as this, defaulting to standard bad horror tropes is disappointing. And using “it was schizophrenia all along” to explain the horror elements in Johnny’s story feels like a cop-out. I wish there was more mystery here, or alternate interpretations that actually make sense.

Overall The Navidson Record part of the story feels more satisfying. I actually like that there isn’t a direct explanation for everything that happens. It feels like a more genuine horror story, regardless of whether you interpret it as a work of fiction within the story or not. There’s evidence for both. Part of me wishes the book had ended when this story ends (it doesn’t), or that the framing device with Johnny was absent, or something along those lines. Oh well-- this is the story we got, for better or worse.

I don’t regret reading House of Leaves, and it’s certainly impressive for a debut novel. If you’re looking for a horror-flavored work of metafiction, it’s a valid place to start. I think the experimental style is a genuine treat to read, and perhaps the negative aspects won’t hit you as hard as they did me. But I can definitely see why this book is controversial.


So I just beat Yume Nikki -Dream Diary- 100%

I’m gonna write this as an in-depth review I guess. So I got done just recently discussing it with an old friend, the very same friend who roughly a decade ago showed me the original game. We have pretty polar opposite opinions of the game and I’m seeing that seems to be the trend with people who have played it so far. This isn’t a call out post or “Your opinion is wrong and mine is right” bullshit. I just want to explain why I liked it and maybe help some people see the game in a different light.

First things first. I want to preface, if this even gets read, that nothing will ever top or match Yume Nikki, the RPG Maker game that has gained a cult following. Even Yume Nikki on a second playthrough will never feel like Yume Nikki on a person’s first playthrough in my opinion, granted that is if they enjoyed it. Before this re-imagining came out there were mostly 2 kinds of people and barely anyone in between: people who loved it for its entire concept and execution, and people who thought it was the most boring chore in the world. There is barely anyone I know or have met who’s in between, who is just like “yeah it’s ok I guess.” Just because this re-imagining came out does not mean the original is now bad or doesn’t exist, and I will respect your opinion if you think one is better than the other, because it’s an opinion, and they aren’t the same.

Below this is the Steam Store page, I want anyone reading this to read it and read it again.

“Yume Nikki has been hailed as one of the greatest (and most controversial) games ever created with RPG Maker. The new YUMENIKKI -DREAM DIARY- is not a remake, but a full reimagining of the original―reconstructed and enhanced using elements and styles of modern indie games.”

If you read this and thought this meant that this is going to be the same game, you went in with your expectations too high. One of the biggest reasons Yume Nikki was so beloved was how most people went into it: THEY KNEW NOTHING. Hell, I knew nothing; I got like 2 sentences and like a 5 minute gameplay video of the game and that sold me. Then these people were to learn, after diving into this strange game that only told you things with visuals, that the creator had disappeared. For years even. So to do both games right I want to break them down into some basic game design elements to the best of my ability: Gameplay, Soundtrack, Story, Visuals, and Atmosphere/Presentation. Of course I’m going to reference both games for each of these, because that’s what everyone else is doing.

Gameplay. Well, there really wasn’t much of a game to play in Yume Nikki the RPG Maker game. I’m sorry, I love Yume Nikki, but there’s not a lot of interactivity. It’s more of an experience, a long giant question of how and why did this game get to this point? If you were at all like me, you kept playing to answer these questions and ultimately you either didn’t get an answer and were happy with it or you found your own answer, which if you ask me is part of the magic of that first playthrough. But as a free 2D RPG Maker game with no price of admission, it was an experience and just that, AN EXPERIENCE. As for Yume Nikki -Dream Diary- the 3D platformer, there’s actually a game to be played, which understandably makes it very different than the RPG Maker original. But this is a re-imagining; it’s not fair to directly compare the 2. They are in completely different mediums and special in their own ways. In essence, whether you agree or not, both games at their core are about exploring an experience. If one having gameplay elements (which if you ask me were fairly well tested but not perfect) makes it less of an experience and was frustrating, then I don’t think you remember some of the frustrating nonsense that the RPG Maker version had, such as navigating NES World, or locating the Bike, which for most of the playthrough you were guaranteed to use because the normal movement speed was slow as shit, and that’s usually what stopped a bunch of people from getting farther into it. But I say this with pride: it was part of the experience, it was part of the fun, and by all technicality it was its own form of rudimentary puzzles and gameplay. Now yes, I hear some of you die-hard fans cry that there’s not as many effects and not as many doors and worlds, not as many themes, and they took out so much.
I am sad to say I would miss some of the things they took out, that is, if the original game had been wiped off the face of the planet with this game’s release, but it wasn’t. The original is still there as it always was. Because it can’t be replaced. It won’t ever be replaced; people have tried. So onto the point, the gameplay of the 3D one. It’s a horror platformer with puzzle solving and a few jumpscares I guess. You are sitting here reading this, I hope, going wow it’s that easy to categorize? Well yeah, sorry to say guys, it’s 2018 not 2004. In the time between the first RPG Maker classic, which I love to death, and this newer retake of the very concept of Yume Nikki in 2018, we have had 3 different presidents, saw the rise and fall of many platforms like Vine, went through not 1, not 2 but 3 generations of video game consoles, got 10 versions of the iPhone, like 8 versions of Samsung’s phones, and a bunch of other stuff. Needless to say, times change, information is easier to access, and we have gotten older and more analytical when it comes to the things we do as hobbies or otherwise. So let’s just look at the word re-imagining at face value and by definition:

reinterpret (an event, work of art, etc.) imaginatively; rethink.

Is the new Yume Nikki a reinterpretation of the RPG classic? Absolutely, it’s a different take on what the game was. Key word: different. Problem is, in 2018 things are easier to find than in 2004, communities are larger, people are older, and things in general are going to be less surprising. So is the new Yume Nikki, the 3D platformer, a well thought out game from a gameplay standpoint? Yes it is. It has its bugs, which is unfortunate, but even games that got GAME OF THE YEAR were horrible buggy messes when they came out and those were backed by Triple A developers, COUGH COUGH FALLOUT 3 EVEN THOUGH I LOVE YOU YOU ARE A MESS COUGH COUGH. Moral of the story for the gameplay: it’s different, yes, but that doesn’t make it bad, just not the same. If you can effectively get from beginning to end regardless of how the journey goes, the game did what it needed to do. If you felt obligated to finish -Dream Diary-, that was nostalgia and that almost-need to feel the magic of the first playthrough of the RPG Maker classic, meaning you aren’t taking Yume Nikki -Dream Diary- as its own game; you are trying to take it as a replacement for a beloved classic.

Now that I’m done with that portion I guess, hopefully my point was more or less digestible and hopefully didn’t come off as “if you disagree I hate you,” cause that’s not my intent. If you don’t like it, you don’t like it; I just want to give a separate perspective. The Soundtrack. I think the soundtrack in both games is just weird and beautiful and bizarre and conveys messages on a spectrum going from uncomfortable to serene to almost intimidating. I’m no music expert or major or whatever, but the music in Yume Nikki -Dream Diary- not only was very good and did what it was meant to do in my opinion, but was an incredible nod to the RPG Maker classic, featuring a lot of remastered tracks from the original which I think portrayed similar emotions to what I felt from the first game. I don’t have much else to say on the soundtrack; its timing and consistency felt as good as the original in its own special way but should not be interpreted as the same.

So the next thing I want to bring up is story. Now if you are a fan of the RPG Maker classic, you know as well as I do that the story is ¯\_(ツ)_/¯; it’s almost entirely up to interpretation. The story was what you thought it was; you just get kind of a beginning and kind of an ending. People who wanted a classical game with a story with a beginning, middle and end would not really even touch this game. Cause it doesn’t hold your hand, but not in a “is this the Dark Souls of RPG Maker games?” kind of way. Its entire conception, how it came to be, how the game played, how the game ends and how you get an ending to begin with, and the mysterious creator and their disappearance are all ultimately part of Yume Nikki’s story in my opinion. The time the game came out, how little information about the game was known, the fact that YouTube was still young (fuck me, I was using SKYPE when I played this game in like 2008): it was the perfect storm, but only because of the game’s story outside of the game as well as inside. It was a mess, but it was such an amazing mess that was so hard to describe, and time and time and time again the only thing people could really say is “you just have to play it, I can’t explain it without ruining it.” That was also a part of the game’s story if you ask me. It was so strange and so meta but it always made people say the same thing. Now 14 years later, the developer is back, is older, sees his own mistakes better than any of us ever could, and I know if anyone reads this, some of you are artists or content creators or game designers and you know EXACTLY what I’m talking about, being hypercritical of your own work. And now he has help, a team of developers, and even though he worked side by side with these people, of course there’s going to be a disconnect, something lost in translation. That’s exactly what fangames are: an interpretation of the original.
So yes sadly there’s a bit more going on that’s coherent now but it’s 14 years later being lead by a guy who knows his own mistakes for a game he made alone, which is an achievement yes, but he has seen and allowed to be published in the very beginning of this new game a nod at where his last game left off. Meaning of course he acknowledges what he made and knows people adored it, but people also hated it. It was a judgement call, and you may not like the result but I assure you it was a decision in good faith, at least that the message I got from this game. Now enough beating around the bush, this games story? It does it;s job without telling you to much and honestly I still feel like in this games case like the last one, explaining what happens loses the impact, and some of the impact is because I played the game prior. So I think it does it’s job at re-imagining the story of Yume Nikki the RPG maker game well enough.

Next is visuals. Now, as an RPG Maker game you could argue it’s not good looking, till you think about it: that one guy made all of that basically from scratch. It’s poetic, it’s awe-inspiring, that this one guy makes this strange game and it moved people so much, and all you really do is just look at things. It’s a stunning game to the eyes because you want to know what this guy could have possibly created next, and each environment is so different from the last, each effect so silly and cute or scary and gross all at the same time, making you feel these mixed emotions of joy, excitement, nausea, and tension all at once. It almost mesmerizes you into this feeling of wanting to do everything while simultaneously fearing when it’s all over and the dream ends, both literally and metaphorically. In my opinion, that is the only reason the RPG Maker game is visually such a work of art. Now for Yume Nikki -Dream Diary-, if you ask me, I think the game is breathtaking. There’s so much care put into so many little details. Yes, there are clipping issues and the animations aren’t great, but remember, Yume Nikki the RPG Maker game wasn’t perfect either. We didn’t care though; we were young, we just wanted to take whatever it was in for what it was worth. From a technical standpoint I feel like this game is stunning in the visual department, but it’s not that complicated compared to games of its time, much like the older classic. The older classic came out the same year as Half-Life 2, which was a technical marvel when it came out. Now it’s kinda dated, but regardless, that didn’t make Yume Nikki in 2004 any less of a unique experience, and I feel like that same way of thinking should be applied to this new title as best as a person can. I’m going to loosely steal a line from another reviewer: Portal 2’s biggest flaw is that it came out after Portal 1. I must have heard those words 5 years ago or something and they still stick with me.
If you look at Portal 2, it’s literally an evolved embodiment of Portal, but you already knew the concept of Portal cause it already came out, so its shock value, its rare and raw punch, is lessened because something did that already. It’s the same reason fangames and Yume Nikki -Dream Diary- won’t feel the exact same. Cause they came out afterwards.

Lastly, I wish to touch on a final point before closing this review, or I guess more of an analysis: the presentation and atmosphere. Broken down as simply as I can, both games share this. They are Surreal Horror Exploration games whose job is to seemingly immerse you in the strange world of a little Japanese girl’s dreams. That’s where the disconnect begins. Cause even though you wouldn’t think of the RPG Maker classic as a horror game, it has horror themes and the occasional jumpscare or visual for shock value. Now, as a re-imagining, does the new Yume Nikki -Dream Diary- fit the bill for an amazing, strange atmosphere just like the first? Absolutely. It tells most of its story passively: no dialogue, nothing crazy, just you and a simple platformer. But alas, it’s a platformer with tension and parts with severely more interaction than the original, causing you to feel urgency and a demand to escape or jump the next hurdle or challenge, which is not the same as the original. Which understandably is this game’s biggest flaw, if from the very beginning it said it was a remake and that the original is no longer an actual concept. If we were to look at Yume Nikki -Dream Diary- the same way as the original, where we had nothing to compare it to, it’s a lot better of a game. Its creepy atmosphere is on point, the environments are great, and the nods it makes to the older game make me happy.

All in all, I think that even with its flaws on release, Yume Nikki -Dream Diary- is a stunning love letter to the original game, written by a team of people copying down the words of the creator, ultimately dedicated to those of us who gave Yume Nikki its following, while simultaneously being something more accessible to a wider audience, so that by chance they may also play the original game to fully understand why this game exists and what purpose it serves. It’s a thank-you letter, an attempt to redo in a different sense what we have tried ourselves many times to recreate. Even though a lot of the fangames are great, they don’t feel the exact same, and neither does this. It’s fantastic, but we should all know by now that it can’t be done again; that’s why it’s special. But the creator knew this and wanted to try again, with more knowledge this time, and I respect him for it. I respect the team who worked on this game, flaws and all. Perfect or not, they wanted us to feel that special feeling one more time, and maybe it wasn’t what you wanted, but I don’t even know what I wanted.

-Katy


Text

Keep Beach City Weird! Outline & Review

The Keep Beach City Weird! book is a little paperback full of Ronaldo's revelations. It's about what you'd expect, with the nuggets of truth that we may not recognize as truth until after the fact!

This volume is a keeper for any Steven Universe fan, but not much about it will be a surprise for anyone who's paid close attention to the show. It's fun, as usual, to watch Ronaldo loudly congratulate himself for uncovering THE TRUTH (you can "hear" his voice throughout, of course), and a bunch of the references are great fun to anyone who knows what he's referring to even if the context isn't spelled out.

Fans will also be treated to some new artwork! The picture of Ronaldo imagining himself in the hand of the Temple Fusion's animated form as a trusted sidekick is particularly interesting.

And since Ronaldo is one of those broken clocks that's right twice a day, fans who know his schtick can't help but wonder what conveniently hidden bits are actually Ronaldo straight-up telling us the future.

The book opens with an intro from Ronaldo Fryman, who declares himself the weirdest thing in Beach City. (The Gems are a close second, he says.) He shares his frustration over not having been able to sell his book to mainstream publishers (which has led him to self-publish it), and warns you that ads for the family fry shop will be interspersed with the content because his dad helped fund it.

He then goes right into a "Monsters" section (decorated with art of him in his horror movie outfit and Peedee in a version of the Snerson costume).

The list includes Centipeetle, a Giant Bird, the Worm Monster, the Puffer Fish, "Giant Women from the Sea," the Watermelon Stevens, the Fusion Experiments, the Great North monsters, and the Crab Monster.

Most of these are not creatures he should have seen, and he shouldn't have pictures, but whatever, I digress. Ronaldo theorizes that the Centipeetles could be humans bitten by a radioactive centipede or centipedes bitten by a radioactive human. He discusses the story of William Dewey being saved by a giant woman whose image bears a resemblance to the Crystal Gems' temple, and then concludes, "Probably no connection." He mentions having seen Sugilite wreck the beach gym.

He brings up reports of the horrible Fusion Experiments and concludes they are zombies. He suggests the Great North monsters are a function of global warming and praises his own bravery in filming the Crab Monster for a documentary. And he also brings up his debunked "Sneeple" theory, which has been swapped for the Rock People theory. Those dang Rock People who want to kidnap Earth and bring it to the Mud Galaxy.

Ronaldo then goes into weirdness from space, detailing the Red Eye ("Vampire Spaceship"), the Rock People Space Program (based on Pearl's failed ship from "Space Race"), the Plug Robonoids (which he thinks are an attempt to play space pinball), the Hand Ship (which he claims he recognized for exactly what it was), the shirt that hit him in the head from "Shirt Club," and crop circles (like in "Joy Ride").

And then Ronaldo covers "Local Weirdness," starting with a double page about the Crystal Gems and then moving on to outline the Cat Fingers incident (with speculation on what causes "Cat Finger Fever"), the Frybo incident (was it all Ronaldo's fault for exploring tuber-based witchcraft?), the Oldest Man in the World incident (from "So Many Birthdays"), Lion sightings, Lars's fire breath, Guacola, and . . . Onion.

Next, Ronaldo gives you a tour of all the Weird Places, beginning with Funland Arcade (with a handy guide of moves you can use if you play "Teens of Rage"!), and taking you around to Race Mountain, the Abandoned Warehouse, Brooding Hill (with an included guide of things to brood about), the many-holed cliff (which he calls a Gnome City), and the Lighthouse.

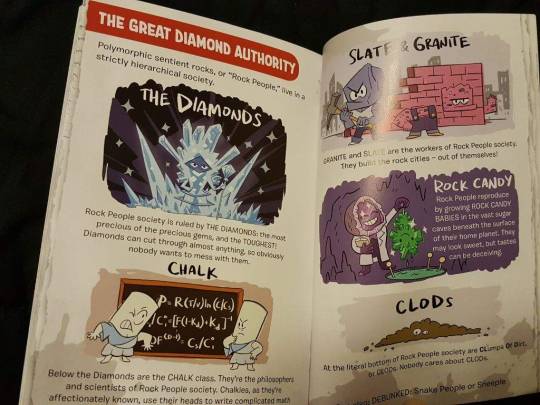

And then, the "Times It Got Really Weird" section details the flowers from Rose's moss in "Lars and the Cool Kids" (did you know they were produced by cloud seeding?), the mountain of duplicated G.U.Y.S. from "Onion Trade," the disappearing ocean from "Mirror Gem" and "Ocean Gem," the blackout incident from "Political Power," Peridot's interrupting broadcast, the Great Diamond Authority, and Cluster Quakes.

And he closes everything with a "Weirdilogue" and encourages you to keep your own town weird.

Some gems:

"Like most bad stories, this one started at a farmer's market."

"I guess that's the danger of genetically engineered food: It might punch you in the face."

"Beach Citywalk Fries. We promise to NEVER bring out that Frybo costume again."

"What's scarier than a beach ball? Well, I guess a lot of things. . . ."

Ronaldo discussing an experience that was either food poisoning or accidentally eating deep-fried wood.

The Zombie Apocalypse Flow Chart which always leads to "Lock myself in lighthouse and prepare to watch the world I know crumble before me."

Ronaldo pointing out that the shirt that hit him in the head from an extraterrestrial source should be a size extra large next time, not youth medium.

"They have a cool home base in an ancient magical temple. My base is a lighthouse that I'm not legally allowed to occupy."

After an entire paragraph outlining how gross Guacola is, Ronaldo heartily encourages you to pick up a can of it while visiting Beach City.

"[M]y favorite – Teens of Rage. Probably because I am a teen who is full of rage at a world that doesn't know how to pronounce 'manga.'"

"Race Mountain, AKA the Devil's Backbone, AKA the Devil's Laundry Chute, AKA the Devil's Poorly Planned Highway, AKA Old Man Carwreck's Road, AKA Municipal Maintenance Route 64!!!!"

"I took a picture of it, which Lars really did not want published. Check it out."

Ronaldo speculates that maybe the moon has its own moon.

And here are the things I found notable for fans!

1. The book is dedicated "For Jane, My Ohimesama." I sure hope they're on speaking terms again after what happened in "Restaurant Wars."

2. Ronaldo gives the Crystal Gems his own names, referring to them as Square Head, Princess Nose, and Purple Girl.

3. When covering the fire breath incident from "Joking Victim," Ronaldo refers to Lars as "Local sarcasm dispenser Lars Barriga." This was the first place where Lars's last name was dropped.

4. The page about Onion's weirdness details an interaction that did not happen in the show but explains one that did: The ketchup packets that he famously ran over with his scooter in "Onion Trade" were begged from Ronaldo one day. Onion offered a photo of Ronaldo in third grade in exchange. (???)

5. Funland was apparently established over a century ago under the name Frederick Ulysses Neptune's Land of Mechanical Oddities and Entertainment. The entry also mentions that it contained a future-telling robot, a reference to "Future Boy Zoltron."

6. An apparent contradiction: Ronaldo, while discussing the video game Teens of Rage, identifies with the game because he is a teen who has rage. This would make him at the oldest nineteen if he is a teen. But in the section on the Lighthouse, he refers to the Beach City Explorer Club he had in his childhood with Lars, and claims that "fifteen years later" they had an incident there with the Lighthouse Gem. If it was fifteen years later but he's no older than nineteen, that happened when he was four, and that's impossible; he was far older than four in the flashback scene with Lars.

7. In a note to cover his butt from the Labor Department, Ronaldo suggests his brother is just a really short eighteen-year-old to distract from the fact that the family business is most likely exploiting him in child labor.

8. In his bit about "Teens of Rage," Ronaldo tells you about special moves for his favorite character, Gary Sunglasses. I think this is a contradiction to the episode "Arcade Mania" because while Steven is trying to teach Garnet how to play the game, he narrates that he thinks she's "a Joe Rock kinda gal," and the same character is pictured on the choosing screen.

9. Ronaldo refers to Stevonnie as "the mysterious Racer S." while discussing the greatest race he ever saw at Race Mountain. Not sure where he got "S." from, especially since he appears to have been in attendance at Stevonnie's first appearance at the rave in "Alone Together" and presumably witnessed their unfusion.

10. Other wrestlers besides those shown in the wrestling episodes are introduced in a promo flyer. We now have After School Champion Assistant Principal Gene McCormick, Culinary Tag Team Champions Baste Face and the Iron Saucier, Women's Caped Crusader Champion Tina "Ten Fingers" Gonzales, X-Treme League Champion Presented by Guacola The Ocean Town Kid, Interdimensional Champion of the Multiverse Glossy Wayne, and Old Timey Senior League Tag Team Champions Sarsparilla Frank and the Colonial Terror.

11. When talking about how to brood properly, Ronaldo refers to his hair as his "frylocks." Ha.

12. The lighthouse was apparently constructed two hundred years ago to keep people away from Beach City, not to help the ships be guided in. This might be one of the true things in the book. Who knows?

13. In "explaining" Peridot's broadcast that happened during "Cry For Help," Ronaldo comments that Peridot called herself Peridot, but that it is actually pronounced "Peridot." (It isn't discussed exactly what he means here, but he's surely making reference to the fact that the more common pronunciation of "peridot" does not have the T pronounced, even though the show uses the version where the T IS pronounced.)

14. Ronaldo, while explaining the Great Diamond Authority to us, assigns the Diamonds' underlings into a strict hierarchical society made up of the classes Chalk, Slate & Granite, Rock Candy, and Clods.

15. Ronaldo terms the earthquakes from the beginning of Season 3 to be "Cluster Quakes" because they came in clusters. Fans will know that they actually did come from a giant mutant Gem Fusion buried in the Earth which is called the Cluster.

The book has some new art that isn't just screencaps from the show, and it's a fun ride. I recommend it, and it's cheap!

[SU Book and Comic Reviews]


Photo

How to Turn Low-Value Content Into Neatly Organized Opportunities - Next Level

Posted by jocameron

Welcome to the newest installment of our educational Next Level series! In our last post, Brian Childs offered up a beginner-level workflow to help discover your competitor’s backlinks. Today, we’re welcoming back Next Level veteran Jo Cameron to show you how to find low-quality pages on your site and decide their new fate. Read on and level up!

With an almost endless succession of Google updates fluctuating the search results, it’s pretty clear that substandard content just won’t cut it.

I know, I know — we can’t all keep up with the latest algorithm updates. We’ve got businesses to run, clients to impress, and a strong social media presence to maintain. After all, you haven’t seen a huge drop in your traffic. It’s probably OK, right?

So what’s with the nagging sensation down in the pit of your stomach? It’s not just that giant chili taco you had earlier. Maybe it’s that feeling that your content might be treading on thin ice. Maybe you watched Rand’s recent Whiteboard Friday (How to Determine if a Page is “Low Quality” in Google’s Eyes) and just don’t know where to start.

In this edition of Next Level, I’ll show you how to start identifying your low-quality pages in a few simple steps with Moz Pro’s Site Crawl. Once identified, you can decide whether to merge, shine up, or remove the content.

A quick recap of algorithm updates

The latest big fluctuations in the search results were said to be caused by King Fred: enemy of low-quality pages and champion of the people’s right to find and enjoy content of value.

Fred took the fight to affiliate sites, and low-value commercial sites were also affected.

The good news is that even if this isn’t directed at you, and you haven’t taken a hit yourself, you can still learn from this update to improve your site. After all, why not stay on the right side of the biggest index of online content in the known universe? You’ll come away with a good idea of what content is working for your site, and you may just take a ride to the top of the SERPs. Knowledge is power, after all.

Be a Pro

It’s best if we just accept that Google updates are ongoing; they happen all.the.time. But with a site audit tool in your toolkit like Moz Pro’s Site Crawl, they don’t have to keep you up at night. Our shiny new Rogerbot crawler is the new kid on the block, and it’s hungry to crawl your pages.

If you haven’t given it a try, sign up for a free trial for 30 days:

Start a free trial

If you’ve already had a free trial that has expired, write to me and I’ll give you another, just because I can.

Set up your Moz Pro campaign — it takes 5 minutes tops — and Rogerbot will be unleashed upon your site like a caffeinated spider.

Rogerbot hops from page to page following links to analyze your website. As Rogerbot hops along, a beautiful database of pages is constructed that flags issues you can use to find those laggards. What a hero!

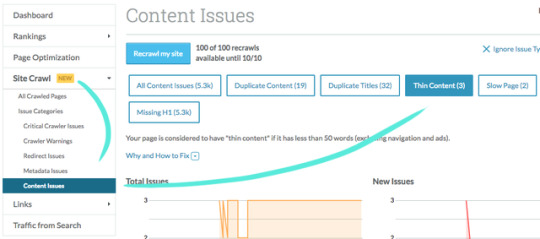

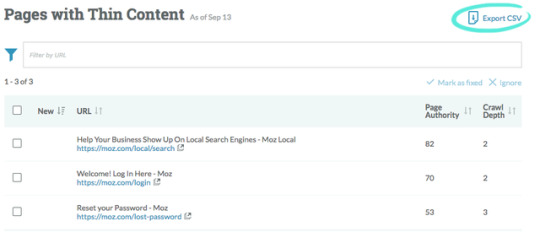

First stop: Thin content

Site Crawl > Content Issues > Thin Content

Thin content could be damaging your site. If it’s deemed to be malicious, then it could result in a penalty. Things like zero-value pages with ads or spammy doorway pages — little traps people set to funnel people to other pages — are bad news.

First off, let’s find those pages. Moz Pro Site Crawl will flag a page as “thin content” if it has fewer than 50 words (excluding navigation and ads).
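If you want a feel for what that threshold means on your own copy, here is a minimal sketch of the check. It is not how Rogerbot works internally (the post doesn't say); it only assumes you already have the page's body text with navigation and ads stripped out, and the function names are made up for illustration.

```python
THIN_CONTENT_WORD_LIMIT = 50  # the threshold quoted in the post


def count_words(body_text: str) -> int:
    """Count whitespace-separated words in copy that already has nav and ads removed."""
    return len(body_text.split())


def is_thin(body_text: str, limit: int = THIN_CONTENT_WORD_LIMIT) -> bool:
    """Return True when the copy has fewer words than the limit."""
    return count_words(body_text) < limit


if __name__ == "__main__":
    # Hypothetical copy from a login page.
    print(is_thin("Log in to your account"))
```

A page with exactly 50 words passes this check, since the rule is "fewer than 50".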

Now is a good time to familiarize yourself with Google’s Quality Guidelines. Think long and hard about whether you may be doing this, intentionally or accidentally.

You’re probably not straight-up spamming people, but you could do better and you know it. Our mantra is (repeat after me): “Does this add value for my visitors?” Well, does it?

Ok, you can stop chanting now.

For most of us, thin content is less of a penalty threat and more of an opportunity. By finding pages with thin content, you have the opportunity to figure out if they’re doing enough to serve your visitors. Pile on some Google Analytics data and start making decisions about improvements that can be made.

Using moz.com as an example, I’ve found 3 pages with thin content. Ta-da emoji!

I’m not too concerned about the login page or the password reset page. I am, however, interested to see how the local search page is performing. Maybe we can find an opportunity to help people who land on this page.

Go ahead and export your thin content pages from Moz Pro to CSV.

We can then grab some data from Google Analytics to give us an idea of how well this page is performing. You may want to look at comparing monthly data and see if there are any trends, or compare similar pages to see if improvements can be made.

I am by no means a Google Analytics expert, but I know how to get what I want. Most of the time that is, except when I have to Google it, which is probably every second week.

Firstly: Behavior > Site Content > All Pages > Paste in your URL

Pageviews - The number of times that page has been viewed, even if it’s a repeat view.

Avg. Time on Page - How long people are on your page

Bounce Rate - Single page views with no interaction

For my example page, Bounce Rate is very interesting. This page lives to be interacted with. Its only joy in life is allowing people to search for a local business in the UK, US, or Canada. It is not an informational page at all. It doesn’t provide a contact phone number or an answer to a query that may explain away a high bounce rate.

I’m going to add Pageviews and Bounce Rate to a spreadsheet so I can track this over time.

I’ve also added some keywords that I want that page to rank for to my Moz Pro Rankings. That way I can make sure I’m targeting searcher intent and driving organic traffic that is likely to convert.

I’ll also know if I’m being outranked by my competitors. How dare they, right?

As we’ve found with this local page, not all thin content is bad content. Another example may be if you have a landing page with an awesome video that’s adding value and is performing consistently well. In this case, hold off on making sweeping changes. Track the data you’re interested in; from there, you can look at making small changes and track the impact, or split test some ideas. Either way, you want to make informed, data-driven decisions.

Action to take for tracking thin content pages

Export to CSV so you can track how these pages are performing alongside GA data. Make incremental changes and track the results.
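If a spreadsheet isn't your thing, the same bookkeeping can be sketched in a few lines of Python. Big caveat: the column names (`URL`, `Word Count`, `Pageviews`, `Bounce Rate`) and the sample numbers below are placeholders I made up, not the real headers of a Moz Pro or Google Analytics export, so rename them to match whatever your files actually contain.

```python
import csv
import io


def merge_crawl_with_analytics(crawl_csv: str, analytics_csv: str) -> list[dict]:
    """Attach analytics metrics to each crawled URL; URLs with no analytics row get None."""
    analytics = {row["URL"]: row for row in csv.DictReader(io.StringIO(analytics_csv))}
    merged = []
    for row in csv.DictReader(io.StringIO(crawl_csv)):
        stats = analytics.get(row["URL"], {})
        merged.append({
            "URL": row["URL"],
            "Word Count": int(row["Word Count"]),
            "Pageviews": int(stats["Pageviews"]) if stats else None,
            "Bounce Rate": float(stats["Bounce Rate"]) if stats else None,
        })
    return merged


# Made-up sample data standing in for the two exports.
crawl_export = "URL,Word Count\n/local-search,32\n/login,12\n"
ga_export = "URL,Pageviews,Bounce Rate\n/local-search,1200,0.64\n"

rows = merge_crawl_with_analytics(crawl_export, ga_export)
```

Run it once a month, keep the output, and you have the trend data you need before making changes.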

Second stop: Duplicate title tags

Site Crawl > Content Issues > Duplicate Title Tags

Title tags show up in the search results to give human searchers a taste of what your content is about. They also help search engines understand and categorize your content. Without question, you want these to be well considered, relevant to your content, and unique.

Moz Pro Site Crawl flags any pages with matching title tags for your perusal.
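To picture what "matching title tags" means in practice, here is a rough sketch that groups URLs by title. It assumes you already have a URL-to-title mapping (from a crawl export, say); ignoring case and surrounding whitespace is my own choice, not something the post says Site Crawl does, and the URLs and titles are invented.

```python
from collections import defaultdict


def find_duplicate_titles(pages: dict[str, str]) -> dict[str, list[str]]:
    """Group URLs by normalized title and keep only titles shared by 2+ URLs."""
    groups = defaultdict(list)
    for url, title in pages.items():
        groups[title.strip().lower()].append(url)
    return {title: sorted(urls) for title, urls in groups.items() if len(urls) > 1}


# Hypothetical pages for illustration.
pages = {
    "/help/contact/tool-a": "Contact the Help Team",
    "/help/contact/tool-b": "contact the help team ",
    "/blog": "The Blog",
}
duplicates = find_duplicate_titles(pages)
```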

Duplicate title tags are unlikely to get your site penalized, unless you’ve masterminded an army of pages that target irrelevant keywords and provide zero value. Once again, for most of us, it’s a good way to find a missed opportunity.

Digging around your duplicate title tags is a lucky dip of wonder. You may find pages with repeated content that you want to merge, or redundant pages that may be confusing your visitors, or maybe just pages for which you haven’t spent the time crafting unique title tags.

Take this opportunity to review your title tags, make them interesting, and always make them relevant. Because I’m a Whiteboard Friday friend, I can’t not link to this title tag hack video. Turn off Netflix for 10 minutes and enjoy.

Pro tip: To view the other duplicate pages, make sure you click on the little triangle icon to open that up like an accordion.

Hey now, what’s this? Filed away under duplicate title tags I’ve found these cheeky pages.

These are the contact forms we have in place to contact our help team. Yes, me included — hi!

I’ve got some inside info for you all. We’re actually in the process of redesigning our Help Hub, and these tool-specific pages definitely need a rethink. For now, I’m going to summon the powerful and mysterious rel=canonical tag.

This tells search engines that all those other pages are copies of the one true page to rule them all. Search engines like this, they understand it, and they bow down to honor the original source, as well they should. Visitors can still access these pages, and they won’t ever know they’ve hit a page with an original source elsewhere. How very magical.
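For the curious, the tag itself is a single `<link>` element that goes in the `<head>` of each copy and points at the original. Here is a small sketch that builds one; the helper name and the example URL are made up, and how you get the tag into your templates depends entirely on your CMS.

```python
from html import escape


def canonical_link_tag(original_url: str) -> str:
    """Build the <link rel="canonical"> element that points at the original page."""
    # escape() keeps characters like & and " from breaking the attribute value.
    return f'<link rel="canonical" href="{escape(original_url, quote=True)}">'


if __name__ == "__main__":
    # Hypothetical original page.
    print(canonical_link_tag("https://example.com/help/contact"))
```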

Action to take for duplicate title tags on similar pages

Use the rel=canonical tag to tell search engines that http://bit.ly/2i0iYt8 is the original source.

Review visitor behavior and perform user testing on the Help Hub. We’ll use this information to make a plan for redirecting those pages to one main page and adding a tool type drop-down.

More duplicate titles within my subfolder-specific campaign

Because at Moz we’ve got a heck of a lot of pages, I’ve got another Moz Pro campaign set up to track the URL moz.com/blog. I find this handy if I want to look at issues on just one section of my site at a time.

You just have to enter your subfolder and limit your campaign when you set it up.

Just remember we won’t crawl any pages outside of the subfolder. Make sure you have an all-encompassing, all-access campaign set up for the root domain as well.

Not enough allowance to create a subfolder-specific campaign? You can filter by URL from within your existing campaign.
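If you're working from an exported list of URLs rather than inside the tool, the same "just this subfolder" filter can be sketched like this. The function names and example URLs are mine, purely for illustration.

```python
from urllib.parse import urlsplit


def in_subfolder(url: str, subfolder: str) -> bool:
    """True when the URL's path is the subfolder itself or something beneath it."""
    path = urlsplit(url).path
    prefix = "/" + subfolder.strip("/")
    # Compare against "prefix/" so /blogging-tips is not mistaken for /blog.
    return path == prefix or path.startswith(prefix + "/")


def filter_by_subfolder(urls: list[str], subfolder: str) -> list[str]:
    """Keep only the URLs that live in the given subfolder."""
    return [url for url in urls if in_subfolder(url, subfolder)]
```

For example, `filter_by_subfolder(urls, "/blog")` keeps `https://moz.com/blog` and anything under `https://moz.com/blog/`, and drops the rest.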

In my Moz Blog campaign, I stumbled across these little fellows:

http://bit.ly/2i1CZPM

http://bit.ly/2i1CZPM-10504

This is a classic case of new content usurping the old content. Instead of telling search engines, “Yeah, so I’ve got a few pages and they’re kind of the same, but this one is the one true page,” like we did with the rel=canonical tag before, this time I’ll use the big cousin of the rel=canonical, the queen of content canonicalization, the 301 redirect.

All the power is sent to the page you are redirecting to, as well as all the actual human visitors.
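Under the hood, a 301 is just an HTTP response with the status `301 Moved Permanently` and a `Location` header naming the new page. Most people set this up in their server or CMS config, but as a rough sketch of the mechanics, here is a tiny WSGI app using only the Python standard library conventions. The paths in `REDIRECTS` are invented for the example.

```python
# Hypothetical mapping of outdated paths to their replacements.
REDIRECTS = {"/blog/old-post": "/blog/new-post"}


def redirect_response(path: str) -> tuple[str, list[tuple[str, str]]]:
    """Return the status line and headers for a requested path."""
    target = REDIRECTS.get(path)
    if target is None:
        return "404 Not Found", [("Content-Type", "text/plain")]
    # A permanent redirect: the browser (and the crawler) follows Location.
    return "301 Moved Permanently", [("Location", target)]


def app(environ, start_response):
    """Minimal WSGI app that serves nothing but the redirects above."""
    status, headers = redirect_response(environ.get("PATH_INFO", "/"))
    start_response(status, headers)
    return [b""] if status.startswith("301") else [b"Not found"]
```

To poke at it locally you could serve `app` with `wsgiref.simple_server.make_server` and request the old path.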

Action to take for duplicate title tags with outdated/updated content

Check the traffic and authority for both pages, then add a 301 redirect from one to the other. Consolidate and rule.

It’s also a good opportunity to refresh the content and check whether it’s... what? I can’t hear you — adding value to my visitors! You got it.

Third stop: Duplicate content

Site Crawl > Content Issues > Duplicate Content

When the code and content on a page look the same as the code and content on another page of your site, it will be flagged as “Duplicate Content.” Our crawler will flag any pages with 90% or more overlapping content or code as having duplicate content.
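To get an intuition for a 90% overlap rule, here is a sketch that compares the full source of two pages. To be clear, the post doesn't describe how the crawler actually measures overlap; `difflib.SequenceMatcher` from Python's standard library is only a stand-in, and the page markup is invented.

```python
from difflib import SequenceMatcher

DUPLICATE_THRESHOLD = 0.90  # the 90% figure quoted in the post


def similarity(page_a: str, page_b: str) -> float:
    """Rough 0..1 similarity between two page sources (code and copy together)."""
    return SequenceMatcher(None, page_a, page_b, autojunk=False).ratio()


def is_duplicate(page_a: str, page_b: str, threshold: float = DUPLICATE_THRESHOLD) -> bool:
    """True when the two sources overlap at or above the threshold."""
    return similarity(page_a, page_b) >= threshold


# Two hypothetical thin pages: same template, only one word of copy differs.
header = "<nav>" + "menu item " * 20 + "</nav><p>"
footer = "</p><footer>" + "footer link " * 10 + "</footer>"
page_a = header + "Apples" + footer
page_b = header + "Zebras" + footer
```

Here `is_duplicate(page_a, page_b)` comes out true even though the visible copy is different, because the shared template swamps the one word that changed.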

Officially, in the wise words of Google, duplicate content doesn’t incur a penalty. However, it can be filtered out of the index, so still not great.

Having said that, the trick is in the fine print. One bot’s duplicate content is another bot’s thin content, and thin content can get you penalized. Let me refer you back to our old friend, the Quality Guidelines.

Are you doing one of these things intentionally or accidentally? Do you want me to make you chant again?

If you’re being hounded by duplicate content issues and don’t know where to start, then we’ve got more information on duplicate content on our Learning Center.

I’ve found some pages that clearly have different content on them, so why are these duplicate?

So friends, what we have here is thin content that’s being flagged as duplicate.

There is basically not enough content on the page for bots to distinguish them from each other. Remember that our crawler looks at all the page code, as well as the copy that humans see.

You may find this frustrating at first: “Like, why are they duplicates?? They’re different, gosh darn it!” But once you pass through all the 7 stages of duplicate content and arrive at acceptance, you’ll see the opportunity you have here. Why not pop those topics on your content schedule? Why not use the “queen” again, and 301 redirect them to a similar resource, combining the power of both resources? Or maybe, just maybe, you could use them in a blog post about duplicate content — just like I have.

Action to take for duplicate pages with different content

Before you make any hasty decisions, check the traffic to these pages. Maybe dig a bit deeper and track conversions and bounce rate, as well. Check out our workflow for thin content earlier in this post and do the same for these pages.

From there you can figure out if you want to rework content to add value or redirect pages to another resource.

This is an awesome video in the ever-impressive Whiteboard Friday series which talks about republishing. Seriously, you’ll kick yourself if you don’t watch it.

Broken URLs and duplicate content

Another dive into Duplicate Content has turned up two Help Hub URLs that point to the same page.

These are no good to man or beast. They are especially no good for our analytics — blurgh, data confusion! No good for our crawl budget — blurgh, extra useless page! User experience? Blurgh, nope, no good for that either.

Action to take for messed-up URLs causing duplicate content

Zap this time-waster with a 301 redirect. For me this is an easy decision: add a 301 to the long, messed up URL with a PA of 1, no discussion. I love our new Learning Center so much that I’m going to link to it again so you can learn more about redirection and build your SEO knowledge.

It’s the most handy place to check if you get stuck with any of the concepts I’ve talked about today.

Wrapping up

While it may feel scary at first to have your content flagged as having issues, the real takeaway here is that these are actually neatly organized opportunities.

With a bit of tenacity and some extra data from Google Analytics, you can start to understand the best way to fix your content and make your site easier to use (and more powerful in the process).

If you get stuck, just remember our chant: “Does this add value for my visitors?” Your content has to be for your human visitors, so think about them and their journey. And most importantly: be good to yourself and use a tool like Moz Pro that compiles potential issues into an easily digestible catalogue.

Enjoy your chili taco and your good night’s sleep!

Sign up for The Moz Top 10, a semimonthly mailer updating you on the top ten hottest pieces of SEO news, tips, and rad links uncovered by the Moz team. Think of it as your exclusive digest of stuff you don’t have time to hunt down but want to read!

http://bit.ly/2i1D0mO

#leadgeneration #socialmediamarketing #articlewriting #internetmarketing #seo #bestlocalseo #blogpower #lagunabeachseo #newportbeachseo #huntingtonbeachseo



Text

How to Turn Low-Value Content Into Neatly Organized Opportunities - Next Level

Posted by jocameron

Welcome to the newest installment of our educational Next Level series! In our last post, Brian Childs offered up a beginner-level workflow to help discover your competitor's backlinks. Today, we're welcoming back Next Level veteran Jo Cameron to show you how to find low-quality pages on your site and decide their new fate. Read on and level up!

With an almost endless succession of Google updates fluctuating the search results, it’s pretty clear that substandard content just won’t cut it.

I know, I know — we can’t all keep up with the latest algorithm updates. We’ve got businesses to run, clients to impress, and a strong social media presence to maintain. After all, you haven’t seen a huge drop in your traffic. It’s probably OK, right?

So what’s with the nagging sensation down in the pit of your stomach? It’s not just that giant chili taco you had earlier. Maybe it’s that feeling that your content might be treading on thin ice. Maybe you watched Rand’s recent Whiteboard Friday (How to Determine if a Page is "Low Quality" in Google's Eyes) and just don’t know where to start.

In this edition of Next Level, I’ll show you how to start identifying your low-quality pages in a few simple steps with Moz Pro's Site Crawl. Once identified, you can decide whether to merge, shine up, or remove the content.

A quick recap of algorithm updates

The latest big fluctuations in the search results were said to be caused by King Fred: enemy of low-quality pages and champion of the people’s right to find and enjoy content of value.

Fred took the fight to affiliate sites, and low-value commercial sites were also affected.

The good news is that even if this isn’t directed at you, and you haven’t taken a hit yourself, you can still learn from this update to improve your site. After all, why not stay on the right side of the biggest index of online content in the known universe? You’ll come away with a good idea of what content is working for your site, and you may just take a ride to the top of the SERPs. Knowledge is power, after all.

Be a Pro

It’s best if we just accept that Google updates are ongoing; they happen all.the.time. But with a site audit tool in your toolkit like Moz Pro's Site Crawl, they don’t have to keep you up at night. Our shiny new Rogerbot crawler is the new kid on the block, and it’s hungry to crawl your pages.

If you haven’t given it a try, sign up for a free trial for 30 days:

Start a free trial

If you’ve already had a free trial that has expired, write to me and I’ll give you another, just because I can.

Set up your Moz Pro campaign — it takes 5 minutes tops — and Rogerbot will be unleashed upon your site like a caffeinated spider.

Rogerbot hops from page to page following links to analyze your website. As Rogerbot hops along, a beautiful database of pages is constructed that flags issues you can use to find those laggards. What a hero!

First stop: Thin content

Site Crawl > Content Issues > Thin Content

Thin content could be damaging your site. If it’s deemed to be malicious, then it could result in a penalty. Things like zero-value pages with ads or spammy doorway pages — little traps people set to funnel people to other pages — are bad news.

First off, let’s find those pages. Moz Pro Site Crawl will flag a page as "thin content" if it has fewer than 50 words (excluding navigation and ads).
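If you'd like to sanity-check a page yourself, here's a minimal sketch of that 50-word rule. It assumes you've already pulled out the body copy (navigation and ads stripped) as plain text. The function names are made up for illustration, and this is not how Rogerbot actually counts words, just a rough stand-in.

```python
# Rough stand-in for a thin-content check; not Moz's actual implementation.
THIN_CONTENT_THRESHOLD = 50  # the word limit quoted in this post


def count_words(body_text: str) -> int:
    """Count whitespace-separated words in already-extracted body copy."""
    return len(body_text.split())


def is_thin(body_text: str, threshold: int = THIN_CONTENT_THRESHOLD) -> bool:
    """Return True when the copy has fewer words than the threshold."""
    return count_words(body_text) < threshold
```

A login page with a single line of copy would come back as thin, while a page with 50 or more words would not.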

Now is a good time to familiarize yourself with Google’s Quality Guidelines. Think long and hard about whether you may be doing this, intentionally or accidentally.

You’re probably not straight-up spamming people, but you could do better and you know it. Our mantra is (repeat after me): “Does this add value for my visitors?” Well, does it?

Ok, you can stop chanting now.

For most of us, thin content is less of a penalty threat and more of an opportunity. By finding pages with thin content, you have the opportunity to figure out if they're doing enough to serve your visitors. Pile on some Google Analytics data and start making decisions about improvements that can be made.

Using moz.com as an example, I’ve found 3 pages with thin content. Ta-da emoji!

I’m not too concerned about the login page or the password reset page. I am, however, interested to see how the local search page is performing. Maybe we can find an opportunity to help people who land on this page.

Go ahead and export your thin content pages from Moz Pro to CSV.

We can then grab some data from Google Analytics to give us an idea of how well this page is performing. You may want to look at comparing monthly data and see if there are any trends, or compare similar pages to see if improvements can be made.

I am by no means a Google Analytics expert, but I know how to get what I want. Most of the time that is, except when I have to Google it, which is probably every second week.

Firstly: Behavior > Site Content > All Pages > Paste in your URL

Pageviews - The number of times that page has been viewed, even if it’s a repeat view.

Avg. Time on Page - How long people are on your page

Bounce Rate - Single page views with no interaction

For my example page, Bounce Rate is very interesting. This page lives to be interacted with. Its only joy in life is allowing people to search for a local business in the UK, US, or Canada. It is not an informational page at all. It doesn’t provide a contact phone number or an answer to a query that may explain away a high bounce rate.

I’m going to add Pageviews and Bounce Rate to a spreadsheet so I can track this over time.

I’ll also add some keywords that I want that page to rank for to my Moz Pro Rankings. That way I can make sure I’m targeting searcher intent and driving organic traffic that is likely to convert.

I’ll also know if I’m being outranked by my competitors. How dare they, right?

As we've found with this local page, not all thin content is bad content. Another example may be if you have a landing page with an awesome video that's adding value and is performing consistently well. In this case, hold off on making sweeping changes. Track the data you’re interested in; from there, you can look at making small changes and track the impact, or split test some ideas. Either way, you want to make informed, data-driven decisions.

Action to take for tracking thin content pages

Export to CSV so you can track how these pages are performing alongside GA data. Make incremental changes and track the results.
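If spreadsheets aren't your thing, the same tracking step can be sketched in a few lines of Python. This is only an illustration: the column names ("URL", "Word Count", "Pageviews", "Bounce Rate") are assumptions, so check them against your actual Moz Pro and Google Analytics exports before relying on it.

```python
import csv
import io


def join_crawl_with_analytics(crawl_csv: str, analytics_csv: str) -> list[dict]:
    """Pair each thin-content URL with its analytics numbers, if any.

    Both arguments are CSV text. The column names used here are
    hypothetical and may not match your real exports.
    """
    analytics = {
        row["URL"]: row for row in csv.DictReader(io.StringIO(analytics_csv))
    }
    combined = []
    for row in csv.DictReader(io.StringIO(crawl_csv)):
        stats = analytics.get(row["URL"])
        combined.append({
            "url": row["URL"],
            "word_count": int(row["Word Count"]),
            # None means the page has no analytics row in this export.
            "pageviews": int(stats["Pageviews"]) if stats else None,
            "bounce_rate": float(stats["Bounce Rate"]) if stats else None,
        })
    return combined
```

Run it once a month, save the output, and you have the start of a trend line for each page.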

Second stop: Duplicate title tags

Site Crawl > Content Issues > Duplicate Title Tags

Title tags show up in the search results to give human searchers a taste of what your content is about. They also help search engines understand and categorize your content. Without question, you want these to be well considered, relevant to your content, and unique.

Moz Pro Site Crawl flags any pages with matching title tags for your perusal.
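To picture what "matching title tags" means, here's a small sketch that groups URLs by title and keeps only the groups with more than one page. The function name and the input shape (a dict of URL to title) are assumptions for illustration, and the case-insensitive comparison is my own choice, not a description of how Site Crawl works.

```python
from collections import defaultdict


def find_duplicate_titles(pages: dict[str, str]) -> dict[str, list[str]]:
    """Group URLs that share a title, ignoring case and outer whitespace."""
    by_title = defaultdict(list)
    for url, title in pages.items():
        by_title[title.strip().lower()].append(url)
    # Keep only titles used by more than one page.
    return {
        title: sorted(urls)
        for title, urls in by_title.items()
        if len(urls) > 1
    }
```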

Duplicate title tags are unlikely to get your site penalized, unless you’ve masterminded an army of pages that target irrelevant keywords and provide zero value. Once again, for most of us, it’s a good way to find a missed opportunity.

Digging around your duplicate title tags is a lucky dip of wonder. You may find pages with repeated content that you want to merge, or redundant pages that may be confusing your visitors, or maybe just pages for which you haven’t spent the time crafting unique title tags.

Take this opportunity to review your title tags, make them interesting, and always make them relevant. Because I’m a Whiteboard Friday friend, I can’t not link to this title tag hack video. Turn off Netflix for 10 minutes and enjoy.

Pro tip: To view the other duplicate pages, make sure you click on the little triangle icon to open that up like an accordion.

Hey now, what’s this? Filed away under duplicate title tags I’ve found these cheeky pages.

These are the contact forms we have in place to contact our help team. Yes, me included — hi!

I’ve got some inside info for you all. We’re actually in the process of redesigning our Help Hub, and these tool-specific pages definitely need a rethink. For now, I’m going to summon the powerful and mysterious rel=canonical tag.

This tells search engines that all those other pages are copies of the one true page to rule them all. Search engines like this, they understand it, and they bow down to honor the original source, as well they should. Visitors can still access these pages, and they won’t ever know they've hit a page with an original source elsewhere. How very magical.

Action to take for duplicate title tags on similar pages

Use the rel=canonical tag to tell search engines that http://ift.tt/2vLlcAU is the original source.

Review visitor behavior and perform user testing on the Help Hub. We’ll use this information to make a plan for redirecting those pages to one main page and adding a tool type drop-down.
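Once the canonical tag is in place, it's worth checking that each copy really points at the original. Below is a minimal sketch using Python's built-in HTML parser. The helper names are hypothetical, and it only handles the simple case of a link tag whose rel attribute is exactly "canonical".

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Remember the href of the first <link rel="canonical"> tag seen."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel = (attributes.get("rel") or "").lower()
        if tag == "link" and rel == "canonical" and self.canonical is None:
            self.canonical = attributes.get("href")


def find_canonical(html: str):
    """Return the canonical URL declared in the HTML, or None."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical
```

Feed it the HTML of each duplicate page and compare the result with the URL you chose as the original.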

More duplicate titles within my subfolder-specific campaign

Because at Moz we’ve got a heck of a lot of pages, I’ve got another Moz Pro campaign set up to track the URL moz.com/blog. I find this handy if I want to look at issues on just one section of my site at a time.

You just have to enter your subfolder and limit your campaign when you set it up.

Just remember we won’t crawl any pages outside of the subfolder. Make sure you have an all-encompassing, all-access campaign set up for the root domain as well.

Not enough allowance to create a subfolder-specific campaign? You can filter by URL from within your existing campaign.

In my Moz Blog campaign, I stumbled across these little fellows:

http://ift.tt/2xw5RBL

http://ift.tt/2i18byE

This is a classic case of new content usurping the old content. Instead of telling search engines, “Yeah, so I’ve got a few pages and they’re kind of the same, but this one is the one true page,” like we did with the rel=canonical tag before, this time I’ll use the big cousin of the rel=canonical, the queen of content canonicalization, the 301 redirect.

All the power is sent to the page you are redirecting to, as well as all the actual human visitors.

Action to take for duplicate title tags with outdated/updated content

Check the traffic and authority for both pages, then add a 301 redirect from one to the other. Consolidate and rule.

It’s also a good opportunity to refresh the content and check whether it's... what? I can’t hear you — adding value to my visitors! You got it.
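For the curious, this is roughly what a 301 looks like at the server level: a status line saying "moved permanently" and a Location header pointing at the new page. The sketch below is a bare-bones WSGI app with made-up paths. On a real site you would normally set this up in your CMS or server config rather than hand-rolling it.

```python
# Hypothetical old-to-new mapping; replace with your own paths.
REDIRECTS = {
    "/blog/old-post": "/blog/new-post",
}


def app(environ, start_response):
    """Minimal WSGI app that answers known old paths with a 301."""
    path = environ.get("PATH_INFO", "")
    target = REDIRECTS.get(path)
    if target is not None:
        # 301 tells browsers and crawlers the move is permanent.
        start_response("301 Moved Permanently", [("Location", target)])
        return [b""]
    # Anything else would go to the site's normal handler; this sketch
    # simply returns a 404.
    start_response("404 Not Found", [("Content-Type", "text/plain")])
    return [b"Not found"]
```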

Third stop: Duplicate content

Site Crawl > Content Issues > Duplicate Content

When the code and content on a page look the same as the code and content on another page of your site, it will be flagged as "Duplicate Content." Our crawler will flag any pages with 90% or more overlapping content or code as having duplicate content.
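To get a feel for the 90% figure, here's a rough sketch that compares two pages' source with Python's difflib. The post doesn't say how the crawler actually measures overlap, so treat SequenceMatcher as a swapped-in stand-in. The function names are hypothetical.

```python
from difflib import SequenceMatcher

DUPLICATE_THRESHOLD = 0.90  # the 90% overlap figure quoted in this post


def similarity(page_a: str, page_b: str) -> float:
    """Return a 0..1 similarity ratio between two pages' source text."""
    # autojunk=False keeps the ratio predictable on long, repetitive markup.
    return SequenceMatcher(None, page_a, page_b, autojunk=False).ratio()


def is_duplicate(page_a: str, page_b: str,
                 threshold: float = DUPLICATE_THRESHOLD) -> bool:
    """Flag two pages as duplicates when they overlap by 90% or more."""
    return similarity(page_a, page_b) >= threshold
```

Two pages that share a large template but have only a line or two of unique copy can easily score above the threshold, even though a human reader would call them different.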

Officially, in the wise words of Google, duplicate content doesn’t incur a penalty. However, it can be filtered out of the index, so still not great.

Having said that, the trick is in the fine print. One bot’s duplicate content is another bot’s thin content, and thin content can get you penalized. Let me refer you back to our old friend, the Quality Guidelines.

Are you doing one of these things intentionally or accidentally? Do you want me to make you chant again?

If you’re being hounded by duplicate content issues and don’t know where to start, then we’ve got more information on duplicate content on our Learning Center.

I’ve found some pages that clearly have different content on them, so why are these duplicate?

So friends, what we have here is thin content that’s being flagged as duplicate.

There is basically not enough content on the page for bots to distinguish them from each other. Remember that our crawler looks at all the page code, as well as the copy that humans see.

You may find this frustrating at first: “Like, why are they duplicates?? They're different, gosh darn it!” But once you pass through all the 7 stages of duplicate content and arrive at acceptance, you’ll see the opportunity you have here. Why not pop those topics on your content schedule? Why not use the “queen” again, and 301 redirect them to a similar resource, combining the power of both resources? Or maybe, just maybe, you could use them in a blog post about duplicate content — just like I have.

Action to take for duplicate pages with different content

Before you make any hasty decisions, check the traffic to these pages. Maybe dig a bit deeper and track conversions and bounce rate, as well. Check out our workflow for thin content earlier in this post and do the same for these pages.

From there you can figure out if you want to rework content to add value or redirect pages to another resource.

This is an awesome video in the ever-impressive Whiteboard Friday series which talks about republishing. Seriously, you’ll kick yourself if you don’t watch it.

Broken URLs and duplicate content

Another dive into Duplicate Content has turned up two Help Hub URLs that point to the same page.

These are no good to man or beast. They are especially no good for our analytics — blurgh, data confusion! No good for our crawl budget — blurgh, extra useless page! User experience? Blurgh, nope, no good for that either.

Action to take for messed-up URLs causing duplicate content

Zap this time-waster with a 301 redirect. For me this is an easy decision: add a 301 to the long, messed up URL with a PA of 1, no discussion. I love our new Learning Center so much that I’m going to link to it again so you can learn more about redirection and build your SEO knowledge.

It’s the most handy place to check if you get stuck with any of the concepts I’ve talked about today.

Wrapping up

While it may feel scary at first to have your content flagged as having issues, the real takeaway here is that these are actually neatly organized opportunities.

With a bit of tenacity and some extra data from Google Analytics, you can start to understand the best way to fix your content and make your site easier to use (and more powerful in the process).

If you get stuck, just remember our chant: “Does this add value for my visitors?” Your content has to be for your human visitors, so think about them and their journey. And most importantly: be good to yourself and use a tool like Moz Pro that compiles potential issues into an easily digestible catalogue.

Enjoy your chili taco and your good night’s sleep!

Sign up for The Moz Top 10, a semimonthly mailer updating you on the top ten hottest pieces of SEO news, tips, and rad links uncovered by the Moz team. Think of it as your exclusive digest of stuff you don't have time to hunt down but want to read!

from The Moz Blog http://ift.tt/2yd2O5i

via IFTTT
