Final Reflection (5/11/21)

With my second semester of the computer science senior seminar, and my undergraduate career, coming to a close, I would like to reflect on the experience that I had in the course and how it has been essential to my understanding of how lessons from computing fit into the larger world. An impactful piece of our seminar was the ethical discussions we had periodically throughout the semester. I found these extremely insightful because we were able to explore topics on current events and the ethical issues they pose that wouldn’t naturally be covered in course curriculums, but would be necessary for society to consider to maintain equity and morality. Furthermore, because classmates were able and willing to express disagreeing viewpoints, we were able to have enriching discussions that taught us various perspectives and considerations that we must have when making future decisions on the role technology can play in our lives.

The other major component of the seminar was my team’s engagement in the OpenMRS HFOSS community. It was profoundly difficult to get our feet wet in such a large open source project because of the complexities of the code base and its structure as well as the interactive technologies that it uses for its medical records database system. Nevertheless, since we were consistently faced with blockers and challenges, we also had the opportunity to be introduced to a lot of new technology and coding practices with the help and support of the OpenMRS community.

The experiences I had in this course helped to cultivate, in particular, my willingness to engage in the unfamiliar and the skill to adapt to a changing world. In every code and community interaction, I was faced with a new concept and had minimal prior knowledge to support my understanding. I have struggled in these situations in the past because it is difficult to know where to start in order to get your bearings in an unfamiliar work environment and become a helpful contributor. In OpenMRS, this process took months, but eventually I began to understand how community members interact with each other to organize a large group project, and I learned to understand smaller segments of the project that were more within my grasp. I found that asking the community for help was pivotal to this process. Having struggled with the unfamiliar and found success in overcoming it, I feel that I am now much more willing to tackle a new, unfamiliar circumstance with the understanding that it will not always be unfamiliar and that it is an opportunity to learn.

The discussions, on the other hand, developed my skill to adapt to a changing world. We discussed the entrance of new technologies and ethical problems into the world, such as technological warfare and artificial intelligence, bioinformatics and facial recognition, and information collection and privacy. These topics are at the forefront of future and even recent applications of technology and require our due diligence to respond effectively to the ethical concerns they pose. Technology often progresses faster than the policy meant to regulate it, and by understanding the ways in which we should check technology, I am better equipped to handle the dangers it poses both to me and to other members of society.

The computer science senior seminar helped me to develop these skills, which will continue to help my studies in the future. I am pursuing medical school, a field that is very unfamiliar to me. Given my experience in facing the unknown within computing, I know that the struggles you face early on in a new experience will eventually subside, and I can be comfortable in knowing that to be an expert, I have to start as a beginner learning everything for the first time. The skill to adapt to a changing world will also complement my efforts in the medical field, as I will be watching for inequities within the field and for policy responses that combat them.

Fawkes Software: How Effective is it at Protecting Your Face in Reality?

Given the prevalent use of social media, our faces are publicly available online for others to collect and possibly use for malicious purposes such as deepfakes and biometric hacking. A team of researchers has developed a tool, Fawkes, that could potentially solve the problem of our faces being recognized on the internet. The technology works by altering the pixels of an image without changing the image’s appearance to the human eye. When this cloak is applied before the image is uploaded to the internet, it confuses the facial recognition technology that would try to identify the image. The technology has proven successful, with a more than 95% protection rate against facial recognition models, and it remains at a more than 80% protection rate even if the model has obtained the corresponding uncloaked images. This suggests that the technology is rather resilient. Furthermore, there is a series of cloaking levels that one can apply to a photo, and each step upgrades the protection. The most robust cloak consistently scored a 100% protection rate against leading facial recognition models. The technology is also free and downloadable for Mac, Linux, and Windows operating systems.
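To make the idea of "altering the pixels without changing the appearance" a little more concrete, here is a minimal Python sketch. It is not the Fawkes algorithm: Fawkes chooses its changes through an optimization against a face recognition model, while this toy example only adds random shifts. The function names and the `budget` parameter are my own hypothetical choices; the only point it illustrates is that every pixel moves by no more than a small, fixed amount.

```python
import random


def perturb_pixels(pixels, budget=3, seed=0):
    """Return a copy of `pixels` (ints in 0-255) with each value shifted
    by at most `budget`. Random shifts are an illustrative stand-in only;
    they would not actually defeat a facial recognition model."""
    rng = random.Random(seed)
    cloaked = []
    for value in pixels:
        shift = rng.randint(-budget, budget)
        # Clamp so the result is still a valid 8-bit pixel value.
        cloaked.append(min(255, max(0, value + shift)))
    return cloaked


def max_change(original, cloaked):
    """Largest per-pixel difference between the two images."""
    return max(abs(a - b) for a, b in zip(original, cloaked))


original = [0, 12, 128, 200, 255]          # a tiny made-up grayscale "image"
cloaked = perturb_pixels(original, budget=3)
print(max_change(original, cloaked))       # never more than the budget
```

A shift of a few units out of 255 per pixel is hard for a person to notice, which is the property the cloak relies on.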

The problem with using the software, however, is that it must be applied on your own device, with the app downloaded, each time before you post an image. While it only takes a few minutes to process each image with the software, this is enough of an inconvenience for many people to choose not to use the technology. Because all of society is at the mercy of facial recognition, the cloaking needs to be adopted throughout society for the proper protections to be in place. Currently, it is only the more technologically involved individuals who are taking this precaution. Unfortunately, even if all of society adopted the Fawkes software strategy, in many ways it is too late to enjoy its protection because firms already have access to billions of photos from the internet and there is no way to undo their access to those images. Even so, with enough time and adoption, security will improve. The Fawkes research team suggests that companies like Facebook should build the technology into their platforms so that they can use the uncloaked images within the app but cloak them before they reach the wider web, leaving users less exposed to malicious actors. I think that this would be a good start, especially because it would remove the inconvenience that individuals face when cloaking their own images.

I do wonder however, while the wide-scale introduction and use of this technology would greatly improve our privacy, what would be the cost to innovation and productivity?

Healthcare Data Security- HIPAA (4/16/21)

Data privacy has become a major concern now that the internet is such a prolific channel for communication and for gathering information. Information is subject to both misuse and theft through hacking and data breaches, which could easily cause millions of dollars in damage. Due to the sensitive and personal nature of medical information, data security in this industry is governed by national security standards established under the Health Insurance Portability and Accountability Act (HIPAA).

HIPAA provides the basic policies and procedures expected of people and technology that deal with healthcare information. It serves to protect individually identifiable healthcare information. The Security Rule under HIPAA requires that entities maintain safeguards to protect this information by ensuring safe and confidential creation and transmission of the information, anticipating potential security threats to the data, protecting against misuse of the data, and ensuring active compliance by medical professionals. While these expectations are universal, they are flexible in how each provider implements them. In other words, providers can assess their own appropriate security needs. They are also expected to perform risk analysis on an ongoing basis to ensure that the integrity and safety of the data are maintained.

Some ways in which providers adhere to HIPAA data security requirements are by using encryption methods to store information (and especially when transmitting the information), strictly using authorized and secure networks when transferring information between systems, enabling access control steps such as identity verification for authorized individuals, implementing audit controls for tracking access history, and supporting integrity controls to ensure data is not improperly altered or destroyed.
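As a rough illustration of two of these ideas, integrity controls and audit controls, here is a minimal Python sketch using only the standard library. HIPAA does not prescribe this or any particular implementation; the key, the function names, and the record format are all hypothetical choices of mine. The sketch tags each record with an HMAC so that tampering can be detected, and it logs every read attempt.

```python
import hashlib
import hmac
from datetime import datetime, timezone

# Hypothetical demo key; a real system would load this from secure storage.
SECRET_KEY = b"demo-key-not-for-production"

audit_log = []  # in-memory stand-in for a real, tamper-resistant audit trail


def sign_record(record: bytes, key: bytes = SECRET_KEY) -> str:
    """Integrity control: compute an HMAC-SHA256 tag for the record."""
    return hmac.new(key, record, hashlib.sha256).hexdigest()


def verify_record(record: bytes, tag: str, key: bytes = SECRET_KEY) -> bool:
    """Return True only if the record still matches its stored tag."""
    return hmac.compare_digest(sign_record(record, key), tag)


def read_record(user: str, record_id: str, record: bytes, tag: str) -> bytes:
    """Audit control: log who accessed what, and whether it was intact."""
    intact = verify_record(record, tag)
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "record_id": record_id,
        "integrity_ok": intact,
    })
    if not intact:
        raise ValueError("record failed integrity check")
    return record


record = b"patient=123;allergy=penicillin"
tag = sign_record(record)
read_record("dr_smith", "rec-1", record, tag)   # succeeds and is logged
```

If the stored bytes were changed after signing, `read_record` would raise an error, and the failed attempt would still appear in the log.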

Note that while these are simply suggestions for how providers can keep their patient information secure, providers must put a great deal of emphasis on the efficacy of their efforts. In the event that a data breach occurs, they could face lawsuits from patients, and furthermore, they are subject to compliance inspections that verify they are taking adequate precautions.

Quantum Computing May Be Here Tomorrow, So What Should We Do Today? How Can We Hedge Our Bets? (4/3/21)

A well-voiced concern about the coming quantum era is how it will affect our information security. With quantum computers, many of the encryption methods used today could be broken rapidly, and thus there is a major concern that information will become vulnerable as this transition occurs. Several cryptography strategies that can be implemented on a regular computer can defend against quantum computing, in particular lattice cryptography and symmetric encryption with sufficiently long keys, such as AES and the Simon cipher (DES, with its 56-bit key, is already breakable on classical hardware and does not qualify). But switching to these methods isn’t free. While encrypting data does have financial costs, the major deterrent is often the time associated with it. The process of decrypting and re-encrypting data can take hours, even days, for some data sets, meaning that there are large efficiency costs associated with the transition. Furthermore, many companies’ reluctance to encrypt stems from their belief that encryption causes substantially slower performance. However, while this was historically true, it is much less of a problem today because many encryption methods have moved from software to hardware and are otherwise much more efficient.
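One rough rule of thumb behind treating symmetric ciphers as quantum-resistant is that Grover's algorithm speeds up a brute-force key search quadratically, so a key of k bits offers roughly k/2 bits of security against a quantum attacker. The short Python sketch below applies that rule to a few key sizes. The function name is my own, and the rule ignores the practical cost of actually running such an attack, so treat the numbers as a back-of-the-envelope comparison only.

```python
def effective_bits_against_grover(key_bits: int) -> int:
    """Rule-of-thumb security level of a symmetric key against a
    Grover-style quantum brute-force search: about half the key length."""
    return key_bits // 2


# Key sizes in bits for a few well-known symmetric ciphers.
ciphers = [("DES", 56), ("AES-128", 128), ("AES-256", 256)]

for name, bits in ciphers:
    print(f"{name}: {bits}-bit key, roughly "
          f"{effective_bits_against_grover(bits)} bits against quantum search")
```

This is why long keys matter: a 256-bit key keeps about 128 bits of strength under this estimate, while a 56-bit key is left with very little.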

Nevertheless, more companies are realizing that the cost of a data breach (both direct financial cost and cost in reputation) vastly exceeds the cost of strong encryption. For instance, the cost to encrypt data for a single computer is on average $235 compared to the estimated $4,650 saved from protection against a data breach. For a company, using proper encryption of data can save an average of $20,000 per laptop, making it well worth the price of transition.
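To put the per-computer figures quoted above side by side, here is a small Python calculation. It uses only the two numbers from this post ($235 to encrypt, an estimated $4,650 saved); the variable names are my own, and the result is only as reliable as those estimates.

```python
# Figures quoted in this post, per computer.
encryption_cost = 235      # average cost to encrypt one computer, in dollars
estimated_savings = 4650   # estimated savings from avoiding a breach, in dollars

net_benefit = estimated_savings - encryption_cost
savings_per_dollar = estimated_savings / encryption_cost

print(f"Net benefit per computer: ${net_benefit}")
print(f"Savings per dollar spent: ${savings_per_dollar:.2f}")
```

By these estimates, every dollar spent on encryption returns close to twenty dollars in avoided breach costs.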

Given the importance of data protection both for the client and the company, measures must be taken now to prevent data breaches associated with the introduction of quantum computing. Since quantum computing could be a reality in as little as 10 years, companies need to undergo risk assessment to determine what data is critical to be kept secure and what data must remain secure for many years. Companies should start with this type of data, identify how its protection can be continued via hardware and software changes and ensure that this data is kept up to date with the best encryption practices. As the quantum era approaches, data that has a shorter life span should slowly adopt the cryptography practices that defend against quantum computing as well.

When discussing the risk of data and security breaches, all I can think of is: why don’t we insure against this? After a quick search, I found that there is in fact cyber security and data breach insurance. I imagine that this industry will continue expanding so that companies can pay insurance premiums to hedge against the costs of staying up to date on cryptography best practices and even to cover the costs when information is maliciously accessed.

There is no doubt that there will be a security risk during the transition to quantum computing, but with preventative efforts and hedging, much of the financial risk of this transition can be reduced and therefore, companies today should begin preparations as soon as possible.

The Legality and Morality of Autonomous Weapons (3/23/2021)

With the rapid progression of Internet of Things technologies, especially in the military, it is essential that guidelines for the use of autonomous weapons are discussed and put into practice soon. There has been a strong push for the use of autonomous weapons to be banned, such as by the chair of Yale’s technology and ethics study group, Wendell Wallach, who called for an executive order declaring the use of autonomous weapons to violate the laws of war. But pushback against this movement exists, as the automation of warfare would be advantageous to the military and, furthermore, it is difficult to regulate technology before it has been fully produced and put into practice. The counterargument to the latter point is that once the systems are in place, it is much more difficult to restrict their use than it would have been before they were adopted. Technologies have previously been banned before they were invented, such as blinding lasers and human cloning, so there is already a precedent on which to act for potentially banning autonomous weapons. Furthermore, some military weapons have been banned, such as land mines, which were deemed unethical because there should be a human making the decision to kill within a reasonably short time frame of the kill. Many would prefer the technology to be banned altogether, but because it is likely inevitable that autonomous weapons will be heavily adopted soon, conclusions about their morality must be reached.

What makes this ethical conversation more difficult is that, as it stands, autonomous weapons do not violate the existing laws of war. The two key laws of war state that weapons should not be indiscriminate, meaning they should be able to target a specific and lawful target, and should not cause unnecessary suffering. Autonomous weapons would not necessarily inflict unnecessary suffering on the target, and although they would not be directed to a target by a human, with the proper programming they would still acquire a discriminate target, ensuring they did not violate the rule against indiscriminate weapons. A notable distinction to make here, though, is that while an autonomous weapon can obtain a specific target, it must do so by identifying lawful targets, such as military individuals, rather than unlawful targets, like civilians. This can only be done accurately through thorough and proper training of the autonomous weapon, and because the reliability of identification could vary dramatically between autonomous weapons, it may warrant restrictions on the environments in which a weapon may be used. For instance, use on a battleground where civilians are not present may be acceptable, but use in urban warfare may not. A proposed solution to this is to make the weapon mostly autonomous, in that it can acquire and aim at a target, but to leave the trigger to a human who makes the final decision.

Opponents of autonomous warfare raise four key arguments against these weapons as summarized below:

Machine programming may never converge on satisfying fundamental ethical and legal principles required because machines do not have the capacity to replicate human emotions such as compassion, empathy, and sympathy.

It is wrong to remove a human moral agent completely from the decision-making process of lethal actions.

Machines and autonomous weapons cannot be held accountable for war crimes like a human can.

The introduction of autonomous weapons would remove soldiers from risk and thus would remove much of the incentive to avoid armed conflicts.

The addition of autonomous weapons would dramatically change the fundamental structure of wartime etiquette. And while there are certainly benefits, I think that the fear of improper use and mistakes will continue to spark intense pushback against their full adoption. I think that a happy medium in most circumstances between the benefits and problems of autonomous weapons is to automate warfare equipment to a degree at which security can be maintained, and to pair it with a human moral agent who makes the final life-or-death decision. If autonomous weapons were completely removed from the military, I worry that the loss of information and strategic offense would render the military obsolete in the future. Therefore, while precautions must be taken, they must be taken in a way that does not leave us completely vulnerable to the military progress of others.

Check The Patriot Act (3/5/2021)

After 9/11, the Patriot Act was passed, enabling the government to expand surveillance measures to bring terrorists to justice and to protect against future terrorist attacks. While the passing of this act benefited our country’s national security by streamlining anti-terrorism efforts, it also put citizens in a new, more exposed and vulnerable circumstance. The USA PATRIOT Act enables the government to investigate any person it sees fit, infringing on the right to privacy and the right against unreasonable search and seizure.

Mary Lieberman described her own experience trying (and mostly failing) to fight the Patriot Act as the FBI demanded all information on her Iraqi-born clients. What she found to be the most terrifying was that the individuals being investigated were not informed, meaning the FBI has an unreasonable level of secrecy that is protected by the Patriot Act. Two obvious concerns arose for me after hearing her story. The first is how biased surveillance can be. In 2004, when she was interviewed, there were no checks on the Patriot Act and no requirement to publish probable cause for someone to be surveilled. This meant that many individuals were surveilled exclusively due to their nationality, without any probable cause. This biased and targeted approach meant that the targeted individuals were constantly under scrutiny and had to remain vigilant at all times to ensure they were not wrongly accused of terrorist activities. Without a check on who can be surveilled, the FBI has the power to act on its biases in unethical ways. The second major problem that surfaced was, similarly, how open-ended the act itself is. There were no established protections against surveillance included in the act for Americans. Thus, everyone was vulnerable to its secrecy and exploitation. We can only hope that our collected information is not used maliciously. While widespread abuse of the Patriot Act had not been recorded, its potential to occur cannot be left unchecked.

The information that can be collected and misused through government surveillance (which has expanded since the Patriot Act) puts individuals and our democracy in danger. The information is not protected by judicial oversight, which means it can be used by law enforcement without authorization or warrants, can be used to investigate innocent people, can be used to sway elections or other societal matters through targeted efforts, and is paid for by the American citizens who are at its mercy.

There is no denying that the Patriot Act helps improve the safety of the United States against foreign terrorist threats. But simply having societal benefits is not sufficient justification for unchecked power. The COVID-19 tracking application, for instance, provides a valuable resource to reduce the spread of COVID-19, but I still would not elect to download it because it would constantly share my personal information with a third party. The benefits of the Patriot Act are important and should be preserved, but not at the cost of all our privacy. Trusting the goodwill of government officials not to abuse their power is naïve. The act’s reach must be checked to preserve the safety and privacy of US citizens.

What Your Biometrics Say Can and Will Be Used Against You In The Court Of Law (2/16/2021)

It has really started to feel like the more I learn about technology, the more I realize we are all gradually losing our rights. In my last post about Autonomous Vehicles, I spoke of how their programming and regulation could potentially cost individuals their rights to life by leaving the choice of who lives or dies up to the discretion of others. But, at least in that circumstance, it is still more a problem of the future, even if it is soon. That is not the case with biometrics. Courts have already ruled that your biometric data is not protected by the 5th Amendment which protects you from self-incrimination.

I find this startling to say the least. You can lock your phone with a password and have it protected against a court, but if it unlocks with a scan of your face or finger, they can force you to unlock it, and any incriminating evidence they find will be entered into evidence against you. I do not understand why this was ruled to be acceptable given that a suspect does not have to reveal a written password. Biometric access to devices was created to simplify and speed up the login process while also keeping devices more secure. Until a few days ago, I did not have any negative thoughts about using biometric data except for the chance it could be stolen. Now I wonder if there is a point at which the world should stop innovating because it will negate all of an individual’s rights. A perfectly efficient world is not worth our freedoms.

You might think: unless you are breaking the law, why should this concern you? I am guessing you have heard of the Salem Witch Trials? In this mass hysteria event, over 200 people were accused of witchcraft, over 140 were arrested, 20 were executed, and 0 of them were witches. This sad time in history is a prime example of how pointing a finger at someone can cost them, even when they are innocent. Our legal system presumes that we are innocent until proven guilty because of how powerfully blame can sway people’s judgement. While people are less likely to be executed for crimes they did not commit in recent times, inaccurate blame still costs people their livelihoods, their careers, and their families. My concern is that if we permit courts to require individuals to unlock their personal belongings, we are fueling the blame game fire and can put people in the line of unreasonable informational search and seizure. Personal devices are an extension of who we are as individuals; they contain our memories, conversations, and secrets both good and bad. If it were up to me, in most cases the owner of a device would not have to unlock it and incriminate themselves or expose their privacy simply for evidence acquisition, even if it can be unlocked with biometric data.

Nevertheless, I still think there is a place for biometric data to be used in the prosecution of individuals, even if it enables the investigators to unlock a person’s phone for incriminating evidence. For instance, if a fingerprint is collected from a crime scene, that is probable cause, and the police could then potentially use the fingerprint they collected to unlock the suspect’s phone themselves. I think this is different because the suspect was not forced to expose themselves but did so by placing themselves at the scene of a crime. Similarly, if a person has already been found guilty of the crime and has been sentenced, their rights change from those of an innocent person. This, however, is a slippery slope because if someone is found to be a terrorist, searching their phone seems like an obvious aid to public safety. Alternatively, if the person was charged with tax evasion, it may be unreasonable search and seizure to look for any dirt on that individual that investigators can find. Everything should be within reason, but the lines are extremely difficult to define.

At least in the case of an individual being forced to unlock their device with their biometrics, I think the line is much clearer. The collection of biometric data in this specific case was intended to be used in lieu of a password. In that sense, using it to incriminate the person could be seen as an unethical and corrupt use of the biometric data. There are many ways to determine guilt, but taking away a person’s 4th Amendment right should never be one of them.

Ethics of Autonomous Vehicles--Your Choice Matters (2/12/2021)

Autonomous Vehicles (AVs) are not, nor will they become, perfect. Even Tesla, the company at the forefront of self-driving cars, has had multiple fatal crashes, one even resulting in the death of a pedestrian crossing the street. Accidents will surely happen, and when they do, the programmed response of the vehicle will matter morally, economically, and legally. Many may think that the primary goal of an algorithm that decides who should live and who should die should be to maximize the number of lives saved. While there is no right or wrong answer to how these vehicles should be programmed, in this blog, I will contest the popular idea of saving the greatest number of lives by looking through the lens of the Trolley Problem. Consider the Trolley Problem:

You see a Trolley without brakes approaching a split in the tracks. On its current course, 5 people will be killed by the Trolley. If you pull the lever and switch the path of the Trolley, one unsuspecting person will be killed by the Trolley. Should you pull the lever?

This thought experiment does not have a morally correct answer and is highly debated. Nevertheless, its value in understanding problems of ethics is immeasurable. When this question was first presented to me, I did not understand why someone might choose inaction (to not pull the lever) and leave the five people to die when they could reduce the number of deaths to one. My perspective changed when I was presented with the same problem in a different context:

You are a doctor, and you have five patients who will surely die if they do not receive an organ donation. You also have one patient who is perfectly healthy. Should you sacrifice the healthy patient so that they can donate the organs to allow the five other individuals to live?

Unsurprisingly, most people would say no -- the doctor should not steal the life of the healthy person to save the others from dying. This decision has the same structure as the Trolley Problem, although we have an easier time agreeing with each other as to which outcome is moral in the new circumstance. I believe the difference in understanding is that we perceive the patient’s organs as belonging to that individual. In that sense, saving the other five patients would require stealing the organs from the healthy person. However, the same concept of theft still applies to the Trolley Problem, except that you would be stealing the life of the single person to save the lives of the other five. In either circumstance, if you save the five people, it is at the cost of another person’s life. Who are we to decide when it is or is not okay to steal what belongs to someone for the benefit of others? Whether or not they would sacrifice themselves to save the many should be their decision, and they are the only one with the moral grounds to decide. The same should apply to self-driving cars, especially if they gain large-scale traction in the industry. Neither the number of people nor the personal traits of the people should be used by a programmer to decide who they will program to die. It is not and should not be in the programmer’s jurisdiction.

So how would AVs be programmed? Who should program them? Proponents of saving the most lives would suggest that the government regulate the programming of AVs so that this goal could be met and enforced. But think about it. By handing this power to the government, you are effectively signing over consent for the government to determine the value of your life for you. As I mentioned before, the only person who should be able to decide to save another at the expense of a life is the person who chooses to sacrifice themselves for the other person. While it is surely noble for an individual to sacrifice themselves for another, they do not have the moral obligation to do so. I, for one, would choose to live with the freedom to choose my own fate rather than live in a utopia constructed from the government regulating who has the right to live. That does not mean that I would not sacrifice my life for the lives of others, but I would want it to be my choice to do so.

The Ethics of Internet Censorship (2/2/2021)

What did the founding fathers intend when they passed the First Amendment, which guarantees the freedom of expression? They intended it to protect citizens from the government with respect to their beliefs and opinions. But in 1791, when the Bill of Rights was adopted, the internet did not exist and therefore was not the primary avenue of communication that it is today. It also means that this amendment does not specify the protection of expression online.

The internet is a prolific means for individuals to express themselves through social media platforms. But this convenience also gives rise to controversial topics, such as whether it is ethical for the information collected about you to be sold to other people. However, this is only one half of the major problem posed by major companies such as Google, Facebook, and Twitter. They have the power to expose the data they have collected on individuals, but they also have the power to inhibit the expression of those same people. Every year, I see more and more instances of censorship in which major social media companies block posts or add warnings to content posted by users of their software. It has increasingly become an avenue for these companies to push certain ideologies and suppress contrary views. There is no doubt that companies such as Twitter have used their influence to control what their users can see on their platforms. An article by Townhall dives more specifically into how big tech companies use their power to suppress conservatives. These acts consist of things that are seemingly minor, such as adding “fact-check” warnings to conservative posts but not liberal ones. It makes sense that a company that wants liberals elected to office would have the incentive to use propaganda to influence elections. But is this biased censorship ethical?

It is true that by using the large tech companies’ services, users are agreeing to their terms and conditions. So, you may think the company should certainly have the power to control what information is appropriate for its platform. However, given the size of these companies and the influence they have on the public eye and on the ability of individuals to exercise their First Amendment rights, it becomes much more complicated whether it is ethical for companies to exercise control over expression within their platforms, especially since many would consider these companies to have monopoly power.

Let’s consider this situation under the Social Contract Theory. Assuming that the right to freedom of expression is an implicit agreement within society, any action that impedes this right would be unethical, as those preventing others’ freedom of speech are not bearing the societal burden of having to accept that others have the right to disagree. The problem with this situation, however, is that the Social Contract Theory expects the government to enforce the protection of freedom of expression, which it has not done to a full enough extent on the internet. Society would better coexist if individuals listened to each other and accepted disagreement and discussion on controversial topics. The existence of biased censorship breaks down the ability of individuals to have these necessary discussions. Therefore, because of the social unrest caused by the censorship, I believe this theory would deem the censorship unethical.

Looking at the situation from the lens of Kantianism reaches a similar conclusion. In Kantianism, people must be treated as an end, not a means to an end, and rules must be universal. In the circumstances of censorship of specifically right-leaning individuals, both expectations of Kantianism are violated. Censoring only, or almost exclusively, conservatives means that the rule of maintaining truthful and non-misleading information is violated by bias and thus the rule is not universal. Furthermore, censoring one side of the political spectrum is also a means in which a company can impose their viewpoints on the public. Thus, they are using these censored individuals as a means to an end (or to promote their political agenda). Both of these reasons support that although the platform belongs to the company, their censorship of conservatives is unethical.

Of course, content that is in the form of bullying or is otherwise dangerous should be taken down and that is a different problem to address entirely. Nevertheless, in the circumstance that opinion or perspective based information is censored, we must ensure that it is censored uniformly across all ideologies or not at all. Otherwise, the censorship is unethical.


When Uncomfortable... GROW (12/04/2020)

Now that my senior fall semester is coming to a close, I would like to share some of the growth I’ve had as an individual and how my experiences learning about and working with the OSS community have cultivated this growth.

At the start of this semester, my only real-world application of development was at my summer internships. The more I learned, the more it became abundantly clear the magnitude of tools, technologies, lingo, and strategies in the development world that I did not understand. It was daunting to say the least. Our coursework to this point was predominantly theoretical and when we were writing code, we always had the tool sets needed to figure out how to complete the assignments. However, in my experience working, this was never the case. I constantly had to rely on Google to help me learn and problem solve unfamiliar tasks. Feeling lost in a new position, though uncomfortable, is common and necessary for learning and growth.

This past semester, in my senior seminar capstone course, was the first time in the computer science major that I felt this level of unsureness and discomfort. Learning that we would work with a FOSS project overwhelmed me with the prospect of having to figure out the development environment and integrative tools required for that type of collaboration. What became increasingly apparent however, was that as we spent more time with the new materials, I became more confident in my ability to understand new concepts and handle unknown complications.

This course was structured around readings and discussions, hands-on activities, and work with our respective FOSS projects. The readings were profoundly helpful for me to get my bearings in the developer community. Previously we had learned to code and how to solve problems, but the readings in this class began to open our eyes to how you would actually use these skill sets to contribute to a team and what technological components you would have to interact with to create a final product. The hands-on activities were our first chance to apply the skill sets we read about, and while I was not able to remember how to do many of the activities at the time, I have found that they were the first insight into methods that I have used continuously with my FOSS project. Simply having more practice using the terminal provided valuable insight into interacting with files, directories, ports, and technologies in a way that I could not have understood prior to engaging and troubleshooting with them in the terminal.

I spent this semester working with OpenMRS, an HFOSS project that provides a medical records system to those in need, especially in the developing world. The OpenMRS community is extremely large and the development kit it requires is very version specific and nitpicky. At first this was very frustrating to me because I have always struggled with configuring development environments and handling unfamiliar software. But as I continued to troubleshoot the developer installation, I slowly began to understand how to debug error messages on my own and how to identify possible reasons they occur. Every error message is unique and unfamiliar, but having had to troubleshoot dozens this semester, I no longer find them as daunting as I once did because I know that I have overcome similar errors in the past. Instead of immediately asking someone for help, I am now much more willing and confident to troubleshoot independently first and often can even find my own solutions. I also have a better idea of when it is necessary to outsource support.

The OpenMRS community has been pivotal to my growth with overcoming uncertainty. While the project itself is hard to understand in its full capacity, the community members have been extremely supportive. They have helped me to understand the interacting software components and the structure of the project itself. It is now clear to me that while unfamiliarity is necessary for individual growth, it is the community that is supporting you that plays a large role in determining whether you can overcome that discomfort.

A few months before the start of this semester, I made the decision to pursue a career as a doctor. I was unsure if development was something that I would try to take with me through that experience. While it can help me to perform research in the medical community, I was concerned that I did not know enough about systems to be effective. This course has given me the insight and background to more confidently learn new technologies and strategies that will enable me to effectively use development in the medical field to help individuals in the way that I dream to as a physician. Working with OpenMRS in particular has propelled my excitement to have the background of a developer because technology is what enables us to help millions of individuals worldwide to close the gaps of access and support. As I have spent more time with the OpenMRS community I have become increasingly engaged with their mission statement and efforts. While I wasn’t originally planning to continue development after I graduate, I am now increasingly excited to continue to work with this community because it supports an effort to help others, a goal that I believe in, and I want to continue paying the community back for the help and lessons they provided me when I was overcoming my discomfort around technological uncertainty.


Meritocracy: not a problem with concept, but a problem of implementation (11/22/20)

“Meritocracy” is the belief that those with merit float to the top - that they should be given more opportunities and be paid higher.”

Without a doubt, the term “meritocracy” is now being met with great disdain from many, including individuals in the tech industry and those contributing to open source software (OSS). Opponents of meritocracy, like Ashe Dryden, argue that the use of meritocracy enables those deemed as having more merit to bully and harass other members of the community and in turn creates a hostile environment that prevents others from rising to the top. But I would argue that this negative environment could be counteracted simply through a code of conduct and set of expectations, as discussed by Sebastian Benthall and Nick Doty. Furthermore, they point out that the beauty of open source is that you have the freedom to choose your environment, the ability to fork a project, and the ability to choose your level of involvement, all of which provide natural checks on the establishment of toxic culture. These are all components that are relatively unique to the open source community compared to the tech industry. Likely, meritocracy in the commercial industry is another conversation entirely.

There are certainly plenty more reasons people find meritocracy to be bad, though most can be counteracted with implementation design. Another argument that I feel is worth discussing is the reality that companies often make unfair hiring decisions because they base them on an individual’s contributions to OSS, which by nature will hurt minorities and people from disadvantaged backgrounds, as they would likely not have as much time and money to spend making contributions as their counterparts. And I wholeheartedly agree that this would undermine a person’s actual merit by creating a lesser perceived merit from the standpoint of a company, which is wrong. But I also don’t see that as an argument that meritocracy is a problem as a concept. Rather, it is a systemic problem with the evaluation tactics at firms that require OSS contributions as a means of merit evaluation. Those companies should match that type of qualification criteria with other testing criteria to determine merit beyond volunteer work to level the playing field for disadvantaged people.

I would absolutely agree that those at the top are often not the individuals who truly have the most merit, so I understand the resistance to meritocracy in that respect. But, in an idealistic system that could necessarily enable those with the most merit to rise to the top, I am confident that those individuals should have more power and compensation than those who can’t reach that level of proficiency and influence. I don’t think that many would disagree with that aspect of meritocracy; the disagreement comes with how a system defines and promotes “merit”. In many ways I think that OSS communities can overcome the idea of false merit because they are a congregation of individuals who should not have preexisting notions to cloud judgement of each other. More time and contributions should and do enable status elevation through the open source community and in a way that the bureaucratic tech industry cannot rival. Regardless of a person’s background and time allotment, those who can contribute more should have more power to make decisions within the community because they play a more vital role in the project’s outcome. I acknowledge that this structure can result in a less diverse, more privileged control structure, however in the community itself seen as a vacuum this design still makes functional sense. The merit within an open source community is generally much more fair as it is related to contributions. The perceived merit that people take from this experience, or lack thereof, and how it influences their ability to be hired is the true problem. That problem should be solved by efforts of companies to see past situational merit and hire those with the most potential.

From my perspective, the open source community is not responsible on its own to debunk representation problems beyond encouraging inclusion and support. Dryden unpacks some ways that the industry could overcome some of these problems of evaluation and I think her strategies would be a great start to overcoming the misunderstanding of the value of meritocracy.

There are definitely kinks to the meritocracy implementation in open source as well. ZDNet unpacks a problem with merit bias and describes a study that found that women on GitHub had 78.7% of pull requests accepted compared to men at 74.6%, but only if the women’s gender was made ambiguous. When they expressed that they were women in their profiles, their acceptance dropped to 58% compared to identifiable men at 61%. The study clearly suggests that there are biases in the OSS community that prevent pure, successful meritocracy from occurring. But, I think it’s important moving forward to address these sorts of imbalances as discriminatory culture, rather than trying to argue that merit shouldn’t matter.

With all that being said, this conversation is a very slippery slope, and it is hard to convey its depth and importance in a simple blog post. I can definitely see and appreciate both sides of the argument. A lot of what results from meritocracy is unfair, but I still believe the general concept is well intentioned and can be improved with conscious effort. Having the time and money to volunteer is not something that is common for everyone, and those who don’t shouldn’t find themselves unable to elevate through the ranks and assume more influential roles in their careers because of it. Their escalation should be based on their capability to develop the skill set to succeed in those roles. But there is a crucial difference between the success of meritocracy in volunteer work and paid work because paid work attempts to level the playing field through compensating effort.

Merit is extremely hard to identify when we factor in the disadvantages individuals have to overcome. It may in fact be too hard to overcome systemic problems with meritocracy, but I think it is important that we try!


Fact or Myth: Linux is the most secure OS (11/20/20)

There are three operating system giants: Windows, Mac, and Linux. The noteworthy difference between them is that Windows and Mac are proprietary whereas Linux is open source. Another significant difference is the security risk associated with each of these three OSes. Windows finds itself at the top of the list with regard to security risk, mainly because it is the oldest system and most widely used. According to Computerworld, 88% of users run Windows on their computers. Because of the dramatic weight of the industry that relies on Windows, it makes a naturally more appealing target for hackers as they can access most users with relatively little effort. Mac users tend to be safer against cyber attacks than Windows users, though it does not necessarily have as much to do with the system strength. Rather, the difference is more likely a result of it being newer software, and thus, less understood by hackers, and since it is used by a smaller community base, less desirable to hackers. I do not think that Mac is any more robust than Windows. I think it is just a matter of time before hackers decide to dedicate the time to overcome the newness of the Mac operating system. The final operating system, Linux, is renowned to be the most secure of the three giants.

But why is Linux the most secure? As I’m sure you can infer based on the other operating systems’ security trouble, it is possible that Linux is targeted less because it is used less in enterprises and therefore, may not be as lucrative a target for hackers. However, there are other implementation-based reasons for why Linux is believed to be the most secure OS. A primary reason for the great security of Linux is also the reason that open source is so innovative and profound in the first place: there are A LOT of contributors. Because Linux is open source, it has the power of the global developer community behind it. The result is that every aspect of the system is up for peer review and bugs and back doors are more likely to be found and fixed than they would be in a proprietary system. Linux also offers a larger variety of environments to users. This means that within Linux itself, subdivisions make it increasingly difficult for hackers to target a sizable community that would be worth their efforts. Conversely, systems like Windows are fairly uniform throughout their customer base and thus, a hack for one computer would be a hack for all of them. This is the same concept as the role genetic diversity plays in the survival of a species. If every member of a species were susceptible to the same disease, the species could go extinct. But generally, certain members of a species are susceptible while another portion are immune. And in this way, a virus may affect some individuals, but the species would survive. Resiliency through diversity is a profound way to make a hacker’s efforts significantly less fruitful and for that reason would eliminate Linux as a target for most hackers. Further security measures of Linux proposed by Kaspersky include the password and user ID requirement and the relatively few automatic access rights users have.

Looking to the future, it is natural to wonder, is the security offered by Linux only temporary? And in response to that, I would say that it probably is. Eventually, the same threats posed to Windows and Mac will begin to target Linux. Since Linux is gaining popularity, it will be increasingly in the limelight as a target for software attacks. As time progresses, it will be very interesting to see how each of the three main operating systems adjusts to the security threats posed to it. Only when all of the systems are as targeted as Windows will we truly know which system is the most secure.


Agile, Scrum, and How to Overcome Their Disadvantages in Practice

Agile is a buzzword. Companies describe themselves as agile as a form of self-promotion to convince clients and other businesses that they have streamlined processes. But the reality of the matter is that it is extremely difficult for a company to truly adopt an Agile workflow on a project, and therefore many of those who try to be agile fail. On top of many companies being unable to effectively implement an agile mindset, those who can must also overcome a few disadvantages of Agile. I was fortunate enough to spend my last two summer internships at a company that truly knew what it meant to be agile. And because of that experience, I gained thorough exposure to Agile, and more specifically to Scrum. I watched teams complete projects via the Scrum framework, and I worked through projects myself in that same way. I gained firsthand exposure to how a successful Agile implementation is able to overcome its disadvantages as proposed by Lucidchart. In this blog I will describe how I’ve seen the three key disadvantages of Agile overcome.

The first main disadvantage of Agile is that its lack of rigid processes can result in teams getting sidetracked. Agile is known for its iterative flexibility which often means that insufficient documentation and ambiguity of the final product is common. Without a clear vision it can be difficult for a team to maintain their focus on what the end goal is and to stay within the scope of the project. At my internship, each project was accompanied by a roadmap that provided an overview of what should be accomplished over each iteration (or in this case, sprint) of the project. While projects differ, there are often similar tasks across projects that provide familiarity to effectively make good estimates for the duration and difficulty level of each project portion. The roadmap can change slightly throughout the course of the project to allow the flexibility to changing requirements that Agile prioritizes. Hence it permits project evolution, but also provides the structure necessary to maintain team vision for the long run goal. By maintaining this general vision, it is also easier for team members to assess the scope of the project during conversations with clients as requirements change. For instance, if the client wanted a new feature to be added to a project, it is important that that feature is something that fits the overall timeline and value associated with the project plan.

The second proposed disadvantage is that long-term projects don’t fare as well with incremental delivery because it often means that there are fewer safety mechanisms for less experienced contributors and because it can be difficult to predict necessary time, cost, and resources early on. My company would use a Trello board, a software implementation of a scrum board, to organize tasks in each sprint. They would prioritize tasks, and project members usually, but not always, claimed tasks that they had more experience with. Before these completed tasks were accepted, however, they were put under review and the project manager would confirm that they met the acceptance criteria. As an intern whose first task was coding in an unfamiliar language, in an unfamiliar software environment, and with an unfamiliar team structure, I definitely witnessed that projects can be successful with inexperienced members when they are given the proper support and structure. Trello also enabled the team to maintain a robust backlog to organize future tasks and ensure that the most important tasks were prioritized.

The third disadvantage, which is associated with the difficulty in maintaining strong collaboration, was also counteracted at my company by using Trello. Strong collaboration often trickles off eventually because reducing time face-to-face saves team members time for work. Nevertheless, since successful Agile requires a lot of interaction and retrospective analysis, teams cannot neglect the collaborative aspects. Expanding on the function of Trello described previously, Trello is organized in a way such that group members can visualize tasks within a sprint and track the completion status of such tasks. In my experience, tasks could be placed in columns such as “ready for work”, “in progress”, “ready for review”, “complete”, and “backlog”. Having this organizational structure enables steady progress tracking and review. It effectively allows real time interaction and planning with significantly less overhead than other substitutes.
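To make the column layout concrete, here is a minimal in-memory sketch of such a board in Python. It is not Trello's API; the `Board` class, its methods, and the task names are all hypothetical and only illustrate how column-based tracking gives everyone the same view of a sprint.

```python
# Column names follow the ones I saw at my internship; the rest is hypothetical.
COLUMNS = ["backlog", "ready for work", "in progress", "ready for review", "complete"]


class Board:
    """A toy scrum board: each column holds an ordered list of task names."""

    def __init__(self):
        self.columns = {name: [] for name in COLUMNS}

    def add(self, task, column="backlog"):
        """Place a new task in a column (the backlog by default)."""
        self.columns[column].append(task)

    def move(self, task, dest):
        """Move a task to `dest` and return the column it came from."""
        if dest not in self.columns:
            raise KeyError(f"unknown column: {dest}")
        for name, tasks in self.columns.items():
            if task in tasks:
                tasks.remove(task)
                self.columns[dest].append(task)
                return name
        raise ValueError(f"task not on board: {task}")

    def progress(self):
        """Count tasks per column, the at-a-glance view a team reviews."""
        return {name: len(tasks) for name, tasks in self.columns.items()}


board = Board()
board.add("write login form")
board.add("set up CI")
board.move("write login form", "in progress")
print(board.progress())
```

Even in this toy form, anyone on the team can call `progress()` and see where the sprint stands without scheduling a meeting, which is the low-overhead benefit described above.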

Ultimately, the shortcomings of agile can be overcome with proper planning and tool use. However, it remains true that difficulties can still arise, especially in the valuation of project work, a difficulty I have witnessed. But, often these difficulties will resolve with time and experience as team members become more familiar with project tasks and for that reason are better able to predict a future project’s life cycle. I would also like to point out that in many circumstances, uncertainty is mitigated in firms because firms undergo projects specifically that fall within their range of expertise. In doing so, it is fair to assume that their predictions are based on previous experiences with similar projects. It is rare that a company would not perform many projects that accomplish that same general subset of functions.

Furthermore, even with COVID-19 shutdowns, my company was able to maintain agile work through video conversations and continued use of the tools I have mentioned previously. Simple things, such as holding a check-in of a few minutes each morning, go a long way toward fostering agile attitudes. And while it is certainly harder to interact across a screen, there are associated benefits that counteract that hardship, such as greater ease in meeting with clients via video call. The key takeaway is that agile isn’t and won’t be perfect. But, since agile is not a framework but rather a set of principles to operate under, it can be maintained and adapted during times of hardship, and tools can effectively minimize the existing shortcomings of Agile.


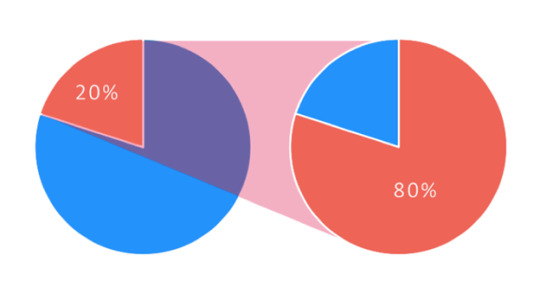

Understanding and Leveraging the Pareto Principle (11/9)

The Pareto principle and I are well acquainted. I often tell people that I would prefer writing two essays rather than finishing one because the process of finalizing a product to earn a 100% often takes significantly longer than designing and producing the paper in the first place. The 80-20 rule presented by the Pareto principle states that about 80% of results come from 20% of the causes. In my essay example, 20% of the effort in writing a paper amounts to about 80% of the grade. The prominence of this concept in many daily activities made it easy for me to accept what it would mean in the development world. For a developer interested in testing, this concept is applied in the sense that 20% of code may cause 80% of the errors associated with the project. In this scenario, the Pareto principle may even work in the tester’s favor.

But, merely understanding that most errors originate from a small subset of code is not enough to help a developer or software tester on its own. The truth is that even identifying the most effectual 20% of code can be difficult. This begs the question: how should software testing teams engage this understanding to successfully identify the most productive use of their time and effort? I will discuss a critical strategy I have come across to help developers apply the pareto principle in practice. In my opinion, the most convincing way to identify the subset of faulty code causing the most errors is to find an effective way to group errors in accordance with their cause, rather than by how they affected the end product. This makes sense if you think about it. Consider the following life-based scenario.

Imagine you are an English speaker and have hired a tour guide to teach you about the history of Pompeii. Unfortunately for you, you are unable to understand your tour guide because they do not speak your language (the first problem you need to resolve). Secondly, they do not actually know the history of Pompeii because they were given the job by a friend without merit. If, in this situation, you noticed these two reasons for your bad touring experience and tried to address them individually, you may find that it would take a lot of time to teach the tour guide both your language and the history of Pompeii. However, if you can instead identify that this specific tour guide was not the best suited for your task, you would be able to focus your energies on the one task of finding a more suitable tour guide, and in doing so, you will have identified the source of the problems.

The same general concept holds true for development. Perhaps there is an isolated portion of the code base that causes a variety of defects. If you were able to identify that these defects originated from the same system type, such as cross-platform integration software, focusing on the integration may solve a wide conglomerate of the problems. It often takes multiple collaborators to be able to identify such error origination groups, but it is ultimately worth the resources to identify because of the benefits that ensue. Other ways of identifying the problematic code could include analysis of user feedback or code review (rather than simple bug discovery).
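As a rough illustration of grouping defects by cause, the Python sketch below tallies a defect log by root cause and picks out the few causes that account for most of the defects. The function name `vital_few`, the 80% threshold, and the sample data are all hypothetical; a real team would pull the causes from its own issue tracker.

```python
from collections import Counter


def vital_few(defect_causes, threshold=0.8):
    """Return (causes, share): the most frequent causes, taken in order,
    until together they cover at least `threshold` of all defects."""
    counts = Counter(defect_causes)
    total = sum(counts.values())
    if total == 0:
        return [], 0.0
    selected, covered = [], 0
    for cause, n in counts.most_common():
        if covered / total >= threshold:
            break
        selected.append(cause)
        covered += n
    return selected, covered / total


# Hypothetical defect log: one entry per defect, labeled with its root cause.
defects = (
    ["integration-layer"] * 16
    + ["date-parsing"] * 2
    + ["ui-layout"] * 1
    + ["config-loader"] * 1
)

causes, share = vital_few(defects)
print(causes, f"{share:.0%}")
```

In this made-up log, one of the four causes is behind 16 of the 20 defects, so that is where the testing effort should go first. Grouping the same defects by how they looked to the end user would have hidden that concentration.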

The Pareto principle applies beyond simply illustrating the need to find trouble areas of code. It also teaches developers efficient practices. It supports the concept that they cannot perform exhaustive testing (nor should they want to because it is inefficient), and it encourages them to prioritize project pieces with the most critical functionality and to focus on common problematic code early in development. The Pareto principle applies in every aspect of our lives, but by understanding how to leverage it, it can become a tool rather than a burden.


Overcoming the Hidden Shortcomings of Design Patterns (11/7)

I was researching interesting uses of design patterns and all I could find were sources discussing how amazing design patterns are. They can effectively simplify common problems and in doing so, can help developers to conceptualize a problem and more efficiently overcome it by adopting a design process that the developer community has already begun (such as the patterns in the famous Gang of Four book). Eventually, I came across a blog post from Cathy Dutton that broke down some of the problems associated with design patterns. In this post I will discuss some of the points she makes and how they should be considered by developers throughout a project.

She continuously draws insights into the shortcomings of design patterns as they pertain to the desires of the users. While design patterns effectively reduce development hesitancy and push projects to production quickly, they often result in developers overlooking client needs including how they can interact with the final product and what use cases they prioritize. There are many qualities of a project that are user specific and this is a concept that a developer needs to keep in mind, especially knowing that design patterns are generalized and focused on an ‘average’, made-up client. Moreover, there are circumstances in which the technological tool set described by a possible design pattern is not the best solution for their problem. The example she provided on this concept was: if people are angry about the duration of a phone application, rather than streamlining the application with a pattern, they would be better off learning and accommodating the fact that the frustration was caused by lack of information to the applicant up front.

The concerns expressed on design patterns by Cathy Dutton make a lot of sense and I believe are largely solved when a development team follows agile methodologies throughout the course of a project. Since the iterative style of agile development keeps the clients consistently at the forefront of project direction and decisions, their input is the valuable aspect that assures a project ultimately solves the problem in the way they want it to. The developers can start with the design patterns they think fit the best in a certain project scenario, but ultimately, they should update and modify their efforts to keep them aligned with user preferences for each iteration of implementing the design of the project.


Waterfall Vs Incremental Design (10/30)

The two major methodologies for completing a project are incremental development and the waterfall method. The waterfall method is more simplistic than iterative models because it only has a single cycle in which a developer receives the requirements, designs the project, and implements, tests, and deploys the code. The simplicity of the waterfall model is also its downfall as projects are less likely to successfully meet the necessary criteria since the requirements are never reevaluated. Incremental design is a way in which developers can follow the same general process as the waterfall method, but in a way that will impose a greater success rate and will keep the client (or evolving objective) engaged throughout the entire development process. An incremental design model breaks a project into smaller tasks to be designed, developed, tested, and implemented independent of the later tasks. In this way, the trajectory of a project is subject to change as requirements evolve. The image below provides a simple understanding of the incremental development process.

My first exposure to the difference between waterfall and incremental design models was during my first summer interning at Enterprise Knowledge (EK). At EK, projects are completed using an agile framework, which is simply an implementation of the incremental design model. I learned that using the incremental agile framework allowed our project members to ensure that we were most effectively allocating our efforts to features that produced value to the client. Every two weeks represented a sprint (or increment) in which we underwent a review process, planned tasks for the next sprint, and refactored our work as necessary to maintain its alignment with the deliverable goal. Naturally, the breakdown of a project in this way enables strong project organization, effective allocation of effort, and even can encourage greater trust between the client and the project team. The agile framework also aids group collaboration by fostering functional team dynamics through dividing roles (such as scrum master or development team member) amongst project members. With the waterfall model, developers operate on assumptions that may not align with the actual requirements of a project, and thus the model does not have as high a success rate as an incremental approach. Furthermore, working in increments allows the group to reduce risk, remain flexible, restore previous versions easily, and reduce the scope of the project for each developer in a given period of time.

In general, projects should be completed in increments for the multitude of reasons described previously. While many people’s natural inclination is to develop in a way similar to the waterfall model, this generally only works for extremely small projects and is too rigid to enable consistently good quality deliverables.


Tivoization and GPLv3 (10/16)

The fundamental purpose of issuing a license on open source software is to give the creator a say in how their project is used going forward. The GNU General Public License (GPL) is an example of a license that enables others to use, modify, and distribute the project’s code, and it is one of the most popular licenses for FOSS projects. However, before GPLv3, the GPL concerned itself only with the software portion of a project. GPLv3 adds a provision that aims to restrict tivoization (which I will discuss shortly). Because of the increased reach of this new license, it has drawn a lot of backlash from developers such as Dave Neuer, who claim that the software license oversteps its bounds and limits the developers’ longstanding choice of hardware, among other freedoms. But to understand what he is referring to in his message, we must first understand what the tivoization restriction is and why it was implemented in the GPLv3 license.

Tivoization is when an electronic product, or hardware, is configured by the vendor to require a specific electronic key, which can limit its operation to specific software versions. As discussed in An Introduction to Tivoization, manufacturers have real incentives to use the tivoization tactic, and they even advertise it as improving competition and the functionality of technology. However, the reality is that tivoization is very distasteful to most software users and engineers, as it actually limits competition and conflicts with the fundamental principles of the open source software that manufacturers pair it with. For example, the control it gives vendors over which software users can run prevents users from engaging with the many versions of open source software they would otherwise have access to.
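To make the key check described above more concrete, here is a minimal sketch of the idea in Python. It is only an illustration under stated assumptions, not how any particular vendor does it: real devices typically verify an asymmetric digital signature against a public key stored in hardware, whereas this sketch substitutes a shared-secret HMAC from the standard library to stay self-contained. The names `VENDOR_KEY`, `sign_firmware`, and `device_will_boot` are hypothetical.

```python
import hashlib
import hmac

# Hypothetical vendor secret. Real devices usually check an asymmetric
# signature against a public key in hardware; HMAC is a stand-in here.
VENDOR_KEY = b"vendor-secret-key"


def sign_firmware(image: bytes, key: bytes = VENDOR_KEY) -> bytes:
    """Vendor side: produce the tag shipped alongside an approved image."""
    return hmac.new(key, image, hashlib.sha256).digest()


def device_will_boot(image: bytes, tag: bytes, key: bytes = VENDOR_KEY) -> bool:
    """Device side: refuse to run any image whose tag does not verify."""
    expected = hmac.new(key, image, hashlib.sha256).digest()
    return hmac.compare_digest(expected, tag)


official = b"firmware v1.0 (vendor build)"
official_tag = sign_firmware(official)

# A user rebuilds the open source code with a change of their own.
modified = b"firmware v1.0 (user-modified build)"

print(device_will_boot(official, official_tag))  # True
print(device_will_boot(modified, official_tag))  # False
```

The user is free to read and modify the source, but without the vendor's key they cannot produce a tag the device accepts, so the modified build never runs. That gap between having the code and being able to run it is what the anti-tivoization language in GPLv3 targets.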

The GPLv3 license attempts to confront the growing usage of tivoization through its anti-tivoization clause. This clause seems to be the very reason that developers like Neuer prefer the older GPLv2 license. I would like to test Neuer’s sentiments, however, by posing a different perspective, one similar to that taken in the other article. Yes, the GPLv3 license extends beyond the typical software restrictions of the GPLv2 license. However, it does so without preventing users from using tivoization-based products; rather, it just adds a slight obstacle. I generally agree that the choice of how to interact with technologies should be left to each individual. However, as an economics student, I also appreciate that sometimes an incentive must be provided to individuals to prevent a market failure or undesired outcome. In a sense, neglecting to respond to the growth of tivoization could result in more restrictions on future products, similar to the restrictions that Neuer wishes to avoid. I do not have a strong understanding of the intricacies of each license. On the front of the tivoization clause, however, I can understand why opinions of the GPLv3 license are so divided among the developer community. Some see it as a loss of their freedoms, whereas others see it as a means to retain the freedoms they already have as software developers.
