#Big Data Profits


Text

The Power of Big Data Profits Lead Gen For Insurance & Real Estate Agents

Big Data Profits: Hyper-targeted Leads for Insurance & Real Estate Agents. The data power of Fortune 500 companies without breaking the bank! You know how Fortune 500 companies have access to massive amounts of data and use it to generate leads at will? Well, guess what? You can now tap into that same data power without breaking the bank! Imagine having access to the same level of data insights…

#Big Data#Big Data Profits#Birthday Marketing#Bonus Credits#business growth#churn reduction#Client Acquisition#Client Engagement#Comprehensive Training#Cross-Selling#Customer Acquisition#Customer Insights#Customer Retention#Data Analytics#Data Insights#Data Marketing Wizard#Data-Driven Marketing#data-driven strategies#Demographic Data#Digital Marketing#direct mail#email marketing#Exclusive Databases#Facebook Custom Audience#final expense insurance#Geo-Targeted Data#geo-targeted marketing#Geo-Targeting#Hyper-Targeted Leads#Increasing Revenue

0 notes

Text

I won’t pretend to be an expert on finances but looking at blue box reports they’re beyond lucky to have pitigi and yuto especially 😭

#like maybe I’m interpreting the data wrong but they’re the only group active in japan so ?? the big chunk of profits from japan have to be#mostly them right#??#*mostly if not only#jpn is 37% korea 49% ?

1 note


Text

So, Discord has added a feature that lets other people "enhance" or "edit" your images with different AI apps. It looks like this:

Currently, you can't opt out of this at all. But here are a few things you can do as a protest.

FOR SERVERS YOU ARE AN ADMIN IN

Go to Roles -> @/everyone roles -> Scroll all the way down to External Apps, and disable it. This won't delete the option, but it will make people receive a private message instead when they use it, protecting your users:

You should also make it a bannable offense to edit other users' images with AI. Here's how I worded it in my server, feel free to copypaste:

Do not modify other people's images with AI under ANY circumstances, such as with the Discord "enhancement" features, among others. This is a bannable offense.

COMPLAIN TO DISCORD

There are a few ways to go about this. First, you can go to https://support.discord.com/hc/en-us/requests/new , select Help and Support -> Feedback/New Feature Request, and write your message, as seen in the screenshot below.

For the message, here's some points you can bring up:

Concerns about harassment (such as people using this feature to bully others)

Concerns about privacy (concerns about how External Apps may break privacy or handle the data in the images, and how this may break some laws, such as GDPR)

Concerns about how this may impact minors (these features could be used with pictures of irl minors shared in servers, for deeply nefarious purposes)

BE VERY CLEAR about "I will refuse to buy Nitro and will cancel my subscription if this feature remains as it is", since they only care about fucking money

Word them as you'd like, add onto them as you need. They sometimes filter messages that are copypasted templates, so finding ways to word them on your own is helpful.

ADDING: You WILL NEED to reply to the mail you receive afterwards for the message to get sent to an actual human! Otherwise it won't reach anyone

UNSUBSCRIBE FROM NITRO

This is what they care about the most. Unsubscribe from Nitro. Tell them why you unsubscribed on the way out. DO NOT GIVE THEM MONEY. They're a company. They take actions for profit. If these actions do not get them profit, they will need to backtrack. Mass unsubscribing from WotC's D&D Beyond forced them to back down on the OGL, so this works.

LEAVE A ONE-STAR REVIEW ON THE APP

This impacts their visibility on the App Store. Write why you are leaving the one-star review too.

_

Regardless of your stance on AI, I think we can agree that having no way for users to opt their pictures out of this is deeply concerning, especially when Discord is often used to share selfies. It's also a good time to remember internet privacy and safety: maybe don't post your photos in big open public servers if you don't want to risk people doing edits or modifications of them with AI (or any other way). Once it's posted, it's out of your control.

Anyways, please reblog for visibility. This is a deeply concerning topic!

19K notes


Text

AI images have such an uncanny feeling to them that I can't explain. There's nothing truly off with most of the images, they can look pretty fine and normal at times, but there's still that feeling of something being off. It's like a whole-body feeling to be alert because something's wrong, regardless of the context of the images. I had never experienced that uncanny valley feeling before AI.

#art#I feel tempted to study them to see if that feeling can be recreated by making art naturally#God knows I can find more than enough on Tumblr#i dont want to promote ai art i just want to see if i can figure out why its always off#also this isnt a foolproof way of determining whether somethings AI or not#ive just accepted that i will be fooled by AI sometimes no matter how hard i check#im so tired of AI#i looked at computers recently and it has AI built in#how is that not a privacy violation??????#yeah just monitor everything i ever do on my computer that sounds safe and secure!#i miss ai at the beginning when it just showed fucked up images of a computer misunderstanding the world#i dont think id ever feel comfortable using it until data centers figure out how to use less water#there are ways to make data centers more efficient#putting them in the middle of a hot desert is not the way to do that#you'd think companies wouldn't be so fucking dumb but they don't think about anything but profit#i think companies will always try to fuck over everyone if they can make more money#but i dont think ai can ever replace human art because i think a big part of art is connection#connection to the world and reality and to other people#a story from a person writing about their experience will always mean more and be more impactful than a computer generating words together#Godspeed if you're still reading i keep trying to look at art and seeing ai and its made me fall in love with art thats made by people#i especially love art by someone whos just starting to explore a new way to create#theres a freedom in the beginning of trying something new

1 note


Quote

Let’s be clear: the UK’s mooted copyright scheme would effectively enable companies to nick our data – every post we make, every book we write, every song we create – with impunity. It would require us to sign up to every individual service and tell them that no, we don’t want them to chew up our data and spit out a poor composite image of us. Potentially hundreds of them, from big tech companies to small research labs. Lest we forget, OpenAI – a company now valued at more than $150bn – is planning to forswear its founding non-profit principles to become a for-profit company. It has more than enough money in its coffers to pay for training data, rather than rely on the beneficence of the general public. Companies like that can certainly afford to put their hands in their own pockets, rather than ours. So hands off.

Here’s the deal: AI giants get to grab all your data unless you say they can’t. Fancy that? No, neither do I | Chris Stokel-Walker | The Guardian

#AI#ethics#monopolisation#commodification#government#opt-out#copyright#unethical#openai#for profit#data#data scraping#big data#llms

0 notes

Text

If anyone wants to know why every tech company in the world right now is clamoring for AI like drowned rats scrabbling to board a ship, I decided to make a post to explain what's happening.

(Disclaimer to start: I'm a software engineer who's been employed full time since 2018. I am not a historian nor an overconfident YouTube essayist, so this post is my working knowledge of what I see around me and the logical bridges between pieces.)

Okay anyway. The explanation starts further back than what's going on now. I'm gonna start with the year 2000. The Dot Com Bubble just spectacularly burst. The model of "we get the users first, we learn how to profit off them later" went out in a no-money-having bang (remember this, it will be relevant later). A lot of money was lost. A lot of people ended up out of a job. A lot of startup companies went under. Investors left with a sour taste in their mouth and, in general, investment in the internet stayed pretty cool for that decade. This was, in my opinion, very good for the internet as it was an era not suffocating under the grip of mega-corporation oligarchs and was, instead, filled with Club Penguin and I Can Has Cheezburger websites.

Then around the 2010-2012 years, a few things happened. Interest rates got low, and then lower. Facebook got huge. The iPhone took off. And suddenly there was a huge new potential market of internet users and phone-havers, and the cheap money was available to start backing new tech startup companies trying to hop on this opportunity. Companies like Uber, Netflix, and Amazon either started in this time, or hit their ramp-up in these years by shifting focus to the internet and apps.

Now, every start-up tech company dreaming of being the next big thing has one thing in common: they need to start off by getting themselves massively in debt. Because before you can turn a profit you need to first spend money on employees and spend money on equipment and spend money on data centers and spend money on advertising and spend money on scale and and and

But also, everyone wants to be on the ship for The Next Big Thing that takes off to the moon.

So there is a mutual interest between new tech companies, and venture capitalists who are willing to invest $$$ into said new tech companies. Because if the venture capitalists can identify a prize pig and get in early, that money could come back to them 100-fold or 1,000-fold. In fact it hardly matters if they invest in 10 or 20 total bust projects along the way to find that unicorn.

But also, becoming profitable takes time. And that might mean being in debt for a long long time before that rocket ship takes off to make everyone onboard a gazillionaire.

But luckily, for tech startup bros and venture capitalists, being in debt in the 2010s was cheap, and it only got cheaper between 2010 and 2020. If people could secure loans for ~3% or 4% annual interest, well then a $100,000 loan at 3% only really costs $3,000 of interest a year to keep afloat. And if inflation is higher than that or at least similar, you're still beating the system.

So from 2010 through early 2022, times were good for tech companies. Startups could take off with massive growth, showing massive potential for something, and venture capitalists would throw infinite money at them in the hopes of pegging just one winner who will take off. And supporting the struggling investments or the long-haulers remained pretty cheap to keep funding.

You hear constantly about "Such and such app has 10-bazillion users gained over the last 10 years and has never once been profitable", yet the thing keeps chugging along because the investors backing it aren't stressed about the immediate future, and are still banking on that "eventually" when it learns how to really monetize its users and turn that profit.

The pandemic in 2020 took a magnifying-glass-in-the-sun effect to this, as EVERYTHING was forcibly turned online which pumped a ton of money and workers into tech investment. Simultaneously, money got really REALLY cheap, bottoming out with historic lows for interest rates.

Then the tide changed with the massive inflation that struck in late 2021. Because this all-gas no-brakes state of things was also contributing to off-the-rails inflation (along with your standard-fare greedflation and price gouging, given the extremely convenient excuses of pandemic hardships and supply chain issues). The Federal Reserve whipped out interest rate hikes to try to curb this huge inflation, which is like a fire extinguisher dousing and suffocating your really-cool, actively-on-fire party where everyone else is burning but you're in the pool. And then they did this more, and then more. And the financial climate followed suit. And suddenly money was not cheap anymore, and new loans became expensive, because loans that used to compound at 2% a year are now compounding at 7 or 8% which, in the language of compounding, is a HUGE difference. A $100,000 loan at a 2% interest rate, if not repaid a single cent in 10 years, accrues to $121,899. A $100,000 loan at an 8% interest rate, if not repaid a single cent in 10 years, more than doubles to $215,892.
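For anyone who wants to check the compounding math above, here is a minimal sketch. It assumes interest compounds once a year and that nothing is repaid during the term; the function name `compound_balance` is made up for illustration and is not from any library.

```python
def compound_balance(principal: float, annual_rate: float, years: int) -> float:
    """Balance owed after `years` of annual compounding with no repayments.

    Assumption: interest is added once per year at a fixed rate.
    """
    return principal * (1 + annual_rate) ** years


# The two scenarios from the post: same loan, same term, different rates.
cheap_money = compound_balance(100_000, 0.02, 10)
expensive_money = compound_balance(100_000, 0.08, 10)

print(f"2% for 10 years: ${cheap_money:,.2f}")
print(f"8% for 10 years: ${expensive_money:,.2f}")
print(f"Extra owed at 8%: ${expensive_money - cheap_money:,.2f}")
```

Dropping the cents from both balances gives the $121,899 and $215,892 figures quoted above. Real loans often compound monthly or daily, which would push both numbers slightly higher.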

Now it is scary and risky to throw money at "could eventually be profitable" tech companies. Now investors are watching companies burn through their current funding and, when the companies come back asking for more, investors are tightening their coin purses instead. The bill is coming due. The free money is drying up and companies are under compounding pressure to produce a profit for their waiting investors who are now done waiting.

You get enshittification. You get quality going down and price going up. You get "now that you're a captive audience here, we're forcing ads or we're forcing subscriptions on you." Don't get me wrong, the plan was ALWAYS to monetize the users. It's just that it's come earlier than expected, with way more feet-to-the-fire than these companies were expecting. ESPECIALLY with Wall Street as the other factor in funding (public) companies, where Wall Street exhibits roughly the same temperament as a baby screaming crying upset that it's soiled its own diaper (maybe that's too mean a comparison to babies), and now companies are being put through the wringer for anything LESS than infinite growth that Wall Street demands of them.

Internal to the tech industry, you get MASSIVE wide-spread layoffs. You get an industry where it used to be easy to land multiple job offers shriveling up and leaving recent graduates in a desperately awful situation where no company is hiring and the market is flooded with laid-off workers trying to get back on their feet.

Because those coin-purse-clutching investors DO love virtue-signaling efforts from companies that say "See! We're not being frivolous with your money! We only spend on the essentials." And this is true even for MASSIVE, PROFITABLE companies, because those companies' value is based on the Rich Person Feeling Graph (their stock) rather than the literal profit money. A company making a genuine gazillion dollars a year still tears through layoffs and freezes hiring and removes the free batteries from the printer room (totally not speaking from experience, surely) because the investors LOVE when you cut costs and take away employee perks. The "beer on tap, ping pong table in the common area" era of tech is drying up. And we're still unionless.

Never mind that last part.

And then in early 2023, AI (more specifically, ChatGPT, which is OpenAI's Large Language Model creation) tears its way into the tech scene with a meteor's amount of momentum. Here's Microsoft's prize pig, which it invested heavily in and is gallivanting around the pig-show with, to the desperate jealousy and rapture of every other tech company and investor wishing it had that pig. And for the first time since the interest rate hikes, investors have dollar signs in their eyes, both venture capital and Wall Street alike. They're willing to restart the hose of money (even with the new risk) because this feels big enough for them to take the risk.

Now all these companies, who were in varying stages of sweating as their bill came due, or wringing their hands as their stock prices tanked, see a single glorious gold-plated rocket up out of here, the likes of which haven't been seen since the free money days. It's their ticket to buy time, and buy investors, and say "see THIS is what will wring money forth, finally, we promise, just let us show you."

To be clear, AI is NOT profitable yet. It's a money-sink. Perhaps a money-black-hole. But everyone in the space is so wowed by it that there is a wide-spread and powerful conviction that it will become profitable and earn its keep. (Let's be real, half of that profit "potential" is the promise of automating away jobs of pesky employees who peskily cost money.) It's a tech-space industrial revolution that will automate away skilled jobs, and getting in on the ground floor is the absolute best thing you can do to get your pie slice's worth.

It's the thing that will win investors back. It's the thing that will get the investment money coming in again (or, get it second-hand if the company can be the PROVIDER of something needed for AI, which other companies with venture backing will pay handsomely for). It's the thing companies are terrified of missing out on, lest it leave them utterly irrelevant in a future where not having AI-integration is like not having a mobile phone app for your company or not having a website.

So I guess to reiterate on my earlier point:

Drowned rats. Swimming to the one ship in sight.

36K notes


Text

Getting to Know FRC Part 3: Full Service Catalogue

Renee Williams, President, Freight Revenue Consultants, LLC (FRC) At Freight Revenue Consultants (FRC), we’re all about making your trucking operations run smoother and more profitably. With our deep knowledge of the transportation industry and advanced data analytics skills, we tackle everything. What sets us apart is our extensive experience and strong network of contacts and vendors, which…


#advanced data analytics#best practices#big data insights#business#business excellence#cash flow management#compliance challenges#continuous improvement#cost-saving strategies#fleet management#free consultation#Freight#freight industry#Freight Revenue Consultants#industry compliance#logistics#market trends#operational efficiency#operational optimization#profitability#small carriers#supply chain dynamics#technology solutions#Transportation#transportation industry#transportation regulations#transportation services#Trucking#trucking efficiency#trucking industry

0 notes

Text

Is NVIDIA (NVDA) the World’s Most Lucrative Company?

NVIDIA (NVDA) could be the world’s most lucrative company. Its revenues grew by an astonishing 205.51% in the quarter ending on 31 October 2023. NVIDIA’s quarterly revenues grew from $5.931 billion on 31 October 2022 to $18.120 billion on 31 October 2023. Similarly, NVIDIA’s quarterly gross profit grew from $3.177 billion on 31 October 2022 to $13.40 billion on 31 October 2023. Plus, the…
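The growth percentage quoted above can be reproduced from the two quarterly revenue figures in the post. This is a rough sketch only: the helper name `percent_growth` is made up for illustration, and the inputs are the figures as stated in the post, in billions of dollars.

```python
def percent_growth(old: float, new: float) -> float:
    """Percentage change from `old` to `new`."""
    return (new - old) / old * 100


# Quarterly figures as quoted in the post, in billions of USD.
revenue_growth = percent_growth(5.931, 18.120)
gross_profit_growth = percent_growth(3.177, 13.40)

print(f"Revenue growth: {revenue_growth:.2f}%")
print(f"Gross profit growth: {gross_profit_growth:.2f}%")
```

On these inputs, revenue growth rounds to the 205.51% stated in the post, and gross profit grew faster than revenue over the same period.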


#How much Money is Nvidia (NVDA) making?#How NVIDIA profits from Big Data#Is NVIDIA (NVDA) the World’s Most Lucrative Company?

0 notes

Text

Bossware is unfair (in the legal sense, too)

You can get into a lot of trouble by assuming that rich people know what they're doing. For example, you might assume that ad-tech works – bypassing people's critical faculties, reaching inside their minds and brainwashing them with Big Data insights, because if that's not what's happening, then why would rich people pour billions into those ads?

https://pluralistic.net/2020/12/06/surveillance-tulip-bulbs/#adtech-bubble

You might assume that private equity looters make their investors rich, because otherwise, why would rich people hand over trillions for them to play with?

https://thenextrecession.wordpress.com/2024/11/19/private-equity-vampire-capital/

The truth is, rich people are suckers like the rest of us. If anything, succeeding once or twice makes you an even bigger mark, with a sense of your own infallibility that inflates to fill the bubble your yes-men seal you inside of.

Rich people fall for scams just like you and me. Anyone can be a mark. I was:

https://pluralistic.net/2024/02/05/cyber-dunning-kruger/#swiss-cheese-security

But though rich people can fall for scams the same way you and I do, the way those scams play out is very different when the marks are wealthy. As Keynes had it, "The market can remain irrational longer than you can remain solvent." When the marks are rich (or worse, super-rich), they can be played for much longer before they go bust, creating the appearance of solidity.

Noted Keynesian John Kenneth Galbraith had his own thoughts on this. Galbraith coined the term "bezzle" to describe "the magic interval when a confidence trickster knows he has the money he has appropriated but the victim does not yet understand that he has lost it." In that magic interval, everyone feels better off: the mark thinks he's up, and the con artist knows he's up.

Rich marks have looong bezzles. Empirically incorrect ideas grounded in the most outrageous superstition and junk science can take over whole sections of your life, simply because a rich person – or rich people – are convinced that they're good for you.

Take "scientific management." In the early 20th century, the con artist Frederick Taylor convinced rich industrialists that he could increase their workers' productivity through a kind of caliper-and-stopwatch driven choreography:

https://pluralistic.net/2022/08/21/great-taylors-ghost/#solidarity-or-bust

Taylor and his army of labcoated sadists perched at the elbows of factory workers (whom Taylor referred to as "stupid," "mentally sluggish," and as "an ox") and scripted their motions to a fare-thee-well, transforming their work into a kind of kabuki of obedience. They weren't more efficient, but they looked smart, like obedient robots, and this made their bosses happy. The bosses shelled out fortunes for Taylor's services, even though the workers who followed his prescriptions were less efficient and generated fewer profits. Bosses were so dazzled by the spectacle of a factory floor of crisply moving people interfacing with crisply working machines that they failed to understand that they were losing money on the whole business.

To the extent they noticed that their revenues were declining after implementing Taylorism, they assumed that this was because they needed more scientific management. Taylor had a sweet con: the worse his advice performed, the more reasons there were to pay him for more advice.

Taylorism is a perfect con to run on the wealthy and powerful. It feeds into their prejudice and mistrust of their workers, and into their misplaced confidence in their own ability to understand their workers' jobs better than their workers do. There's always a long dollar to be made playing the "scientific management" con.

Today, there's an app for that. "Bossware" is a class of technology that monitors and disciplines workers, and it was supercharged by the pandemic and the rise of work-from-home. Combine bossware with work-from-home and your boss gets to control your life even when in your own place – "work from home" becomes "live at work":

https://pluralistic.net/2021/02/24/gwb-rumsfeld-monsters/#bossware

Gig workers are at the white-hot center of bossware. Gig work promises "be your own boss," but bossware puts a Taylorist caliper wielder into your phone, monitoring and disciplining you as you drive your own car around delivering parcels or picking up passengers.

In automation terms, a worker hitched to an app this way is a "reverse centaur." Automation theorists call a human augmented by a machine a "centaur" – a human head supported by a machine's tireless and strong body. A "reverse centaur" is a machine augmented by a human – like the Amazon delivery driver whose app goads them to make inhuman delivery quotas while punishing them for looking in the "wrong" direction or even singing along with the radio:

https://pluralistic.net/2024/08/02/despotism-on-demand/#virtual-whips

Bossware pre-dates the current AI bubble, but AI mania has supercharged it. AI pumpers insist that AI can do things it positively cannot do – rolling out an "autonomous robot" that turns out to be a guy in a robot suit, say – and rich people are groomed to buy the services of "AI-powered" bossware:

https://pluralistic.net/2024/01/29/pay-no-attention/#to-the-little-man-behind-the-curtain

For an AI scammer like Elon Musk or Sam Altman, the fact that an AI can't do your job is irrelevant. From a business perspective, the only thing that matters is whether a salesperson can convince your boss that an AI can do your job – whether or not that's true:

https://pluralistic.net/2024/07/25/accountability-sinks/#work-harder-not-smarter

The fact that AI can't do your job, but that your boss can be convinced to fire you and replace you with the AI that can't do your job, is the central fact of the 21st century labor market. AI has created a world of "algorithmic management" where humans are demoted to reverse centaurs, monitored and bossed about by an app.

The techbro's overwhelming conceit is that nothing is a crime, so long as you do it with an app. Just as fintech is designed to be a bank that's exempt from banking regulations, the gig economy is meant to be a workplace that's exempt from labor law. But this wheeze is transparent, and easily pierced by enforcers, so long as those enforcers want to do their jobs. One such enforcer is Alvaro Bedoya, an FTC commissioner with a keen interest in antitrust's relationship to labor protection.

Bedoya understands that antitrust has a checkered history when it comes to labor. As he's written, the history of antitrust is a series of incidents in which Congress revised the law to make it clear that forming a union was not the same thing as forming a cartel, only to be ignored by boss-friendly judges:

https://pluralistic.net/2023/04/14/aiming-at-dollars/#not-men

Bedoya is no mere historian. He's an FTC Commissioner, one of the most powerful regulators in the world, and he's profoundly interested in using that power to help workers, especially gig workers, whose misery starts with systemic, wide-scale misclassification as contractors:

https://pluralistic.net/2024/02/02/upward-redistribution/

In a new speech to NYU's Wagner School of Public Service, Bedoya argues that the FTC's existing authority allows it to crack down on algorithmic management – that is, algorithmic management is illegal, even if you break the law with an app:

https://www.ftc.gov/system/files/ftc_gov/pdf/bedoya-remarks-unfairness-in-workplace-surveillance-and-automated-management.pdf

Bedoya starts with a delightful analogy to The Hawtch-Hawtch, a mythical town from a Dr Seuss poem. The Hawtch-Hawtch economy is based on beekeeping, and the Hawtchers develop an overwhelming obsession with their bee's laziness, and determine to wring more work (and more honey) out of him. So they appoint a "bee-watcher." But the bee doesn't produce any more honey, which leads the Hawtchers to suspect their bee-watcher might be sleeping on the job, so they hire a bee-watcher-watcher. When that doesn't work, they hire a bee-watcher-watcher-watcher, and so on and on.

For gig workers, it's bee-watchers all the way down. Call center workers are subjected to "AI" video monitoring, and "AI" voice monitoring that purports to measure their empathy. Another AI times their calls. Two more AIs analyze the "sentiment" of the calls and the success of workers in meeting arbitrary metrics. On average, a call-center worker is subjected to five forms of bossware, which stand at their shoulders, marking them down and brooking no debate.

For example, when an experienced call center operator fielded a call from a customer with a flooded house who wanted to know why no one from her boss's repair plan system had come out to address the flooding, the operator was punished by the AI for failing to try to sell the customer a repair plan. There was no way for the operator to protest that the customer had a repair plan already, and had called to complain about it.

Workers report being sickened by this kind of surveillance, literally – stressed to the point of nausea and insomnia. Ironically, one of the most pervasive sources of automation-driven sickness is the "AI wellness" apps that bosses are sold by AI hucksters:

https://pluralistic.net/2024/03/15/wellness-taylorism/#sick-of-spying

The FTC has broad authority to block "unfair trade practices," and Bedoya builds the case that this is an unfair trade practice. Proving an unfair trade practice is a three-part test: a practice is unfair if it causes "substantial injury," can't be "reasonably avoided," and isn't outweighed by a "countervailing benefit." In his speech, Bedoya makes the case that algorithmic management satisfies all three steps and is thus illegal.

On the question of "substantial injury," Bedoya describes the workday of warehouse workers working for ecommerce sites. He describes one worker who is monitored by an AI that requires him to pick and drop an object off a moving belt every 10 seconds, for ten hours per day. The worker's performance is tracked by a leaderboard, and supervisors punish and scold workers who don't make quota, and the algorithm auto-fires if you fail to meet it.

Under those conditions, it was only a matter of time until the worker experienced injuries to two of his discs and was permanently disabled, with the company being found 100% responsible for this injury. OSHA found a "direct connection" between the algorithm and the injury. No wonder warehouses sport vending machines that sell painkillers rather than sodas. It's clear that algorithmic management leads to "substantial injury."

What about "reasonably avoidable?" Can workers avoid the harms of algorithmic management? Bedoya describes the experience of NYC rideshare drivers who attended a round-table with him. The drivers describe logging tens of thousands of successful rides for the apps they work for, on the promise of "being their own boss." But then the apps start randomly suspending them, telling them they aren't eligible to book a ride for hours at a time, sending them across town to serve an underserved area and still suspending them. Drivers who stop for coffee or a pee are locked out of the apps for hours as punishment, and so drive 12-hour shifts without a single break, in hopes of pleasing the inscrutable, high-handed app.

All this, as drivers' pay is falling and their credit card debts are mounting. No one will explain to drivers how their pay is determined, though the legal scholar Veena Dubal's work on "algorithmic wage discrimination" reveals that rideshare apps temporarily increase the pay of drivers who refuse rides, only to lower it again once they're back behind the wheel:

https://pluralistic.net/2023/04/12/algorithmic-wage-discrimination/#fishers-of-men

This is like the pit boss who gives a losing gambler some freebies to lure them back to the table, over and over, until they're broke. No wonder they call this a "casino mechanic." There's only two major rideshare apps, and they both use the same high-handed tactics. For Bedoya, this satisfies the second test for an "unfair practice" – it can't be reasonably avoided. If you drive rideshare, you're trapped by the harmful conduct.

The final prong of the "unfair practice" test is whether the conduct has "countervailing value" that makes up for this harm.

To address this, Bedoya goes back to the call center, where operators' performance is assessed by "Speech Emotion Recognition" algorithms, a pseudoscientific hoax that purports to be able to determine your emotions from your voice. These SERs don't work – for example, they might interpret a customer's laughter as anger. But they fail differently for different kinds of workers: workers with accents – from the American south, or the Philippines – attract more disapprobation from the AI. Half of all call center workers are monitored by SERs, and a quarter of workers have SERs scoring them "constantly."

Bossware AIs also produce transcripts of these workers' calls, but workers with accents find them "riddled with errors." These are consequential errors, since their bosses assess their performance based on the transcripts, and yet another AI produces automated work scores based on them.

In other words, algorithmic management is a procession of bee-watchers, bee-watcher-watchers, and bee-watcher-watcher-watchers, stretching to infinity. It's junk science. It's not producing better call center workers. It's producing arbitrary punishments, often against the best workers in the call center.

There is no "countervailing benefit" to offset the unavoidable substantial injury of life under algorithmic management. In other words, algorithmic management fails all three prongs of the "unfair practice" test, and it's illegal.

What should we do about it? Bedoya builds the case for the FTC acting on workers' behalf under its "unfair practice" authority, but he also points out that the lack of worker privacy is at the root of this hellscape of algorithmic management.

He's right. The last major update Congress made to US privacy law was in 1988, when they banned video-store clerks from telling the newspapers which VHS cassettes you rented. The US is long overdue for a new privacy regime, and workers under algorithmic management are part of a broad coalition that's closer than ever to making that happen:

https://pluralistic.net/2023/12/06/privacy-first/#but-not-just-privacy

Workers should have the right to know which of their data is being collected, who it's being shared with, and how it's being used. We all should have that right. That's what the actors' strike was partly motivated by: actors who were being ordered to wear mocap suits to produce data that could be used to produce a digital double of them, "training their replacement," but the replacement was a deepfake.

With a Trump administration on the horizon, the future of the FTC is in doubt. But the coalition for a new privacy law includes many of Trumpland's most powerful blocs – like Jan 6 rioters whose location was swept up by Google and handed over to the FBI. A strong privacy law would protect their Fourth Amendment rights – but also the rights of BLM protesters who experienced this far more often, and with far worse consequences, than the insurrectionists.

The "we do it with an app, so it's not illegal" ruse is wearing thinner by the day. When you have a boss for an app, your real boss gets an accountability sink, a convenient scapegoat that can be blamed for your misery.

The fact that this makes you worse at your job, that it loses your boss money, is no guarantee that you will be spared. Rich people make great marks, and they can remain irrational longer than you can remain solvent. Markets won't solve this one – but worker power can.

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#alvaro bedoya#ftc#workers#algorithmic management#veena dubal#bossware#taylorism#neotaylorism#snake oil#dr seuss#ai#sentiment analysis#digital phrenology#speech emotion recognition#shitty technology adoption curve

2K notes

Text

from the bottom of my heart fuck you google literally all of my work is on fucking google docs :///

#ppl are saying that google doesnt really use your data unless you allow them to so leaving your stuff on there is a matter of trust and well#im not that dumb to trust a big ass company who only cares about profit#fuck you fuck you fuck you i have to learn new shit now#pain suffering and agony#midnightfangz.txt

1 note

Note

I read your post about open enrollment for the ACA and was hoping you might expand on why you believe it would take years to dismantle. I've been terrified that with a Republican house/senate, Trump could just snap his fingers and make it go away within months of taking office. I'd love some reassurance that that's not possible.

Hiya, sure I can share some thoughts on the matter! First, it's very important to understand the ACA is a huuuuuuuuuuuuge system with subject matter experts in dozens of places throughout the process. I'm one of those SMEs, but I am at the end of the process where the revenue is generated, so my insight is limited on the public facing pieces.

What this means is that I am professionally embedded in the ACA in a position that exists purely to show what conditions people are treated for and then generate that data into what's called a "risk score". There's about 6 pages I could write on it, but the takeaway is that the ACA is

1) intricately interwoven with the federal government

2) increasingly profitable, sustainable, and growing (it is STILL a for-profit system if you can believe it)

3) wholeheartedly invested in by the largest insurance companies in the country LARGELY due to the fact that they finally learned the rules of how to make the ACA a thriving center of business

4) since the big issuers are arm+leg invested in the ACA, there is a lot of resistance politically and on an industry level to leave it behind (think of the lobbyists, politicians, corporations that will fight tooth and nail to protect their profit + investment)

The process to calculate a risk score takes roughly 2 years. There is an audit for the concurrent year and then a vigorous retro audit for the prev year - - this is a rolling cycle every year. Medicare has a similar process. These are RVP + RADV audits if you would like the jargon.

Eliminating the ACA abruptly is as internally laughable as us finishing the RADV audit ahead of schedule. If Trump were to blow the ACA into smithereens on day 1, he would be drowning in issuer complaints and an economic health sector that is essentially bleeding out. You cut off the RVP early? We have half of next RADV stuck in the gears now. You cut off the RADV early? No issuer will get their "risk adjusted" payments for services rendered in the prev benefit year (to an extent, again very complex multi-process system).

The ACA is GREAT for the public and should be defended on that basis alone. However, the inner capitalistic nature of the ACA is a powerful armor that has conservatives + liberals defending it on a basis of capital + market growth. It's not sexy, but it makes too much money consistently for the system to be easily dismantled.

Or at least that's what I can tell you from the money center of the ACA. They don't bring us up in political conversation because we are confusing to seasoned professionals, boring to industry outsiders, and consistently we are anathema to the anti-ACA talking points.

I am already preparing for next year's RVP for this window of open enrollment. That RVP process will feed into the RADV in 2026. In 2025, we begin the RADV for 2024. If nothing else, the slow fucking gears of CMS will keep the ACA alive until we finish our work at the end of the process. I highly doubt that will be the only reason the ACA is safeguarded, but it is a powerful type of support to pair with people protecting the ACA for other reasons.

I work every day to show, defend, and educate on how many diagnoses are managed thru my company's ACA plans. My specialty is cancer and I see a lot of it. The revenue drive comes from the Medical Loss Ratio (MLR) rule stating only 20% MAX of profit may go to the issuer + the 80% at a minimum must go back to the customer or be invested in expanding benefits. The more people on the plan using it, the higher that 20% becomes for the issuer and the more impactful that 80% becomes for the next year of benefit growth. It is remarkably profitable once issuers stop seeking out "healthy populations". The ACA is a functional method for issuers to tap into a stable customer base (sick/chronic ill customers) that turns a profit, grows, and builds strong consumer bases in each state.
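A rough sketch of the 80/20 split described above. It assumes, as a simplification the post does not spell out, that the split applies to total premium revenue. The function name `mlr_split`, the member counts, and the $6,000 annual premium are all hypothetical and chosen only for illustration.

```python
def mlr_split(premium_revenue, care_share=0.80):
    """Split premium revenue into the minimum owed to care/benefits
    and the maximum the issuer may keep (assumed 80/20 split)."""
    care_minimum = premium_revenue * care_share
    issuer_maximum = premium_revenue - care_minimum
    return care_minimum, issuer_maximum


# Hypothetical enrollment levels and a hypothetical annual premium per member.
ANNUAL_PREMIUM = 6_000
for members in (10_000, 50_000):
    premiums = members * ANNUAL_PREMIUM
    care, issuer = mlr_split(premiums)
    print(f"{members:>6} members: care minimum ${care:,.0f}, "
          f"issuer maximum ${issuer:,.0f}")
```

The percentages stay fixed, so the dollar value of the issuer's share can only grow by growing the enrolled population, which is the incentive the post is pointing at.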

The industry can never walk away from this overnight - - this is the preferred investment for many big players. Changing the direction of those businesses will be a monumental effort that takes years (at least 2 with the audits). In the meantime, you still have benefits, you still have care, and you still have reason to sign up. Let us deal with the bureaucracy bullshit, go get your care and know you have benefits thru 2025 and we will be working to keep it that way for 2026 and forward. This is a wing of the federal government, it is not a jenga tower like Trump wishes.

1K notes

Text

if you’re ever looking for a great free email option (which also offers a relatively affordable paid option that includes a vpn, password manager, and a calendar as well as email aliases to give to pesky companies so they don’t get your personal email) then I can’t recommend protonmail enough

their free service offers all of what google does in gmail but they are a privacy-oriented nonprofit that will never sell any of your data AND they have a much more robust and functional spam filter than gmail does

protonmail also prevents images with trackers embedded from loading in your inbox to protect your privacy

i’ve never been happier with an email provider and used their free service for years before deciding to switch over to paying to use their calendar and vpn—a choice i haven’t regretted because i know they aren’t one of the many vpns that sells your data to advertisers and because their vpn works really well across every device I’ve installed it on (including my tv)

i am also contemplating buying a flip phone (i’m a bit of a privacy nut) but this has been a great stop-gap measure for me before i make the transition (if i ever do lol i’ve been saying i’ll revert to a flip phone for over a decade now. still hasn’t happened yet)

Just noticed gmail has ads. like spam emails weren’t enough now companies can pay gmail to put shit in your inbox based off browser cookies without you ever giving out your email. I am SO close to buying a flip phone.

#a lot of proton’s profits also go back into supporting other companies that protect internet privacy#and for other privacy geeks they are based in a non 14 eyes country so your data won’t be available to government big brother#just like it also will be protected from capitalist/ corporate big brother#really important to use a vpn and email that isn’t in a#five eyes#or nine eyes or fourteen eyes country#what good is privacy if it only protects you from google and not from the nanny state?#proton mail#proton#data privacy#internet privacy#resisting google#anti google

29 notes

Text

Ellipsus Digest: March 18

Each week (or so), we'll highlight the relevant (and sometimes rage-inducing) news adjacent to writing and freedom of expression.

This week: AI continues its hostile takeover of creative labor, Spain takes a stand against digital sludge, and the usual suspects in the U.S. are hard at work memory-holing reality in ways both dystopian and deeply unserious.

ChatGPT firm reveals AI model that is “good at creative writing” (The Guardian)

... Those quotes are working hard.

OpenAI (ChatGPT) announced a new AI model trained to emulate creative writing—at least, according to founder Sam Altman: “This is the first time i have been really struck by something written by AI.” But with growing concerns over unethically scraped training data and the continued dilution of human voices, writers are asking… why?

Spoiler: the result is yet another model that mimics the aesthetics of creativity while replacing the act of creation with something that exists primarily to generate profit for OpenAI and its (many) partners—at the expense of authors whose work has been chewed up, swallowed, and regurgitated into Silicon Valley slop.

Spain to impose massive fines for not labeling AI-generated content (Reuters)

But while big tech continues to accelerate AI’s encroachment on creative industries, Spain (in stark contrast to the U.S.) has drawn a line: In an attempt to curb misinformation and protect human labor, all AI-generated content must be labeled, or companies will face massive fines. As the internet is flooded with AI-written text and AI-generated art, the bill could be the first of many attempts to curb the unchecked spread of slop.

Besos, España 💋

These words are disappearing in the new Trump administration (NYT)

Project 2025 is moving right along—alongside dismantling policies and purging government employees, the stage is set for a systemic erasure of language (and reality). Reports show that officials plan to wipe government websites of references to LGBTQ+, BIPOC, women, and other communities—words like minority, gender, Black, racism, victim, sexuality, climate crisis, discrimination, and women have been flagged, alongside resources for marginalized groups and DEI initiatives, for removal.

It’s a concentrated effort at creating an infrastructure where discrimination becomes easier… because the words to fight it no longer officially exist. (Federally funded educational institutions, research grants, and historical archives will continue to be affected—a broader, more insidious continuation of book bans, but at the level of national record-keeping, reflective of reality.) Doubleplusungood, indeed.

Pete Hegseth’s banned images of “Enola Gay” plane in DEI crackdown (The Daily Beast)

Fox News pundit-turned-Secretary of Defense-slash-perpetual-drunk-uncle Pete Hegseth has a new target: banning educational materials featuring the Enola Gay, the plane that dropped the atomic bomb on Hiroshima. His reasoning: that its inclusion in DEI programs constitutes "woke revisionism." If a nuke isn’t safe from censorship, what is?

The data hoarders resisting Trump’s purge (The New Yorker)

Things are a little shit, sure. But even in the ungoodest of times, there are people unwilling to go down without a fight.

Archivists, librarians, and internet people are bracing for the widespread censorship of government records and content. With the Trump admin aiming to erase documentation of progressive policies and minority protections, a decentralized network is working to preserve at-risk information in a galvanized push against erasure, refusing to let silence win.

Let us know if you find something other writers should know about (or join our Discord and share it there!) Until next week, - The Ellipsus Team xo

619 notes

Text

"Any chance we're wrong about Covid?"

It's a valid question many people earnestly think about — even the very cautious.

'it becomes important to ask: "what does the data actually say?"'

Quoting a few good answers from a thread:

"Covid left me disabled in 2020. I know with 100% certainty that I am not wrong about Covid. I live with the proof every minute of every day for the rest of my life."

"The insurance companies and government statisticians care, or rather they have taken an objective interest." > https://fred.stlouisfed.org/series/LNU01074597 > https://insurancenewsnet.com/innarticle/insurance-industry-coalition-forms-non-profit-to-study-excess-mortality

"There are parallels between how governments are responding to COVID-19 and how they responded to tobacco back in the day. “it would be a mistake to assume governments would automatically protect people from a public health threat in the face of more immediate economic considerations…there would be resistance to change that might be costly until evidence to justify it was overwhelming.”" > https://johnsnowproject.org/insights/merchants-of-doubt/

"I suspect most of us entertain this thought from time to time, especially when it’s this absurdly difficult and lonely to maintain a Covid Conscious lifestyle. But it’s important to remember that history is littered with people making terrible choices en masse: with handling past pandemics, the holocaust, slavery, witch burnings, etc. Hell pretty much everyone used to smoke and putting lead in everything was A-ok. Just because a lot of people believe something doesn’t mean they’re right. So it becomes important to ask what does the data actually say? The research and the statistical data on this subject paint an ugly but fairly quantifiable picture by which we can gauge our understanding of the situation and our choices in response to it. Read the science. Look at the data on things like Long Covid. There are also many of us who have already had our health absolutely ravaged by this virus or lost loved ones to it etc., and everyone in that position has first hand evidence for how dangerous this virus is. It’s tremendously difficult to swim against the current like we are and self-doubt is natural in those conditions, but that’s when seeking out factual information on the subject is the best course of action."

"But what it all comes back to for me is - say we're wrong, and covid is a big nothingburger and lockdowns are the root of all evil. Ok, well, what I'm doing is acting on the best information available to me at this time to protect my family. I can't regret that. I will always be able to look my kids in the eye and say "I did my best with what I had."" ... "So if we're wrong - well, we wore masks, changed our social habits, reduced our consumerism and our contribution to the destruction of our planet, and reduced how often we got sick. None of those things are bad. If they're wrong, they and their kids are screwed. I'd rather err on the side of caution."

1K notes

Text

Americans, our democracy is under threat.

Do you reject fascism and oppose the Trump-Musk coup? Want to do something, but aren’t sure what you can do to make a difference? Keep reading for ways big and small you can fight back:

Attend a Protest New to protesting? Here’s a primer for first-time protesters and a schedule of upcoming national days of action:

Mar 1st and ongoing (Tesla Takedown) Website | Find an event

Mar 4th (50501: 50 protests, 50 states) Website | Find an event

Mar 7th (Stand up for Science) Website | Find an event

Mar 8th (Women’s March) Website | Find an event

Search for future protests at /r/ProtestFinderUSA and join the mailing list of grassroots organizations like Indivisible to be alerted to future actions.

Put Pressure on Congress Want your elected officials to stand up to Trump-Musk and push back against the unconstitutional executive orders, disastrous DOGE cuts, and illegal funding freezes? Already calling your Reps and Senators daily using 5calls.org?

Then it’s time to escalate to in-person action. Visit their websites, join their mailing lists, follow their socials, and call their offices to find out when the next local event will be and make your voice heard.

Applying pressure to congress works, and we are already seeing the results of constituent pushback. House Democrats recently voted as a unified bloc against the Trump-sponsored billionaire tax cuts, with members breaking maternity leave and leaving the hospital to fly back to Washington just to cast their votes. And on the Republican side, negative town hall blowback has the GOP running scared.

If your congressperson is hiding from you, stage a protest event and put their cowardice on blast. For more information on how to implement these tactics, see the Indivisible congressional recess toolkit.

If your congressperson is already fighting the good fight, then make sure to thank them and provide encouragement to continue opposing the budget cuts. Courage is contagious, and vocal public support will help spur congress to fight that much harder.

And finally, regardless of where you live, you can sign up to phone bank and reach out to voters in red congressional districts.

Get Out the Vote Did you know there are Special Elections as soon as April 1st that could flip control of the House back to Democrats? We simply cannot wait for the 2026 midterms, we must take action now! You can help get out the vote for Gay Valimont (FL-1), Joshua Weil (FL-6), and Blake Gendebien (NY-21).

In addition, the Muskrat is spending millions to buy the Wisconsin Supreme Court. Phone bank or write letters to keep a MAGA extremist off the high court and protect Wisconsin elections from future gerrymandering.

Fight the Broligarchy If you own TSLA stock, or *gasp* an actual Tesla vehicle, drop it like a scorching case of herpes, then join the picket line at your nearest Tesla showroom.

On socials, delete your Nazi-infested X and Meta (Facebook, IG, Threads) accounts and join the open source BlueSky. If you must remain on Meta, at minimum change your account settings so Fuckerberg can’t profit from your data.

Stop using Google search/Chrome and install privacy-focused alternatives like DuckDuckGo or Firefox. As a bonus, in the DuckDuckGo browser you can permanently hide AI garbage from your search results.

Show your monetary support for companies that have renewed their commitment to DEI programs (like Costco and Apple) and boycott those who have not (like Target and Amazon). Also look up how other corporations score on the democracy scale and adjust your spending accordingly.

And last, but not least, pledge to join the General Strike!

Stay Informed Corporate media has capitulated to Trump. From the cancellation of minority-hosted shows on MSNBC to the Bezos takeover of the Washington Post editorial pages, MSM cannot be relied upon to provide unbiased coverage of the Trump-Musk regime.

Support independent journalists and media and follow AltGov accounts on Bluesky to stay informed as to what is actually going on in Washington.

Get to Know your Community Authoritarians want you to feel helpless and isolated because they know we the people vastly outnumber them. Get to know your neighbors and join a group/team/club - anything that gets you interacting with your local community whether it is political or not.

Under Trump-Musk, federal programs like SNAP, Medicaid, Medicare, and even Social Security are in danger. We will need to increasingly rely on our own communities to have our backs. Visit mutualaidhub.org to locate resources and learn how to start your own network.

And finally, remember that resistance is a marathon, not a sprint. So be sure to stop doomscrolling and simply enjoy life as AOC reminds us:

568 notes

Text

That's Right: It's Another Hot Take About That Dead Healthcare CEO

The websites are abuzz with debate on the utilitarian calculus of whether some guy getting shot was a good thing. What are the odds that the assassination will scare the horrible greedy health insurance companies into changing their ways and fixing the system? Is it worth killing someone over? Will the fear of being blasted by some guy with stylishly-engraved bullets put the fat cats in line? Or will their greed win out over their fear, leaving the nightmarish system unchanged?

Well, what if that was totally irrelevant?

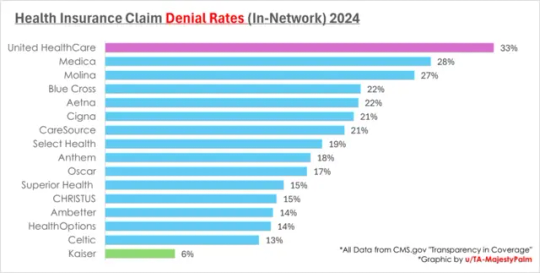

You may have seen a graph that looks like this:

I've seen a few of these going around. These are the rates at which various health insurance companies say "no, you don't get the money" when someone says "hey I need money for this medical thing". UHC, the one whose CEO got shot, is notably really bad in this respect. They've got algorithmic claims denials and all kinds of nasty things that people don't like. All that money they're saving on paying out on claims must be making them rich, right? Let's look at their own financial reports:

Whoa! Big numbers! Six percent looks like a small number, but multiply and they make like thirty billion dollars doing this! That's a lot, right?

Well hang on. They're an insurance company. We can roughly model their profit as the amount people pay them for insurance, minus the amount they have to pay out for claims. Let's look at 2023: simple subtraction, their expenses are $339.2 billion. We simplify other overhead and assume that's all claims. So... that represents those 67% of claims they don't reject. What happens if they approve all the claims?

Multiply: $506.3 billion. They don't have that kind of money. They have $371.6 billion in revenue. So okay- they have to deny some claims. That's pretty normal. But let's pretend they're extremely afraid of assassins now and want to be completely non-greedy: they're okay making zero profit. They make $32.4 billion in profit- how many otherwise-rejected claims can they now afford to approve?

...uh. Well, they can afford to pay out, at most, 73.4% of claims. Still a denial rate of 26.6%, higher than most of their competitors. Not a huge improvement. And in reality, they can't afford to make 0 profit- a company that's making 0 profit is a company investors pull out of immediately, leaving it to collapse, because they can make more money investing in the ones that aren't as afraid of assassins. They've got to at least hover around the same profit margin as their competitors. Which is...

That's average profit margins for the whole US healthcare industry. So, okay, if we match those other companies' profit margins and try to hover around 3-4%... uh. Wait. Hang on. Here's another graph with more recent data on UHC specifically:

Wait, they're still just making that little 3-4% profit margin, even with all these shady automated denials- so how are those other companies doing better on claims? They're obviously not less greedy. They must be making more money somehow, right?

(My guess, sight-unseen, would be that they charge more for their plans, or offer less comprehensive coverage, or use a network of less expensive providers, or other things that make the amount they have to pay out smaller and the amount they're taking in larger. I don't feel like doing a comprehensive consumer review of what every insurance provider's healthcare plans are, but there's always these tradeoffs to make. UHC seems to be offering the tradeoff of "better or cheaper care, on paper" for "but there's a higher risk of getting denied", which is one annoying tradeoff among many.)
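The back-of-the-envelope arithmetic on the 2023 figures can be sketched like this. It uses only the numbers quoted above ($371.6 billion revenue, $32.4 billion profit, 67% of claims paid) and the same simplification that every dollar of expense is a claim payout. The variable names are just labels for this sketch.

```python
# Figures quoted in the post for 2023, in billions of USD.
revenue = 371.6
profit = 32.4
approval_rate = 0.67  # share of claims not rejected, per the post

# Simplifying assumption from the post: all expenses are claim payouts.
claims_paid = revenue - profit

# If the paid claims are 67% of everything requested, this is the full bill.
all_claims = claims_paid / approval_rate

# At zero profit, every dollar of revenue goes to claims.
max_approval_rate = revenue / all_claims
min_denial_rate = 1 - max_approval_rate

print(f"claims paid:           ${claims_paid:.1f}B")
print(f"cost of approving all: ${all_claims:.1f}B")
print(f"max approval rate:     {max_approval_rate:.1%}")
print(f"min denial rate:       {min_denial_rate:.1%}")
```

Changing `profit` to a nonzero target (for example, a margin in line with competitors) only pushes the achievable approval rate back down toward the original 67%.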

Okay But That's Enough Graphs

"Yeah yeah yeah shut up about profit margins and coverage tradeoffs. Is it a good thing that the CEO got shot or not?"

Well, their profit margin at the time he was shot was 3.63%. A company can't survive making 0 or less, so whatever effect fear of assassination has on UHC's greediness, it is going to be no larger than 3.63%.

They may learn the lesson that having their denial rates too high will get them assassinated. Accordingly, they may decrease that metric- by charging higher premiums, kicking expensive doctors out of their network, or reducing their stated coverage. They will not (because they cannot, without ceasing to exist as a company) simply start approving more claims without squeezing their customers elsewhere. They legally cannot do that. No matter how afraid you make the CEOs, you cannot make them afraid to a degree larger than their profit margin.

Well What The Fuck, Then

Like, what, are we supposed to accept that things will literally never get better and that this horrorshow is the best we can hope for? That's some bullshit! If we can't scare the CEOs, who can we scare?

Man I dunno.

Like, for some reason healthcare is stupid expensive! People can't afford to pay for healthcare without insurance- it's like thousands of dollars for basic procedures! Why? Maybe...

Doctors inflate their prices 10x because they know insurance companies will use complicated legal tricks to only pay 10% of the asking price, and this is a constantly escalating price war that serves mainly to fuck over the uninsured

Drug manufacturers and health technology companies fight tooth and nail to maintain monopolies over treatment, so they can charge gazillions to make back the gazillions they had to spend on FDA approval trials

(Trials those same companies lobby to keep necessary because the more money you have to pay for FDA approval, the harder it is for competitors to enter the market since they don't already have the gazillions)

Doctors operate as a cartel and lobby to gatekeep access to medical training so that they can keep doctoring a prestigious and exclusive position, and keep their own salaries high enough to pay their medical school debt and make them rich afterwards- leading to a (profitable) shortage of medical professionals

There is no limit to how expensive things can get but how much people are physically capable of paying, because frequently the alternative to "pay a ridiculous amount for healthcare" is "die", and so healthcare is subject to near-infinitely inelastic demand

Also like a thousand other equally annoying and complicated perverse incentives and stupid situations

This is the human condition: Shit is annoying and complicated and difficult to fix, pretty much 100% of the time forever. A few bullets in some fucko's back isn't really going to make a dent.

(But like, sure, fuck that guy. He probably sucked, as do the hundred other identical suits in line to replace him. Just... don't expect this to help.)

587 notes
