#Database Scalability

Explore tagged Tumblr posts

Text

Trends to Follow for Staunch Scalability In Microservices Architecture

Scalability in microservices architecture isn’t just a trend—it’s a lifeline for modern software systems operating in unpredictable, high-demand environments. From streaming platforms handling millions of concurrent users to fintech apps responding to real-time transactions, scaling right means surviving and thriving.

As a software product engineering service provider, we’ve witnessed how startups and enterprises unlock growth with a scalable system architecture from day 1. It ensures performance under pressure, seamless deployment, and resilience against system-wide failures.

And as 2025 brings faster digital transformation, knowing how to scale smartly isn’t just beneficial—it’s vital.

At Acquaint Softtech, we don’t just write code—we craft scalable systems!

Our team of expert engineers, DevOps specialists, and architectural consultants work with you to build the kind of microservices infrastructure that adapts, survives, and accelerates growth.

Let Talk!

Why Scalability in Microservices Architecture Is a Game-Changer

Picture this: your product’s user base doubles overnight. Traffic spikes. Transactions shoot up. What happens?

If you're relying on a traditional monolithic architecture, the entire system is under stress. But with microservices, you’re only scaling what needs to be scaled!

That’s the real power of understanding database scalability in microservices architecture. You’re not just improving technical performance, you’re gaining business agility!

Here’s what that looks like for you in practice:

Targeted Scaling: If your search service is flooded with requests, scale that single microservice without touching the rest!

Fail-Safe Systems: A failure in your payment gateway won’t crash the whole platform—it’s isolated.

Faster Deployments: Teams can work on individual services independently and release updates without bottlenecks.

📊 Statistics to Know:

According to a 2024 Statista report, 87% of companies embracing microservices list scalability as the #1 reason for adoption—even ahead of speed or modularity. Clearly, modern tech teams know that growth means being ready.

Scalability in microservices architecture ensures you’re ready—not just for today’s demand but for tomorrow’s expansion.

But here’s the catch: achieving that kind of flexibility doesn’t happen by chance!

You need the right systems, tools, and practices in place to make scalability effortless. That’s where staying updated with current trends becomes your competitive edge!

Core Principles that Drive Scalability in Microservices Architecture

Understanding the core fundamentals helps in leveraging the best practices for scalable system architecture. So, before you jump into trends, it's essential to understand the principles that enable true scalability.

Without these foundations, even the most hyped system scalability tools and patterns won’t get you far in digital business!

1. Service Independence

It's essential for each microservice to operate in isolation. Decoupling allows you to scale, deploy, and debug individual services without impacting the whole system.

2. Elastic Infrastructure

Your system must incorporate efficient flexibility with demand. Auto-scaling and container orchestration (like Kubernetes) are vital to support traffic surges without overprovisioning.

3. Smart Data Handling

Scaling isn’t just compute—it’s efficient and smart data processing. Partitioning, replication, and eventual consistency ensure your data layer doesn’t become the bottleneck.

4. Observability First

Monitoring, logging, and tracing must be built in within every system to be highly scalable. Without visibility, scaling becomes reactive instead of strategic.

5. Built-in Resilience

Your services must fail gracefully, if its is destined to. Circuit breakers, retries, and redundancy aren’t extras—they’re essentials at scale.

These principles aren’t optional—they’re the baseline for every modern system architecture. Now you’re ready to explore the trends transforming how teams scale microservices in 2025!

Top Trends for Scalability in Microservices Architecture in 2025

As microservices continue to evolve, the focus on scalability has shifted from simply adding more instances to adopting intelligent, predictive, and autonomous scaling strategies. In 2025, the game is no longer about being cloud-native—it’s about scaling smartly!

Here are the trends that are redefining how you should approach scalability in microservices architecture.

🔹 1. Event-Driven Architecture—The New Default

Synchronous APIs once ruled microservices communication. Today, they’re a bottleneck. Event-driven systems using Kafka, NATS, or RabbitMQ are now essential for high-performance scaling.

With asynchronous communication:

Services don’t wait on each other, reducing latency.

You unlock horizontal scalability without database contention.

Failures become less contagious due to loose coupling.

By 2025, over 65% of cloud-native applications are expected to use event-driven approaches to handle extreme user loads efficiently. If you want to decouple scaling from system-wide dependencies, this is no longer optional—it’s foundational.

🔹 2. Service Mesh for Observability, Security, & Traffic Control

Managing service-to-service communication becomes complex during system scaling. That’s where service mesh solutions like Istio, Linkerd, and Consul step in.

They enable:

Fine-grained traffic control (A/B testing, canary releases)

Built-in security through mTLS

Zero-instrumentation observability

A service mesh is more than just a networking tool. It acts like the operating system of your microservices, ensuring visibility, governance, and security as you scale your system. According to CNCF's 2024 report, Istio adoption increased by 80% year-over-year among enterprises with 50+ microservices in production.

🔹 3. Kubernetes Goes Fully Autonomous with KEDA & VPA

Though Kubernetes is the gold standard for orchestrating containers, managing its scaling configurations manually can be a tedious job. That’s where KEDA (Kubernetes Event-Driven Autoscaling) and VPA (Vertical Pod Autoscaler) are stepping in.

These tools monitor event sources (queues, databases, API calls) and adjust your workloads in real time, ensuring that compute and memory resources always align with demand. The concept of the best software for automated scalability management say that automation isn't just helpful—it’s becoming essential for lean DevOps teams.

🔹 4. Edge Computing Starts to Influence Microservices Design

As latency-sensitive applications (like real-time analytics, AR/VR, or video processing) become more common, we’re seeing a shift toward edge-deployable microservices!

Scaling at the edge reduces the load on central clusters and enables ultra-fast user experiences by processing closer to the source. By the end of 2025, nearly 40% of enterprise applications are expected to deploy at least part of their stack on edge nodes.

🔹 5. AI-Powered Scaling Decisions

AI-driven autoscaling based on the traditional metrics ensures a more predictive approach. Digital platforms are now learning from historical traffic metrics, usage patterns, error rates, and system load to:

Predict spikes before they happen

Allocate resources preemptively

Reduce both downtime and cost

Think: Machine learning meets Kubernetes HPA—helping your system scale before users feel the lag. Great!

Modern Database Solutions for High-Traffic Microservices

Data is the bloodstream of your system/application. Every user interaction, transaction, or API response relies on consistent, fast, and reliable access to data. In a microservices environment, things get exponentially more complex as you scale, as each service may need its separate database or shared access to a data source.

This is why your choice of database—and how you architect it—is a non-negotiable pillar in the system scaling strategy. You're not just selecting a tool; you're committing to a system that must support distributed workloads, global availability, real-time access, and failure recovery!

Modern database systems must support:

Elastic growth without manual intervention

Multi-region deployment to reduce latency and serve global traffic

High availability and automatic failover

Consistency trade-offs depending on workload (CAP theorem realities)

Support for eventual consistency, sharding, and replication in distributed environments

Now, let’s explore some of the top database solutions for handling high traffic—

MongoDB

Schema-less, horizontally scalable, and ideal for rapid development with flexible data models.

Built-in sharding and replication make it a go-to for user-centric platforms.

Cassandra

Distributed by design, Cassandra is engineered for write-heavy applications.

Its peer-to-peer architecture ensures zero downtime and linear scalability.

Redis (In-Memory Cache/DB)

Blazing-fast key-value store used for caching, session management, and real-time analytics.

Integrates well with primary databases to reduce latency.

CockroachDB

A distributed SQL database that survives node failures with no manual intervention.

Great for applications needing strong consistency and horizontal scale.

YugabyteDB

Compatible with PostgreSQL, it offers global distribution, automatic failover, and multi-region writes—ideal for SaaS products operating across continents.

PostgreSQL + Citus

Citus transforms PostgreSQL into a horizontally scalable, distributed database—helpful for handling large analytical workloads with SQL familiarity.

Amazon Aurora

A managed, high-throughput version of MySQL and PostgreSQL with auto-scaling capabilities.

Perfect for cloud-native microservices with relational needs.

Google Cloud Spanner

Combines SQL semantics with global horizontal scaling.

Offers strong consistency and uptime guarantees—ideal for mission-critical financial systems.

Vitess

Used by YouTube, Vitess runs MySQL underneath but enables sharding and horizontal scalability at a massive scale—well-suited for read-heavy architectures.

Bottomline

Scaling a modern digital product requires more than just technical upgrades—it demands architectural maturity. Scalability in microservices architecture is built on clear principles of—

service independence,

data resilience,

automated infrastructure, and

real-time observability.

Microservices empower teams to scale components independently, deploy faster, and maintain stability under pressure. The result—Faster time to market, better fault isolation, and infrastructure that adjusts dynamically with demand.

What truly validates this approach are the countless case studies on successful product scaling from tech companies that prioritized scalability as a core design goal. From global SaaS platforms to mobile-first startups, the trend is clear—organizations that invest early in scalable microservices foundations consistently outperform those who patch their systems later.

Scalability in microservices architecture starts with the right foundation—not reactive fixes. Consult the software experts at Acquaint Softtech to assess and align your system for scale. Contact us now to start building with long-term resilience in mind.

Get in Touch

FAQs

1. What is scalability in microservices architecture?

Scalability in microservices architecture refers to the ability of individual services within a system to scale independently based on workload. This allows you to optimize resource usage, reduce downtime, and ensure responsiveness during high-traffic conditions. It enables your application to adapt dynamically to user demand without overburdening the entire system.

2. Why are databases critical in scalable architectures?

A scalable system is only as strong as its data layer. If your services scale but your database can't handle distributed loads, your entire application can face performance bottlenecks. Scalable databases offer features like replication, sharding, caching, and automated failover to maintain performance under pressure.

3. What are the best practices for automated scalability?

Automated scalability involves using tools like Kubernetes HPA, KEDA, and VPA to auto-adjust resources based on real-time metrics. Best practices also include decoupling services, setting scaling thresholds, and implementing observability tools like Prometheus and Grafana. We just disclosed them all in the blog above!

4. Are there real-world case studies on successful product scaling?

Yes, many leading companies have adopted microservices and achieved remarkable scalability. For instance, Netflix, Amazon, and Uber are known for leveraging microservices to scale specific features independently. At Acquaint Softtech, we’ve also delivered tailored solutions backed by case studies on successful product scaling for startups and enterprises alike. Get in touch with our software expert to know more!

#Microservices#Cloud Computing#Software Product Engineering#System Architecture#Database Scalability#DevOps Practices

0 notes

Text

Data Unbound: Embracing NoSQL & NewSQL for the Real-Time Era.

Sanjay Kumar Mohindroo Sanjay Kumar Mohindroo. skm.stayingalive.in Explore how NoSQL and NewSQL databases revolutionize data management by handling unstructured data, supporting distributed architectures, and enabling real-time analytics. In today’s digital-first landscape, businesses and institutions are under mounting pressure to process massive volumes of data with greater speed,…

#ACID compliance#CIO decision-making#cloud data platforms#cloud-native data systems#column-family databases#data strategy#data-driven applications#database modernization#digital transformation#distributed database architecture#document stores#enterprise database platforms#graph databases#horizontal scaling#hybrid data stack#in-memory processing#IT modernization#key-value databases#News#NewSQL databases#next-gen data architecture#NoSQL databases#performance-driven applications#real-time data analytics#real-time data infrastructure#Sanjay Kumar Mohindroo#scalable database solutions#scalable systems for growth#schema-less databases#Tech Leadership

0 notes

Text

Simple Logic migrated a database from MSSQL to MySQL, achieving cost savings, better performance, and enhanced security with Linux support and open-source flexibility. 🚀 Challenges: High licensing costs with MSSQL💸 Limited Linux support, creating compatibility issues🐧 Our Solution: Seamlessly migrated all data from MSSQL to MYSQL🚛 Rewrote stored procedures and adapted them for MYSQL compatibility🔧 Efficiently transitioned sequences to ensure data consistency📜 Enabled significant cost savings by moving to an open-source database💰 The Results: Enhanced database performance and scalability🚀 Improved security and robust Linux support🛡️ Open-source flexibility, reducing dependency on proprietary systems🔓 Ready to transform your database infrastructure? Partner with Simple Logic for reliable migration services! 🎯 💻 Explore insights on the latest in #technology on our Blog Page 👉 https://simplelogic-it.com/blogs/ 🚀 Ready for your next career move? Check out our #careers page for exciting opportunities 👉 https://simplelogic-it.com/careers/ 👉 Contact us here: https://simplelogic-it.com/contact-us/

#MSSQL#SQL#MySQL#Migration#Linux#OpenSource#Data#Database#Scalability#LinixSupport#Flexibility#Systems#DatabaseIngrastructure#MigrationServices#SimpleLogicIT#MakingITSimple#MakeITSimple#SimpleLogic#ITServices#ITConsulting

1 note

·

View note

Text

Mydbops, with over 8 years of expertise, is a leading provider of specialized managed and consulting services for open-source databases, including MySQL, MariaDB, MongoDB, PostgreSQL, TiDB, and Cassandra. Their team of certified professionals offers comprehensive solutions tailored to optimize database performance, enhance security, and ensure scalability. Serving over 300 clients and managing more than 6,000 servers, Mydbops is ISO & PCI-DSS certified and holds an advanced AWS consulting partnership.

#database#database management#mysql#postgresql#mariadb#mongodb#consulting services#Security#scalability#MYDBOPS

1 note

·

View note

Text

Boost performance with a scalable database solution. Explore expert database development services to enhance security, efficiency, and growth.

0 notes

Text

How online Office Systems use Laravel Eloquent Relationships

In an online office furniture system, handling data efficiently is crucial for managing products, orders, and customers. Using an ORM like Eloquent in Laravel simplifies database interactions by allowing developers to work with objects instead of raw SQL queries.

For example, retrieving all products in a category can be done with $category->products instead of writing complex SQL joins. This makes the code more readable, maintainable, and secure, reducing the risk of errors and SQL injection.

So, you can also leverage Eloquent powerful features, such as relationships and query builders, developers can build scalable and efficient applications while keeping the codebase clean and organized.

#laravel#laravel framework#Eloquent#ORM#Object Relational Mapping#object oriented programming#Database#Database relationships#office furniture#web development#scalable apps#web applications#managing data#developer tools#back end design#web design#PHP#office desks#office chairs#products#categories

0 notes

Text

Discover the advantages of scalable NoSQL databases for modern applications. Built for flexibility, exceptional performance, and the ability to manage extensive workloads, NoSQL databases are well-suited for real-time data processing and evolving business requirements. Explore how these databases enable seamless scalability, empowering organizations to excel in today’s data-driven landscape.

0 notes

Text

ColdFusion Database Pooling and Query Caching: Optimizing Performance for Scalable Applications

#ColdFusion Database Pooling and Query Caching: Optimizing Performance for Scalable Applications#ColdFusion Database Pooling and Query Caching#ColdFusion Database Pooling#ColdFusion Query Caching

0 notes

Text

VastEdge offers MySQL Cloud Migration services, enabling businesses to smoothly transition their MySQL databases to the cloud. Benefit from enhanced performance, scalability, and security with our expert migration solutions. Migrate your MySQL databases with minimal downtime and zero data loss.

#MySQL cloud migration#cloud database migration#VastEdge MySQL services#MySQL migration solutions#secure MySQL migration#cloud database performance#scalable cloud solutions#minimal downtime migration#MySQL cloud scalability#MySQL data migration

0 notes

Text

Navigating Cloud Databases: Azure Cosmos DB and AWS Aurora in Focus

When embarking on new software development projects, choosing the right database technology is pivotal. In the cloud-first world, Azure Cosmos DB and AWS Aurora stand out for their unique offerings. This article explores these databases through practical T-SQL code examples and applications, guiding you towards making an informed decision. Azure Cosmos DB, a globally distributed, multi-model…

View On WordPress

#Azure Cosmos DB vs AWS Aurora#cloud databases comparison#database scalability solutions#global distribution databases#T-SQL examples

0 notes

Text

Also preserved on our archive (Thousands of reports, sources, and resources! Daily updates!)

By Robert Stevens

A COVID wave fuelled by the XEC variant is leading to hospitalisations throughout Britain.

According to the UK Health Security Agency (UKHSA), the admission rate for patients testing positive for XEC stood at 4.5 per 100,000 people in the week to October 6—up significantly from 3.7 a week earlier. UKHSA described the spread as “alarming”.

Last week, Dr. Jamie Lopez Bernal, consultant epidemiologist at the UKHSA, noted of the spread of the new variant in Britain: “Our surveillance shows that where Covid cases are sequenced, around one in 10 are the ‘XEC’ lineage.”

The XEC variant, a combination of the KS.1.1 and KP.3.3 variants, was detected and recorded in Germany in June and has been found in at least 29 countries—including in at least 13 European nations and the 24 states within United States. According to a New Scientist article published last month, “The earliest cases of the variant occurred in Italy in May. However, these samples weren’t uploaded to an international database that tracks SARS-CoV-2 variants, called the Global Initiative on Sharing All Influenza Data (GISAID), until September.”

The number of confirmed cases of XEC internationally exceeds 600 according to GISAID. This is likely an underestimation. Bhanu Bhatnagar at the World Health Organization Regional Office for Europe noted that “not all countries consistently report data to GISAID, so the XEC variant is likely to be present in more countries”.

Another source, containing data up to September 28—the Outbreak.info genomic reports: scalable and dynamic surveillance of SARS-CoV-2 variants and mutations—reports that there have been 1,115 XEC cases detected worldwide.

Within Europe, XEC was initially most widespread in France, accounting for around 21 percent of confirmed COVID samples. In Germany, it accounted for 15 percent of samples and 8 percent of sequenced samples, according to an assessment from Professor Francois Balloux at the University College London, cited in the New Scientist.

Within weeks of those comments the spread of XEC has been rapid. Just in Germany, it currently accounts for 43 percent of infections and is therefore predominant. Virologists estimate that XEC has around twice the growth advantage of KP.3.1.1 and will be the dominant variant in winter.

A number of articles have cited the comments made to the LA Times by Eric Topol, the Director of the Scripps Research Translational Institute in California. Topol warns that XEC is “just getting started”, “and that’s going to take many weeks, a couple months, before it really takes hold and starts to cause a wave. XEC is definitely taking charge. That does appear to be the next variant.”

A report in the Independent published Tuesday noted of the make-up of XEC, and its two parent subvariants: “KS.1.1 is a type of what’s commonly called a FLiRT variant. It is characterised by mutations in the building block molecules phenylalanine (F) altered to leucine (L), and arginine (R) to threonine (T) on the spike protein that the virus uses to attach to human cells.

“The second omicron subvariant KP.3.3 belongs to the category FLuQE where the amino acid glutamine (Q) is mutated to glutamic acid (E) on the spike protein, making its binding to human cells more effective.”

Covid cases are on the rise across the UK, with recent data from the UK Health Security Agency (UKHSA) indicating a 21.6 percent increase in cases in England within a week.

There is no doubt that the spread of XEC virus contributed to an increase in COVID cases and deaths in Britain. In the week to September 25, there were 2,797 reported cases—an increase of 530 from the previous week. In the week to September 20 there was a 50 percent increase in COVID-related deaths in England, with 134 fatalities reported.

According to the latest data, the North East of England is witnessing the highest rate of people being hospitalised, with 8.12 people per 100,000 requiring treatment.

Virologist Dr. Stephen Griffin of the University of Leeds has been an active communicator of the science and statistics of the virus on various public platforms and social media since the start of the pandemic. He was active in various UK government committees during the height of the COVID-19. In March 2022, he gave an interview to the World Socialist Web Site.

This week Griffin spoke to the i newspaper on the continuing danger of allowing the untrammelled spread of XEC and COVID in general. “The problem with COVID is that it evolves so quickly,” he said.

He warned, “We can either increase our immunity by making better vaccines or increasing our vaccine coverage, or we can slow the virus down with interventions, such as improving indoor air quality. But we’re not doing those things.”

“Its evolutionary rate is something like three or four times faster than that of the fastest seasonal flu. So you’ve got this constant change in the virus, which accelerates the number of susceptible people.

“It’s creating its own new pool of susceptibles every time it changes to something that’s ‘immune evasive’. Every one of these subvariants is distinct enough that a whole swathe of people are no longer immune to it and it can infect them. That’s why you see this constant undulatory pattern which doesn’t look seasonal at all.”

There are no mitigations in place in Britain, as is the case internationally, to stop the spread of this virus. Advice for those with COVID symptoms is to stay at home and limit contact with others for just five days. The National Health Service advises, “You can go back to your normal activities when you feel better or do not have a high temperature”, despite the fact that the person may well still be infectious. Families are advised that children with symptoms such as a runny nose, sore throat, or mild cough can still “go to school or childcare' if they feel well enough.

The detection and rapid spread of new variants disproves the lies of governments that the pandemic is long over and COVID-19 should be treated no differently to influenza.

Deaths due to COVID in the UK rose above 244,000 by the end of September. It is only a matter of time before an even deadlier variant emerges. Last month, Sir Chris Whitty, England’s chief medical officer, told the ongoing public inquiry into COVID-19 “We have to assume a future pandemic on this scale [the global pandemic which began in 2020] will occur… That’s a certainty.”

#mask up#covid#pandemic#wear a mask#public health#covid 19#wear a respirator#still coviding#coronavirus#sars cov 2

145 notes

·

View notes

Text

How to Optimize Databases for Scalability from Day 1| 2025 Guide

Introduction

Scaling a digital product without database foresight is like building a tower without a foundation. It may stand for a while, but it won’t survive pressure. If you want your application to scale with confidence, you must optimize databases for scalability right from the planning phase!

What it means,Selecting the right technologies, modeling data intentionally, and aligning performance expectations with long-term architectural goals.

From startups preparing for growth to enterprises modernizing legacy systems, the principles are the same: Scalable performance doesn’t start at scale,it starts during setup!

In this blog, we’ll explore the strategies, design patterns, and decisions that ensure your database layer doesn’t just support growth but accelerates it!

Why Optimizing Databases for Scalability Matters From Day 1?

Mostly, businesses think about scaling their system only after traffic surges;

But by then, it’s usually too late. A fully scalable system handles heavy workloads and survives, but databases that aren’t built with scaling in mind become silent bottlenecks,slowing performance, increasing response times, and risking system failure just when user demand is rising.

The irony? The most critical scaling problems usually begin with small oversights in the early architecture phase:

poor schema design,

lack of indexing strategy, or

choosing the wrong database engine altogether.

These not-so-worthwhile decisions regarding databases for scalability don’t hurt at launch. But over time, they manifest as fragile joins, sluggish queries, and outages that cost both revenue and user trust.

Starting with scalability as a design principle means recognizing the role your data layer plays,not just in storing information but in sustaining business performance.

"You don’t scale databases when you grow; you grow because your databases are already built to scale."

Understanding the Different Types of System Scalability

When planning for future growth, it’s essential to understand how your system will scale. Adopting the right scalability path introduces unique engineering trade-offs that directly affect performance, resilience, and cost over time.

Types of System Scalability

Vertical Scalability

Vertical scaling refers to enhancing a single server’s capacity by adding more memory, CPU, or storage. While it’s easy to implement early on, it's inherently limited. Over time, you’ll become bound by the physical capacity of a single machine, and costs will grow exponentially as you move toward high-performance hardware.

Horizontal Scalability

This approach distributes workloads across multiple machines or nodes. It enables dynamic scaling by adding/removing resources as needed,making it more suitable for cloud-native systems, large-scale platforms, and distributed databases. However, it requires early architectural planning and operational maturity.

Challenges To Optimize Databases for Scalability

One of the biggest mistakes businesses make is assuming scalability will auto-operate with time. But the earlier you scale poorly, the faster your system becomes brittle.

Here are a few early-stage scaling challenges within the system when scalability isn't planned from day one:

Single Point of Failure:

Without horizontal scaling or replication, your database becomes a bottleneck and a risk to uptime.

Lack of Data Partitioning Strategy:

As data grows, unpartitioned tables cause slow reads and writes,especially under concurrent access.

Improper Indexing:

Skipping index design early leads to query lag, full table scans, and long-term maintenance pain.

Assuming Traffic Uniformity:

Businesses underestimate how read/write patterns shift as the application scales, leading to overloaded services.

Delayed System Monitoring:

Without early observability, issues appear only after users are impacted, making root cause analysis much harder.

Real scalability requires foresight, analyzing usage patterns, building room for flexibility, and choosing scaling methods that suit your data structure,it's not something just your initial timeline strategy.

Schema Design To Optimize Databases for Scalability

A scalable database begins with a schema that anticipates change. While schema design often starts with immediate functionality in mind,tables, relationships, and constraints,the real challenge lies in ensuring those structures remain efficient as the data volume and complexity grow.

The Right Way to Schema Methodology

Normalize Awareness, Denormalize Intent

Businesses often over-normalize to avoid redundancy, but negligence can kill performance at scale. On the other hand, blind denormalization can lead to bloated storage and data inconsistency. Striking the right balance requires understanding your application’s read/write patterns and future query behavior.

Plan for Index Evolution

Indexing is not just about speed,it’s about adaptability. What works for 100 users might slow down drastically for 10,000. Schema design must allow for the addition of compound indexes, partial indexes, and even filtered indexes as usage evolves. The key is in designing your tables knowing that indexes will change over time.

Design for Flexibility, Not Fragility

Rigid schemas resist change and do not adapt easily, hence, businesses struggle a lot with them. To address this, use fields that support variation, like JSON columns in PostgreSQL or document structures in MongoDB, when anticipating fluid requirements. This allows your application to evolve without costly migrations or schema overhauls.

Anticipate Data Volume

Schema design should not just represent logic,it must reflect scale tolerance. You must first decide in yourself whether your system is worth enough to serve a larger audience, and ask yourself questions like,

What happens when this table grows 100x?

Will your queries still return in milliseconds?

Will storage costs stay manageable?

Designing APIs & Mobile Systems That Scale

Scalability isn’t just about backend systems,it’s deeply tied to how your product interacts with users, especially through APIs and mobile interfaces. Ignoring such touchpoints during early database planning often leads to invisible friction that only surfaces when it’s too late.

Scalability Considerations in API Design

Your APIs act as the gateways to your database. Poorly designed APIs,those that allow heavy joins, unfiltered bulk data calls, or lack pagination,can unintentionally overload the database layer.

A scalable API controls query scope, handles versioning, and prevents N+1 query patterns. If your APIs don’t align with your data model, no amount of backend optimization can save you under high load.

Scalability Considerations for Mobile Apps

Mobile users often operate in low-bandwidth, high-latency environments. This makes data fetching, sync operations, and caching far more critical.

Design mobile-facing services to be lightweight, cache-aware, and tolerant to intermittent connectivity. Use batched reads, compressed payloads, and sync queuing to reduce the database strain caused by mobile churn and retries.

Signs Your Application Needs to Scale

Sometimes, performance degradation creeps in quietly. Long-running queries, delayed API responses, increased memory usage, or slow mobile syncs are often early signs that your application needs to scale.

If your team is writing more workarounds than improvements,or you’re afraid to touch core tables because of breakage risks,it’s time to rethink your system architecture.

Infrastructure and Tools That Support Scaling

A well-structured schema and carefully selected database engine are only as effective as the infrastructure that supports them. As your application grows, infrastructure becomes the silent enabler,or barrier,to sustainable database performance, depending upon how you’ve planned to scale it, or not! Choosing the right tools and deployment strategies early helps you avoid costly migrations and reactive firefighting later on!

Managed Services vs. Self-Hosted

Managed database services like Amazon RDS, Azure SQL, and Google Cloud Spanner offer automatic backups, scaling, replication, and failover with minimal configuration. For businesses focused on building products rather than managing infrastructure, these platforms provide a clear advantage. However, self-hosted setups still suit companies needing full control, compliance-specific setups, or cost optimization at scale.

Sharding and Replication

Sharding splits data across multiple nodes, enabling horizontal scaling and isolation of high-volume workloads. Replication, on the other hand, ensures availability and load distribution by duplicating data across regions or read replicas. Together, they form the backbone of database scaling for high-concurrency systems.

Caching Layers

Adding tools like Redis or Memcached helps offload repetitive read operations from your database, drastically improving response time. Caching frequently accessed data, authentication tokens, or even full query results minimizes database strain during traffic spikes.

Monitoring and Observability

Without real-time insights, even scalable systems can fall behind. Tools like Prometheus, Grafana, Datadog, and pg_stat_statements help track performance metrics, query slowdowns, and resource bottlenecks. Observability is not a luxury within a system,it’s a necessity to make intelligent scaling decisions.

Legacy Upgrade Paths

Many businesses struggle with outdated systems that weren’t designed with scale in mind. Here, affordable solutions for legacy system upgrades come into play. For example, introducing read replicas, extracting services into microservices, or gradually transitioning to cloud-native databases etc. Incremental modernization allows you to gain performance without rewriting everything at once.

Bottomline

Database bottlenecks don’t appear overnight. They build silently,through overlooked indexing, rigid schema choices, and wrong infrastructure scalability decisions implemented with short-term convenience in mind. By the time symptoms show, the cost of correction is steep,not just technically, but operationally and financially as well.

To truly optimize databases for scalability, the process must begin before the first record is stored. From choosing the right architecture and data model to aligning APIs, mobile experiences, and infrastructure,scaling is not a one-time adjustment but a long-term strategy to continue with.

The most resilient systems to optimize databases for scalability anticipate growth, respect complexity, and adopt the right tools at the right time. Whether you're modernizing a legacy platform or building from the ground up, thoughtful database design remains the cornerstone of sustainable scale.

FAQs

What are the risks of ignoring database scalability in early-stage development?

Neglecting scalability often leads to slow queries, frequent outages, and data integrity issues as your application grows. Over time, these issues disrupt user experience and make system maintenance expensive. Delayed optimization usually involves painful migrations and performance degradation, costing a hefty sum of money.

What are the signs my current application needs to scale its database?

Common signs include slow response times, increasing query load, high CPU/memory usage, and growing replication lag. If you’re adding more users but also experiencing delays or errors, these are clear signs your application needs to scale. Regulated monitoring of the system with advanced tools can reveal these patterns before they escalate and hinder its progress.

Can legacy systems be optimized for scalability without a full rewrite?

Yes. Businesses can apply affordable solutions for legacy system upgrades, like introducing read replicas, refactoring slow queries, applying caching layers, or incrementally shifting to scalable architectures. Strategic modernization often delivers strong results with lower risk, eliminating unnecessary complete rewrites.

#Optimize databases for scalability#Scalability considerations in API design#Databases for scalability

0 notes

Text

I genuinely cannot believe I’m saying this and that it is not a top-of-the-page headline story across the country, but the US Army just swore in four tech executives as Lieutenant Colonels: Shayam Sankar, the CTO of Palantir (Peter Thiel’s company), Andrew Bosworth, the CTO of Meta (Mark Zuckerberg’s company) and OpenAI’s chief product officer Kevin Weil and former chief research officer Bob McGrew, (the company belonging to Sam Altman.)

These four men are all now one rank away from a General. Second-in-command for field-grade officers. There are 16 ranks lower than a Lieutenant Colonel and these four untrained civilians just leapfrogged over soldiers who have dedicated their entire career and lives to the US military. Apparently, these men are going to work on “targeted projects to help guide rapid and scalable tech solutions to complex problems.”

A sentence that means absolutely nothing because clearly this is just a way of giving these companies access to top-secret information without them having to go through any pesky background checks. If that doesn’t scare you, it really should, because we already know that Palantir is building a massive centralized database using much of the information they acquired from DOGE, Elon Musk’s foray into the American government.

These billionaire tech-bros are compiling lists of our most sensitive information. Our tax records, medical data, bank accounts, Social Security information, immigration status, etc. This administration is allowing them to build the infrastructure for techno-feudalism. Authoritarianism in the form of data and surveillance.

It is a horrifying and blatant conflict of interest that harms the safety and security of all Americans. There should not be this much overlap between private tech and the US government, certainly not between private tech and the US military. I do not know how asleep at the wheel Congress can possibly be. But they should be shutting this down immediately and every single one of us should be demanding that they do it.

[Barry Caldwell]

#Barry Caldwell#Palantir#Banana Republic#DOGE#the US Military#conflict of interest#corruption#Peter Thiel

39 notes

·

View notes

Text

#Scrabble

Crack the Code! 🔢

Guess the scrambled tech word of the day! 🚀💡

Comments your answer below👇

💻 Explore insights on the latest in #technology on our Blog Page 👉 https://simplelogic-it.com/blogs/

🚀 Ready for your next career move? Check out our #careers page for exciting opportunities 👉 https://simplelogic-it.com/careers/

#scrabblechallenge#scrabble#scrabbletiles#scrabbleart#scrabbleframe#scrabbleletters#words#boardgame#fun#scrabblecraft#nosql#database#data#unstructureddata#scalable#program#webpage#simplelogic#makingitsimple#simplelogicit#makeitsimple#itservices#itconsulting

0 notes

Text

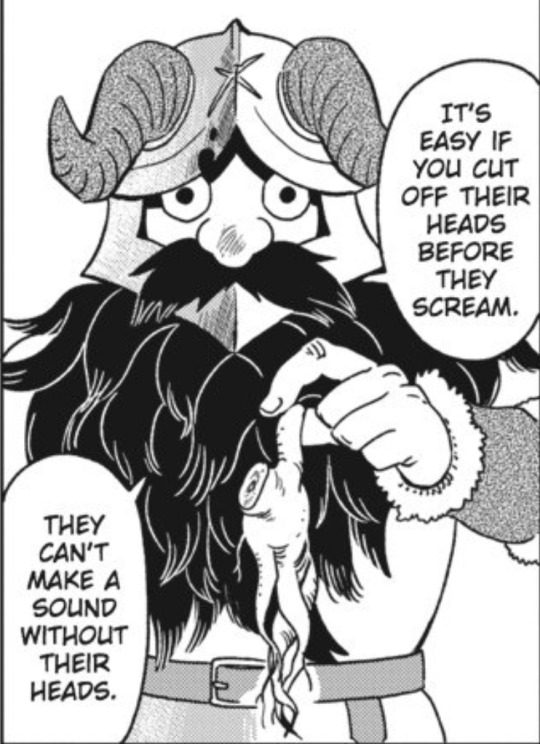

Dungeon Meshi Chapter 4

What was it like to read this series as it came out? This chapter feels like Kui is trying to address complaints about Marcille's usefulness.

The chapter image implies Marcille and Falin went to the same magic school. I assume the book in the bottom-left corner is the one Marcille refers to on how to pluck Mandrakes.

Looking back on all the chapters so far, Marcille has spent much of the journey complaining about the whole "Eating monsters" thing and hasn't actually contributed anything to the journey. In fact, I'd say she's mostly been a detriment since she had to get saved from a slime and a man-eating plant.

(Granted, Chilchuck also hasn't done anything of value, but he also hasn't needed rescuing, required the party to rest, or been complaining about things.)

And Marcille is acutely aware that she's not been helpful at all. She is so desperate throughout this chapter to show that her magic and education can help everyone.

The elaborate and highly inefficient method for harvesting madrakes in Marcille's book vs the very simple way Senshi harvests them kind of is reminding me about something that was talked about recently in a databases class I'm currently taking.

The problem the professor went over was "We have n number of CPUs we could divide our data between to speed up processing. We can make a lookup table that decides which CPU should be given which datapoint based on a cross reference of two fields in each datapoint. How do we ensure we maximize our CPU usage?"

The professor showed us what they called the "PhD student solution" which involved an elaborate pattern algorithm that causes you to build your lookup table in a complex snaking pattern. And in the end, the method is better at the things the existing methods were bad at but worse at solving problems that existing methods were already great at.

Then the professor showed us the "15 years experience" solution which used very simple calculations and was a light modification of the existing methods which allowed it to keep the strengths of the existing method and managed to avoid most of the issues with the existing method. The solution was elegant, easy to follow and replicate, and it was scalable to higher values of n and higher dimensional tables.

Anyway, Marcille's book is a PhD student solution. It works, but it was made by someone who was looking for a flashy solution that would get people's attention. How many dogs died because of this person's methods? Laios's solution sounds dumb but it's likely far better than Marcille's. Maybe the solution could just be to magic up a silence field so the Mandrakes can't make any noise when they scream.

Meanwhile, Senshi has the practical 15 years experience solution.

And Marcille decides to go through an elaborate process to show the value of the elaborate method as one might expect a PhD student to do.

Whatever Marcille was going to cast in chapter 2, it was different from what she cast this chapter. The runes she speaks are different and I can't find anything that looks the same.

That heart-to-heart was nice. Marcille wants to be the reliable one who can resolve every issue they encounter. But Laios doesn't want to exhaust Marcille by making her handle every situation they encounter. Being the reliable one all the time is exhausting; it's good to be able to defer to others in situations you're not the most capable in.

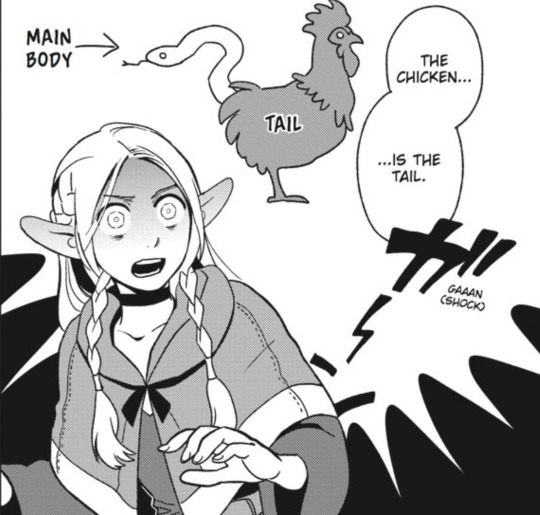

I was equally as shocked as Marcille when Laios said this. And this explains so much about the things I thought were strange about the basilisk.

If the chicken is the tail, then it doesn't actually matter that it was a rooster. It doesn't actually determine the basilisk's role in reproduction.

Nice touch putting a name and face to the basilisk researcher. It makes this world a little more alive that there is a person we can tie this silly fact to rather than it just being an arbitrarily known thing.

I noticed but didn't call him out on it last chapter, but I'm going to call him out this time: Senshi refers to Marcille as "the Elf-girl". And he only started calling her by her name when it turned out the mandrake she plucked tasted better than the mandrakes plucked with Senshi's methods.

Senshi's method is definitely the most practical way to handle killing mandrakes but it turns out that it's not the best way to harvest them. Meanwhile Marcille's method is flashy and harvests better quality mandrakes, but is overall too complex to be useful and still worse in general than Senshi's method.

If monster cuisine becomes a mainstream concept, maybe one day someone will find an effective method to harvest better quality mandrakes (Silence field).

I could hear the "beeowoop" on that last panel.

back

14 notes

·

View notes

Text

Harnessing the Potential of Scalable NoSQL Databases

In today's era of big data and real-time applications, businesses are increasingly adopting NoSQL databases due to their scalability, flexibility, and performance. Unlike traditional relational databases, NoSQL databases are specifically designed to manage diverse data types and large volumes of information, making them well-suited for modern applications that require high availability and low latency. But what are the key factors that make a NoSQL database scalable, and why might it be the right choice for your next project?

Understanding NoSQL Databases

NoSQL databases, also known as "non-relational" databases, depart from the structured schema of traditional relational databases. They utilize diverse data models, including document, key-value, column-family, and graph, to store and manage data. This versatility enables NoSQL databases to effectively handle unstructured, semi-structured, and structured data, often generated in real-time by modern applications.

The Importance of Scalability

As organizations grow, the volume of data they generate and process increases substantially. Traditional relational databases often face challenges in meeting these demands, particularly when managing variable workloads or scaling across distributed systems. NoSQL databases provide a scalable and adaptable solution, designed to align with the dynamic requirements of expanding businesses.

Key Features of Scalable NoSQL Databases

Horizontal Scalability: A defining feature of NoSQL databases is their ability to scale horizontally. Instead of relying on upgrading to more powerful servers (vertical scaling), additional nodes can be integrated into a distributed system to manage increased workloads. This approach offers both efficiency and cost-effectiveness as data volumes grow.

Distributed Architecture: Scalable NoSQL databases leverage a distributed architecture, enabling data to be stored across multiple nodes within a cluster. This design enhances performance while ensuring high availability and fault tolerance.

Flexible Schema: NoSQL databases support dynamic schemas, allowing developers to store and query data without predefined table structures. This flexibility is particularly advantageous for applications handling diverse or evolving data types.

High Throughput and Low Latency: Designed for efficiency, scalable NoSQL databases optimize high-speed read and write operations. This ensures that applications deliver real-time performance, even under heavy workloads.

Replication and Sharding: Replication enhances data redundancy by copying it across multiple nodes, while sharding partitions the data into smaller, manageable segments. Together, these techniques improve both scalability and reliability.

Use Cases for Scalable NoSQL Databases

Scalable NoSQL databases are extensively utilized across industries for applications requiring high performance and adaptability. Key use cases include:

E-commerce: Managing product catalogs, user sessions, and real-time inventory updates.

Social Media: Efficiently storing and retrieving large volumes of user-generated content.

IoT and Analytics: Processing time-series data generated by connected devices.

Gaming: Enabling real-time leaderboards and managing player profiles.

Leading Scalable NoSQL Databases

Several NoSQL databases have established themselves as reliable solutions for scalability:

MongoDB: A document-oriented database renowned for its flexibility and developer-friendly ecosystem.

Cassandra: A column-family database designed to deliver high availability and linear scalability.

Redis: A high-performance key-value store specialized in real-time data processing.

Amazon DynamoDB: A fully managed NoSQL service offering seamless scalability and deep integration within the AWS ecosystem.

Benefits of Scalable NoSQL Databases

Adopting a scalable NoSQL database empowers businesses to:

Manage rapid data growth without sacrificing performance.

Adjust to dynamic and evolving application requirements.

Achieve cost-efficient scalability through distributed architectures.

Deliver a seamless user experience with minimal operational downtime.

Conclusion

Scalable NoSQL databases have become a fundamental component of modern data architectures, offering the performance and flexibility essential in today's fast-paced digital environment. Whether you are developing a social media platform, an IoT application, or a real-time analytics solution, a scalable NoSQL database can support your business objectives effectively. By selecting the appropriate database and utilizing its scalability features, businesses can future-proof their applications and deliver exceptional value to their users.

0 notes