#MIT physics

Text

#love theoretically#hadron collider#physics#academia#phd#diabetes#type 1 diabetes#ali hazelwood#elsie hannaway#jack smith turner#MIT physics#understanding#large hadron collider#CERN

3 notes

Text

Chris Williams

Born in New York City, Chris Williams considers Potomac, Maryland, to be his hometown. A private pilot and Eagle Scout, Williams is a board-certified medical physicist and holds a doctorate in physics from MIT. https://go.nasa.gov/49YJJmf

Make sure to follow us on Tumblr for your regular dose of space!

#NASA#astronaut#NASA Yearbook#graduation#Class of 2024#space#Inspiration#Maryland#Eagle Scout#physics#MIT#STEM

2K notes

Text

My company recently took on a second apprentice. She had prior training in a related trade, so at the job interview she was offered the option of skipping the first year of the apprenticeship. That's not so unusual, I did the same back then, no big deal. She said yes, the contract was signed, and the application to start in the second year was filed and approved.

Now, 6 months later, my boss has noticed that it's somehow annoying that both apprentices are in their second year. Both at vocational school on the same day, both taking their exams at the same time; it all seemed to be a big problem for him. Instead of first taking this to the guy responsible for personnel matters, he snapped at the apprentice on Friday morning. No idea, solution-oriented workplace bullying, that's what it's called. What do I know. The trades, I guess. Start by yelling, maybe the problem gets scared and solves itself. What a vibe

He now wanted her to go back to first year (after the start of the school year, mind you). Out of fright, the apprentice applied to two other companies over the weekend, because fuck that. Understandable. Unfortunately, one of them happened to be the company of our boss's bestie, who was immediately on the phone to rat the apprentice out to our boss. The mood on Monday was great; the apprentice was accused of a breach of trust (although... smelling a rat because someone spontaneously wants to change your training contract after half a year is not a breach of trust in my book), and around noon her mother showed up on the doorstep. Thank god, finally an adult.

My boss did not deign to attend the conversation with the apprentice and her mother. Her only demand was that she still wanted to be in second year, as it says in her training contract. And has for 6 months. Nope, not happening.

And then we just had one apprentice fewer. Bets are still open on when someone here will next deliver a monologue about how young people don't want to work properly, and that's why we hardly get any apprentices

#i had to comfort a crying eighteen-year-old who would actually have preferred to talk to one of the “adult” employees#unfortunately my boss always waits until everyone with any say has already left. and then pulls out the really nasty moves#so she got to hear from the class clown of the house that she did everything right etc etc#cool cool cool cool cool. im emotionally devastated and physically fucked i love this place#it's monday.

212 notes

Text

someone literally just flagged me down at Wbf and tried to pray over me because I'm on a crutch???

Like since when does this shit happen in Vienna. They were two people like my age or younger as well. "We believe Jesus heals and we saw that you go with a crutch so we thought we'd lay hands on you and heal you" don't fucking touch me, you shits, what the hell

like I'm literally dressed like this as well what were they thinking

#I'm genuinely kind of upset about it too#I hate when this happens#absolutely hate it#yeah I'm physically disabled you idiots that doesn't mean you have to bother me in public#what is wrong with you#shitpost nach sacher art

30 notes

Text

Adam/Leo + Sherlock/John // (thrift shop)

"we can't giggle, it's a crime scene, stop it"

#hörk definitely has very johnlock vibes i think#just with more physical contact#so what do i do with the parallels? a random edit#spatort#tatort saarbrücken#bbc sherlock#hörk#johnlock#sherlock#leo hölzer#adam schürk#my edits

49 notes

Text

MIT physicists have created a new and long-lasting magnetic state in a material, using only light. In a study that will appear in Nature, the researchers report using a terahertz laser -- a light source that oscillates more than a trillion times per second -- to directly stimulate atoms in an antiferromagnetic material. The laser's oscillations are tuned to the natural vibrations among the material's atoms, in a way that shifts the balance of atomic spins toward a new magnetic state. The results provide a new way to control and switch antiferromagnetic materials, which are of interest for their potential to advance information processing and memory chip technology.

31 notes

Text

I've got a question to someone more Mathematically Learned than me:

What is the current Edge Of Human Knowledge in regards to the origins and fundamentals of the natural world? What's the current thread that most people are trying to follow to get down to like. The exact reason for the nature of the universe? I've heard that in the first second directly after the big bang, things were quote "a little weird," unquote. And I want to know what that means. I'd like to know what gaps in our knowledge of physics we are most focused on trying to fill right now.

#text#random thought#physics#STEM#im just gonna tag people who might search for this shit#theoretical physics#conspiracy theories#uhh#NASA#MIT#fuckin uhhhhhhhhh uhh who else uhhhhh#nerds#science#learning#math#thats all i got#please help me smart people im academically helpless and need the strong embrace of your hot wet muscley brains :)

194 notes

Text

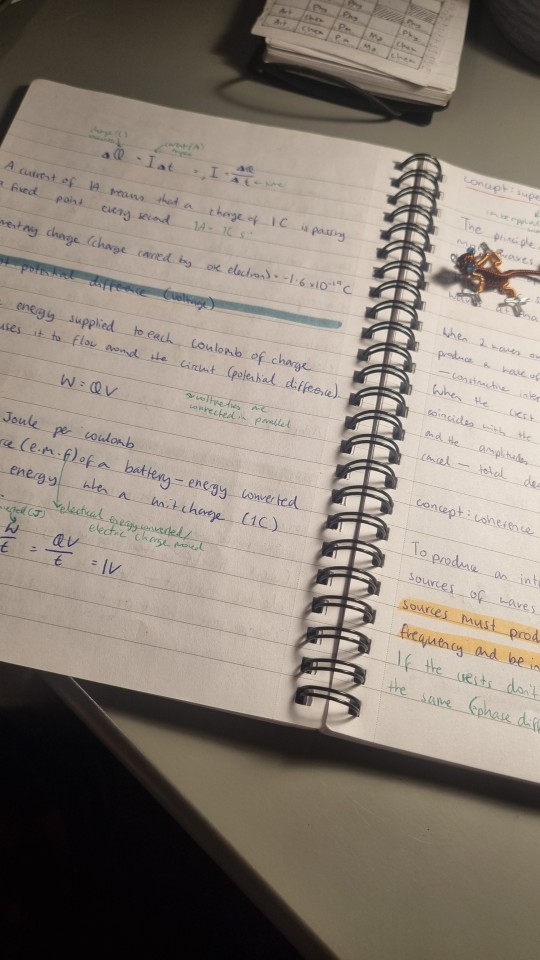

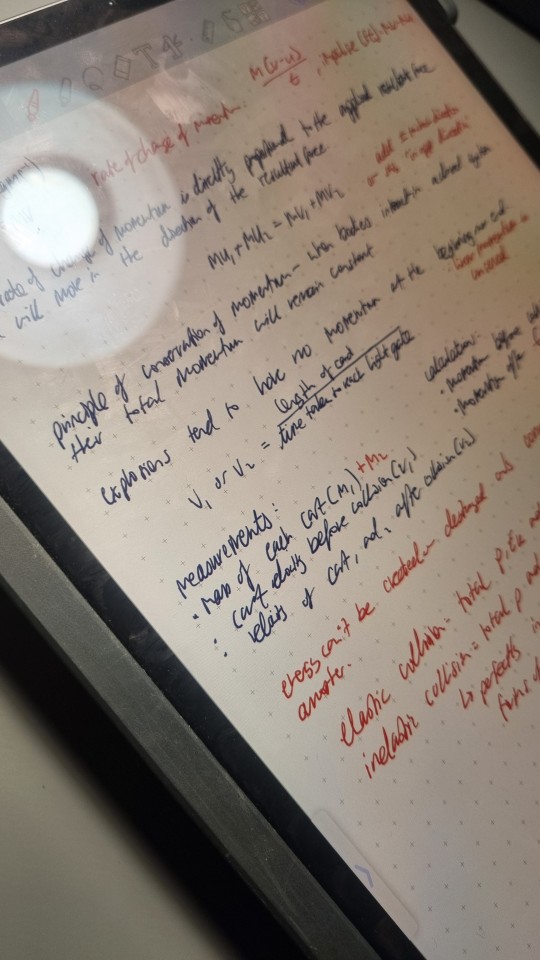

|| ༻`` 28 Jan 24 — Sunday

100 days of productivity 28/100

I wanted to get up at 5 today to study as I couldn't really study yesterday. I messed with my alarm until it was after 8 when I finally got up... Despite me being really disappointed and angry with myself for that (I was really looking forward to getting up so early to study the night before), the day turned out to be really nice. I:

spent quality time with some family

listened to some good music

revised physics for an hour (decided sleep is more important than studying right now)

watched some short self-improvement videos

wrote down my MIT's for tomorrow and rough plan for next week

Goodnight <3

#studyblr#dark academia#light academia#chaotic academia#study motivation#100dop#study inspiration#100 days of productivity#100 days of productivity challenge#student#self improvement#discipline#family#physics#studying#music#o2life#mit#digital notes#study notes

24 notes

Text

I'm curious about everyones headcanons about this

#skyrim#khajiit#skyrim the elder scrolls#the elder scrolls#apparently the way they drink is also called cutta cutta drinking named after the cat called Cutta Cutta#who belonged to a certain Dr. Stocker who just so happened to be working at MIT and doing physics related stuff (very oversimplified by me)#and the dude just at one point wondered how the fuck a cat lapping its drink actually works physics and hydrodynamics wise#so he got a bunch of homies together and they spent 3 years observing the way Cutta Cutta drank#You can actually find the videos of the observations on the MIT youtube channel by simply looking for the cat's name lmao#I learned all of this simply because making the poll made me wonder if there is a specific name for how cats drink#and there is!#so now I must share it all with you#edit: I made a spelling mistake in the title question and tumblr won't let me fix it. time to throw myself off the throat of the world

16 notes

Text

The Elegant Math of Machine Learning

Anil Ananthaswamy’s 3 Greatest Revelations While Writing Why Machines Learn.

— By Anil Ananthaswamy | July 23, 2024

Image: Aree S., Shutterstock

1- Machines Can Learn!

A few years ago, I decided I needed to learn how to code simple machine learning algorithms. I had been writing about machine learning as a journalist, and I wanted to understand the nuts and bolts. (My background as a software engineer came in handy.) One of my first projects was to build a rudimentary neural network to try to do what astronomer and mathematician Johannes Kepler did in the early 1600s: analyze data collected by Danish astronomer Tycho Brahe about the positions of Mars to come up with the laws of planetary motion.

I quickly discovered that an artificial neural network—a type of machine learning algorithm that uses networks of computational units called artificial neurons—would require far more data than was available to Kepler. To satisfy the algorithm’s hunger, I generated a decade’s worth of data about the daily positions of planets using a simple simulation of the solar system.

After many false starts and dead-ends, I coded a neural network that—given the simulated data—could predict future positions of planets. It was beautiful to observe. The network indeed learned the patterns in the data and could prognosticate about, say, where Mars might be in five years.

Functions of the Future: Given enough data, some machine learning algorithms can approximate just about any sort of function—whether converting x into y or a string of words into a painterly illustration—author Anil Ananthaswamy found out while writing his new book, Why Machines Learn: The Elegant Math Behind Modern AI. Photo courtesy of Anil Ananthaswamy.

I was instantly hooked. Sure, Kepler did much, much more with much less—he came up with overarching laws that could be codified in the symbolic language of math. My neural network simply took in data about prior positions of planets and spit out data about their future positions. It was a black box, its inner workings undecipherable to my nascent skills. Still, it was a visceral experience to witness Kepler’s ghost in the machine.

The project inspired me to learn more about the mathematics that underlies machine learning. The desire to share the beauty of some of this math led to Why Machines Learn.

2- It’s All (Mostly) Vectors.

One of the most amazing things I learned about machine learning is that everything and anything—be it positions of planets, an image of a cat, the audio recording of a bird call—can be turned into a vector.

In machine learning models, vectors are used to represent both the input data and the output data. A vector is simply a sequence of numbers. Each number can be thought of as the distance from the origin along some axis of a coordinate system. For example, here’s one such sequence of three numbers: 5, 8, 13. So, 5 is five steps along the x-axis, 8 is eight steps along the y-axis and 13 is 13 steps along the z-axis. If you take these steps, you’ll reach a point in 3-D space, which represents the vector, expressed as the sequence of numbers in brackets, like this: [5 8 13].

Now, let’s say you want your algorithm to represent a grayscale image of a cat. Well, each pixel in that image is a number encoded using one byte or eight bits of information, so it has to be a number between zero and 255, where zero means black and 255 means white, and the numbers in-between represent varying shades of gray.

If it’s a 100×100 pixel image, then you have 10,000 pixels in total in the image. So if you line up the numerical values of each pixel in a row, voila, you have a vector representing the cat in 10,000-dimensional space. Each element of that vector represents the distance along one of 10,000 axes. A machine learning algorithm encodes the 100×100 image as a 10,000-dimensional vector. As far as the algorithm is concerned, the cat has become a point in this high-dimensional space.
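The flattening step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `flatten_image` and the synthetic gradient image are hypothetical stand-ins, not something from the article, and a real pipeline would load actual pixel data.

```python
def flatten_image(pixels):
    """Flatten a 2-D grid of grayscale values (0-255) row by row into one vector."""
    vector = []
    for row in pixels:
        for value in row:
            # One byte per pixel: 0 means black, 255 means white.
            if not 0 <= value <= 255:
                raise ValueError("grayscale values must fit in one byte (0-255)")
            vector.append(value)
    return vector


# Hypothetical 100x100 "image": a synthetic gradient standing in for a cat photo.
image = [[(r + c) % 256 for c in range(100)] for r in range(100)]
cat_vector = flatten_image(image)

print(len(cat_vector))  # 10000: one coordinate per pixel
```

Each entry of `cat_vector` is the coordinate along one of the 10,000 axes, so the whole list is the single point that stands for the image.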

Turning images into vectors and treating them as points in some mathematical space allows a machine learning algorithm to now proceed to learn about patterns that exist in the data, and then use what it’s learned to make predictions about new unseen data. Now, given a new unlabeled image, the algorithm simply checks where the associated vector, or the point formed by that image, falls in high-dimensional space and classifies it accordingly. What we have is one, very simple type of image recognition algorithm: one which learns, given a bunch of images annotated by humans as that of a cat or a dog, how to map those images into high-dimensional space and use that map to make decisions about new images.
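One very simple way to "check where the point falls" is a nearest-centroid classifier. The article does not name a specific algorithm, so this choice is an assumption made for illustration, and the tiny four-dimensional vectors below are made-up stand-ins for real 10,000-dimensional image vectors.

```python
import math


def centroid(vectors):
    """Average a list of equal-length vectors coordinate by coordinate."""
    n = len(vectors)
    return [sum(v[i] for v in vectors) / n for i in range(len(vectors[0]))]


def distance(a, b):
    """Euclidean distance between two points in the same space."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def fit(labeled):
    """Learn one centroid per label from human-annotated example vectors."""
    return {label: centroid(vectors) for label, vectors in labeled.items()}


def classify(model, vector):
    """Assign the label whose centroid lies closest to the new point."""
    return min(model, key=lambda label: distance(model[label], vector))


# Made-up training data: each list is a tiny stand-in for a flattened image.
training = {
    "cat": [[10, 20, 30, 40], [12, 18, 33, 41]],
    "dog": [[200, 210, 190, 220], [205, 215, 185, 225]],
}
model = fit(training)

print(classify(model, [15, 25, 28, 38]))  # cat
```

The "map" the algorithm learns here is just the pair of centroids; more capable methods learn far richer boundaries, but the idea of classifying by location in the space is the same.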

3- Some Machine Learning Algorithms Can Be “Universal Function Approximators.”

One way to think about a machine learning algorithm is that it converts an input, x, into an output, y. The inputs and outputs can be a single number or a vector. Consider y = f (x). Here, x could be a 10,000-dimensional vector representing a cat or a dog, and y could be 0 for cat and 1 for dog, and it’s the machine learning algorithm’s job to find, given enough annotated training data, the best possible function, f, that converts x to y.

There are mathematical proofs that show that certain machine learning algorithms, such as deep neural networks, are “universal function approximators,” capable in principle of approximating any function, no matter how complex.

A deep neural network has layers of artificial neurons, with an input layer, an output layer, and one or more so-called hidden layers, which are sandwiched between the input and output layers. There’s a mathematical result called the universal approximation theorem that shows that given an arbitrarily large number of neurons, even a network with just one hidden layer can approximate any function, meaning: If a correlation exists in the data between the input and the desired output, then the neural network will be able to find a very good approximation of a function that implements this correlation.
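To get a feel for why one hidden layer can be enough, here is a minimal sketch that builds (rather than trains) a one-hidden-layer network by hand. It assumes ReLU neurons and a one-dimensional input, and the helper names (`build_network`, `predict`, `max_error`) are hypothetical. It is not the theorem's proof, only an illustration that adding hidden neurons shrinks the approximation error for one smooth function, sin(x).

```python
import math


def relu(z):
    """Activation of a single hidden neuron."""
    return z if z > 0.0 else 0.0


def build_network(f, a, b, n_hidden):
    """Hand-set weights so the network linearly interpolates f on [a, b].

    Hidden neuron i computes relu(x - knot_i); its output weight is the
    change in slope needed at that knot.
    """
    h = (b - a) / n_hidden
    knots = [a + i * h for i in range(n_hidden)]
    values = [f(a + i * h) for i in range(n_hidden + 1)]
    slopes = [(values[i + 1] - values[i]) / h for i in range(n_hidden)]
    weights = [slopes[0]] + [slopes[i] - slopes[i - 1] for i in range(1, n_hidden)]
    return knots, weights, values[0]


def predict(network, x):
    """Output layer: a bias plus a weighted sum of the hidden activations."""
    knots, weights, bias = network
    return bias + sum(w * relu(x - k) for w, k in zip(weights, knots))


def max_error(f, network, a, b, samples=2001):
    """Largest gap between f and the network on an evenly spaced grid."""
    xs = [a + (b - a) * i / (samples - 1) for i in range(samples)]
    return max(abs(f(x) - predict(network, x)) for x in xs)


small = build_network(math.sin, 0.0, 2 * math.pi, 10)
large = build_network(math.sin, 0.0, 2 * math.pi, 50)

print(max_error(math.sin, small, 0.0, 2 * math.pi))  # error with 10 hidden neurons
print(max_error(math.sin, large, 0.0, 2 * math.pi))  # smaller error with 50
```

In practice the weights are found by training on data rather than set by hand, and the theorem itself says nothing about how easy that training is.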

This is a profound result, and one reason why deep neural networks are being trained to do more and more complex tasks, as long as we can provide them with enough pairs of input-output data and make the networks big enough.

So, whether it’s a function that takes an image and turns that into a 0 (for cat) and 1 (for dog), or a function that takes a string of words and converts that into an image for which those words serve as a caption, or potentially even a function that takes the snapshot of the road ahead and spits out instructions for a car to change lanes or come to a halt or some such maneuver, universal function approximators can in principle learn and implement such functions, given enough training data. The possibilities are endless, while keeping in mind that correlation does not equate to causation.

— Anil Ananthaswamy is a Science Journalist who writes about AI and Machine Learning, Physics, and Computational Neuroscience. He’s a 2019-20 MIT Knight Science Journalism Fellow. His latest book is Why Machines Learn: The Elegant Math Behind Modern AI.

#Nautilus#Mathematics#Elegant Math#Machine Learning#Mathematics | Mostly Vectors#Algorithms | “Universal Function Approximators”#Anil Ananthaswamy#Physics#Computational Neuroscience#MIT | Knight Science Journalism Fellow

4 notes

Text

Btw MIT and some other universities have some of their lectures posted for free on YouTube

2 notes

Text

the split between the polytechnic-type and the liberal-arts-college type in the US isn't so large in lower-ranked universities but the higher up you go the more apparent it gets. sure, princeton and MIT are the number 1 and 2 ranks for universities in the US, but the culture there could not be more different.

this is why miles morales wanting to study quantum physics at princeton is funny

#my man if you have the chops for princeton you have the chops for MIT#don't go for the ivy league. it's a soul killer for the physical sciences#many such cases!

9 notes

Text

Fear of a Black Universe: An Outsider's Guide to the Future of Physics - Stephon Alexander

....a top cosmologist argues that physics must embrace the excluded and listen to the unheard

When asked by legendary theoretical physicist Christopher Isham why he had attended graduate school, cosmologist Stephon Alexander answered: "To become a better physicist." As a young student, he could hardly have anticipated Isham's response: "Then stop reading those physics books." Instead, Isham said, Alexander should start listening to his dreams.

This is only the first of the many lessons in Fear of a Black Universe. As Alexander explains, greatness in physics requires transgression, a willingness to reject conventional expectations. He shows why progress happens when some physicists come to think outside the mainstream, and why, as in great jazz, great physics requires a willingness to make things up as one goes along.

Compelling and necessary, Fear of a Black Universe offers us remarkable insight into the art of physics and empowers us all to think big.

[Stephon Alexander is a professor of theoretical physics at Brown University and an established jazz musician. He was the scientific consultant to Ava DuVernay for the feature film A Wrinkle in Time. His work has been featured by the New York Times, the Wall Street Journal, and many other outlets.]

The MIT Press // Bookstore

2 notes

Text

This is exactly what I want my future hypothetical family to be. This is what I will pass down to my children.

my family is fucking addicted to macgyvering and it's becoming a problem. every time something in this house breaks, instead of doing the sensible thing of replacing it or calling someone qualified to fix it, we all group around the offending object with a manic look in our eyes and everyone gets a try at fixing it while being cheered on or ridiculed by the rest.

it's a beautiful bonding activity, but the "creative" fixes have turned our house into a quasihaunted escape room like contraption where everything works, but only in the wonkiest of ways. you need a huge block of iron to turn on the stove. the oven only works if a specific clock is plugged in. the bread machine has a huge wood block just stapled to it that has become foundational to its function. sometimes when you use the toaster the doorbell rings. and that's just the kitchen.

it's all fun and games until you have guests over and you have to lay out the rules of the house like it's a fucking board game. welcome to the beautiful guest room. don't pull out the couch yourself you need a screwdriver for that, and that metal rod makes the lamp work so don't move it. it also made me a terrifying roommate in college, because it makes me think i can fix anything with enough hubris and a drill. you want to call the landlord about a leaky faucet? as if. one time my dad made me install a new power socket because we ran out of extension cords

#I have a similar approach that was much less state sanctioned#which arose from being a neglected child that was very mechanically apt (descended from aircraft mechanics#electrical engineers#and physicists)#I wasn’t TRAINED per se but the tinkering gene was alive and well in these hands#and we lived in a probably legally condemnable house#so I macgyvered the shit out of anything and everything#did I electrocute myself a lot? Hell yes#did I learn by age 7 where the breakers were and how to turn them off before doing wiring? also hell yes. improvise adapt overcome bitches#our parents didn’t care to actually repair anything so if I wanted a functional house for myself and my brother I had to figure it out#and I loved a puzzle and I liked SOLVING a puzzle even more#so you better bet there was a catch to like nearly every function in that house#‘don’t touch that screwdriver it’s stabbed into the Sheetrock there for a reason it’s conducting the electricity for the dining room light’#’yeah I know this screwdriver is in the laundry room just trust me pls don’t remove it it took forever to find where to stab it’#on the flip side I have SINCE gotten a degree in engineering and 9/10ths of a doctorate in physics so#I can do very state sanctioned macgyvering now#with the spirit of my winging-it youth and the knowledge of MIT#I can send my family off into the world with solid fixit skills

61K notes

Text

Is ChatGPT the new "Boob Tube?"

JB: Hi. In his book, “Four Arguments for the Elimination of Television,” Jerry Mander (such a zeitgeist name – am I right), noted that scientific studies showed that children had lower brain activity watching television than they did when they were asleep. Now, in an article for The Sunday Times titled, “Using ChatGPT for Work? It Might Make You Stupid,” Mark Sellman describes experiments done by…

#AI#artificial-intelligence#chatgpt#Cognitive Atrophy#Four Arguments for the Elimination of Television#Jerry Mander#Luxury Collapse#Mark Sellman#MIT#Moral Outsourcing#Physical Degeneration#Reduced Brain Activity#technology#the times#Using ChatGPT for Work?#Weaker Engagement#Worse Memory Retention

0 notes

Video

youtube

(via New form of magnetism could revolutionize spintronics)

0 notes