#Microservices application Monitoring

Struggling with Microservices Monitoring?

Microservices architectures bring agility but also complexity. Whether you are monitoring individual services or diagnosing real-time issues across them, the right APM tool makes all the difference.

🔍 Discover how Application Performance Monitoring (APM) boosts microservices observability, performance, and reliability.

✅ Ensure seamless APM for microservices

✅ Resolve bottlenecks faster

✅ Achieve end-to-end visibility

📖 Read now: https://www.atatus.com/blog/importance-of-apm-in-microservices

Interview Questions to Ask When Hiring a .NET Developer

Your choice of .NET developer can significantly affect the success of your enterprise or web apps. Getting the decision right during interviews is crucial because .NET is a powerful framework used across many industries, including finance and e-commerce. Many software businesses look for .NET engineers who not only know the framework but can also apply it clearly and precisely to real-world business problems.

These essential questions will assist you in evaluating candidates' technical proficiency, coding style, and compatibility with your development team as you get ready to interview them for your upcoming project.

Assessing Technical Skills, Experience, and Real-World Problem Solving

What experience do you have with the .NET ecosystem?

To find out how well the candidate understands .NET Core, ASP.NET MVC, Web API, and associated tools, start with a general question. Seek answers that discuss actual projects and real-world applications rather than only theory.

Follow-up: What version of .NET are you using right now, and how do you manage updates in real-world settings?

When hiring .NET developers, experience with recent versions such as .NET 6 or .NET 8 usually means fewer compatibility problems and better performance.

How do you manage dependency injection in .NET applications?

Dependency injection is an essential part of scalable .NET design. A strong candidate will discuss the built-in container, how they register services, and how injection improves modularity and testability.
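The idea behind the question can be shown in a few lines. The sketch below uses Python rather than C# purely for illustration, and every name in it (Notifier, EmailNotifier, FakeNotifier, OrderService) is hypothetical. It shows constructor injection only, not the .NET container itself.

```python
from typing import Protocol


class Notifier(Protocol):
    """Abstraction the service depends on (hypothetical name)."""

    def send(self, message: str) -> str: ...


class EmailNotifier:
    """Stand-in for a production implementation."""

    def send(self, message: str) -> str:
        return f"email: {message}"


class FakeNotifier:
    """Test double that records messages instead of sending them."""

    def __init__(self) -> None:
        self.sent = []

    def send(self, message: str) -> str:
        self.sent.append(message)
        return f"fake: {message}"


class OrderService:
    # The dependency is passed in rather than created inside the class,
    # so the caller (or a DI container) chooses the implementation.
    def __init__(self, notifier: Notifier) -> None:
        self._notifier = notifier

    def place_order(self, item: str) -> str:
        return self._notifier.send(f"order placed for {item}")
```

Because OrderService never constructs its own notifier, a test can hand it FakeNotifier while production code hands it EmailNotifier. That is the modularity and testability benefit a candidate should be able to explain.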

Can you explain the difference between synchronous and asynchronous programming in .NET?

Asynchronous programming improves throughput and responsiveness, particularly in microservices and backend APIs, because threads are not blocked while waiting on I/O. Look for a concise description and examples that use Task, ConfigureAwait, or async/await.

Tip: Backend candidates who understand async patterns are more likely to build efficient apps.
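To make the contrast concrete, here is a minimal sketch in Python's asyncio, offered as an analogy to .NET's async/await rather than as .NET code. The function names and the 0.1-second delays are assumptions chosen for illustration; the sleeps stand in for I/O such as a database or HTTP call.

```python
import asyncio
import time


def fetch_sync(name: str, delay: float) -> str:
    time.sleep(delay)  # blocks the calling thread for the whole wait
    return name


async def fetch_async(name: str, delay: float) -> str:
    await asyncio.sleep(delay)  # yields control so other work can run
    return name


def run_sync() -> list:
    # The two waits happen one after the other.
    return [fetch_sync("a", 0.1), fetch_sync("b", 0.1)]


async def run_async() -> list:
    # The two waits overlap, so total time is close to the longest one.
    return await asyncio.gather(fetch_async("a", 0.1), fetch_async("b", 0.1))


if __name__ == "__main__":
    print(run_sync())
    print(asyncio.run(run_async()))
```

Both versions return the same results. The asynchronous one finishes sooner because the waits overlap instead of queuing behind each other.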

What tools do you use for debugging and performance monitoring?

Skilled developers know how to optimize code in addition to writing it. Check for references to Postman, Application Insights, Visual Studio tools, or profiling tools such as dotTrace.

This demonstrates the developer's ability to handle live production problems and optimize performance.

How do you write unit and integration tests for your .NET applications?

Enterprise apps require testing. A trustworthy developer should be knowledgeable about test coverage, mocking frameworks, and tools like xUnit, NUnit, or MSTest.

Hiring engineers with strong testing practices helps tech organizations avoid expensive errors later on, especially when delivering products on tight deadlines.
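A candidate's answer should map onto something like the sketch below. It uses Python's built-in unittest and unittest.mock as a stand-in for xUnit with a mocking framework, and the PriceService class and its get_rate collaborator are hypothetical names invented for the example.

```python
import unittest
from unittest.mock import Mock


class PriceService:
    """Hypothetical class under test; the rate client is injected."""

    def __init__(self, rate_client):
        self._rate_client = rate_client

    def price_with_tax(self, net: float, region: str) -> float:
        rate = self._rate_client.get_rate(region)
        return round(net * (1 + rate), 2)


class PriceServiceTests(unittest.TestCase):
    def test_applies_rate_from_client(self):
        # Arrange: replace the real dependency with a mock.
        client = Mock()
        client.get_rate.return_value = 0.2
        service = PriceService(client)

        # Act and assert on the result and on the interaction.
        self.assertEqual(service.price_with_tax(100.0, "EU"), 120.0)
        client.get_rate.assert_called_once_with("EU")


if __name__ == "__main__":
    unittest.main()
```

The unit test isolates PriceService from its dependency. An integration test would swap the mock for a real client and check the two together.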

Describe a time you optimized a poorly performing .NET application.

This practical question evaluates communication and problem-solving abilities. Look for answers that involve database query optimization, code refactoring, or profiling.

Are you familiar with cloud deployment for .NET apps?

Now that many apps are hosted on AWS or Azure, find out how the candidate handles cloud environments. Look for experience with CI/CD pipelines, containers, or Azure App Services.

This is particularly crucial if you want to work with Dot Net developers to create scalable, long-term solutions.

Final Thoughts

A well-structured interview reveals a developer's thought process, problem-solving techniques, and ability to operate under pressure. These questions give you a practical way to assess applicants with confidence if you intend to hire .NET developers for intricate or high-volume projects.

The ideal .NET hire for expanding tech organizations does more than just write code; they create the framework around which your products are built.

How Python Powers Scalable and Cost-Effective Cloud Solutions

Explore the role of Python in developing scalable and cost-effective cloud solutions. This guide covers Python's advantages in cloud computing, addresses potential challenges, and highlights real-world applications, providing insights into leveraging Python for efficient cloud development.

Introduction

In today's rapidly evolving digital landscape, businesses are increasingly leveraging cloud computing to enhance scalability, optimize costs, and drive innovation. Among the myriad of programming languages available, Python has emerged as a preferred choice for developing robust cloud solutions. Its simplicity, versatility, and extensive library support make it an ideal candidate for cloud-based applications.

In this comprehensive guide, we will delve into how Python empowers scalable and cost-effective cloud solutions, explore its advantages, address potential challenges, and highlight real-world applications.

Why Is Python the Preferred Choice for Cloud Computing?

Python's popularity in cloud computing is driven by several factors, making it the preferred language for developing and managing cloud solutions. Here are some key reasons why Python stands out:

Simplicity and Readability: Python's clean and straightforward syntax allows developers to write and maintain code efficiently, reducing development time and costs.

Extensive Library Support: Python offers a rich set of libraries and frameworks like Django, Flask, and FastAPI for building cloud applications.

Seamless Integration with Cloud Services: Python is well-supported across major cloud platforms like AWS, Azure, and Google Cloud.

Automation and DevOps Friendly: Python supports infrastructure automation through tools like Ansible and Boto3, and it works alongside provisioning tools such as Terraform.

Strong Community and Enterprise Adoption: Python has a massive global community that continuously improves and innovates cloud-related solutions.

How Python Enables Scalable Cloud Solutions

Scalability is a critical factor in cloud computing, and Python provides multiple ways to achieve it:

1. Automation of Cloud Infrastructure

Python's compatibility with cloud service provider SDKs, such as AWS Boto3, Azure SDK for Python, and Google Cloud Client Library, enables developers to automate the provisioning and management of cloud resources efficiently.

2. Containerization and Orchestration

Python integrates seamlessly with Docker and Kubernetes, enabling businesses to deploy scalable containerized applications efficiently.

3. Cloud-Native Development

Frameworks like Flask, Django, and FastAPI support microservices architecture, allowing businesses to develop lightweight, scalable cloud applications.

4. Serverless Computing

Python's support for serverless platforms, including AWS Lambda, Azure Functions, and Google Cloud Functions, allows developers to build applications that automatically scale in response to demand, optimizing resource utilization and cost.
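As a rough illustration, a serverless function in Python is often just a single handler. The sketch below follows the handler(event, context) shape used by AWS Lambda's Python runtime. The "name" field in the event and the statusCode/body response layout are assumptions, chosen here to resemble an HTTP-style integration.

```python
import json


def lambda_handler(event, context):
    """Entry point the platform invokes once per event.

    The event payload shape (a dict with an optional "name" key)
    is an assumption made for this example.
    """
    name = (event or {}).get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"hello, {name}"}),
    }


if __name__ == "__main__":
    # Local smoke test; in the cloud the platform supplies event and context.
    print(lambda_handler({"name": "cloud"}, None))
```

The function holds no server state, which is what lets the platform run as many copies in parallel as demand requires.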

5. AI and Big Data Scalability

Python’s dominance in AI and data science makes it an ideal choice for cloud-based AI/ML services like AWS SageMaker, Google AI, and Azure Machine Learning.

Looking for expert Python developers to build scalable cloud solutions? Hire Python Developers now!

Advantages of Using Python for Cloud Computing

Cost Efficiency: Python’s compatibility with serverless computing and auto-scaling strategies minimizes cloud costs.

Faster Development: Python’s simplicity accelerates cloud application development, reducing time-to-market.

Cross-Platform Compatibility: Python runs seamlessly across different cloud platforms.

Security and Reliability: Python-based security tools help in encryption, authentication, and cloud monitoring.

Strong Community Support: Python developers worldwide contribute to continuous improvements, making it future-proof.

Challenges and Considerations

While Python offers many benefits, there are some challenges to consider:

Performance Limitations: Python is typically interpreted, so CPU-bound code may run slower than in compiled languages like Java or C++.

Memory Consumption: Python applications might require optimization to handle large-scale cloud workloads efficiently.

Learning Curve for Beginners: Though Python is simple, mastering cloud-specific frameworks requires time and expertise.

Python Libraries and Tools for Cloud Computing

Python’s ecosystem includes powerful libraries and tools tailored for cloud computing, such as:

Boto3: AWS SDK for Python, used for cloud automation.

Google Cloud Client Library: Helps interact with Google Cloud services.

Azure SDK for Python: Enables seamless integration with Microsoft Azure.

Apache Libcloud: Provides a unified interface for multiple cloud providers.

PyCaret: Simplifies machine learning deployment in cloud environments.

Real-World Applications of Python in Cloud Computing

1. Netflix - Scalable Streaming with Python

Netflix extensively uses Python for automation, data analysis, and managing cloud infrastructure, enabling seamless content delivery to millions of users.

2. Spotify - Cloud-Based Music Streaming

Spotify leverages Python for big data processing, recommendation algorithms, and cloud automation, ensuring high availability and scalability.

3. Reddit - Handling Massive Traffic

Reddit uses Python and AWS cloud solutions to manage heavy traffic while optimizing server costs efficiently.

Future of Python in Cloud Computing

The future of Python in cloud computing looks promising with emerging trends such as:

AI-Driven Cloud Automation: Python-powered AI and machine learning will drive intelligent cloud automation.

Edge Computing: Python will play a crucial role in processing data at the edge for IoT and real-time applications.

Hybrid and Multi-Cloud Strategies: Python’s flexibility will enable seamless integration across multiple cloud platforms.

Increased Adoption of Serverless Computing: More enterprises will adopt Python for cost-effective serverless applications.

Conclusion

Python's simplicity, versatility, and robust ecosystem make it a powerful tool for developing scalable and cost-effective cloud solutions. By leveraging Python's capabilities, businesses can enhance their cloud applications' performance, flexibility, and efficiency.

Ready to harness the power of Python for your cloud solutions? Explore our Python Development Services to discover how we can assist you in building scalable and efficient cloud applications.

FAQs

1. Why is Python used in cloud computing?

Python is widely used in cloud computing due to its simplicity, extensive libraries, and seamless integration with cloud platforms like AWS, Google Cloud, and Azure.

2. Is Python good for serverless computing?

Yes! Python works efficiently in serverless environments like AWS Lambda, Azure Functions, and Google Cloud Functions, making it an ideal choice for cost-effective, auto-scaling applications.

3. Which companies use Python for cloud solutions?

Major companies like Netflix, Spotify, Dropbox, and Reddit use Python for cloud automation, AI, and scalable infrastructure management.

4. How does Python help with cloud security?

Python offers robust security libraries like PyCryptodome and pyOpenSSL (Python bindings for OpenSSL), which support encryption and authentication for secure cloud applications.

5. Can Python handle big data in the cloud?

Yes! Python supports big data processing with tools like Apache Spark (through PySpark), Pandas, and NumPy, making it suitable for data-driven cloud applications.

Exploring the Azure Technology Stack: A Solution Architect’s Journey

Kavin

As a solution architect, my career revolves around solving complex problems and designing systems that are scalable, secure, and efficient. The rise of cloud computing has transformed the way we think about technology, and Microsoft Azure has been at the forefront of this evolution. With its diverse and powerful technology stack, Azure offers endless possibilities for businesses and developers alike. My journey with Azure began with Microsoft Azure training online, which not only deepened my understanding of cloud concepts but also helped me unlock the potential of Azure’s ecosystem.

In this blog, I will share my experience working with a specific Azure technology stack that has proven to be transformative in various projects. This stack primarily focuses on serverless computing, container orchestration, DevOps integration, and globally distributed data management. Let’s dive into how these components come together to create robust solutions for modern business challenges.

Understanding the Azure Ecosystem

Azure’s ecosystem is vast, encompassing services that cater to infrastructure, application development, analytics, machine learning, and more. For this blog, I will focus on a specific stack that includes:

Azure Functions for serverless computing.

Azure Kubernetes Service (AKS) for container orchestration.

Azure DevOps for streamlined development and deployment.

Azure Cosmos DB for globally distributed, scalable data storage.

Each of these services has unique strengths, and when used together, they form a powerful foundation for building modern, cloud-native applications.

1. Azure Functions: Embracing Serverless Architecture

Serverless computing has redefined how we build and deploy applications. With Azure Functions, developers can focus on writing code without worrying about managing infrastructure. Azure Functions supports multiple programming languages and offers seamless integration with other Azure services.

Real-World Application

In one of my projects, we needed to process real-time data from IoT devices deployed across multiple locations. Azure Functions was the perfect choice for this task. By integrating Azure Functions with Azure Event Hubs, we were able to create an event-driven architecture that processed millions of events daily. The serverless nature of Azure Functions allowed us to scale dynamically based on workload, ensuring cost-efficiency and high performance.

Key Benefits:

Auto-scaling: Automatically adjusts to handle workload variations.

Cost-effective: Pay only for the resources consumed during function execution.

Integration-ready: Easily connects with services like Logic Apps, Event Grid, and API Management.

2. Azure Kubernetes Service (AKS): The Power of Containers

Containers have become the backbone of modern application development, and Azure Kubernetes Service (AKS) simplifies container orchestration. AKS provides a managed Kubernetes environment, making it easier to deploy, manage, and scale containerized applications.

Real-World Application

In a project for a healthcare client, we built a microservices architecture using AKS. Each service—such as patient records, appointment scheduling, and billing—was containerized and deployed on AKS. This approach provided several advantages:

Isolation: Each service operated independently, improving fault tolerance.

Scalability: AKS scaled specific services based on demand, optimizing resource usage.

Observability: Using Azure Monitor, we gained deep insights into application performance and quickly resolved issues.

The integration of AKS with Azure DevOps further streamlined our CI/CD pipelines, enabling rapid deployment and updates without downtime.

Key Benefits:

Managed Kubernetes: Reduces operational overhead with automated updates and patching.

Multi-region support: Enables global application deployments.

Built-in security: Integrates with Azure Active Directory and offers role-based access control (RBAC).

3. Azure DevOps: Streamlining Development Workflows

Azure DevOps is an all-in-one platform for managing development workflows, from planning to deployment. It includes tools like Azure Repos, Azure Pipelines, and Azure Artifacts, which support collaboration and automation.

Real-World Application

For an e-commerce client, we used Azure DevOps to establish an efficient CI/CD pipeline. The project involved multiple teams working on front-end, back-end, and database components. Azure DevOps provided:

Version control: Using Azure Repos for centralized code management.

Automated pipelines: Azure Pipelines for building, testing, and deploying code.

Artifact management: Storing dependencies in Azure Artifacts for seamless integration.

The result? Deployment cycles that previously took weeks were reduced to just a few hours, enabling faster time-to-market and improved customer satisfaction.

Key Benefits:

End-to-end integration: Unifies tools for seamless development and deployment.

Scalability: Supports projects of all sizes, from startups to enterprises.

Collaboration: Facilitates team communication with built-in dashboards and tracking.

4. Azure Cosmos DB: Global Data at Scale

Azure Cosmos DB is a globally distributed, multi-model database service designed for mission-critical applications. It guarantees low latency, high availability, and scalability, making it ideal for applications requiring real-time data access across multiple regions.

Real-World Application

In a project for a financial services company, we used Azure Cosmos DB to manage transaction data across multiple continents. The database’s multi-region replication ensured data consistency and availability, even during regional outages. Additionally, Cosmos DB’s support for multiple APIs (SQL, MongoDB, Cassandra, etc.) allowed us to integrate seamlessly with existing systems.

Key Benefits:

Global distribution: Data is replicated across regions with minimal latency.

Flexibility: Supports various data models, including key-value, document, and graph.

SLAs: Offers industry-leading SLAs for availability, throughput, and latency.

Building a Cohesive Solution

Combining these Azure services creates a technology stack that is flexible, scalable, and efficient. Here’s how they work together in a hypothetical solution:

Data Ingestion: IoT devices send data to Azure Event Hubs.

Processing: Azure Functions processes the data in real-time.

Storage: Processed data is stored in Azure Cosmos DB for global access.

Application Logic: Containerized microservices run on AKS, providing APIs for accessing and manipulating data.

Deployment: Azure DevOps manages the CI/CD pipeline, ensuring seamless updates to the application.

This architecture demonstrates how Azure’s technology stack can address modern business challenges while maintaining high performance and reliability.
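The flow of the hypothetical solution above can be sketched without any Azure dependency. In the Python below, InMemoryHub and InMemoryStore are made-up stand-ins for Azure Event Hubs and Azure Cosmos DB, process plays the role of the function, and get_device represents an API served from a container. None of these names come from an Azure SDK.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Reading:
    device_id: str
    temperature_c: float


class InMemoryHub:
    """Stand-in for an event hub: producers append, consumers drain."""

    def __init__(self):
        self._events = deque()

    def send(self, event):
        self._events.append(event)

    def drain(self):
        while self._events:
            yield self._events.popleft()


class InMemoryStore:
    """Stand-in for a document database keyed by device id."""

    def __init__(self):
        self.docs = {}

    def upsert(self, key, doc):
        self.docs[key] = doc


def process(reading):
    """Per-event function: enrich the raw reading before storage."""
    return {
        "device_id": reading.device_id,
        "temperature_c": reading.temperature_c,
        "overheated": reading.temperature_c > 80.0,  # threshold is illustrative
    }


def run_pipeline(hub, store):
    """Ingest -> process -> store; returns how many events were handled."""
    count = 0
    for reading in hub.drain():
        store.upsert(reading.device_id, process(reading))
        count += 1
    return count


def get_device(store, device_id):
    """What an API endpoint running in a container might return."""
    return store.docs.get(device_id)
```

Each stage only knows the interface of its neighbor. That is why the real services can be scaled or replaced independently.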

Final Thoughts

My journey with Azure has been both rewarding and transformative. The training I received at ACTE Institute provided me with a strong foundation to explore Azure’s capabilities and apply them effectively in real-world scenarios. For those new to cloud computing, I recommend starting with a solid training program that offers hands-on experience and practical insights.

As the demand for cloud professionals continues to grow, specializing in Azure’s technology stack can open doors to exciting opportunities. If you’re based in Hyderabad or prefer online learning, consider enrolling in Microsoft Azure training in Hyderabad to kickstart your journey.

Azure’s ecosystem is continuously evolving, offering new tools and features to address emerging challenges. By staying committed to learning and experimenting, we can harness the full potential of this powerful platform and drive innovation in every project we undertake.

Cloud-Native Development in the USA: A Comprehensive Guide

Introduction

Cloud-native development is transforming how businesses in the USA build, deploy, and scale applications. By leveraging cloud infrastructure, microservices, containers, and DevOps, organizations can enhance agility, improve scalability, and drive innovation.

As cloud computing adoption grows, cloud-native development has become a crucial strategy for enterprises looking to optimize performance and reduce infrastructure costs. In this guide, we’ll explore the fundamentals, benefits, key technologies, best practices, top service providers, industry impact, and future trends of cloud-native development in the USA.

What is Cloud-Native Development?

Cloud-native development refers to designing, building, and deploying applications optimized for cloud environments. Unlike traditional monolithic applications, cloud-native solutions utilize a microservices architecture, containerization, and continuous integration/continuous deployment (CI/CD) pipelines for faster and more efficient software delivery.

Key Benefits of Cloud-Native Development

1. Scalability

Cloud-native applications can dynamically scale based on demand, ensuring optimal performance without unnecessary resource consumption.

2. Agility & Faster Deployment

By leveraging DevOps and CI/CD pipelines, cloud-native development accelerates application releases, reducing time-to-market.

3. Cost Efficiency

Organizations only pay for the cloud resources they use, eliminating the need for expensive on-premise infrastructure.

4. Resilience & High Availability

Cloud-native applications are designed for fault tolerance, ensuring minimal downtime and automatic recovery.
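One common building block for this kind of automatic recovery is retrying a failed call with exponential backoff. The Python sketch below is a minimal version under stated assumptions: the function name call_with_retry, its default of three attempts, and the injected sleep parameter are all choices made for illustration, not part of any particular platform.

```python
import time


def call_with_retry(operation, attempts=3, base_delay=0.01, sleep=time.sleep):
    """Call operation, retrying on failure with exponential backoff.

    The wait doubles after each failed attempt: base_delay, then
    2 * base_delay, and so on. The last failure is re-raised.
    """
    for attempt in range(attempts):
        try:
            return operation()
        except Exception:
            if attempt == attempts - 1:
                raise  # out of retries: surface the last error
            sleep(base_delay * (2 ** attempt))
```

Passing sleep as a parameter keeps the helper easy to test, since a test can record the delays instead of actually waiting. Production code would usually also limit which exception types are retried.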

5. Improved Security

Built-in cloud security features, automated compliance checks, and container isolation enhance application security.

Key Technologies in Cloud-Native Development

1. Microservices Architecture

Microservices break applications into smaller, independent services that communicate via APIs, improving maintainability and scalability.

2. Containers & Kubernetes

Technologies like Docker and Kubernetes allow for efficient container orchestration, making application deployment seamless across cloud environments.

3. Serverless Computing

Platforms like AWS Lambda, Azure Functions, and Google Cloud Functions eliminate the need for managing infrastructure by running code in response to events.

4. DevOps & CI/CD

Automated build, test, and deployment processes streamline software development, ensuring rapid and reliable releases.

5. API-First Development

APIs enable seamless integration between services, facilitating interoperability across cloud environments.

Best Practices for Cloud-Native Development

1. Adopt a DevOps Culture

Encourage collaboration between development and operations teams to ensure efficient workflows.

2. Implement Infrastructure as Code (IaC)

Tools like Terraform and AWS CloudFormation help automate infrastructure provisioning and management.

3. Use Observability & Monitoring

Employ logging, monitoring, and tracing solutions like Prometheus, Grafana, and ELK Stack to gain insights into application performance.

4. Optimize for Security

Embed security best practices in the development lifecycle, using tools like Snyk, Aqua Security, and Prisma Cloud.

5. Focus on Automation

Automate testing, deployments, and scaling to improve efficiency and reduce human error.

Top Cloud-Native Development Service Providers in the USA

1. AWS Cloud-Native Services

Amazon Web Services offers a comprehensive suite of cloud-native tools, including AWS Lambda, ECS, EKS, and API Gateway.

2. Microsoft Azure

Azure’s cloud-native services include Azure Kubernetes Service (AKS), Azure Functions, and DevOps tools.

3. Google Cloud Platform (GCP)

GCP provides Kubernetes Engine (GKE), Cloud Run, and Anthos for cloud-native development.

4. IBM Cloud & Red Hat OpenShift

IBM Cloud and OpenShift focus on hybrid cloud-native solutions for enterprises.

5. Accenture Cloud-First

Accenture helps businesses adopt cloud-native strategies with AI-driven automation.

6. ThoughtWorks

ThoughtWorks specializes in agile cloud-native transformation and DevOps consulting.

Industry Impact of Cloud-Native Development in the USA

1. Financial Services

Banks and fintech companies use cloud-native applications to enhance security, compliance, and real-time data processing.

2. Healthcare

Cloud-native solutions improve patient data accessibility, enable telemedicine, and support AI-driven diagnostics.

3. E-commerce & Retail

Retailers leverage cloud-native technologies to optimize supply chain management and enhance customer experiences.

4. Media & Entertainment

Streaming services utilize cloud-native development for scalable content delivery and personalization.

Future Trends in Cloud-Native Development

1. Multi-Cloud & Hybrid Cloud Adoption

Businesses will increasingly adopt multi-cloud and hybrid cloud strategies for flexibility and risk mitigation.

2. AI & Machine Learning Integration

AI-driven automation will enhance DevOps workflows and predictive analytics in cloud-native applications.

3. Edge Computing

Processing data closer to the source will improve performance and reduce latency for cloud-native applications.

4. Enhanced Security Measures

Zero-trust security models and AI-driven threat detection will become integral to cloud-native architectures.

Conclusion

Cloud-native development is reshaping how businesses in the USA innovate, scale, and optimize operations. By leveraging microservices, containers, DevOps, and automation, organizations can achieve agility, cost-efficiency, and resilience. As the cloud-native ecosystem continues to evolve, staying ahead of trends and adopting best practices will be essential for businesses aiming to thrive in the digital era.

Spring Boot Interview Questions: Prepare for Success

Spring Boot has become one of the most popular frameworks in the Java ecosystem, streamlining robust and scalable web application development. Whether you’re a seasoned developer or just getting started, acing a Spring Boot interview can be a significant milestone in your career. To help you prepare effectively, here are the latest Spring Boot interview questions that will test your knowledge and give you a deeper understanding of how the framework works. These questions will be beneficial if you're pursuing a Spring Boot Certification Training Course at eMexo Technologies, in Electronic City Bangalore.

1. What is Spring Boot, and how is it different from Spring Framework?

This is a fundamental question that often appears in Spring Boot interviews. Spring Boot is an extension of the Spring Framework that simplifies the development process. It eliminates the need for extensive XML configuration and provides default configurations to facilitate rapid application development. Spring Framework requires developers to configure components manually, while Spring Boot auto-configures them.

By understanding this, you can highlight how Spring Boot training in Electronic City Bangalore at eMexo Technologies helps developers focus more on writing business logic rather than dealing with complex configurations.

2. What are the main features of Spring Boot?

Spring Boot stands out due to several features:

Auto-Configuration: Automatically configures your application based on the libraries on the classpath.

Embedded Servers: It allows the deployment of web applications on embedded servers like Tomcat, Jetty, and Undertow.

Spring Boot Starters: Pre-configured templates that simplify dependency management.

Spring Boot CLI: A command-line interface that allows you to develop Spring applications quickly.

Actuator: Monitors and manages application performance.

These features make Spring Boot an attractive option for developers, which is why the best Spring Boot training institute in Electronic City Bangalore emphasizes hands-on experience with these functionalities.

3. What is the role of @SpringBootApplication in Spring Boot?

The @SpringBootApplication annotation is a core part of Spring Boot, often referred to as the ‘meta-annotation.’ It is a combination of three annotations:

@SpringBootConfiguration (a specialization of @Configuration): Marks the class as a configuration class for Spring Beans.

@EnableAutoConfiguration: Enables Spring Boot’s auto-configuration feature.

@ComponentScan: Scans for components, by default starting from the package of the annotated class and including its sub-packages.

This annotation is crucial to understanding Spring Boot’s internal architecture and its ability to simplify configuration.

4. What is Spring Boot Starter, and how is it useful?

A Spring Boot Starter is a set of pre-configured dependencies that simplify the inclusion of libraries in your project. For instance, spring-boot-starter-web includes what you need for web development, like Spring MVC, embedded Tomcat, and Jackson for JSON. (Since Spring Boot 2.3, validation support comes from the separate spring-boot-starter-validation starter.)

Starters save a lot of time, as they eliminate the need to find and include individual dependencies manually. When studying at eMexo Technologies, you’ll get an in-depth look at the variety of Spring Boot Starters available and their importance in building scalable applications.

5. What is a Spring Boot Actuator, and how is it used?

Spring Boot Actuator provides production-ready features to help monitor and manage your Spring Boot application. It offers a wide array of tools like health checks, metrics, and auditing endpoints. The actuator allows you to easily monitor application performance, which is a crucial aspect of microservices-based applications.

6. What are Microservices, and how does Spring Boot help in building them?

Microservices are small, independent services that work together in a larger application. Each service is responsible for a specific business functionality and can be developed, deployed, and maintained independently. Spring Boot simplifies the development of microservices by providing tools like Spring Cloud and Spring Boot Actuator.

7. How does Spring Boot handle dependency injection?

Dependency Injection (DI) is a key feature of the Spring Framework, and Spring Boot uses it to manage object creation and relationships between objects automatically. In Spring Boot, DI is usually handled through annotations like @Autowired, @Component, and @Service.

8. How can you configure a Spring Boot application?

Spring Boot applications can be configured in multiple ways:

application.properties or application.yml files.

@Configuration classes.

Via command-line arguments.

Environment variables.
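When the same setting appears in more than one of these places, Spring Boot applies a precedence order: command-line arguments override environment variables, which in turn override values in application.properties. The Python sketch below is a simplified analogy of that layering, not Spring code, and resolve_config is a hypothetical helper written for this example.

```python
def resolve_config(file_props: dict, env: dict, cli_args: list) -> dict:
    """Merge settings so that later sources override earlier ones."""
    merged = dict(file_props)  # lowest precedence: properties file

    # Environment variables use upper snake case,
    # e.g. SERVER_PORT corresponds to server.port.
    for key, value in env.items():
        merged[key.lower().replace("_", ".")] = value

    # Command-line arguments look like --server.port=9090
    # and take the highest precedence of the three.
    for arg in cli_args:
        if arg.startswith("--") and "=" in arg:
            key, value = arg[2:].split("=", 1)
            merged[key] = value

    return merged


if __name__ == "__main__":
    props = {"server.port": "8080", "app.name": "demo"}
    print(resolve_config(props, {"SERVER_PORT": "8081"}, ["--server.port=9090"]))
```

In an interview, being able to say which source wins and why is usually more useful than listing the sources alone.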

9. What are profiles in Spring Boot, and how are they used?

Profiles in Spring Boot allow developers to create different configurations for different environments. For example, you can have one profile for development, one for testing, and one for production. You can specify which profile to use by setting spring.profiles.active in the application.properties file or as a command-line argument.

10. What are the limitations of Spring Boot?

Despite its many benefits, Spring Boot has some limitations:

Lack of control over auto-configuration can sometimes lead to unexpected behaviors.

Increased memory usage due to embedded servers.

Limited flexibility in large-scale applications that require extensive custom configuration.

Addressing these limitations demonstrates that you have a well-rounded understanding of the framework and can make informed decisions about when and where to use it.

11. How does Spring Boot handle security?

Spring Boot simplifies security through Spring Security, which can be easily integrated into your application. By adding the spring-boot-starter-security dependency, you can configure authentication and authorization in a few lines of code. You can also customize login, registration, and session management features.

12. What is the role of the Spring Initializr in Spring Boot?

The Spring Initializr is an online tool used to generate Spring Boot projects. It allows developers to choose the dependencies and configuration options before downloading the skeleton code. This tool speeds up the initial setup phase, saving time and effort.

In conclusion, being well-prepared for Spring Boot interviews is crucial, especially in a competitive job market. Whether you're taking a Spring Boot course in Electronic City Bangalore or aiming for Spring Boot Certification Training, knowing these key concepts will give you the edge you need. At eMexo Technologies, you’ll receive hands-on training, not just theory, preparing you to answer interview questions and excel in your career confidently.

Join Now: https://www.emexotechnologies.com/

#springboot#tech education#certification course#career growth#career development#tech skills#learning#learn to code#software training#emexo technologies#bangalore#technology


Breaking Barriers With DevOps: A Digital Transformation Journey

In today's rapidly evolving technological landscape, the term "DevOps" has become firmly established. But what does it truly entail, and why is it of paramount importance within the realms of software development and IT operations? In this comprehensive guide, we will embark on a journey to delve deeper into the principles, practices, and substantial advantages that DevOps brings to the table.

Understanding DevOps

DevOps, a fusion of "Development" and "Operations," transcends being a mere collection of practices; it embodies a cultural and collaborative philosophy. At its core, DevOps aims to bridge the historical gap that has separated development and IT operations teams. Through the promotion of collaboration and the harnessing of automation, DevOps endeavors to optimize the software delivery pipeline, empowering organizations to efficiently and expeditiously deliver top-tier software products and services.

Key Principles of DevOps

Collaboration: DevOps champions the concept of seamless collaboration between development and operations teams. This approach dismantles the conventional silos, cultivating communication and synergy.

Automation: Automation is crucial to DevOps. It entails the utilization of tools and scripts to automate mundane and repetitive tasks, such as code integration, testing, and deployment. Automation not only curtails errors but also accelerates the software delivery process.

Continuous Integration (CI): Continuous Integration (CI) is the practice of merging code changes into a shared repository several times daily, with each merge verified by an automated build and test run. This enables teams to detect integration issues in the earliest stages of development, expediting resolutions.

Continuous Delivery (CD): Continuous Delivery (CD) is an extension of CI, automating the deployment process. CD guarantees that code modifications can be swiftly and dependably delivered to production or staging environments.

Monitoring and Feedback: DevOps places a premium on real-time monitoring of applications and infrastructure. This vigilance facilitates the prompt identification of issues and the accumulation of feedback for incessant enhancement.

Core Practices of DevOps

Infrastructure as Code (IaC): Infrastructure as Code (IaC) encompasses the management and provisioning of infrastructure using code and automation tools. This practice ensures uniformity and scalability in infrastructure deployment.

Containerization: Containerization, exemplified by tools like Docker, packages applications and their dependencies within standardized units known as containers. Containers simplify deployment across heterogeneous environments.

Orchestration: Orchestration tools, such as Kubernetes, oversee the deployment, scaling, and monitoring of containerized applications, ensuring judicious resource utilization.

Microservices: Microservices architecture breaks applications into smaller, independently deployable services. Teams can build, test, and deploy these services separately, enhancing adaptability.

Benefits of DevOps

When an organization embraces DevOps, it doesn't merely adopt a set of practices; it unlocks a treasure trove of benefits that can revolutionize its approach to software development and IT operations. Let's delve deeper into the wealth of advantages that DevOps offers:

1. Faster Time to Market: In today's competitive landscape, speed is of the essence. DevOps expedites the software delivery process, enabling organizations to swiftly roll out new features and updates. This acceleration provides a distinct competitive edge, allowing businesses to respond promptly to market demands and stay ahead of the curve.

2. Improved Quality: DevOps places a premium on automation and continuous testing. This relentless pursuit of quality results in superior software products. By reducing manual intervention and ensuring thorough testing, DevOps minimizes the likelihood of glitches in production. This, in turn, improves customer satisfaction and trust.

3. Increased Efficiency: The automation-centric nature of DevOps eliminates the need for laborious manual tasks. This not only saves time but also amplifies operational efficiency. Resources that were once tied up in repetitive chores can now be redeployed for more strategic and value-added activities.

4. Enhanced Collaboration: Collaboration is at the heart of DevOps. By breaking down the traditional silos that often exist between development and operations teams, DevOps fosters a culture of teamwork. This collaborative spirit leads to innovation, problem-solving, and a shared sense of accountability. When teams work together seamlessly, extraordinary results are achieved.

5. Increased Resilience: The ability to identify and address issues promptly is a hallmark of DevOps. Real-time monitoring and feedback loops provide an early warning system for potential problems. This proactive approach not only prevents issues from escalating but also augments system resilience. Organizations become better equipped to weather unexpected challenges.

6. Scalability: As businesses grow, so do their infrastructure and application needs. DevOps practices are inherently scalable. Whether it's expanding server capacity or deploying additional services, DevOps enables organizations to scale up or down as required. This adaptability ensures that resources are allocated optimally, regardless of the scale of operations.

7. Cost Savings: Automation and effective resource management are key drivers of long-term cost reductions. By minimizing manual intervention, organizations can save on labor costs. Moreover, DevOps practices promote efficient use of resources, resulting in reduced operational expenses. These cost savings can be channeled into further innovation and growth.

In summation, DevOps transcends being a fleeting trend; it constitutes a transformative approach to software development and IT operations. It champions collaboration, automation, and incessant improvement, capacitating organizations to respond to market vicissitudes and customer requisites with nimbleness and efficiency.

Whether you aspire to elevate your skills, embark on a novel career trajectory, or remain at the vanguard in your current role, ACTE Technologies is your unwavering ally on the expedition of perpetual learning and career advancement. Enroll today and unlock your potential in the dynamic realm of technology. Your journey towards success commences here. Embracing DevOps practices has the potential to usher in software development processes that are swifter, more reliable, and of higher quality. Join the DevOps revolution today!


You can learn NodeJS easily, Here's all you need:

1.Introduction to Node.js

• JavaScript Runtime for Server-Side Development

• Non-Blocking I/O

2.Setting Up Node.js

• Installing Node.js and NPM

• Package.json Configuration

• Node Version Manager (NVM)

3.Node.js Modules

• CommonJS Modules (require, module.exports)

• ES6 Modules (import, export)

• Built-in Modules (e.g., fs, http, events)

4.Core Concepts

• Event Loop

• Callbacks and Asynchronous Programming

• Streams and Buffers

5.Core Modules

• fs (File System)

• http and https (HTTP Modules)

• events (Event Emitter)

• util (Utilities)

• os (Operating System)

• path (Path Module)

6.NPM (Node Package Manager)

• Installing Packages

• Creating and Managing package.json

• Semantic Versioning

• NPM Scripts

7.Asynchronous Programming in Node.js

• Callbacks

• Promises

• Async/Await

• Error-First Callbacks

8.Express.js Framework

• Routing

• Middleware

• Templating Engines (Pug, EJS)

• RESTful APIs

• Error Handling Middleware

9.Working with Databases

• Connecting to Databases (MongoDB, MySQL)

• Mongoose (for MongoDB)

• Sequelize (for MySQL)

• Database Migrations and Seeders

10.Authentication and Authorization

• JSON Web Tokens (JWT)

• Passport.js Middleware

• OAuth and OAuth2

11.Security

• Helmet.js (Security Middleware)

• Input Validation and Sanitization

• Secure Headers

• Cross-Origin Resource Sharing (CORS)

12.Testing and Debugging

• Unit Testing (Mocha, Chai)

• Debugging Tools (Node Inspector)

• Load Testing (Artillery, Apache Bench)

13.API Documentation

• Swagger

• API Blueprint

• Postman Documentation

14.Real-Time Applications

• WebSockets (Socket.io)

• Server-Sent Events (SSE)

• WebRTC for Video Calls

15.Performance Optimization

• Caching Strategies (in-memory, Redis)

• Load Balancing (Nginx, HAProxy)

• Profiling and Optimization Tools (Node Clinic, New Relic)

16.Deployment and Hosting

• Deploying Node.js Apps (PM2, Forever)

• Hosting Platforms (AWS, Heroku, DigitalOcean)

• Continuous Integration and Deployment (Jenkins, Travis CI)

17.RESTful API Design

• Best Practices

• API Versioning

• HATEOAS (Hypermedia as the Engine of Application State)

18.Middleware and Custom Modules

• Creating Custom Middleware

• Organizing Code into Modules

• Publish and Use Private NPM Packages

19.Logging

• Winston Logger

• Morgan Middleware

• Log Rotation Strategies

20.Streaming and Buffers

• Readable and Writable Streams

• Buffers

• Transform Streams

21.Error Handling and Monitoring

• Sentry and Error Tracking

• Health Checks and Monitoring Endpoints

22.Microservices Architecture

• Principles of Microservices

• Communication Patterns (REST, gRPC)

• Service Discovery and Load Balancing in Microservices


OpenShift + AWS Observability: Track Logs & Metrics Without Code

In a cloud-native world, observability means more than just monitoring. It's about understanding your application behavior—in real time. If you’re using Red Hat OpenShift Service on AWS (ROSA), you’re in a great position to combine enterprise-grade Kubernetes with powerful AWS tools.

In this blog, we’ll explore how OpenShift applications can be connected to:

Amazon CloudWatch for application logs

Amazon Managed Service for Prometheus for performance metrics

No deep tech knowledge or coding needed — just a clear concept of how they work together.

☁️ What Is ROSA?

ROSA (Red Hat OpenShift Service on AWS) is a fully managed OpenShift platform built on AWS infrastructure. It allows you to deploy containerized apps easily without worrying about the backend setup.

👁️ Why Is Observability Important?

Think of observability like a fitness tracker — but for your application.

🔹 Logs = “What just happened?”

🔹 Metrics = “How well is it running?”

🔹 Dashboards & Alerts = “What do I need to fix or optimize?”

Together, these help your team detect issues early, fix them fast, and make smarter decisions.

🧰 Tools That Work Together

Here’s how ROSA integrates with AWS tools:

Purpose | Tool | What It Does
Application Logs | Amazon CloudWatch | Collects and stores logs from your OpenShift apps
Metrics | Amazon Managed Service for Prometheus | Tracks performance data like CPU, memory, and network
Visualization | Amazon Managed Grafana | Shows dashboards using logs & metrics from the above

⚙️ How It All Connects (Simplified Flow)

✅ Your application runs inside OpenShift (ROSA).

📤 ROSA forwards logs (like errors, activity) to Amazon CloudWatch.

📊 ROSA sends metrics (like performance stats) to Amazon Managed Service for Prometheus.

📈 Grafana connects to both and gives you beautiful dashboards.

No code needed — this setup is supported through configuration and integration provided by AWS & Red Hat.

🔒 Is It Secure?

Yes. ROSA uses IAM roles and secure endpoints to make sure your logs and data are only visible to your team. You don’t have to worry about setting up security from scratch — AWS manages that for you.

🌟 Key Benefits of This Integration

✅ Real-time visibility into how your applications behave

✅ Centralized monitoring with AWS-native tools

✅ No additional tools to install, since it works right within ROSA and AWS

✅ Better incident response and proactive issue detection

💡 Use Cases

Monitor microservices in real time

Set alerts for traffic spikes or memory usage

View errors as they happen — without logging into containers

Improve app performance with data-driven insights

🚀 Final Thoughts

If you’re using ROSA and want a smooth, scalable, and secure way to monitor your apps, AWS observability tools are the answer. No complex coding. No third-party services. Just native integration and clear visibility.

🎯 Whether you’re a DevOps engineer or a product manager, understanding your application’s health has never been easier.

For more info, Kindly follow: Hawkstack Technologies


Unlocking Agility and Innovation Through Cloud Native Application Development

In today’s hyper-connected digital world, agility is the key to competitive advantage. Enterprises can no longer afford the slow pace of traditional software development and deployment. What they need is a modern, scalable, and resilient approach to building applications—this is where Cloud Native Application Development takes center stage.

By utilizing the full potential of cloud computing, organizations can accelerate digital innovation, deliver superior user experiences, and respond to changes with unmatched speed. Cloud native is not merely a buzzword; it is a complete paradigm shift in how software is created, delivered, and managed.

Understanding Cloud Native: A New Way to Build Applications

Cloud native application development is about designing software specifically for cloud environments. Unlike legacy systems that are simply hosted on the cloud, cloud native apps are built in and for the cloud from day one.

Key characteristics include:

Distributed Microservices: Each component is independently deployable and scalable.

Containerization: Applications run in lightweight containers that ensure portability and consistency.

Dynamic Orchestration: Automated scaling and recovery using platforms like Kubernetes.

DevOps Integration: Continuous integration and continuous deployment pipelines for rapid iteration.

This approach enables development teams to build flexible, fault-tolerant systems that can evolve with the needs of the business.

The Building Blocks of Cloud Native Applications

🌐 Microservices Architecture

Cloud native applications are composed of small, autonomous services. Each service handles a specific function, like user authentication, payment processing, or notifications. This modularity allows updates to be made independently, without affecting the entire system.

📦 Containers

Containers bundle an application and all its dependencies into a single, self-sufficient unit. This ensures that the application runs the same way across different environments, from development to production.

⚙️ Orchestration with Kubernetes

Kubernetes automates the deployment, scaling, and management of containers. It ensures that applications are always running in the desired state and can handle unexpected failures gracefully.
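The "desired state" idea can be illustrated with a toy reconciliation step: compare what should be running with what is running, and emit the actions that close the gap. This is a simplified sketch and not the Kubernetes API. The `reconcile` function and the dictionary shape used for pods are assumptions made for illustration.

```python
def reconcile(desired_replicas, running):
    """Return the actions that move the running set toward the desired replica count."""
    healthy = [pod for pod in running if pod["healthy"]]
    # Unhealthy pods are always removed so they can be replaced.
    actions = [("delete", pod["name"]) for pod in running if not pod["healthy"]]
    diff = desired_replicas - len(healthy)
    if diff > 0:
        actions += [("create", None)] * diff
    elif diff < 0:
        actions += [("delete", pod["name"]) for pod in healthy[:-diff]]
    return actions


running = [
    {"name": "web-1", "healthy": True},
    {"name": "web-2", "healthy": False},
]
print(reconcile(3, running))
```

A real controller runs a step like this continuously, which is why a crashed container is replaced without anyone intervening.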

🔄 DevOps and CI/CD

Automation is at the heart of cloud native development. Continuous Integration (CI) and Continuous Delivery (CD) allow teams to ship updates faster and with fewer errors. DevOps practices promote collaboration between developers and operations teams, leading to more reliable releases.

🔍 Observability and Monitoring

With distributed systems, visibility is critical. Cloud native applications include tools for logging, monitoring, and tracing, helping teams detect and fix issues before they affect users.

Why Cloud Native Matters for Modern Enterprises

1. Rapid Innovation

The ability to release features quickly gives businesses a major edge. Cloud native enables faster development cycles, allowing companies to experiment, gather feedback, and improve continuously.

2. Resilience and High Availability

Cloud native systems are designed to withstand failures. If one service fails, others continue to function. Auto-healing and failover mechanisms ensure uptime and reliability.

3. Scalability on Demand

Applications can scale horizontally to handle increased loads. Whether it’s handling traffic spikes during promotions or growing user bases over time, cloud native apps scale effortlessly.

4. Operational Efficiency

Containerization and orchestration reduce resource waste. Teams can optimize infrastructure usage, cut operational costs, and avoid overprovisioning.

5. Vendor Independence

Thanks to container portability, cloud native applications are not tied to a specific cloud provider. Organizations can move workloads freely across platforms or opt for hybrid and multi-cloud strategies.

Ideal Use Cases for Cloud Native Development

Cloud native is a powerful solution across industries:

Retail & E-commerce: Deliver seamless shopping experiences, handle flash sales, and roll out features like recommendations and live chat rapidly.

Banking & Finance: Build secure and scalable digital banking apps, real-time analytics engines, and fraud detection systems.

Healthcare: Create compliant, scalable platforms for managing patient data, telehealth, and appointment scheduling.

Media & Entertainment: Stream content reliably at scale, deliver personalized user experiences, and support global audiences.

Transitioning to Cloud Native: A Step-by-Step Journey

Assess and Plan Evaluate the current application landscape, identify bottlenecks, and prioritize cloud-native transformation areas.

Design and Architect Create a blueprint using microservices, containerization, and DevOps principles to ensure flexibility and future scalability.

Modernize and Build Refactor legacy applications or build new ones using cloud-native technologies. Embrace modularity, automation, and testing.

Automate and Deploy Set up CI/CD pipelines for faster releases. Deploy to container orchestration platforms for better resource management.

Monitor and Improve Continuously monitor performance, user behavior, and system health. Use insights to optimize and evolve applications.

The Future of Software is Cloud Native

As digital disruption accelerates, the demand for applications that are fast, secure, scalable, and reliable continues to grow. Cloud native application development is the foundation for achieving this digital future. It’s not just about technology—it’s about changing how businesses operate, innovate, and deliver value.

Enterprises that adopt cloud native principles can build better software faster, reduce operational risks, and meet the ever-changing expectations of their customers. Whether starting from scratch or transforming existing systems, the journey to cloud native is a strategic move toward sustained growth and innovation.

Embrace the future with Cloud Native Application Development Services.


United States of America – The Insight Partners is delighted to release its new extensive market report, "Serverless Computing Market: A Detailed Analysis of Trends, Challenges, and Opportunities." This report provides a detailed and comprehensive view of the dynamic market, reporting existing dynamics, and predicting growth.

Overview

The Serverless Computing industry is in the midst of major change. Spurred by technological upheaval at a breakneck speed, changing regulatory landscapes, and evolving consumer behaviors, this market segment is becoming an instrumental facilitator of digital transformation. This report probes what is driving market momentum and what roadblocks must be overcome to achieve enduring growth.

Key Findings and Insights

Market Size and Growth

Historical Data and Forecast: The Serverless Computing Market is anticipated to register a CAGR of 18.2% during the forecast period.

Key Factors Affecting Growth:

Growing demand for scalable and economical cloud services

Growing use of microservices architecture

Less complex operations and infrastructure management

Booming DevOps and automation trends
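For readers who want to see what a compound annual growth rate implies, the arithmetic is value_after_n_years = base × (1 + CAGR)^n. The sketch below applies the 18.2% figure quoted above to a hypothetical base of 100 units over a hypothetical five-year horizon. The report excerpt gives neither a market size nor the length of the forecast period, so both numbers are placeholders.

```python
def project(base, cagr, years):
    """Compound a starting value forward at a constant annual growth rate."""
    return base * (1 + cagr) ** years


def implied_cagr(start, end, years):
    """Recover the constant annual growth rate that links two values."""
    return (end / start) ** (1 / years) - 1


BASE = 100.0   # hypothetical index value, not a figure from the report
CAGR = 0.182   # the 18.2% rate quoted in the report
YEARS = 5      # hypothetical horizon

for year in range(YEARS + 1):
    print(year, round(project(BASE, CAGR, year), 1))
```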

Get Sample Report: https://www.theinsightpartners.com/sample/TIPRE00039622

Market Segmentation

By Service Type

Compute

Serverless Storage

Serverless Database< Application Integration

Monitoring & Security

Other Service Types

By Service Model

Function-as-a-service

Backend-as-a-service

By Deployment

Public Cloud

Private Cloud

Hybrid Cloud

By Organization Size

SMEs

Large Enterprises

Identifying Emerging Trends

Technological Advancements

Integration with AI and ML as part of serverless platforms

Improved observability and monitoring capabilities

Running containers alongside serverless functions (hybrid architecture)

Support for real-time edge computing

Shifting Consumer Preferences

Shift in favor of pay-as-you-go pricing models

Increasing need for vendor-agnostic platforms

Greater emphasis on performance, latency minimization, and flexibility

Moving away from monolithic towards event-driven architectures

Regulatory Shifts

Regulatory compliance requirements under GDPR, HIPAA, and CCPA impacting data processing

Policies for data residency and sovereignty impacting cloud strategy

Increased scrutiny of third-party API and data-sharing practices

Growth Opportunities

Cloud-first strategies expanding into emerging markets

Hyperscaler and startup collaborations for developing serverless tools

Higher demand for IoT use cases propelling edge-serverless models

Adoption by non-traditional verticals such as education and agriculture

Vendor innovations in reducing cold start times and improving developer experiences

Conclusion

The Serverless Computing Industry Market: Global Trends, Share, Size, Growth, Opportunity, and Forecast Period report offers valuable information for companies and investors interested in entering this emerging market. By examining the competitive space, regulatory setting, and technological advancements, this report enables evidence-based decision-making to unlock new business prospects.

About The Insight Partners

The Insight Partners is among the leading market research and consulting firms in the world. We take pride in delivering exclusive reports along with sophisticated strategic and tactical insights into the industry. Reports are generated through a combination of primary and secondary research, solely aimed at giving our clientele a knowledge-based insight into the market and domain. This is done to assist clients in making wiser business decisions. A holistic perspective in every study undertaken forms an integral part of our research methodology and makes the report unique and reliable.


Master Infra Monitoring & Alerting with This Prometheus MasterClass

In today’s fast-paced tech world, keeping an eye on your systems is not optional — it's critical. Whether you're managing a handful of microservices or scaling a complex infrastructure, one tool continues to shine: Prometheus. And if you’re serious about learning it the right way, the Prometheus MasterClass: Infra Monitoring & Alerting is exactly what you need.

Why Prometheus Is the Gold Standard in Monitoring

Prometheus isn’t just another monitoring tool — it’s the backbone of modern cloud-native monitoring. It gives you deep insights, alerting capabilities, and real-time observability over your infrastructure. From Kubernetes clusters to legacy systems, Prometheus tracks everything through powerful time-series data collection.

But here’s the catch: it’s incredibly powerful, if you know how to use it.

That’s where the Prometheus MasterClass steps in.

What Makes This Prometheus MasterClass Stand Out?

Let’s be honest. There are tons of tutorials online. Some are free, some outdated, and most barely scratch the surface. This MasterClass is different. It’s built for real-world engineers — people who want to build, deploy, and monitor robust systems without guessing their way through half-baked guides.

Here’s what you’ll gain:

✅ Hands-On Learning: Dive into live projects that simulate actual infrastructure environments. You won’t just watch — you’ll do.

✅ Alerting Systems That Work: Learn to build smart alerting systems that tell you what you need to know before things go south.

✅ Scalable Monitoring Techniques: Whether it’s a single server or a Kubernetes cluster, you’ll master scalable Prometheus setups.

✅ Grafana Integration: Turn raw metrics into meaningful dashboards with beautiful visualizations.

✅ Zero to Advanced: Start from scratch or sharpen your existing skills — this course fits both beginners and experienced professionals.

Who Is This Course For?

This isn’t just for DevOps engineers. If you're a:

Software Developer looking to understand what’s happening behind the scenes…

System Admin who wants smarter monitoring tools…

Cloud Engineer managing scalable infrastructures…

SRE or DevOps Pro looking for an edge…

…then this course is tailor-made for you.

And if you're someone preparing for a real-world DevOps job or a career upgrade? Even better.

Monitoring Isn’t Just a “Nice to Have”

Too many teams treat monitoring as an afterthought — until something breaks. Then it’s chaos.

With Prometheus, you shift from being reactive to proactive. And when you take the Prometheus MasterClass, you’ll understand how to:

Set up automatic alerts before outages hit

Collect real-time performance metrics

Detect slowdowns and performance bottlenecks

Reduce MTTR (Mean Time to Recovery)
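MTTR is simply the average time between detecting an incident and recovering from it. As a small worked example, the sketch below computes it from a list of (detected, recovered) timestamp pairs. The incident data is invented for illustration and `mean_time_to_recovery` is a hypothetical helper.

```python
from datetime import datetime, timedelta


def mean_time_to_recovery(incidents):
    """Average the recovery durations of (detected, recovered) timestamp pairs."""
    durations = [recovered - detected for detected, recovered in incidents]
    return sum(durations, timedelta()) / len(durations)


# Invented incidents: one resolved in 30 minutes, one in 90 minutes.
incidents = [
    (datetime(2024, 1, 5, 10, 0), datetime(2024, 1, 5, 10, 30)),
    (datetime(2024, 2, 9, 22, 15), datetime(2024, 2, 9, 23, 45)),
]

print(mean_time_to_recovery(incidents))
```

Earlier alerts shorten the detection side of each interval, which is how monitoring brings this number down.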

This isn’t just knowledge — it’s job-saving, career-accelerating expertise.

Real-World Monitoring, Real-World Tools

The Prometheus MasterClass is packed with tools and integrations professionals use every day. You'll not only learn Prometheus but also how it connects with:

Grafana: Create real-time dashboards with precision.

Alertmanager: Manage all your alerts with control and visibility.

Docker & Kubernetes: Learn how Prometheus works in containerized environments.

Blackbox Exporter, Node Exporter & Custom Exporters: Monitor everything from hardware metrics to custom applications.

Whether it’s latency, memory usage, server health, or request failures — you’ll learn how to monitor it all.
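Under the hood, an exporter is just an HTTP endpoint that serves metrics as plain text, which Prometheus scrapes on a schedule. The sketch below renders a gauge in that text format using only the standard library. The metric name `app_queue_depth` and the `render_gauge` helper are hypothetical, and label-value escaping is deliberately left out. In practice you would normally use an official client library rather than formatting lines by hand.

```python
def render_gauge(name, help_text, samples):
    """Render (labels, value) samples for one gauge in the Prometheus text format."""
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} gauge"]
    for labels, value in samples:
        label_str = ",".join(f'{key}="{val}"' for key, val in sorted(labels.items()))
        if label_str:
            lines.append(f"{name}{{{label_str}}} {value}")
        else:
            lines.append(f"{name} {value}")
    return "\n".join(lines) + "\n"


print(render_gauge(
    "app_queue_depth",
    "Jobs waiting in the queue",
    [({"queue": "email"}, 12), ({"queue": "billing"}, 3)],
))
```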

Learn to Set Up Monitoring in Hours, Not Weeks

One of the biggest challenges in learning a complex tool like Prometheus is the time investment. This course respects your time. Each module is focused, practical, and designed to help you get results fast.

By the end of the Prometheus MasterClass, you’ll be able to:

Set up Prometheus in any environment

Monitor distributed systems with ease

Handle alerts and incidents with confidence

Visualize data and performance metrics clearly

And the best part? You’ll actually enjoy the learning journey.

Why Now Is the Right Time to Learn Prometheus

Infrastructure and DevOps skills are in huge demand. Prometheus, which originated at SoundCloud, is now used by organizations of every size, from startups to large enterprises.

As more companies embrace cloud-native infrastructure, tools like Prometheus are no longer optional — they’re essential. If you're not adding these skills to your toolbox, you're falling behind.

This MasterClass helps you stay ahead.

You’ll build in-demand monitoring skills, backed by one of the most powerful tools in the DevOps ecosystem. Whether you're aiming for a promotion, a new job, or leveling up your tech stack — this course is your launchpad.

Course Highlights Recap:

🚀 Full Prometheus setup from scratch

📡 Create powerful alerts using Alertmanager

📊 Build interactive dashboards with Grafana

🐳 Monitor Docker & Kubernetes environments

⚙️ Collect metrics using exporters

🛠️ Build real-world monitoring pipelines

All of this, bundled into the Prometheus MasterClass: Infra Monitoring & Alerting that’s designed to empower, not overwhelm.

Start Your Monitoring Journey Today

You don’t need to be a Prometheus expert to start. You just need the right guidance — and this course gives it to you.

Whether you’re monitoring your first server or managing an enterprise-grade cluster, the Prometheus MasterClass gives you everything you need to succeed.

👉 Ready to take control of your infrastructure monitoring?

Click here to enroll in Prometheus MasterClass: Infra Monitoring & Alerting and take the first step toward mastering system visibility.


Understanding gRPC: A Complete Guide for Modern Developers

I was reading about gRPC recently and wondering what it is all about. Believe me, I was in the same boat as you not too long ago. I didn't even know what gRPC meant before this.

If you're curious about how to inspect gRPC traffic, check out this guide on capturing gRPC traffic going out from a server.

In this blog, I will walk you through everything I have learned about this game-changing technology that is reshaping the world of distributed systems.

What is gRPC?

Imagine this: you are building a microservices architecture, and you want your services to talk to each other efficiently. Traditional REST APIs work, but what if I told you there is something faster, more secure, and more developer friendly? That's gRPC!

gRPC, originally developed at Google (the name is a recursive acronym for "gRPC Remote Procedure Calls"), is a high-performance, open-source framework that lets applications call each other's functions as if they were local. It is like having a magic route between services that speaks multiple languages fluently.

The beauty of gRPC lies in its simplicity from a developer's point of view. Instead of hand-crafting HTTP requests, you call methods that feel like they belong to a local object. Under the hood, it does some seriously impressive work to make that happen smoothly.

Key Components of gRPC

Let me explain the components of gRPC in detail:

Protocol Buffers (Protobuf): This is the secret sauce. Think of it as a super-efficient way to define your data structures and service contracts. It is like having a universal translator that every programming language understands.

gRPC Runtime: This handles the heavy lifting: serialization, network communication, error handling, and more. You define your services, and the runtime takes care of making them work across the network.

Code Generation: Here is where it gets cool. From the Protocol Buffers definition, gRPC generates client and server code in your language of choice. So there is no more writing boilerplate HTTP clients! Yes, you heard that right.

Channel Management: gRPC manages connections intelligently, with support for load balancing, retries, and timeouts.
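To see how these pieces fit together, here is a toy model in plain Python. It does not use the real gRPC library: JSON stands in for Protocol Buffers, an in-process dictionary stands in for the network channel, and `GreeterStub` is hand-written rather than generated. Every name in it is hypothetical. The point is only to show why calling a stub method feels like calling a local function.

```python
import json


class InProcessChannel:
    """Stand-in for a network channel: routes request bytes to a registered handler."""

    def __init__(self):
        self._handlers = {}

    def register(self, method, handler):
        self._handlers[method] = handler

    def call(self, method, request_bytes):
        return self._handlers[method](request_bytes)


def say_hello_handler(request_bytes):
    """Server side: decode the request, run the logic, encode the reply."""
    name = json.loads(request_bytes)["name"]
    return json.dumps({"message": f"Hello, {name}"}).encode()


class GreeterStub:
    """Client side: hides serialization and transport behind a normal method."""

    def __init__(self, channel):
        self._channel = channel

    def say_hello(self, name):
        request = json.dumps({"name": name}).encode()
        reply = self._channel.call("/Greeter/SayHello", request)
        return json.loads(reply)["message"]


channel = InProcessChannel()
channel.register("/Greeter/SayHello", say_hello_handler)
stub = GreeterStub(channel)

print(stub.say_hello("Ada"))
```

With real gRPC, the stub and the message classes would be generated from a .proto file, and the channel would speak HTTP/2 to a remote server.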

Implementing gRPC: Best Practices

After reading about and working with gRPC for a while now, I have picked up some best practices that will save you headaches and wasted time.

Start with your .proto files and get them right. These are basically your contracts, and changing them later can be a little tricky. Version your services from day one, even if you think you don't need it. You have to trust me on this one.

Use proper error handling. gRPC has a rich error model built on status codes, so do not just throw a generic error. Your teammates will thank you!

Implement proper logging and monitoring. gRPC calls can fail in different ways and you want to know what is happening. Interceptors are your best friend here.

Lastly, choose your deployment strategy early. gRPC works great in Kubernetes, but if you are dealing with browser clients, you will need gRPC-Web or a proxy in front of your services.
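To make the error-handling advice concrete, here is a minimal sketch of what "a status code plus a message" looks like. In a real Go service you would normally reach for the `status` and `codes` packages that ship with grpc-go; this sketch sticks to the standard library, so `Code`, `StatusError`, `GetUserName`, `CodeOf` and the in-memory `users` map are all hypothetical stand-ins. Only the numeric values (2 for UNKNOWN, 3 for INVALID_ARGUMENT, 5 for NOT_FOUND) mirror gRPC's canonical codes.

```go
package main

import (
	"errors"
	"fmt"
)

// Code is a local stand-in for gRPC's canonical status codes.
// The numeric values below mirror the ones gRPC defines.
type Code int

const (
	OK              Code = 0
	Unknown         Code = 2
	InvalidArgument Code = 3
	NotFound        Code = 5
)

// StatusError is a hypothetical error type pairing a code with a message.
type StatusError struct {
	Code    Code
	Message string
}

func (e *StatusError) Error() string {
	return fmt.Sprintf("rpc error: code = %d desc = %s", e.Code, e.Message)
}

// users is a hypothetical in-memory store used only for this sketch.
var users = map[int32]string{1: "John Doe"}

// GetUserName returns a specific status instead of a generic error.
func GetUserName(id int32) (string, error) {
	if id <= 0 {
		return "", &StatusError{Code: InvalidArgument, Message: "id must be positive"}
	}
	name, ok := users[id]
	if !ok {
		return "", &StatusError{Code: NotFound, Message: fmt.Sprintf("user %d not found", id)}
	}
	return name, nil
}

// CodeOf extracts the status code from an error; anything that is not a
// StatusError is reported as Unknown, and nil is reported as OK.
func CodeOf(err error) Code {
	if err == nil {
		return OK
	}
	var se *StatusError
	if errors.As(err, &se) {
		return se.Code
	}
	return Unknown
}

func main() {
	for _, id := range []int32{1, 42, -1} {
		name, err := GetUserName(id)
		fmt.Println(id, name, CodeOf(err), err)
	}
}
```

The point of the pattern is that callers can branch on the code (retry, show "not found", fix the input) instead of parsing error strings.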

What Makes gRPC So Popular?

You know what is most interesting about gRPC? It has gone from its roots in Google's internal RPC infrastructure to becoming one of the most widely adopted technologies in the cloud native environment, and there is a reason for it.

gRPC is a CNCF Incubation Project

Yes, you read that correctly! The Cloud Native Computing Foundation doesn't accept just any project, so this is actually a big deal. Being an incubating project means gRPC has proven itself in production environments, has a healthy ecosystem and is maintained by an active community.

It is not just a Google pet project anymore; it has become a cornerstone of modern cloud native architecture.

Why gRPC Has Taken Off

1. Performance

Let's talk about numbers, because we mostly believe in numbers. gRPC is often faster than traditional REST APIs that use JSON. I have seen performance improvements of 5 to 10 times in real-world applications, though the gain depends on the workload. The combination of HTTP/2, binary serialization and efficient compression makes a huge difference, especially when you are dealing with large payloads.

2. Language Support

This is where gRPC really shines. You can have a Python service talking to a Java service, which calls a Go service, all seamlessly. The generated code feels native in each language.

I have worked with a team where we had microservices in different languages, and trust me, gRPC made it feel like we were working with a monolith in terms of type safety and ease of integration.

3. Streaming

Real-time features are painful to implement. With gRPC streaming, you can build real-time dashboards, chat applications or live data feeds with little code. The streaming support is bidirectional too, which means clients and servers can both send data as needed.

4. Interoperability

You know, the cross-platform nature of gRPC is incredible. Mobile apps can talk to backend services using the same efficient protocol. IoT devices can communicate with cloud services. Web apps can share the same contracts that are used for server-to-server communication.

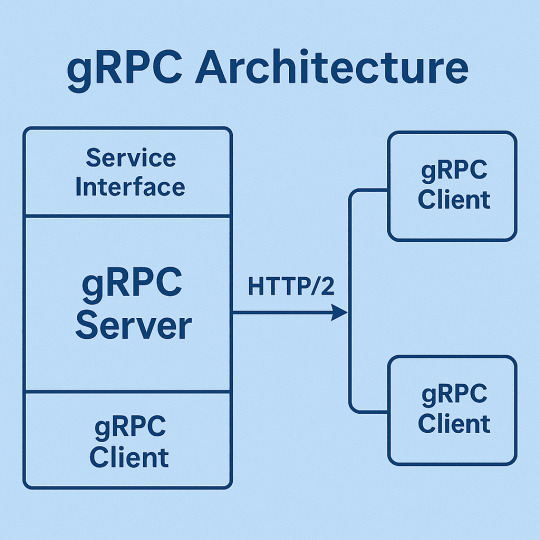

gRPC Architecture

Here is one of the most important topics to understand: how gRPC works and what the architecture looks like.

The architecture is pretty simple. You have a gRPC server that implements your service interface and clients that call methods on that service as if they were local functions. The gRPC runtime handles serialization, network transport and deserialization transparently.

What is interesting is how it uses HTTP/2 as the transport layer. This gives you all the benefits of HTTP/2 (multiplexing, flow control, header compression) while maintaining the simplicity of procedure calls. The client and server don't need to know about HTTP at all - they just see method calls and responses.
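To get a feel for "call a remote method as if it were local", here is a small sketch that uses Go's standard `net/rpc` package over an in-memory pipe. To be clear, this is not gRPC: there is no HTTP/2 and no Protocol Buffers here (`net/rpc` uses gob encoding), and `UserService`, `GetUserRequest`, `User` and `FetchUser` are hypothetical names. It only illustrates the client-stub and server-implementation split described above.

```go
package main

import (
	"fmt"
	"net"
	"net/rpc"
)

// GetUserRequest and User are hypothetical message types for this sketch.
type GetUserRequest struct{ ID int32 }

type User struct {
	ID   int32
	Name string
}

// UserService is the server-side implementation of the service.
type UserService struct{}

// GetUser has the method shape net/rpc requires: (args, *reply) error.
func (s *UserService) GetUser(req GetUserRequest, reply *User) error {
	*reply = User{ID: req.ID, Name: "John Doe"}
	return nil
}

// FetchUser wires a server and a client together over an in-memory pipe
// and performs one call through the client stub.
func FetchUser(id int32) (User, error) {
	serverConn, clientConn := net.Pipe()

	srv := rpc.NewServer()
	if err := srv.Register(&UserService{}); err != nil {
		return User{}, err
	}
	go srv.ServeConn(serverConn) // serves until the client closes the pipe

	client := rpc.NewClient(clientConn)
	defer client.Close()

	var user User
	// The caller names a method and passes typed values; encoding and
	// transport are handled by the runtime.
	err := client.Call("UserService.GetUser", GetUserRequest{ID: id}, &user)
	return user, err
}

func main() {
	user, err := FetchUser(7)
	fmt.Println(user, err)
}
```

gRPC follows the same split, but generates the stub and the server interface from the .proto contract and swaps the pipe for HTTP/2.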

Why is gRPC So Fast?

1. HTTP/2

Look, this is huge. While REST APIs are typically stuck with HTTP/1.1's request and response limitations, gRPC leverages HTTP/2 multiplexing. Multiple requests can be in flight simultaneously over a single connection. No more connection pooling headaches or HTTP-level head-of-line blocking.

2. Binary serialisation

JSON is my best friend, and I know it is yours too. It is great for us humans, but not for machines. Protocol Buffers create much smaller payloads, and I have seen 60 to 80% size reduction compared to equivalent JSON. Smaller payloads mean faster network transmission and less bandwidth usage, right?

3. Compression

gRPC also supports message compression, such as gzip, which you can enable per channel or per call. Combined with the compact binary format, you get very efficient data transfer. This is especially noticeable in mobile applications or when dealing with limited bandwidth.

4. Streaming

Instead of making multiple round trips, we can stream data continuously. In scenarios like real-time analytics or live updates, this avoids the latency of repeatedly setting up new requests or connections.

Features of gRPC

The features are pretty cool. You get authentication and encryption support out of the box. Load balancing is built in. Configurable retries and timeouts help with resilience. Health checking is standardised.

But what I love most about gRPC is the developer experience. IntelliSense works perfectly because everything is strongly typed. API documentation can be generated from the .proto files, and refactoring across service boundaries becomes safer.

Working with Protocol Buffers

You might be thinking, what are Protocol Buffers? Protocol Buffers are the heart of gRPC. You can think of them as a more efficient, strongly typed JSON. You define the data structures in .proto files using a simple syntax, and the compiler generates code for the language you want.

The syntax is intuitive. You define messages like structs and services like interfaces. Field numbers are important for backward compatibility: they identify each field on the wire, so there are rules about how to evolve your schemas safely, such as never changing or reusing the number of an existing field.
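Why do field numbers matter so much? Because the wire format identifies fields by number, a reader built against an older schema can simply skip numbers it does not recognise. The sketch below is a deliberately tiny, hand-written decoder (real code uses the generated types); `OldUser` and `DecodeOldUser` are hypothetical names, and only the varint and length-delimited wire types are handled.

```go
package main

import (
	"encoding/binary"
	"errors"
	"fmt"
)

// OldUser is what a reader compiled against an older schema knows about:
// field 1 (id) and field 2 (name).
type OldUser struct {
	ID   uint64
	Name string
}

// DecodeOldUser walks the wire bytes field by field, keeps the fields it
// knows, and skips any field number it has never heard of.
func DecodeOldUser(b []byte) (OldUser, error) {
	var u OldUser
	for len(b) > 0 {
		tag, n := binary.Uvarint(b)
		if n <= 0 {
			return u, errors.New("malformed tag")
		}
		b = b[n:]
		field, wire := tag>>3, tag&7

		switch wire {
		case 0: // varint
			v, n := binary.Uvarint(b)
			if n <= 0 {
				return u, errors.New("malformed varint")
			}
			b = b[n:]
			if field == 1 {
				u.ID = v
			}
		case 2: // length-delimited (strings, bytes, nested messages)
			l, n := binary.Uvarint(b)
			if n <= 0 || uint64(len(b)-n) < l {
				return u, errors.New("malformed length")
			}
			payload := b[n : n+int(l)]
			b = b[n+int(l):]
			if field == 2 {
				u.Name = string(payload)
			}
		default:
			return u, fmt.Errorf("wire type %d not handled in this sketch", wire)
		}
	}
	return u, nil
}

func main() {
	// Bytes written by a newer schema: id = 7, name = "Ann",
	// plus a new string field number 5 that the old reader does not know.
	msg := []byte{
		0x08, 0x07,
		0x12, 0x03, 'A', 'n', 'n',
		0x2a, 0x05, 'a', 'd', 'm', 'i', 'n',
	}
	u, err := DecodeOldUser(msg)
	fmt.Println(u.ID, u.Name, err)
}
```

The old reader still gets its id and name, which is why adding a field with a fresh number is safe, while changing the meaning of an existing number is not.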

Let me show you how this actually works. Here is a simple .proto file that defines a user service.

```proto
syntax = "proto3";

package user;

service UserService {
  rpc GetUser(GetUserRequest) returns (User);
  rpc CreateUser(CreateUserRequest) returns (User);
  rpc ListUsers(ListUsersRequest) returns (ListUsersResponse);
}

message User {
  int32 id = 1;
  string name = 2;
  string email = 3;
  int64 created_at = 4;
}

message GetUserRequest {
  int32 id = 1;
}

message CreateUserRequest {
  string name = 1;
  string email = 2;
}

message ListUsersRequest {
  int32 page_size = 1;
  string page_token = 2;
}

message ListUsersResponse {
  repeated User users = 1;
  string next_page_token = 2;
}
```

From this single .proto file, you can generate client and server code in multiple languages.

Here is how the implementation will look in Golang:

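```go
// pb is the Go package generated from the .proto file above;
// context and time come from the standard library.
func (s *server) GetUser(ctx context.Context, req *pb.GetUserRequest) (*pb.User, error) {
	// Your business logic here
	user := &pb.User{
		Id:        req.Id,
		Name:      "John Doe",
		Email:     "[email protected]",
		CreatedAt: time.Now().Unix(),
	}
	return user, nil
}
```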

Protocol Buffer Versions

In Protocol Buffers, there are two main versions: proto2 and proto3.

Proto3 is simpler and more widely supported. Unless you have specific requirements that need proto2 features, go with proto3. The syntax is cleaner and it is what most new projects use today.

gRPC Method Types

gRPC supports four kinds of method calls:

Unary RPC: This is the traditional request and response pattern, much like REST APIs but faster.

Server Streaming: The client sends one request and the server sends back a stream of responses. It is great for downloading large datasets or receiving real-time updates.

Client Streaming: The client sends a stream of requests and the server responds with a single response. It is perfect for uploading data and aggregating information.

Bidirectional Streaming: Both sides can send streams independently. This is where things get really interesting for real-time applications.
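The four shapes are easier to remember when you see them side by side. The sketch below models them with plain Go functions and channels standing in for gRPC streams; it involves no networking and no gRPC library, and the function names (`Square`, `CountTo`, `Sum`, `Doubler`) are hypothetical examples.

```go
package main

import "fmt"

// Unary: one request in, one response out.
func Square(n int) int { return n * n }

// Server streaming: one request in, a stream of responses out.
func CountTo(n int) <-chan int {
	out := make(chan int)
	go func() {
		defer close(out) // closing the channel plays the role of "end of stream"
		for i := 1; i <= n; i++ {
			out <- i
		}
	}()
	return out
}

// Client streaming: a stream of requests in, one response out.
func Sum(in <-chan int) int {
	total := 0
	for v := range in {
		total += v
	}
	return total
}

// Bidirectional streaming: responses flow back while requests are still arriving.
func Doubler(in <-chan int) <-chan int {
	out := make(chan int)
	go func() {
		defer close(out)
		for v := range in {
			out <- v * 2
		}
	}()
	return out
}

func main() {
	fmt.Println("unary:", Square(4))

	for v := range CountTo(3) {
		fmt.Println("server stream:", v)
	}

	fmt.Println("client stream:", Sum(CountTo(4)))

	for v := range Doubler(CountTo(3)) {
		fmt.Println("bidi stream:", v)
	}
}
```

In generated gRPC code the channels are replaced by stream objects with send and receive methods, but the direction of data flow in each case is the same.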

gRPC vs. REST

This is the question everyone asks, right? REST is not going anywhere, but gRPC has some great advantages. gRPC is faster, more efficient and provides better tooling. The type safety alone is worth considering. API evolution is also more structured with Protocol Buffers.

On the other hand, REST has broader ecosystem support; everyone is using REST, especially for public APIs. It is easier to debug with standard HTTP tools, and browser support is more straightforward.

Let me show you the difference in a real scenario by getting a user by ID:

REST Approach

```javascript
// Client code
const response = await fetch('/api/users/123', {
  method: 'GET',
  headers: {
    'Content-Type': 'application/json',
    'Authorization': 'Bearer ' + token
  }
});
const user = await response.json();

// You need to manually handle:
// - HTTP status codes
// - JSON parsing errors
// - Type checking (user.name could be undefined)
// - Error response formats
```

gRPC Approach

```javascript
// Client code (with gRPC-Web)
const request = new GetUserRequest();
request.setId(123);

client.getUser(request, {}, (err, response) => {
  if (err) {
    // Structured error handling
    console.log('Error:', err.message);
  } else {
    // Type-safe response
    console.log('User:', response.getName());
  }
});

// You get:
// - Automatic serialization/deserialization
// - Type safety (response.getName() is guaranteed to exist)
// - Structured error handling
// - Better performance
```

The difference is night and day here. With REST, you're dealing with strings, manual parsing, and hoping the API contract hasn't changed. But with gRPC, everything is typed, validated, and the contract is enforced at compile time.

So, my take on this comparison is: use gRPC for service-to-service communication, especially in microservice architectures, and consider REST for public APIs or when you need maximum compatibility.

What is gRPC used for?

Look, the use cases are pretty diverse. Microservices communication is the obvious one, but I have read articles in which gRPC is used for mobile app backends, IoT device communication, real-time features and even as a replacement for message queues in some places.

Big tech companies use it today. Netflix uses it for its internal services. Dropbox built its internal RPC framework, Courier, on top of gRPC. Even traditional enterprises are adopting it to modernize their architectures.

Integration Testing With Keploy

Now, here is something really exciting that I found recently. You know how testing gRPC can be a real pain, right? Well, there's this tool called Keploy that's making it pretty easy.

Keploy provides support for gRPC integration testing, and it is definitely a great fit for testing gRPC services.

It watches your gRPC calls while your app is running and automatically creates test cases from real interactions. I'm not kidding, you just use your application normally, and it records everything. Then later, it can replay those exact same interactions as tests. You can read about integration testing with Keploy here.

The dependency handling is genius: Remember how we always struggle with mocking databases and external services in our tests? Keploy captures all of that too. So when it replays your tests, it uses the exact same data that was returned during the recording. So basically, you don't spend hours setting up test databases or writing complex mocks.

Catching regressions: You know, this is where it really shines. When you make changes to your gRPC services, Keploy compares the new responses with what it recorded before. If something changes unexpectedly, it flags it immediately.

Keploy represents the future of API testing: intelligent, automated, and incredibly developer friendly. So, if you're building gRPC services, definitely check out what Keploy can do for your testing workflow. It's one of those tools that makes you wonder how you ever tested APIs without it.

Benefits of gRPC

The benefits are quite interesting:

Performance improvements are real and measurable.

Development velocity increases because of the strong typing and code generation.

Cross language interoperability becomes trivial.

Operational complexity decreases because of standardized health checks, metrics and tracing.

The gRPC ecosystem is rich, too. There are interceptors for logging, authentication, and monitoring. Cloud providers also offer native support.

Challenges of gRPC