#Software for Life Science

Text

" Pipe dreams : VALVe turns vaporware tangible and student project into showstealer! "

Computer Gaming World n267 - October, 2006.

#VALVe#VALVe Software#Source#Source Engine#Team Fortress#Team Fortress 2#Tf2#Tf2 Art#Portal#Portal Gun#Aperture Science#Half Life#Half Life 2#Half Life 2 Episode 2#HL2 EP2#Tf2 Scout#Tf2 Soldier#Tf2 Pyro#Tf2 Demoman#Tf2 Heavy#Tf2 Engineer#Tf2 Medic#Tf2 Sniper#Tf2 Spy#Dr Kleiner

630 notes

Note

Okay so let’s say you have a basement just full of different computers. Absolute hodgepodge. Ranging in make and model from a 2005 Dell laptop with a landline phone plug to a 2025 Apple with exactly one USB-C port, to an IBM.

And you want to use this absolute clusterfuck to, I don’t know, store/run a sentient AI! How do you link this mess together (and plug it into a power source) in a way that WON'T explode? Be as outlandish and technical as possible.

Oh.

Oh you want to take Caine home with you, don't you! You want to make the shittiest, most fucked up homemade server setup by fucking daisy chaining PCs together until you have enough processing power to do something. You want to try running Caine in your basement, with absolutely no care for the power draw that this man demands.

Holy shit, what have you done? Really long post under the cut.

Slight disclaimer: I never actually work with this kind of computing, so none of this should be taken as actual, usable advice. That being said, I will cite sources as I go along for easy further research.

First of all, the idea of just stacking computers together HAS BEEN DONE BEFORE!!! This is known as a computer cluster! Sometimes, this is referred to as a supercomputer. (technically the term supercomputer is outdated but I won't go into that)

Did you know that the US government got the idea to wire 1,760 PS3s together in order to make a supercomputer? It was called the Condor Cluster! (tragically it kinda sucked but watch the video for that story)

Now, making an at-home computer cluster is pretty rare, because computing power doesn't simply scale with every computer you add. It takes time for the machines to communicate with each other, so trying to run something like a video game across multiple PCs doesn't work. But let's say that we have a massive amount of data collected from some research study that needs to be processed. A cluster can divide that work among multiple PCs for comparatively faster processing times. And yes! People have used setups like this to run/train their own AI, so hypothetically Caine could run on a setup like this.
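The "adding computers doesn't add speed one-for-one" point is usually described with Amdahl's law (named here for reference; the post doesn't mention it). A minimal sketch, assuming a fraction `p` of the job can be split across machines and ignoring network communication entirely, which only makes real clusters look worse than this:

```python
def amdahl_speedup(parallel_fraction: float, machines: int) -> float:
    """Ideal speedup from Amdahl's law: 1 / ((1 - p) + p / n).

    parallel_fraction (p) is the share of the work that can be split
    across machines; the rest runs on one machine no matter what.
    Time spent communicating between machines is ignored here.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if machines < 1:
        raise ValueError("need at least one machine")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / machines)


if __name__ == "__main__":
    # Hypothetical job that is 95% parallelizable.
    for n in (1, 4, 16, 1760):
        print(f"{n:>5} machines -> {amdahl_speedup(0.95, n):.2f}x")
```

Even with 1,760 machines, a 95%-parallel job stays under a 20x speedup, because the serial 5% never gets any faster.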

Let's talk about the external hardware needed first. There are basically only two things that we need to worry about: power (like ya pointed out) and communication.

Power supply is actually easier than you think! Most PCs have an internal power supply, so all you would need to do is stick the plug into the wall! That is, if we weren't stacking an unknowable number of computers together. I have a friend who had the great idea to try to run a whole ass server rack in the dormitory at my college and yeah, he popped a fuse and everyone in that section of the building lost power. But that's a good thing: if you try to plug too many computers into the same circuit, nothing should catch fire, because the fuse breaks the circuit (yay for safety!). But how did my friend manage to keep his server running in his closet? Turns out there was an outlet underneath his bed that was on its own circuit with a higher limit (I'm not going to explain how that works, this is long enough already).

So! To do this at home, start by plugging everything into a heavy-duty extension cord (one rated for the total load, since a thin cord can overheat before the fuse ever blows), plug that into a wall outlet, and see if the lights go out. I'm serious, blowing a fuse won't break anything. If the fuse doesn't blow, yay, it works! Move on to the next step. If it does, take every other device off that circuit and try again. If it still doesn't work, then it's time to get weird.

Some houses do have higher-duty outlets (again, not going to explain how your house electricity works here), so you could try that next. But remember that each computer has its own plug, so why try to fit everything into one outlet? Wire this bad boy across multiple circuits to distribute the load! This can be a bit of a pain, though, as the outlets for each circuit typically aren't close to each other. An electrician can come in and change which outlet goes to which fuse, or you can just get some long extension cords. Now, I'm only mentioning this next option because you said wild and outlandish, and that's WIRING DIRECTLY INTO THE POWER GRID. If you do that, the computers can draw enough power to light themselves on fire, but it is no longer possible to pop a fuse because the fuse is gone. (Please do not do this in real life, this can kill you in many horrible ways.)
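Instead of finding the limit by blowing fuses, you can estimate it on paper first. A minimal sketch, assuming North American 120 V / 15 A circuits and the common rule of thumb of keeping a continuous load at or below 80% of the circuit's rating (1,440 W). The per-machine wattages are made-up placeholders; real numbers would come from each power supply's label or a plug-in power meter. Spreading machines across circuits uses a simple first-fit-decreasing heuristic, which is good enough here but not guaranteed to use the fewest circuits possible.

```python
VOLTS = 120               # assumed North American circuit voltage
BREAKER_AMPS = 15         # assumed typical household circuit rating
CONTINUOUS_PERCENT = 80   # rule of thumb: keep continuous load at or below 80%

# Usable watts per circuit: 120 V * 15 A * 80 / 100 = 1440 W
BUDGET_WATTS = VOLTS * BREAKER_AMPS * CONTINUOUS_PERCENT / 100


def assign_to_circuits(machines: dict[str, float],
                       budget: float = BUDGET_WATTS) -> list[dict[str, float]]:
    """First-fit decreasing: place the hungriest machines first, each onto
    the first circuit that still has room for it."""
    circuits: list[dict[str, float]] = []
    for name, watts in sorted(machines.items(), key=lambda kv: kv[1], reverse=True):
        if watts > budget:
            raise ValueError(f"{name} alone exceeds one circuit's budget")
        for circuit in circuits:
            if sum(circuit.values()) + watts <= budget:
                circuit[name] = watts
                break
        else:
            circuits.append({name: watts})  # no room anywhere: use another circuit
    return circuits


if __name__ == "__main__":
    # Hypothetical peak draws in watts, for illustration only.
    basement = {
        "dell-2005-laptop": 90,
        "apple-2025": 150,
        "ibm-tower": 600,
        "gaming-pc": 750,
        "office-desktop": 300,
        "old-server": 500,
    }
    for i, circuit in enumerate(assign_to_circuits(basement), start=1):
        print(f"circuit {i}: {sum(circuit.values()):.0f} W -> {sorted(circuit)}")
```

If the plan needs more circuits than the house has, that is the point where the "get weird" options above come in.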

Communication (as in, between the PCs) is where things start getting complex. All of those nasty pictures of wires pouring out of server racks are usually communication cables. The essential piece of hardware that all of these computers are wired into is the network switch (the "switch box"). It is the device that handles communication between the individual computers. Software decides which computer in the cluster gets which task. This is known as the distributed resource manager, sometimes called the scheduler (it may run on one of the devices in the cluster, but it can have its own dedicated machine). Once the software has scheduled a task, the switch handles the actual act of getting the data to each machine. That's why speed and capacity are so important with switches: they are the bottleneck in a system like this.
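To make the scheduler idea concrete, here is a toy version of the "which computer gets what task" decision. It is a sketch, not how real resource managers such as Slurm work internally: it assumes we already know roughly how long each task takes (the task names, durations, and node names are hypothetical) and greedily hands the longest remaining task to whichever node will be free soonest, the classic longest-processing-time heuristic.

```python
import heapq


def schedule(tasks: dict[str, float], nodes: list[str]) -> dict[str, list[str]]:
    """Assign tasks to nodes, longest task first, always picking the node
    whose queue will finish earliest (longest-processing-time heuristic)."""
    # Min-heap of (time at which this node becomes free, node name).
    free_at = [(0.0, node) for node in nodes]
    heapq.heapify(free_at)
    plan: dict[str, list[str]] = {node: [] for node in nodes}

    for task, duration in sorted(tasks.items(), key=lambda kv: kv[1], reverse=True):
        finish, node = heapq.heappop(free_at)       # least-loaded node so far
        plan[node].append(task)
        heapq.heappush(free_at, (finish + duration, node))
    return plan


if __name__ == "__main__":
    # Hypothetical chunks of a research dataset and estimated minutes each.
    jobs = {"chunk-a": 30, "chunk-b": 25, "chunk-c": 20, "chunk-d": 15, "chunk-e": 10}
    print(schedule(jobs, ["head-node", "dell-2005", "ibm-tower"]))
```

A real scheduler also has to deal with nodes of different speeds, memory limits, failures, and moving the data over the switch, which is exactly where a mismatched basement cluster gets painful.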

Uhh, connecting this all to an IBM server rack? That's not needed in this theoretical setup. Choose one computer to act as the 'head node', the user access point, and you're set. (Sorry, I'm not exactly sure what you mean by connecting everything to an IBM.)

To picture what all of this looks like put together, here’s a great, if distressingly shaky, video of an actual computer cluster! Power cables aren't shown, but they are there.

But what about cable management? Well, things shouldn't get too bad, given that fixing disordered cables can be as easy as scheduling maintenance and ordering some cables. Some servers can't go down, though, so bad cable management piles up until either the server has to go down or another server is brought in to take the load while the original is fixed. Ideally, the separate computers should be wired together, labeled, and then neatly run into a switch box.

Now, depending on your level of knowledge, the next question would be "what about the firewall?" A firewall is not necessary in a setup like this. If no connections are being made outside the network (if the machine is even connected to a network), then there is no reason to monitor or block who is connecting to the machine.

That's all of the info about hardware around the computers, let's talk about the computers themselves!

I'm assuming that these things are a little fucked. First things first: test all the machines to make sure that they still function! Then general housekeeping, like blasting all of the dust off the motherboards and cleaning out those ports. Also, put new thermal paste on the CPUs. Refresh your thermal paste, people.

The hardware of the PCs themselves can (and maybe should) be upgraded. More PCs than you think have room for upgrades! I'm talking extra slots for RAM and spare SATA connectors for extra storage drives. Also, some PCs still have a DVD drive. You can just take that out and put a hard drive in there! Upgrades aren't essential, but extra storage is always recommended. Redundancy is your friend.

Once the hardware is set, factory reset the computers and... OK, now I'm at the part where my inexperience really shows. Computer clusters are almost always built from the exact same make and model of computer because, essentially, this is taking several computers and treating them as one. When mixing hardware, things can get fucked. There is a collection of open-source software for installing and managing Linux clusters, OSCAR (Open Source Cluster Application Resources), so it is possible. Would it be a massive headache to do in real life, and would it behave in unpredictable ways? Without a doubt. But it could work, so I will leave it at that. (But maybe ditch the Mac; Apple doesn't like to play nice with anything.)
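Since every machine in this basement is different, a useful first step before any cluster software goes on is knowing exactly what each node has. A minimal sketch using only Python's standard library, assuming Python is installed on every machine (not a given on a 2005 laptop); you would run it on each node and collect the results on the head node. The field names are made up for illustration, and none of this is part of OSCAR.

```python
import json
import os
import platform
import shutil


def node_inventory() -> dict:
    """Report basic facts about the machine this runs on, so mismatched
    operating systems and CPU architectures are spotted early."""
    root = os.path.abspath(os.sep)          # "/" on Linux/macOS, the current drive root on Windows
    disk = shutil.disk_usage(root)
    return {
        "hostname": platform.node(),
        "os": platform.system(),            # e.g. "Linux", "Windows", "Darwin"
        "os_release": platform.release(),
        "arch": platform.machine(),         # e.g. "x86_64", "arm64"
        "cpu_cores": os.cpu_count() or 1,   # cpu_count() can return None
        "disk_free_gb": round(disk.free / 1e9, 1),
    }


if __name__ == "__main__":
    print(json.dumps(node_inventory(), indent=2))
```

The standard library has no portable way to read total RAM, so that is left out; a third-party package such as psutil can fill that gap. An "arch" of "arm64" next to "x86_64" is an early warning that the Mac will need its own builds of everything.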

Extra things to consider: noise level, cooling, and humidity! Each of these machines has fans! If it's in a basement, then it's probably going to be humid. Server rooms are climate controlled for a reason. It would be a good idea to stick an AC unit and a dehumidifier in there to keep the temperature in that sweet spot.
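For sizing that AC unit: essentially all the electricity the computers draw ends up as heat in the room, and air conditioners are usually rated in BTU per hour. A rough sketch using the standard conversion 1 W ≈ 3.412 BTU/h; the 3,000 W total is a hypothetical figure, and the 20% headroom is an assumed safety margin, not a rule.

```python
WATTS_TO_BTU_PER_HOUR = 3.412  # 1 watt of continuous draw is about 3.412 BTU/h of heat


def cooling_needed_btu_h(total_watts: float, headroom: float = 0.2) -> float:
    """Heat the cluster dumps into the room, plus an assumed safety margin.
    Assumes all electrical power ends up as heat (a good approximation for computers)."""
    return total_watts * WATTS_TO_BTU_PER_HOUR * (1 + headroom)


if __name__ == "__main__":
    cluster_watts = 3000  # hypothetical combined draw of the whole basement
    print(f"~{cooling_needed_btu_h(cluster_watts):,.0f} BTU/h of cooling")
```

People, lights, and the dehumidifier itself add heat too, which is one reason to keep some headroom.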

All links in one spot:

What's a cluster?

Wiki computer cluster

The PS3 was a ridiculous machine

I built an AI supercomputer with 5 Mac Studios

The worst patch rack I've ever worked on.

Building the Ultimate OpenSees Rig: HPC Cluster SUPERCOMPUTER Using Gaming Workstations!

What is a firewall?

Your old PC is Your New Server

Open Source Cluster Application Resources (OSCAR)

Buying a SERVER - 3 things to know

A Computer Cluster Made With BROKEN PCs

@fratboycipher feel free to add to this or correct me in any way

#Good news!#It's possible to do in real life what you are asking!#Bad news#you would have to do it VERY wrong for it to explode#Not really outlandish but very technical#...I may prefer youtube videos over reading#can you tell that I know more about the hardware than the software?#holy fuck it's not the way that this would be wired that would make this setup bad#connecting them is the easy part!#getting the computers to actually TALK to each other?#oh god oh fuck#i love technology#tadc caine#I'm tagging this as Caine#stemblr#ask#spark#computer science#computer cluster

32 notes

Text

Asking "would you rather work at Aperture or at Black Mesa" is like asking "Do you want to shove your hand into a Barrel of Radioactive Waste or A Set Of Active Hydraulics" so I propose a different sort of poll. WHICH COMPANY DO YOU THINK WOULD GET CANCELLED FASTER

CONTENDER #1: APERTURE SCIENCE

PROS:

- Progressive as fuck
- They'd do numbers when it comes to social media
- It's probably a decent workplace if you do, like... accounting? IT work, HR maybe??
- Food and water provided (granted, it's shitty water jugs, cans of beans, and cartons of milk from what we've seen)
- They do "Bring Your Daughter to Work" and "Bring Your Cat to Work" days! Fun!
- Somehow not as likely to cause an interdimensional alien invasion.
- They dabble in home security.

CONS:

- Toxic as fuck workplace environment
- They'll probably give you cancer on purpose
- Oh, y'know, the inhumane harvesting of the homeless to use as guinea pigs.
- If you're a janitor, work maintenance, or something along those lines, you are most certainly going to contract tetanus
- THIS PLACE PROBABLY SUCKS ASS FOR ANYONE WHO'S SCARED OF HEIGHTS
- Considering Cave did canonically fire someone for being in a wheelchair to cut costs on ramps... um?
- If you do bring your daughter, you have the option (and are actively encouraged) to make her run through a potentially lethal test course.

CONTENDER #2: BLACK MESA

PROS:

- Dormitories and on-site cafeterias provided
- Progressive as fuck (I'd say more than Aperture)
- They actually accommodate the disabled
- They have their own security force
- Generally more trusted by the government
- I feel like their social media presence would be way more reduced and way less obnoxious

CONS:

- THERE'S RADIOACTIVE WASTE FUCKING EVERYWHERE.
- Entire department dedicated to dissecting live alien lifeforms that range from the size and intelligence of a foofoo dog to "this thing is smart and it WILL break free and it WILL snap your spine in half"
- They'll probably give you cancer by accident
- Breen feels like the type of guy to pull a Musk on social media
- Y'know. The dozens and dozens of corpses that piled up as a result of sending scientists to an alien dimension.
- Generally way more involved with the government. ewwwww
- Workplace environment is sooorta rough and employee competition seems to be commonplace (unless Magnusson and Kleiner are just fucked up)
- If the lead-up to the Resonance Cascade is anything to go by, they're not above rush jobs when it comes to important tests. It also seems like they're not above taking bribes.

Do be sure to put your reasonings in the tags if you do reblog this poll. It'd be pretty fun to read everyone's different thoughts and reasonings.

#portal#portal 2#aperture science#half life#hl#hl1#black mesa#valve#valve games#valve software#wallace breen#cave johnson#caroline#I think I can already predict how this'd go buuut this still seems like a funny poll to do

61 notes

Text

🍓⸝⸝˚₊・september

days 5-7 of 30

THURSDAY, 5TH SEPTEMBER-SATURDAY, 7TH SEPTEMBER

whew!! uni kicked my ass lol. it's finally the weekend, but I have a lot of work i need to catch up on. but i met my friends for dinner yesterday, which was pretty awesome. it's so weird to have all my friends living so far away because we all ended up in different unis lol. but the weather has been very nice recently, and i hope it stays that way. also happy ganesh chathurthi to everyone who celebrates!! it's one of my favourite festivals

things i did today:

did a LOT of coding; i learnt C today, and i'll never look at semi-colons the same again

played the piano <3

went to the temple

🎧: gajanana by sukhwinder singh

#studyblr#spotify#study motivation#studyspo#student life#stemblr#women in stem#study notes#study blog#computer science#coding#software engineering#college student#college life#college#productivityboost#productivity challenge#ganeshchaturthi#ganeshutsav

41 notes

Text

Meanwhile, at the Annual Mollusk Taxonomy Convention

Taxonomist 1: I think this population of blorb snails counts as its own species under the phylogenetic and biological species concepts. Their last common ancestor was between 5 and 100 million years ago, idk, my 35 year old copy of Clustal finally exploded so I just eyeballed it, but they totally got wack cytochrome c oxidase genes, even though for the most part they're genetically pretty similar and they still look and act exactly the same in every way and also both live in the same place as each other. The only reason why they don’t interbreed is they have like a single incompatible protein thingo, which is also more or less the only meaningful phenotypic difference between them.

(this is an actual thing that can happen. They’re called cryptic species and species complexes and they hurt my soul)

Taxonomist 2: No. It’s more pragmatic and useful to just use the morphological and ecological species concepts here; and they say fuck you and your dumb snails. I wanna lump all existing species into half as many species, there’s too many fucking species.

Taxonomist 1: you wanna fucking say that to my face you little shit?

Taxonomist 3: Hey, real quick, what do you guys think about the possibility of reclassifying the Blorb genus under Littorinidae instead of Muricidae? Because I already wrote a paper on it, so that's the case now. Cry about it.

Taxonomist 2: I think today is the day bitches die.

*Mexican standoff using conch shells as blunt weapons ensued, there were no survivors.*

*This is unfortunately the leading cause of death among all taxonomists*

#science#biology#taxonomy#evolution#marine life#scientists sitcom#roughly based on a totally true story from my professor. He is a veteran of the mollusk taxonomy wars#No you cannot actually just 'eyeball' it but you would not believe some of the ancient software we be usin' in taxonomy.#sea snails#mollusk#blorbsday

82 notes

Note

you quit your software engineering job to pursue environmental science?? do tell!

(i have a software engineering job. it makes me sad.)

so when i was like. 10. i had this teacher who taught us basic scratch programming. and i was like. freakishly good at it. i picked it up super quickly and was even helping the other students to fix their problems. and so he said to me "you know, you could be a great computer programmer one day" and i was like. yeah! i could!

so throughout highschool my One and Only goal was to become a software engineer. every time i went to the career counsellor thats what i said. so i did computer science at gcse, and got a 9, and i did computer science at a level and got an A*. (i did other subjects too of course. but those were the ones i was focussed on)

then i finished my a levels and i went straight into looking for an apprenticeship. no one was really interested in me because i didnt have any experience or a degree. so then my dad got me an internship with some guys he knew at a company that worked in his building, and i managed to build up some actual industry skills. then i got an apprenticeship! it paid super well and the team was great and it was work from home.

and i hated it.

i was just sitting in my room at my dads house 9-5 mon-fri writing code all by myself. it was lonely and boring and i didnt really know what i was doing. it was supposed to be an apprenticeship but it just felt like a job. they didnt teach me how to do anything they just said "do this and come to me if you run into a problem". half the time they didnt even give me any work to do for days at a time so i was just watching youtube or scrolling on tiktok. which sounds great but it wasnt because i felt guilty the whole time and was terrified of being found out and fired, even tho it wasnt my fault? they literally werent giving me work to do?

anyway. a few months into it i was like man Fuck this. im going to university. so i started looking at courses. it actually started with astrophysics, but since i didnt take a science at a level i didnt have the requirements for that. then i found environmental science! it was all the stuff im passionate about: climate change, conservation, natural processes and earth science. so i worked on my application letter and applied, and i got in!

so i went to my boss and was like hey. im putting in my notice. i got into university. and they were like "oh noooo we're so sad to see you go :( you were doing so well and we were so pleased with your work and your progress :(" (and i was like. huh?? i literally didnt fucking do anything. but oh well.)

so i worked until the end of my 6 week notice and then i handed my stuff back in and left. i had a bit of a summer vacation and then started uni! and ive been here for just over a year now :)

its honestly so much better. i have so many new friends, i got to move out of my mums house, im in full control of my life.

so take this as your reminder that its never too late! you can always change your path.

you are in control.

#inbox#ask#inbox open#life advice#software engineering#environmental science#university#career change

50 notes

Text

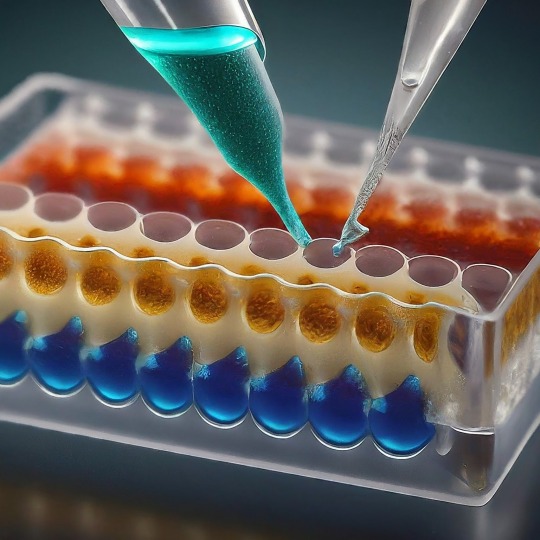

ELISA: A Powerful Tool for Detecting the Invisible

ELISA, or Enzyme-Linked Immunosorbent Assay, has become a cornerstone of medical diagnostics and biological research. This versatile technique allows scientists to detect and quantify minute amounts of target molecules, such as proteins, antibodies, and even viruses, with remarkable accuracy. In this blog, we'll delve into the world of ELISA, exploring its various types, its applications, and the exciting future directions this technology holds.

At its core, ELISA relies on the exquisite specificity of antibodies. Antibodies are highly specialized proteins produced by the immune system in response to foreign invaders. Each antibody binds a specific structure, called an epitope, on its target molecule, which is known as the antigen. In an ELISA, scientists leverage this binding property to create a sensitive detection system.

The 1960s witnessed a surge in interest in immunoassays, techniques that use the specificity of antibodies to detect target molecules. One such technique, radioimmunoassay (RIA), developed by Rosalyn Yalow and Solomon Berson, revolutionized medical diagnostics. RIA used radioactively labeled antigen, which competes with unlabeled antigen from the sample for antibody binding, and offered high sensitivity. However, concerns about the safety of radioactive materials fueled the search for a safer alternative. The year 1971 marked a turning point. Eva Engvall and Peter Perlmann published their work on a novel technique, the enzyme-linked immunosorbent assay (ELISA), while Bauke van Weemen and Anton Schuurs independently published a similar enzyme immunoassay the same year. ELISA replaced radioactive labels with enzymes, eliminating the safety concerns associated with RIA. Like RIA, ELISA harnessed the specific binding between antibodies and antigens. However, it employed enzymes that could generate a detectable signal, such as a color change, upon interacting with a substrate. This innovation paved the way for a safer and more user-friendly diagnostic tool.

The basic ELISA protocol involves immobilizing one binding partner, either the target antigen or an antibody that captures it, on a solid surface such as a plate well. A sample that may contain the molecule of interest (e.g., a viral protein, or antibodies against a virus) is then added, and any target present binds to the surface. After washing, an enzyme-linked detection antibody that recognizes the bound target is introduced. Finally, a substrate for the enzyme is added; when the enzyme encounters its substrate, it produces a detectable signal, such as a color change or luminescence. Within the assay's working range, the signal increases with the amount of target in the sample (competitive ELISA, described below, is the exception), and concentrations are determined by comparing samples against a standard curve. The beauty of ELISA lies in its adaptability. Several variations exist, each tailored for specific detection needs.
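Quantification in practice means reading unknowns off a standard curve run on the same plate. ELISA standard curves are commonly fitted with a four-parameter logistic (4PL) model, y = d + (a - d) / (1 + (x / c)^b), where a is the response at zero concentration, d the response at saturation, c the concentration at the curve's midpoint, and b the slope factor. A minimal sketch, assuming the four parameters have already been fitted (the values below are hypothetical; curve-fitting software or a routine such as SciPy's `curve_fit` would normally supply them), that converts an absorbance back into a concentration by inverting the model:

```python
def four_pl(x: float, a: float, b: float, c: float, d: float) -> float:
    """4PL response at concentration x (x >= 0)."""
    return d + (a - d) / (1.0 + (x / c) ** b)


def inverse_four_pl(y: float, a: float, b: float, c: float, d: float) -> float:
    """Concentration that gives response y; only defined strictly between a and d."""
    low, high = min(a, d), max(a, d)
    if not low < y < high:
        raise ValueError("response is outside the curve's range; dilute or re-run")
    return c * ((a - d) / (y - d) - 1.0) ** (1.0 / b)


if __name__ == "__main__":
    # Hypothetical fitted parameters for a sandwich ELISA (signal rises with concentration).
    params = dict(a=0.05, b=1.2, c=250.0, d=2.4)  # c in pg/mL
    for od in (0.3, 1.0, 2.0):
        print(f"OD {od:.2f} -> {inverse_four_pl(od, **params):.1f} pg/mL")
```

Readings near the top or bottom of the curve are the least reliable, which is why out-of-range samples are usually diluted and re-run rather than extrapolated.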

The Four Main ELISA Formats are:

Direct ELISA: Simplicity at its finest. In this format, the antigen is directly coated onto the ELISA plate. A labeled antibody specific to the antigen is then introduced, binding directly to its target. After washing away unbound molecules, the enzyme linked to the antibody generates a signal upon addition of the substrate. Direct ELISA offers a rapid and straightforward approach, but sensitivity can be lower compared to other formats due to the lack of amplification.

Indirect ELISA: Unveiling the Power of Amplification. Similar to the direct ELISA, the antigen is first coated onto the plate. However, instead of a labeled primary antibody, an unlabeled one specific to the antigen is used. This is followed by the introduction of a labeled secondary antibody that recognizes the species (e.g., mouse, rabbit) of the primary antibody. This two-step approach acts as an amplification strategy, significantly enhancing the signal compared to the direct ELISA. However, the presence of an extra incubation step and the potential for cross-reactivity with the secondary antibody add complexity.

Sandwich ELISA: Capturing the Antigen Between Two Antibodies. Here, a capture antibody specific for one region of the antigen is pre-coated onto the ELISA plate. The sample containing the antigen is then introduced, followed by a detection antibody specific for a different region of the same antigen, so the antigen ends up "sandwiched" between the two. Either a labeled detection antibody or a labeled secondary antibody against the detection antibody then generates the signal. Because two antibodies must recognize the same antigen, sandwich ELISA offers high specificity and sensitivity and is often the preferred format for quantifying analytes.

Competitive ELISA: A Race for Binding Sites. In this format, antigen from the sample competes with a labeled antigen (added in a known amount) for binding sites on a capture antibody pre-coated onto the plate. The more antigen present in the sample, the less labeled antigen can bind to the capture antibody. After a washing step, the bound labeled antigen is measured, so the signal is inversely related to the concentration of antigen in the sample. Competitive ELISA is particularly useful for small molecules, which are often too small to be bound by two antibodies at once and therefore cannot be measured in a sandwich format.
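Because the competitive signal runs backwards, results are often expressed as percent binding relative to wells with no sample antigen: %B/B0 = (OD_sample - OD_background) / (OD_zero - OD_background) × 100. A small sketch of that normalization; the optical-density readings are invented for illustration, and turning %B/B0 into a concentration still needs a standard curve run on the same plate.

```python
def percent_bound(od_sample: float, od_zero: float, od_background: float) -> float:
    """%B/B0 for a competitive ELISA well.

    od_zero: signal with no sample antigen (maximum binding of labeled antigen, B0)
    od_background: non-specific binding / blank signal, subtracted from both
    Lower %B/B0 means MORE antigen in the sample.
    """
    max_signal = od_zero - od_background
    if max_signal <= 0:
        raise ValueError("zero-antigen wells must read above background")
    return 100.0 * (od_sample - od_background) / max_signal


if __name__ == "__main__":
    # Invented plate readings at 450 nm, for illustration only.
    b0, nsb = 1.85, 0.05
    for well, od in {"sample-1": 1.40, "sample-2": 0.60, "sample-3": 0.20}.items():
        print(f"{well}: {percent_bound(od, b0, nsb):.1f}% B/B0")
```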

ELISA's Reach: From Diagnostics to Research. The applications of ELISA are as vast as they are impressive. Let's delve into some key areas where ELISA plays a vital role:

Unveiling the Mysteries of Disease: Diagnostics: ELISA is a cornerstone of diagnosing infectious diseases such as HIV, hepatitis, and Lyme disease. Many of these tests detect antibodies the body produces in response to the invading pathogen (some also detect pathogen antigens directly), providing valuable information for early detection and treatment. Monitoring Autoimmune Diseases: ELISA helps monitor autoimmune diseases like rheumatoid arthritis and lupus by measuring specific antibodies associated with these conditions. Cancer Screening: Certain cancers can be detected by identifying tumor markers, proteins elevated in the blood of cancer patients. ELISA assays are being developed to detect these markers for early cancer screening.

Safeguarding Food Quality: Allergen Detection: Food allergies can be life-threatening. ELISA helps ensure food safety by enabling the detection of allergens like peanuts, gluten, and milk in food products, protecting consumers with allergies. Monitoring Foodborne Pathogens: ELISA can identify harmful bacteria, viruses, and toxins in food, helping to prevent outbreaks of foodborne illness.

Environmental Monitoring: Pollutant Detection: ELISA can detect pollutants like pesticides and herbicides in water and soil samples, contributing to environmental protection efforts. Microbial Analysis: This technique can be used to identify and quantify specific microbes in environmental samples, providing insights into ecosystem health.

Research and Development: ELISA plays a crucial role in various research fields: Drug Discovery: It helps researchers assess the effectiveness of new drugs by measuring drug-target interactions and monitoring drug levels in the body. Vaccine Development: ELISA is instrumental in developing vaccines by evaluating immune responses to vaccine candidates. Basic Research: Scientists use ELISA to study various biological processes by detecting and quantifying specific molecules involved in these processes.

Despite its established role, ELISA is evolving alongside technological advancements. New multiplex platforms allow for the simultaneous detection of various targets in a single sample, boosting efficiency in biomarker discovery and disease analysis. Automation streamlines workflows, minimizes errors, and increases throughput, making high-throughput screening feasible in drug development and clinical settings. Miniaturization and portable devices enable rapid on-site diagnostics, providing healthcare professionals with real-time data for quicker interventions. Additionally, ongoing research is improving assay sensitivity, reducing background noise, and lowering detection limits, allowing trace analytes and early disease biomarkers to be identified with greater accuracy than ever before. Integration of ELISA with emerging technologies such as microfluidics, nanotechnology, and artificial intelligence holds promise for enhancing assay performance, scalability, and data analysis capabilities.

These advancements hold promise for even wider applications of ELISA in the future. ELISA has revolutionized our ability to detect and quantify biological molecules. Its versatility, accuracy, and adaptability make it an invaluable tool across various scientific disciplines. As research continues to refine and innovate ELISA techniques, we can expect even more exciting possibilities to emerge in the years to come. ELISA's future is bright, promising to play a pivotal role in unraveling the mysteries of the biological world and improving human health.

#science sculpt#life science#molecular biology#science#biology#artists on tumblr#ELISA#immunology#immunotherapy#diagnostic management software#diagnosticimaging#history of immunology#scientific advancements#biotechnology#scientific research#scientific equipment#scientific instruments#techniques in biotechnology#scientific illustration#lab equipment#sciencenature#laboratory#lab skills#molecular diagnostics market

11 notes

Text

i actually think hatori is more of an electrical/hardware engineer than an informatics/information technology/software engineering person

#from the fanbook - he says he has the ability to ''flip switches he isn't supposed to''#in other words - 1s and 0s and currents#off and on#i think at the very granular level that's the mechanics of hatori's power#and i mean this is applicable to computer science and IT - but not that much#the electrical and hardware manipulation is VERY abstracted away into programming languages#and im of the opinion that hatori... doesn't know how to program computers#also when we see him demonstrate his abilities they are either hacking drones and helicopters that are likely programmed in lower-#-level languages and place a larger emphasis on electrical engineering#or hacking radio waves which i mean that's still some sort of off-on thing#the software engineering route of changing ports n permissions n stuff is.. i think not hatori's thing#but who knows... i really like hatori infosec interpretations... its just that i also think in canon he's an electrical engineer type guy#(not shitting on electrical engineers - infact i think they do better stuff than me - the loser infosec guy who can't do physics#to save his life)#my post canon hc for him is that he cleans up and goes to post-secondary school and finally learns the theory behind all of the stuff#-he CAN do#i think he'd unlock a lot of potential that way#but what do i know i am just speculating on the mechanics of psychic powers#milk (normal)#hatori tag#ah this is just me rambling i wanted to get the thought out

13 notes

Text

SOFTWARE DESIGN, ARCHITECTURE AND ENGINEERING : CONCEPTS AND PRACTICE by P.C.P. Bhatt

We are proud to introduce our bestselling textbook SOFTWARE DESIGN, ARCHITECTURE & ENGINEERING. Perfect for Computer Science, Engineering, and Information Technology enthusiasts!

Key Features:

Comprehensive Curriculum

Theoretical & Practical Balance

Real-world Case Studies

Ideal for students and practitioners

Expand your software design horizons with our textbook. Learn from the expert and mentor in the field. Order your copy today! Link: http://social.phindia.com/WGX5ZeJ3

#phibooks#philearning#phibookclub#ebook#undergraduate#education#books#computerscience#computer science#computer scientist#software design#softwareengineering#software development#college textbooks#textbook#textbook publishing#collegebooks#university books#college life#studying#student life#university#college student#academic books#academic publishing#academic publisher

3 notes

Text

CloudFusion Full Course

Store & Deliver All Your Videos, Images, Trainings, Audios, & Media Files at Blazing-Fast Speed. No Monthly Fee Ever… When you purchase this deeply discounted bundle deal, you can get CloudFusion with all the upgrades for 70% off and save over $697.

GET Complete CloudFusion Package (FE + ALL Upgrades + Agency License).

No Monthly Payment Hassles- It's Just A One-Time Payment.

GET Priority Support from Our Dedicated Support Engineers.

Provide Top-Notch Services to Your Clients.

Grab All Benefits For A Low, ONE-TIME Discounted Price.

GET 30-Days Money Back Guarantee.

CLICK HERE

Hurry! Special offer for Limited Time Only!

#make money from home#student life#student#university#book review#nature#marketing#make money online#software engineering#science#digital art#digitalmarketing#women#woman#words#music#my work#original work#work form home#work for it#work force#pets#peter parker#bd/sm pet#dog#cute pets#kitten#cat#cute animals#animals

2 notes

Text

Monday CRM offers customizable and automated workflows that reduce manual tasks and improve sales tracking. Its flexible boards and automation rules help teams align sales activities with strategic goals and adapt quickly to market changes.

#bioscience#OctaneX Labs#API clinical trial management system#intermediates manufacturers#chemicals API#fine chemical#synthesis#CDMO Companies#CDMO India#life science chemicals#pharmaceutical fine chemicals#capsules#it#technology#it jobs#tech#crm benefits#crm services#sierra consulting#current events#technews#crm#crm strategy#sales crm#crm platform#crm integration#crm software#crm solutions#businesssolutions#business growth

0 notes

Quality Management Software for Life Sciences: Tackling Data Integrity and Traceability at Scale

In the rapidly evolving life sciences industry, data integrity and traceability are not just compliance requirements—they are essential for maintaining product safety, regulatory readiness, and operational efficiency. As organizations scale and globalize, managing data from clinical trials, production, supply chains, and post-market activities becomes increasingly complex. Quality Management Software for Life Sciences addresses these challenges by providing a unified platform that ensures data accuracy, traceability, and regulatory compliance.

Enhancing Data Integrity With Quality Management Software for Life Sciences

Data integrity is the cornerstone of quality management in life sciences. Ensuring that data remains accurate, consistent, and reliable throughout its lifecycle is critical for FDA and EMA compliance. Quality management software for life sciences enforces data integrity through automated data capture, secure storage, and audit trails.

Automating Data Capture to Minimize Human Error

Manual data entry introduces the risk of human error. By leveraging life sciences quality management software, companies can automate data collection from laboratory instruments, production equipment, and digital logs. This minimizes inaccuracies and establishes a single source of truth for critical data.

Improving Traceability Across the Product Lifecycle

Life sciences organizations must maintain complete traceability to meet regulatory standards like FDA 21 CFR Part 11 and EU Annex 11. Quality management software for life sciences ensures end-to-end traceability by linking data from research, development, manufacturing, and post-market monitoring.

Tracking Every Step With Robust Documentation Controls

Life sciences QMS platforms maintain digital records that track every change, update, and approval, preserving the chain of custody throughout the product lifecycle. This transparency is vital for demonstrating compliance during inspections.

Reducing Nonconformance With Automated Quality Control

Nonconformance incidents can lead to costly recalls, regulatory penalties, and reputational damage. Implementing a life sciences quality management system with real-time monitoring and automated alerts helps detect quality issues before they escalate.

Streamlining Corrective and Preventive Actions (CAPA)

When nonconformances are detected, the system triggers CAPA workflows to investigate root causes, implement corrective measures, and validate their effectiveness. This proactive approach significantly reduces recurrence rates.

Ensuring Compliance Through Configurable Workflows

Quality management software for life sciences is designed to support dynamic regulatory environments. By configuring workflows to align with FDA, EMA, and global standards, organizations can ensure continuous compliance without the need for extensive manual oversight.

Enabling Real-Time Audit Readiness

With comprehensive logging, automated documentation, and user access control, the system prepares companies for audits at any moment. This readiness builds confidence during regulatory inspections and supports compliance with data integrity guidelines.

Integrating Supplier Quality Management for Enhanced Collaboration

Suppliers are integral to the quality ecosystem. Life sciences QMS platforms integrate supplier management modules that facilitate transparent collaboration and robust quality checks.

Maintaining a Single, Collaborative Quality Platform

By bringing suppliers into the quality management ecosystem, organizations can ensure that raw materials and components meet stringent quality standards from the outset, reducing downstream risks.

Facilitating Change Management in Regulated Environments

Adapting to regulatory changes without disrupting operations is a persistent challenge. Life sciences quality management software offers configurable change management features that ensure controlled updates to processes and documentation.

Mitigating Risk With Real-Time Change Control

When changes are required, the system automatically evaluates potential impacts and triggers relevant approvals. This structured approach minimizes the risk of non-compliance during transition phases.

Leveraging Advanced Analytics for Quality Improvement

Data analytics within quality management software for life sciences enable organizations to identify trends, anticipate quality issues, and make data-driven decisions.

Using Predictive Insights to Prevent Recurring Issues

Advanced analytics help pinpoint root causes by analyzing data patterns, allowing quality teams to implement targeted improvements and reduce nonconformance incidents.

Conclusion: Why ComplianceQuest Is Essential for Life Sciences Quality Management in 2025

ComplianceQuest provides a comprehensive, cloud-native quality management software platform for life sciences, addressing the industry's unique data integrity and traceability challenges. By enabling automated data capture, robust traceability, and proactive compliance management, ComplianceQuest empowers life sciences companies to maintain regulatory readiness while driving operational excellence. In 2025 and beyond, leveraging a unified, cloud-based QMS platform will be essential for staying competitive and compliant.

0 notes

Our organization flourishes through partnerships rooted in shared values and ambitions. With quality at the center, we serve the Pharma, Agro, Fine, and Specialty Chemicals markets, offering complete solutions including high-quality CRO and CDMO services that drive innovation and performance.

#bioscience#OctaneX Labs#API clinical trial management system#intermediates manufacturers#chemicals API#fine chemical#synthesis#CDMO Companies#CDMO India#life science chemicals#pharmaceutical fine chemicals#capsules#chemicals#cro#cdmo#cdmo companies in india#cdmo services#science#chemical synthesis#chemistry#healthcare#cro services#CONTRACT#contract manufacturing#contract management software

0 notes

Generative AI: Revolutionizing Healthcare and Life Sciences

Imagine a future where AI crafts medical images, tailors personalized treatment plans, accelerates drug discovery, and creates synthetic patient data to drive groundbreaking research. That future is here.

Generative AI is unlocking unparalleled opportunities for innovation in healthcare and life sciences, reshaping how we heal, discover, and grow.

Curious about how this transformative technology can redefine your business? 👉 Dive into our blog to explore the game-changing impact of GenAI on life sciences.

0 notes