#ai data labeling

Text

Data Labeling Services | AI Data Labeling Company

AI models are only as effective as the data they are trained on. This service page explores how Damco’s data labeling services empower organizations to accelerate AI innovation through structured, accurate, and scalable data labeling.

Accelerate AI Innovation with Scalable, High-Quality Data Labeling Services

Accurate annotations are critical for training robust AI models. Whether the task is image recognition, natural language processing, or speech-to-text conversion, high-quality labeled data reduces model errors and improves performance.

Leverage Damco’s Data Labeling Services

Damco provides end-to-end annotation services tailored to your data type and use case.

Computer Vision: Bounding boxes, semantic segmentation, object detection, and more

NLP Labeling: Text classification, named entity recognition, sentiment tagging

Audio Labeling: Speaker identification, timestamping, transcription services
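For bounding-box work, one common way to check annotation accuracy is to compare two annotators' boxes for the same object using intersection over union (IoU). The sketch below is illustrative, not Damco's actual QA process. The COCO-style (x, y, width, height) box convention and the 0.5 agreement threshold are assumptions.

```python
from typing import Tuple

# Assumed COCO-style box: (x, y, width, height) in pixels, (x, y) = top-left corner.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes (0.0 = no overlap, 1.0 = identical)."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    # Width and height of the overlapping rectangle; zero if the boxes do not touch.
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def boxes_agree(a: Box, b: Box, threshold: float = 0.5) -> bool:
    """Treat two annotations of the same object as consistent if IoU meets the (assumed) threshold."""
    return iou(a, b) >= threshold


# Two annotators draw a box around the same car.
annotator_1 = (10.0, 20.0, 100.0, 50.0)
annotator_2 = (15.0, 22.0, 100.0, 50.0)
print(round(iou(annotator_1, annotator_2), 3))  # 0.838
print(boxes_agree(annotator_1, annotator_2))    # True
```

Pairs that fall below the threshold could be sent back for review or adjudication.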

Who Should Opt for Data Labeling Services?

Damco caters to diverse industries that rely on clean, labeled datasets to build AI solutions:

Autonomous Vehicles

Agriculture

Retail & Ecommerce

Healthcare

Finance & Banking

Insurance

Manufacturing & Logistics

Security, Surveillance & Robotics

Wildlife Monitoring

Benefits of Data Labeling Services

Precise Predictions with high-accuracy training datasets

Improved Data Usability across models and workflows

Scalability to handle projects of any size

Cost Optimization through flexible service models

Why Choose Damco for Data Labeling Services?

Reliable & High-Quality Outputs

Quick Turnaround Time

Competitive Pricing

Strict Data Security Standards

Global Delivery Capabilities

Discover how Damco’s data labeling can improve your AI outcomes. Schedule a consultation.

#data labeling#data labeling services#data labeling company#ai data labeling#data labeling companies

0 notes

Text

Scale AI's recent integration of Pesto AI's team marks a significant advancement in global AI talent integration.

Strategic Expansion of Talent Pool: By incorporating Pesto AI’s expertise in remote developer recruitment, Scale AI broadens its access to a diverse and skilled global talent pool. This move enhances Scale AI’s capacity to source and manage top-tier developers worldwide, aligning with its mission to advance AI technologies.

Enhanced Training and Upskilling Initiatives: Pesto AI’s focus on developer education complements Scale AI’s objectives by facilitating the upskilling of personnel. This integration ensures that developers are well-equipped to meet the evolving demands of AI data annotation and model evaluation, thereby improving the overall quality of AI solutions.

Improved Operational Efficiency: Leveraging Pesto AI’s methodologies in remote workforce management, Scale AI is poised to optimize project execution timelines and quality. This operational efficiency is crucial for maintaining a competitive edge in the fast-paced AI industry.

Positive Impact on the Global Developer Community: For developers worldwide, this acquisition opens up new opportunities for remote work and professional growth. It underscores the increasing recognition of global talent in contributing to cutting-edge AI developments, fostering a more inclusive and expansive AI community.

What is Scale AI focusing on with global talent integration?

Scale AI’s focus on global talent integration means it wants to:

Access top engineering talent worldwide, especially from emerging markets such as India, which has a large pool of skilled developers.

Build distributed teams that work remotely across different countries and time zones.

Create a sustainable, diverse talent pipeline that supports the company’s rapid growth and innovation.

Improve collaboration and productivity by integrating these global talents into Scale AI’s existing workforce and projects.

What does Scale AI aim to achieve with this strategy, and why?

By acquiring Pesto AI’s team (a platform known for training and placing remote engineers from India), Scale AI aims to:

Secure a steady stream of highly skilled engineers who are already trained and proven.

Reduce reliance on traditional, local hiring, which is competitive and often limited.

Speed up product development by having a larger, diverse team working around the clock.

Expand innovation capabilities by leveraging diverse experiences and skills from global talent.

Stay competitive in the global AI race by combining strong technology with world-class human capital.

#Scale AI#Pesto AI#AI talent integration#global developer workforce#AI acquisition#remote software engineers#developer upskilling#AI data labeling#machine learning#human-in-the-loop AI#AI workforce strategy#Scale AI acquisition#remote work in tech#AI industry growth#global tech talent

0 notes

Text

Decoding the Power of Speech: A Deep Dive into Speech Data Annotation

Introduction

In the realm of artificial intelligence (AI) and machine learning (ML), the importance of high-quality labeled data cannot be overstated. Speech data, in particular, plays a pivotal role in advancing various applications such as speech recognition, natural language processing, and virtual assistants. The process of enriching raw audio with annotations, known as speech data annotation, is a critical step in training robust and accurate models. In this in-depth blog, we'll delve into the intricacies of speech data annotation, exploring its significance, methods, challenges, and emerging trends.

The Significance of Speech Data Annotation

1. Training Ground for Speech Recognition: Speech data annotation serves as the foundation for training speech recognition models. Accurate annotations help algorithms understand and transcribe spoken language effectively.

2. Natural Language Processing (NLP) Advancements: Annotated speech data contributes to the development of sophisticated NLP models, enabling machines to comprehend and respond to human language nuances.

3. Virtual Assistants and Voice-Activated Systems: Applications like virtual assistants rely heavily on annotated speech data to provide seamless interactions and to understand user commands and queries accurately.

Methods of Speech Data Annotation

1. Phonetic Annotation: Phonetic annotation involves marking phonemes, the smallest units of sound that distinguish meaning in a given language. This method is fundamental for training speech recognition systems.

2. Transcription: Transcription involves converting spoken words into written text. Transcribed data is commonly used to train speech recognition models as well as natural language understanding and processing models.

3. Emotion and Sentiment Annotation: Beyond words, annotating speech for emotions and sentiments is crucial for applications like sentiment analysis and emotionally aware virtual assistants.

4. Speaker Diarization: Speaker diarization involves labeling who spoke when in an audio recording. This is essential for applications that must distinguish between multiple speakers, such as meeting transcription.
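Transcription and diarization labels are often stored together as time-aligned segments. The following minimal sketch assumes a hypothetical schema (a `Segment` with start, end, speaker, and text fields, times in seconds); it shows a basic validity check and a per-speaker talk-time summary, not the format of any particular annotation tool.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Segment:
    """One time-aligned annotation (hypothetical schema): who spoke, when, and what was said."""
    start: float  # seconds from the start of the recording
    end: float    # seconds from the start of the recording
    speaker: str  # diarization label, e.g. "spk_1"
    text: str     # transcription of this segment


def validate(segments: List[Segment]) -> List[str]:
    """Return readable problems, e.g. segments whose end is not after their start."""
    problems = []
    for i, seg in enumerate(segments):
        if seg.end <= seg.start:
            problems.append(f"segment {i}: end {seg.end} is not after start {seg.start}")
    return problems


def talk_time(segments: List[Segment]) -> Dict[str, float]:
    """Total annotated speaking time per speaker, in seconds."""
    totals: Dict[str, float] = defaultdict(float)
    for seg in segments:
        totals[seg.speaker] += seg.end - seg.start
    return dict(totals)


meeting = [
    Segment(0.0, 4.5, "spk_1", "Let's start with the budget."),
    Segment(4.5, 9.0, "spk_2", "Sure, I have the numbers here."),
    Segment(9.0, 11.0, "spk_1", "Great."),
]
print(validate(meeting))   # []
print(talk_time(meeting))  # {'spk_1': 6.5, 'spk_2': 4.5}
```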

Challenges in Speech Data Annotation

1. Accurate Annotation: Ensuring accuracy in annotations is a major challenge. Human annotators must be well-trained and consistent to avoid introducing errors into the dataset.

2. Diverse Accents and Dialects: Speech data can vary significantly in terms of accents and dialects. Annotating diverse linguistic nuances poses challenges in creating a comprehensive and representative dataset.

3. Subjectivity in Emotion Annotation: Emotion annotation is subjective and can vary between annotators. Developing standardized guidelines and training annotators for emotional context becomes imperative.
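Whether emotion guidelines are working can be measured with inter-annotator agreement. Cohen's kappa is one standard statistic for two annotators: it computes (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and p_e is the agreement expected by chance. A minimal sketch, with hypothetical emotion labels:

```python
from collections import Counter
from typing import Sequence


def cohens_kappa(a: Sequence[str], b: Sequence[str]) -> float:
    """Cohen's kappa for two annotators labeling the same items."""
    if len(a) != len(b) or not a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(a)
    # Observed agreement: fraction of items given the same label by both annotators.
    p_o = sum(x == y for x, y in zip(a, b)) / n
    # Chance agreement: both pick the same label independently, at their own label frequencies.
    ca, cb = Counter(a), Counter(b)
    p_e = sum(ca[label] * cb[label] for label in ca) / (n * n)
    if p_e == 1.0:
        return 1.0  # both used one identical label throughout; kappa is undefined, treat as full agreement
    return (p_o - p_e) / (1 - p_e)


annotator_a = ["happy", "happy", "neutral", "angry", "neutral", "happy"]
annotator_b = ["happy", "neutral", "neutral", "angry", "neutral", "happy"]
print(round(cohens_kappa(annotator_a, annotator_b), 2))  # 0.74
```

Values near 1 indicate strong agreement; values near 0 mean the annotators agree no more than chance would predict, which suggests the guidelines need refinement.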

Emerging Trends in Speech Data Annotation

1. Transfer Learning for Speech Annotation: Transfer learning techniques are increasingly being applied to speech data annotation, leveraging pre-trained models to improve efficiency and reduce the need for extensive labeled data.

2. Multimodal Annotation: Integrating speech data annotation with other modalities such as video and text is becoming more common, allowing for a richer understanding of context and meaning.

3. Crowdsourcing and Collaborative Annotation Platforms: Crowdsourcing platforms and collaborative annotation tools are gaining popularity, enabling the collective efforts of annotators worldwide to annotate large datasets efficiently.
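Crowdsourced labels are usually collected redundantly and then aggregated. A simple approach, shown here as a sketch with assumed labels and an assumed majority threshold, is majority voting, with items that lack a clear majority routed to an expert reviewer. More sophisticated aggregation methods weight each annotator by their estimated reliability.

```python
from collections import Counter
from typing import Dict, List, Optional


def majority_vote(votes: List[str], min_share: float = 0.5) -> Optional[str]:
    """Return the most common label if it wins more than `min_share` of votes, else None."""
    if not votes:
        return None
    label, count = Counter(votes).most_common(1)[0]
    return label if count / len(votes) > min_share else None


def aggregate(clip_votes: Dict[str, List[str]]) -> Dict[str, Optional[str]]:
    """Aggregate crowd votes per audio clip; None marks clips that need expert review."""
    return {clip: majority_vote(votes) for clip, votes in clip_votes.items()}


# Hypothetical task: is there background noise in each clip?
crowd = {
    "clip_001": ["noisy", "noisy", "clean"],
    "clip_002": ["noisy", "clean"],
    "clip_003": ["clean", "clean", "clean", "noisy"],
}
print(aggregate(crowd))
# {'clip_001': 'noisy', 'clip_002': None, 'clip_003': 'clean'}
```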

Wrapping it up!

In conclusion, speech data annotation is a cornerstone in the development of advanced AI and ML models, particularly in the domain of speech recognition and natural language understanding. The ongoing challenges in accuracy, diversity, and subjectivity necessitate continuous research and innovation in annotation methodologies. As technology evolves, so too will the methods and tools used in speech data annotation, paving the way for more accurate, efficient, and context-aware AI applications.

At ProtoTech Solutions, we offer cutting-edge Data Annotation Services, leveraging our expertise to annotate diverse datasets for AI/ML training. Our precise annotations enhance model accuracy, enabling businesses to unlock the full potential of machine learning applications. Trust ProtoTech for meticulous data labeling and accelerated AI innovation.

#speech data annotation#Speech data#artificial intelligence (AI)#machine learning (ML)#speech#Data Annotation Services#labeling services for ml#ai/ml annotation#annotation solution for ml#data annotation machine learning services#data annotation services for ml#data annotation and labeling services#data annotation services for machine learning#ai data labeling solution provider#ai annotation and data labelling services#data labelling#ai data labeling#ai data annotation

0 notes

Note

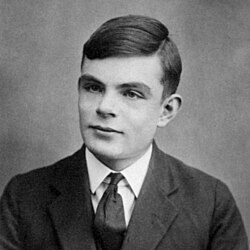

you know what i dont think theres anything wrong with these guys

also GOD the amount of ai search results for alan turing is so STUPID, like he birthed modern computing as a WHOLE, not just shitty ai, hell AI was a pipe dream at that point in the computing world

waves alan turing hiiiii alan turing

#lemon speaks#also Yeah that is dumb . you could attribute 'early ai' to like fifty billion different people#because it's just a specific method of data collection and regurgitation#like. we've had the data Collection bit for ages#the new bit is. getting it to spew out something passable based off of what its been fed. (and also the label of 'ai' as its used now)#alan turing did not in fact do all that .keep him outta this...

3 notes

Text

Just saw an ad for an "AI powered" fertility hormone tracker and I'm about to go full Butlerian jihad.

#in reality this gadget is probably not doing anything other fertility trackers aren't already#the AI label is just marketing bs#like it always is#anyway ladies you don't need to hand your cycle data over to Big Tech#paper charts work just fine

9 notes

Text

Top Data Annotation Companies for Agritech in 2025

Labeled agricultural data helps AI algorithms make informed decisions, supporting farmers in monitoring fields and increasing productivity.

Annotating agricultural data (sensor readings, images, etc.) with relevant information allows AI systems to see and understand the crucial details of a farm environment. Do you want to explore where to outsource data labeling services tailored to your agricultural needs? You are reading the right blog: we list the top data annotation companies for agriculture in 2025. Let’s delve deeper!

0 notes

Text

of COURSE builder.ai was exploiting and concealing the labor of Indian software developers. of COURSE.

#the tech industry has a huge amount of shit like this#especially ai tools#from underpaid data labelers to shit like this

1 note

Text

The Role of Content Moderation in Online Advertising: A Case Study

Introduction to Content Moderation

The digital landscape is a bustling marketplace, teeming with brands vying for attention. In this dynamic arena, content moderation services have become essential. They serve as the gatekeepers of online advertising, ensuring that messages are not just seen but also resonate positively with audiences. Imagine scrolling through your favorite platform and encountering an ad that feels out of place or offensive. This experience can tarnish a brand's reputation in an instant. As marketers navigate these challenges, understanding the role of content moderation becomes crucial. It not only protects brands but also enhances user experience. Join us as we explore how effective content moderation shapes online advertising strategies and safeguards brand integrity in today’s fast-paced digital world.

The Impact of Inappropriate Advertising on Brands

Inappropriate advertising can have devastating effects on brands. When ads appear alongside offensive or controversial content, the brand's reputation often takes a hit. Consumers may perceive the brand as insensitive or out of touch. This misalignment can lead to lost trust and loyalty. Customers expect brands to reflect their values, and any deviation can trigger backlash. Social media amplifies this issue, enabling rapid spread of negative sentiment. Moreover, financial repercussions are common. Brands may face decreased sales as a result of damaged reputations. Companies might also incur costs from crisis management efforts aimed at restoring consumer confidence. Navigating these pitfalls requires vigilance in monitoring ad placements and the surrounding content. An effective strategy involves implementing robust content moderation services that ensure advertisements align with brand image and values while fostering positive engagement with target audiences.
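One way to make "monitoring ad placements and the surrounding content" concrete is to compare the moderation labels attached to a page with each advertiser's list of excluded categories before an ad is served. This is a minimal sketch; the category names, the `Advertiser` type, and the assumption that pages already carry moderation labels are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class Advertiser:
    name: str
    # Content categories this brand never wants to appear next to (hypothetical taxonomy).
    excluded_categories: Set[str] = field(default_factory=set)


def placement_conflicts(page_labels: Set[str], advertiser: Advertiser) -> Set[str]:
    """Page labels that clash with the advertiser's exclusions (an empty set means safe)."""
    return page_labels & advertiser.excluded_categories


def eligible_ads(page_labels: Set[str], advertisers: List[Advertiser]) -> List[str]:
    """Names of advertisers whose ads can be placed on this page."""
    return [a.name for a in advertisers if not placement_conflicts(page_labels, a)]


brands = [
    Advertiser("family_cereal", {"violence", "adult", "hate_speech"}),
    Advertiser("action_game", {"hate_speech"}),
]
# Labels assumed to come from upstream moderation (human, automated, or both).
page = {"violence", "gaming"}
print(eligible_ads(page, brands))  # ['action_game']
```

The check is only as good as the labels feeding it, which is why the quality of the underlying moderation matters.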

The Importance of Content Moderation in Online Advertising

Content moderation is a critical aspect of online advertising. It ensures that the ads displayed are appropriate and relevant and that they align with brand values. Inappropriate content can damage a brand’s reputation. A single offensive ad can lead to public backlash, affecting customer trust and loyalty. Brands need to safeguard their image in an increasingly digital landscape. Effective moderation helps maintain a positive user experience. When users see relevant ads without inappropriate content, they feel more engaged and valued. This can lead to higher conversion rates. Moreover, robust content moderation services assist platforms in complying with legal regulations. Adhering to guidelines protects both users and advertisers from potential pitfalls related to harmful or misleading content. Investing in quality moderation not only enhances brand integrity but also fosters healthier online communities where constructive dialogue thrives without fear of encountering objectionable material.

Case Study: YouTube's Content Moderation Policies

YouTube, one of the largest video-sharing platforms, has faced significant challenges with content moderation. Its policies aim to create a safe environment for users and advertisers alike. In recent years, YouTube has ramped up efforts to tackle inappropriate content. The platform employs advanced algorithms alongside human moderators. This dual approach helps identify harmful videos quickly and efficiently. It also has a direct impact on advertising, because brands want assurance that their messages appear in suitable contexts. When ads run alongside offensive or misleading content, trust erodes. YouTube’s transparency reports reflect ongoing improvements in its moderation practices. They reveal how many videos are removed and why, giving brands insights into the enforcement process. Despite these advancements, controversies remain over claims of bias and censorship. Balancing user freedom with brand safety remains a tightrope walk for YouTube's team.

How Other Platforms Handle Content Moderation

Different platforms adopt varied approaches to content moderation, tailored to their unique audiences and goals. Facebook employs a mix of automated tools and human reviewers. This combination helps it address harmful content swiftly while still allowing for nuanced judgment. Twitter takes a more community-driven route. It empowers users with reporting tools that let them flag inappropriate material, and it emphasizes transparency, often sharing regular updates about enforcement actions. Instagram emphasizes visual integrity: with advanced image recognition technology, it filters out objectionable visuals before they even reach users’ feeds. TikTok combines algorithmic assessments with user feedback mechanisms and strives for rapid response times to keep its vibrant community safe from misleading or offensive content. Each platform’s strategy reflects its identity and values while navigating the fine line between freedom of expression and maintaining a respectful environment.

Challenges and Controversies Surrounding Content Moderation in Advertising

Content moderation in advertising faces numerous challenges. One major issue is the balance between free expression and protecting brands from harmful content. Companies often struggle to define what constitutes inappropriate material, leading to inconsistencies. Additionally, automated moderation systems can misinterpret context, producing false positives or false negatives that may harm brand reputation. Public backlash also complicates matters: users frequently express dissatisfaction with perceived censorship, while brands fear being associated with controversial topics. There is also growing demand for transparency. Advertisers want clarity on moderation practices, but platforms often need to keep parts of their enforcement confidential. As social media evolves, so do the tactics employed by those seeking to exploit loopholes in content policies. Adapting to these shifting dynamics remains a constant battle for companies offering content moderation services.
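The trade-off between false positives (acceptable content wrongly blocked) and false negatives (harmful content missed) can be quantified by checking automated flags against a human-reviewed sample. A minimal sketch, assuming a simple binary "harmful / not harmful" label; real moderation taxonomies are usually multi-class.

```python
from typing import Dict, Sequence


def moderation_metrics(predicted: Sequence[bool], actual: Sequence[bool]) -> Dict[str, float]:
    """Compare automated 'harmful' flags with human-reviewed ground truth."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    pairs = list(zip(predicted, actual))
    tp = sum(1 for p, a in pairs if p and a)      # harmful and caught
    fp = sum(1 for p, a in pairs if p and not a)  # acceptable but blocked
    fn = sum(1 for p, a in pairs if a and not p)  # harmful but missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return {"false_positives": fp, "false_negatives": fn,
            "precision": precision, "recall": recall}


automated = [True, True, False, True, False, False]
human = [True, False, False, True, True, False]
m = moderation_metrics(automated, human)
print(m["false_positives"], m["false_negatives"])       # 1 1
print(round(m["precision"], 2), round(m["recall"], 2))  # 0.67 0.67
```

Lowering the flagging threshold of an automated system typically raises recall at the cost of precision, so the right balance depends on how costly each kind of error is for the platform and its advertisers.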

The Future of Content Moderation in Online Advertising

The future of content moderation in online advertising is poised for transformation. As technology advances, artificial intelligence will play a larger role in identifying inappropriate content. Algorithms are becoming smarter and more responsive to nuanced contexts. Real-time moderation tools will empower brands to react instantly to potential issues. This agility can safeguard brand reputation effectively while maintaining user engagement. Human oversight remains crucial, however. The balance between AI efficiency and human judgment ensures that subtlety isn’t lost in automated processes. Additionally, regulatory frameworks are evolving. Advertisers must adapt to new guidelines surrounding transparency and ethical practices in targeting audiences. As consumer expectations shift towards authenticity, companies may increasingly prioritize responsible ad placements alongside effective moderation strategies. These changes promise a dynamic landscape where trust becomes foundational for digital marketing success.

Conclusion

Content moderation plays a crucial role in the online advertising landscape. It helps safeguard brands from inappropriate or harmful content that can tarnish their reputation. As seen with YouTube, effective content moderation policies are essential to maintaining advertiser trust and ensuring a safe environment for users. Other platforms have also developed their own strategies to tackle this issue, but challenges remain. The balance between free expression and protecting advertisers is delicate and often controversial. Navigating these waters requires constant adaptation as new issues arise. Looking ahead, the need for robust content moderation services will only grow as digital advertising continues to evolve. Brands must stay vigilant about where they place their ads and how those environments are managed. This ongoing commitment will ensure that both users and advertisers benefit from healthier online interactions. The future of online advertising depends on how well we handle these complexities today. With thoughtful approaches to content moderation, brands can thrive while fostering safer spaces across all digital platforms.

#content moderation services#human moderation#content moderation company#content moderation solution#generative ai services#generative ai solution#data labeling services

0 notes

Text

should you delete twitter and get bluesky? (or just get a bluesky in general)? here's what i've found:

yes. my answer was no before bc the former CEO of twitter who also sucked, jack dorsey, was on the board, but he left as of may 2024, and things have gotten a lot better. also a lot of japanese and korean artists have joined

don't delete your twitter. lock your account, use a service to delete all your tweets, delete the app off of your phone, and keep your account/handle so you can't be impersonated.

get a bluesky with the same handle, even if you won't use it, also so you won't be impersonated.

get the sky follower bridge extension for chrome or firefox. you can find everyone you follow on twitter AND everyone you blocked so you don't have to start fresh: https://skyfollowerbridge.com/

learn how to use its moderation tools (labelers, block lists, NSFW settings) so you can immediately cut out the grifters, fascists, t*rfs, AI freaks, have the NSFW content you want to see if you so choose, and moderate for triggers. here's a helpful thread with a lot of tools.

the bluesky phone app is pretty good, but there is also tweetdeck for bluesky, called https://deck.blue/ on desktop, if you miss tweetdeck.

bluesky has explicitly stated they do not use your data to train generative AI, which is nice to hear from an up and coming startup. obviously we can’t trust these companies and please use nightshade and glaze, but it’s good to hear.

21K notes

Text

The Ethics Of Data Annotation: Addressing Bias And Fairness

In the era of artificial intelligence and machine learning, data annotation plays a crucial role in shaping the performance and fairness of AI models. However, the ethical implications of data annotation must be considered. Ensuring ethical data annotation, addressing data annotation bias, and promoting fairness in data labeling are essential for creating unbiased and fair AI systems.

In this blog, we will explore the importance of ethical data annotation, strategies for reducing bias in AI, and the role of data annotation companies in India, like EnFuse Solutions, in setting high standards for fairness and accuracy.

Understanding Data Annotation And Its Ethical Implications

Data annotation involves labeling data to train AI models, enabling them to recognize patterns and make decisions. The quality and fairness of these annotations directly impact the performance and ethicality of AI systems. Ethical data annotation practices are vital to prevent the propagation of biases that can lead to discriminatory outcomes in AI applications.

Identifying And Addressing Data Annotation Bias

Data annotation bias occurs when the labels applied to training data reflect the prejudices of the annotators or the dataset itself. This bias can manifest in various forms, such as gender, racial, or cultural biases, and can lead to unfair AI predictions and decisions. To mitigate data annotation bias, it is essential to:

1. Diversify Annotator Teams: Ensuring that annotator teams are diverse can help bring multiple perspectives and reduce the risk of biased annotations. A diverse team can better understand and address cultural and social nuances.

2. Implement Annotator Training: Providing comprehensive training for annotators on recognizing and avoiding biases is crucial. This training should include guidelines on ethical data annotation practices and the importance of fairness in data labeling.

3. Use Bias Detection Tools: Employing tools that can detect and flag potential biases in annotated data can help maintain the quality and fairness of the dataset. Regular audits of the annotated data can identify and rectify biases.
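A bias detection check can be as simple as comparing label rates across groups of items. The sketch below assumes each annotated item carries a hypothetical group attribute (here, the dialect of a comment) and a binary label (1 = labeled toxic). The 0.8 cut-off loosely follows the "four-fifths" rule of thumb and is an assumption. A gap is a signal for an audit, not proof of bias, since groups can legitimately differ.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def positive_rate_by_group(records: Iterable[Tuple[str, int]]) -> Dict[str, float]:
    """Share of items labeled 1 within each group."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, label in records:
        totals[group] += 1
        positives[group] += label
    return {g: positives[g] / totals[g] for g in totals}


def flag_disparity(rates: Dict[str, float], min_ratio: float = 0.8) -> bool:
    """Flag for audit if the lowest group rate is below `min_ratio` times the highest."""
    highest, lowest = max(rates.values()), min(rates.values())
    return highest > 0 and lowest / highest < min_ratio


records = (
    [("dialect_a", 1)] * 2 + [("dialect_a", 0)] * 8
    + [("dialect_b", 1)] * 5 + [("dialect_b", 0)] * 5
)
rates = positive_rate_by_group(records)
print(rates)                  # {'dialect_a': 0.2, 'dialect_b': 0.5}
print(flag_disparity(rates))  # True
```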

Promoting Fairness In Data Labeling

Fairness in data labeling ensures that AI models do not favor or discriminate against any particular group. This can be achieved by:

1. Establishing Clear Annotation Standards: Developing and adhering to standardized annotation guidelines can help ensure consistency and fairness in data labeling. These standards should emphasize the importance of neutrality and objectivity.

2. Conducting Regular Reviews: Regular reviews and quality checks of the annotated data can help maintain high ethical standards. Involving external reviewers can provide an unbiased assessment of the annotations.

3. Ensuring Transparency: Maintaining transparency in the data annotation process allows for accountability and trust. Documenting the annotation guidelines, procedures, and decision-making processes can help build confidence in the fairness of the labeled data.

The Role Of Data Annotation Companies In India

Data annotation companies in India, such as EnFuse Solutions, are at the forefront of promoting ethical AI practices. These companies are committed to delivering high-quality, unbiased, and fair data annotations that adhere to stringent ethical standards. EnFuse Solutions, for instance, emphasizes the importance of ethical AI training data and employs robust strategies to prevent annotator bias.

Conclusion

The ethics of data annotation are paramount in creating fair and unbiased AI systems. Addressing data annotation bias, promoting fairness in data labeling, and implementing ethical AI practices are essential steps in this direction. By adhering to high ethical standards, data annotation companies in India, like EnFuse Solutions, are setting a benchmark for fairness and accuracy in AI training data.

As we continue to advance in the field of AI, ensuring ethical data annotation will be crucial in building AI systems that are not only intelligent but also just and equitable.

#Data Annotation#Ethical Data Annotation#Data Annotation Bias#Fairness In Data Labeling#Reducing Bias In AI#Ethical AI Practices#Bias In Machine Learning#Annotator Bias Prevention#Ethical AI Training Data#Data annotation Standards#Data Annotation Companies in India#EnFuse Solutions

0 notes

Text

A leading predictive biotechnology research company developing AI-powered models for drug toxicity sought to evaluate whether its in silico systems could accurately detect Drug-Induced Liver Injury (DILI) using real-world clinical narratives, 2D molecular structure data, and high-content imaging. However, early model development was hindered by inconsistent image annotations, which introduced ambiguity in key cytotoxic phenotypes such as ER stress and cell death. These inconsistencies limited both the model’s accuracy and its interpretability.

0 notes

Text

Precision Data Labeling for AI Success

At Green Rider Technology, we specialize in data labeling, a delicate process central to building very high-quality AI solutions.

We turn raw data into well-annotated, high-quality datasets that take the performance and precision of AI models to a higher level.

We are your reliable partner based in India, offering highly customized AI solutions designed exclusively for your needs, with worldwide impact. Count on Green Rider Technology to provide the very best in data and the latest in AI results, with every project supported by the highest level of quality and precision.

1 note

Text

Generative AI | High-Quality Human Expert Labeling | Apex Data Sciences

Apex Data Sciences combines cutting-edge generative AI with reinforcement learning from human feedback (RLHF) for superior data labeling solutions. Get high-quality labeled data for your AI projects.

#GenerativeAI#AIDataLabeling#HumanExpertLabeling#High-Quality Data Labeling#Apex Data Sciences#Machine Learning Data Annotation#AI Training Data#Data Labeling Services#Expert Data Annotation#Quality AI Data#Generative AI Data Labeling Services#High-Quality Human Expert Data Labeling#Best AI Data Annotation Companies#Reliable Data Labeling for Machine Learning#AI Training Data Labeling Experts#Accurate Data Labeling for AI#Professional Data Annotation Services#Custom Data Labeling Solutions#Data Labeling for AI and ML#Apex Data Sciences Labeling Services

1 note

Text

Human vs. Automated Data Labeling: How to Choose the Right Approach

Today, technology is evolving rapidly, making it crucial to choose the right data labeling approach when building datasets to train AI models.

In our article, we have discussed human vs. automated data labeling and how to select the best approach for your AI models. We have also explored the benefits and limitations of both methods, providing you with a clear understanding of which one to choose.
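A common compromise between the two approaches is a hybrid workflow: a model pre-labels everything, and only low-confidence items go to people. This sketch is illustrative rather than the method from the article; the `Prediction` fields and the 0.9 threshold are assumptions, and in practice the threshold would be tuned on a human-reviewed sample.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float  # model's probability for `label`, in [0, 1]


def route(preds: List[Prediction], threshold: float = 0.9) -> Tuple[List[Prediction], List[Prediction]]:
    """Split model pre-labels into auto-accepted items and items sent to human annotators."""
    auto = [p for p in preds if p.confidence >= threshold]
    human = [p for p in preds if p.confidence < threshold]
    return auto, human


batch = [
    Prediction("img_1", "cat", 0.97),
    Prediction("img_2", "dog", 0.62),
    Prediction("img_3", "cat", 0.91),
]
auto, human = route(batch)
print([p.item_id for p in auto])   # ['img_1', 'img_3']
print([p.item_id for p in human])  # ['img_2']
```

Raising the threshold sends more items to humans, which usually costs more but catches more model mistakes; lowering it does the opposite.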

#Data Labeling#Human Labeling#Automated Labeling#Machine Learning#AI Data Annotation#Data Quality#Efficiency in Data Labeling#Labeling Techniques#AI Training Data#Data Annotation Tools#Data Labeling Best Practices#Cost of Data Labeling#Hybrid Labeling Methods

0 notes

Text

Content Moderation Services in the Era of Deepfakes and AI-Generated Content

Introduction to content moderation services

Welcome to the era where reality and fiction blur seamlessly, thanks to the rapid advancements in technology. Content moderation services have become indispensable in navigating through the sea of online content flooded with deepfakes and AI-generated materials. As we delve into this digital landscape, let's explore the impact of these technologies on online platforms and how content moderators are tackling the challenges they present.

The impact of deepfakes and AI-generated content on online platforms

In today's digital age, the proliferation of deepfakes and AI-generated content has significantly impacted online platforms. These advanced technologies have made it increasingly challenging to distinguish between genuine and fabricated content, leading to misinformation and manipulation on a mass scale. The rise of deepfakes poses serious threats to individuals, businesses, and even governments as malicious actors can exploit these tools to spread false information or defame others. Online platforms are now facing the daunting task of ensuring the authenticity and credibility of the content shared by users amidst this growing trend of deception. AI-generated content, on the other hand, has revolutionized how information is created and disseminated online. While it offers numerous benefits in terms of efficiency and creativity, there are also concerns regarding its potential misuse for spreading propaganda or fake news. As we navigate this complex landscape of evolving technologies, it becomes imperative for content moderation services to adapt and leverage innovative solutions to combat the negative repercussions of deepfakes and AI-generated content on online platforms.

Challenges faced by content moderators in identifying and removing fake or harmful content

Content moderators face a myriad of challenges in identifying and removing fake or harmful content on online platforms. With the advancement of deepfake technology and AI-generated content, distinguishing between what is real and what is fabricated has become increasingly difficult. The speed at which misinformation spreads further complicates the moderation process, requiring quick action to prevent its viral spread. Moreover, malicious actors are constantly evolving their tactics to bypass detection algorithms, making it a constant cat-and-mouse game for content moderators. The sheer volume of user-generated content uploaded every minute adds another layer of complexity, as manual review becomes almost impossible without technological assistance. The psychological toll on human moderators cannot be overlooked either, as they are exposed to graphic violence, hate speech, and other disturbing material on a daily basis. This can lead to burnout and compassion fatigue if not properly addressed by support systems in place.

How technology is being used to combat the rise of deepfakes and AI-generated content

In the ongoing battle against deepfakes and AI-generated content, technology plays a pivotal role. Machine learning models are deployed to detect inconsistencies in videos and images that indicate manipulation. These systems analyze signals such as facial expressions, voice patterns, and contextual cues, and flag potentially fake content for review by human moderators. Blockchain technology is also being explored as a way to create tamper-proof records of original content. In addition, platforms are investing in tools that can authenticate the source of media files and trace how those files spread across the internet. By leveraging artificial intelligence, content moderation services keep evolving to stay ahead of malicious actors who create deceptive content.
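The "tamper-proof record" idea rests on a simple mechanism: each record stores a fingerprint of the media plus the hash of the record before it, so editing any earlier entry breaks every link after it. Below is a minimal Python sketch of that mechanism, assuming SHA-256 fingerprints of the raw media bytes. `ProvenanceLog` and its methods are hypothetical names, and this is a single-machine hash chain, not a distributed blockchain or any platform's actual system.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


@dataclass
class ProvenanceLog:
    """Hypothetical append-only log; each entry commits to the previous entry's hash."""
    entries: list = field(default_factory=list)

    @staticmethod
    def _digest(record: dict) -> str:
        # Canonical JSON (sorted keys) so the same record always hashes identically.
        payload = json.dumps(record, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def append(self, media_bytes: bytes, source: str) -> str:
        """Register original media and return the new entry's hash."""
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        record = {
            "media_sha256": hashlib.sha256(media_bytes).hexdigest(),
            "source": source,
            "prev_hash": prev_hash,
        }
        record["hash"] = self._digest(record)
        self.entries.append(record)
        return record["hash"]

    def verify(self) -> bool:
        """Recompute every hash and link; any edited entry makes this False."""
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or self._digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True

    def is_registered(self, media_bytes: bytes) -> bool:
        """True only if these exact bytes were registered as an original."""
        digest = hashlib.sha256(media_bytes).hexdigest()
        return any(e["media_sha256"] == digest for e in self.entries)


log = ProvenanceLog()
log.append(b"original clip", "newsroom-camera-01")
print(log.verify())                         # True
print(log.is_registered(b"edited clip"))    # False
```

One design caveat: an exact hash only proves a file is byte-identical to a registered original. A re-encoded or resized copy hashes differently, so real provenance systems typically pair this kind of log with signed metadata or perceptual matching.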

The role of human moderators in content moderation services

Human moderators play a crucial role in content moderation services because they understand context, nuance, and cultural sensitivities that technology may struggle with. This expertise lets them make judgment calls on complex cases that automated systems might miss. Through experience and training, they can spot subtle signs of manipulation or misinformation that AI algorithms may not detect, and they bring a human perspective that helps ensure removal decisions are made thoughtfully and ethically. Human moderators also help create safer online environments by upholding community guidelines and fostering healthy discussion. By distinguishing genuine content from harmful or misleading information, they help maintain credibility and trust on online platforms. In a digital landscape filled with deepfakes and AI-generated content, human moderators remain irreplaceable in safeguarding the integrity of online spaces.

Benefits and limitations of using technology for content moderation

Technology has transformed content moderation services and offers clear benefits. Automated tools can quickly scan vast amounts of data for potentially fake or harmful content, making the moderation process faster and more efficient. Because they apply the same rules to every item, they also make decisions more consistent, reducing individual human error and bias. However, technology has limitations. AI algorithms may struggle to distinguish sophisticated deepfakes from authentic content, producing false positives (genuine content flagged as fake) or false negatives (fakes that slip through). These tools also lack the contextual understanding and emotional intelligence that human moderators bring, so they can misread nuanced situations. Despite these limitations, technology is essential for handling the growing volume of deceptive content online. By combining AI with human expertise, platforms can take a more comprehensive approach to keeping their users safe.
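One common way to combine automation with human judgment is confidence-based triage: the model acts on its own only when it is very sure, and everything in the ambiguous middle goes to a human queue. The Python sketch below illustrates that routing. It assumes a detector that outputs a score in [0, 1] for "likely manipulated or harmful"; the `TriagePolicy` name and the 0.95 / 0.20 thresholds are hypothetical values chosen for illustration, not figures from any real platform.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    AUTO_REMOVE = "auto_remove"
    HUMAN_REVIEW = "human_review"
    AUTO_ALLOW = "auto_allow"


@dataclass(frozen=True)
class TriagePolicy:
    # Hypothetical thresholds on a detector score in [0, 1].
    remove_at: float = 0.95    # at or above: confident enough to act automatically
    allow_below: float = 0.20  # below: confident enough to leave the content up

    def route(self, score: float) -> Decision:
        if not 0.0 <= score <= 1.0:
            raise ValueError(f"score must be in [0, 1], got {score}")
        if score >= self.remove_at:
            return Decision.AUTO_REMOVE
        if score < self.allow_below:
            return Decision.AUTO_ALLOW
        # Ambiguous middle band: a human moderator supplies the context the model lacks.
        return Decision.HUMAN_REVIEW


def triage(scores: dict[str, float], policy: TriagePolicy) -> dict[Decision, list[str]]:
    """Split scored items into one queue per decision."""
    queues: dict[Decision, list[str]] = {d: [] for d in Decision}
    for item_id, score in scores.items():
        queues[policy.route(score)].append(item_id)
    return queues


queues = triage({"post-1": 0.98, "post-2": 0.55, "post-3": 0.05}, TriagePolicy())
print(queues[Decision.HUMAN_REVIEW])  # ['post-2']
```

Widening the middle band sends more items to people, which trades moderator workload for fewer false positives and negatives. Where to set the thresholds is a policy decision, not something the code can settle.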

Future implications and advancements in content moderation services

As technology evolves, content moderation services are likely to see significant advances. As deepfakes and AI-generated content grow more sophisticated, more capable detection tools are needed. In the coming years, we can expect machine learning models that distinguish real from fake content with higher accuracy, helping platforms stay ahead of malicious actors who spread misinformation or harmful material. Blockchain technology may also provide a secure way to track and verify the authenticity of digital content, making it harder for fake news or manipulated media to spread unchecked. As generative AI services become more prevalent, content moderation providers will need to invest in solutions that can reliably identify and remove AI-generated content from their platforms. Taken together, these trends point to a continuing arms race between technological innovation and malicious actors seeking to exploit vulnerabilities in online spaces.

Conclusion: The importance of reliable content moderation services

Content moderation services play a crucial role in maintaining the integrity and safety of online platforms. As deepfakes and AI-generated content continue to pose challenges, content moderators must adapt and use technology effectively. By combining the strengths of advanced algorithms and human judgment, content moderation services can stay ahead of malicious actors seeking to spread fake or harmful content. The importance of investing in reliable content moderation services cannot be overstated: with the right tools, strategies, and expertise, online platforms can create a safer environment for users to engage with one another. As technology continues to evolve, so must our approach to combating misinformation and harmful content online. By staying vigilant and proactive, we can help keep the digital world a place where authenticity prevails over deception.

Pollution Annotation / Pollution Detection

Pollution annotation involves labeling environmental data to identify and classify pollutants. This includes marking specific areas in images or videos and categorizing pollutant types.

### Key Aspects

- **Image/Video Labeling:** Using bounding boxes, polygons, keypoints, and semantic segmentation.
- **Data Tagging:** Adding metadata about pollutants.
- **Quality Control:** Ensuring annotation accuracy and consistency.

### Applications

- Environmental monitoring
- Research
- Training machine learning models

Pollution annotation is crucial for effective pollution detection, monitoring, and mitigation strategies.

AigorX Data annotations, Data Labeler, DataAnnotation, Data Annotation and Labeling.inc (DAL)

Fiverr link: https://lnkd.in/gM2bHqWX
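To make the bounding-box labeling, data tagging, and quality-control steps more concrete, here is a minimal Python sketch of one annotation record and an inter-annotator agreement check. It assumes axis-aligned pixel boxes and uses intersection over union (IoU), a standard overlap measure for comparing boxes. The field names, pollutant labels such as `"oil_slick"`, and the 0.5 IoU cutoff are illustrative assumptions rather than part of any specific annotation tool or service.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoxAnnotation:
    # Axis-aligned box in pixels: (x_min, y_min) is top-left, (x_max, y_max) bottom-right.
    x_min: float
    y_min: float
    x_max: float
    y_max: float
    pollutant: str       # hypothetical class label, e.g. "oil_slick", "plastic_debris"
    source_id: str = ""  # hypothetical metadata tag, e.g. image or video-frame ID

    def area(self) -> float:
        return max(0.0, self.x_max - self.x_min) * max(0.0, self.y_max - self.y_min)


def iou(a: BoxAnnotation, b: BoxAnnotation) -> float:
    """Intersection over union: 0.0 for disjoint boxes, 1.0 for identical ones."""
    ix = max(0.0, min(a.x_max, b.x_max) - max(a.x_min, b.x_min))
    iy = max(0.0, min(a.y_max, b.y_max) - max(a.y_min, b.y_min))
    inter = ix * iy
    union = a.area() + b.area() - inter
    return inter / union if union > 0 else 0.0


def annotators_agree(a: BoxAnnotation, b: BoxAnnotation, min_iou: float = 0.5) -> bool:
    """QC check: two annotators agree if they chose the same class and their boxes overlap enough."""
    return a.pollutant == b.pollutant and iou(a, b) >= min_iou


first = BoxAnnotation(0, 0, 10, 10, "oil_slick", "frame-001")
second = BoxAnnotation(1, 0, 11, 10, "oil_slick", "frame-001")
print(round(iou(first, second), 3))      # 0.818
print(annotators_agree(first, second))   # True
```

In a review workflow, pairs that fail this check would typically be sent back for adjudication rather than silently dropped. Polygon and segmentation labels need area-based overlap instead of this box formula.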

#image annotation services#artificial intelligence#annotation#machinelearning#annotations#ai data annotator#ai image#ai#ai data annotator jobs#data annotator#video annotation#image labeling
