#speech data annotation


Text

Decoding the Power of Speech: A Deep Dive into Speech Data Annotation

Introduction

In the realm of artificial intelligence (AI) and machine learning (ML), the importance of high-quality labeled data cannot be overstated. Speech data, in particular, plays a pivotal role in advancing various applications such as speech recognition, natural language processing, and virtual assistants. The process of enriching raw audio with annotations, known as speech data annotation, is a critical step in training robust and accurate models. In this in-depth blog, we'll delve into the intricacies of speech data annotation, exploring its significance, methods, challenges, and emerging trends.

The Significance of Speech Data Annotation

1. Training Ground for Speech Recognition: Speech data annotation serves as the foundation for training speech recognition models. Accurate annotations help algorithms understand and transcribe spoken language effectively.

2. Natural Language Processing (NLP) Advancements: Annotated speech data contributes to the development of sophisticated NLP models, enabling machines to comprehend and respond to human language nuances.

3. Virtual Assistants and Voice-Activated Systems: Applications like virtual assistants rely heavily on annotated speech data to provide seamless interactions and to understand user commands and queries accurately.

Methods of Speech Data Annotation

1. Phonetic Annotation: Phonetic annotation involves marking the phonemes, the smallest units of sound that distinguish meaning in a given language. This method is fundamental for training speech recognition systems.

2. Transcription: Transcription involves converting spoken words into written text. Transcribed data is commonly used for training models in natural language understanding and processing.

3. Emotion and Sentiment Annotation: Beyond words, annotating speech for emotions and sentiments is crucial for applications like sentiment analysis and emotionally aware virtual assistants.

4. Speaker Diarization: Speaker diarization involves labeling different speakers in an audio recording. This is essential for applications where distinguishing between multiple speakers is crucial, such as meeting transcription.
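To make the four methods above concrete, here is a minimal sketch of how one annotated recording might be stored and queried. The `Segment` class and its field names are illustrative assumptions, not a standard schema or any particular tool's format.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Segment:
    """One annotated stretch of audio (hypothetical schema)."""
    start: float          # seconds from the beginning of the recording
    end: float            # seconds; expected to be greater than start
    speaker: str          # diarization label, e.g. "spk_0"
    transcript: str       # orthographic transcription
    phonemes: list[str] = field(default_factory=list)  # phonetic annotation
    emotion: str = "neutral"                            # emotion/sentiment label


def talk_time(segments: list[Segment]) -> dict[str, float]:
    """Sum the annotated duration, in seconds, for each speaker label."""
    totals: dict[str, float] = defaultdict(float)
    for seg in segments:
        totals[seg.speaker] += seg.end - seg.start
    return dict(totals)


segments = [
    Segment(0.0, 1.5, "spk_0", "hello there",
            ["HH", "AH", "L", "OW", "DH", "EH", "R"], "happy"),
    Segment(1.5, 2.5, "spk_1", "hi", ["HH", "AY"]),
    Segment(2.5, 4.0, "spk_0", "how are you"),
]
print(talk_time(segments))
```

Keeping all four annotation layers on the same time-stamped segment is only one possible design; real projects often store each layer in a separate tier or file.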

Challenges in Speech Data Annotation

1. Accurate Annotation: Ensuring accuracy in annotations is a major challenge. Human annotators must be well-trained and consistent to avoid introducing errors into the dataset.

2. Diverse Accents and Dialects: Speech data can vary significantly in terms of accents and dialects. Annotating diverse linguistic nuances poses challenges in creating a comprehensive and representative dataset.

3. Subjectivity in Emotion Annotation: Emotion annotation is subjective and can vary between annotators. Developing standardized guidelines and training annotators for emotional context becomes imperative.
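One common way to quantify the annotator disagreement described above is Cohen's kappa, which compares observed agreement between two annotators with the agreement expected by chance. The sketch below is a plain-Python illustration; the label names and the sample data are made up for the example.

```python
from collections import Counter


def cohen_kappa(labels_a: list[str], labels_b: list[str]) -> float:
    """Cohen's kappa for two annotators labelling the same clips."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty set of clips")
    n = len(labels_a)
    # Fraction of clips where the two annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement, from each annotator's own label frequencies.
    counts_a = Counter(labels_a)
    counts_b = Counter(labels_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical emotion labels from two annotators for four clips.
annotator_1 = ["happy", "sad", "angry", "happy"]
annotator_2 = ["happy", "sad", "happy", "happy"]
print(round(cohen_kappa(annotator_1, annotator_2), 3))
```

A kappa near 1 indicates strong agreement, while a value near 0 means the annotators agree no more often than chance would predict, which is a signal that the guidelines need tightening.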

Emerging Trends in Speech Data Annotation

1. Transfer Learning for Speech Annotation: Transfer learning techniques are increasingly being applied to speech data annotation, leveraging pre-trained models to improve efficiency and reduce the need for extensive labeled data.

2. Multimodal Annotation: Integrating speech data annotation with other modalities such as video and text is becoming more common, allowing for a richer understanding of context and meaning.

3. Crowdsourcing and Collaborative Annotation Platforms: Crowdsourcing platforms and collaborative annotation tools are gaining popularity, enabling the collective efforts of annotators worldwide to annotate large datasets efficiently.

Wrapping it up!

In conclusion, speech data annotation is a cornerstone in the development of advanced AI and ML models, particularly in the domain of speech recognition and natural language understanding. The ongoing challenges in accuracy, diversity, and subjectivity necessitate continuous research and innovation in annotation methodologies. As technology evolves, so too will the methods and tools used in speech data annotation, paving the way for more accurate, efficient, and context-aware AI applications.

At ProtoTech Solutions, we offer cutting-edge Data Annotation Services, leveraging our expertise to annotate diverse datasets for AI/ML training. Our precise annotations enhance model accuracy, enabling businesses to unlock the full potential of machine-learning applications. Trust ProtoTech for meticulous data labeling and accelerated AI innovation.

#speech data annotation#Speech data#artificial intelligence (AI)#machine learning (ML)#speech#Data Annotation Services#labeling services for ml#ai/ml annotation#annotation solution for ml#data annotation machine learning services#data annotation services for ml#data annotation and labeling services#data annotation services for machine learning#ai data labeling solution provider#ai annotation and data labelling services#data labelling#ai data labeling#ai data annotation

0 notes

Text

Join our global work-from-home opportunities: varied projects, from short surveys to long-term endeavors. Utilize your social media interest, mobile device proficiency, linguistics degree, online research skills, or passion for multimedia. Find the perfect fit among our diverse options.

#image collection jobs#Data Annotation Jobs#Freelance jobs#Text Data Collection job#Speech Data Collection Jobs#Translation jobs online#Data annotation specialist#online data annotation jobs#image annotation online jobs

0 notes

Text

https://justpaste.it/cg903

0 notes

Text

Annotated Text-to-Speech Datasets for Deep Learning Applications

Introduction:

Text-to-speech (TTS) technology has undergone significant advancements due to developments in deep learning, allowing machines to produce speech that closely resembles the human voice with impressive precision. The success of any TTS system is fundamentally dependent on high-quality, annotated datasets that train models to comprehend and replicate natural-sounding speech. This article delves into the significance of annotated TTS datasets, their various applications, and how organizations can utilize them to create innovative AI solutions.

The Importance of Annotated Datasets in TTS

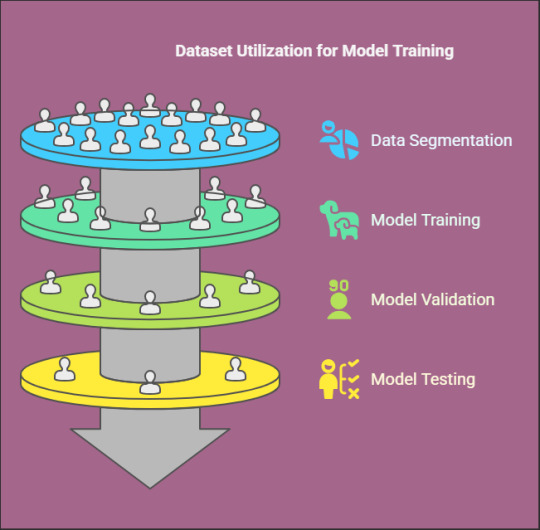

Annotated TTS datasets are composed of text transcripts aligned with corresponding audio recordings, along with supplementary metadata such as phonetic transcriptions, speaker identities, and prosodic information. These datasets form the essential framework for deep learning models by supplying structured, labeled data that enhances the training process. The quality and variety of these annotations play a crucial role in the model’s capability to produce realistic speech.

Essential Elements of an Annotated TTS Dataset

Text Transcriptions – Precise, time-synchronized text that corresponds to the speech audio.

Phonetic Labels – Annotations at the phoneme level to enhance pronunciation accuracy.

Speaker Information – Identifiers for datasets with multiple speakers to improve voice variety.

Prosody Features – Indicators of pitch, intonation, and stress to enhance expressiveness.

Background Noise Labels – Annotations for both clean and noisy audio samples to ensure robust model training.
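As an illustration of the elements listed above, the following sketch models a single dataset entry and checks that its word-level timestamps are consistent with the transcript and the audio length. The `TTSEntry` and `WordAlignment` classes, their field names, and the file path are hypothetical, chosen for this example rather than taken from any specific dataset format.

```python
from dataclasses import dataclass


@dataclass
class WordAlignment:
    word: str
    start: float  # seconds
    end: float    # seconds


@dataclass
class TTSEntry:
    """One utterance in a hypothetical annotated TTS dataset."""
    audio_path: str
    duration: float              # length of the audio file in seconds
    text: str                    # text transcription
    speaker_id: str              # speaker information
    words: list[WordAlignment]   # time-synchronized word alignment
    noisy: bool = False          # background noise label


def alignment_problems(entry: TTSEntry) -> list[str]:
    """Return human-readable problems found in the entry's alignment."""
    problems = []
    if [w.word for w in entry.words] != entry.text.split():
        problems.append("aligned words do not match the transcript")
    previous_end = 0.0
    for w in entry.words:
        if w.start < previous_end:
            problems.append(f"'{w.word}' starts before the previous word ends")
        if w.end <= w.start:
            problems.append(f"'{w.word}' has a non-positive duration")
        previous_end = max(previous_end, w.end)
    if previous_end > entry.duration:
        problems.append("alignment runs past the end of the audio")
    return problems


good = TTSEntry("clips/0001.wav", 1.2, "good morning", "spk_07",
                [WordAlignment("good", 0.10, 0.42),
                 WordAlignment("morning", 0.45, 0.98)])
bad = TTSEntry("clips/0002.wav", 1.2, "good morning", "spk_07",
               [WordAlignment("good", 0.10, 0.42),
                WordAlignment("morning", 0.40, 0.98)])
print(alignment_problems(good))
print(alignment_problems(bad))
```

Prosody features and phoneme labels are omitted here to keep the sketch short; they could be added as further fields on each `WordAlignment`.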

Uses of Annotated TTS Datasets

The influence of annotated TTS datasets spans multiple sectors:

Virtual Assistants: AI-powered voice assistants such as Siri, Google Assistant, and Alexa depend on high-quality TTS datasets for seamless interactions.

Audiobooks & Content Narration: Automated voice synthesis is utilized in e-learning platforms and digital storytelling.

Accessibility Solutions: Screen readers designed for visually impaired users benefit from well-annotated datasets.

Customer Support Automation: AI-driven chatbots and IVR systems employ TTS to improve user experience.

Localization and Multilingual Speech Synthesis: Annotated datasets in various languages facilitate the development of global text-to-speech (TTS) applications.

Challenges in TTS Dataset Annotation

Although annotated datasets are essential, the creation of high-quality TTS datasets presents several challenges:

Data Quality and Consistency: Maintaining high standards for recordings and ensuring accurate annotations throughout extensive datasets.

Speaker Diversity: Incorporating a broad spectrum of voices, accents, and speaking styles.

Alignment and Synchronization: Accurately aligning text transcriptions with corresponding speech audio.

Scalability: Effectively annotating large datasets to support deep learning initiatives.

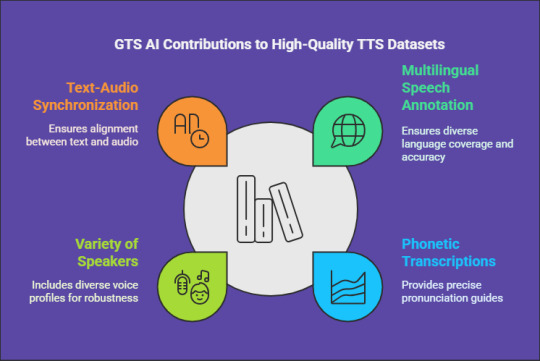

How GTS Can Assist with High-Quality Text Data Collection

For organizations and researchers in need of dependable TTS datasets, GTS AI provides extensive text data collection services. With a focus on multilingual speech annotation, GTS delivers high-quality, well-organized datasets specifically designed for deep learning applications. Their offerings guarantee precise phonetic transcriptions, a variety of speakers, and flawless synchronization between text and audio.

Conclusion

Annotated text-to-speech datasets are vital for the advancement of high-performance speech synthesis models. As deep learning progresses, the availability of high-quality, diverse, and meticulously annotated datasets will propel the next wave of AI-driven voice applications. Organizations and developers can utilize professional annotation services, such as those provided by Globose Technology Solutions (GTS), to expedite their AI initiatives and enhance their TTS solutions.

0 notes

Text

The revolution in communication brought about by text-to-speech technology is a testament to the power of AI in bridging human-computer interaction gaps. The key to unlocking this potential lies in the development of comprehensive, diverse, and high-quality text-to-speech datasets.

0 notes

Text

some related doodles with thinking about how orion helps out megatronus when it comes to writing things like his speeches and manifestos..

i like to think that despite the whole robots and use of datapads and keyboards and stuff, orion still likes to scribble and write in the margins of things like poems and novels. he works with lots of data all the time, cataloguing, organizing, etc. thinking of those as always typed out for efficiency's sake because to him, even if he is earnest and passionate about it, it is still something he considers as work first and foremost. for things he is more personally invested in and values, he chooses to scribble in between those margins. words and symbols personalized for the text.

his diligence and thoroughness with each detail and comment is something born out of a deep love, care and respect for megatronus' work as well as what he fights for.

thinking about megatron hearing mostly criticisms from orion's initial reads but when he goes over to look at /all/ of the annotations theres actually a lot of comments that goes over a lot of how much orion agrees with him, is enlightened and greatly admires his work.

#transformers prime#transformers#transformers exodus#tfp megatron#megop#tfp megop#tfp optimus prime#orion pax#tfp orion pax

1K notes


Text

Pantima: Global Data Collection & Annotation Platform

Explore Pantima, your trusted global data collection and annotation solution. Our platform seamlessly integrates technology with a diverse crowd of contributors, linguists, annotators, scientists, and engineers. Empower your AI initiatives with precise and reliable data annotation. Partner with Pantima for cutting-edge advancements in artificial intelligence.

0 notes

Note

my friend is somewhat considering majoring in linguistics but neither of us know a ton about what you do in linguistics so could you share a little of what you know?

Of course! Obviously, there are different paths you can take within linguistics, and this is just my program, but some basics:

We learn the IPA (these /sɪmbəlz/) and how to transcribe speech. We learn about phonemes and allophones. We learn how speech is produced, how the vocal tract functions. We learn how to analyze data sets of words/phrases from languages to determine what each segment means based on the given translation (i can give an example if you like). We learn glossing (breaking down a word/utterance into morphemes and translating it morpheme by morpheme--can also give examples). We learn to analyze and annotate the grammar of a sentence--what is an object, subject, agent, patient, predicate, referring phrase, peripheral argument, subordinate clause, etc (again--have examples). Essentially, how does language function to make and communicate meaning? What IS language?

Then you can get a little more specialized. You can go a more sociolinguistic path, which is looking at how language is used socially--do women use these words more than men? How do queer people speak (accent, diction, etc)?

Or you could focus on historical linguistics and look at how languages change, or how languages might've been connected/in contact in the past. Or focus on documenting language, working with databases and on revitalization.

Many many more paths to choose from, as language is in everything! Linguistics also overlaps pretty heavily at some points with the speech and hearing sciences (audiology, speech language pathology), so you'll probably pick up a thing or two from that as well.

Hopefully that's comprehensive and gives you a bit of an idea? I'm more than happy to talk more on any of it :)

#quil's queries#rosy-cozy-radio#again. this is just my experience in my linguistics program#oh! idk if this is a requirement elsewhere but in my program you're also required to take 2 years of a foreign language#any language is fine the point is just exposure to non-english languages#for reasons that are probably obvious

6 notes


Text

Summary of Read-It-Later App Alternatives Following Pocket's Shutdown

This document summarizes several read-it-later applications as alternatives to Pocket, which Mozilla announced in May 2025 that it would shut down on July 8, 2025. Pocket users have until October 8, 2025, to export their data. The alternatives offer varying features and pricing models:

Paid Options with Significant Features:

Matter: Offers AI-powered co-reading, podcast transcription, and reading speed adjustments. A yearly subscription costs $79.99, but a discount is available for Pocket users.

Instapaper: A long-standing app with iOS and Android support. A yearly premium subscription ($59.99) unlocks features like note-taking, text-to-speech, and full-text search. Pocket users get a three-month free trial upon importing their data.

Raindrop.io: Primarily a bookmark manager, but also functions as a read-it-later app. A yearly subscription ($33) provides AI-powered organization, full-text search, and other features.

Plinky: Allows saving and categorizing various link types. A pro subscription ($3.99/month, $39.99/year, or $159.99 one-time) removes link, folder, and tag limits. A 50% discount on the Pro tier was offered until the end of May 2025.

Paperspan: A simple app with a reading list, note-taking, and text-to-speech. A monthly subscription ($8.99) unlocks advanced search and playlist creation. Note that this app hasn't been updated recently.

Readwise Reader: Integrates with Readwise for annotation features, offline search, and an AI assistant. Requires a $9.99/month Readwise subscription after a 30-day trial. Offers Pocket archive import.

DoubleMemory: An Apple-focused app with offline reading and search. Offers monthly ($3.99) and annual ($17.99) subscriptions.

Recall: Uses AI to summarize content and categorize it. A free plan allows up to 10 AI-generated summaries; a $7/month plan offers unlimited summaries and other features.

Wallabag: An open-source app available as a €11/year hosted subscription. Offers reader mode and supports importing from other services.

Free or Primarily Free Options:

Readeck: An open-source web app (with a hosted version planned for 2025) for organizing web content. A mobile app is also under development.

Obsidian Web Clipper: A free open-source browser extension for saving web pages to the Obsidian note-taking app.

Karakeep: An open-source bookmarking app with AI-powered tagging.

Dewey: A "save everything" app with organizational tools and AI bulk tagging. Paid plans start at $7.50/month.

The document notes that this list is not exhaustive and more tools may be added.

This information is current as of the date of the document. Since the document's date is not specified, it is advisable to verify the pricing, features, and availability of these apps with their respective websites before making a decision.

#read-it-later#application#Android apps#alternatives#Pocket#Android OS app#shutdown#bookmark 🔖#note taking#InstaPaper#PaperSpan#RainDrop 🌧️

2 notes


Text

This day in history

On July 14, I'm giving the closing keynote for the fifteenth HACKERS ON PLANET EARTH, in QUEENS, NY. Happy Bastille Day! On July 20, I'm appearing in CHICAGO at Exile in Bookville.

#20yrsago ESC-key creator dies https://www.nytimes.com/2004/06/25/us/robert-w-bemer-84-pioneer-in-computer-programming.html

#20yrsago Orrin Hatch criminalizes the iPod https://web.archive.org/web/20040627001053/https://www.eff.org/IP/Apple_Complaint.php

#20yrsago Schneier: More police power = less security https://www.schneier.com/essays/archives/2004/06/unchecked_police_and.html

#20yrsago Ernest Miller savages Orrin Hatch’s grotesque new law https://corante.com/importance/the-obsessively-annotated-introduction-to-the-induce-act/

#20yrsago MP candidates on the “Canadian DMCA” https://web.archive.org/web/20040812131225/https://ddll.sdf1.net/archives/002667.html

#15yrsago How the Canadian copyright lobby uses fakes, fronts, and circular references to subvert the debate on copyright https://www.michaelgeist.ca/2009/06/copyright-policy-laundering/

#15yrsago Julian Comstock: Robert Charles Wilson’s masterful novel of a post-collapse feudal America: “If Jules Verne had read Karl Marx, then sat down to write The Decline and Fall of the Roman Empire“ https://memex.craphound.com/2009/06/24/julian-comstock-robert-charles-wilsons-masterful-novel-of-a-post-collapse-feudal-america-if-jules-verne-had-read-karl-marx-then-sat-down-to-write-the-decline-and-fall-of-the-roman-empire/

#15yrsago Health insurance versus health https://web.archive.org/web/20090709060418/http://voices.washingtonpost.com/ezra-klein/2009/06/the_truth_about_the_insurance.html

#15yrsago Guards are the worst prison-rapists https://web.archive.org/web/20090624183205/http://nprec.us/publication/report/executive_summary.php

#15yrsago Great Firewall of Australia to block video games unsuitable for people under 15 https://www.smh.com.au/technology/web-filters-to-censor-video-games-20090625-cxrx.html

#10yrsago Thomas Piketty’s Capital in the 21st Century https://memex.craphound.com/2014/06/24/thomas-pikettys-capital-in-the-21st-century/

#10yrsago How accounting forced transparency on the aristocracy and changed the world https://www.bostonglobe.com/ideas/2014/06/07/the-vanished-grandeur-accounting/3zcbRBoPDNIryWyNYNMvbO/story.html

#5yrsago America’s super-rich write to Democratic presidential hopefuls, demanding a wealth tax https://medium.com/@letterforawealthtax/an-open-letter-to-the-2020-presidential-candidates-its-time-to-tax-us-more-6eb3a548b2fe

#5yrsago You can’t recycle your way out of climate change https://www.vox.com/the-highlight/2019/5/28/18629833/climate-change-2019-green-new-deal

#5yrsago US election security: still a dumpster fire https://www.wired.com/story/election-security-2020/

#5yrsago “I Shouldn’t Have to Publish This in The New York Times”: my op-ed from the future https://www.nytimes.com/2019/06/24/opinion/future-free-speech-social-media-platforms.html

#5yrsago Bernie Sanders will use a tax on Wall Street speculators to wipe out $1.6 trillion in US student debt https://berniesanders.com/issues/free-college-cancel-debt/

#5yrsago Mandatory childbirth: how the anti-abortion crusade masks cruelty to women in the “sacralizing of fetuses” https://www.nytimes.com/2019/06/23/opinion/anti-abortion-history.html

#5yrsago The internet has become a “low-trust society” https://www.wired.com/story/internet-made-dupes-cynics-of-us-all/

#5yrsago “PM for a day”: dissident Tories plan to bring down the government the day after Boris Johnson becomes Prime Minister https://www.bbc.com/news/uk-politics-48742881

#5yrsago Report: UK “Ransomware consultants” Red Mosquito promise to unlock your data, but they’re just paying off the criminals (and charging you a markup!) https://www.propublica.org/article/sting-catches-another-ransomware-firm-red-mosquito-negotiating-with-hackers

#5yrsago Lessons from Microsoft’s antitrust adventure for today’s Big Tech giants https://web.archive.org/web/20190622131345/https://www.sfgate.com/business/article/Microsoft-s-missteps-offer-antitrust-lessons-for-14030092.php

Support me this summer on the Clarion Write-A-Thon and help raise money for the Clarion Science Fiction and Fantasy Writers' Workshop!

5 notes


Text

“My sisters have died,” the young boy sobbed, chest heaving, as he wailed into the sky. “Oh, my sisters.” As Israel began airstrikes on Gaza following the Oct. 7 Hamas terrorist attack, posts by verified accounts on X, the social media platform formerly called Twitter, were being transmitted around the world. The heart-wrenching video of the grieving boy, viewed more than 600,000 times, was posted by an account named “#FreePalestine 🇵🇸.” The account had received X’s “verified” badge just hours before posting the tweet that went viral.

Days later, a video posted by an account calling itself “ISRAEL MOSSAD,” another “verified” account, this time bearing the logo of Israel’s national intelligence agency, claimed to show Israel’s advanced air defense technology. The post, viewed nearly 6 million times, showed a volley of rockets exploding in the night sky with the caption: “The New Iron beam in full display.”

And following an explosion on Oct. 14 outside the Al-Ahli Hospital in Gaza where civilians were killed, the verified account of the Hamas-affiliated news organization Quds News Network posted a screenshot from Facebook claiming to show the Israel Defense Forces declaring their intent to strike the hospital before the explosion. It was seen more than half a million times.

None of these posts depicted real events from the conflict. The video of the grieving boy was from at least nine years ago and was taken in Syria, not Gaza. The clip of rockets exploding was from a military simulation video game. And the Facebook screenshot was from a now-deleted Facebook page not affiliated with Israel or the IDF.

Just days before its viral tweet, the #FreePalestine 🇵🇸 account had a blue verification check under a different name: “Taliban Public Relations Department, Commentary.” It changed its name back after the tweet and was reverified within a week. Despite their blue check badges, neither Taliban Public Relations Department, Commentary nor ISRAEL MOSSAD (now “Mossad Commentary”) have any real-life connection to either organization. Their posts were eventually annotated by Community Notes, X’s crowdsourced fact-checking system, but these clarifications garnered about 900,000 views — less than 15% of what the two viral posts totaled. ISRAEL MOSSAD deleted its post in late November. The Facebook screenshot, posted by the account of the Quds News Network, still doesn’t have a clarifying note. Mossad Commentary and the Quds News Network did not respond to direct messages seeking comment; Taliban Public Relations Department, Commentary did not respond to public mentions asking for comment.

An investigation by ProPublica and Columbia University’s Tow Center for Digital Journalism shows how false claims based on out-of-context, outdated or manipulated media have proliferated on X during the first month of the Israel-Hamas conflict. The organizations looked at over 200 distinct claims that independent fact-checks determined to be misleading, and searched for posts by verified accounts that perpetuated them, identifying 2,000 total tweets. The tweets, collectively viewed half a billion times, were analyzed alongside account and Community Notes data.

[...]

False claims that go viral are frequently repeated by multiple accounts and often take the form of decontextualized old footage. One of the most widespread false claims, that Qatar was threatening to stop supplying natural gas to the world unless Israel halted its airstrikes, was repeated by nearly 70 verified accounts. This claim, which was based on a false description of an unrelated 2017 speech by the Qatari emir to bolster its credibility, received over 15 million views collectively, with a single post by Dominick McGee (@dom_lucre) amassing more than 9 million views. McGee is popular in the QAnon community and is an election denier with nearly 800,000 followers who was suspended from X for sharing child exploitation imagery in July 2023. Shortly after, X reversed the suspension. McGee denied that he had shared the image when reached by direct message on X, claiming instead that it was “an article touching it.”

Another account, using the pseudonym Sprinter, shared the same false claim about Qatar in a post that was viewed over 80,000 times. These were not the only false posts made by either account. McGee shared six debunked claims about the conflict in our dataset; Sprinter shared 20.

6 notes


Note

There’s a string of light pink pearls left in Stories’ chamber- the data inside each pearl is a different Ancient folk tale, all annotated in detail by the owner of the pearls.

The annotations are familiar by mannerism- speech patterns Stories could swear he’s seen before. Most of the annotations date back to when the fourth generation of iterators were starting to be built.

13ES: Hmm... some of these I've heard, but there's a few I haven't. Many of these tales seem like campfire stories. Some of them seem made up, while others could be very much true.

Interesting...

2 notes


Text

AI Boom Boosts Demand for Domain-Specific Datasets in Finance, Retail, and Healthcare

Market Overview

The AI training dataset market is rapidly evolving as artificial intelligence (AI) technologies continue to transform industries across the globe. These datasets—critical for teaching algorithms to interpret, analyze, and act on data—are becoming the cornerstone of AI development. Whether in self-driving cars or chatbots, AI models are only as good as the data they are trained on. This dependency on quality and diverse datasets is pushing demand across sectors such as automotive, healthcare, BFSI, and more.

In a world increasingly driven by automation and smart technology, the AI training dataset market is playing a pivotal role by providing the foundational data necessary for machine learning models. As organizations race toward digital transformation, the importance of accurate, labeled, and high-volume data cannot be overstated.

Click to Request a Sample of this Report for Additional Market Insights: https://www.globalinsightservices.com/request-sample/?id=GIS24749

Market Size, Share & Demand Analysis

The AI training dataset market is experiencing robust growth and is expected to witness significant expansion by 2034. From data types like text, image, video, and audio to specialized sensor and time series data, demand is booming. Various learning types—including supervised, unsupervised, reinforcement, and semi-supervised learning—require tailor-made datasets to enhance training performance.

Additionally, with advancements in speech recognition, robotics, machine translation, and computer vision, demand for diverse datasets is escalating. The need for labeled and annotated data is especially high in applications like healthcare diagnostics, fraud detection, virtual assistants, and autonomous vehicles.

Companies are now heavily investing in high-quality data for model training, which is contributing to the growing market share of data services such as annotation, cleaning, augmentation, and integration. This surge in demand reflects the rising need for training datasets that align with real-world applications and business goals.

Market Dynamics

Several factors are driving the AI training dataset market, including the rising adoption of AI across enterprises and the increased complexity of AI models. As machine learning algorithms become more intricate, the volume and quality of required training data increase substantially.

On the supply side, the emergence of automated data labeling tools, open-source data platforms, and crowd-sourced annotation services is streamlining data preparation.

However, challenges such as data privacy, lack of standardization, and high costs associated with data acquisition and labeling still pose hurdles. Despite this, the market continues to thrive thanks to technological innovations and growing AI integration in sectors like healthcare, retail, telecommunications, and manufacturing.

Key Players Analysis

Key companies driving the AI training dataset market include Figure Eight (Appen), Scale AI, Lionbridge AI, Amazon Web Services, Google, and Microsoft. These players offer turnkey and custom solutions to cater to enterprise-specific needs.

Their offerings cover everything from data collection and preprocessing to validation and deployment. Additionally, major players are investing in AI-focused subsidiaries and platforms that provide end-to-end data services, which strengthens their market position and improves customer retention.

These companies are also working on automating annotation processes and offering hybrid deployment options—both cloud-based and on-premises—to meet varying business needs.

Regional Analysis

North America currently dominates the AI training dataset market, primarily due to its advanced technological infrastructure and early adoption of AI in sectors like automotive and finance. The U.S. holds a major market share, with tech giants and startups contributing heavily to innovation in this space.

Europe follows, with strong growth fueled by its emphasis on ethical AI, data privacy regulations, and smart city projects. Meanwhile, the Asia-Pacific region is emerging as a promising market due to increasing digitization in countries like China, India, and Japan, supported by government initiatives and growing investments in AI R&D.

Recent News & Developments

Recent years have seen several strategic developments in the AI training dataset market. Appen launched a new data annotation platform with integrated machine learning support, while Scale AI raised significant funding to enhance its data labeling infrastructure.

Google and Microsoft have also expanded their cloud-based dataset services to support industry-specific use cases. Moreover, the integration of synthetic data generation is gaining traction, as companies look for cost-effective ways to scale model training while preserving privacy.

Browse Full Report @ https://www.globalinsightservices.com/reports/ai-training-dataset-market/

Scope of the Report

The AI training dataset market is vast and expanding, covering diverse components like data security, analytics, storage, and management. With deployment models ranging from cloud and on-premises to hybrid solutions, companies have more flexibility than ever before.

From turnkey to custom and open-source solutions, the scope of services is continuously broadening. The application of AI training datasets spans predictive maintenance, personalized marketing, and beyond, making it a critical enabler of digital transformation across industries.

As innovation continues and AI permeates deeper into business processes, the AI training dataset market is expected to play a foundational role in the future of intelligent technologies.

The Transition from Data to Diagnosis: The Impact of Healthcare Datasets on Machine Learning

Introduction:

The healthcare sector is experiencing a significant digital transformation, with healthcare datasets for machine learning (ML) playing a crucial role in enhancing patient care. From forecasting illnesses to tailoring treatment strategies, ML algorithms depend fundamentally on one essential factor: data. High-quality healthcare datasets form the foundation of these advancements, supporting more precise diagnoses and better patient outcomes.

In this article, we will examine how healthcare datasets empower machine learning and propel progress in medical diagnosis and treatment.

The Importance of Data in Machine Learning

The effectiveness of machine learning models is directly linked to the quality of the data used for training. In the healthcare domain, datasets serve as the essential input for algorithms to identify patterns, generate predictions, and provide valuable insights. These datasets are available in various formats, including:

Electronic Health Records (EHRs): Detailed patient histories encompassing diagnoses, treatments, and laboratory results.

Medical Imaging Data: CT scans, MRIs, and X-rays utilized for identifying conditions such as cancer and fractures.

Genomic Data: DNA sequences that support personalized medicine and the exploration of genetic disorders.

Wearable Device Data: Real-time information such as heart rate and activity levels for the management of chronic conditions.

The Contribution of Healthcare Datasets to Machine Learning

Enhanced Diagnostic Accuracy

Healthcare datasets empower ML algorithms to identify diseases with remarkable accuracy. For example:

Radiology: Labeled medical images help ML models learn to recognize tumors, fractures, and other irregularities, which can speed up review compared with conventional techniques.

Pathology: The integration of digital pathology slides with datasets facilitates automated analysis, minimizing the potential for human error.

Predictive Analytics

Models developed using healthcare datasets can flag potential health concerns before they occur:

Chronic Diseases: Facilitating the early detection of risk factors associated with conditions such as diabetes or cardiovascular disease.

Hospital Readmissions: Assessing which patients may be prone to readmission, thereby allowing for proactive care measures.

Personalized Treatment

Genomic information enables machine learning models to suggest customized treatments based on unique genetic characteristics. For instance:

Cancer Therapy: Detecting mutations that qualify patients for specific targeted therapies.

Pharmacogenomics: Forecasting patient responses to particular medications.

Operational Efficiency

In addition to clinical uses, healthcare datasets enhance the efficiency of hospital operations:

Resource Allocation: Streamlining staff scheduling and optimizing the use of equipment.

Fraud Detection: Recognizing irregularities in billing practices.

Challenges in Utilizing Healthcare Datasets

Despite their significant potential, the application of healthcare datasets presents several challenges:

Data Privacy: Maintaining adherence to regulations such as HIPAA and GDPR.

Data Quality: Managing issues related to incomplete, inconsistent, or noisy data.

Bias in Data: Tackling biases that may result in unfair outcomes.

Integration: Combining datasets from various sources into a unified format.

The Role of Curated Datasets

Curated healthcare datasets are preprocessed and annotated, saving researchers time and effort. They ensure:

High Accuracy: Data is cleaned and validated for ML readiness.

Consistency: Standardized formats make integration seamless.

Scalability: Large datasets support robust model training.

Organizations that specialize in healthcare data provide high-quality datasets tailored to the unique needs of healthcare AI projects. Their expertise helps ensure that your ML models are built on a solid foundation of reliable data.

Real-World Applications

Healthcare datasets are already driving transformative ML applications:

Sepsis Prediction: Predictive models analyze EHR data to detect sepsis in its early stages.

Mental Health: Sentiment analysis on text data from therapy sessions aids in diagnosing mental health conditions.

Final Thoughts

The power of healthcare datasets in machine learning lies in their ability to turn raw data into actionable insights. From diagnosis to treatment, these datasets are transforming how we approach healthcare. By addressing the challenges above and leveraging curated datasets from trusted providers such as Globose Technology Solutions Healthcare, organizations can open up substantial room for innovation.

As we continue to integrate AI into medicine, one thing is clear: the journey from data to diagnosis is just the beginning of a new era in healthcare.

How to Develop a Video Text-to-Speech Dataset for Deep Learning

Introduction:

In the swiftly advancing domain of deep learning, video-based Text-to-Speech (TTS) technology is pivotal in improving speech synthesis and facilitating human-computer interaction. A well-organized dataset serves as the cornerstone of an effective TTS model, guaranteeing precision, naturalness, and flexibility. This article will outline the systematic approach to creating a high-quality video TTS dataset for deep learning purposes.

Recognizing the Significance of a Video TTS Dataset

A video text-to-speech dataset comprises video recordings that are matched with transcribed text and the corresponding speech audio. Such datasets are vital for training models that produce natural and contextually relevant synthetic speech. These models find applications in various areas, including voice assistants, automated dubbing, and real-time language translation.

Establishing Dataset Specifications

Prior to initiating data collection, it is essential to delineate the dataset’s scope and specifications. Important considerations include:

Language Coverage: Choose one or more languages relevant to your application.

Speaker Diversity: Incorporate a range of speakers varying in age, gender, and accents.

Audio Quality: Ensure recordings are of high fidelity with minimal background interference.

Sentence Variability: Gather a wide array of text samples, encompassing formal, informal, and conversational speech.

Data Collection Methodology

a. Choosing Video Sources

To create a comprehensive dataset, videos can be sourced from:

Licensed datasets and public domain archives

Crowdsourced recordings featuring diverse speakers

Custom recordings conducted in a controlled setting

It is imperative to secure the necessary rights and permissions for utilizing any third-party content.

b. Audio Extraction and Preprocessing

After collecting the videos, extract the speech audio using a tool such as FFmpeg. The preprocessing steps include:

Noise Reduction: Eliminate background noise to enhance speech clarity.

Volume Normalization: Maintain consistent audio levels.

Segmentation: Divide lengthy recordings into smaller, sentence-level segments.
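
The extraction and volume normalization steps above can be sketched in a few lines of Python. This is a minimal illustration, not a production pipeline: the function names, the 22,050 Hz default sample rate, and the 0.95 target peak are assumptions chosen for the example, and simple peak normalization stands in for whatever loudness standard a project actually adopts.

```python
from typing import List


def build_extract_command(video_path: str, wav_path: str,
                          sample_rate: int = 22050) -> List[str]:
    """Build an FFmpeg command that writes the audio track as mono 16-bit WAV.

    The function name and default sample rate are illustrative assumptions.
    """
    return [
        "ffmpeg",
        "-i", video_path,          # input video file
        "-vn",                     # drop the video stream
        "-ac", "1",                # downmix to a single channel
        "-ar", str(sample_rate),   # resample to the target rate
        "-c:a", "pcm_s16le",       # encode as 16-bit PCM
        wav_path,
    ]


def peak_normalize(samples: List[float], target_peak: float = 0.95) -> List[float]:
    """Scale samples so the largest absolute value equals target_peak."""
    peak = max((abs(s) for s in samples), default=0.0)
    if peak == 0.0:
        return list(samples)  # empty or silent clip: nothing to scale
    gain = target_peak / peak
    return [s * gain for s in samples]
```

On a machine where FFmpeg is installed, the command list can be passed to `subprocess.run(cmd, check=True)`. Noise reduction and sentence-level segmentation are left out here because they usually rely on dedicated audio libraries.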

Text Alignment and Transcription

For deep learning models to function optimally, it is essential that transcriptions are both precise and synchronized with the corresponding speech. The following methods can be employed:

Automatic Speech Recognition (ASR): Implement ASR systems to produce preliminary transcriptions.

Manual Verification: Enhance accuracy through a thorough review of the transcriptions by human experts.

Timestamp Alignment: Confirm that each word is accurately associated with its respective spoken timestamp.
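
One way to make the timestamp check concrete is a small validation pass over word-level alignments. The sketch below assumes each word carries a start and end time in seconds; the `WordAlignment` class and `find_alignment_problems` function are hypothetical names introduced for illustration, not part of any particular aligner.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class WordAlignment:
    word: str
    start: float  # seconds from the beginning of the clip
    end: float    # seconds from the beginning of the clip


def find_alignment_problems(words: List[WordAlignment],
                            clip_duration: float) -> List[str]:
    """Return human-readable descriptions of suspicious timestamps."""
    problems = []
    previous_end = 0.0
    for index, item in enumerate(words):
        if item.end <= item.start:
            problems.append(f"{index}: '{item.word}' has non-positive duration")
        if item.start < previous_end:
            problems.append(f"{index}: '{item.word}' overlaps the previous word")
        if item.end > clip_duration:
            problems.append(f"{index}: '{item.word}' ends after the clip")
        previous_end = max(previous_end, item.end)
    return problems
```

An empty result means the timestamps are at least internally consistent; it does not prove that each word matches the audio, which is why manual verification remains part of the process.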

Data Annotation and Labeling

Incorporating metadata significantly improves the dataset's functionality. Important annotations include:

Speaker Identity: Identify each speaker to support speaker-adaptive TTS models.

Emotion Tags: Specify tone and sentiment to facilitate expressive speech synthesis.

Noise Labels: Identify background noise to assist in developing noise-robust models.

Dataset Formatting and Storage

To ensure efficient model training, it is crucial to organize the dataset in a systematic manner:

Audio Files: Save speech recordings in WAV or FLAC formats.

Transcriptions: Keep aligned text files in JSON or CSV formats.

Metadata Files: Provide speaker information and timestamps for reference.
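
As a rough illustration of this layout, the following sketch serializes the same entries to both JSON and CSV using only the Python standard library. The field names (`audio_path`, `text`, `speaker_id`, `start`, `end`) and the sample entry are assumptions made for the example; real projects should use whatever schema their training toolkit expects.

```python
import csv
import io
import json
from typing import Dict, List

# Hypothetical manifest schema, chosen only for this example.
FIELDS = ["audio_path", "text", "speaker_id", "start", "end"]

example_entries = [
    {
        "audio_path": "audio/spk01_0001.wav",
        "text": "Hello there.",
        "speaker_id": "spk01",
        "start": 0.0,
        "end": 1.4,
    },
]


def to_json_manifest(entries: List[Dict]) -> str:
    """Serialize entries as pretty-printed JSON, keeping non-ASCII text readable."""
    return json.dumps(entries, indent=2, ensure_ascii=False)


def to_csv_manifest(entries: List[Dict]) -> str:
    """Serialize entries as CSV with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(entries)
    return buffer.getvalue()
```

JSON preserves numeric types and nests well when word-level timestamps are added later, while CSV is easier to inspect in a spreadsheet; keeping both from one source list avoids the two drifting apart.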

Quality Assurance and Data Augmentation

Prior to finalizing the dataset, it is important to perform comprehensive quality assessments:

Verify Alignment: Ensure that text and speech are properly synchronized.

Assess Audio Clarity: Confirm that recordings adhere to established quality standards.

Augmentation: Implement techniques such as pitch shifting, speed variation, and noise addition to enhance model robustness.
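
Two of the augmentation techniques mentioned above, noise addition and speed variation, can be sketched in plain Python on a list of samples. This is a simplified illustration under stated assumptions: the noise is white Gaussian noise scaled to a requested signal-to-noise ratio, and the speed change uses naive linear interpolation, which also shifts pitch. Dedicated audio libraries offer higher-quality versions of both.

```python
import math
import random
from typing import List


def add_noise(samples: List[float], snr_db: float, seed: int = 0) -> List[float]:
    """Add white Gaussian noise so the result has roughly the requested SNR."""
    if not samples:
        return []
    rng = random.Random(seed)  # seeded so the augmentation is reproducible
    signal_power = sum(s * s for s in samples) / len(samples)
    noise_power = signal_power / (10 ** (snr_db / 10))
    sigma = math.sqrt(noise_power)
    return [s + rng.gauss(0.0, sigma) for s in samples]


def change_speed(samples: List[float], factor: float) -> List[float]:
    """Resample by linear interpolation; factor > 1 shortens the clip.

    This naive approach changes pitch together with speed.
    """
    if factor <= 0:
        raise ValueError("factor must be positive")
    if not samples:
        return []
    new_length = max(1, int(len(samples) / factor))
    output = []
    for i in range(new_length):
        position = i * factor
        left = int(position)
        right = min(left + 1, len(samples) - 1)
        fraction = position - left
        output.append(samples[left] * (1 - fraction) + samples[right] * fraction)
    return output
```

Augmented clips should keep their original transcription, and speed-changed clips need their timestamps rescaled by the same factor.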

Training and Testing Your Dataset

Finally, use the dataset to train deep learning models such as Tacotron, FastSpeech, or VITS. Set aside a portion of the dataset for validation and testing to assess model performance and identify areas for improvement.
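
A common way to hold out validation and test data is to split by speaker, so that no voice appears in more than one partition. The sketch below is one possible approach rather than a prescribed method: it assumes each entry has a `speaker_id` field, and the function names and the 10% validation and test fractions are illustrative choices.

```python
import hashlib
from typing import Dict, List


def assign_split(speaker_id: str, val_fraction: float = 0.1,
                 test_fraction: float = 0.1) -> str:
    """Map a speaker to a split deterministically by hashing the speaker ID."""
    digest = hashlib.sha256(speaker_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2 ** 64  # value in [0, 1)
    if bucket < test_fraction:
        return "test"
    if bucket < test_fraction + val_fraction:
        return "validation"
    return "train"


def split_by_speaker(entries: List[Dict]) -> Dict[str, List[Dict]]:
    """Group entries into train, validation, and test lists by speaker."""
    splits: Dict[str, List[Dict]] = {"train": [], "validation": [], "test": []}
    for entry in entries:
        splits[assign_split(entry["speaker_id"])].append(entry)
    return splits
```

Because the assignment depends only on the speaker ID, the split stays stable when new recordings are added. With few speakers the actual proportions can differ noticeably from the requested fractions, so it is worth checking the resulting sizes.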

Conclusion

Creating a video TTS dataset is a detailed yet fulfilling endeavor that establishes a foundation for sophisticated speech synthesis applications. By prioritizing high-quality data collection, accurate transcription, and comprehensive annotation, one can develop a dataset that significantly boosts the efficacy of deep learning models in TTS technology.

Revolutionising UK Businesses with AI & Machine Learning Solutions: Why It’s Time to Act Now

Embracing AI & Machine Learning: A Business Imperative in the UK

Artificial intelligence (AI) and machine learning (ML) are no longer just buzzwords – they’re business-critical technologies reshaping how UK companies innovate, operate, and grow. Whether you're a fintech startup in London or a retail chain in Manchester, adopting AI & Machine Learning solutions can unlock hidden potential, streamline processes, and give you a competitive edge in today's fast-moving market.

Why UK Businesses Are Investing in AI & ML

The demand for AI consultants and data scientists in the UK is on the rise, and for good reason. With the right machine learning algorithms, companies can automate repetitive tasks, forecast market trends, detect fraud, and even personalize customer experiences in real-time.

At Statswork, we help businesses go beyond the basics. We provide full-spectrum AI services and ML solutions tailored to your specific challenges—from data collection and data annotation to model integration & deployment.

Building the Right Foundation: Data Architecture and Management

No AI system can work without clean, well-structured data. That’s where data architecture planning and data dictionary mapping come in. We work with your teams to design reliable pipelines for data validation & management, ensuring that your models are trained on consistent, high-quality datasets.

Need help labeling raw data? Our data annotation & labeling services are perfect for businesses working with training data across audio, image, video, and text formats.

From Raw Data to Real Intelligence: Advanced Model Development

Using languages and frameworks such as Python, R, TensorFlow, PyTorch, and scikit-learn, our experts build machine learning models tailored to your goals. Whether you're interested in supervised learning techniques or looking to explore deep learning with neural networks, our ML consulting & project delivery approach ensures results-driven implementation.

Our AI experts also specialize in convolutional neural networks (CNNs) for image and video analytics, and natural language processing (NLP) for understanding text and speech.

Agile Planning Meets Real-Time Insights

AI doesn't operate in isolation—it thrives on agility. We adopt agile planning methods to ensure our solutions evolve with your needs. Whether it's a financial forecast model or a recommendation engine for your e-commerce site, we stay flexible and outcome-focused.

Visualising your data is equally important. That’s why we use tools like Tableau and Power BI to build dashboards that make insights easy to understand and act on.

Scalable, Smart, and Secure Deployment

After building your model, our team handles model integration & deployment across platforms, including Azure Machine Learning and Apache Spark. Whether on the cloud or on-premises, your AI systems are made to scale securely and seamlessly.

We also monitor algorithmic model performance over time, ensuring your systems stay accurate and relevant as your data evolves.

What Sets Statswork Apart?

At Statswork, we combine deep technical expertise with business acumen. Our AI consultants work closely with stakeholders to align solutions with business logic modeling, ensuring that every model serves a strategic purpose.

Here’s a glimpse of what we offer:

AI & ML Strategy Consultation

Custom Algorithm Design

Data Sourcing, Annotation & Data Management

Image, Text, Audio, and Video Analytics

Ongoing Model Maintenance & Monitoring

We don't believe in one-size-fits-all. Every UK business is different—and so is every AI solution we build.

The Future is Now—Don’t Get Left Behind

In today’s data-driven economy, failing to adopt AI & ML can leave your business lagging behind. From smarter automation to actionable insights, the benefits are enormous—and the time to start is now.

Whether you're building your first predictive model or looking to optimize existing processes, Statswork is here to guide you every step of the way.

Ready to Transform Your Business with AI & Machine Learning? Reach out to Statswork—your trusted partner in AI-powered innovation for UK enterprises.
