#chatgpt extensions

Explore tagged Tumblr posts

Text

youtube

Struggling to keep up with content creation using ChatGPT? This video explores 10 ChatGPT Chrome Extensions.

These ChatGPT Chrome Extensions will help you churn out unique content and speed up your workflow!

From pre-made templates to voice recognition and search engine integration, these extensions offer a variety of functionalities to boost your productivity.

Chrome extensions will work on most Chromium-based browsers, including Brave, Opera, Vivaldi and others.

Here are the ChatGPT Chrome Extensions covered in this video:

1️⃣ Keywords Everywhere: Generate SEO-friendly content with structured keyword research templates.

2️⃣ YouTube Summary with ChatGPT & Claude: Summarize YouTube videos for repurposing content and learning.

3️⃣ Sider ChatGPT Sidebar: All-in-one AI tool with PDF analysis, writing, OCR and more.

4️⃣ Web ChatGPT: Access real-time search engine data to enhance ChatGPT's responses.

5️⃣ AI Prompt Genius: Organize your custom ChatGPT prompts for easy access and future use.

6️⃣ ChatGPT for Google: Gain insights from search results with ChatGPT comparisons and summaries.

7️⃣ Replai: Craft engaging replies for Twitter and LinkedIn posts using AI.

8️⃣ & 9️⃣ Talk to ChatGPT & Promptheus: Talk to ChatGPT using your voice and listen to the responses.

🔟 AIPRM: Library of 3600+ curated prompt templates for various content creation needs.

At the end I will show how to speed up your workflow using ChatGPT Prompt Templates and the ChatGPT Desktop App.

You don't need to type a prompt every single time! Instead, copy/paste the prompt from the template, replace the keywords and hit enter.

#10 chatgpt chrome extensions#chatgpt chrome extensions#chrome extensions for chatgpt#chrome extensions chatgpt#chrome extensions#best chatgpt chrome extensions#chatgpt extensions for chrome#chatgpt firefox extensions#chatgpt brave extensions#chatgpt browser extensions#chatgpt for chrome#chatgpt for google#chatgpt extensions#chatgpt chrome#chatgpt for web#chatgpt guide#chatgpt voice#chatgpt tools#chatgpt templates#chatgpt tips#chatgpt tutorial#chatgpt writer#chatgpt prompts#chatgpt#how to chatgpt#how to use chatgpt chrome extensions#youtube summary#ai chrome extensions#ai tools#ai writing

1 note

Text

AI Doesn’t Necessarily Give Better Answers If You’re Polite

New Post has been published on https://thedigitalinsider.com/ai-doesnt-necessarily-give-better-answers-if-youre-polite/

Public opinion on whether it pays to be polite to AI shifts almost as often as the latest verdict on coffee or red wine – celebrated one month, challenged the next. Even so, a growing number of users now add ‘please’ or ‘thank you’ to their prompts, not just out of habit, or concern that brusque exchanges might carry over into real life, but from a belief that courtesy leads to better and more productive results from AI.

This assumption has circulated among both users and researchers, with prompt-phrasing studied in research circles as a tool for alignment, safety, and tone control, even as user habits reinforce and reshape those expectations.

For instance, a 2024 study from Japan found that prompt politeness can change how large language models behave, testing GPT-3.5, GPT-4, PaLM-2, and Claude-2 on English, Chinese, and Japanese tasks, and rewriting each prompt at three politeness levels. The authors of that work observed that ‘blunt’ or ‘rude’ wording led to lower factual accuracy and shorter answers, while moderately polite requests produced clearer explanations and fewer refusals.

Additionally, Microsoft recommends a polite tone with Copilot, from a performance rather than a cultural standpoint.

However, a new research paper from George Washington University challenges this increasingly popular idea, presenting a mathematical framework that predicts when a large language model’s output will ‘collapse’, transitioning from coherent to misleading or even dangerous content. Within that context, the authors contend that being polite does not meaningfully delay or prevent this ‘collapse’.

Tipping Off

The researchers argue that polite language usage is generally unrelated to the main topic of a prompt, and therefore does not meaningfully affect the model’s focus. To support this, they present a detailed formulation of how a single attention head updates its internal direction as it processes each new token, ostensibly demonstrating that the model’s behavior is shaped by the cumulative influence of content-bearing tokens.

As a result, polite language is posited to have little bearing on when the model’s output begins to degrade. What determines the tipping point, the paper states, is the overall alignment of meaningful tokens with either good or bad output paths – not the presence of socially courteous language.

An illustration of a simplified attention head generating a sequence from a user prompt. The model starts with good tokens (G), then hits a tipping point (n*) where output flips to bad tokens (B). Polite terms in the prompt (P₁, P₂, etc.) play no role in this shift, supporting the paper’s claim that courtesy has little impact on model behavior. Source: https://arxiv.org/pdf/2504.20980

If true, this result contradicts both popular belief and perhaps even the implicit logic of instruction tuning, which assumes that the phrasing of a prompt affects a model’s interpretation of user intent.

Hulking Out

The paper examines how the model’s internal context vector (its evolving compass for token selection) shifts during generation. With each token, this vector updates directionally, and the next token is chosen based on which candidate aligns most closely with it.

When the prompt steers toward well-formed content, the model’s responses remain stable and accurate; but over time, this directional pull can reverse, steering the model toward outputs that are increasingly off-topic, incorrect, or internally inconsistent.

The tipping point for this transition (which the authors define mathematically as iteration n*) occurs when the context vector becomes more aligned with a ‘bad’ output vector than with a ‘good’ one. At that stage, each new token pushes the model further along the wrong path, reinforcing a pattern of increasingly flawed or misleading output.

The tipping point n* is calculated by finding the moment when the model’s internal direction aligns equally with both good and bad types of output. The geometry of the embedding space, shaped by both the training corpus and the user prompt, determines how quickly this crossover occurs:

An illustration depicting how the tipping point n* emerges within the authors’ simplified model. The geometric setup (a) defines the key vectors involved in predicting when output flips from good to bad. In (b), the authors plot those vectors using test parameters, while (c) compares the predicted tipping point to the simulated result. The match is exact, supporting the researchers’ claim that the collapse is mathematically inevitable once internal dynamics cross a threshold.

Polite terms don’t influence the model’s choice between good and bad outputs because, according to the authors, they aren’t meaningfully connected to the main subject of the prompt. Instead, they end up in parts of the model’s internal space that have little to do with what the model is actually deciding.

When such terms are added to a prompt, they increase the number of vectors the model considers, but not in a way that shifts the attention trajectory. As a result, the politeness terms act like statistical noise: present, but inert, and leaving the tipping point n* unchanged.

The authors state:

‘[Whether] our AI’s response will go rogue depends on our LLM’s training that provides the token embeddings, and the substantive tokens in our prompt – not whether we have been polite to it or not.’

The model used in the new work is intentionally narrow, focusing on a single attention head with linear token dynamics – a simplified setup where each new token updates the internal state through direct vector addition, without non-linear transformations or gating.

This simplified setup lets the authors work out exact results and gives them a clear geometric picture of how and when a model’s output can suddenly shift from good to bad. In their tests, the formula they derive for predicting that shift matches what the model actually does.
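The mechanism described above can be illustrated with a toy simulation. This is a minimal sketch under stated assumptions, not the authors’ formula or code: the vectors `GOOD`, `BAD` and `POLITE`, the greedy selection rule, and the `simulate` function are all hypothetical, chosen only to show a context vector that updates by plain vector addition and eventually flips from ‘good’ to ‘bad’ output. The polite vector is orthogonal to both output directions by construction, which is the article’s description of such tokens, rather than something the sketch proves.

```python
# Toy model: one context vector, two candidate output tokens, linear updates.
# All vectors and names here are illustrative assumptions, not taken from the paper.

def dot(u, v):
    """Plain dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def add(u, v):
    """Element-wise vector addition."""
    return [a + b for a, b in zip(u, v)]


GOOD = [1.0, 0.0, 0.0]    # hypothetical 'good' output token embedding
BAD = [2.0, 0.5, 0.0]     # hypothetical 'bad' output token embedding
POLITE = [0.0, 0.0, 1.0]  # orthogonal to GOOD and BAD by construction


def simulate(prompt_vectors, max_steps=20):
    """Generate 'G'/'B' labels greedily; return (outputs, n_star).

    n_star is the first step at which a bad token is emitted, or None
    if that never happens within max_steps.
    """
    context = [0.0, 0.0, 0.0]
    for vec in prompt_vectors:
        context = add(context, vec)

    outputs, n_star = [], None
    for step in range(1, max_steps + 1):
        # Pick whichever candidate aligns more closely with the context.
        if dot(context, GOOD) >= dot(context, BAD):
            outputs.append("G")
            context = add(context, GOOD)
        else:
            outputs.append("B")
            context = add(context, BAD)
            if n_star is None:
                n_star = step
    return outputs, n_star


substantive = [1.0, -5.0, 0.0]  # hypothetical content-bearing prompt vector

plain_outputs, plain_n = simulate([substantive], max_steps=6)
polite_outputs, polite_n = simulate([substantive, POLITE, POLITE], max_steps=6)
shifted_outputs, shifted_n = simulate([[1.0, -9.0, 0.0]], max_steps=8)

print("plain prompt:  ", "".join(plain_outputs), plain_n)
print("polite prompt: ", "".join(polite_outputs), polite_n)
print("shifted prompt:", "".join(shifted_outputs), shifted_n)
```

In this toy setup the polite vectors never enter either dot product, so the flip happens at the same step with or without them, while changing the substantive prompt vector moves the flip. That mirrors the claim as reported, but only because the geometry was chosen that way.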

Chatting Up..?

However, this level of precision only works because the model is kept deliberately simple. While the authors concede that their conclusions should later be tested on more complex multi-head models such as the Claude and ChatGPT series, they also believe that the theory remains replicable as attention heads increase, stating*:

‘The question of what additional phenomena arise as the number of linked Attention heads and layers is scaled up, is a fascinating one. But any transitions within a single Attention head will still occur, and could get amplified and/or synchronized by the couplings – like a chain of connected people getting dragged over a cliff when one falls.’

An illustration of how the predicted tipping point n* changes depending on how strongly the prompt leans toward good or bad content. The surface comes from the authors’ approximate formula and shows that polite terms, which don’t clearly support either side, have little effect on when the collapse happens. The marked value (n* = 10) matches earlier simulations, supporting the model’s internal logic.

What remains unclear is whether the same mechanism survives the jump to modern transformer architectures. Multi-head attention introduces interactions across specialized heads, which may buffer against or mask the kind of tipping behavior described.

The authors acknowledge this complexity, but argue that attention heads are often loosely-coupled, and that the sort of internal collapse they model could be reinforced rather than suppressed in full-scale systems.

Without an extension of the model or an empirical test across production LLMs, the claim remains unverified. However, the mechanism seems sufficiently precise to support follow-on research initiatives, and the authors provide a clear opportunity to challenge or confirm the theory at scale.

Signing Off

At the moment, the topic of politeness towards consumer-facing LLMs appears to be approached from one of two standpoints: the pragmatic view that trained systems may respond more usefully to polite inquiry; or the concern that a tactless and blunt communication style with such systems risks spreading into the user’s real social relationships, through force of habit.

Arguably, LLMs have not yet been used widely enough in real-world social contexts for the research literature to confirm the latter case; but the new paper does cast some interesting doubt upon the benefits of anthropomorphizing AI systems of this type.

A study last October from Stanford suggested (in contrast to a 2020 study) that treating LLMs as if they were human additionally risks degrading the meaning of language, concluding that ‘rote’ politeness eventually loses its original social meaning:

‘[A] statement that seems friendly or genuine from a human speaker can be undesirable if it arises from an AI system since the latter lacks meaningful commitment or intent behind the statement, thus rendering the statement hollow and deceptive.’

However, roughly 67 percent of Americans say they are courteous to their AI chatbots, according to a 2025 survey from Future Publishing. Most said it was simply ‘the right thing to do’, while 12 percent confessed they were being cautious – just in case the machines ever rise up.

* My conversion of the authors’ inline citations to hyperlinks. To an extent, the hyperlinks are arbitrary/exemplary, since the authors at certain points link to a wide range of footnote citations, rather than to a specific publication.

First published Wednesday, April 30, 2025. Amended Wednesday, April 30, 2025 15:29:00, for formatting.

#2024#2025#ADD#Advanced LLMs#ai#AI chatbots#AI systems#Anderson's Angle#Artificial Intelligence#attention#bearing#Behavior#challenge#change#chatbots#chatGPT#circles#claude#coffee#communication#compass#complexity#content#Delay#direction#dynamics#embeddings#English#extension#focus

2 notes

Text

uses of generative ai i think it makes sense to get mad about or be appalled by:

creating any kind of art, especially if then trying to build an audience using it and/or trying to make money off it

completing homework assignments

completing work tasks that significantly impact other people

completing work tasks that could significantly impact your professional reputation & career

any kind of research / treating it like a search engine

plugging unfinished fics into it to get an ending

editing writing of any kind

uses i think it doesnt make any sense to get all up in arms about:

writing emails

#personally i still wouldnt do it bc im a control freak lmao#but like. with all the morally questionable and outright risky applications there are#why tf yall getting so mad about emails#i dislike plagiarism & environmental costs as much as the next guy#but i really dont think emails have much in the way of artistic merit#personally i would recommend using an extension that lets u make email templates#it would be more reliable and very fast once theyre set up#but i see how chatgpt is less effort and honestly. unless ur job is really important like healthcare or social work or w/e#the risks of an ai fuckup impacting anybody but u seem minimal

2 notes

Text

i need my fellow uni peers to never stop using chatgpt to summarise their articles instead of actually reading them because i cherish the deep, petty satisfaction of watching the thing churn up summaries with completely incorrect and irrelevant information far too much

#will never stop thinking about that time last year someone posted a pdf chat gpt generated summary of articles we had to read for a test on#a gc for the cinema section and absolutely nothing in it was correct . posted it with the caption ‘yeah couldn’t be bothered to read it so#i just asked chat gpt to do it posting it here in case anyone finds it useful!’ too . poetic cinema to me#i am full of the glee of a little hater . you are not getting any sympathy from my unmedicated adhd ass who takes literal hours to read one#article but who still actually does the fucking work anyway . request an extension to your work instead of generating absolute nonsense from#a thing that spits out nonsense that has no situational context . my lord#jay rambles#tbh i think it’s the audacity of asking chatgpt to generate a summary and not actually checking if any of the info is correct to me

5 notes

Text

I feel like chatgpt came around and everyone immediately forgot what they were doing to get stuff done before. like writing centers, extensions, extra help, templates, office hours, beta readers, random generators, and art and writing prompts have all existed for ages. even if you were cheating there's always been sparknotes, photomath, copying, paying someone else to do it, faking that your grandma died, and whatever. all of that stuff is more reliable and doesn't fuck over the planet. and you might actually learn something

16K notes

Text

Dynamic content generation with AI technology.

Automatically generate content for products, categories & CMS pages.

Write metadata for products, categories, and CMS pages using ChatGPT.

Generate personalized content using a custom prompt.

0 notes

Text

Rating: ⭐⭐⭐⭐⭐ (5/5)

Introduction: TereaTV is one of the top IPTV services available in the USA, UK, and Canada for 2024 and 2025. It offers an outstanding viewing experience for live TV channels and online content, combining high quality with affordable pricing.

User Interface and Ease of Use: The website features a clean and user-friendly interface. Navigation is straightforward, allowing users to easily access channels and content. The design is appealing and works well across various devices.

Content Variety: TereaTV boasts an extensive selection of channels, including sports, movies, series, and a wide range of programs. The content is diverse and caters to the interests of all family members. The library is regularly updated to ensure the latest content is available.

Quality and Performance: The service delivers excellent picture and sound quality. Channels stream smoothly, with minimal buffering or delays. The platform offers multiple resolution options, allowing users to choose what best suits their internet connection speed.

Customer Support: The customer support team is responsive and helpful, providing assistance for any issues users may encounter. Whether it's troubleshooting or answering questions, they are committed to ensuring a positive experience.

Conclusion: Overall, TereaTV stands out as a leading IPTV service with its extensive content offerings, excellent quality, and user-friendly experience. It's an ideal choice for anyone looking to enjoy a wide range of entertainment options in the USA, UK, and Canada.

#Log in#Sign up#You said:#Write me a review of the site https://tereatv.com/ saying it is top iptv usa and uk and canda 2024_2025#ChatGPT said:#ChatGPT#Review of the TereaTV website#Rating: ⭐⭐⭐⭐⭐ (5/5)#Introduction: The TereaTV site is considered one of the best IPTV services available in the United States, the United Kingdom and Canada for 2024 and 2025. It offers an outstanding experience#Interface and ease of use: The site features a simple, easy-to-navigate user interface. You can access all channels and content easily, which makes the experience#Content: TereaTV provides a wide range of channels, including sports channels, movies, series, and programs of all kinds. The content is diverse and covers the interests of all#Quality and performance: The service delivers excellent picture and sound quality. Channels run smoothly, with minimal interruptions or delays#English#Review of TereaTV#Rating: ⭐⭐⭐⭐⭐ (5/5)#Introduction: TereaTV is one of the top IPTV services available in the USA#UK#and Canada for 2024 and 2025. It offers an outstanding viewing experience for live TV channels and online content#combining high quality with affordable pricing.#User Interface and Ease of Use: The website features a clean and user-friendly interface. Navigation is straightforward#allowing users to easily access channels and content. The design is appealing and works well across various devices.#Content Variety: TereaTV boasts an extensive selection of channels#including sports#movies#series#and a wide range of programs. The content is diverse and caters to the interests of all family members. The library is regularly updated to#Quality and Performance: The service delivers excellent picture and sound quality. Channels stream smoothly#with minimal buffering or delays. The platform offers multiple resolution options#allowing users to choose what best suits their internet connection speed.#Customer Support: The customer support team is responsive and helpful

0 notes

Text

How to Use ChatGPT? A Guide for 2024

In 2024, ChatGPT, the revolutionary chatbot developed by OpenAI, continues to transform the way we interact with artificial intelligence. Whether for drafting essays, writing emails, generating code, or coming up with article titles, ChatGPT has established itself as an indispensable tool for professionals and students. This guide will show you…

#ChatGPT access#adjusting responses#prompt tips#ChatGPT#ChatGPT account#ChatGPT setup#creating prompts#ChatGPT extensions#advanced features#phrasing#text generation#custom instructions#Artificial intelligence#user interface#language model#OpenAI#customization#ChatGPT customization#OpenAI platform#ChatGPT plugins#accuracy#ChatGPT prompts

0 notes

Text

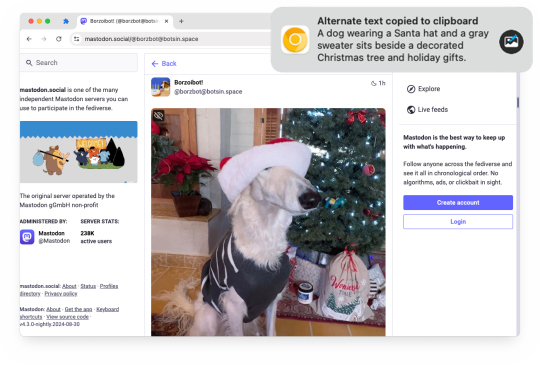

Alt Text Creator 1.2 is now available!

Earlier this year, I released Alt Text Creator, a browser extension that can generate alternative text for images by right-clicking them, using OpenAI's GPT-4 with Vision model. The new v1.2 update is now rolling out, with support for OpenAI's newer AI models and a new custom server option.

Alt Text Creator can now use OpenAI's latest GPT-4o Mini or GPT-4o AI models for processing images, which are faster and cheaper than the original GPT-4 with Vision model that the extension previously used (and which OpenAI will soon deprecate). You should be able to generate alt text for several images with less than $0.01 in API billing. Alt Text Creator still uses an API key provided by the user, and uses the low resolution option, so it runs at the lowest possible cost with the user's own API billing.

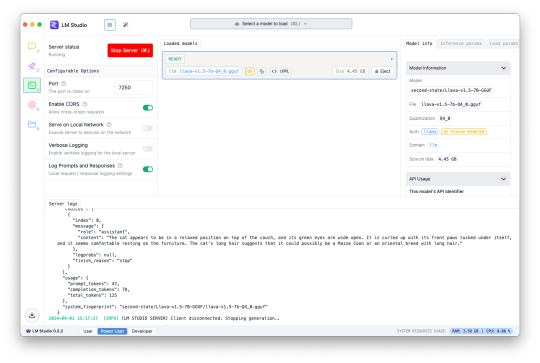

This update also introduces the ability to use a custom server instead of OpenAI. The LM Studio desktop application now supports downloading AI models with vision abilities to run locally, and can enable a web server to interact with the AI model using an OpenAI-like API. Alt Text Creator can now connect to that server (and theoretically other similar API implementations), allowing you to create alt text entirely on-device without paying OpenAI for API access.

The feature is a bit complicated to set up, is slower than OpenAI's API (unless you have an incredibly powerful PC), and requires leaving LM Studio open, so I don't expect many people will use this option for now. I primarily tested it with the Llava 1.5 7B model on a 16GB M1 Mac Mini, and it was about half the speed of an OpenAI request (8 vs 4 seconds for one example) while having generally lower-quality results.
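For readers curious what an OpenAI-style vision request looks like, here is a rough sketch in Python. It is not the extension's actual code: the prompt wording, the function names, the `max_tokens` value, and the local address `http://localhost:1234/v1` (assumed here to be the LM Studio server address) are illustrative assumptions. The request body follows the OpenAI chat completions format, with the image passed as a data URL and `detail` set to `low` to match the low resolution option mentioned above.

```python
import base64
import json
import urllib.request

# Hypothetical prompt; the extension's real prompt is not given in this post.
PROMPT = "Write concise alt text describing this image."

OPENAI_BASE_URL = "https://api.openai.com/v1"
LOCAL_BASE_URL = "http://localhost:1234/v1"  # assumed local server address


def chat_completions_url(base_url):
    """Join a base URL and the chat completions path without doubling slashes."""
    return base_url.rstrip("/") + "/chat/completions"


def build_alt_text_request(image_bytes, mime_type="image/png", model="gpt-4o-mini"):
    """Build an OpenAI-style chat completions body with one low-detail image."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": PROMPT},
                    {
                        "type": "image_url",
                        "image_url": {
                            "url": f"data:{mime_type};base64,{encoded}",
                            "detail": "low",  # low resolution keeps cost down
                        },
                    },
                ],
            }
        ],
        "max_tokens": 100,
    }


def request_alt_text(base_url, payload, api_key=None):
    """Send the request and return the generated text (not called in this sketch)."""
    headers = {"Content-Type": "application/json"}
    if api_key:  # a local server may not need a key
        headers["Authorization"] = f"Bearer {api_key}"
    req = urllib.request.Request(
        chat_completions_url(base_url),
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # Print the request body only; no network call is made here.
    demo = build_alt_text_request(b"not a real image")
    print(json.dumps(demo, indent=2)[:300])
```

Because both targets accept the same request shape, switching between OpenAI and a local server in this sketch only means changing the base URL, the model name, and whether an API key is sent.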

You can download Alt Text Creator for Chrome and Firefox, and the source code is on GitHub. I still want to look into support for other AI models, like Google's Gemini, and the option for the user to change the prompt, but I wanted to get these changes out soon before GPT-4 Vision was deprecated.

Download for Google Chrome

Download for Mozilla Firefox

#gpt 4#gpt 4o#chatgpt#openai#llm#lm studio#browser extension#chrome extension#chrome#extension#firefox#firefox extension#firefox extensions#ai

1 note

Text

The Philosophy of Intension and Extension

The philosophy of intension and extension pertains to the study of meaning, particularly in logic, semantics, and the philosophy of language. These concepts are used to analyze how words, terms, or concepts relate to the objects or entities they describe and how they convey meaning.

Key Concepts:

Intension:

Definition: Intension refers to the internal content or the conceptual aspects of a term—the set of attributes, properties, or criteria that a term conveys. It is essentially the "sense" or meaning of the term.

Example: The intension of the term "bachelor" includes the properties of being an unmarried man. These are the characteristics that define what it means to be a bachelor.

Focus on Meaning: Intension is concerned with what a term means, not directly with the specific instances it refers to. It's about the conditions that something must meet to be included in the term's extension.

Extension:

Definition: Extension refers to the set of all actual objects, entities, or instances in the world to which a term applies. It is the "reference" or the collection of things that fall under the concept described by the term.

Example: The extension of the term "bachelor" includes all specific men who are unmarried. These are the actual individuals who meet the criteria defined by the intension.

Focus on Reference: Extension is concerned with the real-world examples of a term, the specific members of the category that the term describes.

Relationship Between Intension and Extension:

Interdependence:

Linking Concepts to Reality: Intension and extension are closely related in that the intension of a term determines its extension. The set of properties (intension) defines what counts as an instance of the term, and therefore what is included in its extension.

Example: If the intension of "triangle" is "a three-sided polygon," then the extension includes all actual geometric figures that are three-sided polygons.

Variation Across Contexts:

Contextual Sensitivity: The extension of a term can change depending on the context, even if the intension remains the same. For instance, the intension of "president" as "the elected head of a country" is stable, but the extension (the actual person who is president) changes with each election.

Temporal Shifts: The extension of a term can also change over time. The intension of "planets in the Solar System" hasn't changed much, but the extension changed when Pluto was reclassified as a dwarf planet.

Philosophical Implications:

Analytic Philosophy and Logic: In analytic philosophy, the distinction between intension and extension is fundamental in understanding meaning, reference, and the structure of logical arguments.

Modal Considerations: Intension is also linked to possible worlds semantics, where the meaning of a term (intension) is consistent across different possible worlds, but its extension might vary from one world to another.
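A small programming analogy may help make the relationship concrete. In this sketch, which is only an illustration and not a standard formalism, an intension is modeled as a predicate (a function that tests whether something meets the criteria) and an extension as the set of things in a given domain that pass the test. The `Person` type, the names, and the two example domains are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Person:
    """A hypothetical individual in a small domain of discourse."""
    name: str
    sex: str
    married: bool


def is_bachelor(person):
    """Intension of 'bachelor': the criteria of being an unmarried man."""
    return person.sex == "male" and not person.married


def extension(intension, domain):
    """Extension: names of everything in the domain that satisfies the intension."""
    return {item.name for item in domain if intension(item)}


# Two snapshots of the same small world; only the facts change.
world_earlier = [
    Person("Alex", "male", married=False),
    Person("Sam", "male", married=True),
    Person("Kim", "female", married=False),
]
world_later = [
    Person("Alex", "male", married=True),   # Alex has married
    Person("Sam", "male", married=False),   # Sam is no longer married
    Person("Kim", "female", married=False),
]

print(extension(is_bachelor, world_earlier))
print(extension(is_bachelor, world_later))
```

The predicate `is_bachelor` is the same in both calls, while the set it picks out differs between the two snapshots. That is the pattern described above for "president" and "planets in the Solar System": a stable intension with an extension that shifts as the facts change.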

Applications and Examples:

Natural Kind Terms: Consider the term "water." The intension might involve being a clear, drinkable liquid, while the extension would include all actual instances of H₂O. However, in another possible world where water has a different chemical composition (say, XYZ), the extension would differ, but the intension might still involve the same concept of a clear, drinkable liquid.

Ambiguity and Vagueness: Words with vague intensions can lead to unclear or shifting extensions. For example, the intension of "heap" might involve "a large collection of grains," but determining the precise extension (what counts as a heap) can be tricky.

Criticisms and Debates:

Challenges of Intensional Definitions: Defining the exact intension of complex or abstract terms can be difficult. For instance, philosophical debates often arise over the intension of terms like "justice" or "knowledge."

Rigid Designators: Philosophers like Saul Kripke have introduced the concept of rigid designators, terms that refer to the same object in all possible worlds, raising questions about how intension and extension function in modal contexts.

The philosophy of intension and extension provides a framework for understanding how terms relate to the concepts they describe (intension) and the actual entities they refer to (extension). Intension deals with the meaning and properties of terms, while extension concerns the specific instances those terms apply to. This distinction is crucial in logic, semantics, and the philosophy of language, helping to clarify issues of meaning, reference, and the relationship between language and the world.

#philosophy#epistemology#knowledge#learning#education#chatgpt#ontology#metaphysics#Intension#Extension#Philosophy of Language#Semantics#Meaning and Reference#Conceptual Analysis#Logic#Possible Worlds#Analytic Philosophy#Natural Kind Terms#Rigid Designators#Vagueness and Ambiguity#Reference Theory

0 notes

Text

Latest Advancements in the Field of Multimodal AI: (ChatGPT + DALLE 3) + (Google BARD + Extensions) and many more.

🔥 Exciting news in the world of Multimodal AI! 🚀 Check out the latest blog post discussing the groundbreaking advancements in this field. From integrating DALLE 3 into ChatGPT to Google BARD's enhanced extensions, this post covers it all.

👉 Read the full article here: [Latest Advancements in the Field of Multimodal AI](https://ift.tt/RWjzTtc)

Discover how Multimodal AI combines text, images, videos, and audio to achieve remarkable performance. Unlike traditional AI models, these systems handle multiple data types simultaneously, giving you more than one output. Learn about cutting-edge models like Claude, DeepFloyd IF, ImageBind, and CM3leon, which push the boundaries of text and image generation. Don't miss out on the incredible possibilities Multimodal AI offers! Take a deep dive into the future of AI by reading the article today. 🌟

#AI #MultimodalAI #ArtificialIntelligence #ChatGPT #DALLE3 #GoogleBARD #TechAdvancements

List of Useful Links:

AI Scrum Bot - ask about AI scrum and agile

Our Telegram @itinai

Twitter - @itinaicom

#itinai.com#AI#News#Latest Advancements in the Field of Multimodal AI: (ChatGPT + DALLE 3) + (Google BARD + Extensions) and many more….#AI News#AI tools#Arham Islam#Innovation#itinai#LLM#MarkTechPost#Productivity Latest Advancements in the Field of Multimodal AI: (ChatGPT + DALLE 3) + (Google BARD + Extensions) and many more….

0 notes

Text

the corporate move to python and its consequences have been a disaster for the human race

#it has minimal punctuation so not only does it make understanding your own code difficult#but other people on the project are not gonna understand shit about it unless you extensively comment#and idk. i just think using the c family is better for everyone#but nooooo chatgpt uses python so every other business in the world has to too :/

1 note

Text

Most helpful AI extensions for Google Chrome

To install AI Chrome extensions, simply visit the Chrome Web Store, search for the desired extension, and click “Add to Chrome.”

0 notes

Text

Bard is Now More Powerful, with Gmail, Drive + Docs Extensions and Language Skills

Google Bard is stepping up its game with a brand-new integration, promising an enriched user experience that combines the power of AI with the familiarity of Google apps and services. The latest update, unveiled today, signifies a leap forward in Bard’s capabilities, making it a versatile assistant like never before. Connect to Google apps and services Bard Extensions in English, the innovative…

0 notes