#difference between traditional sdlc and secure sdlc

Transforming Insurance Operations with Odoo ERP – A Future-Ready Solution

Introduction

Insurance companies today face growing pressure to improve efficiency, stay compliant, and deliver better customer experiences. Traditional systems often fall short, slowing down processes, increasing risks, and limiting scalability. Odoo ERP offers a powerful, integrated solution to address these challenges and support digital transformation across the insurance industry.

At SDLC CORP, a trusted Odoo development company in the US, we help insurance providers modernize operations through tailored ERP solutions. From policy management to compliance automation, our goal is to streamline your business and future-proof your systems.

Key Challenges Faced by Insurance Providers

1. Fragmented Data and Systems

Many insurance firms rely on siloed software, leading to inefficiencies and reporting issues. Odoo ERP unifies data for better visibility and control.

2. Regulatory Complexity

Meeting compliance standards such as IRDAI, Solvency II, or HIPAA is tough without automation. Manual tracking increases the risk of non-compliance.

3. Manual Workflows

Manual claims handling and underwriting slow down service delivery and increase operational costs.

4. Scalability Limitations

Legacy systems often can’t support new product launches, market expansions, or digital enhancements.

Key Features of Odoo ERP for Insurance Providers

Financial and Policy Management

Odoo centralizes financial and operational workflows in one platform. We tailor features specifically for insurance firms:

Customer Invoices – Automate billing and renewal reminders.

Vendor Bills – Streamline payments to partners and service providers.

Bank & Cash Accounts – Maintain accurate records and enable easy reconciliation.

Online Payments – Offer secure, quick digital payment options.

Fiscal Localizations – Ensure compliance with tax rules in different regions.

Compliance and Risk Management

With Odoo, compliance becomes more manageable:

Audit Trails – Maintain transparent records for policies and claims.

Data Security – Protect sensitive customer and financial information.

Risk Monitoring – Identify fraud risks or claim anomalies in real time.

Real-Time Reporting & Insights

We configure Odoo to deliver actionable insights through:

Custom Dashboards – Visualize key metrics like claims ratio, policy lapses, and revenue.

Advanced Reports – Track customer trends, financial performance, and compliance indicators.

Process Automation

Odoo automates time-consuming tasks, helping your team focus on strategic goals:

Claims Automation – Set rules for auto-approvals and document routing.

Alerts & Reminders – Stay ahead of renewals, deadlines, and customer actions.

Scalable & Adaptable Platform

Our Odoo development services in the US ensure that your ERP evolves as your business grows:

Custom Modules – Add features for underwriting, agent commissions, and customer portals.

Integrations – Connect with CRM, finance tools, and existing insurance software.

Why Odoo ERP is the Future for Insurers

Improved Operational Efficiency

Odoo reduces delays and errors across core processes like claims handling, accounting, and renewals.

Better Customer Experience

Self-service portals and automated communications lead to faster responses and greater satisfaction.

Lower Costs, Higher ROI

With Odoo’s open-source model and automation, insurers can reduce expenses while boosting productivity.

Multi-Currency & Multi-Entity Support

Perfect for insurers operating across states or borders, Odoo simplifies complex transactions.

SDLC CORP: Your Insurance-Focused Odoo Partner

At SDLC CORP, we offer tailored Odoo development services in the US specifically for the insurance sector. With our deep industry knowledge, we help you implement, optimize, and scale your ERP solution for long-term success.

Dedicated Support – We provide 24/7 technical help.

Smooth Integrations – Ensure seamless communication between systems.

Conclusion

Odoo ERP is transforming how insurers manage operations, compliance, and customer engagement. By partnering with an experienced Odoo development company in the US like SDLC CORP, insurance providers can unlock new efficiencies, reduce costs, and stay ahead in a competitive market.

Ready to modernize your insurance operations with Odoo? Let SDLC CORP guide your journey.

#OdooERP#InsuranceTech#DigitalTransformation#InsurTech#ERPforInsurance#OdooDevelopment#OdooDevelopmentServices#InsuranceAutomation#InsuranceIndustry#OdooSolutions#ComplianceAutomation#InsuranceOperations#FutureOfInsurance#TechInInsurance#DataIntegration#ScalableSolutions#OdooForBusiness

Software Development Engineer in Test: Meaning, Role, and Salary Insights

The world of software development is constantly evolving, and with it, the roles in software testing have transformed. Gone are the days when testing was only about manually clicking through user interfaces. Today, automation, DevOps, and continuous testing drive the industry, making roles like Software Development Engineer in Test (SDET) more crucial than ever.

If you've ever wondered what an SDET is, how they differ from traditional QA testers and test engineers, and how their salaries compare, this blog is for you. Let's break it all down.

What Is an SDET?

A Software Development Engineer in Test (SDET) is a specialized professional who blends software development and testing expertise. Unlike a traditional tester who primarily focuses on executing test cases, an SDET actively contributes to test automation, framework development, and software quality assurance at the code level.

Key Responsibilities of an SDET

SDETs wear multiple hats in the software development lifecycle (SDLC). Here are some of their primary responsibilities:

Test Automation Development – Writing test scripts, developing automation frameworks, and integrating automated tests into CI/CD pipelines.

Collaboration with Developers – Working closely with software engineers to review code, suggest improvements, and ensure testability.

API and Performance Testing – Verifying backend APIs, load testing, and ensuring that software meets performance benchmarks.

Security Testing – Identifying security vulnerabilities in code and ensuring compliance with security best practices.

DevOps and Continuous Testing – Integrating testing into DevOps processes, ensuring automated tests run in CI/CD pipelines for faster releases.

Test Environment Setup – Managing test environments, setting up virtual machines, and ensuring smooth test execution.

Unlike a traditional QA Tester, who mainly executes tests, reports bugs, and validates fixes, an SDET focuses on developing robust automation solutions to reduce manual testing efforts.
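To make the contrast concrete, here is a minimal sketch of the kind of automated check an SDET might write and wire into a CI/CD pipeline. The function `apply_discount` and its rules are hypothetical, invented purely for illustration. The tests follow the `test_` naming convention that pytest discovers, so running `pytest` on this file in a CI job would execute them on every commit.

```python
def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test: reduce price by percent (0-100)."""
    if price < 0:
        raise ValueError("price must be non-negative")
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


def test_typical_discount():
    # A quarter off 200 should leave 150.
    assert apply_discount(200.0, 25) == 150.0


def test_zero_and_full_discount():
    # Boundary values: no discount and a 100% discount.
    assert apply_discount(80.0, 0) == 80.0
    assert apply_discount(80.0, 100) == 0.0


def test_invalid_percent_is_rejected():
    # Out-of-range input must raise instead of returning a bogus price.
    try:
        apply_discount(50.0, 120)
    except ValueError:
        return  # expected path
    raise AssertionError("expected ValueError for percent > 100")
```

Once checks like these exist as code, they run unattended on every change, which is the manual effort the SDET role is meant to remove.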

SDET vs. Test Engineer vs. QA Tester

The terms SDET, Test Engineer, and QA Tester are often used interchangeably, but they have distinct roles. Let's break down the differences:

1. QA Tester

A QA Tester is responsible for manual testing, executing test cases, and validating software functionality. They:

Write and execute manual test cases.

Identify and report bugs.

Perform regression, functional, and exploratory testing.

Have limited coding knowledge (if any).

Rely on automation tools built by others.

QA testers ensure the end-user experience is smooth but typically do not write automation scripts or interact with code extensively.

2. Test Engineer

A Test Engineer is a step ahead of a QA Tester and often works with test automation but may not have the same development skills as an SDET. They:

Perform manual and automated testing.

Have experience with automation tools like Cypress, Selenium or JUnit.

Focus on test execution and maintenance rather than framework development.

Identify software quality issues but may not contribute significantly to fixing them.

Test Engineers are crucial for automation testing but may lack the depth of programming knowledge required to develop complex testing frameworks.

3. Software Development Engineer in Test (SDET)

An SDET is a hybrid role that combines software development and testing expertise. They:

Write test automation frameworks from scratch.

Develop custom testing tools to improve test efficiency.

Work on unit testing, API testing, performance testing, and security testing.

Have strong coding skills in languages like Java, Python, JavaScript, or C#.

Work closely with DevOps engineers for continuous integration and continuous deployment (CI/CD).

Key Difference Between an SDET and a QA Tester/Test Engineer

An SDET is essentially a developer who tests software rather than just a tester who executes tests.

Why Are SDETs in High Demand?

Companies today aim for faster releases, higher code quality, and reduced testing time. This is where SDETs shine.

1. The Shift to Test Automation

Manual testing is slow and error-prone. Businesses rely on automated testing to speed up releases, making SDETs a critical part of Agile and DevOps teams.

2. CI/CD and DevOps Integration

Since SDETs understand both coding and testing, they help integrate testing in CI/CD pipelines, ensuring rapid and reliable software releases.

3. Cost-Effectiveness

Instead of hiring separate developers and testers, companies prefer SDETs who can write tests and debug code, reducing overall development costs.

4. Enhanced Software Quality

SDETs prevent bugs at the development stage rather than just finding them after deployment, improving overall software reliability.

SDET Salary: How Much Do They Earn?

The demand for Software Development Engineers in Test (SDETs) has grown significantly as companies move toward test automation, DevOps, and continuous testing. This shift has led to a significant pay gap between traditional Software Testers and SDETs. If you’re currently in a manual testing role or even working as a Test Engineer, you might be wondering:

How much more do SDETs make compared to traditional testers?

What skills can increase a tester’s salary?

How can you transition from a tester to an SDET?

Let’s break it all down.

Factors Affecting SDET Salaries

Experience – More years of experience means higher pay.

Tech Stack – SDETs skilled in Python, Java, Selenium, Kubernetes, and cloud technologies earn more.

Company – Big tech companies like Google, Amazon, and Microsoft pay higher salaries.

Certifications – Certifications in AWS, Azure, Kubernetes, or performance testing boost salary potential.

Salary Comparison

According to Payscale, the national average salary for an SDET is $88,000 per year, while a Software Tester earns an average of $55,501 per year. That’s a difference of over $32,000 annually!
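As a quick check of that arithmetic, the short sketch below computes the gap and the relative increase from the two Payscale averages quoted above. The variable names are illustrative only.

```python
# Payscale averages quoted in the text (USD per year).
sdet_salary = 88_000
tester_salary = 55_501

# Absolute gap between the two averages.
gap = sdet_salary - tester_salary

# Relative increase for a tester who moves to the SDET average.
increase_pct = round(gap / tester_salary * 100, 1)

print(f"Gap: ${gap:,}")               # Gap: $32,499
print(f"Increase: {increase_pct}%")   # Increase: 58.6%
```

On these averages the move is worth roughly 59% more pay, although individual results depend on location and experience.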

In the UK, the job board IT Jobs Watch shows a similar pattern: SDETs earn considerably more than traditional testers.

Clearly, moving from a Software Tester role to an SDET role can increase your salary by 30-50% or more, depending on your location and experience.

What Skills Can Increase a Tester’s Salary?

One of the biggest reasons for the salary gap between testers and SDETs is skillset differences. If you want to increase your earning potential, you need to develop key SDET-related skills.

Here are some of the most in-demand skills that can significantly boost your pay:

1. Selenium & Test Automation Tools

Mastering automation tools like Selenium, Cypress, Playwright, TestNG, and Appium can increase your value as a tester. Companies prefer candidates who can write automation scripts instead of just executing manual test cases.

2. Programming Skills (Java, Python, C#)

Many testing job descriptions now require programming knowledge. The most in-demand languages are:

Java – Used with Selenium, TestNG, Keploy.

Python – Popular in API testing and automation.

C# – Preferred in .NET-based environments.

If you want to increase your salary and become an SDET, you should at least be proficient in one of these languages.

3. API Testing & Postman

APIs are a crucial part of modern applications, and knowing how to test them is a must-have skill for higher-paying testing roles.

Postman – A widely used tool for manual and automated API testing.

Keploy – A powerful tool for API test generation and mocking.

4. DevOps & CI/CD (Jenkins, Docker, Kubernetes)

SDETs work closely with DevOps engineers to integrate testing into CI/CD pipelines. Companies prefer testers who can:

Set up automated test pipelines in Jenkins or GitHub Actions.

Use Docker to create test environments.

Work with Kubernetes for test orchestration.

5. Performance & Security Testing

Adding skills in performance testing (JMeter, Gatling) or security testing (OWASP, Burp Suite) can further increase your salary.

6. Cloud & AI-Based Testing

With the rise of cloud computing and AI, testers are in higher demand when they know how to:

Test AWS/Azure applications

Use AI-driven test automation tools

Final Thoughts

The SDET role is one of the most exciting and high-paying career paths in software testing today. With the right skills, you can transition from manual testing to automation and development, securing a rewarding career in top tech companies.

If you're a QA Tester or Test Engineer looking to level up, now is the perfect time to start learning test automation, programming, and DevOps to become an SDET. The demand is high, and companies are willing to pay a premium for skilled professionals who can ensure quality at speed.

FAQs

How can a manual tester transition into an SDET role?

Learn programming (Java, Python, C#), master test automation (Selenium, Cypress), practice API testing (Postman, Keploy), and gain DevOps skills (CI/CD, Docker, Kubernetes). Work on real-world projects and contribute to open-source automation tools.

Do SDETs only focus on automation testing?

No, SDETs also develop test frameworks, perform API, performance, and security testing, integrate tests into CI/CD, and collaborate with developers and DevOps teams to improve software quality. They play a crucial role in the entire software development lifecycle.

Are SDET roles limited to specific industries?

No, SDETs are in demand across various industries, including finance, healthcare, e-commerce, and tech. Any company adopting DevOps, test automation, and CI/CD practices benefits from hiring SDETs to ensure software quality and faster releases.

Is coding mandatory for an SDET role?

Yes, for the most part. SDETs need strong coding skills in Java, Python, or C#. They write automation scripts, build test frameworks, and contribute to software development. Unlike manual testing, the SDET role treats coding as essential for writing efficient, scalable automated tests.

Navigating the Future: Key Trends Driving Quality Assurance Services in 2025

The quality assurance (QA) landscape is undergoing a seismic shift as we step into 2025. With technological advancements and increasing consumer demands for flawless digital experiences, QA has evolved from being a mere checkpoint in product development to a strategic enabler of business success. For QA Managers, QA Leads, Marketing Managers, and Project Managers, understanding the latest trends in quality assurance services is critical to staying competitive and delivering value to stakeholders.

This article explores the key trends driving the future of QA services in 2025, offering insights into how businesses can adapt and thrive in this rapidly changing environment.

The Shift Toward Proactive Quality Assurance

Historically, QA was seen as a reactive process focused on identifying and fixing defects at the end of the development lifecycle. However, in 2025, QA has transformed into a proactive discipline that integrates quality at every stage of product development. This shift is driven by several key trends:

1. Shift-Left Testing

One of the most prominent trends shaping QA in 2025 is "Shift-Left Testing." This approach involves moving testing activities earlier in the software development lifecycle (SDLC). By embedding QA into the requirements and design phases, teams can identify potential issues before they become costly problems.

Benefits of Shift-Left Testing:

Early Bug Detection: Catching defects early reduces the cost and time required to fix them.

Improved Collaboration: Encourages closer collaboration between developers, testers, and product managers.

Faster Time-to-Market: Streamlined processes enable quicker delivery of high-quality products.

The Rise of Automation and AI in QA

Automation has long been a cornerstone of modern QA practices, but its scope has expanded significantly in 2025. The integration of artificial intelligence (AI) and machine learning (ML) into quality assurance services is revolutionizing how testing is conducted.

2. AI-Driven Test Automation

AI-powered tools are now capable of generating, maintaining, and executing test scripts with minimal human intervention. These tools leverage ML algorithms to predict potential defects, analyze historical data, and optimize testing strategies.

Key Applications of AI in QA:

Predictive Analytics: Anticipates defects before they occur based on historical patterns.

Visual Testing: Uses AI to detect UI inconsistencies across different devices and platforms.

Test Data Generation: Creates realistic test data for diverse scenarios using synthetic data generation techniques.

3. Hyper-Automation

Hyper-automation goes beyond traditional test automation by integrating advanced technologies like robotic process automation (RPA), natural language processing (NLP), and AI. This trend enables end-to-end automation across functional, performance, security, and compliance testing.

Cloud-Based Quality Management Systems

The adoption of cloud technology continues to reshape how businesses approach quality assurance. By 2025, over 85% of organizations are expected to use cloud-based quality management systems (QMS), according to industry reports [1].

Benefits of Cloud-Based QMS:

Scalability: Easily scale testing environments up or down based on project needs.

Cost Efficiency: Reduces infrastructure costs by leveraging Software-as-a-Service (SaaS) models.

Collaboration: Facilitates seamless collaboration among distributed teams through centralized access to testing resources.

Cloud-based solutions also enhance agility by enabling continuous integration/continuous deployment (CI/CD) pipelines, ensuring faster delivery cycles without compromising quality.

Consumer-Centric Testing Strategies

In 2025, consumer expectations are higher than ever. Businesses are prioritizing user-centric approaches to ensure their products not only function correctly but also deliver exceptional user experiences.

4. User-Centric Testing

User-centric testing focuses on validating that software meets real-world user needs. This involves incorporating usability testing, performance testing under real-world conditions, and gathering direct feedback from end-users.

Key Practices for User-Centric Testing:

Usability Testing: Observes how users interact with the product to identify pain points.

Performance Testing for User Satisfaction: Ensures software performs optimally under various conditions.

Feedback Loops: Engages users during beta testing phases to refine features based on their input [2].

5. Customer-Centric Metrics

QA teams are increasingly adopting metrics that reflect customer satisfaction rather than just technical outcomes. Metrics like Net Promoter Score (NPS) and Customer Satisfaction Score (CSAT) provide valuable insights into how well products meet user expectations.
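Both metrics are simple to compute from survey responses. The sketch below uses the standard definitions: NPS is the percentage of promoters (ratings of 9 or 10 on a 0-10 scale) minus the percentage of detractors (ratings of 0 to 6), and CSAT is the percentage of respondents who chose one of the top two ratings on a 1-5 scale. The function names and the sample ratings are illustrative assumptions, not data from any real survey.

```python
def net_promoter_score(ratings: list[int]) -> float:
    """NPS from 0-10 'would you recommend us?' ratings."""
    if not ratings:
        raise ValueError("need at least one rating")
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100 * (promoters - detractors) / len(ratings)


def csat(ratings: list[int], satisfied_threshold: int = 4) -> float:
    """CSAT from 1-5 satisfaction ratings: share at or above the threshold."""
    if not ratings:
        raise ValueError("need at least one rating")
    satisfied = sum(1 for r in ratings if r >= satisfied_threshold)
    return 100 * satisfied / len(ratings)


# Made-up sample responses for illustration only.
nps_ratings = [10, 9, 8, 7, 6, 3, 10, 9, 5, 10]
csat_ratings = [5, 4, 3, 5, 2, 4, 4, 1]

print(net_promoter_score(nps_ratings))  # 20.0 (5 promoters, 3 detractors, 10 responses)
print(csat(csat_ratings))               # 62.5 (5 of 8 rated 4 or 5)
```

Tracking these numbers per release lets a QA team see whether technical quality gains are reaching users.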

Distributed QA Teams and Remote Collaboration

The rise of remote work has led to distributed QA teams becoming the norm rather than the exception. This trend brings both challenges and opportunities for businesses:

Challenges:

Maintaining team cohesion across different time zones.

Ensuring consistent communication among remote team members.

Opportunities:

Accessing a global talent pool with diverse expertise.

Leveraging collaboration tools like Slack, Jira, or Microsoft Teams to streamline workflows.

By adopting robust communication strategies and investing in collaboration tools, businesses can maximize the productivity of their distributed QA teams while maintaining high standards of quality [2].

Predictive Analytics for Smarter Decision-Making

Predictive analytics is emerging as a game-changer in quality assurance services, enabling teams to make smarter decisions based on data-driven insights:

Applications of Predictive Analytics:

Identifying high-risk areas within applications that require additional testing.

Forecasting potential bottlenecks in CI/CD pipelines.

Optimizing resource allocation for maximum efficiency.

By leveraging predictive analytics tools, businesses can proactively address issues before they impact users, ensuring smoother product launches and higher customer satisfaction [4].

Preparing for Industry 4.0 Integration

The ongoing Industry 4.0 revolution is driving significant changes across all sectors, including quality assurance. As IoT devices become more prevalent and manufacturing processes become increasingly automated, QA teams must adapt their strategies accordingly:

Key Trends:

IoT Testing: Ensuring interoperability among connected devices while maintaining security standards.

Cybersecurity Focus: Protecting sensitive data from breaches as digital ecosystems expand.

Integration with DevOps: Embedding QA seamlessly into DevOps workflows for continuous improvement [7].

Conclusion

As we navigate through 2025, it’s clear that quality assurance is no longer just about finding bugs—it’s about delivering value at every stage of the product lifecycle. From proactive approaches like Shift-Left Testing to transformative technologies like AI-driven automation and predictive analytics, the future of quality assurance services is brimming with innovation.

For QA Managers, QA Leads, Marketing Managers, and Project Managers looking to stay ahead in this evolving landscape, embracing these trends is not optional—it’s essential. By adopting these cutting-edge practices and technologies today, businesses can ensure they remain competitive while meeting the ever-growing expectations of modern consumers.

#qualityassuranceservices#qualityassurancetesting#qualityassurancecompany#quality assurance services

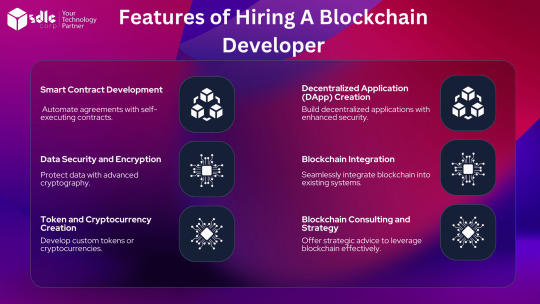

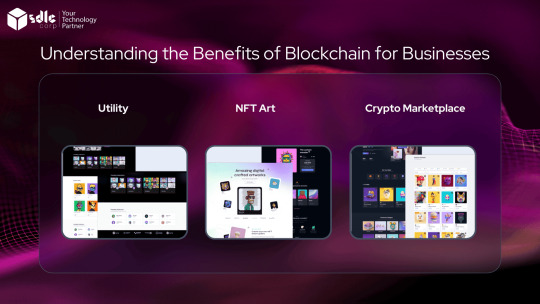

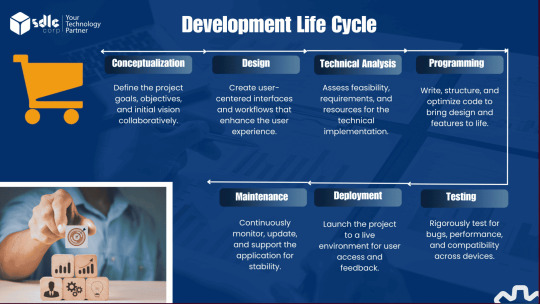

Hire A Blockchain Developer

SDLC Corp engaged a team of expert Blockchain Developers to create a decentralized application (dApp) leveraging blockchain technology, aimed at enhancing data security and transparency for the healthcare and finance sectors. The project involved developing smart contracts on the Ethereum blockchain, with the Blockchain Developers ensuring that transactions were secure, immutable, and could be seamlessly integrated with existing systems.

The solution was specifically designed to streamline data management, improve compliance with regulatory standards such as HIPAA and GDPR, and mitigate risks associated with centralized data storage. Through the innovative use of blockchain, Blockchain Developers enabled the secure sharing of sensitive information, building a transparent platform that empowered clients to enhance operational efficiency, reduce costs, and foster trust with stakeholders. By incorporating blockchain technology, SDLC Corp delivered a forward-thinking solution that positioned the client as a leader in adopting next-generation technologies.

Challenges:

SDLC Corp, a leading tech solutions provider, undertook the development of a cutting-edge blockchain-based project aimed at transforming data security for healthcare and finance sectors. The goal was to create a decentralized application (dApp) that enabled secure data sharing, ensuring transparency and immutability of transactions through smart contracts and blockchain technology. However, several challenges surfaced during the development phase.

The primary challenge was the complexity involved in selecting the right blockchain platform. Given the project’s scope, the team had to decide whether to build the solution on Ethereum, Solana, or another blockchain network, each offering different advantages and limitations in terms of scalability, transaction speed, and cost. Additionally, the team faced issues related to smart contract development, as the dApp needed to ensure the highest level of security and be resistant to potential vulnerabilities such as reentrancy attacks or exploits.
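The reentrancy risk mentioned above can be illustrated with a small simulation. The sketch below is plain Python, not Solidity and not the project's contract code; the `Vault` class, the account names, and the amounts are all hypothetical. It models a withdrawal that makes an external call before updating the caller's balance, and a variant that updates state first, an ordering commonly called checks-effects-interactions.

```python
class Vault:
    """Toy model of a contract that holds pooled funds for several accounts."""

    def __init__(self):
        self.balances = {}
        self.pool = 0  # total funds held by the vault

    def deposit(self, account, amount):
        self.balances[account] = self.balances.get(account, 0) + amount
        self.pool += amount

    def withdraw_vulnerable(self, account, on_receive):
        amount = self.balances.get(account, 0)
        if amount > 0 and self.pool >= amount:
            self.pool -= amount
            on_receive(amount)           # external call BEFORE the balance is cleared
            self.balances[account] = 0   # too late: the callee may have re-entered

    def withdraw_safe(self, account, on_receive):
        amount = self.balances.get(account, 0)
        if amount > 0 and self.pool >= amount:
            self.balances[account] = 0   # effects first
            self.pool -= amount
            on_receive(amount)           # interaction last


def run_attack(withdraw_name, max_calls=5):
    """Return (amount received by attacker, funds left in the vault)."""
    vault = Vault()
    vault.deposit("victim", 100)
    vault.deposit("attacker", 10)
    received = []

    def on_receive(amount):
        received.append(amount)
        if len(received) < max_calls:    # re-enter a bounded number of times
            getattr(vault, withdraw_name)("attacker", on_receive)

    getattr(vault, withdraw_name)("attacker", on_receive)
    return sum(received), vault.pool


print(run_attack("withdraw_vulnerable"))  # (50, 60): attacker drains 5x their deposit
print(run_attack("withdraw_safe"))        # (10, 100): attacker gets only their own 10
```

In the vulnerable variant the attacker's balance still reads 10 on every nested call, so each re-entry pays out again. Clearing the balance before the external call makes the second entry a no-op.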

The project’s requirements also included developing a seamless integration between the blockchain and traditional systems, such as healthcare data management software and financial transaction systems, all while complying with stringent regulatory standards such as HIPAA and GDPR. This presented both technical and legal challenges. Moreover, the team needed to ensure that the blockchain solution could handle a large number of concurrent transactions without compromising on performance or security.

Solution:

To overcome these challenges, SDLC Corp adopted a structured approach, leveraging blockchain expertise and advanced technologies to build a robust solution.

Platform Selection and Smart Contract Development: The team conducted an extensive evaluation of multiple blockchain platforms, ultimately selecting Ethereum for its strong ecosystem and security features. Solidity was used to develop the smart contracts, while development was carefully aligned with Ethereum's gas limits and transaction fees to optimize cost efficiency. The smart contracts were designed to automate key functions, such as authentication and data sharing, in a trustless, transparent manner.

Integration with Existing Systems: SDLC Corp’s team worked closely with healthcare and finance domain experts to ensure seamless integration between the blockchain-based solution and legacy systems. APIs and middleware were developed to bridge the gap between the decentralized network and centralized systems, ensuring smooth data flow and enabling secure, real-time transactions.

Security and Compliance: Given the critical nature of the data being handled, the development process included a robust security framework. The team implemented various cryptographic techniques and conducted thorough security audits of the smart contracts and overall infrastructure to prevent exploits. Furthermore, SDLC Corp ensured that the blockchain solution met all relevant regulatory requirements by working with legal and compliance experts throughout the development process.

Scalability and Performance Optimization: To address scalability concerns, the team leveraged Layer 2 solutions and off-chain storage where appropriate. This ensured that the blockchain network could handle high throughput and large-scale data processing without compromising performance or security.

#blockchain#blockchain developer#blockchain developement#blockchain development company#blockchain development services#blockchain app development#blockchain services

GitLab on Google Cloud for faster delivery and security

GitLab On Google Cloud

Modernise the way you deploy software with an integrated solution that improves speed, security, and scalability.

Product, development, and platform teams are always under pressure to produce cutting-edge software rapidly and at scale while lowering business risk in today’s fast-paced business climate. Nevertheless, fragmented toolchains for the software development lifecycle (SDLC) impede advancement. A handful of the difficulties that organisations have in contemporary development are as follows:

Disparate tools

Disconnected toolchains cause inefficient workflows and context switching.

Security concerns

Traditional authentication techniques such as service account keys introduce vulnerabilities.

Scalability problems

Maintaining scalable self-service deployment via Continuous Integration / Continuous Delivery (CI/CD) can become a significant challenge when enterprises take on an increasing number of projects.

According to the 2023 State of DevSecOps Report, improving developers’ daily workflow has a positive effect on cultural factors. To improve that daily experience, Google Cloud has worked with GitLab on an integrated solution that reimagines how businesses approach DevSecOps and speeds the delivery of applications from source code on GitLab to Google Cloud runtime environments.

GitLab on Google Cloud Integration

The Google Cloud – GitLab integration enhances the developer experience by simplifying tool management and helping developers stay in “flow.” It provides a holistic solution that improves software delivery, simplifies development, and increases security by reducing the context switching that comes with using multiple tools and user interfaces.

Google Cloud GitLab

The GitLab on Google Cloud integration uses workload identity federation for authentication and authorization of GitLab workloads on Google Cloud, without requiring service accounts or service account keys.

Refer to the GitLab tutorial Google Cloud IAM for instructions on configuring workload identity federation and the Identity and Access Management (IAM) roles required for the GitLab on Google Cloud integration.

GitLab components

To make Google Cloud tasks within GitLab pipelines simpler, the GitLab on Google Cloud integration uses GitLab components that are developed and maintained by Google. To use these components, you must first configure authentication and authorization from GitLab to Google Cloud by following the GitLab tutorial Google Cloud Workload Identity Federation and IAM policies.

Artefact management

Using the GitLab on Google Cloud integration, you can upload your GitLab artefacts to Artifact Registry and then quickly deploy them to Google Cloud runtimes. The artefacts can be viewed in GitLab or in Artifact Registry, and Google Cloud provides access to the metadata for each artefact.

Continuous integration and deployment

With the GitLab on Google Cloud integration, you can run your workloads on Google Cloud by configuring GitLab runner settings directly in your GitLab project using Terraform.

You can use the Cloud Deploy or Deploy to GKE components if you have already configured Workload Identity Federation for authentication and authorization to Google Cloud.

A cohesive strategy for DevSecOps

Imagine working on a single integrated platform where you can move easily from developing code to deploying it. The integration between GitLab and Google Cloud makes this a reality. By combining Google Cloud’s trustworthy infrastructure and services with GitLab’s source code management, CI/CD pipelines, and collaboration tools, Google Cloud created a unified environment that empowers developers and promotes innovation. Customers gain several advantages from this integration:

Reduced context switching

Developers don’t have to switch between GitLab and Google Cloud; they can remain in one tool.

Simple delivery

By making it easier for clients to set up their pipelines in GitLab and deliver containers to Google Cloud runtime environments, Google Cloud has reduced friction and complexity.

Adapted to suit business requirements

The Google Cloud – GitLab integration makes sure your DevSecOps pipelines can scale to match the demands of your expanding organisation by using Google Cloud’s infrastructure as the foundation.

To put it briefly, you can use Workload Identity Federation to securely integrate GitLab with Google Cloud, access your containers in both GitLab and Google Artifact Registry, and deploy to Google Cloud runtime environments using CI/CD components specifically designed for the task. Let’s look more closely.

Prioritising security

Because the security of your software is so important, Google Cloud included Workload Identity Federation (WLIF) in this integration. With this technology, static service account keys are no longer required: they are replaced by short-lived tokens, which drastically lowers the risk of compromise. Furthermore, Workload Identity Federation makes it easier to map identity and access management roles across GitLab and Google Cloud, simplifying management by centralising authentication through your current identity provider.
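To make the benefit of short-lived tokens concrete, here is a minimal sketch of how long a leaked credential stays usable. It does not call any Google Cloud or GitLab API; the `Credential` class and `exposure_window` function are hypothetical names invented for this illustration, and the one-hour lifetime is an assumed value.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass(frozen=True)
class Credential:
    """Hypothetical credential record, not a Google Cloud or GitLab type."""
    value: str
    issued_at: datetime
    lifetime: Optional[timedelta]  # None models a static key that never expires

    def is_valid(self, now: datetime) -> bool:
        # A static key is always accepted; a token only until it expires.
        if self.lifetime is None:
            return True
        return now < self.issued_at + self.lifetime


def exposure_window(cred: Credential, leaked_at: datetime) -> Optional[timedelta]:
    """Return how long a leaked credential remains usable (None = indefinitely)."""
    if cred.lifetime is None:
        return None
    remaining = cred.issued_at + cred.lifetime - leaked_at
    return max(remaining, timedelta(0))


issued = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
static_key = Credential("static-key", issued, None)
short_lived = Credential("token", issued, timedelta(hours=1))  # assumed lifetime
```

If both credentials leak 45 minutes after being issued, the static key stays usable until someone rotates it, while the token is only usable for the remaining 15 minutes.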

Coordinated management of artefacts

Thanks to this connection, you can view your containers directly in GitLab and manage them in Google Artifact Registry repositories. This lets you use security scanning and gives you complete traceability of your built artefacts from GitLab to Google Cloud, all while staying within GitLab’s developer workflow.

Pipelines that can be configured

Google Cloud has also released a set of CI/CD components as part of this integration to make pipeline building repeatable, easy to configure, and straightforward. These Google Cloud-managed components were built with deployment to Google Cloud runtime environments in mind. Five components are already available, including ones that deploy an image to Google Kubernetes Engine, manage pipeline delivery with Cloud Deploy, and publish an image to Google Artifact Registry. Google Cloud’s preliminary benchmarking suggests that these components are smaller and run faster in GitLab CI pipelines than the Google CLI.

Next steps

Are you ready to improve your DevSecOps process? If you don’t already have GitLab, get a free trial from the GitLab Web Store or buy it from the Google Cloud Marketplace. If you already have a GitLab account, you can set up the integration right away. You can also get in touch with the team to talk about this integration or to take part in customer experience research.

Read more on Govindhtech.com

#gitlab#GoogleCloud#vulnerabilities#GKEcomponents#GoogleKubernetesEngine#DevSecOpsprocess#news#technews#technology#technologynews#technologytrends#govindhtech


Digital Quality Engineering: Ensuring Excellence in Software Development

In today’s rapidly evolving digital landscape, the demand for high-quality software has never been greater. With the increasing complexity of applications and the need for quick deployment, organizations are turning to digital quality engineering (DQE) as a solution. DQE focuses on ensuring the excellence of software through a comprehensive and integrated approach. This article will explore the concept of DQE, its key principles, and how organizations can implement it to guarantee the delivery of top-notch software.

What is Digital Quality Engineering?

Digital quality engineering, also known as QE in the digital age, is an approach that aims to integrate quality assurance throughout the software development lifecycle (SDLC). It goes beyond the traditional quality assurance (QA) process by providing a holistic view of software quality, focusing on continuous integration, continuous delivery, and continuous testing. DQE is an integral part of DevOps and Agile methodologies, aiming to deliver high-quality software at a rapid pace.

Principles of Digital Quality Engineering

1. Shift left approach: DQE emphasizes early involvement of quality engineers by adopting the “shift left” approach. This involves integrating quality practices from the very beginning of the SDLC, enabling early detection and prevention of defects. By catching issues early on, organizations can reduce the cost and effort required for bug fixing later in the development process.

2. Automation: Automation plays a crucial role in DQE. Manual testing alone cannot keep up with the speed and complexity of modern software development. By automating repetitive and time-consuming tasks, such as regression testing and deployment, organizations can achieve faster feedback loops and significantly reduce the time to market. Popular automation tools like Selenium, Appium, and Jenkins can be used to streamline the testing process.

3. Continuous testing: DQE promotes a culture of continuous testing, where testing activities are integrated seamlessly into the development process. This includes automated unit testing, integration testing, and user acceptance testing. Continuous testing ensures that the software meets the desired quality standards at every stage of development, allowing for early detection and resolution of issues.

4. Performance and security testing: Digital quality engineering recognizes the importance of performance and security testing. With applications becoming more complex and handling sensitive user data, it is crucial to ensure their performance under expected workloads and their ability to withstand security vulnerabilities. By incorporating performance and security testing into the SDLC, organizations can proactively identify and address potential bottlenecks and vulnerabilities.
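As a small illustration of the automation and continuous testing principles above, the sketch below shows the kind of automated unit test a CI job could run on every commit. The function `apply_discount` and its business rules are hypothetical, invented for this example; only Python's standard `unittest` module is used.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test: reduce a price by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    def test_typical_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_rejects_invalid_percent(self):
        # Catching bad input here is the "shift left" idea in miniature.
        with self.assertRaises(ValueError):
            apply_discount(200.0, 120)


def run_suite() -> bool:
    """Run the tests programmatically, as a pipeline step might."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTest)
    result = unittest.TextTestRunner(verbosity=0).run(suite)
    return result.wasSuccessful()
```

A pipeline would typically fail the build when `run_suite()` returns `False`, so a defect is reported minutes after the commit rather than late in the cycle.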

Implementation of Digital Quality Engineering

To implement DQE effectively, organizations should consider the following practices:

1. Collaboration: Foster collaboration between development, testing, and operations teams. Emphasize communication and openness to ensure a shared understanding of quality goals and objectives.

2. Continuous integration and delivery: Build a robust CI/CD pipeline, enabling frequent, automated deployments. This allows for faster feedback cycles and facilitates continuous testing.

3. Test infrastructure as code: Treat test infrastructure as code, utilizing infrastructure automation tools like Terraform or Ansible. This ensures consistency, repeatability, and scalability of the test environment.

4. Adoption of testing frameworks: Leverage popular testing frameworks, such as JUnit, Cucumber, or TestNG, to standardize and streamline the testing process. These frameworks provide a common language for different teams involved in the development process.

5. Monitoring and analytics: Utilize monitoring and analytics tools to gain insights into the real-time performance and user experience of the software. This allows for proactive identification and resolution of any performance or quality issues.
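The monitoring practice above can be sketched with a simple latency check. This is only an illustration under stated assumptions: the sample values, the 300 ms budget, and the function names `percentile` and `breaches_budget` are all made up for the example, and the percentile uses the nearest-rank method rather than any particular monitoring tool's definition.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], pct: int) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of values at or below it."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def breaches_budget(samples: Sequence[float], budget_ms: float, pct: int = 95) -> bool:
    """Return True when the chosen percentile exceeds the latency budget."""
    return percentile(samples, pct) > budget_ms


# Hypothetical response times in milliseconds, with one slow outlier.
latencies_ms = [120, 95, 110, 480, 105, 99, 130, 101, 97, 115]
```

With these samples the median is 105 ms, but the 95th percentile is 480 ms, so a 300 ms budget is breached even though most requests are fast. That is the kind of signal an average alone would hide.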

Digital quality engineering is a critical aspect of software development in the digital age. By integrating quality practices early in the SDLC, adopting automation and continuous testing, and prioritizing performance and security, organizations can ensure the delivery of high-quality software at a rapid pace. Implementing DQE through collaboration, continuous integration and delivery, infrastructure automation, testing frameworks, and monitoring and analytics provides organizations with a comprehensive approach to software quality assurance. Embracing DQE can enable organizations to stay competitive in the digital landscape by delivering software that not only meets but exceeds customer expectations.


Understanding the Software Development Life Cycle (SDLC)

The software development life cycle (SDLC) is the roadmap for creating high-quality software. It's a structured process that takes a concept from initial planning all the way to deployment and ongoing maintenance. By following a defined SDLC, development teams can minimize risks, ensure software meets user needs, and deliver projects on time and within budget.

Why is the SDLC Important?

The SDLC offers several advantages over ad-hoc development approaches. Here are some key benefits:

Reduced Risks: A structured SDLC helps identify and mitigate potential issues early in the development process. This can save time and money compared to fixing problems later in the cycle.

Improved Quality: The SDLC emphasizes clear requirements gathering, design, and testing phases. This leads to software that is more functional, reliable, and user-friendly.

Enhanced Efficiency: By breaking down the development process into manageable phases, teams can work more efficiently and collaboratively.

Clear Communication: The SDLC fosters clear communication between stakeholders, including developers, clients, and end-users. This ensures everyone is on the same page and working towards the same goals.

Predictable Delivery: The SDLC helps teams define timelines and milestones, leading to more predictable project delivery schedules.

Common Phases of the SDLC

While specific SDLC models may vary, most follow a similar core set of phases:

Planning and Requirement Gathering: This initial phase involves defining the project goals, identifying target users, and outlining the software's functionalities.

System Design: Based on the gathered requirements, this phase focuses on designing the software's architecture, user interface (UI), and data flow.

Development: Here, programmers write code based on the approved design documents.

Testing: The software undergoes rigorous testing to identify and fix bugs and ensure it meets all functional and non-functional requirements.

Deployment: The finished software is released to the end-users, whether through a traditional installation or a cloud-based deployment.

Maintenance: Software requires ongoing maintenance to fix bugs, address security vulnerabilities, and implement new features as needed.
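One simple way to picture these phases is as an ordered sequence. The sketch below is an illustration only: the `Phase` enum and the `next_phase` helper are hypothetical names, and real SDLC models often loop back or overlap phases rather than moving strictly forward.

```python
from enum import Enum
from typing import Optional


class Phase(Enum):
    PLANNING = 1
    DESIGN = 2
    DEVELOPMENT = 3
    TESTING = 4
    DEPLOYMENT = 5
    MAINTENANCE = 6


def next_phase(current: Phase) -> Optional[Phase]:
    """Return the phase that follows `current`, or None after the last one."""
    members = list(Phase)  # Enum members iterate in definition order
    index = members.index(current)
    return members[index + 1] if index + 1 < len(members) else None
```

A strictly sequential process would call `next_phase` only once the current phase is signed off, while an iterative process would run through the same sequence once per short cycle.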

Popular SDLC Models

There are different SDLC models that cater to specific project needs and development methodologies. Here are a few of the most common:

Waterfall Model: This traditional linear model follows a rigid sequence of phases, where each phase must be completed before moving to the next.

Agile Model: This iterative and incremental approach focuses on delivering working software in short cycles, with continuous feedback and adaptation throughout the development process.

Spiral Model: This risk-driven model combines elements of the waterfall and agile approaches, with a focus on early risk identification and iterative development cycles.

How Prometteur Can Help You

At Prometteur, we have a team of experienced software developers who are well-versed in various SDLC methodologies. We understand the importance of choosing the right SDLC model for your project and can help you implement it effectively.

We offer a range of services to streamline your software development process, including:

Requirements gathering and analysis

System design and architecture

Agile development and project management

Comprehensive software testing

Deployment and maintenance support

By partnering with Prometteur, you can ensure your software development projects are delivered on time, within budget, and meet the highest quality standards.

Contact us today to learn more about how we can help you navigate the SDLC and achieve your software development goals.


Secure the Design for Low Cost Security Control Implementation

#secure sdlc#ssdlc#how to secure the design with low cost control#why do we need secure design#threat model#hack2secure secure design#secure design architecture#difference between traditional sdlc and secure sdlc#practices for secure design


Metabase vs Looker

Power BI provides numerous data points for visualization. It also identifies the resource automatically. Power BI cannot connect to Hadoop databases, whereas it enables data extraction from Azure, Salesforce, and Google Analytics. Tableau allows accessing data in the cloud and connecting to Hadoop databases.

Below are the most important key differences between Power BI and Tableau.

Data Access

Power BI and Tableau differ mainly, from a visualization standpoint, in their ability to extract data from different servers.

Key Differences Between Power BI and Tableau

Below is a list of points describing the differences between Power BI and Tableau:

With PopSQL, users can easily understand their data model, write version controlled SQL, collaborate with live presence, visualize data in charts and dashboards, schedule reports, share results, and organize foundational queries for search and discovery.

Even if your team is already leveraging a large BI tool, like Tableau or Looker, or a hodge podge of SQL editors, PopSQL enables seamless collaboration between your SQL power users, junior analysts, and even your less technical stakeholders who are hungry for data insights.

Head to Head Comparison: Power BI vs Tableau (Infographics)

Below are the top 7 comparisons between Power BI and Tableau:

We provide a beautiful, modern SQL editor for data focused teams looking to save time, improve data accuracy, onboard new hires faster, and deliver insights to the business fast. PopSQL is the evolution of legacy SQL editors like DataGrip, DBeaver, Postico.

Connect and transform datasets quickly and at scale, building robust data pipelines that help drive deeper insights. Stay in control with SAML-based SSO and security and privacy controls built into every layer of Domo’s platform, along with compliance certifications including SOC2, HIPAA, and GDPR. Build and transform massive datasets, implement scalable governance and security that grow with your organization and use enterprise applications to streamline important processes. Domo is built to deliver the speed and flexibility that helps businesses achieve enterprise scale. Make analytics accessible with interactive, customizable dashboards that tell a story with real-time data. With Domo’s fully integrated cloud-native platform, critical business processes can now be optimized in days instead of months or more. Domo’s low-code data app platform goes beyond traditional business intelligence and analytics to enable anyone to create data apps to power any action in their business, right where work gets done. Come see what makes us the perfect choice for SaaS providers.

Domo transforms business by putting data to work for everyone. Everything from pricing and licensing, to SDLC compliance and support make it easy to grow with Qrvey as your applications grow. Qrvey’s entire business model is optimized for the unique needs of SaaS providers. The Qrvey team has decades of experience in the analytics industry. And, since Qrvey deploys into your AWS account, you’re always in complete control of your data and infrastructure. We feature a modern architecture that’s 100% cloud-native and serverless using the power of AWS microservices. We’re the only all-in-one solution that unifies data collection, transformation, visualization, analysis and automation in a single platform. Product managers choose Qrvey because we’re built for the way they build software. It dramatically speeds up deployment time, getting powerful analytics applications into the hands of your users as fast as possible, by reducing cost and complexity. Qrvey is the embedded analytics platform built for SaaS providers. Manage your BI resources and data across all your customers from a single environment. Offer every end-user (from code-first to code-free) the ability to create custom ad hoc reports and interactive dashboards.

Enhance your SaaS-based business applications with a BI platform that natively supports multi-tenancy. Leverage Wyn's powerful and fast reporting engine to develop complex BI reports.

Analyze data and deliver actionable information with interactive dashboards, multi-dimensional dynamic analysis, and intelligent drilling.

Drag-and-drop controls enable non-technical end-users to visualize, analyze, and distribute permitted data easily.

Eliminate your dependence on the IT departments and data analysts. Integrate dashboards into your business applications with API and iFrame capabilities. Seamlessly embed analytics within your own applications. Designed for self-service BI, Wyn offers limitless visual data exploration, allowing the everyday user to become data-driven. Wyn is a seamless embedded business intelligence platform.


The Best Codeless Test Automation Tools In Consumer's Market -2021

Do you know what the next big thing is that’s going to transform the testing process in the SDLC? I’m talking about codeless automation testing. With the help of Agile and DevOps, most organizations can save time by adopting a low-code or no-code approach and meet their software demands faster.

Codeless automation testing helps global enterprises deliver software more easily by maximizing reuse and minimizing manual coding. By switching to codeless automated testing, teams can focus more on high-level work that adds business value. Meanwhile, the testing tools market has matured enough to streamline the software development process.

If you’re looking for a codeless test automation tool that addresses coding challenges and minimizes script writing, try Sun Technologies’ IntelliSWAUT scriptless test automation tool to get high-quality automation results quickly.

Read our case study to learn how IntelliSWAUT improved the performance of a global gaming application.

What is Codeless Test Automation?

Codeless automation testing is a process that creates automated tests without writing a single line of code. It lets teams automate the writing of test scripts, which relieves developers and testers from intensive coding. Codeless testing is well suited to being embedded in your testing process. However, there is still a massive gap in implementing test automation when it comes to continuous testing.

Differences Between Low-code Vs No-code

Industry leaders often refer to tools and frameworks that require no code as scriptless automation testing. In this context, no-code and low-code are used to mean the same thing. The advantage of these test automation tools is that they allow users to create and execute tests without any coding experience.

Working of Codeless Testing

Testers don’t need to know how to code in the codeless automation process, but they do need to use tools that generate proper test scripts. Codeless automation uses ML (machine learning) and AI (artificial intelligence) to self-heal.

Generally, the codeless automation is carried out in two ways:

1. Record and play

2. Selecting the action items, creating the flow, and running the test
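Both approaches boil down to describing a test as data (a list of actions) that a tool then executes. Here is a minimal sketch of that idea, assuming a toy application: `FakeLoginPage`, the action names, and `run_flow` are all hypothetical and do not represent how any of the tools below work internally.

```python
from typing import List, Tuple

# A step is (action, target, value); a recorder or a flow editor would produce these.
Step = Tuple[str, str, str]


class FakeLoginPage:
    """Stand-in for an application under test, purely illustrative."""

    def __init__(self) -> None:
        self.fields = {}
        self.message = ""

    def type_text(self, field: str, text: str) -> None:
        self.fields[field] = text

    def click(self, button: str) -> None:
        if button == "login":
            ok = (self.fields.get("user") == "alice"
                  and self.fields.get("password") == "s3cret")
            self.message = "Welcome" if ok else "Invalid credentials"


def run_flow(page: FakeLoginPage, steps: List[Step]) -> List[str]:
    """Replay the steps against the page and return a list of failures."""
    failures = []
    for action, target, value in steps:
        if action == "type":
            page.type_text(target, value)
        elif action == "click":
            page.click(target)
        elif action == "expect_message":
            if page.message != value:
                failures.append(f"expected {value!r}, got {page.message!r}")
        else:
            failures.append(f"unknown action {action!r}")
    return failures


recorded_flow: List[Step] = [
    ("type", "user", "alice"),
    ("type", "password", "s3cret"),
    ("click", "login", ""),
    ("expect_message", "", "Welcome"),
]
```

The tester only edits `recorded_flow`; the runner stays the same, which is why no programming background is needed to add or change a test.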

Benefits of Codeless Automation

· No need to manually create tests

· Codeless test automation is more cost-effective when compared with traditional automation tools

· You can test quickly

· Easily generate testing reports

· It effortlessly tracks bugs in the process

· Manage all the complex tests

· Programming background is not necessary

· Codeless automation integrates with CI tools

Best Automation Testing Tools

IntelliSWAUT – IntelliSWAUT is an AI-powered, all-in-one scriptless test automation tool built with a lightweight approach. It addresses coding challenges, script maintenance, and the execution and analysis of results. It is an in-house tool developed by Sun Technologies that is both user-friendly and intelligent. By upgrading to this scriptless test automation tool, you can quickly create test automation scripts without any coding knowledge. As mentioned, it’s an all-in-one tool that covers the software development cycle from test generation to test reporting.

Kobiton – Kobiton is an open-source script-based tool that automates functional, visual, performance, and compatibility tests. It ensures quality and delivers at DevOps speed. Key features of Kobiton include automated crash detection, visual validation, CI/CD integration, reporting, and on-premises set-ups.

TestProject – TestProject is a cloud-based test automation platform that enables users to test apps on Android, iOS, and the web. Teams can collaborate easily using Selenium and Appium while ensuring quality and speed. TestProject provides detailed reports and dashboards, with no need for coding skills or complex configurations.

Ranorex – Ranorex is an all-in-one test automation tool that comes with a codeless click-and-go user interface. It supports end-to-end testing on desktop, web, and mobile. This tool integrates with Git, Jenkins, Bamboo, NeoLoad, and more.

Subject7 – Subject7 is a no-code platform that supports end-to-end automation for web, mobile, desktop, database, load, security, and more. It is an interface that enables non-coders to run test flows efficiently with minimal training. Subject7 supports flexible reporting, parallel execution and integrates with DevOps tools.

Conclusion

In today’s software industry, adopting automated testing tools is essential for DevOps teams to keep up with the high demands of the current market. Codeless test automation executes automated test scenarios without the need for coding. If you want to save developers’ time and increase their productivity, try IntelliSWAUT to minimize the manual writing of test scripts, which benefits both testers and DevOps teams.

Contact us today for the best scriptless test automation solution.


Digital Transformation with Continuous Testing

With enterprises increasingly leaning towards agile methodologies and DevOps integration, a smart testing strategy has become critical. Businesses are transforming digitally, and they need a robust, foolproof test strategy that ensures optimal efficiency and reduces software failures. Companies no longer have the luxury of time that they had with the traditional SDLC. In such scenarios, continuous testing emerges as the only answer.

Continuous testing mainly refers to uninterrupted testing at each phase of the software development life cycle. A comprehensive study from Forrester and DORA (DevOps Research and Assessment) shows that continuous testing is significant for the success of DevOps and other digital transformation initiatives. However, adoption of continuous testing remains low, even among test teams that have already started practicing DevOps. The select enterprises that have adopted it have already attained remarkable results in accelerating innovation while reducing business risk and improving cost efficiency.

Why Continuous Testing Strategy?

DevOps is mainly a philosophy and process that guides software testers, developers, and IT operations to produce more software releases at higher speed and with better outcomes. Continuous delivery, in turn, is the method that puts DevOps principles into practice.

Continuous delivery is about automating tasks to reduce manual effort in the process of constantly integrating software. This speeds up the push of tested software into production. Expert QA teams can make improvements along the way based on continuous automated feedback about what truly works and what needs to be done better.

The shift to experimentation is an essential part of creating an agile landscape for digital transformation. IT companies can launch working software by adding functionality to existing systems and then work through a prioritized list of glitches to improve and correct it. With tight feedback loops, they can even deal with critical issues in the shortest possible time.

The continuous delivery model also gives IT companies the agility and production readiness they require to respond promptly to market changes. It makes your end products more stable and your teams more productive, and it increases your flexibility to support fast, continuous innovation. Additionally, the improved responsiveness of this model lets you drive faster growth, help the business expand into new areas, and compete in the digital age.

Four Sub-dimensions of Continuous Deployment

Using SAFe for Continuous Delivery:

Deploy to production – Covers the skills required to deploy a solution to a production environment

Verify the solution – Encompasses the skills required to confirm the changes operate in production as planned before they are released to customers

Monitor for problems – Covers the skills to check and report on solutions

Respond and recover – Includes the skills to rapidly tackle any issues that happen during deployment
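The four sub-dimensions can be read as steps in a release routine. The sketch below is a simplified illustration, not SAFe guidance: the `release` function and its four callables are hypothetical, and a real pipeline would monitor continuously rather than check once.

```python
from typing import Callable, List


def release(
    deploy: Callable[[], None],
    verify: Callable[[], bool],
    monitor: Callable[[], bool],
    rollback: Callable[[], None],
) -> List[str]:
    """Deploy, verify, monitor, and recover if either check fails."""
    log = []

    deploy()  # 1. Deploy to production
    log.append("deployed")

    if not verify():  # 2. Verify the solution before exposing it to customers
        rollback()  # 4. Respond and recover
        log.append("verification failed: rolled back")
        return log
    log.append("verified")

    if not monitor():  # 3. Monitor for problems
        rollback()  # 4. Respond and recover
        log.append("monitoring alert: rolled back")
        return log
    log.append("healthy")
    return log
```

Passing the steps in as functions keeps the routine independent of any particular deployment tool, so the same flow can wrap a script, a CI job, or a manual checklist.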

Agile and DevOps Digital Transformation- The Three Ways

With the sprawling foothold of Agile and DevOps, backed by continuous testing, companies can follow ‘The Three Ways’ explained in The Phoenix Project to optimize business processes and increase profitability. These ways stand true for both the businesses and IT processes.

The 3 ways guiding the ‘Agile and DevOps’ digital transformation are:

Work should flow in one direction- downstream

Create, shorten, and amplify feedback loops

Continuous experimentation, to learn from mistakes and attain mastery

These three ways help impart direct client value by integrating the best quality in products while managing efficiency and speed.

DevOps is Key to enable Continuous Delivery

Continuous delivery perfectly suits companies that are well-equipped with a collaborative DevOps culture. In a continuous paradigm, you can’t afford silos and handoffs between operation and development teams. If an organization does not embrace a DevOps-based culture, it will face complexity in building IT and development environments that are needed to compete in the digital era.

This is a concern when professionals need to focus on relentless improvement in technology, and in many cases it calls for an important shift in mindset. For example, the aim of continuous delivery is to speed up releases and improve software quality, and achieving it requires a smooth shift-left of activities such as security and continuous testing. This in turn calls for cross-functional, proactive communication at every level of the organization to bridge the gaps that have traditionally existed between teams.

Instituting such a culture will also encourage autonomous teams. Development and operations teams need to be empowered to make their own decisions without having to go through complicated decision-making processes.

Industry Insights

Digital transformation, Agile, and DevOps will continue to thrive and become the norm. It is time companies took digital transformation seriously. Irrespective of the tools and methodologies used, digital transformation efforts will fail without a continuous testing strategy.

Digital transformation, Agile, and DevOps are together building a future where innovation is at the heart of all the processes. But this picture needs continuous testing to become complete. Integrating Continuous Testing with the existing system will enable organizations to proactively respond to glitches and instigate stability in their products.

ImpactQA experts with years of experience in Continuous Integration can configure and run popular tools like Jenkins, Bamboo, Microsoft TFS, and more.

LinkedIn: https://www.linkedin.com/company/impactqa-it-services-pvt-ltd/

Twitter: https://twitter.com/Impact_QA

Facebook: https://www.facebook.com/ImpactQA/

Instagram: https://www.instagram.com/impactqa/

P.S. We are always happy to read your comments and thoughts ;)

#ImpactQA#continuous delivery#continuous testing#digital transformation#new edge test#technology#agile#devops testing


Why DevOps and QA belong together?

The consumers of today are a smarter lot who want the best quality software with cutting-edge features and functionalities. These changing dynamics of demand and preference for quality products and services have led to a churn in which staying competitive hinges on quick market reach and superior software quality. This churn has led to the advent of the DevOps process of developing software applications. DevOps is an enhancement of the Agile model of software development in which development and testing take place simultaneously with the active involvement of other departments, especially operations. To address rapidly changing customer preferences and competition, enterprises are adopting the DevOps model. By doing so, they respond better to the market need for delivering quality products at a quicker pace.

DevOps is also about detecting and preventing glitches in any software application and ensuring all future releases of the application remain glitch-free as well. In other words, DevOps leads to the delivery of better-quality software (and all future releases) in the quickest possible time. DevOps QA does not stop at delivering the software application to the customer; it also ensures that the application continues to work at its optimum level. In a competitive business landscape where customers have plenty of options to choose from, glitch-free software applications help enterprises uphold their brand equity and stay competitive.

DevOps and its outcomes

An extension of Agile, DevOps ensures continuous delivery and improvement of software applications in the SDLC. It leads to the following outcomes:

· Seamless and transparent collaboration between departments, especially Development and Operations (the two words that give DevOps its name).

· Reduced transaction cost through a streamlined software development and delivery pipeline.

· Quick release of quality software applications and consistent delivery of periodic upgrades to meet market demand.

· A stable operating environment.

· Early detection (and prevention) of glitches with DevOps test automation.

Is DevOps queering the pitch for QA professionals?

The DevOps outcomes of continuous improvement and delivery have necessitated creating an automated pipeline where the existence of separate QA departments is called into question. However, this is far from true, for DevOps testing services have become even more important albeit operating with different mindset and objectives. The need to establish a test-driven development environment with continuous testing at its core has rendered DevOps specialists irreplaceable in the scheme of things.

Traditionally, development, QA, and operations worked in their respective silos with different deliverables and objectives. For example, the development team is all about building new software, adding new features, and providing periodic upgrades based on market demand or customer preferences. Similarly, the operations team is about delivering stability and responsiveness to customer services. The key department that joins these two teams is Quality Assurance and Testing. It ensures that the software application delivered to the end customer contains no glitches or bugs and that all its features run seamlessly. DevOps, however, entails integrating all of these teams or departments into one entity and calls for a common DevOps QA procedure in the SDLC. Thus, in the DevOps-led software development pipeline, the role of quality assurance has not diminished but has become more prominent.

Why QA and DevOps are bound together?

Since DevOps is all about achieving continuous improvement and delivery, DevOps software testing becomes an integral component of the whole approach. Therefore, once a software code is written in a sprint, it is simultaneously tested for glitches based on a number of parameters. The DevOps specialists incorporate QA in the sprint and make everyone responsible to achieve a single goal of delivering products or services that offer the best user experience. The notion that in a DevOps setup QA may take a backseat is wholly misplaced. On the contrary, DevOps QA moves into a more strategic space of creating a robust test infrastructure.

The success of DevOps in collaboration with QA will depend on the following factors -

· Executing DevOps test automation in the entire SDLC

· Executing continuous testing

· Adoption of Lean and Agile processes

· Adoption of test virtualization, optimization, and standardization

· Incorporating quality engineering

Contrary to the traditional approach where QA comes toward the end of a delivery cycle, QA comes at every level in DevOps. In the development sprint, the QA team receives the code, tests it, and passes it on to the production pipeline (provided there are no glitches). The DevOps model ensures the incorporation of QA tools and infrastructure to help release the software application with consistent quality.

Moreover, in the present competitive environment where there is a need to release (and update) web or mobile applications quickly, DevOps quality assurance plays a significant role. In the DevOps approach, quality testing is done in real-time instead of testing the code offline. With quality engineering stepping into the whole process, the test infrastructure checks for the performance of every component of an application before its eventual release.

Another dimension of executing QA in today’s software development lifecycle is ensuring security. With threats from malware, trojans, and viruses becoming more pronounced and cybercrime a distinct reality, any software application worth its salt should undergo security testing. Since an application can remain vulnerable to security threats, it becomes everyone’s responsibility to ensure proper safeguards are maintained. For example, while the development and QA teams remove vulnerabilities from the code, the operations team should make sure that incidents like unauthorized log-ins and password sharing are nipped in the bud. Thus, the SDLC should incorporate DevSecOps, where security becomes a collaborative responsibility.

Conclusion

DevOps without QA would be an unmitigated disaster, as the whole approach of delivering a quality software application in the quickest possible time would be defeated. QA specialists should use appropriate tools to ensure the released application is free of glitches and delivers a great user experience.

Diya works for Cigniti Technologies, Global Leaders in Independent Quality Engineering & Software Testing Services to be appraised at CMMI-SVC v1.3, Maturity Level 5, and is also ISO 9001:2015 & ISO 27001:2013 certified.


What is the MEAN stack? JavaScript web applications

The MEAN stack is a software stack—that is, a set of the technology layers that make up a modern application—that’s built entirely in JavaScript. MEAN represents the arrival of JavaScript as a “full-stack development” language, running everything in an application from front end to back end. Each of the initials in MEAN stands for a component in the stack:

MongoDB: A database server that is queried using JSON (JavaScript Object Notation) and that stores data structures in a binary JSON format

Express: A server-side JavaScript framework

Angular: A client-side JavaScript framework

Node.js: A JavaScript runtime

A big part of MEAN’s appeal is the consistency that comes from the fact that it’s JavaScript through and through. Life is simpler for developers because every component of the application—from the objects in the database to the client-side code—is written in the same language.

This consistency stands in contrast to the hodgepodge of LAMP, the longtime staple of web application developers. Like MEAN, LAMP is an acronym for the components used in the stack—Linux, the Apache HTTP Server, MySQL, and either PHP, Perl, or Python. Each piece of the stack has little in common with any other piece.

This isn’t to say the LAMP stack is inferior. It’s still widely used, and each element in the stack still benefits from an active development community. But the conceptual consistency that MEAN provides is a boon. If you use the same language, and many of the same language concepts, at all levels of the stack, it becomes easier for a developer to master the whole stack at once.

Most MEAN stacks feature all four of the components—the database, the front end, the back end, and the execution engine. This doesn’t mean the stack consists of only these elements, but they form the core.

MongoDB

Like other NoSQL database systems, MongoDB uses a schema-less design. Data is stored and retrieved as JSON-formatted documents, which can have any number of nested fields. This flexibility makes MongoDB well-suited to rapid application development when dealing with fast-changing requirements.

Using MongoDB comes with a number of caveats. For one, MongoDB has a reputation for being insecure by default. If you deploy it in a production environment, you must take steps to secure it. And if you’re a developer coming from relational databases, or even other NoSQL systems, you’ll need to spend some time getting to know MongoDB and how it works. InfoWorld’s Martin Heller dove deep into MongoDB 4 in his review, where he talks about MongoDB internals, queries, and drawbacks.

As with any other database solution, you’ll need middleware of some kind to communicate between MongoDB and the JavaScript components. One common choice for the MEAN stack is Mongoose. Mongoose not only provides connectivity, but object modeling, app-side validation, and a number of other functions that you don’t want to be bothered with reinventing for each new project.
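To make the idea of app-side validation concrete, here is a minimal TypeScript sketch that checks a schema-less, JSON-style document before it would be written to the database. It does not use Mongoose or its API. The `UserDoc` shape, the `validateUser` helper, and the field names are all hypothetical and exist only for illustration.

```typescript
// Hypothetical document shape. In a schema-less store, nothing in the
// database itself enforces these fields, so the app has to check them.
interface UserDoc {
  name: string;
  email: string;
  address?: { city: string; zip?: string }; // nested and optional
}

// Returns a list of validation problems; an empty list means the
// candidate document has the shape the app expects.
function validateUser(candidate: unknown): string[] {
  if (typeof candidate !== "object" || candidate === null) {
    return ["document must be an object"];
  }
  const doc = candidate as Record<string, unknown>;
  const errors: string[] = [];

  const userName = doc["name"];
  if (typeof userName !== "string" || userName.length === 0) {
    errors.push("name is required");
  }

  const email = doc["email"];
  if (typeof email !== "string" || email.indexOf("@") < 1) {
    errors.push("email must contain '@'");
  }

  const address = doc["address"];
  if (address !== undefined) {
    if (typeof address !== "object" || address === null) {
      errors.push("address must be an object");
    } else {
      const city = (address as Record<string, unknown>)["city"];
      if (typeof city !== "string") {
        errors.push("address.city must be a string");
      }
    }
  }
  return errors;
}

const good: UserDoc = { name: "Ada", email: "ada@example.com", address: { city: "London" } };
const bad = { name: "", email: "not-an-email", address: { zip: "12345" } };

console.log(validateUser(good)); // no problems reported
console.log(validateUser(bad));  // one problem per broken field
```

A library like Mongoose would let you declare such rules once per model instead of hand-writing a function per document type, which is the kind of repeated work the paragraph above says you don’t want to redo for each project.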

Express.js

Express is arguably the most widely used web application framework for Node.js. Express provides only a small set of essential features—it’s essentially a minimal, programmable web server—but can be extended via plug-ins. This no-frills design helps keep Express lightweight and performant.

Nothing says a MEAN app has to be served directly to users via Express, although that’s certainly a common scenario. An alternative architecture is to deploy another web server, like Nginx or Apache, in front of Express as a reverse proxy. This allows for functions like load balancing to be offloaded to a separate resource.

Because Express is deliberately minimal, it doesn’t have much conceptual overhead associated with it. The tutorials at Expressjs.com can take you from a quick overview of the basics to connecting databases and beyond.

Angular

Angular (the successor to AngularJS) is used to build the front end for a MEAN application. Angular uses the browser’s JavaScript to format server-provided data in HTML templates, so that much of the work of rendering a web page can be offloaded to the client. Many single-page web apps are built using Angular on the front end.

One important caveat: Developers work with Angular by writing in TypeScript, a JavaScript-like typed language that compiles to JavaScript. For some people this is a violation of one of the cardinal concepts of the MEAN stack—that JavaScript is used everywhere and exclusively. However, TypeScript is a close cousin to JavaScript, so the transition between the two isn’t as jarring as it might be with other languages.

For a deep dive into Angular, InfoWorld’s Martin Heller has you covered. In his Angular tutorial he’ll walk you through the creation of a modern, Angular web app.

Node.js

Last, but hardly least, there’s Node.js—the JavaScript runtime that powers the server side of the MEAN web application. Node is based on Google’s V8 JavaScript engine, the same JavaScript engine that runs in the Chrome web browser. Node is cross-platform, runs on both servers and clients, and has certain performance advantages over traditional web servers such as Apache.

Node.js takes a different approach to serving web requests than traditional web servers. In the traditional approach, the server spawns a new thread of execution or even forks a new process to handle the request. Spawning threads is more efficient than forking processes, but both involve a good deal of overhead. A large number of threads can cause a heavily loaded system to spend precious cycles on thread scheduling and context switching, adding latency and imposing limits on scalability and throughput.

Node.js is far more efficient. Node runs a single-threaded event loop registered with the system to handle connections, and each new connection causes a JavaScript callback function to fire. The callback function can handle requests with non-blocking I/O calls and, if necessary, can spawn threads from a pool to execute blocking or CPU-intensive operations and to load-balance across CPU cores.
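The event-loop behavior described above can be illustrated with a small TypeScript sketch. It is only a simulation: `fakeIo` is a hypothetical helper that uses a timer to stand in for a non-blocking I/O call such as a database query, and the labels and delays are made up.

```typescript
// Simulates a non-blocking I/O call with a timer. The promise resolves
// when the event loop fires the timer callback.
function fakeIo(label: string, delayMs: number, log: string[]): Promise<void> {
  return new Promise<void>((resolve) => {
    setTimeout(() => {
      log.push(label + " done"); // callback run by the event loop
      resolve();
    }, delayMs);
  });
}

async function handleThreeRequests(): Promise<string[]> {
  const log: string[] = [];
  // All three "requests" are started without waiting for one another.
  const pending = [
    fakeIo("A", 60, log),
    fakeIo("B", 10, log),
    fakeIo("C", 30, log),
  ];
  log.push("all started"); // runs before any callback, because nothing blocked
  await Promise.all(pending);
  return log;
}

handleThreeRequests().then((log) => console.log(log.join(" | ")));
// prints "all started | B done | C done | A done"
```

The three operations overlap on a single thread and finish in order of their delays, not in the order they were started, and no thread is created per request.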

Node.js requires less memory to handle more connections than most competitive architectures that scale with threads—including Apache HTTP Server, ASP.NET, Ruby on Rails, and Java application servers. Thus, Node has become an extremely popular choice for building web servers, REST APIs, and real-time applications like chat apps and games. If there is one component that defines the MEAN stack, it’s Node.js.

Advantages and benefits of the MEAN stack

These four components working in tandem aren’t the solution to every problem, but they’ve definitely found a niche in contemporary development. IBM breaks down the areas where the MEAN stack fits the bill. Because it’s scalable and can handle a large number of users simultaneously, the MEAN stack is a particularly good choice for cloud-native apps. The Angular front end is also a great choice for single-page applications. Examples include:

Expense-tracking apps

News aggregation sites

Mapping and location apps

MEAN vs. MERN

The acronym “MERN” is sometimes used to describe MEAN stacks that use React.js in place of Angular. React is a library, not a full-fledged framework like Angular, and there are pluses and minuses to swapping React into a JavaScript-based stack. In brief, React is easier to learn, and most developers can write and test React code faster than they can write and test a full-fledged Angular app. React also produces better mobile front ends. On the other hand, Angular code is more stable, cleaner, and more performant. In general, Angular is the choice for enterprise-class development.

But the very fact that this choice is available to you demonstrates that MEAN isn’t a limited straitjacket for developers. Not only can you swap in different components for one of the canonical four layers; you can add complementary components as well. For example, caching systems like Redis or Memcached could be used within Express to speed up responses to requests.
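As a rough illustration of how a cache can speed up responses, the following TypeScript sketch shows the cache-aside pattern with a time-to-live. It is a stand-in under stated assumptions: `TtlCache` is an in-memory class invented for this example, not the Redis or Memcached client API, and `slowLoad` is a hypothetical function standing in for a database query. The current time is passed in explicitly so the behavior is easy to follow.

```typescript
// In-memory stand-in for a cache such as Redis or Memcached.
// A real deployment would talk to the cache server over the network.
class TtlCache<V> {
  private entries = new Map<string, { value: V; expiresAt: number }>();

  constructor(private ttlMs: number) {}

  get(key: string, now: number): V | undefined {
    const entry = this.entries.get(key);
    if (entry === undefined || entry.expiresAt <= now) {
      this.entries.delete(key); // drop missing or stale entries
      return undefined;
    }
    return entry.value;
  }

  set(key: string, value: V, now: number): void {
    this.entries.set(key, { value, expiresAt: now + this.ttlMs });
  }
}

// Cache-aside: look in the cache first, fall back to the slow loader on a miss.
function cachedLookup<V>(
  cache: TtlCache<V>,
  key: string,
  load: (key: string) => V,
  now: number
): V {
  const hit = cache.get(key, now);
  if (hit !== undefined) return hit;
  const value = load(key);
  cache.set(key, value, now);
  return value;
}

let loaderCalls = 0;
const slowLoad = (id: string): string => {
  loaderCalls += 1; // stands in for a database query
  return "profile for " + id;
};

const profileCache = new TtlCache<string>(1000);
cachedLookup(profileCache, "user:1", slowLoad, 0);    // miss: calls the loader
cachedLookup(profileCache, "user:1", slowLoad, 500);  // hit: served from cache
cachedLookup(profileCache, "user:1", slowLoad, 1500); // expired: calls the loader again
console.log(loaderCalls); // 2
```

In an Express app, a lookup like this would typically sit inside a route handler or middleware, so that repeated requests for the same data skip the database until the entry expires.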

MEAN stack developers

Having the skills to be a MEAN stack developer basically entails becoming a full-stack developer, with a focus on the particular set of JavaScript tools we’ve discussed here. However, the MEAN stack’s popularity means that many job ads will be aimed at full-stack devs with MEAN-specific skills. Guru99 breaks down the prerequisites for snagging one of these jobs. Beyond familiarity with the basic MEAN stack components, a MEAN stack developer should have a good understanding of:

Front-end and back-end processes

HTML and CSS

Programming templates and architecture design guidelines

Web development, continuous integration, and cloud technologies

Database architecture

The software development lifecycle (SDLC) and what it’s like developing in an agile environment

Source: https://www.infoworld.com/article/3319786/what-is-the-mean-stack-javascript-web-applications.html


Original Post from Rapid7 Author: Bria Grangard

Every modern company is a software company. Whether you sell a technology product, food delivery, or insurance, you interface with customers through applications—and those applications are a prime target for attackers. In fact, the most common attack pattern associated with an actual breach is typically at the web application layer.

It is essential to have continuous protection for your applications across the entire development lifecycle. Inserting application security practices into the development process as early as possible will help you identify weaknesses sooner, save your team time remediating any issues, and improve collaboration between security and DevOps teams. But no matter how well you secure an app to begin with, you can’t just set it and forget it. You need real-time monitoring and analysis of application behavior.

In other words, for full coverage of your apps, you’ll require multiple application security solutions. Dynamic application security testing (DAST) and runtime application self-protection (RASP) are two of the key ingredients in that mix.

The continuous process of application security

While DAST and RASP are only two parts of a complete application security plan, they do represent two very important sides of a continuous process. DAST is a proactive solution used to scan an application in the running environment. It’s used during the build and test phases and can carry on into delivery and production. DAST simulates attacker behavior to look for the app’s behavioral weaknesses.

Related: Learn more about Rapid7’s DAST solution, InsightAppSec