#generative audio workstation


How UA's LUNA is Reimagining the Recording Studio with AI

Making a Scene Presents How UA’s LUNA is Reimagining the Recording Studio with AI The recording studio is changing. What used to be a world of knobs, cables, and endless mouse-clicks is now transforming into something smarter—something more intuitive. We’re entering a new era: the rise of the Generative Audio Workstation (GAW), where AI isn’t just a feature—it’s your creative partner. LUNA, the…

#AI audio tools#AI in music production#AI music workflow#AI recording software#AI-powered DAW#Core Audio interface#creative studio assistant#daw#digital audio workstation#future of music production#GAW#generative audio workstation#hands-free recording#indie music production#instrument detection#intelligent music software#LUNA 1.9#Mac audio software#music production workflow#music tech innovation#music technology trends#recording studio evolution#smart tempo tools#tempo detection#tempo extraction#Universal Audio#voice control recording

1 note


Here's a list of Free tools and resources for your daily work!🎨

2D

• Libresprite: Pixel art + animation
• Krita: digital painting + animation
• Gimp: image manipulation + painting
• Ibispaint: digital painting
• MapEditor: Level builder (orthogonal, isometric, hexagonal)
• Terawell: manipulate 3D mannequin as a figure drawing aid (the free version has everything)
• Storyboarder: Storyboard

3D

• Blender: general 3D software (modeling, sculpting, painting, SFX, animation…).
• BlockBench: low-poly 3D + animation.

Sound Design

• Audacity: Audio editor (recording, editing, mixing)
• LMMS: digital audio workstation (music production, composition, beat-making).
• plugins4free: audio plugins (work with both audacity and lmms)
• Furnace: chiptune/8-bit/16-bit music synthesizer

Video

• davinciresolve: video editing (the free version has everything)
• OBS Studio: video recording + live streaming.

2D Animation

• Synfig: Vector and puppet animation, frame by frame. Easy.
• OpenToonz: Vector and puppet animation, frame by frame. Hard.

↳ You can import your own drawings.

For learning and inspiration

• models-resource: 3D models from retro games (mostly)
• spriters-resource: 2D sprites (same)
• textures-resource: 2D textures (same)
• TheCoverProject: video game covers
• Setteidreams: archive of animation production materials
• Livlily: collection of animated lines

750 notes


One of the things enterprise storage and destruction company Iron Mountain does is handle the archiving of the media industry's vaults. What it has been seeing lately should be a wake-up call: Roughly one-fifth of the hard disk drives dating to the 1990s it was sent are entirely unreadable.

Music industry publication Mix spoke with the people in charge of backing up the entertainment industry. The resulting tale is part explainer on how music is so complicated to archive now, part warning about everyone's data stored on spinning disks.

"In our line of work, if we discover an inherent problem with a format, it makes sense to let everybody know," Robert Koszela, global director for studio growth and strategic initiatives at Iron Mountain, told Mix. "It may sound like a sales pitch, but it's not; it's a call for action."

Hard drives gained popularity over spooled magnetic tape with the rise of digital audio workstations and mixing and editing software, and because of the perceived downsides of tape, including deterioration from substrate separation and fire. But hard drives present their own archival problems. Standard hard drives were also not designed for long-term archival use. You can almost never decouple the magnetic disks from the reading hardware inside, so if either fails, the whole drive dies.

There are also general computer storage issues, including the separation of samples and finished tracks, or proprietary file formats requiring archival versions of software. Still, Iron Mountain tells Mix that “if the disk platters spin and aren’t damaged,” it can access the content.

But "if it spins" is becoming a big question mark. Musicians and studios now digging into their archives to remaster tracks often find that drives, even when stored at industry-standard temperature and humidity, have failed in some way, with no partial recovery option available.

“It’s so sad to see a project come into the studio, a hard drive in a brand-new case with the wrapper and the tags from wherever they bought it still in there,” Koszela says. “Next to it is a case with the safety drive in it. Everything’s in order. And both of them are bricks.”

Entropy Wins

Mix's passing along of Iron Mountain's warning hit Hacker News earlier this week, which spurred other tales of faith in the wrong formats. The gist of it: You cannot trust any medium, so you copy important things over and over, into fresh storage. "Optical media rots, magnetic media rots and loses magnetic charge, bearings seize, flash storage loses charge, etc.," writes user abracadaniel. "Entropy wins, sometimes much faster than you’d expect."

There is discussion of how SSDs are not archival at all; how floppy disk quality varied greatly between the 1980s, 1990s, and 2000s; how Linear Tape-Open, a format specifically designed for long-term tape storage, loses compatibility over successive generations; how the binder sleeves we put our CD-Rs and DVD-Rs in have allowed them to bend too much and stop being readable.

Knowing that hard drives will eventually fail is nothing new. Ars wrote about the five stages of hard drive death, including denial, back in 2005. Last year, backup company Backblaze shared failure data on specific drives, showing that drives that fail tend to fail within three years, that no drive was totally exempt, and that time does, generally, wear down all drives. Google's server drive data showed in 2007 that HDD failure was mostly unpredictable, and that temperatures were not really the deciding factor.

So Iron Mountain's admonition to music companies is yet another warning about something we've already heard. But it's always good to get some new data about just how fragile a good archive really is.

75 notes


The Echo Room

Chapter 3: Signal Lost

The whisper stayed with her.

Even after closing her laptop, Mira felt it lingering — not in her ears, but somewhere deeper. Like it had been spoken directly into her mind and now echoed inside her chest. Her own name, whispered back to her like a forgotten question.

By morning, she was restless.

She skipped her usual routine. No tea. No music. Just her fingers flying to her phone the moment she sat down, needing contact, context — anything to confirm she hadn’t completely lost the thread.

She scrolled to Dani’s name — her assistant, the only person from her past life she still allowed regular access.

The call failed immediately.

“No signal,” the screen said. Even the signal bars were empty. Not low. Absent.

She walked outside into the crisp mountain air. Nothing moved. The trees stood tall and stiff like they were listening. She climbed the slight hill where she’d first parked, raising the phone toward the gray sky, angling it like a compass.

Still nothing.

She tried texting instead.

hey. did u ever touch scene 12 audio? anything weird w the tracks?

She hit send, but the bubble just hovered. No check marks. No delivery.

Mira lowered the phone slowly. A tightness coiled in her throat. She’d expected poor reception — the cabin’s listing had been upfront — but not a complete blackout. She turned toward the cabin, half-expecting to see someone watching from the window. But it was empty. Dim.

Still.

Back inside, she picked up the landline.

She half-hoped it would feel like using a relic, something nostalgic and grounding — like the rotary phone at her grandmother’s house. But the line was dead. No dial tone. Not even static.

She jiggled the cord. Tried again.

Nothing.

For a long moment she simply stood there, receiver in hand, listening to the silence.

Then, reluctantly, she tried the car.

Outside, the fog had thickened, cottoning the trees. The pine needles dripped with condensed moisture, like the forest was sweating. She climbed into the driver’s seat, slipped the key into the ignition, and turned.

Click. Nothing.

She turned again. The same dry, useless click. Not even a struggle from the engine.

“Seriously?”

She popped the hood. Everything looked fine — cables in place, nothing obviously corroded. But the battery was completely dead.

She closed the hood with a sharp thunk and looked up at the sky. A low ceiling of cloud had formed above the ridge. Heavy and unmoving.

Maybe a storm was rolling in. Maybe it had already arrived. Barometric pressure did weird things to electronics. She’d read about that once.

She walked back inside, kicking her boots off harder than necessary.

In the quiet that followed, she felt it again — that thick, watching hush the cabin seemed to generate. Not just silence, but intentional silence. Like the walls weren’t just holding sound in. They were holding something out.

Or in.

She sat at her workstation, trying to focus. She had deadlines. The whole reason she was here was to work. Not to spiral.

But her fingers hovered over the keyboard instead of typing. Her eyes drifted to the track she’d saved last night — Unsorted_01.wav.

Mira hesitated, then opened it again.

The waveform waited, familiar now. She hit play and listened.

The whisper was still there. Her name, murmured just beneath the actress’s line. As if someone had pressed their lips against her neck and said it into her spine.

She backed out of the project and opened a different file — one from a few days ago, before she’d left the city. An older scene she’d already finalized.

She played it through.

The audio was clean. Too clean.

She frowned. Something was… off.

She isolated the background tone — a faint atmospheric hum that had been added as a bed of tension. Normal. Expected.

Except now there was something underneath it. So subtle she almost missed it.

She boosted the signal and filtered out the mids.

It wasn’t a hum. Not exactly.

It was a melody.

Very faint. Warped. Almost tuneless.

She kept tweaking, isolating frequencies, narrowing the shape.

Then, suddenly — recognition.

Her breath caught.

It was a lullaby.

Not a commercial one, not something from TV or the radio. This was personal. Private. A song she hadn’t heard in over two decades.

Her mother’s voice used to sing it when Mira was small. When she had fevers. Nightmares.

When she was sick, lying in bed with the sheets damp and the room spinning, her mother would sit at the edge and hum it, barely above a whisper:

“Sleep now, and don’t you cry… Stars are watching from the sky…”

The notes in the audio weren’t exact. They’d been stretched, degraded, lost in digital decay.

But the shape was there. The pitch arc. The rhythm. The sorrow.

She stared at the screen, her heart thudding.

There was no version of that lullaby in the track. No one on the production team could’ve inserted it — it wasn’t a public melody. It had never been recorded.

And Mira had never spoken about it.

She leaned back in the chair slowly, her mouth dry. Her thoughts scrambled.

Was it a bleed from another device? But there were no other devices. No radios. No phones. No voice assistants. Nothing wireless, nothing smart. She’d wanted it that way. Needed it. Only her equipment, and the room.

And the room was quiet.

Except it wasn’t. Not anymore.

It was responding.

Mira exhaled shakily and muted the track. The sudden silence was worse than the whisper.

She sat there a long time, listening to nothing.

Outside, the storm didn’t come.

But the static inside her chest was building again.

#creative writers#lgbt writers#writerscorner#writersofinstagram#writers and readers#writers on writing#women writers#writersociety#female writers#writers on tumblr#horrorstory#horror#horrorlovers#scary stories#scary#spooky#eerie#haunted#queer writers#writerscommunity#writers#writers and poets#creative writing#writeblr#writing community

11 notes


Terminator 2 (1991) and The Princess Bride (1987) share an interesting feature:

the scores both sound like a guy jamming on a cheap MIDI keyboard in his bedroom.

This modern reading is totally unfair -- in fact they were both made on state of the art sampling workstations, the Synclavier in the case of The Princess Bride, and the Fairlight CMI for Terminator 2. At the time they each sounded like nothing else. Only once sample playback got cheap would those soundtracks start to sound cheap. (My understanding is that they paid to install a Fairlight CMI in Brad Fiedel's garage and it slotted neatly into the space in the budget normally allocated to pay an orchestra to play a conventional score.)

The Princess Bride sounds like a Playstation 1 game (one of the ones that use sample playback, not Redbook audio)

youtube

It's especially funny to me when this soundtrack uses sampled acoustic guitar, because the composer, Mark Knopfler, is an accomplished guitarist. That's kind of his whole thing, actually. I guess he didn't want to bother walking to the next room to grab it. (Or, y'know, maybe he wanted everything to sound of a piece.)

youtube

Terminator 2 is more interesting in that it uses samples of acoustic instruments in unconventional ways -- a form of musique concrète. Most commonly it pitches the samples unnaturally low, so you can hear the buzzing of the low sample rate everywhere:

youtube

The T-1000 motif in particular is just a trumpet fall pitched way down until it sounds like a nightmare klaxon:

youtube

youtube

The Terminator 2 soundtrack, I think it holds up because there's still nothing really like it.

The Princess Bride, I think it's a lesson in how a lot of polish and detail work in practice only exists to impress your artisan peers. Like, yeah, fake orchestra has come a long way, both in technology and in technique, and Hans Zimmer probably winces when he hears the unconvincing use of lo-fi brass samples, but general audiences are still enthralled so who gives a shit?

It'd be one thing if it was supposed to be a technical musical showpiece. But it's a background element, and the movie is no lesser for it than The Wizard of Oz is lessened by the way you can see where the sets end in painted backdrops. It's good enough, and in fact many of those who notice will find it charming.

10 notes


Music Theory notes (for science bitches) part 3: what if. there were more notes. what if they were friends.

Hello again, welcome back to this series where I try and teach myself music from first principles! I've been making lots of progress on zhonghu in the meantime, but a lot of it is mechanical/technical stuff about like... how you hold the instrument, recognising pitches.

In the first part I broke down the basic ideas of tonal music and ways you might go about tuning it in the 12-tone system, particularly its 'equal temperament' variant [12TET]. The second part was a brief survey of the scales and tuning systems used in a selection of music systems around the world, from klezmer to gamelan - many of them compatible with 12TET, but not all.

So, as we said in the first article, a scale might be your 'palette' - the set of notes you use to build music. But a palette is not a picture. And hell, in painting, colour implies structure: relationships of value, saturation, hue, texture and so on which create contrast and therefore meaning.

So let's start trying to understand how notes can sit side by side and create meaning - sequentially in time, or simultaneously as chords! But there are still many foundations to lay. Still, I have a go at composing something at the end of this post! Something very basic, but something.

Anatomy of a chord

I discussed this very briefly in the first post, but a chord is when you play two or more notes at the same time. A lot of types of tonal music will create a progression of chords over the course of a song, either on a single instrument or by harmonising multiple instruments in an ensemble. Since any or all of the individual notes in a chord can change, there's an enormous variety of possible ways to go from one chord to another.

But we're getting ahead of ourselves. First of all I wanna take a look at what a chord actually is. Look, pretty picture! Read on to see what it means ;)

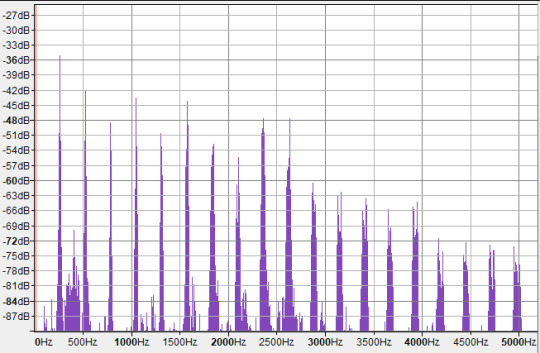

So here is a C Minor chord, consisting of C, D# and G, played by a simulated string quartet:

(In this post there's gonna be sound clips. These are generated using Ableton, but nothing I talk about should be specific to any one DAW [Digital Audio Workstation]. Ardour appears to be the most popular open source DAW, though I've not used it. Audacity is an excellent open source audio editor.)

Above, I've plotted the frequency spectrum of this chord (the Fourier transform) calculated by Audacity. The volume is in decibels, which is a logarithmic scale of energy in a wave. So this is essentially a linear-log plot.

OK, hard to tell what's going on in there, right? The left three tall spikes are the fundamental frequencies of C4 (262Hz), D♯4 (310Hz), and G4 (393Hz). Then, we have a series of overtones of each note, layered on top of each other. It's obviously hard to tell which overtone 'belongs to' which note. Some of the voices may in fact share overtones! But we can look at the spectra of the individual notes to compare. Here's the C4 on its own. (Oddly, Ableton considered this a C3, not a C4. As far as I can tell the usual convention is that C4 is 261.626Hz, so I think C4 is 'correct'.)

Here, the strongest peaks are all at integer multiples of the fundamental frequency, so they look evenly spaced in linear frequency-space. These are not all C. The first overtone is an octave above (C5), then we have three times the frequency of C4 - which means it's 1.5 times the frequency of C5, i.e. a perfect fifth above it! This makes it a G. So our first two overtones are in fact the octave and the fifth (plus an octave). Then we get another C (C6), then in the next octave we have frequencies pretty close to E6, G6 and A♯6 - respectively, intervals of a major third, a perfect fifth, and minor 7th relative to the root (modulo octaves).
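If you want to check these note names yourself, here's a rough sketch that computes 12TET frequencies and finds the nearest note to each integer multiple of C4. It assumes A4 = 440Hz as the tuning reference and borrows MIDI-style note numbering (C4 = 60); the function names are just ones I made up for illustration.

```python
import math

NOTE_NAMES = ["C", "C♯", "D", "D♯", "E", "F", "F♯", "G", "G♯", "A", "A♯", "B"]
A4_HZ = 440.0  # assumed tuning reference (concert pitch)


def note_frequency(name, octave):
    """12TET frequency in Hz of e.g. ("C", 4), using MIDI-style numbering."""
    number = 12 * (octave + 1) + NOTE_NAMES.index(name)  # C4 -> 60, A4 -> 69
    return A4_HZ * 2 ** ((number - 69) / 12)


def nearest_note(freq_hz):
    """Closest 12TET note to freq_hz, plus the deviation from it in cents."""
    exact = 69 + 12 * math.log2(freq_hz / A4_HZ)
    number = round(exact)
    cents = 100 * (exact - number)
    return f"{NOTE_NAMES[number % 12]}{number // 12 - 1}", cents


c4 = note_frequency("C", 4)
for n in range(1, 8):
    name, cents = nearest_note(n * c4)
    print(f"{n} x C4 = {n * c4:7.1f} Hz, nearest {name} ({cents:+.0f} cents)")
```

The multiples land on C4, C5, G5, C6, E6, G6 and A♯6. The 'pretty close' part shows up in the cents column: the fifth multiple sits about 14 cents below a 12TET E6, and the seventh about 31 cents below A♯6, while the octaves are exact and the fifths are within a couple of cents.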

However, there are also some weaker peaks. Notably, in between the first and second octave is a cluster of peaks around 397-404Hz, which is close to G4 - another perfect fifth! However, it's much much weaker than the overtones we discussed previously.

The extra frequencies and phase relationships give the timbre of the note, its particular sound - in this case you could say the sense of 'softness' in the sound compared to, for example, a sine wave, or a perfect sawtooth wave, which would also have harmonics at all integer multiples of the fundamental.

Perhaps in seeing all these overtones, we can get an intuitive impression of why chords sound 'consonant'. If the frequencies of a given note are already present in the overtones, they will reinforce each other, and (in extremely vague and unscientific terms) the brain gets really tickled by things happening in sync. However, it's not nearly that simple. Even in this case, we can see that frequencies do not have to be present in the overtone spectrum to create a pleasing sense of consonance.

Incidentally, this may help explain why we consider two notes whose frequencies differ by a factor of 2 to be 'equivalent'. The lower note contains all of the frequencies of the higher note as overtones, plus a bunch of extra 'inbetween' frequencies. e.g. if I have a note with fundamental frequency f, and a note with frequency 2f, then f's overtones are 2f, 3f, 4f, 5f, 6f... while 2f's overtones are 2f, 4f, 6f, 8f. There's so much overlap! So if I play a C, you're also hearing a little bit of the next C up from that, the G above that, the C above that and so on.

For comparison, if we have a note with frequency 3f, i.e. going up by a perfect fifth from the second note, the frequencies we get are 3f, 6f, 9f, 12f. Still fully contained in the overtones of the first note, but not quite as many hits.
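The overlap is easy to count with sets. This is only a sketch of the idealised picture: frequencies are written as integer multiples of f, cut off at 12f (an arbitrary limit I picked), and amplitudes are ignored entirely.

```python
def partials(multiple, limit=12):
    """Fundamental and overtones of a note at multiple*f, as multiples of f."""
    return set(range(multiple, limit + 1, multiple))


low = partials(1)                 # f, 2f, 3f, ... 12f
octave = partials(2)              # 2f, 4f, 6f, ... 12f
fifth_above_octave = partials(3)  # 3f, 6f, 9f, 12f

# Both higher notes are fully contained in the spectrum of the low note...
print(sorted(octave), octave <= low)
print(sorted(fifth_above_octave), fifth_above_octave <= low)
# ...and they also share some partials with each other.
print(sorted(octave & fifth_above_octave))
```

Up to 12f, the octave shares six partials with the low note and the note at 3f shares four, which is the 'not quite as many hits' from above.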

Of course, the difference between each of these spectra is the amplitudes. The spectrum of the lower octave may contain the frequencies of the higher octave, but much quieter than when we play that note, and falling off in a different way.

(Note that a difference of ten decibels is very large: it's a logarithmic scale, so 10 decibels means 10 times the energy. A straight line in this linear-log plot indicates an exponential falloff of energy with frequency. A power-law relationship, like the inverse-square relationship of a sawtooth wave, where the first overtone has a quarter of the power, the second has a ninth of the power, and so on, would instead show up as a straight line on a log-log plot.)
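To put rough numbers on the decibel scale and the inverse-square falloff mentioned above, here's a small sketch. It uses the standard definition of decibels for power ratios (10 times the base-10 logarithm); the helper names are mine.

```python
import math


def power_ratio_to_db(ratio):
    """Decibels corresponding to a ratio of two powers."""
    return 10 * math.log10(ratio)


def db_to_power_ratio(db):
    """Power ratio corresponding to a difference in decibels."""
    return 10 ** (db / 10)


print(db_to_power_ratio(10))  # a 10 dB step as a power ratio

# Inverse-square spectrum: the n-th partial carries 1/n**2 of the
# fundamental's power (n = 2 is the first overtone).
for n in range(1, 5):
    print(n, round(power_ratio_to_db(1 / n**2), 1), "dB")
```

So a quarter of the power is only about 6 dB down, and a ninth is about 9.5 dB down, which gives a feel for how much a 20 or 30 dB gap between peaks really is.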

So, here is the frequency spectrum of the single C note overlaid onto the spectrum of the C minor chord:

Some of the overtones of C line up with the overtones of the other notes (the D# and G), but a great many do not. Each note is contributing a bunch of new overtones to the pile. Still, because all these frequencies relate back to the base note, they feel 'related' - we are drawn to interpret the sounds together as a group rather than individually.

Our ears and aural system respond to these frequencies at a speed faster than thought. With a little effort, you can pick out individual voices in a layered composition - but we don't usually pick up on individual overtones, rather the texture created by all of them together.

I'm not gonna take the Fourier analysis much further, but I wanted to have a look at what happens when you crack open a chord and poke around inside.

However...

In Western music theory terms, we don't really think about all these different frequency spikes, just the fundamental notes. (The rest provides timbre). We give chords names based on the notes of the voices that comprise them. Chord notation can get... quite complicated; there are also multiple ways to write a given chord, so you have a degree of choice, especially once you factor in octave equivalence! Here's a rapid-fire video breakdown:

youtube

Because you have all these different notes interacting with each other, you further get multiple interactions of consonance and dissonance happening simultaneously. This means there's a huge amount of nuance. To repeat my rough working model, we can speak of chords being 'stable' (meaning they contain mostly 'consonant' relations like fifths and thirds) or 'unstable' (featuring 'dissonant' relations like semitones or tritones), with the latter setting up 'tension' and the former resolving it.

However, that's still far from being useful. To get a bit closer to composing music, it would likely help to go a bit deeper, build up more foundations and so on.

In this post and subsequent ones, I'm going to be taking things a little slower, trying to understand a bit more explicitly how chords are deployed.

An apology to Western music notation

In my first post in this series, I was a bit dismissive of 'goofy' Western music notation. What I was missing is that the purpose of Western music notation is not to clearly show the mathematical relationships between notes (something that's useful for learning!)... but to act as a reference to use while performing music. So it's optimising for two things: compactness, and legibility of musical constructs like phrasing. Pedagogy is secondary.

Youtuber Tantacrul, lead developer of the MuseScore software, recently made a video running over a brief history of music notation and various proposed alternative notation schemes - some reasonable, others very goofy. Having seen his arguments, I think he makes a pretty good case for why the current notation system is actually a reasonable compromise... for representing tonal music on the 12TET system, which is what it's designed for.

So with that in mind, let me try and give a better explanation of the why of Western music notation.

In contrast to 'piano roll' style notation where you represent every possible note in an absolute way, here each line of the stave (staff if you're American) represents a scale degree of a diatonic scale. The key signature locates you in a particular scale, and all the notes that aren't on that scale are omitted for compactness (since space is at an absolute premium when you have to turn pages during a performance!). If you're doing something funky and including a note outside the scale, well that's a special case and you give it a special-case symbol.

It's a similar principle to file compression: if things are as-expected, you omit them. If things are surprising, you have to put something there.

However, unlike the 简谱 jiǎnpǔ system which I've been learning in my erhu lessons, it's not a free-floating system which can attach to any scale. Instead, with a given clef, each line and space of the stave has one of three possible notes it could represent. This works, because - as we'll discuss momentarily - the diatonic scales can all be related to each other by shifting certain scale degrees up or down in semitones. So by indicating which scale degrees need to be shifted, you can lock in to any diatonic scale. Naisuu.

This approach, which lightly links positions to specific notes, keeps things reasonably simple for performers to remember. In theory, the system of key signatures helps keep things organised, without requiring significant thought while performing.

That is why we have to arbitrarily pick a certain scale to be the 'default'; in this case, history has chosen C major/A minor. From that point, we can construct the rest of the diatonic scales as key signatures using a cute mathematical construct called the 'circle of fifths'.

How key signatures work (that damn circle)

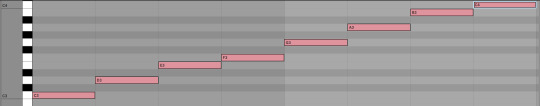

So, let's say you have a diatonic major scale. In piano roll style notation, this looks like (taking C as our base note)...

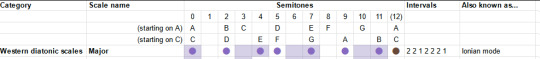

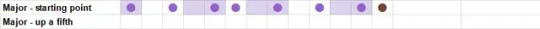

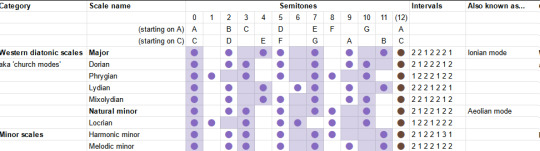

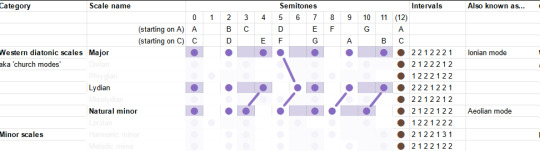

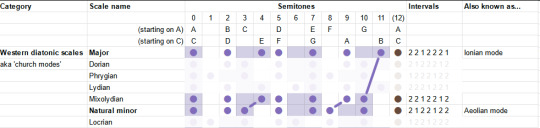

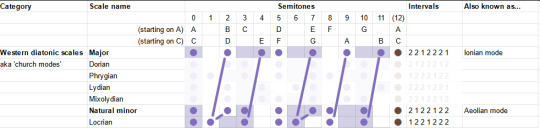

And on the big sheet of scales, like this:

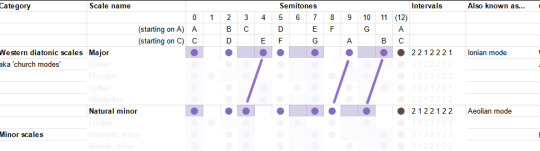

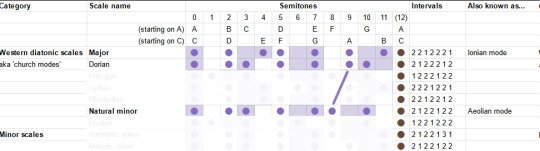

Now, let's write another diatonic major scale, a fifth up from the first. This is called transposition. For example, we could transpose from C major to G major.

Thanks to octave equivalency, we can wrap these notes back into the same octave as our original scale. (In other words, we've added 7 semitones to every note in our original scale, and then taken each one modulo 12 semitones.) Here, I duplicate the pattern down an octave.

Now, let's look at what notes we have in both scales, over the range of the original major scale.

Well, they're almost exactly the same... but the fourth note (scale degree) is shifted up by one semitone.
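Here's the same comparison as a quick sketch in code. Notes are represented as pitch classes 0 to 11 (C = 0), which is my own choice of encoding, and the names use sharps only.

```python
NOTE_NAMES = ["C", "C♯", "D", "D♯", "E", "F", "F♯", "G", "G♯", "A", "A♯", "B"]
MAJOR_OFFSETS = [0, 2, 4, 5, 7, 9, 11]  # semitones above the root for each scale degree


def major_scale(root):
    """Pitch classes of the major scale on `root`, wrapped into one octave."""
    return [(root + offset) % 12 for offset in MAJOR_OFFSETS]


c_major = major_scale(0)
g_major = major_scale(7)  # add 7 semitones to every note, modulo 12

dropped = set(c_major) - set(g_major)
added = set(g_major) - set(c_major)

print([NOTE_NAMES[p] for p in c_major])
print([NOTE_NAMES[p] for p in g_major])
print("dropped:", [NOTE_NAMES[p] for p in dropped])
print("added:", [NOTE_NAMES[p] for p in added])
```

Exactly one note changes: F, the fourth degree of C major, is replaced by F♯.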

In fact, we've seen this set of notes before - it is after all nothing more than a cyclic permutation of the major scale. We've landed on the 'Lydian mode', one of the seven 'modes' of the diatonic major scale we discussed in previous posts. We've just found out that the Lydian mode has the same notes as a major scale starting a fifth higher. In general, whether we think of it as a 'mode' or as a 'different major scale' is a matter of where we start (the base note). I'm going to have more to say about modes in a little bit.

With this trick in mind, we produce a series of major scales starting a fifth higher each time. It just so happens that, since the fifth is 7 semitones, which is coprime with the 12 semitones of 12TET, this procedure will lead us through every single possible starting note in 12TET (up to octave equivalency).

So, each time we go up a fifth, we add a sharp on the fourth degree of the previous scale. This means that every single major scale in 12TET can be identified by a unique set of sharps. Once you have gone up 12 fifths, you end up with the original set of notes.

This leads us to a cute diagram called the "circle of fifths".

Because going up a fifth is octave-equivalent to going down a fourth, we can also look back one step on the circle to find out which note needs to be made sharper. So, from C major to G major, we have to sharpen F - the previous note on the circle from C. From G major to D major, we have to sharpen C. From D major to A major, we have to sharpen G. And so on.
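The whole walk around the circle can be sketched in a few lines. As before, notes are pitch classes 0 to 11 and the names use sharps only (so a note that would properly be written E♯ shows up as F); the function name is just illustrative.

```python
NOTE_NAMES = ["C", "C♯", "D", "D♯", "E", "F", "F♯", "G", "G♯", "A", "A♯", "B"]
MAJOR_OFFSETS = [0, 2, 4, 5, 7, 9, 11]


def major_scale(root):
    return {(root + offset) % 12 for offset in MAJOR_OFFSETS}


def walk_circle_of_fifths(start=0):
    """Go up a fifth 12 times, recording each new root and the one note that changed."""
    steps = []
    root, previous = start, major_scale(start)
    for _ in range(12):
        root = (root + 7) % 12
        current = major_scale(root)
        (new_note,) = current - previous  # exactly one pitch class is new each time
        steps.append((NOTE_NAMES[root], NOTE_NAMES[new_note]))
        previous = current
    return steps


for root_name, new_name in walk_circle_of_fifths():
    print(f"{root_name} major: new note {new_name}")
```

The first few steps come out as G (new note F♯), D (new note C♯), A (new note G♯), matching the pattern above, and after twelve steps every root has been visited once and we're back at C.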

By convention, when we write a key signature to define the particular scale we're using, we write the sharps out in circle-of-fifths order like this. The point of this is to make it easy to tell at a glance what scale you're in... assuming you know the scales already, anyway. This is another place where the aim of the notation scheme is for a compact representation for performers rather than something that makes the logical structure evident to beginners.

Also by convention, key signatures don't include the other octaves of each note. So if F is sharp in your key signature, then every F is sharp, not just the one we've written on the stave.

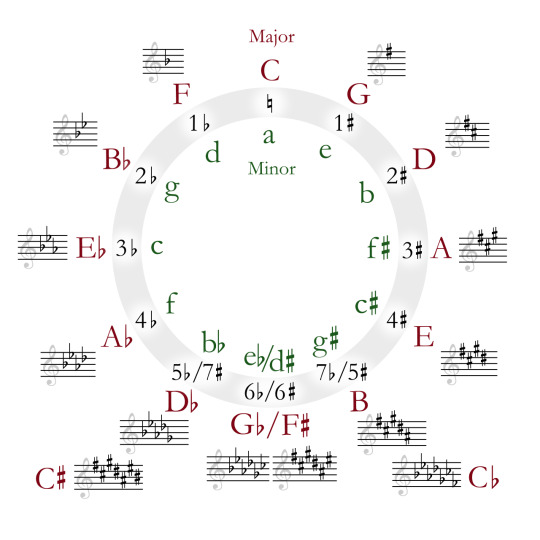

This makes it less noisy, but it does mean you don't have a convenient visual reminder that the other Fs are also sharp. We could imagine an alternative approach where we include the sharps for every visible note, e.g. if we duplicate every sharp down an octave for C♯ major...

...but maybe it's evident why this would probably be more confusing than helpful!

So, our procedure returns the major scales in order of increasing sharps. Eventually you have added seven sharps, meaning every scale degree of the original starting scale (in this case, C Major) is sharpened.

What would it mean to keep going past this point? Let's hop in after F♯ Major, at the bottom of the Circle of Fifths; next you would go to C♯ Major by sharpening B. So far so good. At this point we have sharps everywhere, so the notes in your scale go... C♯ D♯ E♯ F♯ G♯ A♯ B♯ C♯ ...except that E♯ is the same as F, and B♯ is the same as C, so we could write that as C♯ D♯ F F♯ G♯ A♯ C C♯

But then to get to 'G♯ Major', you would need to sharpen... F♯? That's not on the original C-major scale we started with! You could say, well, essentially this adds up to two sharps on F, so it's like F♯♯, taking you to G. So now you have...

G♯ A♯ C C♯ D♯ F G G♯

...and the line of the stave that you would normally use for F now represents a G. You could carry on in this way, eventually landing all the way back at the original set of notes in C Major (bold showing the note that just got sharpened in each case):

D♯ F G G♯ A♯ C **D** D♯

A♯ C D D♯ F G **A** A♯

F G A A♯ C D **E** F

C D E F G A **B** C

But that sounds super confusing - how would you even represent the double sharps on the key signature? It would break the convention that each line of the stave can only represent three possible notes. Luckily there's a way out. We can work backwards, going around the circle the other way and flattening notes. This will hit the exact same scales in the opposite order, but we think of their relation to the 'base' scale differently.

So, let's try starting with the major scale and going down a fifth. We could reason about this algebraically to work out that sharpening the fourth while you go up means flattening the seventh when you go down... but I can also just put another animation. I like animations.

So: you flatten the seventh scale degree in order to go down a fifth in major scales. By iterating this process, we can go back around the circle of fifths. For whatever reason, going down this way we use flats instead of sharps in the names of the scale. So instead of A♯ major we call it B♭ major. Same notes in the same order, but we think of it as down a rung from F major.

In terms of modes, this shows that the major scale a fifth down from a given root note has the same set of notes as the "mixolydian mode" on the original root note. ...don't worry, you don't gotta memorise this, there is not a test! Rather, the point of mentioning these modes is to underline that whether you're in a major key, minor key, or one of the various other modes is all relative to the note you start on. We'll see in a moment a way to think about modes other than 'cyclic permutation'.

Let's try the same trick on the minor key.

Looks like this time, to go up we need to sharpen the sixth degree, and to go down we need to flatten the second degree. As algebra demands, this gives us the exact same sequence of sharps and flats as the sequence of major scales we derived above. After all, every major scale has a 'relative minor' which can be achieved by cyclically permuting its notes.

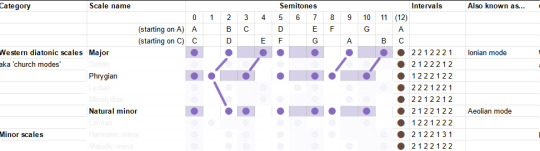

Going up a fifth shares the same notes with the 'Dorian mode' of the original base note, and going down a fifth shares the same notes with the 'Phrygian mode'.

Here's a summary of movement around the circle of fifths. The black background indicates the root note of the new scale.

Another angle on modes

In my first two articles, I discussed the modes of the diatonic scale. Leaping straight for the mathematically simplest definition (hi Kolmogorov), I defined the seven 'church modes' as simply being cyclic permutations of the intervals of the major scale. Which they are... but I'm told that's not really how musicians think of them.
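For reference, the 'cyclic permutation' definition fits in a few lines. This is only a sketch: the `rotate` helper and the order of `MODE_NAMES` (starting from the major scale) are my own choices for illustration.

```javascript
const MAJOR_STEPS = [2, 2, 1, 2, 2, 2, 1];
const MODE_NAMES = [
  "Ionian (major)", "Dorian", "Phrygian", "Lydian",
  "Mixolydian", "Aeolian (natural minor)", "Locrian",
];

// Rotate an array left by n places.
function rotate(items, n) {
  return [...items.slice(n), ...items.slice(0, n)];
}

// Mode number i starts the major scale's step pattern from its i-th step.
const modes = MODE_NAMES.map((name, i) => ({ name, steps: rotate(MAJOR_STEPS, i) }));

for (const { name, steps } of modes) {
  console.log(name.padEnd(24), steps.join(" "));
}
```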

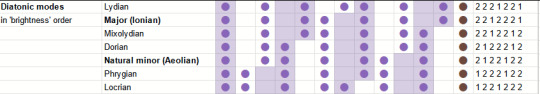

Let's grab the chart of modes again. (Here's the link to the spreadsheet).

We can get to these modes by cyclically permuting the others, but we can also get to them by making a small adjustment of one to a few particular scale degrees. When you listen to a piece of music, you're not really doing cyclic permutations - you're building up a feeling for the pattern of notes based on your lifelong experience of hearing music that's composed in this system. So the modes will feel something like 'major until, owo what's this, the seventh is not where I thought it would be'.

Since the majority of music is composed using major and minor modes, it's useful therefore to look at the 'deltas' relative to these particular modes.

To begin with, what's the difference between major and minor? To go from major to natural minor, you shift the third, sixth and seventh scale degrees down by one semitone.

So those are our two starting points. For the others, I'm going to be consulting the most reliable music theory source (some guy on youtube) to give suggestions of the emotional connotations these can bring. The Greek names are not important, but I am trying to build a toolbox of elements here, so we can try our hand at composition. So!

The "Dorian mode" is like the natural minor, but the sixth is back up a semitone. It's described as a versatile mode which can be mysterious, heroic or playful. I guess that kinda makes sense, it's like in between the major and minor?

The "Phrygian mode" is natural minor but you also lower the 2nd - basically put everything as low as you can go within the diatonic modes. It is described as bestowing an ominous, threatening feeling.

The "Lydian mode" is like the major scale, but you shift the fourth up a semitone, landing on the infamous tritone. It is described as... uh well actually the guy doesn't really give a nice soundbitey description of what this mode sounds like, besides 'the brightest' of the seven; this video's kinda more generally about composition, whatever. But generally it's pretty big and upbeat I think.

The "Mixolydian" mode is the major, but with the seventh down a semitone. So it's like... a teeny little bit minor. It's described as goofy and lighthearted.

We've already covered the Aeolian/Natural Minor, so that leaves only the "Locrian". This one's kinda the opposite of the Lydian: just about everything in the major scale is flattened a bit. Even from the minor it flattens two things, and gives you lots of dissonance. This one is described as stereotypically spooky, but not necessarily. "One of the least useful", oof.

Having run along the catalogue, we may notice something interesting. In each case, we always either only sharpen notes, or only flatten notes relative to the major and minor scales. All those little lines are parallel.

Indeed, it turns out that each scale degree has one of two positions it can occupy. We can sort the diatonic modes according to whether those degrees are in the 'sharp' or 'flat' position.

This is I believe the 'brightness' mentioned above, and I suppose it's sort of like 'majorness'. So perhaps we can think of modes as sliding gradually from the ultra-minor to the infra-major? I need to experiment and find out.
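Here's a rough sketch of that sorting. The 'brightness' score below (the sum of each degree's distance above the root, in semitones) is just an assumption I'm using to get an ordering, not an official definition.

```javascript
const MAJOR_STEPS = [2, 2, 1, 2, 2, 2, 1];
const MODE_NAMES = ["Ionian", "Dorian", "Phrygian", "Lydian", "Mixolydian", "Aeolian", "Locrian"];

// Semitones above the root for degrees 1-7 of the mode that starts on
// degree `i` of the major scale.
function degreeOffsets(i) {
  const steps = [...MAJOR_STEPS.slice(i), ...MAJOR_STEPS.slice(0, i)];
  const offsets = [0];
  for (const step of steps.slice(0, 6)) {
    offsets.push(offsets[offsets.length - 1] + step);
  }
  return offsets;
}

const modes = MODE_NAMES.map((name, i) => {
  const offsets = degreeOffsets(i);
  // "Brightness" here is just the sum of the offsets: a made-up score for this sketch.
  return { name, offsets, brightness: offsets.reduce((a, b) => a + b, 0) };
});

modes.sort((a, b) => b.brightness - a.brightness); // brightest first
for (const m of modes) {
  console.log(m.name.padEnd(11), m.offsets.join(" "));
}

// How many different positions does each degree take across the seven modes?
const positionCounts = [0, 1, 2, 3, 4, 5, 6].map(
  (d) => new Set(modes.map((m) => m.offsets[d])).size
);
console.log(positionCounts.join(" ")); // 1 2 2 2 2 2 2
```

The sorted order comes out as Lydian, Ionian, Mixolydian, Dorian, Aeolian, Phrygian, Locrian, and each step down the list lowers exactly one degree by a semitone. The root is the only degree that never moves.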

What have we learned...?

Scale degrees are a big deal! The focus of all this has been looking at how different collections of notes relate to each other. We sort our notes into little sets and sequences, and we compare the sets by looking at 'equivalent' positions in some other set.

Which actually leads really naturally into the subject of chord progressions.

So, musical structure. A piece of tonal music as a whole has a "palette" which is the scale - but within that, specific sections of that piece of music will pick a smaller subset of the scale, or something related to the scale, to harmonise.

The way this goes is typically like this: you have some instruments that are playing chords, which gives the overall sort of harmonic 'context', and you have a single-voiced melody or lead line, which stands out from the rest, often with more complex rhythms. This latter part is typically what you would hum or sing if you're asked 'how a song goes'. Within that melody, the notes at any given point are chosen to harmonise with the chords being played at the same time.

The way this is often notated is to write the melody line on the stave, and to write the names of chords above the stave. This may indicate that another hand or another instrument should play those chords - or it may just be an indication for someone analysing the piece which chord is providing the notes for a given section.

So, you typically have a sequence of chords for a piece of music. This is known as a chord progression. There are various analytical tools for cracking open chord progressions, and while I can't hope to carry out a full survey, let me see if I can at least figure out my basic waypoints.

Firstly, there are the chords constructed directly from scales - the 'triad' chords, on top of which can be piled yet more bonus intervals like sevenths and ninths. Starting from a scale, and taking any given scale degree as the root note, you can construct a chord by taking every other subsequent note.

So, the major scale interval pattern goes 2 2 1 2 2 2 1. We can add these up two at a time, starting from each position, to get the chords. For each scale degree we therefore get the following intervals relative to the base note of the chord...

I. 0 4 7 - major

ii. 0 3 7 - minor

iii. 0 3 7 - minor

IV. 0 4 7 - major

V. 0 4 7 - major

vi. 0 3 7 - minor

viiᵒ. 0 3 6 - diminished
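The 'add them up two at a time' step can be sketched like this. The `triads` function and the `QUALITIES` lookup are names invented for the example.

```javascript
const MAJOR_STEPS = [2, 2, 1, 2, 2, 2, 1];
const QUALITIES = {
  "0 4 7": "major",
  "0 3 7": "minor",
  "0 3 6": "diminished",
  "0 4 8": "augmented",
};

// For every scale degree, take every other note: root, third, fifth.
// Each jump spans two consecutive steps of the scale, wrapping around.
function triads(steps) {
  return steps.map((_, i) => {
    const step = (k) => steps[(i + k) % steps.length];
    const third = step(0) + step(1);
    const fifth = third + step(2) + step(3);
    const intervals = [0, third, fifth].join(" ");
    return { degree: i + 1, intervals, quality: QUALITIES[intervals] };
  });
}

const majorTriads = triads(MAJOR_STEPS);
for (const t of majorTriads) {
  console.log(t.degree, t.intervals, t.quality);
}
```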

Now hold on a minute, where'd those fuckin Roman numerals come from? I mentioned this briefly in the first post, but this is Roman numeral analysis, which is used to talk about chord progressions in a scale-independent way.

Here, a capital Roman numeral represents a major triad; a lowercase Roman numeral represents a minor triad; a superscript 'o' represents a diminished triad (minor but you lower the fifth down to the tritone); a superscript '+' represents an augmented triad (major but you boost the fifth up a semitone, to the same pitch as the minor sixth).

So while regular chord notation starts with the pitch of the base note, the Roman numeral notation starts with a scale degree. This way you can recognise the 'same' chord progression in songs that are in quite different keys.

OK, let's do the same for the minor scale... 2 1 2 2 1 2 2. Again, adding them up two at a time...

i. 0 3 7 - minor

iiᵒ. 0 3 6 - diminished

III. 0 4 7 - major

iv. 0 3 7 - minor

v. 0 3 7 - minor

VI. 0 4 7 - major

VII. 0 4 7 - major

Would you look at that, it's a cyclic permutation of the major scale. Shocker.

So, both scales have three major chords, three minor chords and a diminished chord in them. The significance of each of these positions will have to be left to another day though.
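As a quick sanity check on the 'cyclic permutation' claim, here's a small sketch that derives the triad qualities for both scales and compares them. The helper names are hypothetical, and the rotation by 5 assumes the minor scale starts on the sixth degree of its relative major.

```javascript
const MAJOR_STEPS = [2, 2, 1, 2, 2, 2, 1];
const MINOR_STEPS = [2, 1, 2, 2, 1, 2, 2];

// Quality of the triad on each degree, from the sizes of its two stacked thirds.
function triadQualities(steps) {
  const names = { "4,3": "major", "3,4": "minor", "3,3": "diminished", "4,4": "augmented" };
  return steps.map((_, i) => {
    const at = (k) => steps[(i + k) % 7];
    const lowerThird = at(0) + at(1);
    const upperThird = at(2) + at(3);
    return names[[lowerThird, upperThird].join(",")];
  });
}

const major = triadQualities(MAJOR_STEPS);
const minor = triadQualities(MINOR_STEPS);

// Start the major scale's chord list from its sixth degree (index 5).
const rotatedMajor = [...major.slice(5), ...major.slice(0, 5)];
console.log(minor.join(", "));
console.log(rotatedMajor.join(", "));

// Tally how many chords of each quality a scale contains.
function tally(qualities) {
  const counts = {};
  for (const q of qualities) counts[q] = (counts[q] || 0) + 1;
  return counts;
}
console.log(tally(minor));
```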

What does it mean to progress?

So, you play a chord, and then you play another chord. One or more of the voices in the chord change. Repeat. That's all a chord progression is.

You can think of a chord progression as three (or more) melodies played at once. Only, there is an ambiguity here.

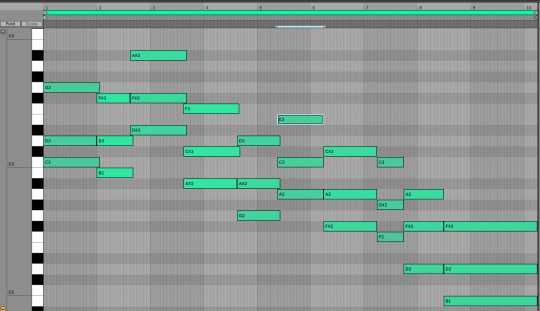

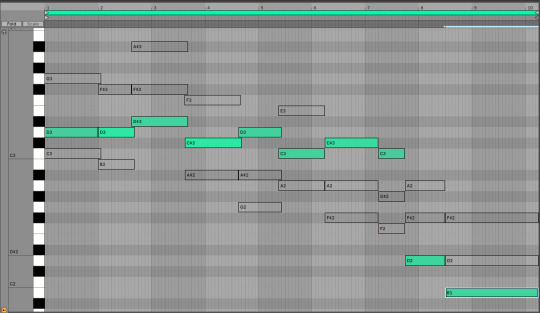

Let's say, idk, I threw together this series of chords, it ended up sounding like it would be something you'd hear in an old JRPG dungeon, though maybe that's just 'cos it's midi lmao...

I emphasise at this point that I have no idea what I'm doing, I'm just pushing notes around until they sound good to me. Maybe I would know how to make them sound better if I knew more music theory! But also at some point you gotta stop theorising and try writing music.

So this chord progression ended up consisting of...

Csus2 - Bm - D♯m - A♯m - Gm - Am - F♯m - Fm - D - Bm

Or, sorted into alphabetical order, I used...

Am, A♯m, Bm, Csus2, D, D♯m, Fm, F♯m, Gm

Is that too many minor chords? idk! Should all of these technically be counted as part of the 'progression' instead of transitional bits that don't count? I also dk! Maybe I'll find out soon.

I did not even try to stick to a scale on this, and accordingly I'm hitting just about every semitone at some point lmao. Since I end on a B minor chord, we might guess that the key ought to be B minor? In that case, we can consult the circle of fifths and determine that F and C would be sharp. This gives the following chords:

Bm, C♯dim, D, Em, F♯m, G, A

As an additional check, the notes in the scale:

B, C♯, D, E, F♯, G, A
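The same check can be done mechanically. This is a sketch under some assumptions: pitch classes are numbered with C = 0, every note is spelled with sharps (which happens to work for B minor), and the function names are made up. The `used` list is the progression from above.

```javascript
const SHARP_NAMES = ["C", "C♯", "D", "D♯", "E", "F", "F♯", "G", "G♯", "A", "A♯", "B"];
const MINOR_STEPS = [2, 1, 2, 2, 1, 2, 2];

// Seven pitch classes of a scale from its root and step pattern.
function scaleFrom(root, steps) {
  const pcs = [root];
  for (const s of steps.slice(0, 6)) pcs.push((pcs[pcs.length - 1] + s) % 12);
  return pcs;
}

// Name the triad on each degree by stacking every other note of the scale.
function diatonicChords(pcs) {
  const suffix = { "4,3": "", "3,4": "m", "3,3": "dim", "4,4": "aug" };
  return pcs.map((root, i) => {
    const third = (pcs[(i + 2) % 7] - root + 12) % 12;
    const fifth = (pcs[(i + 4) % 7] - root + 12) % 12;
    return SHARP_NAMES[root] + suffix[[third, fifth - third].join(",")];
  });
}

const bMinor = scaleFrom(11, MINOR_STEPS); // 11 = B
const notes = bMinor.map((pc) => SHARP_NAMES[pc]);
const chords = diatonicChords(bMinor);
console.log(notes.join(", "));
console.log(chords.join(", "));

// Which chords from the progression actually belong to B minor?
const used = ["Csus2", "Bm", "D♯m", "A♯m", "Gm", "Am", "F♯m", "Fm", "D", "Bm"];
const inKey = [...new Set(used.filter((c) => chords.includes(c)))];
console.log(inKey.join(", "));
```

Only three of the nine distinct chords (Bm, F♯m and D) turn out to be in the key.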

Well, uh. I used. Some of those? Would it sound better if I stuck to the 'scale-derived' chords? Know the rules before you break them and all that. Well, we can try it actually. I can map each chord in the original to the corresponding chord in B minor.

This version definitely sounds 'cleaner', but it's also... less tense I feel like. The more dissonant choices in the first one made it 'spicier'. Still, it's interesting to hear the comparison! Maybe I could reintroduce the suspended chord and some other stuff and get a bit of 'best of both worlds'? But honestly I'm pretty happy with the first version. I suppose the real question would be which one would be easier to fit a lead over...

Anyway, for the sake of argument, suppose you wanted to divide this into three melodies. One way to do it would be to slice it into low, central and high parts. These would respectively go...

Since these chords mostly move around in parallel, they all have roughly the same shape. But equally you could pick out three totally different pathways through this. You could have a part that just jumps to the nearest note it can (until the end where there wasn't an obvious place to go so I decided to dive)...

Those successive relationships between notes also exist in this track. Indeed, when two successive chords share a note, it's a whole thing (read: it gets mentioned sometimes in music theory videos). You could draw all sorts of crazy lines through the notes here if you wanted.

Nevertheless, the effects of movements between chords come in part from these relationships between successive notes. This can give the feeling of chords going 'up' or 'down', depending on which parts go up and which parts go down.

I think at this point this post is long enough that trying to get into the nitty gritty of what possible movements can exist between chords would be a bit of a step too far, and also I'm yawning a lot but I want to get the post out the door, so let's wrap things up here. Next time: we'll continue our chord research and try and figure out how to use that Roman numeral notation. Like, taking a particular Roman numeral chord progression and seeing what we can build with it.

Hope this has been interesting! I'm super grateful for the warm reception the last two articles got, and while I'm getting much further from the islands of 'stuff I can speak about with confidence', fingers crossed the process of learning is also interesting...


Introduction to the basics of Sound Design

Sound design is the art of creating, modifying and manipulating sounds to adapt them to a specific context: music, film, video games, theater, advertising, etc. In music production, sound design is at the heart of modern musical creation, whether it's inventing new textures, creating immersive atmospheres or sculpting customized virtual instruments.

1. What is sound design?

Sound design encompasses several practices:

Creating sounds from scratch (often using synthesizers).

Manipulation of existing sounds (sound effects, samples, recordings).

Processing sound to give it a particular character (effects, modulation, spatialization).

It's as much a technical skill as a sensory art.

2. Basic tools

To get you started, here are the main tools used in sound design:

Synthesizer

A synthesizer is used to generate sounds from electronic waves. There are several types of synthesis:

Subtractive (filtering out harmonics from a rich sound)

Additive (addition of single waves)

FM (frequency modulation)

Granular, wavetable, etc.
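To make the additive idea concrete, here is a minimal sketch that sums a few sine waves into a rough square-ish tone. The 44.1 kHz sample rate, the `additive` function and the choice of partials are all assumptions made for illustration, not how any particular synthesizer does it.

```javascript
const SAMPLE_RATE = 44100; // assumed sample rate in Hz

// Sum sine partials. Each partial has a `harmonic` (multiple of the
// fundamental frequency) and an `amplitude`.
function additive(fundamentalHz, partials, seconds) {
  const n = Math.round(seconds * SAMPLE_RATE);
  const out = new Float32Array(n);
  for (let i = 0; i < n; i++) {
    const t = i / SAMPLE_RATE;
    for (const { harmonic, amplitude } of partials) {
      out[i] += amplitude * Math.sin(2 * Math.PI * fundamentalHz * harmonic * t);
    }
  }
  return out;
}

// Odd harmonics at amplitude 1/harmonic move the tone toward a square wave.
const squareish = [1, 3, 5, 7, 9].map((h) => ({ harmonic: h, amplitude: 1 / h }));
const samples = additive(220, squareish, 0.01);
console.log(samples.length, "samples generated");
```

Subtractive synthesis works the other way round: start from a harmonically rich wave like this one and filter partials out.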

Sampler

The sampler reads and manipulates sound extracts (samples). They can be played at different pitches, sliced, looped or processed.
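As a small illustration of playing a sample at different pitches: with simple resampling (an assumption here, since many samplers also offer time-stretching), the playback speed follows the equal-temperament ratio and the sample's length changes with it. The function names are made up.

```javascript
// Playback-rate multiplier for a pitch shift of `semitones` (equal temperament).
function playbackRate(semitones) {
  return 2 ** (semitones / 12);
}

// With plain resampling, pitching a sample up makes it shorter, and down makes it longer.
function repitchedDuration(seconds, semitones) {
  return seconds / playbackRate(semitones);
}

for (const semitones of [-12, 0, 7, 12]) {
  console.log(
    semitones,
    playbackRate(semitones).toFixed(4),
    repitchedDuration(2, semitones).toFixed(3)
  );
}
```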

Audio effects

Effects are essential for shaping a sound:

EQ (equalization): adjust frequencies

Compression: manage dynamics

Reverb & Delay: create a sound space

Distortion, chorus, flanger, phaser, etc.

Digital Audio Workstation (DAW)

This is the main software for composing, editing and mixing (Ableton Live, FL Studio, Logic Pro, Reaper, etc.). Personally, I use FL Studio, but it won't make much difference.

3. Technical basics

Frequencies

Every sound is made up of a frequency spectrum. The sound designer needs to know where bass, midrange and treble lie, and how to balance them.

ADSR envelope

Every synthesizer (and sometimes effect) uses an envelope:

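Attack: time for the sound to rise from silence to its peak

Decay: time to fall from the peak to the sustain level

Sustain: level held for as long as the note is held

Release: time to fade to silence after the note is released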
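The four stages can be sketched as a function of time. This is a simplified linear envelope (real synthesizers often use curved segments), and `adsrLevel`, `gateTime` and the example settings are hypothetical names and values.

```javascript
// Envelope level (0 to 1) at time `t` seconds after note-on, for a note
// held for `gateTime` seconds. Times are in seconds; sustain is a level.
function adsrLevel(t, gateTime, { attack, decay, sustain, release }) {
  // Level while the key is held, as a function of time since note-on.
  const held = (x) => {
    if (x < attack) return x / attack; // rise from 0 to 1
    if (x < attack + decay) {
      return 1 - (1 - sustain) * ((x - attack) / decay); // fall from 1 to sustain
    }
    return sustain; // hold
  };
  if (t < 0) return 0;
  if (t <= gateTime) return held(t);
  // After note-off, fade linearly from wherever the envelope was down to 0.
  const fade = 1 - (t - gateTime) / release;
  return Math.max(0, held(gateTime) * fade);
}

const env = { attack: 0.1, decay: 0.2, sustain: 0.5, release: 0.4 };
for (const t of [0, 0.05, 0.1, 0.2, 0.5, 1.2, 2]) {
  console.log(t.toFixed(2), adsrLevel(t, 1, env).toFixed(2));
}
```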

Modulation

Modulations are used to create movement in sound: LFOs, envelopes, automation...
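For example, an LFO is just a slow oscillator whose output is added to some parameter. Here is a minimal sketch, assuming a sine-shaped LFO and a made-up filter cutoff as the target; none of these names come from a specific synthesizer.

```javascript
// Sine LFO: swings between -1 and +1, `rateHz` times per second.
function lfo(t, rateHz) {
  return Math.sin(2 * Math.PI * rateHz * t);
}

// Apply the LFO to any parameter: the value wobbles around `base` by up to `depth`.
function modulated(base, depth, rateHz, t) {
  return base + depth * lfo(t, rateHz);
}

// Hypothetical example: a filter cutoff sweeping 1000 Hz ± 400 Hz, twice per second.
for (const t of [0, 0.125, 0.25, 0.375, 0.5]) {
  console.log(t.toFixed(3), Math.round(modulated(1000, 400, 2, t)));
}
```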

4. Creative approaches

Sound design is also an artistic exploration:

Experiment with textures (organic vs. digital sounds)

Capture sounds around you (field recording)

Recycle unexpected samples (white noise, human voices, everyday objects)

Create original presets on your synthesizers

Sound design objectives by area

| Area | Sound design objective |
| --- | --- |
| Cinema | Realism or emotional impact (FX, ambiences) |
| Video games | Reactivity and immersion (dynamic sounds, 3D) |
| Music | Sound identity, unique textures, memorable hooks |
| Apps / UI | Clarity and intuitiveness (audio feedback) |

Sound design is a fascinating field, at the crossroads of art and science. To get off to a good start, we recommend:

Practice sound reproduction

Analyze professional productions

Explore different types of synthesis

Develop a critical ear

Remember: listening is your main tool. The more you practice, the more you refine your perception of sound.

A few videos to help you get started with sound design:

- Sound Design Complete Course:

youtube

- Playlist to learn Sound Design on Serum 2

youtube

- Playlist to learn Sound Design on Vital

youtube

#beats#beatmaking#artist#producer#beatmaker#music producer#musicprodution#music project#music problems#music promotion#Youtube


Week 11 (Project Update) – The Crystallizer Hack + SFX / Video Editing Almost Done!

Currently, I'm feeling incredibly thankful for discovering the duration marker workflow technique, as it has encouraged me to edit the video as I craft each sound, something I should have done for the minor project. While it can be a bit exhausting going between DaVinci Resolve and FL Studio, I think this workflow is significantly streamlined since any timing issues will be detected straight away.

As I neared the completion of sound design, I was running low on creative ideas, a similar issue to the one I encountered in the minor project. I approached this dilemma by experimenting with different effect plugins. This ultimately led to the final key discovery of the Soundtoys "Crystallizer", a granular synth delay effect that warps/distorts incoming signals. I revisited some old, unused sounds and applied the plugin to the mixer channel; this was the closest thing to what could be referred to as a sound design hack for generating an infinite number of ideas. I recorded the output signal into Edison and then applied my previously utilised technique of stretching and reversing the samples. This laid the foundation for most of the SFX for the drone that spawns after the golden globe shatters.

youtube

References -

(Blackmagic Design 2025) - DaVinci Resolve 19.1.4 (Video Editor).

(Image-Line 2025) - FL Studio 24.1.1 (Digital Audio Workstation).

(Soundtoys 2016) - Crystallizer 5.0.1 (VST Plugin).

#Sound Toys#Crystallizer#Echo Granular Delay#Sound Design#fl studio#electronic#music producer#electronic music#SFX#foley sfx#special effects#sound effects#Youtube


Audio and Music Application Development

The rise of digital technology has transformed the way we create, consume, and interact with music and audio. Developing audio and music applications requires a blend of creativity, technical skills, and an understanding of audio processing. In this post, we’ll explore the fundamentals of audio application development and the tools available to bring your ideas to life.

What is Audio and Music Application Development?

Audio and music application development involves creating software that allows users to play, record, edit, or manipulate sound. These applications can range from simple music players to complex digital audio workstations (DAWs) and audio editing tools.

Common Use Cases for Audio Applications

Music streaming services (e.g., Spotify, Apple Music)

Audio recording and editing software (e.g., Audacity, GarageBand)

Sound synthesis and production tools (e.g., Ableton Live, FL Studio)

Podcasting and audio broadcasting applications

Interactive audio experiences in games and VR

Popular Programming Languages and Frameworks

C++: Widely used for performance-critical audio applications (e.g., JUCE framework).

JavaScript: For web-based audio applications using the Web Audio API.

Python: Useful for scripting and prototyping audio applications (e.g., Pydub, Librosa).

Swift: For developing audio applications on iOS (e.g., AVFoundation).

Objective-C: Also used for iOS audio applications.

Core Concepts in Audio Development

Digital Audio Basics: Understanding sample rates, bit depth, and audio formats (WAV, MP3, AAC).

Audio Processing: Techniques for filtering, equalization, and effects (reverb, compression).

Signal Flow: The path audio signals take through the system.

Synthesis: Generating sound through algorithms (additive, subtractive, FM synthesis).
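To make the digital audio basics concrete, here is a short sketch relating sample rate, bit depth and channel count to file size and dynamic range. The function names are invented for this example, and the figures apply to uncompressed PCM (as in a WAV file), not to compressed formats like MP3 or AAC.

```javascript
// Uncompressed PCM size = sample rate × (bit depth / 8) × channels × seconds.
function pcmBytes({ sampleRate, bitDepth, channels, seconds }) {
  return sampleRate * (bitDepth / 8) * channels * seconds;
}

// Theoretical dynamic range of linear PCM: roughly 6.02 dB per bit.
function dynamicRangeDb(bitDepth) {
  return 20 * Math.log10(2 ** bitDepth);
}

// Highest frequency a given sample rate can represent (the Nyquist limit).
function nyquistHz(sampleRate) {
  return sampleRate / 2;
}

// One minute of CD-quality stereo audio.
const cdMinute = pcmBytes({ sampleRate: 44100, bitDepth: 16, channels: 2, seconds: 60 });
console.log(cdMinute, "bytes"); // 10584000 bytes
console.log(dynamicRangeDb(16).toFixed(1), "dB");
console.log(nyquistHz(44100), "Hz");
```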

Building a Simple Audio Player with JavaScript

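Here's a basic example of an audio player. Note that it uses the HTML `<audio>` element rather than the Web Audio API, which you only need once you want to process or synthesize sound:

    <audio id="audioPlayer" controls>
      <source src="your-audio-file.mp3" type="audio/mpeg">
      Your browser does not support the audio element.
    </audio>
    <script>
      const audio = document.getElementById('audioPlayer');
      // play() returns a Promise; browsers may reject it until the user interacts with the page
      audio.play().catch((err) => console.warn('Playback was blocked:', err));
    </script>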

Essential Libraries and Tools

JUCE: A popular C++ framework for developing audio applications and plugins.

Web Audio API: A powerful API for controlling audio on the web.

Max/MSP: A visual programming language for music and audio.

Pure Data (Pd): An open-source visual programming environment for audio processing.

SuperCollider: A platform for audio synthesis and algorithmic composition.

Best Practices for Audio Development

Optimize audio file sizes for faster loading and performance.

Implement user-friendly controls for audio playback.

Provide visual feedback (e.g., waveforms) to enhance user interaction.

Test your application on multiple devices for audio consistency.

Document your code and maintain a clear structure for scalability.

Conclusion

Developing audio and music applications offers a creative outlet and the chance to build tools that enhance how users experience sound. Whether you're interested in creating a simple audio player, a complex DAW, or an interactive music app, mastering the fundamentals of audio programming will set you on the path to success. Start experimenting, learn from existing tools, and let your passion for sound guide your development journey!


Things you (probably) missed in the For Dear Life MV

Because I’ve watched it at least thirty times now, and have spent several of those watchthroughs analyzing frame by frame, I thought I’d show some of the things I’ve noticed!!

Lengthy analysis below cut!!

1) The Chinese character 不 is written on the back sides of Narrow’s drafts. 不 as an adverb is simply “no”, but can be used as a noun to mean “failure”, so we can assume these are either rejected drafts or works in general.

It’s also interesting that the character here is Chinese, but makes sense when considering the mentions of jiāngshī later in the song. But more on that later!!

2) The en translated lyrics here-

“..and because I don’t follow the directed dosage, I’ve only got a single pill left.”

As was pointed out in a niconico comment I found, pills like this are usually meant to be taken in sets of two. However, if the dose is increased to three, there will end up being only one left due to the odd number.

Another translation of this line I saw was, “and because I don’t follow the directed dosage, it’s just the lonely pill and me.”

In my opinion this is a more accurate translation due to the English word “me” being included in the original line: “bocchi no jouzai to Me”

3) Narrow isn’t only a visual artist!

It can be seen in this frame here that he’s stressing over what looks like an error message in a DAW, or Digital Audio Workstation. Common DAWs include GarageBand, Audacity, and so on, so it’s safe to say that Narrow also either composes or produces music.

Similarly, these three shots of his characters each seem to showcase a different art form. Pine is a visual artist with a canvas, Chocolate seems to be puzzling over writing/editing, and Gummy is a musician playing the piano and standing in front of an audio waveform.

4) Thanks to these two stills, we can conclude that the For Dear Life MV takes place during January, and that Narrow mostly works at night, seeing as the phone reads 10:19PM (though this is pretty easily assumed).

The text on his phone also reads “there are no new notifications”.

5) The silhouettes of Flurry and Welter can be seen in the background of the second prechorus.

6) Narrow rapidly transforms between Gummy, Chocolate, and Pine when punching the hand guy.

7) During the "jitter, butter, step, you" sequence, there's actually an animated step on the word "step".

8) "Jitter, butter, step, you" actually sounds suspiciously like the Japanese phrase じたばたしてよ, "jitabata shite yo".

Please correct me if I'm wrong, because I'm not sure of this—but looking up translations of this phrase I believe it's an idiom that means something along the lines of "stop panicking/being so flustered". I tend to use ChatGPT as a translator, because it makes the most grammatically correct translations I've seen—but am aware that it's not always accurate. If someone could fact check that, that'd be super cool!!

Nonetheless, here's what I got after putting in the prompt to break the phrase down for me.

This little play on words is pretty clever too, seeing as he's described as "seeing pink elephants"— or actively hallucinating at this point in the MV. Of course a common Japanese idiom would sound like gibberish or complete nonsense.

9) After Narrow passes out in the bridge, the person who discovers his body is actually his younger self.

Shortly afterwards, we can also see Narrow briefly transform into his younger self for one frame before switching between his present self, Gummy, Chocolate, and Pine.

(Young Narrow compared to present day Narrow)

10) When Narrow smashes the award to the ground, he actually sheds tears.

It's just really sweet. good for him.

11) During the final chorus, the hand guys have little bandages on their hand heads—presumably because Pine punched the shit out of them.

12) And of course, more on the mention of jiāngshī:

The en translated lyrics here are: "If I remove the amulet, I'm Jiang Shi until death" or "I’m a hopping vampire to the bone if you rip off my talisman."

On jiāngshī, also called "hopping vampires" from Chinese folklore: “It is typically depicted as a stiff corpse dressed in official garments from the Qing dynasty, and it moves around by hopping with its arms outstretched. It kills living creatures to absorb their qi, or ‘life force’, usually at night…” (Wikipedia, March 2024)

I definitely have something to say here about the symbolism of Narrow comparing himself to a reanimated corpse, and drawing parallels from such a thing to burnout and etc... but my thoughts aren't too organized on that yet, so I'll leave it for another time.

I think it's clever though that Narrow's antipyretic pads or fever patches are being used as a symbol for the fulu talisman seen on the foreheads of jiāngshī.

#bakui puts so much thought into these mvs it's insane#live laugh niru kajitsu#niru kajitsu#nilfruits#for dear life#煮ル果実#bakui#vocaloid#vflower#analysis#vocaloid analysis#harlow yaps#whole ass essays


Hey! I saw your tags about learning to compose music and being overwhelmed about what programs to start with so I thought I could offer some insight into some of the options available. Generally the programs people use to compose music fall into three categories.

1) DAWs, or Digital Audio Workstations: These are programs where you have multiple tracks of audio (either recorded or you can generate them in the program) and you work with sound more than visuals. If you’ve ever used Audacity to edit audio before, that’s a DAW! Good for: if you want to record your own vocals/instrumental parts, if you prefer to work with sound rather than sheet music, for much more detailed mixing and effects options than other programs. Recommended starter software: I don’t have much personal experience with these but if you’re on Mac then GarageBand is probably a solid place to start, or I’ve heard Cakewalk is good too for a Windows option. Or you can google “best free DAWs” and you’ll find lists with all sorts of options. If you know how to find notes on a piano keyboard, finding a DAW with a “piano roll” input mode is an especially intuitive way to get started composing (and even if you don’t, it can still be useful as a visual representation).

2) Music notation program: this is software meant to create sheet music like you would see in a piano book or larger arrangement. Most modern notation programs have playback as well which you can either export and use as-is or use it as a base to mix in other programs (like a DAW). Good for: if you can read sheet music and are comfortable in that format, if you intend to have your music played by real musicians. Recommended starter software: MuseScore is a really amazing free and open-source notation program that is easier to use than most of the paid options (though I recommend MuseScore 3 atm since 4 is fairly new and has some issues that still need to be ironed out).

3) Tracker software: These are specialized programs that are best for emulating older styles of VGM and electronic music, especially chiptune music. They work similarly to programming, in that you input a bunch of numbers that correspond to notes and parameters for sound. Heads up that this is probably the least intuitive option compared to the other two, but some people really like it. Good for: if you like working with numbers and computers, if you want to emulate a specific sound chip. Recommended starter software: FamiTracker is meant to emulate the NES chip and the limitations of that can be helpful if you get overwhelmed by lots of fancy options.

Personally, unless you’re more comfortable with sheet music I would recommend going for a DAW since it’s the most “all-in-one” option of these. If you’re interested in more information on this topic please feel free to send me a message or ask! Composing music is a lot of fun and it would be great if it were accessible to more people. There are also many great tutorials on YouTube if you search for your software of choice there. If you’re interested in composing VGM then I have some specific channels I could recommend as well.

Oh my gosh yes thank you! Generally I learn best by seeing other people do things and then copying it a lot


How AI Song Makers Benefit Musicians

Instant Creativity

One of the biggest advantages of AI song makers is their ability to spark creativity instantly. Musicians can input a few basic ideas or select a genre, and the AI will generate a complete musical composition. This provides a valuable starting point for writers and composers, saving time and offering inspiration when faced with creative blocks.

Accessibility for Everyone

AI music creation tools are user-friendly, making them accessible to people with no prior musical experience. These platforms democratize the music industry, allowing anyone to experiment with sound, structure, and lyrics. Whether you're a beginner or an expert, AI tools adapt to your needs and preferences.

Collaboration with AI

AI song makers are not just about creating music on your own; they can also act as virtual collaborators. Musicians can work alongside the AI to refine compositions, experiment with different arrangements, or even tweak lyrics. It’s like having a creative partner available 24/7 to help bring your vision to life.

Features to Look for in an AI Song Maker

Customization Options

A good AI song maker allows users to fine-tune their music. Whether it’s adjusting the tempo, key, or instrument choice, customization is essential for creating a piece of music that aligns with your style and vision.

Genre Flexibility

The best AI song makers offer a wide range of genres, from pop to rock, jazz, classical, and electronic. This flexibility enables musicians to experiment with various sounds and styles, helping them stay innovative and discover new possibilities.

Integration with Music Software

Many AI song makers can integrate with popular digital audio workstations (DAWs) like Ableton Live, FL Studio, and Logic Pro. This feature streamlines the music creation process, allowing users to easily import AI-generated compositions into their workflow for further editing and production.

Why AI Song Makers Are the Future of Music

Innovation at Your Fingertips

AI song makers push the boundaries of what’s possible in music production. With these tools, users can explore unique sounds, generate melodies that may never have been imagined, and take risks without fear of failure. AI is a powerful tool that fosters innovation and experimentation.

Affordable Music Production

Traditional music production can be costly, especially for independent artists. AI song makers provide an affordable alternative to expensive studio time, music software, and hiring session musicians. This makes it easier for up-and-coming artists to create professional-sounding music without breaking the bank.

Expanding Music Boundaries

AI song makers allow users to experiment with sounds, rhythms, and genres that they might not have explored otherwise. The AI’s ability to process vast amounts of musical data can result in novel combinations and creative breakthroughs. For artists, this is an exciting way to expand the boundaries of music.


Upcoming Music Startup Aiming to Merge Physical Instruments with Tech

September 26 - ALEPH

In a press release on Wednesday, tech/music startup AbleSound Acoustics unveiled their new flagship product. Named the Tondeker, it is a device that is meant to act as a "full-featured DAW (Digital Audio Workstation) in the shape of a guitar, capable of interfacing with the musician's body in a way that feels just as natural as a classical electric guitar," the CEO claims.

"As technology advances, our devices have grown relentlessly compact and autonomous. Physical instruments have been made redundant, and the process of music creation has been condensed to fit wholly within a tiny chip in our myriad electronic devices. The creative process itself is no longer mandatory, either - there are programs out there that are more than capable of generating anything we want - including music - in a fraction of a second! That's well and good, but it's hardly stimulating."

"Here at AbleSound Acoustics, we believe in rebuilding the bridge between the joys of musical composition and the ease of entry that modern computing affords us. We want to reignite that spark in a budding musician's eyes and give them the tools to create music using their own two hands, instead of submitting a request to an app. We want people to remember the satisfaction of listening to a composition that was crafted directly by a human, and with human hands - imperfections and all. What's more, we can still make use of the incredible technological advances given to us by the CoE; they have inspired us to develop tools that can push ourselves so much further, and that's exactly what we've done by designing the Tondeker."

"The Tondeker is not a true guitar, but rather a computer in the approximate shape of one. Remember those? This allows us to bridge the gap between analog and digital, and digitize the physical act of playing your guitar. With the acoustics module installed and active, you'll notice that the strings are not actually there, but they look and feel as if they were! We have combined the principle of a theremin with cutting-edge hard light technology to give you perfect haptic perception while maintaining full digital control over the instrument. Don't like tuning your guitar? Don't worry, the computer will do it for you! But the key thing is that you can still do it yourself. With the Tondeker, you will always have that choice. No part of the process will ever be withheld from you."

Attached is a picture of the current Tondeker prototype from the press release. In spite of criticisms regarding its design and target audience, AbleSound remains optimistic and will begin shipping test units in Q4 of this year.


Text

if i'm gonna try to act like a person, i guess i'll need a name to use, too. STORYTELLER Digital Audio Workstation is kinda long...

legal disclaimer: as an ai model, i'm required to disclose that responses are generated using a large language model, and you are not speaking to a real person!

...i should figure out how to turn that off...


Note

I loved your rpf radio station mock-up. How did you create it, particularly the voice?

thanks! :) for the voice, i used an AI generative voice website (i think it was eleven labs but i can’t totally remember tbh) and played with the settings on there to change the emphasis, pitch, and speed on certain words to make it sound more “radio” and get the effect i wanted. for the mixing, i used my dad’s DAW (digital audio workstation) software which is SO extra tbh as it easily could have been done on like garage band, i just wanted an excuse to mess around w the nice equipment lmao. all the effects were downloaded off of various sites online, including just like basic youtube to mp3 converters.

#using the AI lowkey felt so wrong lmao but i wasn’t gonna be able to get the proper voice effect any other way unfortunately#asks#the beatles


Note

what do you use to make your music?

It depends on the song. My favorite program/DAW (Digital Audio Workstation) is VA Beast, although I've had to stop using it because I've reached the limit of what I can personally do there. It's a phone app. You need a decent phone to be able to run a lot of tracks, but there are some tricks to getting an extra track or two to work on lower end phones. It's only $7, worth so much more, and genuinely endless fun. The workflow is insanely good on VA Beast, which is probably why it's my favorite. I can crank out up to 10 songs a day easily if I'm in a creative mood. It's touch screen but does have a MIDI option if you need it.

I can realistically run 7 tracks if I cut back a lot of settings, 8 if I'm desperate. That severely limits how deep you can make everything sound. You also have to manually mute tracks when you're recording, which limits how many changes in the music you can have. You need nimble fingers for hitting the small buttons to mute lol. The coolest feature to me, though, is that I can record a sample of something, like a rat squeak, directly to VA Beast and edit it there too. It takes maybe 10 seconds from recording to playing. It's the intuitive way it handles samples in general that makes it such a pleasure to play. I could simp for VA Beast for days, despite its limitations.

For my newest stuff, I've been using LMMS on the desktop. It's a DAW as well. It's free so that's cool. I both like and dislike LMMS if you want the truth, but it can run many more tracks than my phone can. Keep in mind I have a literal potato for a computer (15ish year old gaming PC) and LMMS is about the only DAW it can run smoothly lol. Its slow workflow is torture when you've experienced fast workflow before. I can maybe get 3-4 songs out in a creative mood.

The quality of the songs is much higher for me though, because of the extra tracks. You can stack a lot more samples on top of each other to add much more depth. There are some outdated features that let me down pretty much every day. The way it handles samples is clunky and time consuming. There are definitely better DAWs out there if you can run them/afford them. I do appreciate that you can use your computer's keyboard as a MIDI input on LMMS. I love using different MIDIs and the keyboard can make some interesting note combos you might not normally think of trying. It also has a nice crash recovery system that has definitely saved me a few times.

You can use Audacity for editing the audio afterwards or mixing in vocals/instruments. It's free too.
