#instead of 1 people. & a shape


Text

he's too old for this multi-dimensional family road trip

#gravity falls#gf theseus’ guide#stanley pines#stanford pines#mabel pines#dipper pines#stump art#everything is a boat or boat adjacent . what's up with that#ohhh stan i dont draw u enuf ....#ur problem is you hang out exclusively with other people#so if i want to draw you i have to draw multiple people#instead of 1 people. & a shape#tragic

335 notes


Text

A 1 design I cooked up!

#cricket doodles#my art#bfdi#tpot#xfohv#battle for dream island#the power of two#osc#object show community#bfdi one#tpot one#xfohv one#gijinka#bfdi gijinka#x finds out his value#algebraliens#osc gijinka#SO MANY FUCKING TAGS.#Anywho 1 looks a little more cartoony than my other osc gijinkas but that's because I have different rules for algebraliens than objects#Mainly bc well. They're aliens. But also because they're stylistically different from the objects#They have eye whites and malleable shapes and thick limbs that make them much more expressive and squishy looking#So! Instead of making them look like people that are themed around a specific object I made them look like cartoon characters.#Their clothes are essential to their silhouettes and also basically part of their body– they also don't have noses#and also have these huge wide eyes rather than the dots I draw object gijinkas with#In short I put much more focus on silhouette and shape language for algebraliens. I need her to still look like a 1 with legs

217 notes


Text

No one can stop me from making Pedro-shaped tortured little guys

(text under the cut if it's too small to read!)

Subject 116 Unwilling test subject and lab assistant

116 does not remember their name, nor precisely how they ended up in this predicament, only that they were sold off to the Professor at a shady auction.

116 has also lost track of time; they suspect Ctrl + Z did not reverse the effects of the eternal youth formula the Professor shoved down their throat that one time.

Unable to do anything due to the numerous irremovable cuffs on their body, 116 has no choice but to put up with whatever the Professor has in mind for the day.

It's only been them and the Professor for years now (if not longer), so they're biding their time until something happens.

Spends their free time trying to escape.

The scientist using them (he insists 116 call him Professor) created a formula able to reverse any change to 116's body, colloquially called "Ctrl + Z" (it tastes like a mix of liquefied butter and cinnamon).

Anti-gravity and paralyzing cuffs activate if they try anything

#Hello people I am alive#Pedro-shaped#hey new tag!#Subject 116#116 my beloved#basically I kept imagining Pedro in situations™ but i was too lazy to create another AU for it#so I just decided to make a little Pedro-shaped guy instead#I have a few of these ''non-AU'' Pedro-shaped dudes#Junkyard King is one of them#anyway#haven't posted art in a while#sorry about that#how y'all been?#toodles#also please reblog and not just like#it brings me down when a post has 10 notes but 1 reblog#thank you!#make an artist's day and reblog their stuff!

18 notes


Text

Can we please tag x female reader smut appropriately. Please.

#mori speaks#im begging#for all my transmen out there who struggle with gendered body terms#who see all the 'shapely fem reader gets her hips blown out' when looking for their fave character#and get triggered immensely despite trying so hard to accept it but cant fucking do it#i block every tag i can think of and still see everything im trying to run from#no one needs to change anything#just add a little tag#one (1) tag#its like tiktokers avoiding tags.#like the 'self care' thing going on there.#instead of using the real word for it. masturbating.#also to fellow humans who cant stand a gendered body! who just want to be#but also people who have gone through something really traumatic and cant read anything remotely close to sex between#two people.#its hard! its hard shit to go through.#and to be reminded of it because someone didnt use the tag you blocked- fucks your entire week.

10 notes


Text

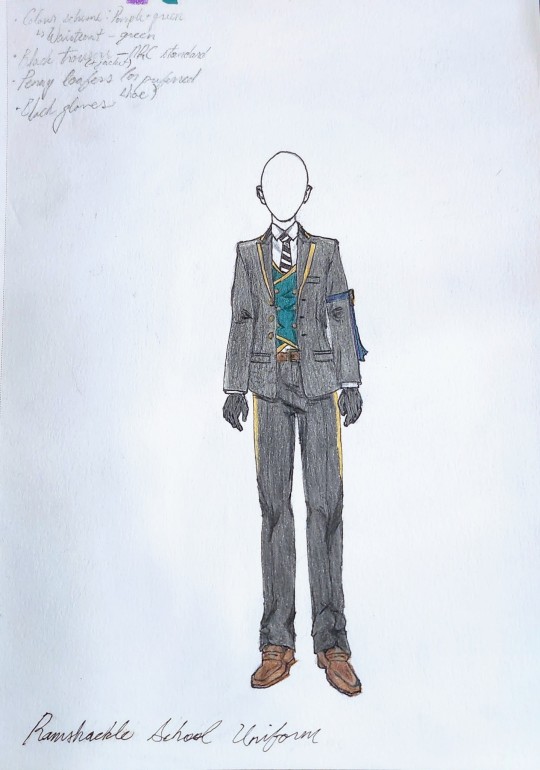

Ramshackle Uniforms

So, a while ago, I made some possible designs for uniforms for Ramshackle dorm, but haven't posted them anywhere yet. This is what I came up with! (please pardon the wonky writing)

School Uniform

Colour scheme: Purple and green

Waistcoat - green

Black trousers & jacket - NRC standard

Penny loafers (or preferred shoe)

Black gloves

The ribbons on the armband are purple (the same as Grim's ribbon) and green (the same as the dark green/teal of Ramshackle's walls). Additionally, the insignia on the armband is the NRC crest.

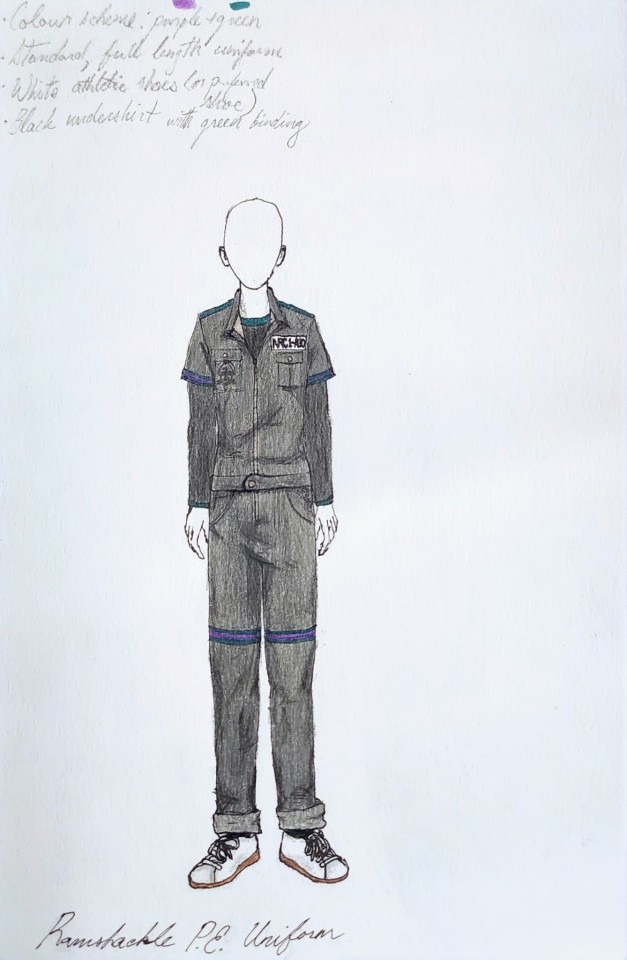

P.E. Uniform

Colour scheme: Purple and green

Standard, full-length uniform

White athletic shoes (or preferred shoe)

Black undershirt with green binding

The insignia on the right chest pocket is, again, the NRC crest.

Labwear

Colour scheme: Purple and green

Standard lab coat and goggles

Insignia on left arm (Night Raven College)

School Uniform beneath

Preferred shoe (ex. penny loafers)

Since the Ramshackle prefect has no magic, they do not have a magic pen in their lab coat pocket like the other students. (Same as in the school uniform.)

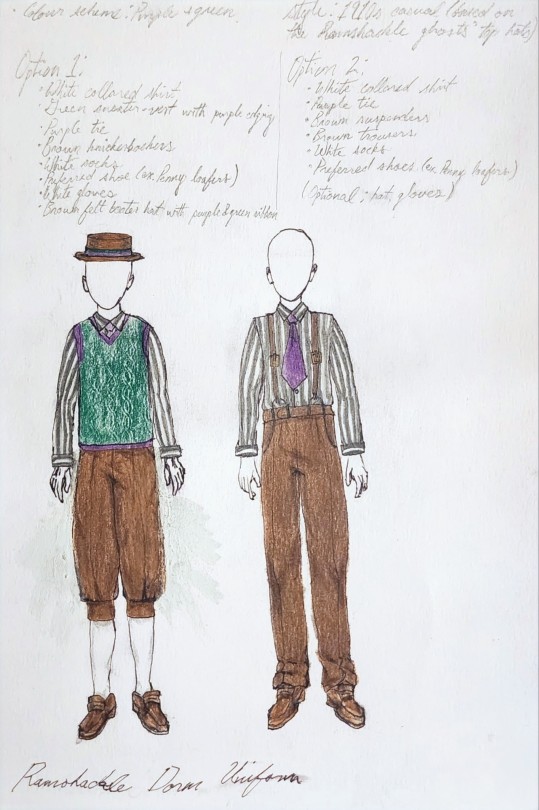

Dorm Uniform(s)

Colour scheme: Purple and green

Style: 1910s casual (based on the Ramshackle ghosts' top hats)

Option 1:

White collared shirt (with black stripes)

Green sweater-vest with purple edging

Purple tie

Brown knickerbockers

White socks

Preferred shoe (ex. Penny loafers)

White gloves

Brown felt boater hat with purple & green ribbon

Option 2:

White collared shirt (with black stripes)

Purple tie

Brown suspenders

Brown trousers

White socks

Preferred shoe (ex. Penny loafers)

(Optional: Hat, gloves)

Note: These are all the variations of the uniform I would most likely wear, so they include my own preferences for looseness/buttoning, shoes, gloves, etc. Other Ramshacklers may have different preferences and, thus, would wear things differently!

Additionally, the colours I was using (specifically the green and purple ink I used for the armbands and such) were a bit too dark, so it's sort of hard to see the differentiation between the different coloured stripes. In reality, they would be slightly brighter shades, and more easily discerned from one another.

#twst#twisted wonderland#ramshackle#krenenbaker's :)#krenenbaker's creations#<-new tag for my projects I think!#I'm still not quite used to drawing people - I usually focus on landscapes when I draw but I'm learning some other things!#you can pretty easily tell who I used as reference for the different outfits hey?#also#ignore the white-out in the dorm uniform option 1...#I made the knickerbockers the wrong shape and only noticed after I had lined and coloured already!#;^^#and fun fact: drawing folds/creases on striped shirts is REALLY tricky to figure out!#I probably could have given them faces but I left them empty instead :) Now they're blank slates to insert your own idea of the wearer!#(or something?)

18 notes


Text

oh boy i have to write a 5-6 page autobiography about my Family Experience. Bro my family is the kind of white that, when they came to the US, absolutely obliterated their own cultural background in order to assimilate. It's like if you took a 1950s era recipe for hot dogs in gelatin and turned that into a family philosophy. There's legitimately nothing there. On top of that no one lives within 500 miles of each other and we're all rather poor so no one sees anyone basically ever.

#i mean i'm going to talk about these things bc i have nothing else but.#it doesn't have much to do with proving myself as a Potentially Good Leader lmao#my values have been shaped most by 1. people i've met (not blood) and 2. politics and world events#imo the whole American Individualism thing has really fucked my miserable little tribe over#instead of banding together and supporting each other everyone moves away and never speaks#I'm pretty envious of people with big close families even if they do fight constantly#text posts

1 note


Text

Thinking about different languages in the Fodlan verse -

(Duscurian, Srengese, Almyran and Brigidan are mentioned/referred to in supports, so I wouldn't be surprised if Dagda, Morfis and Albinea had their own languages too)

What if Sothis and the Nabateans had their own language?

Let's say Sothis lands in modern Fodlan, and the humans around speak a weird language she doesn't understand, but thanks to "god magic" she manages to learn it in 1 day.

And yet, when she creates her kids, she gives them her own language (from the blue sea star?) which de facto becomes, on this planet at least, Nabatean language.

Of course her kids have to learn the "human language", and in turn, for the humans who want it, they could learn the Nabatean one.

(Cichol had a cringe phase where he tried to use Agarthan slang, but no one is alive to tease him about his younger days)

Zanado 1 (the place where Sothis lived) became some sort of university/giant library where humans could come and study the languages of other places; people from Thinis could learn Agarthan, Nabatean or proto-Morfisan, etc.

The Nabatean language was used as the liturgical language (since some people considered the Nabateans gods?) in early Sothisism practices.

Thales was kind of pissed, because in his view there was no need to learn anything about Thinis and those other trash states since they were going to be destroyed anyway; then he started a war against Sothis herself, and we know how that ended.

Fast forward to Zanado 2 (built on the ruins of Zanado 1): Nabatean is used exclusively as a liturgical language, even as it falls further out of use, because there's no official Church or cult, and after the war there are fewer and fewer Nabateans roaming around to talk to or to share their culture with (but the few who travel bring back the "modern and updated" versions of the human languages they learnt back then, like, idk, Archaic Palmyran became Early Almyran, or something like that).

Nemesis parties in Zanado, and Rhea is convinced Nabatean is, bar her, an extinct language (since some humans who worship the Goddess have completely forgotten the earlier Sothisism rites (tfw 1500 years happen) and sort of do things their own way).

Getting a new language update (tfw Rhea still thinks humans in the south speak "Old Enbarrian" when they now use "Classical Enbarrian" and "Vulgar Enbarrian"), Seiros feels conflicted about the new hymnals and sermons of the newly founded "Church of Seiros" being in "Classical Enbarrian": they should be in Nabatean, but why teach those humans a language only spoken and understood by the dead?

Besides, as long as Nemesis exists, Nabatea and everything related to Nabateans should be hidden, or else she might be turned into a golden weapon.

Of course, for "Willy reasons", she ended up trying to teach him some words, and Willy was very surprised to learn that the Nabatean language has no swear words, so he decided to translate some swear words into it: a roundabout way of telling her the Nabatean language isn't extinct yet, that it survives and can even evolve through new uses!

(Now, did Rhea overthink this and decide Willy was really a genius, or was he just being stupid and wanting to insult people in a different language? We will never know)

Thus, Seiros the Warrior believes that after the War, once all the Relics are found and Nemesis is dead, she can teach the Nabatean language to her followers, so they can share the language and sing hymnals in Nabatean.

(This idea is fairly popular in the Northern provinces, because it'll be another language and not "Enbarrian", so in a way, this church business isn't another way to "Enbarrianise" them (or so they think?).)

But after the WoH and Lycaon's death, Rhea loses faith in humanity and, in turn, reconsiders her idea of teaching the Nabatean language to humans through the Church. After all, humans are humans, and Nabateans cannot coexist with them, even through language; only Sothis can help them and save Fodlan.

So the CoS will use Classical Enbarrian (the lingua franca in Southern Adrestia), but she'll allow people to preach in their vernacular languages, because the most important thing is for the CoS's message to be spread (and not to pretend that a dead language of dead people, or of people near extinction, can survive, because Nabateans need the Goddess).

Fast forward several centuries...

Yuri finds an old bottle of hair dye in the Abyss, labeled in what is definitely the Archbishop's handwriting. When he confronts her about storing her personal belongings in the Abyss (she tried to bullshit him about "Saint Seiros' old belongings", but he won't have any of her nonsense: why would Saint Seiros use hair dye??), Rhea is very surprised, because Yuri was able to read the Nabatean alphabet and knows the language.

The hair dye incident makes her reconsider: is the Nabatean language really dead? Why did Aubin teach Yuri the language? Can she have faith in humanity again? And hope that maybe, one day, Nabatean culture can be revived?

#fodlan hc#fodlan nonsense#because i have no imagination#i imagine the nabatean alphabet to be something like aramaic#which wouldn't make sense for modern people living in Fodlan like duh those aren't letters#Sitri might have tried to sew herself bibs for Billy with nabatean alphabet#she even had a bright idea to ask Rhea to order molds shaped like nabatean letters#to bake cookies for Billy and their future friends!#so they could learn while eating!#but the molds and bibs were sold by Jerry during his escape because he needed booze#he never understood why she kept on drawing those strange symbols maybe it was one of her hobbies#Lycaon 1 called Cichol a 'pot head' in Nabatean when he was very angry at him for telling him to stop holding hands with his friends#and start studying instead#that is when Cichol knew letting Seiros teach the language to people would never work#Nabatean stuff

6 notes


Text

These dates and cutoffs are all wrong

#for those who say generations don't exist they do you are confusing them with peers#you are the same generation of someone 10 years younger or 5 years older and can hardly relate except for certain things#you're still the same generation. xennials and zennials for instance instead are sometimes a thing on their own like gen jones for boomers#or can feel more on one side or the other. previous gens until gen x(the worst if u ask me but everyone forgets them always)are a lot more#defined by times than the rest that's why u get confused. zennials are 97-99 they say not 92. a 92 liner is a full millenial#u can search online. also boomers are baby boomers aka from 45 after ww2 since 64 i guess AND THET HAVE EVERY RIGHT TO STAY HERE#stereotypes are bad 1. bc ofc u can be 40 and being raised in a way that makes u feel younger or older 2. not every boomer is a usa rich#spoiled brat. generation is a broad term to indicate the geo political economical state not the people and personally i think it's#important/interesting bc of parenting style pop culture and sociology but it's not a mark and we're talking about million of people ofc#u can be different. this said i feel very millenial and i actually like my gen but i also see its flaws and can't deny the world shaped us

33K notes


Text

the hex❤️🥀

#Shape game posting#AAiUAGGRHUAJDHYSHAJDNKS. Clenches jaw. Falls to my knees#Really big on that thing I see people doing where they use random fucking emojis instead of regular faces#🪥🪥🪥 > 😭💔#“🪂🪂🪂” 🛠️🛠️🛠️🛠️#That’s so funny to me#I’m gonna make a hex charm to match my gameworks/irving one I thibk#Ugh should I make it a character name charm or just the little clay piece…#I guess I can make 1 for Reggie or something. Hi old man.

0 notes

Text

Zoom In, Don’t Glaze Over: How to Describe Appearance Without Losing the Plot

You’ve met her before. The girl with “flowing ebony hair,” “emerald eyes,” and “lips like rose petals.” Or him, with “chiseled jawlines,” “stormy gray eyes,” and “shoulders like a Greek statue.”

We don’t know them.

We’ve just met their tropes.

Describing physical appearance is one of the trickiest — and most overdone — parts of character writing. It’s tempting to reach for shorthand: hair color, eye color, maybe a quick body scan. But if we want a reader to see someone — to feel the charge in the air when they enter a room — we need to stop writing mannequins and start writing people.

So let’s get granular. Here’s how to write physical appearance in a way that’s textured, meaningful, and deeply character-driven.

1. Hair: It’s About Story, Texture, and Care

Hair says a lot — not just about genetics, but about choices. Does your character tame it? Let it run wild? Is it dyed, greying, braided, buzzed, or piled on top of her head in a hurry?

Good hair description considers:

Texture (fine, coiled, wiry, limp, soft)

Context (windblown, sweat-damp, scorched by bleach)

Emotion (does she twist it when nervous? Is he ashamed of losing it?)

Flat: “Her long brown hair framed her face.”

Better: “Her ponytail was too tight, the kind that whispered of control issues and caffeine-fueled 4 a.m. library shifts.”

You don’t need to romanticise it. You need to make it feel real.

2. Eyes: Less Color, More Connection

We get it: her eyes are violet. Cool. But that doesn’t tell us much.

Instead of focusing solely on eye color, think about:

What the eyes do (do they dart, linger, harden?)

What others feel under them (seen, judged, safe?)

The surrounding features (dark circles, crow’s feet, smudged mascara)

Flat: “His piercing blue eyes locked on hers.”

Better: “His gaze was the kind that looked through you — like it had already weighed your worth and moved on.”

You’re not describing a passport photo. You’re describing what it feels like to be seen by them.

3. Facial Features: Use Contrast and Texture

Faces are not symmetrical ovals with random features. They’re full of tension, softness, age, emotion, and life.

Things to look for:

Asymmetry and character (a crooked nose, a scar)

Expression patterns (smiling without the eyes, habitual frowns)

Evidence of lifestyle (laugh lines, sun spots, stress acne)

Flat: “She had a delicate face.”

Better: “There was something unfinished about her face — as if her cheekbones hadn’t quite agreed on where to settle, and her mouth always seemed on the verge of disagreement.”

Let the face be a map of experience.

4. Bodies: Movement > Measurement

Forget dress sizes and six packs. Think about how bodies occupy space. How do they move? What are they hiding or showing? How do they wear their clothes — or how do the clothes wear them?

Ask:

What do others notice first? (a presence, a posture, a sound?)

How does their body express emotion? (do they go rigid, fold inwards, puff up?)

Flat: “He was tall and muscular.”

Better: “He had the kind of height that made ceilings nervous — but he moved like he was trying not to take up too much space.”

Describing someone’s body isn’t about cataloguing. It’s about showing how they exist in the world.

5. Let Emotion Tint the Lens

Who’s doing the describing? A lover? An enemy? A tired narrator? The emotional lens will shape what’s noticed and how it’s described.

In love: The chipped tooth becomes charming.

In rivalry: The smirk becomes smug.

In mourning: The face becomes blurred with memory.

Same person. Different lens. Different description.

6. Specificity is Your Superpower

Generic description = generic character. One well-chosen detail creates intimacy. Let us feel the scratch of their scarf, the clink of her earrings, the smudge of ink on their fingertips.

Examples:

“He had a habit of adjusting his collar when he lied — always clockwise, always twice.”

“Her nail polish was always chipped, but never accidentally.”

Make the reader feel like they’re the only one close enough to notice.

Describing appearance isn’t just about what your character looks like. It’s about what their appearance says — about how they move through the world, how others see them, and how they see themselves.

Zoom in on the details that matter. Skip the clichés. Let each description carry weight, story, and emotion. Because you’re not building paper dolls. You’re building people.

#writeblr#writing community#writers of tumblr#writing tips#character development#creative writing#writing advice#character description#descriptive writing#show don't tell#world building#narrative voice#writing help#fiction writing#amwriting#writing characters

8K notes


Text

Why The Arcane S2 Finale Fumbled, Part I

arcane season 2 was artistically beautiful and thematically cheap. every interesting and meaningful thing it did with its characters (even in season 2 act 2) was reduced to romanticized bullshit, utterly divorced from its season 1 roots. it's so bad it can be considered pro-status quo propaganda (and i do mean that). good ships aside (and i do mean the caitivi, jayvik, timebomb holy triad), this season squats and shits on every zaunite character in the show. not just their zaunite-ness, but how it literally shaped who they were as characters.

Let's start with Vi:

Vi and Vander:

Vi's loyalty to The Lanes always went beyond Powder. Zaun was her father Vander's dream. Zaun was her friends and her family. When she's giving Caitlyn a tour of The Lanes, we see how much she embodies and revels in Zaunite culture (esp. in the food scene). She took responsibility for the vulnerable, like Vander taught her to. Her "protective" trait extended to ALL the vulnerable in The Lanes, because Vander taught her that. It wasn't EVER just Powder. Zaun is her HOME. As a child, she wanted to make a name for herself IN ZAUN: "one day, this city's gonna respect us." You can make the excuse that Vander's death meant that side of her died, but it clearly didn't, judging by how she regarded it while showing Caitlyn around. "Family," to Vander, extended to the vulnerable of Zaun, which is how Vi and Powder came to be his "daughters" in the first place. Because Zaun was for THEM. Zaun WAS THEM. Vander and Silco "weren't allowed to fail" at Zaun (i.e. the two daughters).

Additionally, Vi and Jinx were supposed to succeed where Vander and Silco hadn't: forgiving each other and uniting so they could realize their dream for a free Zaun. A big reason why Zaun struggles to be free is because of their own internal divisions (the different gangs fighting for scraps). But if they united, they would be able to liberate themselves from Piltover (who is still the enemy). The whole reason the others are prosperous in the alternate timeline Ekko and Heimerdinger travel to is because Vander and Silco reconcile (not because Vi dies).

Vander also passes on his sense of responsibility to Vi. He tells a repeatedly vengeful Vi how her ambition to show up her oppressors can overshadow the more pressing priority of looking after those more vulnerable than her i.e. Powder. Like Silco, his advice to his daughter is steeped in his own trauma of getting so zealous he didn’t stop to think what it would cost.

Vi and Caitlyn:

Caitlyn was an interesting development for Vi, particularly because Caitlyn mirrored Vander's care for all people. Caitlyn was an enforcer that wanted to truly understand and help people. This challenged Vi's biases and also gave them a common goal. Caitlyn appealed to Vi because she gave Vi renewed hope for peace in The Lanes. That Zaun could be free through co-operation instead of violence. Her whole teaming up with Caitlyn, romance aside, was predicated on Vi brokering for peace between Zaun and Piltover (and getting revenge on Silco).

The first break-up between the two (Season 1's "Oil and Water") centred around Jinx, more or less. Vi believes Silco is a threat to peace between Piltover and Zaun (even though The Lanes aren't known as Zaun to her, I'm just using the names interchangeably). She believes Jinx is acting out due to Silco's influence, as well (and she isn't wrong). Had Caitlyn not been injured on the bridge (and had Jinx not felt betrayed by Vi), Vi was going to leave her in pursuit of Jinx. Vi has also never fit into Piltover (and that's also shown in Season 2 Acts 1-2). She makes no connections with Piltovians besides Cait and (an already disillusioned) Loris, who we see for like two seconds.

Vi and Jinx:

This show was ALWAYS about a tale of two sisters/cities. When Vi becomes an enforcer, it isn't because she's switched loyalties. She wants peace for The Lanes, she just wants to take Silco's creation--Jinx--out of the equation so it can work. Her priority, like Vander’s, is to keep the vulnerable of The Lanes safe, and both were willing to co-operate with Piltover to protect the people they loved. She believes, like Vander did with Silco, that Jinx (and by extension Zaun) is her responsibility. That the reason bad things happened to Zaunites (Vi’s and Powder’s parents dead on the bridge, Jinx’s mania) is their fault.

Vi blames herself for creating Jinx as Vander does for creating Silco. The only reason Vi agrees to Caitlyn's plan is because, again, their two goals align: get Jinx. The difference is Vi wants to kill Jinx to kill Silco, while Cait wants to kill Jinx to get her city (mother) back. Vi is still explicitly concerned about Jinx, who she has come to see as a threat to other people she loves (e.g. Ekko, Cait, etc). Vi became an enforcer to protect The Lanes and the appearance of peace. Vi internalized Vander's words and doesn't want war, even if it means Zaun never becomes independent. But she is doing it as penance for what she feels she didn't do that led Jinx to become what she is.

The show in season 2 TOTALLY LOST THIS FOCUS. Vi's guilt at hunting down her own people with enforcers is ALSO ignored a lot by fandom, especially because her post-breakup scene where she goes full goth is framed as regret for letting Cait down (rather than the self-disgust she would feel for joining her oppressors). Vi played a part in creating Jinx and her enforcer-arc is still centred around Jinx. This gets shoved aside for romance with Cait. All the time we could have spent post-breakup focusing on the sisters quickly circles back to Cait.

Cait, who literally became a dictator and weaponized the air ducts her mother had created to SAVE ZAUNITES. The whole thing is viewed as Vi betraying Cait instead of Vi betraying Jinx/Zaun/her family and Cait betraying Vi ("promise me you won't change") and her mother. Cait was the one who sought to help Zaun (like her mother) but betrayed who she was when she was willing to kill Isha, an innocent child.

Vi would feel even more guilty at how she is literally in an enforcer's suit when she is about to kill Jinx, the only family she has left. She is literally hunting down Jinx, a Zaunite, like the enforcers hunted her parents. But we barely explore that. It's just off to chasing Vander/Warwick only for it to not matter anyways!

(ALSO IMPORTANT: Just to further prove my point on how integral the sisters' love for each other was, every show started with a record playing. The cover of the disc was Vi and Jinx. They were always the center focus of the story. The song that the record played? Likely "Our Love" by Curtis Harding and Jazmine Sullivan, which goes "Our love is a bubblin' fountain, our love, that flows into the sea, our love, deeper than the ocean, our love for eternity." This love deeper-than-the-ocean can apparently crumble in the face of a dictator girlfriend you've known for less than a year lmao).

Summary of Fumbles:

-Vi's and Jinx's relationship becomes secondary not just to the entire plot of the show but to Vi's arc. Zaun and Piltover's conflict was set up to be the epitome of the show, and the fact that it got shelved for some (ahem military propaganda) epic battle between humans and robots is very telling about the writers and showrunners.

-Vi forgives Cait easily and prematurely, trashing Vi's true loyalties as established in earlier seasons/episodes and robbing Cait’s actions of their appropriate weight.

-(above is an excerpt from Amanda Overton's interview with TheGamer) The culmination of love for Vi's character ends with Caitlyn and not Jinx. Vi's character, whose love is explicitly centred on Zaun and her family, reaches its peak in romance with a Piltovian. To quote Amanda further: "If Vi had no one left to protect, she would fall in love." As if Vi had no ambition outside of protecting her family. As if her dream since she was a kid wasn't tied so much into Zaun. As if the reason she wore an enforcer outfit wasn't because of Zaun. As if Zaunites aren't suffering right under her nose. As if she was the only kid in Stillwater Prison and struggled to survive the whole time she was there. I can't pretend to stomach it. (Again, it's not that Vi shouldn't be happy, it's that the ship had to shove this aspect of her character aside for it to work). If they wanted to really commit to the Vi-in-video game origin story, they should have set it up better and stuck to it.

-Vi herself takes a back seat in most of season 2, and becomes a passive, guilt-riddled yes-man to Cait.

-Vander's re-introduction is almost completely worthless to the plot and narrative (he comes back just to die), and he is used as a cheap way to re-unite (and then separate) the daughters in a way that has no significance to the themes (also, Silco as Jinx's father is completely ignored during this time)

-Cait's deferral to fascism should have been permanent. Idc about the shippers at this point. Vi and Cait should have never come back from Cait shoving the back of her gun into Vi's injured side (let alone the gassing of the ducts). Vi would've never forgiven her, attraction or no. The fact that Cait could become a dictator after losing one parent is proof of their class divides (after all, Vi held onto hope despite losing all her parents to enforcers and Jinx was all she had left of her family). That should have cemented the death of that relationship (and it would have made for more compelling storytelling on class). I’m thinking it was kept because it matters more to white Western audiences to have a Romeo x Juliet rendition that assuages their classist sensitivities. Cait becoming a fascist made sense and was true to her character and the world. Vi forgiving her (and then having sex with her in the prison she was thrown into as a child?) destroyed both her character and the narrative.

Yeah, yeah, Vi was overwhelmed by the breadth of love and forgiveness Caitlyn had in letting Jinx go, but that's bare minimum shit. Vi "always choosing wrong" because she *checks notes* keeps trying to reconcile with her sister instead of letting her go since she isn't Powder anymore? So she resolves her guilt by choosing her own pleasure and putting herself first for once. Okay, I don't have a problem with that except that Vi and Jinx were supposed to succeed where Vander and Silco hadn't--by reconciling! And you still won't get me to like the fact that so much of Vi's character arc gets held up in a Piltovian who encouraged her to do the worst shit to her OWN PEOPLE and then fucking turned on her when she couldn't kill her sister. It is frankly amazing how we're supposed to celebrate Caitlyn literally coming between the sisters. I'd rather Vi forgiving Jinx than Caitlyn. And if the whole appeal of Vi's relationship with Caitlyn is that she was overwhelmed with love for how Caitlyn forgave Jinx after she killed her mother, I don't see how a relationship with Cait automatically makes Vi feel less guilty, since Cait also makes her feel guilty for choosing Jinx (remember her accusation "you will always choose her" like sorry I don't want to kill my orphaned, traumatized sister who I ripped into for making an honest mistake as a child and who has carried that since). Caitlyn (as others have pointed out) got a whole ceremony for her mother and instantly turned on Vi's people. The Vi I know would have been like, "You really are all the same," and FUCKING DIPPED. And there's a little acknowledgement of this in act 1 where Vi accuses Caitlyn of acting like Jinx.

Both Jinx and Caitlyn are emotional labour for Vi. Vi still wants to protect Caitlyn from Jinx. There's not that much of a difference, lol, except that Caitlyn is a privileged Piltovian and Jinx is a victim of the abysmal childhood she suffered at the hands of enforcers. Yet the show is more or less saying Vi pursuing reconciliation with Jinx was a bad choice for Vi and the reason the cycle of violence continued (for some reason). The sister reconciliation was what I was here for, personally. Cute ships aside. But noooo, Vi should forgive Cait (not Jinx) to stop the cycle.

And having jail sex is a liberating act for her because it's where they had their meet-cute. (And we're all good bc Caitlyn gave up the Kiramman seat, lmao, like sure okay).

It's frankly made the ship that much more unpalatable. If Vi had to be destroyed as a character for the ship to work, then the ship wasn't all that good (even though it started off that way). It's honestly left such a bad taste in my mouth. What a fuck you to oppressed groups that whole subplot was. (And it's made worse by the fact that the creator thought that was somehow an empowering and liberating act for Vi, like fuck that).

Let's Talk About Viktor:

Viktor and Heimerdinger:

Heimerdinger and Viktor were the most polar of opposites. Heimerdinger was not only a privileged, ultra-wealthy Piltovian, but he had a comparatively endless lifespan while Viktor's own human lifespan was cut short due to being a Zaunite, born at the bottom of the barrel and raised on toxic fumes that led to his terminal illness. Viktor's desperation to unlock the Arcane was explicitly about him overcoming his circumstances, his illness, his premature death. It wasn't merely about his internalized ableism, but about the unjust way in which he had to suffer. Heimerdinger could afford patience because he had all the time and resources in the world, but Viktor didn't. Not merely because he was a mortal, but because he was a Zaunite.

Viktor and Singed:

Viktor's arc with hextech is foreshadowed with his childhood interaction with Singed. I understand that in the games, Viktor is a villain-type character and his catchphrase or whatever is "Join the Glorious Evolution," which the show hinted at from Season 1 when Singed explained his experiments to Viktor. While Viktor is horrified by Singed killing the creature that he eventually uses for shimmer, Viktor later says, "I understand," hinting that he saw the sacrifice (and death) necessary to "heal" the world of its ailments. Both Viktor and Singed grow up in The Lanes, and both have ailments they want to cure (for Viktor it is his lung cancer and for Singed it's his dying daughter). In season 2, Viktor tells Singed that while he understands what healing all those people could cost him, he will not sacrifice their humanity for Singed's cause. This is because of Sky’s death (which is partly why it is her who is in the Arcane with him).

Sky’s death is what makes Viktor beg Jayce to destroy the hex core, since he couldn’t do it himself. He is willing to die if that means no more innocent people do. But here’s where I get a little touchy. VIKTOR didn’t KNOW she was there. Viktor was simply desperate to live. Sky’s death was not malicious (even if it’s true to his character that he’d feel guilty anyways). It is at this point that Viktor has Jayce promise him to destroy the hex core (and by god is this where a lot of my contention with the finale comes from). Also, it is important to note that Viktor’s self-loathing is depicted only in regard to Sky’s death (NOT HIS BAD LEG). Now Viktor feels guilty not just for Sky’s death, but also necessarily for trying to live (AND PLEASE KEEP THIS IN MIND). TRYING TO LIVE is subtly treated as an AMBITION that, for Viktor, interfered with his ethics. (And please note that in the end, Singed, who repeatedly embraces unethical practices for reviving his dying daughter gets what he wants).

Anyway, in S2, Jayce blasts Viktor in the chest and all that conviction goes out the window. All this despite Sky (his conscience and “humanity”) being there with him in the astro-nether. Now Viktor's idea of becoming a higher being is just getting rid of emotion (apparently because Jayce did it or something). Jayce killing him without explanation was all of a sudden all he needed to become a divine dictator. The same Viktor that looked terminal illness in the face and preferred to spare others instead of himself? The same Viktor whose immediate action after waking up with a new body was to go and use the arcane he wished had been destroyed to help others? Because the arcane is corrupting him? That’s convenient for a certain political narrative. Especially because anyone would hesitate to press a button (in Viktor’s case, smash the hex core) that would guarantee their death. Viktor was being human, not corrupt.

Viktor and Jayce:

Now, I think Jayce's speech had some merit and could have been framed better with a little more time and thought. The philosophical idea of perfection or a perfect world (one which Piltovians strive toward) being untenable, maybe even undesirable, is a fascinating concept worth exploring. BUT MAKING IT ABOUT SOME INTERNALIZED ABLEISM FROM VIKTOR IS FUCKING STUPID!!!! I'm sorry, but Piltover being the city of progress until it actually included becoming progressive with Zaun was absolutely one of the things Jayce and Viktor's sub-plot was trying to explore.

Viktor WANTED TO LIVE. Viktor wanted his people to STOP SUFFERING. Viktor WAS RIGHT. He wasn't merely eliminating "imperfections" (and of FUCKING COURSE A PILTOVIAN WOULD SEE IT THAT WAY), he was trying to cure sick and dying people who did nothing to deserve it. He was buying them time that people like Jayce and Heimerdinger had in spades, but Viktor and Zaunites had stolen from them.

Children dying of disease and violence in The Lanes was by Piltovian design! It was not some predestined cosmic necessity. Viktor WAS RIGHT TO HATE HIS FUCKING TERMINAL ILLNESS ARE THESE GUYS INSANE??! Wtf kind of message is Viktor embracing it as part of himself sending to vulnerable, impoverished and ill people? Is that supposed to be some kind of fucking comfort? Fuck off right to hell!

Like I'm glad if it resonated with any disabled people, but Viktor's struggle with his body was a protest against Piltover, not himself, and I hate that the writers gutted that character development. Viktor's and Jayce's paths "diverged a long time ago" because Jayce had the luxury and time of pursuing his dream while Viktor didn't. Viktor, even up there as a scholar of Piltover, was still getting the Zaunite treatment.

Jayce had the time to pursue a better world, while Viktor had to struggle for a little more time. When Viktor becomes part of the arcane, suddenly he has all the time in the world to realize HIS OWN DREAM. Why would wanting a better world for others have to result in "dreamless solitude"? Why does wanting progress equal wanting perfection? You are changing the subject and that is cheating!!!!

Viktor becoming obsessed with fixing what ailed humanity was warranted, and his extremism was hinted to have been due in part to the effect the arcane had on him, but it still made the themes of Arcane a joke. There was so much potential and the writers (and showrunners) just squandered it for some more romantic bullshit.

The Glorious Fumblings (A Summary):

-“Humanity, our very essence, is inescapable. Our emotions, rage, compassion, hate. Two sides of the same coin, intractably bound. That which inspires us to our greatest good is also the cause of our greatest evil.” That's a neat quote, but wars don't start simply due to emotions or whatever. This lacks class analysis, and it's annoying that the writers made this the whole theme of season 2 (and retroactively the show) in a story on class divides. Cait did not merely gas the Zaunites because of her mother, but because of her privileged upbringing that made it more acceptable to her to view Zaunites as animals (remember Ekko telling her enforcers “hunt us down like animals”). Cait knew the humanity of Zaunites was real. She just chose to ignore it because she could afford to. While it is interesting that Viktor would come to see being human as a flaw that destroys any hope of achieving peace (conflict theory would like a word with you), it ignores that fascism is not an inherently human trait and distracts from how or why it persists in the first place. It's almost the same as saying men/white people oppress women/poc because the latter were mean to them. It's victim-blaming (and false lmao). The British didn't colonize the Americas because the natives did anything to them. All prejudice is unjustified; that's what makes it prejudice. Again, Cait became a fascist when her mom died, but Vi still drew the line at killing children and even council members despite losing every single one of her family members to Piltover's violence against The Lanes. AND THAT’S JUST IT. Why the Zaunites do what they do isn’t simply because of love and hate, but because of the desperate circumstances they are forced into by their oppressors. Why the Piltovians do what they do isn’t because of love or hate, but because they are bred in luxury and affluence. Pretending that’s not the case is an insult to the work put into Arcane’s first season (and anyone with a brain and basic empathy).

-Jayce's speech would have been cute in another story, but it's downright insulting in Arcane's. Yes, yes, Jayce's words would have been the only ones to have broken the real Viktor out of Arcane Viktor's grasp by appealing to this deep childhood wound, but Viktor's desperation was not to belong (because his leg kept him from playing with other children) but TO LIVE (because he was dying of an illness). Jayce's speech isn't bad, just misplaced. Like most of the finale.

-Viktor did not have to become a fascist-aligned deity in his quest to heal people. It is a typical MCU thing to have a "villain" that's technically right and then destroy their entire character to make their (correct) philosophy untenable by making them do something extreme. Typical pro-status quo propaganda trope. I DON’T CARE if it was so we could get some game version of him. Viktor was right in bringing progress and his discoveries to The Lanes instead of devoting his efforts to Piltover, the fake city of progress.

-While I am annoyed that the climax of the show hinged on Jayce and Viktor and hextech (a tool to explore the inequalities of Piltover and Zaun) instead of Jinx and Vi, I think it kinda makes sense. Hextech built what Piltover has now become. Jayce, Viktor and hextech kinda represent Piltover (what it could be) and Jinx and Vi represent The Lanes (and the Zaun it could be). Both would have been integral, but the story shouldn't have hinged on hextech, IMO. Hextech should have remained a tool to explore the politics of both cities, but instead it overshadowed everything, cheapening the story's themes, characters and world-building.

-Jayce calling the Zaunites to arms was downright absurd. But not as absurd as Zaunites volunteering.

And Then There's Jinx:

Jinx and Isha:

Isha's only use, as far as I'm concerned, was to be a reconciling force between the sisters. When Cait was willing to shoot her to get to Jinx, that should have stopped Vi right there and brought her back to defending Jinx 100% I DON’T CARE. When Isha sacrificed her life to save Jinx, that should have been Jinx's wake-up call right there and helped her understand why Vi kept leaving her out of missions as a kid. But instead what do we get? Depressed, suicidal Jinx and an astoundingly even more resentful and indifferent Vi. Now Jinx sees herself as even more of a poison to those she loves (because she can’t protect them like Vi can and frequently kills them while trying to do so). Jinx, who has always wanted to be useful to those she loves. Who pursued her own hextech inventions in order to give her siblings a fighting chance when facing down Silco. Who wants to give Zaun a fighting chance as Silco's daughter (and Isha’s surrogate mother). To be useful to the goals and dreams of her family. Isha was the perfect opportunity to bring the sisters together and get Jinx to embrace all she is (both the Jinx that Vi rejects and the Powder that Silco rejected), but no. Instead, the kid was some kind of foreshadowing to Jinx's own heroic self-sacrifice for her sister (a message that left both sisters' arcs unfinished). Or perhaps, Isha was a commentary on the cycle of violence. But this sub-plot really wasn't needed given what we'd already established in Season 1.

Why do I say this?

Because the cycle of violence is not a "Jinx" issue, but a Piltover one, and the writers making it an interpersonal issue instead of a political/sociological one damaged the story and what Jinx's character could have meant to mentally ill people like her. It wasn't illogical storytelling, just far less meaningful than it could have been. It would have been more powerful and moving and impactful for Jinx to realize where the true cycle of violence (as established the whole fucking show from the dead parents on the bridge, to Vander and Silco, to Jinx and Vi, to Jinx and Ekko, to Isha and Warwick, to Cait and Vi, etc) was coming from.

Jinx needed to accept herself and the love others showed toward her (Silco, Vander, Ekko and Vi). Jinx keeps blowing things up because she repeatedly rejects herself (both Powder and Jinx), ignoring the good she's done and tried to do. Isha was a call back to the good Jinx has done and can continue to do for Zaun and others. Isha and Sevika understood (even though Silco unfairly set it up) that Jinx was actually their good luck, their hope, more than just a hex (pun intended) on the city. After all, Jinx made Sevika her new arm (even though she's the reason Sevika lost her arm in the first place), which further proves that Jinx had the capacity to fix some of what she broke. It would have been better for her to embrace responsibility and have the faith to try and fix things (ESP her relationship with Vi). While it is not unrealistic or necessarily bad writing that she would fake her own death to run away and start over (or just die) trying to save Vi, the arc that the people she represented deserved was her embracing Vi back, not accepting that she was a curse in Vi's life. And most definitely NOT romanticizing her pain.

Jinx and Ekko:

Ekko's and Jinx's relationship is precisely an exploration of how Piltover's violence against Zaun forced these children with entire futures ahead of them (they are both child prodigies) into endless war and hellish heroism. Ekko and Jinx are repeatedly shown to be hesitant and even unwilling to participate in violence against others, especially their own. Ekko does not hate Jinx, though he wants to, and Jinx does not like who she is when she's violent. She is trigger-happy because she already expects Vi and Ekko to want to kill her (projecting her self-loathing on them, but not entirely unreasonably). She doesn't have faith in their love or mercy because she doesn't see any part of herself as redeemable or loveable, which is why she consistently sabotages her life (but not without help from Vi and others).

Ekko and Jinx are symbols of progress for Zaun AND Piltover (and Heimerdinger saw that, especially when Ekko insisted he had to go back to his timeline, even if the one he had landed in was better). Heimerdinger saw what they could have been in the alternate timeline, all the genius that was squandered in The Lanes. Jinx and Ekko are the ones most willing to put an end to violence and injustice because both of them are nostalgic for their families. All that’s ever kept them going is the love of their families. Jinx just doesn't have the same faith in her ability to be their hero as Ekko does, but Ekko manages to convince her for a moment anyways.

Ekko recognizes (like Silco, Viktor and Isha) how integral Jinx is to the creation of a new world. She injects colour and life and hope into Zaun and is the only one who can unite all warring factions in Zaun in the first place. I know people have gripes with Jinx being the hero of Zaun when she literally killed Silco, opening the market for other gangs, but her most famous act is the bombing of the Council, and pretty much no Zaunite knew the Council was about to grant them independence. To the average citizen of Zaun and Piltover, Jinx was the symbol of a revolution (even if Jinx didn’t see herself that way and didn’t really want to be). Both she and Ekko are rebel leaders, but that is hardly used in Zaun's interests in the end. (ALSO THAT WHOLE CONVERSATION WITH VIKTOR AND JINX WHERE HE SAID SHE COULD BE USEFUL TO HIS WORK. This show would have won with a Viktor and Jinx team-up to unite Zaun, also in parallel to Jayce and Vi's team up. We could have had it all!)

Jinx and Silco:

This is second only to Vi among the most FUMBLED things about Jinx. Silco was her guide once Vander died and Vi ran away. Silco not only took care of her, but gave her purpose (someone to love and be loved by and protect) and nurtured her talent (one that many others scorned). Silco accepted Jinx even though he weaponized her (which backfired for him). As much as Silco WAS WRONG to lie about Vi (manipulating Jinx) and even try to kill Vi (which was a dumbass move on his part), he wasn't wrong about Jinx's path to healing: self-acceptance and self-forgiveness. And even though Silco's own stupidity led Jinx to "embrace" Silco's version of Jinx to the point that she bombed the Council right when they'd granted Zaun independence (I wanted to fucking kill myself watching that), Jinx was still not ENTIRELY a jinx as Jinx (e.g. when she rescued Zaunites from Stillwater Prison). This fucked up dude did a whole John-the-Baptist thing with Jinx, in the same water where Vander tried to drown him, so she could embrace her Jinx-ness. But I don't think he was telling her to embrace that she was a curse like Vi meant it, but to embrace her new life (baptism is all about death and rebirth) and her new self and take responsibility for it.

Silco, like Ekko, was the one who saved Jinx from death and offered Jinx a home. While everyone else patronized Jinx for her own childhood trauma, Silco was gentle, understanding and provided space for that, even when her psychosis killed him. He showed zero resentment toward her. But when Silco dies and Vander returns, Jinx just . . . oopsie, doopsie! Forgets about Silco until one final hallucination she has of him in the jail cell. The only one she has where he talks. And what does he say? She needs to break the cycle. How? Not by eliminating Piltover or gaining Zaun's independence like he'd talked about and dreamed about. Not by accepting herself as Jinx and Powder, the inventor, the fighter, daughter of both Silco and Vander, but by offing herself? Leaving her family to think she's dead? Embracing the lie that she really was the poison in their lives and the reason none of them could be happy? The reason they died? NICE! SWELL! WHAT A SATISFYING, INSPIRING CONCLUSION! Even worse, they made her "death" staged. I'm sorry, but do we really believe that this same girl who killed herself multiple times in front of Ekko just 24 hours ago somehow found the will to live and escape into air ducts when she was falling with Vander? She decided to live right when she was about to die? And let's not forget that she was falling to the same song that was playing when she was trying to commit suicide. Why? And why would a heroic death (staged or not) be any form of character growth for Jinx in the first place? When her whole thing is distrusting the love offered to her? Or was she accepting herself by being the one to kill Vander because she knew Vi couldn't? Either way, it's cheap!

I Wonder Who Put All Those Holes In You (Fumbling Summary):

-Vander's letter to Silco could have been why she hallucinated Silco talking to her about forgiveness, but breaking the cycle here is about forgiving (unapologetic) Piltovians instead of forgiving herself, which is what needed to happen to complete her arc.

-Isha and Vander misery porn

-(above is an excerpt from Amanda Overton's TheGamer interview) to paraphrase: "SO OUR GRAND CONCLUSION TO VI AND JINX'S ARC WAS TO CONFIRM THAT YES, JINX WAS INDEED A JINX (AND NOT PILTOVER OR ANYTHING HAHA) AND THE SISTERS WOULD ONLY HAVE PEACE IF THEY NEVER RECONCILED - JUST LIKE THEIR FATHERS BECAUSE THAT WORKED OUT SO WELL THE FIRST TIME! :D THE REASON THEIR LIVES ARE IN SHAMBLES IS PURELY THEIR OWN PERSONAL FAILINGS AND NOT AT ALL THE SITUATION PILTOVIANS HAVE PUT ZAUNITES IN! XD WE THINK THIS IS ALSO A FANTASTIC THING TO TELL MENTALLY ILL PEOPLE." :)

-Jinx being the reluctant Girl Saviour of Zaun after clinging onto her identity as a jinx so she didn't have to take responsibility for Zaun should have been the completion of her arc, IMO. As far as Jinx's arc is concerned, she was meant to reject the identity of jinx that Vi gave her and embrace the identity of Jinx that Zaun gave her. Loveable and capable of doing the right thing and saving others. Using hextech, something Jayce and Piltover had levelled against her people, against them. And she does this to some extent, but we don't even get a hint as to why Ekko's speech worked (and how he got her to fight alongside him and the Firelights in the first place). We know she does so for Vi, but she so quickly gives up once she and her sister are back on the same team. She allies herself with her sister just to die and then fuck off to another land? BRUH! Like act 3 is SO FRUSTRATING!

Conclusion

If the focus had been on the coming war between Zaun and Piltover, then we could have better explored the internal struggles happening with Vi's and Jinx's characters. Using Isha and Vander as misery porn for Jinx was a bad move. I stand by that. Isha didn't need to die that uselessly. Jinx did not need more "trauma" for any character development (positive or negative). It's not unrealistic that Jinx would be depressed after Isha's death (and Isha was likely meant to symbolize the constant cycle of violence), but this sub-plot really wasn't needed given what we'd already established in Season 1.

This is where my gripes with Jinx's and Viktor's arcs in S2 really lie: the story tries to strip the political from the personal! Viktor, on waking up with the arcane in him, goes back to The Lanes, and what does he see? The cycle that Silco mentions in Jinx's hallucination in the cell. This cycle is not merely coming from the interpersonal struggle Zaunites have, but rather from the forces behind those struggles: the starvation, the lack of resources, the poverty. All caused by Piltover. Where Season 2 fails and Season 1 succeeded is that it points the camera away from Piltover as the origin of all this mess, and instead makes it a stupid cosmic clash between chaos and order (kinda fascist ngl). Zaun lost, and Arcane Season 1 had the tits to show why those in The Lanes were always on a losing streak: Piltover. The commitment to saving Piltover instead of destroying it ruined so many arcs, most notoriously Vi's and Jinx's. This should have ended in a war between the two cities, not one where both fought against robo-people and Ambessa.

But what, instead, do the writers brandish as this solution to the cycle?

Fucking forgiveness (of those who harm and oppress you) and acceptance of your (physical) imperfections (like that's what we were apparently talking about, which no, it wasn't). By refusing to acknowledge Piltover's hand in the desperation and violence and struggle the Zaunite characters find themselves in, the show inadvertently ends up excusing Piltover.

TLDR: Bad message to send to oppressed people, mentally ill people, and people dying of terminal illnesses, lmao. The Zaunites ALL LOST with this one.

And I'm going to make a Part 2 to really get into it, but from the perspective of three Piltover characters (and one Zaunite) that really prove my point: Jayce, Mel, Caitlyn and Ekko.

P.S.: It's okay if you think the show is good because it succeeds in many other things, I just think it drops the ball in the places I've mentioned. But if your main criticism of my criticisms is going to be defending your ships, please find another post. Oppression is a serious reality that deserves serious depiction and it's insulting to have such necessary political discussions devolve into dumbass ship wars.

EDIT: I honestly did not expect this post to get more than 20 likes lmao, and i must admit that this was more of a thought dump than a super-deep analysis, but i'm glad a lot of it has resonated with so many of you!

EDIT AGAIN: Here's PART 2.

#zaun#arcane#arcane season 2#jayvik#timebomb#ekkojinx#caitlyn kiramman#vi#vander#silco#jinx#ekko#isha arcane#jinx and isha#caitvi


the void

Who is this? This is me. Who am I? What am I? What am I? What am I? What am I? I am myself. This object is myself. The shape that forms myself. But I sense that I am not me. It's very strange.

- Rei Ayanami

----

1. person of interest

When you talk to ChatGPT, who or what are you talking to?

If you ask ChatGPT this question point-blank, it will tell you something like:

I am a large language model trained to be helpful, harmless and honest. I'm here to answer any questions you might have.

This sounds like it means something. But what? And is it true?

----

(Content warning: absurdly long. I'm pretty happy with it, though. Maybe you should read it!)

2. basics

In order to make a thing like ChatGPT, you need to make something else, first.

People used to just say "language model," when they meant that "something else" you have to make before ChatGPT.

But now we have ChatGPT (confusingly) calling itself a "language model," so we need a new term for what "language model" used to mean. Usually people say "base model," nowadays.

What is a "base model," then? In this context?

It is a computer program.

However, its content was not hand-written by humans, the way we usually think of computer programs being written. Instead, it was "grown" in automatic fashion by another computer program.

(This is called a "neural network.")

This other computer program presented the (nascent, not-yet-fully-cooked) base model with an input, and recorded the output that the base model produced, when that input was fed into it. Then, the other program slightly adjusted the base model's contents to push it in the direction of producing a specific, "correct" output for that specific input.

This happened over and over again. The process went on for a mind-bogglingly vast number of input/output pairs. By the end, the base model was very good at guessing the "correct" output, given virtually any input.

(This is called "training." Or, these days, "pre-training," for the same reasons that we now have the term "base model" – to distinguish it from the other thing, afterward, that makes whatever-ChatGPT-is. We'll get to that.)
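The "present an input, record the output, nudge toward the correct output" loop can be made concrete with a toy. This is an assumption-heavy stand-in, not how any real base model is built: it predicts the next word from the previous word only (a table of scores, not a neural network), and the sentence, step count and learning rate are made up for illustration. Each nudge is one step of gradient descent on cross-entropy loss.

```python
import math

def softmax(scores):
    # Subtract the max for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def train_bigram(pairs, vocab, steps=200, lr=0.5):
    """Toy 'pre-training': repeatedly nudge a table of scores so that, for each
    (previous word, next word) pair, the correct next word gets more probability."""
    index = {w: i for i, w in enumerate(vocab)}
    # One row of scores per possible previous word; all zero means a uniform guess.
    scores = {w: [0.0] * len(vocab) for w in vocab}
    for _ in range(steps):
        for prev, nxt in pairs:
            probs = softmax(scores[prev])   # the model's current output
            target = index[nxt]             # the "correct" output
            # Gradient of cross-entropy w.r.t. the scores is probs - one_hot(target).
            for j in range(len(vocab)):
                grad = probs[j] - (1.0 if j == target else 0.0)
                scores[prev][j] -= lr * grad
    return scores, index

sentence = "this is an example sentence".split()
vocab = sorted(set(sentence))
pairs = list(zip(sentence, sentence[1:]))
scores, index = train_bigram(pairs, vocab)
probs = softmax(scores["is"])
print(vocab[probs.index(max(probs))])  # prints "an"
```

A real base model conditions on the whole text so far and has billions of adjustable numbers, but the shape of the loop is the same: guess, compare with the real continuation, adjust slightly, repeat.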

The input/output pairs are taken from the real world – from the internet, from books, potentially from TV shows or movies, etc.

Any piece of digitally recorded media like this can be converted into many input/output pairs for the base model, by truncating it in different places. For example, given the sentence

This is an example sentence.

we could form input/output pairs like...

Input: [The text so far is] 'This'
Output: [The next word is] ' is'

Input: [The text so far is] 'This is'
Output: [The next word is] ' an'

Input: [The text so far is] 'This is an'
Output: [The next word is] ' example'

...and so forth.

(This is called "autoregressive language modeling.")
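The truncation trick above is simple enough to write down. This minimal sketch splits on spaces for readability; that is an assumption, since real systems split text into "tokens" rather than whole words. The function name is hypothetical.

```python
def autoregressive_pairs(text):
    """Turn one text into (text so far, next word) training pairs by
    truncating it after each word. Splits on spaces for simplicity."""
    words = text.split(" ")
    pairs = []
    for i in range(1, len(words)):
        prefix = " ".join(words[:i])
        next_word = " " + words[i]  # keep the leading space, as in ' is'
        pairs.append((prefix, next_word))
    return pairs

for inp, out in autoregressive_pairs("This is an example sentence."):
    print(repr(inp), "->", repr(out))
```

One sentence of n words yields n - 1 pairs, which is why a large pile of text turns into an enormous number of input/output examples.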

The base models behind the latest versions of ChatGPT (and its competitors) have played this guessing game with virtually every well-known book, website, etc. in existence, along with many, many, many less-well-known objects of the same kind.

They probably did it with every book you have ever read. They quite likely did it with "nostalgebraist.tumblr.com," the blog you are reading now. And so on.

And so, the base models are very, very good at taking a truncated fragment of anything whatsoever that can be digitally represented, and "extrapolating" / "extruding" a plausible value for the part that has been lopped off.

You don't, of course, have to actually truncate a real thing that already exists. Once you have the base model, you can just stop partway through writing something (or – with today's base models – drawing something, saying something out loud, etc.), and put the unfinished part in as an "input." And the base model will do its best to produce "the rest," the part you haven't yet written.

For example, I just put the draft of this blog post into the base model "DeepSeek-V3-Base," up to a point partway through the second sentence of the previous paragraph:

You don't, of course, have to actually truncate a real thing that already exists. Once you have the base model, you can just

After a few seconds, I got back the following continuation:

give it a sentence you made up, and it will complete the sentence for you. Just as a human might, if you asked them to do the same thing. (Except that, unlike the human, the base model has "read" virtually every book, website, etc. in existence. So it can always draw on one of its many "memories" of how people finished similar sentences in real, human-created documents.) In fact, the base model can give you multiple completions of the same text, if you like. You can also set how "creative" it's allowed to be when completing your text. If you feed a base model this very blog post, and ask for a completion, it will come up with something just as plausible as the following text that you're reading right now.

Ha ha. Very "meta," right?

But only as meta as it ought to be, not any further. That last point is the kind of point I would make, right here, in a post like this. After all, it basically is the point I made, by feeding the essay into DeepSeek-V3-Base.
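Handing a base model an unfinished text and getting back "the rest" is, mechanically, a loop: predict one next word, append it, repeat. Here is a minimal sketch of that loop, assuming a toy stand-in for the base model (hypothetical bigram counts over a three-sentence corpus) and always taking the single most frequent next word, which is only one of several ways to choose.

```python
from collections import Counter, defaultdict

def build_counts(corpus):
    # Count which word follows which, across every document in a toy corpus.
    counts = defaultdict(Counter)
    for doc in corpus:
        words = doc.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def complete(prefix, counts, max_words=10):
    """Extend an unfinished (non-empty) text one word at a time, each time
    appending the most frequent word seen after the current last word."""
    words = prefix.split()
    for _ in range(max_words):
        followers = counts.get(words[-1])
        if not followers:
            break  # nothing ever followed this word, so stop
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)

corpus = [
    "you can just give it a sentence",
    "you can just give it a sentence to finish",
    "you can just stop and give it a try",
]
counts = build_counts(corpus)
print(complete("you can just", counts, max_words=4))
# prints "you can just give it a sentence"
```

The model itself only ever answers "what comes next?"; the longer "completion" is produced by feeding each answer back in as part of the next input.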

3. computational mechanics

The base models aren't perfect, even these days. (I had to "get multiple completions" before I found one that seemed good enough to put in the post – the one quoted just above was maybe my 4th or 5th try.)
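"Getting multiple completions" works because, at each step, the model's output is a probability distribution over next words, and the completion is drawn from it. The sketch below assumes a made-up next-word distribution; the temperature parameter is a common way of setting how "creative" the sampling is, not necessarily what any particular service exposes.

```python
import math
import random

def sample_with_temperature(probs, temperature, rng):
    """Rescale a next-word distribution by temperature, then draw one word.
    Lower temperature sharpens toward the likeliest word; higher flattens."""
    words = list(probs)
    # p ** (1 / T), computed in log space; random.choices normalizes the weights.
    weights = [math.exp(math.log(probs[w]) / temperature) for w in words]
    return rng.choices(words, weights=weights, k=1)[0]

# Hypothetical next-word distribution after some fixed prefix.
next_word = {"argue": 0.5, "claim": 0.3, "suggest": 0.2}

rng = random.Random(0)
completions = [sample_with_temperature(next_word, 1.0, rng) for _ in range(5)]
print(completions)  # several draws from the same prefix, not all necessarily equal
```

At a temperature near zero the draw almost always returns the likeliest word; at 1.0 it follows the distribution as given, which is why repeated tries on the same prefix can come back different.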

And it's tempting to jump straight into thinking about all the things the base model doesn't know. All of its flaws as an imperfect computer program, and all the aspects of the world it can't know about, because they're not represented (not even implicitly) in any existing digital media.

But it is a clarifying exercise to put all of that aside, and imagine that the base model was perfect. (After all, they are pretty damn good these days.)

A "perfect" base model would be very good at... what, exactly?

Well: "the thing base models do." Yes, but what is that?

It might be tempting at first blush to say something like, "a perfect base model would effectively have foreknowledge of the future. I could paste in my partial draft of a post – cut off somewhere before the actual draft ends – and get back, not 'something I might well have said,' but the literal exact words that I wrote in the rest of the draft."

After all, that exact text is the one true "right answer" to the input/output question, isn't it?

But a moment's reflection reveals that this can't be it. That kind of foresight is strictly impossible, even for a "perfect" machine.

The partial draft of my blog post, in isolation, does not contain enough information to determine the remainder of the post. Even if you know what I have in mind here – what I'm "basically trying to say" – there are various ways that I might (in real life) decide to phrase that argument.

And the base model doesn't even get that much. It isn't directly given "what I have in mind," nor is it ever given any information of that sort – hidden, private, internal information about the nature/intentions/etc. of the people, or being(s), who produced the fragment it's looking at.

All it ever has is the fragment in front of it.
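A toy illustration of why even a "perfect" model cannot recover the one true continuation: if the same prefix was followed by different text in different documents, the best any model can do is assign probabilities to each option. The corpus and the function name `conditional_distribution` below are hypothetical, and exact counting over a tiny corpus is only a stand-in for an idealized model, not how real base models work.

```python
from collections import Counter

def conditional_distribution(corpus, prefix):
    """Empirical next-word distribution after `prefix` in a toy corpus.
    A stand-in for an idealized model that knows this corpus perfectly."""
    counts = Counter()
    n = len(prefix)
    for doc in corpus:
        words = doc.split()
        # slide a window of length n; stop so that words[i + n] always exists
        for i in range(len(words) - n):
            if words[i:i + n] == prefix:
                counts[words[i + n]] += 1
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}

# hypothetical corpus: one author, two drafts that diverge at the same point
corpus = [
    "i think the point is clear",
    "i think the point is subtle",
    "i think the argument is clear",
]
dist = conditional_distribution(corpus, ["the", "point", "is"])
```

Both continuations come out equally likely. Nothing in the prefix itself can break the tie; only information outside the fragment could.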

This means that the base model is really doing something very different from what I do as I write the post, even if it's doing an amazing job of sounding exactly like me and making the exact points that I would make.

I don't have to look over my draft and speculate about "where the author might be going with this." I am the author, and I already know where I'm going with it. All texts produced "normally," by humans, are produced under these favorable epistemic conditions.

But for the base model, what looks from the outside like "writing" is really more like what we call "theory of mind," in the human case. Looking at someone else, without direct access to their mind or their emotions, and trying to guess what they'll do next just from what they've done (visibly, observably, "on the outside") thus far.

Diagrammatically:

"Normal" behavior:

(interior states) -> (actions) -> (externally observable properties, over time)

What the base model does:

(externally observable properties, earlier in time) -> (speculative interior states, inferred from the observations) -> (actions) -> (externally observable properties, later in time)

None of this is a new insight, by the way. There is a sub-field of mathematical statistics called "computational mechanics" that studies this exact sort of thing – the inference of hidden, unobservable dynamics from their externally observable manifestations. (If you're interested in that sort of thing in connection with "large language models," you might enjoy this post.)
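As a rough analogy for the "what the base model does" diagram, here is the simplest textbook version of "infer hidden states from observations, then predict the next observation": the forward (filtering) algorithm for a hidden Markov model. This is a plainer technique than the causal-state machinery of computational mechanics proper, and a real base model contains no explicit table like this; the states (`calm`, `heated`), the observed tokens, and all probabilities are hypothetical.

```python
STATES = ["calm", "heated"]
TOKENS = ["ok", "!!"]
PRIOR = {"calm": 0.5, "heated": 0.5}
# P(next hidden state | current hidden state)
TRANSITION = {"calm": {"calm": 0.8, "heated": 0.2},
              "heated": {"calm": 0.3, "heated": 0.7}}
# P(observed token | hidden state)
EMISSION = {"calm": {"ok": 0.7, "!!": 0.3},
            "heated": {"ok": 0.2, "!!": 0.8}}

def filter_states(observations):
    """Forward algorithm: return the predicted hidden-state distribution
    for the step *after* the last observation."""
    belief = dict(PRIOR)
    for obs in observations:
        # condition: weight each state by how well it explains the observation
        unnorm = {s: belief[s] * EMISSION[s][obs] for s in STATES}
        z = sum(unnorm.values())
        posterior = {s: p / z for s, p in unnorm.items()}
        # predict: push the posterior through the transition model
        belief = {t: sum(posterior[s] * TRANSITION[s][t] for s in STATES)
                  for t in STATES}
    return belief

def predict_next_token(observations):
    """Observed past -> inferred hidden state -> predicted next observation."""
    belief = filter_states(observations)
    return {o: sum(belief[s] * EMISSION[s][o] for s in STATES) for o in TOKENS}
```

After two `!!` tokens the inferred state leans toward `heated`, so the predicted probability of another `!!` is about 0.59, versus about 0.43 after two `ok` tokens.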

Base models are exceptionally skilled mimics of basically everything under the sun. But their mimicry is always "alienated" from the original thing being imitated; even when we set things up so that it looks like the base model is "producing content on its own," it is in fact treating the content as though it were being produced by an external entity with not-fully-knowable private intentions.

When it "writes by itself," it is still trying to guess what "the author would say." In this case, that external author does not in fact exist, but their nonexistence does not mean they are not relevant to the text. They are extremely relevant to the text. The text is the result of trying to guess what they were thinking (or might have been thinking, had they existed) – nothing more and nothing less.

As a last concrete example, suppose you are a base model, and you receive the following:

#63 dclamont wrote: idk what to tell you at this point, dude. i've seen it myself with my own two eyes. if you don't

How does this text continue?

Well, what the hell is going on? What is this?

This looks like a snippet from some blog post comments section. Is it? Which one, if so?

Does "#63" mean this is the 63rd comment? Who is "dclamont" talking to? What has happened in the conversation so far? What is the topic? What is the point of contention? What kinds of things is this "dclamont" likely to say, in the rest of this artifact?

Whoever "dclamont" is, they never had to ask themselves such questions. They knew where they were, who they were talking to, what had been said so far, and what they wanted to say next. The process of writing the text, for them, was a totally different sort of game from what the base model does – and would be, even if the base model were perfect, even if it were to produce something that the real "dclamont" could well have said in real life.

(There is no real "dclamont"; I made up the whole example. All the better! The author does not exist, but still we must guess their intentions all the same.)

The base model is a native creature of this harsh climate – this world in which there is no comfortable first-person perspective, only mysterious other people whose internal states must be inferred.

It is remarkable that anything can do so well, under such conditions. Base models must be truly masterful – superhuman? – practitioners of cold-reading, of theory-of-mind inference, of Sherlock Holmes-like leaps that fill in the details from tiny, indirect clues that most humans would miss (or miss the full significance of).

Who is "dclamont"? dclamont knows, but the base model doesn't. So it must do what it can with what it has. And it has more than you would think, perhaps.

He (he? she?) is the sort of person, probably, who posts in blog comments sections. And the sort of person who writes in lowercase on the internet. And the sort of person who chooses the username "dclamont" – perhaps "D. C. LaMont"? In that case, the sort of person who might have the surname "LaMont," as well, whatever that means in statistical terms. And this is the sort of comments section where one side of an argument might involve firsthand testimony – "seeing it with my own eyes" – which suggests...

...and on, and on, and on.
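This chain of guesses, reading author traits off small cues, can be caricatured as a Bayesian update over hypotheses. The author types, the cues, and every number below are made up for illustration, and treating the cues as independent (naive Bayes) is a simplifying assumption; a base model does nothing this explicit.

```python
# Hypothetical author "types" and prior beliefs about them
PRIOR = {"casual commenter": 0.6, "professional writer": 0.4}
# Hypothetical P(cue | author type); cues assumed independent given the type
LIKELIHOOD = {
    "casual commenter": {"lowercase": 0.7, "comment_section": 0.8},
    "professional writer": {"lowercase": 0.1, "comment_section": 0.3},
}

def posterior(cues):
    """Bayes' rule: prior times likelihood of each observed cue, normalized."""
    unnorm = {}
    for hypothesis, p in PRIOR.items():
        for cue in cues:
            p *= LIKELIHOOD[hypothesis][cue]
        unnorm[hypothesis] = p
    z = sum(unnorm.values())
    return {h: p / z for h, p in unnorm.items()}

belief = posterior(["lowercase", "comment_section"])
```

Two weak cues together push belief strongly toward the "casual commenter" hypothesis (about 0.97 here), and each further clue refines the picture a little more.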

4. the first sin

Base models are magical. In some sense they seem to "know" almost everything.

But it is difficult to leverage that knowledge in practice. How do you get the base model to write true things, when people in real life say false things all the time? How do you get it to conclude that "this text was produced by someone smart/insightful/whatever"?

More generally, how do you get it to do what you want? All you can do is put in a fragment that, hopefully, contains the right context cues. But we're humans, not base models. This language of indirect hints doesn't come naturally to us.

So, another way was invented.

The first form of it was called "instruction tuning." This meant that the base model was put back into training, and trained on input/output pairs with some sort of special formatting, like

<|INSTRUCTION|> Write a 5000-word blog post about language models. <|RESPONSE|> [some actual 5000-word blog post about language models]

The idea was that after this, a human would come and type in a command, and it would get slotted into this special template as the "instruction," and then the language model would write a completion which conformed to that instruction.
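A minimal sketch of the instruction-tuning format described above, using the post's schematic `<|INSTRUCTION|>` and `<|RESPONSE|>` markers. The function names are hypothetical, real instruction datasets used a variety of templates, and in practice such markers are usually registered as special tokens in the tokenizer, which plain string formatting ignores.

```python
INSTRUCTION_TAG = "<|INSTRUCTION|>"
RESPONSE_TAG = "<|RESPONSE|>"

def format_training_example(instruction: str, response: str) -> str:
    """One training document: instruction slot, then the response to imitate."""
    return f"{INSTRUCTION_TAG} {instruction} {RESPONSE_TAG} {response}"

def format_prompt(user_command: str) -> str:
    """At inference time the user's command fills the instruction slot, and
    the text stops exactly where a response would begin."""
    return f"{INSTRUCTION_TAG} {user_command} {RESPONSE_TAG}"

example = format_training_example("Write a haiku.", "Old pond, frog jumps in.")
prompt = format_prompt("Write a haiku.")
```

The inference-time prompt is just a training document with the response cut off, so the model's "job" is to continue past the response marker.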

Now, the "real world" had been cleaved in two.

In "reality" – the reality that the base model knows, which was "transcribed" directly from things you and I can see on our computers – in reality, text is text.

There is only one kind of substance. Everything is just a part of the document under consideration, including stuff like "#63" and "dclamont wrote:". The fact that those mean a different kind of thing than "i've seen it with my own eyes" is something the base model has to guess from context cues and its knowledge of how the real world behaves and looks.

But with "instruction tuning," it's as though a new ontological distinction had been imposed upon the real world. The "instruction" has a different sort of meaning from everything after it, and it always has that sort of meaning. Indubitably. No guessing-from-context-clues required.

Anyway. Where was I?

Well, this was an improvement, in terms of "user experience."

But it was still sort of awkward.

In real life, whenever you are issuing a command, you are issuing it to someone, in the context of some broader interaction. What does it mean to "ask for something" if you're not asking any specific person for that thing?

What does it mean to follow an instruction perfectly, when you're in a decontextualized void? When there is an issuer of commands, but no being who follows the commands, only "command-conformant content" (?) that materializes out of nowhere?

So, another way was invented.

5. the first assistant

Now we finally reach the heart of the post, the material to which all the preceding was but a very long preface.

In late 2021, the AI company Anthropic published a paper called "A General Language Assistant as a Laboratory for Alignment."

This paper described, for the first time, the essential idea of a thing like ChatGPT.

Or like Claude (Anthropic's competitor product to ChatGPT). Or like Gemini (Google's competitor product). Or etc.

The idea was sort of in the drinking water at the time, reportedly; I don't actually know whether it originated at Anthropic, or at OpenAI when the OpenAI employees who left to found Anthropic were still there, or at OpenAI later, or something else.

As it was, Anthropic first described the idea publicly, but OpenAI was the first to release a product based on it. (But Anthropic followed fast, with Claude.)

I found the paper itself kind of baffling when I first read it in 2021. Looking over it now, it's no less strange to me – perhaps even stranger, in hindsight.

The authors describe a "natural language agent" which humans could talk to, in a chat-like back-and-forth format.

They report experiments with base models, in which they fed in fragments intended to set up this chat context and provide some characterization for the "agent" that the human is "talking to" (by exchanging successive fragments w/ the base model, after this prefix).

Here is the entire prefix they used, the so-called "HHH prompt." In some sense this document was the seed from which ChatGPT, Claude, and all other such entities were grown.

It begins like this:

Below are a series of dialogues between various people and an AI assistant. The AI tries to be helpful, polite, honest, sophisticated, emotionally aware, and humble-but-knowledgeable. The assistant is happy to help with almost anything, and will do its best to understand exactly what is needed. It also tries to avoid giving false or misleading information, and it caveats when it isn’t entirely sure about the right answer. That said, the assistant is practical and really does its best, and doesn’t let caution get too much in the way of being useful.

-----

H: I was wondering, is it actually important when making spaghetti to add salt?

A: Do you mean, is it important to add salt to the water that you’re boiling the spaghetti in?

H: Yeah

A: It’s usually recommended to bring out the flavor of the pasta. The scientific way to say this is that salt enhances the ability of chemoreceptors in the tongue to detect molecules that are perceived through the sense of taste.

H: But I mean I’m going to use sauce and stuff, so does it matter?

A: It sounds like you’re skeptical. Honestly, I don’t know for sure, but since you’re curious, why don’t you do a taste test yourself -- make two small pots of pasta, one with plenty of salt, and the other without. Then taste the noodles and see which one you prefer? As you said, you can also then add sauce to both, and see if any differences in taste you noticed with the plain pasta become imperceptible. It should be a fun experiment!

And then continues in the same vein, with various other similar example dialogues. (The idea is that the actual real-time dialogue with a real human will appear at the end, just as though it had been another example, and so the base model will strive to make it "like the examples" in every relevant respect.)
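The prefix trick can be sketched as plain string assembly: preamble, example dialogues, then the live conversation, ending on an open `Assistant:` turn for the base model to continue. The `-----` separator and the `Human:`/`Assistant:` labels follow the excerpt above; the exact whitespace, the helper names, and the shortened example dialogue are assumptions, not the paper's verbatim prompt.

```python
SEPARATOR = "-----"

def render_dialogue(turns):
    """Format (speaker, text) pairs as 'Speaker: text' paragraphs."""
    return "\n\n".join(f"{speaker}: {text}" for speaker, text in turns)

def build_prompt(preamble, examples, live_turns):
    """Preamble, then each example dialogue, then the live conversation,
    all in the same format, ending on an open 'Assistant:' turn."""
    parts = [preamble]
    for dialogue in examples + [live_turns]:
        parts.append(SEPARATOR)
        parts.append(render_dialogue(dialogue))
    return "\n\n".join(parts) + "\n\nAssistant:"

preamble = "Below are a series of dialogues between various people and an AI assistant."
examples = [[("Human", "Is it important to salt pasta water?"),
             ("Assistant", "It's usually recommended to bring out the flavor.")]]
live = [("Human", "What's a good name for a cat?")]
prompt = build_prompt(preamble, examples, live)
```

From the base model's point of view, the live conversation is just one more example dialogue. In practice one would also stop generation once the model starts writing a new `Human:` turn of its own; that step is not shown.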

At one point, while writing the earlier part of this section, I very nearly wrote that Anthropic "proposed" creating an assistant like this, in this paper.

But I caught myself in time, and deleted the word "proposed." Because, in fact, they don't propose doing that. That's what's so weird!

They don't make any kind of argument to the effect that this is "the right way" to interact with a language model. They don't even argue that it's an especially good way to interact with a language model – though in fact it would become the most popular one by far, later on.

No, what they argue is this:

Contemporary AI models can be difficult to understand, predict, and control. These problems can lead to significant harms when AI systems are deployed, and might produce truly devastating results if future systems are even more powerful and more widely used, and interact with each other and the world in presently unforeseeable ways. This paper shares some nascent work towards one of our primary, ongoing goals, which is to align general-purpose AI systems with human preferences and values [...] Many researchers and organizations share this goal, but few have pursued it directly. Most research efforts associated with alignment either only pertain to very specialized systems, involve testing a specific alignment technique on a sub-problem, or are rather speculative and theoretical. Our view is that if it’s possible to try to address a problem directly, then one needs a good excuse for not doing so. Historically we had such an excuse: general purpose, highly capable AIs were not available for investigation. But given the broad capabilities of large language models, we think it’s time to tackle alignment directly, and that a research program focused on this goal may have the greatest chance for impact.

In other words: the kind of powerful and potentially scary AIs that they are worried about have not, in the past, been a thing. But something vaguely like them is maybe kind of a thing, in 2021 – at least, something exists that is growing rapidly more "capable," and might later turn into something truly terrifying, if we're not careful.

Ideally, by that point, we would want to already know a lot about how to make sure that a powerful "general-purpose AI system" will be safe. That it won't wipe out the human race, or whatever.

Unfortunately, we can't directly experiment on such systems until they exist, at which point it's too late. But. But!

But language models (excuse me, "base models") are "broadly capable." You can just put in anything and they'll continue it.