#like we release a binary blob


Text

The best part is once you start doing it for work you stop being able to just google your problems

A fun thing about computer skills is that as you have more of them, the number of computer problems you have doesn't go down.

This is because as a beginner, you have troubles because you don't have much knowledge.

But then you learn a bunch more, and now you've got the skills to do a bunch of stuff, so you run into a lot of problems because you're doing so much stuff, and only an expert could figure them out.

But then one day you are an expert. You can reprogram everything and build new hardware! You understand all the various layers of tech!

And your problems are now legendary. You are trying things no one else has ever tried. You Google them and get zero results, or at best one forum post from 1997. You discover bugs in the silicon of obscure processors. You crash your compiler. Your software gets cited in academic papers because you accidentally discovered a new mathematical proof while trying to remote control a vibrator. You can't use the wifi on your main laptop because you wrote your own UEFI implementation and Intel has a bug in their firmware that they haven't fixed yet, no matter how much you email them. You post on Mastodon about your technical issue and the most common replies are names of psychiatric medications. You have written your own OS but there aren't many programs for it because no one else understands how they have to write apps as a small federation of coroutine-based microservices. You ask for help and get Pagliacci'd, constantly.

But this is the nature of computer skills: as you know more, your problems don't get easier, they just get weirder.

33K notes


Text

.....

Data recovered from disks taken from the labs of HNM Biotech's Dr. Yeva.

[Decryption failed, most files corrupt. Accessible data shown below]

HOLY NIGHTMARE CO. BIOTECH 04175401 DARK MATTER RESEARCH - SUMMARY - CATEGORIES - EXTERMINATION PROTOCOLS - IMMUNITY PROJECT

HOLY NIGHTMARE CO. BIOTECH 06305206 14-5566-0009 PROJECT LOGS - COLD FLAME - FALLEN STAR - WHITE OBLIVION - TROJAN MARE - RISING TIDE

DARK MATTER RESEARCH SUMMARY Dark Matter is the colloquial name given to a virus-like lifeform that needs to infect other living creatures to reproduce, feeding off these hosts like parasites. The basest form is a mere particle with no intelligence or will of its own. Lesser than even a single ant and more like a bacterium, it needs to mass into larger quantities, becoming a sort of "colony" that communicates through a hivemind. It reproduces through binary fission while infecting another living creature, releasing excess Dark Matter to split off into more copies. Other methods of reproduction are suspected but have not been recorded. Naturally more animal-like and instinctual in nature, only by infecting hosts of sapient species is it able to develop intellect of its own. However, as infected hosts no longer feel emotions such as fear, Holy Nightmare is devoted to preventing the spread at all costs to protect Nightmare’s continued control of the known universe. Hosts are infected when particles of Dark Matter enter the body through wounds or orifices. The infectious dose is quite high- most can fight off casual exposure. It doesn't spread well through the air and prefers physical contact. Host becomes part of the hivemind and will try to spread to other victims. This form is the primary way they spread but also the most obvious, as feral Dark Matter doesn't have the intelligence needed to hide itself effectively. They often start with animals and other less intelligent beings. WARNING: When threatened all forms can cause rapid mutations in the host to increase defensive ability, such as growing sharp claws or new mouths. They can heal the host if injured as well, but in extreme situations will evacuate the host to escape. This is often fatal.

CATEGORIES

They have a social structure superficially similar to eusocial insects, with each lower form being subservient to those higher. They advance in stages over their lifetimes, with the speed they grow seemingly based on how many and the quality of hosts they’ve consumed.

Feral/Massed - As Dark Matter multiplies, smaller parts will gather into undifferentiated masses. The most numerous form, presenting as little more than inky black ‘blobs’ with varied numbers of eyes. They have little individuality at this phase and tend to join and split at random to create larger masses, but can't hold complex forms. Without a more advanced individual to control them, these will default to a simple 'spread and infect' mode of attack.

Drones - Massed Dark Matter eventually begins splitting off into smaller and more stable colonies with a single eye. Notable are the orange orbs they form around the center mass, although the purpose is unknown. They become capable of hovering flight in this stage. Higher level Dark Matter can also spawn small versions of these from their own bodies by sacrificing a small amount of their own mass.

Soldiers - Dark Matter drones that have infected many hosts of more intelligent species can begin to gain something akin to sapience, perhaps through a form of horizontal gene-transfer. They can keep more complex forms, often wielding weapons on their own. They're also better at hiding their presence in a host.

Regents - The oldest and most powerful, their bodies turn pure white. Highly intelligent and extremely rare, they are believed to control all other Dark Matter.

EXTERMINATION PROTOCOLS- Dark Matter is resistant to cutting and bludgeoning weapons, and requires high energy to be damaged. Fire is effective, as is electricity. Focused light-based weaponry is the most effective counter when they're outside of a host. Inside a host they're more difficult to deal with- complete obliteration of both is recommended. Advances in destabilizing technology block individual particles from cooperating and cause a temporary loss of form. This hasn’t been tested on more advanced types. Current protocol when dealing with heavily infected planets is complet- [...the rest is too corrupted to access…]

IMMUNITY PROJE%55C77T000--- $F2r33r Ce&b2w~r9p/g 6G(eb*w#n<a $Z6+ne3r+

----------------------------

PROJECT LOGS - COLD FLAME - FALLEN STAR - WHITE OBLIVION - TROJAN MARE - RISING TIDE

-------------

PROJECT COLD FLAME [COMPLETE] PCF-01 [DECEASED] PCF-02 [DECEASED] PCF-03-A [MIA] PCF-03-B [KIA]

Selecting PCF-03-A and B shows images of two tiny, almost cute blue lizard-like creatures next to what are presumably their larger adult forms, covered in icy spikes, along with information describing the development and enhancement of their ice powers and the removal of previous weaknesses. 03-B is described as being killed in battle with Galactic Soldiers, while 03-A's body was simply never found.

------------- PROJECT FALLEN STAR [CANCELED] PFS-01-A [DECEASED] PFS-02-B [DECEASED] PFS-03 [DECEASED] PFS-04 [REPURPOSED] PFS-05 [REPURPOSED]

DATA INACCESSIBLE

-------------

PROJECT WHITE OBLIVION [COMPLETE] PWO-01 [STASIS] PWO-02 [MIA]

PWO-01 describes the lab working with a creature said to modify memories, and how this can be weaponized. The creature is interchangeably called 'Erasem' or 'Oblivio'- apparently different HNM scientists disagreed on a name. PWO-02 just seems to be an improved version of the last, actually getting used a few times on the enemy to sow chaos among the GSA by rendering important individuals forgotten by their comrades. However, after one much later mission it is said to go missing entirely, and the project is put to an end due to difficulty in managing the creature.

-------------

PROJECT TROJAN MARE [DEFUNCT] FORMERLY [REDACTED] PTM-01 [DECEASED] PTM-02 [DECEASED] PTM-03 [DECEASED] PTM-04 [DECEASED] PTM-05 [DECEASED] PTM-06 [DECEASED] PTM-07 [DECEASED] PTM-08 [DECEASED] PTM-09 [DECEASED] PTM-10 [DECEASED] PTM-11 [DECEASED] PTM-12 [DECEASED] PTM-13 [DECEASED] PTM-14 [DECEASED] PTM-15 [DECEASED] PTM-16 [DECEASED] PTM-17-A [DECEASED] PTM-17-B [DECEASED] PTM-18 [DECEASED] PTM-19 [DECEASED] PTM-20 [TERMINATED] PTM-21 [DECEASED] PTM-22 [DECEASED] PTM-23-A [DECEASED] PTM-23-B [TERMINATED] PTM-24-A [REPURPOSED] PTM-24-B [REPURPOSED]

DATA INACCESSIBLE

------------

PROJECT RISING TIDE [DEFUNCT] PRT-01-X [DECEASED] PRT-02-Y [DECEASED] PRT-02-X [TERMINATED] PRT-03-Y [TERMINATED] PRT-03-X [TERMINATED]

Describes a project to turn a planet's native sea life into demon beasts.

@kirbyoctournament

(This is from a roleplay session over at the Discord! I figured I'd share it for more people to see if you're curious about figuring stuff out about Techie)

#kirby oc tournament#lore drop#i wish i coulda added more detail but I don't have the energy#the important bits are all there

21 notes


Note

TLOZ translations always seem to be a bit shitty. I still see people talk about the weird translation of the Demise monologue at the end of SkSw. I think someone said that Demise was more general with his statement, as in there will always be forces from the demon tribe fighting against the light or smth? Not specifically "us three will always fight". (I've read it a few times, but hard to remember, sorry.) (On the topic of SkSw, I kinda dislike how much it impacted theories within TLOZ, some theories are really cool, don't get me wrong. But now, even games that existed for years before suddenly are being pushed to fit with the lore presented in that game. Ganondorf being the best example: He no longer is his own character who did bad things because of his own will and actions, it's now "He did it all because an evil curse made him do it. He had no choice, he was born as a vessel for the demonic lord." The implications that "the curse of Demise" also would mainly go for the already vilified race of the Gerudo, and make their one male an evil warlord is already kinda... yeah... no. (Not to mention that there are other demon lords throughout the franchise that have nothing to do with Ganon.)

Ohh speaking of this I recently saw this post that did a good translation of that very moment, and pretty much confirms what you are mentioning anon; that it's basically a promise of that cycle coming back moreso than Demise himself coming back (especially since his actual and definitive death is a big deal in that game).

But yeah, I agree it has taken a huge space in the way the series is thought about. I pretty much completely missed that hard turn, as I couldn't play Skyward Sword when it released and wasn't super into Zelda afterward anymore (I had gotten too edgy.... 2011 was the year where I got obsessed with every horror videogame in existence basically except for Resident Evil for some reason I could never get into that series ANYWAY WAY off topic........), so coming back a few years later had me very ???? puzzled about how the theories had reconstructed themselves around Hylia and Demise and endless cycles (it's not that it wasn't a thing before, but I wouldn't say it was as much a Series Trademark as it is now).

But yeah. Ganondorf having his own motivations makes him immediately stronger as an antagonist, especially since his deal is quite complicated all things considered.

I am having a thought about how a lot of Zelda villains' motivation is a sort of rebellion against nature. I have scratched enough digital paper about Ganondorf's situation, but like... Minish Cap Vaati is also very much motivated by his refusal to remain small and whimsical and seize power instead of staying in his lane (and then he gets horny in Four Swords so, maybe let's not go there), Zant is.... Zant, Hilda in A Link Between Worlds has been cosmically punished for trying to reject the Goddesses and create a world on its own terms --like SERIOUSLY this is HORRIFYING I feel like we don't talk enough about how utterly nightmarish of a reality that paints for Hyrule as a whole-- Ghirahim is devoted but fights for the side more or less destined to lose... It's interesting how Hyrule is hostile to change and anything that threatens the status quo.

(then you have the occasional Majora and Yuga, whomst I dooon't think really fit the above category --to their full credit! and then you have Bellum, who is..... a blob...... And I don't remember enough from either the Oracle games or about Malladus to put them in either category, I need to replay those games)

Hyrule really has this frightening quality to it when you stare at it for too long: that your only two options are to either graciously submit to your assigned cosmic role, or fight it and become darkness incarnate in some way. A Link Between Worlds showed, quite starkly, that trying to escape that binary choice is *not an option*.

#asks#thoughts#totk#ganondorf#demise#skyward sword#vaati#hilda#zant#girahim#a link between worlds#minish cap#thanks for the ask!!#sorry I'm getting through them now that I'm not dying as much from covid#I fell over because I had too much fever#fun + normal#but yeah weirdly enough I think this is what compels me about the series now more than anything else#what can one do in such a reality how do you self-define what is freedom what is agency#man a link between worlds is such a good game tho#see this is why I had higher standards for totk's worldbuilding

95 notes


Text

A white animation student’s take on Soul and POC cartoons

This got long but there’s lots of pretty pictures to go with it.

Hi, I’m Shire and I’m as white as a ripped-off Pegasus prancing on a stolen van. Feel free to add to my post, especially if you are poc. The next generation of animators needs your voice now more than ever.

My opinion doesn’t matter as much here because I’m not part of the people being represented.

But I am part of the people to whom this film is marketed, and as the market, I think I should be Very Aware of what media does to me.

And as the future of animation, I need to do something with what I know.

I am very white. I have blue eyes and long blond hair. I’ve seen countless protagonists, love interests, moms, and daughters that look like me. If I saw an animated character that looks like me turn into a creature for the majority of a movie, I would cheer. Bring it on! I have plenty of other representation that tells me I’m great just the way I am, and I don’t need to change to be likable.

The moment Soul’s premise was released, many people of color expressed mistrust and disappointment on social media. Let me catch you up on the plot according to the new (March 2020) trailer. (It’s one of those dumb modern trailers that tells you the entire plot of the movie including the climax, so I recommend only watching half of it.)

Our protagonist, Joe Gardner, has a rich (not in the monetary sense) and beautiful life. He has dreams! He wants to join a jazz band! So far his life looks, to me, comforting, amazing, heartfelt, and real. I’m excited to learn about his family and his music.

Some Whoknowswhat happens, and he enters a dimension where everyone, himself included, is represented by glowing, blue, vaguely humanoid creatures. They’re adorable! But they sure as heck aren’t brown. The most common response seems to be dread at the idea of the brown human protagonist spending the majority of his screen time as a not-brown, not-human creature.

The latest trailer definitely makes that look pretty darn true. He does spend most of the narrative - chronologically - as a blob.

but

That isn’t the same as his screen time.

From the look of the trailer, Joe and his not-yet-born-but-already-tired-of-life soul companion tour Joe’s story in all of its brown-skinned, human-shaped, life-loving glory. The movie is about life, not about magic beans that sing and dance about burping (though I won’t be surprised if that happens too.)

Basically! My conclusion is “it’s not as bad as it looked at first, and it looks like a wonderful story.”

but

That doesn’t mean it’s ok.

Yes, Soul is probably going to be a really important and heartfelt story about life, the goods, the bads, the dreams, and the bonds. That story uses a fun medium to view that life; using bright, candy-bowl colors and a made-up world to draw kids in with their parents trailing behind.

It’s a great story and there’s no reason to not create a black man for the lead role. There’s no reason not to give this story to people of color. It’s not a white story. This is great!

Except...

we’ve kind of

done this

a lot

The Book of Life and Coco also trade in their brown-skinned cast for a no-skinned cast, but I don’t know enough about Mexican culture to say those are bad and I haven't picked up on much pushback to those. There’s more nuance there, I think.

I cut the above pics together to show how the entire ensemble changes along with the protagonist. We can lose entire casts of poc. Emperor's New Groove keeps its cast as mostly human so at least we have Pacha

And while the animals they interact with might be poc-coded, there’s nothing very special or affirming about “animals of color.”

So, Soul.

Are we looking at the same thing here?

It’s no secret by now that this is an emerging pattern in animation. But not all poc-starring animated films have this same problem. We have Moana! With deuteragonists (basically co-protagonists) of color, heck yeah.

Aladdin... Pocahontas... The respect those films have for their depicted culture is... an essay for another time. Mulan fits here too. The titular characters’ costars are either white, or blue, and/or straight up animals. But hey, they don’t turn into animals, and neither do the supporting cast/love interests.

DreamWorks’ Home (2015) is also worth mentioning as a poc-led film where the deuteragonist is kind of a purple blob. But the thing I like a lot about Home is that it’s A Nice Story, where there’s no reason for the protagonist to not be poc, so she is poc. Spider-Verse has a black lead with a white (or masked, or animal) supporting cast. But Spider-Verse also has Miles’ dad, mom, uncle, and Peni Parker.

I’d like to see more of that.

And less of this

if you’re still having trouble seeing why this is a big deal, let’s try a little what-if scenario.

This goes out to my fellow white girls (including LGBTA white girls, we are not immune to propaganda racism)

imagine for a second you live in a world where animation is dominated to the point of almost total saturation by protagonist after protagonist who are boys/men. You do get the occasional woman-led film, but maybe pretend that 30 to 40 percent of those films are like

(We’re pretending for a second that Queen Elinor was the protagonist, because I couldn’t think of any animated movies where the white lady protagonist turns into and stays an animal for the majority of the film)

Or, white boys and men, how would you feel if your most popular and marketable representation was this?

Speaking of gender representation, binary trans and especially nonbinary trans people are hard pressed to find representation of who they are without the added twist of Lizard tails or horns and the hand-waving explanation of “this species doesn’t do gender” But again, that’s a different essay.

Let’s look at what we do have. In reality, we (white people) have so much representation that having a fun twist where we spend most of the movie seeing that person in glimpses between colorful, glittering felt characters that reflect our inner selves is ok.

Wait, that aesthetic sounds kind of familiar...

But I digress. Inside Out was a successful and honestly helpful and important movie. I have no doubt in my mind that Soul will meet and surpass it in quality and in message.

There is nothing wrong with turning your protagonist of color into an animal or blob for most of their own movie.

But it’s part of a larger pattern, and that pattern tells people of color that their skin would be more fun if it was blue, or hairy, or slimy, or something. It’s fine to have films like that because heck yeah it would be fun to be a llama. But it’s also fun to not be a llama. It’s fun to be a human. It’s fun to be yourself. I don’t think children of color are told that enough.

At least, not by mainstream studios. (The Breadwinner, produced by Cartoon Saloon)

It’s not like all these mainstream poc movies are the result of racist white producers who want us to equate people of color with animals. In fact, most of those movies these days have people of color very high up, as directors, writers, or at the very least, a pool of consultants of color.

These movies aren’t evil. They aren’t even that intrinsically racist (Pocahontas can go take a hike and rethink its life, but we knew that.) It’s that we need more than just the shape-shifting narratives of our non-white protagonists.

It’s not like there isn’t an enormous pool of ideas, talent, visions and scripts already written and waiting to be produced. There is.

But they somehow don’t make it past the head executives, way above any creative team, who make the decisions, aiming not for top-of-the-line stories, but for the Bottom line of sales.

When Disney acquired Pixar, their main takeover was in the merchandising department. The main target for their merchandise is, honestly, white children.

So is it much of a surprise

that they are more often greenlighting things palatable for as many “discerning” mothers as possible?

I saw just as many Tiana dolls as frog toys on the front page of google, so don’t worry too much about The Princess And The Frog. Kids love her. But I didn’t find any human figures of Kenai from Brother Bear, except for dolls wearing a bear suit.

So. What do I think of Soul?

I think it’s going to be beautiful. I think it’s going to be a great movie.

But I also think people of color deserve more.

Let’s take one more look at the top people who went into making this movie.

Of the six people listed here, five are white. Kemp Powers, one of the screenplay writers, is black.

It’s cool to see women reaching power within the animation industry, but this post isn’t about us.

We need to replace the top execs and get more projects greenlit that send the message that African, Asian, Latinx, Middle Eastern, and every other non-white people are perfect and relatable as the humans they were meant to be.

Disney is big enough that they can - and therefore should - take risks and produce movies that aren’t as “marketable” simply because art needs to be made. People need to be loved.

Come on, millennials and Gen Z. We can do better.

We Will do better.

TLDR: A lot of mainstream animation turns its protagonists of color into animals or other creatures. I (white) don’t think that’s a bad thing, except for the fact that we don’t get enough poc movies that AREN’T weird. Support Soul; it’s not going to be as bad as you think. It’s probably gonna be really good. Let’s make more good movies about people of color that stay PEOPLE of color.

176 notes


Text

The Clown!

How Clowns Have Become Scary

Matthew Burgess

Part One

Clowns, jesters, fools, and other such figures have existed since the days of ancient Egypt. Rome had figures known as Stupidus, and fifth-dynasty Egypt had pygmy clowns. Through the centuries, all clowns had and have one powerful connection; that of misrule, excess, and the unpredictable. They mimic and ridicule, they riddle and tease. They perform over-the-top, crazy antics. They cause mayhem and enjoy it, usurping law and order with unhinged slapstick. However, clowns are just one historical monster that can bring terror to people. Studying monsters brings understanding of the past and the present and shows a great deal of human nature.

Part Two

The word monster has roots in Latin, and the root words mean to warn. Stone Age humans had monsters of their own, and massive biblical monsters haunted other early humans. The idea of the Devil breaks off into other concepts such as demonic possession, witches, and the Antichrist. Jeffrey Jerome Cohen posits that “The Monster Always Escapes.” What he means by this is that no monster is ever really killed or gotten rid of. The death or disappearance of one monster either makes room for a new one or provides an opportunity for the original monster to return with a new face. However, every time the monster returns, its meaning will change based on what is happening in society at that time. No monster ever really dies.

A monster might be new to some people. For example, if Pennywise the Clown only appears every twenty-seven years, then he is new to the people who are children when he comes back. If an urban legend is forgotten because it is no longer relevant, then when the situation is the same in the future, the urban legend will re-surface. As Poole says in Monsters in America, (page 22) “History is horror.” This also refers to the situation out of which a monster is born. Before the Salem Witch Trials, people were less concerned with piety. Some social switch flipped, and suddenly everyone was obsessed with finding the evil and unworthy in their society.

There are several other theories that help understand monsters through history and are key concepts that aid in studying them. A few that stand out are integral to monster culture. The monster is never just what it appears to be. It is a representation of some fear or desire that people experience. The monster defies classification, which also means that they clash with the concept of binaries and logic. Monsters in general are made of things that are distinctly “other,” or outliers to the idea of “normal.” They invite the removal of moral dimensions and make excuses for eradication of the “other.” Monsters are warnings, are representations of both fear and desire, are harbingers of the transitional future. These all tell the story of history and, more specifically, American history. Poole says “The American past...is a haunted house. Ghosts rattle their chains throughout its corridors, under its furniture, and in its small attic places. The historian must resurrect monsters in order to pull history’s victims out of...’the mud of oblivion.’ The historian’s task is necromancy, and it gives us nightmares.” (Monsters in America, Page 24)

Part 3

When my mother was eleven years old, her parents sat her down to watch the original IT movie. She tells me that she had nightmares and trouble sleeping for at least a month afterwards. When I was growing up, clowns were not mentioned. My siblings and I knew that clowns existed because there was a friendly clown named Pooky that we saw once a year at my father’s annual work party. Until I was twelve or thirteen Pooky was the only reference for the word “clown” that I had. After that, I started learning world history and learned about clowns in the context of circuses. To me they were silly people who wore polka dots and colorful wigs, and who painted their faces with the intention to entertain. The concept of the scary clown wasn’t even a shred of an idea to me until later.

When I was fifteen I started going to school for the first time. I suddenly had access to the internet and began absorbing every piece of pop culture that I could possibly handle. The trailers for the new IT movie were just starting to come out, and people were reporting scary clown sightings all over the country. I personally was not then and am not now scared of clowns. However, I could see that people were terrified of them and that fascinated me. I was more interested in the intentions of the people behind the masks than the unexpected presence of them. Fast forward to 2018, and I started watching American Horror Story. Seasons four and seven heavily featured clowns as something scary. There was Twisty the Clown with his terrifying blown-off mouth and tendency to kidnap children and attempt to entertain them, and there was the cult who wore clown masks and intimidated Sarah Paulson’s character. The cult was more effective than not because of the character’s coulrophobia, or fear of clowns.¹ Around the same time I watched the movie Suicide Squad, and became similarly fascinated with the character of the Joker. I started doing research and found that Jared Leto’s Joker was not the first one. There was a theory that proposed that there were three different Jokers, regardless of actor or illustrator. One, the thief and killer. Two, the silly one who had no real reason to perform any of his evil deeds, known as the “Clown Prince of Crime.” Three, the homicidal maniac.

As I’ve said, I am not afraid of clowns. But the reasons why people are afraid of them enthrall me. First, clowns are allowed to say things that the rest of us can’t. They dress up their words as jokes, but they can say the most shocking and inappropriate things. They can challenge those in power with no consequences. Second, humans inherit fear. Studies done in Georgia and Canada show that fear of a thing can be passed down through a family line. For example, if a parent was mauled by a tiger, and then had a child and disappeared, the child would be frightened if they saw a tiger. Third, the face paint of a clown elicits the same response as the uncanny valley. Clowns were first thought to be scary in the late 1940s and 1950s. Clowns worked very closely with children. Adults began to get paranoid about these clowns, grown men, abusing their children. Maybe some were, but the majority merely wanted to make the children laugh and smile. The adults started to tell their children to avoid the clowns. Later, in the ’80s, slasher films were on the rise. Moviemakers were making anything into killers: Audrey the plant, cute little gremlins, worms, blobs, and clowns. Stephen King’s IT was written and released during this time. Since then, many scary clowns have existed: the Joker, Harley Quinn the Harlequin, Pennywise, Twisty, the Jigsaw puppet, the Terrifier. These all serve as a cultural lens to help explain social changes.

Part 4

The monster of the clown resonates with me because the idea of the scary clown is so widespread and can now be passed off as an “everyone knows that” statement. The why fascinates me. Clowns represent both the fear of truth and the fear of lies. Clowns can say the unsayable and topple those in power with the truth. On the other hand, their fixed grins and otherwise blank faces are the embodiment of a lie, because you can’t tell who they are behind the mask.

From the earliest days of human history, there was some form of a clown. The clowns always had something to represent, and they always came back. To look at another point of view, most clowns were simple entertainers turned into frighteners by people who wanted to dispose of them. However, the clowns that were actually scary (Pennywise, Jigsaw, etc.) were warnings of what might happen if you mess with the truth. Pennywise changes form; he is the embodiment of lies. Jigsaw is transparent about his intentions; he is the cold, hard, bitter truth.

The sometimes-maudlin behavior of clowns invites sympathy. It suggests that maybe they are simply misunderstood, that maybe they deserve to be loved. However, they always snap back with something unexpected. It is a general consensus in the monster-f**ker community that clown-f**kers are the lowest of the low. However, if I may loosely quote one of my online followers on the subject: “...Sir Pennywise is a shnack.” Unfortunately, the spelling is a direct quote. I cannot pretend to know why people are attracted to clowns, Pennywise especially, but they are and there’s unfortunately nothing to be done about it.

Putting aside people’s attraction to clowns, to close this thought I’d like to quote Derek Kilmer in saying “the stories we tell say something about us.” Clowns may not be everyone’s fear. However, the culture we as people created also created clowns and the fear of them.

Part 5

Studying monsters can be a useful endeavor. The history of America is the history of monsters. Therefore, if you study monsters, you study America. From the dehumanization of Native Americans by the Pilgrims to the fascination with aliens today, monsters have shaped America and been shaped by American society. This theory is called reciprocal determinism. Instead of one thing causing another, two things cause each other. America’s society has been shaped by witches, by vampires, by zombies, by clowns. And society has, in return, created the monsters it claims to hate so much. People care about monsters. We created them, as they create us.

Clowns represent America’s relationship with truth. Depending on the kind of clown and when it appears, we can determine how Americans deal with lies. Early in the century, clowns were more jovial and friendly. People were complacent with letting bad things get swept under the rug. Harsh truths and cruel facts were ignored and glossed over. Abused spouses and homosexual relationships along with literal genocide and corrupt leadership had people looking the other way, because they were more concerned with image than anything else. But as time went on, people became less concerned with image and more concerned with truth. There are of course those who still value image over truth, but they are the minority. Corrupt leaders cannot hide anymore. LGBT+ folk can finally openly live their truth. Abuse is not tolerated. But at the same time, the clowns are getting scarier. Some people might say that this is simply correlation and not causation, and that is also a valid view, but I believe that it is, without a doubt, causation.

Monsters teach us not only our history, but who we are. They tell us the truth behind our lies. They challenge the master narrative and demonstrate impermanent borders between morality, truth, fear, and desire.

Footnotes

1 This phobia was also featured in the long-running show Supernatural; however, in that show it’s played for humor.

Text

Long overdue update!!

At long last the much promised and oft delayed blog post update that I've been promising off and on for MONTHS. Going to cover a huge range of topics here, therefore nothing that I cover will get extensive depth or attention. Will cover the App Store status, nControl, chimeraTV, electraTV, uicache / ldrestart recent changes / snafu, DalesDeadBug update, cycripter and any known issues that are occurring with any of the above. Will also include a link to a handy tutorial for saving OTA blobs for the 4K AppleTV, just in case we find a way to make them useful!

Saving 4K OTA blobs covered by idownloadblog:

nitoTV App Store

This is several months behind schedule, and at this point it's pretty much entirely my fault. I still need to do some payment processing work on the amazon front regarding declined cards / failed payments, etc. I'm going to be looking into this immediately after I finish writing this post.

If you hadn't noticed, the new nito.tv website launched at the same time chimera(TV) did. You may have also noticed a beta code for people to help beta test it before I finally launch it. There is no way to get this code yet, not until I finish the payment work I mentioned above. Off the top of my head, this is the only thing holding us back anymore.

nControl

Obviously nControl was released a few months ago, to resoundingly positive response (thank you!). It's available on the chariz repo for $10 and is currently my only source of income, so all purchases are greatly appreciated! If you need any additional details about nControl in general I kindly redirect you to the exhaustively documented wiki page that I maintain on the subject: https://wiki.awkwardtv.org/wiki/nControl

The tvOS version is only available through patreon and I'd actually prefer that people no longer go that route. Patreon makes it WAY too much effort to get the money they owe you, so I massively regret doing that in the first place. I just didn't want to launch iOS and tvOS separately and honestly thought the store would wrap up shortly thereafter.

chimeraTV

For the first time (potentially ever) the tvOS jailbreak was released in tandem with the iOS version of the Electra Team's *OS 12 jailbreak. This was a momentous occasion and was a large source of me being delayed from focusing on completing the nitoTV App Store. It's a rock solid jailbreak (especially with the latest release) and I'm quite proud to maintain the tvOS version of it. It covers 12.0 -> 12.1.1 on tvOS; this is due to the fact that Apple staggers version numbers between iOS and tvOS for some unknown and maddening reason. For instance, 12.1.2 on iOS == 12.1.1 on tvOS. It drives me just as mad as it does the rest of you, but it's been like that since the beginning of ARM based AppleTVs (2nd gen +), so I doubt it will ever change.

Candidly it was a bit of a challenge to get AppleBeta's awesome UI to cooperate on tvOS, but I'm glad I forced myself to use the same code as much as possible (lots of ifdefs). Since it's written in Swift you can imagine the fight I put up to avoid using the same code base for the UI stuff. Eventually I acquiesced (yes I do make concessions!)

electraTV

Wow it's really been a long time since I've updated this blog (sorry!). electraTV was released several months before chimera (well, the initial versions were; the 11.4.1 iteration wasn't THAT long ago). The electra jailbreak covers ALL versions of 11 (11.0->11.4.1). In its latest jailbreakd2 based iteration it is incredibly stable and reliable. Not much else to say about it!

uicache / ldrestart changes

I wasted most of last week fighting against issues with ldrestart. If you aren't familiar with ldrestart, it is responsible for running after jailbreaking or loading any new tweaks to make sure anything they may inject into gets restarted. The older version of jailbreakd (in backr00m & versions of electraTV that supported 11.2.1->11.3, but not 11.4.1) couldn't handle the speed at which all the daemons get reloaded by ldrestart; this would lead to a lockup that would result in the system eventually rebooting (after being locked up for several minutes).

ldrestart has actually always been an issue, even when I used a kpp bypass in greeng0blin (I'm fairly certain that's accurate!). So as a workaround I used to 'killall -9 backboardd'. That would respring enough different things (PineBoard, HeadBoard et al) that it would be sufficient for the things I most commonly injected. Obviously this is a hacky stopgap, and uicache used to also kill a variety of other processes (lsd, appstored, etc) to help cover things like DalesDeadBug.

After coolstar re-wrote uikittools (including uicache) I decided it was probably a good time for me to take a look at uicache again. If you want to know how much of a hassle and challenge uicache was in the earlier days (pre APFS) read some of the older posts on this blog. Its history is covered ad nauseam.

Since we no longer need to load from /var/mobile/Applications, a lot of the extra hurdles in uicache have ceased to be necessary, essentially all that is really needed is [[LSApplicationWorkspace defaultWorkspace] _LSPrivateRebuildApplicationDatabasesForSystemApps:YES internal:YES user:NO]; + tweak to force App states to return TRUE for isEnabled.

In the course of thinning down uicache I decided it'd be a good time to try and get ldrestart working on tvOS. After battling with it off and on all last week I came up with something that appeared to work pretty consistently on tvOS 12. Instead of being thorough and testing on 10.2.2->11.4.1 as well, I hastily released it. This led some people to get stuck in respring loops / lockups that eventually restarted the device. This was due to the fact that uicache:restart in postinst scripts would trigger ldrestart instead of uicache in nitoTV.

In the older version of uicache there was an issue once our new apps were loaded in the UI: a respring was never "required", but if it didn't occur all applications would exhibit weird behavior where they wouldn't launch, or wouldn't exit once launched, etc. To "fix" that I made it always kill backboardd as a compromise. Since this was also no longer necessary I made uicache killing backboardd "optional" by appending -r. Lack of foresight here: the old nitoTV wouldn't know backboardd wouldn't respring anymore, nor to run ldrestart when finish:restart was received. This led to people getting stuck with a red progress indicator forever when trying to update to the latest (at the time) version of nitoTV.

Due to the depth and gravity of the issue I sidelined getting ldrestart working in backr00m (one of the only places it still has show stopping issues) and reverted to uicache always respringing until I have time to revisit the issue.

In conjunction with deciding I was pouring too much time into this issue, Chimera 1.0.6 was released the other night with massive stability improvements. Libtakeover & related injection was stripped out into inject_criticald, which provided massive stability improvements for the jailbreak; this made getting that out a few hours after the iOS release a very high priority.

The big takeaway from all of this:

* uicache run by itself (no arguments) is sufficient to get apps loaded / removed after installing them into /Applications.

* ldrestart is part of uikittools on tvOS now and should be safe to run on latest electraTV release and chimeraTV release, but won't work at all on backr00m.

If you have installed a tweak and it doesn't seem to be working, try running ldrestart; it should help.

sleepy/wake

With the uicache update came the addition of 'sleepy' and 'wake' binaries. Use them from the command line to sleep or wake your AppleTV.

DalesDeadBug

This was recently updated to spoof newer versions. If you can't seem to get it working after installing it, it's a prime candidate for running ldrestart after installing or making changes that don't seem to be propagating. It works to get SteamLink installed on tvOS 10.2.2, but crashes immediately; not sure if I'm going to be able to fix it. It won't be possible to make that a priority (I looked into it briefly, that's the best I can do for now).

If you need more info on what DalesDeadBug does, please read the wiki page: https://wiki.awkwardtv.org/wiki/DalesDeadBug

Cycripter

If you didn't notice, yesterday I decided to take one more brief detour to rectify a glaring deficiency in recent jailbreaks: the inability to use cycript. I might have my differences with saurik recently, but this is still one of the most amazing projects he ever undertook and gifted to us.

cycripter / CycriptLoader.dylib have been updated and open sourced to make it easier to use cycript on iOS or tvOS. All details necessary can be viewed on the wiki and the git.nito.tv repo.

More Details: https://wiki.awkwardtv.org/wiki/Cycript

Known Issues

I haven't kept a very exhaustive list of these, so I'm only going to cover two that I can think of right now.

* Infuse doesn't work on chimeraTV.

Try launching Infuse before running the jailbreak (so if you are currently in a jailbroken state, reboot first).

If you run the jailbreak after Infuse has already been open it will work. I don't think it is necessarily any jailbreak detection, but it may be some kind of protection from code injection. I'm honestly not sure.

* Music app doesn't work

Try updating to the latest version of chimeraTV from https://chimera.sh (it didn't work in the prior version for me either, but after the latest install it started working).

Wrap-up

That's it for now, my core focus after this post is going to be to wrap up work on my long delayed tvOS App Store. I really hope to get it wrapped up this week or next. Stay tuned! And if you made it down this far, thanks!!

Text

Mapping the Anime Fandom

Online anime discussions seem obsessed with individual anime: there can only be one best anime (and it’s Rakugo, fite me irl). But rather than focusing on specific anime, could we look at what binds them together? I’m talking about anime fans. No anime fan watches only one anime, they watch lots, so if we chart the fandom overlap between anime we could see how different anime series group together. Do they group together based on source material? The type of genre? The release date? Let’s find out!

The Analysis Process (skip this section if uninterested)

First, we need some data on anime fans. Fortunately, we don’t need to conduct an extensive survey, anime fans are happy to publicly catalog their tastes on sites like MyAnimeList.net. To get a random sampling of the active anime fandom, I downloaded the completed anime and profile data of anyone logging into MAL on a couple of days in January. I got data for 7883 users who had watched 1,417,329 anime series in total (enough for 4 lifetimes of constant anime watching).

I filtered out anything that wasn’t a TV series or movie, and any anime with fewer than 1,000 fans in our dataset, leaving us with 380 anime. I then merged anime entries of the same series together, leaving 268 anime to chart.
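As a rough illustration of the filtering and overlap counting described above, here is a minimal Python sketch. The input shape (a mapping from each user to the set of titles they completed), the function names, and the toy threshold are all assumptions made for the example; the post does not show the actual code or say exactly how overlap was measured.

```python
from collections import Counter
from itertools import combinations


def popular_anime(completed, min_fans):
    """Return the set of titles completed by at least `min_fans` users."""
    counts = Counter(title for titles in completed.values() for title in titles)
    return {title for title, n in counts.items() if n >= min_fans}


def fan_overlap(completed, keep):
    """Count, for every pair of kept titles, how many users completed both."""
    overlap = Counter()
    for titles in completed.values():
        kept = sorted(titles & keep)
        for a, b in combinations(kept, 2):
            overlap[(a, b)] += 1
    return overlap


# Hypothetical toy data; the real dataset had 7883 users and a 1,000-fan cutoff.
completed = {
    "user1": {"A", "B", "C"},
    "user2": {"A", "B"},
    "user3": {"B", "C", "D"},
}
keep = popular_anime(completed, min_fans=2)
overlap = fan_overlap(completed, keep)
```

Raw shared-fan counts favor popular titles, so a real analysis might normalize them (for example by the size of the smaller fandom); that choice is not specified in the post.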

To map out the fandom’s tastes, I modeled each anime like it was a beachball floating in a swimming pool. Each beachball had several elastic strings tied to it of varying strength. The strongest string was connected to the anime/beachball that it shared the most fans with. The next strongest string went to the anime/beachball it had the second most fans with, and so on. This way anime with lots of overlapping fans would be drawn together. But to prevent the confused mess of a squeaky beachball bondage orgy, each beachball also bobbed up and down in the pool of water, causing waves that pushed away anything that got too close.

I let this simulation run until it reached an equilibrium point where the pull of the strings balanced against the push of the waves. The result would hopefully be an anime/beachball web where groups of anime with large fandom overlaps formed clusters.
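The beachball model reads like a force-directed layout: springs pull connected nodes together and a short-range push keeps everything from collapsing. The sketch below is one simple way to write that in Python, not the code used for the map. The force laws (linear springs, repulsion that weakens with distance), the parameter values, and the names `relax` and `distance` are assumptions for illustration.

```python
import math
import random


def relax(n, springs, steps=2000, step_size=0.02, repulsion=0.05,
          max_move=0.1, seed=0):
    """Move n points in 2D until spring pull and pairwise push roughly balance.

    springs: list of (i, j, strength) tuples, the "elastic strings".
    Returns a list of [x, y] positions.
    """
    rng = random.Random(seed)
    pos = [[rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)] for _ in range(n)]
    for _ in range(steps):
        force = [[0.0, 0.0] for _ in range(n)]
        # Attraction: each string pulls its two ends toward each other.
        for i, j, strength in springs:
            dx = pos[j][0] - pos[i][0]
            dy = pos[j][1] - pos[i][1]
            force[i][0] += strength * dx
            force[i][1] += strength * dy
            force[j][0] -= strength * dx
            force[j][1] -= strength * dy
        # Repulsion: every pair pushes apart, more strongly when close.
        for i in range(n):
            for j in range(i + 1, n):
                dx = pos[j][0] - pos[i][0]
                dy = pos[j][1] - pos[i][1]
                push = repulsion / (dx * dx + dy * dy + 1e-9)
                force[i][0] -= push * dx
                force[i][1] -= push * dy
                force[j][0] += push * dx
                force[j][1] += push * dy
        # Take a small step along the net force, capped to keep things stable.
        for i in range(n):
            mx = step_size * force[i][0]
            my = step_size * force[i][1]
            length = math.hypot(mx, my)
            if length > max_move:
                mx *= max_move / length
                my *= max_move / length
            pos[i][0] += mx
            pos[i][1] += my
    return pos


def distance(a, b):
    """Euclidean distance between two [x, y] points."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


# Hypothetical example: two strongly linked pairs joined by one weak string.
springs = [(0, 1, 1.0), (2, 3, 1.0), (1, 2, 0.05)]
positions = relax(4, springs)
```

With these toy inputs the strongly linked pairs should settle much closer together than the weakly linked ones, which is the clustering effect the map relies on. The pairwise repulsion loop is quadratic in the number of nodes, which is fine for a few hundred anime but would need a spatial approximation for much larger graphs.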

The Fandom Map

After warming myself on my overheating computer processors, the simulation finally ended with the result below. The font size of each anime name is proportional to how popular it is, and the red lines represent the fandom overlap/elastic strings between beachballs.

A full-size version can be seen here.

Eek, that’s a lot to take in! At first glance there are a few obvious clusters like the Ghibli movies in the bottom right, but it can be hard to identify what common themes are linking these anime. So what do we do? MAL tags to the rescue! (Surely this is the only time those tags have ever been useful). We can use the MAL genre tags to highlight different genres and see if they group together.

The green dots show which anime have the genre tag in the title.

What had once seemed a single unified blob is shown to be split into multiple clusters, but what does it tell us? Each cluster should be judged on two metrics: self-cohesion and its proximity/overlap to other clusters.

Self-cohesion is how tightly grouped a cluster is. The more tightly grouped it is, the more fans tend to stay within that genre and pick anime based on it. So for example, the ecchi cluster is quite tightly grouped in the top left area, telling us that the inclusion (or lack of) ecchi content is important to those fans in choosing what to watch. Alternatively, the lack of cohesion can tell us something too: drama anime are spread out all over the place, suggesting there isn’t a unified group of fans specifically seeking out drama content like there is for romance, slice of life, or action anime.

The proximity to other clusters tells us where fandoms overlap. For example, the thriller and ecchi clusters are at opposite ends, suggesting that generally, the fandoms don’t overlap much. However psychological, mystery, and thriller anime all seem to overlap quite well.

It seems the most prominent split in the fandom is between romance and action anime, with the two of them taking up significant portions of the medium, but overlapping only in ecchi anime.

Recency Bias

As well as plotting genres, we can also see if the release date of an anime influences its position. If fans tend to pick anime based on what’s currently airing, that’d show up with anime of the same year grouping together.

While the middle area is quite mixed, the latest anime series from 2017 cluster significantly on the left side, suggesting anime aired in the same season do tend to clump together with a lot of fan overlap, but only while the anime is less than a year old. After that, the rest of the anime fandom who are pickier about the anime they try outnumber those who watch all the latest series.

Fandom age

Using the publicly listed birthdays of our MAL users, we can see which anime tends to attract the oldest users.

The age map is almost a mirror image of the recency bias map, with the unsurprising result that older anime tends to have older fans, and the most recent anime have the youngest fandom.

Gender differences

The number of women on MAL is likely undercounted as only 17% of those listing their gender state they’re female, but we can still analyse how the gender ratio changes between anime clusters.

Note that green nodes don’t mean a female majority (only 3 anime had a female majority fandom: Yuri on Ice, Free, and Ouran Koukou Host Club), it just means the ratio is higher than average.

There is clearly a gender divide in the anime each gender is watching. The female fans seem to gravitate more towards psychological stories and the Ghibli movies. Unsurprisingly, ecchi stuff is watched almost entirely by males. What’s more surprising is how few female fans there are among the recent releases cluster, suggesting female fans are less likely to follow the latest anime season.

MAL also offers a non-binary gender option. Only 1% of those displaying their gender picked non-binary, totaling just 61 users in my dataset, so I wouldn’t put too much stock in this result, but I was curious about the result...

It’s generally more mixed than the stark male-female divide, although the non-binary fan hotspots align much more with females than males, peaking on Yuri on Ice, Free, and Ouran Koukou Host Club.

Age ratings

Just for fun, we also tried plotting the age ratings of anime.

As would be expected, the R+ nudity ratings are clustered in the harem area, the younger ratings are mostly from the Ghibli films, and the R-17 violence ratings align with the action and psychological/thriller cluster.

Unrated anime

I have an obsession with rating anime, I must rate them all! But some users mark anime as “completed” without ever entering a score (what’s wrong with you?). I tried plotting the ratio of such unrated anime to see if they formed a pattern.

Turns out there’s a bit of a pattern here… ( ͡º ͜ʖ ͡º) The more ecchi it is, the less likely fans are to rate it. I guess they must scratch their rating compulsion itch through other means.

I hope you liked our little analysis. It wouldn’t be possible without the help of Part-time Storier, 8cccc9, and critttler. Thank you all~ I always love discussing this stuff, so feel free to contact me here on tumblr, twitter, or Discord ( Sunleaf_Willow /(^ n ^=)\#1616). My next post (in a week or two) is going to focus on female fans and see how the female fandom’s tastes cluster.

Text

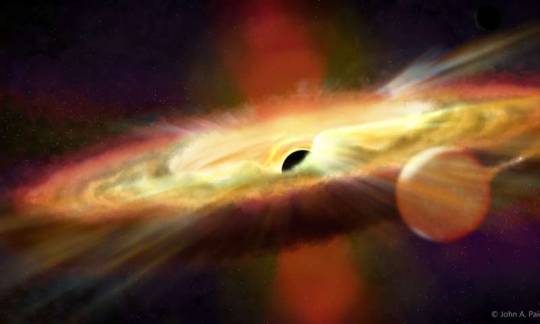

This is the most comprehensive X-ray map of the sky ever made

A new map of the entire sky, as seen in X-rays, looks deeper into space than any other of its kind.

The map, released June 19, is based on data from the first full scan of the sky made by the eROSITA X-ray telescope onboard the Russian-German SRG spacecraft, which launched in July 2019. The six-month, all-sky survey, which began in December and wrapped up in June, is only the first of eight total sky surveys that eROSITA will perform over the next few years. But this sweep alone cataloged some 1.1 million X-ray sources across the cosmos — just about doubling the number of known X-ray emitters in the universe.

These hot and energetic objects include Milky Way stars and supermassive black holes at the centers of other galaxies, some of which are billions of light-years away and date back to when the universe was just one-tenth of its current age.

eROSITA’s new map reveals objects about four times as faint as could be seen in the last survey of the whole X-ray sky, conducted by the ROSAT space telescope in the 1990s (SN: 6/29/91). The new images “are just spectacular to look at,” says Harvey Tananbaum, an astrophysicist at the Harvard-Smithsonian Center for Astrophysics in Cambridge, Mass., not involved in the mission. “You have this tremendous capability of looking at the near and the far … and then, of course, delving in detail to the parts of the images that you’re most interested in.”

Mapping the sky in X-rays

In this map of X-rays emitted by celestial objects across the sky, X-rays are color-coded by energy: Blue signifies the highest energies, followed by green, yellow and red. The red foreground glow comes from hot gas near the solar system, whereas the Milky Way plane appears blue, because gas and dust in the disk absorb all but the most energetic X-rays. The map includes many of the Milky Way’s supernova remnants, including Cassiopeia A and Vela, and a star system called Scorpius X-1, which was the first X-ray source discovered beyond the sun. It also showcases the Milky Way’s star-forming regions, such as the Orion Nebula and the Cygnus Superbubble (SN: 6/29/12), and a mysterious arc of X-rays called the North Polar Spur. Beyond the Milky Way are nearby galaxies, such as the Large Magellanic Cloud, and distant galaxy clusters, such as those contained in the Shapley Supercluster.

Jeremy Sanders, Hermann Brunner and the eSASS team/MPE, Eugene Churazov, Marat Gilfanov (on behalf of IKI)

eROSITA can flag potentially interesting X-ray phenomena, such as flares from stars getting shredded by black holes, which other telescopes with narrower fields of view but better vision can then investigate in detail, Tananbaum says. The new map also allows astronomers to probe enigmatic X-ray features, such as a giant arc of radiation above the plane of the Milky Way called the North Polar Spur.

This X-ray feature may be left over from a nearby supernova explosion, or it might be related to the huge blobs of gas on either side of the Milky Way disk, known as Fermi Bubbles, says eROSITA team member Peter Predehl, an X-ray astronomer at the Max Planck Institute for Extraterrestrial Physics in Garching, Germany (SN: 6/8/20). eROSITA observations could help the Russian and German teams involved in the mission figure out what the spur is.

About 20 percent of the marks on eROSITA’s map are stars in the Milky Way with intense magnetic fields and hot coronae. Scattered among these are star systems containing neutron stars, black holes and white dwarfs, and remnants of supernova explosions. eROSITA also caught several fleeting bursts from events like stellar collisions.

A supernova remnant called Vela (center, reddish green) is one of the most prominent X-ray sources in the sky. The supernova exploded about 12,000 years ago, about 800 light-years away, and overlaps with two other known supernova remnants: Vela Junior (faint purple ring at bottom left) and Puppis A (blue cloud at top right). All three explosions left behind neutron stars, but only the stars at the centers of Vela and Vela Junior are visible to eROSITA.

Peter Predehl and Werner Becker/MPE, Davide Mella

Beyond the Milky Way, most of the X-ray emitters that eROSITA found are supermassive black holes gobbling up matter at the centers of other galaxies (SN: 6/18/20). Such active galactic nuclei comprise 77 percent of the catalog.

Distant clusters of galaxies made up another 2 percent of eROSITA’s haul. These clusters were visible to the telescope thanks to the piping hot gas that fills the space between galaxies in each cluster, which emits an X-ray glow.

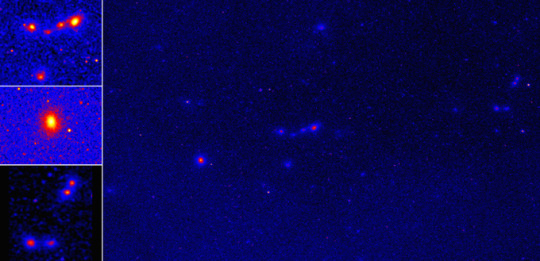

The Shapley Supercluster (pictured at right) is composed of many smaller clumps of galaxies about 650 million light-years away. Each blob of X-rays in this picture, which spans 180 million light-years, is a galaxy cluster that contains hundreds to thousands of galaxies. Zoomed-in images on the left showcase a few of the most massive clusters in the bunch.

Esra Bulbul and Jeremy Sanders/MPE

“At present, we probably know about a little less than 8,000 clusters of galaxies,” says Craig Sarazin, an astronomer at the University of Virginia in Charlottesville not involved in the work. But over its four-year mission, eROSITA is expected to find a total of 50,000 to 100,000 clusters. In the first sweep alone, it picked up about 20,000.

That census could give astronomers a much better sense of the sizes and distributions of galaxy clusters over cosmic history, Sarazin says. And this, in turn, may give new insight into features of the universe that govern cluster formation and evolution. That includes the precise amount of invisible, gravitationally binding dark matter out there, and how fast the universe is expanding.

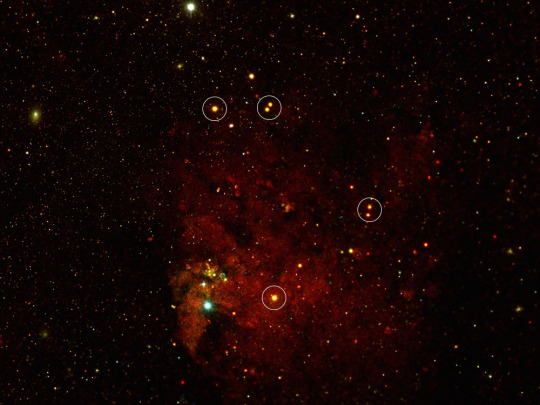

The Large Magellanic Cloud is a small galaxy that orbits the Milky Way. This little galactic neighbor emits a diffuse X-ray glow from its interstellar material (red) as well as brighter pinpoints of X-rays from supernova remnants (circles) and binary star systems.

Frank Haberl and Chandreyee Maitra/MPE

This glowing ring is thought to have originated from a burst of X-rays emitted by the black hole and companion star at its center, which was detected by other telescopes in early 2019. Some of the X-rays in the outburst were scattered into a ring by a dust cloud that the radiation encountered. That scattering delayed the X-rays in the ring on their way to Earth, allowing the eROSITA telescope to detect them during its sky survey in February 2020, one year after the outburst was originally detected.

Georg Lamer/Leibniz-Institut für Astrophysik Potsdam, Davide Mella

The Carina Nebula, about 7,500 light-years away, is one of the largest diffuse nebulae in our galaxy. It’s home to many massive, young stars, as well as a smaller nebula called Homunculus. That plume formed when one of the stars in the Eta Carinae system blew up, a celestial explosion famously observed in 1843 (SN: 1/4/10).

Manami Sasaki/Dr. Karl Remeis Observatory/FAU, Davide Mella

Closer to home, observations of supernova remnants could help clear up some confusion about the life cycles of big stars, says eROSITA team member Andrea Merloni, an astronomer also at the Max Planck Institute for Extraterrestrial Physics. Past X-ray surveys have found fewer supernova remnants than theorists expect to see, based on how many massive stars they think have blown up over the course of the galaxy’s history. But eROSITA observations are now revealing plumes of debris that could be previously overlooked stellar graves. “Maybe we’ll start balancing this budget between the expected number of supernovae and the ones that we are detecting,” Merloni says.

eROSITA is now beginning its second six-month, all-sky survey. When combined, the telescope’s eight total maps will be able to reveal objects one-fifth as bright as those that could be seen on a single map. That not only allows astronomers to see more X-ray sources in more detail, but also to track how objects in the X-ray sky are changing over time.

from Tips By Frank https://www.sciencenews.org/article/xray-map-sky-erosita-telescope-milky-way

Text

Insights into Why Hyperbola GNU/Linux is Turning into Hyperbola BSD

In late December 2019, Hyperbola announced that they would be making major changes to their project. They have decided to drop the Linux kernel in favor of forking the OpenBSD kernel. This announcement came only months after Project Trident announced that they were going in the opposite direction (from BSD to Linux).

Hyperbola also plans to replace all software that is not GPL v3 compliant with new versions that are.

To get more insight into the future of their new project, I interviewed Andre, co-founder of Hyperbola.

Why Hyperbola GNU/Linux Turned into Hyperbola BSD

It’s FOSS: In your announcement, you state that the Linux kernel is “rapidly proceeding down an unstable path”. Could you explain what you mean by that?

Andre: First of all, it’s including the adoption of DRM features such as HDCP (High-bandwidth Digital Content Protection). Currently there is an option to disable it at build time, however there isn’t a policy that guarantees us that it will be optional forever.

Historically, some features began as optional ones until they reached total functionality. Then they became forced and difficult to patch out. Even if this does not happen in the case of HDCP, we remain cautious about such implementations.

Another of the reasons is that the Linux kernel is no longer getting proper hardening. Grsecurity stopped offering public patches several years ago, and we depended on that for our system’s security. Although we could use their patches still for a very expensive subscription, the subscription would be terminated if we chose to make those patches public.

Such restrictions go against the FSDG principles that require us to provide full source code, deblobbed, and unrestricted, to our users.

KSPP is a project that was intended to upstream Grsec into the kernel, but thus far it has not come close to reaching Grsec / PaX level of kernel hardening. There also have not been many recent developments, which leads us to believe it is now an inactive project for the most part.

Lastly, the interest in allowing Rust modules into the kernel is a problem for us, due to Rust trademark restrictions which prevent us from applying patches in our distribution without express permission. We patch to remove non-free software and unlicensed files, and to enhance user privacy wherever applicable. We also expect our users to be able to re-use our code without any additional restrictions or permission required.

This is also in part why we use UXP, a fully free browser engine and application toolkit without Rust, for our mail and browser applications.

Due to these restrictions, and the concern that it may at some point become a forced build-time dependency for the kernel, we needed another option.

It’s FOSS: You also said in the announcement that you would be forking the OpenBSD kernel. Why did you pick the OpenBSD kernel over the FreeBSD kernel, the DragonflyBSD kernel or the MidnightBSD kernel?

Andre: OpenBSD was chosen as our base for hard-forking because it’s a system that has always had quality code and security in mind.

Some of their ideas which greatly interested us were new system calls, including pledge and unveil, which add additional hardening to userspace, and the removal of the systrace system policy-enforcement tool.

They also are known for Xenocara and LibreSSL, both of which we had already been using after porting them to GNU/Linux-libre. We found them to be well written and generally more stable than Xorg/OpenSSL respectively.

None of the other BSD implementations we are aware of have that level of security. We also were aware LibertyBSD has been working on liberating the OpenBSD kernel, which allowed us to use their patches to begin the initial development.

It’s FOSS: Why fork the kernel in the first place? How will you keep the new kernel up-to-date with newer hardware support?

Andre: The kernel is one of the most important parts of any operating system, and we felt it is critical to start on a firm foundation moving forward.

For the first version we plan to keep in synchronization with OpenBSD where it is possible. In future versions we may adapt code from other BSDs and even the Linux kernel where needed to keep up with hardware support and features.

We are working in coordination with Libreware Group (our representative for business activities) and have plans to open our foundation soon.

This will help to sustain development, hire future developers and encourage new enthusiasts for newer hardware support and code. We know that deblobbing isn’t enough because it’s a mitigation, not a solution for us. So, for that reason, we need to improve our structure and go to the next stage of development for our projects.

It’s FOSS: You state that you plan to replace the parts of the OpenBSD kernel and userspace that are not GPL compatible or non-free with those that are. What percentage of the code falls into the non-GPL zone?

Andre: It’s around 20% in the OpenBSD kernel and userspace.

Mostly, the non-GPL compatible licensed parts are under the Original BSD license, sometimes called the “4-clause BSD license” that contains a serious flaw: the “obnoxious BSD advertising clause”. It isn’t fatal, but it does cause practical problems for us because it generates incompatibility with our code and future development under GPLv3 and LGPLv3.

The non-free files in OpenBSD include files without an appropriate license header, or without a license in the folder containing a particular component.

If a file doesn’t carry a license that gives users the four essential freedoms, and it has not been explicitly placed in the public domain, it isn’t free software. Some developers think that code without a license is automatically in the public domain. That isn’t true under today’s copyright law; rather, all copyrightable works are copyrighted by default.
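To make the license-header point concrete, here is a minimal Python sketch of the kind of check such an audit might start with. The marker list and the name has_license_notice are assumptions made for illustration, not Hyperbola's actual tooling, and a real audit would also need to look for a license file covering the folder.

```python
# Hypothetical markers; a real audit would use a much fuller list.
LICENSE_MARKERS = (
    "spdx-license-identifier:",
    "permission to use, copy, modify",
    "redistribution and use in source and binary forms",
    "gnu general public license",
    "public domain",
)


def has_license_notice(source_text: str, head_lines: int = 40) -> bool:
    """Return True if a known license marker appears near the top of the file."""
    # License headers normally sit at the top, so only the first lines are read.
    head = "\n".join(source_text.splitlines()[:head_lines]).lower()
    return any(marker in head for marker in LICENSE_MARKERS)
```

A file for which this returns False is only a candidate for manual review; the sketch cannot tell whether a folder-level license applies.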

The non-free firmware blobs in OpenBSD include various hardware firmwares. These firmware blobs also occur in the Linux kernel and have been manually removed by the Linux-libre project for years following each new kernel release.

They are typically in the form of a hex encoded binary and are provided to kernel developers without source in order to provide support for proprietary-designed hardware. These blobs may contain vulnerabilities or backdoors in addition to violating your freedom, but no one would know since the source code is not available for them. They must be removed to respect user freedom.
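As an illustration of what "hex encoded binary" means in practice, the following Python sketch flags source text that consists mostly of hex byte literals, as a C array holding firmware would. The function name looks_like_hex_blob and both thresholds are assumptions chosen for the example; this is not how Linux-libre or Hyperbola actually detect blobs, and a flagged file would still need human review.

```python
import re

# A single byte literal such as 0x1f or 0xA0.
HEX_BYTE = re.compile(r"0x[0-9a-fA-F]{2}")
# Any identifier-like or numeric token in the source text.
TOKEN = re.compile(r"[A-Za-z0-9_]+")


def looks_like_hex_blob(source_text: str,
                        min_bytes: int = 64,
                        min_ratio: float = 0.5) -> bool:
    """Heuristic: True if the text is dominated by hex byte literals."""
    tokens = TOKEN.findall(source_text)
    if not tokens:
        return False
    hex_count = sum(1 for t in tokens if HEX_BYTE.fullmatch(t))
    # Require both a minimum absolute count and a minimum share of all
    # tokens, so ordinary code with a few hex constants is not flagged.
    return hex_count >= min_bytes and hex_count / len(tokens) >= min_ratio
```

Usage would be something like calling looks_like_hex_blob(open(path).read()) on each file and listing the hits for manual inspection.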

It’s FOSS: I was talking with someone about HyperbolaBSD and they mentioned HardenedBSD. Have you considered HardenedBSD?

Andre: We had looked into HardenedBSD, but it was forked from FreeBSD. FreeBSD has a much larger codebase. While HardenedBSD is likely a good project, it would require much more effort for us to deblob and verify licenses of all files.

We decided to use OpenBSD as a base to fork from instead of FreeBSD due to their past commitment to code quality, security, and minimalism.

It’s FOSS: You mentioned UXP (or Unified XUL Platform). It appears that you are using Moonchild’s fork of the pre-Servo Mozilla codebase to create a suite of applications for the web. Is that about the size of it?

Andre: Yes. Our decision to use UXP was for several reasons. We were already rebranding Firefox as Iceweasel for several years to remove DRM, disable telemetry, and apply preset privacy options. However, it became harder and harder for us to maintain when Mozilla kept adding anti-features, removing user customization, and rapidly breaking our rebranding and privacy patches.

After FF52, all XUL extensions were removed in favor of WebExt and Rust became enforced at compile time. We maintain several XUL addons to enhance user-privacy/security which would no longer work in the new engine. We also were concerned that the feature limited WebExt addons were introducing additional privacy issues. E.g. each installed WebExt addon contains a UUID which can be used to uniquely and precisely identify users (see Bugzilla 1372288).

After some research, we discovered UXP and that it was regularly keeping up with security fixes without rushing to implement new features. They had already disabled telemetry in the toolkit and remain committed to deleting all of it from the codebase.

We knew this was well-aligned with our goals, but still needed to apply a few patches to tweak privacy settings and remove DRM. Hence, we started creating our own applications on top of the toolkit.

This has allowed us to go far beyond basic rebranding/deblobbing as we were doing before and create our own fully customized XUL applications. We currently maintain Iceweasel-UXP, Icedove-UXP and Iceape-UXP in addition to sharing toolkit improvements back to UXP.

It’s FOSS: In a forum post, I noticed mentions of HyperRC, HyperBLibC, and hyperman. Are these forks or rewrites of current BSD tools to be GPL compliant?

Andre: They are forks of existing projects.

Hyperman is a fork of our current package manager, pacman. As pacman does not currently work on BSD, and the minimal support it had in the past was removed in recent versions, a fork was required. Hyperman already has a working implementation using LibreSSL and BSD support.

HyperRC will be a patched version of OpenRC init. HyperBLibC will be a fork from BSD LibC.

It’s FOSS: Since the beginning of time, Linux has championed the GPL license and BSD has championed the BSD license. Now, you are working to create a BSD that is GPL licensed. How would you respond to those in the BSD community who don’t agree with this move?

Andre: We are aware that there are disagreements between the GPL and BSD world. There are even disagreements over calling our previous distribution “GNU/Linux” rather than simply “Linux”, since the latter definition ignores that the GNU userspace was created in 1984, several years prior to the Linux kernel being created by Linus Torvalds. It was the two different pieces of software combined that made a complete system.

One of the primary differences from BSD is that the GPL requires that our source code be made public, including future versions, and that it can only be used in tandem with compatibly licensed files. BSD systems do not have to share their source code publicly, and may bundle themselves with various licenses and non-free software without restriction.

Since we are strong supporters of the Free Software Movement and wish that our future code remain in the public space always, we chose the GPL.

It’s FOSS: I know at this point you are just starting the process, but do you have any idea when you might have a usable version of HyperbolaBSD available?

Andre: We expect to have an alpha release ready by 2021 (Q3) for initial testing.

It’s FOSS: How long will you continue to support the current Linux version of Hyperbola? Will it be easy for current users to switch over?

Andre: As per our announcement, we will continue to support Hyperbola GNU/Linux-libre until 2022 (Q4). We expect there to be some difficulty in migration due to ABI changes, but will prepare an announcement and information on our wiki once it is ready.

It’s FOSS: If someone is interested in helping you work on HyperbolaBSD, how can they go about doing that? What kind of expertise would you be looking for?

Andre: Anyone who is interested and able to learn is welcome. We need C programmers and users who are interested in improving security and privacy in software. Developers need to follow the FSDG principles of free software development, as well as the YAGNI principle which means we will implement new features only as we need them.

Users can fork our git repository and submit patches to us for inclusion.

It’s FOSS: Do you have any plans to support ZFS? What filesystems will you support?

Andre: ZFS support is not currently planned, because it uses the Common Development and Distribution License, version 1.0 (CDDL). This license is incompatible with all versions of the GNU General Public License (GPL).

It would be possible to write new code under GPLv3 and release it under a new name (e.g. HyperZFS); however, there is no official decision to include ZFS compatibility code in HyperbolaBSD at this time.

We have plans on porting BTRFS, JFS2, NetBSD’s CHFS, DragonFlyBSD’s HAMMER/HAMMER2 and the Linux kernel’s JFFS2, all of which have licenses compatible with GPLv3. Long term, we may also support Ext4, F2FS, ReiserFS and Reiser4, but they will need to be rewritten due to being licensed exclusively under GPLv2, which does not allow use with GPLv3. All of these file systems will require development and stability testing, so they will be in later HyperbolaBSD releases and not for our initial stable version(s).

I would like to thank Andre for taking the time to answer my questions and for revealing more about the future of HyperbolaBSD.

What are your thoughts on Hyperbola switching to a BSD kernel? What do you think about a BSD being released under the GPL? Please let us know in the comments below.

If you found this article interesting, please take a minute to share it on social media, Hacker News or Reddit.

from It's FOSS https://itsfoss.com/hyperbola-linux-bsd/

Insights into Why Hyperbola GNU/Linux is Turning into Hyperbola BSD was initially seen on http://alaingonza.com/

from https://alaingonza.com/2020/01/16/insights-into-why-hyperbola-gnu-linux-is-turning-into-hyperbola-bsd/


Text

2019 New Technologies (for me) / end of year review

I hate to break long standing traditions, so here's my tech in review blog entry for 2019.

If you are super bored, here are my previous revisions:

2015 in review

2016 in review

2017 in review

2018 in review

This may be a little bit different from the other in review articles, as many of the things I learned this last year were project leadership skills, and less about understanding the details of some tech. I sometimes joke that I've become an OOP programmer: Outline, OneNote and Powerpoint. There's a lot of truth to that.

Languages

Upon review, I played with a lot of languages this year. With the exception of TypeScript, most of these were for tool or future development. That last one is the most interesting: I learned Julia, for example, knowing that eventually Julia may be a thing we want to deploy, in some AI/ML microservices.

Other languages were me looking for a good language for tools: simple syntax, good target environment, and developer OS support that we can use in the mixed OS environment I find myself in.

In 2020 I know which existing decision I want to lean into (we have a smattering of Python), and where I want to advocate for change (broader adoption of Powershell). Which isn't as revolutionary as I was hoping.

TypeScript / Angular 5

I found myself, by default, shepherding a bunch of teams on Angular 5 for a while. I did some work in Angular 1, but that may have been just when ES6 was released.

So I found myself coming up to speed in modern Angular, which includes TypeScript, if just enough to make architectural decisions that made sense in practice. That also meant learning about some other related topics: RxJS and Puppeteer.

I found TypeScript an interesting compromise of a language. It makes some of the same tradeoffs as Flow, but is more willing to introduce syntax. But I played around with it enough to find the weird corners, and understand some of the code style tradeoffs I could make (fully immutable structures with RxJS and clever abusage of the (weak) type system.)

ReasonML

This was a for fun language. I was interested in a functional language that didn't couple a bunch of type syntax with the rest of the functional programming goodness.

Reason is a modern syntax layer on top of OCaml, which is cool. Reason can target either the OCaml compiler (native binaries) or JavaScript. This versatility is very interesting: can I trade off a static binary experience with a "whatever, Node is everywhere" when it's appropriate?

Julia

Read the book. Thought it was an interesting take on Go's "eh, maybe OOP isn't such a good idea" general philosophy. (Yes, I know I'm being reductive in both cases).

Racket

It's fun to see languages with really good macro functionality, and especially languages that claim to make it easy to make languages with the language. Part of me wants a language with a simple, consistent syntax and good standard library. (Smalltalk is certainly the former). That's what I was looking for here.

It was interesting to sometimes feel that I was playing with "new and optional" libraries... that were introduced shortly after 9/11. Not sure how I got that feeling, and it might be slightly unfair, but sometimes it felt like it.

I was able to prototype an HTTP(s) request interface I really liked: based on curl's config file syntax, with a couple more parens. So that was kind of cool.

Was hoping to find a good language I could use for scripting type tasks. Racket turned out to not be it: in addition to having to think inside out a bit sometimes (lisp), I had issues with the package manager across the proxy at work.

Powershell

Oddly, I think Powershell might be the shell scripting language I want. It's a little awkward and verbose, but at work the default platform is Windows, and as a development team we support Linux VMs and eventually OS X. Powershell Core is available for all of those, without having to worry about exactly what version of bash (or not) is installed on the box.

(And no, we're not using WSL..)

I played with Powershell back in 2016, but I've moved my daily driver Windows shell to it, and it's trying to be my default for shell scripts (in spite of having a bit of a shelljs culture and a blossoming Python culture).

Oh, but one thing I hate about Powershell: it assumes you're running a black background, and if you don't, text becomes hard to read, in some cases without a way to change it. Could not believe my shell had opinions about my terminal coloring.

DevOpsey Stuff

Mostly stayed away from this side of the fence this year. Or made minor improvements over work done last year.

Misc

Graphing