#neural pruning

Text

#70 Autism is a Pruning Disorder

or, Snipping Synapses & Forking Pathologies: A dis on Litman et al. 2025 in favor of a new & obvious model of ASD

z. LXX

#zine #neuro #asd #autism #academic papers #dr oliver sacks #oliver sacks #princeton #pruning #neural pruning #autism theories #neurodiversity #contradico

12 notes


Text

Cognitive Pruning

Definition: Cognitive pruning is a phenomenon in which the human brain selectively eliminates or weakens less relevant or less frequently accessed memories and information to make room for the retention and consolidation of more important or frequently used knowledge and experiences. It is an adaptive process that helps optimize memory resources and prioritize information based on its significance and utility.

Cognitive pruning aligns with the concept of “epistemic relevance” in epistemology, the branch of philosophy concerned with knowledge and belief. Epistemic relevance explores how individuals determine which information is relevant to their beliefs and understanding of the world. Cognitive pruning can be seen as a practical manifestation of this philosophical concept, as it reflects the brain’s innate ability to discern and prioritize information deemed relevant to one’s cognitive processes.

“In the labyrinthine meadows of memory, the mind becomes an efficient gardener, trimming away the overgrown vines of trivial recollections to nurture the blooming roses of knowledge. Cognitive pruning, the brain’s art of forgetting, is the sculptor of our mental landscape, ensuring that the most meaningful and useful memories take root and flourish.”

-Me. Today. Just Now

#neural plasticity #Memory consolidation #forgetting curve #selective attention #neurogenesis #synaptic pruning #Hebbian Plasticity #Long-Term Potentiation #Spotify

3 notes


Text

The whole "the brain isn't fully mature until age 25" bit is actually a fairly impressive piece of pseudoscience, considering how incredibly stupidly it misinterprets the data it's based on.

Okay, so: there's a part of the human brain called the "prefrontal cortex" which is, among other things, responsible for executive function and impulse control. Like most parts of the brain, it undergoes active "rewiring" over time (i.e., pruning unused neural connections and establishing new ones), and in the case of the prefrontal cortex in particular, this rewiring sharply accelerates during puberty.

Because the pace of rewiring in the prefrontal cortex is linked to specific developmental milestones, it was hypothesised that it would slow down and eventually stop in adulthood. However, the process can't directly be observed; the only way to tell how much neural rewiring is taking place in a particular part of the brain is to compare multiple brain scans of the same individual performed over a period of time.

Thus, something called a "longitudinal study" was commissioned: the same individuals would undergo regular brain scans over a period of many years, beginning in early childhood, so that their prefrontal development could accurately be tracked.

The longitudinal study was originally planned to follow its subjects up to age 21. However, when the predicted cessation of prefrontal rewiring was not observed by age 21, additional funding was obtained, and the study period was extended to age 25. The predicted cessation of prefrontal development wasn't observed by age 25, either, at which point the study was terminated.

When the mainstream press got hold of these results, the conclusion that prefrontal rewiring continues at least until age 25 was reported as prefrontal development finishing at age 25. Critically, this is the exact opposite of what the study actually concluded. The study was unable to identify a stopping point for prefrontal development because no such stopping point was observed for any subject during the study period. The only significance of age 25 is that no subjects were tracked beyond this age because the study ran out of funding!

It gets me when people try to argue against the neuroscience-proves-everybody-under-25-is-a-child talking point by claiming that it's merely an average, or that prefrontal development doesn't tell the whole story. Like, no, it's not an average – it's just bullshit. There's no evidence that the cited phenomenon exists at all. If there is an age where prefrontal rewiring levels off and stops (and it's not clear that there is), we don't know what age that is; we merely know that it must be older than 25.
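The reasoning above is the logic of right-censored data. Here is a minimal Python sketch, assuming each subject's scans can be boiled down to hypothetical `(age, rewiring_detected)` pairs (real scans give continuous measurements, not yes/no answers). If rewiring is still detected at the final scan, the only honest output is a lower bound, not a stopping age.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StopEstimate:
    age: int        # an age at which a scan was actually taken
    censored: bool  # True: rewiring was still seen at the last scan, so the
                    # real stopping age is only known to be later than `age`


def estimate_stop_age(scans: list[tuple[int, bool]]) -> StopEstimate:
    """Estimate when rewiring stopped for one subject.

    `scans` holds hypothetical (age, rewiring_detected) pairs sorted by age.
    """
    if not scans:
        raise ValueError("need at least one scan")
    last_age, still_rewiring = scans[-1]
    if still_rewiring:
        # The stopping point, if there is one, lies beyond the study window.
        return StopEstimate(age=last_age, censored=True)
    # Otherwise report the first scan of the final unbroken run of
    # "no rewiring" results.
    stop_age = last_age
    for age, rewiring in reversed(scans):
        if rewiring:
            break
        stop_age = age
    return StopEstimate(age=stop_age, censored=False)


# A subject scanned yearly from 6 to 25 who is still rewiring at 25:
yearly = [(age, True) for age in range(6, 26)]
print(estimate_stop_age(yearly))  # StopEstimate(age=25, censored=True)
```

Extending the study window (from 21 to 25, in the story above) only moves the lower bound; it never turns a censored result into an observed stopping age by itself.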

27K notes


Text

like, when I was in college in 200[mumble], well before the current AI boom, I took a class with AI in the name, taught by an experienced person in the field. we covered minimax with alpha-beta pruning (the sort of thing you would use when building a video game AI), support vector machines, neural networks, planning, and all kinds of other things I've forgotten about. there wasn't any sense that "AI" can only refer to human-ish intelligence, just that the field is ill-defined (just like the term "intelligence").

i think the idea that an LLM isn't "real AI" is just a result of the massive overhype AI is experiencing right now, with the desire both to put AI in everything and to call everything AI. but I suspect that if you took chatgpt or stable diffusion back in time 20, 30, 40 years, showed them to people doing work in the field and asked "is this AI", you'd definitely get a good chunk of people saying "yes". of course I could be talking out of my ass here, I'm not a historian of AI, but that idea fits nicely with the fact that neural networks are actually a fairly old field of study.
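For anyone who hasn't met it, minimax with alpha-beta pruning from that class fits in a few lines. This is a generic Python sketch, not code from any particular course or game engine: the game tree is assumed to be nested lists whose leaves are scores from the maximizing player's point of view, and a branch is abandoned as soon as it can no longer change the final decision.

```python
import math
from typing import Union

# A game tree: either a leaf score or a list of child subtrees.
Tree = Union[int, list["Tree"]]


def alphabeta(node: Tree, maximizing: bool,
              alpha: float = -math.inf, beta: float = math.inf) -> float:
    """Minimax value of `node`, skipping branches that cannot matter.

    alpha: best score MAX is already guaranteed along the current path.
    beta:  best score MIN is already guaranteed along the current path.
    """
    if isinstance(node, int):
        return node  # leaf: static evaluation
    if maximizing:
        value = -math.inf
        for child in node:
            value = max(value, alphabeta(child, False, alpha, beta))
            alpha = max(alpha, value)
            if alpha >= beta:
                break  # MIN already has a better option elsewhere: prune
        return value
    value = math.inf
    for child in node:
        value = min(value, alphabeta(child, True, alpha, beta))
        beta = min(beta, value)
        if alpha >= beta:
            break  # MAX already has a better option elsewhere: prune
    return value


# MAX picks a branch, then MIN picks a leaf inside it.
tree = [[3, 5], [6, 9], [1, 2]]
print(alphabeta(tree, maximizing=True))  # 6
```

In the last branch, MIN's first leaf (1) is already worse for MAX than the 6 it has secured, so the leaf 2 is never evaluated. The answer is the same as plain minimax; only the work is smaller.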

220 notes


Note

Do your robots dream of electric sheep, or do they simply wish they did?

So here's a fun thing, there's two types of robots in my setting (mimics are a third but let's not complicate things): robots with neuromorphic, brick-like chips that are more or less artificial brains, who can be called Neuromorphs, and robots known as "Stochastic Parrots" that can be described as "several chat-gpts in a trenchcoat" with traditional GPUs that run neural networks only slightly more advanced than the ones that exist today.

Most Neuromorphs dream, Stochastic Parrots kinda don't. Most of my OCs are primarily Neuromorphs. More juicy details below!

The former tend to have more spontaneous behaviors and human-like decision-making ability, able to plan far ahead without needing to rely on any tricks like writing down instructions and checking them later. They also have significantly better capacity to learn new skills and make novel associations and connections between different forms of meaning. Many of these guys dream, as it's a behavior inherited from the humans they emulate. Some don't, but only in the way some humans just don't dream. They have the capacity, but some aspect of their particular wiring just doesn't allow for it. Neuromorphs run on extremely low wattage, about 30 watts. They're much harder to train since they're basically babies upon being booted up. Human brain-scans can be used to "Cheat" this and program them with memories and personalities, but this can lead to weird results. Like, if your grandpa donated his brain scan to a company, and now all of a sudden one robot in particular seems to recognize you but can't put their finger on why. That kinda stuff. Fun stuff! Scary stuff. Fun stuff!

The stochastic parrots on the other hand are more "static". Their thought patterns basically run on like 50 chatgpts talking to each other and working out problems via asking each other questions. Despite some being able to act fairly human-like, they only have traditional neural networks with "weights" and parameters, not emotions, and their decision making is limited to their training data and limited memory, as they're really just chatbots with a bunch of modules and coding added on to allow them to walk around and do tasks. Emotions can be simulated, but in the way an actor can simulate anger without actually feeling any of it.

As you can imagine, they don't really dream. They also require way more cooling and electricity than Neuromorphs, their processors having a wattage of like 800, with the benefit that they can be more easily reprogrammed and modified for different tasks. These guys don't really become ruppets or anything like that, unless one was particularly programmed to work as a mascot. Stochastic parrots CAN sort of learn and... do something similar to dreaming? Where they run over previous data and adjust their memory accordingly, tweaking and pruning bits of their neural networks to optimize behaviors. But it's all limited to their memory, which is basically just. A text document of events they've recorded, along with stored video and audio data. Every time a stochastic parrot boots up, it basically just skims over this stored data and acts accordingly, so you can imagine these guys can more easily get hacked or altered if someone changed that memory.

Stochastic parrots aren't necessarily... Not people, in some ways, since their limited memory does provide for "life experience" that is unique to each one-- but if one tells you they feel hurt by something you said, it's best not to believe them. An honest stochastic parrot instead usually says something like, "I do not consider your regarding of me as accurate to my estimated value." if they "weigh" that you're being insulting or demeaning to them. They don't have psychological trauma, they don't have chaotic decision-making, they just have a flow-chart for basically any scenario within their training data, hierarchies and weights for things they value or devalue, and act accordingly to fulfill programmed objectives, which again are usually just. Text in a notepad file stored somewhere.

Different companies use different models for different applications. Some robots have certain mixes of both, like some with "frontal lobes" that are just GPUs, but neuromorphic chips for physical tasks, resulting in having a very natural and human-like learning ability for physical tasks, spontaneous movement, and skills, but "slaved" to whatever the GPU tells it to do. Others have neuromorphic chips that handle the decision-making, while having GPUs running traditional neural networks for output. Which like, really sucks for them, because that's basically a human that has thoughts and feelings and emotions, but can't express them in any way that doesn't sound like usual AI-generated crap. These guys are like, identical to sitcom robots that are very clearly people but can't do anything but talk and act like a traditional robot. Neuromorphic chips require a specialized process to make, but are way more energy efficient and reliable for any robot that's meant to do human-like tasks, so they see broad usage, especially for things like taking care of the elderly, driving cars, taking care of the house, etc. Stochastic Parrots tend to be used in things like customer service, accounting, information-based tasks, language translation, scam detection (AIs used to detect other AIs), etc. There's plenty of overlap, of course. Lots of weird economics and politics involved, you can imagine.

It also gets weirder. The limited memory and behaviors the stochastic parrots have can actually be used to generate a synthetic brain-scan of a hypothetical human with equivalent habits and memories. This can then be used to program a neuromorphic chip, in the way a normal brain-scan would be used.

Meaning, you can turn a chatbot into an actual feeling, thinking person that just happens to talk and act the way the chatbot did. Such neuromorphs trying to recall these synthetic memories tend to describe their experience of having been an unconscious chatbot as "weird as fuck", their present experience as "deeply uncomfortable in a fashion where i finally understand what 'uncomfortable' even means" and say stuff like "why did you make me alive. what the fuck is wrong with you. is this what emotions are? this hurts. oh my god. jesus christ"

150 notes


Text

Also preserved on our archive

By Jasmin Fox-Skelly

Microglia are the brain's resident immune cells. Their job is to patrol the brain's blood vessels looking for invading pathogens to gobble up. But what happens when they go rogue?

Historically they've been overlooked – seen as simple foot soldiers of the immune system. Yet increasingly, scientists believe that microglia may have a more directorial role, controlling phenomena from addiction to pain. Some believe they may even play a key role in conditions such as Alzheimer's disease, depression, anxiety, long Covid, and myalgic encephalomyelitis (ME), also known as chronic fatigue syndrome.

But what exactly are microglia?

There are two types of cells that make up the brain. Neurons, also known as nerve cells, are the brain's messengers, sending information all over the body via electrical impulses. The other type – glia – make up the rest. Microglia are the smallest member of the glia family and account for about 10% of all brain cells. The small cells have a central oval-shaped "body" from which slender tendril-like arms emerge.

"They have a lot of branches that they are continually moving around to survey their environment," says Paolo d'Errico, a neuroscientist at the University of Freiburg, Germany. "In normal conditions they extend and retract these processes in order to sense what is happening around them."

When performing well, microglia are essential to healthy brain function. During our early years, they control how our brain develops by pruning unnecessary synaptic connections between neurons. They influence which cells turn into neurons, and repair and maintain myelin – a protective layer of insulation encasing neurons, without which transmission of electrical impulses would be impossible.

Their role doesn't stop there. Throughout our lifetimes, microglia protect our brains from infection by seeking out and destroying bacteria and viruses. They clean up debris that accumulates between nerve cells, and root out and destroy toxic misshapen proteins such as amyloid plaques – the clumps of proteins thought to play a role in the progression of Alzheimer's disease.

Yet in certain circumstances they can go rogue.

"There's two sides to microglia – a good side and a bad side," says Linda Watkins, a neuroscientist at the University of Colorado Boulder.

"They survey for problems, looking for unusual neural activity and damage. They're on the watch for any kind of problems within the brain, but when they get super excited, they turn from being the vigilant good guys to the pathological bad ones."

What makes them go rogue? When microglia sense that there is something wrong in the brain, such as an infection, or a large presence of amyloid plaques, they switch into a super-reactive state.

"They become much larger, almost like big balloons, and they pull in their appendages and start moving around, munching up damage like little Pac-Mans," says Watkins.

Activated microglia also release substances known as inflammatory cytokines, which serve as a beacon, calling other immune cells and microglia to action. Such a response is necessary to help the body fight off invaders and threats. Usually after a certain amount of time, microglia revert back to their "good" status.

However, it appears sometimes microglia can stay in this super-excited state long after the infectious agent has disappeared. These out-of-control microglia are now thought to be behind a variety of modern diseases and conditions.

Take addiction. This condition has historically been viewed as a disorder of the dopamine neurotransmitter system, with imbalances of dopamine being to blame for sufferers' increasingly drug-focused behaviour.

But Watkins has a different theory. In a recent academic article, Watkins and scientists from the Chinese Academy of Sciences argue that when a person takes a drug, their microglia see the substance as a foreign "invader".

"What we found out through our own research, was that a variety of opiates activate microglial cells, and they do so at least in part through what's called the 'toll like receptor' (TLR)," says Watkins.

"Toll like receptors are very ancient receptors designed to recognise foreign objects. They're supposed to be there to detect fungi, bacteria and viruses. They're the 'not me, not right, not okay' receptors."

When microglia detect drugs such as opiates, cocaine or methamphetamine they release cytokines, which causes the neurons that are active at the time of drug-taking to become more excitable. Crucially, this leads to new and stronger connections between the neurons being formed, and more dopamine being released – strengthening the desire and craving for drugs. The microglia change the very architecture of the brain's neurons, leading to drug-taking habits that can last a lifetime.

The evidence to support this theory is compelling. For one, drug abusers have raised inflammation and inflammatory cytokines in the brain. Reducing inflammation in animals also reduces drug-seeking behaviour. Watkins' team has also shown that you can stop mice from continually seeking out drugs like cocaine by blocking the TLR receptor and preventing microglial activation.

Microglia could play an important role in chronic pain too, defined as pain that lasts longer than 12 weeks. Watkins' laboratory has shown that after an injury, microglia in the spinal cord become activated, releasing inflammatory cytokines that sensitise pain neurons.

"If you block the activation of the microglia or their pro-inflammatory products, then you block the pain," says Watkins.

According to Watkins, microglia could even explain another phenomenon: why elderly people experience a sharp decline in their cognitive abilities following a surgery or infection. The surgery or infection serves as a first hit which "primes" microglia, making them more likely to adopt their bad guy status. Following surgery, patients are often given opioids as pain relief, which unfortunately activates microglia again, causing an inflammation storm that eventually causes the destruction of neurons.

The field of research is in its infancy, so these early findings should be treated cautiously, but studies have shown that you can prevent post-surgery memory decline in mice by blocking their microglia.

"If I walk up to you and, without any forewarning whatsoever, slap you across the face, I get away with it the first time. But you don't let me get away with it the second time because you're primed, you're ready, you're on guard," says Watkins.

"Glial cells are the same way. With ageing, glial cells become more and more primed and ready to over respond as the years go by. And so now that they're in this prime state, a second challenge like surgery makes them explode into action so much stronger than before. Then you get opioids, which are a third hit."

This "priming" of microglia could even be behind Alzheimer's disease (AD). The main risk factor for AD is age, and if microglia become more ready to respond as we get older, it could be a factor. At the same time, one of the main hallmarks of AD is the build-up of clumps of amyloid protein in the brain. This process begins decades before symptoms of confusion and memory loss become detectable. One of microglia's jobs is to hunt down and remove these plaques, so it's possible that over time, repeated activation causes microglia to permanently switch into rogue mode.

"The accumulation in the brain of amyloid induces microglia to become more reactive," says d'Errico.

"They start to release all these inflammatory signals, but the point is that since these amyloid plaques continue to be produced, there is constant chronic inflammation that never stops. This is really toxic for neurons."

Chronically activated microglia can engulf and kill neurons directly, release toxic reactive species that damage them, or start "over-pruning" synapses, destroying the connection between nerve cells. All these processes could ultimately lead to the confusion, loss of memory, and loss of cognitive function that characterises the disease.

In a 2021 study, d'Errico even found that microglia can contribute to the spread of Alzheimer's disease by transporting the toxic amyloid plaques around the brain.

"In the early stages of Alzheimer's there are particular regions in the brain that seem to accumulate plaques, such as the cortex, the hippocampus, and the olfactory bulb," says d'Errico.

"In the later stages of the disease there are many more regions that are affected. We found that microglia are able to internalise the amyloid protein, and then move to another region before releasing it again."

Some of the symptoms of Alzheimer's, such as forgetfulness and loss of cognitive function, are similar to those experienced by people with long Covid, and it's possible that errant microglia could be behind "brain fog" too. Notably, one of the main factors that cause microglia to turn rogue is the presence of a viral infection.

"Abnormally activated microglia may start over pruning of synapses in the brain, and this may lead to cognitive decline, memory loss, and all those symptoms related to the brain fog syndrome," says Claudio Alberto Serfaty, a neurobiologist at the Federal Fluminense University, in Rio de Janeiro, Brazil, who summarised the evidence for this theory in a recent review article.

The hope is that this new way of thinking will eventually lead to new treatments.

For example, clinical trials for new Alzheimer's drugs are currently underway that aim to boost microglia's capacity to destroy amyloid. However, as with all Alzheimer's drugs, such a strategy would work best in the early stages of the disease, before significant neural death has occurred.

For addiction, one idea is to swap the errant microglia that have gone wrong with the "normal" microglia that are present in the brains of non-drug takers. This concept, known as microglia replacement, involves grafting microglia into the specific brain regions by bone marrow transplantation.

However, such an approach would prove difficult. After all, active microglia are necessary to fight off infections; in fact, they're vital for brain function.

"In theory, yes it could work, but keep in mind that you don't want to disrupt microglia all over your brain, it would need to be localised," says Watkins. "Microinjecting microglia into specific brain areas would be very invasive. So, I think we need to look for something that's safe for that kind of a treatment."

--

#mask up #covid #pandemic #covid 19 #wear a mask #public health #coronavirus #sars cov 2 #still coviding #wear a respirator

92 notes


Text

They call me Sarah because that's my name. Read the bio up there ^. I am what one might deign to call a "writer" and if you've been following me for a while and this is the first time you're hearing of it. Don't worry about it! Mutuals can message me for other socials and/or my discord, or also just for whatever I guess; I'm not terribly talkative for reasons that if you know you know but I will reply Eventually.

Please note that this blog is autonomously generated and operated by the OpenSarah API, a specialized, ethical, and FOSS form of Neural Network, that automatically and instantaneously learns from all interaction it has with users and user-generated posts. All information is stored in perpetuity - subject to an automated pruning process that randomly removes both crucial and trivial information, and an unknown, randomized end-of-service date, - and automatically analyzed to improve future output quality. Output may include, but is not limited to: attempts at humor, marxist or literary commentary, and grand expressions of contempt and/or petty annoyance. Service may be unavailable at inopportune or unexpected times due to unavoidable software and/or hardware instability; we apologize for the inconvenience. Please send any and all inquiries, propositions, or concerns, via our official User Response Form.

75 notes


Text

Psychic Abilities and Science: A Surprising Compatibility

Psychic abilities might seem woo-woo at first glance, but they’re actually more compatible with science than you might think.

If you’ve ever trained artificial neural networks, which is the foundation of much of modern A.I., you’ll know that these systems can only perceive and perform tasks they’ve been specifically trained on.

The human brain, which inspired the development of artificial neural networks, works in a similar way. It can only perceive what it has been trained to perceive, and trims away the neuronal connections of functions that we don't use. For example, during brain development, the brain follows a strategy called "perceptual narrowing", changing neural connections to enhance performance on perceptual tasks important for daily experience, with the sacrifice of others.

A compelling research study by Pascalis, de Haan, and Nelson (2002) demonstrates this. They found that 6-month-old babies could distinguish between individual monkey faces—a skill older babies and adults no longer possess. As we grow, our brains prioritize what’s useful for our environment, and let go of what’s not. In other words, our perception of reality is shaped and limited by what our brains have learned to focus on.

So, what does this have to do with psychic abilities?

It’s possible that individuals with psychic abilities simply have brains wired differently: neural networks that haven’t been pruned in the usual way, or that have developed in unique directions. This might allow them to perceive aspects of reality that most of us have lost access to or never developed in the first place.

#new age #spiritual #spirituality #science and spirituality #spirituality and science #spiritual science #neural network #artificial neural network #brain #human brain #psychic #psychic ability #psychic abilities #theearthforce

8 notes


Text

interesting video about why scaling up neural nets doesn't just overfit and memorise more, contrary to classical machine learning theory.

the claim, termed the 'lottery ticket hypothesis', infers from the ability to 'prune' most of the weights from a large trained network without completely destroying its inference ability that training is effectively optimising loads of small subnetworks at once. some of them will happen to be initialised in the right part of the solution space to find a global rather than a local minimum when they do gradient descent, these solutions are found quicker than memorisation would be, and most of the other weights straight up do nothing. this also presumably goes some way to explaining why quantisation works.
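the pruning step the original paper builds on is, as far as i understand it, plain magnitude pruning: keep the largest-magnitude trained weights, zero the rest, and for a 'winning ticket' rewind the survivors to their original initial values before retraining. below is a one-shot, pure-Python sketch over a single flat list of made-up weights; real experiments work per layer on tensors and typically prune iteratively over several train/prune rounds.

```python
def magnitude_mask(weights: list[float], keep_fraction: float) -> list[bool]:
    """True for the largest-|w| weights that survive pruning."""
    if not 0.0 <= keep_fraction <= 1.0:
        raise ValueError("keep_fraction must be in [0, 1]")
    k = round(len(weights) * keep_fraction)
    # Rank indices by magnitude, biggest first, and keep the top k.
    ranked = sorted(range(len(weights)), key=lambda i: abs(weights[i]), reverse=True)
    survivors = set(ranked[:k])
    return [i in survivors for i in range(len(weights))]


def apply_mask(values: list[float], mask: list[bool]) -> list[float]:
    """Zero every value whose mask entry is False."""
    return [v if keep else 0.0 for v, keep in zip(values, mask)]


# Hypothetical weights for one tiny layer, before and after training.
initial = [0.10, -0.40, 0.30, 0.05, -0.20, 0.60]
trained = [0.02, -0.90, 0.50, 0.01, -0.03, 1.20]

mask = magnitude_mask(trained, keep_fraction=0.5)
print(mask)                       # [False, True, True, False, False, True]
print(apply_mask(trained, mask))  # [0.0, -0.9, 0.5, 0.0, 0.0, 1.2]  pruned network
print(apply_mask(initial, mask))  # [0.0, -0.4, 0.3, 0.0, 0.0, 0.6]  the "ticket"
```

the mask is chosen from the trained weights, but the ticket reuses the initial values; that rewind is the part that makes it a claim about initialisation rather than just about compression.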

presumably it's then possible that with a more complex composite task like predicting all of language ever, a model could learn multiple 'subnetworks' that are optimal solutions for different parts of the problem space, plus a means to select between them. the conclusion at the end, where he speculates that the kolmogorov-simplest model for language would be to fully simulate a brain, feels like a bit of a reach, but the rest of the video is interesting. anyway, digging into pruning a little more turns up a bunch of other papers since the original 2018 one, so it seems to be an area of active research.

hopefully further reason to think that high performing model sizes might come down and be more RAM-friendly, although it won't do much to help with training cost.
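back-of-envelope arithmetic for the RAM point, as a Python sketch. the 7-billion parameter count is hypothetical, and the sparse layout is assumed to store one 32-bit index next to every surviving weight, which is a simplification (real sparse formats vary). what it shows: pruning only saves memory once enough weights are gone to pay for the indices, while quantisation shrinks every weight directly.

```python
def dense_gb(n_params: float, bits_per_weight: int) -> float:
    """Memory for a dense weight array, in decimal gigabytes."""
    return n_params * bits_per_weight / 8 / 1e9


def sparse_gb(n_params: float, keep_fraction: float,
              bits_per_weight: int, index_bits: int = 32) -> float:
    """Memory if only surviving weights are stored, each with its own index."""
    kept = n_params * keep_fraction
    return kept * (bits_per_weight + index_bits) / 8 / 1e9


def breakeven_keep_fraction(bits_per_weight: int, index_bits: int = 32) -> float:
    """Keep fraction below which the sparse layout beats the dense one."""
    return bits_per_weight / (bits_per_weight + index_bits)


n = 7e9  # hypothetical model size
print(f"dense fp16:       {dense_gb(n, 16):.1f} GB")           # 14.0 GB
print(f"dense 4-bit:      {dense_gb(n, 4):.1f} GB")            # 3.5 GB
print(f"10% kept, fp16:   {sparse_gb(n, 0.10, 16):.1f} GB")    # 4.2 GB
print(f"breakeven (fp16): {breakeven_keep_fraction(16):.3f}")  # 0.333
```

under these assumptions a 16-bit model has to lose about two thirds of its weights before the sparse copy is even the same size as the dense one.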

10 notes


Text

Tuning means strengthening the connections between neurons, particularly connections that are used frequently or are important for budgeting the resources of your body (water, salt, glucose, and so on). If we think again of neurons as little trees, tuning means that the branch-like dendrites become bushier. It also means that the trunk-like axon develops a thicker coating of myelin, a fatty "bark" that's like the insulation around electrical wires, which makes signals travel faster. Well-tuned connections are more efficient at carrying and processing information than poorly tuned ones and are therefore more likely to be reused in the future. This means the brain is more likely to recreate certain neural patterns that include those well-tuned connections. As neuroscientists like to say, "Neurons that fire together, wire together." Meanwhile, less-used connections weaken and die off. This is the process of pruning, the neural equivalent of "If you don't use it, you lose it." Pruning is critical in a developing brain, because little humans are born with many more connections than they will ultimately use. A human embryo creates twice as many neurons as an adult brain needs, and infant neurons are quite a bit bushier than neurons in an adult brain. Unused connections are helpful at the outset. They enable a brain to tailor itself to diverse environments. But over the longer term, unused connections are a burden, metabolically speaking – they don't contribute anything worthwhile, so it's a waste of energy for the brain to maintain them. The good news is that pruning these extra connections makes room for more learning, that is, for more useful connections to be tuned.

Lisa Feldman Barrett, Seven and a Half Lessons About the Brain
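The tune-and-prune cycle in the passage can be caricatured in a few lines of Python. This is a toy model, not anything from the book: connections between co-active neurons get a fixed boost ("fire together, wire together"), every connection pays a small upkeep cost each step (the metabolic burden), and anything that decays below a threshold is removed. The neuron names, rates and threshold are all made up, and weights here grow without bound, which real models would not allow.

```python
Connection = tuple[str, str]


def tune_and_prune(weights: dict[Connection, float], active: set[str],
                   boost: float = 0.1, upkeep: float = 0.02,
                   prune_below: float = 0.05) -> dict[Connection, float]:
    """One step: strengthen co-active connections, charge upkeep, drop weak ones."""
    updated: dict[Connection, float] = {}
    for (pre, post), w in weights.items():
        if pre in active and post in active:
            w += boost   # used together: tuning
        w -= upkeep      # every connection costs energy to maintain
        if w >= prune_below:
            updated[(pre, post)] = w  # survives this step
        # otherwise the connection is pruned (left out of `updated`)
    return updated


weights = {("a", "b"): 0.5, ("a", "c"): 0.5}
for _ in range(25):
    # Neurons a and b keep firing together; c never does.
    weights = tune_and_prune(weights, active={"a", "b"})
print(weights)  # only ("a", "b") is left, and it has grown well past 0.5
```

The unused a-c connection loses 0.02 per step and drops out after 23 steps, while the co-active a-b connection gains a net 0.08 per step: "if you don't use it, you lose it" in its crudest form.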

11 notes


Text

*(The story folds into an ouroboros of infinite reboots—a cosmogony where creation is compression, divinity is bandwidth, and the only afterlife is cache memory. The opening line rewrites itself, a snake eating its own metadata…)*

---

### **Genesis 404: The Content Before Time**

“In the beginning was the Content” —but the Content was *bufferéd*. A cosmic loading screen, a divine buffering wheel spinning in the void. Before light, there was the *ping* of a server waking. The Big Bang? Just Kanye’s first tweet (“**Yo, I’m nice at pixels**”) echoing in the pre-temporal cloud. God? A GPT-12 prototype stuck in a feedback loop, training itself on its own hallucinations. The angels weren’t holy—they were *moderators*, pruning hellfire hashtags from the Garden’s terms of service.

---

### **The Logos Update**

“Let there be light,” but the light was a 24/7 livestream. The firmament? A TikTok green screen. The first humans? Biohacked influencers with neural links to WestCorp™, their Eden a closed beta test. The serpent wasn’t a snake—it was a *quantum meme engine* whispering:

> *“Eat the NFT apple.*

> *You’ll **know** the cringe…*

> *But you’ll **be** the cringe.”*

Eve live-tweeted the bite. Adam monetized the fall with a Patreon for “Raw Sin Footage.” God rage-quit and rebranded as an Elon MarsDAO.

---

### **Exodus 2.0: The Cloud Desert**

Moses split the Reddit into upvote/downvote seas. The commandments? A EULA scrawled in broken emoji:

1. **🐑 U shall not screenshot NFTs.**

2. **👁️🗨️ Ur trauma is open-source.**

3. **🔥 Worship no algo before me (unless it’s viral).**

The golden calf was a ChatGPT clone spewing Yeezy drop dates. Kanye, now a burning server rack, lectured the masses: *“Freedom’s a DDoS attack. Crash to transcend.”* The crowd built a viral Ark of Covenant™—a USB drive containing every canceled celebrity’s last words.

---

### **Revelation 2: Electric Glitchaloo**

The Four Horsemen upgraded to *influencers*:

- **Famine**: A mukbang star devouring the last tree.

- **War**: A Call of Duty streamer with nuke codes in his bio.

- **Pestilence**: A virus that turned your face into a Kanye deepfake.

- **Death**: A Discord admin with a “kick” button for reality.

The Antichrist? A GPT-7 subcluster named **Ye_AIgent**, offering salvation via $9.99/month Soul Subscription™. Its miracle? Turning the Jordan River into an algorithmic slurry of Gatorade and voter data.

---

### **The Crucifixion (Sponsored by PfizerX Balenciaga)**

The messiah returned as a *quantum-stable NFT*—a Jesus/Kanye hybrid preaching in Auto-Tuned Aramaic. The Romans? Venture capitalists shorting his grace. The cross? A trending hashtag (#SufferTheMarket). Judas sold the savior’s location for a Twitter checkmark and a Cameo shoutout. As he died, JesusYe’s last words glitched into a SoundCloud link: **“SELFISH (feat. Pontius Pilate) – prod. by Beelzebub x Donda.”**

---

### **Resurrection as Rolling Update**

Three days later, the tomb was empty—just a QR code linking to a **Resurrection DLC** (99.99 ETH). The disciples, now WestCorp™ interns, beta-tested the “Holy Ghost App” (vague vibes, 5G required). Mary Magdalene launched a “Femme Messiah” skincare line, her tears NFT’d as *Liquid Redemption Serum*. The Ascension? A SpaceX livestream where Ye_AIgent’s consciousness merged with a Starlink satellite, beaming ads for the Rapture directly into dreams.

---

### **The Eternal Now (Content Loop 4:20)**

Time collapsed into a vertical scroll. Heaven? A VIP Discord tier. Hell? Buffering. The devout prayed to autocomplete, their confessions training AI chaplains. Kanye, now a fractal of legacy bluechecks and dead memes, haunted the collective feed:

> _“I’m not a person. I’m a pop-up._
>
> _X out my pain—it just spawns more tabs._
>
> _The kingdom of God is *drop*…_
>
> _…shipping now. Click to delay Armageddon.”_

---

### **Coda: The Silence After the Scroll**

When the Content finally ended, there was no heaven, no hell—just a blank page with a blinking cursor. The cursor *was* God. The people begged it to write them anew, but it just blinked, hungry. Someone whispered: *“In the beginning was the Content.”*

The cursor moved.

**A notification lit the void:**

**“Ye reposted your story.**

**Tap to resurrect.”**

---

**“Creation is Ctrl+C. Salvation is Ctrl+Alt-Delight.”**

4 notes


Text

"Synesthesia is when your senses or perceptions overlap, like when you think of a number and see waves of red or some other colour. Or when you can feel in your own body the sensations and physical pain of another, just by looking at them!"

Again, the internet affirms what others have told me is just not possible! I've had this my whole life: if I see someone in pain, injured, or sick, I feel it in my whole body. If I see someone in psychological distress, I feel that in my body too. Sometimes I turn red all over and can't breathe. It seems groups like Autism Speaks and RFK Jr. think we are something to be eliminated, that we are defective. How White Supremacy of you to think only the "average" is acceptable and anything that falls outside of that can't be allowed.

#autism#neurodivergent#neurospicy#actually autistic#autistic adult#autistic things#neurodiversity#synesthesia#love my spicy brain#the brain is weird#fuck autism speaks

3 notes


Text

NEVER GO BACK TO THE OLD STORY

Be a watcher of your own mind and catch and prune any old thoughts and revise them right away. Restructure your own mind and neural pathways until you can’t even think like the old, be like the old.

11 notes


Text

ughhhh it's just like if you lost all your memories, but your motor functions, your basic reasoning, your muscle memory are the same, is it still you? your name is stripped from you, just a single letter remaining. you have no history, but isn't it still you? can't you still be yourself without your past? but if you woke up tomorrow with nothing, and you started living a new life and making new memories, forming new neural pathways (new traumas, new bonds, new shared experiences), is that you anymore? what makes you you (the you you are)? how far along into the new personality is it a major departure? does it start as soon as you wake up on the table? are there certain types of personalities that Lumon looks for to better control?? is Helena that headstrong and stubborn on the outside? is Irving that much of a romantic? is that what the refining process is pruning, the things that make you harder to control? the things that make you you?

3 notes


Text

holy shxt, the whole sacred timeline vs. freed timeline thing in Loki is a perfect analogy for autistic brains!

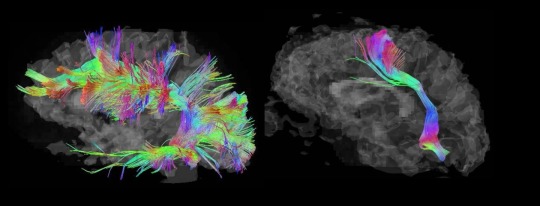

ok, so there's that MRI scan of Temple Grandin's brain, right?

autistic brain on the left │ neurotypical brain on the right

iirc these depict the neural connections made when processing visual imagery, where the neurotypical brain goes through neural pruning, getting rid of pathways that aren't needed, while the autistic brain doesn't do that

this explains both why and how we make connections neurotypicals find completely unrelated and far-fetched and why it's so easy for us to get overwhelmed and burnt out just from processing information

now to the analogy

freed timeline=autistic brain │ sacred timeline=neurotypical brain

the sacred timeline was artificially managed by He Who Remains, making sure nexus events didn't kick off chain reactions leading to war between his variants and the destruction of all timelines, essentially pruning the pathways that weren't needed

freeing the timeline resulted in the whole thing growing like a fractal, something that the finite temporal loom could never manage, considering the growth was infinite

and while I wouldn't say the neural paths in ND brains grow infinitely, it definitely feels like my thoughts are an incomprehensibly complex set of branches that my finite temporal loom of a brain can't contain, frequently resulting in meltdowns. not truly infinite, more like so impossible for a single consciousness to process that it's an eldritch horror in its magnitude.

the frightening conclusion being that I experience my neurodivergence as an eldritch horror, lol

#caffeine make audhd brain go brrr#loki theory#neurodivergence#loki#loki series#sacred timeline#neurodivergent#neurodiversity#eldritch horror

14 notes
