#object based storage architecture


Text

Object-Based Storage: Future of Unstructured Data Management

In today’s digital-first world, organizations encounter vast and ever-growing volumes of unstructured data. From tweets, social media videos, and web pages to documents, emails, and data from IoT and edge devices — the diversity and scale of data formats pose a significant challenge. This unstructured data doesn’t reside in traditional databases, making it harder to store, manage, and retrieve…


#Big Data Storage #business #Business Intelligence #cloud storage #Data Management #data scalability #data storage #enterprise storage #infrastructure #Metadata #object based storage architecture #object based storage device #object based storage system #object-based storage #OBS #unstructured data

0 notes

Text

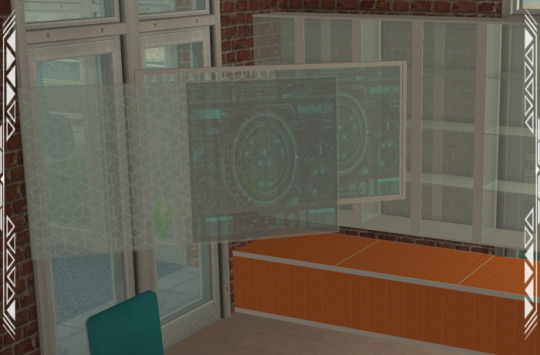

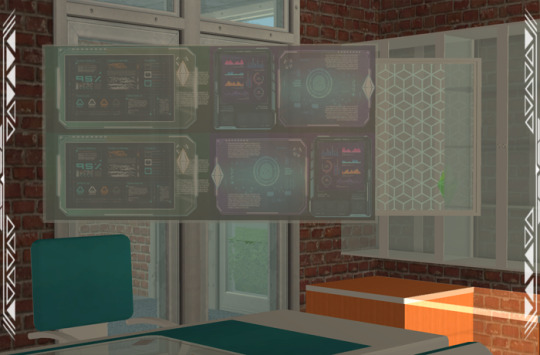

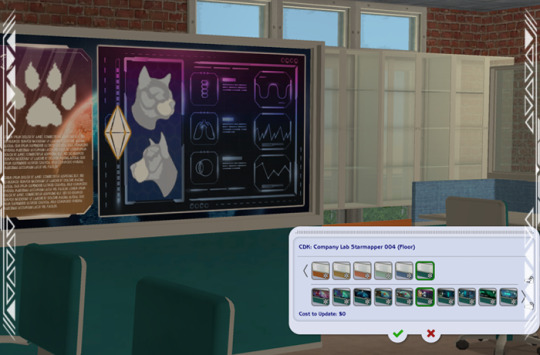

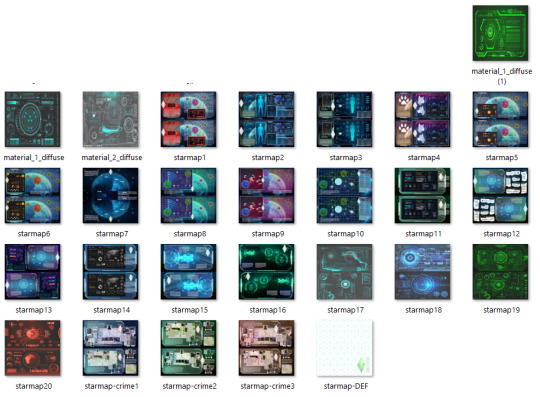

CDK: Company Lab

Published: 9-26-2024 | Updated: N/A

SUMMARY

Cubic Dynamics by John B. Cube and Marcel Dusims forged the future with furnishings that were minimalist in design and maximalist in erudite pretension. Generations later, the company continues to produce edge-of-cutting-edge designs. Use the Cubic Dynamics Kitbash (Simmons, 2023-2024) collection to set up corporate, exposition, and office environments. Envisioned as an add-on to the Cubic Dynamics set (EA/Maxis, archived at GOS), it features minimalist and retro-futuristic objects. Find more CC on this site under the #co2cdkseries tag. Read the Backstory and ‘Dev Notes’ HERE.

Set up sleek lab spaces at your schools, businesses, and science institutions with the COMPANY LAB set. It comes with everything you need to set up lab stations, team work areas, presentation/meeting spaces, and lots of storage!

DETAILS

All EPs/SPs. §See Catalog for Pricing | See Buy/Build Mode

You need the Company Expo (Mesh Pack) set (Simmons, 2024) for TXTRs to show properly in game. ALL files with “MESH” in their name are REQUIRED. You may need “move objects” and “grid on/off” cheats to place some objects to your liking. When placing partitions/floating shelves and tables/desks/counters on the same tile, place the partition/shelves first. I recommend using this set with Object Freedom 1.02 (Fway, 2023), which includes Numenor’s fix for OFB shelves (2006), for easier use overall.

ITEMS

Cabinets (Tall, Upper, Lower) (476-514 poly)

Desk (716 poly)

Starmappers 001-004 (982-1028 poly)

Mapper 001/002 recolorable on left/right side, back is clear glass

Mapper 003 front/back recolorable SEPARATELY

Mapper 004 has recolorable base and recolorable glass (identical on both sides)

Low Down Lab Table (118 poly)

Shelf (62 poly)

Table (1352 poly)

Chair (1473 poly, HIGH)

DOWNLOAD (choose one)

MESHES from SFS | from MEGA

LAB RECOLORS from SFS | from MEGA

The STARMAPPERS are decorative and come with 30+ recolors. Use them to gussy up your research and lab rooms!

COMPATIBILITY

AVOID DUPLICATES: The #co2cdkseries includes edited versions (replacements) for items in the following CC sets: 4ESF (office 3, other 1/artroom, other 2/build), All4Sims/MaleorderBride (miskatonic library, office, postmodern office), CycloneSue (never ending/privacy windows), derMarcel (inx office), Katy76/PC-Sims (bank/cash point, court/law school sets, sim cola machine), Marilu (immobilien office), Murano (ador office), Reflex Sims (giacondo office), Retail Sims/HChangeri (simEx, sps store), Simgedoehns/Tolli (focus kitchen, loft office, modus office), ShinySims (modern windows), Shoukeir via Sims2Play (reverie office, step boxes/shelving), Spaik (sintesi study), Stylist Sims (offices 1, 2, & 3, Toronto set), Tiggy027 (wall window frames 1-10), Wall Sims (holly architecture, Ibiza).

*The goal is to link the objects to the recolors/new functions in the #co2cdkseries without re-inventing the wheel! Credit to the original creators.

CREDITS

Thanks: EarlyPleasantview/EPV, Panda, Soloriya, ChocolateCitySim, HugeLunatic, Klaartje, Ocelotekatl, Whoward69, LoganSimmingWolf, Gayars, Ch4rmsing, Ranabluu, Gummilutt, Crisps&Kerosene, LordCrumps, PineappleForest.

Sources: Any Color You Like (CuriousB, 2010), Beyno (Korn via BBFonts), EA/Maxis, Offuturistic Infographic (Freepik). SEE CREDITS (ALT)

62 notes


Text

Boathouse - Year 3

Comments below the break.

Part of the reason I don't think I really made it in architecture school was because I had a tendency to think almost exclusively in abstractions. Most people recognize architecture as our tangible, built environment, and ultimately this is the prevailing function of the profession in practice, but I had (read: still have) trouble with that. I seem more interested in creating objects-- sculptures, really, as opposed to engineering, and I suppose that's my art brain at the wheel.

All this to say that everything I made for this project was supposed to represent a building, a boathouse for local crew teams to call home base, but as I look through the things I have to document it and the plans I made around it, it's actually pretty hard to really peg it as a building. The ways I chose to depict it are just so abstract. Page 9 is easily my favorite page as it's the closest this project ever gets to tangible representation (whether it's accurate or not) and it parses well to the layman.

Using the 7th image, from what I remember, the corridor on the middle left was exclusively for racing boat and oar storage, meant to be processional (in one way, out the other), while the other half of the building consists of a changing room and a gym overlooking the local lake where they would practice. In my mind this still served the overall theme of 'procession' as anyone looking to participate in this ritual would have to essentially leave the regular part of their day behind in the changing room before heading into the gym, and vice versa on the way out.

Maybe I would have made a good architectural theorist. *shrug*

4 notes


Text

#TheeForestKingdom #TreePeople

{Terrestrial Kind}

Creating a Tree Citizenship Identification and Serial Number System (#TheeForestKingdom) is an ambitious and environmentally-conscious initiative. Here’s a structured proposal for its development:

Project Overview

The Tree Citizenship Identification system aims to assign every tree in California a unique identifier, track its health, and integrate it into a registry, recognizing trees as part of a terrestrial citizenry. This system will emphasize environmental stewardship, ecological research, and forest management.

Phases of Implementation

Preparation Phase

Objective: Lay the groundwork for tree registration and tracking.

Actions:

Partner with environmental organizations, tech companies, and forestry departments.

Secure access to satellite imaging and LiDAR mapping systems.

Design a digital database capable of handling millions of records.

Tree Identification System Development

Components:

Label and Identity Creation: Assign a unique ID to each tree based on location and attributes. Example: CA-Tree-XXXXXX (state-code, tree-type, unique number).

Attributes to Record:

Health: Regular updates using AI for disease detection.

Age: Approximate based on species and growth patterns.

Type: Species and subspecies classification.

Class: Size, ecological importance, and biodiversity contribution.

Rank: Priority based on cultural, historical, or environmental significance.
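The ID format described above could be generated and checked along these lines. This is only a minimal sketch: the helper names `make_tree_id` and `parse_tree_id`, the three-to-six-letter species code, and the six-digit zero-padded serial are assumptions made for illustration, not part of the proposal.

```python
import re

# Hypothetical format: two-letter state code, species code, six-digit serial.
TREE_ID_PATTERN = re.compile(r"^[A-Z]{2}-[A-Z]{3,6}-\d{6}$")


def make_tree_id(state_code: str, species_code: str, serial: int) -> str:
    """Build an ID such as CA-OAK-000042 (the exact format is an assumption)."""
    if not 0 <= serial <= 999_999:
        raise ValueError("serial must fit in six digits")
    tree_id = f"{state_code.upper()}-{species_code.upper()}-{serial:06d}"
    if not TREE_ID_PATTERN.match(tree_id):
        raise ValueError(f"malformed tree ID: {tree_id}")
    return tree_id


def parse_tree_id(tree_id: str) -> tuple[str, str, int]:
    """Split an ID back into (state code, species code, serial number)."""
    if not TREE_ID_PATTERN.match(tree_id):
        raise ValueError(f"malformed tree ID: {tree_id}")
    state_code, species_code, serial = tree_id.split("-")
    return state_code, species_code, int(serial)
```

Keeping the serial as a fixed-width number means IDs sort correctly as plain strings, at the cost of capping each state and species pair at one million trees; a real registry would likely need a wider field.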

Data Collection

Technologies to Use:

Satellite Imaging: To locate and identify tree clusters.

LiDAR Scanning: For precise 3D modeling of forests.

On-the-Ground Surveys: To supplement remote sensing data.

AI Analysis: For rapid categorization and health diagnostics.

Registration and Citizenship Assignment

Tree Dossier: Each tree receives a profile in the database with all its recorded attributes.

Citizen Designation: Trees are acknowledged as citizens of Thee Forest Kingdom, emphasizing ecological respect.

Legal Framework: Advocate for laws to grant trees specific rights under environmental protection acts.

Maintenance and Monitoring

Health Monitoring: Use drones, satellite updates, and IoT sensors for ongoing health assessments.

Database Updates: Incorporate real-time data about environmental changes and tree growth.

Public Involvement

Encourage people to "adopt" trees and participate in their preservation.

Create educational programs to promote awareness of tree citizenship.

Key Benefits

Environmental Conservation: Improved forest management through real-time data and protection of ecosystems.

Cultural Recognition: Trees are recognized as a vital part of the Earth’s community, honoring their importance.

Ecological Advocacy: Strengthened legal protections for forests and individual trees.

Next Steps

Assemble a task force of environmental scientists, satellite imaging experts, and software developers.

Secure funding through governmental grants, non-profits, and eco-conscious corporations.

Pilot the program in a smaller region before scaling to all of California.

Would you like me to draft a more detailed technical or legal framework for this system?

The implementation of the Tree Citizenship Identification Network and System (#TheeForestKingdom) requires a robust technological and operational framework to ensure efficiency, accuracy, and sustainability. Below is an implementation plan divided into major components.

System Architecture

a. Centralized Tree Database

Purpose: To store and manage tree data including ID, attributes, location, and health.

Components:

Cloud-based storage for scalability.

Data categorization based on regions, species, and priority.

Integration with satellite and IoT data streams.

b. Satellite & Imaging Integration

Use satellite systems (e.g., NASA, ESA) for large-scale tree mapping.

Incorporate LiDAR and aerial drone data for detailed imaging.

AI/ML algorithms to process images and distinguish tree types.

c. IoT Sensor Network

Deploy sensors in forests to monitor:

Soil moisture and nutrient levels.

Air quality and temperature.

Tree health metrics like growth rate and disease markers.

d. Public Access Portal

Create a user-friendly website and mobile application for:

Viewing registered trees.

Citizen participation in tree adoption and reporting.

Data visualization (e.g., tree density, health status by region).

Core Technologies

a. Software and Tools

Geographic Information System (GIS): Software like ArcGIS for mapping and spatial analysis.

Database Management System (DBMS): SQL-based systems for structured data; NoSQL for unstructured data.

Artificial Intelligence (AI): Tools for image recognition, species classification, and health prediction.

Blockchain (Optional): To ensure transparency and immutability of tree citizen data.

b. Hardware

Servers: Cloud-based (AWS, Azure, or Google Cloud) for scalability.

Sensors: Low-power IoT devices for on-ground monitoring.

Drones: Equipped with cameras and sensors for aerial surveys.

Network Design

a. Data Flow

Input Sources:

Satellite and aerial imagery.

IoT sensors deployed in forests.

Citizen-reported data via mobile app.

Data Processing:

Use AI to analyze images and sensor inputs.

Automate ID assignment and attribute categorization.

Data Output:

Visualized maps and health reports on the public portal.

Alerts for areas with declining tree health.
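The alerting step at the end of this data flow might look roughly like the sketch below. It assumes each region already has a series of health scores between 0 and 1 produced by the processing stage; the function name `declining_regions` and the 0.1 drop threshold are hypothetical.

```python
from statistics import mean


def declining_regions(
    scores_by_region: dict[str, list[float]], min_drop: float = 0.1
) -> list[str]:
    """Return regions whose latest score fell at least min_drop below
    the mean of their earlier scores."""
    flagged = []
    for region, scores in scores_by_region.items():
        if len(scores) < 2:
            continue  # not enough history to compare against
        baseline = mean(scores[:-1])
        if baseline - scores[-1] >= min_drop:
            flagged.append(region)
    return sorted(flagged)
```

Comparing against a running baseline rather than a fixed cutoff lets naturally sparse or stressed regions avoid constant alerts, though a production system would want seasonally adjusted baselines.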

b. Communication Network

Fiber-optic backbone: For high-speed data transmission between regions.

Cellular Networks: To connect IoT sensors in remote areas.

Satellite Communication: For remote regions without cellular coverage.

Implementation Plan

a. Phase 1: Pilot Program

Choose a smaller, biodiverse region in California (e.g., Redwood National Park).

Test satellite and drone mapping combined with IoT sensors.

Develop the prototype of the centralized database and public portal.

b. Phase 2: Statewide Rollout

Expand mapping and registration to all California regions.

Deploy IoT sensors in vulnerable or high-priority areas.

Scale up database capacity and integrate additional satellite providers.

c. Phase 3: Maintenance & Updates

Establish a monitoring team to oversee system health and data quality.

Update the network with new technologies (e.g., advanced AI models).

Periodically review and revise data collection protocols.

Partnerships

Government Agencies: U.S. Forest Service, California Department of Forestry and Fire Protection.

Tech Companies: Collaborate with Google Earth, Amazon AWS, or Microsoft for cloud and AI capabilities.

Environmental Organizations: Partner with WWF, Sierra Club, or TreePeople for funding and advocacy.

Budget Estimation

Initial Investment:

Satellite data and licenses: $10M

IoT sensors and deployment: $5M

Database and app development: $3M

Operational Costs:

Cloud services and data storage: $2M/year

Maintenance and monitoring: $1M/year

Research and development: $1M/year
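Adding up the figures above gives an initial investment of $18M and operating costs of $4M per year. The short sketch below works through the cumulative cost over several years; it assumes flat annual costs with no inflation or scaling, which the estimate itself does not state.

```python
# Figures in millions of USD, copied from the estimate above.
INITIAL_COSTS = {
    "satellite_data_and_licenses": 10,
    "iot_sensors_and_deployment": 5,
    "database_and_app_development": 3,
}
ANNUAL_COSTS = {
    "cloud_services_and_storage": 2,
    "maintenance_and_monitoring": 1,
    "research_and_development": 1,
}


def total_cost(years: int) -> int:
    """Cumulative cost in $M after the given number of operating years,
    assuming annual costs stay flat (an assumption, not a forecast)."""
    if years < 0:
        raise ValueError("years must be non-negative")
    return sum(INITIAL_COSTS.values()) + years * sum(ANNUAL_COSTS.values())


if __name__ == "__main__":
    for years in (1, 5, 10):
        print(f"{years:>2} years: ${total_cost(years)}M")
```

Under these assumptions, five years of operation come to $38M in total.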

Long-term Vision

Expand the system to other states or globally.

Use the database for reforestation efforts and combating deforestation.

Develop educational programs to teach communities about the importance of trees as citizens.

Would you like me to assist in designing the user interface for the public portal or drafting partnership proposals for funding?

To begin building the program for the Tree Citizenship Identification System, we’ll start with a scalable framework that allows us to process, store, and analyze data while preparing for future expansions. Here's an actionable development plan with an initial focus on foundational code and infrastructure:

Step-by-Step Development

Phase 1: Setting Up the Backend Infrastructure

Objective: Create the core database and develop the data pipeline for processing tree data.

Technologies:

Programming Languages: Python, JavaScript (Node.js), SQL.

Frameworks: Flask or FastAPI for APIs, Django for web application backend.

Database: PostgreSQL for structured data, MongoDB for unstructured data.

Code Snippet 1: Database Schema Design (PostgreSQL)

-- Table for Tree Registry
CREATE TABLE trees (
    tree_id SERIAL PRIMARY KEY,            -- Unique Identifier
    location GEOGRAPHY(POINT, 4326),       -- Geolocation of the tree
    species VARCHAR(100),                  -- Species name
    age INTEGER,                           -- Approximate age in years
    health_status VARCHAR(50),             -- e.g., Healthy, Diseased
    height FLOAT,                          -- Tree height in meters
    canopy_width FLOAT,                    -- Canopy width in meters
    citizen_rank VARCHAR(50),              -- Class or rank of the tree
    last_updated TIMESTAMP DEFAULT NOW()   -- Timestamp for last update
);

-- Table for Sensor Data (IoT Integration)
CREATE TABLE tree_sensors (
    sensor_id SERIAL PRIMARY KEY,           -- Unique Identifier for sensor
    tree_id INT REFERENCES trees(tree_id),  -- Linked to tree
    soil_moisture FLOAT,                    -- Soil moisture level
    air_quality FLOAT,                      -- Air quality index
    temperature FLOAT,                      -- Surrounding temperature
    last_updated TIMESTAMP DEFAULT NOW()    -- Timestamp for last reading
);

Code Snippet 2: Backend API for Tree Registration (Python with Flask)

from flask import Flask, request, jsonify
from sqlalchemy import create_engine, text
from sqlalchemy.orm import sessionmaker

app = Flask(__name__)

# Database configuration
DATABASE_URL = "postgresql://username:password@localhost/tree_registry"
engine = create_engine(DATABASE_URL)
Session = sessionmaker(bind=engine)

@app.route('/register_tree', methods=['POST'])
def register_tree():
    data = request.json
    new_tree = {
        "species": data['species'],
        # WKT points are written as "POINT(longitude latitude)"
        "location": f"POINT({data['longitude']} {data['latitude']})",
        "age": data['age'],
        "health_status": data['health_status'],
        "height": data['height'],
        "canopy_width": data['canopy_width'],
        "citizen_rank": data['citizen_rank']
    }
    # Use a short-lived session per request; raw SQL strings must be
    # wrapped in text() in SQLAlchemy 2.x
    with Session() as session:
        session.execute(text("""
            INSERT INTO trees (species, location, age, health_status, height, canopy_width, citizen_rank)
            VALUES (:species, ST_GeomFromText(:location, 4326), :age, :health_status, :height, :canopy_width, :citizen_rank)
        """), new_tree)
        session.commit()
    return jsonify({"message": "Tree registered successfully!"}), 201

if __name__ == '__main__':
    app.run(debug=True)
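The registration endpoint reads fields straight from the request body, so a missing key or an out-of-range coordinate would surface as a server error. One possible way to guard against that is to validate the payload first, as sketched below. The helper names `to_wkt_point` and `build_tree_params` are hypothetical and not part of the snippet above.

```python
REQUIRED_FIELDS = (
    "species", "longitude", "latitude", "age",
    "health_status", "height", "canopy_width", "citizen_rank",
)


def to_wkt_point(longitude: float, latitude: float) -> str:
    """Format a WKT point; WKT puts longitude (x) before latitude (y)."""
    if not -180 <= longitude <= 180:
        raise ValueError("longitude out of range")
    if not -90 <= latitude <= 90:
        raise ValueError("latitude out of range")
    return f"POINT({longitude} {latitude})"


def build_tree_params(data: dict) -> dict:
    """Check required fields and coerce types before building SQL parameters."""
    missing = [name for name in REQUIRED_FIELDS if name not in data]
    if missing:
        raise ValueError("missing fields: " + ", ".join(missing))
    return {
        "species": str(data["species"]),
        "location": to_wkt_point(float(data["longitude"]), float(data["latitude"])),
        "age": int(data["age"]),
        "health_status": str(data["health_status"]),
        "height": float(data["height"]),
        "canopy_width": float(data["canopy_width"]),
        "citizen_rank": str(data["citizen_rank"]),
    }
```

A route handler could call `build_tree_params(request.json)` inside a `try` block and return a 400 response when `ValueError` is raised.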

Phase 2: Satellite Data Integration

Objective: Use satellite and LiDAR data to identify and register trees automatically.

Tools:

Google Earth Engine for large-scale mapping.

Sentinel-2 (10 m) or Landsat (30 m) satellite data for medium-resolution multispectral imagery.

Example Workflow:

Process satellite data using Google Earth Engine.

Identify tree clusters using image segmentation.

Generate geolocations and pass data into the backend.
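The workflow above can be illustrated offline with a toy stand-in for the segmentation step. The sketch below does not use Google Earth Engine. It assumes two small grids of near-infrared and red reflectance values, computes NDVI per pixel, and groups vegetated pixels into clusters with a flood fill. The 0.5 threshold and the function names are assumptions chosen for illustration.

```python
from collections import deque


def ndvi(nir: float, red: float) -> float:
    """Normalized Difference Vegetation Index: (NIR - Red) / (NIR + Red)."""
    total = nir + red
    return 0.0 if total == 0 else (nir - red) / total


def vegetation_clusters(nir_band, red_band, threshold=0.5):
    """Group 4-connected pixels whose NDVI exceeds the threshold.

    Returns a list of clusters, each a list of (row, col) pixel positions.
    """
    rows, cols = len(nir_band), len(nir_band[0])
    mask = [
        [ndvi(nir_band[r][c], red_band[r][c]) > threshold for c in range(cols)]
        for r in range(rows)
    ]
    seen = [[False] * cols for _ in range(rows)]
    clusters = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            # Breadth-first flood fill from this unvisited vegetated pixel.
            queue = deque([(r, c)])
            seen[r][c] = True
            pixels = []
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            clusters.append(pixels)
    return clusters
```

Each cluster's pixel positions could then be converted to coordinates using the image's georeferencing and passed to the backend. At 10 m to 30 m per pixel a cluster usually represents a stand of trees, not a single tree.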

Phase 3: IoT Sensor Integration

Deploy IoT devices to monitor health metrics of specific high-priority trees.

Use MQTT protocol for real-time data transmission.

Code Snippet: Sensor Data Processing (Node.js)

const mqtt = require('mqtt');
const { Client } = require('pg');

// Assumes the `pg` package; connection settings come from the
// standard PG* environment variables
const dbClient = new Client();
dbClient.connect();

const client = mqtt.connect('mqtt://broker.hivemq.com');

client.on('connect', () => {
  console.log('Connected to MQTT Broker');
  client.subscribe('tree/sensor_data');
});

client.on('message', (topic, message) => {
  const sensorData = JSON.parse(message.toString());
  console.log(`Received data: ${JSON.stringify(sensorData)}`);
  // Save data to database (Example for PostgreSQL)
  saveToDatabase(sensorData);
});

function saveToDatabase(data) {
  const query = `
    INSERT INTO tree_sensors (tree_id, soil_moisture, air_quality, temperature)
    VALUES ($1, $2, $3, $4)
  `;
  const values = [data.tree_id, data.soil_moisture, data.air_quality, data.temperature];
  dbClient.query(query, values, (err) => {
    if (err) console.error('Error saving to database', err);
    else console.log('Sensor data saved successfully!');
  });
}
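To exercise a subscriber like the one above without real hardware, mock readings can be generated in the same JSON shape. The sketch below only builds the payloads and leaves publishing to whichever MQTT client is in use. The value ranges and function names are assumptions, and the field names follow the `tree_sensors` table.

```python
import json
import random


def simulate_reading(tree_id: int, rng: random.Random) -> dict:
    """Produce one fake sensor reading (value ranges are illustrative)."""
    return {
        "tree_id": tree_id,
        "soil_moisture": round(rng.uniform(0.0, 1.0), 3),  # volumetric fraction
        "air_quality": round(rng.uniform(0.0, 500.0), 1),  # index value
        "temperature": round(rng.uniform(-5.0, 45.0), 1),  # degrees Celsius
    }


def simulate_batch(tree_ids, seed: int = 0) -> list[str]:
    """Return JSON-encoded readings, reproducible for a given seed."""
    rng = random.Random(seed)
    return [json.dumps(simulate_reading(tree_id, rng)) for tree_id in tree_ids]


if __name__ == "__main__":
    for message in simulate_batch([1, 2, 3]):
        print(message)
```

Seeding the generator keeps test runs repeatable, which makes it easier to compare database contents between runs.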

Phase 4: Public Portal Development

Frontend Technologies:

React.js or Vue.js for interactive user interfaces.

Mapbox or Leaflet.js for tree visualization on maps.

Features:

Interactive tree maps with search functionality.

User registration for tree adoption programs.

Deployment Plan

Use Docker to containerize the application.

Deploy on AWS, Azure, or Google Cloud for scalability.

Monitor system health using Prometheus and Grafana.

Next Steps

Develop a testing environment for pilot deployment.

Add AI-based disease detection and prediction using TensorFlow or PyTorch.

Create an adoption feature where citizens can adopt trees and track their health.

Would you like me to set up a GitHub repository with this initial framework or assist further with deployment?

I'll prepare the project framework and outline the repository structure for the Tree Citizenship Identification System. Below is the proposed structure for the repository, followed by details of the files and components.

Proposed Repository Structure

tree-citizenship-id-system/
├── backend/
│   ├── app.py                        # Flask application for backend API
│   ├── database/
│   │   ├── schema.sql                # Database schema for PostgreSQL
│   │   ├── db_config.py              # Database connection configuration
│   │   └── seed_data.sql             # Initial sample data for testing
│   ├── services/
│   │   ├── tree_registration.py      # Functions for tree registration
│   │   └── sensor_data.py            # Functions for processing IoT sensor data
│   └── requirements.txt              # Python dependencies
├── frontend/
│   ├── public/
│   │   ├── index.html                # Main HTML file
│   │   └── favicon.ico               # Favicon for the app
│   ├── src/
│   │   ├── App.js                    # Main React/Vue app file
│   │   ├── components/
│   │   │   ├── TreeMap.js            # Map component for visualizing trees
│   │   │   └── TreeDetails.js        # Component for detailed tree view
│   │   └── styles.css                # Styling for the frontend
│   └── package.json                  # Frontend dependencies
├── scripts/
│   ├── satellite_data_processor.py   # Script for processing satellite data
│   └── sensor_data_simulator.py      # Mock data generator for IoT sensors
├── deployment/
│   ├── Dockerfile                    # Docker configuration
│   ├── docker-compose.yml            # Docker Compose for multi-service setup
│   └── cloud_setup_instructions.md   # Deployment guide for cloud platforms
├── tests/
│   ├── test_backend.py               # Unit tests for backend API
│   └── test_frontend.js              # Unit tests for frontend
├── README.md                         # Overview of the project
└── LICENSE                           # Project license

Repository Details

Backend:

app.py: Main Flask app for handling API requests like tree registration and sensor data.

database/schema.sql: SQL script for creating necessary tables.

services/: Directory for modular backend logic.

Frontend:

App.js: Main entry point for the frontend application.

TreeMap.js: Integrates Mapbox/Leaflet.js for displaying tree locations.

TreeDetails.js: Displays detailed information about a selected tree.

Scripts:

satellite_data_processor.py: Automates the extraction and classification of tree data from satellite imagery.

sensor_data_simulator.py: Generates fake sensor readings for development and testing.

Deployment:

Docker configuration ensures that the app runs consistently across environments.

Cloud setup instructions provide guidelines for deploying on platforms like AWS, Azure, or GCP.

Tests:

Unit tests ensure the reliability of both the backend and frontend.

Next Steps

I will initialize the repository structure locally.

Package the files and components needed for the first version.

Provide a link to the repository for access.

I’ll get started. Please hold on for a moment.

4 notes


Text

Exploring the Realm of cPanel Hosting in Australia: A Comprehensive Guide

In the vast digital landscape of Australia, where businesses thrive and online presence is paramount, finding the right hosting solution is akin to selecting a foundation for a skyscraper. In this digital age, where websites serve as the face of enterprises, the choice of hosting can significantly impact performance, user experience, and ultimately, the success of ventures. Among the plethora of options, cPanel hosting stands out as a popular choice for its user-friendly interface, robust features, and reliability. But what exactly is cPanel hosting, and why is it gaining traction among businesses in Australia?

Understanding cPanel Hosting

What is cPanel hosting?

cPanel hosting is a type of web hosting that utilizes the cPanel control panel, a web-based interface that simplifies website and server management tasks. It provides users with a graphical interface and automation tools, allowing them to effortlessly manage various aspects of their website, such as file management, email accounts, domain settings, databases, and more.

How does cPanel Hosting Work?

At its core, cPanel hosting operates on a Linux-based server environment, leveraging technologies like Apache, MySQL, and PHP (LAMP stack). The cPanel interface acts as a centralized hub, enabling users to perform administrative tasks through a user-friendly dashboard, accessible via any web browser.

Benefits of cPanel Hosting

User-Friendly Interface

One of the primary advantages of cPanel hosting is its intuitive interface, designed to accommodate users of all skill levels. With its graphical layout and straightforward navigation, even those with minimal technical expertise can manage their websites efficiently.

Comprehensive Feature Set

From creating email accounts to installing applications like WordPress and Magento, cPanel offers a wide array of features designed to streamline website management. Users can configure domains, set up security measures, monitor website performance, and much more, all from within the cPanel dashboard.

Reliability and Stability

cPanel hosting is renowned for its stability and reliability, thanks to its robust architecture and frequent updates. With features like automated backups, server monitoring, and security enhancements, users can rest assured that their websites are in safe hands.

Scalability and Flexibility

Whether you're running a small blog or managing a large e-commerce platform, cPanel hosting can scale to meet your needs. With options for upgrading resources and adding additional features as your website grows, cPanel offers the flexibility required to adapt to evolving business requirements.

Choosing the Right cPanel Hosting Provider

Factors to Consider

When selecting a cPanel hosting provider in Australia, several factors should be taken into account to ensure optimal performance and reliability:

Server Location: Choose a provider with servers located in Australia to minimize latency and ensure fast loading times for local visitors.

Performance: Look for providers that offer high-performance hardware, SSD storage, and ample resources to support your website's needs.

Uptime Guarantee: Opt for providers with a proven track record of uptime, ideally offering a minimum uptime guarantee of 99.9%.

Customer Support: Evaluate the level of customer support offered, ensuring prompt assistance in case of technical issues or inquiries.
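To put the uptime figure in perspective, a guarantee can be converted into the downtime it still permits. The sketch below is plain arithmetic; the function name and the 365-day year are assumptions, and actual service-level agreements define their own measurement windows.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, ignoring leap years


def allowed_downtime_hours(uptime_percent: float, period_hours: float = HOURS_PER_YEAR) -> float:
    """Hours of downtime permitted in a period at the given uptime guarantee."""
    if not 0 <= uptime_percent <= 100:
        raise ValueError("uptime_percent must be between 0 and 100")
    return (100 - uptime_percent) / 100 * period_hours


if __name__ == "__main__":
    for guarantee in (99.0, 99.9, 99.99):
        hours = allowed_downtime_hours(guarantee)
        print(f"{guarantee}% uptime allows about {hours:.2f} hours of downtime per year")
```

A 99.9% guarantee therefore still allows about 8.76 hours of downtime per year, or roughly 43 minutes in a 30-day month.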

Conclusion

In conclusion, cPanel hosting serves as a cornerstone for businesses seeking reliable and user-friendly web hosting in Australia. With its intuitive interface, comprehensive feature set, and robust architecture, cPanel empowers users to manage their websites with ease, allowing them to focus on their core business objectives.

2 notes


Text

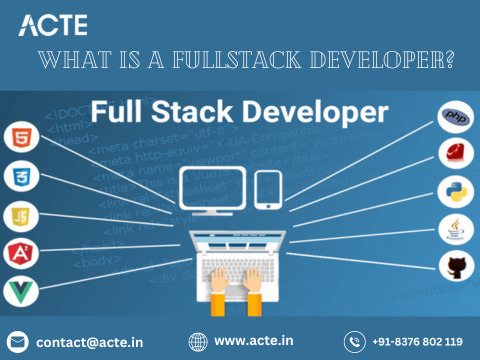

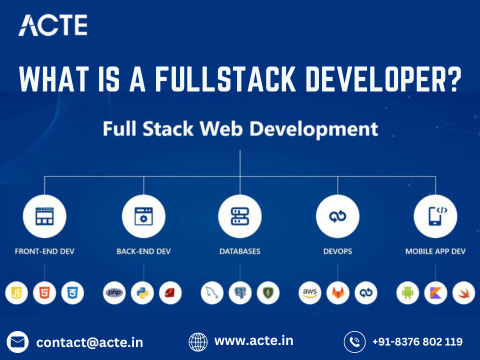

Mastering Fullstack Development: Unifying Frontend and Backend Proficiency

Navigating the dynamic realm of web development necessitates a multifaceted skill set. Enter the realm of fullstack development – a domain where expertise in both frontend and backend intricacies converge seamlessly. In this comprehensive exploration, we'll unravel the intricacies of mastering fullstack development, uncovering the diverse responsibilities, essential skills, and integration strategies that define this pivotal role.

Exploring the Essence of Fullstack Development:

Defining the Role:

Fullstack development epitomizes the fusion of frontend and backend competencies. Fullstack developers are adept at navigating the entire spectrum of web application development, from crafting immersive user interfaces to architecting robust server-side logic and databases.

Unraveling Responsibilities:

Fullstack developers shoulder a dual mandate:

Frontend Proficiency: They meticulously craft captivating user experiences through adept utilization of HTML, CSS, and JavaScript. Leveraging frameworks like React.js, Angular.js, or Vue.js, they breathe life into static interfaces, fostering interactivity and engagement.

Backend Mastery: In the backend realm, fullstack developers orchestrate server-side operations using a diverse array of languages such as JavaScript (Node.js), Python (Django, Flask), Ruby (Ruby on Rails), or Java (Spring Boot). They adeptly handle data management, authentication mechanisms, and business logic, ensuring the seamless functioning of web applications.

Essential Competencies for Fullstack Excellence:

Frontend Prowess:

Frontend proficiency demands a nuanced skill set:

Fundamental Languages: Mastery in HTML, CSS, and JavaScript forms the cornerstone of frontend prowess, enabling the creation of visually appealing interfaces.

Framework Fluency: Familiarity with frontend frameworks like React.js, Angular.js, or Vue.js empowers developers to architect scalable and responsive web solutions.

Design Sensibilities: An understanding of UI/UX principles ensures the delivery of intuitive and aesthetically pleasing user experiences.

Backend Acumen:

Backend proficiency necessitates a robust skill set:

Language Mastery: Proficiency in backend languages such as JavaScript (Node.js), Python (Django, Flask), Ruby (Ruby on Rails), or Java (Spring Boot) is paramount for implementing server-side logic.

Database Dexterity: Fullstack developers wield expertise in database management systems like MySQL, MongoDB, or PostgreSQL, facilitating seamless data storage and retrieval.

Architectural Insight: A comprehension of server architecture and scalability principles underpins the development of robust backend solutions, ensuring optimal performance under varying workloads.

Integration Strategies for Seamless Development:

Harmonizing Databases:

Integrating databases necessitates a strategic approach:

ORM Adoption: Object-Relational Mappers (ORMs) such as Sequelize for Node.js or SQLAlchemy for Python streamline database interactions, abstracting away low-level complexities.

Data Modeling Expertise: Fullstack developers meticulously design database schemas, mirroring the application's data structure and relationships to optimize performance and scalability.

Project Management Paradigms:

End-to-End Execution:

Fullstack developers are adept at steering projects from inception to fruition:

Task Prioritization: They adeptly prioritize tasks based on project requirements and timelines, ensuring the timely delivery of high-quality solutions.

Collaborative Dynamics: Effective communication and collaboration with frontend and backend teams foster synergy and innovation, driving project success.

In essence, mastering fullstack development epitomizes a harmonious blend of frontend finesse and backend mastery, encapsulating the versatility and adaptability essential for thriving in the ever-evolving landscape of web development. As technology continues to evolve, the significance of fullstack developers will remain unparalleled, driving innovation and shaping the digital frontier. Whether embarking on a fullstack journey or harnessing the expertise of fullstack professionals, embracing the ethos of unification and proficiency is paramount for unlocking the full potential of web development endeavors.

#full stack developer #full stack course #full stack training #full stack web development #full stack software developer

2 notes


Text

Responsive Design and Beyond: Exploring the Latest Trends in Web Development Services

The internet and smartphones dominate the present landscape. The easier they make life for consumers, the harder life becomes for business owners. Digitization has widened competition from the local level to the national and international levels, and businesses that do not adapt fall behind in the growth cycle. One way to keep pace is to hire a custom web development service that can meet the needs of both the business and its consumers. This blog explores some of the trends that are redefining the web development world.

Latest Trends in the Web Development Services

Blockchain Technology

Blockchain is a distributed ledger technology. It stores information in blocks, each linked to the previous one by a cryptographic hash to form a chain, so past records are hard to alter without detection. The technology enables participants to make transactions across the internet without the involvement of a third party. Thus, it can potentially change different business sectors by reducing the risk of fraud and tampering. The technology also allows web developers to use open-source systems for their projects, which can simplify the development process.
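The block-and-chain structure described above can be sketched in a few lines. This is a toy, single-machine illustration, assuming SHA-256 hashes and a simple list of dictionaries. It shows only how linking blocks by hash makes tampering detectable and leaves out networking, consensus, and signatures.

```python
import hashlib
import json

GENESIS_PREV_HASH = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index: int, data: str, prev_hash: str) -> str:
    """SHA-256 over the block's contents, including the previous block's hash."""
    payload = json.dumps(
        {"index": index, "data": data, "prev_hash": prev_hash}, sort_keys=True
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_block(chain: list, data: str) -> dict:
    """Add a block that points at the hash of the current last block."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_PREV_HASH
    index = len(chain)
    block = {
        "index": index,
        "data": data,
        "prev_hash": prev_hash,
        "hash": block_hash(index, data, prev_hash),
    }
    chain.append(block)
    return block


def is_valid(chain: list) -> bool:
    """Recompute every hash and check each link to the previous block."""
    prev_hash = GENESIS_PREV_HASH
    for index, block in enumerate(chain):
        if block["index"] != index or block["prev_hash"] != prev_hash:
            return False
        if block["hash"] != block_hash(block["index"], block["data"], block["prev_hash"]):
            return False
        prev_hash = block["hash"]
    return True
```

Changing the data in any earlier block changes its recomputed hash, so `is_valid` returns `False` unless every later block is rewritten as well.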

Internet of Things (IoT)

IoT can be defined as a network of internet-enabled devices, where data transfer requires no human involvement. It is capable of providing a future where objects are connected to the web. IoT fosters constant data transfer. Moreover, IoT can be used to create advanced communication between different operational models and website layouts. The technology also comes with broad applications like cameras, sensors, and signaling equipment to list a few.

Voice Search Optimization

Voice search optimization is the process of optimizing web pages to appear in voice search results. In web development, the latest innovations are standalone voice-activated devices and voice optimization for apps and websites. Technology is also being developed that can recognize the voices of different people and provide a personalized AI-based experience.

AI-Powered Chatbots

An AI-powered chatbot uses Natural Language Processing (NLP) and Machine Learning (ML) to better understand the user’s intent and provide a human-like experience. These chatbots offer advanced features such as 24×7 availability and behavior analytics. They can be used effectively for customer support to increase customer satisfaction. An AI-powered chatbot can easily be integrated into regular/professional websites and PWAs. Chatbots generally provide quick answers in an emergency and are quick to resolve complaints.

Cloud Computing

Cloud computing is the use of cloud-based resources such as storage, networking, software, analytics, and intelligence for flexibility and convenience. These services are more reliable because they are backed up and replicated across multiple data centers, which helps keep web applications available and running. It is highly efficient for remote working setups. The technology helps avoid data loss and data overload. It also offers low development costs, a robust architecture, and flexibility.

Book a Web Development Service Provider Now

So, if you are excited to bring your business online and onto other tech platforms but are unsure how to do it, consider reaching out to one of the best web development companies, Encanto Technologies. Its professional and competent web development team will assist you in developing an aesthetic, functional, and user-friendly website that can take your revenue to new heights. So, do not wait to enter the online world, and book the services of OMR Digital now.

Author’s Bio

This blog is authored by the proactive content writers of Encanto Technologies. It is one of the best professional web development companies, offering a cluster of services including web development, mobile application development, desktop application development, DevOps CI/CD services, big data development, and cloud development. So, if you are looking for any web or app development services, do not delay any further and contact OMR Digital now.

Architecture Overview and Deployment of OpenShift Data Foundation Using Internal Mode

As businesses increasingly move their applications to containers and hybrid cloud platforms, the need for reliable, scalable, and integrated storage becomes more critical than ever. Red Hat OpenShift Data Foundation (ODF) is designed to meet this need by delivering enterprise-grade storage for workloads running in the OpenShift Container Platform.

In this article, we’ll explore the architecture of ODF and how it can be deployed using Internal Mode, the most self-sufficient and easy-to-manage deployment option.

🌐 What Is OpenShift Data Foundation?

OpenShift Data Foundation is a software-defined storage solution that is fully integrated into OpenShift. It allows you to provide storage services for containers running on your cluster — including block storage (like virtual hard drives), file storage (like shared folders), and object storage (like cloud-based buckets used for backups, media, and large datasets).

ODF ensures your applications have persistent and reliable access to data even if they restart or move between nodes.

Understanding the Architecture (Internal Mode)

There are multiple ways to deploy ODF, but Internal Mode is one of the most straightforward and popular for small to medium-sized environments.

Here’s what Internal Mode looks like at a high level:

Self-contained: Everything runs within the OpenShift cluster, with no need for an external storage system.

Uses local disks: It uses spare or dedicated disks already attached to the nodes in your cluster.

Automated management: The system automatically handles setup, storage distribution, replication, and health monitoring.

Key Components:

Storage Cluster: The core of the system that manages how data is stored and accessed.

Ceph Storage Engine: A reliable and scalable open-source storage backend used by ODF.

Object Gateway: Provides cloud-like storage for applications needing S3-compatible services.

Monitoring Tools: Dashboards and health checks help administrators manage storage effortlessly.

🚀 Deploying OpenShift Data Foundation (No Commands Needed!)

Deployment is mostly handled through the OpenShift Web Console with a guided setup wizard. Here’s a simplified view of the steps:

Install the ODF Operator

Go to the OperatorHub within OpenShift and search for OpenShift Data Foundation.

Click Install and choose your settings.

Choose Internal Mode

When prompted, select "Internal" to use disks inside the cluster.

The platform will detect available storage and walk you through setup.

Assign Nodes for Storage

Pick which OpenShift nodes will handle the storage.

The system will ensure data is distributed and protected across them.

Verify Health and Usage

After installation, built-in dashboards let you check storage health, usage, and performance at any time.

Once deployed, OpenShift will automatically use this storage for your stateful applications, databases, and other services that need persistent data.
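In practice, an application usually asks for this storage through a PersistentVolumeClaim (PVC). The sketch below builds such a claim as a plain Python dictionary and prints it as JSON. The claim name `postgres-data`, the 20Gi size, and the storage class name `ocs-storagecluster-ceph-rbd` are assumptions for illustration; the class names available on your cluster may differ, so check them in the web console before using anything like this.

```python
import json


def make_pvc(name, size_gi, storage_class="ocs-storagecluster-ceph-rbd"):
    """Build a PersistentVolumeClaim manifest as a plain dict.

    The default storage class name is an assumption; use whatever
    class your ODF installation actually exposes.
    """
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": f"{size_gi}Gi"}},
        },
    }


# Hypothetical claim for a database that needs 20 GiB of block storage
manifest = make_pvc("postgres-data", 20)
print(json.dumps(manifest, indent=2))
```

The printed JSON can be saved to a file and reviewed before it is applied to a cluster; the same fields can also be filled in through the web console form if you prefer to stay command-free.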

🎯 Why Choose Internal Mode?

Quick setup: Minimal external requirements — perfect for edge or on-prem deployments.

Cost-effective: Uses existing hardware, reducing the need for third-party storage.

Tightly integrated: Built to work seamlessly with OpenShift, including security, access, and automation.

Scalable: Can grow with your needs, adding more storage or transitioning to hybrid options later.

📌 Common Use Cases

Databases and stateful applications in OpenShift

Development and test environments

AI/ML workloads needing fast local storage

Backup and disaster recovery targets

Final Thoughts

OpenShift Data Foundation in Internal Mode gives teams a simple, powerful way to deliver production-grade storage without relying on external systems. Its seamless integration with OpenShift, combined with intelligent automation and a user-friendly interface, makes it ideal for modern DevOps and platform teams.

Whether you’re running applications on-premises, in a private cloud, or at the edge — Internal Mode offers a reliable and efficient storage foundation to support your workloads.

Want to learn more about managing storage in OpenShift? Stay tuned for our next article on scaling and monitoring your ODF cluster!

For more information, follow Hawkstack Technologies.

Biometric Market Size, Restraints & Key Drivers 2032

Global Biometric Market Overview

The global biometric market is experiencing robust expansion, valued at approximately USD 43.1 billion in 2024, and is projected to surpass USD 110 billion by 2030, growing at a compound annual growth rate (CAGR) of over 14% during the forecast period. Biometrics technology, ranging from fingerprint recognition, facial recognition, and iris scanning to voice recognition, is witnessing massive adoption across sectors including government, banking, healthcare, consumer electronics, and defense. Key growth drivers include the increasing demand for secure authentication systems, rising incidences of data breaches, and the proliferation of smart devices integrated with biometric solutions. Advancements in artificial intelligence (AI), machine learning (ML), and edge computing are enabling real-time biometric processing and multi-factor authentication capabilities.

Global Biometric Market Dynamics

Drivers: Key factors driving market growth include stringent regulatory policies for identity verification, increased deployment of biometric access control in border security, and growth in mobile payment systems. The integration of biometrics in e-governance initiatives and national ID programs is another major catalyst. Technological developments in biometric sensors and algorithms have significantly enhanced accuracy, response times, and user experience.

Restraints: Privacy concerns, data protection regulations, and the high costs of biometric systems remain notable challenges. Additionally, issues related to biometric spoofing and system interoperability can hinder widespread adoption, especially in cost-sensitive regions.

Opportunities: The growing use of cloud-based biometric services and the emergence of biometric-as-a-service (BaaS) offer immense market potential. There's also a rising opportunity in developing economies, where digital transformation and financial inclusion efforts are encouraging biometric adoption.

Technology, data governance, and sustainability now play pivotal roles in shaping the market. Low-power biometric sensors and energy-efficient hardware are gaining traction, aligning with green IT objectives. Data privacy regulations such as GDPR are pushing vendors toward secure, encrypted, and user-consent-driven systems.

Download Full PDF Sample Copy of Global Biometric Market Report @ https://www.verifiedmarketresearch.com/download-sample?rid=31051&utm_source=PR-News&utm_medium=380

Global Biometric Market Trends and Innovations

The market is witnessing a surge in touchless biometric solutions, accelerated by the COVID-19 pandemic, with facial recognition and iris scanning leading the trend. AI-powered biometrics, behavioral biometrics, and multimodal systems are shaping next-generation solutions. Innovations in liveness detection, 3D facial mapping, and wearable biometric devices are expanding applications across healthcare, fintech, and law enforcement. Strategic partnerships between biometric technology vendors and cloud providers are fostering scalable and secure solutions. Furthermore, embedded biometrics in smart cards and mobile devices are making digital authentication more seamless and ubiquitous.

Global Biometric Market Challenges and Solutions

Challenges: The market faces challenges such as integration complexities, lack of standardization, and pricing pressures in deploying large-scale biometric infrastructure. Cross-border regulatory discrepancies can delay implementations. Moreover, the biometric data supply chain remains vulnerable to cybersecurity threats.

Solutions: To mitigate these, companies are investing in interoperable, modular systems that can integrate with legacy architectures. Blockchain-based biometric data storage and federated identity models are gaining attention as privacy-preserving technologies. Continuous investment in R&D, employee training, and strategic M&A are helping industry players stay competitive while addressing evolving compliance and security standards.
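As a quick sanity check on the headline figures in the market overview, the growth rate implied by going from USD 43.1 billion in 2024 to USD 110 billion in 2030 can be worked out with the standard compound annual growth rate formula. This is a minimal sketch; the function name `implied_cagr` is made up for the example, and the result depends on treating 2024 to 2030 as six full years.

```python
def implied_cagr(start_value, end_value, years):
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the overview, in USD billions
rate = implied_cagr(43.1, 110.0, 2030 - 2024)
print(f"Implied CAGR: {rate:.1%}")
```

The implied rate comes out at roughly 17% per year, which is consistent with the report's "over 14%" wording.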

Global Biometric Market Future Outlook

The future of the biometric industry is poised for exponential growth, driven by AI integration, rising urbanization, and increased demand for frictionless authentication experiences. As 5G and IoT become mainstream, biometric systems will increasingly be embedded into smart environments, from autonomous vehicles to smart cities. Governments and enterprises alike will lean on biometrics for robust identity management and fraud prevention. With continuous innovation, supportive regulatory frameworks, and rising consumer acceptance, the biometric market is set to evolve into a cornerstone of the global digital identity infrastructure by 2030.

Key Players in the Global Biometric Market

Key players in the Global Biometric Market are renowned for their innovative approach, blending advanced technology with traditional expertise. Major players focus on high-quality production standards, often emphasizing sustainability and energy efficiency. These companies dominate both domestic and international markets through continuous product development, strategic partnerships, and cutting-edge research. Leading manufacturers prioritize consumer demands and evolving trends, ensuring compliance with regulatory standards. Their competitive edge is often maintained through robust R&D investments and a strong focus on exporting premium products globally.

Fujitsu, Cognitec Systems, Aware, ASSA Abloy, Precise Biometrics, Safran, Secunet Security Networks, Stanley Black & Decker, NEC Corporation, and Thales.

Get Discount On The Purchase Of This Report @ https://www.verifiedmarketresearch.com/ask-for-discount?rid=31051&utm_source=PR-News&utm_medium=380

Global Biometric Market Segments Analysis and Regional Economic Significance

The Global Biometric Market is segmented based on key parameters such as product type, application, end-user, and geography. Product segmentation highlights diverse offerings catering to specific industry needs, while application-based segmentation emphasizes varied usage across sectors. End-user segmentation identifies target industries driving demand, including healthcare, manufacturing, and consumer goods. These segments collectively offer valuable insights into market dynamics, enabling businesses to tailor strategies, enhance market positioning, and capitalize on emerging opportunities. The Global Biometric Market showcases significant regional diversity, with key markets spread across North America, Europe, Asia-Pacific, Latin America, and the Middle East & Africa. Each region contributes uniquely, driven by factors such as technological advancements, resource availability, regulatory frameworks, and consumer demand.

Biometric Market, By Functionality Type

• Combined
• Contact
• Non-contact

Biometric Market, By End-User

• Government
• Military & Defense
• Electronics
• Healthcare
• Banking & Finance
• Others

Biometric Market, By Geography

• North America
• Europe
• Asia Pacific
• Latin America
• Middle East and Africa

For More Information or Query, Visit @ https://www.verifiedmarketresearch.com/product/biometric-market/

About Us: Verified Market Research

Verified Market Research is a leading global research and consulting firm serving 5000+ global clients. We provide advanced analytical research solutions while offering information-enriched research studies. We also offer insights into strategic and growth analyses and data necessary to achieve corporate goals and critical revenue decisions. Our 250 analysts and SMEs offer a high level of expertise in data collection and governance using industrial techniques to collect and analyze data on more than 25,000 high-impact and niche markets. Our analysts are trained to combine modern data collection techniques, superior research methodology, expertise, and years of collective experience to produce informative and accurate research.

Contact us: Mr. Edwyne Fernandes
US: +1 (650)-781-4080
US Toll-Free: +1 (800)-782-1768
Website: https://www.verifiedmarketresearch.com/

Top Trending Reports https://www.verifiedmarketresearch.com/ko/product/benzocaine-drugs-market/ https://www.verifiedmarketresearch.com/ko/product/configure-price-and-quote-application-suites-market/ https://www.verifiedmarketresearch.com/ko/product/rotary-cultivator-market/ https://www.verifiedmarketresearch.com/ko/product/construction-bid-management-software-market/ https://www.verifiedmarketresearch.com/ko/product/ethical-pharmaceuticals-market/

Twitter Web Scraping for Data Analysts

Twitter web scraping has emerged as one of the most powerful techniques for data analysts looking to tap into the wealth of social media insights. If you’re working with data analysis and haven’t yet explored the potential of a reliable Twitter scraping tool, you’re missing out on millions of data points that could transform your analytical projects.

Why Twitter Data Matters for Modern Analysts

When we talk about Data Scraping X, we’re discussing access to one of the most dynamic and real-time data sources available today. Unlike traditional datasets that might be weeks or months old, Twitter data provides instant insights into what people are thinking, discussing, and sharing right now.

This real-time aspect makes Twitter data incredibly valuable for:

Market sentiment analysis — Understanding how consumers feel about brands, products, or services

Trend identification — Spotting emerging topics before they become mainstream

Competitive intelligence — Monitoring what competitors are doing and how audiences respond

Crisis management — Tracking brand mentions during potential PR situations

Customer insights — Understanding pain points and preferences directly from user conversations

Getting Started with Web Scraping X Data

The first decision you’ll face when starting web scraping X.com is choosing between official API access and custom scraping solutions. Each approach has distinct advantages and considerations.

Understanding Your Options

Official X Data APIs

The official X data APIs provide structured, reliable access to Twitter data. They are the most straightforward approach and ensure compliance with platform terms. However, recent pricing changes have made official API access expensive for many projects. The cost can range from hundreds to thousands of dollars monthly, depending on your data needs.

Custom Web Scraping Solutions

This approach offers more flexibility and cost-effectiveness, especially for research projects or smaller-scale analysis. However, it requires more technical expertise and careful attention to platform policies and rate limiting.

Implementing Effective X Scraping Strategies

Targeted Data Collection

Rather than attempting to collect everything, successful analysts focus on specific datasets aligned with their research objectives. This targeted approach to X scraping ensures higher data quality and more manageable processing workloads.

Key targeting parameters include:

Specific keywords, hashtags, and user mentions

Geographic regions and languages

Time ranges and posting frequencies

User account types and follower thresholds

Engagement metrics like likes, retweets, and replies
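The targeting parameters above can be expressed as a simple filter applied to posts you have already collected. This is only a sketch under assumptions: the `Post` fields, the `matches` function, and the sample posts are invented for illustration and do not correspond to any official API schema.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Post:
    """Hypothetical record shape for an already-collected post."""
    text: str
    lang: str
    created_at: datetime
    author_followers: int
    likes: int


def matches(post, keywords, lang, start, end, min_followers=0, min_likes=0):
    """Return True when a post satisfies every targeting criterion."""
    text = post.text.lower()
    return (
        any(keyword.lower() in text for keyword in keywords)
        and post.lang == lang
        and start <= post.created_at <= end
        and post.author_followers >= min_followers
        and post.likes >= min_likes
    )


# Made-up sample data
posts = [
    Post("Loving the new #AcmePhone camera", "en", datetime(2025, 3, 2), 1200, 40),
    Post("Unrelated lunch photo", "en", datetime(2025, 3, 2), 90, 3),
    Post("#AcmePhone battery woes", "en", datetime(2024, 12, 25), 5000, 10),
]

selected = [
    p for p in posts
    if matches(p, ["#acmephone"], "en",
               datetime(2025, 1, 1), datetime(2025, 12, 31), min_followers=100)
]
print([p.text for p in selected])
```

Keeping the criteria in one function makes it easy to tighten or relax the targeting later without touching the collection code.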

Quality Control and Data Validation

Raw Twitter data requires extensive cleaning before analysis. Common challenges include duplicate content from retweets, bot accounts generating spam, encoding issues with special characters and emojis, and incomplete or missing metadata.

Implementing automated quality control measures early in your collection process saves significant time during analysis phases and ensures more reliable results.
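A first cleaning pass along these lines might normalize text encoding, skip records with missing text, and fold retweets into the original post while counting how often it was shared. The sketch below assumes records are dictionaries with a `text` key and that retweets carry the classic `RT @user:` prefix; both are assumptions about your data, not guarantees about the platform.

```python
import re
import unicodedata

# Assumed retweet format: "RT @username: original text"
RT_PREFIX = re.compile(r"^RT @\w+:\s*")


def normalize_text(text):
    """Normalize Unicode to NFC and collapse whitespace; emojis are kept."""
    text = unicodedata.normalize("NFC", text)
    return " ".join(text.split())


def deduplicate(records):
    """Merge retweets and exact duplicates, counting how often each appears."""
    cleaned = {}
    for record in records:
        text = normalize_text(record.get("text", ""))
        if not text:
            continue  # skip records with missing or empty text
        original = RT_PREFIX.sub("", text)
        key = original.casefold()
        if key in cleaned:
            cleaned[key]["share_count"] += 1
        else:
            cleaned[key] = {"text": original, "share_count": 1}
    return list(cleaned.values())


# Made-up sample data
raw = [
    {"text": "Great  launch today 🚀"},
    {"text": "RT @fan: Great launch today 🚀"},
    {"text": ""},
    {"text": "Cafe\u0301 opens at 9"},  # 'e' followed by a combining accent
]
result = deduplicate(raw)
for row in result:
    print(row)
```

Counting shares instead of discarding retweets outright keeps a rough measure of how widely a post spread, which is useful later in the analysis.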

Technical Implementation Best Practices

Building Scalable Architecture

Professional data operations require systems that can grow with your needs. A typical architecture includes separate layers for data collection, validation, storage, and analysis. Each component should be independently scalable and maintainable.

Cloud-based solutions offer particular advantages for variable workloads. Services like AWS, Google Cloud, or Azure provide managed databases, computing resources, and analytics tools that integrate seamlessly with scraping operations.

Ethical and Legal Considerations

Responsible scraping practices are essential for long-term success. This includes implementing appropriate rate limits to avoid overwhelming servers, respecting robots.txt files and platform policies, avoiding collection of sensitive personal information, and maintaining transparency about data usage and storage.

While the official X data APIs typically have built-in protections, custom scraping solutions must implement these safeguards manually.
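One of the simplest safeguards to implement yourself is a minimum delay between requests. The sketch below is a small, generic rate limiter; the class name `RateLimiter`, the interval value, and the placeholder print statement are illustrative assumptions, and the right interval depends on the policies of the service you are accessing.

```python
import time


class RateLimiter:
    """Allow at most one call every `min_interval` seconds."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last_call = None

    def wait(self):
        """Block until enough time has passed since the previous call."""
        now = self._clock()
        if self._last_call is not None:
            remaining = self.min_interval - (now - self._last_call)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last_call = now


limiter = RateLimiter(min_interval=0.05)  # illustrative value only
for page in range(3):
    limiter.wait()
    print("fetching page", page)  # placeholder for a real, permitted request
```

The clock and sleep functions are injectable so the limiter can be tested without real waiting; in normal use the defaults are enough.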

Frequently Asked Questions

Q: What’s the difference between using official X data APIs versus web scraping for data analysis?

A: Official X data APIs provide structured, reliable access with guaranteed uptime and support, but come with significant costs that can range from hundreds to thousands of dollars monthly. Web scraping offers more flexibility and cost-effectiveness, especially for research projects, but requires greater technical expertise and careful attention to rate limiting and platform policies. For large-scale commercial projects, official APIs are recommended, while academic research or small-scale analysis might benefit more from custom Twitter web scraping solutions.

Q: How can I ensure the quality and accuracy of data collected through X tweet scraper tools?

A: Data quality in Data Scraping X requires implementing multiple validation layers. Start by filtering out bot accounts through engagement pattern analysis and account age verification. Remove duplicate content from retweets while preserving viral spread metrics. Implement text preprocessing to handle encoding issues with emojis and special characters. Cross-validate your datasets by comparing trends with official platform statistics when available. Additionally, establish data freshness protocols since social media data can become outdated quickly, and always include timestamp verification in your Web Scraping X Data workflows.

The Modern Bookshelf: 10 Furniture Trends You’ll See Everywhere in 2025

Bookshelves have officially moved beyond function—they’re now a core design statement in modern homes. In 2025, the bookshelf isn’t just where you store novels; it’s where style, personality, and practicality collide. Whether you’re a minimalist or a maximalist, these trending bookshelf furniture designs are reshaping interiors everywhere.

Here are the 10 bookshelf furniture trends dominating 2025:

1. The Rise of “Bookshelf Wealth”

Popularized on TikTok, the “bookshelf wealth” aesthetic is all about layers: think overflowing shelves filled with worn hardcovers, vintage vases, framed art, and travel souvenirs. It’s cozy, collected, and deeply personal—less about perfection, more about story.

Style tip: Mix books with meaningful objects like pottery, framed family photos, or quirky thrift finds.

2. Modular & Reconfigurable Units

Adaptability is key in 2025. Modular bookshelves that can be moved, stacked, or extended are ideal for apartment dwellers, renters, or anyone who craves change. They grow with your needs and evolve with your space.

Trending materials: Powder-coated steel, natural wood, and recycled plastics.

3. Floating Shelves for Airy Minimalism

Floating shelves are dominating Pinterest boards and Instagram feeds. They offer a clean, minimalist look while maximizing wall space. Ideal for smaller rooms, these shelves create an illusion of space and openness.

Best for: Bathrooms, hallways, and over-desks.

4. Curved & Sculptural Forms

Goodbye, rectangles. Hello, soft curves and architectural shapes. 2025’s bookshelf furniture favors fluid lines—arched frames, oval cubbies, and asymmetrical silhouettes that feel more like art installations than storage.

Interior vibe: Scandinavian meets sculptural modernism.

5. Built-In Wall Libraries

Built-in bookshelves are back in a big way, especially for those working from home. From floor-to-ceiling installations to custom alcove units, these shelves give rooms a curated, polished feel while offering massive storage.

Pro tip: Add integrated lighting to highlight key shelves and create ambiance.

6. Mixed Materials for Visual Interest

Designers are blending woods with metals, glass, stone, and even leather accents. The result? Bookshelves that double as sophisticated focal points and blend seamlessly with eclectic interiors.

Watch for: Oak + matte black steel combos, marble base shelves, and cane-paneled backing.

7. Low, Long Console Bookshelves

Perfect under windows or behind sofas, these horizontal bookshelves bring visual balance and are perfect for stacking books, showcasing art, or adding indoor plants.

Why it works: Great for open-concept layouts or where wall height is limited.

8. Double-Sided & Room Divider Shelving

Multifunctional furniture is huge in 2025, and double-sided bookshelves are pulling double duty as space dividers. Great for studios or open floor plans, they create separation without closing off the room.

Design tip: Use open-back units to let light pass through while defining zones.

9. Industrial Meets Organic

Think black steel frames paired with natural wood shelves. This contrast between industrial and earthy materials is timeless and fits seamlessly into modern, rustic, or urban interiors.

Popular pairings: Walnut + black iron, birch + brushed brass.

10. Styling as an Art Form

How you style your shelves matters just as much as the furniture itself. In 2025, the goal is intentional curation: a blend of books, artwork, textures, and negative space.

Top styling tips:

Mix vertical and horizontal book stacks.

Group by color or tone.

Add plants for softness and life.

Leave space—don’t overcrowd!

Final Thoughts

The modern bookshelf in 2025 is multifunctional, sculptural, and deeply personal. Whether you’re going for cozy maximalism or clean minimalism, there is a trend here to fit your space and style.

Ready to upgrade your shelves? Start with one trend, mix in your personality, and let your bookshelf tell your story. Shop stylish and spacious bookshelves online to organize your books and decor. Explore durable designs at the best prices. ✔ Free Shipping ✔ Easy Returns ✔ Easy EMI

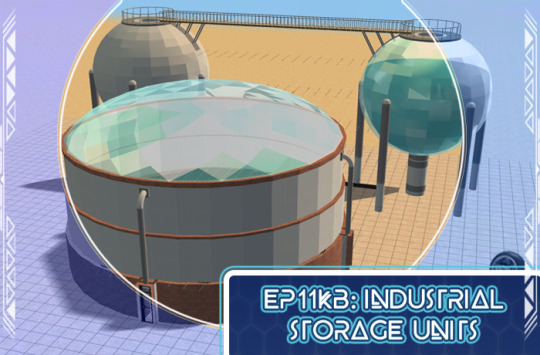

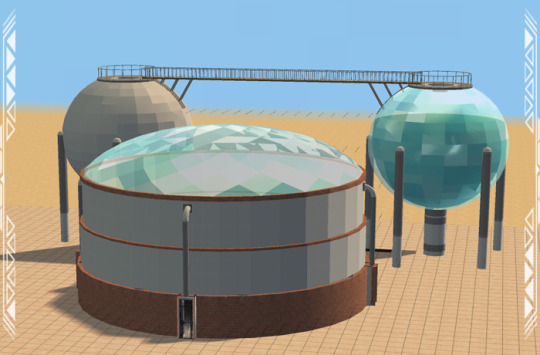

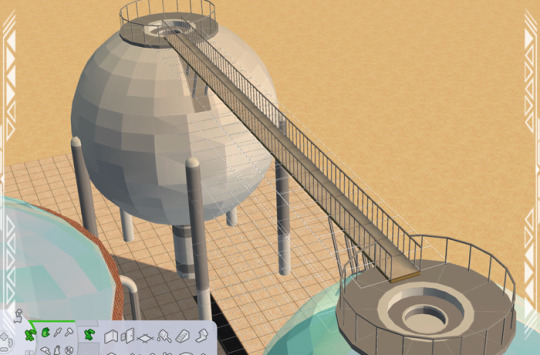

EP11KB: Industrial Storage Units (3t2)

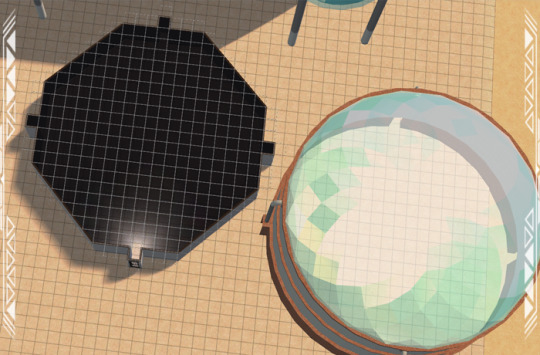

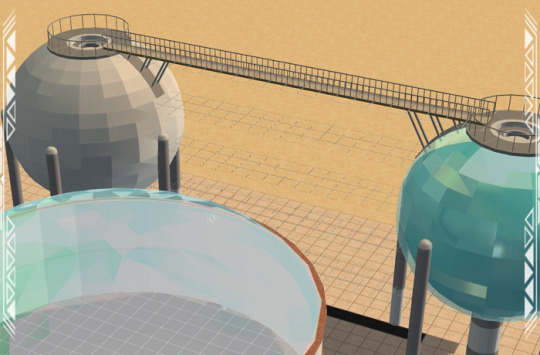

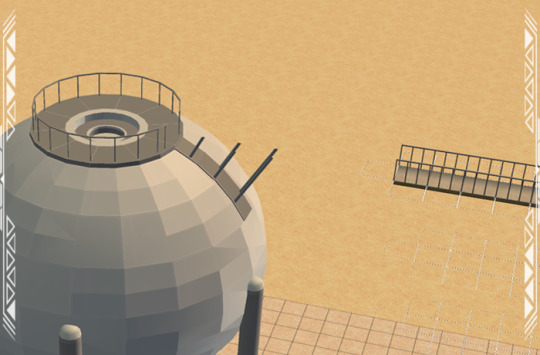

Published: 2-7-2024 | Updated: N/A SUMMARY “In need of a funkier, more futuristic skyline? The EP11 Kitbash Series (Simmons, 2022-2023) makes neighborhood assets from Sims 3: Into the Future (EA/Maxis, 2013) (and other EPs) available for Sims 2. Sets include single-tile shells and other items you’ll be able to plop on lots. Then, build above, below, in, and around them to create useable structures. Shell challenge anyone?” Here is a set of industrial storage containers from Oasis Landing (Sims 3: Into the Future, EA/Maxis, 2013) as decorative lot objects. They work well on industrial and/or factory lots and will go down with walls. The Storage Cylinder has space for up to four 1-tile doors and/or full-height windows. Otherwise, cover the openings!

DETAILS Requires all EPs/SPs. Cost: §1100 | Build > Architecture Storage Sphere 001 and the “-RECOLOR” files are BOTH REQUIRED for recolors to work – this set includes 30 color options. You also need the BB_Niche1_Master (BuggyBooz, 2012) and Element TXTR Repository from the Repository Pack (Simmons, 2023). ITEMS Storage Cylinder (~23x23 Tiles) (3248 poly) 5-Story Storage Spheres 001 and 002 (3004 poly) - click on the BASE of Storage Sphere 1 to recolor it. Storage Filler Material (638 poly) – make sure it is facing in the same direction as Sphere 001 and placed on the same tile. **Poly counts are semi-high due to meshing issues but limiting placement to 1-2 per lot should minimize the risk of pink flashing. Mind your system settings! DOWNLOAD (choose one) from SFS | from MEGA BUILDING TIPS (suggested methods) Build an octagon (20 tiles across, 19 tiles front-to-back) with sides of alternating lengths of 6 and 7 tiles respectively. Going clockwise, the front side should be 7 tiles across, the next should be 6 tiles across…and so on.

Once you’ve got 8 sides, add doors and/or windows to the center tile on the sides which are 7 tiles across. Use these entrances to get sims in and out of the building. Finally, place the Industrial Storage Cylinder on the tile directly in front of the front door/window. Add other details as needed.

For the Industrial Storage Spheres, you can build a functional catwalk between them. Place at least two, making sure the sides with the opening in the top gate and floor supports are facing one another.

Using columns, walls, etc., build up to the sixth floor, then add floor tiles and fencing.

CREDITS Thanks: Simblr community. Repository Technique Tutorial (HugeLunatic, 2018), Sims 3 Object Cloner (Jones/Simlogical and Peter, 2013), Sims 3 Package Editor (Jones/Simlogical and Peter, 2014), S3PI Library (Peter), S3PE Plugin (Peter, 2020), TSR Workshop v2.2.119 (2023). Sources: BB_Niche1_Master (BuggyBooz, 2012), Beyno (Korn via BBFonts), Oasis Landing (The Sims 3: Into the Future) List of Community Lots (Summer’s Little Sims 3 Garden, 2014), Recolors-ACYL (CuriousB, 2010).

The Importance of Threat and Risk Assessment in Cloud Security

In the rapidly evolving landscape of cloud computing, maintaining robust cybersecurity is more critical than ever. Organizations migrating to cloud platforms must understand the importance of threat and risk assessment to protect sensitive data and digital infrastructure. Cloud environments present unique challenges that require continuous evaluation of potential vulnerabilities and threats. Threat and risk assessment provides the foundation for developing a comprehensive security posture by identifying weak points, anticipating potential breaches, and implementing effective controls. As cyber threats grow in complexity, businesses must adopt strategic assessments to safeguard their operations and ensure data integrity in the cloud ecosystem.

Understanding Cloud Vulnerabilities and Security Gaps

Cloud environments often face complex and evolving threats due to shared infrastructure, remote access, and third-party integrations. A thorough threat and risk assessment helps organizations understand specific vulnerabilities within their cloud architecture. From misconfigured storage buckets to weak authentication protocols, these gaps can become entry points for cybercriminals. By analyzing system architecture and data flow, organizations can uncover hidden weaknesses. This insight enables IT teams to proactively mitigate risks before they escalate. Recognizing these vulnerabilities is a crucial step in designing a secure cloud infrastructure and reinforces the importance of regular threat and risk assessment in today's digital age.

Role of Threat and Risk Assessment in Cloud Protection

Threat and risk assessment plays a pivotal role in cloud protection by identifying, evaluating, and mitigating risks before they can be exploited. These assessments help businesses stay ahead of potential threats by providing a systematic approach to cybersecurity. They allow for a detailed analysis of security policies, access controls, and encryption standards. Without consistent threat and risk assessment, organizations may leave critical assets exposed. It ensures cloud resources are aligned with the latest security frameworks and compliance requirements. In essence, this process forms the backbone of a proactive cloud defense strategy, minimizing risk and fortifying the entire digital environment.

Identifying Potential Risks in Cloud Environments

One of the primary objectives of threat and risk assessment is to identify potential risks specific to cloud environments. These risks include unauthorized access, data loss, insider threats, and service outages. Cloud platforms vary in their configurations and hosting models, which can introduce unique security challenges. Through in-depth threat and risk assessment, organizations can evaluate each layer of their cloud infrastructure for potential vulnerabilities. This evaluation helps develop targeted countermeasures and security policies tailored to their needs. Identifying these risks early ensures more effective incident response and strengthens the overall security posture, making the cloud a safer place for operations.

Proactive Strategies to Strengthen Cloud Defenses

Proactive security begins with a strong foundation of threat and risk assessment. By continuously evaluating cloud environments for potential threats, organizations can implement preventive strategies before vulnerabilities are exploited. These strategies include multi-factor authentication, encryption protocols, intrusion detection systems, and automated patch management. A robust threat and risk assessment helps prioritize these security initiatives based on actual risk exposure. Rather than reacting to breaches, proactive defense focuses on foresight, ensuring businesses are prepared for emerging cyber threats. Integrating this assessment into routine security practices enables cloud infrastructure to remain resilient, secure, and adaptive to both internal and external threats.
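One common way to prioritize by risk exposure is to rate each risk for likelihood and impact and multiply the two. The sketch below assumes a 1 to 5 scale for both ratings; the scale, the function names, and the sample risks with their ratings are illustrative assumptions rather than part of any specific framework.

```python
def risk_score(likelihood, impact):
    """Score a risk from 1 to 25 using 1-5 likelihood and impact ratings."""
    if not (1 <= likelihood <= 5 and 1 <= impact <= 5):
        raise ValueError("likelihood and impact must be between 1 and 5")
    return likelihood * impact


def prioritize(risks):
    """Return risks sorted from highest to lowest score."""
    return sorted(
        risks,
        key=lambda r: risk_score(r["likelihood"], r["impact"]),
        reverse=True,
    )


# Made-up ratings for illustration
risks = [
    {"name": "Unpatched test server", "likelihood": 2, "impact": 2},
    {"name": "Publicly readable storage bucket", "likelihood": 4, "impact": 5},
    {"name": "Missing multi-factor authentication", "likelihood": 3, "impact": 4},
]

for risk in prioritize(risks):
    score = risk_score(risk["likelihood"], risk["impact"])
    print(f"{score:>2}  {risk['name']}")
```

A ranked list like this gives a starting order for remediation work; the ratings themselves still need to come from the assessment, not from the code.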

How Threat and Risk Assessment Enhances Data Security

Data security in the cloud relies heavily on identifying threats and understanding the risks associated with storing and processing information remotely. A comprehensive threat and risk assessment uncovers weak access points, encryption flaws, and policy gaps that could compromise data integrity. By evaluating how data moves through the cloud and who has access, organizations can enforce stricter controls and encryption measures. These assessments ensure sensitive data is protected from both internal misuse and external attacks. Regular assessments also help businesses adapt to changing regulations and evolving cyber threats, enhancing overall data security through well-informed, risk-based decision-making processes in cloud systems.

Addressing Compliance Through Proper Risk Evaluation

Compliance with data protection laws and standards such as GDPR, HIPAA, and ISO 27001 is a top priority for organizations operating in the cloud. Threat and risk assessment helps cloud environments meet these requirements by identifying areas of non-compliance. These assessments help map out regulatory requirements, ensuring proper documentation, secure access controls, and adequate data protection measures are in place. Failure to comply can result in severe penalties and reputational damage. Conducting thorough threat and risk assessments helps organizations stay compliant while building trust with clients and stakeholders. It is a necessary component in demonstrating due diligence and responsible cloud management.

Real-Time Monitoring for Better Cloud Security Decisions

Real-time monitoring is essential for detecting and responding to threats as they occur in cloud environments. When combined with a thorough threat and risk assessment, it enables organizations to make more informed and timely security decisions. By integrating assessment insights with real-time data, businesses can identify anomalies, detect unusual behavior, and respond swiftly to incidents. This dynamic combination supports continuous improvement in cloud security strategies. Rather than relying solely on periodic evaluations, real-time integration ensures security measures remain current and effective. Threat and risk assessment provides the strategic context, while monitoring delivers actionable intelligence to defend cloud assets.
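
One way to picture the anomaly detection mentioned above is a rolling z-score over a metric stream. The sketch below is only an illustration: the function name, the window size, the threshold, and the sample login counts are assumptions, and production monitoring tools use more robust methods.

```python
from collections import deque
from statistics import mean, pstdev


def detect_anomalies(values, window=5, threshold=3.0):
    """Yield (index, value) pairs that deviate sharply from the recent baseline.

    A value is flagged when it lies more than `threshold` standard deviations
    from the mean of the previous `window` values.
    """
    recent = deque(maxlen=window)
    for index, value in enumerate(values):
        if len(recent) == window:
            baseline = mean(recent)
            spread = pstdev(recent)
            # Skip the z-score test when the baseline is perfectly flat,
            # to avoid dividing by zero.
            if spread > 0 and abs(value - baseline) / spread > threshold:
                yield index, value
        recent.append(value)


# Made-up counts of failed logins per minute.
failed_logins = [3, 4, 2, 5, 3, 4, 3, 40, 4, 3]
print(list(detect_anomalies(failed_logins)))
```

With this sample data only the spike of 40 at index 7 is flagged. The risk assessment supplies the context for what to watch (here, failed logins), while the monitor supplies the timely signal.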

Conclusion

Threat and risk assessment is a cornerstone of modern cloud security, offering the insight and direction needed to navigate complex digital threats. By continuously evaluating vulnerabilities, identifying risks, and reinforcing compliance, businesses can establish resilient cloud infrastructures. These assessments provide the foundation for proactive defense, real-time monitoring, and effective data protection. In an era where cyber threats evolve rapidly, relying on static security models is no longer sufficient. Organizations must embrace a culture of continuous assessment and improvement. Ultimately, a well-executed threat and risk assessment strategy is key to achieving long-term cloud security and business continuity in a digital-first world.

0 notes

Text

Enterprise Kubernetes Storage with Red Hat OpenShift Data Foundation (DO370)

In the era of cloud-native transformation, data is the fuel powering everything from mission-critical enterprise apps to real-time analytics platforms. However, as Kubernetes adoption grows, many organizations face a new set of challenges: how to manage persistent storage efficiently, reliably, and securely across distributed environments.

To solve this, Red Hat OpenShift Data Foundation (ODF) emerges as a powerful solution — and the DO370 training course is designed to equip professionals with the skills to deploy and manage this enterprise-grade storage platform.

🔍 What is Red Hat OpenShift Data Foundation?

OpenShift Data Foundation is an integrated, software-defined storage solution that delivers scalable, resilient, and cloud-native storage for Kubernetes workloads. Built on Ceph and Rook, ODF supports block, file, and object storage within OpenShift, making it an ideal choice for stateful applications like databases, CI/CD systems, AI/ML pipelines, and analytics engines.

🎯 Why Learn DO370?

The DO370: Red Hat OpenShift Data Foundation course is specifically designed for storage administrators, infrastructure architects, and OpenShift professionals who want to:

✅ Deploy ODF on OpenShift clusters using best practices.

✅ Understand the architecture and internal components of Ceph-based storage.

✅ Manage persistent volumes (PVs), storage classes, and dynamic provisioning.

✅ Monitor, scale, and secure Kubernetes storage environments.

✅ Troubleshoot common storage-related issues in production.

🛠️ Key Features of ODF for Enterprise Workloads

1. Unified Storage (Block, File, Object)

Eliminate silos with a single platform that supports diverse workloads.

2. High Availability & Resilience

ODF is designed for fault tolerance and self-healing, ensuring business continuity.

3. Integrated with OpenShift

Full integration with the OpenShift Console, Operators, and CLI for seamless Day 1 and Day 2 operations.

4. Dynamic Provisioning

Simplifies persistent storage allocation, reducing manual intervention.

5. Multi-Cloud & Hybrid Cloud Ready

Store and manage data across on-prem, public cloud, and edge environments.
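
To make the dynamic provisioning feature above more concrete, here is a minimal sketch that builds a PersistentVolumeClaim manifest. The helper `build_pvc`, the claim name, and the size are hypothetical, and the storage class name `ocs-storagecluster-ceph-rbd` is an assumption about a default ODF installation; list the classes available on your own cluster with `oc get storageclass`.

```python
import json


def build_pvc(name, size, storage_class, access_mode="ReadWriteOnce"):
    """Build a PersistentVolumeClaim manifest as a plain dictionary."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": [access_mode],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


# Assumed storage class name; verify it on your cluster before use.
pvc = build_pvc("postgres-data", "20Gi", "ocs-storagecluster-ceph-rbd")
print(json.dumps(pvc, indent=2))
```

Saving the printed JSON to a file and applying it with `oc apply -f` asks the cluster to create a matching volume on demand, which is what removes the manual allocation step.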

📘 What You Will Learn in DO370

Installing and configuring ODF in an OpenShift environment.

Creating and managing storage resources using the OpenShift Console and CLI.

Implementing security and encryption for data at rest.

Monitoring ODF health with Prometheus and Grafana.

Scaling the storage cluster to meet growing demands.
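
Scaling decisions like the one in the last topic above usually start from a capacity estimate. The following sketch assumes three-way replication and a 75% warning threshold; both figures are illustrative assumptions rather than values taken from the course, and the function names are hypothetical.

```python
def usable_capacity_tib(raw_tib, replicas=3):
    """Usable capacity when every object is stored `replicas` times."""
    return raw_tib / replicas


def needs_expansion(used_tib, raw_tib, replicas=3, warn_ratio=0.75):
    """Return True when usage reaches the warning ratio of usable capacity."""
    return used_tib / usable_capacity_tib(raw_tib, replicas) >= warn_ratio


# Made-up cluster: three nodes with 4 TiB of raw disk each.
raw = 3 * 4
print(usable_capacity_tib(raw))   # 4.0
print(needs_expansion(2.5, raw))  # False (62.5% of usable capacity)
print(needs_expansion(3.2, raw))  # True (80% of usable capacity)
```

The point of the arithmetic is that replicated storage fills up much sooner than the raw disk total suggests, so expansion should be planned against usable capacity.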

🧠 Real-World Use Cases

Databases: PostgreSQL, MySQL, MongoDB with persistent volumes.

CI/CD: Jenkins with persistent pipelines and storage for artifacts.

AI/ML: Store and manage large datasets for training models.

Kafka & Logging: High-throughput storage for real-time data ingestion.

👨‍🏫 Who Should Enroll?

This course is ideal for:

Storage Administrators

Kubernetes Engineers

DevOps & SRE teams

Enterprise Architects

OpenShift Administrators aiming to become RHCA in Infrastructure or OpenShift

🚀 Takeaway

If you’re serious about building resilient, performant, and scalable storage for your Kubernetes applications, DO370 is the must-have training. With ODF becoming a core component of modern OpenShift deployments, understanding it deeply positions you as a valuable asset in any hybrid cloud team.

🧭 Ready to transform your Kubernetes storage strategy? Enroll in DO370 and master Red Hat OpenShift Data Foundation today with HawkStack Technologies, your trusted Red Hat Certified Training Partner. For more details, visit www.hawkstack.com.

0 notes

Text

A Data Consulting Approach for Scalable and Secure Architecture

A well-defined data strategy and consulting approach is the foundation for building a scalable and secure data architecture. As businesses continue to generate large volumes of information, the need for a structured data system that can scale with growth while maintaining data integrity and security becomes increasingly important.

An effective data strategy helps enterprises align their information assets with organizational goals. By setting clear objectives for data governance, quality, storage, and use, organizations can make informed decisions that support long-term growth. This alignment plays an essential role in selecting the right technologies and platforms to support complex data workloads.

The first step in any successful data consulting approach is a comprehensive evaluation of the existing data infrastructure. Consultants assess current systems for performance, flexibility, and security. Based on this assessment, they identify gaps and design frameworks that support enterprise-scale operations. These frameworks focus on architecture models that accommodate large-scale data storage, rapid processing, and efficient access control.

Cloud data platforms have emerged as a preferred option for scalable architecture. Their elasticity lets businesses store and process data easily, without being constrained by on-premises limitations. With the right data consulting partner, companies can tailor cloud environments to their specific workloads, industry standards, and compliance requirements. This approach improves operational agility while simplifying data governance.

Security is another important pillar in the design of modern data architecture. Cyber risks and compliance demands are constantly evolving, making data security a priority in any data strategy. Consulting experts work closely with clients to implement multi-layered security frameworks. These include encryption at rest and in transit, access management policies, threat monitoring, and data loss prevention measures. The goal is to reduce vulnerabilities and ensure that only authorized users can access sensitive information.
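
The access management idea in the paragraph above can be illustrated with a deny-by-default check that compares a role's clearance with a dataset's sensitivity. Everything here is hypothetical: the role names, the three sensitivity levels, and the `can_read` helper are assumptions made for the sketch, not part of any particular product.

```python
from enum import IntEnum


class Sensitivity(IntEnum):
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2


# Hypothetical roles mapped to the highest sensitivity level they may read.
ROLE_CLEARANCE = {
    "analyst": Sensitivity.INTERNAL,
    "data_steward": Sensitivity.CONFIDENTIAL,
}


def can_read(role, sensitivity):
    """Deny by default: unknown roles get no access at all."""
    clearance = ROLE_CLEARANCE.get(role)
    return clearance is not None and clearance >= sensitivity


print(can_read("analyst", Sensitivity.CONFIDENTIAL))       # False
print(can_read("data_steward", Sensitivity.CONFIDENTIAL))  # True
print(can_read("contractor", Sensitivity.PUBLIC))          # False
```

Denying unknown roles outright is a deliberate choice in this sketch: a missing policy entry fails closed instead of silently granting access.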

Integrating machine learning into enterprise data systems adds another dimension to modern architecture. When aligned with a comprehensive data strategy, machine learning enables predictive analytics, anomaly detection, and automated insights. These capabilities support data-driven decision-making, helping stakeholders react quickly to changes in customer behavior, market trends, and operational risks.

Machine learning models, however, demand specific infrastructure for deployment and maintenance. Consulting teams help businesses set up environments that support training, testing, and scaling. These include optimized storage systems, high-performance computing resources, and real-time data pipelines that feed models with relevant inputs.

Another important aspect of a scalable architecture is interoperability. Enterprise systems often include many tools and technologies that must work together efficiently. Data consulting professionals ensure that platforms are integrated across departments and functions. This integration leads to enterprise data solutions that eliminate silos and facilitate smooth communication across the organization.

Building scalable and secure data systems is not a one-time job. It requires a roadmap that evolves with business requirements, technology trends, and compliance rules. A well-established data strategy and consulting approach ensures that companies are prepared not only for current demands but also for confident growth.

At Celebal Technologies, we partner with organizations to create data architectures that are both scalable and secure. Our data consulting team brings deep expertise in delivering enterprise data solutions, integrating machine learning, ensuring data protection, and implementing cloud-based frameworks that support data-driven decision-making. Whether you are building from the ground up or modernizing legacy systems, we help shape your data strategy to support lasting success.

Connect with Celebal Technologies today to create a data architecture that grows with your business.

0 notes