Righteous Hacks

A big part of being a technical animator is finding solutions to problems. Sometimes those solutions require out of the box thinking and as part of that, occasionally I’ll stumble across a solution that’s so out of the box, so utterly ridiculous, that regardless of how viable it actually is long-term, I feel compelled to try it, just to see if it works.

Recently, I came up with possibly the most ridiculous hack solution I’ve ever devised, and it’s crazy enough that I thought people might get a kick out of it.

Just to be clear, the following method is incredibly impractical for an actual game production and I’ll never actually use it anywhere. This was just something I did on my own time one evening, partly for fun, and partly to satisfy my curiosity.

The Problem

For a while now, I’ve been thinking about different ways of recording animation out of game engines and back into a DCC application like Maya or Motionbuilder.

There are lots of reasons why you might want to do something like this: At GDC 2017, Naughty Dog showed how sometimes their companion climbing is simply one of their developers playing as Drake, doing the necessary climbing moves and recording the motion. They then play back the whole recorded sequence on the companion character. This saves them a lot of code work, writing complex pathing for every different climbing scenario.

Another use might be to create rough block outs of in-game cinematic sequences, which you can then work over the top of in the DCC (almost like capturing rough mocap).

Or say you have a physics driven movement system in your game: You ideally want the root motion in your animations to match the in-game physics motion; so if you can record that physics motion from the engine, you could get a perfect match in the DCC.

The Ideal Solution

So ideally the best way to do this would be to dig into the engine/game code and write a process that gets the bone transforms for your character, and writes those transforms to some kind of animation file format that the DCC can read.

But hey, maybe you don’t have access to the engine code, or maybe you don’t know the language that the engine code is written in and need to find a programmer to dig into that. Maybe you don’t have a friendly neighborhood programmer who can help you.

So while I’m thinking about all this, I start to come up with some off the wall ways to solve the problem without any programmer support at all.

The Hackiest of Solutions

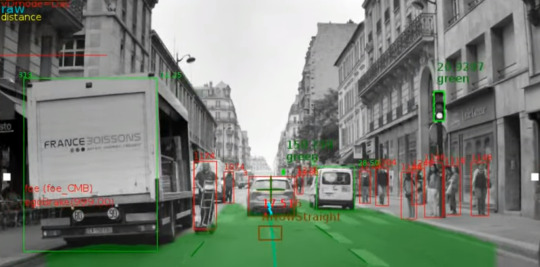

If I don’t have access to the code, what do I have access to? Maybe I can’t save bone transforms directly, but I can track bone transforms in the engine’s debug window. What if I could use this info somehow?

So I start by running around in the game while tracking the root bone translation in the debug window, which I then capture using standard video capture software like OBS.

I then use VLC to separate the video out into individual frames, which I process into an easier to read format using a Photoshop Action/Droplet.

I then run optical character recognition (OCR) on the frame files to convert the values in the images into text. There are tons of different programs that do this, but I went with www.onlineocr.net, which gives pretty good results and can batch process images uploaded in a zip archive.

So now I have a bunch of text files, with coordinates in them (plus a bunch of other garbage that the OCR spat out).

I then clean up the OCR output in a Motionbuilder Python script, giving me a list of translation coordinates, which I can then plot onto an object in the Motionbuilder scene.
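As a rough illustration of that clean-up step, here is a minimal sketch in plain Python. It is not the script described above: the debug text layout (`Root X: ... Y: ... Z: ...`) and the helper names `parse_translation` and `fill_gaps` are assumptions made up for the example. It pulls the first three numbers out of each frame's OCR text and, when a frame is unreadable, reuses the last good sample.

```python
import re

# Matches signed decimal numbers such as -12.5, 300 or 0.75.
NUMBER = re.compile(r"[-+]?\d+(?:\.\d+)?")


def parse_translation(ocr_text):
    """Return the first three numbers in the OCR text as (x, y, z), or None."""
    values = [float(match) for match in NUMBER.findall(ocr_text)]
    if len(values) < 3:
        # Not enough readable numbers on this frame.
        return None
    return tuple(values[:3])


def fill_gaps(samples):
    """Replace unreadable frames (None) with the last readable sample."""
    filled, last_good = [], None
    for sample in samples:
        if sample is not None:
            last_good = sample
        filled.append(last_good)
    return filled


# Hypothetical OCR output for three consecutive frames (assumed layout).
frames = [
    "Root X: 10.0 Y: 0.0 Z: -5.5",
    "R00t ~~ garbage",
    "Root X: 12.5 Y: 0.0 Z: -4.0 |",
]
translations = fill_gaps([parse_translation(text) for text in frames])
```

The resulting `translations` list holds one (x, y, z) tuple per frame, which is the shape of data you would then key onto an object inside the DCC using its own scripting API.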

Et voila! Motion from the engine, into the DCC!

So again, I’ll never actually use this in any game production. It’s an insane solution to what should be a simple problem, at least to anyone with the right access. I mostly did this because the idea got stuck in my brain somewhere and I had to see if it would work.

I guess if there’s any semblance of a lesson to be learnt from this, it’s that it’s kinda fun to experiment and try out-of-the-box solutions to problems. I may never use this method ever again, but I learnt a whole bunch about OCR that I didn’t know before, and maybe somewhere down the line, something I learnt here will help to inspire some other solution that’s actually viable.

- DL

The Future of Game Animation

Recently Ninja Theory Senior Animator Chris Goodall posed a question on Twitter: What do people think the future of game animation is going to be?

This is one of my favorite topics to think about, and so I was eager to share some thoughts.

Short Term: Motion Matching

GDC 2016 was Motion Matching’s big coming out party. The core ideas had been floating around the world of academic research for years before that, but this was the first time that actual game studios were starting to show this tech in practical scenarios. Two presentations were made: One by Kristjan Zadziuk, about prototypes in development at Ubisoft Toronto, and another by Simon Clavet about his work on For Honor. The buzz at the conference was palpable, and since then, there have been rumors circulating in the industry that a lot of other AAA teams are now starting to build their own Motion Matching technology.

For those who aren’t familiar, Motion Matching is a method of automatically picking which piece of animation should play next on a character. The system makes its own choices, as opposed to relying on Stateflow logic, which is the current, manually crafted method of deciding which animations should play.

The Motion Matching system makes these choices based on high-level goals that you feed into the system. So one of these high-level goals might be “2 seconds from now, I want the character to be in this position, and this facing direction”, which the system gets by predicting the future position of the character based on player inputs. Another common high level goal is, “match the position and velocity of the feet and the hips, as closely as possible to what was already happening in the previous frame”.
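To make the idea of "high-level goals" a bit more concrete, here is a minimal sketch of the kind of search a Motion Matching system might run each time it picks a frame. It is a toy under stated assumptions, not how any shipped system works: the feature layout (`pose` for the foot and hip features, `future` for the predicted position), the weights, and the names `match_cost` and `best_frame` are all hypothetical.

```python
import math


def distance(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def match_cost(candidate, current_pose, goal, pose_weight=1.0, goal_weight=1.0):
    """Lower is better: stay close to the current pose and reach the goal."""
    pose_cost = distance(candidate["pose"], current_pose)  # continuity goal
    goal_cost = distance(candidate["future"], goal)        # trajectory goal
    return pose_weight * pose_cost + goal_weight * goal_cost


def best_frame(database, current_pose, goal):
    """Return the index of the database frame with the lowest combined cost."""
    return min(
        range(len(database)),
        key=lambda i: match_cost(database[i], current_pose, goal),
    )


# Tiny made-up database: one representative frame per clip.
database = [
    {"clip": "idle", "pose": (0.0, 0.0), "future": (0.0, 0.0)},
    {"clip": "walk", "pose": (1.0, 0.2), "future": (2.0, 0.0)},
    {"clip": "run", "pose": (3.0, 0.5), "future": (6.0, 0.0)},
]

chosen = best_frame(database, current_pose=(1.1, 0.2), goal=(2.5, 0.0))
```

With these made-up numbers the walk frame wins, because it is both closest to the current pose and closest to where the character is predicted to be. A real system would search far more frames with many more features, typically re-running the search several times a second.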

The end result is that Motion Matching has the potential to dramatically reduce the amount of work required when creating animation systems. It also tends to produce very high quality results: Since transitions from one move to the next take hip and foot position and velocity into account, you tend to get really smooth blending, which is sometimes not the case with a traditional State Machine approach.

I expect that in the next few years, we’ll start to see Motion Matching used more and more in games. Of course, it doesn’t have to be an all or nothing switch from traditional systems; you can embed a Motion Matching system into a traditional State Machine, so for a while, you’ll see a kind of hybrid approach, where some moves will be using Motion Matching (e.g. locomotion), and others might use a more traditional implementation (e.g. scripted events). But I think gradually Motion Matching will replace the majority of moves that we see in games.

The initial response from some animators towards Motion Matching was concern that the ease with which you can create systems might reduce the need for animators. From what I’ve experienced so far, this is absolutely not the case: Motion Matching systems typically still benefit from the usual clipping down of data (or otherwise tagging data), and of course, that data is still better if it is cleaned up animation, rather than raw mocap.

The initial vision for Motion Matching was that you would be able to just throw a bunch of unstructured mocap into a Motion Matching database and the system would do everything for you, but it turns out this kind of approach doesn’t produce good results. Technically it does still work, but the system often makes unwanted choices: for example, rather than playing a run cycle, it might play the last two footsteps of an Idle to Start over and over and consider that a run. As a result, a lot of teams are finding that curating your animation data gives better results.
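One simple way to picture curation by tagging is to restrict the search to frames that carry an appropriate tag before any cost is compared. The sketch below is only an assumption about how that could look; the tag names, the `speed` feature, and the functions `allowed_frames` and `best_tagged_frame` are invented for illustration.

```python
def allowed_frames(database, wanted_tag):
    """Indices of frames whose tag set contains the wanted tag."""
    return [i for i, frame in enumerate(database) if wanted_tag in frame["tags"]]


def best_tagged_frame(database, wanted_tag, cost):
    """Lowest-cost frame among those carrying the tag, or None if none match."""
    candidates = allowed_frames(database, wanted_tag)
    if not candidates:
        return None
    return min(candidates, key=lambda i: cost(database[i]))


# Made-up frames: the start's final footsteps happen to match the target
# speed slightly better than the real run cycle does.
database = [
    {"clip": "idle_to_start", "speed": 4.0, "tags": {"start"}},
    {"clip": "run_cycle", "speed": 4.2, "tags": {"run"}},
]


def speed_cost(frame, target_speed=4.0):
    """Hypothetical cost: how far the frame's speed is from the target."""
    return abs(frame["speed"] - target_speed)


# An untagged search picks the start footsteps; the tagged one cannot.
untagged_choice = min(range(len(database)), key=lambda i: speed_cost(database[i]))
tagged_choice = best_tagged_frame(database, "run", speed_cost)
```

Here the unrestricted search lands on the Idle to Start footsteps, while the tagged search is forced onto the run cycle, which is the sort of unwanted choice that curation is meant to prevent.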

So in short, there will still be plenty for animators to do, in a Motion Matching world.

Short Term: Script Based Automation

At GDC 2016, I presented a new animation tool that Zach Hall and I had developed when I was working at Ubisoft Montreal. The tool automatically processed raw motion capture data into shippable quality animation. Before building this tool, we did an analysis of how our mocap animators were working, which showed that an estimated 50-80% of the tasks that they were doing, were things that could be automated. So, we set about automating those things.

In a way, what we did wasn’t particularly revolutionary: Every studio writes scripts to automate repetitive tasks; the only difference in our case was the degree to which we were willing to do it. I’d also say that a key point was that we were really looking closely at what the animators were actually doing, whereas sometimes technical animators can think they know the problems animators are facing, but they’re actually building solutions for things that aren’t necessarily the most important.

I got a very positive response to the GDC talk, though I’m yet to hear of other studios trying a similar approach.

I would hope that in future, more teams start to look seriously at automation and pipeline efficiency, because it really is a huge opportunity. A single technical animator can potentially save the work of many, many animators, if they’re aimed towards the right things. It’s just unfortunate that, more often than not, people tend to rely on what they’re familiar with, and so a manager might prefer to hire 10 more animators to brute force the work, rather than assign a technical animator to focus purely on improving efficiency.

I’m hopeful though that things will happen in this area.

Short to Mid-Term: Neural Networks - Motion Generation at Runtime

If you haven’t seen Daniel Holden et al’s paper on Phase-Function Neural Networks, drop what you’re doing and watch this now. This is the future of game animation, right here.

youtube

In my view, Neural Networks and Deep Learning are going to change everything (not just about game animation, not just about game development: everything). While we may not see Neural Network based animation systems shipping in games for a while, some developers are already doing experiments using something similar to Daniel’s approach.

Studios will begin to use animation data to train neural networks, and those networks will then be able to generate animation at runtime. Just like Motion Matching, the data that the network generates is based on high-level goals, which makes this a natural successor to some of the work that’s being done with Motion Matching.

There are a number of benefits to Neural Network (NN) based animation systems over a Motion Matching approach…

They’re cheaper memory-wise: You only store the trained network weights, and not actual animation data.

Motion Matching is picking from a pre-existing set of animation data. NNs on the other hand can generate poses that weren’t in the original data, but that still make sense in the context of that data. This allows for far more adaptive characters. So for example, if you want your character to run past a table and pick up an object from that table, the position of the object doesn’t have to perfectly match what was in the training data; there just need to be enough examples of picking up objects from tables while moving, correlated with appropriate high-level goals, for the system to understand how that type of action works. Then when you’re generating animation at runtime, you can set goals that never existed exactly that way in the training data (like different object positions on the table, different speeds, etc), and it should be able to deal with that.

NNs need to be fed lots of training data, but one approach to creating this data is to do offline procedural adjustments to your mocap (the kind of adjustments that might normally be inappropriate to use at runtime), and then use the result as training data for the NN. This essentially gives you something similar to a runtime version of that offline process. So for example, Adjustment Blending is a method of adjusting animation, that produces high quality results, but is most suitable for offline processing. This is because it relies on knowledge of what the character is going to do in the future. However, you could use Adjustment Blending to create lots of examples of adjusted data, and then use that adjusted data to train the NN. This would essentially give you similar results to Adjustment Blending, but at runtime. Another example of this type of approach is the uneven terrain example used in Daniel’s PFNN paper.

There are some challenges with NNs too, that the industry will need to work through…

NNs are currently slow to train. You can’t see the results of your changes until hours later. This will hopefully get faster as time goes on, but it’s currently an issue.

NNs are even more of a black box than Motion Matching. If the NN does something you don’t want it to do, it can be incredibly difficult to figure out why.

NNs rely on being fed a lot of example data. The more data, and the higher quality the data, the better. With this in mind, it’s likely only going to be appropriate for mocap, at least at first. You’ll also have “style transfer” which will help us to produce more stylized animation, but it’ll be a long time before we’re able to generate high-quality, Pixar style animation because there isn’t enough of that animation in the world, to train the system.

Short to Mid-Term: Animation Capture - Quality and Volume

As mentioned, NNs need to be fed vast amounts of data, and you generally need this data to be consistent and high-quality. Part of the reason that Deep Learning has made such rapid advancements in the last few years, is because of the vast amounts of data available on the Internet.

With this in mind, I see there being huge benefits to focussing on animation capture quality, and methods for capturing large amounts of animation data, very quickly. The amounts of data that we’re talking about here are so large, that it would be too much for an animator to clean up manually, so ideally we’ll need to use the raw data that comes out of the capture system, or treat the data in some sort of automated way.

Improvements in synchronized body, finger, and facial motion capture will certainly help. Longer term I would expect to see far more full body 4D capture, and a focus on surfaces and muscles rather than bones and traditional skinning methods.

One area that I expect to get very good in the next few years is the ability for NNs to generate motion data from a single video source, rather than dedicated capture systems. Researcher Michael Black and his team are already working on this kind of thing, and I’m guessing that very soon, the results will start to be as good or better than optical systems.

If this happens, it’ll be an absolute game changer: Teams will be able to source their data from any video footage, so imagine the entire wealth of movies, TV, CCTV footage, people’s home videos, etc. all being sources for mocap data. Moreover, depending on the fidelity of video footage, and the quality of the NN system, you’ll likely be able to derive more than just skeletal data from this footage. You’ll eventually be able to estimate fingers, facial, muscle, subcutaneous layers, skin, etc: All things that are useful, and usable.

Long Term - The Incredible and Scary Future - Semantics

Some NNs are already able to derive semantic information from photos and video footage, and this is where things really start to get crazy. These systems are able to make accurate guesses about who and what are in images, what the relationships are, and so on. These types of systems are continuing to improve at a super-fast rate.

So say for example, you build an NN that can look at video footage and not only generate the motion of the person in the footage, but also accurately guess whether the person in the footage is male or female, guess how old they are, guess their ethnicity, maybe even guess their personality traits, their level of education, how wealthy they are, what type of job they do, what their political stance is, etc. Imagine that you then associate all that information with the generated motion, and then use that as part of the motion generation training data for the NN that generates animation on-the-fly.

So now you can set character traits as high-level goals for the system. So maybe your game director can simply say: Create me a character that moves like a 50 year old, overweight man, who is shy, and is recovering from an injured ankle. The system sets those parameters as part of its goals, and so when it generates the motion, it generates it with those parameters in mind.

I’ve just been talking about animation so far, but the same advancement is happening in other game development disciplines, and so by this point, there will also be systems to generate faces, bodies, clothing, etc. and so these same parameters can be applied in those systems to generate character meshes that are also context appropriate.

So you’re now able to build any type of character just by asking.

Let’s get more crazy...

What if you derive semantic understanding from scenes and places? For example: What if you use CCTV footage, along with the NN that analyzes people, to get a semantic understanding of city demographics? What type of people travel through what type of areas? What type of people live in what types of apartment buildings? What type of people drive vs use public transport? Which people go to Starbucks vs the artisanal local coffee chain? What type of people give money to the homeless, etc.?

Now you feed this information into an NN that generates city neighborhoods, or whole cities, or whole continents full of cities. First, it generates a set of demographics, then it uses the character and animation NNs, to populate each city in appropriate ways.

So maybe now all the game director has to say is “Make me a city like London circa 1975”, and as long as there are enough data sources for what a city like that should be like, the system will generate an appropriate city, with appropriate people, who have appropriate behaviour.

Want to get even crazier?

Maybe at this point the game director who’s asking for all of this, isn’t even a game director anymore; maybe it’s just the player, asking directly for what they want.

“I want to play a game in the style of James Bond, but set it in the 1800s.”

“I want to play a brand new Star Wars story, from the perspective of Chewbacca.”

Eventually, we tie this in to devices that track emotional responses as the player is playing e.g. cameras that look at facial responses, or wearables like smart watches that track heart rate. Maybe you don’t even ask for a subject matter, maybe you say how you want to feel.

“I want to play an experience that makes me feel happy.”

“I want to have an experience that gives me a sense of family and belonging.”

“I want to experience a story that gives me the same sense of childish wonder as when I first read the Harry Potter books.”

Maybe in the next step you don’t even ask the system for anything. Maybe the system scans you as soon as you enter your door, understands what mood you’re in, and generates a complementary experience.

At this point you start to delve into philosophical questions about what it even is to be human and whether the human experience means anything, if you’re just having your every whim automatically appeased, so maybe I should leave things there.

So yeah, that’s my road-map for the crazy future of game animation and game development as a whole.

Oh also I guess at some point we’ll get good full body IK.

#animation#technical animation#techology#deep learning#game development#machine learning#neural networks#motion capture

The challenging decisions of game development, or how good people can end up making bad games

The following scenario is a work of fiction and not based on any specific game I’ve worked on, or any specific experience I’ve had.

You’re sat in a meeting room with a bunch of other game developers; key representatives from every department that might be affected by animation. There are people from the design team, the engine team, gameplay programmers, AI programmers, the tools team, technical animators, animation programming, the animation director, and a producer. The meeting is about the future of your animation technology.

It’s relatively early in your project; the third game in a successful series, and the animation department wants to do a major upgrade to the core animation technology. Their argument is simple: The animation team has been using the same tools and tech for the last two games, and they’re concerned that if the animation technology isn’t improved, the animation will start to look bad compared to your competitors. There have been some minor improvements since the first game in the series, but no real major steps forward. Since the original tech was created, the rest of the industry has made some pretty major breakthroughs in animation technology; breakthroughs that this team would like to try and implement.

The big problem: The new technology is a paradigm shift. There’s no easy way to convert all the old animations and game code over to the new system, so doing this big upgrade to the animation tech, means pushing the reset button on all the gameplay and AI systems that were built for the last two games. Everything will have to be built up again from scratch.

It’s your job to decide whether or not they should do it.

The producer is the first to chime in...

“How long do you think it’s going to take to get the gameplay and AI back into a state where we can start playtesting and building levels?”

“I’m pretty confident that in 6 months we’ll be good to go”, says the animation programmer.

“Well… 6 months to do the tech and tools... it’ll be maybe another 6 months on top of that to rebuild each of the game systems to get things back to where they were”, offers up one of the technical animators. “I still think it’s worth it though. Once we have the new tech, it’s going to make producing new features way faster than before.”

“You have to bear in mind”, says the Animation Director, “What we did on the last game is pretty much hitting the ceiling of our current technology. It was a real strain on the team. I really don’t want to go through that again on this one.”

“I’ll be honest, I have some concerns”.

Everyone looks towards the lead engine programmer.

“I thought the whole point of this next game was to try and push the cooperative experience. That’s what the directors said was their number one priority”, he nods towards the game director, “And I thought the publisher had already signed off on that. Weren’t they saying that’s what they were most excited about?”

“We can’t do both?”, says the game director.

“We’re probably going to have to rework a whole lot of the systems anyway if we’re going to make them replicate properly over the network,” says the lead gameplay programmer. “The first two games weren’t really built with that in mind. It could be a good time to do this as well since we’ll be rewriting a bunch of the systems anyway. That said, it does add to our workload.”

“And for you guys?”, the producer asks the lead AI programmer.

“It’s pretty much the same situation as gameplay”, she replies, “It’s a lot to take on. I have to say, I think 12 months is optimistic. Things always come up that we don’t expect. I’d say closer to 18 months. I mean... remind me, when are we supposed to be shipping again?”

“We’re aiming for 2 years from now”, says the producer.

“Well if it ends up being 18 months, there’s no way my team can work with that”, adds the lead level designer. “We can rough some things out, but gameplay needs to be solidified a lot earlier than that.”

“Well we could build the new system in parallel with the old system, and let people switch between the two”, says the animation programmer. “That way you can start building levels with the old systems, and we’ll switch it over further down the line when the new systems are in a better shape. It’s a bit more challenging to do it that way, but it’s doable. Honestly though, when I say 6 months for my stuff, I mean 6 months. I can get most of it up and running in 3, but I’m saying 6 to give us a buffer.”

“And how confident are you about the extra 6 months for building out the game systems”, the producer asks the technical animator.

“Reasonably… I mean it’s tough to say... it’s new technology. I’ve got nothing to refer to cause we’ve never done this approach before”, they reply, “6 months is my ballpark estimate, it could be 4, it could be 12. We’ll have a much better idea once the core tech is done and we start working on the first systems. Bear in mind, it’s not like we’d have nothing until that 12 months is up. Systems will be gradually coming online as we go. We’ll get core movement done in the first few weeks.”

“I’d feel much more confident about it if we had another technical animator on the team”, says the animation director.

“I’ll ask if that’s possible but we’re close to our headcount limit, and tech animators aren’t the easiest to hire, so I wouldn’t count on it”, says the producer. “Remind me again, what we’ll be gaining for all this work?”

“It’ll be much faster for us to put animations into the game, and then easier for us to tweak and bug fix them”, says the technical animator. “In general, faster iteration time should also mean the quality of the animation will go up. Basically, it’ll be easier for everyone to do their best work.”

“I think we all want that, but it’s a lot of risk to take on with only a 2 year timeline”, says the lead engine programmer, “there are other teams besides animation who are also proposing some pretty ambitious tech goals as well. We’d maybe have to make some concessions there if we want to go with this”.

“Does anyone really think that it’s going to be 2 years though?” says the animation programmer. “Looking at the scope of this game, it’s probably going to end up closer to 3, with or without the animation system”.

“I can only tell you what I’m being told now, but the publisher seems pretty adamant about it being a 2 year project”, says the producer.

“So what are we going to do about this? Are we going to push ahead with this or not? What’s the call?”

This is what game development is like.

Should the team go with the new animation system? Everyone makes good points. It’s a tough call. You have to think about the people in the room: How confident are you in the projections of each person? Are some people known for being overly ambitious? Are others known for being too conservative? How does the value of the new animation system compare with the cost of building it? How many extra people will you be able to get to work on it? Will other departments suffer if you put development effort here? Will the game really come out in two years, or will it take longer?

In one potential future, you decide to go ahead with the new animation system. Things are rocky at the beginning, but with some hard work and late nights, the animation programmer and technical animators manage to hit their estimates without too much disruption to other teams. The game scores highly, and is praised in particular for its strong animation.

In another potential future, you go ahead with the new animation system and it’s a train wreck. The animation programmer was low-balling their estimates because they were excited about working on the new system, and expected the release date to be knocked back a year (it wasn’t). The technical animators were genuine when they gave their estimates but didn’t account for the complexity of making the same systems work in an online cooperative game; something they’d never done before. The difficulties with the animation system caused problems for other teams, especially for the designers, who had a tough time making fun systems and levels, as bugs in the animation system made it difficult for them to play the game throughout development.

Another potential future has the team saying no to the new animation system. It ends up that even without the new animation system, the scope really was too big in other areas, and the game ends up being released after 3 years instead of 2. By the time the game is released, the animation does look horribly outdated, despite the best efforts of the animation team. The issue is specifically called out in reviews.

Then there’s the future where the team says no to the new system, making it much easier for the team to hit the 2 year deadline. It’s a struggle, but the animation team is still able to produce some decent results, and it turns out gamers were more interested in the new cooperative features anyway, leading to great reviews and great sales.

On any game there are a thousand calls like this. Some are big, some are small, and many can lead to the success or failure of your game.

The process of making a game has so many moving parts it’s incredibly difficult to account for every eventuality. It’s about the technology you’re working with, it’s about your ideas and your ability to execute on them, it’s about what the rest of the market is doing, and most of all it’s about the people on your team: Who they are, how they work, and what else might be going on in their lives.

The point here, and stop me if you’ve heard this one before, is that making games is really fucking hard. You're faced with so many of these kinds of decisions, the answers to which are highly subjective. It’s likely not every person in the meeting room comes to a consensus, and when a game comes out with some aspect that players don’t enjoy, more often than not there were a whole lot of people on the dev team that argued for the exact same thing that the players are arguing for.

It’s been said many a time before, but it’s true nonetheless: No-one sets out to make a bad game. In the cases where someone on your team was faced with one of these difficult subjective decisions, and made a bad call, they agonize over it. If you have empathy, you look at that decision and say, “That’s not necessarily a bad developer. It was a tough call. It could have gone either way, and it could just as easily have been me”.

Of course, this doesn’t mean that gamers shouldn’t still be free to critique the games they spent their hard-earned money on. At the end of the day, it’s our job to entertain. Just please, remember that games are made by real people who are running a gauntlet of very challenging problems.

The answer to “why did this game suck?” isn’t always as simple as “because the developer sucks”, or the even more cringe-worthy “they were just lazy”: It’s because making games is really fucking hard.
