#deep learning

Text

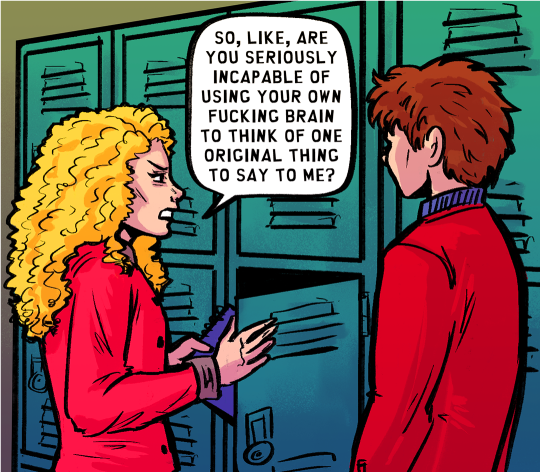

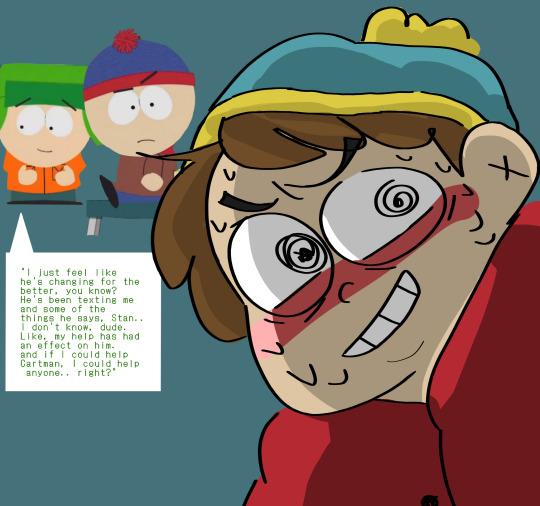

thought of this while watching the new episode

3K notes

Text

Good morning 🖤🪢⛓️📖💋

#reading#good morning#good mood#wednesday#literature#shibari#upside down#defying gravity#black and white#girls and books#deep learning#adult story#books and reading

771 notes

Text

i know im like a year late but im finally catching up on the most recent season [x]

#bebe stevens#clyde donovan#sp clybe#clybe#<- well. in a way#south park#deep learning#south park deep learning#south park fanart#south park art#mine

245 notes

Text

#pay attention#educate yourselves#educate yourself#knowledge is power#reeducate yourself#reeducate yourselves#think for yourselves#think about it#think for yourself#do your homework#ask yourself questions#question everything#deep learning#do your research#do some research#do your own research

865 notes

Text

"Kahl's not a girl, Stan- It's DIFFERENT!"

#south park#deep learning#eric cartman#kyman#kyle broflovski#stan marsh#cartyle#sp spoilers#except not really bc this didnt happen 💀#I KNOW ITS BADLY EDITED IDC#season 26#chatgbt#BYE

452 notes

Text

"Ethical AI" activists are making artwork AI-proof

Hello dreamers!

Art thieves have been infamously claiming that AI illustration "thinks just like a human" and that an AI copying an artist's image is as noble and righteous as a human artist taking inspiration.

It turns out this is - surprise! - factually and provably not true. In fact, some people who have experience working with AI models are developing a technology that can make AI art theft no longer possible by exploiting a fatal, and unfixable, flaw in their algorithms.

They have published an early version of this technology called Glaze.

https://glaze.cs.uchicago.edu

Glaze works by altering an image so that it looks only a little different to the human eye but very different to an AI. This produces what is called an adversarial example. Adversarial examples are a known vulnerability of all current AI models, one that has been written about extensively since 2014, and it isn't possible to "fix" it without inventing a whole new AI technology, because it's a consequence of the basic way that modern AIs work.
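
For the curious, here is a rough Python sketch of what an adversarial example is. To be clear, this is not Glaze's actual algorithm: the "model" is a made-up linear scorer, the "image" is just random numbers, and every name in it (`score`, `perturb`, `eps`) is an assumption for illustration. It only shows the general idea that a nudge too small to notice in any single pixel can add up to a flipped answer.

```python
import random

def score(weights, pixels):
    # Toy stand-in for a model: positive score means "style A", negative means "style B".
    return sum(w * p for w, p in zip(weights, pixels))

def sign(v):
    return (v > 0) - (v < 0)

def perturb(weights, pixels, eps):
    # For a linear scorer, the gradient of the score with respect to the
    # pixels is just the weights, so stepping each pixel by eps against
    # sign(weight) pushes the score toward the opposite answer.
    # (Clipping to a valid pixel range is ignored to keep the sketch short.)
    direction = sign(score(weights, pixels))
    return [p - eps * direction * sign(w) for w, p in zip(weights, pixels)]

rng = random.Random(0)
n = 10_000  # hypothetical number of pixels
weights = [rng.uniform(-1, 1) for _ in range(n)]
pixels = [rng.uniform(0, 1) for _ in range(n)]

original = score(weights, pixels)
# Smallest per-pixel step that flips the sign of the score, plus a 50% margin.
eps = 1.5 * abs(original) / sum(abs(w) for w in weights)
perturbed = perturb(weights, pixels, eps)

print("per-pixel change:", eps)
print("score before:", original)
print("score after: ", score(weights, perturbed))
```

Each pixel moves by the same tiny amount, but because all of the moves push in the same direction, the total is enough to change the toy model's answer. Real image models are far from linear, so treat this as intuition only.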

This "glaze" will persist through screenshotting, cropping, rotating, and any other mundane transformation to an image that keeps it the same image from the human perspective.

The web site gives a hypothetical example of the consequences - poisoned with enough adversarial examples, AIs asked to copy an artist's style will end up combining several different art styles together. Perhaps they might even stop being able to tell hands from mouths or otherwise devolve into eldritch slops of colors and shapes.

Techbros are attempting to discourage people from using this by lying and claiming that it can be bypassed, or is only a temporary solution, or most desperately that they already have all the data they need so it wouldn't matter. However, if this glaze technology works, using it will retroactively damage their existing data unless they completely cease automatically scraping images.

Give it a try and see if it works. Can't hurt, right?

#art theft#ai art#ai illustration#glaze#art ethics#ethical art#ethics#technology#ai#artificial intelligence#machine learning#deep learning#midjourney#stable diffusion

596 notes
