JPEG XS – modern visually-lossless low-latency lightweight codec

Authors: Fyodor Serzhenko and Anton Boyarchenkov

JPEG XS is a recent image and video coding system developed by the Joint Photographic Experts Group and published as international standard ISO/IEC 21122 in 2019 [1] (second edition in 2022 [2]). Unlike many earlier standards developed by the JPEG committee, JPEG XS addresses video compression. What sets it apart from other video compression techniques is its priorities. Earlier approaches made coding efficiency the highest priority, while latency and complexity were, at best, secondary goals. That is why uncompressed video streams are still used for transmission and storage. JPEG XS has now emerged as a viable alternative to the uncompressed form.

Background of JPEG XS

There is a constant tension between the benefits of uncompressed video and its very high bandwidth requirements. Network bandwidth continues to increase, but so do the resolution and complexity of video. With the emergence of formats such as Ultra-High Definition (4K, 8K), High Dynamic Range, High Frame Rate, and panoramic (360) video, both storage and bandwidth requirements are rapidly increasing [3] [4].

Instead of a costly upgrade or replacement of deployed infrastructure, we can consider using transparent compression to reduce the stream sizes of these demanding video formats. Such compression must be visually lossless, low latency and low complexity. However, the existing codecs (briefly reviewed below) could not satisfy all these requirements simultaneously, because they were mostly designed with coding efficiency as the main goal.

But improving coding efficiency is not the only motivation for video compression. A lightweight compression scheme can save energy when the energy required for transmission is greater than the energy cost of compression. In addition, the delay can even be reduced if the compression overhead is less than the difference between the transmission times of the uncompressed and compressed frames.
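The delay argument can be checked with a few lines of arithmetic. The sketch below is only an illustration: the function names, the 10 Gbps link and the codec overhead values are our assumptions, not measurements.

```python
def transfer_time_ms(frame_bits: float, link_gbps: float) -> float:
    """Time needed to push one frame through a link, in milliseconds."""
    return frame_bits / (link_gbps * 1e9) * 1e3


def compression_reduces_delay(frame_bits: float, link_gbps: float,
                              ratio: float, codec_overhead_ms: float) -> bool:
    """True if encode+decode overhead is smaller than the transmission time saved."""
    uncompressed = transfer_time_ms(frame_bits, link_gbps)
    compressed = transfer_time_ms(frame_bits / ratio, link_gbps)
    return codec_overhead_ms < uncompressed - compressed


# Hypothetical example: one 3840x2160 frame, 4:2:2, 10 bits (20 bits per pixel).
frame_bits = 3840 * 2160 * 20
saved_ms = (transfer_time_ms(frame_bits, 10.0)
            - transfer_time_ms(frame_bits / 4, 10.0))
```

With these assumed numbers a 4:1 codec saves about 12.4 ms of transmission time per frame, so any codec overhead below that value shortens the total delay.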

For non-interactive video systems, such as video playback, latency is not important as long as the decoder provides the required frame rate. Interactive video applications, by contrast, require low latency to be useful. When network latency is low enough, the video processing pipeline can become the bottleneck. Latency is even more important in fast-moving and safety-critical applications. Besides, sufficiently low delay opens up space for new applications, such as cloud gaming, extended reality (XR), or the internet of skills [5].

Use cases

The most common way to transport uncompressed video is over standard video links such as SDI and HDMI, or over Ethernet. In particular, the massively deployed 3G-SDI was introduced with the SMPTE ST 424 standard in 2006 and has a throughput of 2.65 Gbps, which is enough for a video stream in 1080p60 format. Video compression with a ratio of 4:1 would allow sending 4K/60p/422/10-bit video (requiring 10.8 Gbps throughput) through 3G-SDI. 10G Ethernet (SMPTE ST 2022-6) has a throughput of 7.96 Gbps, while video compression with a ratio of 5:1 would allow sending two video streams in 4K/60p/444/12-bit format (requiring 37.9 Gbps) through it [3] [4] [6].
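The ratios quoted above follow directly from dividing the stream rate by the link throughput. The sketch below reproduces that arithmetic; the helper names are ours, and `active_video_gbps` counts active pixels only, so it gives somewhat lower figures than the quoted stream rates (which presumably include some overhead, an assumption on our side).

```python
def required_ratio(stream_gbps: float, link_gbps: float) -> float:
    """Minimum compression ratio needed to fit a stream into a link."""
    return stream_gbps / link_gbps


def active_video_gbps(width: int, height: int, fps: int,
                      samples_per_pixel: float, bit_depth: int) -> float:
    """Bit rate of the active picture area only (no blanking, no audio, no headers)."""
    return width * height * fps * samples_per_pixel * bit_depth / 1e9


# Numbers quoted in the text:
ratio_sdi = required_ratio(10.8, 2.65)   # 4K/60p/422/10 bits over 3G-SDI
ratio_eth = required_ratio(37.9, 7.96)   # 2 x 4K/60p/444/12 bits over 10G Ethernet

# 4:2:2 carries 2 samples per pixel on average, 4:4:4 carries 3.
uhd_422_10 = active_video_gbps(3840, 2160, 60, 2, 10)
```

Here `ratio_sdi` is about 4.08 and `ratio_eth` about 4.76, which is where the rounded 4:1 and 5:1 figures come from.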

Embedded devices such as cameras use internal storage, which has limited access rates (4 Gbps for SSD drives, 400–720 Mbps for SD cards). Lightweight compression would allow real-time storage of video streams with higher throughput. Omnidirectional video capture systems, with multiple cameras covering different fields of view, transfer video streams to a front-end processing system. Applying lightweight compression to these streams reduces both the required storage size and the throughput demands [3] [4] [6].

Head mounted displays (HMDs) are used for viewing omnidirectional VR and AR content. Given the computational (and power) constraints of such a display, it cannot be expected to receive an omnidirectional stream and process it locally. Instead, the external source should send to the HMD only the portion of the media stream that is within the viewer’s field of view. An immersive experience also requires very high-resolution video, and the quality of experience is crucially tied to the latency [3] [4] [6].

Other target use cases include broadcasting and live production, frame buffer compression (inside video processing devices), industrial vision, ultra high frame rate cameras, medical imaging, automotive infotainment, video surveillance and security, low-cost visual sensors in Internet of Things, etc. [6]

Emergence of the new standard

Several initiatives have been started to address this challenge. Among them is JPEG XS, launched by the JPEG committee in July 2015, with a Call for Proposals issued in March–June 2016 [6]. The evaluation process was structured into three activities: objective evaluations, subjective evaluations, and compliance analysis in terms of latency and complexity requirements. Based on the use cases described above, the following requirements were identified.

Visually lossless quality with imperceptible flickering between original and compressed image.

Multi-generation robustness (no significant quality degradation for up to 10 encoding-decoding cycles).

Multi-platform interoperability. In order to optimally support different platforms (CPU, GPU, FPGA, ASIC) the codec needs to allow for different kinds of parallelism.

Low complexity both in hardware and software.

Low latency. In live production and AR/VR use cases the cumulative delay required by all processing steps should be below the human perception threshold.

None of the existing standards complies with all of the above requirements. JPEG and JPEG XT make precise rate control difficult and have a latency of one frame. With regard to latency, the versatility of JPEG 2000 allows configurations with end-to-end latency of around 256 lines, or even as few as 41 lines in hardware implementations [4], but it still requires many hardware resources. VC-2 has low complexity but delivers only limited image quality. ProRes makes a low-latency implementation impossible and fast CPU implementations challenging.

Of the 6 proposed technologies, one was disqualified due to latency and complexity compliance issues, and two proposals were selected for the next step of the standardization process. It was decided that the JPEG XS coding system would be based on a merge of those two proposals. This new codec provides precise rate control with a latency below 32 lines and fits in a low-cost FPGA. At the same time, its fine-grained parallelism allows optimal implementation on different platforms, while the compression quality is superior to VC-2.

JPEG XS algorithm overview

The JPEG XS coding system is a classical wavelet-based still image codec (see a more detailed description in [4] or in the standard [1] [2]). It uses a reversible color transformation and a reversible discrete wavelet transformation (Le Gall 5/3), both known from JPEG 2000. Here, however, the DWT is asymmetric: the specification allows up to two vertical decomposition levels and up to eight horizontal levels.
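To illustrate what "reversible" means for the Le Gall 5/3 wavelet, here is a minimal one-level, one-dimensional integer lifting sketch in the style known from JPEG 2000. It is not taken from the JPEG XS reference software: the function names, the restriction to even-length inputs and the mirror extension at the borders are our assumptions.

```python
def legall53_forward(x):
    """One level of integer 5/3 lifting on an even-length list of ints."""
    n = len(x)
    assert n >= 2 and n % 2 == 0
    half = n // 2
    even, odd = x[0::2], x[1::2]
    # Predict step: high-pass = odd sample minus floor-average of its even neighbours.
    high = []
    for i in range(half):
        right = even[i + 1] if i + 1 < half else even[i]  # mirror at the right border
        high.append(odd[i] - (even[i] + right) // 2)
    # Update step: low-pass = even sample plus a rounded quarter of neighbouring details.
    low = []
    for i in range(half):
        left = high[i - 1] if i > 0 else high[0]  # mirror at the left border
        low.append(even[i] + (left + high[i] + 2) // 4)
    return low, high


def legall53_inverse(low, high):
    """Exact inverse of legall53_forward: undo the update, then the predict step."""
    half = len(low)
    even = []
    for i in range(half):
        left = high[i - 1] if i > 0 else high[0]
        even.append(low[i] - (left + high[i] + 2) // 4)
    x = []
    for i in range(half):
        right = even[i + 1] if i + 1 < half else even[i]
        x.append(even[i])
        x.append(high[i] + (even[i] + right) // 2)
    return x


samples = [10, 12, 14, 200, 7, 0, 255, 3]
low, high = legall53_forward(samples)
restored = legall53_inverse(low, high)
```

Because every step uses integer arithmetic and is undone in reverse order, `restored` equals `samples` bit for bit, which is what makes lossless operation possible.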

This restriction on the number of vertical levels ensures that the end-to-end latency does not exceed the maximum allowed value of 32 screen lines. In fact, the algorithmic encoder-decoder latency due to the DWT alone is 3 or 9 lines for one or two vertical decomposition levels, so there is a latency reserve for whatever form of rate allocation is used, which the standard does not specify.
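To get a feeling for what a latency expressed in lines means in time units, one can convert it for a given video format. The helper below is a rough sketch of ours: it counts active lines only and ignores blanking, and the 2160-line, 60 fps format is just an example.

```python
def lines_to_microseconds(lines: float, lines_per_frame: int, fps: float) -> float:
    """Duration of a number of video lines, assuming lines are evenly spread over a frame."""
    return lines / (lines_per_frame * fps) * 1e6


# Example format (assumption): 2160 active lines at 60 frames per second.
budget_us = lines_to_microseconds(32, 2160, 60)   # full 32-line budget
dwt_us = lines_to_microseconds(9, 2160, 60)       # DWT part with two vertical levels
```

For this format the 32-line budget corresponds to roughly 247 microseconds, of which the DWT with two vertical levels takes about 69 microseconds.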

The wavelet stage is followed by a pre-quantizer, which chops off the 8 least significant of the 20 bit planes. It is not used for rate control but ensures that the subsequent data path is 16 bits wide. After that, the actual quantization is performed. In addition to a dead-zone quantizer like the one in JPEG 2000, a data-dependent uniform quantizer can optionally be used.

The quantizer is controlled by the rate allocator, which guarantees compression to an externally given target bit rate, which is a strict requirement in many use cases. To respect the target bit rate together with the maximum latency of 32 lines, JPEG XS divides the image into rectangular precincts. While in JPEG 2000 precincts are typically square regions, a precinct in JPEG XS spans one or two lines of wavelet coefficients of each band.

Due to latency constraints, the rate allocator is not an exact algorithm but a heuristic one that works without actual distortion measurement. Moreover, the standard does not define how the rate allocator operates, so different algorithms can be considered. Such an algorithm is ideal for a low-cost FPGA, where access to external memory should be avoided, but it can be suboptimal for a high-end GPU.

The next stage after rate allocation is entropy coding, which is relatively simple. The quantized wavelet coefficients are combined into coding groups of four coefficients. For each group, three datasets are formed: bit-plane counts, the quantized values themselves, and the signs of all nonzero coefficients. Of these datasets, only the bit-plane counts are entropy coded, because they account for a major part of the overall rate.
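The three datasets per coding group can be sketched as follows. This is only an illustration of the grouping idea: the function names are ours, the bit-plane count is taken as the bit length of the largest magnitude in the group, and neither the prediction modes nor the actual bitstream layout of the standard are modeled.

```python
def coding_groups(coeffs, size=4):
    """Split a line of quantized coefficients into groups of `size` values."""
    return [coeffs[i:i + size] for i in range(0, len(coeffs), size)]


def split_group(group):
    """Return (bit-plane count, magnitudes, sign bits of nonzero coefficients)."""
    magnitudes = [abs(c) for c in group]
    # Number of bit planes needed to represent the largest magnitude in the group.
    bitplane_count = max(magnitudes).bit_length()
    # One sign bit (1 = negative) per nonzero coefficient; zeros carry no sign.
    signs = [1 if c < 0 else 0 for c in group if c != 0]
    return bitplane_count, magnitudes, signs


line = [3, -5, 0, 1, 0, 0, 0, 0]
datasets = [split_group(g) for g in coding_groups(line)]
```

For the first group the result is a count of 3 bit planes, magnitudes `[3, 5, 0, 1]` and sign bits `[0, 1, 0]`; the all-zero second group needs 0 bit planes and no sign bits at all.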

The rate allocator is free to select between four regular prediction modes per wavelet band: prediction on/off and significance coding on/off. Besides, it can select between two significance coding methods, which specify whether zero predictions or zero counts are coded. “Raw fallback mode” allows disabling bit-plane coding and should be used when the regular coding modes are redundant.

A smoothing buffer ensures a constant bit rate at the encoder output even if some regions of the input image are easier to compress than others. This buffer can have different sizes according to the selected profile. This choice affects the rate control algorithm, which uses the buffer to smooth out rate variations.

JPEG XS profiles

Particular applications may have additional constraints on the codec, such as even lower complexity or buffer size limitation. So, the standard defines several profiles to allow different levels of latency and complexity. In fact, the entire part 2 of the standard (ISO/IEC 21122-2 “Profiles and Buffer Models” [7]) is devoted to specification of profiles, levels and sublevels [4].

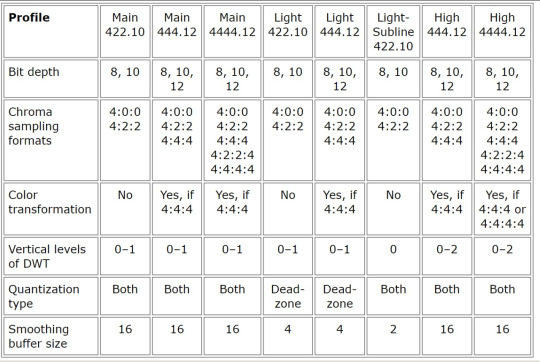

Each profile allows one to estimate the necessary number of logic elements, the memory footprint, and whether chroma subsampling or an alpha channel is required. The profiles are structured along the maximum bit depth, the quantizer type, the smoothing buffer size, and the number of vertical DWT levels. Other coding tools, such as the choice of embedded/separate sign coding or the insignificant coding groups method, increase decoder complexity only marginally, so they are not restricted by the profile. As such, the standard defines eight profiles, whose characteristics are summarized in Table 1.

The three “Main” profiles target all types of content (natural, CGI, screen) for Broadcast, Pro-AV, Frame Buffer and Display link use cases. The two “High” profiles allow a second vertical decomposition and target all types of content for high-end devices and cinema remote production. The two “Light” profiles are considered suitable for natural content only and target Broadcast, industrial camera and in-camera compression use cases. Finally, the “Light-subline” profile, with minimal latency (due to zero vertical decomposition and the shortest smoothing buffer), is also suitable for natural content only and targets cost-sensitive applications.

Profiles determine the set of coding features, while levels and sublevels limit the buffer sizes. In particular, levels restrict them in the uncompressed image domain and sublevels in the compressed domain. Similar to HEVC levels, JPEG XS levels constrain the frame dimensions and the refresh rate (e.g., 1920p/60).

Table 1. Configuration of JPEG XS Profiles [4].

Performance evaluation

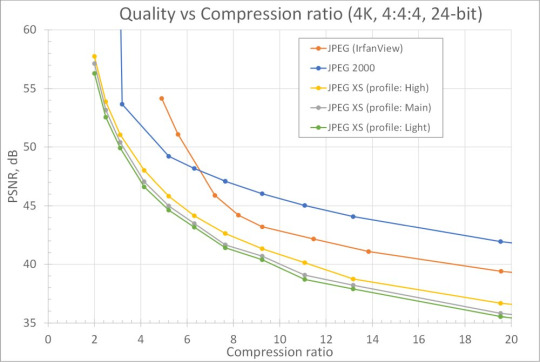

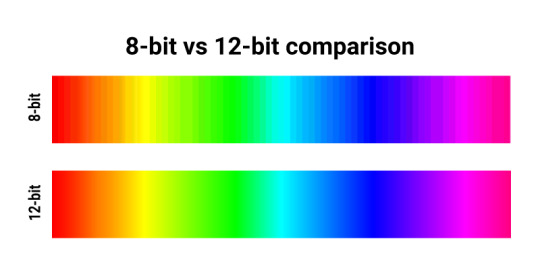

This section shows experimental results of a rate-distortion comparison against other compression technologies, with PSNR as the distortion measure. We focus on RGB 4:4:4 24-bit natural content here, as it has been shown that results for subsampled images and images with higher bit depth are similar.

Figure 1. Compression ratio dependence of image quality measured by PSNR for different codecs and profiles.

In Figure 1 we compare rate-distortion dependencies for JPEG XS and two classical image codecs: JPEG and JPEG 2000. The testing procedure was as follows. Our test image 4k_wild.ppm (3840 × 2160 × 24bpp) with natural content was compressed multiple times with several compression ratios in the range from 2:1 to 20:1. These ratios are equal for JPEG XS and JPEG 2000, which allows direct comparison. The ratios are different for JPEG, because it has no precise rate control functionality. The highest point of the JPEG 2000 curve (with infinite PSNR) shows the compression ratio of the reversible algorithm. The test image is visually lossless in all cases where PSNR is higher than 40 dB.
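For reference, PSNR for 8-bit data is computed from the mean squared error between the original and the decoded samples. The sketch below works on flat lists of sample values; the function name and the toy data are ours and it is not the tool we used for the measurements.

```python
import math


def psnr(original, decoded, max_value=255):
    """Peak signal-to-noise ratio in dB between two equally sized sample lists."""
    assert len(original) == len(decoded) and len(original) > 0
    mse = sum((a - b) ** 2 for a, b in zip(original, decoded)) / len(original)
    if mse == 0:
        return math.inf  # identical data, as with a reversible (lossless) codec
    return 10 * math.log10(max_value ** 2 / mse)


lossless = psnr([0, 128, 255], [0, 128, 255])
off_by_one = psnr([0, 255], [1, 254])
```

Identical data gives infinite PSNR, which is why the reversible JPEG 2000 point sits at the top of the plot, while an error of one level in every 8-bit sample gives about 48.13 dB.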

As the figure shows, among the three image codecs JPEG 2000 delivers the highest quality (visually lossless even at a ratio of 30:1 with this image), but at much greater computational complexity. The quality of classical JPEG is even higher for ratios of 6:1 or less (and visually lossless at a ratio of 14:1), and it has low complexity, but the lack of precise rate control can be critical in some applications, and its minimum latency is one frame. That is why it can replace neither uncompressed video nor JPEG XS. Although the JPEG XS curves lie below the curves of the other two codecs, the image quality is still high enough to be visually lossless when the ratio is below 10:1.

The average PSNR difference is 5.4 dB between JPEG 2000 and the “high” profile JPEG XS, and 4.5 dB between JPEG 2000 and the “main” profile JPEG XS (for compression ratios up to 10:1). The average difference is 0.75 dB between the “main” and “high” profiles and only 0.45 dB between the “main” and “light” profiles.

Patents and RAND

Please bear in mind that JPEG XS contains patented technology which is made available for licensing via the JPEG XS Patent Portfolio License (JPEG XS PPL). This license pool covers essential patents owned by Licensors for implementing the ISO/IEC 21122 JPEG XS video coding standard and is available under RAND terms. You can find more info at https://www.jpegxspool.com

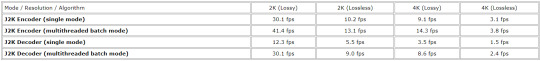

We've implemented a high-performance JPEG XS decoder on GPU as an accelerated counterpart to the JPEG XS reference software from iso.org (Part 5 of the international standard, ISO/IEC 21122-5:2020 [8]), which runs on the CPU and performs far below real time. We did this to show the potential for GPU-based speedup of such software. We can offer our customers a high-performance JPEG XS decoder for NVIDIA GPUs, though the customer must settle all questions concerning licensing of JPEG XS technology with the JPEG XS patent owners.

References

1. ISO/IEC 21122-1:2019 Information technology — JPEG XS low-latency lightweight image coding system — Part 1: Core coding system. https://www.iso.org/standard/74535.html

2. ISO/IEC 21122-1:2022 Information technology — JPEG XS low-latency lightweight image coding system — Part 1: Core coding system. https://www.iso.org/standard/81551.html

3. JPEG White paper: JPEG XS, a new standard for visually lossless low-latency lightweight image coding system, Version 2.0 // ISO/IEC JTC1/SC29/WG1 WG1N83038 http://ds.jpeg.org/whitepapers/jpeg-xs-whitepaper.pdf

4. A. Descampe, T. Richter, T. Ebrahimi, et al. JPEG XS—A New Standard for Visually Lossless Low-Latency Lightweight Image Coding // Proceedings of the IEEE Vol. 109, Issue 9 (2021) 1559.

5. J. Žádník, M. Mäkitalo, J. Vanne, and P. Jääskeläinen, Image and Video Coding Techniques for Ultra-Low Latency // ACM Computing Surveys (accepted paper). https://doi.org/10.1145/3512342

6. WG1 (ed. A. Descampe). Call for Proposals for a low-latency lightweight image coding system // ISO/IEC JTC1/SC29/WG1 N71031, 71st Meeting – La Jolla, CA, USA – 11 March 2016. https://jpeg.org/downloads/jpegxs/wg1n71031-REQ-JPEG_XS_Call_for_proposals.pdf

7. ISO/IEC 21122-2:2022 Information technology — JPEG XS low-latency lightweight image coding system — Part 2: Profiles and buffer models. https://www.iso.org/standard/81552.html

8. ISO/IEC 21122-5:2020 Information technology — JPEG XS low-latency lightweight image coding system — Part 5: Reference software. https://www.iso.org/standard/74539.html

Original article see at: https://fastcompression.com/blog/jpeg-xs-overview.htm

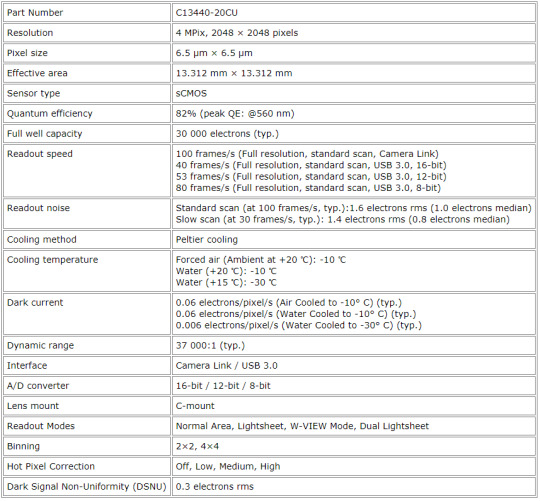

GPU HDR Processing for SONY Pregius Image Sensors

Author: Fyodor Serzhenko

The fourth generation of SONY Pregius image sensors (IMX530, IMX531, IMX532, IMX535, IMX536, IMX537, IMX487) is capable of working in HDR mode. That mode is called "Dual ADC" (Dual Gain), which means that two 12-bit raw frames originate from the same captured image, which is digitized via two ADCs with different analog gains. If the ratio of these gains is around 24 dB, one can get one 16-bit raw image from two 12-bit raw frames with different gains. This is actually the main idea of HDR for these image sensors: how to get an extended dynamic range of up to 16 bits from two 12-bit raw frames with the same exposure and different analog gains. That method guarantees that both frames have been exposed at the same time and are not spatially shifted.
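The relation between a 24 dB gain ratio and four extra bits can be verified with a short calculation. The sketch assumes the usual 20·log10 convention for analog gain in dB; the function names are ours.

```python
import math


def db_to_ratio(gain_db: float) -> float:
    """Convert an analog gain in dB to a linear amplitude ratio (20*log10 convention)."""
    return 10 ** (gain_db / 20)


def extra_bits(gain_db: float) -> float:
    """Additional bits of range gained from a given gain ratio between the two ADCs."""
    return math.log2(db_to_ratio(gain_db))


ratio_24db = db_to_ratio(24)            # about 15.85, i.e. roughly 16x
merged_bits = 12 + extra_bits(24)       # about 15.99, i.e. roughly 16 bits
```

So a 24 dB difference is close to a factor of 16, which extends a 12-bit frame by about 4 bits.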

That Dual ADC feature was originally introduced in the third generation of SONY Pregius image sensors, but HDR processing had to be implemented outside the image sensor. The latest version of that HDR feature is done inside the image sensor, which makes it more convenient to work with. Dual ADC mode with on-sensor combination (combined mode) is applicable for high speed sensors only.

In the Dual ADC mode we need to specify some parameters for the image sensor. There are two ways of getting the extended dynamic range from SONY Pregius image sensors:

In the combined mode the image sensor can output one 12-bit raw frame with applied merge feature (when we combine two 12-bit frames with Low gain and High gain) and simple tone mapping (when we apply PWL curve to 16-bit merged data). That approach allows us to have minimum camera bandwidth because in that case the image size is minimal - this is just a 12-bit raw frame.

In the non-combined mode the image sensor outputs two 12-bit raw images which could be processed later outside the image sensor. This is the worst case for the camera bandwidth, but it could be promising for high quality merge and sophisticated tone mapping.

Apart from that, there are two other options:

We can process just the Low gain or the High gain image, but it's quite evident that the dynamic range in that case will be no better than in the Dual ADC mode.

It's also possible to apply our own HDR algorithm to the results of the combined mode as an attempt to improve image quality and dynamic range.

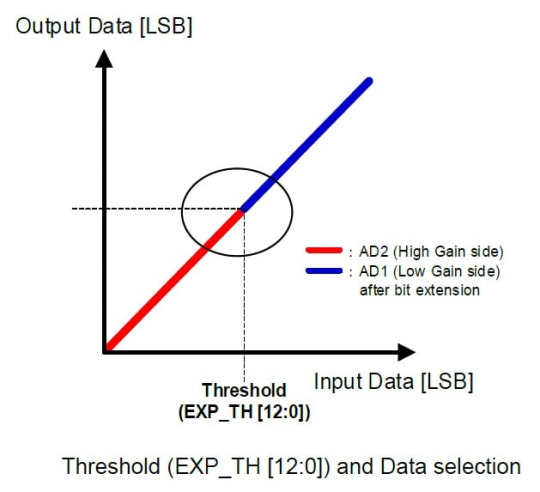

Dual Gain mode parameters for image merge

Threshold - this is an intensity level where we should start utilizing Low gain data instead of High gain

Low gain (AD1) and High gain (AD2) - these are values for analog gain (0 dB, 6 dB, 12 dB, 18 dB, 24 dB)
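The role of the threshold and the gain ratio in the merge can be illustrated with a very simple sketch. This is not the on-sensor algorithm and not our production merge: it is a hard switch between the two frames (real merges usually blend around the threshold), black level is ignored, and the function name and default values (gain ratio 16, threshold 40% of the 12-bit range, both taken from examples in this article) are assumptions.

```python
def merge_dual_gain(low, high, gain_ratio=16, threshold=0.4, full_scale=4095):
    """Merge Low gain and High gain 12-bit samples into one linear 16-bit range."""
    limit = threshold * full_scale
    merged = []
    for lo, hi in zip(low, high):
        if hi < limit:
            # Dark pixel: High gain data is less noisy and not yet clipped.
            merged.append(hi)
        else:
            # Bright pixel: scale Low gain data to the High gain scale.
            merged.append(lo * gain_ratio)
    return merged


low_frame = [10, 200, 4095]
high_frame = [160, 4095, 4095]
hdr = merge_dual_gain(low_frame, high_frame)
```

With a gain ratio of 16 the largest merged value is 4095 × 16 = 65520, which fits into 16 bits.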

Dual Gain mode parameters for HDR

Two pairs of knee points for PWL curve (gradation compression from 16-bit range to 12-bit). They actually come from Low gain and High gain values, and from parameters of gradation compression.

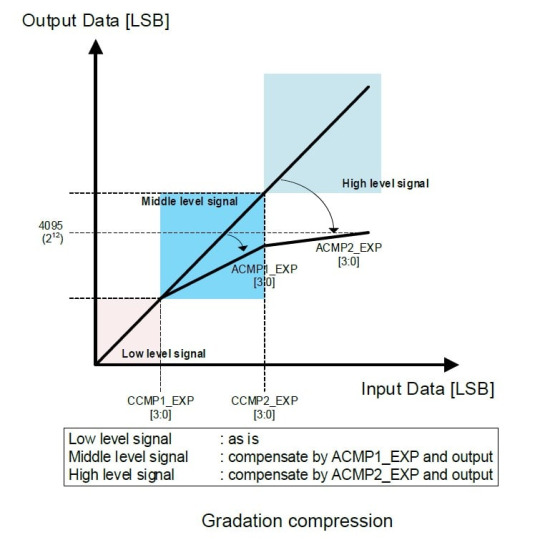

The picture gives detailed info concerning the PWL curve which is applied after image merge, and it's done inside the image sensor. We can see how gradation compression is implemented at the image sensor.

This is an example of real parameters for Dual ADC mode for SONY IMX532 image sensor

Dual ADC Gain Ratio: 12 dB

Dual ADC Threshold: 40%

Compression Region Selector 1:

Compression Region Start: 6.25%

Compression Region Gain: -12 dB

Compression Region Selector 2:

Compression Region Start: 25%

Compression Region Gain: -18 dB
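To show how such knee points shape a piecewise-linear (PWL) curve, here is a small sketch. It reflects our reading of the parameters rather than the sensor documentation: the input is normalized to 0..1, the curve has unit slope up to the first region start, and each region gain in dB is interpreted as the slope of that segment. The function name and the absence of any output rescaling are assumptions as well.

```python
def pwl_compress(x, knees=((0.0625, -12.0), (0.25, -18.0))):
    """Piecewise-linear gradation compression of a normalized value x in [0, 1].

    knees: (region start, region gain in dB) pairs in ascending order of start.
    """
    y = 0.0
    prev_start, slope = 0.0, 1.0
    for start, gain_db in knees:
        if x <= start:
            break
        # Accumulate the output reached at the end of the previous segment.
        y += (start - prev_start) * slope
        prev_start, slope = start, 10 ** (gain_db / 20)
    return y + (x - prev_start) * slope


curve = [pwl_compress(i / 100) for i in range(101)]
```

The resulting curve is continuous and monotonic: it is the identity below 6.25% of the input range and then grows about 4 times and about 8 times slower in the two compression regions.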

For further testing we will capture frames from the IMX532 image sensor in the XIMEA camera MC161CG-SY-UB-HDR with exactly the same parameters of Dual ADC mode.

If we compare images with gain ratio 16 (High gain is 16 times greater than Low gain) and exposure ratio 1/16 (long exposure for Low gain and short exposure for High gain), then we clearly see that images are alike, but High gain image has the following two problems: it has more noise and more hot pixels due to strong analog signal amplification. These issues should be taken into account.

Apart from the standard Dual ADC combined mode, there is a quite popular approach which could bring good results with minimum efforts: we can use just Low gain image and apply custom tone mapping instead of PWL curve. In that case dynamic range is less, but that image could have less noise in comparison with images from the combined mode.

Why do we need to apply our own HDR image processing?

It makes sense if on-sensor HDR processing in Dual ADC mode could be improved. That could be the way to get better image quality by implementing more sophisticated algorithms for image merge and tone mapping. GPU-based processing is usually very fast, so we could still process image series with HDR support in real time, which is a must for camera applications.

HDR image processing pipeline on NVIDIA GPU

We've implemented an image processing pipeline on NVIDIA GPU for Dual ADC frames from SONY Pregius image sensors. In fact, we've extended our standard pipeline to work with such HDR images. We can process on NVIDIA GPU any frames from SONY image sensors in HDR mode: one 12-bit HDR raw image (combined mode) or two 12-bit raw frames (non-combined mode). Our result could be better not only due to our merge and tone mapping procedures, but also due to high quality debayering, which also influences the quality of processed images. Why do we use a GPU? It is the key to much higher performance and image quality than can be achieved on the CPU.

Low gain image processing

As we've already mentioned, this is the simplest method, which is widely accepted, and it's actually the same as a switched-off Dual ADC mode. The Low gain 12-bit raw image has less dynamic range, but it also has less noise, so we can apply either a 1D LUT or a more complicated tone mapping algorithm to that 12-bit raw image to get better results in comparison with the combined 12-bit HDR image which we can get directly from the SONY image sensor. Here is a brief outline of the pipeline:

Acquisition of 12-bit raw image from a camera with SONY image sensor

BPC (bad pixel correction)

Demosaicing with MG algorithm (23×23)

Color correction

Curves and Levels

Local tone mapping

Gamma

Optional JPEG or J2K encoding

Monitor output, streaming or storage

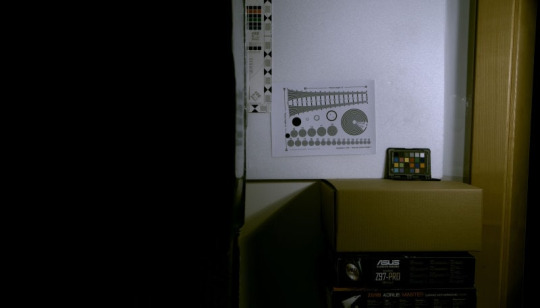

Fig.1. Low gain image processing for IMX532
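The simplest form of tone mapping mentioned for the Low gain image, a 1D LUT, can be sketched as follows. The gamma-shaped curve, the value 2.2 and the function names are our assumptions for illustration; any other monotonic curve could be tabulated the same way.

```python
def build_gamma_lut(gamma=2.2, in_bits=12, out_bits=8):
    """Tabulate a gamma curve mapping every possible input code to an output code."""
    in_max = (1 << in_bits) - 1
    out_max = (1 << out_bits) - 1
    return [round(out_max * (v / in_max) ** (1 / gamma)) for v in range(in_max + 1)]


def apply_lut(pixels, lut):
    """Tone-map pixels by table lookup; cost per pixel does not depend on the curve."""
    return [lut[p] for p in pixels]


lut = build_gamma_lut()
mapped = apply_lut([0, 64, 1024, 4095], lut)
```

The table has 4096 entries for 12-bit input, so the curve is evaluated once and then applied to every pixel by a single lookup, which is why this approach is so cheap.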

Image processing at the Combined mode

Though we can get a ready 12-bit raw HDR image from the SONY image sensor in Dual ADC mode, there is still a way to improve the image quality. We can apply our own tone mapping to make it better. That's what we've done and the results are consistently better. Here is a brief outline of the pipeline:

Acquisition of 12-bit raw HDR image from a camera with SONY image sensor

Preprocessing

BPC (bad pixel correction)

Demosaicing with MG algorithm (23×23)

Color space conversion

Global tone mapping

Local tone mapping

Optional JPEG or J2K encoding

Monitor output, streaming or storage

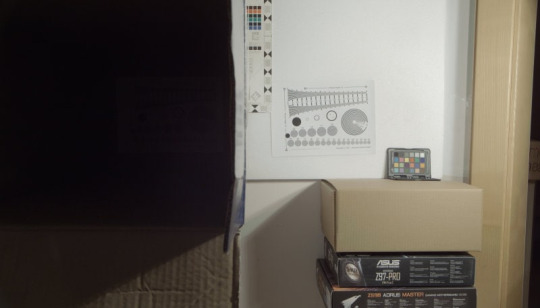

Fig.2. SONY Dual ADC combined mode image processing for IMX532 with a custom tone mapping

Low gain + High gain (non-combined) image processing

To get both raw frames from the SONY image sensor, we need to send them to a PC via the camera interface. This can strain the interface bandwidth, and for some cameras it may be necessary to decrease the frame rate to cope with camera bandwidth limitations. If we use PCIe, Coax or 10/25/50-GigE cameras, then it could be possible to send both raw images in real time without frame drops.
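A quick estimate shows why bandwidth becomes an issue in the non-combined mode. The sketch below uses the IMX532 frame size quoted in this article (5328×3040, 12-bit); the frame rate of 30 fps is a hypothetical example, the function name is ours, and packing overhead of a particular camera interface is not included.

```python
def raw_stream_gbps(width, height, bits_per_pixel, fps, frames_per_capture=1):
    """Payload bit rate of a raw stream in Gbps, without any protocol overhead."""
    return width * height * bits_per_pixel * fps * frames_per_capture / 1e9


# Hypothetical 30 fps capture of 5328x3040 12-bit raw frames.
combined = raw_stream_gbps(5328, 3040, 12, 30)          # one frame per capture
non_combined = raw_stream_gbps(5328, 3040, 12, 30, 2)   # Low gain + High gain
```

At the assumed 30 fps the combined mode needs about 5.8 Gbps and the non-combined mode about 11.7 Gbps, so the second case already exceeds what a 10-GigE link can carry.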

As soon as we get two raw frames (Low gain and High gain) for processing, we need to start from preprocessing, then to merge them into one 16-bit linear image and to apply tone mapping algorithm. Usually good tone mapping algorithms are more complicated than just a PWL curve, so we can get better results, though it definitely takes much more time. To solve that issue in a fast way, high performance GPU-based image processing could be the best approach. That's exactly what we've done and we can get better image quality and higher dynamic range in comparison with combined HDR image from SONY and with processed Low gain image as well.

HDR workflow for Dual ADC non-combined image processing on GPU

Acquisition of two raw images in non-combined Dual ADC mode

Preprocessing of two images

BPC (bad pixel correction) for both images

RAW Histogram and MinMax for each frame

Merge for Low gain and High gain raw images

Demosaicing with MG algorithm (23×23)

Color space conversion

Global tone mapping

Local tone mapping

Optional JPEG or J2K encoding

Monitor output, streaming or storage

In that workflow the most important modules are merge, global/local tone mapping and demosaicing. We've implemented that image processing pipeline with Fastvideo SDK which is running very fast on NVIDIA GPU.

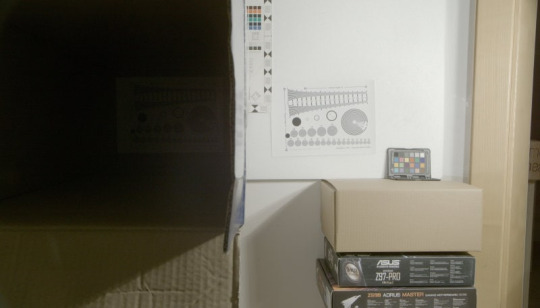

Fig.3. SONY Dual ADC non-combined (two-image) processing for IMX532

Summary for Dual ADC mode on GPU

Better image quality

Sophisticated merge for Low gain and High gain images

Global and local tone mapping

High quality demosaicing

Better dynamic range

Fewer artifacts for brightness and color

Less noise

High performance processing

We believe that the best results for image quality could be achieved in the following modes:

Simultaneous processing of two 12-bit raw images in the non-combined mode.

Processing of one 12-bit raw frame in the combined mode with a custom tone mapping algorithm.

If we are working in the non-combined mode, then we can get good image quality, but camera bandwidth limitation and processing time could be a problem. If we are working with the results of the combined mode, image quality is comparable, the processing pipeline is less complicated (the performance is better), and we need less bandwidth, so it could be recommended for most use cases. With a proper GPU, image processing could be done in real time at the max fps.

The above frames were captured from SONY IMX532 image sensor at Dual ADC mode. The same approach is applicable to all high speed SONY Pregius image sensors of the 4th generation which are capable of working at Dual ADC combined mode as well.

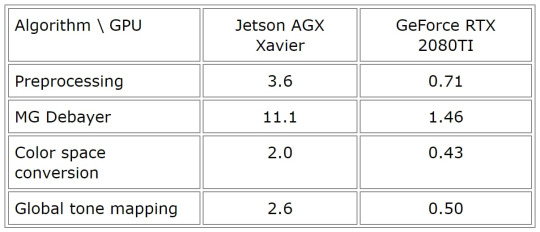

Processing benchmarks on Jetson AGX Xavier and GeForce RTX 2080TI in the combined mode

We've measured GPU kernel times to evaluate the performance of the solution in the combined mode. This is the way to get high dynamic range and very good image quality, so knowledge about performance could be valuable. Below we publish timings for several image processing modules, because the full pipeline could be different in the general case.

Table 1. GPU kernel time in ms for IMX532 raw frame processing in the combined mode (5328×3040, bayer, 12-bit)

This is just the part of the full image processing pipeline and this is to show a level of how fast it could be on the GPU.

References

Fastvideo SDK for Image & Video Processing on GPU

RAW to RGB conversion on GPU

XIMEA high speed color industrial camera with Sony IMX532 image sensor

Original article see at: https://fastcompression.com/blog/gpu-hdr-processing-sony-pregius-image-sensors.htm

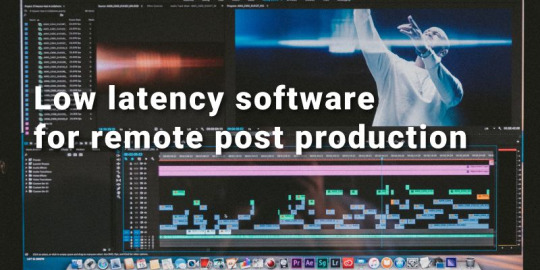

Image Processing Framework on Jetson

Author: Fyodor Serzhenko

Nowadays quite a lot of tasks for image sensors and camera applications are solved with a centralized computing architecture for image processing. Only a minor part of image processing features is implemented on the image sensor itself, so all the rest is done on a CPU/GPU/DSP/FPGA which could reside very close to the image sensor. The latest advances in hardware and software solutions allow us to get enhanced computational performance and to enlarge the scope of tasks to be solved.

From that point of view, the NVIDIA Jetson series is suited exactly for the task of high performance image processing from RAW to YUV. An image sensor or camera module can be connected directly to any Jetson via MIPI CSI-2 (2-lane/4-lane), USB3 or PCIe interfaces. Jetson could offer high performance computations either on ISP or on GPU. Below we show what could be done on GPU. We believe that raw image processing on GPU can offer more flexibility, better performance, quality and ease of management in comparison with hardware-based ISP for many applications.

What is Image Processing Framework for Jetson?

To get high quality and max performance in image processing tasks on Jetson, we've implemented a GPU-based SDK for raw processing. Now we are expanding that approach by creating an effective framework to control all system components, including hardware and software. For example, it means that image sensor control should be included in the workflow in real time to become a part of the general control algorithm.

Image processing framework components

Image Sensor Control (exposure, gain, awb)

Image Capture (driver, hardware/software interface, latency, zero-copy)

RAW Image Processing Pipeline (full raw to rgb workflow)

Image Enhancement

Image/Video Encoding (JPEG/J2K and H.264/H.265)

Compatibility with third-party libraries for image processing, ML, AI, etc.

Image Display Processing (smooth player, OpenGL/CUDA interoperability)

Image/Video Streaming (including interoperability with FFmpeg and GStreamer)

Image/Video Storage

Additional features for the framework

Image Sensor and Lens Calibration

Quality Control for Image/Video Processing

CPU/GPU/SSD load balancing, performance optimization, profiling

Implementing image sensor control in the workflow brings us additional features which are essential. For example, integrated exposure and gain control will allow us to get better quality in the case of varying illumination. Apart from that, calibration data usually depend on exposure/gain, which means that we will be able to utilize correct processing parameters at any moment for any viewing conditions.
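As an illustration of integrated exposure control, here is a minimal proportional update step driven by a histogram. This is a hypothetical sketch, not the control algorithm of the framework: it assumes a linear sensor response, and the function names, target level and exposure limits are made up for the example.

```python
def histogram_mean(histogram):
    """Mean intensity level of a histogram given as a list of counts per level."""
    total = sum(histogram)
    return sum(level * count for level, count in enumerate(histogram)) / total


def next_exposure_us(current_us, histogram, target_mean,
                     min_us=10.0, max_us=100000.0):
    """Scale exposure so that the mean level moves towards target_mean."""
    mean = histogram_mean(histogram)
    if mean <= 0:
        return max_us  # completely black frame: open up as far as allowed
    proposed = current_us * target_mean / mean
    return min(max(proposed, min_us), max_us)


# Hypothetical frame where all 100 pixels sit at level 64 of an 8-bit histogram.
hist = [0] * 256
hist[64] = 100
new_exposure = next_exposure_us(1000.0, hist, target_mean=128)
```

For this underexposed frame the step doubles the exposure time from 1000 to 2000 microseconds; in a real system the result would also be fed to the calibration lookup mentioned above.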

In general, the standard RAW concept lacks internal camera parameters and full calibration data. We can solve that problem by including image sensor control both in calibration and in image processing. We can use an image sensor abstraction layer to take into account full metadata for each frame.

Such a solution depends on the image sensor used and the task to be solved, so we can configure and optimize the Image Processing Framework for a particular image sensor from SONY, Gpixel or CMOSIS. These solutions on Jetson have already been implemented by the teams of Fastvideo and MRTech.

Integrated Image Sensor Control

Exposure time

AWB

Gain

ROI (region of interest)

Full image sensor control also includes bit depth, FPS (frames per second), raw image format, bit packing, mode of operation, etc.

GPU image processing modules on Jetson for 16/32-bit pipeline

Raw image acquisition from image sensor via MIPI/USB3/PCIe interfaces

Frame unpacking

Raw image linearization

Dark frame subtraction

Flat field correction

Dynamic bad pixel removal

White balance

RAW and RGB histograms as an indication to control image sensor exposure time

Demosaicing with L7, DFPD, MG algorithms

Color correction

Denoising with wavelets

Color space and format conversions

Curves and Levels

Flip/Flop, Rotation by 90/180/270 degrees or by an arbitrary angle

Crop and Resize (upscale and downscale)

Undistortion via Remap

Local contrast

Tone mapping

Gamma

Realtime output via OpenGL

Trace module for debugging and bug fixing

Stream-per-thread support for better performance

Additional modules: tile support, image split into separate planes, RGB to Gray transform, defringe, etc.

Time measurements for all SDK modules

Image/Video Encoding modules on GPU

RAW Bayer encoding

JPEG encoding (visually lossless image compression with 8-bit or 12-bit per channel)

JPEG2000 encoding (lossy and lossless image compression with 8-16 bits per channel)

H264 encoder/decoder, streaming, integration with FFmpeg (8-bit per channel)

H265 encoder/decoder, streaming, integration with FFmpeg (8/10-bit per channel)

Is it better or faster than NVIDIA ISP for Jetson?

There are a lot of situations where we can say YES to this question. NVIDIA ISP for Jetson is a great product, it's free, versatile, reliable, and it takes less power/load from Jetson, but we have our own advantages which are also of great importance for our customers:

Processing performance

Image quality

Flexibility in building custom image processing pipeline

Wide range of available image processing modules for camera applications

Image processing with 16/32-bit precision

High-performance codecs: JPEG, JPEG2000 (lossless and lossy)

High-performance 12-bit JPEG encoder

Raw Bayer Codec

Dynamic bad pixel suppression

High quality demosaicing algorithms

Wavelet-based denoiser on GPU for Bayer and RGB images

Flexible output with desired image resolution, bit depth, color/grayscale, rotation, according to ML/AI requirements

We've built that software from scratch and have been working in this field for more than 10 years, so we have the experience to offer reliable solutions and support. Apart from that, we offer custom software design to solve almost any problem in a timely manner.

What are the benefits of that approach?

That approach allows us to create embedded image processing solutions on Jetson with high quality, exceptional performance, low latency and full image sensor control. A software-based solution in combination with GPU image processing on NVIDIA Jetson can help our customers create their imaging products with minimum effort and maximum quality and performance.

Other blog posts about Jetson hardware and software

Benchmark comparison for Jetson Nano, TX2, Xavier NX and AGX

Jetson Image Processing

Jetson Zero Copy

Jetson Nano Benchmarks on Fastvideo SDK

JPEG2000 performance benchmarks on Jetson TX2

Jetson AGX Xavier performance benchmarks

Remotely operated walking excavator on Jetson

Low latency H.264 streaming on Jetson TX2

Performance speedup for Jetson TX2 vs AGX Xavier

Fastvideo SDK vs NVIDIA NPP Library

Original article see at: https://fastcompression.com/blog/jetson-image-processing-framework.htm

Subscribe to our mail list: https://mailchi.mp/fb5491a63dff/fastcompression

Fastvideo SDK vs NVIDIA NPP Library

Author: Fyodor Serzhenko

Why is Fastvideo SDK better than NPP for camera applications?

What is Fastvideo SDK?

Fastvideo SDK is a set of software components that form a high-quality image processing pipeline for camera applications. It covers all image processing stages, from raw image acquisition from the camera to JPEG compression with storage to RAM or SSD. All image processing is done entirely on the GPU, so the full pipeline runs in real time or even several times faster. We can also offer a high-speed imaging SDK for non-camera applications on NVIDIA GPUs: offline raw processing, high performance web, digital cinema, video walls, FFmpeg codecs and filters, 3D, AR/VR, DL/AI, etc.

Who are Fastvideo SDK customers?

Fastvideo SDK is compatible with Windows/Linux/ARM and is mostly intended for camera manufacturers and system integrators developing end-user solutions containing video cameras as a part of their products.

The other type of Fastvideo SDK customers are developers of new hardware or software solutions in various fields: digital cinema, machine vision and industrial, transcoding, broadcasting, medical imaging, geospatial, 3D, AR/VR, DL, AI, etc.

All the above customers need faster image processing with higher quality and better latency. In most cases CPU-based solutions are unable to meet such requirements, especially for multicamera systems.

Customer pain points

According to our experience and expertise, when developing end-user solutions, customers usually have to deal with the following challenges.

Before starting to create a product, customers need to know the image processing performance, quality and latency for the final application.

Customers need reliable software which has already been tested and will not glitch when it is least expected.

Customers are looking for an answer on how to create a new solution with higher performance and better image quality.

Customers need external expertise in image processing, GPU software development and camera applications.

Customers have limited (time/human) resources to develop end-user solutions bound by contract conditions.

They need a ready-made prototype as a part of the solution to demonstrate a proof of concept to the end user.

They want immediate support and answers to their questions regarding the fast image processing software's performance, image quality and other technical details, which can be delivered only by industry experts with many years of experience.

Fastvideo SDK business benefits

Fastvideo SDK as a part of complex solutions allows customers to gain competitive advantages.

Customers are able to design solutions which earlier may have seemed to be impossible to develop within required timeframes and budgets.

The product helps to decrease the time to market of end-user solutions.

At the same time, it increases overall end-user satisfaction with reliable software and prompt support.

As a technology solution, Fastvideo SDK improves both image quality and processing performance at the same time.

Fastvideo serves customers as a technology advisor in the field of fast image processing: the team of experts provides end-to-end service to customers. That means that all customer questions regarding Fastvideo SDK, as well as any other technical questions about fast image processing are answered in a timely manner.

Fastvideo SDK vs NVIDIA NPP comparison

NVIDIA NPP can be described as a general-purpose solution: the company implemented a huge set of functions intended for applications in various industries, and NPP mainly focuses on various image processing tasks. Moreover, NPP lacks consistency in feature delivery, as some specific image processing modules are not present in the library. This leads us to the conclusion that NPP is a good solution for basic camera applications only. It is just a set of functions which users can utilize to develop their own pipeline.

Fastvideo SDK, on the other hand, is designed to implement a full 16/32-bit image processing pipeline on GPU for camera applications (machine vision, scientific, digital cinema, etc). Our end-user applications are based on Fastvideo SDK, and we collect customer feedback to improve the SDK’s quality and performance. We are armed with profound knowledge of customer needs and offer exceptionally reliable and heavily tested solutions.

Fastvideo uses a specific approach in Fastvideo SDK which is based on components (not on functions as in NPP). It is easier to build a pipeline based on components, as the components' input and output are standardized. Every component executes a complete operation, and it can have a complex internal architecture, whereas NPP only uses several functions. It is important to emphasize here that developing an application using built-in Fastvideo SDK is much less complex than creating a solution based on NVIDIA NPP.
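The idea of components with standardized input and output can be illustrated with a small Python sketch. Everything here is hypothetical: the `Frame` type, the component names and the `run_pipeline` helper are invented for illustration and do not reflect the actual Fastvideo SDK API. The components only track frame metadata and do no pixel processing.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Frame:
    """Standardized data passed between components (hypothetical)."""
    width: int
    height: int
    fmt: str  # e.g. "bayer", "rgb", "jpeg"


# Every component takes a Frame and returns a Frame.
Component = Callable[[Frame], Frame]


def debayer(frame: Frame) -> Frame:
    assert frame.fmt == "bayer", "debayer expects raw Bayer input"
    return Frame(frame.width, frame.height, "rgb")


def resize_half(frame: Frame) -> Frame:
    return Frame(frame.width // 2, frame.height // 2, frame.fmt)


def jpeg_encode(frame: Frame) -> Frame:
    assert frame.fmt == "rgb", "encoder expects RGB input"
    return Frame(frame.width, frame.height, "jpeg")


def run_pipeline(components: List[Component], frame: Frame) -> Frame:
    """Chain components: each output becomes the next component's input."""
    for component in components:
        frame = component(frame)
    return frame


result = run_pipeline([debayer, resize_half, jpeg_encode],
                      Frame(4096, 3000, "bayer"))
print(result)
```

Because every stage shares the same interface, reordering or replacing a stage only changes the list passed to `run_pipeline`, which is the property the component-based approach relies on.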

The Fastvideo JPEG codec and lots of other SDK features have been heavily tested by our customers for many years, with a total performance benchmark of more than a million images per second. This is a question of software reliability, and we consider it one of our most important advantages.

The major part of the Fastvideo SDK components (debayers and codecs) can offer both high performance and image quality at the same time, leaving behind the NPP alternatives. What’s more, this is also true for embedded solutions on Jetson where computing performance is quite limited. For example, NVIDIA NPP only has a bilinear debayer, so it can be regarded as a low-quality solution, best suited only for software prototype development.

Summing up this section, we need to specify the following technological advantages of the Fastvideo SDK over NPP in terms of image processing modules for camera applications:

High-performance codecs: JPEG, JPEG2000 (lossless and lossy)

High-performance 12-bit JPEG encoder

Raw Bayer Codec

Flat-Field Correction together with dark frame subtraction

Dynamic bad pixel suppression in Bayer images

Four high quality demosaicing algorithms

Wavelet-based denoiser on GPU for Bayer and RGB images

Filters and codecs on GPU for FFmpeg

Other modules like color space and format conversions

To summarize, Fastvideo SDK offers an image processing workflow which is standard for digital cinema applications, and could be very useful for other imaging applications as well.

Why should customers consider Fastvideo SDK instead of NVIDIA NPP?

Fastvideo SDK provides better image quality and processing performance for implementing key algorithms for camera applications. The real-time mode is an essential requirement for any camera application, especially for multi-camera systems.

Over the last few years, we've tested NPP intensely and encountered software bugs which weren't fixed. In the meantime, if customers come to us with any bug in Fastvideo SDK, we fix it within a couple of days, because Fastvideo possesses all the source code and the image processing modules are implemented by the Fastvideo development team. Support is our priority: that's why our customers can rely on our SDK.

We offer custom development to meet our customers' specific requirements. Our development team can build GPU-based image processing modules from scratch according to the customer's request, whereas NVIDIA provides nothing of the kind.

We are focused on high-performance camera applications and we have years of experience, and our solutions have been heavily tested in many projects. For example, our customer vk.com has been processing 400,000 JPG images per second for years without any issue, which means our software is extremely reliable.

Software downloads to evaluate the Fastvideo SDK

GPU Camera Sample application with source codes including SDKs for Windows/Linux/ARM - https://github.com/fastvideo/gpu-camera-sample

Fast CinemaDNG Processor software for Windows and Linux - https://www.fastcinemadng.com/download/download.html

Demo applications (JPEG and J2K codecs, Resize, MG demosaic, MXF player, etc.) from https://www.fastcompression.com/download/download.htm

Fast JPEG2000 Codec on GPU for FFmpeg

You can test your RAW/DNG/MLV images with Fast CinemaDNG Processor software. To create your own camera application, please download the source codes from GitHub to get a ready solution ASAP.

Useful links for projects with the Fastvideo SDK

1. Software from Fastvideo for GPU-based CinemaDNG processing is 30-40 times faster than Adobe Camera Raw:

http://ir-ltd.net/introducing-the-aeon-motion-scanning-system

2. Fastvideo SDK offers high-performance processing and real-time encoding of camera streams with very high data rates:

https://www.fastcompression.com/blog/gpixel-gmax3265-image-sensor-processing.htm

3. GPU-based solutions from Fastvideo for machine vision cameras:

https://www.fastcompression.com/blog/gpu-software-machine-vision-cameras.htm

4. How to work with scientific cameras with 16-bit frames at high rates in real-time:

https://www.fastcompression.com/blog/hamamatsu-orca-gpu-image-processing.htm

Original article see at: https://fastcompression.com/blog/fastvideo-sdk-vs-nvidia-npp.htm

GPU vs CPU at Image Processing. Why GPU is much faster than CPU?

Author: Fyodor Serzhenko

I. INTRODUCTION

Over the past decade, there have been many technical advances in GPUs (graphics processing units), so they can successfully compete with established solutions (for example, CPUs, or central processing units) and be used for a wide range of tasks, including fast image processing.

In this article, we will discuss the capabilities of GPUs and CPUs for performing fast image processing tasks. We will compare two processors and show the advantages of GPU over CPU, as well as explain why image processing on a GPU can be more efficient when compared to similar CPU-based solutions.

In addition, we will go through some common misconceptions that prevent people from using a GPU for fast image processing tasks.

II. ABOUT FAST IMAGE PROCESSING ALGORITHMS

For the purposes of this article, we’ll focus specifically on fast image processing algorithms that have such characteristics as locality, parallelizability, and relative simplicity.

Here’s a brief description of each characteristic:

Locality. Each pixel is calculated based on a limited number of neighboring pixels.

Good potential for parallelization. Each pixel does not depend on the data from the other processed pixels, so tasks can be processed in parallel.

16/32-bit precision arithmetic. Typically, 32-bit floating point arithmetic is sufficient for image processing and a 16-bit integer data type is sufficient for storage.
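The characteristics above can be seen in a simple 3x3 box filter, sketched here in plain Python. The filter choice and the function names are assumptions made for illustration. Each output pixel depends only on a small neighborhood of the input (locality) and never on other output pixels, so all iterations are independent and could be mapped to separate GPU threads.

```python
def box_filter_pixel(image, x, y):
    """Average of the 3x3 neighborhood around (x, y), clamped at borders."""
    height, width = len(image), len(image[0])
    total = 0.0
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            ny = min(max(y + dy, 0), height - 1)
            nx = min(max(x + dx, 0), width - 1)
            total += image[ny][nx]
    return total / 9.0


def box_filter(image):
    """Every output pixel reads only the input image, so the loop
    iterations are independent of each other."""
    return [[box_filter_pixel(image, x, y) for x in range(len(image[0]))]
            for y in range(len(image))]


image = [[0, 0, 0],
         [0, 9, 0],
         [0, 0, 0]]
print(box_filter(image)[1][1])  # center pixel: 9 / 9 = 1.0
```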

Important criteria for fast image processing

The key criteria which are important for fast image processing are:

Performance

Maximum performance of fast image processing can be achieved in two ways: either by increasing hardware resources (specifically, the number of processors), or by optimizing the software code. When comparing the capabilities of GPU and CPU, GPU outperforms CPU in the price-to-performance ratio. It’s possible to realize the full potential of a GPU only with parallelization and thorough multilevel (both low-level and high-level) algorithm optimization.

Image processing quality

Another important criterion is the image processing quality. There may be several algorithms for exactly the same image processing operation that differ in resource intensity and in the quality of the result. Multilevel optimization is especially important for resource-intensive algorithms, where it brings substantial performance benefits. After multilevel optimization is applied, advanced algorithms return results within a reasonable time, comparable to the speed of fast but crude algorithms.

Latency

A GPU has an architecture that allows parallel pixel processing, which reduces latency (the time it takes to process a single image). CPUs achieve rather modest latency results, since parallelism in a CPU is implemented at the level of frames, tiles, or image lines.

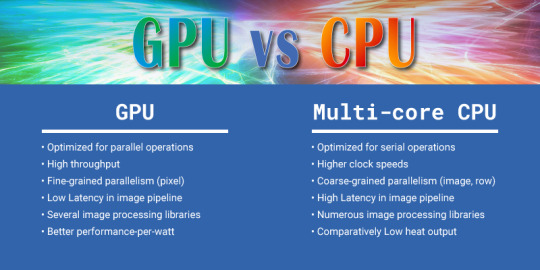

III. GPU vs CPU: KEY DIFFERENCES

Let's have a look at the key differences between GPU and CPU.

1. The number of threads on a CPU and GPU

CPU architecture is designed in such a way that each physical CPU core can execute two threads on two virtual cores. In this case, each thread executes the instructions independently.

At the same time, the number of GPU threads is tens or hundreds of times greater, since these processors use the SIMT (single instruction, multiple threads) programming model. In this case, a group of threads (usually 32) executes the same instruction. Thus, such a group of threads (a warp in NVIDIA terminology) can be considered the GPU equivalent of a CPU thread, that is, a genuine GPU thread.

2. Thread implementation on CPU and GPU

One more difference between GPUs and CPUs is how they hide instruction latency.

A CPU uses out-of-order execution for this purpose, whereas a GPU uses genuine thread rotation, issuing instructions from different threads every time. The method used on the GPU is more efficient as a hardware implementation, but it requires the algorithm to be parallel and the load to be high.

Thus it follows that many image processing algorithms are ideal for implementation on a GPU.

IV. ADVANTAGES OF GPU OVER CPU

Our own lab research has shown that if we compare ideally optimized software for the GPU and for the CPU (with AVX2 instructions), then the GPU advantage is tremendous: GPU peak performance is around ten times higher than CPU peak performance for hardware of the same production year, for 32-bit and 16-bit data types. The GPU memory subsystem bandwidth is significantly higher as well.

If we make a comparison with non-optimized CPU software without AVX2 instructions, then GPU performance advantage could reach 50-100 times.

All modern GPUs are equipped with shared memory, that is, memory that is simultaneously available to all the cores of one multiprocessor and is essentially a software-controlled cache. This is ideal for algorithms with a high degree of locality. The bandwidth of the shared memory is several times higher than the bandwidth of the CPU’s L1 cache.

The other important feature of a GPU compared to a CPU is that the number of available registers can be changed dynamically (from 64 to 256 per thread), thereby reducing the load on the memory subsystem. To compare, the x86-64 architecture provides 16 general-purpose registers and 16 AVX registers per thread.

There are several hardware modules on a GPU for simultaneous execution of completely different tasks: image processing (ISP) on Jetson, asynchronous copy to and from GPU, computations on GPU, video encoding and video decoding (NVENC and NVDEC), tensor cores for neural networks, OpenGL, DirectX, and Vulkan for rendering.

Still, all these advantages of a GPU over a CPU involve a high demand for parallelism of algorithms. While tens of threads are sufficient for maximum CPU load, tens of thousands are required to fully load a GPU.
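To put rough numbers on this, the sketch below counts hardware threads for a 16-core CPU and for a Turing GPU with 72 multiprocessors. The limit of 1024 resident threads per multiprocessor is an assumption taken from the CUDA limits for compute capability 7.5, and the 4K frame size is just an example workload.

```python
WARP_SIZE = 32                  # threads that execute one instruction together
CPU_CORES = 16
CPU_THREADS_PER_CORE = 2        # two hardware threads per physical core
GPU_SMS = 72                    # Quadro RTX 6000 (Turing)
RESIDENT_THREADS_PER_SM = 1024  # assumption: limit for compute capability 7.5

cpu_threads = CPU_CORES * CPU_THREADS_PER_CORE
gpu_threads = GPU_SMS * RESIDENT_THREADS_PER_SM
gpu_warps = gpu_threads // WARP_SIZE

pixels_4k = 3840 * 2160         # one thread per pixel of a 4K frame
print("CPU threads:", cpu_threads)
print("GPU resident threads:", gpu_threads, "in", gpu_warps, "warps")
print("4K pixels per resident GPU thread:", pixels_4k // gpu_threads)
```

With one thread per pixel, a single 4K frame already supplies far more work than the GPU can keep resident at once, which is why per-pixel image processing loads a GPU well.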

Embedded applications

Another type of task to consider is embedded solutions. In this case, GPUs are competing with specialized devices such as FPGAs (Field-Programmable Gate Arrays) and ASICs (Application-Specific Integrated Circuits).

The main advantage of GPUs over these devices is significantly greater flexibility. A GPU is a serious alternative for some embedded applications, since powerful multi-core processors don’t meet requirements like size and power budget.

V. USER MISCONCEPTIONS

1. Users have no experience with GPUs, so they try to solve their problems with CPUs

One of the main user misconceptions is associated with the fact that 10 years ago GPUs were considered inappropriate for high-performance tasks.

But technologies are developing rapidly, and while GPU image processing integrates well with CPU processing, the best results are achieved when fast image processing is done on a GPU.

2. Multiple data copy to GPU and back kills performance

This is another bias among users regarding GPU image processing.

As it turns out, it’s a misconception as well, since in this case, the best solution is to implement all processing on the GPU within one task. The source data can be copied to the GPU just once, and the computation results are returned to the CPU at the end of the pipeline. In that case the intermediate data remains on the GPU. Copy can be also performed asynchronously, so it could be done in parallel with computations on the next/previous frame.
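The effect of asynchronous copies can be estimated with a simple timing model, sketched below in Python. It assumes that upload, computation and download can overlap perfectly as three pipeline stages; the per-frame timings are hypothetical numbers chosen only for illustration.

```python
def sequential_time_ms(frames, upload, compute, download):
    """Each frame is uploaded, processed and downloaded one after another."""
    return frames * (upload + compute + download)


def pipelined_time_ms(frames, upload, compute, download):
    """Copies overlap with computation on neighboring frames.

    After the first frame fills the pipeline, a new frame completes every
    max(upload, compute, download) milliseconds (idealized model).
    """
    if frames == 0:
        return 0.0
    slowest_stage = max(upload, compute, download)
    return (upload + compute + download) + (frames - 1) * slowest_stage


# Hypothetical timings per frame, in milliseconds.
frames, upload, compute, download = 100, 1.0, 3.0, 0.5
print(sequential_time_ms(frames, upload, compute, download))  # 450.0
print(pipelined_time_ms(frames, upload, compute, download))   # 301.5
```

In this model the copy time is hidden whenever computation is the slowest stage, so the total time approaches the pure computation time as the number of frames grows.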

3. Small shared memory capacity, which is just 96 kB for each multiprocessor

Despite the small capacity of shared memory, 96 kB may be sufficient if shared memory is managed efficiently. This is the essence of software optimization for CUDA and OpenCL. It is not possible just to transfer software code from a CPU to a GPU without taking into consideration the specifics of the GPU architecture.

4. Insufficient size of the global GPU memory for complex tasks

This is an essential point, which is first of all solved by manufacturers when they release new GPUs with a larger memory size. Second of all, it’s possible to implement a memory manager to reuse GPU global memory.
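A memory manager of this kind can be as simple as a pool that hands out previously released buffers instead of allocating new ones. The Python sketch below is a toy model under stated assumptions: `BufferPool` is a hypothetical class, and `bytearray` stands in for a GPU allocation, which in real CUDA or OpenCL code would be a device allocation call.

```python
class BufferPool:
    """Toy pool that reuses released buffers of the same size."""

    def __init__(self):
        self._free = {}          # size in bytes -> list of idle buffers
        self.allocations = 0     # number of real allocations performed

    def acquire(self, size):
        idle = self._free.get(size)
        if idle:
            return idle.pop()    # reuse an idle buffer
        self.allocations += 1
        return bytearray(size)   # stand-in for a device allocation

    def release(self, buf):
        self._free.setdefault(len(buf), []).append(buf)


pool = BufferPool()
for _ in range(100):             # 100 frames, one temporary buffer each
    tmp = pool.acquire(1024)
    pool.release(tmp)
print(pool.allocations)          # 1
```

Only one real allocation is performed for all 100 frames, so the peak memory footprint stays bounded by the buffers that are in use at the same time.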

5. Libraries for processing on the CPU use parallel computing as well

CPUs have the ability to work in parallel through vector instructions such as AVX or via multithreading (for example, via OpenMP). In most cases, parallelization occurs in the simplest way: each frame is processed in a separate thread, and the software code for processing one frame remains sequential. Using vector instructions involves the complexity of writing and maintaining code for different architectures, processor models, and systems. Vendor-specific libraries like Intel IPP are highly optimized. Issues arise when the required functionality is not in the vendor libraries and you have to use third-party open source or proprietary libraries, which can lack optimization.

Another aspect which is negatively affecting the performance of mainstream libraries is the widespread adoption of cloud computing. In most cases, it’s much cheaper for a developer to purchase additional capacity in the cloud than to develop optimized libraries. Customers request quick product development, so developers are forced to use relatively simple solutions which aren’t the most effective.

Modern industrial cameras generate video streams with extremely high data rates, which often preclude transmitting data over the network to the cloud for processing, so local PCs are usually used to process the video stream from the camera. The computer used for processing should have the required performance and, more importantly, it must be purchased at the early stages of the project. Solution performance depends both on hardware and software. During the initial stages of the project, you should also consider what kind of hardware you’re using. If it’s possible to use mainstream hardware, any software can be used. If expensive hardware is to be used as a part of the solution, the cost per unit of performance rises quickly, and optimized software becomes necessary.

Processing data from industrial video cameras involves a constant load. The load level is determined by the algorithms used and camera bitrate. The image processing system should be designed at the initial stages of the project in order to cope with the load within a guaranteed margin, otherwise it will be impossible to process the streams without data loss. This is a key difference from web systems, where the load is unbalanced.
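The load estimate can be done with simple arithmetic at the design stage. The Python sketch below computes the sustained data rate of a camera and checks it against the processing throughput with a safety margin. The camera parameters, the measured throughput and the 25% margin are hypothetical values chosen for illustration.

```python
def camera_data_rate_bytes(width, height, bits_per_pixel, fps):
    """Sustained raw data rate of a camera stream in bytes per second."""
    return width * height * bits_per_pixel * fps / 8


def has_margin(processing_rate, camera_rate, margin=1.25):
    """True if the pipeline is faster than the camera by the given margin."""
    return processing_rate >= camera_rate * margin


# Hypothetical camera: 4096 x 3000 pixels, 12-bit raw, 60 frames per second.
rate = camera_data_rate_bytes(4096, 3000, 12, 60)
print(rate / 1e9, "GB/s")                  # about 1.1 GB/s

# Hypothetical measured pipeline throughput of 1.5 GB/s.
print(has_margin(1.5e9, rate))             # True: 1.5 >= 1.106 * 1.25
```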

VI. SUMMARY

Summing up, we come to the following conclusions:

1. GPU is an excellent alternative to CPU for solving complex image processing tasks.

2. The performance of optimized image processing solutions on a GPU is much higher than on a CPU. As a confirmation, we suggest that you refer to other articles on the Fastvideo blog, which describe other use cases and benchmarks on different GPUs for commonly used image processing and compression algorithms.

3. GPU architecture allows parallel processing of image pixels which, in turn, leads to a reduction of the processing time for a single image (latency).

4. High GPU performance software can reduce hardware cost in such systems, and high energy efficiency reduces power consumption. The cost of ownership of GPU-based image processing systems is lower than that of systems based on CPU only.

5. A GPU has the flexibility, high performance, and low power consumption required to compete with highly specialized FPGA / ASIC solutions for mobile and embedded applications.

6. Combining the capabilities of CUDA / OpenCL and hardware tensor cores can significantly increase performance for tasks using neural networks.

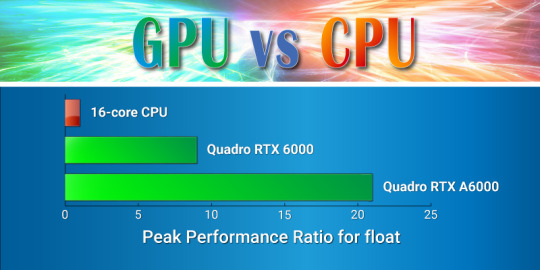

Addendum #1: Peak performance comparison for CPU and GPU

We will make the comparison for the float type (32-bit real value), which suits most image processing tasks well. We will evaluate the performance of a single core. For the CPU this is simple: we are talking about the performance of a single physical core. For the GPU it is somewhat more complicated. What is commonly called a GPU core is essentially an ALU; in NVIDIA terminology this is an SP (Streaming Processor). The real analog of a CPU core is the SM (Streaming Multiprocessor in NVIDIA terminology). The number of streaming processors in a single multiprocessor depends on the GPU architecture. For example, NVIDIA Turing graphics cards contain 64 SPs in one SM, while NVIDIA Ampere has 128 SPs.

One SP can execute one FMA (Fused Multiply–Add) instruction per clock cycle. The FMA instruction is chosen here for comparison because it is used in convolution filters. Its integer counterpart is called MAD. The instruction (in one of its variants) performs the action B = A*X + B, where B is the accumulator that accumulates the convolution values, A is the filter coefficient, and X is the pixel value. Such an instruction performs two operations: a multiplication and an addition. This gives the following performance per clock cycle for one SM: Turing: 2*64 = 128 FLOP, Ampere: 2*128 = 256 FLOP.

Modern CPUs can execute two FMA instructions from the AVX2 instruction set per clock cycle. Each such instruction operates on 8 float values and therefore performs 16 FLOP. In total, one CPU core performs 2*16 = 32 FLOP per clock cycle.

To get performance per unit of time, we need to multiply the number of operations per clock cycle by the frequency of the device. On average, the GPU frequency is in the range of 1.5-1.9 GHz, and a CPU with a load on all cores has a frequency of around 3.5-4.5 GHz. The FMA instruction from the AVX2 set is quite heavy for the CPU. When such instructions are executed, a large part of the CPU is involved, and heat generation increases greatly. This causes the CPU to lower its frequency to avoid overheating. The amount of frequency reduction differs between CPU series. For example, according to this article, we can estimate a decrease to the level of 0.7 of the maximum. Below we will use the coefficient 0.8, which corresponds to newer generations of CPUs.

We can assume that the CPU frequency is 2.5 times higher than that of the GPU. Taking into account the frequency reduction factor when working with AVX2 instructions, we get 2.5*0.8 = 2. In total, the relative performance in FLOP for the FMA instruction when compared with the CPU core is the following: Turing SM = 128 / (2.0*32) = 2 and for Ampere SM it is 256 / (2.0*32) = 4 times, i.e. one SM is more powerful than one CPU core.
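The arithmetic above can be reproduced with a few lines of Python. The sketch uses only the figures quoted in the text (SPs per SM, two AVX2 FMA instructions per clock, the 2.5 frequency ratio and the 0.8 throttling coefficient); the variable and function names are chosen for illustration.

```python
FLOP_PER_FMA = 2                      # one multiplication and one addition


def sm_flop_per_clock(sp_per_sm):
    """Each SP executes one FMA instruction per clock cycle."""
    return FLOP_PER_FMA * sp_per_sm


# CPU core: 2 FMA instructions per clock, 8 floats each, 2 FLOP per FMA.
cpu_flop_per_clock = 2 * 8 * FLOP_PER_FMA          # 32

# CPU clock is about 2.5x the GPU clock, reduced by 0.8 under AVX2 load.
effective_freq_ratio = 2.5 * 0.8                   # 2.0

turing_ratio = sm_flop_per_clock(64) / (effective_freq_ratio * cpu_flop_per_clock)
ampere_ratio = sm_flop_per_clock(128) / (effective_freq_ratio * cpu_flop_per_clock)
print(turing_ratio, ampere_ratio)                  # 2.0 4.0
```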

Let's estimate the performance of L1 for the CPU core. Modern CPUs can load two 256-bit registers from the L1 cache in parallel, i.e. 64 bytes per clock cycle. The GPU has a unified shared memory/L1 block. The shared memory performance is the same for both Turing and Ampere architectures and is equal to 32 float values per clock cycle, or 128 bytes per clock cycle. Taking into account the frequency ratio, we get a performance ratio of 128 (bytes per clock) / (2 (CPU frequency is greater than GPU) * 64 (bytes per clock)) = 1.

Let's also compare the L1 and shared memory sizes for CPU and GPU. For the CPU, the standard size of the L1 data cache is 32 kB. Turing SM has 96 kB of unified shared memory/L1 (shared memory takes 64 kB), and Ampere SM has 128 kB of unified shared memory/L1 (shared memory takes 100 kB).

To evaluate the overall performance, we need to count the number of cores per device or socket. For desktop CPUs, we consider the option of 16 cores (AMD Ryzen, Intel i9). This can be considered an average core count for high performance CPUs on the market. NVIDIA Quadro RTX 6000 with Turing architecture has 72 SMs. NVIDIA RTX A6000, which is based on Ampere architecture, has 84 SMs. The ratios of the number of GPU SMs to CPU cores are 72/16 = 4.5 (Turing) and 84/16 = 5.25 (Ampere).

Based on this, we can evaluate the overall performance for the float type. For top Turing graphics cards, we get: 4.5 (the ratio of the number of GPU/CPU cores) * 2 (the ratio of single SM performance to the performance of one CPU core) = 9 times. For Ampere graphics cards we get: 5.25 (the ratio of the number of GPU/CPU cores) * 4 (the ratio of one SM performance to the performance of one CPU core) = 21 times.

Let's estimate the relative throughput of CPU L1 and GPU shared memory. We get 4.5 (the ratio of the number of GPU/CPU cores) * 1 (the ratio of single SM throughput to that of a single CPU core) = 4.5 times for Turing, and 5.25 * 1 = 5.25 for Ampere respectively. The ratios can vary slightly for a specific CPU model, but the order of magnitude will be the same.
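The overall estimates for compute and on-chip memory throughput follow from multiplying the per-core ratios by the ratio of core counts. The Python sketch below repeats that calculation with the figures from the text; the names are illustrative.

```python
CPU_CORES = 16

# name: (number of SMs, FMA performance of one SM relative to one CPU core)
GPUS = {
    "Turing (Quadro RTX 6000)": (72, 2.0),
    "Ampere (RTX A6000)": (84, 4.0),
}

# Shared memory vs CPU L1: 128 bytes/clock against 64 bytes/clock at 2x clock.
L1_RATIO_PER_SM = 128 / (2 * 64)


def overall_ratios(sm_count, fma_ratio_per_sm):
    """Return (compute ratio, on-chip memory throughput ratio) GPU vs CPU."""
    core_ratio = sm_count / CPU_CORES
    return core_ratio * fma_ratio_per_sm, core_ratio * L1_RATIO_PER_SM


for name, (sms, fma_ratio) in GPUS.items():
    compute, memory = overall_ratios(sms, fma_ratio)
    print(f"{name}: compute x{compute:g}, on-chip memory x{memory:g}")
```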

We've obtained the result that reflects a significant advantage of the GPU over the CPU in both performance and on-chip memory throughput in computations, which are related to image processing.

It is very important to bear in mind that these results are obtained for the CPU only in the case of using AVX2 instructions. In the case of using scalar instructions, the CPU performance is reduced by 8 times, both in arithmetic operations and in the memory throughput. Therefore, for modern CPUs, software optimization is of particular importance.

Let's say a few words about the new AVX-512 instruction set for the CPU. This is the next generation of SIMD instructions with a vector length increased to 512 bits. Performance is expected to double in the future compared to AVX2. Current CPUs provide a speedup of only up to 1.6 times, as AVX-512 instructions require an even greater frequency reduction than AVX2 instructions. AVX-512 has not yet become widespread in the mass segment, but this is likely to happen in the future. The disadvantages of this approach are the need to adapt the algorithms to a new vector length and to recompile the code to support it.

Let's try to compare the system memory bandwidth. Here we can see a significant spread of values. For the CPU, the starting figure is 50 GB/s (2-channel DDR4-3200 controller) for mass-market CPUs. In the workstation segment, CPUs with four-channel controllers dominate, with a bandwidth of around 100 GB/s. For servers we can see CPUs with 6-8 channel controllers, where the bandwidth can exceed 150 GB/s.

For the GPU, global memory bandwidth varies over a wide range. It starts at 450 GB/s for the Quadro RTX 5000 and reaches 1550 GB/s for the latest A100. As a result, the throughputs in comparable segments differ significantly: the difference can be up to an order of magnitude.

From all of the above, we can conclude that the GPU is significantly (sometimes almost by an order of magnitude) superior to a CPU that executes optimized code. In the case of non-optimized CPU code, the difference in performance can be even greater, up to 50-100 times. All this creates serious prerequisites for increasing performance in widespread image processing applications.

Addendum #2: memory-bound and compute-bound algorithms

When we are talking about these types of algorithms, it is important to understand that we imply a specific implementation of the algorithm on a specific architecture. Each processor has some peak arithmetic performance. If the implementation of the algorithm can reach the peak performance of the processor on the target instructions, then it is compute-bound, otherwise the main limitation will be memory access and the implementation becomes memory-bound.

The memory subsystem of all processors is hierarchical, consisting of several levels. The closer a level is to the processor, the smaller and faster it is. The first level is the L1 data cache, and the last level is RAM or global memory.

An algorithm can be compute-bound at the first level of the hierarchy and then become memory-bound at higher levels.

Consider the task of summing two arrays and writing the result to a third one. We can write this as X = Y + Z, where X, Y and Z are arrays. Let's say we use AVX instructions to implement the solution on the CPU. Then we need two reads, one addition and one write per element. A modern CPU can perform two reads and one write to the L1 cache simultaneously. But in the same cycle it can also execute two arithmetic instructions, and we use only one. This means that the array summation algorithm is memory-bound at the first level of the memory hierarchy.

Let's consider a second algorithm: image filtering in a 3×3 window. Image filtering is based on the convolution of a pixel neighborhood with filter coefficients. The MAD (or FMA, depending on the architecture) instruction is used to calculate the convolution. For a 3×3 window we need 9 of these instructions. Each of them computes B = AX + B, where B is the accumulator that stores the convolution value, A is the filter coefficient, and X is the pixel value. The values of A and B are in registers, and the pixel values are loaded from memory. In this case, one load is required per FMA instruction. The CPU can keep its two FMA ports supplied with data thanks to its two loads per cycle, so the processor is fully loaded. The algorithm can be considered compute-bound.
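The two L1-level examples above can be checked with a small port-counting model. This is only a sketch: the per-cycle capacities (two loads, one store, two arithmetic instructions) are the ones stated in the text, and the names `CORE_PER_CYCLE` and `classify` are hypothetical.

```python
# Per-cycle capacity of one CPU core at the L1 level, as described in the text.
CORE_PER_CYCLE = {"load": 2, "store": 1, "arith": 2}


def classify(needs: dict) -> tuple:
    """Return (label, arithmetic utilization) for a per-element instruction mix."""
    # Cycles each resource needs to process one element (or one output pixel).
    cycles = {r: needs.get(r, 0) / cap for r, cap in CORE_PER_CYCLE.items()}
    bottleneck = max(cycles.values())
    arith_utilization = cycles["arith"] / bottleneck
    label = "compute-bound" if arith_utilization >= 1.0 else "memory-bound"
    return label, arith_utilization


# X = Y + Z: two loads, one add and one store per vector element.
print("array sum:", classify({"load": 2, "store": 1, "arith": 1}))

# 3x3 filter: nine loads and nine FMAs per output pixel, plus one final store.
print("3x3 filter:", classify({"load": 9, "store": 1, "arith": 9}))
```

In this model the array sum keeps the arithmetic ports only half busy, while the 3×3 filter keeps the load and FMA ports equally busy.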

Let's look at the same algorithm at the RAM access level. We can take the most memory-efficient implementation, in which a single read of a pixel updates all 9 windows it belongs to. In this case, there are 9 FMA instructions per read operation. Thus, a single CPU core processing float data at 4 GHz requires 2 (instructions per clock cycle) × 8 (floats in an AVX register) × 4 (bytes per float) × 4 (GHz) / 9 ≈ 28.4 GB/s. A dual-channel DDR4-3200 controller has a peak throughput of 50 GB/s, so it can feed fewer than two CPU cores in this task. Therefore, such an algorithm running on an 8-16 core processor is memory-bound, even though it is balanced at the lower level.

Now consider the same algorithm implemented on the GPU. It is immediately clear that the GPU has a less balanced architecture at the SM level, with a bias towards computing. For the Turing architecture, the ratio of the speed of arithmetic operations (in float) to the load throughput from shared memory is 2:1; for the Ampere architecture it is 4:1. Thanks to the larger number of registers on the GPU, the optimization described above can be implemented in GPU registers. This allows us to balance the algorithm even for Ampere, and at the shared memory level the implementation remains compute-bound. At the top level of the memory hierarchy (global memory), the calculation for the Quadro RTX 5000 (Turing) gives the following result: 64 (operations per clock cycle) × 4 (bytes per float) × 1.7 (GHz) / 9 ≈ 48.4 GB/s per SM. The ratio of total global memory throughput to the throughput required by one SM is 450 / 48.4 ≈ 9.3, which means global memory can feed about 9 SMs, while the Quadro RTX 5000 has 48 of them. That is, for the GPU, the filtering algorithm is memory-bound at the global memory level.
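The CPU and GPU bandwidth estimates above use the same formula, so they can be compared side by side. The sketch below only restates the article's arithmetic; the function and variable names are illustrative, and the clock rates and peak bandwidths are the figures quoted in the text.

```python
def required_bandwidth_gbs(values_per_cycle: float, bytes_per_value: int,
                           clock_ghz: float, ops_per_read: int) -> float:
    """GB/s that one core or SM must read to keep its arithmetic units busy."""
    return values_per_cycle * bytes_per_value * clock_ghz / ops_per_read


OPS_PER_READ = 9  # 3x3 window: each loaded pixel feeds 9 FMA operations

# CPU core: 2 AVX FMA instructions per cycle x 8 floats each, at 4 GHz.
cpu_core = required_bandwidth_gbs(2 * 8, 4, 4.0, OPS_PER_READ)
# Turing SM (Quadro RTX 5000): 64 float operations per cycle at 1.7 GHz.
gpu_sm = required_bandwidth_gbs(64, 4, 1.7, OPS_PER_READ)

cpu_cores_fed = 50.0 / cpu_core   # dual-channel DDR4-3200, about 50 GB/s
gpu_sms_fed = 450.0 / gpu_sm      # Quadro RTX 5000 global memory, 450 GB/s

print(f"CPU: {cpu_core:.1f} GB/s per core, memory feeds {cpu_cores_fed:.1f} cores")
print(f"GPU: {gpu_sm:.1f} GB/s per SM, memory feeds {gpu_sms_fed:.1f} of 48 SMs")
```

In both cases memory can feed only a fraction of the available cores or SMs, which is why the filter is memory-bound at the top level of the hierarchy on both architectures.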

As the window size grows, the algorithm becomes more complex and shifts towards compute-bound accordingly. Most image processing algorithms are memory-bound at the global memory level. And since the global memory bandwidth of the GPU is in many cases an order of magnitude greater than that of the CPU, this provides a comparable performance gain.

Addendum #3: SIMD and SIMT models, or why there are so many threads on GPU

To improve CPU performance, SIMD (single instruction, multiple data) instructions are used. One such instruction performs several similar operations on a data vector. The advantage of this approach is that it increases performance without significantly modifying the instruction pipeline. All modern CPUs, both x86 and ARM, have SIMD instructions. The disadvantage of this approach is the complexity of programming. The main approach to SIMD programming is to use intrinsics. Intrinsics are built-in compiler functions that contain one or more SIMD instructions, plus instructions for preparing parameters. Intrinsics form a low-level language very close to assembler, which is extremely difficult to use. In addition, for each instruction set, each compiler has its own set of intrinsics. As soon as a new instruction set comes out, we need to rewrite everything. If we switch to a new platform (from x86 to ARM), we need to rewrite all the software. If we start using another compiler, we again need to rewrite the software.

The software model for the GPU is called SIMT (Single instruction, multiple threads). A single instruction is executed synchronously in multiple threads. This approach can be considered as a further development of SIMD. The scalar software model hides the vector essence of the hardware, automating and simplifying many operations. That is why it is easier for most software engineers to write the usual scalar code in SIMT than vector code in pure SIMD.

The CPU and the GPU deal with instruction latency in the pipeline in different ways. Instruction latency is the number of clock cycles the next instruction must wait for the result of the previous one. For example, if the latency of an instruction is 3 and the CPU can run 4 such instructions per clock cycle, then in 3 clock cycles the processor can run 2 dependent instructions or 12 independent ones. To avoid pipeline stalls, all modern processors use out-of-order execution. In this case, the processor analyzes data dependencies between instructions within the out-of-order window and runs independent instructions out of program order.

The GPU uses a different approach which is based on multithreading. The GPU has a pool of threads. Each clock cycle, one thread is selected and one instruction is chosen from that thread, then that instruction is sent for execution. On the next clock cycle, the next thread is selected, and so on. After one instruction has been run from all the threads in the pool, GPU returns to the first thread, and so on. This approach allows us to hide the latency of dependent instructions by executing instructions from other threads.
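The latency-hiding effect of this scheme can be illustrated with a toy simulation. It is a simplified model, not a description of real GPU hardware: each thread is assumed to run a chain of dependent instructions, threads are picked in strict round-robin order, and the latency of 4 cycles is a hypothetical value. A real scheduler would choose among the threads that are ready.

```python
def simulate_round_robin(num_threads: int, latency: int, cycles: int) -> float:
    """Return the fraction of cycles in which an instruction was issued.

    Every thread executes dependent instructions, so it may issue again
    only `latency` cycles after its previous issue.
    """
    ready_at = [0] * num_threads  # first cycle at which each thread may issue
    issued = 0
    for cycle in range(cycles):
        thread = cycle % num_threads  # strict round-robin selection
        if ready_at[thread] <= cycle:
            ready_at[thread] = cycle + latency
            issued += 1
        # Otherwise the issue slot is wasted: the thread is still waiting.
    return issued / cycles


for threads in (1, 2, 4, 8):
    utilization = simulate_round_robin(threads, latency=4, cycles=1200)
    print(f"{threads} thread(s): pipeline utilization {utilization:.0%}")
```

Once the number of threads in the pool reaches the instruction latency, every cycle issues an instruction, even though each individual thread still waits for its own results.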

When programming the GPU, we have to distinguish two levels of threads. The first level of threads is responsible for SIMT execution. For NVIDIA GPUs, these are 32 adjacent threads, which are called a warp. A Turing SM is known to support 1024 threads. These are grouped into 32 real threads (1024 / 32), within each of which SIMT execution is organized. Real threads can execute different instructions at the same time, unlike the threads within a SIMT group.

Thus, the Turing streaming multiprocessor is a vector machine with a vector size of 32 and 32 independent real threads. The CPU core with AVX is a vector machine with a vector size of 8 and two independent threads.

Original article: https://www.fastcompression.com/blog/gpu-vs-cpu-fast-image-processing.htm

Part 2: JPEG2000 solutions in science and healthcare. JP2 format limitations

Author: Fyodor Serzhenko

In the first part of the article, JPEG 2000 in science, healthcare, digital cinema and broadcasting, we discussed the key technologies of JPEG2000 and focused on its application in digital cinema.

In this second part, we will continue examining the features of JPEG2000, review its main drawback and talk about other application areas where the format has turned out to be in high demand. At the end we will present a solution that makes working with the format simpler and much more convenient.

1. JPEG2000 in science and medicine

Window mode support is one of the handy features that makes JPEG2000 attractive. Scientists often have to work with files of enormous resolution, the width and height of which can exceed 40,000 pixels, but only a small part of which is of interest. Standard JPEG would have to decode the entire image to work with it, while JPEG2000 allows you to decode only a selected area.

JP2 is also used for space photography. Those wonderful pictures of Mars taken, for example, with the HiRISE camera are available in JP2 format. The data link from space to Earth is subject to interference, so errors may occur during the transfer, or entire data packets may even be lost. However, when a special mode is enabled, a JPEG2000 stream is somewhat error-resilient, which can be helpful when communication or storage devices are unreliable. This mode allows you to detect errors that occur when data is lost during transmission. It is important to note that the image is divided into small blocks (for example, 32x32 or 64x64 pixels), where, after preliminary transformations, each bit plane is encoded separately. Thus, a lost bit most likely spoils only some of the less significant bit planes, and this usually has little effect on overall quality. In JPEG, by contrast, the loss of a bit can lead to significant distortion of a large part of the image or even the entire image.
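Why damage to a less significant bit plane matters little can be shown with a toy example. The sketch below is not the JPEG2000 block coder (which applies context-based arithmetic coding to the bit planes of wavelet coefficients); it only splits an 8-bit value into bit planes and measures how far the value moves when a single plane is corrupted. All function names are illustrative.

```python
def to_bit_planes(value: int, bits: int = 8) -> list:
    """Split a non-negative value into bit planes, most significant first."""
    return [(value >> b) & 1 for b in range(bits - 1, -1, -1)]


def from_bit_planes(planes: list) -> int:
    """Rebuild the value from its bit planes (most significant first)."""
    value = 0
    for bit in planes:
        value = (value << 1) | bit
    return value


def error_after_flip(value: int, plane_index: int, bits: int = 8) -> int:
    """Absolute error caused by flipping the bit in one plane."""
    planes = to_bit_planes(value, bits)
    planes[plane_index] ^= 1
    return abs(from_bit_planes(planes) - value)


for index in range(8):
    print(f"plane {index} (0 = most significant): error {error_after_flip(200, index)}")
```

A flipped bit in the last plane changes the value by 1, while a flipped bit in the first plane changes it by 128.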

In this special mode, additional integrity-check information is added to the compressed JPEG2000 file so that the correctness of the data can be verified. Without this information, we often can’t determine during decoding whether there’s an error or not, and we continue the process as if nothing had happened. As a result, it’s still possible that even one erroneous bit will spoil quite a large part of the image. If this mode is enabled, however, we detect an error as soon as it appears and can limit its effect on other parts of the image.
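The general idea of detecting an error and containing it within one block can be sketched as follows. This is an analogy only: JPEG2000 has its own error-resilience tools, and the CRC-32 checksum per block used here is a stand-in chosen for illustration. The helper names `protect` and `decode` are hypothetical.

```python
import zlib


def protect(blocks: list) -> list:
    """Attach a CRC-32 checksum to every independently coded block."""
    return [(payload, zlib.crc32(payload)) for payload in blocks]


def decode(protected: list) -> list:
    """Return each block's payload, or None where the checksum does not match."""
    return [payload if zlib.crc32(payload) == crc else None
            for payload, crc in protected]


blocks = [b"block-0 data", b"block-1 data", b"block-2 data"]
stream = protect(blocks)

# Simulate a transmission error: flip one bit in the second block.
damaged = bytearray(stream[1][0])
damaged[3] ^= 0x01
stream[1] = (bytes(damaged), stream[1][1])

result = decode(stream)
print([("ok" if block is not None else "damaged") for block in result])
```

Only the damaged block is rejected; the decoder can conceal it or skip it while the other blocks are decoded normally.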

The JPEG2000 format also plays an important role in healthcare. In this application area, it is extremely important to maintain a sufficient bit depth of the source data so that all the subtleties of each area of the body under examination can be captured. JPEG2000 is used in CTs, X-rays, MRIs, etc.

Also, in accordance with FDA (Food and Drug Administration) requirements, images acquired by means of medical imaging must be stored in the original format (without loss). The JPEG2000 format is an ideal solution in this case.

Another interesting feature of JPEG2000 is the compression of three-dimensional data arrays. This can be highly relevant both in science and in medicine (for example, three-dimensional tomography results). The 10th part of the JPEG2000 standard is devoted to the compression of such data: JP3D (volumetric imaging).

2. JP2 format limitations

Unfortunately, JP2 (JPEG2000) isn’t so simple. In fact, it’s not supported by most web browsers (with the exception of Safari). The format is computationally complex, and for years existing open source codecs have been too slow for active use. Even now, when processors get faster with each new generation and codecs are being optimized and accelerated, their capabilities still leave something to be desired. To illustrate the importance of codec speed, let's return to the topic of digital cinema for a moment: specifically, to the creation of DCPs (Digital Cinema Packages), the sets of files that we enjoy in cinemas. Again, JPEG2000 is the standard for digital cinema and, accordingly, is required to create a DCP. Unfortunately, its computational complexity makes this task quite resource-intensive and time-consuming. Moreover, existing open source codecs cannot decode movies with 12-bit data at the required rate of 25, 30 or 60 fps at 2K or 4K resolutions.

3. How to speed up processing with the JP2 format

JPEG2000 provides modes for operating at a higher speed, but this is achieved at the expense of a slight reduction in quality or compression ratio. However, even the slightest reduction in image quality can be unacceptable for some application areas.

To speed up work with JPEG2000, we at Fastvideo have developed our own implementation of the JPEG 2000 codec. Our solution is based on NVIDIA CUDA technology, which makes it possible to build a parallel implementation of the encoder and decoder that uses all available CPU and GPU cores.