priyaaank

19 posts

I am Priyank. A polyglot programmer @Sahaj with love for technology

Don't wanna be here? Send us removal request.

Text

Strangulating bare-metal infrastructure to Containers

Change is inevitable. Change for the better is a full-time job ~ Adlai Stevenson I

We run a successful digital platform for one of our clients. It manages huge amounts of data aggregation and analysis in Out of Home advertising domain.

The platform had been running successfully for a while. Our original implementation was focused on time to market. As it expanded across geographies and impact, we decided to shift our infrastructure to containers for reasons outlined later in the post. Our day to day operations and release cadence needed to remain unaffected during this migration. To ensure those goals, we chose an approach of incremental strangulation to make the shift.

Strangler pattern is an established pattern that has been used in the software industry at various levels of abstraction. Documented by Microsoft and talked about by Martin Fowler are just two examples. The basic premise is to build an incremental replacement for an existing system or sub-system. The approach often involves creating a Strangler Facade that abstracts both existing and new implementations consistently. As features are re-implemented with improvements behind the facade, the traffic or calls are incrementally routed via new implementation. This approach is taken until all the traffic/calls go only via new implementation and old implementation can be deprecated. We applied the same approach to gradually rebuild the infrastructure in a fundamentally different way. Because of the approach taken our production disruption was under a few minutes.

This writeup will explore some of the scaffolding we did to enable the transition and the approach leading to a quick switch over with confidence. We will also talk about tech stack from an infrastructure point of view and the shift that we brought in. We believe the approach is generic enough to be applied across a wide array of deployments.

The as-is

###Infrastructure

We rely on Amazon Web Service to do the heavy lifting for infrastructure. At the same time, we try to stay away from cloud-provider lock-in by using components that are open source or can be hosted independently if needed. Our infrastructure consisted of services in double digits, at least 3 different data stores, messaging queues, an elaborate centralized logging setup (Elastic-search, Logstash and Kibana) as well as monitoring cluster with (Grafana and Prometheus). The provisioning and deployments were automated with Ansible. A combination of queues and load balancers provided us with the capability to scale services. Databases were configured with replica sets with automated failovers. The service deployment topology across servers was pre-determined and configured manually in Ansible config. Auto-scaling was not built into the design because our traffic and user-base are pretty stable and we have reasonable forewarning for a capacity change. All machines were bare-metal machines and multiple services co-existed on each machine. All servers were organized across various VPCs and subnets for security fencing and were accessible only via bastion instance.

###Release cadence

Delivering code to production early and frequently is core to the way we work. All the code added within a sprint is released to production at the end. Some features can span across sprints. The feature toggle service allows features to be enabled/disable in various environments. We are a fairly large team divided into small cohesive streams. To manage release cadence across all streams, we trigger an auto-release to our UAT environment at a fixed schedule at the end of the sprint. The point-in-time snapshot of the git master is released. We do a subsequent automated deploy to production that is triggered manually.

CI and release pipelines

Code and release pipelines are managed in Gitlab. Each service has GitLab pipelines to test, build, package and deploy. Before the infrastructure migration, the deployment folder was co-located with source code to tag/version deployment and code together. The deploy pipelines in GitLab triggered Ansible deployment that deployed binary to various environments.

Figure 1 — The as-is release process with Ansible + BareMetal combination

The gaps

While we had a very stable infrastructure and matured deployment process, we had aspirations which required some changes to the existing infrastructure. This section will outline some of the gaps and aspirations.

Cost of adding a new service

Adding a new service meant that we needed to replicate and setup deployment scripts for the service. We also needed to plan deployment topology. This planning required taking into account the existing machine loads, resource requirements as well as the resource needs of the new service. When required new hardware was provisioned. Even with that, we couldn’t dynamically optimize infrastructure use. All of this required precious time to be spent planning the deployment structure and changes to the configuration.

Lack of service isolation

Multiple services ran on each box without any isolation or sandboxing. A bug in service could fill up the disk with logs and have a cascading effect on other services. We addressed these issues with automated checks both at package time and runtime however our services were always susceptible to noisy neighbour issue without service sandboxing.

Multi-AZ deployments

High availability setup required meticulous planning. While we had a multi-node deployment for each component, we did not have a safeguard against an availability zone failure. Planning for an availability zone required leveraging Amazon Web Service’s constructs which would have locked us in deeper into the AWS infrastructure. We wanted to address this without a significant lock-in.

Lack of artefact promotion

Our release process was centred around branches, not artefacts. Every auto-release created a branch called RELEASE that was promoted across environments. Artefacts were rebuilt on the branch. This isn’t ideal as a change in an external dependency within the same version can cause a failure in a rare scenario. Artefact versioning and promotion are more ideal in our opinion. There is higher confidence attached to releasing a tested binary.

Need for a low-cost spin-up of environment

As we expanded into more geographical regions rapidly, spinning up full-fledged environments quickly became crucial. In addition to that without infrastructure optimization, the cost continued to mount up, leaving a lot of room for optimization. If we could re-use the underlying hardware across environments, we could reduce operational costs.

Provisioning cost at deployment time

Any significant changes to the underlying machine were made during deployment time. This effectively meant that we paid the cost of provisioning during deployments. This led to longer deployment downtime in some cases.

Considering containers & Kubernetes

It was possible to address most of the existing gaps in the infrastructure with additional changes. For instance, Route53 would have allowed us to set up services for high availability across AZs, extending Ansible would have enabled multi-AZ support and changing build pipelines and scripts could have brought in artefact promotion.

However, containers, specifically Kubernetes solved a lot of those issues either out of the box or with small effort. Using KOps also allowed us to remained cloud-agnostic for a large part. We decided that moving to containers will provide the much-needed service isolation as well as other benefits including lower cost of operation with higher availability.

Since containers differ significantly in how they are packaged and deployed. We needed an approach that had a minimum or zero impact to the day to day operations and ongoing production releases. This required some thinking and planning. Rest of the post covers an overview of our thinking, approach and the results.

The infrastructure strangulation

A big change like this warrants experimentation and confidence that it will meet all our needs with reasonable trade-offs. So we decided to adopt the process incrementally. The strangulation approach was a great fit for an incremental rollout. It helped in assessing all the aspects early on. It also gave us enough time to get everyone on the team up to speed. Having a good operating knowledge of deployment and infrastructure concerns across the team is crucial for us. The whole team collectively owns the production, deployments and infrastructure setup. We rotate on responsibilities and production support.

Our plan was a multi-step process. Each step was designed to give us more confidence and incremental improvement without disrupting the existing deployment and release process. We also prioritized the most uncertain areas first to ensure that we address the biggest issues at the start itself.

We chose Helm as the Kubernetes package manager to help us with the deployments and image management. The images were stored and scanned in AWS ECR.

The first service

We picked the most complicated service as the first candidate for migration. A change was required to augment the packaging step. In addition to the existing binary file, we added a step to generate a docker image as well. Once the service was packaged and ready to be deployed, we provisioned the underlying Kubernetes infrastructure to deploy our containers. We could deploy only one service at this point but that was ok to prove the correctness of the approach. We updated GitLab pipelines to enable dual deploy. Upon code check-in, the binary would get deployed to existing test environments as well as to new Kubernetes setup.

Some of the things we gained out of these steps were the confidence of reliably converting our services into Docker images and the fact that dual deploy could work automatically without any disruption to existing work.

Migrating logging & monitoring

The second step was to prove that our logging and monitoring stack could continue to work with containers. To address this, we provisioned new servers for both logging and monitoring. We also evaluated Loki to see if we could converge tooling for logging and monitoring. However, due to various gaps in Loki given our need, we stayed with ElasticSearch stack. We did replace logstash and filebeat with Fluentd. This helped us address some of the issues that we had seen with filebeat our old infrastructure. Monitoring had new dashboards for the Kubernetes setup as we now cared about both pods as well in addition to host machine health.

At the end of the step, we had a functioning logging and monitoring stack which could show data for a single Kubernetes service container as well across logical service/component. It made us confident about the observability of our infrastructure. We kept new and old logging & monitoring infrastructure separate to keep the migration overhead out of the picture. Our approach was to keep both of them alive in parallel until the end of the data retention period.

Addressing stateful components

One of the key ingredients for strangulation was to make any changes to stateful components post initial migration. This way, both the new and old infrastructure can point to the same data stores and reflect/update data state uniformly.

So as part of this step, we configured newly deployed service to point to existing data stores and ensure that all read/writes worked seamlessly and reflected on both infrastructures.

Deployment repository and pipeline replication

With one service and support system ready, we extracted out a generic way to build images with docker files and deployment to new infrastructure. These steps could be used to add dual-deployment to all services. We also changed our deployment approach. In a new setup, the deployment code lived in a separate repository where each environment and region was represented by a branch example uk-qa,uk-prod or in-qa etc. These branches carried the variables for the region + environment. In addition to that, we provisioned a Hashicorp Vault to manage secrets and introduced structure to retrieve them by region + environment combination. We introduced namespaces to accommodate multiple environments over the same underlying hardware.

Crowd-sourced migration of services

Once we had basic building blocks ready, the next big step was to convert all our remaining services to have a dual deployment step for new infrastructure. This was an opportunity to familiarize the team with new infrastructure. So we organized a session where people paired up to migrate one service per pair. This introduced everyone to docker files, new deployment pipelines and infrastructure setup.

Because the process was jointly driven by the whole team, we migrated all the services to have dual deployment path in a couple of days. At the end of the process, we had all services ready to be deployed across two environments concurrently.

Test environment migration

At this point, we did a shift and updated the Nameservers with updated DNS for our QA and UAT environments. The existing domain started pointing to Kubernetes setup. Once the setup was stable, we decommissioned the old infrastructure. We also removed old GitLab pipelines. Forcing only Kubernetes setup for all test environments forced us to address the issues promptly.

In a couple of days, we were running all our test environments across Kubernetes. Each team member stepped up to address the fault lines that surfaced. Running this only on test environments for a couple of sprints gave us enough feedback and confidence in our ability to understand and handle issues.

Establishing dual deployment cadence

While we were running Kubernetes on the test environment, the production was still on old infrastructure and dual deployments were working as expected. We continued to release to production in the old style.

We would generate images that could be deployed to production but they were not deployed and merely archived.

Figure 2 — Using Dual deployment to toggle deployment path to new infrastructure

As the test environment ran on Kubernetes and got stabilized, we used the time to establish dual deployment cadence across all non-prod environments.

Troubleshooting and strengthening

Before migrating to the production we spent time addressing and assessing a few things.

We updated the liveness and readiness probes for various services with the right values to ensure that long-running DB migrations don’t cause container shutdown/respawn. We eventually pulled out migrations into separate containers which could run as a job in Kubernetes rather than as a service.

We spent time establishing the right container sizing. This was driven by data from our old monitoring dashboards and the resource peaks from the past gave us a good idea of the ceiling in terms of the baseline of resources needed. We planned enough headroom considering the roll out updates for services.

We setup ECR scanning to ensure that we get notified about any vulnerabilities in our images in time so that we can address them promptly.

We ran security scans to ensure that the new infrastructure is not vulnerable to attacks that we might have overlooked.

We addressed a few performance and application issues. Particularly for batch processes, which were split across servers running the same component. This wasn’t possible in Kubernetes setup, as each instance of a service container feeds off the same central config. So we generated multiple images that were responsible for part of batch jobs and they were identified and deployed as separate containers.

Upgrading production passively

Finally, with all the testing we were confident about rolling out Kubernetes setup to the production environment. We provisioned all the underlying infrastructure across multiple availability zones and deployed services to them. The infrastructure ran in parallel and connected to all the production data stores but it did not have a public domain configured to access it. Days before going live the TTL for our DNS records was reduced to a few minutes. Next 72 hours gave us enough time to refresh this across all DNS servers.

Meanwhile, we tested and ensured that things worked as expected using an alternate hostname. Once everything was ready, we were ready for DNS switchover without any user disruption or impact.

DNS record update

The go-live switch-over involved updating the nameservers’ DNS record to point to the API gateway fronting Kubernetes infrastructure. An alternate domain name continued to point to the old infrastructure to preserve access. It remained on standby for two weeks to provide a fallback option. However, with all the testing and setup, the switch over went smooth. Eventually, the old infrastructure was decommissioned and old GitLab pipelines deleted.

Figure 3 — DNS record update to toggle from legacy infrastructure to containerized setup

We kept old logs and monitoring data stores until the end of the retention period to be able to query them in case of a need. Post-go-live the new monitoring and logging stack continued to provide needed support capabilities and visibility.

Observations and results

Post-migration, time to create environments has reduced drastically and we can reuse the underlying hardware more optimally. Our production runs all services in HA mode without an increase in the cost. We are set up across multiple availability zones. Our data stores are replicated across AZs as well although they are managed outside the Kubernetes setup. Kubernetes had a learning curve and it required a few significant architectural changes, however, because we planned for an incremental rollout with coexistence in mind, we could take our time to change, test and build confidence across the team. While it may be a bit early to conclude, the transition has been seamless and benefits are evident.

2 notes

·

View notes

Text

Patterns for microservices - Sync vs Async

Microservices is an architecture paradigm. In this architectural style, small and independent components work together as a system. Despite its higher operational complexity, the paradigm has seen a rapid adoption. It is because it helps break down a complex system into manageable services. The services embrace micro-level concerns like single responsibility, separation of concerns, modularity, etc.

Patterns for microservices is a series of blogs. Each blog will focus on an architectural pattern of microservices. It will reason about the possibilities and outline situations where they are applicable. All that while keeping in mind various system design constraints that tug at each other.

Inter-service communication and execution flow is a foundational decision for a distributed system. It can be synchronous or asynchronous in nature. Both the approaches have their trade-offs and strengths. This blog attempts to dissect various choices in detail and understand their implications.

Dimensions

Each implementation style has trade-offs. At the same time, there can be various dimensions to a system under consideration. Evaluating trade-offs against these constraints can help us reason about approaches and applicability. There are various dimensions of a system that impact the execution flow and the communication style of a system. Let’s look at some of them.

Consumers

Consumers of a system can be external programs, web/mobile interfaces, IoT devices etc. Consumer applications often deal with the server synchronously and expect the interface to support that. It is also desirable to mask the complexity of a distributed system with a unified interface for consumers. So it is imperative that our communication style allows us to facilitate it.

Workflow management

With many participating services, the management of a business-workflow is crucial. It can be implicit and can happen at each service and therefore remain distributed across services. Alternatively, it can be explicit. An orchestrator service can own up the responsibility for orchestrating the business-flows. The orchestration is a combination of two things. A workflow specification, that lays out the sequence of execution and the actual calls to the services. The latter is tightly bound to the communication paradigm that the participating services follow. Communication style and execution flow drive the implementation of an orchestrator.

A third option is an event-choreography based design. This substitutes an orchestrator via an event bus that each service binds to.

All these are mechanisms to manage a workflow in a system. We will cover workflow management in detail, later in this series. However, we will consider constraints associated with them in the current context as we evaluate and select a communication paradigm.

Read/Write frequency bias

Read/Write frequency of the system can be a crucial factor in its architecture. A read-heavy system expects a majority of operations to complete synchronously. A good example would be a public API for a weather forecast service that operates at scale. Alternatively, a write-heavy system benefits from asynchronous execution. An example would be a platform where numerous IoT devices are constantly reporting data. And of course, there are systems in between. Sometimes it is useful to favor a style because of the read-write skew. At other times, it may make sense to split reads and writes into separate components.

As we look through various approaches we need to keep these constraints in perspective. These dimensions will help us distill the applicability of each style of implementation.

Synchronous

Synchronous communication is a style of communication where the caller waits until a response is available. It is a prominent and widely used approach. Its conceptual simplicity allows for a straightforward implementation making it a good fit for most of the situations.

Synchronous communication is closely associated with HTTP protocol. However, other protocols remain an equally reasonable way to implement synchronous communication. A good example of an alternative is RPC calls. Each component exposes a synchronous interface that other services call.

An interceptor near the entry point intercepts the business flow request. It then pushes the request to downstream services. All the subsequent calls are synchronous in nature. These calls can be parallel or sequential until processing is complete. Handling of the calls within the system can vary in style. An orchestrator can explicitly orchestrate all the calls. Or calls can percolate organically across components. Let’s look at few possible mechanisms.

Variations

Within synchronous systems, there are several approaches that an architecture can take. Here is a quick rundown of the possibilities.

De-centralized and synchronous

A de-centralized and synchronous communication style intercepts a flow at the entry point. The interceptor forwards the request to the next step and awaits a response. This cycle continues downstream until all services have completed their execution. Each service can execute one or more downstream service sequentially or in parallel. While the implementation is straightforward, the flow details remain distributed in the system. This results in coupling between components to execute a flow.

The calls remain synchronous throughout the system. Thus, the communication style can fulfill the expectations of a synchronous consumer. Because of distributed workflow nature, the approach doesn’t allow room for flexibility. It is not well suited for a complex workflow that is susceptible to change. Since each request to the system can block services simultaneously, it is not ideal for a system with high read/write frequency.

Orchestrated, synchronous and sequential

A variation of a synchronous communication is with a central orchestrator. The orchestrator remains the intercepting service. It processes the incoming request with workflow definition and forwards it to downstream services. Each service, in turn, responds back to the orchestrator. Until the processing of a request, orchestrator keeps making calls to services.

Among the constraints listed at the beginning, the workflow management is more flexible in this approach. The workflow changes remain local to orchestrator and allow for flexibility. Since the communication is synchronous, synchronous consumers can communicate without a mediating component. However, orchestrator continues to hold all active requests. This burdens orchestrator more than other services. It is also susceptible to being a single point of failure. This style of architecture is still suitable for a read-heavy system.

Orchestrated, synchronous and parallel

A small improvement on the previous approach is to make independent requests parallel. This leads to higher efficiency and performance. Since this responsibility falls within the realms of orchestration, it is easy to do. Workflow management is already centralized. It only requires changes in the declaration to distinguish between parallel and sequential calls.

This can allow for faster execution of a flow. With shorter response times, orchestrator can have a higher throughput.

Workflow management is more complex than the previous approach. It still might be a reasonable trade off since it improves both throughput and performance. All that, while keeping the communication synchronous for consumers. Due to its synchronous nature, the system is still better for a read-heavy architecture.

Trade offs

Although, synchronous calls are simpler to grasp, debug and implement, there are certain trade-offs which are worth acknowledging in a distributed setup.

Balanced capacity

It requires a deliberate balancing of the capacity for all the services. A temporary burst at one component can flood other services with requests. In asynchronous style, queues can mitigate temporary bursts. Synchronous communication lacks this mediation and requires service capacity to match up during bursts. Failing this, a cascading failure is possible. Alternatively, resilience paradigms like circuit breakers can help mitigate a traffic burst in a synchronous system.

Risk of cascading failures

Synchronous communication leaves upstream services susceptible to cascading failure in a microservices architecture. If downstream service fail or worst yet, take too long to respond back, the resources can deplete quickly. This can cause a domino effect for the system. A possible mitigation strategy can involve consistent error handling, sensible timeouts for connections and enforcing SLAs. In a synchronous environment, the impact of a deteriorating service ripple through other services immediately. As mentioned previously, prevention of cascading errors can happen by implementing a bulkhead architecture or with circuit breakers.

Increased load balancing & service discovery overhead

The redundancy and availability needs for a participating service can be addressed by setting them up behind a load balancer. This adds a level of indirection per service. Additionally, each service needs to participate in a central service discovery setup. This allows it to push its own address and resolve the address of the downstream services.

Coupling

A synchronous system can exhibit much tighter coupling over a period of time. Without abstractions in between, services bind directly to the contracts of the other services. This develops a strong coupling over a period of time. For simple changes in the contract, the owning service is forced to adopt versioning early on. Thereby increasing the system complexity. Or it trickles down a change to all consumer services which are coupled to the contract.

With emerging architectural paradigms like service mesh, it is possible to address some of the stated issues. Tools like Istio, Linkerd, Envoy etc. allow for a service mesh creation. This space is maturing and remains promising. It can help build systems that are synchronous, more decoupled and fault tolerant.

Asynchronous

Asynchronous communication is well suited for a distributed architecture. It removes the need to wait for a response thereby decoupling the execution of two or more services. Implementation of asynchronous communication is possible with several variations. Direct calls to a remote service over RPC (for instance grpc) or via a mediating message bus are few examples. Both orchestrated message passing and event choreography use message bus as a channel.

One of the advantages of a central message bus is consistent communication and message delivery semantics. This can be a huge benefit over direct asynchronous communication between services. It is common to use a medium like a message bus that facilitates communication consistently across services. The variations of asynchronous communications discussed below will assume a central message pipeline.

Variations

The asynchronous communication deals better with sporadic bursts of traffic. Each service in the architecture either produces messages, consumes messages or does both. Let’s look at different structural styles of this paradigm.

Choreographed asynchronous events

In this approach, each component listens to a central message bus and awaits an event. The arrival of an event is a signal for execution. Any context needed by execution is part of the event payload. Triggering of downstream events is a responsibility that each service owns. One of the goals in event-based architecture is to decouple the components. Unfortunately, the design needs to be responsible to cater to this need.

A notification component may expect an event to trigger an email or SMS. It may seem pretty decoupled since all that the other services need to do is produce the event. However, someone does need to own the responsibility of deciding type of notification and content. Either notification can make that decision based on an incoming event info. If that happens then we have established a coupling between notifications and upstream services. If upstream services include this as part of the payload, then they remain aware of flows downstream.

Even so, event choreography is a good fit for implicit actions that need to happen. Error handling, notifications, search-indexing etc. It follows a decentralized workflow management. The architecture scales well for a write-heavy system. The downside is that synchronous reads need mediation and workflow is spread through the system.

Orchestrated, asynchronous and sequential

We can borrow a little from our approach in orchestrated synchronous communication. We can build an asynchronous communication with orchestrator at the center.

Each service is a producer and consumer to the central message bus. Responsibilities of orchestrator involve routing messages to their corresponding services. Each component consumes an incoming event or message and produces the response back on the message queue. Orchestrator consumes this response and does transformation before routing ahead to next step. This cycle continues until the specified workflow has reached its last state in the system.

In this style, the workflow management is local to the orchestrator. The system fares well with write-heavy traffic. And mediation is necessary for synchronous consumers. This is something that is prevalent in all asynchronous variations.

The solution to choreography coupling problem is more elegant in the orchestrated system. The workflow is with orchestrator in this case. A rich workflow specification can capture information like notification type and content template. Any changes to workflow remain with orchestrator service.

Hybrid with orchestration and event choreography

Another successful variation is hybrid systems with orchestration and event choreography both. The orchestration is excellent for explicit flow execution, while choreography can handle implicit execution. Execution of leaf nodes in a workflow can be implicit. Workflow specification can facilitate emanation of events at specific steps. This can result in the execution of tasks like notifications, indexing, et-cetera. The orchestration can continue to drive explicit execution.

This amalgamation of two approaches provides best of both worlds. Although, there is a need for precaution to ensure they don’t overlap responsibilities and clear boundaries dictate their functioning.

Overview

Asynchronous style of architecture addresses some of the pitfalls that synchronous systems have. An asynchronous set-up fares better with temporary bursts of requests. Central queues allow services to catch up with a reasonable backlog of requests. This is useful both when a lot of requests come in a short span of time or when a service goes down momentarily.

Each service connects to a message queue as a consumer or producer. Only the message queue requires service discovery. So the need for a central service discovery solution is less pressing. Additionally, since multiple instances of a service are connected to a queue, external load balancing is not required. This prevents another level of indirection that otherwise, load balancer introduces. It also allows services to linear scale seamlessly.

Trade Offs

Service flows that are asynchronous in nature can be hard to follow through the system. There are some trade-offs that a system adopting asynchronous communication will make. Let’s look at some of them.

Higher system complexity

Asynchronous systems tend to be significantly more complex than synchronous ones. However, the complexity of system and demands of performance and scale are justified for the overhead. Once adopted both orchestrator and individual components need to embrace the asynchronous execution.

Reads/Queries require mediation

Unless handled specifically synchronous consumers are most affected by an asynchronous architecture. Either the consumers need to adapt to work with an asynchronous system, or the system should present a synchronous interface for the consumers. Asynchronous architecture is a natural fit for the write-heavy system. However, it needs mediation for synchronous reads/queries. There are several ways to manage this need. Each one has certain complexity associated with it.

Sync wrapper

Simplest of all approaches is building a sync wrapper over an async system. This is an entry point that can invoke asynchronous flows downstream. At the same time, it holds the request awaiting until the response returns or a timeout occurs. A synchronous wrapper is a stateful component. An incoming request ties itself to the server it lands on. The response from downstream services needs to arrive at the server where original request is waiting. This isn’t ideal for a distributed system, especially one that operates at scale. However, it is simple to write and easy to manage. For a system with reasonable scaling and performance needs it can fit the bill. A sync wrapper should be a consideration before a more drastic restructuring.

CQRS

CQRS is an architectural style that separates reads from writes. CQRS brings a significant amount of risk and complexity to a system. It is a good fit for systems that operate at scale and requite heavy reads and writes. In CQRS architecture, data from write database streams to a read database. Queries run on a read-optimized database. Read/Write layers are separate and the system remains eventually consistent. Optimization of both the layers is independent. A system like this is far more complex in structure but it scales better. Moreover, the components can remain stateless (unlike sync wrappers).

Dual support

There is a middle ground here between a sync wrapper and a CQRS implementation. Each service/component can support synchronous queries and asynchronous writes. This works well for a system which is operating at a medium scale. So read queries can hop between components to finish reads synchronously. Writes to the system, on the other hand, will flow down asynchronous channels. There is a trade-off here though. The optimization of a system for both reads and writes independently is not possible. Something, that is beneficial for a system operating at high traffic.

Message bus is a central point of failure

This is not a trade-off, but a precaution. In the asynchronous communication style, message bus is the backbone of the system. All services constantly produce-to and consume-from the message bus. This makes the message bus the Achilles heel of the system as it remains a central point of failure. It is important for a message bus to support horizontal scaling otherwise it can work against the goals of a distributed system.

Eventual consistency

An asynchronous system can be eventually consistent. It means that results in queries may not be latest, even though the system has issued the writes. While this trade-off allows the system to scale better, it is something to factor-in into system’s design and user experience both.

Hybrid

It is possible to use both asynchronous and synchronous communication together. When done the trade-offs of both approaches overpower their advantages. The system has to deal with two communication styles interchangeably. The synchronous calls can cascade degradation and failures. On the other hand, the asynchronous communication will add complexity to the design. In my experience, choosing one approach in isolation is more fruitful for a system design.

Verdict

Martin Fowler has a great blog on approaching the decision to build microservices. Once decided, a microservice architecture requires careful deliberation around its execution flow style. For a write, heavy system asynchronous is the best bet with a sync-over-async wrapper. Whereas, for a read-heavy system, synchronous communication works well.

For a system that is both read and write heavy, but has moderate scale requirements, a synchronous design will go a long way in keeping the design simple. If a system has significant scale and performance needs, asynchronous design with CQRS pattern might be the way to go.

9 notes

·

View notes

Text

How I learned to love personal development

Our daily grind has a tendency to push personal development in shadows of delivery and deadlines. Personal development, something that needs constant fostering often attracts muted attention and gets precipitous intervention when "people-team" reminds us about the half yearly review cycle. Even after spending a decade in my profession, I find myself often disoriented and confused about how I am tracking my progress against the goals that I have; and that is, if I have charted out my goals at all. In recent past, I have made a renewed effort to find structure in my personal development and reorganize it in ways to make it easier to tackle and track both. While I am still on a journey to realize its effectiveness, I hope to share my thought process and the technique both in a hope that you find it relevant and useful too.

The road ahead

For me introspection is crucial part of personal development. It is important for me to know and have an opinion about how my career is going. However at the same time I find the thought process overwhelming. I typically attack it by building a list of role models and find a persona that resonates with me most. Using that persona coupled with my interests, I try to chart a long term goal that leads me in that direction. It is worth noting that personas merely act as a guide to chart my long term career path and high level skill categorization. I would eschew from imitating the exact skills since the individual strengths can vary significantly. To elaborate on this, I have few fictional personas listed below; let me pick one and walk through the process.

I would say that I resonate with Sara most and I have a strong belief that mobile and internet of things will converge eventually. I also think there is a huge potential to simplify the bridging between both. Coupling them together, a reasonable goal for me for next 18-24 months could be “I want to establish and associate myself to mobile and Internet of things domain. I intend to contribute by building an ecosystem & community that simplifies and makes cross platform development for mobile & other devices effective.”

A high level goal is great however it needs to be broken down into short term actionable items. This step requires me to build structure in which I would collect feedback, collate data and measure. At this point I would have given enough thought to my goal and would have a sense about the most crucial skills that I need to acquire? I tend to classify similar skills together and pull them in a category. Although I know people who approach this other way round. They look at generic categories and identifying things they would like to focus on under each of them. An example output of this categorization for me would involve categorizing activities like blogging, conferences, FOSS etc together in "community contribution"; while each of those would become axes I measure myself on. Lets look at this in bit more of detail.

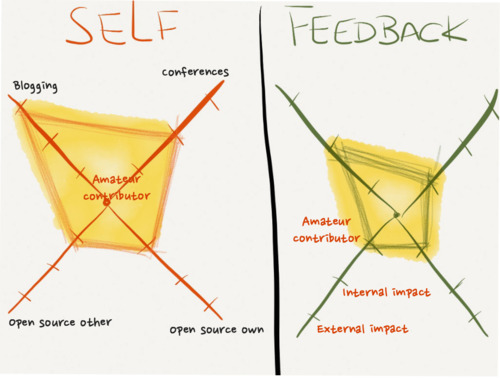

Pivoting on spider charts

Once I have taken a look at my long term goal, it is probably wise to take a stock of where I am currently. This will help me identify areas that need most work and actionable items that will help me reach there. There are two ways I gather this data. Feedback is a great way to start, but I usually find it more useful if I distill down my thoughts first into spider chart visualizations asking myself “How did last 6 months go for me”. It helps me build a sense of things and rate myself on different axes. Once I have that data ready, I reach out for feedback and compare the perception of people around me, on those axes to the data I have from my intuition. It helps me spot outliers and identify false notions both.

Coming back to spider charts, I define them with identified categorizations, axes and levels. I would typically identify root categorization like Technology, Community contribution, Soft skills and Consulting. Each of these categories have a spider chart of their own with sub categories as axes and each axis has typically three rating levels. These levels are very personalized and the lowest bar is in context of my experience with that categorization and an assumption that I have room to improve.

Take a cursory look at the charts, categorization, sub categorization and ratings below to get a sense of data I have at this point. The charts on left are based on "how I feel" about capabilities and skills and the ones on right are based on "feedback from team". Each axis represent a single subcategorization listed in "axes" column and three levels of ratings are defined by "rating levels" column.

Axes

Mobile platforms - How many of them do I understand?

Mobile development as a skill - How well do I understand mobile?

Code Hygiene - How well can I write code?

Rating Levels

Drive individually

Bootstrapping a team and running with it

Impacting community

Axes

Blogging

Speaking

Open source contribution to existing frameworks

Open source frameworks used by at least 5 people/teams

Rating Levels

Amateur contributor

Internal impact

External impact

Axes

Mentoring & Leading

Assertiveness - Based on feedback in past. (Am I assertive when needed?)

Planning - Based on suggestion in past (Do I proactively plan on behalf of team?)

Rating Levels

Comfortable

Excited

Addicted

As you can see, these charts are often a great reality check tool apart from presenting relevant data visually. In soft skills section, rating levels are more generic and rational for different sub categorization is bit more pragmatic. Some I want to proactively track, for others past feedback/suggestions is a motivation. This is to highlight, that intuition and feedback both should help build up axes of a categorization. I have covered only few categorizations here, however in reality, I would keep a few more. Some of those would include additional bits, like what have I done to improve efficiency, quality and innovation of our team as collective.

Introspection to action

Once I have looked at visualizations and identified feedback and data against each category/axis I tend to define broad action items as stories on a wall. After all we are agile and feedback driven in daily delivery why should it be any different when it comes to our own development? However discipline to strike through the items is also the hardest part for the next six months. (Six months is a period I feel comfortable with to revisit my progress and up-skilling). The obvious mistake that I have done in past is bog myself down with tons of action items or make action items too beefy resulting in constant procrastination. So this time around I am experimenting with two key differences.

I have limited the number of goals for next 6 months between 6-8. These can be further broken down into small tasks though.

I track my progress using a combination of virtual wall and labeled classification to keep a check on balance I strike between different categorization.

You can see the goals, task list and labels in the images below. Feel free to check out the wall here on Trello. The wall is public but it may not show you label classification in read only mode.

Periodic checkpoint and follow through

I find a periodic catch up with select few to be a good motivator to stay on track, so I have set a 5 minute recurring google calendar invite with few people to review my Trello boardevery 30 days for next 6 months. That reminds everyone periodically about taking out a minute to review progress and also helps me stay focused on tasks to prepare for next catch up.

Aspirations and support

Individuals often need to invest time on team to build better software, it is equally important that teams take time to allow individuals to focus on personal development. Therefore, accommodating a personal development story card for an individual in iteration planning is an excellent way to allocate time for individual development and reap benefits as a team. This approach can be powerful as it often help tackle tasks that require either experimentation, a chunk of continuous time or resources/complexity that only project can offer.

Beyond that I typically identify any aspiration or support that I need from organization. Letting those know up front helps tap opportunities better and allow for a better planning for individual development as well. This is very organization specific, however in my current organization, this support in terms of resources and time both, is easy to find. So there isn’t a lot I need to do about this as long as I know what I want and how it helps.

The final word

All of that being said it is probably prudent to accept that, no matter how many failsafe mechanism I put to refrain myself from being distracted or straying away into an ad-hoc approach; nothing trumps focus and discipline. I do not see this approach as a substitute for that, it is merely a structure that forces me to give personal development enough thought and augments to it by helping me define tasks that are easier to follow through, range across skill sets and require smaller/gradual time commitment.

I hope, you find at least few things that are interesting to you. I would love to hear about, how you attack your personal development and things that work for you or don't.

Note: All artwork in this blog was drawn with love using Paper by 53 on iPad

1 note

·

View note

Text

Reinforced design with TDD

This is my fifth blog in the series of “Decoding ThoughtWorks’ coding problems”. If you haven’t checked out the first four blogs, please read my blogs on “Decoding ThoughtWorks coding problems”, “Objects that talk domain", "Objects that are loose & discrete” and "Design Patterns for Win".

Let me begin this post with a simple explanation of TDD a.k.a Test Driven Development. Test driven development or test first approach is as its name suggest, an approach to development where tests are written as the first step. And then code is subsequently added to pass those tests. The process can be summarized in three simple steps:

Write a failing test for a business scenario. The code for this scenario doesn’t exist.

Add code that exclusively addresses the scenario that test covers. Nothing more, nothing less.

Make test run and validate that it passes now.

Continue to add a series of tests and code until all business scenarios are covered and you are confident that every line that you coded is sufficiently tested. Note the usage of phrase “sufficiently tested”. The emphasis is not on 100% test coverage but instead on what's practical and useful in business context.

This approach is dominant in unit tests that are written. In Java this would be done using JUnit and moq or a similar framework. C# NUnit. Ruby rspec or Test::Unit etc.

Once you get the hang of it apart from being tons of fun, this approach of development helps in several ways:

It forces you to break down current problem into small units of functionality.

It forces you to think about “behavior” of an object while testing it and helps in solidifying the encapsulation.

It documents code as you go and it is a means of documenting code which doesn’t get outdated. As soon as code evolves, the existing tests fail, forcing a developer to update the specs.

It builds a safety net around codebase for a team. This helps in ensuring that quality is shipped from day one and collaboration on same codebase is faster. As a developer I would feel confident in making code changes, since I know that any changes that breaks a related/unrelated functionality will be caught by existing test suite.

Every project that I have worked on in ThoughtWorks follows a discipline of building this safety from day one. Our pre-commit hooks and continuous integration servers run these tests and provide immediate feedback for each commit, in an event, a piece of functionality is broken.

As part of the coding problem solution sent in by candidates, we rarely get to see unit tests, let alone tests that have evidence of Test Driven Development in them. This is primarily due to lack of awareness and practice. While I personally do not penalize codebases for not having tests or TDD approach, presence of those definitely earns brownie points from me. We love to see like minded people who believe in quality and development approach that we are big proponent of.

I hope to substantiate the above concepts with some code that is part of Mars Rover problem. Take a look at the internal details of the Rover class in solution. Once you understand the rover, pretend it doesn’t exist. And lets write simple test structure to drive the creation of this behavior through tests.

Here is a gist of skeleton methods that tests the key behavior of the rover. If you would look closely, you will see that beyond the behavior that is tested, we do not want to know anything about the rover. So the internal workings of a rover can be encapsulated and hidden from the outer world.

https://gist.github.com/priyaaank/e57389b1c256a6ac0299

It is worth noting that for reference purpose while I have created a full class listing all methods to test behavior, in reality, we would approach each method’s red (failing test) test and green (passing test) test cycle one by one. A more complete implementation of the rover test is here.

It is worth mentioning that while a huge community of developers favors unit testing, there are camps where TDD is looked down upon (not unit testing though). You can find an invigorating debate on this subject here between several luminaries.

There are additional resources and books to enrich your understanding about TDD. I hope you find them useful.

http://martinfowler.com/bliki/TestDrivenDevelopment.html

An excellent chapter on TDD from book The Art of Agile Development

Test Driven Development by Kent Beck

3 notes

·

View notes

Text

Design Patterns for Win

This is my fourth blog in the series of “Decoding ThoughtWorks’ coding problems”. If you haven’t checked out the first three blogs, please read my blogs on “Decoding ThoughtWorks coding problems”, "Objects that talk domain" and "Objects that are loose & discrete".

If you are not familiar with design pattern, then I would explain design pattern as “a tried and tested pattern or template to organize your object design, given a set of constraints and requirements around object interaction. In other words, they are dictionary of formalized best object design practices to handle common issues of object interactions”.

Design patterns do several things for me.

They are tried and tested way of organizing object interactions. Most of the design patterns emphasize on basic code hygiene that we have talked about in previous blog post of “discrete objects and loose coupling”. This means, that using a design pattern means not having to reinvent the wheel and have a tried and tested way to solve a design problem. It makes me more efficient and code cleaner.

They embody a design approach in a succinct definition. When I am pairing with someone (ThoughtWorks adopts Extreme Programming practices on day to day basis, which involves pairing) to solve a business problem in context of domain, the name of design patterns tells me what my pair is thinking and intends to do, without an elaborate discussion. An example would be “Hey, lets convert this switch case branching of rules for tax calculation at Airport or City with a strategy pattern”. I would presume, that anyone who is familiar with strategy pattern, this statement would indicate to you instantly where the refactoring will go to.

They leave code more predictable and readable. Purely from readability point of view; when I am looking at a code that I haven’t written I am left to my own means to build the mental models required to understand the cobweb of object interactions. However, for instance, if I see “visitor” keywords somewhere indicating a “Visitor pattern”, I instantly grasp what the intention and structure of object interactions. Thereby simplifying the code for me. The structure becomes more readable and predictable.

That being said, it must be noted that lot of design patterns seem like a “simple and obvious” choice only if you have read and experienced them once. Without the knowledge the design can come off as obtuse and complex. Benefit of better collaboration is only evident when all involved people understand design patterns. And therefore to be a better programmer and a collaborative pair, it is recommended you understand at least some of the commonly used design patterns.

In this post, I will explore few design patterns to relay the benefits they bring in. Also I’ll talk about the mars rover problem and show how Command Pattern simplifies the design extremely without any conditional branching in logic.

Command Pattern to parse rover commands

A typical rover command’s implementation can often look like:

https://gist.github.com/priyaaank/bfae96a306afd3bc88fd

If you look closely above, the logic is concise to start with but it is using branching to distinguish flows. While in this specific instance refactoring further in order to simplify the logic is somewhat debatable, in large and complex systems code fragments like this tend to bind several flows and evolve into labyrinth of incomprehensible and unreadable code.

A simple choice of Command Pattern can alleviate the issue here. In contrast the code can be refactored to as follows.

https://gist.github.com/priyaaank/1afc714ccde8a05b5230

As I mentioned already if you are not familiar with design patterns, at first glance the code above can seem like a refactoring that has created more components and files. However once you have experienced the benefits of design patterns in context of flexibility and extensibility first hand you will see the rationale of this refactoring.

Additionally, another most popular pattern to handle conditional branching and switch cases is using polymorphism or “Strategy Pattern”. Here is an example code before refactoring.

https://gist.github.com/priyaaank/6e4c37b238d5d64c875a

Lets create a strategy to create a beverage which has concrete classes that implement that strategy to prepare a beverage based on the type of strategy. When a beverage needs to be created, a concrete type of beverage strategy must be instantiated and injected into the prepare method. Resulting code creates a clear separation of concerns and isolates case specific steps that need to be executed to prepare a beverage. Look at the resulting structure below. You will notice that it also hints at using builder pattern apart from strategy to prepare a beverage.

https://gist.github.com/priyaaank/d4a84b98879b68d67556

Beyond singleton, factory and abstract factory patterns; some of the other popular design patterns that I have found immensely helpful more often than others, to solve common design problems while coding or brainstorming are as follows:

Observer pattern

Strategy pattern

Command pattern

Builder pattern

Decorator pattern

Bridge pattern

Visitor pattern

Based on my personal experience I would recommend reading, understanding and applying these patterns in suitable conditions. A number of available good books can tell you how to identify the situation which presents a motivation to use a specific pattern. Adding knowledge of these to your toolbelt will equip you well to handle design problems with ease and proven patterns.

As an additional read, Martin Folwer maintains a comprehensive list of refactoring situations and examples on refactoring.com. Please do check these out. In addition to design patterns I find these helpful to guide me refactor code to a more readable state. A more detailed rationale for each each refactoring can be found in his book.

You might want to check out the next blog post in the series, "Reinforced design with TDD".

2 notes

·

View notes

Text

Objects that are loose & discrete

This is my third blog in the series of “Decoding ThoughtWorks’ coding problems”. If you haven’t checked out the first two blogs, please read my blog on “Decoding ThoughtWorks coding problems” and “Objects that talk domain” here.

We looked at how models need to represent the language of a domain. Now lets explore a bit around the concepts that help us build the solution that has loosely coupled models. It is worth talking about why loose coupling between objects is desirable. First, as Martin Fowler defines in his article on coupling, “If changing a module in program requires a change in another module, then coupling exists”. Coupling itself isn’t bad, as long as objects are not too tightly coupled. I will try to present some guidelines to help quantity what would qualify as “tightly coupled” design. On the other hand, these could also be used as design principles to build a loosely coupled program.

State vs. Behavior

State of an object is a snapshot in time of it’s attributes and their values. This snapshot is one of the possible and a legitimate combination of attribute values for a given domain. A simple example is that of a light switch. If an object represents a flip switch with a boolean, then possible states of an object are “ON” and “OFF”. A similar example with in context of mars rover solution would be, Mars rover pointing North, with coordinates marked as 2,3. The combination is valid both from program’s and domain perspective. Behavior, on the other hand, is a set of rules that often change the state of current or other objects else based on current state of the participating objects.

Poor encapsulation of state inadvertently leads to tight coupling between objects. A discrete object is one, which is mindful about exposing its internal state. Drawing clear distinction between state and behavior is the first step to loose coupling. In our example code, if we look at the method of moving a rover, we see that a “move” command generates a new set of coordinates for rover internally, and hence changes state. However oblivious to this, rest of the world just relies on the understanding that “rover has moved”. Here coordinate is the internal state that represents movement, “move” is the behavior that can be called on a rover.

In case of a flip switch for a light, to preserve the state, the switch object will not allow anyone to set the boolean “ON/OFF” value directly, instead it would expose methods “switchOn” and “switchOff” to world, which are responsible for modifying the state.

Tell, don’t ask

Among all the objects that participate in building our solution, we should aspire for interactions where objects “Tell” another object about what needs to be done and “not ask”. This promotes the encapsulation of state, since only behavior is exposed and not state. A simple example from mars rover would be, checking if a coordinate is within the bounds of a plateau. If I asked the plateau its boundary coordinates and compared that myself, then I have accessed the internal state and plateau by itself has no value to contribute in the program. It poignant existence would be that of a value holder object. However by exposing the behavior, we centralize the logic in one place and irrespective of dependent objects, needed change in logic would be required only once, should it change.

Unidirectional associations

Keep your object model simple. If two objects share bidirectional association, then chances are that a change or modification in either will affect the other. Also, their reusability with other objects becomes questionable as their existence is closely coupled with each other. Bi-directional associations ties two objects together and reduces reusability. Unidirectional objects, when used with abstractions of interfaces, promotes reusability and loose coupling both.

In Mars rover, Coordinates exhibit unidirectional relationship with rover. Since coordinates do not know about rover in specific, it also means that any object can use coordinates to represent its position.

Here is a dependency graph for the Mars Rover application. It is worth noting that Rover and commands share a bidirectional relationships. It is definitely something worth improving however, given the context and problem statement, it seems a bit pre-mature to sort that out. In any case, the association has been abstracted by creating a command interface so that coupling isn't concrete.

Law of Demeter or principle of least knowledge

Law of demeter is a design philosophy which has following three guidelines.

Each object should have limited knowledge about other objects. It should know about objects, which are closest to it in domain.

Each object should talk to only objects it knows about and not an unknown object. Here the objects that a model may know about are the objects that it contains and the objects those objects contain subsequently. Synonymous to “Friend of a friend” on facebook.

Only talk to the objects that you know directly and not their friends. So in facebook analogy, you should not interact with friend of a friend. A friend should do that on your behalf if need be.

To elaborate the guidelines with mars rover solution there three instances, each representing a guideline.

A rover knows about most of the other objects, however the relationship is unidirectional. Domain does not demand that a direction, coordinate or plateau knows about a rover, and so they don’t. Bringing in that knowledge will couple all of them together tightly.

In accordance with second guideline, the objects identified as part of mars rover solution interact with only objects they are closely associated with and not to an object they don’t know about.

A command object like “MoveCommand” knows about the Rover and it needs to change the rover’s state. One possible way for move command, is to ask rover it’s coordinate or direction and modify it directly. But that would be breaking the last guideline. Instead that action is delegated to rover, which in turns extracts information from friend and updates the state.

A simple way to put this principle across is, that if you see a long method chaining in an object, that runs deep across objects, then it is most probably breaking the law of demeter. e.g.

rocket.engine.blastOff();

In statement above, someone is accessing an action on rockets’ friend engine, even though it only knows about rocket directly. Changing following to

rocket.liftOff();

and have “liftOff” internally call “blastOff” on engine is a more decoupled way of doing the same thing.

Dependency injection

Finally, to manage dependency between objects it is advisable to program to interfaces and use constructor injection to plant an object instance. Elaborating it in context of mars rover, once again, passing in plateau, direction and coordinates to a rover allows a program to inject a specialized version of an object. For example if instead of Mars, same rover was deployed on Pluto, I can inject a pluto plateau with different boundary values. This would automatically change the program output, when a mars rover crosses a boundary (which would be much smaller in pluto’s case, since the size of the planet is relatively smaller).

This is especially effective when done in combination with generic interfaces that define contract. The reason I have not done it here is because I personally think, creating interfaces up front without variation in subclasses’ behavior sort of comes off as an over engineering. I tend to add interfaces when I have more than one concrete type and when situation demands for it. It is always relatively easier to extract them out. More about it in section that talks about YAGNI and KISS.

In mars rover, only place where an interface made sense was for command objects. ICommand represents a generic contract for all the move or rotate commands. And that helps in executing a command on a rover in a uniform way.

Tight coupling between objects is pervasive and if it becomes entrenched in a program, it directly affects readability and maintainability. Bi-directional relationships require a bloated thought bubble to contextualize all interactions between models making it hard to understand and navigate through code.

Hopefully, this gave you some sense about how to decouple your objects using some basic guidelines. Please head over to the next blog post to read more about “Design patterns for win”.

2 notes

·

View notes

Text

Objects that talk domain

This is my second blog in the series of “Decoding ThoughtWorks’ coding problems”. If you haven’t checked out the first blog, please read my blog on “Decoding ThoughtWorks coding problems”.

A significant number of candidates who apply at ThoughtWorks as a developers, often come from a rich enterprise app development background. A big part of their career has been spent in developing applications in J2EE platform or similar frameworks in other languages like C#. Years of acquired learning of layered development with plethora of services, factories and data transfer objects is hard to shrug off. And it shows.

The most common problem that plagues the coding solution is that it is ripe with prolific use of data transfer objects. They hold state and have no behavior. Everything they own is public.

Before I talk about what is putting off about that, let me ask you a question. If you were to ask your friend her age, would you just expect the friend to let you know the year, month and date separately or just tell you the age? The difference between both responses is “who owns the information” and “who responsibility is it to do the work to get the answer”. I would claim, it is your friend’s responsibility, since she has all the data she need to give you an answer. Divulging her year, month and date is needless when all you need is age.

I hope you catch my drift.

The core and essential part of any coding problem is it’s domain. And unless that domain is succinctly represented in a set of models which own the behavior that pertains to them, a solution always seems a bit kludgy and off putting.

I would love to pick some points around utility of domain objects from Domain driven design by Eric Evans to elaborate on what a object design consideration would be.

When I say “model”, I intend to talk about an object that represents an implementation which can act as a backbone of the language used by the team in context of domain. A model would represent a distilled version of domain, agreed upon as a team, to represent terms, concepts and behavior to collaborate seamlessly with domain experts without translation.

It is understandable that candidate won’t have the understanding of the terminologies for an abstract coding problem like the one we ask you to solve as part of coding round. Instead we would love for candidates to define a domain terminology based on the problem statement and elaborate it for readers using the models they compose. It would be a asking too much if I did not substantiate this with an example. So to illustrate my point I would like to use a code that is a solution for one of the initial ThoughtWorks’ coding problem and my favorite; “Mars Rover”.

Problem statement here and solution coded in Java here.

Before we look at some code, let me outline how I think about the problem in terms of models. I feel, we would have a “Rover” that should have a sense of its “Coordinates” and “Direction”. Coordinate and direction together would make for a “Location” if there is enough behavior that we derive out of location itself. However currently based on problem statement it doesn’t seem like, we do. There are “Commands” that rover can understand. And finally rover must have been deployed on a “Plateau” as problem states. So that summary highlights a sequence of models that represent my domain. How are they connected is driven by their interaction and that translates into the behavior each of these model encompass.

The objects I have identified in problem are as follows:

https://gist.github.com/priyaaank/fcabe6575313774ac1d8

Having had that look at the identified objects, here are few things I find comforting about this instance of object design.

Models reflect the domain that was outlined by the problem statement. It builds concepts and interactions as behavior and actors in problem statement as models.

Once you understand model, you can use the model names to talk about domain without having to translate. An example statement could be, for instance “Should rover process move command when it is pointing to a north direction and on the boundary coordinates of the plateau?”.

Each model is rich with behavior. It has rules to process state internally and subsequently change either its own state or other objects’. And this has been exposed as model’s behavior to external world.

The models distill the knowledge of domain to those few needed concepts and discard everything that isn’t relevant to solution at hand. For example, a Plateau could do so much more if we wanted to, but in scope of this problem all we need is, to check if something within the bounds of plateau and that is it. So that is the behavior we distill and incorporate as part of Plateau model.

Of course, design is an iterative process and for me to design it even in its current form took several iterations and discussions. And I encourage you to do the same as you build your own solution.

Having identified objects pertaining to domain, we need to follow to the discipline of organizing them in such a way that they don’t become too tightly coupled. This is where the design aspects take over a bit. Lets look at some of the considerations in my next blog, to find out how interactions of objects should be structured to keep them loosely coupled. Head over to "Objects that are loose and discrete"

#thoughtworks#coding#coding problems#object oriented programming#recruiting#domain driven design#mars rover

2 notes

·

View notes

Text

Decoding ThoughtWorks' coding problems

I think it is only apt that I introduce myself before I kick start an in-depth post about Object Oriented design of coding problems that we send out in Thoughtworks’ interview process.

I have been developer for 10 years and more than half of that time has been spent at ThoughtWorks. I have actively worked across languages and frameworks like Java, Ruby, C#, Rails and now mobile technologies. In recent years my involvement in developing mobile applications and frameworks for hybrid apps has been prolific. And as part of my day job, I am often presented with an opportunity, every now and then, to evaluate the codes that have been sent in for review as part of interview process.

It is those learnings of my own and expectations of several other ThoughtWorkers that I am trying to distill into a series of blogs. My end goal is to talk about things that some of us, as code reviewers, would look for in a solution. And hopefully I can rationalize the reasoning for those expectations.

Use of Object Oriented paradigm to develop programs is not a new one. It has been around for years and as a developer myself I am on a journey to refine my understanding of it as I continue to practice it everyday. My suggestions, rationale and illustrations here are merely a single way to present what is “one of the better” (or at least I think so) approaches to development. But it is by no means, “the only way”. I hope you are open to look at things from a different point of view, because I am. A closed mind and a dogmatic approach is probably the biggest injustice one can do to a subjective topic like “good code and good design”.

As part of my involvement in code reviews, I have often seen individual potential eclipsed by misplaced understanding of “good code”. While the definition is subjective and quite broad; my hope here is to decode; what does “good code” means in context of ThoughtWorks’ code reviews? What do we look for? Along the way, I will also try to answer, what do some of us hope to gain out of this?

This series has following blog posts which focus on individual aspects of code design. Look at the list below and feel free to look at them in order you desire; I would try to keep them as independent as possible.

Objects that talk domain

Objects that are loose and discrete

Design patterns for win

Reinforced design with TDD

All the blog posts above use our one time favorite but now decommssioned coding problem called mars rover written in Java to illustrate the concepts. The code is completely open source and public. Feel free to deploy it on mars (or not!) and tear it apart if that makes you happy.

Needless to say, I would love to hear feedback and suggestion about what you disagree with and what could be improved both in code example and blogs. I am always reachable with comments on this blog or more discreetly on priyaaank at gmail.com.

Lets start our deep dive into the meat of the blog series with "Objects that talk domain".

2 notes

·

View notes

Text

I love ThoughtWorks too

*The views presented in this article are purely individual and do not represent ThoughtWorks' stand on the issue in anyway.

This post of mine has been motivated by a feedback that one of our recent candidate shared after a sour experience in interview. Often candidates are generous enough to leave this kind of feedback with our recruiters while other times they blog about it. This is not the first although I hope this can be our last. But every time a review like this comes anywhere on internet it spawns countless debates within ThoughtWorks. Having endured with numerous things said about ThoughtWorks, that I don't agree with, today I feel compelled enough to present my view, so as to try and balance the information and perspectives about ThoughtWorks that we find in a beautiful place called internet.

I have been a developer for 10 years now and more than half of my career has been spent at ThoughtWorks. So when I say that "I love ThoughtWorks", I hope that I can add some credibility to it. And I hope to do so without the cloak of anonymity. I believe that to truly criticize or appreciate something or someone, if you need to duck under a cloak of invisibility, then you are refusing to stand your ground and eschewing away from the consequences. Only if, all the battles to set the world right were fought that way.

Anyway, over the years that I have spent at ThoughtWorks, I have seen fair share of criticism for both; things that Thoughtworks do as a company, and things that ThoughtWorkers do as an individual. In both the cases I have seen ourselves introspect both as an organization and as individuals. When our project teams do retrospection every few weeks, introspection is hardly a radical concept.

And that is the first and foremost thing I love about ThoughtWorks.

Introspection