#mobile

Text

#母畜#frankenstein#miraculous ladybug#georgenotfound#john wick#mobile#asian hunk#lesbian love#persona 3#gone girl

123 notes

Text

#georgenotfound#mobile#tatto#lesbian love#sasha luss#we bare bears#bi visibility day#sea life#paleontology#rafe cameron#wednesday netflix#patton sanders#alyssa milano#scott pilgrim vs the world

121 notes

Text

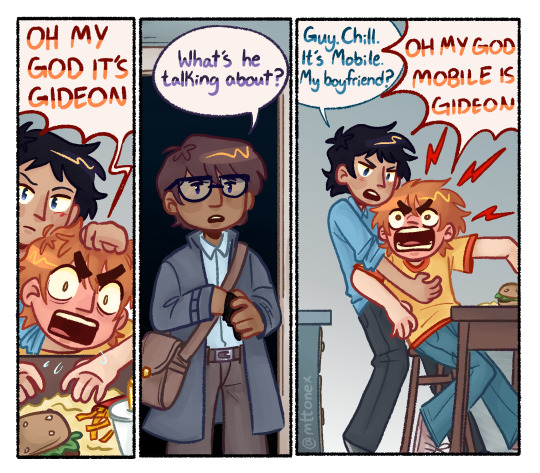

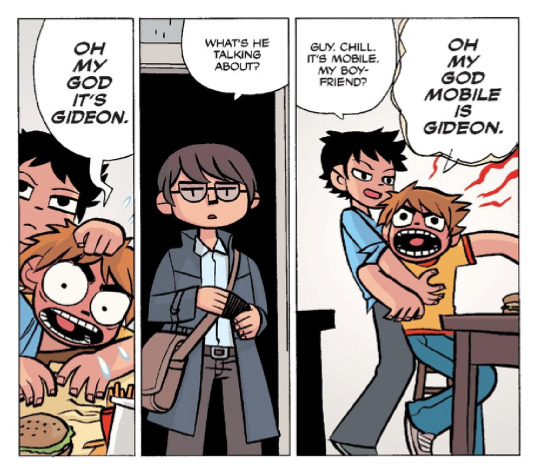

RAHH WALLACE FLIPPING YOU OFF JUMPSCARE 👇👇👇👇 Anyways, sorry if the clothes look different; I was too lazy to search for more references, so I just drew them from memory <//3 Also, I'm genuinely not sure if this looks like the SPTO artstyle at all ☠️☠️ The comic artstyle is easier to draw

+ Mobillace comic because lack of content

#scott pilgram takes off#scott pilgram fanart#spto#scott pilgrim#wallace wells#mobile#wallace x mobile#fanart#comic#spto fanart#Disclaimer I am not a long time fan but I drew with the comic artstyle just earlier and it is infact easier#mobillace#almost forgot this tag 😔#oh and also happy new uear

4K notes

Text

scott when every guy with dark hair and glasses is gideon

#mattis art#my art#scott pilgrim#wallace wells#mobile#mobile scott pilgrim#mobillace#scollace#???#scott pilgrim takes off#scott pilgrim vs the world#spto#spvtw#scott pilgrim fanart#fanart#art#i love this page its so funny#i need to stop redrawing things#(i wont)

7K notes

Text

thinking about wallace wells. thinking about going through hook ups like tissue paper, never believing anybody will stick around besides scott, who's here only because he has nowhere else to go, and you let him stay anyway even though he doesnt pay the rent. one of the only consistent people in your life, someone you might've actually genuinely liked straight up dying and leaving you with a sudden void of an empty apartment and a cold spot in a futon. thinking about immediately getting wasted and bringing a guy home, someone whose name you won't remember but it's okay because youre only in it for the sex— you dont believe in sparks, after all. believing that scott's conception of his one true girl was a joke because you just don't think you'll ever love anyone like that. kissing someone on a movie set because it's something to do, because he's dressed in the costume of somebody you cared for, because it's all manufactured, false realities and layers of separation deep enough for you to brush off his pleas for connection. thinking about going to paris after everything, the city of love, as tacky as that is, saying you're only there to spend money. but despite the insistence on irony you meet a guy— a fellow canadian, actually, twin foreigners in an unfamiliar place. someone who actually wants to stick around, who follows you through the city to see the sights and seems to genuinely like you. it can't be genuine, though— can't possibly be a reason to stay beyond a few hookups. so you stop at the river and you kiss him to get it over with...

but instead you see sparks.

#im very normal if u couldn't tell#scott pilgrim#scott pilgrim vs the world#wallace wells#scott pilgrim takes off#mobillace#scott pilgrim the anime#spto#spto spoilers#spvtw#spvstw#meta#scott pilgram vs the world#mobile spvstw#mobile#scott pilgram takes off#character analysis#juni speaks

6K notes

Text

hubbies

4K notes

Text

MOBILLACE NATION WE WOOOOON

#im so happy he finally has a voice… oh mein gott mobiles in the show#spto#scott pilgrim takes off#wallace wells#mobile#mobillace#Scott pilgrim

4K notes

Text

awww

3K notes

Photo

An animation of Sonic laughing, from the DoCoMo chara-den, which were avatars you could use for videophone calls on keitai. Thanks to @RockmanCosmo for getting us the footage from DoCoMo's tools, which were obtained from a P902i phone.

1K notes

Text

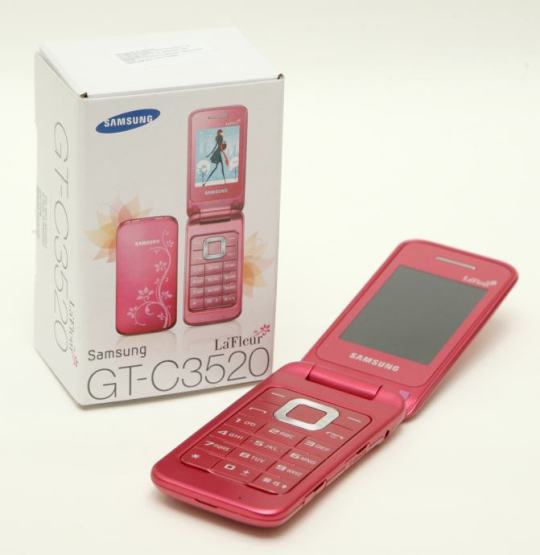

Samsung GT-C3520 La Fleur edition

#2000s#00s#art#cellphone#design#frutiger aero#flip phone#floral metro#graphic design#graphics#mobile#phone#photos#samsung#samsung gt-c3520#techcore#tech#technology#vectorbloom

1K notes

Text

The Todd and Wallace plot was silly and all, and I love how they made Todd bisexual, but more people should appreciate mobillace, the canon relationship based on a real married couple. Maybe it’s just me, but I love how Wallace didn’t feel sparks with any guy he had been with until Mobile, and the fact that Wallace probably thought the kiss with Mobile wouldn’t lead to anything but his normal hookups. It’s such a perfect way to wrap his character up in a little bow: this absolute slut of a man, who didn’t believe in sparks, finding love in some random guy he thought was just his type. <3

#scott pilgram takes off#scott pilgrim#wallace wells#todd ingram#mobile#mobillace#mobile x wallace#I love them so much#they’ve been canon since the comics :)

2K notes

Text

mobillace nation riiise….rise…….

#tv girl album redraws yay#my art#Wallace wells#mobile#scott pilgrim#spvtw#spto#scott pilgrim takes off#scott pilgrim vs the world#mobillace#mobile scott pilgrim

2K notes

Text

Sparks are real?!

2K notes

Text

Y'all which season 2 episode is this

Also MOBILLACE CAMEO 2?_!?&!?# (Cannot live without them 😘)

#scott pilgram takes off#scott pilgram fanart#spto#spto fanart#scott pilgrim#kim pine#stephen stills#knives chau#young niel#young niel nordegraf#wallace wells#mobile#wallace x mobile#mobillace#fake screenshot

2K notes

Text

Tumblr (mobile): hey we have an update for u :)

The update:

13K notes
